Submitted:

12 October 2023

Posted:

13 October 2023


Abstract

Keywords:

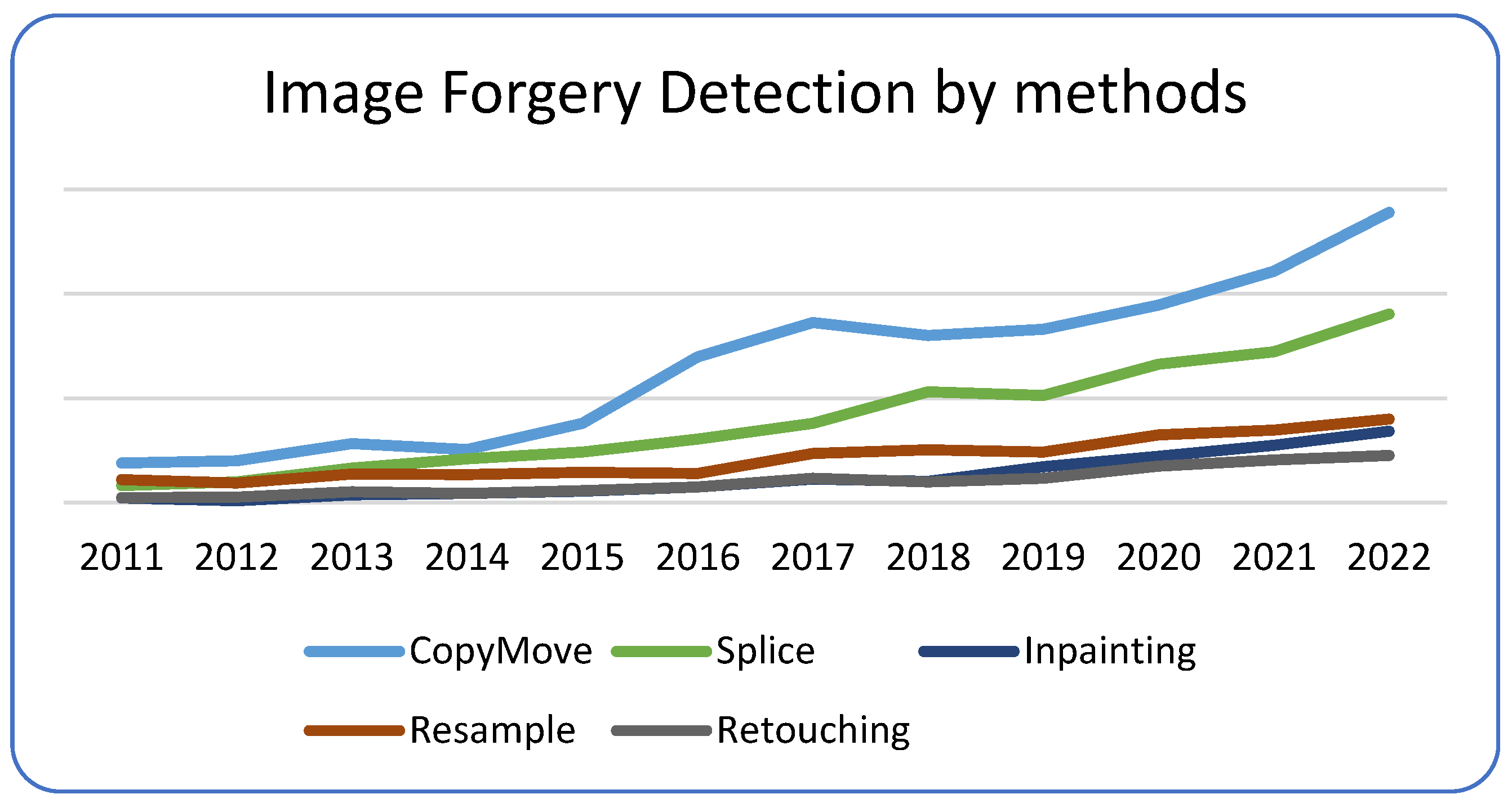

1. Introduction

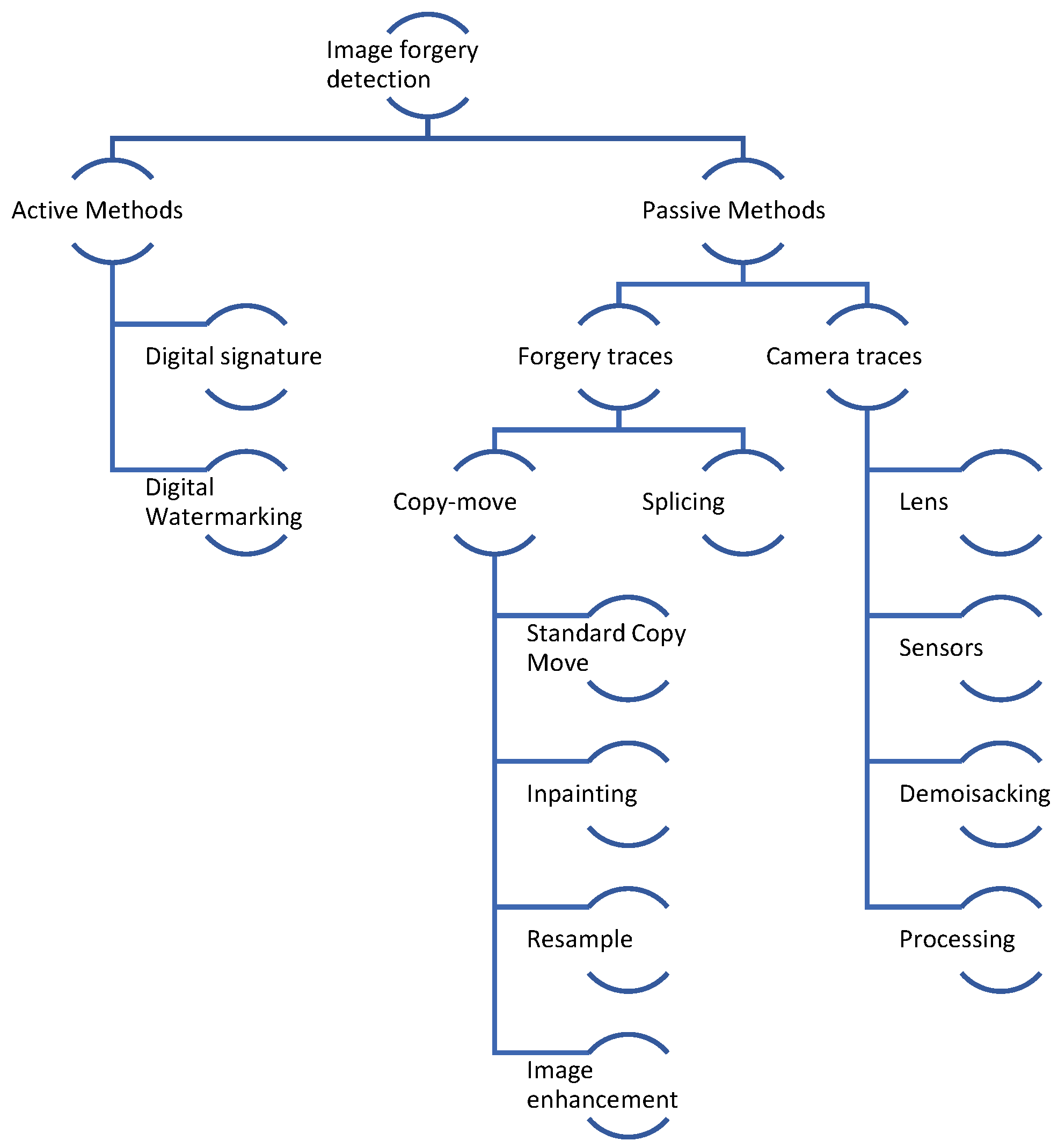

- Active methods: the main idea is to embed, at the moment of image acquisition, information that can be validated later on.

- Passive methods: this is a broad area. Some of these methods focus on the peculiarities of image capture, camera identification, noise detection, or image inconsistencies; others look for specific traces that the forgery mechanism usually introduces. For example, a copy-paste forgery (combining information from multiple images) may leave traces such as inconsistent coloring, noise, or blur.

- Copy-paste methods: the picture is altered by copying parts of the original image into the same image. Resampling and rescaling can also be involved; at their core, however, they are usually not alteration methods in themselves but steps used when applying copy-paste or splicing.

- Splicing: the forged media is obtained by combining several images into one, e.g., taking the picture of a person and inserting it into another image.

2. Inpainting methods

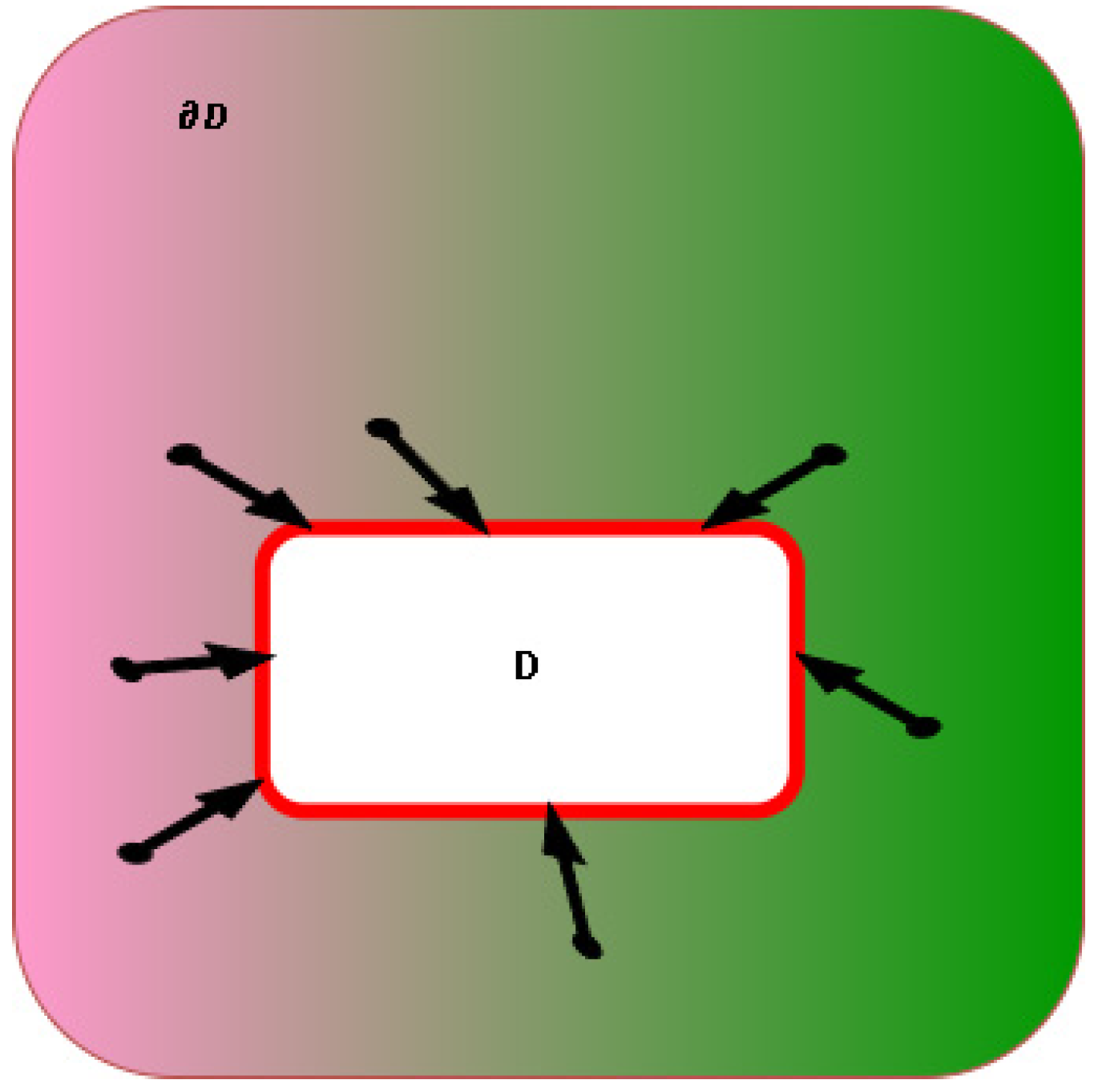

- each pixel is defined as follows: n represents the dimension of the coordinate system (usually 2)

- m represents the color space representation of that pixel (usually 3 for an RGB space)

- Diffusion based, sometimes called Partial Differential Equations (PDE) based

- Exemplar based, or patch based as it is referred to in some other papers

- Machine learning based: we address all machine learning algorithms within this category, although [9] splits the machine learning based methods into several categories according to how the model is constructed

2.1. Diffusion based methods

- Global image properties enforce how to fill in the missing area

- The layout of the surrounding area is continued into the missing area (all edges are preserved)

- The area D is split into regions, and each region is filled with the matching color (the color information is propagated from the bounding area into the rest of D)

- Texture is added
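
The propagation of information from the boundary into the area D can be illustrated with a minimal sketch. It assumes plain isotropic diffusion (repeated averaging of the four neighbours), which is far simpler than the PDE models cited in this section; the function name `diffuse_inpaint` and the test image are hypothetical.

```python
import numpy as np

def diffuse_inpaint(image, mask, iterations=500):
    """Fill masked pixels by repeatedly averaging their 4-neighbours.

    image: 2-D float array (grayscale); mask: boolean array, True = missing.
    Assumption: plain isotropic (heat-equation) diffusion, chosen for brevity.
    """
    result = image.astype(float).copy()
    result[mask] = result[~mask].mean()  # neutral starting guess
    for _ in range(iterations):
        padded = np.pad(result, 1, mode="edge")
        neighbour_mean = (padded[:-2, 1:-1] + padded[2:, 1:-1]
                          + padded[1:-1, :-2] + padded[1:-1, 2:]) / 4.0
        result[mask] = neighbour_mean[mask]  # known pixels stay fixed
    return result

# Hypothetical example: a horizontal intensity ramp with a square hole.
ramp = np.tile(np.linspace(0.0, 1.0, 16), (16, 1))
hole = np.zeros_like(ramp, dtype=bool)
hole[6:10, 6:10] = True
damaged = ramp.copy()
damaged[hole] = 0.0
restored = diffuse_inpaint(damaged, hole)
```

On a smooth ramp the hole is recovered almost exactly. On textured content the same procedure only produces a blurred fill, which is why diffusion-based methods suit small or thin regions.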

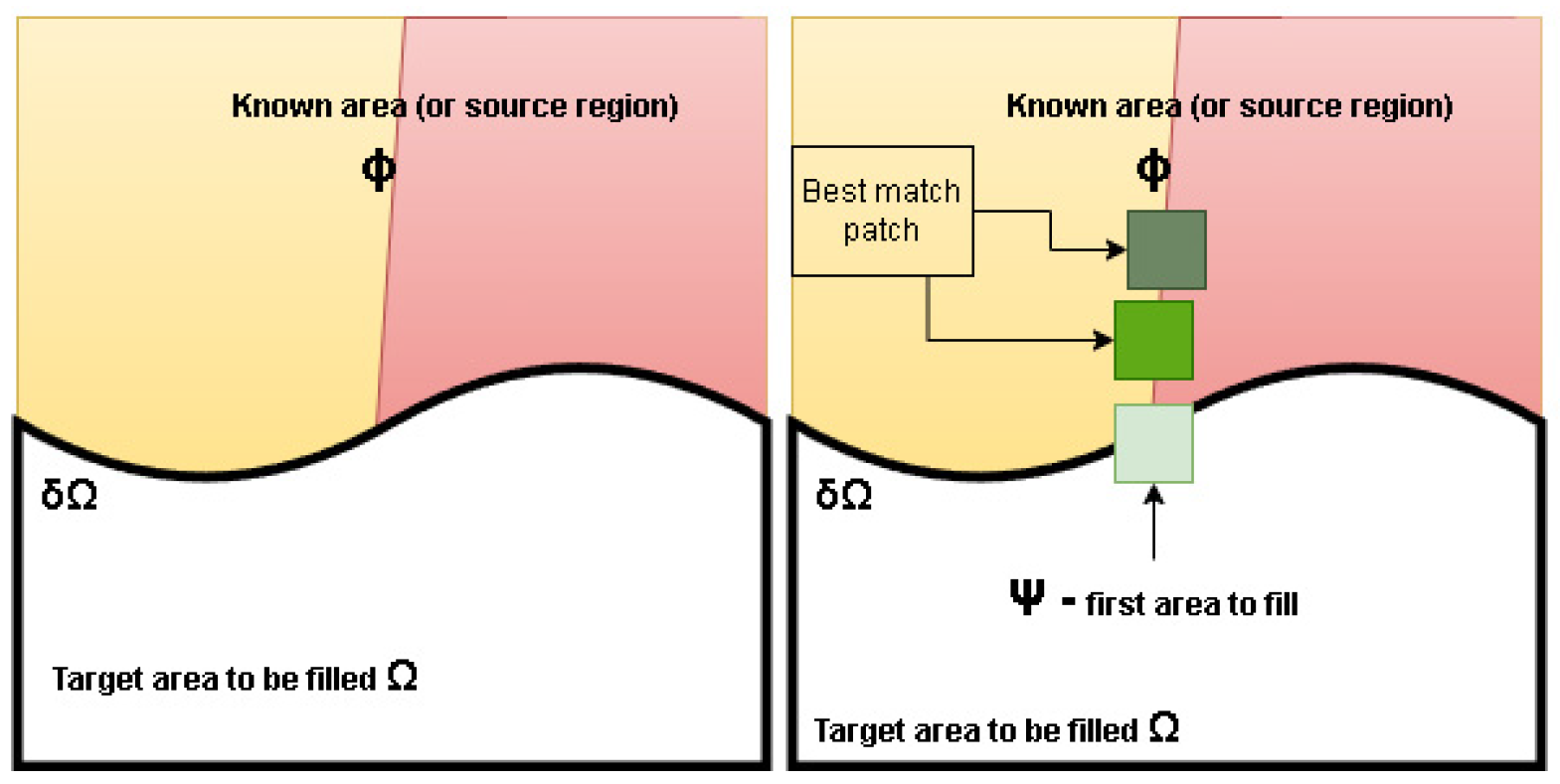

2.2. Exemplar based methods

- find the best order to fill the missing area

- find the best patch that approximates that area

- if needed, apply some processing to the copied patch to ensure that both local and global characteristics are maintained
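
The three steps above can be sketched as a greedy loop. This is a simplified illustration, not the algorithm of any cited paper: the fill order uses a crude "most known pixels" rule as a stand-in for a priority term, the best patch is chosen by the sum of squared differences, and no post-processing of the copied patch is applied. The name `exemplar_inpaint` and all parameters are hypothetical.

```python
import numpy as np

def exemplar_inpaint(image, mask, half=2):
    """Greedy patch-based fill of a 2-D grayscale image (mask True = missing)."""
    img = image.astype(float).copy()
    missing = mask.copy()
    h, w = img.shape
    size = 2 * half + 1
    # Candidate source patches: every window that contains no missing pixel.
    sources = [(r, c) for r in range(h - size + 1) for c in range(w - size + 1)
               if not mask[r:r + size, c:c + size].any()]
    while missing.any():
        # Step 1 (fill order): pick the missing pixel whose patch holds the
        # most known pixels. Assumption: a crude stand-in for a priority term.
        best_corner, best_known = None, -1
        for r, c in zip(*np.nonzero(missing)):
            r0 = min(max(r - half, 0), h - size)
            c0 = min(max(c - half, 0), w - size)
            known = size * size - missing[r0:r0 + size, c0:c0 + size].sum()
            if known > best_known:
                best_corner, best_known = (r0, c0), known
        r0, c0 = best_corner
        target = img[r0:r0 + size, c0:c0 + size]      # view into img
        hole = missing[r0:r0 + size, c0:c0 + size]    # view into missing

        # Step 2 (best patch): smallest squared difference on the known pixels.
        def cost(src):
            patch = img[src[0]:src[0] + size, src[1]:src[1] + size]
            return ((patch - target)[~hole] ** 2).sum()

        sr, sc = min(sources, key=cost)
        # Step 3 (copy): paste only the missing pixels, without blending.
        target[hole] = img[sr:sr + size, sc:sc + size][hole]
        missing[r0:r0 + size, c0:c0 + size] = False
    return img

# Hypothetical example: a striped texture with a 3x3 hole.
texture = np.tile(np.arange(20) % 4, (20, 1)).astype(float)
hole = np.zeros(texture.shape, dtype=bool)
hole[8:11, 8:11] = True
damaged = texture.copy()
damaged[hole] = -1.0
filled = exemplar_inpaint(damaged, hole)
```

Because whole patches are copied from elsewhere in the image, the filled region contains exact duplicates of existing content, which is the trace that block-matching detectors look for.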

2.3. Machine learning based methods

3. Inpainting forgery detection mechanism

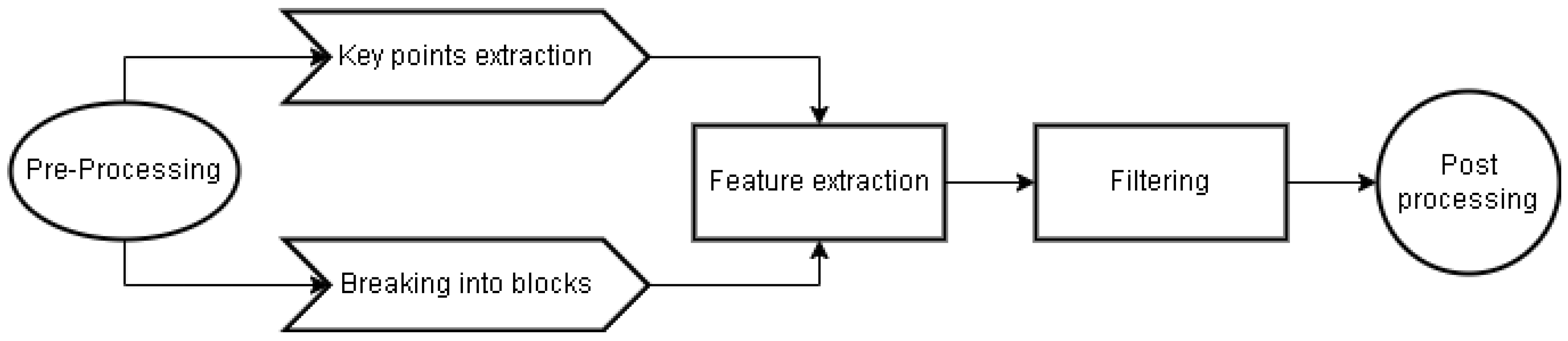

- Feature extraction (either block-based or using some variant of keypoint detection such as SURF/SIFT). According to their analysis, Zernike moments give the best overall results as a feature extraction method. The algorithm is also less affected when the copied area is shrunk and/or rotated. Additionally, the feature extraction mechanism seems to hold up under various attacks, such as resizing, JPEG compression, and blurring.

- Matching: a variety of methods are suggested here, such as kNN and brute force. Based on the analysis of the authors in [62], kNN gave the best results.

- Filtering: ensures that the “estimated” blocks do not violate other constraints (such as the distance between them).
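
A minimal sketch of this three-stage pipeline is given below. It makes several simplifying assumptions that are not taken from [62]: raw block pixels serve as features instead of Zernike moments, matching is a brute-force nearest-neighbour search, and filtering consists of a minimum spatial distance plus a vote on the shift vector. The function name `detect_copy_move` and its parameters are hypothetical.

```python
from collections import Counter
import numpy as np

def detect_copy_move(image, block=8, min_shift=8, max_dist=1e-6, min_votes=10):
    """Return a boolean mask of blocks that appear twice in a grayscale image."""
    h, w = image.shape
    coords = np.array([(r, c) for r in range(h - block + 1)
                       for c in range(w - block + 1)])
    # 1. Feature extraction: raw block pixels (assumption made for brevity;
    #    rotation/scale-robust features would be computed here instead).
    feats = np.array([image[r:r + block, c:c + block].ravel()
                      for r, c in coords], dtype=float)
    # 2. Matching: brute-force nearest neighbour in feature space.
    sq = (feats ** 2).sum(axis=1)
    dist = sq[:, None] + sq[None, :] - 2.0 * (feats @ feats.T)
    # Blocks that are spatially too close would match themselves or overlap.
    spatial = np.abs(coords[:, None, :] - coords[None, :, :]).max(axis=2)
    dist[spatial < min_shift] = np.inf
    nearest = dist.argmin(axis=1)
    matched = dist[np.arange(len(coords)), nearest] <= max_dist
    # 3. Filtering: keep only shift vectors shared by many block pairs.
    shifts = [tuple(coords[nearest[i]] - coords[i]) for i in range(len(coords))]
    votes = Counter(s for s, ok in zip(shifts, matched) if ok)
    mask = np.zeros((h, w), dtype=bool)
    for i, (s, ok) in enumerate(zip(shifts, matched)):
        if ok and votes[s] >= min_votes:
            for r, c in (coords[i], coords[nearest[i]]):
                mask[r:r + block, c:c + block] = True
    return mask

# Hypothetical example: random texture with one region copied elsewhere.
rng = np.random.default_rng(0)
image = rng.random((40, 40))
image[24:36, 24:36] = image[4:16, 4:16]
mask = detect_copy_move(image)
```

The mask marks both the source and the pasted region. The exhaustive distance matrix is only practical for small images; real implementations rely on sorting or approximate nearest-neighbour search.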

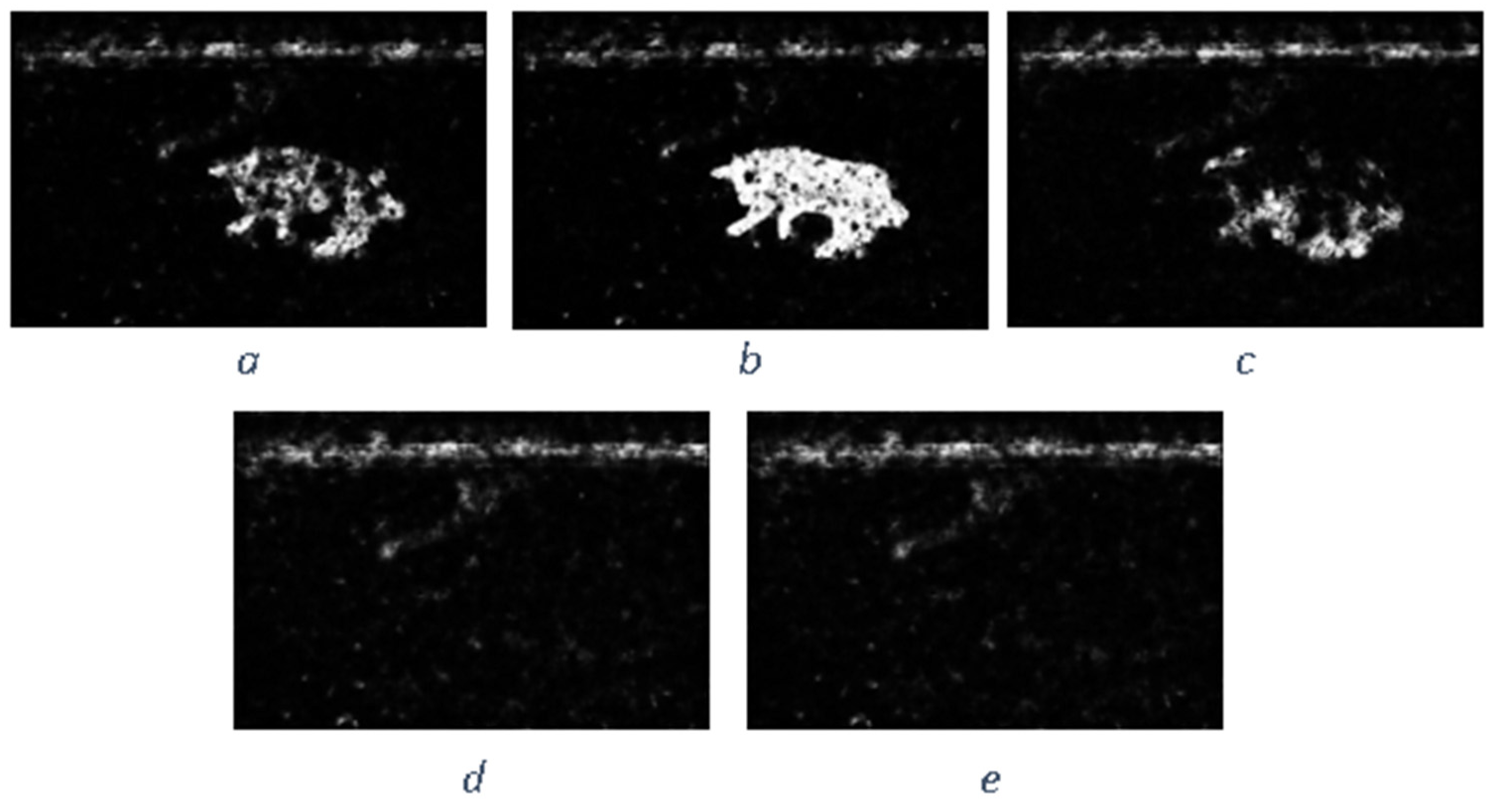

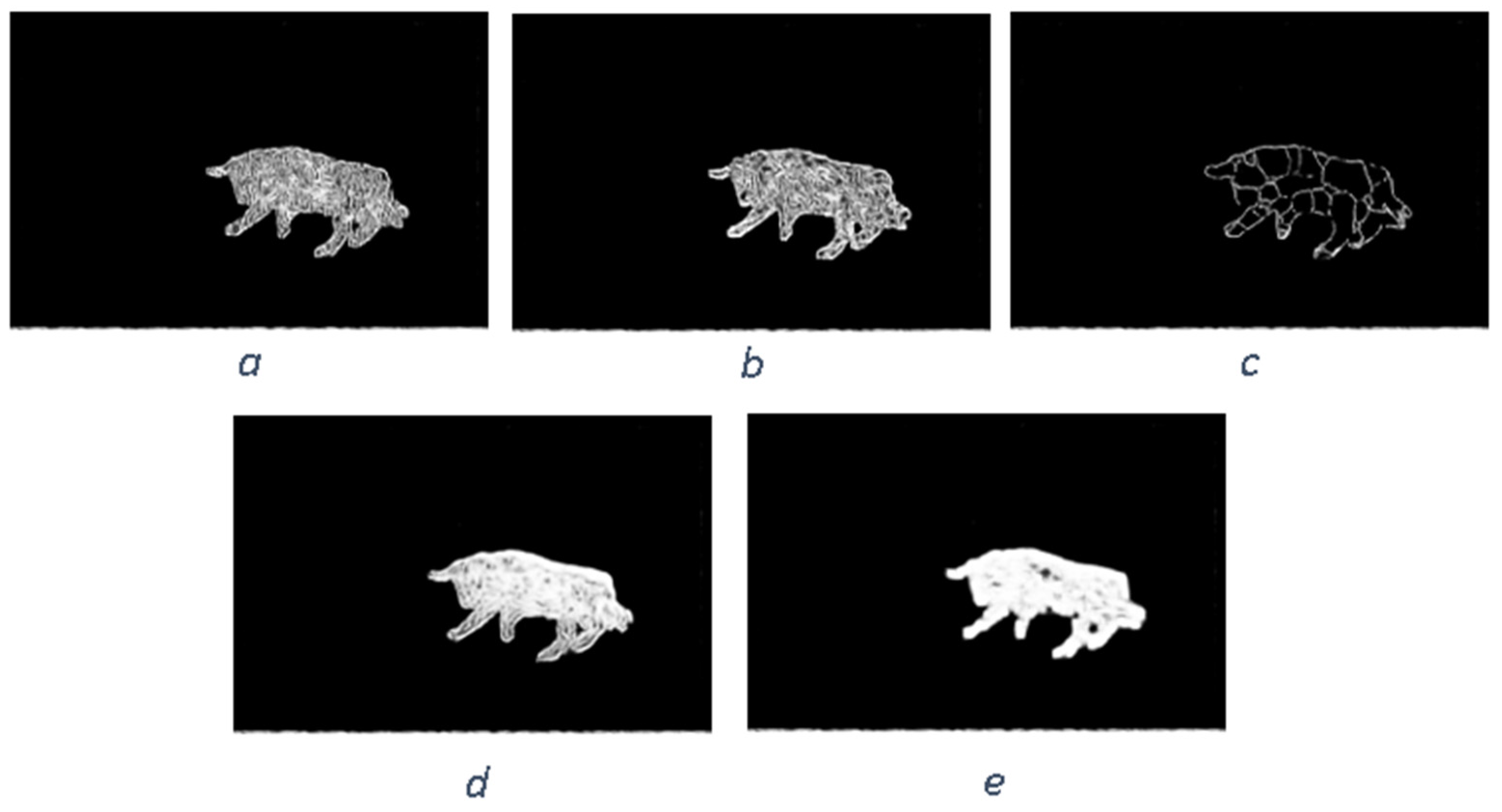

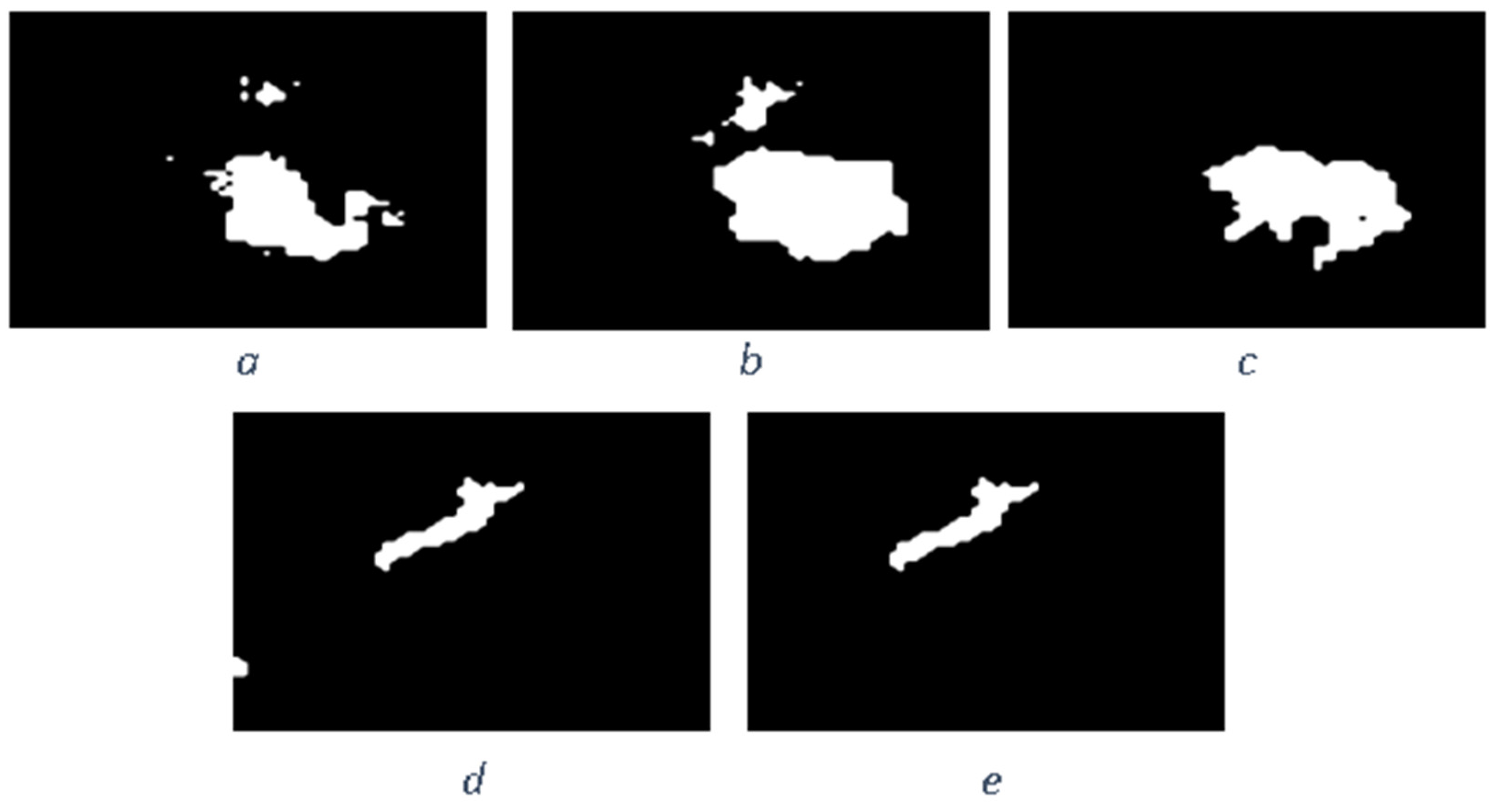

3.1. Image Inpainting detection

- Diffusion-based/PDE/variational methods: they are not suitable for filling large areas, and they usually do not copy patches but rather fill the area to be reconstructed with information diffused from the known region. Applying a block-based detection will therefore yield no results, as there are no copied regions, only diffused areas. Some traces can still be analyzed, but they lie more in the area of inconsistencies in blurring, CFA, and other camera/lens properties.

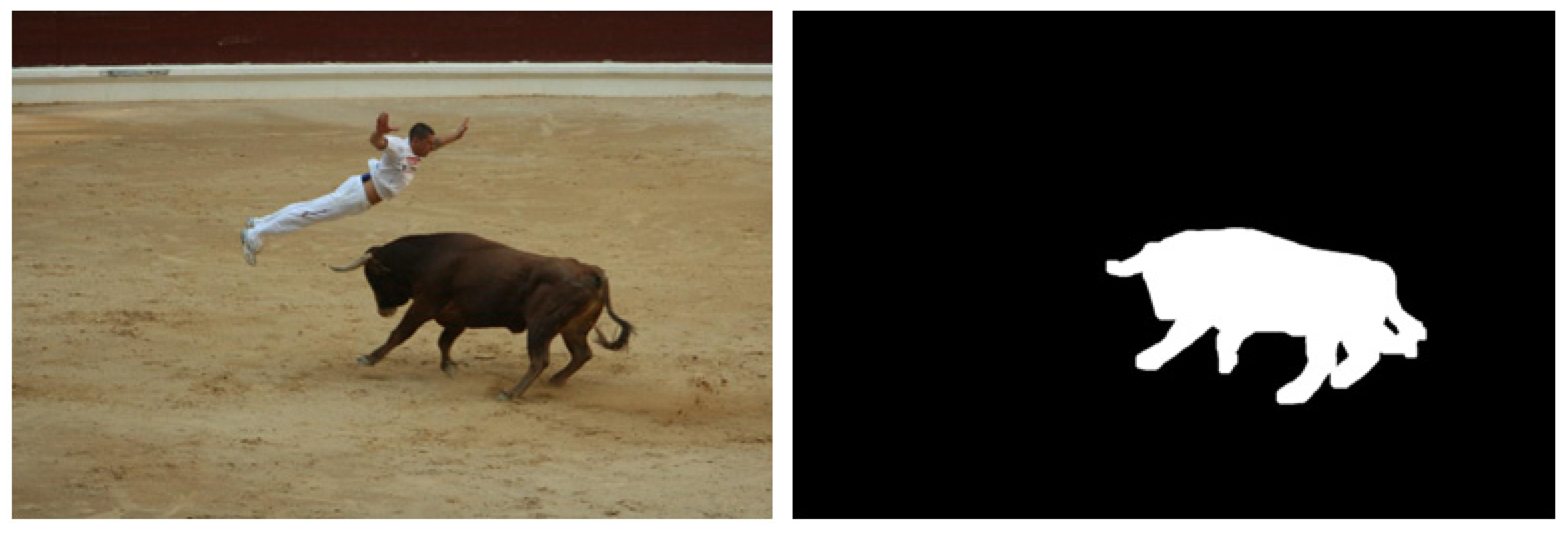

- Patch-based methods: at first glance they seem rather well suited to the above-mentioned framework. They work rather well if the forged region contains a lot of texture, but fail if the removed object was rather large or surrounded by a continuous area (such as sky, water, or grass). On closer inspection, however, the framework may give unreliable results because of how the inpainting proceeds: patch-based methods usually reconsider the filling area at each step, and the currently selected patch may come from a different location than the previously selected one. Thus, if the detection method uses a larger block that spans several inpainting patches, it will not be able to find a similar area. On the other hand, if it uses a smaller block, two issues arise: the speed of the method, and the need for an additional mechanism to remove false positives.

3.1.1. Classical Image forensic techniques

- Compute all patches from the image (or from the ROI). They are called the suspicious patches.

- For each patch in the suspicious patches, apply the following algorithm:

  - Compute all the other image patches and compare each one of them to the suspicious patch

  - Create a difference between the two patches

  - Binarize the difference matrix

  - Find the longest connectivity (either 4-way or 8-way) inside the binarized matrix

  - Compare the obtained value with the maximum longest connectivity obtained for the suspicious patch

- In the end, apply fuzzy logic to exclude some of the false positive cases.
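
The inner comparison step (difference, binarization, connectivity) can be sketched as follows. The sketch interprets "longest connectivity" as the size of the largest connected region in which two patches agree, which is an assumption; the function name `largest_match_component` and the tolerance parameter are hypothetical, and the fuzzy-logic stage is not covered.

```python
from collections import deque
import numpy as np

def largest_match_component(patch_a, patch_b, tol=0, eight_way=False):
    """Size of the largest connected region where two patches agree."""
    # Difference followed by binarisation: True where the pixels match.
    same = np.abs(patch_a.astype(int) - patch_b.astype(int)) <= tol
    steps = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    if eight_way:
        steps += [(-1, -1), (-1, 1), (1, -1), (1, 1)]
    seen = np.zeros_like(same, dtype=bool)
    best = 0
    for start in zip(*np.nonzero(same)):
        if seen[start]:
            continue
        seen[start] = True
        queue, size = deque([start]), 0
        while queue:  # breadth-first flood fill of one component
            r, c = queue.popleft()
            size += 1
            for dr, dc in steps:
                nr, nc = r + dr, c + dc
                if (0 <= nr < same.shape[0] and 0 <= nc < same.shape[1]
                        and same[nr, nc] and not seen[nr, nc]):
                    seen[nr, nc] = True
                    queue.append((nr, nc))
        best = max(best, size)
    return best

# Hypothetical 3x3 patches that agree on five pixels.
a = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
b = np.array([[1, 2, 0], [0, 5, 0], [7, 0, 9]])
four_way = largest_match_component(a, b)                  # 3
eight_way = largest_match_component(a, b, eight_way=True)  # 5
```

A suspicious patch would then keep the maximum of this value over all candidate patches, and patches with a large maximum are passed on to the false-positive filtering stage.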

- They tested only against a recent variation of Criminisi’s paper, not against state-of-the-art (at that time) inpainting methods (especially for object removal); they used [67].

- The computational effort is still very high. Again, applying the GZL in the middle-forged area seems a little too exhaustive and probably will not affect the overall results.

| Reference article | Year | Observations |
|---|---|---|
| [63] | 2008 | The first method found that tackles inpainting. Tested against the Criminisi dataset. The method relies on detecting similar patches and applies fuzzy logic to them. |
| [64] | 2013 | Continues the work in [63] and adds several mechanisms to exclude many false positives. |
| [65] | 2015 | The authors make two proposals. First, do not compute the block differences but compare only the central pixel (this improves performance while accuracy is not much affected). Second, an improved filtering method, compared to [64], for eliminating false positives. |
| [66] | 2013 | Similar method to the one in [64]. For better results and faster computation, they suggest a jump patch-block match approach. |
| [68] | 2018 | The same authors who proposed [65] add a further step consisting of ensemble learning. They rely on the Generalized Gaussian Distribution between the DCT coefficients of various blocks. |
| [69] | 2015 | The authors took the CMFD framework proposed in [62] but use Gabor magnitude for feature extraction. |
| [70] | 2018 | The authors took the CMFD framework proposed in [62] but use the color correlations between patches for feature extraction. |
| [71] | 2020 | The focus was on analyzing the reflectance of the forged and non-forged areas. |

3.1.2. Machine learning-based methods

- For blur inconsistencies, one of the most cited papers is [72]. The authors rely on the assumption that if the targeted original image contains some blur, combining it with parts from other images will make the blur inconsistent. They propose a method that analyzes the gradients and detects inconsistencies among them. Of course, the method does not give good results if the target digital image does not contain blur artifacts.

- Other researchers focused on other camera properties such as lens traces. The authors in [73] postulated that in some cases it is possible to detect copy-move forgeries in particular by analyzing lens discrepancies at the block level. Their method detects edges, extracts distorted lines, and uses them in a classical block-based approach to analyze discrepancies within the image. The problem with this approach is that if the targeted area is either too big or too small, the results are not very satisfactory. Low-resolution images pose another problem, because they tend to yield false positives.

- A camera-specific parameter that has been heavily studied is the noise generated at image acquisition. Several authors have proposed different mechanisms to detect inconsistencies in block-level noise. Some authors even claim that, based on noise patterns, they can uniquely identify the camera model. Among the most cited works are [74,75]. For example, in [75] the authors suggested computing the noise for non-overlapping blocks and then merging regions with similar noise, thus partitioning the image into areas of the same noise intensity. They suggested a wavelet plus median filter approach on the grayscale image to compute the noise. The main limitations of these methods range from false positives to the inability to detect a forgery when the noise level degradation is very small (a lot of anti-forgery mechanisms can exploit this).

- Color filter array (CFA), or demosaicking, methods rely on the observation that most cameras capture only one color per pixel and then use an algorithm to interpolate the remaining values. A CFA-based forgery detection mechanism detects block-level inconsistencies in the patterns generated by the CFA. One of the most cited works is [76], in which the authors propose using small blocks (down to 2x2) to detect inconsistencies in the CFA pattern. They extract the green channel from the image, calculate a prediction error, and analyze the variance of the errors to mark each non-overlapping block as forged or not. The method yields good results as long as the original image has not undergone post-processing operations such as color normalization.
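
The block-level noise analysis described in the list above can be sketched as follows. This is an illustration under stated assumptions, not the exact procedure of [75]: the noise of each non-overlapping block is estimated from the diagonal coefficients of a one-level Haar transform with the common median-based estimator (median of absolute values divided by 0.6745), and blocks are flagged with a simple threshold instead of region merging. The name `block_noise_map` and the synthetic image are hypothetical.

```python
import numpy as np

def block_noise_map(gray, block=32):
    """Estimate a noise standard deviation per non-overlapping block.

    Assumes `block` is even; rows/columns that do not fill a block are cropped.
    """
    h = (gray.shape[0] // block) * block
    w = (gray.shape[1] // block) * block
    g = gray[:h, :w].astype(float)
    # Diagonal (HH) coefficients of a one-level orthonormal Haar transform.
    hh = (g[0::2, 0::2] - g[0::2, 1::2] - g[1::2, 0::2] + g[1::2, 1::2]) / 2.0
    half = block // 2
    tiles = hh.reshape(h // block, half, w // block, half).swapaxes(1, 2)
    # Robust median estimator: sigma is about median(|HH|) / 0.6745.
    return np.median(np.abs(tiles), axis=(2, 3)) / 0.6745

# Hypothetical example: a flat image whose lower-right quarter is noisier.
rng = np.random.default_rng(0)
photo = 100.0 + rng.normal(0.0, 2.0, size=(128, 128))
photo[64:, 64:] = 100.0 + rng.normal(0.0, 8.0, size=(64, 64))
noise_map = block_noise_map(photo, block=32)
suspicious = noise_map > 2.0 * np.median(noise_map)
```

In this synthetic case only the four blocks of the lower-right quarter are flagged. As noted above, the approach breaks down when the difference in noise level between regions is small.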

| Reference article | Year | Observations |
|---|---|---|
| [77] | 2017 | The main idea was to use an SVM classifier with the following features: local binary pattern features, gray-level co-occurrence matrix features, and gradient features (in total, they suggest using fourteen features extracted from patches). |
| [78] | 2018 | A standard CNN model trained on original / altered patches. |
| [79] | 2019 | Same principle as [78], but with a ResNet model. |
| [80] | 2020 | The authors employed a combination of ResNet + LSTM to better capture the differences between altered and non-altered regions. All the above methods were mainly tested against Criminisi’s initial paper, thus not having to “compete” with the latest image inpainting methods at that time. |
| [81] | 2021 | A tweaked version of a VGG model architecture. |
| [83] | 2022 | A CNN model with a focus on detecting noise inconsistencies. |
| [84] | 2022 | A U-Net VGG model that adds an enhancement block of 5 filters (4 SRM + Laplacian) to better detect inpainted areas. |
| [85] | 2022 | The authors suggest using three enhancement blocks: a Steganalysis Rich Model to enhance noise inconsistency, pre-filtering to enhance discrepancies in high-frequency components, and a Bayar filter to enable the adaptive acquisition of low-level prediction residual features, similar to the ManTra-Net architecture. |
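
To illustrate what a fixed high-pass enhancement block does before the learned layers, the sketch below applies a Laplacian kernel and one widely used 5x5 SRM kernel to a grayscale image. Which four SRM filters [84] uses is not stated here, so the choice of kernel is an assumption, as are the helper names `filter2d` and `residual_stack`.

```python
import numpy as np

LAPLACIAN = np.array([[0, 1, 0],
                      [1, -4, 1],
                      [0, 1, 0]], dtype=float)

# One widely used 5x5 SRM high-pass kernel (assumed for illustration).
SRM_5X5 = np.array([[-1, 2, -2, 2, -1],
                    [2, -6, 8, -6, 2],
                    [-2, 8, -12, 8, -2],
                    [2, -6, 8, -6, 2],
                    [-1, 2, -2, 2, -1]], dtype=float) / 12.0

def filter2d(image, kernel):
    """Same-size 2-D correlation with reflect padding (pure NumPy)."""
    kh, kw = kernel.shape
    padded = np.pad(image.astype(float),
                    ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="reflect")
    out = np.zeros(image.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + image.shape[0],
                                         j:j + image.shape[1]]
    return out

def residual_stack(gray):
    """Stack the high-pass residuals that would be fed to the network."""
    return np.stack([filter2d(gray, LAPLACIAN), filter2d(gray, SRM_5X5)])

# Hypothetical example: smooth content is suppressed, a pasted detail is not.
ramp = np.add.outer(np.arange(12.0), 2.0 * np.arange(12.0))
smooth_residuals = residual_stack(ramp)
edited = ramp.copy()
edited[6, 6] += 10.0  # a single inconsistent pixel
edited_residuals = residual_stack(edited)
```

Both kernels sum to zero and are symmetric, so smooth image content cancels out and only local deviations, such as the noise inconsistencies left by inpainting, remain in the residual.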

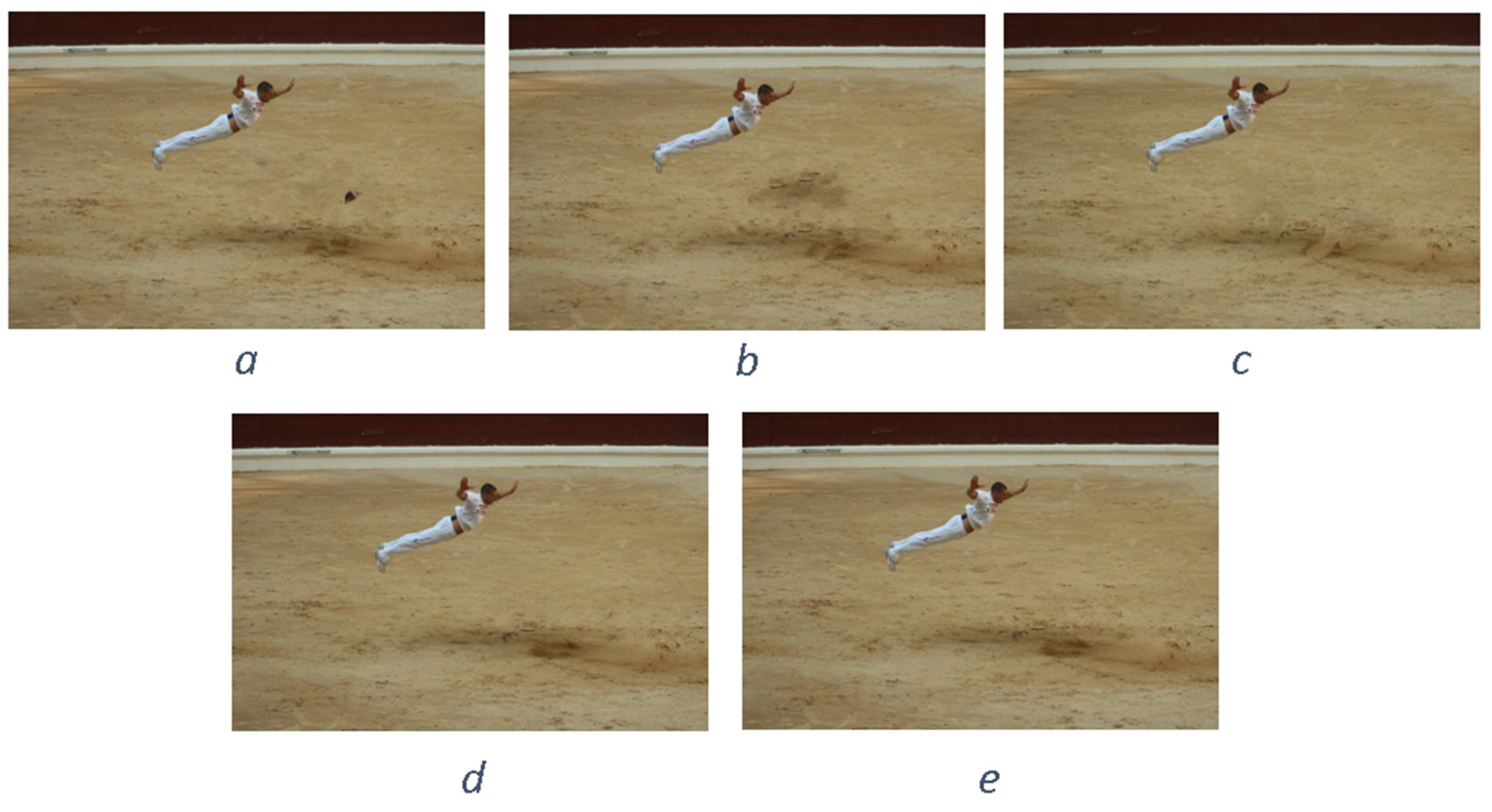

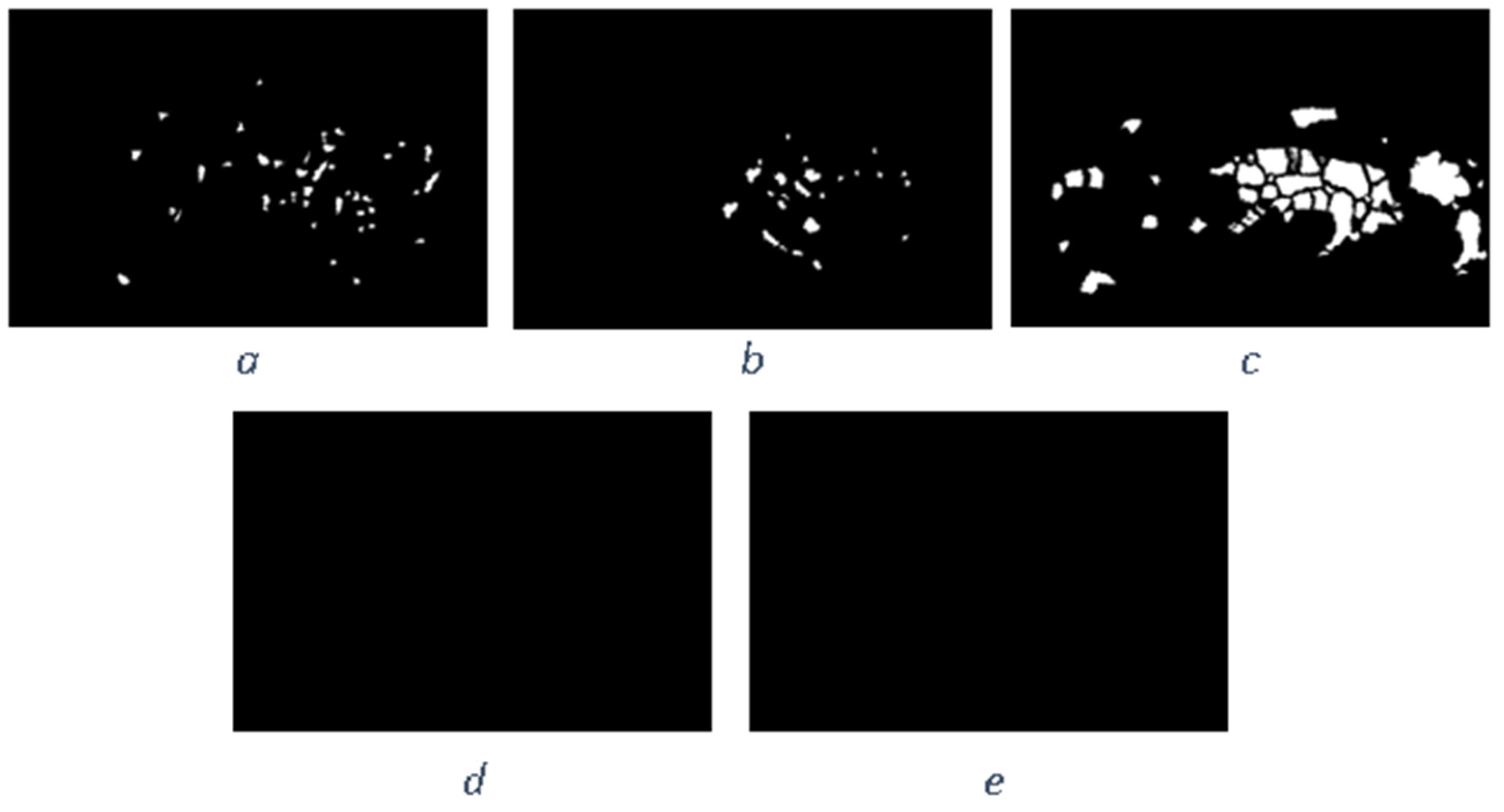

3.2. Video Object removal detection

- Decode the video frames and apply already established image algorithms to the frames of the video.

- Apply to the encoded video a mechanism that detects whether frames have been tampered with (removed).

4. Image inpainting datasets

4.1. General forgery datasets

| Name | Dataset size | Number of pristine / forged pictures | Image size(s) | Type* | Mode | Observation |
|---|---|---|---|---|---|---|
| MICC | 6.5 GB | 1850 / 978 | 722 x 480 to 2048 x 1536 | CM/OR | M | Some of the images are not very realistic, but the dataset tries to cover several types of copy-move by applying rotation and scaling. The problem is that the forged area is always rectangular. |
| CMFD | 1 GB | 48 / 48 | 3264 x 2448, 3888 x 2592, 3072 x 2304, 3039 x 2014 | CM/OR | M | Very realistic dataset. Because professional tools were used, some of the images are a mix of copy-move, object removal, and sampling. Grouped by camera type. Importantly, no post-processing operations were applied to the images, but because of the high quality / size, researchers can do their own post-processing. Images were processed using GIMP. |
| CoMoFoD | 3 GB | 200 + 60 / 200 + 60 | 512 x 512, 3000 x 2000 | CM/OR | M | Only a Canon camera was used. Six post-processing operations were applied, e.g. JPEG compression with 9 quality levels, brightness changes, noise, etc. The operations were done in Photoshop. 3 GB is only the small variant of the dataset. |
| CASIA | 3 GB (V2) | 7491 / 5123 | 160 x 240 to 900 x 600 | CM+S | N | Contains different types of copied areas (resized, rotated) and post-processing of the forged area. |
| COVERAGE | 150 MB | 100 / 100 | 235 x 340 to 752 x 472 | CP | N | The original images already contain similar objects, which makes the forgery harder to detect. The forged area is relatively large: 60% of the images have at least 10% forged area. |
| Realistic Tampering Dataset | 1.5 GB | 220 / 220 | 1920 x 1080 | CP/OR | M/A | The dataset covers 4 different cameras and focuses on inconsistencies at the noise level between patches. The images were pre/post-processed with GIMP. |
| MFC | 150 GB | 16k / 2 M | All sizes | CP/OR | N | A series of techniques was used, from simple copy-move to content-aware fill, seam carving, etc. |

4.2. Image inpainting specific datasets

| Name | Dataset size | Number of pristine / forged pictures | Image size(s) | Observation |
|---|---|---|---|---|
| DEFACTO INPAINTING | 13 GB | 10312 / 25000 (inpainting was applied to the same image but for different areas) | 180 x 240 to 640 x 640 | Some of the images are not very realistically inpainted, due to the automatic randomized selection of the area from the MSCOCO dataset. |
| IMD2020 | 38 GB | 35k / 35k | 640 x 800 to 1024 x 920 | Some of the images are not very realistic, and the forged images underwent some additional changes (probably noise filtering / color uniformization). Interestingly, the area is selected manually and then filled by an automated algorithm, which means no post-processing / enhancements. |
| IID-NET | 1.2 GB | 11k / 11k | 256 x 256 | Random masks (based on MSCOCO) + 11 different automated algorithms for filling / removing objects. The idea of tackling different inpainting algorithms is interesting, but there are some problems in how the inpainted mask area is chosen. Another problem is that, although several inpainting algorithms are tested, they are applied to different images. |

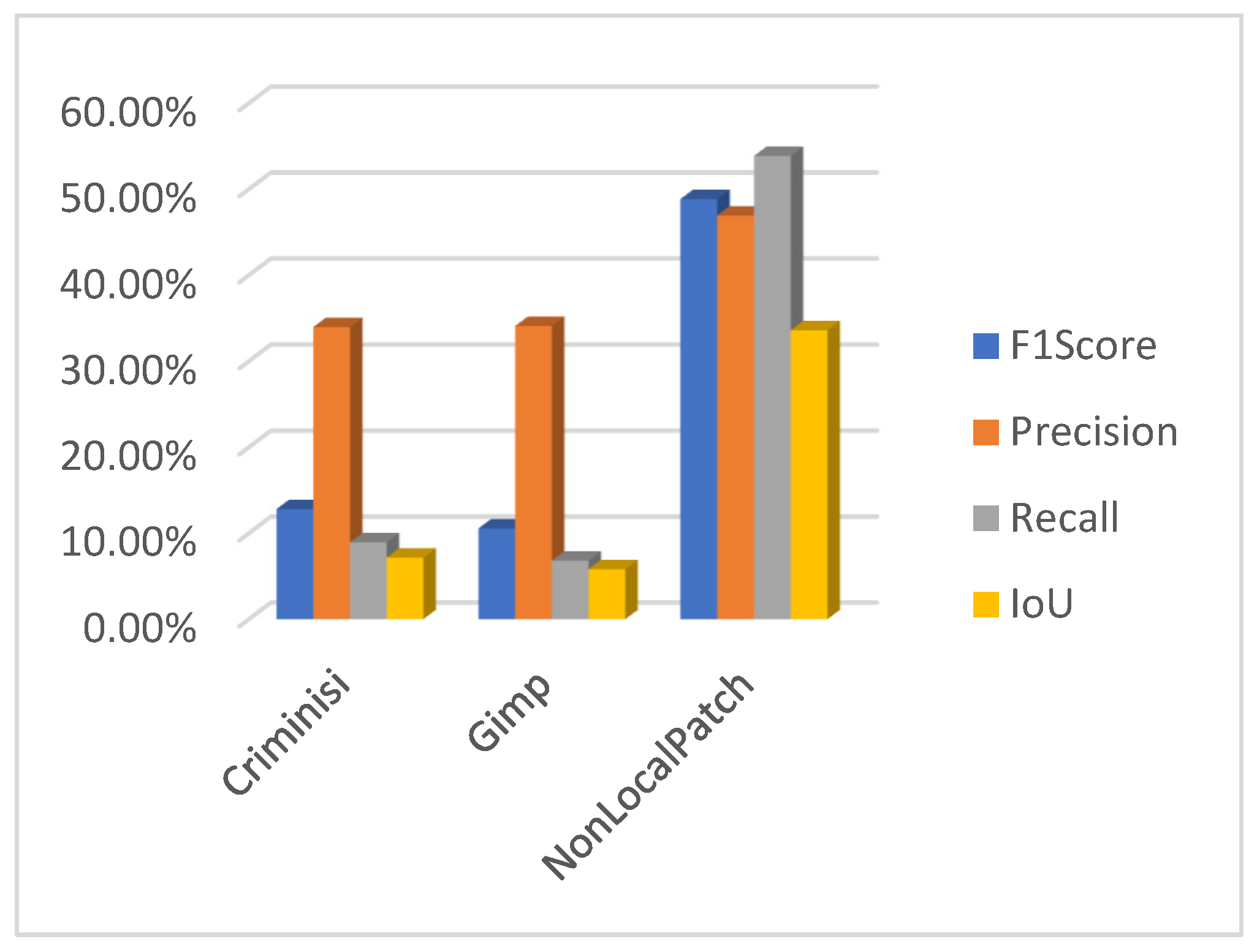

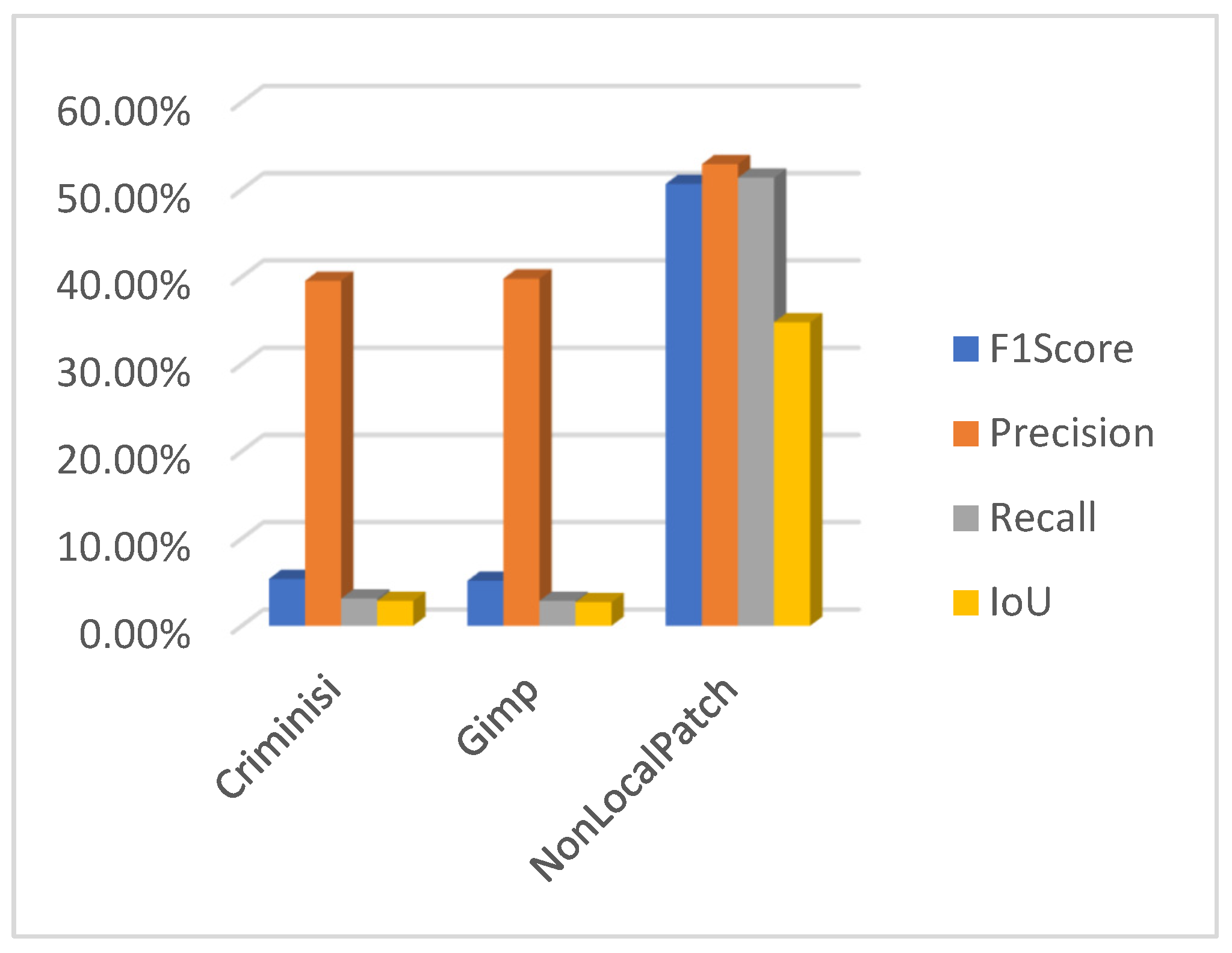

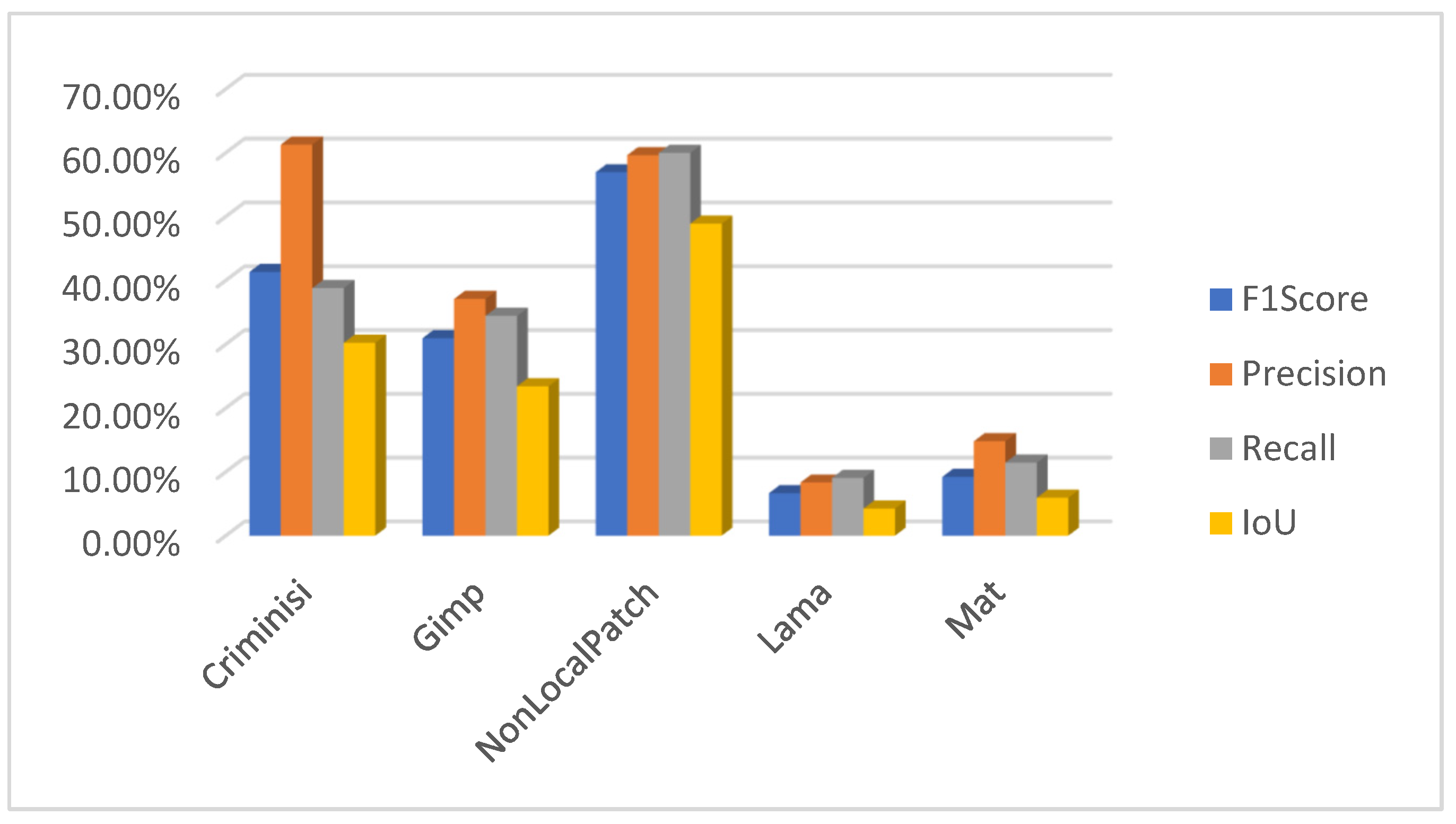

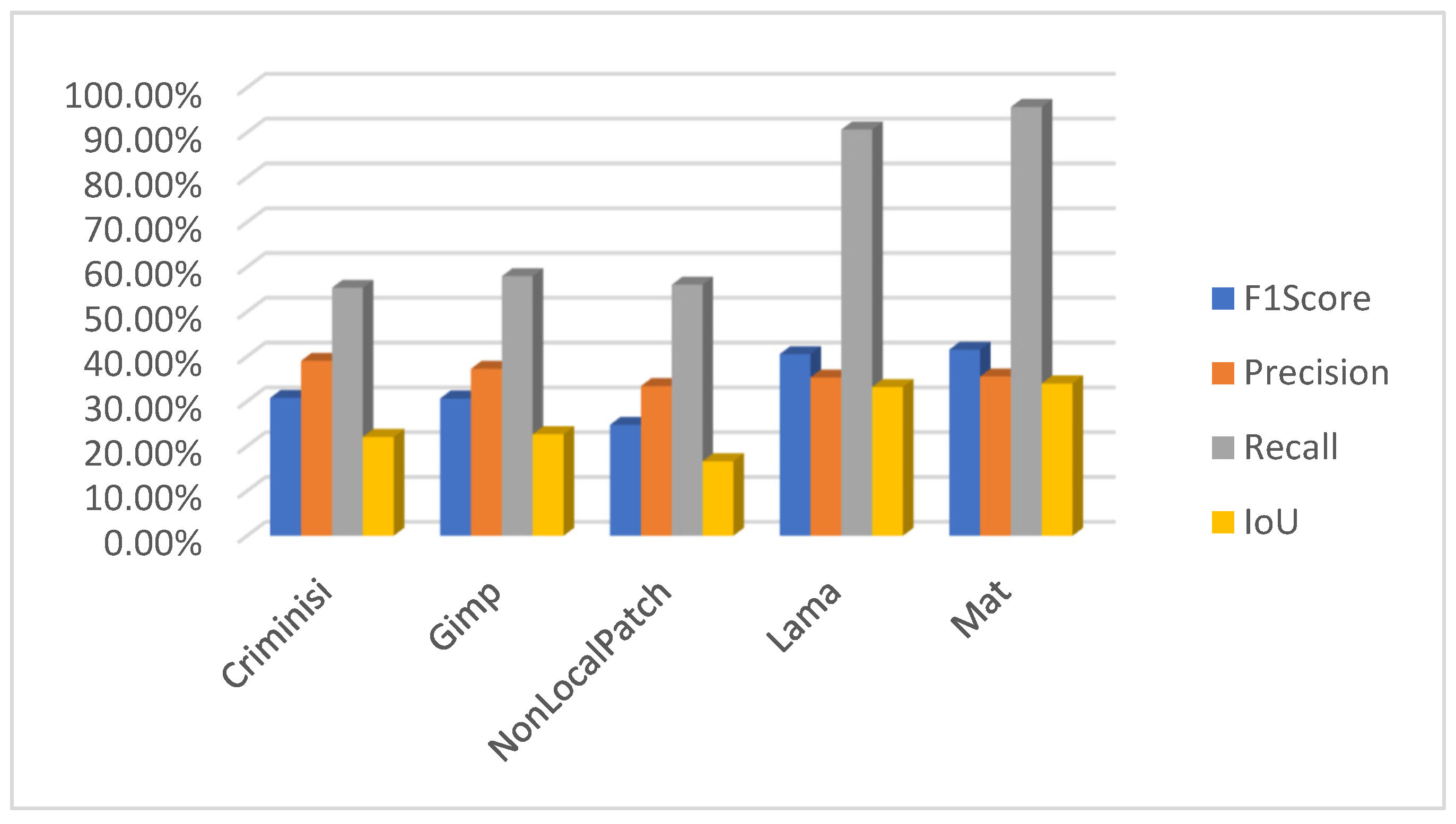

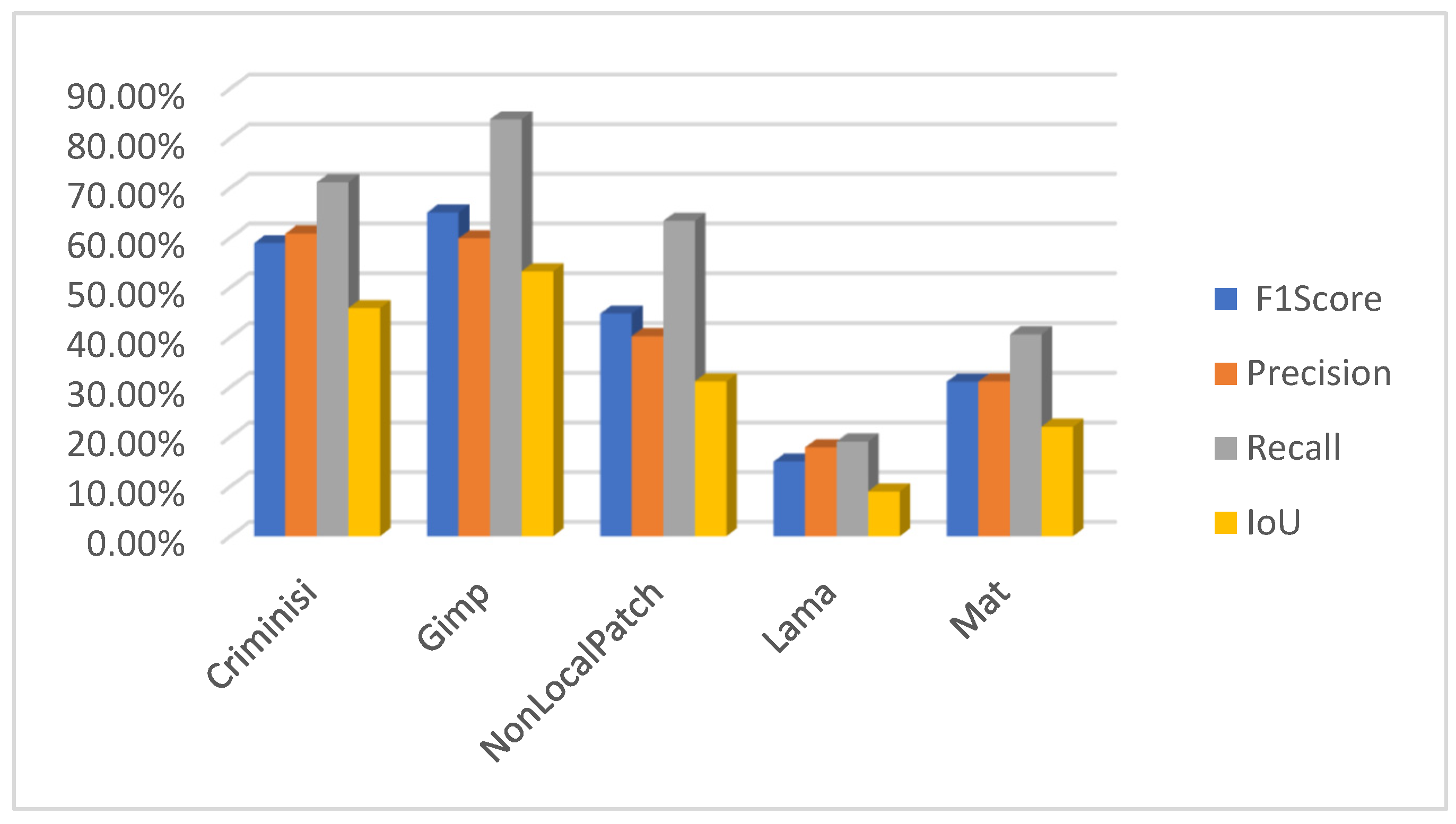

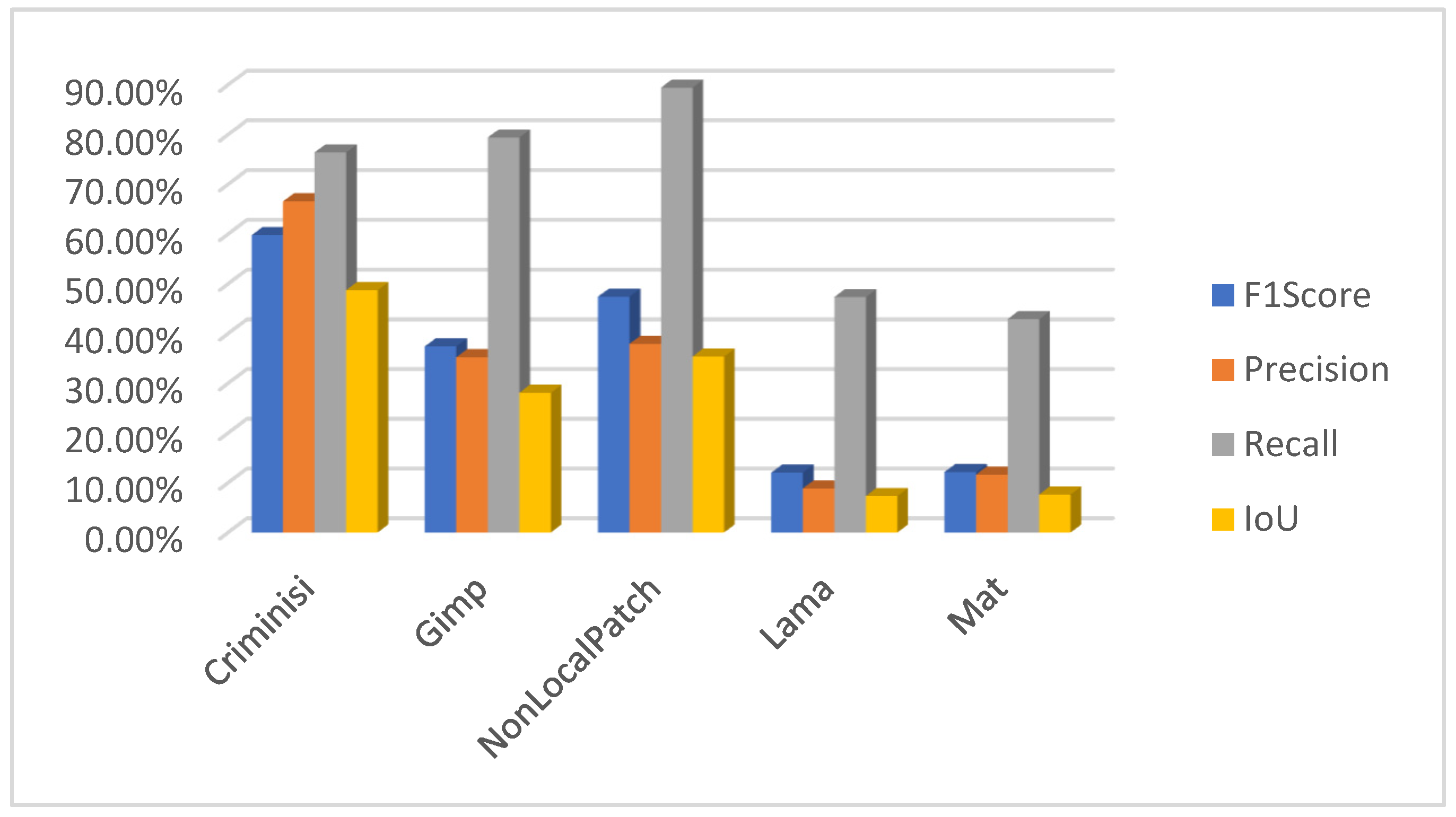

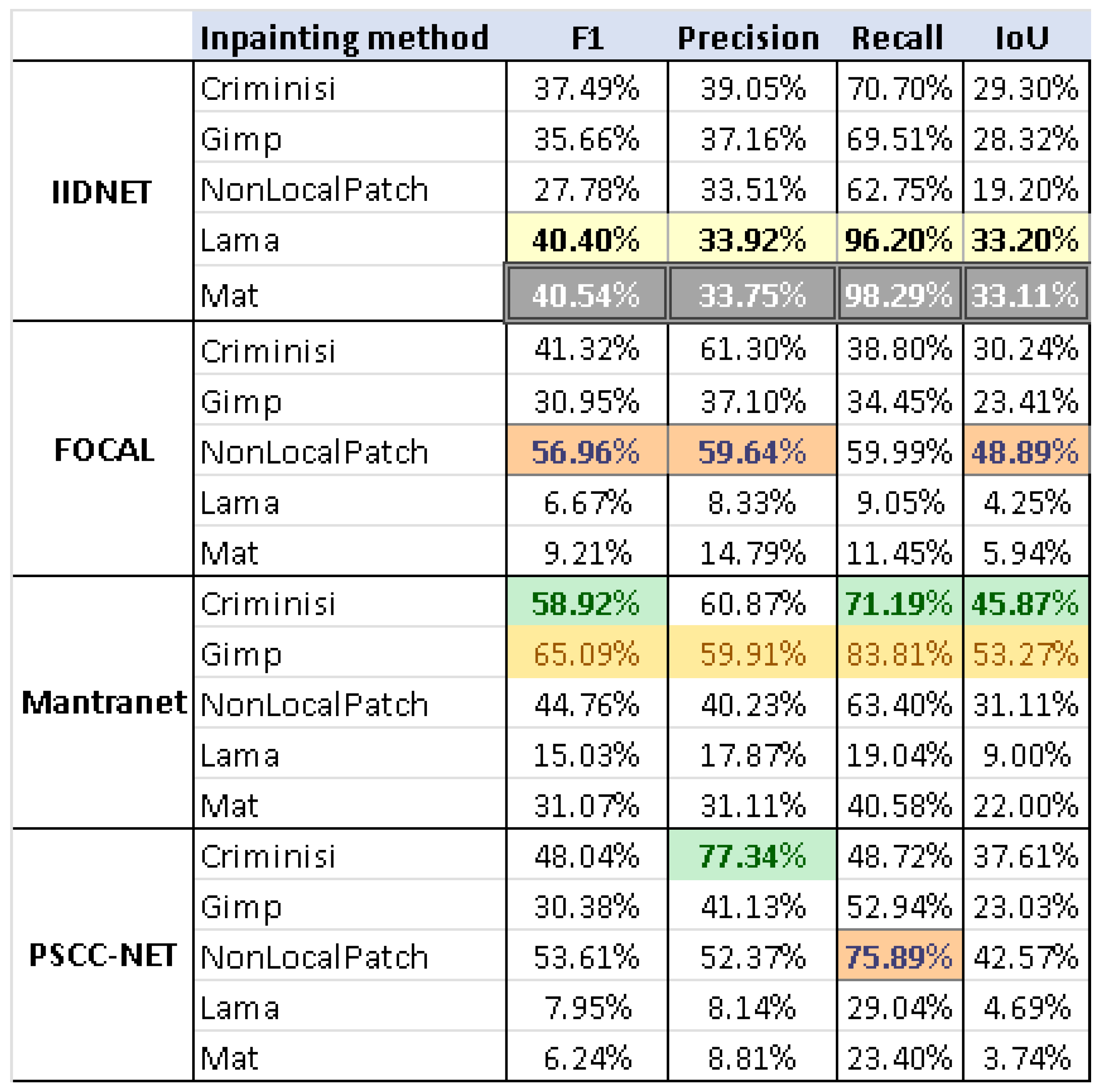

5. Results and Discussion

Author Contributions

Data Availability Statement

Conflicts of Interest

References

- M. A. Qureshi and M. Deriche, “A bibliography of pixel-based blind image forgery detection techniques,” Signal Process Image Commun, vol. 39, pp. 46–74, 2015. [CrossRef]

- A. Gokhale, P. Mulay, D. Pramod, and R. Kulkarni, “A Bibliometric Analysis of Digital Image Forensics.” [CrossRef]

- F. Casino et al., “Research Trends, Challenges, and Emerging Topics in Digital Forensics: A Review of Reviews,” IEEE Access, vol. 10, pp. 25464–25493, 2022. [CrossRef]

- NOVA, “NOVA | scienceNOW | Profile: Hany Farid | PBS.” https://www.pbs.org/wgbh/nova/sciencenow/0301/03.html (accessed Sep. 09, 2021).

- P. Korus, “Digital image integrity – a survey of protection and verification techniques,” Digit Signal Process, vol. 71, pp. 1–26, Dec. 2017. [CrossRef]

- K. Liu, J. Li, S. Sabahat, and H. Bukhari, “Overview of Image Inpainting and Forensic Technology,” 2022. [CrossRef]

- O. Elharrouss, N. Almaadeed, S. Al-Maadeed, and Y. Akbari, “Image Inpainting: A Review,” Neural Processing Letters, vol. 51, no. 2, pp. 2007–2028, Dec. 2019. [CrossRef]

- D. J. B. Rojas, B. J. T. Fernandes, and S. M. M. I. Fernandes, “A Review on Image Inpainting Techniques and Datasets,” Proceedings - 2020 33rd SIBGRAPI Conference on Graphics, Patterns and Images, SIBGRAPI 2020, pp. 240–247, Nov. 2020. [CrossRef]

- J. Jam, C. Kendrick, K. Walker, V. Drouard, J. G. S. Hsu, and M. H. Yap, “A comprehensive review of past and present image inpainting methods,” Computer Vision and Image Understanding, vol. 203, p. 103147, Feb. 2021. [CrossRef]

- Z. Tauber, Z. N. Li, and M. S. Drew, “Review and preview: Disocclusion by inpainting for image-based rendering,” IEEE Transactions on Systems, Man and Cybernetics Part C: Applications and Reviews, vol. 37, no. 4, pp. 527–540, Jul. 2007. [CrossRef]

- M. Bertalmio, G. Sapiro, V. Caselles, and C. Ballester, “Image inpainting,” in Proceedings of the 27th annual conference on Computer graphics and interactive techniques - SIGGRAPH ’00, New York, New York, USA: ACM Press, 2000, pp. 417–424. [CrossRef]

- M. Bertalmío, A. L. Bertozzi, and G. Sapiro, “Navier-Stokes, fluid dynamics, and image and video inpainting,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 1, 2001. [CrossRef]

- M. Bertalmío, “Contrast invariant inpainting with a 3RD order, optimal PDE,” Proceedings - International Conference on Image Processing, ICIP, vol. 2, pp. 775–778, 2005. [CrossRef]

- T. F. Chan and J. Shen, “Nontexture Inpainting by Curvature-Driven Diffusions,” J Vis Commun Image Represent, vol. 12, no. 4, pp. 436–449, Dec. 2001. [CrossRef]

- T. F. Chan, S. H. Kang, and J. Shen, “Euler’s Elastica and Curvature-Based Inpainting,” vol. 63, no. 2, pp. 564–592, Jul. 2006. [CrossRef]

- D. Tschumperlé, “Fast anisotropic smoothing of multi-valued images using curvature-preserving PDE’s,” Int J Comput Vis, vol. 68, no. 1, pp. 65–82, Jun. 2006. [CrossRef]

- A. Telea, “An Image Inpainting Technique Based on the Fast Marching Method,” vol. 9, no. 1, pp. 23–34, Jan. 2012. [CrossRef]

- C. B. Schönlieb and A. Bertozzi, “Unconditionally stable schemes for higher order inpainting,” Commun Math Sci, vol. 9, no. 2, pp. 413–457, 2011. [CrossRef]

- P. Jidesh and S. George, “Gauss curvature-driven image inpainting for image reconstruction,” vol. 37, no. 1, pp. 122–133, Jan. 2014. [CrossRef]

- G. Sridevi and S. Srinivas Kumar, “p-Laplace Variational Image Inpainting Model Using Riesz Fractional Differential Filter,” International Journal of Electrical and Computer Engineering (IJECE), vol. 7, no. 2, pp. 850–857, Apr. 2017. [CrossRef]

- G. Sridevi and S. Srinivas Kumar, “Image Inpainting and Enhancement using Fractional Order Variational Model,” Def Sci J, vol. 67, no. 3, pp. 308–315, Apr. 2017. [CrossRef]

- G. Sridevi and S. Srinivas Kumar, “Image Inpainting Based on Fractional-Order Nonlinear Diffusion for Image Reconstruction,” Circuits Syst Signal Process, vol. 38, pp. 3802–3817, 2019. [CrossRef]

- S. Gamini, V. V. Gudla, and C. H. Bindu, “Fractional-order Diffusion based Image Denoising Model,” International Journal of Electrical and Electronics Research, vol. 10, no. 4, pp. 837–842, 2022. [CrossRef]

- A. A. Efros and T. K. Leung, “Texture Synthesis by Non-parametric Sampling,” 1999.

- A. Criminisi, P. Pérez, and K. Toyama, “Region filling and object removal by exemplar-based image inpainting,” IEEE Transactions on Image Processing, vol. 13, no. 9, pp. 1200–1212, Sep. 2004. [CrossRef]

- N. Kumar, L. Zhang, and S. Nayar, “What is a good nearest neighbors algorithm for finding similar patches in images?,” Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 5303 LNCS, no. PART 2, pp. 364–378, 2008. [CrossRef]

- “PatchMatch: A Randomized Correspondence Algorithm for Structural Image Editing.” https://gfx.cs.princeton.edu/pubs/Barnes_2009_PAR/ (accessed May 19, 2023).

- T. Ružić and A. Pižurica, “Context-aware patch-based image inpainting using Markov random field modeling,” IEEE Transactions on Image Processing, vol. 24, no. 1, pp. 444–456, Jan. 2015. [CrossRef]

- K. H. Jin and J. C. Ye, “Annihilating Filter-Based Low-Rank Hankel Matrix Approach for Image Inpainting,” IEEE Transactions on Image Processing, vol. 24, no. 11, pp. 3498–3511, Nov. 2015. [CrossRef]

- N. Kawai, T. Sato, and N. Yokoya, “Diminished Reality Based on Image Inpainting Considering Background Geometry,” IEEE Trans Vis Comput Graph, vol. 22, no. 3, pp. 1236–1247, Mar. 2016. [CrossRef]

- Q. Guo, S. Gao, X. Zhang, Y. Yin, and C. Zhang, “Patch-Based Image Inpainting via Two-Stage Low Rank Approximation,” IEEE Trans Vis Comput Graph, vol. 24, no. 6, pp. 2023–2036, Jun. 2018. [CrossRef]

- H. Lu, Q. Liu, M. Zhang, Y. Wang, and X. Deng, “Gradient-based low rank method and its application in image inpainting,” Multimed Tools Appl, vol. 77, no. 5, pp. 5969–5993, Mar. 2018. [CrossRef]

- L. Shen, Y. Xu, and X. Zeng, “Wavelet inpainting with the ℓ0 sparse regularization,” Appl Comput Harmon Anal, vol. 41, no. 1, pp. 26–53, Jul. 2016. [CrossRef]

- B. M. Waller, M. S. Nixon, and J. N. Carter, “Image reconstruction from local binary patterns,” Proceedings - 2013 International Conference on Signal-Image Technology and Internet-Based Systems, SITIS 2013, pp. 118–123, 2013. [CrossRef]

- H. Li, L. Hu, J. Liu, J. Zhang, and T. Ma, “A review of advances in image inpainting research,” The Imaging Science Journal, 2023. [CrossRef]

- D. Rasaily and M. Dutta, “Comparative theory on image inpainting: A descriptive review,” 2017 International Conference on Energy, Communication, Data Analytics and Soft Computing, ICECDS 2017, pp. 2925–2930, Jun. 2018. [CrossRef]

- B. Shen, W. Hu, Y. Zhang, and Y. J. Zhang, “Image inpainting via sparse representation,” ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing - Proceedings, pp. 697–700, 2009. [CrossRef]

- Z. Xu and J. Sun, “Image inpainting by patch propagation using patch sparsity,” IEEE Transactions on Image Processing, vol. 19, no. 5, pp. 1153–1165, May 2010. [CrossRef]

- P. Tiefenbacher, M. Sirch, M. Babaee, and G. Rigoll, “Wavelet contrast-based image inpainting with sparsity-driven initialization,” Proceedings - International Conference on Image Processing, ICIP, vol. 2016-August, pp. 3528–3532, Aug. 2016. [CrossRef]

- A. Bugeau, M. Bertalmío, V. Caselles, and G. Sapiro, “A comprehensive framework for image inpainting,” IEEE Transactions on Image Processing, vol. 19, no. 10, pp. 2634–2645, Oct. 2010. [CrossRef]

- J. F. Aujol, S. Ladjal, and S. Masnou, “Exemplar-Based Inpainting from a Variational Point of View,” vol. 42, no. 3, pp. 1246–1285, May 2010. [CrossRef]

- W. Casaca, M. Boaventura, M. P. De Almeida, and L. G. Nonato, “Combining anisotropic diffusion, transport equation and texture synthesis for inpainting textured images,” Pattern Recognit Lett, vol. 36, no. 1, pp. 36–45, Jan. 2014. [CrossRef]

- D. Pathak, P. Krahenbuhl, J. Donahue, T. Darrell, and A. A. Efros, “Context Encoders: Feature Learning by Inpainting,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2016-December, pp. 2536–2544, Dec. 2016. [CrossRef]

- Z. Qin, Q. Zeng, Y. Zong, and F. Xu, “Image inpainting based on deep learning: A review,” Displays, vol. 69, p. 102028, Sep. 2021. [CrossRef]

- R. Suvorov et al., “Resolution-robust Large Mask Inpainting with Fourier Convolutions,” Proceedings - 2022 IEEE/CVF Winter Conference on Applications of Computer Vision, WACV 2022, pp. 3172–3182, 2022. [CrossRef]

- Z. Lu, J. Jiang, J. Huang, G. Wu, and X. Liu, “GLaMa: Joint Spatial and Frequency Loss for General Image Inpainting,” IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, vol. 2022-June, pp. 1300–1309, May 2022. [CrossRef]

- P. Shamsolmoali, M. Zareapoor, and E. Granger, “Image Completion Via Dual-Path Cooperative Filtering,” ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1–5, Jun. 2023. [CrossRef]

- Z. Liu, P. Luo, X. Wang, and X. Tang, “Deep Learning Face Attributes in the Wild,” 2015. [CrossRef]

- B. Zhou, A. Lapedriza, A. Khosla, A. Oliva, and A. Torralba, “Places: A 10 Million Image Database for Scene Recognition,” IEEE Trans Pattern Anal Mach Intell, vol. 40, no. 6, pp. 1452–1464, Jun. 2018. [CrossRef]

- A. Lugmayr, M. Danelljan, A. Romero, F. Yu, R. Timofte, and L. Van Gool, “RePaint: Inpainting using Denoising Diffusion Probabilistic Models,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2022-June, pp. 11451–11461, Jan. 2022. [CrossRef]

- R. K. Cho, K. Sood, and C. S. C. Channapragada, “Image Repair and Restoration Using Deep Learning,” AIST 2022 - 4th International Conference on Artificial Intelligence and Speech Technology, 2022. [CrossRef]

- Y. Chen, R. Xia, K. Yang, and K. Zou, “DGCA: High resolution image inpainting via DR-GAN and contextual attention,” Multimed Tools Appl, pp. 1–21, May 2023. [CrossRef]

- P. Jeevan, D. S. Kumar, and A. Sethi, “WavePaint: Resource-efficient Token-mixer for Self-supervised Inpainting,” Jul. 2023, Accessed: Sep. 10, 2023. [Online]. Available: https://arxiv.org/abs/2307.00407v1.

- P. Esser, R. Rombach, and B. Ommer, “Taming transformers for high-resolution image synthesis,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 12868–12878, 2021. [CrossRef]

- H. Farid, “Image forgery detection,” IEEE Signal Process Mag, vol. 26, no. 2, pp. 16–25, 2009. [CrossRef]

- M. Zanardelli, F. Guerrini, R. Leonardi, and N. Adami, “Image forgery detection: A survey of recent deep-learning approaches,” Multimed Tools Appl, vol. 82, no. 12, pp. 17521–17566, May 2022. [CrossRef]

- N. T. Pham and C. S. Park, “Toward Deep-Learning-Based Methods in Image Forgery Detection: A Survey,” IEEE Access, vol. 11, pp. 11224–11237, 2023. [CrossRef]

- H. Li, W. Luo, and J. Huang, “Localization of Diffusion-Based Inpainting in Digital Images,” IEEE Transactions on Information Forensics and Security, vol. 12, no. 12, pp. 3050–3064, Dec. 2017. [CrossRef]

- Y. Zhang, F. Ding, S. Kwong, and G. Zhu, “Feature pyramid network for diffusion-based image inpainting detection,” Inf Sci (N Y), vol. 572, pp. 29–42, Sep. 2021. [CrossRef]

- Y. Zhang, T. Liu, C. Cattani, Q. Cui, and S. Liu, “Diffusion-based image inpainting forensics via weighted least squares filtering enhancement,” Multimed Tools Appl, vol. 80, no. 20, pp. 30725–30739, Aug. 2021. [CrossRef]

- A. K. Al-Jaberi, A. Asaad, S. A. Jassim, and N. Al-Jawad, “Topological Data Analysis for Image Forgery Detection,” Indian Journal of Forensic Medicine & Toxicology, vol. 14, no. 3, pp. 1745–1751, Jul. 2020. [CrossRef]

- V. Christlein, C. Riess, J. Jordan, C. Riess, and E. Angelopoulou, “An Evaluation of Popular Copy-Move Forgery Detection Approaches,” IEEE Transactions on Information Forensics and Security. Accessed: Feb. 06, 2022. [Online]. Available: http://www5.cs.fau.de/our-team.

- Q. Wu, S. J. Sun, W. Zhu, G. H. Li, and D. Tu, “Detection of digital doctoring in exemplar-based inpainted images,” Proceedings of the 7th International Conference on Machine Learning and Cybernetics, ICMLC, vol. 3, pp. 1222–1226, 2008. [CrossRef]

- I. C. Chang, J. C. Yu, and C. C. Chang, “A forgery detection algorithm for exemplar-based inpainting images using multi-region relation,” Image Vis Comput, vol. 31, no. 1, pp. 57–71, Jan. 2013. [CrossRef]

- Z. Liang, G. Yang, X. Ding, and L. Li, “An efficient forgery detection algorithm for object removal by exemplar-based image inpainting,” J Vis Commun Image Represent, vol. 30, pp. 75–85, Jul. 2015. [CrossRef]

- K. S. Bacchuwar, Aakashdeep, and K. R. Ramakrishnan, “A jump patch-block match algorithm for multiple forgery detection,” Proceedings - 2013 IEEE International Multi Conference on Automation, Computing, Control, Communication and Compressed Sensing, iMac4s 2013, pp. 723–728, 2013. [CrossRef]

- J. Wang, K. Lu, D. Pan, N. He, and B. kun Bao, “Robust object removal with an exemplar-based image inpainting approach,” Neurocomputing, vol. 123, pp. 150–155, Jan. 2014. [CrossRef]

- D. Zhang, Z. Liang, G. Yang, Q. Li, L. Li, and X. Sun, “A robust forgery detection algorithm for object removal by exemplar-based image inpainting,” Multimed Tools Appl, vol. 77, pp. 11823–11842, 2018. [CrossRef]

- J. C. Lee, “Copy-move image forgery detection based on Gabor magnitude,” J Vis Commun Image Represent, vol. 31, pp. 320–334, Aug. 2015. [CrossRef]

- X. Jin, Y. Su, L. Zou, Y. Wang, P. Jing, and Z. J. Wang, “Sparsity-based image inpainting detection via canonical correlation analysis with low-rank constraints,” IEEE Access, vol. 6, pp. 49967–49978, Aug. 2018. [CrossRef]

- G. Mahfoudi, F. Morain-Nicolier, F. Retraint, and M. Pic, “Object-Removal Forgery Detection through Reflectance Analysis,” 2020 IEEE International Symposium on Signal Processing and Information Technology, ISSPIT 2020, Dec. 2020. [CrossRef]

- P. Kakar, N. Sudha, and W. Ser, “Exposing digital image forgeries by detecting discrepancies in motion blur,” IEEE Trans Multimedia, vol. 13, no. 3, pp. 443–452, Jun. 2011. [CrossRef]

- H. R. Chennamma and L. Rangarajan, “Image Splicing Detection Using Inherent Lens Radial Distortion,” IJCSI International Journal of Computer Science Issues, vol. 7, pp. 1694–0814, May 2011, Accessed: Jun. 05, 2023. [Online]. Available: https://arxiv.org/abs/1105.4712v1.

- J. Lukáš, J. Fridrich, and M. Goljan, “Detecting digital image forgeries using sensor pattern noise,” vol. 6072, pp. 362–372, Feb. 2006. [CrossRef]

- B. Mahdian and S. Saic, “Using noise inconsistencies for blind image forensics,” Image Vis Comput, vol. 27, no. 10, pp. 1497–1503, Sep. 2009. [CrossRef]

- P. Ferrara, T. Bianchi, A. De Rosa, and A. Piva, “Image forgery localization via fine-grained analysis of CFA artifacts,” IEEE Transactions on Information Forensics and Security, vol. 7, no. 5, pp. 1566–1577, 2012. [CrossRef]

- L. Shen, G. Yang, L. Li, and X. Sun, “Robust detection for object removal with post-processing by exemplar-based image inpainting,” ICNC-FSKD 2017 - 13th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery, pp. 2730–2736, Jun. 2018. [CrossRef]

- X. Zhu, Y. Qian, X. Zhao, B. Sun, and Y. Sun, “A deep learning approach to patch-based image inpainting forensics,” Signal Process Image Commun, vol. 67, pp. 90–99, Sep. 2018. [CrossRef]

- H. Li and J. Huang, “Localization of deep inpainting using high-pass fully convolutional network,” Proceedings of the IEEE International Conference on Computer Vision, vol. 2019-October, pp. 8300–8309, Oct. 2019. [CrossRef]

- M. Lu and S. Niu, “A Detection Approach Using LSTM-CNN for Object Removal Caused by Exemplar-Based Image Inpainting,” Electronics, vol. 9, no. 5, p. 858, May 2020. [CrossRef]

- N. Kumar and T. Meenpal, “Semantic segmentation-based image inpainting detection,” Lecture Notes in Electrical Engineering, vol. 661, pp. 665–677, 2021. [CrossRef]

- A. Li et al., “Noise Doesn’t Lie: Towards Universal Detection of Deep Inpainting,” IJCAI International Joint Conference on Artificial Intelligence, pp. 786–792, Jun. 2021. [CrossRef]

- X. Zhu, J. Lu, H. Ren, H. Wang, and B. Sun, “A transformer–CNN for deep image inpainting forensics,” Visual Computer, pp. 1–15, Aug. 2022. [CrossRef]

- Y. Zhang, Z. Fu, S. Qi, M. Xue, Z. Hua, and Y. Xiang, “Localization of Inpainting Forgery With Feature Enhancement Network,” IEEE Trans Big Data, Jun. 2022. [CrossRef]

- H. Wu and J. Zhou, “IID-Net: Image Inpainting Detection Network via Neural Architecture Search and Attention,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 32, no. 3, pp. 1172–1185, Mar. 2022. [CrossRef]

- B. Bayar and M. C. Stamm, “Constrained Convolutional Neural Networks: A New Approach Towards General Purpose Image Manipulation Detection,” IEEE Transactions on Information Forensics and Security, vol. 13, no. 11, pp. 2691–2706, Nov. 2018. [CrossRef]

- P. Zhou, X. Han, V. I. Morariu, and L. S. Davis, “Learning Rich Features for Image Manipulation Detection,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 1053–1061, Dec. 2018. [CrossRef]

- Y. Zhou, H. Wang, Q. Zeng, R. Zhang, and S. Meng, “A Discriminative Multi-Channel Noise Feature Representation Model for Image Manipulation Localization,” ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1–5, Jun. 2023. [CrossRef]

- X. Liu, Y. Liu, J. Chen, and X. Liu, “PSCC-Net: Progressive Spatio-Channel Correlation Network for Image Manipulation Detection and Localization,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 32, no. 11, pp. 7505–7517, Nov. 2022. [CrossRef]

- J. Wang et al., “Deep High-Resolution Representation Learning for Visual Recognition,” IEEE Trans Pattern Anal Mach Intell, vol. 43, no. 10, pp. 3349–3364, Oct. 2021. [CrossRef]

- Y. Wu, W. AbdAlmageed, and P. Natarajan, “ManTra-Net: Manipulation tracing network for detection and localization of image forgeries with anomalous features,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2019-June, pp. 9535–9544, Jun. 2019. [CrossRef]

- X. Hu, Z. Zhang, Z. Jiang, S. Chaudhuri, Z. Yang, and R. Nevatia, “SPAN: Spatial Pyramid Attention Network for Image Manipulation Localization,” Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 12366 LNCS, pp. 312–328, 2020. [CrossRef]

- H. Sharma, N. Kanwal, and R. S. Batth, “An Ontology of Digital Video Forensics: Classification, Research Gaps & Datasets,” Proceedings of 2019 International Conference on Computational Intelligence and Knowledge Economy, ICCIKE 2019, pp. 485–491, Dec. 2019. [CrossRef]

- J. Zhang, Y. Su, and M. Zhang, “Exposing digital video forgery by ghost shadow artifact,” 1st ACM Workshop on Multimedia in Forensics - MiFor’09, Co-located with the 2009 ACM International Conference on Multimedia, MM’09, pp. 49–53, 2009. [CrossRef]

- S. Das, G. Darsan, L. Shreyas, and D. Devan, “Blind Detection Method for Video Inpainting Forgery,” Int J Comput Appl, vol. 60, no. 11, ISSN 0975-8887, 2012.

- C. S. Lin and J. J. Tsay, “A passive approach for effective detection and localization of region-level video forgery with spatio-temporal coherence analysis,” Digit Investig, vol. 11, no. 2, pp. 120–140, 2014. [CrossRef]

- S. Bai, H. Yao, R. Ni, and Y. Zhao, “Detection and Localization of Video Object Removal by Spatio-Temporal LBP Coherence Analysis,” Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 11903 LNCS, pp. 244–254, 2019. [CrossRef]

- M. Aloraini, M. Sharifzadeh, and D. Schonfeld, “Sequential and Patch Analyses for Object Removal Video Forgery Detection and Localization,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 31, no. 3, pp. 917–930, Mar. 2021. [CrossRef]

- M. Aloraini, M. Sharifzadeh, C. Agarwal, and D. Schonfeld, “Statistical sequential analysis for object-based video forgery detection,” IS&T International Symposium on Electronic Imaging Science and Technology, vol. 2019, no. 5, p. 543, Jan. 2019. [CrossRef]

- X. Jin, Z. He, J. Xu, Y. Wang, and Y. Su, “Object-Based Video Forgery Detection via Dual-Stream Networks,” in 2021 IEEE International Conference on Multimedia and Expo (ICME), 2021, pp. 1–6. [CrossRef]

- A. V. Subramanyam and S. Emmanuel, “Video forgery detection using HOG features and compression properties,” 2012 IEEE 14th International Workshop on Multimedia Signal Processing, MMSP 2012 - Proceedings, pp. 89–94, 2012. [CrossRef]

- R. Dixit and R. Naskar, “Review, analysis and parameterisation of techniques for copy–move forgery detection in digital images,” IET Image Process, vol. 11, no. 9, pp. 746–759, Sep. 2017. [CrossRef]

- G. Kaur, N. Singh, and M. Kumar, “Image forgery techniques: A review,” Springer Netherlands, 2022. [CrossRef]

- S. Teerakanok and T. Uehara, “Copy-Move Forgery Detection: A State-of-the-Art Technical Review and Analysis,” IEEE Access, vol. 7, pp. 40550–40568, 2019. [CrossRef]

- I. Amerini, L. Ballan, R. Caldelli, A. Del Bimbo, and G. Serra, “A SIFT-based forensic method for copy-move attack detection and transformation recovery,” IEEE Transactions on Information Forensics and Security, vol. 6, no. 3, pt. 2, pp. 1099–1110, Sep. 2011. [CrossRef]

- “CoMoFoD - New database for copy-move forgery detection,” IEEE Xplore. [Online]. Available: https://ieeexplore.ieee.org/document/6658316 (accessed Jun. 06, 2023).

- J. Dong, W. Wang, and T. Tan, “CASIA image tampering detection evaluation database,” 2013 IEEE China Summit and International Conference on Signal and Information Processing, ChinaSIP 2013 - Proceedings, pp. 422–426, 2013. [CrossRef]

- N. T. Pham, J.-W. Lee, G.-R. Kwon, and C.-S. Park, “Hybrid Image-Retrieval Method for Image-Splicing Validation.” [CrossRef]

- B. Wen, Y. Zhu, R. Subramanian, T. T. Ng, X. Shen, and S. Winkler, “COVERAGE - A novel database for copy-move forgery detection,” Proceedings - International Conference on Image Processing, ICIP, vol. 2016-August, pp. 161–165, Aug. 2016. [CrossRef]

- P. Korus and J. Huang, “Multi-Scale Fusion for Improved Localization of Malicious Tampering in Digital Images,” IEEE Transactions on Image Processing, vol. 25, no. 3, pp. 1312–1326, Mar. 2016. [CrossRef]

- P. Korus and J. Huang, “Multi-Scale Analysis Strategies in PRNU-Based Tampering Localization,” IEEE Transactions on Information Forensics and Security, vol. 12, no. 4, pp. 809–824, Apr. 2017. [CrossRef]

- D. T. Dang-Nguyen, C. Pasquini, V. Conotter, and G. Boato, “RAISE - A raw images dataset for digital image forensics,” Proceedings of the 6th ACM Multimedia Systems Conference, MMSys 2015, pp. 219–224, Mar. 2015. [CrossRef]

- H. Guan et al., “MFC datasets: Large-scale benchmark datasets for media forensic challenge evaluation,” Proceedings - 2019 IEEE Winter Conference on Applications of Computer Vision Workshops, WACVW 2019, pp. 63–72, Feb. 2019. [CrossRef]

- H. Guan et al., “NISTIR 8377 User Guide for NIST Media Forensic Challenge (MFC) Datasets.” [CrossRef]

- G. Mahfoudi, B. Tajini, F. Retraint, F. Morain-Nicolier, J. L. Dugelay, and M. Pic, “Defacto: Image and face manipulation dataset,” European Signal Processing Conference, vol. 2019-September, Sep. 2019. [CrossRef]

- M. Daisy, P. Buyssens, D. Tschumperle, and O. Lezoray, “A smarter exemplar-based inpainting algorithm using local and global heuristics for more geometric coherence,” 2014 IEEE International Conference on Image Processing, ICIP 2014, pp. 4622–4626, Jan. 2014. [CrossRef]

- T. Y. Lin et al., “Microsoft COCO: Common Objects in Context,” Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 8693 LNCS, pt. 5, pp. 740–755, May 2014. [CrossRef]

- A. Novozamsky, S. Saic, and B. Mahdian, “IMD2020: A Large-Scale Annotated Dataset Tailored for Detecting Manipulated Images,” Proceedings - 2020 IEEE Winter Conference on Applications of Computer Vision Workshops, WACVW 2020, pp. 71–80, Mar. 2020. [CrossRef]

- J. Yu, Z. Lin, J. Yang, X. Shen, X. Lu, and T. S. Huang, “Generative Image Inpainting with Contextual Attention,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 5505–5514, Jan. 2018. [CrossRef]

- R. Benenson and V. Ferrari, “From colouring-in to pointillism: Revisiting semantic segmentation supervision,” Oct. 2022. Accessed: Sep. 25, 2023. [Online]. Available: https://arxiv.org/abs/2210.14142v2.

- A. Newson, A. Almansa, Y. Gousseau, and P. Pérez, “Non-Local Patch-Based Image Inpainting,” Image Processing On Line, vol. 7, pp. 373–385, Dec. 2017. [CrossRef]

- The GIMP Development Team. GIMP. Retrieved from https://www.gimp.org.

- W. Li, Z. Lin, K. Zhou, L. Qi, Y. Wang, and J. Jia, “MAT: Mask-Aware Transformer for Large Hole Image Inpainting,” 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), vol. 2022-June, pp. 10748–10758, Jun. 2022. [CrossRef]

- H. Wu, Y. Chen, and J. Zhou, “Rethinking Image Forgery Detection via Contrastive Learning and Unsupervised Clustering,” Aug. 2023, Accessed: Sep. 28, 2023. [Online]. Available: https://arxiv.org/abs/2308.09307v1.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).