Submitted: 27 September 2023

Posted: 28 September 2023


Abstract

Keywords:

I. Introduction

- Simple Activity Recognition. This [1] refers to videos in which a single person performs one task, such as walking or running.

- Temporal Activity Recognition (Localization). This [1] refers to videos in which a single person performs multiple activities over different time spans. Here each individual activity must be recognized and localized within its own time span.

- Spatio-Temporal Activity Recognition. This [1] refers to videos in which multiple people perform multiple actions within a time span. Here each person must be localized separately along with their actions, and the time span of each action must also be tracked.

- Single-Frame CNN. This approach [4] applies an image classification model (a CNN) to each frame of the video independently and then averages the per-frame class probabilities to obtain the final probability.

- Late Fusion. In this approach [4], after the per-frame probabilities are obtained, the averaging is done inside the network itself, in a fusion layer, which then produces the final output probability.

- Early Fusion. This approach [4] operates before the frames are passed to the CNN. The temporal and RGB dimensions of the frames are first fused into a single tensor, and that tensor is then passed into the CNN model.

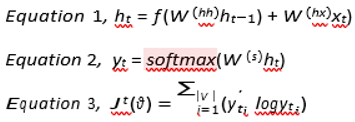
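The Single-Frame CNN and Early Fusion approaches above can be sketched in plain Python. This is a minimal illustration, not the method of [4]: it assumes the per-frame class scores (logits) are already produced by some image classifier that is not shown, and the function names are hypothetical. A real system would use a deep learning framework and tensors rather than nested lists.

```python
# Illustrative sketch; function names are hypothetical.
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Convert one frame's class scores into probabilities."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def single_frame_predict(frame_logits: List[List[float]]) -> int:
    """Single-frame CNN: average per-frame probabilities, then pick the top class.

    frame_logits[t] stands in for the output of a hypothetical per-frame
    image classifier on frame t; no real CNN is run here.
    """
    probs = [softmax(f) for f in frame_logits]
    num_classes = len(probs[0])
    avg = [sum(p[c] for p in probs) / len(probs) for c in range(num_classes)]
    return max(range(num_classes), key=lambda c: avg[c])


def early_fuse(frames):
    """Early fusion: stack T frames of shape H x W x 3 into one H x W x 3T input.

    Each frame is a nested list frames[t][y][x] = [r, g, b].
    """
    height, width = len(frames[0]), len(frames[0][0])
    return [[[ch for frame in frames for ch in frame[y][x]]
             for x in range(width)]
            for y in range(height)]
```

Averaging happens only after each frame has been classified on its own, whereas early fusion changes the input itself, so the first layer of the network sees all T frames at once.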

- CNN with LSTM. This approach [4] combines a CNN with an LSTM: the CNN first extracts features from each frame, and the resulting sequence is fed into an LSTM (a many-to-one network) that predicts the output. To exploit long-term dependencies, that is, to take information from earlier frames into account for better prediction, a plain RNN is not suitable because of the vanishing gradient problem, so an LSTM or GRU is used instead.

- 3D CNN / Slow Fusion. This approach [4] uses a 3-dimensional CNN, which preserves the temporal information of the input signal by producing an output volume, whereas a 2D convolution loses the temporal information and produces a single output image. A 3D CNN fuses spatial and temporal information gradually, layer by layer, before producing the output. To learn temporal information across adjacent frames, it takes four-dimensional tensors with two spatial dimensions, one channel dimension, and one temporal dimension (shape H × W × C × T) as input.
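To illustrate why a 3D convolution keeps a temporal axis, the following naive single-channel 3D convolution assumes stride 1, no padding, and a (T, H, W) layout rather than the H × W × C × T layout mentioned above. It is a teaching sketch with hypothetical function names, not an efficient implementation.

```python
# Illustrative sketch; function names are hypothetical.
from typing import List

Volume = List[List[List[float]]]  # indexed as volume[t][y][x]


def conv3d_valid(volume: Volume, kernel: Volume) -> Volume:
    """Naive single-channel 3D convolution (cross-correlation, stride 1, no padding).

    The kernel slides over time as well as space, so the output still has a
    temporal axis, of length T - kt + 1.
    """
    T, H, W = len(volume), len(volume[0]), len(volume[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                acc = 0.0
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += volume[t + dt][y + dy][x + dx] * kernel[dt][dy][dx]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


def shape(volume: Volume):
    """Return (T, H, W) of a nested-list volume."""
    return (len(volume), len(volume[0]), len(volume[0][0]))
```

A 5-frame, 4 × 4 clip convolved with a 3 × 3 × 3 kernel yields an output of shape (3, 2, 2), so three time steps survive. By contrast, a 2D convolution over frames stacked along the channel axis sums over all frames at once and returns a single H × W map per filter.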

HAR With Device

- Tags. Tags are small chips attached to an object to collect information. They are of two types, active and passive: active tags are powered by a battery, whereas passive tags have no battery and draw their power from the reader's radio waves.

- Readers. Readers take the information from the tags by using an antenna.

- Accelerometer. An accelerometer measures acceleration along multiple axes (x, y, z). It is useful for action recognition, fall detection, etc.

- Magnetometer. A magnetometer measures the magnetic field. It is useful for gesture recognition.

- Motion-Based Sensors. Motion sensors detect the motion occurring in an area.

- Proximity Sensors. Proximity sensors recognize activities happening nearby without physical contact.
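As a rough illustration of how triaxial accelerometer readings can feed fall recognition, the sketch below computes the magnitude of each (x, y, z) sample and flags samples above a threshold. The 2.5 g threshold and the function names are hypothetical, chosen only for illustration; practical fall detectors combine such impact cues with further features.

```python
# Illustrative sketch; function names and the threshold are hypothetical.
import math
from typing import List, Tuple

Sample = Tuple[float, float, float]  # (x, y, z) acceleration in units of g


def magnitude(sample: Sample) -> float:
    """Euclidean norm of one triaxial accelerometer reading."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def flag_possible_falls(samples: List[Sample], threshold_g: float = 2.5) -> List[int]:
    """Return the indices of samples whose magnitude exceeds threshold_g.

    The 2.5 g default is an illustrative value, not a validated threshold.
    """
    return [i for i, s in enumerate(samples) if magnitude(s) > threshold_g]
```

A device at rest reads about 1 g (gravity alone), so only a sharp impact such as the reading (2.0, 2.0, 1.0), whose magnitude is 3 g, would be flagged here.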

HAR Without Device

- Posture Recognition. Examples include walking, standing, etc.

- Gesture Recognition. A gesture is a simple activity [2] that happens over a short period of time. Examples include hand signals, waving, and facial expressions.

- Behavior Recognition. Examples include recognizing that a person is smiling or angry.

- Fall Detection. This is very useful for monitoring elderly people in hospitals.

- Daily Activities. This includes household chores, eating, drinking, etc.

- Assisted Activities. This includes walking with the help of another person or an object. It is also very helpful for monitoring people in hospitals.

- Group Activities. This is a more complex type of activity [2] that involves more than two people and some objects, and it can combine gestures, interactions, and actions. Example: a football match.

- Human-Human Activities. Examples include wrestling and fighting.

- Human-Object Activities. Examples include talking on the phone, working on a computer, etc.

- Tracking. Tracking is a crucial part of HAR. It can be done with the Global Positioning System (GPS). It is useful in augmented reality, supply chain management, and many other areas.

- Motion Detection. Motion Detection is the process of recognizing or capturing the movement of any object or person. It is useful in security surveillance.

- People Counting. People counting is the process of counting people in a closed or open environment to estimate the total number. It is useful in crowd management.
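One common way to implement motion detection is frame differencing: compare consecutive grayscale frames and report motion when enough pixels change. The text does not prescribe a method, so this is a minimal sketch under that assumption, with hypothetical function names and threshold values.

```python
# Illustrative sketch; function names and thresholds are hypothetical.
from typing import List

Frame = List[List[int]]  # grayscale frame, frame[y][x] in 0..255


def motion_mask(prev: Frame, curr: Frame, diff_threshold: int = 25) -> List[List[bool]]:
    """Mark pixels whose intensity changed by more than diff_threshold."""
    return [[abs(c - p) > diff_threshold for p, c in zip(prev_row, curr_row)]
            for prev_row, curr_row in zip(prev, curr)]


def motion_detected(prev: Frame, curr: Frame, diff_threshold: int = 25,
                    min_fraction: float = 0.01) -> bool:
    """Report motion when at least min_fraction of the pixels changed."""
    mask = motion_mask(prev, curr, diff_threshold)
    changed = sum(v for row in mask for v in row)  # True counts as 1
    total = sum(len(row) for row in mask)
    return changed / total >= min_fraction
```

Two identical frames produce no motion, while a single pixel jumping from 10 to 200 in a 2 × 2 frame changes 25% of the pixels and is reported as motion.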

II. Background

- A.

- Data Collection

- 1)

-

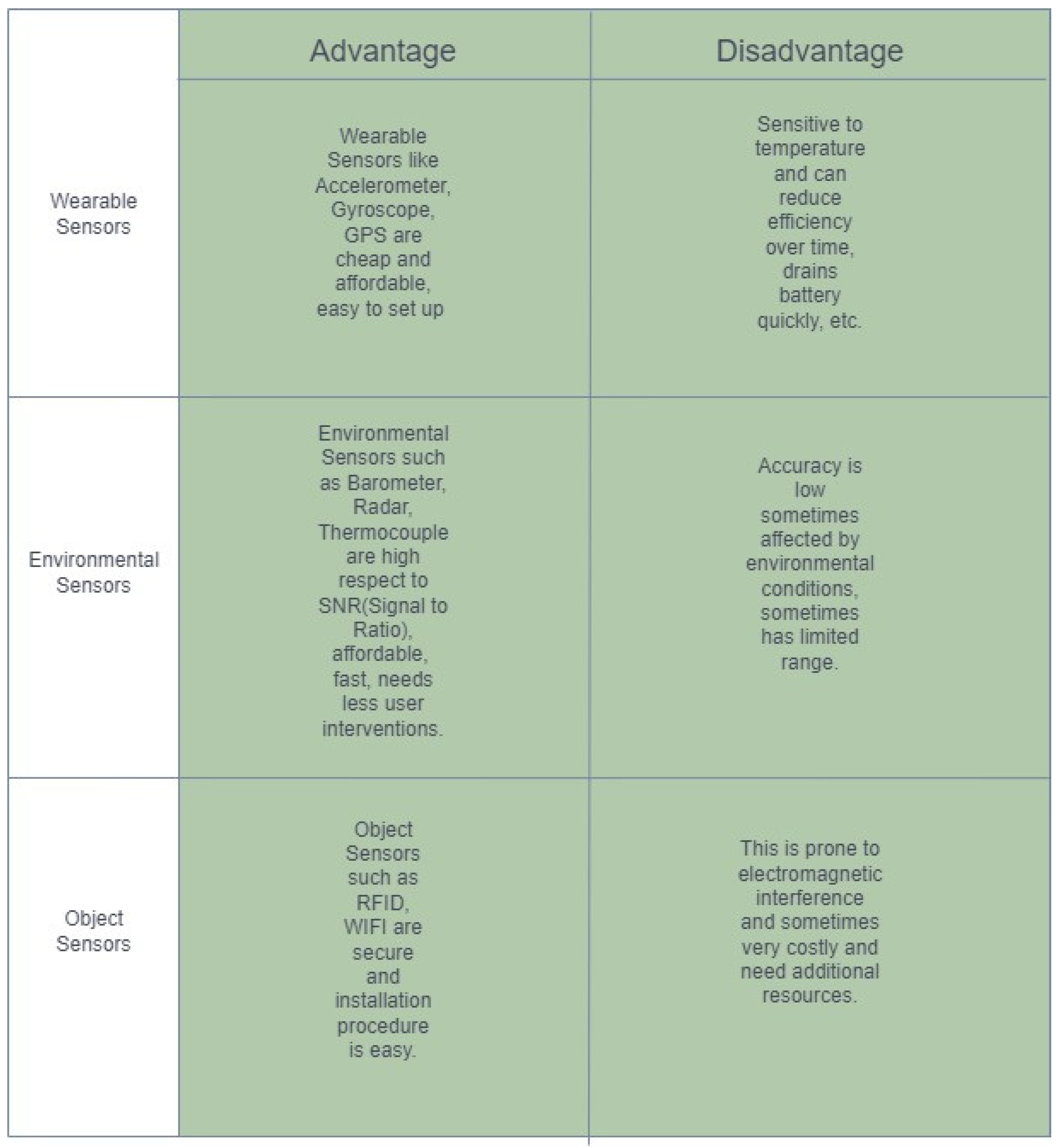

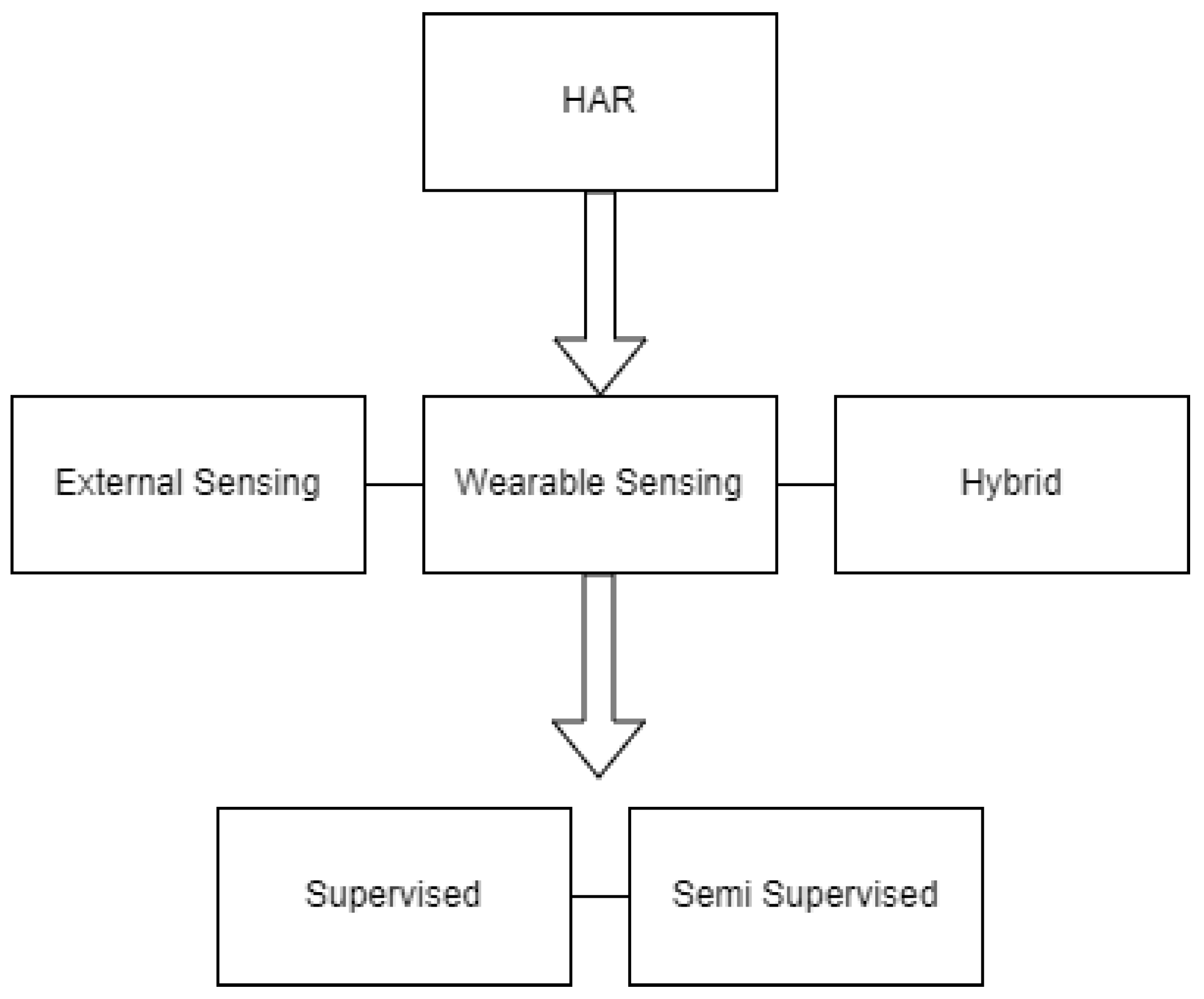

Sensor-Based HAR : Sensor-based HAR [2] is widely used in various real-world applications and the huge data gathers from Wireless Sensor Networks can be classified as Wearable Sensors, Object Sensors, Environmental Sensors, and Hybrid Sensors, which we have discussed broadly in the previous section. According to Lara and Miguel [31], there are several issues in HAR such as the Selection of Attributes and Sensors, Data Collection Protocols, Obtrusiveness, Flexibility, Recognition Performance, Energy Consumption, Processing, etc.

(i) Selection of Attributes and Sensors: Sensors can be selected based on attributes such as location, environment, acceleration, and physiological signals. Environmental attributes such as temperature [31] and humidity can interfere with the measurements; however, environmental attributes alone are not enough to select a sensor, and other conditions need to be considered. Acceleration is a major attribute, measured by sensors such as the triaxial accelerometer, which is built into most mobile phones and smartwatches today and is useful for recognizing walking, running, and other activities. Because it is inexpensive and consumes little battery power, the accelerometer is the most practical sensor to carry. The subject can wear it on the wrist, on the trousers, or on the chest. Several papers have studied the triaxial accelerometer and reported very high detection accuracy, almost 98% [32]. Its major drawback is that it must be placed properly to achieve high detection accuracy; for example, to recognize activities performed at a computer, the device must be positioned accordingly. The position of the device therefore matters most for accuracy. For ambulation activities, the amplitude varies from 2g to 6g [32]. Location can be tracked by the Global Positioning System (GPS), which can track human activities from anywhere if location sharing is enabled; to maintain privacy, encryption, obfuscation [31], and other security measures should be enabled. GPS works in real time outdoors but is not useful for indoor monitoring, so it is often combined with accelerometers for better prediction. Physiological signals, i.e., vital signs such as ECG, blood pressure, and heart rate, require more sensors and more energy for wireless communication to achieve good prediction.

(ii)

(iii) Obtrusiveness: HAR systems should use small devices, since many devices are hard to carry and manage. Some systems require the subject to carry several accelerometers [34], whereas other HAR systems require only a strap or a single small device. According to the paper by Bao et al. [34], two accelerometers are enough to obtain good predictions of activities of daily living (ADL) and ambulation, although it should be noted that they also placed accelerometers on the chest, arm, leg, and other parts of the body.

(iv) Flexibility: A model without flexibility will not do its job properly, and adapting it can be time-consuming. In this sense, the classification model should be independent of gender, age, and other factors. The paper [35] proposes a specific recognition model for each individual, whereas the paper [34] proposes a single monolithic recognition model [31]. In the first experiment, a separate model was developed for each subject; in the second, only one model was developed and evaluated with cross-validation across all subjects. With subject-specific models it is difficult to add new users, while a single model may behave differently for different users. So the monolithic recognition model is the better approach when users are grouped by shared characteristics.

(v) Recognition Performance: Recognition performance can be measured with metrics such as precision, recall, F-measure, and the ROC curve. Changing the attributes can change the prediction, and the more complex the situation, the harder it is to recognize the activity [31].

(vi) Energy Consumption: Energy consumption is a key concern, since the devices must keep delivering critical information. Battery life can be extended, and short-range wireless networks can be preferred over long-range ones. Moreover, data aggregation and compression are popular energy-saving mechanisms discussed by Oscar D. Lara and Miguel A. Labrador [31].

(vii) Processing: According to Oscar D. Lara and Miguel A. Labrador [31], the main concern in processing is whether the recognition task should run on the integration device or on a server. Running it on the mobile device can ease the implementation, but complex processing and storage requirements may call for a server.

2) Vision-Based HAR: Vision-based HAR can be divided into two types depending on the data, RGB data and RGB-D data. According to [31], RGB-D data achieves higher accuracy because multi-modal data provides more information, but RGB data is more widely used because it requires less configuration and computation.

a) RGB Data: According to [31], [37], RGB data combines red, green, and blue channels, each stored as an 8-bit value in the range 0 to 255, which are mixed in various proportions to reproduce colors of the visible spectrum, from warm orange to cool blue, on a digital screen. This data is produced in large quantities and is widely available, so it is very affordable.

b) RGB-D Data: According to [26], RGB-D data combines red, green, and blue channels with depth data captured by RGB-D sensors and cameras, which provide per-pixel depth information [36] that helps recognize human activities more accurately. It has several advantages, such as working well even in pitch-dark environments [31].

B. Pre-processing

- Denoising. Denoising is the technique of reducing noise in the input signal. Signals can be noisy for several reasons, such as device malfunction or mishandling. Denoising can be done with Singular Value Decomposition (SVD) or with filters such as low-pass and high-pass filters.

- Feature Selection. Feature extraction derives the core information from the raw data for processing, using techniques such as Principal Component Analysis (PCA) and Independent Component Analysis (ICA). Feature selection then keeps the features that are most important and essential for prediction and discards redundant information.

- Normalisation. This process rescales features to a common scale; z-score normalization, for example, gives each feature zero mean and unit variance. There are several normalization techniques, such as scaling to a range, clipping, log scaling, and z-score. The most popular is the z-score. The z-score of a point $x$ is

$$x' = \frac{x - \mu}{\sigma}$$

where $x'$ is the z-score of $x$, $\mu$ is the mean, and $\sigma$ is the standard deviation.
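Two of the pre-processing steps above, low-pass denoising and z-score normalisation, can be sketched as follows. The centered moving average stands in for a generic low-pass filter (an assumption, since the text does not fix a filter), the function names are hypothetical, and the z-score uses the population standard deviation and assumes the input is not constant.

```python
# Illustrative sketch; function names are hypothetical.
import statistics
from typing import List


def moving_average(signal: List[float], window: int = 3) -> List[float]:
    """Simple low-pass filter: replace each sample by the mean of a centered window.

    Near the edges the window is truncated to the samples that exist.
    """
    half = window // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def z_score(values: List[float]) -> List[float]:
    """Normalise to zero mean and unit variance: x' = (x - mu) / sigma."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)  # population standard deviation; must be > 0
    return [(v - mu) / sigma for v in values]
```

For example, the alternating signal `[0, 3, 0, 3, 0]` is smoothed to `[1.5, 1.0, 2.0, 1.0, 1.5]`, and the values `[2, 4, 4, 4, 5, 5, 7, 9]` (mean 5, standard deviation 2) map to z-scores starting at -1.5.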

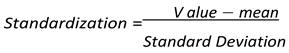

- Dimensionality Reduction. Dimensionality reduction is very useful for removing redundant features, and it can be achieved with Principal Component Analysis (PCA), in which the principal components [40] are orthogonal to each other. To compute PCA, we keep the eigenvalue and eigenvector pairs with the largest eigenvalues. According to [39], the eigenvectors give the directions of maximum variance (spread) in the data, and the eigenvalues are the coefficients attached to the eigenvectors, giving the amount of variance along each of these directions. The principal components therefore point in the directions of maximum variance of the data. According to [41], computing the eigenvalues and eigenvectors requires two preliminary steps: standardization and computation of the covariance matrix.

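1) Standardization: Each variable is rescaled to zero mean and unit variance (its z-score), so that variables with larger ranges do not dominate the covariance matrix.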

2) Covariance Matrix Computation: If Cov(X, Y) is positive, then X and Y increase or decrease together. If Cov(X, Y) is negative, one variable increases when the other decreases.
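The PCA steps described above (standardization, covariance matrix, eigendecomposition) can be illustrated for the two-feature case, where the eigenvalues of the symmetric 2 × 2 covariance matrix have a closed form. This is a minimal sketch under that assumption, with hypothetical function names; with more features one would use a linear-algebra library's eigendecomposition routine instead.

```python
# Illustrative two-feature PCA sketch; function names are hypothetical.
import math
import statistics
from typing import List, Tuple


def standardize(column: List[float]) -> List[float]:
    """Step 1: rescale a feature to zero mean and unit variance."""
    mu, sigma = statistics.mean(column), statistics.pstdev(column)
    return [(v - mu) / sigma for v in column]


def covariance(a: List[float], b: List[float]) -> float:
    """Step 2: population covariance; positive when a and b rise and fall together."""
    ma, mb = statistics.mean(a), statistics.mean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / len(a)


def pca_2d(xs: List[float], ys: List[float]) -> Tuple[List[float], Tuple[float, float], float]:
    """Step 3: first principal component of two features.

    Returns (projected scores, unit eigenvector, eigenvalue), using the
    closed-form eigendecomposition of the covariance matrix [[a, b], [b, c]].
    """
    zx, zy = standardize(xs), standardize(ys)
    a, b, c = covariance(zx, zx), covariance(zx, zy), covariance(zy, zy)
    # Largest eigenvalue of the symmetric 2x2 matrix
    lam = (a + c) / 2 + math.sqrt(((a - c) / 2) ** 2 + b * b)
    # Matching eigenvector (b, lam - a); fall back to an axis when b == 0
    if abs(b) > 1e-12:
        vx, vy = b, lam - a
    else:
        vx, vy = (1.0, 0.0) if a >= c else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    vx, vy = vx / norm, vy / norm
    scores = [px * vx + py * vy for px, py in zip(zx, zy)]
    return scores, (vx, vy), lam
```

For two perfectly correlated features, the first component weights both equally and its eigenvalue is 2, the total variance of the two standardized features, so a single component retains all of the variance.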

- Missing Value Imputation. This process fills in missing feature values (caused by mishandling or device malfunction) using the mean or median.
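Mean or median imputation, as described in the Missing Value Imputation step, can be sketched as below, assuming missing readings are represented as `None` in a single feature column; the function name is hypothetical.

```python
# Illustrative sketch; the function name is hypothetical.
import statistics
from typing import List, Optional


def impute(column: List[Optional[float]], strategy: str = "mean") -> List[float]:
    """Replace missing readings (None) with the mean or median of the observed values."""
    observed = [v for v in column if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    if strategy == "mean":
        fill = statistics.mean(observed)
    elif strategy == "median":
        fill = statistics.median(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in column]
```

For the column `[1.0, None, 3.0, 8.0]`, mean imputation fills in 4.0 and median imputation fills in 3.0. The median is often preferred when a feature contains outliers, since a single extreme reading shifts the mean but not the median.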

C. Feature Extraction and Segmentation

1) Segmentation. This process extracts more meaningful and essential information from videos by splitting them into individual segments and analyzing each one. According to the article on image segmentation [42], the main types of image segmentation, based on the information extracted from the image, are instance segmentation, semantic segmentation, and panoptic segmentation.

- Instance Segmentation: This segments each object in an image individually. It works similarly to object detection, but in addition it delineates the object boundaries [41] and separates overlapping objects in the image.

- Semantic Segmentation: This identifies the different objects in the image and creates a segmentation by densely labeling [42] every pixel with its class: it takes the image, with pixel values from 0 to 255, as input and produces a segmentation map in which each pixel is assigned one of the class labels 0 to n.

- Panoptic Segmentation: This combines instance segmentation and semantic segmentation [42]: every pixel is labeled with a class, and each individual object is identified.

a) Traditional Techniques: Several traditional techniques exist for extracting information from the input image.

i) Thresholding: This process converts the input image into a binary image [42]. The two commonly used categories of thresholding are global thresholding and adaptive thresholding.

- Global Thresholding: For images without varying illumination and contrast [42], global thresholding is a good choice. It divides the input image into foreground and background regions using a single threshold value: a pixel whose intensity is above the threshold is considered foreground; otherwise, it is background.

- Adaptive Thresholding: This process suits images with varying illumination and contrast and is useful for digital scanning [42]. It divides the image into small regions or blocks and classifies the pixels of each region as foreground or background with respect to a threshold computed for that region.
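The two thresholding categories can be contrasted in a short sketch on a grayscale image stored as nested lists. The adaptive variant compares each pixel with the mean of its local neighbourhood, one common choice of local threshold; the function names and the block size and offset parameters are illustrative assumptions.

```python
# Illustrative sketch; function names and parameters are hypothetical.
from typing import List

Image = List[List[int]]  # grayscale image, image[y][x] in 0..255


def global_threshold(img: Image, t: int) -> List[List[int]]:
    """One threshold for the whole image: 1 = foreground, 0 = background."""
    return [[1 if p > t else 0 for p in row] for row in img]


def adaptive_mean_threshold(img: Image, block: int = 3, offset: int = 0) -> List[List[int]]:
    """Compare each pixel with the mean of its block x block neighbourhood.

    Neighbourhoods are clipped at the image border, and `offset` is
    subtracted from the local mean before comparing.
    """
    h, w, r = len(img), len(img[0]), block // 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            ys = range(max(0, y - r), min(h, y + r + 1))
            xs = range(max(0, x - r), min(w, x + r + 1))
            vals = [img[yy][xx] for yy in ys for xx in xs]
            local_t = sum(vals) / len(vals) - offset
            row.append(1 if img[y][x] > local_t else 0)
        out.append(row)
    return out
```

For example, on the single row `[10, 50, 10, 200, 240, 200]`, a global threshold of 100 gives `[0, 0, 0, 1, 1, 1]`, while the 3-pixel adaptive version gives `[0, 1, 0, 1, 1, 0]`: it picks out the locally bright pixel in the dark region but misses the right-most pixel, whose neighbourhood is brighter than it.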

ii) Region-based Segmentation: This process groups pixels into regions or clusters according to size, texture [42], and other attributes. It is commonly done in two ways: split and merge segmentation and graph-based segmentation.

- Split and merge segmentation: This process is used for images without complex or irregular regions. The image is split recursively, and regions that are similar in texture, size, etc. are merged to form larger regions.

- Graph-based segmentation: This process represents the input image as a graph in which the nodes are the pixels and the edges encode the similarity between pixels; the graph is then partitioned into segments.

iii) Edge-based Segmentation: This process segments the image by detecting its edges. Commonly used methods are Canny edge detection, Sobel edge detection, and Laplacian of Gaussian (LoG) edge detection.

- Canny edge detection: This is a multi-stage algorithm [41]. It first applies a Gaussian filter to smooth the image, then computes the intensity gradients, applies non-maximum suppression to thin the edges, and finally uses hysteresis thresholding to remove the weaker edges.

- Sobel edge detection: This process extracts horizontal and vertical edge information from the input image by applying the Sobel operator to compute the gradient magnitude and direction at each pixel [42].

- Laplacian of Gaussian (LoG) edge detection: This process works well for images without complex edges. It first removes noise with a Gaussian filter and then highlights the edges by applying the Laplacian operator [42].
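The Sobel step can be written out directly with the two standard 3 × 3 Sobel kernels. This sketch assumes a grayscale image stored as nested lists, uses a hypothetical function name, and skips border pixels instead of padding them.

```python
# Illustrative sketch; the function name is hypothetical.
import math
from typing import List

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # horizontal intensity changes (vertical edges)
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # vertical intensity changes (horizontal edges)


def sobel(img: List[List[float]]):
    """Gradient magnitude and direction (radians) for the interior pixels of an image.

    Border pixels are skipped, so both outputs are (H - 2) x (W - 2).
    """
    h, w = len(img), len(img[0])
    mag, ang = [], []
    for y in range(1, h - 1):
        mrow, arow = [], []
        for x in range(1, w - 1):
            gx = sum(SOBEL_X[j][i] * img[y + j - 1][x + i - 1] for j in range(3) for i in range(3))
            gy = sum(SOBEL_Y[j][i] * img[y + j - 1][x + i - 1] for j in range(3) for i in range(3))
            mrow.append(math.hypot(gx, gy))   # gradient magnitude
            arow.append(math.atan2(gy, gx))   # gradient direction
        mag.append(mrow)
        ang.append(arow)
    return mag, ang
```

For an image whose columns step from 0 to 10, every interior pixel next to the step has gradient magnitude 40 and direction 0 radians, i.e., the gradient points across the vertical edge.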

iv) Clustering: This process groups pixels into clusters or segments with similar characteristics. Commonly used clustering methods include k-means clustering, mean shift clustering, hierarchical clustering, and fuzzy clustering [42].

- K-means clustering: This process treats the pixels of the input image as data points and partitions them into K clusters by similarity. Using the Euclidean distance, the algorithm first selects K initial centroids [42], assigns each pixel to its nearest centroid, and then updates each centroid to the mean of its assigned pixels. The process repeats until it converges.
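The K-means loop above can be sketched on grayscale intensities, where the Euclidean distance reduces to an absolute difference. The initial centroids are passed in explicitly to keep the example deterministic; this is an assumption, since the text does not specify how the initial centroids are chosen, and the function name is hypothetical.

```python
# Illustrative sketch; the function name is hypothetical.
from typing import List, Tuple


def kmeans_1d(pixels: List[float], init_centroids: List[float],
              max_iter: int = 100) -> Tuple[List[float], List[int]]:
    """K-means on grayscale intensities with user-supplied initial centroids."""
    centroids = list(init_centroids)
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each pixel goes to its nearest centroid
        labels = [min(range(len(centroids)), key=lambda k: abs(p - centroids[k]))
                  for p in pixels]
        # Update step: move each centroid to the mean of its assigned pixels
        new = []
        for k in range(len(centroids)):
            members = [p for p, lab in zip(pixels, labels) if lab == k]
            new.append(sum(members) / len(members) if members else centroids[k])
        if new == centroids:  # converged: the centroids no longer move
            break
        centroids = new
    return centroids, labels
```

For example, `kmeans_1d([10, 12, 11, 200, 205, 210], [0, 255])` converges on its second iteration to centroids `[11.0, 205.0]`, separating the dark pixels from the bright ones.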

b) Deep Learning Techniques: These approaches use encoder-decoder neural networks. The encoder encodes the input image and uses transfer learning to extract features for the segmentation; the decoder then uses the encoder's output to compute a segmentation mask with the same pixel resolution as the input image. Commonly used techniques are U-Net and SegNet, both based on convolutional neural networks. U-Net uses an encoder and a decoder, whereas SegNet is designed for semantic pixel-wise segmentation [42].

- Pixel Accuracy: It measures the overall accuracy of the segmentation as the ratio of correctly classified pixels to the total number of pixels.

- ∙

-

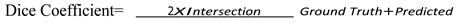

Dice Coefficient: This predicts the similarities of the ground label segmentation to the predicted segmentation by calculating the intersection of these two.

- Jaccard Index (IoU): The intersection over union (IoU) score measures the similarity of the ground-truth segmentation $A$ and the predicted segmentation $B$ as $\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|}$.
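The three segmentation metrics can be computed on binary masks as follows. This is a minimal sketch assuming masks of equal size with 1 for the object and 0 for background, with hypothetical function names; Dice and IoU are undefined when both masks are empty, which the sketch does not handle.

```python
# Illustrative sketch; function names are hypothetical.
from typing import List

Mask = List[List[int]]  # binary mask, 1 = object, 0 = background


def _flatten(m: Mask) -> List[int]:
    return [v for row in m for v in row]


def pixel_accuracy(truth: Mask, pred: Mask) -> float:
    """Correctly classified pixels divided by all pixels."""
    t, p = _flatten(truth), _flatten(pred)
    return sum(a == b for a, b in zip(t, p)) / len(t)


def dice(truth: Mask, pred: Mask) -> float:
    """2|A and B| / (|A| + |B|); assumes at least one mask has a positive pixel."""
    t, p = _flatten(truth), _flatten(pred)
    inter = sum(a and b for a, b in zip(t, p))
    return 2 * inter / (sum(t) + sum(p))


def iou(truth: Mask, pred: Mask) -> float:
    """|A and B| / |A or B|; assumes the union is non-empty."""
    t, p = _flatten(truth), _flatten(pred)
    inter = sum(a and b for a, b in zip(t, p))
    union = sum(a or b for a, b in zip(t, p))
    return inter / union
```

For a ground truth `[[1, 1], [0, 0]]` and a prediction `[[1, 0], [1, 0]]`, pixel accuracy and Dice are both 0.5, while IoU is 1/3, illustrating that IoU penalizes mismatches more strongly than Dice.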

2) Feature Extraction: Features are the parts or patterns [38] of an image that help identify it; for example, corners and edges are features of rectangles and squares. There are both traditional and deep learning techniques for feature detection. Traditional feature detection techniques include Harris Corner Detection, the Shi-Tomasi Corner Detector, Scale-Invariant Feature Transform (SIFT), Speeded-Up Robust Features (SURF), Features from Accelerated Segment Test (FAST), Binary Robust Independent Elementary Features (BRIEF), and Oriented FAST and Rotated BRIEF (ORB). Harris corner detection is used for detecting small corners, and SIFT for larger corners. SURF is a faster version of SIFT. Deep learning techniques include D2-Net, LF-Net, and SuperPoint, which are based on convolutional neural networks.

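a) Harris Corner Detection: This process uses a Gaussian window function to detect corners. The response [38] that determines whether an image region contains a corner is computed from $M$, the 2 × 2 matrix of window-weighted products of the image gradients:

$$R = \det(M) - k\,(\operatorname{trace}(M))^2$$

where $\lambda_1$ and $\lambda_2$ are the eigenvalues of $M$, $\det(M) = \lambda_1 \lambda_2$, $\operatorname{trace}(M) = \lambda_1 + \lambda_2$, and $k$ is an empirical constant (commonly 0.04 to 0.06). A large positive $R$ indicates a corner.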

b) Shi-Tomasi Corner Detector: This is an improvement on the previous procedure. It scores each point by the smaller of the two eigenvalues,

$$R = \min(\lambda_1, \lambda_2)$$

and the point is considered a corner when $R$ is greater than a threshold value $\lambda_{\min}$.
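Both corner scores can be computed from the same matrix M. The sketch below assumes a grayscale image stored as nested lists, uses central-difference gradients, and replaces the Gaussian window with a uniform (box) window for brevity; k = 0.04 is a commonly used value rather than one given in the text, and the function names are hypothetical.

```python
# Illustrative sketch; function names are hypothetical, box window instead of Gaussian.
import math
from typing import List


def structure_tensor(img: List[List[float]], y: int, x: int, r: int = 1):
    """Sum of gradient products over a (2r+1) x (2r+1) window centred on (y, x).

    Requires the window plus one pixel of margin to lie inside the image.
    """
    sxx = sxy = syy = 0.0
    for yy in range(y - r, y + r + 1):
        for xx in range(x - r, x + r + 1):
            ix = (img[yy][xx + 1] - img[yy][xx - 1]) / 2.0  # horizontal gradient
            iy = (img[yy + 1][xx] - img[yy - 1][xx]) / 2.0  # vertical gradient
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
    return sxx, sxy, syy  # M = [[sxx, sxy], [sxy, syy]]


def harris_response(sxx: float, sxy: float, syy: float, k: float = 0.04) -> float:
    det = sxx * syy - sxy * sxy  # = lambda1 * lambda2
    trace = sxx + syy            # = lambda1 + lambda2
    return det - k * trace * trace


def shi_tomasi_response(sxx: float, sxy: float, syy: float) -> float:
    """Smaller eigenvalue of the symmetric 2x2 matrix M."""
    half_trace = (sxx + syy) / 2.0
    return half_trace - math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy * sxy)
```

On a synthetic image containing one bright square, the Harris response is positive at the square's corner, negative along its edges, and zero in flat regions, while the Shi-Tomasi score is positive only at the corner.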

D. Model Selection

1) Convolutional Layer: This is the first layer of a CNN. It extracts features from the images by sliding a K × K kernel over the image, computing a dot product at each position, and sending the output to the next layer [45].

2) Pooling Layer: This second layer sits between the convolutional layer and the fully connected layer. It is very cost-effective [45] because it reduces the size of the feature map and, with it, the number of connections to later layers. The common types are max pooling, average pooling, and sum pooling: as the names imply, max pooling keeps the maximum value in each pooling window, average pooling takes the average, and sum pooling takes the sum.

3) Fully Connected Layer: This layer flattens its input and performs the classification. Each neuron in this layer has weights and a bias [45]. Two or more fully connected layers often perform better than one.

4) Dropout Layer: The dropout layer is introduced in CNNs to reduce overfitting [45]. During training, it randomly drops a subset of neurons, cutting their connections for that training step, which improves generalization.

5) Activation Function: This function determines whether a neuron fires and so forwards [45] information to the next layer. Commonly used activation functions are sigmoid, tanh, softmax, and ReLU; sigmoid is widely used for binary classification and softmax for multi-class classification.
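The layer operations described above can be sketched in plain Python for a single channel. This is a minimal illustration, assuming stride 1 and no padding for the convolution and non-overlapping 2 × 2 windows for pooling; the function names are hypothetical, and real CNNs use optimized framework implementations.

```python
# Illustrative single-channel sketch; function names are hypothetical.
import math
from typing import List

Matrix = List[List[float]]


def conv2d(img: Matrix, kernel: Matrix) -> Matrix:
    """Slide a K x K kernel over the image (stride 1, no padding) taking dot products."""
    k = len(kernel)
    h, w = len(img), len(img[0])
    return [[sum(img[y + i][x + j] * kernel[i][j] for i in range(k) for j in range(k))
             for x in range(w - k + 1)]
            for y in range(h - k + 1)]


def relu(fmap: Matrix) -> Matrix:
    """ReLU activation: negative values become 0."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [[max(fmap[y + i][x + j] for i in range(size) for j in range(size))
             for x in range(0, len(fmap[0]) - size + 1, size)]
            for y in range(0, len(fmap) - size + 1, size)]


def softmax(scores: List[float]) -> List[float]:
    """Turn class scores into probabilities for multi-class classification."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]
```

Chained together, a 3 × 3 input convolved with a 2 × 2 kernel gives a 2 × 2 feature map, and 2 × 2 max pooling reduces it to a single value, showing how pooling shrinks the feature map.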

1) R-CNN. Videos generally pose two problems [5], [6]: first, the objects must be detected, and then they must be classified. For detection, it is essential to draw the correct bounding box around each object we want to detect. However, it is often impossible to know in advance how many bounding boxes are needed, and trying a huge number of candidate regions is not a practical solution, so R-CNN comes into play. It uses the Selective Search algorithm to generate only about 2000 region proposals.

The Selective Search algorithm works as follows:

a) Generate many sub-segmentations of the image to obtain the candidate regions.

b) Recursively combine similar candidate regions, based on color, size, texture, etc., into larger ones.

c) Use the resulting larger regions as the region proposals for object detection.

2) Fast R-CNN. R-CNN [6] is very slow because it runs the CNN separately on each of the 2000 region proposals, which makes real-time testing difficult. Moreover, Selective Search is not a learning algorithm, so it can produce poor candidate proposals. The main difference between R-CNN and Fast R-CNN is that Fast R-CNN feeds the whole input image directly into the CNN, whereas R-CNN feeds each region proposal into the CNN. The region proposals identified by the Selective Search algorithm are then mapped onto the resulting convolutional feature map. These regions are fed into the RoI Pooling layer, which warps each one into a fixed shape, and then into fully connected (FC) layers to produce the RoI feature vector. This vector is fed into a softmax layer to predict the object class and into a bounding-box regressor to obtain the offset values for the bounding box. Fast R-CNN is faster than R-CNN because the convolutional layers run once per image to produce a feature map, rather than once for each of the 2000 candidate regions.

3) Faster R-CNN. R-CNN and Fast R-CNN [6] use the Selective Search algorithm to generate the region proposals, but Faster R-CNN does not. Instead, like Fast R-CNN, it feeds the original image into the convolutional layers to produce a convolutional feature map, which is then fed into a separate network called the Region Proposal Network (RPN). The output proposals are fed into the RoI Pooling layer to classify the object in each proposed region and to produce the offset values for the bounding box.

4) YOLO. YOLO [6] (You Only Look Once) differs from the region-based algorithms discussed above; it is a single-pass object detection algorithm. The input image is divided into an S × S grid, and m bounding boxes are predicted within this grid. For each bounding box, the network outputs class probabilities and the offset values for the box; if a box's class probability is above the threshold value, it is selected and used for object detection. YOLO struggles to detect small objects in an image.
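The RoI Pooling step shared by Fast R-CNN and Faster R-CNN can be sketched as max pooling over a fixed grid of bins. This assumes the region has already been mapped to feature-map coordinates; the integer bin-splitting rule is one simple choice rather than the exact rule of the original implementations, and the function name is hypothetical.

```python
# Illustrative sketch; the function name and bin rule are assumptions.
from typing import List


def roi_max_pool(fmap: List[List[float]], roi, out_h: int = 2, out_w: int = 2) -> List[List[float]]:
    """Max-pool a region of interest on a feature map into a fixed out_h x out_w grid.

    `roi` is (y0, x0, y1, x1) in feature-map cells, end-exclusive, and is assumed
    to be at least out_h x out_w in size. Bin edges use integer division, so
    bins may differ in size by one cell.
    """
    y0, x0, y1, x1 = roi
    h, w = y1 - y0, x1 - x0
    out = []
    for i in range(out_h):
        ys = range(y0 + i * h // out_h, y0 + (i + 1) * h // out_h)
        row = []
        for j in range(out_w):
            xs = range(x0 + j * w // out_w, x0 + (j + 1) * w // out_w)
            row.append(max(fmap[y][x] for y in ys for x in xs))
        out.append(row)
    return out
```

Regions of different sizes, such as 4 × 4 and 3 × 4 cells, both come out as 2 × 2 grids, which is what lets the following fully connected layers accept proposals of any size.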

1) FORGET GATE: This gate forgets, or removes, unwanted information. Its output is an activation function (the sigmoid $\sigma$) applied to the current input $X_t$ multiplied by its weight $U_f$, plus the previous timestep's hidden state $H_{t-1}$ multiplied by its weight $W_f$:

$$f_t = \sigma(X_t U_f + H_{t-1} W_f)$$

2) INPUT GATE: This gate adds new information or modifies existing information. It is computed in the same way, with its own weights $U_i$ and $W_i$:

$$i_t = \sigma(X_t U_i + H_{t-1} W_i)$$

The cell state is then updated as

$$C_t = f_t \odot C_{t-1} + i_t \odot N_t$$

where $\odot$ denotes element-wise multiplication and $N_t$ is the candidate new information, computed from $X_t$ and $H_{t-1}$ with a tanh activation.

3) OUTPUT GATE: This gate controls the output of the cell; because it uses the sigmoid activation function, its value lies between 0 and 1. It is computed from the input at the current timestep and the previous hidden state with its own weights $U_o$ and $W_o$:

$$O_t = \sigma(X_t U_o + H_{t-1} W_o)$$

The hidden state passed to the next timestep is $H_t = O_t \odot \tanh(C_t)$, where $\odot$ denotes element-wise multiplication.
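The gate equations can be combined into a single LSTM timestep. This sketch follows the notation of the gate equations above with biases omitted; the candidate weights (stored under the key `"c"`), the dictionary layout, and the function names are assumptions for illustration, and the candidate uses tanh as in the standard LSTM.

```python
# Illustrative sketch; function names and the weight layout are hypothetical.
import math
from typing import Dict, List

Vec = List[float]
Mat = List[List[float]]


def matvec(v: Vec, m: Mat) -> Vec:
    """Row vector times matrix: (v @ m)[j] = sum_i v[i] * m[i][j]."""
    return [sum(v[i] * m[i][j] for i in range(len(v))) for j in range(len(m[0]))]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t: Vec, h_prev: Vec, c_prev: Vec, U: Dict[str, Mat], W: Dict[str, Mat]):
    """One LSTM timestep (biases omitted).

    U[g] maps the input to gate g and W[g] maps the previous hidden state;
    g is "f" (forget), "i" (input), "o" (output) or "c" (candidate N_t).
    """
    def pre(g: str) -> Vec:
        return [a + b for a, b in zip(matvec(x_t, U[g]), matvec(h_prev, W[g]))]

    f_t = [sigmoid(z) for z in pre("f")]
    i_t = [sigmoid(z) for z in pre("i")]
    o_t = [sigmoid(z) for z in pre("o")]
    n_t = [math.tanh(z) for z in pre("c")]  # candidate new information
    c_t = [f * c + i * n for f, c, i, n in zip(f_t, c_prev, i_t, n_t)]
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t
```

With all weights set to zero, every gate outputs 0.5 and the candidate is 0, so the cell state is simply halved, which makes the role of the forget gate easy to see.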

- True Positives (TP): Number of positive instances classified as positive.

- True Negatives (TN): Number of negative instances classified as negative.

- False Positives (FP): Number of negative instances classified as positive.

- False Negatives (FN): Number of positive instances classified as negative.
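These four counts determine the metrics listed under Recognition Performance. The sketch below computes accuracy, precision, recall, and the F1 score (the balanced F-measure); the function name is hypothetical, and returning 0.0 when a denominator is zero is an assumed convention.

```python
# Illustrative sketch; the function name is hypothetical.
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall and F1 score from confusion-matrix counts."""
    def ratio(num: float, den: float) -> float:
        return num / den if den else 0.0  # assumed convention for empty denominators

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
    }
```

For example, with TP = 8, TN = 5, FP = 2, and FN = 2, precision and recall are both 0.8, so the F1 score is also 0.8, while accuracy is 13/17 ≈ 0.76.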

E. Model Deployment

1) External Sensing Deployment. Here the cameras are placed externally, in the environment, to capture the activities.

2) On-body Sensing Deployment. Here the cameras are worn by the person to capture their activities.

III. Datasets

1) Sensor-Based Datasets. Sensor-based data is collected by sensors such as accelerometers, gyroscopes, magnetometers, and GPS. Some of these datasets are described below.

a) WISDM Dataset. This dataset was created using an accelerometer and contains data on Downstairs, Jogging, Sitting, Standing, Upstairs, and Walking [43]. It has a total of 1,098,207 instances, collected from 36 users.

b) UCI HAR Dataset. This dataset was created using an accelerometer and a gyroscope and contains Standing, Sitting, Lying, Walking, Walking Downstairs, and Walking Upstairs, with a total of 10,929 instances [43].

2) Vision-Based Datasets. Vision-based datasets are collected with cameras rather than the wearable sensors above. Some of these datasets are described below.

a)

b)

c) MEVA Dataset. MEVA [22] is a popular dataset containing 37 types of activities performed by 176 actors over 144 hours, for a total of 66,172 annotated activities. MEVID is a subset of MEVA built from its extended videos, covering activities recorded over 73 days. The hired actors consented to being filmed for hundreds of hours performing staged indoor and outdoor activities, captured by 30 ground-level cameras. The 176 actors wore over 2,237 unique outfits during three weeks spread across two months. The training set contains 104 identities with 485 outfits in 6,338 tracklets, and the test set contains 54 identities with 113 outfits in 1,754 tracklets, together with 316 query tracklets. Tracks have an average length of 592.6 frames, ranging from 1 to 1,000 frames. MEVID provides person re-identification (ReID) data for 289 clips of MEVA data, with 158 global identities staged in 17 locations from 33 viewpoints, comprising more than 1.7 million bounding boxes and 10.46 million frames.

- d)

- e)

- f)

- g)

IV. Related Study

V. Improvements and Conclusion

References

- Hussain, Z., Sheng, M., & Zhang, W. E. (2019). Different approaches for human activity recognition: A survey. arXiv preprint arXiv:1906.05074.

- Dang, L. M., Min, K., Wang, H., Piran, M. J., Lee, C. H., & Moon, H. (2020). Sensor-based and vision-based human activity recognition: A comprehensive survey. Pattern Recognition, 108, 107561.

- Beddiar, D. R., Nini, B., Sabokrou, M., & Hadid, A. (2020). Vision-based human activity recognition: A survey. Multimedia Tools and Applications, 79(41-42), 30509-30555.

- V7labs official website. “Human Activity Recognition” https://www.v7labs.com/blog/human-activity-recognition.

- Medium official website “C3D” https://sh-tsang.medium.com/paper-c3d-learning-spatiotemporal-features-with-3d-convolutional-networks-video-classification-72b49adb4081.

- Towards Data Science official website. “R-CNN, Fast R-CNN, Faster R-CNN, YOLO” https://towardsdatascience.com/r-cnn-fast-r-cnn-faster-r-cnn-yolo-object-detection-algorithms-36d53571365e.

- Gupta, S. (2021). Deep learning based human activity recognition (HAR) using wearable sensor data. International Journal of Information Management Data Insights, 1(2), 100046.

- Ullah, A., Ahmad, J., Muhammad, K., Sajjad, M., & Baik, S. W. (2017). Action recognition in video sequences using deep bi-directional LSTM with CNN features. IEEE access, 6, 1155-1166.

- Montes, A., Salvador, A., Pascual, S., & Giro-i-Nieto, X. (2016). Temporal activity detection in untrimmed videos with recurrent neural networks. arXiv preprint arXiv:1608.08128.

- iMerit official website “Using Neural Networks for Video Classification” https://imerit.net/blog/using-neural-networks-for-video-classification-blog-all-pbm/.

- Orozco, C. I., Buemi, M. E., & Berlles, J. J. (2019). Cnn-lstm architecture for action recognition in videos. In I Simposio Argentino de Imagenes y Vision (SAIV 2019)-JAIIO 48 (Salta).

- LearnOpenCV official website “Introduction to Video Classification and Human Activity Recognition” https://learnopencv.com/introduction-to-video-classification-and-human-activity-recognition/.

- Tran, D., Bourdev, L., Fergus, R., Torresani, L., & Paluri, M. (2015). Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE international conference on computer vision (pp. 4489-4497).

- Girshick, R., Donahue, J., Darrell, T., & Malik, J. (2014). Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 580-587).

- Ullah, A., Ahmad, J., Muhammad, K., Sajjad, M., & Baik, S. W. (2017). Action recognition in video sequences using deep bi-directional LSTM with CNN features. IEEE access, 6, 1155-1166.

- Ji, S., Xu, W., Yang, M., & Yu, K. (2012). 3D convolutional neural networks for human action recognition. IEEE transactions on pattern analysis and machine intelligence, 35(1), 221-231.

- Karpathy, A., Toderici, G., Shetty, S., Leung, T., Sukthankar, R., & Fei-Fei, L. (2014). Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition (pp. 1725-1732).

- Yao, R., Lin, G., Xia, S., Zhao, J., & Zhou, Y. (2020). Video object segmentation and tracking: A survey. ACM Transactions on Intelligent Systems and Technology (TIST), 11(4), 1-47.

- Özyer, T., Ak, D. S., & Alhajj, R. (2021). Human action recognition approaches with video datasets—A survey. Knowledge-Based Systems, 222, 106995.

- twine official website “Best Human Action Video Datasets of 2022” https://www.twine.net/blog/top-human-action-video-datasets/.

- AVA official website “AVA Dataset” https://research.google.com/ava/.

- Davila, D., Du, D., Lewis, B., Funk, C., Van Pelt, J., Collins, R., ... & Clipp, B. (2023). MEVID: Multi-view Extended Videos with Identities for Video Person Re-Identification. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (pp. 1634-1643). [CrossRef]

- Li, A., Thotakuri, M., Ross, D. A., Carreira, J., Vostrikov, A., & Zisserman, A. (2020). The AVA-Kinetics localized human actions video dataset. arXiv preprint arXiv:2005.00214. [CrossRef]

- Abdallah, Z. S., Gaber, M. M., Srinivasan, B., & Krishnaswamy, S. (2018). Activity recognition with evolving data streams: A review. ACM Computing Surveys (CSUR), 51(4), 1-36. [CrossRef]

- Ramasamy Ramamurthy, S., & Roy, N. (2018). Recent trends in machine learning for human activity recognition—A survey. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 8(4), e1254. [CrossRef]

- Wang, P., Li, W., Ogunbona, P., Wan, J., & Escalera, S. (2018). RGB-D-based human motion recognition with deep learning: A survey. Computer Vision and Image Understanding, 171, 118-139. [CrossRef]

- Yang, X., & Tian, Y. (2016). Super normal vector for human activity recognition with depth cameras. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(5), 1028-1039. [CrossRef]

- Ke, S. R., Thuc, H. L. U., Lee, Y. J., Hwang, J. N., Yoo, J. H., & Choi, K. H. (2013). A review on video-based human activity recognition. Computers, 2(2), 88-131. [CrossRef]

- Aggarwal, J. K., & Xia, L. (2014). Human activity recognition from 3D data: A review. Pattern Recognition Letters, 48, 70-80. [CrossRef]

- Wang, J., Chen, Y., Hao, S., Peng, X., & Hu, L. (2019). Deep learning for sensor-based activity recognition: A survey. Pattern Recognition Letters, 119, 3-11. [CrossRef]

- Lara, O. D., & Labrador, M. A. (2012). A survey on human activity recognition using wearable sensors. IEEE Communications Surveys & Tutorials, 15(3), 1192-1209. [CrossRef]

- Khan, A. M., Lee, Y. K., Lee, S. Y., & Kim, T. S. (2010). A triaxial accelerometer-based physical-activity recognition via augmented-signal features and a hierarchical recognizer. IEEE Transactions on Information Technology in Biomedicine, 14(5), 1166-1172. [CrossRef]

- Foerster, F., Smeja, M., & Fahrenberg, J. (1999). Detection of posture and motion by accelerometry: A validation study in ambulatory monitoring. Computers in Human Behavior, 15(5), 571-583. [CrossRef]

- Bao, L., & Intille, S. S. (2004, April). Activity recognition from user-annotated acceleration data. In International Conference on Pervasive Computing (pp. 1-17). Berlin, Heidelberg: Springer Berlin Heidelberg. [CrossRef]

- Berchtold, M., Budde, M., Schmidtke, H. R., & Beigl, M. (2010). An extensible modular recognition concept that makes activity recognition practical. In KI 2010: Advances in Artificial Intelligence: 33rd Annual German Conference on AI, Karlsruhe, Germany, September 21-24, 2010. Proceedings 33 (pp. 400-409). Springer Berlin Heidelberg. [CrossRef]

- Shaikh, M. B., & Chai, D. (2021). RGB-D data-based action recognition: A review. Sensors, 21(12), 4246. [CrossRef]

- TechTarget official website “RGB data” https://www.techtarget.com/whatis/definition/RGB-red-green-and-blue.

- TowardsDataScience official website “Feature Extraction” https://towardsdatascience.com/image-feature-extraction-traditional-and-deep-learning-techniques.

- Medium official website “PCA” https://medium.com/analytics-vidhya/dimensionality-reduction-principal-component-analysis/.

- Pareek, P., & Thakkar, A. (2021). A survey on video-based human action recognition: Recent updates, datasets, challenges, and applications. Artificial Intelligence Review, 54, 2259-2322. [CrossRef]

- Builtin official website “PCA” https://builtin.com/data-science/step-step-explanation-principal-component-analysis.

- Encord official website “Segmentation” https://encord.com/blog/image-segmentation-for-computer-vision-best-practice-guide/.

- Khare, S., Sarkar, S., & Totaro, M. (2020, June). Comparison of sensor-based datasets for human activity recognition in wearable IoT. In 2020 IEEE 6th World Forum on Internet of Things (WF-IoT) (pp. 1-6). IEEE.

- Fan, L., Zhang, F., Fan, H., & Zhang, C. (2019). Brief review of image denoising techniques. Visual Computing for Industry, Biomedicine, and Art, 2, 1-12. [CrossRef]

- Chatterjee, S. (2023). Network Intrusion Detection and Deep Learning Mechanisms (Doctoral dissertation, Florida Atlantic University).

- dos Santos, S. F., Sebe, N., & Almeida, J. (2019, October). CV-C3D: Action recognition on compressed videos with convolutional 3D networks. In 2019 32nd SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI) (pp. 24-30). IEEE.

- Maurer, U., Smailagic, A., Siewiorek, D. P., & Deisher, M. (2006, April). Activity recognition and monitoring using multiple sensors on different body positions. In International Workshop on Wearable and Implantable Body Sensor Networks (BSN’06) (pp. 4-pp). IEEE. [CrossRef]

- Lara, O. D., & Labrador, M. A. (2012, January). A mobile platform for real-time human activity recognition. In 2012 IEEE Consumer Communications and Networking Conference (CCNC) (pp. 667-671). IEEE. [CrossRef]

- Riboni, D., & Bettini, C. (2011). COSAR: Hybrid reasoning for context-aware activity recognition. Personal and Ubiquitous Computing, 15, 271-289. [CrossRef]

- Kao, T. P., Lin, C. W., & Wang, J. S. (2009, July). Development of a portable activity detector for daily activity recognition. In 2009 IEEE International Symposium on Industrial Electronics (pp. 115-120). IEEE. [CrossRef]

- Parkka, J., Ermes, M., Korpipaa, P., Mantyjarvi, J., Peltola, J., & Korhonen, I. (2006). Activity classification using realistic data from wearable sensors. IEEE Transactions on Information Technology in Biomedicine, 10(1), 119-128. [CrossRef]

- V7labs official website “Transfer Learning” https://www.v7labs.com/blog/transfer-learning-guide.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).