Submitted: 12 September 2023

Posted: 14 September 2023

Abstract

Keywords:

1. Introduction

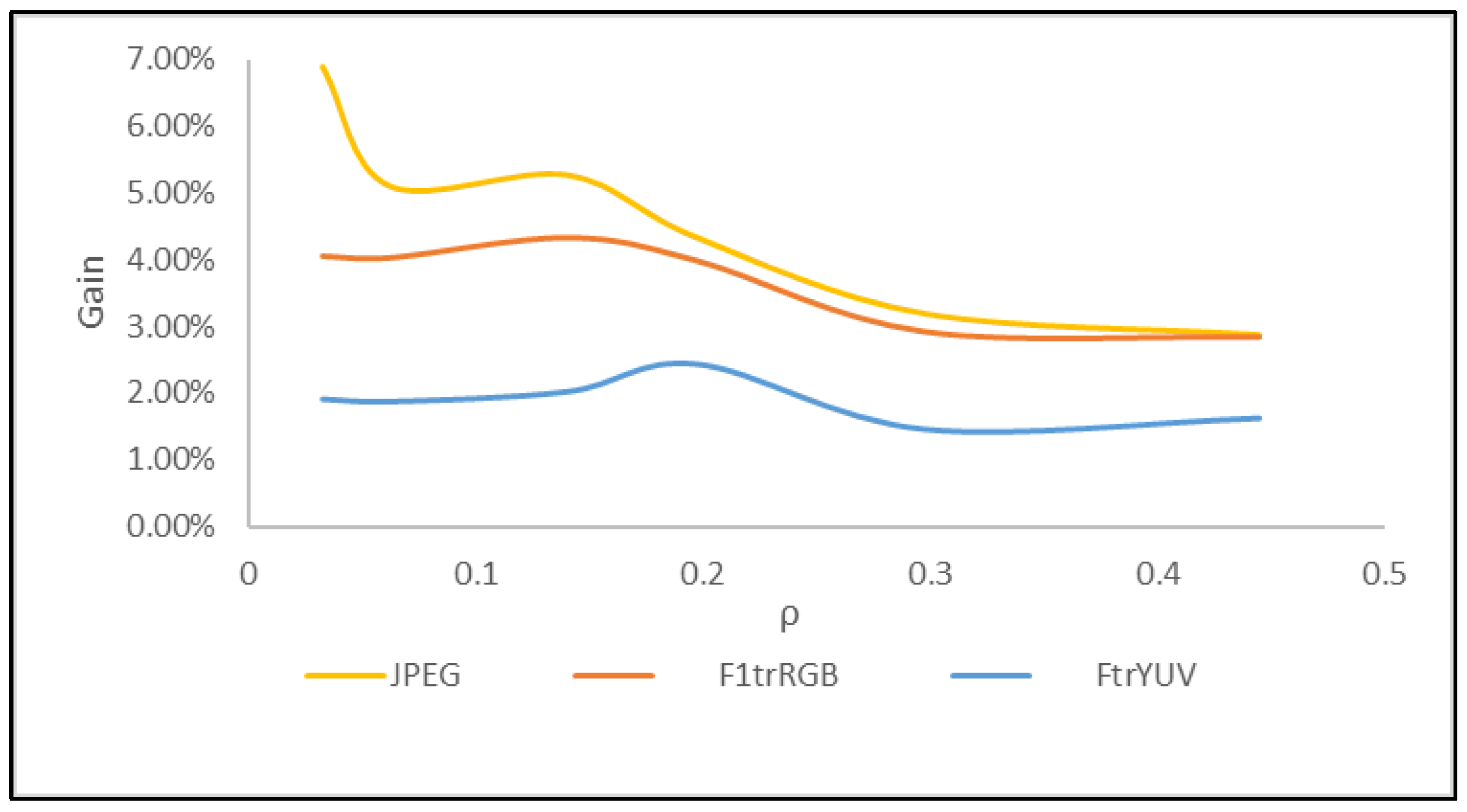

- the bi-dimensional F1-transform offers a trade-off between the quality of the compressed image and CPU time: at the same compression rate it loses less information than the F-transform algorithm, while keeping coding/decoding CPU times acceptable;

- color images are compressed in the YUV space to guarantee high visual quality and to overcome the main drawback of the F1-transform color image compression method in the RGB space [18], which needs more memory to store the information of the compressed image. Compressing the two chrominance channels at a high rate shrinks the matrices that hold the compressed information for those channels, which reduces both memory allocation and CPU time.
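
The memory argument in the second point can be illustrated with a rough count of stored coefficients. The sketch below assumes that the F1-transform keeps three coefficient matrices per channel (one zero-order and two first-order); the function name `stored_values` and all image and block sizes are hypothetical values chosen for illustration, not the ones used in the experiments.

```python
def stored_values(image_size, block_size, compressed_block_size, matrices_per_channel=3):
    """Count the coefficients stored for one channel.

    matrices_per_channel=3 is an assumption for the F1-transform
    (one zero-order and two first-order coefficient matrices).
    """
    n_blocks = (image_size[0] // block_size[0]) * (image_size[1] // block_size[1])
    per_block = compressed_block_size[0] * compressed_block_size[1]
    return n_blocks * per_block * matrices_per_channel


# Illustrative sizes (hypothetical): a 256x256 image split into 16x16 blocks.
image, block = (256, 256), (16, 16)

# RGB: every channel is compressed to 4x4 blocks.
rgb_total = 3 * stored_values(image, block, (4, 4))

# YUV: Y is compressed to 4x4 blocks, U and V more strongly to 2x2 blocks.
yuv_total = stored_values(image, block, (4, 4)) + 2 * stored_values(image, block, (2, 2))

print(rgb_total, yuv_total)  # 36864 18432
```

Under these assumed sizes, the stronger compression of the two chrominance channels halves the number of stored values compared with the RGB layout.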

2. Preliminaries

2.1. The bi-dimensional F-Transform

2.2. The bi-dimensional F1-Transform

2.3. Coding/decoding images using the bi-dimensional F and F1-Transforms

| Algorithm 1a. F-transform image compression |
|---|
| Input: N×M image I with L grey levels; size of the blocks of the source image N(B)×M(B); size of the compressed blocks n(B)×m(B) |
| Output: n×m compressed image IC |

| Algorithm 1b. F-transform image decompression |
|---|
| Input: n×m compressed image IC |
| Output: N×M decoded image ID |
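
The coding and decoding of a single block with the bi-dimensional F-transform can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes a uniform fuzzy partition with triangular basic functions sampled at the pixel positions, and the function names are hypothetical.

```python
def triangular_partition(size, n):
    """Sample n uniform triangular basic functions at the points 0..size-1.

    Assumes 2 <= n <= size, so every basic function covers at least one pixel.
    """
    h = (size - 1) / (n - 1)  # distance between consecutive nodes
    return [[max(0.0, 1.0 - abs(i - k * h) / h) for i in range(size)]
            for k in range(n)]


def f_transform(block, n, m):
    """Direct F-transform: compress an N x M block into n x m components."""
    N, M = len(block), len(block[0])
    A, B = triangular_partition(N, n), triangular_partition(M, m)
    F = [[0.0] * m for _ in range(n)]
    for k in range(n):
        for l in range(m):
            # Weighted mean of the pixels, with weights A_k(i) * B_l(j).
            num = sum(block[i][j] * A[k][i] * B[l][j]
                      for i in range(N) for j in range(M))
            den = sum(A[k]) * sum(B[l])
            F[k][l] = num / den
    return F


def inverse_f_transform(F, N, M):
    """Inverse F-transform: rebuild an N x M block from n x m components."""
    n, m = len(F), len(F[0])
    A, B = triangular_partition(N, n), triangular_partition(M, m)
    return [[sum(F[k][l] * A[k][i] * B[l][j]
                 for k in range(n) for l in range(m))
             for j in range(M)]
            for i in range(N)]


# Usage: compress a 4x4 block to 2x2 components and decode it again.
block = [[(i + j) / 6 for j in range(4)] for i in range(4)]
compressed = f_transform(block, 2, 2)
decoded = inverse_f_transform(compressed, 4, 4)
```

Because the triangular basic functions sum to one at every pixel, a constant block is reconstructed without loss; any other block is reconstructed only approximately.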

| Algorithm 2a. F1-transform image compression |
|---|
| Input: N×M image I with L grey levels; size of the blocks of the source image N(B)×M(B); size of the compressed blocks n(B)×m(B) |
| Output: n×m matrices of the direct F1-transform coefficients |

| Algorithm 2b. F1-transform image decompression |
|---|
| Input: n×m matrices of the direct F1-transform coefficients; size of the blocks of the decoded image N(B)×M(B); size of the blocks of the coded image n(B)×m(B) |
| Output: N×M decoded image ID |
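
A block-level sketch of the F1-transform idea is given below: each component is a first-degree polynomial (a plane) fitted to the block by weighted least squares, so three coefficients are stored per component instead of one. Several choices here are assumptions of the sketch rather than details taken from the paper: the triangular basic functions, the centering of each plane at the weighted centroid of its basic function, and all function names.

```python
def triangular_partition(size, n):
    """Sample n uniform triangular basic functions at 0..size-1 (2 <= n <= size)."""
    h = (size - 1) / (n - 1)
    return [[max(0.0, 1.0 - abs(i - k * h) / h) for i in range(size)]
            for k in range(n)]


def centroid(weights):
    """Weighted mean position of one basic function."""
    return sum(w * i for i, w in enumerate(weights)) / sum(weights)


def spread(weights):
    """Weighted sum of squared distances from the centroid."""
    c = centroid(weights)
    return sum(w * (i - c) ** 2 for i, w in enumerate(weights))


def f1_transform(block, n, m):
    """Fit one plane c00 + c10*(i - ci) + c01*(j - cj) per pair of basic functions."""
    N, M = len(block), len(block[0])
    A, B = triangular_partition(N, n), triangular_partition(M, m)
    coeffs = [[None] * m for _ in range(n)]
    for k in range(n):
        ci, sa, va = centroid(A[k]), sum(A[k]), spread(A[k])
        for l in range(m):
            cj, sb, vb = centroid(B[l]), sum(B[l]), spread(B[l])
            s00 = s10 = s01 = 0.0
            for i in range(N):
                for j in range(M):
                    w = A[k][i] * B[l][j] * block[i][j]
                    s00 += w
                    s10 += w * (i - ci)
                    s01 += w * (j - cj)
            c00 = s00 / (sa * sb)
            # Slopes are set to zero when a basic function covers a single pixel.
            c10 = s10 / (va * sb) if va > 1e-12 else 0.0
            c01 = s01 / (sa * vb) if vb > 1e-12 else 0.0
            coeffs[k][l] = (c00, c10, c01)
    return coeffs


def inverse_f1_transform(coeffs, N, M):
    """Blend the fitted planes with the basic functions to rebuild the block."""
    n, m = len(coeffs), len(coeffs[0])
    A, B = triangular_partition(N, n), triangular_partition(M, m)
    ci = [centroid(a) for a in A]
    cj = [centroid(b) for b in B]
    decoded = [[0.0] * M for _ in range(N)]
    for i in range(N):
        for j in range(M):
            total = 0.0
            for k in range(n):
                for l in range(m):
                    c00, c10, c01 = coeffs[k][l]
                    plane = c00 + c10 * (i - ci[k]) + c01 * (j - cj[l])
                    total += plane * A[k][i] * B[l][j]
            decoded[i][j] = total
    return decoded


# Usage: a linear ramp is recovered up to rounding error.
ramp = [[2 * i + 3 * j + 1 for j in range(8)] for i in range(8)]
decoded = inverse_f1_transform(f1_transform(ramp, 3, 3), 8, 8)
max_err = max(abs(decoded[i][j] - ramp[i][j]) for i in range(8) for j in range(8))
```

In this sketch the first-order terms let the decoder follow local gradients inside a block, which is where the lower information loss relative to the plain F-transform comes from; the price is three stored values per component.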

3. The YUV-based F1-transform color image compression method

| Algorithm 3a. YUV F1-transform color image compression |
|---|
| Input: N×M color image I with L grey levels; size of the blocks of the source image N(B)×M(B); size of the compressed blocks in the Y channel nY(B)×mY(B); size of the compressed blocks in the U and V channels nUV(B)×mUV(B) |
| Output: n×m matrices of the direct F1-transform coefficients in the Y, U and V channels |

| Algorithm 3b. YUV F1-transform image decompression |
|---|
| Input: n×m matrices of the direct F1-transform coefficients in the Y, U and V channels; size of the blocks of the decoded image N(B)×M(B); size of the compressed blocks in the Y channel nY(B)×mY(B); size of the compressed blocks in the U and V channels nUV(B)×mUV(B) |
| Output: N×M decoded image ID |
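
Before the three channels can be compressed with their own block sizes, the color image has to be moved from RGB to YUV and back. A minimal sketch of that step follows. The conversion coefficients are the common BT.601-style values and are an assumption, since the matrix used by the authors is not shown here; the function names are hypothetical.

```python
def rgb_to_yuv(r, g, b):
    """Convert one RGB pixel to YUV (BT.601-style weights, assumed)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    return y, u, v


def yuv_to_rgb(y, u, v):
    """Invert rgb_to_yuv algebraically."""
    r = y + v / 0.877
    b = y + u / 0.492
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return r, g, b


def split_channels(image):
    """Turn an N x M grid of (R, G, B) tuples into three N x M planes Y, U, V."""
    yuv = [[rgb_to_yuv(*px) for px in row] for row in image]
    return tuple([[px[c] for px in row] for row in yuv] for c in range(3))


# Usage: a tiny 2x2 color image split into its Y, U and V planes.
image = [[(200, 100, 50), (128, 128, 128)],
         [(0, 0, 255), (255, 255, 255)]]
Y, U, V = split_channels(image)
restored = yuv_to_rgb(Y[0][0], U[0][0], V[0][0])
```

Each plane would then be coded separately, with the Y plane using compressed blocks of size nY(B)×mY(B) and the U and V planes using the smaller nUV(B)×mUV(B) blocks.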

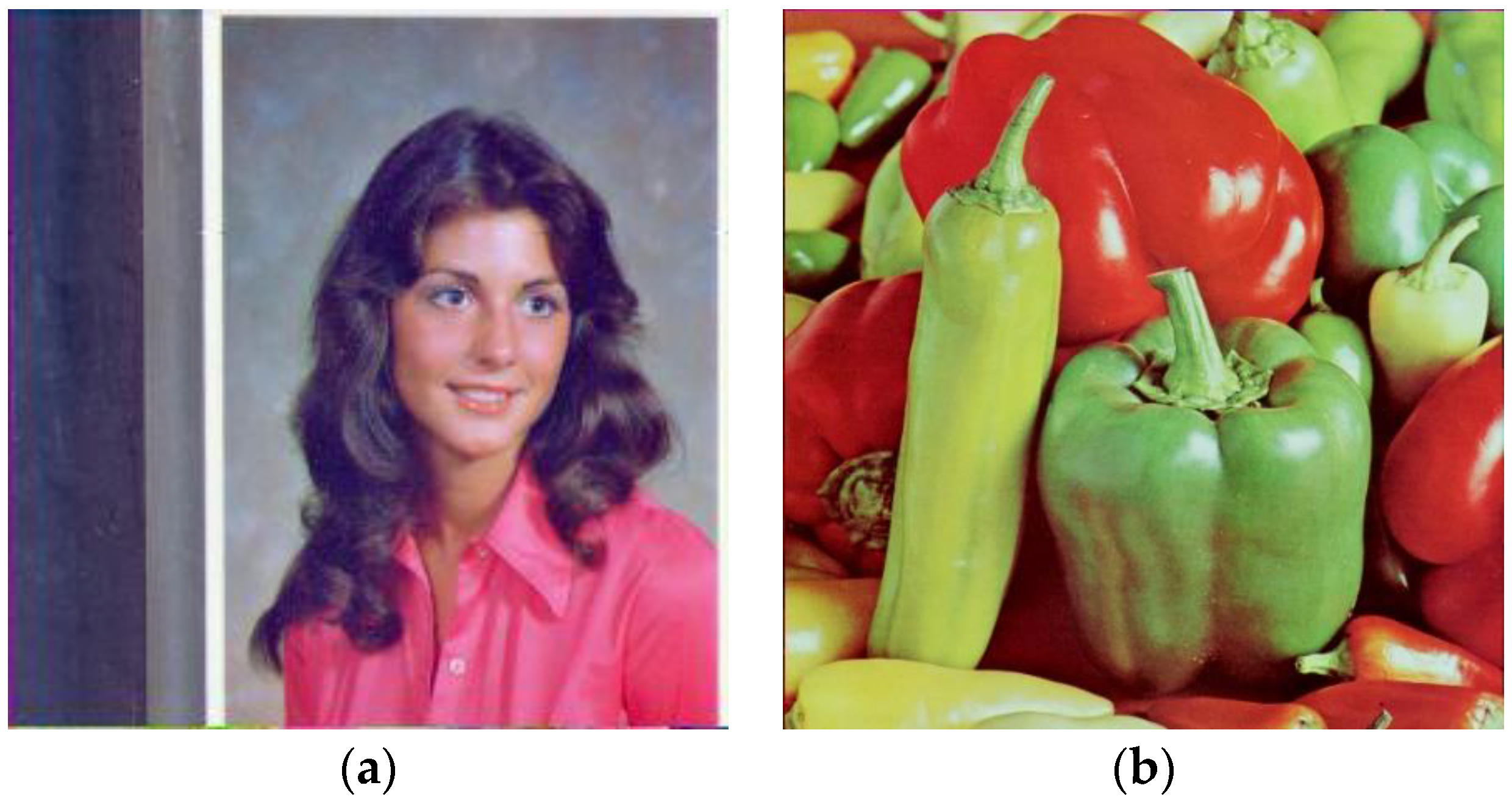

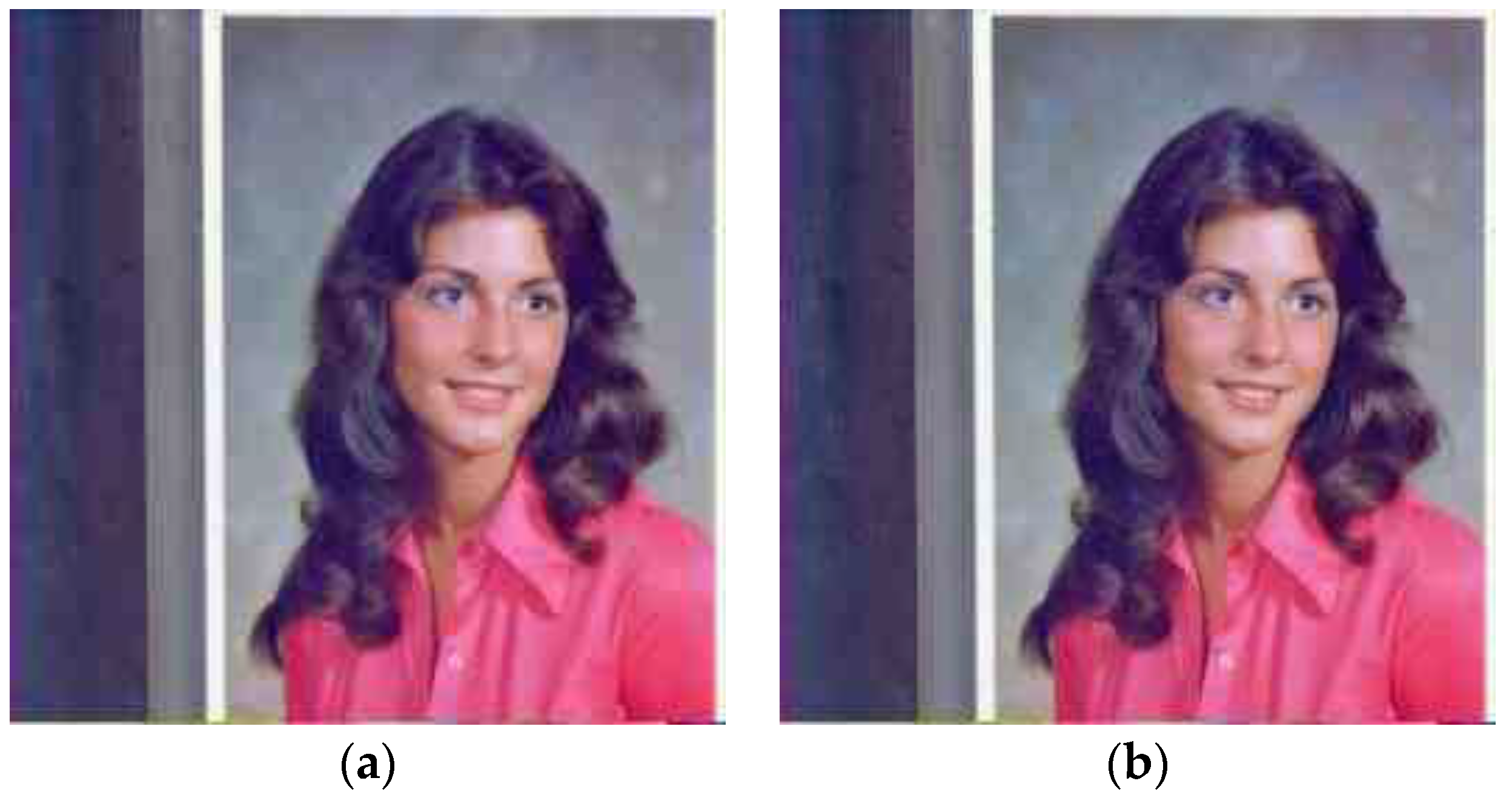

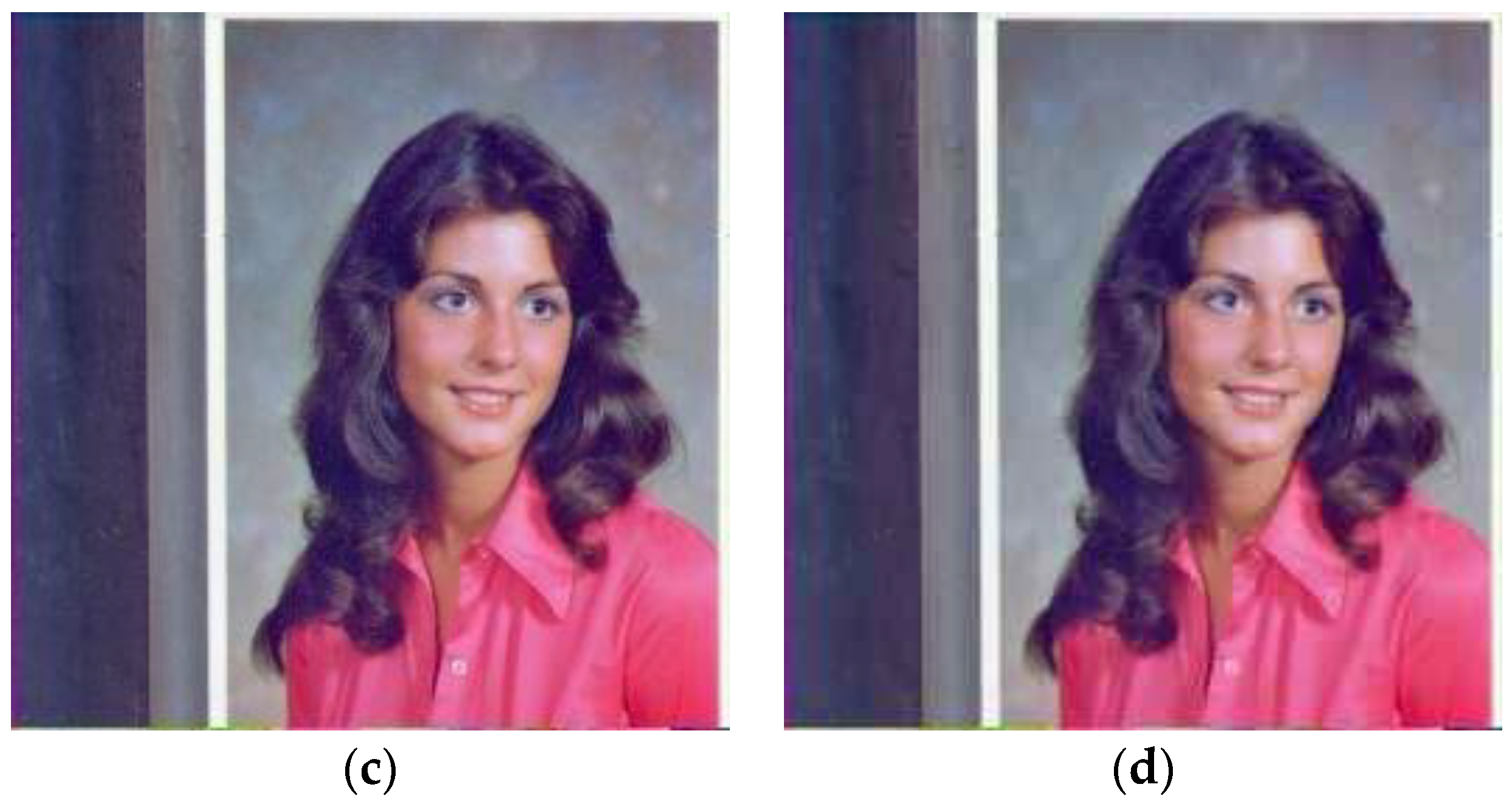

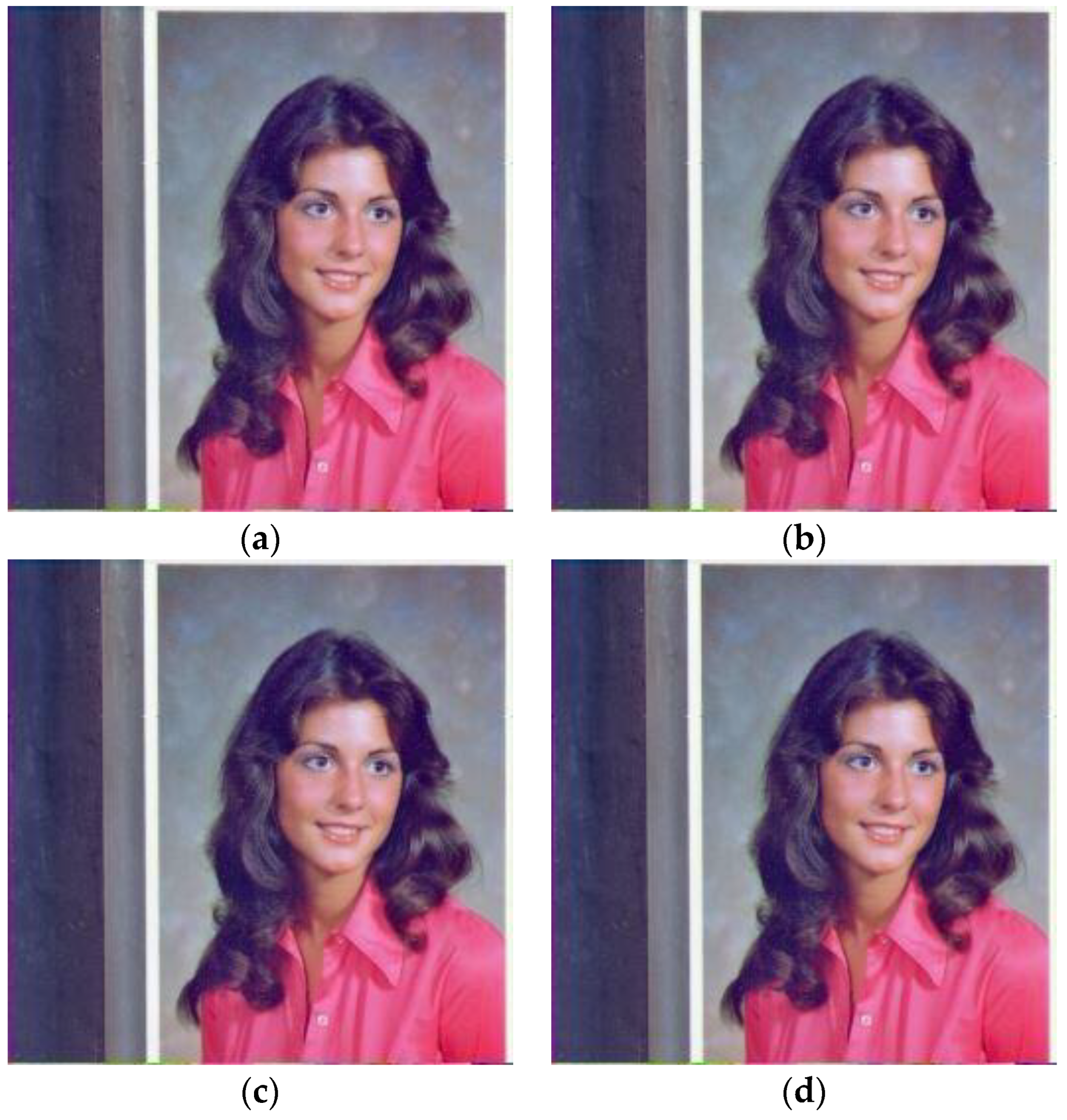

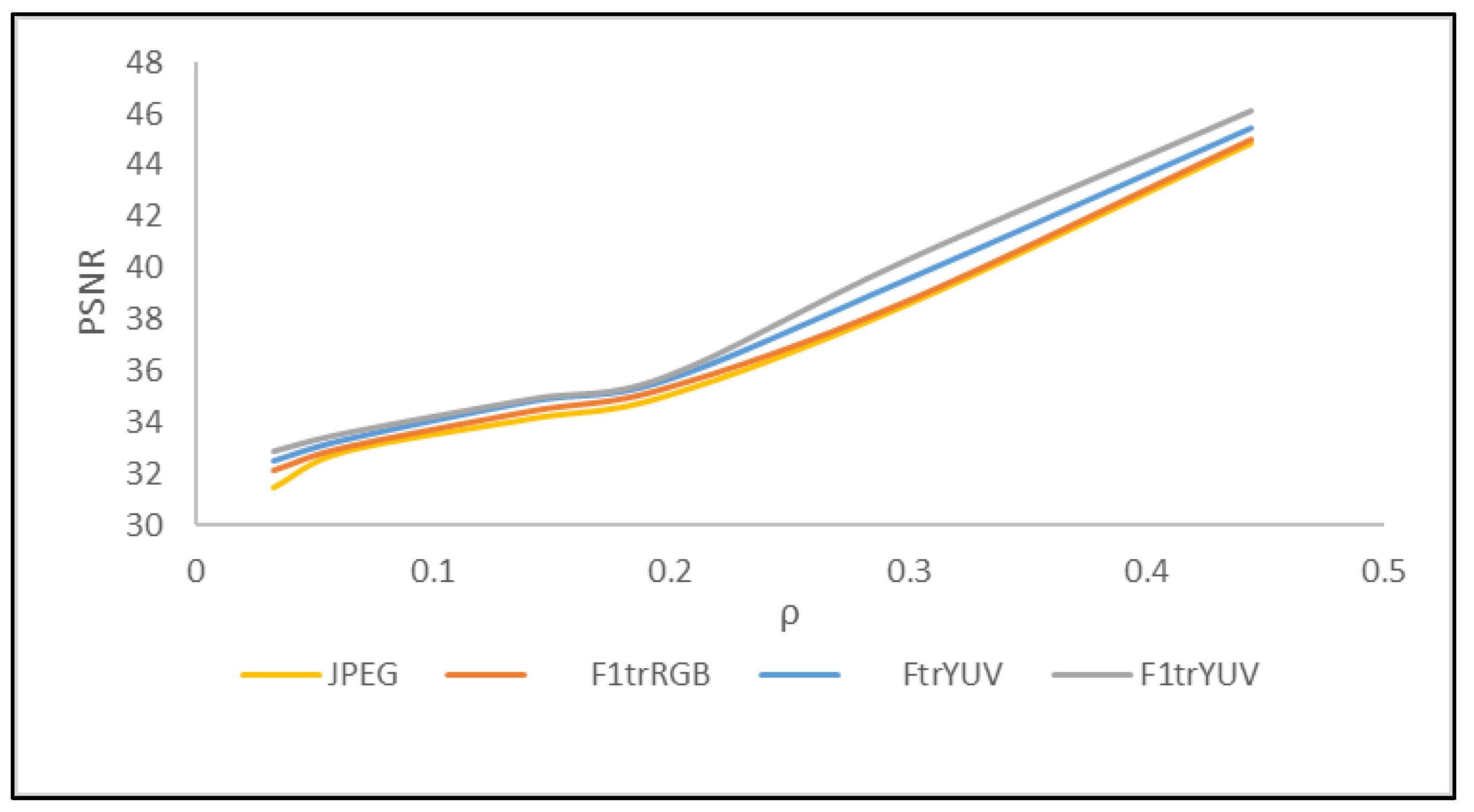

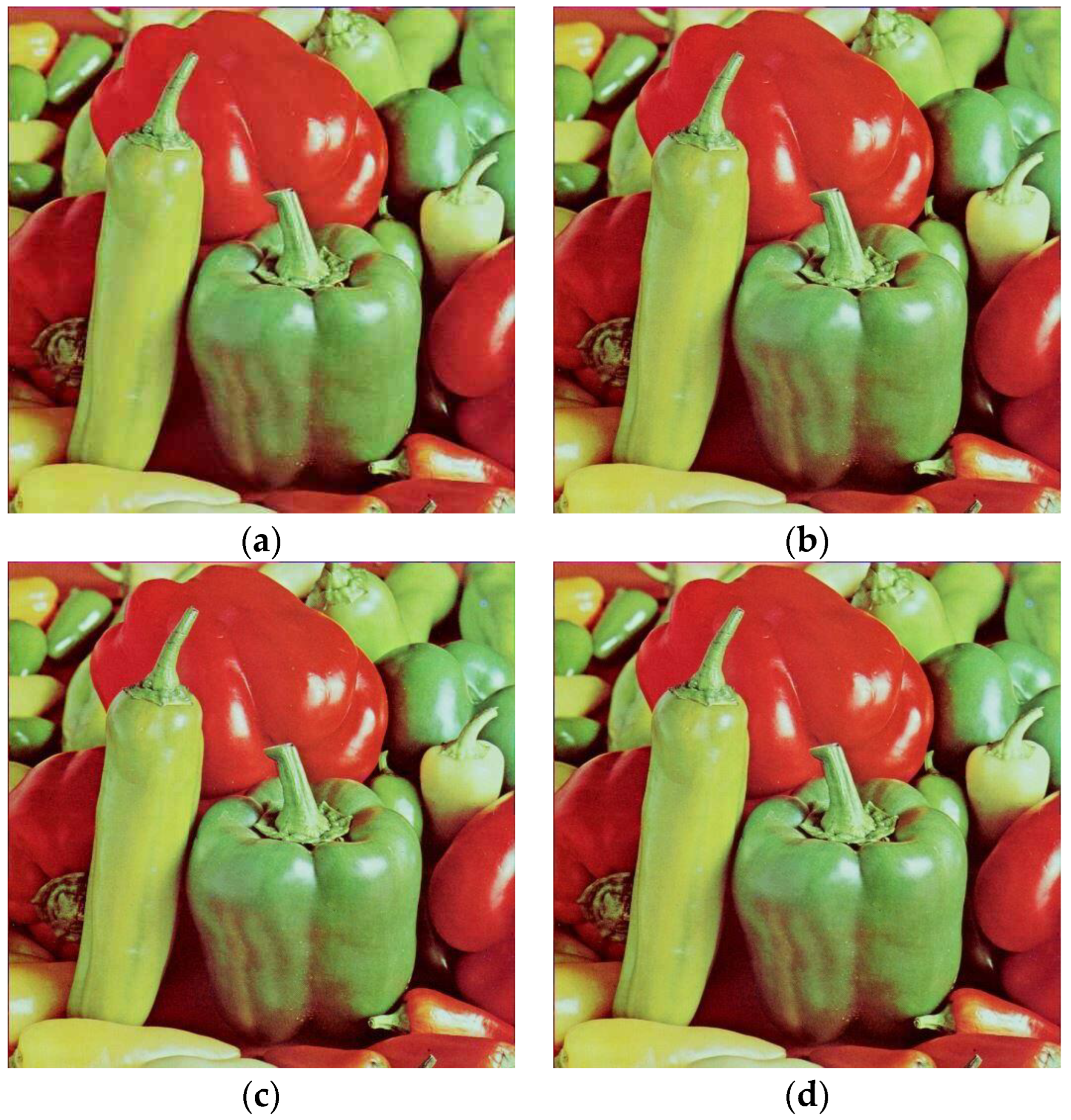

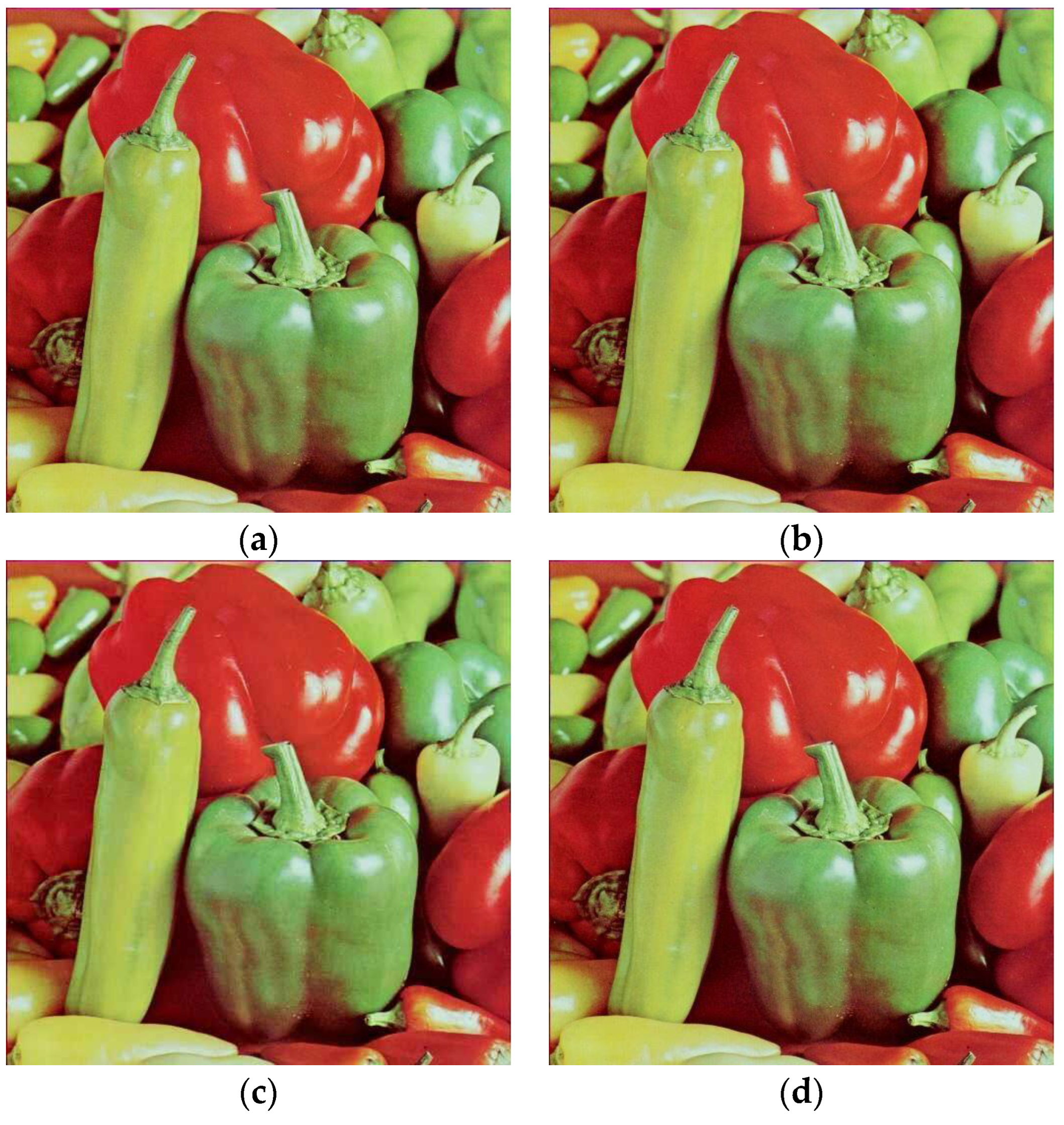

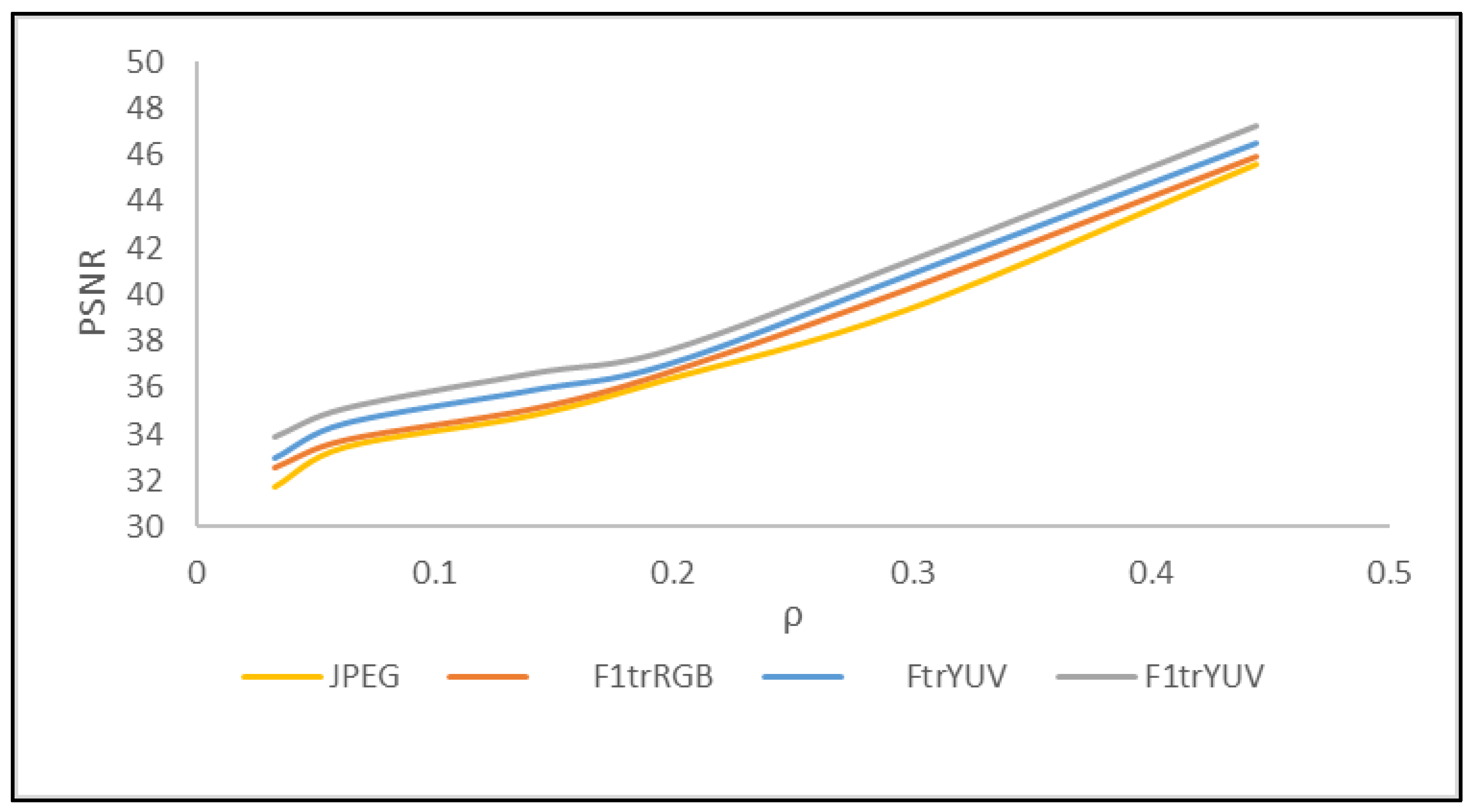

4. Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Wallace, G. The JPEG still picture compression standard. IEEE Transactions on Consumer Electronics 1992, 38(1), xviii–xxxiv. [CrossRef]

- Raid, A.M.; Khedr, W.M.; El-Dosuky, M.A.; Ahmed, W. JPEG image compression using discrete cosine transform - A survey. International Journal of Computer Science & Engineering Survey (IJCSES) 2014, 5(2), 39–47. [CrossRef]

- Mostafa, A.; Wahid, K.; Ko, S.B. An Efficient YUV-based Image Compression Algorithm for Wireless Capsule Endoscopy. 2011 24th Canadian Conference on Electrical and Computer Engineering (CCECE), Niagara Falls, Ontario, Canada, 8–11 May 2011, pp. 943–946. [CrossRef]

- Nobuhara, H.; Pedrycz, W.; Hirota, K. Relational image compression: optimizations through the design of fuzzy coders and YUV color space. Soft Computing 2005, 9(6), 471–479. [CrossRef]

- Nobuhara, H.; Hirota, K.; Di Martino, F.; Pedrycz, W.; Sessa, S. Fuzzy relation equations for compression/decompression processes of colour images in the RGB and YUV colour spaces. Fuzzy Optimization and Decision Making 2005, 4(3), 235–246. [CrossRef]

- Di Martino, F.; Loia, V.; Sessa, S. Direct and Inverse Fuzzy Transforms for Coding/Decoding Color Images in YUV Space. Journal of Uncertain Systems 2009, 3(1), 11–30.

- Perfilieva, I. Fuzzy Transform: Theory and Application. Fuzzy Sets and Systems 2006, 157, 993-1023. [CrossRef]

- Di Martino, F.; Loia, V.; Perfilieva, I.; Sessa, S. An Image Coding/Decoding Method Based on Direct and Inverse Fuzzy Transforms. International Journal of Approximate Reasoning 2008, 48, 110-131. [CrossRef]

- Son, T. N.; Hoang, T. M.; Dzung, N. T.; Giang, N. H. Fast FPGA implementation of YUV-based fractal image compression, 2014 IEEE Fifth International Conference on Communications and Electronics (ICCE), Danang, Vietnam, 2014, pp. 440-445. [CrossRef]

- Podpora, M.; Korbas, G. P.; Kawala-Janik, A. YUV vs RGB – Choosing a Color Space for Human-Machine Interaction, 2014 Federated Conference on Computer Science and Information Systems, Warsaw, Poland, 7 - 10 September 2014, vol. 3 pp. 29–34. [CrossRef]

- Ernawan, F.; Kabir, N.; Zamli, K.Z. An efficient image compression technique using Tchebichef bit allocation. Optik 2017, 148, 106–119.

- Zhu, S.; Cui, C.; Xiong, R.; Guo, U.; Zeng, B. Efficient Chroma Sub-Sampling and Luma Modification for Color Image Compression. IEEE Transactions on Circuits and Systems for Video Technology 2019, 29(5), 1559–1563. [CrossRef]

- Sun, H.; Liu, C.; Katto, J.; Fan, Y. An Image Compression Framework with Learning-Based Filter, Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 14-19 June 2020, Seattle, WA, USA, pp. 152-153.

- Malathkar, N.V.; Soni, S.K. High compression efficiency image compression algorithm based on subsampling for capsule endoscopy. Multimed Tools Appl 2021, 80, 22163–22175. [CrossRef]

- Ma, H.; Liu, D.; Yan, N.; Li, H.; Wu, F. End-to-End Optimized Versatile Image Compression With Wavelet-Like Transform. IEEE Transactions on Pattern Analysis and Machine Intelligence 2022, 44(3), 1247–1263. [CrossRef]

- Yin, Z.; Chen, L.; Lyu, W.; Luo, B. Reversible attack based on adversarial perturbation and reversible data hiding in YUV colorspace. Pattern Recognition Letters 2023, 166, 1–7. [CrossRef]

- Di Martino, F.; Sessa, S.; Perfilieva, I. First Order Fuzzy Transform for Images Compression. Journal of Signal and Information Processing 2017, 8, 178–194. [CrossRef]

- Di Martino, F.; Sessa, S. Fuzzy Transforms for Image Processing and Data Analysis - Core Concepts, Processes and Applications; Springer Nature: Cham, Switzerland, 2020, pp. 217. [CrossRef]

- Perfilieva, I.; Dankova, M.; Bede, B. Towards a higher degree F-transform. Fuzzy Sets and Systems 2011, 180, 3–19. [CrossRef]

- Technical Committee ISO/IEC JTC 1/SC 29 Coding of audio, picture, multimedia and hypermedia information, ISO/IEC 10918-1:1994 - Information technology — Digital compression and coding of continuous-tone still images: Requirements and guidelines, 1994, 182 pp.

- Wang, Y.; Tohidypour, H.R.; Pourazad, M.T.; Nasiopoulos, P.; Leung, V.C.M. Comparison of Modern Compression Standards on Medical Images for Telehealth Applications. In 2023 IEEE International Conference on Consumer Electronics (ICCE), 2023, pp. 1–6.

- Prativadibhayankaram, S.; Richter, T.; Sparenberg, H.; Fößel, S. Color Learning for Image Compression. arXiv preprint 2023, arXiv:2306.17460.

| CPU time | Image size | JPEG | F1trRGB | FtrYUV | F1trYUV |
|---|---|---|---|---|---|
| Coding | 256x256 | 2.76 | 2.78 | 2.41 | 3.09 |
| Coding | 512x512 | 5.75 | 5.88 | 5.66 | 6.01 |
| Decoding | 256x256 | 5.82 | 5.86 | 5.04 | 5.73 |
| Decoding | 512x512 | 9.52 | 9.85 | 9.12 | 9.56 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).