Submitted:

31 August 2023

Posted:

05 September 2023


Abstract

Keywords:

1. Introduction

2. Big Data and Hadoop Architecture

2.1. Big Data Characteristics

2.2. Commonly Used Ecosystem Tools

1. Hadoop Distributed File System (HDFS): HDFS is a storage system designed to be distributed and resilient and to make MapReduce easy to use; other components (such as Spark, BI tools, and MongoDB) rely on Apache YARN to interact with HDFS easily [4]. HDFS aggregates the bandwidth of the nodes in the cluster to boost throughput [5]. HDFS follows a master/slave design: the master is called the NameNode and the slaves are called DataNodes, and each cluster has one NameNode and multiple DataNodes. HDFS splits data into blocks with a default size of 128 MB (64 MB in older Hadoop 1.x releases) and replicates each block multiple times (three by default), storing the replicas of a block on different nodes [6]. The NameNode decides how blocks are replicated and where each replica is stored, and it holds the metadata of all the data in the cluster. HDFS follows a write-once-read-many model [4]: it was modeled on the Google File System, whose purpose was to ingest data once and process them many times, in other words, batch processing. For that reason, HBase is needed on top of HDFS when random reads and writes are required. HBase is a non-relational database that runs on top of HDFS and provides random-access and querying capabilities. It stores data as key-value pairs, whereas HDFS stores data as flat files. Other applications can access HBase through the HBase shell or through interfaces such as Java, Avro, or Thrift.
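To make the block and replica description concrete, here is a minimal Python sketch of how a file could be split into fixed-size blocks and how each block's replicas could be assigned to distinct DataNodes. The function names and DataNode names are hypothetical, and the round-robin placement is a simplification: real HDFS uses a rack-aware placement policy decided by the NameNode.

```python
import math

BLOCK_SIZE_MB = 128  # default HDFS block size in Hadoop 2.x and later
REPLICATION = 3      # default HDFS replication factor (dfs.replication)


def split_into_blocks(file_size_mb: int, block_size_mb: int = BLOCK_SIZE_MB) -> list[int]:
    """Return the size of each block; only the last block may be smaller than block_size_mb."""
    n_blocks = math.ceil(file_size_mb / block_size_mb)
    sizes = [block_size_mb] * n_blocks
    remainder = file_size_mb % block_size_mb
    if n_blocks and remainder:
        sizes[-1] = remainder
    return sizes


def place_replicas(n_blocks: int, datanodes: list[str],
                   replication: int = REPLICATION) -> dict[int, list[str]]:
    """Hypothetical placement: each block's replicas go to distinct DataNodes, round-robin."""
    if replication > len(datanodes):
        raise ValueError("need at least as many DataNodes as replicas")
    return {
        block_id: [datanodes[(block_id + r) % len(datanodes)] for r in range(replication)]
        for block_id in range(n_blocks)
    }


blocks = split_into_blocks(300)
print(blocks)  # [128, 128, 44]
print(place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"]))
```

For a 300 MB file only the last block is smaller than the block size, and no block has two replicas on the same DataNode, so losing one node never loses a block.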

- 2.

-

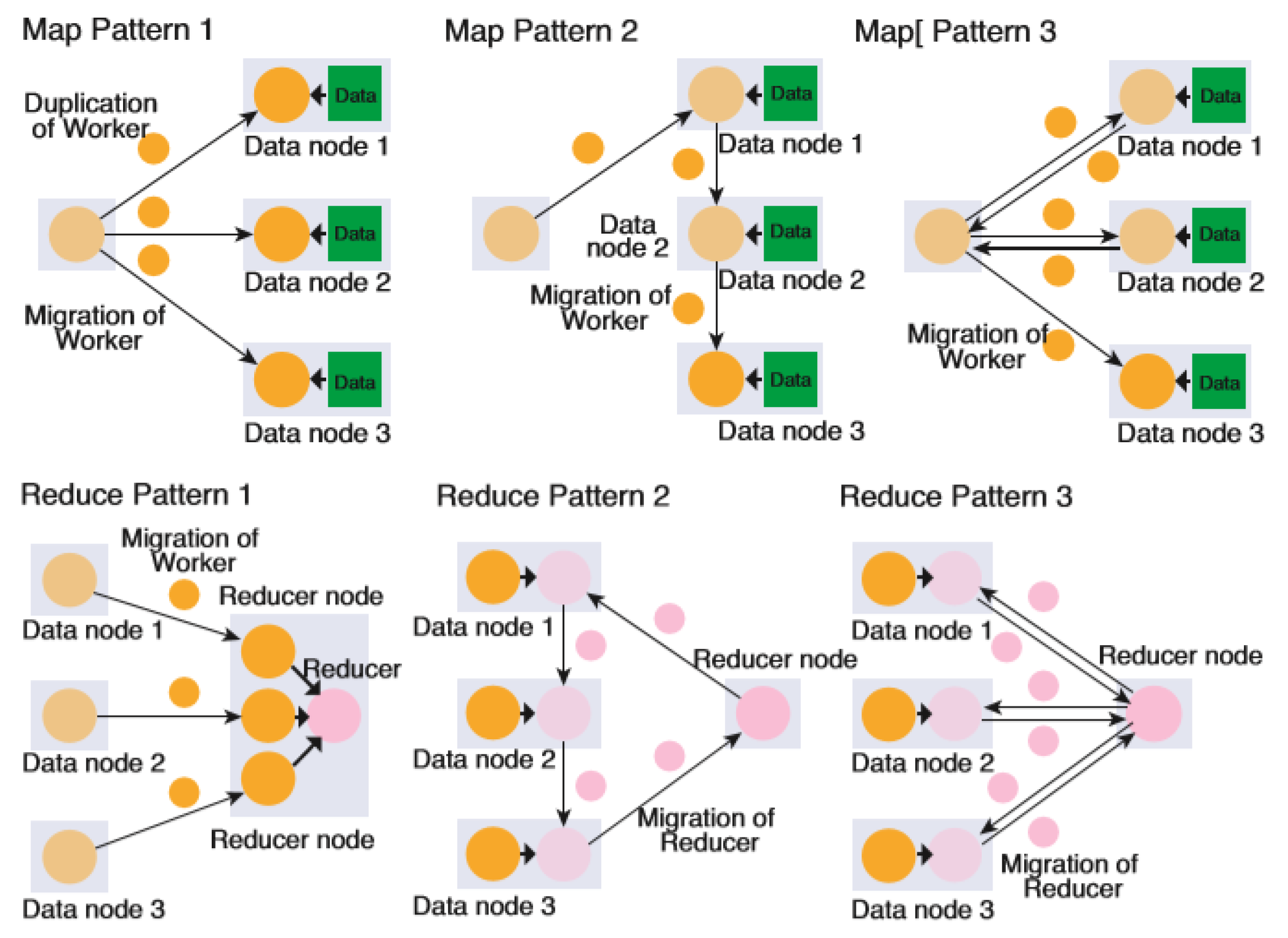

MapReduce Open source under the developed by Google [4], based on java, MapReduce model is based on two phases/tasks, the map task is dividing the data into several blocks, the result of dividing will be two blocks one of them will be the input key of the data and the other block will be the data itself and is called the value, in the end, the document will contain all the (key, value) in it for all the data [7]. MapReduce’s main task is to allow the system compatible with the large sets of data, especially in a distributed manner [6]. The ability of MapReduce is to process data and the fact that the query of execution is shorter compared with SQL/RDBMS.Satoh, I. [8] propose an architecture for MapReduce in IoT applications, it’s slightly different from the original one the mapper and reducer node, where there are three nodes the mapper and reducer, also adding the worker node. Figure 1 shows the integration between the three nodes. Each connected in its way and every connection has its own capability. Mapper and Worker are the response for the duplications and deployment of tasks. Worker alone is the response for three function application data processing like reading, process and store data, each one is standalone can be called without the other functions. The last one, Worker and Reducer for reducing data process results the is the response on how the data stored. According to Abdallat et al. [9], Hadoop MapReduce can be viewed as a dynamic ecosystem to be studied for the job scheduling algorithms to draw a clear image.
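The two phases described above, plus the grouping step between them (usually called the shuffle), can be illustrated with the classic word-count example. This is a single-process Python sketch of the programming model, not Hadoop's Java API; the function names are illustrative.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Map: emit a (key, value) pair for every word in one input record."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all values emitted for the same key."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine the grouped values of one key into a single result."""
    return key, sum(values)


def map_reduce(records: list[str]) -> dict[str, int]:
    """Run map over every record, group by key, then reduce each group."""
    mapped = (pair for record in records for pair in map_phase(record))
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


print(map_reduce(["sensor ok", "sensor fault", "sensor ok"]))
# {'sensor': 3, 'ok': 2, 'fault': 1}
```

In Hadoop the map and reduce calls run in parallel on different nodes and the shuffle moves data across the network, but the data flow is the same.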

3. YARN: YARN, which stands for Yet Another Resource Negotiator, allows the different ecosystem tools to be integrated with HDFS; its task is resource management and scheduling for Hadoop's distributed processing frameworks [10]. It enables the system to store and process data using newer processing methods such as stream processing and graph processing.
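As an illustration of YARN's resource-management role, the following Python sketch models a ResourceManager that grants container requests (memory and virtual cores) on NodeManagers in first-in-first-out order. The classes are hypothetical simplifications named after YARN's components; actual YARN uses pluggable schedulers such as the FIFO, Capacity, and Fair schedulers, and a far richer protocol.

```python
from dataclasses import dataclass, field


@dataclass
class NodeManager:
    name: str
    free_mb: int
    free_vcores: int


@dataclass
class ContainerRequest:
    app: str        # e.g. a MapReduce, Spark, or streaming application
    memory_mb: int
    vcores: int


@dataclass
class ResourceManager:
    nodes: list[NodeManager]
    allocations: list[tuple[str, str]] = field(default_factory=list)

    def allocate(self, requests: list[ContainerRequest]) -> list[ContainerRequest]:
        """FIFO: place each request on the first node with enough free memory and vcores.
        Returns the requests that could not be placed yet."""
        pending = []
        for req in requests:
            for node in self.nodes:
                if node.free_mb >= req.memory_mb and node.free_vcores >= req.vcores:
                    node.free_mb -= req.memory_mb
                    node.free_vcores -= req.vcores
                    self.allocations.append((req.app, node.name))
                    break
            else:  # no node had room for this request
                pending.append(req)
        return pending


rm = ResourceManager([NodeManager("nm1", 4096, 4), NodeManager("nm2", 2048, 2)])
pending = rm.allocate([
    ContainerRequest("spark-app", 3072, 2),
    ContainerRequest("mr-job", 2048, 2),
    ContainerRequest("stream-app", 2048, 2),
])
print(rm.allocations)            # [('spark-app', 'nm1'), ('mr-job', 'nm2')]
print([r.app for r in pending])  # ['stream-app']
```

Because the scheduler only sees resource requests, a Spark job, a MapReduce job, and a streaming job can share the same cluster, which is what lets YARN host processing models beyond MapReduce.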

- 4.

-

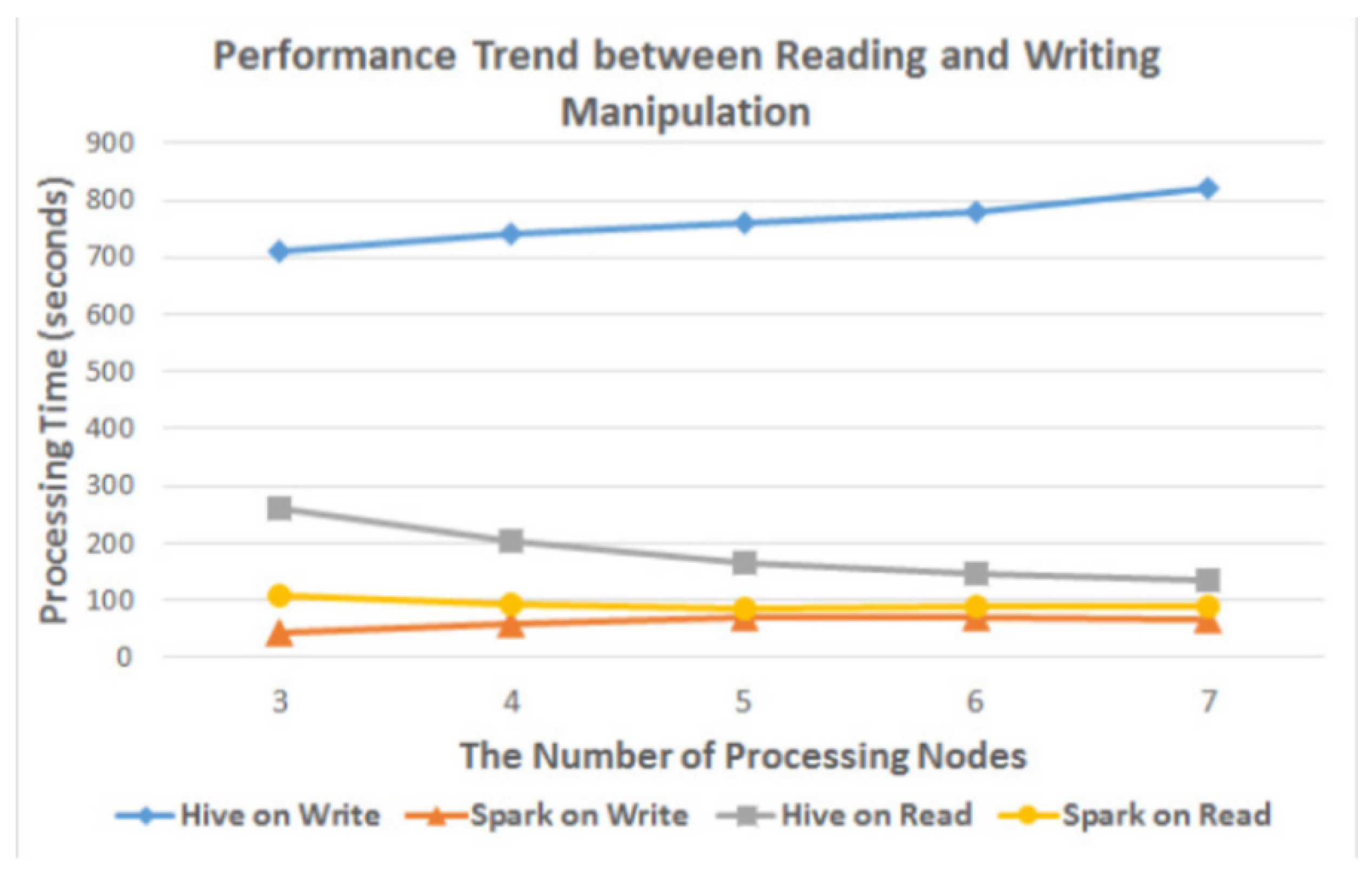

Spark Ounacer et al. said it is open-source under the license of Apache Foundation, spark includes multiple tools for different propose, it’s used in distributed computing. Spark is way better than MapReduce, the spark is faster in both batch and in-memory processing/analysis. Spark contains not only the MapReduce look-alike function but also it has other tools integrated to it, they are: Spark SQL, Spark Streaming, MLib, GraphX and SparkR, all these tools are built on top of the Apache Spark Core API.The process of Big Data in a real-time or stream online, Spark integrated with Kafka is part of the architecture of the system [11]. Spark can run in 3 cluster mode types the standalone (native spark structure), Hadoop YARN, or Mesos. Also, Spark support distributed storage includes HDFS, Cassandra, Amazon S3 [12]. Spark Streaming is part of the Apache Spark that responsible for handling computing the different data which is coming to fast to the system [13], it allows doing several functions on the streamed data (like obtain data, joining the stream, filtering stream, etc.) [5].
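Spark Streaming's classic (DStream) model treats a stream as a sequence of small batches collected over a fixed batch interval. The pure-Python sketch below mimics that idea with a filter and a stream-to-static join on hypothetical sensor events; it does not use the Spark API, and the two-second interval, threshold, and sensor names are assumptions for illustration.

```python
from collections import defaultdict

BATCH_INTERVAL_S = 2.0  # hypothetical batch interval, in seconds


def micro_batches(events, interval=BATCH_INTERVAL_S):
    """Group (timestamp, sensor_id, value) events into consecutive micro-batches by arrival time."""
    batches = defaultdict(list)
    for ts, sensor_id, value in events:
        batches[int(ts // interval)].append((sensor_id, value))
    return [batches[k] for k in sorted(batches)]


def process_batch(batch, locations, threshold):
    """Per-batch transformations: filter high readings, then join them with a static lookup table."""
    high = [(s, v) for s, v in batch if v > threshold]              # filter
    return [(s, locations.get(s, "unknown"), v) for s, v in high]  # join with static data


events = [(0.5, "s1", 21.0), (1.2, "s2", 35.5), (2.1, "s1", 40.2), (3.9, "s3", 19.8)]
locations = {"s1": "ward-A", "s2": "ward-B"}
for i, batch in enumerate(micro_batches(events)):
    print(i, process_batch(batch, locations, threshold=30.0))
# 0 [('s2', 'ward-B', 35.5)]
# 1 [('s1', 'ward-A', 40.2)]
```

Unlike Spark Streaming, this sketch simply skips intervals in which no events arrived, and it processes batches sequentially rather than distributing each batch across executors.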

5. Hive: Hive is used to read, write, and manage large data sets through an SQL-like interface. It is an SQL engine integrated with Hadoop HDFS that runs queries as MapReduce jobs, and it is mostly used for analytical queries [14].
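To show what running queries as MapReduce jobs means for a typical analytical query, the sketch below evaluates a HiveQL-style `GROUP BY` average the way a map/shuffle/reduce plan would. The table, column names, and data are hypothetical, and Hive's real query compiler produces more elaborate plans.

```python
from collections import defaultdict

# HiveQL being illustrated (hypothetical table and columns):
#   SELECT sensor_id, AVG(temperature) FROM readings GROUP BY sensor_id;


def hive_group_by_avg(rows):
    """Evaluate GROUP BY sensor_id / AVG(temperature) as a MapReduce plan would."""
    # Map: emit (group key, (value, 1)) for each row.
    mapped = ((r["sensor_id"], (r["temperature"], 1)) for r in rows)
    # Shuffle + combine: accumulate a partial (sum, count) per key.
    partial = defaultdict(lambda: [0.0, 0])
    for key, (value, count) in mapped:
        partial[key][0] += value
        partial[key][1] += count
    # Reduce: turn each (sum, count) into the final average.
    return {key: total / n for key, (total, n) in partial.items()}


rows = [
    {"sensor_id": "s1", "temperature": 20.0},
    {"sensor_id": "s2", "temperature": 30.0},
    {"sensor_id": "s1", "temperature": 22.0},
]
print(hive_group_by_avg(rows))  # {'s1': 21.0, 's2': 30.0}
```

Carrying (sum, count) pairs instead of averages is what makes the aggregation safe to combine in stages, since averages of partial averages would be wrong when groups have different sizes.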

2.3. Ecosystem

3. IoT Architecture

- Data perception layer: This layer perceives and detects objects and collects information and data using devices such as sensors and RFID [18]. The data come from different sources, such as sensors (for example, induction loop detectors and microwave radars), video surveillance, remote sensing, radio frequency identification (RFID) data, and GPS [19]. This layer gathers data from different IoT sources and then aggregates them. In some cases, the data have security, privacy, and quality requirements. Moreover, in sensor data the metadata are often larger than the actual measurements, so filtering methods are applied in this layer to identify and discard unnecessary metadata [20]. According to Abuqabita et al., the main challenge facing big data is IoT information acquisition: IoT devices need infrastructure that handles their continuous data streams and methods that derive useful information from these data, and analyzing them with machine learning and artificial intelligence approaches is the only way to exploit the potential of IoT big data.

- Network communication layer: This layer facilitates the exchange of information between sensors [20]. It transfers the collected data from the perception layer to the next layer using communication and internet technologies [18]. It is divided into two sub-layers: the data exchange sub-layer, which handles the transfer of data, and the information integration sub-layer, which aggregates, cleans, and fuses the collected data [21].

- Data processing layer: This layer contains the bulk of the IoT architecture and is in charge of processing the information [20]. In a big data setting, information is stored in the Hadoop Distributed File System and processed in parallel; Apache Spark and its different components are commonly used as well. For further information about this layer, the reader is referred to Section IV.

- Application layer: This layer aims to create a smart environment; it receives the information and processes its content to deliver intelligent services to different users [18]. It may also include a support layer. The final decision is often made in this layer, which commonly uses an interface-oriented setting that enables the entire IoT system to operate efficiently.
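The four layers can be read as a pipeline. The Python sketch below chains one hypothetical function per layer: metadata filtering in the perception layer, cleaning and aggregation in the network layer, a per-sensor summary standing in for HDFS/Spark processing, and a threshold decision in the application layer. Field names, sensors, and limits are invented for illustration.

```python
from statistics import mean

# Hypothetical raw records: each measurement arrives with bulky metadata.
RAW = [
    {"sensor": "hr-1", "value": 72, "firmware": "v1.2", "calib_log": "x" * 200},
    {"sensor": "hr-1", "value": 75, "firmware": "v1.2", "calib_log": "x" * 200},
    {"sensor": "temp-1", "value": 36.9, "firmware": "v2.0", "calib_log": "x" * 200},
    {"sensor": "temp-1", "value": None, "firmware": "v2.0", "calib_log": "x" * 200},
]
KEEP_FIELDS = ("sensor", "value")  # assumption: the only fields needed downstream


def perception_layer(records):
    """Collect readings and filter out metadata that later layers do not need."""
    return [{k: r[k] for k in KEEP_FIELDS} for r in records]


def network_layer(records):
    """Information integration: clean (drop missing values) and aggregate readings per sensor."""
    grouped = {}
    for r in records:
        if r["value"] is not None:
            grouped.setdefault(r["sensor"], []).append(r["value"])
    return grouped


def processing_layer(grouped):
    """Stand-in for HDFS/Spark processing: summarise each sensor's readings."""
    return {sensor: mean(values) for sensor, values in grouped.items()}


def application_layer(summary, limits):
    """Final decision: flag each sensor whose summary exceeds its configured limit."""
    return {s: summary[s] > limit for s, limit in limits.items() if s in summary}


summary = processing_layer(network_layer(perception_layer(RAW)))
print(summary)                                                    # {'hr-1': 73.5, 'temp-1': 36.9}
print(application_layer(summary, {"hr-1": 100, "temp-1": 36.5}))  # {'hr-1': False, 'temp-1': True}
```

Dropping the metadata first keeps every later stage small, which is the motivation the perception-layer description gives for filtering early.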

4. Cloud Services

- Software as a Service (SaaS): Delivers on-demand applications that are accessible over the internet and are managed on the provider's remote servers rather than on the client's side.

- Platform as a Service (PaaS): Provides a more flexible system in which developers can build their own customized applications without having to think about where the data are stored, how the components of the system are connected, or how the system is maintained; all of this is taken care of by the service provider.

- Infrastructure as a Service (IaaS): It is more scalable than the other services and gives users the authority to provision and modify storage, monitor the computers in the network, and manage other services; in other words, it provides on-demand computing resources.
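One common way to compare the three models is by which layers of the stack the customer still manages. The sketch below encodes a typical textbook breakdown as a lookup table; the layer names are assumptions, and the exact split varies between providers.

```python
# Layers of a typical cloud stack, from the hardware up (a common textbook breakdown).
STACK = ["networking", "storage", "servers", "virtualization",
         "operating_system", "middleware", "runtime", "data", "applications"]

# Index of the first layer the customer manages under each model;
# every layer below that index is managed by the provider.
CUSTOMER_STARTS_AT = {
    "IaaS": STACK.index("operating_system"),
    "PaaS": STACK.index("data"),
    "SaaS": len(STACK),  # the provider manages the whole stack
}


def managed_by(model: str, layer: str) -> str:
    """Return 'provider' or 'customer' for a given service model and stack layer."""
    return "customer" if STACK.index(layer) >= CUSTOMER_STARTS_AT[model] else "provider"


for model in ("IaaS", "PaaS", "SaaS"):
    customer_layers = [layer for layer in STACK if managed_by(model, layer) == "customer"]
    print(model, customer_layers)
```

Moving from IaaS to PaaS to SaaS shifts more layers to the provider, which matches the description above: PaaS users no longer worry about storage or maintenance, and SaaS users only consume the application.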

5. Big Data Frameworks and Applications on IoT Domains

5.1. Healthcare

- Intelligent building: houses the collection point, the processing unit, the aggregation result unit, and the application layer services. It is the primary part, incorporating the intelligent system that captures high-speed big data from the sensors; it receives, processes, and analyses medical data from the multiple sensors attached to the patient's body.

- Collection point: the entry point through which collected data enter the system. It gathers data from all registered individuals in the body area network (BAN) and is served by a single server that collects and filters their data.

- Hadoop processing unit: consists of the master node and multiple data nodes, and stores data in blocks. Its data mappers assess the data to classify them as normal or abnormal; abnormal data require further analysis, while normal data are discarded.

- Decision servers: built with an intelligent medical expert system, machine learning classifiers, and complex medical-condition detection algorithms for detailed evaluation and decision making. Their function is to analyse the information coming from the processing unit.
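The mappers' normal/abnormal check in the Hadoop processing unit can be sketched as a filter that forwards only out-of-range readings toward the decision servers. This assumes that abnormal readings are forwarded and normal ones dropped; the reading format and the normal ranges below are hypothetical placeholders, not clinical thresholds from the surveyed work.

```python
# Hypothetical normal ranges, for illustration only (not clinical guidance).
NORMAL_RANGES = {
    "heart_rate": (60, 100),    # beats per minute
    "body_temp": (36.1, 37.5),  # degrees Celsius
}


def mapper(reading):
    """Emit (patient_id, reading) only when the reading falls outside its normal range."""
    low, high = NORMAL_RANGES[reading["type"]]
    if not low <= reading["value"] <= high:
        yield reading["patient_id"], reading


def collect_abnormal(readings):
    """Group abnormal readings per patient, ready to be sent to the decision servers."""
    flagged = {}
    for reading in readings:
        for patient_id, r in mapper(reading):
            flagged.setdefault(patient_id, []).append((r["type"], r["value"]))
    return flagged


readings = [
    {"patient_id": "p1", "type": "heart_rate", "value": 72},
    {"patient_id": "p1", "type": "heart_rate", "value": 130},
    {"patient_id": "p2", "type": "body_temp", "value": 39.1},
]
print(collect_abnormal(readings))  # {'p1': [('heart_rate', 130)], 'p2': [('body_temp', 39.1)]}
```

Filtering in the map step means the expensive expert-system analysis only runs on the small fraction of readings that need attention.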

- Amazon Kinesis stream: captures and stores terabytes of data per hour, and by default keeps data accessible for 24 hours from the time they are added to a stream.

- Amazon Elastic Compute Cloud (EC2): lets the user launch servers of their choice.

- AWS IoT Core: connects to the IoT devices, receives data through the MQTT protocol, and publishes messages to a given topic.

- Elastic MapReduce (EMR): allows quick processing and storage of big data using Apache Hadoop.

- Convolutional Neural Network (CNN): extracts vision-based features through its hidden layers, which help in detecting chronic diseases in living beings connected to the IoT.

- Long short-term memory (LSTM): controls access to its memory cells and prevents the connections from breaking down as additional inputs arrive. It works with three gates, namely the forget, input (write), and output (read) gates.

- Restricted Boltzmann Machine (RBM): comprises a visible (input) layer and a hidden layer, and aims at maximising the probability of the visible layer's data.

- Deep Belief Network (DBN): comprises a visible layer and several hidden layers, and helps in reconstructing and disentangling training data that are represented hierarchically.

- Deep Reinforcement Learning (DRL): enables software agents to learn by themselves to generate useful policies that meet medical needs over the long term. Its drawbacks are that it lacks moderately sized data sets and cannot detect signals in offline mode.
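For reference, the standard LSTM cell equations show how the three gates regulate the memory cell. Here $x_t$ is the input at step $t$, $h_{t-1}$ the previous hidden state, $c_t$ the cell state, $W$, $U$, $b$ learned weights and biases, $\sigma$ the logistic sigmoid, and $\odot$ element-wise multiplication; the surveyed papers do not give these formulas, so this is the textbook formulation rather than any specific author's variant.

```latex
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{forget gate} \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{input (write) gate} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{output (read) gate} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{candidate cell state} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{memory cell update} \\
h_t &= o_t \odot \tanh(c_t) && \text{hidden state (output)}
\end{aligned}
```

The forget gate decides how much of $c_{t-1}$ survives, the input gate how much new content is written, and the output gate how much of the cell is exposed as $h_t$.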

- Management processes’ coordination: Here, the report identifies the blackboard pattern as the reference point for coordinating management processes. This function is achieved through other patterns, including:

- Knowledge pattern: focuses on implementing autonomic computing. It addresses the scalability challenges and limitations caused by centralizing knowledge in healthcare. These challenges can be eliminated by decomposing the knowledge into three categories, sensory, context, and procedural knowledge, for better management of the IoT system.

- Cognitive monitoring management pattern: designed for specific devices, unifying the data they generate in terms of syntax and representation. It addresses the problem that new IoT devices require new software to be developed to support their functionality, which is expensive. The pattern makes this easier by providing a system that uses human visualization to retrieve data and receive notifications of any change in the situation. Human visualization lets the system be managed by modifying settings, allowing the IoT system to get help from specialists and acquire knowledge.

- Predictive cognitive management pattern: an extension of the above pattern that focuses on modeling and coordinating monitoring, analysis, and expert trends. It decouples the interaction of the monitoring process from the sensory and context data to provide new insights about the elements under examination.

- Semantic integration: Semantic integration is another category of system patterns, with the following subcategory:

- Semantic knowledge mediator: used to improve the management of systems built with IoT technology. It integrates several sources to obtain a detailed understanding of business, context, system, and environmental knowledge. The author stresses that the mediator eliminates the heterogeneity challenges associated with distributing and representing knowledge. It does so by providing resources that foster collaboration among different types of knowledge providers when analyzing knowledge, and by offering flexible, extensible means of incorporating new knowledge sources.

- Big data and scalability: This category focuses on monitoring and assessing the primary processes facing big data problems. This is done by the big data stream detection pattern, which reduces the velocity and volume of data to enable their real-time integration. The pattern solves these problems effectively and at low cost, as opposed to the manual process, which is time-consuming and costly. The big data analytic predictive pattern, an extension of the above pattern, also enhances scalability: it supports big data batch processing, borrows heavily from how the human mind processes information, and operates by importing data from external databases and harmonizing them in long-term memory. The authors also propose the management process multi-tenant pattern, which brings scalability by decoupling and deploying the primary functions [29].

Ma’arif, Setiawan, Priyanto and Cahyo [30] also address big data in the healthcare setting. They focus on developing a cost-effective system that helps health centres monitor and analyse sensor data effectively, and they advocate a system with the following architecture.

- Sensing unit: collects vital information from the patient’s body. It is built with an ESP8266 controlled by NodeMCU, which gathers data including, but not limited to, heart rate, body temperature, and blood pressure.

- Data processing unit: developed according to the lambda architecture, a framework for designing data processing so that the system can handle very large volumes of data. The architecture combines batch processing, which provides fault tolerance over the complete data set, with real-time processing, which keeps latency low and provides real-time access to data.

- The lambda architecture relies on three layers, speed, batch, and serving, which make it efficient at handling massive data in a timely manner. The speed layer handles the most recent data and provides a real-time view of the patient’s vital signs as registered by the sensors; the batch layer manages historical data; and the serving layer indexes the batch views.

- Presentation unit: contains mobile and web applications that help users, here health care providers, access the data in the system.

- Communication protocol: comprises several parts specialised in coordinating the components of the system. For instance, one interface mediates the interaction between the sensing and data processing units, and an application programming interface (API) connects the processing unit and the presentation unit so that users can access the information produced by the whole process.
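The interplay of the three lambda layers can be sketched in Python using a running average of one vital sign per patient. The class and method names are hypothetical: the batch layer recomputes its view from the full history, the speed layer keeps incremental state for readings that arrived after the last batch run, and the serving layer merges both. Resetting the speed layer right after each batch run is a simplification of how production systems hand data over between layers.

```python
from collections import defaultdict


class LambdaArchitecture:
    """Toy lambda architecture for one vital sign per patient."""

    def __init__(self):
        self.master_dataset = []  # immutable, append-only history of (patient, value)
        self.batch_view = {}      # patient -> (sum, count) over batch-processed data
        self.realtime_view = defaultdict(lambda: [0.0, 0])  # patient -> [sum, count] since last batch

    def ingest(self, patient, value):
        """New readings go to both the master dataset and the speed layer."""
        self.master_dataset.append((patient, value))
        self.realtime_view[patient][0] += value
        self.realtime_view[patient][1] += 1

    def run_batch(self):
        """Batch layer: recompute the view from the full history, then reset the speed layer."""
        view = defaultdict(lambda: [0.0, 0])
        for patient, value in self.master_dataset:
            view[patient][0] += value
            view[patient][1] += 1
        self.batch_view = {p: tuple(v) for p, v in view.items()}
        self.realtime_view.clear()

    def query_average(self, patient):
        """Serving layer: merge the batch view with the real-time view."""
        b_sum, b_n = self.batch_view.get(patient, (0.0, 0))
        r_sum, r_n = self.realtime_view.get(patient, (0.0, 0))
        n = b_n + r_n
        return (b_sum + r_sum) / n if n else None


la = LambdaArchitecture()
la.ingest("p1", 70)
la.ingest("p1", 80)
la.run_batch()                 # batch view for p1: (150.0, 2)
la.ingest("p1", 90)            # so far only in the real-time view
print(la.query_average("p1"))  # 80.0
```

The query stays correct between batch runs because the serving layer adds the speed layer's partial sums to the batch view instead of waiting for the next recomputation.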

5.2. Transportation

6. Conclusion

References

1. Saha, A. K., K.A.T.V.; Das, S. Big Data and Internet of Things: A Survey. 2018 International Conference on Advances in Computing, Communication Control and Networking (ICACCCN). Greater Noida (UP), India, 2018, pp. 150–156.

2. Lu, S.Q.; Xie, G.; Chen, Z.; Han, X. The management of application of big data in internet of thing in environmental protection in China. 2015 IEEE First International Conference on Big Data Computing Service and Applications. IEEE, 2015, pp. 218–222.

3. Landset, S.; Khoshgoftaar, T.M.; Richter, A.N.; Hasanin, T. A survey of open source tools for machine learning with big data in the Hadoop ecosystem. Journal of Big Data 2015, 2, 24.

4. Apache Hadoop. https://hadoop.apache.org/, 2019.

5. Ounacer, S., T.M.A.A.S.D.A.; Azouazi, M. A New Architecture for Real Time Data Stream Processing. International Journal of Advanced Computer Science and Applications 2017, 8, 44–51.

6. International Business Machines (IBM). Internet of Things, 2019.

7. Karve, R.; Dahiphale, D.; Chhajer, A. Optimizing cloud MapReduce for processing stream data using pipelining. 2011 UKSim 5th European Symposium on Computer Modeling and Simulation. IEEE, 2011, pp. 344–349.

8. Satoh, I. MapReduce-based data processing on IoT. 2014 IEEE International Conference on Internet of Things (iThings), and IEEE Green Computing and Communications (GreenCom) and IEEE Cyber, Physical and Social Computing (CPSCom). IEEE, 2014, pp. 161–168.

9. Jafar, M.J.; Babb, J.S.; Abdullat, A. Emergence of data analytics in the information systems curriculum. Information Systems Education Journal 2017, 15, 22.

10. Jesse, N. Internet of things and big data–the disruption of the value chain and the rise of new software ecosystems. IFAC-PapersOnLine 2016, 49, 275–282.

11. Ahmed, E., Y.I.H.I.A.T.K.I.A.A.I.A.I.M.; Vasilakos, A.V. The role of big data analytics in Internet of Things. Computer Networks 2017, 129, 459–471.

12. Chou, S.C.; Yang, C.T.; Jiang, F.C.; Chang, C.H. The Implementation of a Data-Accessing Platform Built from Big Data Warehouse of Electric Loads. 2018 IEEE 42nd Annual Computer Software and Applications Conference (COMPSAC) 2018, 2, 87–92.

13. Maarala, A.I.; Rautiainen, M.; Salmi, M.; Pirttikangas, S.; Riekki, J. Low latency analytics for streaming traffic data with Apache Spark. 2015 IEEE International Conference on Big Data (Big Data). IEEE, 2015, pp. 2855–2858.

14. Apache Hive TM. http://hive.apache.org/, 2019.

15. Ta-Shma, P., A.A.G.G.G.H.G.C.F.; Moessner, K. An ingestion and analytics architecture for iot applied to smart city use cases. IEEE Internet of Things Journal 2017, 5, 765–774.

16. Miorandi, D., S.S.D.P.F.; Chlamtac, I. Internet of things: Vision, applications and research challenges. Ad Hoc Networks 2012, 10, 1497–1516.

17. Malik, V.; Singh, S. Cloud, Big Data & IoT: Risk Management. 2019 International Conference on Machine Learning, Big Data, Cloud and Parallel Computing (COMITCon). Faridabad, India, 2019, pp. 258–262.

18. Sassi, M. S. H., J.F.G.; Fourati, L.C. A New Architecture for Cognitive Internet of Things and Big Data. Procedia Computer Science 2019, 159, 534–543.

19. L. Zhu, F. R. Yu, Y.W.B.N.; Tang, T. Big Data Analytics in Intelligent Transportation Systems: A Survey. IEEE Transactions on Intelligent Transportation Systems 2019, 20, 383–398.

20. Yadav, P.; Vishwakarma, S. Application of Internet of Things and Big Data towards a Smart City. 2018 3rd International Conference On Internet of Things: Smart Innovation and Usages (IoT-SIU). Bhimtal, 2018, pp. 1–5.

21. Ma, H.D. Internet of things: Objectives and scientific challenges. Journal of Computer Science and Technology 2011, 26, 919–924.

22. Collins, E. Intersection of the cloud and big data. IEEE Cloud Computing 2014, 1, 84–85.

23. Elshawi, R.; Sakr, S.; Talia, D.; Trunfio, P. Big data systems meet machine learning challenges: Towards big data science as a service. Big Data Research 2018, 14, 1–11.

24. Rathore, M. M., A.A.; Paul, A. The Internet of Things based medical emergency management using Hadoop ecosystem. 2015 IEEE SENSORS. IEEE, 2015, pp. 1–4.

25. Taher, N. C., M.I.A.N.; El-Mawass, N. An IoT-Cloud Based Solution for Real-Time and Batch Processing of Big Data: Application in Healthcare. 2019 3rd International Conference on Bio-engineering for Smart Technologies (BioSMART). IEEE, 2019, pp. 1–8.

26. Chhowa, T.T.; Rahman, M.A.; Paul, A.K.; Ahmmed, R. A Narrative Analysis on Deep Learning in IoT based Medical Big Data Analysis with Future Perspectives. 2019 International Conference on Electrical, Computer and Communication Engineering (ECCE) 2019, pp. 1–6.

27. A. Zameer, M. Saqib, V.R.N.; Ahmed, I. IoT and Big Data for Decreasing Mortality rate in Accidents and Critical illnesses. 2019 4th MEC International Conference on Big Data and Smart City (ICBDSC). Muscat, Oman, 2019, pp. 1–5.

28. Rei, J., B.C.; Sousa, A. Assessment of an IoT Platform for Data Collection and Analysis for Medical Sensors. 2018 IEEE 4th International Conference on Collaboration and Internet Computing (CIC). Philadelphia, PA, 2018, pp. 405–411.

29. Mezghani, E., E.E.; Drira, K. A model-driven methodology for the design of autonomic and cognitive IoT-based systems: Application to healthcare. IEEE Transactions on Emerging Topics in Computational Intelligence 2017, 1, 224–234.

30. Ma’arif, M. R., P.A.S.C.B.; Cahyo, P.W. The Design of Cost Efficient Health Monitoring System based on Internet of Things and Big Data. 2018 International Conference on Information and Communication Technology Convergence (ICTC) 2018, pp. 52–57.

31. Nkenyereye, L.; Jang, J.W. Integration of big data for querying CAN bus data from connected car. 2017 Ninth International Conference on Ubiquitous and Future Networks (ICUFN). IEEE, 2017, pp. 946–950.

32. Xie, J.; Luo, J. Construction for the city taxi trajectory data analysis system by Hadoop platform. 2017 IEEE 2nd International Conference on Big Data Analysis (ICBDA). IEEE, 2017, pp. 527–531.

33. Prathilothamai, M., L.A.M.S.; Viswanthan, D. Cost Effective Road Traffic Prediction Model using Apache Spark. 9 (May) 2016.

34. Amini, S.; Gerostathopoulos, I.; Prehofer, C. Big data analytics architecture for real-time traffic control. 2017 5th IEEE International Conference on Models and Technologies for Intelligent Transportation Systems (MT-ITS) 2017, pp. 710–715.

35. Zeng, G. Application of big data in intelligent traffic system. IOSR Journal of Computer Engineering 2015, 17, 01–04.

36. Liu, D. Big Data Analytics Architecture for Internet-of-Vehicles Based on the Spark. 2018 International Conference on Intelligent Transportation, Big Data & Smart City (ICITBS). IEEE, 2018, pp. 13–16.

37. Guo, L.; Dong, M.; Ota, K.; Li, Q.; Ye, T.; Wu, J.; Li, J. A secure mechanism for big data collection in large scale Internet of vehicle. IEEE Internet of Things Journal 2017, 4, 601–610.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).