1. Introduction

Populations in low- and middle-income countries (LMICs), such as those in sub-Saharan Africa, are experiencing a triple burden of malnutrition, characterised by a long-term, steady rise in undernutrition, micronutrient deficiencies and the emergence of obesity and non-communicable diseases such as hypertension and type 2 diabetes [1,2], with poor dietary intake being a primary contributor. The traditional diets of these populations are mostly composed of starchy foods served with small amounts of green leafy vegetables and little to no animal-source foods [3]. As processed foods become more accessible and affordable globally, ingredients such as refined flour, sugar, rice, cooking oil, and saturated and trans fats have been added to these diets [4]. In addition, affluent urban populations in these countries are being drawn to nutritionally poor, Western-style fast foods, producing a malnutrition spectrum comprising both undernutrition and overnutrition (overweight and obesity), with serious adverse health and economic implications [5].

Despite a good general understanding of the types of foods eaten in LMICs, existing knowledge of the amounts, energy and nutrient content (i.e., carbohydrate, fat, protein, vitamins and minerals) of the foods consumed across different populations, households and individuals is inadequate, contributing to a significant nutritional data gap [6]. Among other things, this gap is attributable to the limited availability of nutritional assessment tools, including dietary intake assessment methods. Accurate assessment of dietary intake is very challenging, even in high-income countries. Most current methods rely on self-reported intake, which is subject to random and sometimes systematic errors [7]. The transition from traditional pen-and-paper methods that rely solely on memory to web- and computer-based methods with picture aids for portion size estimation has made significant strides towards accurate estimation of dietary intake, although reporting errors remain a challenge [8]. However, deploying web- and computer-based methods in LMICs is problematic owing to cost, low literacy, low computer ownership and a lack of reliable internet connectivity. Other approaches, such as mobile phone-based methods, look promising [9]. However, these methods also require an understanding of the phone's commands and instructions to capture food images, which might be difficult for populations with low literacy. In addition, capturing food images with a mobile phone might be burdensome for large households, especially those with young children, where parents or caregivers are expected to photograph both their own food intake and that of the children, presenting a challenge to their use in LMICs. Methods that are easy to use in populations with low literacy, do not require a computer or internet connectivity, and place less burden on the user might be valuable alternatives to current dietary intake assessment methods.

An objective, passive, image-based dietary intake assessment method was developed for use in LMIC households [10]. This method uses wearable camera devices to capture images of food intake progressively during eating occasions, and custom software to estimate the amount of food eaten and its nutrient content. Each individual in a household is assigned a wearable camera device that, when switched on, automatically captures images of food intake without any active role of the wearer in image capture. Manual and automated approaches are then applied to the captured images to identify foods, estimate portion sizes and calculate nutrient intake.

This paper reports the findings of a pilot study that assessed a) the acceptability and functionality of wearable camera devices for food image capture, and b) the relative validity of the passive image-based method for estimating portion size and nutrient intake compared with weighed food records, among adults and children of Ghanaian and Kenyan origin living in London, United Kingdom (UK). The aim was to provide evidence to support the deployment and further testing of the devices in households in LMICs.

2. Materials and Methods

2.1. Study Population

This study was carried out between December 2018 and July 2019. Adults and children living in London, UK, who identified themselves as of Ghanaian or Kenyan origin were recruited through poster adverts, word of mouth and referrals. Adults and children were recruited and enrolled separately into two sub-studies.

For the adult sub-study, interested adults were invited to the National Institute for Health Research (NIHR) Clinical Research Facility (CRF) at Hammersmith Hospital, Imperial College London, UK, for eligibility assessment and informed consent. A potential participant was eligible if they were an adult (≥18 years) of Ghanaian or Kenyan origin, ate food of Ghanaian or Kenyan origin, had no known food allergies and were willing to wear a camera device during eating. Eligible adults were given a participant information sheet and consent form to read and discuss with study staff before deciding whether to provide informed consent, and had up to 48 hours from the visit date to do so. Consenting adults were enrolled into the study and allocated to a study group.

Participants for the child sub-study were enrolled through recruitment of households in London. Study staff visited interested households for eligibility assessment and informed consent. Households were eligible if they were of Ghanaian or Kenyan origin, had one or more children aged 0-17 years and cooked foods of Ghanaian or Kenyan origin, and if the child or children had no food allergies and were willing to wear a camera device during eating. Household heads (mainly mothers) in eligible households were given a participant information sheet to read and discuss with study staff, and an informed consent form. Assent was sought from minors (aged 13-17 years) who were able to read. Consenting households were enrolled into the study and assigned a household identification number. The study is registered at www.clinicaltrials.gov as NCT03723460.

2.2. Study Design

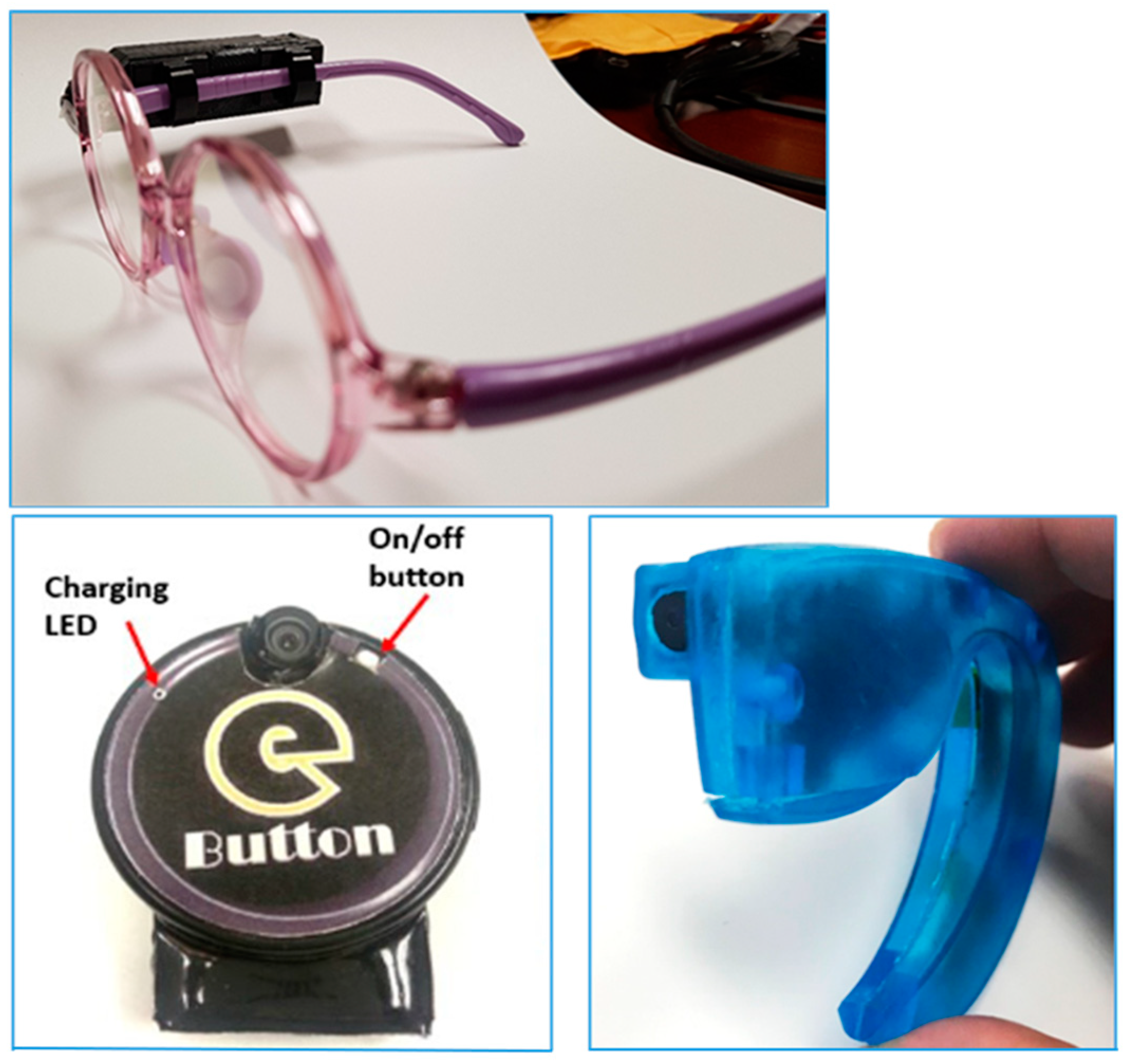

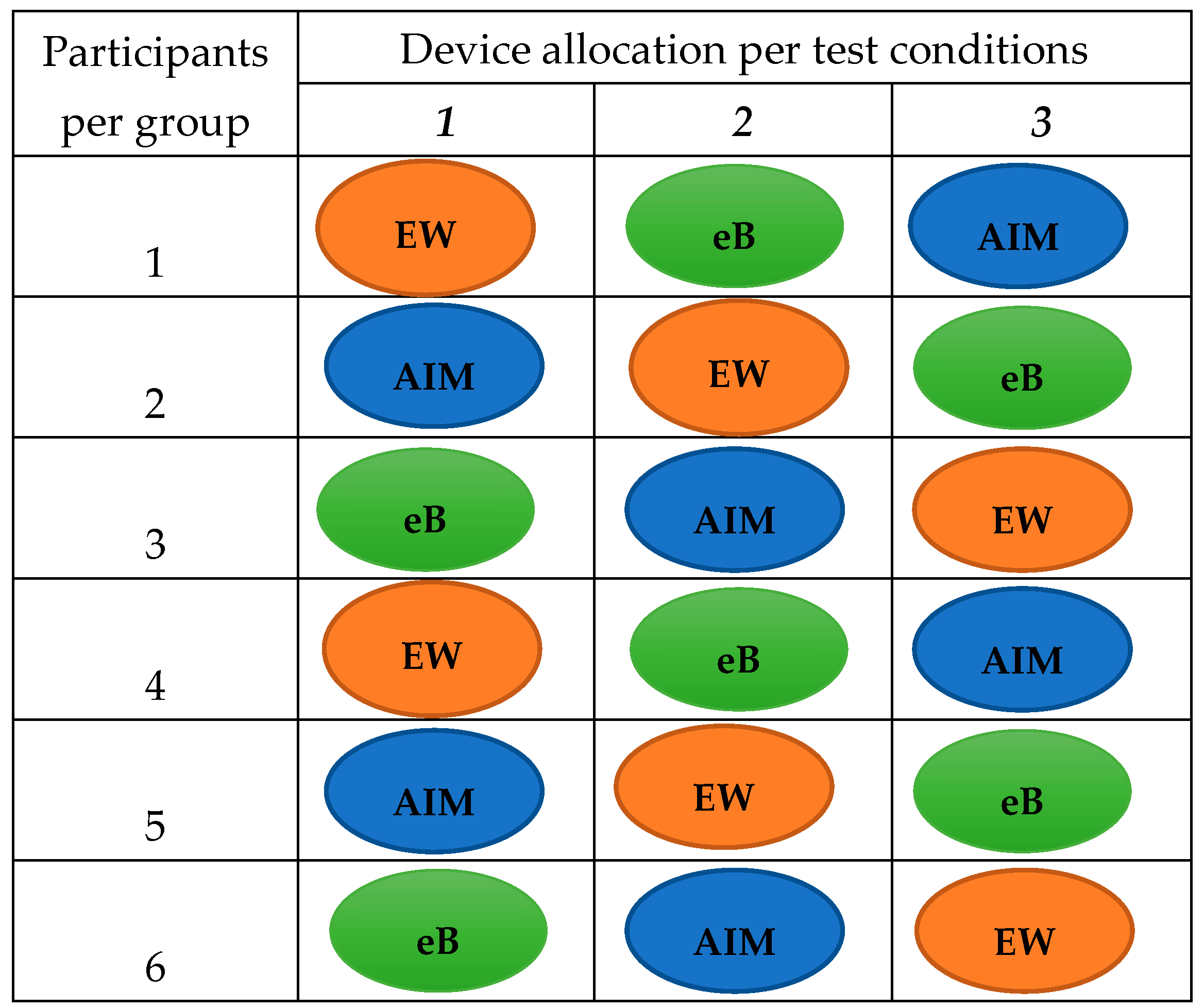

This study was designed to test the acceptability and functionality of wearable camera devices for passive capture of food images during eating episodes, and the validity of using the captured images to estimate food portion size and nutrient intake in comparison with observed weighed food records. The devices were tested in London among populations representative of LMICs, under conditions similar to those in LMICs, such as using indigenous foods, eating in a dimly lit room (mimicking inadequate electricity supply) and eating from shared plates (i.e., where two or more people eat from a single plate of food). Three wearable camera devices (Figure 1) were used by participants during eating occasions to capture images of their food intake [11,12]: a) AIM (Automatic Ingestion Monitor), a micro-camera device attached to the frame of eyeglasses; b) eButton, a circular camera device that attaches to clothing; and c) ear-worn, a micro-camera device worn on the ear that resembles a Bluetooth headset. The devices captured an image every 5-15 seconds. The study was carried out in laboratory and household settings among adults and children, respectively, to understand the strengths and weaknesses of the camera devices and to provide evidence to support their deployment in LMICs. The outcomes of interest were: a) participants' grading of the acceptability of the devices, b) an independent assessor's grading of the functionality of the devices, and c) estimates of food portion size and nutrient intake from weighed food records and from food images captured by the wearable devices.

2.3. Testing of the devices in adults

A detailed protocol describing the procedure for testing the devices in the study population has been published previously [10]. Briefly, adult participants were divided into groups, and each group visited the CRF at Imperial College London three times, once a week over a three-week period. On each visit, participants were provided with foods of Ghanaian and Kenyan origin, pre-weighed using a Salter Brecknell scale (Smethwick, UK), and a wearable camera device (AIM, eButton or ear-worn). Across the three visits, participants completed three study activities: a) eating a meal in a well-lit room, b) eating a meal in a poorly lit room, and c) eating a meal from a shared plate. On each visit, participants completed one activity using one device (Figure 2). Participants were asked to eat the pre-weighed meal until full, and leftover foods were weighed and recorded to complete the observed weighed food records. At the end of each eating episode, images captured by the wearable camera devices were uploaded onto a computer and transferred to secure cloud storage for subsequent assessment of device functionality and estimation of food portion sizes.

At the end of each visit, participants completed a questionnaire assessing their perception of the acceptability of each device. The questionnaire asked participants to rate ease of use, convenience and likelihood of using a similar device in the future on a scale of 1-5 (low to high), and to choose their preferred device among the three. Study staff did not interact with participants while they completed the questionnaire, to avoid biasing their responses.

2.4. Testing of the devices in children

Households that consented and enrolled in the study were visited by study staff on two days. On each day, a meal of Ghanaian or Kenyan origin was cooked. Before and during cooking, study staff and the household cook used a weighing scale (Salter Brecknell, Smethwick, UK) to weigh and record the weights of all ingredients used. At the end of cooking, a portion of the meal was served onto a plate, weighed and recorded, and given to each child in the household to eat. A wearable camera device (eButton or AIM) was placed on each child to capture images of the eating episode. Only one camera device was used per child on each visit, and the ear-worn device was not tested in children. Children were asked to eat ad libitum. At the end of the eating occasion, leftover foods were weighed (post-weight) and recorded to complete the weighed food records. Images captured by the devices were uploaded onto a laptop computer and subsequently transferred to secure cloud storage, where they were used to assess device functionality and to estimate portion size and nutrient intake.

At the end of each visit, participants completed a questionnaire assessing the acceptability of the devices. It asked for ratings from 1-5 (low to high) of ease of use, convenience, interference with eating and likelihood of using a similar device in the future, and for their preferred device. Parents were allowed to complete the questionnaire for younger children unable to provide coherent answers. Study staff did not interact with participants during completion of the acceptability questionnaire, to avoid biasing their responses.

2.5. Assessment of functionality of the camera devices

To provide an independent assessment of device functionality, engineers experienced in image processing at the National Electronic and Computer Technology Center (NECTEC), Thailand, who were not present during data collection, were given access to the secure cloud storage containing the food images. To visually estimate the portion size of foods captured in images, a trained dietitian or nutritionist needs clear images of the food plate at the start, during and at the end of eating. Functionality was therefore assessed as the ability of the wearable camera devices to progressively capture quality images of an entire eating occasion from start to end.

Images of each eating episode were labelled by study activity and device used, and arranged chronologically so that they could be viewed from the beginning to the end of the eating occasion. An assessor reviewed the image files from each device and rated them for clarity, visibility of the full food plate at the beginning of eating, visibility of the progression of eating, and visibility of the full food plate at the end of eating. Each characteristic was scored from 1 to 5 (low to high imaging quality) to allow comparison between devices.

2.6. Assessment of portion size of food captured on images

Before the captured images were used to assess portion size, images of complete eating episodes were processed to remove artefacts inherent in wearable camera imaging, including barrel distortion, motion blur and dark images due to poor lighting. Blurred images were removed manually. Barrel distortion was corrected and dark images were enhanced using previously described protocols [13].
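The corrections themselves follow the protocols in [13], which are not reproduced here. Purely to illustrate the two kinds of correction, the sketch below assumes a one-parameter radial model for barrel distortion and a gamma curve for brightening dark images. The function names, the coefficient `k1`, the normalisation `norm` and the gamma value are hypothetical choices, not the published protocol.

```python
def undistort_point(x, y, cx, cy, k1, norm):
    """Approximately correct barrel distortion for one pixel coordinate.

    Assumed one-parameter radial model (hypothetical, not the protocol of [13]):
        p_u = c + (p_d - c) * (1 + k1 * r^2)
    where c = (cx, cy) is the distortion centre and r is the distance from c
    divided by `norm` (e.g. half the image diagonal). A positive k1 pushes
    points away from the centre, counteracting barrel distortion.
    """
    dx, dy = x - cx, y - cy
    r2 = (dx * dx + dy * dy) / (norm * norm)
    scale = 1.0 + k1 * r2
    return cx + dx * scale, cy + dy * scale


def brighten(value, gamma=0.5):
    """Gamma-correct an 8-bit intensity; gamma < 1 lifts dark tones."""
    if not 0 <= value <= 255:
        raise ValueError("expected an 8-bit intensity (0-255)")
    return round(255 * (value / 255) ** gamma)


# Hypothetical 640x480 frame: centre (320, 240), norm = half-diagonal (400 px).
print(undistort_point(320, 240, 320, 240, k1=0.1, norm=400))  # centre stays put
print(undistort_point(720, 240, 320, 240, k1=0.1, norm=400))  # edge point moves outward
print(brighten(64))  # a dark pixel is lifted towards mid-grey
```

In practice the correction is applied by resampling every pixel of the image, and `k1` would be estimated from a calibration target for each camera model.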

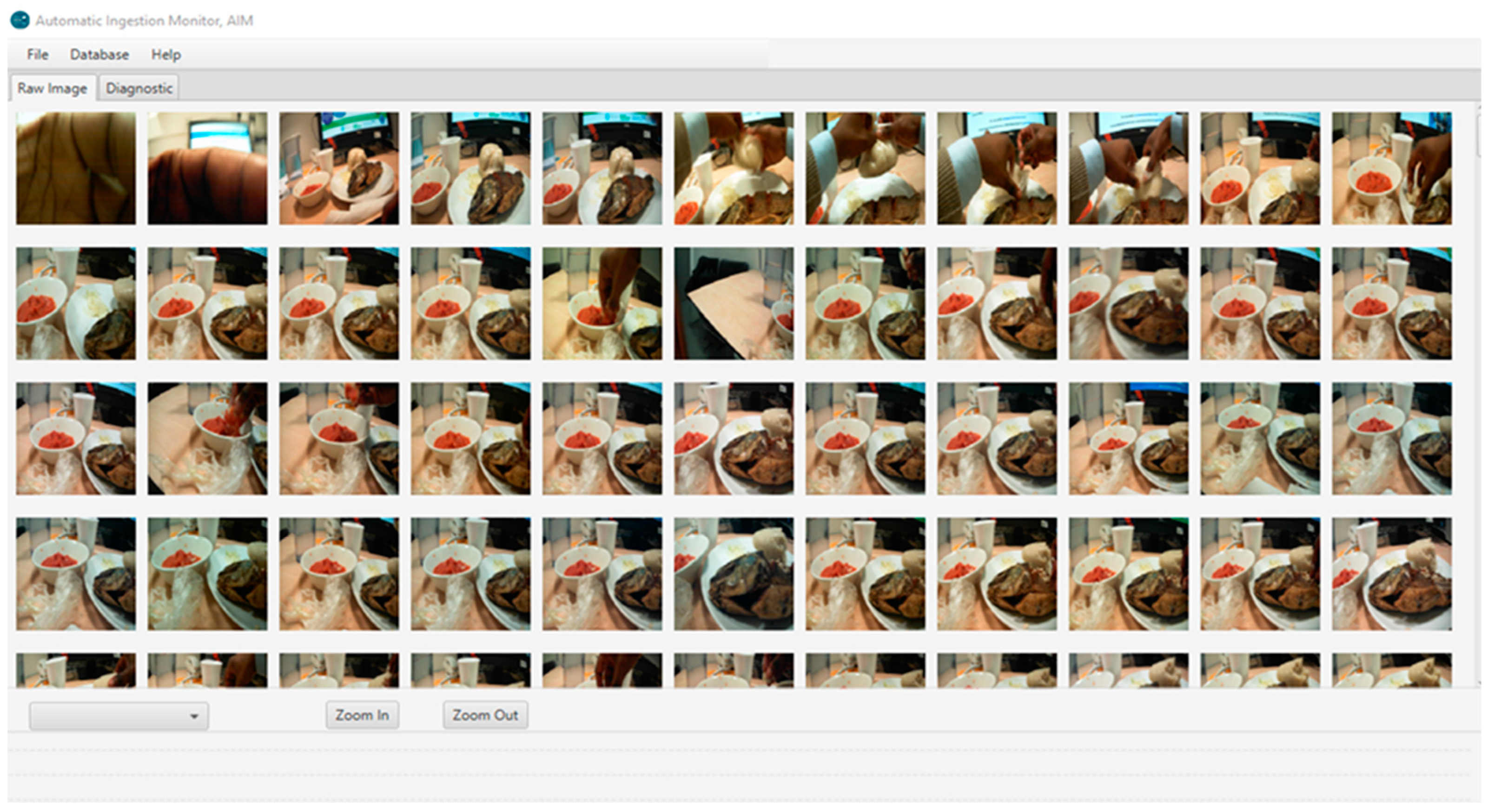

Portion size estimation from food images was conducted by a trained dietitian other than the one who conducted the weighed food records. Images for each eating occasion were viewed using custom Java-based software available in the AIM software [14], which allowed either simultaneous or sequential review of all images from each eating occasion and zooming in and out on particular food items (Figure 3). Before estimation, all images within a meal were reviewed sequentially to gain an overview of the meal process, e.g., whether additional portions were added to the plate or bowl, and whether all food items were consumed in full or there were leftovers. The best-quality images at the start and end of the meal, and any others as needed, were selected for estimation of portion size; in some cases, more than one image was used to obtain the best view of each item. Portion size aids (the Hess book [15] and the Kenyan [16] and Ghanaian [17] Food Atlases) and comparison of foods with common reference objects were used to estimate portion sizes. Reference objects included serve ware (plates, bowls, cups, eating utensils, etc.) and hands or fingers appearing in images next to the foods. Most foods were estimated as a volume in ml and converted to weight using estimated densities (g/ml) from INFOODS (International Network of Food Data Systems) [18], while some foods, such as meats, were estimated directly in ounces and converted to grams (1 oz = 28.4 g). For each food item with a leftover uneaten portion, the leftover estimate was subtracted from the initial portion estimate to obtain the estimated portion consumed.
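The conversion and subtraction steps above can be sketched as follows. The function names are hypothetical, and the density in the example is an illustrative placeholder rather than an INFOODS value; only the 28.4 g/oz factor comes from the text.

```python
OZ_TO_G = 28.4  # conversion factor stated in the text (1 oz = 28.4 g)


def volume_to_grams(volume_ml, density_g_per_ml):
    """Convert an estimated volume (ml) to weight (g) using a food density (g/ml)."""
    return volume_ml * density_g_per_ml


def ounces_to_grams(ounces):
    """Convert a direct estimate in ounces (used e.g. for meats) to grams."""
    return ounces * OZ_TO_G


def grams_consumed(initial_g, leftover_g=0.0):
    """Portion consumed = initial portion estimate minus leftover estimate."""
    if leftover_g > initial_g:
        raise ValueError("leftover estimate exceeds initial portion")
    return initial_g - leftover_g


# Hypothetical item: 250 ml served, 50 ml left over, assumed density 0.8 g/ml.
density = 0.8  # placeholder, not an INFOODS value
served = volume_to_grams(250, density)
left = volume_to_grams(50, density)
print(round(grams_consumed(served, left), 1))  # 160.0 g eaten

# Hypothetical meat item estimated at 3 oz with nothing left over.
print(round(ounces_to_grams(3), 1))  # 85.2 g
```

Converting both the served and leftover volumes with the same density before subtracting keeps the consumed weight consistent with the density chosen for that food.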

2.7. Assessment of nutrient content of foods

Energy and nutrient intake analyses were conducted only in the child cohort, for which complete household recipe information was available. Analyses of food and nutrient intake were conducted on discrete eating occasions; shared plates were not included. Study-specific, standardised recipes were developed for each eating occasion using the recipe information collected in the households (child sub-study). The nutritional composition of each recipe was calculated using the nutritional analysis software Dietplan 7.0 (Forestfield Software Ltd, Horsham, UK), based on the McCance and Widdowson's 7th Edition Composition of Foods UK Nutritional Dataset (UKN) [19]. Ingredients were matched to the appropriate UKN food code within 10% of energy and macronutrient content and were entered in their 'raw' form. Recipe ingredients not found in the UKN were added to the database as study-specific foods, with their nutrient composition per 100 g estimated from West African [20] and Kenyan [16] food composition tables. A factor was applied to each recipe to account for changes in ingredient weight due to water lost or gained during cooking. Nutrient intake from the foods consumed was estimated for each participant using Dietplan 7.0 [21], with portion size estimates from both the weighed food records and the food images.
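The recipe-based calculation described above can be sketched as follows, assuming that nutrients are conserved during cooking (no nutrient loss factors) and that the recipe factor is expressed as the ratio of cooked to raw recipe weight. This is not Dietplan's internal calculation; the function names, ingredient values and yield factor in the example are hypothetical.

```python
def recipe_per_100g(ingredients, yield_factor):
    """Nutrient content per 100 g of cooked recipe.

    ingredients: list of (raw_weight_g, {nutrient: amount_per_100g_raw})
    yield_factor: cooked weight / raw weight (assumed form of the recipe
                  factor; < 1 for net water loss, > 1 for net water gain).
    """
    totals = {}
    raw_weight = 0.0
    for weight_g, per_100g in ingredients:
        raw_weight += weight_g
        for nutrient, amount in per_100g.items():
            totals[nutrient] = totals.get(nutrient, 0.0) + amount * weight_g / 100
    cooked_weight = raw_weight * yield_factor
    return {n: total * 100 / cooked_weight for n, total in totals.items()}


def intake(per_100g_cooked, portion_g):
    """Nutrient intake for a portion (g) eaten, from per-100 g cooked values."""
    return {n: v * portion_g / 100 for n, v in per_100g_cooked.items()}


# Hypothetical stiff porridge: 500 g flour + 1000 g water, 10% weight loss on cooking.
recipe = [
    (500, {"energy_kcal": 350.0, "protein_g": 9.0}),  # illustrative flour values
    (1000, {"energy_kcal": 0.0, "protein_g": 0.0}),   # water
]
per100 = recipe_per_100g(recipe, yield_factor=0.9)
print({n: round(v, 1) for n, v in intake(per100, portion_g=300).items()})
```

The same per-100 g values serve both methods, so any difference in estimated nutrient intake between the weighed records and the images comes from the portion sizes alone.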

2.8. Statistical analysis

The outcomes of interest were: a) acceptability of the devices, b) functionality of the devices, and c) relative validity of estimates of food intake (portion size), energy and 19 nutrients. Mean ratings of the acceptability and functionality characteristics were calculated and compared between devices using one-way ANOVA (analysis of variance) with Tukey's post-hoc test, and independent (unpaired) samples t-tests. A device was considered acceptable if it received a mean acceptability rating of ≥3. Participants' preferred device was the one chosen by the highest proportion of participants.
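A minimal sketch of the acceptability summaries described above: the mean rating per device checked against the a priori cut-off of 3, the preferred device as the most frequent choice, and the one-way ANOVA F statistic used to compare devices. The ratings in the example are hypothetical. The sketch computes only the F statistic; P values and Tukey post-hoc comparisons would come from a statistics package (for example `scipy.stats.f_oneway` and `scipy.stats.tukey_hsd` in SciPy), not from this code.

```python
from collections import Counter
from statistics import mean

ACCEPTABLE_MEAN = 3.0  # a priori cut-off stated in the text


def acceptability(ratings_by_device):
    """Return {device: (mean rating, acceptable?)} for 1-5 ratings."""
    return {
        device: (mean(ratings), mean(ratings) >= ACCEPTABLE_MEAN)
        for device, ratings in ratings_by_device.items()
    }


def preferred_device(choices):
    """Device chosen by the highest proportion of participants, and that proportion."""
    device, count = Counter(choices).most_common(1)[0]
    return device, count / len(choices)


def one_way_anova_f(*groups):
    """F statistic: between-group mean square / within-group mean square."""
    values = [v for g in groups for v in g]
    grand = mean(values)
    k, n = len(groups), len(values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical ease-of-use ratings for three devices.
ratings = {"AIM": [5, 4, 5, 4], "eButton": [5, 5, 4, 5], "ear-worn": [4, 3, 4, 4]}
print(acceptability(ratings))
print(preferred_device(["eButton", "eButton", "AIM", "eButton", "ear-worn", "AIM"]))
print(one_way_anova_f(*ratings.values()))
```

With two devices (as in the child cohort), the ANOVA F statistic equals the square of the pooled-variance t statistic, which is why an unpaired t-test suffices there.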

Food and nutrient intake data were log-transformed for analysis and back-transformed for reporting. Relative validity of the estimates of food portion size and nutrient intake was established using the Pearson correlation coefficient, the intraclass correlation coefficient (ICC), Bland-Altman analysis and mean percentage differences. The Pearson correlation coefficient was used to show the strength of the linear relationship between estimates of portion size, energy and nutrient intake from the two methods (i.e., weighed food record and passive image-based method). The ICC (two-way mixed, absolute agreement type) and its 95% CI (confidence interval) were used to evaluate the level of agreement between the two methods, interpreted using the cut-offs: poor (ICC <0.5), moderate (ICC 0.5-0.75), good (ICC 0.75-0.90) and excellent agreement (ICC >0.90) [22]. Bland-Altman analysis, giving the mean difference and 95% limits of agreement (LOA) (i.e., mean difference ± 1.96 × SD (standard deviation) of the differences), was conducted to determine the level of agreement between estimates from the two methods [23]. Linear regression of the differences on the means of the estimates of portion size, energy and nutrient intake from the two methods was also used to investigate proportional bias. A mean difference of zero was taken as perfect agreement, indicating no bias [24]. The mean percentage difference was used to quantify the difference in absolute estimates of portion size, energy and nutrient intake between the weighed food record and the passive image-based method.
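The Bland-Altman quantities and the mean percentage difference described above can be sketched in plain Python as follows. Two details are assumptions, because the text does not specify them: differences are taken as passive image-based minus weighed food record (so under-estimation is negative), and the mean percentage difference is computed from the two method means relative to the weighed-record mean. Testing whether the proportional-bias slope differs significantly from zero would also need its standard error, which is omitted here.

```python
from statistics import mean, stdev


def bland_altman(wfr, pib):
    """Bias, 95% limits of agreement and proportional-bias slope.

    wfr, pib: paired estimates (e.g. grams per food item) from the weighed
    food record and the passive image-based method.
    """
    diffs = [p - w for w, p in zip(wfr, pib)]  # PIB - WFR
    avgs = [(w + p) / 2 for w, p in zip(wfr, pib)]
    bias = mean(diffs)
    sd = stdev(diffs)  # SD of the differences
    loa = (bias - 1.96 * sd, bias + 1.96 * sd)

    # Ordinary least-squares regression of differences on means;
    # a slope near zero suggests no proportional bias.
    ma, md = mean(avgs), mean(diffs)
    sxx = sum((a - ma) ** 2 for a in avgs)
    sxy = sum((a - ma) * (d - md) for a, d in zip(avgs, diffs))
    slope = sxy / sxx
    return bias, loa, slope


def mean_percentage_difference(wfr, pib):
    """(mean PIB - mean WFR) / mean WFR x 100; negative = under-estimation."""
    return (mean(pib) - mean(wfr)) / mean(wfr) * 100


# Hypothetical paired portion sizes (g) for five food items.
wfr = [210, 150, 95, 320, 180]
pib = [190, 140, 90, 280, 170]
bias, (lower, upper), slope = bland_altman(wfr, pib)
print(f"bias={bias:.1f} g, LOA=({lower:.1f}, {upper:.1f}) g, slope={slope:.3f}")
print(f"mean % difference = {mean_percentage_difference(wfr, pib):.1f}%")
```

A negative slope here would mean the image-based method under-estimates larger portions by more, which is the pattern the regression step is meant to detect.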

Power calculations for method comparison studies depend on the statistical method chosen, and there is currently no consensus on the best statistical method for assessing the validity of dietary assessment tools. For Bland-Altman limits of agreement analysis, at least 50 pairs of measurements are considered desirable for assessing agreement between methods [23,25]. The study therefore aimed to compare the weighed food record directly with the passive image-based method in estimating portion size and nutrient intake for a minimum of 50 food items in each cohort. All statistical analyses were conducted using IBM SPSS Statistics 26 (IBM Corp.) [26].

P<0.05 was considered statistically significant.
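To illustrate the distinction drawn in this section between correlation (Pearson r) and absolute agreement (ICC), the sketch below computes both for paired estimates and applies the interpretation cut-offs given above. It assumes the single-measures form of the two-way, absolute-agreement ICC (ICC(A,1) in McGraw and Wong's notation); the text does not state whether single- or average-measures values were reported, and the sketch omits the 95% CI. The function names and data are hypothetical.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between paired estimates."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def icc_a1(x, y):
    """Two-way, absolute-agreement, single-measures ICC for two methods.

    ICC(A,1) = (MSR - MSE) / (MSR + (k - 1) * MSE + k * (MSC - MSE) / n)
    rows = food items (n), columns = methods (k = 2).
    """
    rows = list(zip(x, y))
    n, k = len(rows), 2
    grand = mean(x + y)
    ss_rows = k * sum((mean(r) - grand) ** 2 for r in rows)
    ss_cols = n * sum((mean(c) - grand) ** 2 for c in (x, y))
    ss_total = sum((v - grand) ** 2 for v in x + y)
    ss_error = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_error / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def interpret_icc(icc):
    """Cut-offs stated in the text: <0.5, 0.5-0.75, 0.75-0.90, >0.90."""
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.90:
        return "good"
    return "excellent"


# Hypothetical data: the image-based method reads exactly 1 unit high.
wfr = [1.0, 2.0, 3.0]
pib = [2.0, 3.0, 4.0]
print(pearson_r(wfr, pib))              # 1.0: perfect linear relationship
print(interpret_icc(icc_a1(wfr, pib)))  # "moderate": the constant offset lowers agreement
```

The example shows why both statistics are reported: a constant offset leaves r at 1 but is penalised by the absolute-agreement ICC.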

3. Results

3.1. Participant characteristics

Characteristics of the study population are reported in Table 1. The study was conducted between December 2018 and July 2019, during which 35 participants (18 adults and 17 children) were enrolled. In the adult cohort, the mean (range) age was 37.8 (20-71) years; most adults were female (72.2%) and of Ghanaian origin (77.8%). In the child cohort, the mean (range) age was 9 (1-17) years, with similar proportions of girls and boys; most children were from households of Ghanaian origin (82.4%). BMI (body mass index), socioeconomic status, educational attainment and similar characteristics were not collected because they were not pertinent to the study's primary objectives.

3.2. Assessment of acceptability of the devices

The devices received high acceptability ratings: responses to the questions had mean ratings higher than 3, the a priori cut-off for acceptability. In the adult cohort, the mean rating for ease of use was higher for the AIM and eButton devices than for the ear-worn device (4.6 and 4.7 vs 3.7, respectively; P=0.005). Likewise, mean ratings for convenience were higher for the AIM and eButton devices than for the ear-worn device (P=0.04), and a similar trend was observed for the likelihood of future use (P=0.004) (Table 2). Most adults (67%) chose the eButton as their preferred wearable device, compared with 28% and 5% for the AIM and ear-worn devices, respectively (Table 2).

The acceptability of the AIM and eButton devices was further tested in children. The ear-worn device was excluded from testing in children owing to its low acceptability among adult participants. In addition to the acceptability questions asked in the adult cohort, children were asked whether the devices interfered with their normal eating. Overall, the AIM and eButton devices had very similar acceptability among children, with very similar mean ratings for ease of use, convenience and likelihood of future use. Children reported that both devices interfered minimally with their normal eating, although the AIM device interfered less than the eButton (Table 2). In addition, the AIM device was preferred by a slightly higher proportion of children than the eButton (Table 2).

3.3. Assessment of functionality of the devices

The independent assessment of functionality showed some variability in the imaging quality of the wearable devices, although the differences were not statistically significant. In the adult cohort, the AIM and ear-worn devices had higher ratings than the eButton for image clarity (P=0.09) and for visibility of the food plate at the onset (P=0.06) and during eating. The AIM device had higher visibility of the food plate at the end of eating than the ear-worn device and the eButton, which performed similarly (Table 3). In the child cohort, the eButton had higher image clarity than the AIM device (mean ratings 3.7 vs 3.3). However, the AIM device had higher ratings for visibility of the food plate at the onset, during and at the end of eating (Table 3).

3.4. Assessment of validity of food portion size estimation

Dietary data were collected from 70 eating occasions (36 in the adult cohort and 34 in the child cohort), comprising 199 food items (121 in the adult cohort and 78 in the child cohort). Indigenous foods provided in the study included Ghanaian foods such as plantain, yam, banku, jollof rice and fufu, and Kenyan foods such as ugali, pilau and chapati.

In the adult cohort, images were not available in 27.8% (10/36) of the eating occasions, where the ear-worn device failed to capture 7 eating occasions and eButton failed in 3 eating occasions. In the available 26 eating occasions, containing 84 food items, Pearson correlation coefficient showed a significant positive correlation between food portion sizes estimated by weighed food record and the passive image-based method (

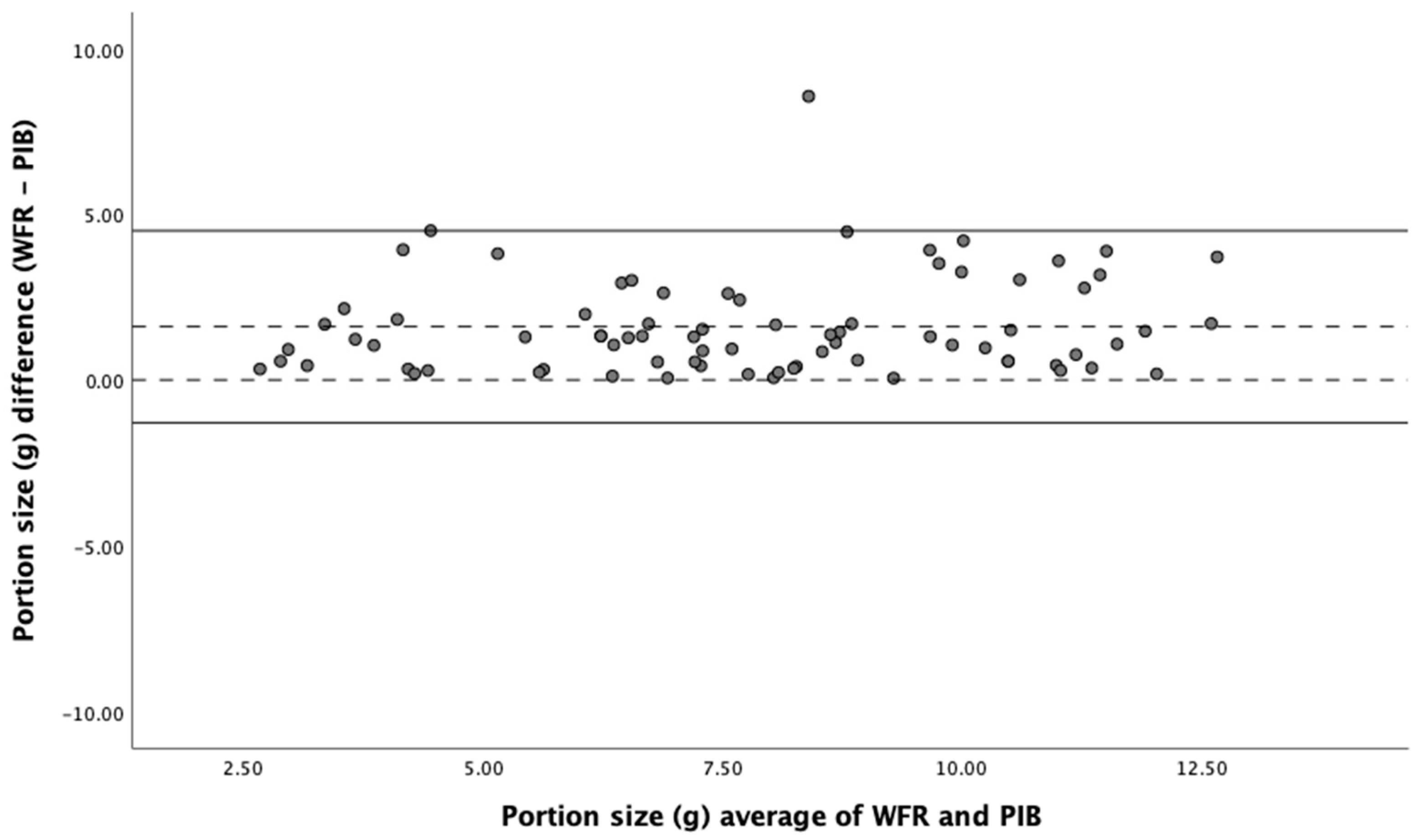

r=0.71, p=0.01); the ICC value was 0.83, indicating good reliability of the passive image-based method. In addition, Bland-Altman analysis showed a good degree of agreement between the two methods (

Figure 4) with no significant bias. All devices had very similar agreement with the weighed food record in a Bland-Altman analysis.

In the child cohort, images were unavailable for only 8.8% (3/34) of the eating occasions, all of them eButton recordings that failed to capture the complete eating episode. In the 31 eating occasions with available images, containing 68 food items, there was a significant positive correlation between estimates of food intake (portion size) from the two methods (r=0.75, P=0.01), and Bland-Altman analysis showed a good degree of agreement with no significant bias (Table 4). The ICC was 0.75, indicating good agreement (reliability) between the two methods for estimating portion size. However, the mean percentage difference in portion size estimates between the two methods was relatively large, indicating under-estimation of up to 14% by the passive image-based method.

Figure 4. Bland-Altman plot of agreement between the weighed food record and the passive image-based method in estimating portion size. Solid lines represent the upper and lower LOA (limits of agreement); dotted lines represent the mean difference and zero. WFR (Weighed Food Record), PIB (Passive Image-Based).

3.5. Assessment of validity of nutrient intake estimation (child study)

Estimates of energy and 19 nutrient intakes from the children's two eating occasions are presented in Table 4. Pearson correlation coefficients (r) between intakes estimated by the weighed food records and the passive image-based method ranged from 0.60 for carbohydrates to 0.95 for zinc, showing a significant positive relationship between the two dietary intake assessment methods. These results were supported by the ICC, which ranged from 0.67 for saturated fatty acids (SFA) and monounsaturated fatty acids (MUFA) to 0.90 for zinc, indicating moderate to excellent agreement between the two methods. Furthermore, Bland-Altman analysis showed a good degree of agreement with no significant bias for most nutrients. However, the mean percentage differences showed that the passive image-based method consistently under-estimated intakes. The mean percentage differences for fats (total fat, SFA, MUFA and polyunsaturated fatty acids (PUFA)) and sodium indicated under-estimation of around 20% by the passive image-based method. Iodine and selenium had the smallest mean percentage differences, -9% and -4%, respectively, which are within the recommended acceptable range of ±10% [28].

4. Discussion

The findings of this study show that using wearable camera devices to capture food images during eating episodes is highly acceptable. The majority of participants (70-88%; data not shown) reported that the devices were convenient, easy to use and did not interfere with their eating. However, comparative analysis showed differences in acceptability between devices. In the adult cohort, participants perceived the AIM and eButton devices as easier to use and more convenient than the ear-worn device, and reported that they would be more likely to use them in future studies. Most (67%) adult participants chose the eButton as their preferred wearable device. The popularity of the eButton could be due to its simple design: it is attached to the chest area of an upper garment using a pin or magnet, making it easy and comfortable to wear, even for extended periods. In contrast, the AIM device is attached to the temple of eyeglasses, so using it requires wearing eyeglasses during eating episodes, which might be challenging, especially for people who do not normally wear eyeglasses. Likewise, the ear-worn device resembles a Bluetooth headset, and wearing it during food intake might be uncomfortable for some people.

All the devices were able to capture images of food intake during eating episodes. However, comparison of the mean functionality ratings showed that the AIM and ear-worn devices had higher image clarity and better imaging of the full food plate at the beginning of and during eating than the eButton. In addition, the AIM device outperformed the other two devices at capturing images of the full food plate at the end of eating events, a critical functional capacity of a wearable camera device for accurate dietary intake assessment. The eButton's diminished ability to capture images of the entire eating event was due to misalignment: because the device is attached to the upper garment, it tends to move out of view with the slightest movement of the wearer. In participants wearing light clothing, such as silky satin fabrics, the weight of the device pulled the clothing down slightly, taking the device out of alignment with the food plate. This weakness was unique to the eButton. In the child study, the eButton was worn around the neck on a lanyard to improve its imaging.

Despite its relatively good imaging quality, the ear-worn device was highly unpopular: only 5% of adult participants chose it as their preferred device. Its unpopularity, which stemmed from difficulty fitting it properly to some participants' ears, together with its failure to capture seven eating occasions, led to the decision not to include it in testing in children. In the child cohort, the eButton and AIM devices had similar acceptability. However, the children narrowly favoured the AIM as their preferred wearable device, and it interfered less with their eating than the eButton. In the child study, the eButton had higher image clarity, but the AIM device performed better at tracking the progression of entire eating occasions. The AIM device sits on eyeglasses worn by the wearer, and people instinctively look at their food when eating; as a result, the device is consistently oriented towards the food plate, contributing to its robust functional capacity.

This study used a London restaurant experienced in African cuisine to provide the foods used in the adult sub-study, but had difficulty obtaining reliable recipe information from the restaurant. Consequently, only portion size estimates from the two methods were compared in the adult sub-study, and no data on energy and nutrient intakes were available. Recipe information from Ghanaian and Kenyan cookbooks could have been used, but these recipes might differ considerably from those used in the London restaurant. In the child sub-study, however, detailed information on the ingredients used during cooking was collected to facilitate comprehensive nutritional analysis.

Multiple statistical approaches were used to assess the relative validity of the passive image-based method in comparison to observed weighed food records. Estimates of food (portion size), energy and nutrient intake from the two methods were significantly and positively correlated (Table 4), with Pearson correlation coefficients ranging from 0.60 to 0.95, indicating a good relationship between the two methods. In validation studies of dietary assessment methods, a correlation coefficient of ≥0.50 is considered a good outcome [28]. In addition, the correlation coefficients obtained in this study are higher than those published in some validation studies of self-report dietary intake assessment methods, such as the FFQ (Food Frequency Questionnaire) [29,30] and 24-hour recall [31]. Since evidence of an association does not necessarily denote agreement, the ICC was used to further test for agreement between the methods. The ICC indicated good agreement between the passive image-based method and the weighed food record, ranging from moderate to excellent across estimates of portion size, energy and 19 nutrients. The ICCs obtained in this study are higher than those reported for online 24-hour dietary recall tools (myfood24 and Oxford WebQ) validated against biomarkers [32,33]. Agreement between the two methods was further investigated using Bland-Altman analysis, which also showed a good degree of agreement, indicating that the passive image-based method is accurate.

However, comparison of absolute intakes of portion size, energy and nutrients between the methods showed that the passive image-based method systematically under-estimated intake. Under-estimation of fat, SFA, MUFA, PUFA and sodium intakes was high (>20%) in the current study, although the mean percent differences for fat and SFA are much lower than those reported elsewhere using an FFQ [30]. The mean percentage under-estimation of energy in the current study was 16%, which is higher than the energy under-reporting (10-12%) reported for the mobile Food Record (mFR), an image-based dietary assessment tool for mobile phones [34], and also higher than the energy under-reporting (8-9%) reported for a wearable camera device (SenseCam) used alongside a 24-hour recall in an image-assisted dietary recall method [35]. Conversely, the under-estimation of energy intake by the current passive image-based method is comparable to that of the ASA24 (Automated Self-Administered 24-hour recall) and much lower than that of the FFQ and 4DFR (4-day food record) [36]. Furthermore, the under-estimation of protein and micronutrients is much lower with the current passive image-based method than with most self-report assessments of dietary intake [30,32,36]. Although estimates of energy and nutrient intakes were not available for the adult sub-study, the Pearson correlation coefficients, ICCs and Bland-Altman analysis of portion size estimates from weighed food records and the passive image-based method were similar to those reported in the child sub-study. Had recipe information been available, it is highly likely that the nutritional outcomes would have been similar to those reported in the child sub-study.
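The four comparisons described above (Pearson correlation, ICC, Bland-Altman agreement and mean percent difference) can be illustrated for a single nutrient with the short Python sketch below. It is a minimal, standard-library illustration under stated assumptions, not the analysis code used in this study. The function names and example intakes are hypothetical; the ICC is assumed to be the two-way, absolute-agreement, single-measure form (ICC(A,1)), because this section does not state which model was used; the limits of agreement are assumed to be bias ± 1.96 SD of the paired differences; and percent difference is assumed to be computed per participant and then averaged. Differences are taken as image-based minus weighed, so negative values indicate under-estimation.

```python
import math
from statistics import mean, stdev


def pearson_r(reference, test):
    """Pearson correlation between paired estimates from two methods."""
    mr, mt = mean(reference), mean(test)
    s_rt = sum((r - mr) * (t - mt) for r, t in zip(reference, test))
    s_rr = sum((r - mr) ** 2 for r in reference)
    s_tt = sum((t - mt) ** 2 for t in test)
    return s_rt / math.sqrt(s_rr * s_tt)


def icc_a1(reference, test):
    """ICC(A,1): two-way model, absolute agreement, single measures.

    ASSUMED model (not stated in the source). Rows are participants and
    the two columns are the two methods; unlike Pearson's r, a systematic
    offset between methods lowers this value.
    """
    rows = list(zip(reference, test))
    n, k = len(rows), 2
    grand = mean([v for row in rows for v in row])
    ss_total = sum((v - grand) ** 2 for row in rows for v in row)
    ss_rows = k * sum((mean(row) - grand) ** 2 for row in rows)
    ss_cols = n * ((mean(reference) - grand) ** 2 + (mean(test) - grand) ** 2)
    ss_err = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + (k / n) * (ms_cols - ms_err)
    )


def bland_altman(reference, test):
    """Bias (mean of test - reference) and assumed 95% limits of agreement."""
    diffs = [t - r for r, t in zip(reference, test)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)
    return bias, bias - half_width, bias + half_width


def mean_percent_difference(reference, test):
    """Mean of per-participant percent differences (assumed definition).

    Negative values indicate that the test method under-estimates intake.
    """
    return mean((t - r) * 100 / r for r, t in zip(reference, test))


if __name__ == "__main__":
    # Hypothetical energy intakes (kcal) for five children; NOT study data.
    weighed = [520.0, 610.0, 450.0, 700.0, 580.0]  # weighed food record
    image = [455.0, 530.0, 400.0, 585.0, 500.0]    # passive image-based
    print("Pearson r:", round(pearson_r(weighed, image), 3))
    print("ICC(A,1):", round(icc_a1(weighed, image), 3))
    bias, lower, upper = bland_altman(weighed, image)
    print(f"Bland-Altman bias: {bias:.1f} kcal, LoA {lower:.1f} to {upper:.1f}")
    print(f"Mean % difference: {mean_percent_difference(weighed, image):.1f}%")
```

Because Pearson's r is unchanged when one method's estimates are shifted or rescaled, it cannot by itself detect a systematic under-estimation such as the one reported above; the absolute-agreement ICC, the Bland-Altman bias and the mean percent difference are all sensitive to it, which is why several statistics are reported together.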

A strength of this study is the use of weighed food records collected by staff to validate an objective image-based method for estimating food, energy and 19 nutrient intakes. Dietary assessment from the weighed food records and from the food images was performed by two different nutritionists/dietitians in different locations to minimize bias. Multiple statistical methods preferred in dietary assessment studies were used [28]. Objective, passive image-based methods offer the potential to minimize the misreporting errors associated with self-report, as well as the reliance on memory and volition (the need to actively capture images) in mobile phone-based methods of dietary intake assessment. The objective method reported in this study is simple, easy to use and applicable in different settings. It does not require the extensive and often time-consuming design of food intake questionnaires involved in self-report methods, the complexity of which varies between settings and populations.

The primary limitations of this study are its small sample size and short duration. A sample size of 35 is smaller than that of most validation studies of dietary assessment tools, and the devices captured food images of only two eating occasions over two days. More extensive use of the devices to capture whole-day (morning to night) food intake might reveal challenges that were not encountered in this pilot study. Image capture of eating occasions in households is considered intrusive and raises privacy concerns. We are committed to maintaining the privacy of study participants and have incorporated measures in our protocol to achieve this. These measures include allowing participants to review the captured images and delete any image they are uncomfortable with. In addition, the image analysis software used in this study can distinguish between food and non-food images, so all non-food images, including any images of faces inadvertently captured during imaging, can be easily deleted. Furthermore, the captured images are stored on a secure server accessible only to the study investigators.

5. Conclusions

In conclusion, this study provides evidence that passive food imaging using wearable camera devices, with subsequent analysis of the images, is an acceptable, reliable, and accurate tool for dietary intake assessment. In this study, food intake was estimated from the images by a trained analyst who visually estimated portion sizes; we are currently automating this process using AI (artificial intelligence) algorithms. The devices are also undergoing further testing in households in Ghana and Uganda to determine the feasibility, acceptability, and validity of our method for large population-based dietary assessment in LMICs.

Author Contributions

The study design was conceived by MLJ and GF. Recruitment of participants and data collection were conducted by MLJ, KKT and JQ. The wearable devices were made by engineers: BL, EZ, MS and WJ. MAM conducted visual estimation of portion sizes from food images. JPG conducted nutritional analysis using DietPlan. MLJ conducted the statistical analyses and was responsible for preparation of the manuscript. KM, AKA, and TB reviewed the manuscript and provided valuable insights. All the authors read and approved the final version of the manuscript.

Funding

This research project was funded by the Bill & Melinda Gates Foundation (funding ID OPP1171395).

Institutional Review Board Statement

Ethics approval for this study was provided by the Imperial College Research Ethics Committee (ICREC); approval references 18IC4780 and 18IC4795 for the adult and child sub-studies, respectively. The research was conducted in accordance with the guidelines prescribed in the Helsinki Declaration of 1975, as revised in 1983. Participants provided written informed consent before participating, and a small remuneration was given for participation in the study.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data supporting the findings reported in this paper are available upon request.

Acknowledgments

We would like to thank all the study participants, especially the families who welcomed us into their homes. We are grateful for the support of the staff at the NIHR Imperial CRF for allowing us to use their facility. We would like to acknowledge the support of Dr Surapa Thiemjarus and her colleagues at the National Electronic and Computer Technology Center (NECTEC), Thailand, for their help with analysing images to assess the functionality of the devices.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Wells, J.C.; Sawaya, A.L.; Wibaek, R.; Mwangome, M.; Poullas, M.S.; Yajnik, C.S.; Demaio, A. The double burden of malnutrition: Aetiological pathways and consequences for health. Lancet 2020, 395, 75–88.

2. Popkin, B.M.; Corvalan, C.; Grummer-Strawn, L.M. Dynamics of the double burden of malnutrition and the changing nutrition reality. Lancet 2020, 395, 65–74.

3. Imamura, F.; Micha, R.; Khatibzadeh, S.; Fahimi, S.; Shi, P.; Powles, J.; Mozaffarian, D. Dietary quality among men and women in 187 countries in 1990 and 2010: A systematic assessment. Lancet Global Health 2015, 3, e132–e142.

4. Popkin, B.M.; Adair, L.S.; Ng, S.W. Global nutrition transition and the pandemic of obesity in developing countries. Nutr. Rev. 2012, 70, 3–21.

5. Nugent, R.; Levin, C.; Hale, J.; Hutchinson, B. Economic effects of the double burden of malnutrition. Lancet 2020, 395, 156–164.

6. Coates, J.C.; Colaiezzi, B.A.; Bell, W.; Charrondiere, U.R.; Leclercq, C. Overcoming Dietary Assessment Challenges in Low-Income Countries: Technological Solutions Proposed by the International Dietary Data Expansion (INDDEX) Project. Nutrients 2017, 9, 289.

7. Gibson, R.S.; Charrondiere, U.R.; Bell, W. Measurement Errors in Dietary Assessment Using Self-Reported 24-Hour Recalls in Low-Income Countries and Strategies for Their Prevention. Adv. Nutr. 2017, 8, 980–991.

8. Timon, C.M.; van den Barg, R.; Blain, R.J.; Kehoe, L.; Evans, K.; Walton, J.; Flynn, A.; Gibney, E.R. A review of the design and validation of web- and computer-based 24-h dietary recall tools. Nutr. Res. Rev. 2016, 29, 268–280.

9. Boushey, C.J.; Spoden, M.; Zhu, F.M.; Delp, E.J.; Kerr, D.A. New mobile methods for dietary assessment: Review of image-assisted and image-based dietary assessment methods. Proc. Nutr. Soc. 2017, 76, 283–294.

10. Jobarteh, M.L.; McCrory, M.A.; Lo, B.; Sun, M.; Sazonov, E.; Anderson, A.K.; Jia, W.; Maitland, K.; Qiu, J.; Steiner-Asiedu, M.; et al. Development and Validation of an Objective, Passive Dietary Assessment Method for Estimating Food and Nutrient Intake in Households in Low- and Middle-Income Countries: A Study Protocol. Curr. Dev. Nutr. 2020, 4, nzaa020.

11. Doulah, A.; Ghosh, T.; Hossain, D.; Imtiaz, M.H.; Sazonov, E. “Automatic Ingestion Monitor Version 2”—A Novel Wearable Device for Automatic Food Intake Detection and Passive Capture of Food Images. IEEE J. Biomed. Health Inform. 2020, 25, 568–576.

12. Sun, M.; Burke, L.E.; Baranowski, T.; Fernstrom, J.D.; Zhang, H.; Chen, H.C.; Bai, Y.; Li, Y.; Li, C.; Yue, Y.; et al. An exploratory study on a chest-worn computer for evaluation of diet, physical activity and lifestyle. J. Healthc. Eng. 2015, 6, 1–22.

13. He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

14. Fontana, J.M.; Farooq, M.; Sazonov, E. Automatic Ingestion Monitor: A Novel Wearable Device for Monitoring of Ingestive Behavior. IEEE Trans. Biomed. Eng. 2014, 61, 1772–1779.

15. Hess, M.A. Portion Photos of Popular Foods, 1st ed.; The American Dietetic Association: Providence, RI, USA; Center for Nutrition Education, University of Wisconsin, Stout: Menomonie, WI, USA, 1997; 128p.

16. Murugu, D.; Kimani, A.; Mbelenga, E.; Mwai, J. Kenya Food Composition Tables; FAO: Rome, Italy, 2018.

17. Al Marzooqi, H.M.; Burke, S.J.; Al Ghazali, M.R.; Duffy, E.; Yousuf, M.H.S.A. The development of a food atlas of portion sizes for the United Arab Emirates. J. Food Compos. Anal. 2015, 43, 140–148.

18. Charrondiere, U.R. FAO/INFOODS Database (Version 2.0); Food and Agriculture Organization: Rome, Italy, 2012.

19. McCance and Widdowson’s Composition of Foods Integrated Dataset (CoFID). 2019. Available online: https://www.gov.uk/government/publications/composition-of-foods-integrated-dataset-cofid (accessed on 30 January 2021).

20. Stadlmayr, B.; Enujiugha, V.N.; Bayili, G.R.; Fagbohound, E.G.; Samb, B.; Addy, P.; Barikmo, I.; Ouattara, F.; Oshaug, A.; et al. West African Food Composition Table; FAO: Rome, Italy, 2012.

21. Forestfield Software Limited. Dietplan 7. 2020. Available online: http://www.foresoft.co.uk/index.html (accessed on 2 February 2023).

22. Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163.

23. Bland, J.M.; Altman, D.G. Measuring agreement in method comparison studies. Stat. Methods Med. Res. 1999, 8, 135–160.

24. Ekstrom, E.-C.; Hyder, S.M.Z.; Chowdhury, A.M.R.; Chowdhury, S.A.; Lönnerdal, B.; Habicht, J.-P.; Persson, L.Å. Efficacy and trial effectiveness of weekly and daily iron supplementation among pregnant women in rural Bangladesh: Disentangling the issues. Am. J. Clin. Nutr. 2002, 76, 1392–1400.

25. Cade, J.; Thompson, R.; Burley, V.; Warm, D. Development, validation and utilisation of food-frequency questionnaires: A review. Public Health Nutr. 2002, 5, 567–587.

26. IBM Service Corps. IBM SPSS Statistics. 2020. Available online: https://www.ibm.com/analytics/spss-statistics-software (accessed on 2 February 2023).

27. Baumgartner, J.; Smuts, C.M.; Aeberli, I.; Malan, L.; Tjalsma, H.; Zimmermann, M.B. Overweight impairs efficacy of iron supplementation in iron-deficient South African children: A randomized controlled intervention. Int. J. Obes. 2012, 37, 24–30.

28. Lombard, M.J.; Steyn, N.P.; Charlton, K.E.; Senekal, M. Application and interpretation of multiple statistical tests to evaluate validity of dietary intake assessment methods. Nutr. J. 2015, 14, 40.

29. Judd, A.L.; Beck, K.L.; McKinlay, C.; Jackson, A.; Conlon, C.A. Validation of a Complementary Food Frequency Questionnaire to assess infant nutrient intake. Matern. Child Nutr. 2020, 16, e12879.

30. Masson, L.F.; McNeill, G.; Tomany, J.O.; Simpson, J.A.; Peace, H.S.; Wei, L.; Grubb, D.A.; Bolton-Smith, C. Statistical approaches for assessing the relative validity of a food-frequency questionnaire: Use of correlation coefficients and the kappa statistic. Public Health Nutr. 2003, 6, 313–321.

31. Savard, C.; Lemieux, S.; Lafrenière, J.; Laramée, C.; Robitaille, J.; Morisset, A.S. Validation of a self-administered web-based 24-hour dietary recall among pregnant women. BMC Pregnancy Childbirth 2018, 18, 112.

32. Greenwood, D.C.; Hardie, L.J.; Frost, G.S.; Alwan, N.A.; Bradbury, K.E.; Carter, M.; Elliott, P.; Evans, C.E.L.; Ford, H.E.; Hancock, N.; et al. Validation of the Oxford WebQ Online 24-Hour Dietary Questionnaire Using Biomarkers. Am. J. Epidemiol. 2019, 188, 1858–1867.

33. Wark, P.A.; Hardie, L.J.; Frost, G.S.; Alwan, N.A.; Carter, M.; Elliott, P.; Ford, H.E.; Hancock, N.; Morris, M.A.; Mulla, U.Z.; et al. Validity of an online 24-h recall tool (myfood24) for dietary assessment in population studies: Comparison with biomarkers and standard interviews. BMC Med. 2018, 16, 136.

34. Boushey, C.J.; Spoden, M.; Delp, E.J.; Zhu, F.; Bosch, M.; Ahmad, Z.; Shvetsov, Y.B.; DeLany, J.P.; Kerr, D.A. Reported Energy Intake Accuracy Compared to Doubly Labeled Water and Usability of the Mobile Food Record among Community Dwelling Adults. Nutrients 2017, 9, 312.

35. Gemming, L.; Rush, E.; Maddison, R.; Doherty, A.; Gant, N.; Utter, J.; Ni Mhurchu, C. Wearable cameras can reduce dietary under-reporting: Doubly labelled water validation of a camera-assisted 24 h recall. Br. J. Nutr. 2015, 113, 284–291.

36. Park, Y.; Dodd, K.W.; Kipnis, V.; Thompson, F.E.; Potischman, N.; Schoeller, D.A.; Baer, D.J.; Midthune, D.; Troiano, R.P.; Bowles, H.; et al. Comparison of self-reported dietary intakes from the Automated Self-Administered 24-h recall, 4-d food records, and food-frequency questionnaires against recovery biomarkers. Am. J. Clin. Nutr. 2018, 107, 80–93.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).