Submitted: 28 August 2023

Posted: 30 August 2023


Abstract

Keywords:

1. Introduction

- We propose a novel approach that reformulates the binarization of degraded document images as an iterative diffusion-denoising problem. This is the first work to apply a latent diffusion model to document binarization, generating high-quality, clean binarized document images.

- We apply gated convolution in the latent space to extract fine text strokes. The updated gating values act as a guide to the text region, which makes it easier to distinguish text from background.

- The proposed model generates high-quality, clean binarized document images and, on average, outperforms existing methods across several (H-)DIBCO datasets.
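The gating idea in the second contribution can be illustrated with the gated convolution of Yu et al. [19]: one convolution produces features, a parallel convolution produces a gate, and the output is φ(W_f ∗ x) ⊙ σ(W_g ∗ x), element-wise. The sketch below is a minimal single-channel, pure-Python version under stated assumptions: the 2D list `x` stands in for one channel of a latent feature map, φ is taken to be ELU, and the helper names (`conv2d_same`, `gated_conv2d`) are hypothetical. It is not the authors' implementation, which operates on multi-channel latents.

```python
import math


def conv2d_same(x, kernel, bias=0.0):
    """Single-channel 2D cross-correlation with zero padding, so the output has x's shape."""
    h, w = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = bias
            for a in range(kh):
                for b in range(kw):
                    ii, jj = i + a - ph, j + b - pw
                    if 0 <= ii < h and 0 <= jj < w:  # zero padding outside the map
                        acc += kernel[a][b] * x[ii][jj]
            out[i][j] = acc
    return out


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def elu(v):
    # phi in the gated-convolution formula; choosing ELU is an assumption here.
    return v if v > 0 else math.exp(v) - 1.0


def gated_conv2d(x, k_feat, k_gate, b_feat=0.0, b_gate=0.0):
    """Gated convolution: out = phi(conv_f(x)) * sigmoid(conv_g(x)), element-wise.

    Returns the gated output and the soft gate map with values in (0, 1).
    """
    feat = conv2d_same(x, k_feat, b_feat)
    gate = [[sigmoid(g) for g in row] for row in conv2d_same(x, k_gate, b_gate)]
    out = [[elu(f) * g for f, g in zip(frow, grow)] for frow, grow in zip(feat, gate)]
    return out, gate


# Toy "latent" patch (hypothetical): a vertical stroke (2.0) on a flat background (0.0).
x = [[0.0, 2.0, 0.0],
     [0.0, 2.0, 0.0],
     [0.0, 2.0, 0.0]]
identity = [[0.0, 0.0, 0.0],
            [0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0]]
out, gate = gated_conv2d(x, identity, identity, b_gate=-1.0)
for row in gate:
    print([round(g, 3) for g in row])
```

In this toy case the gate is about 0.73 on the stroke column and about 0.27 on the background, so stroke features pass through while background responses are damped. This is the sense in which a learned gate can act as a soft guide to the text region.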

2. Related Work

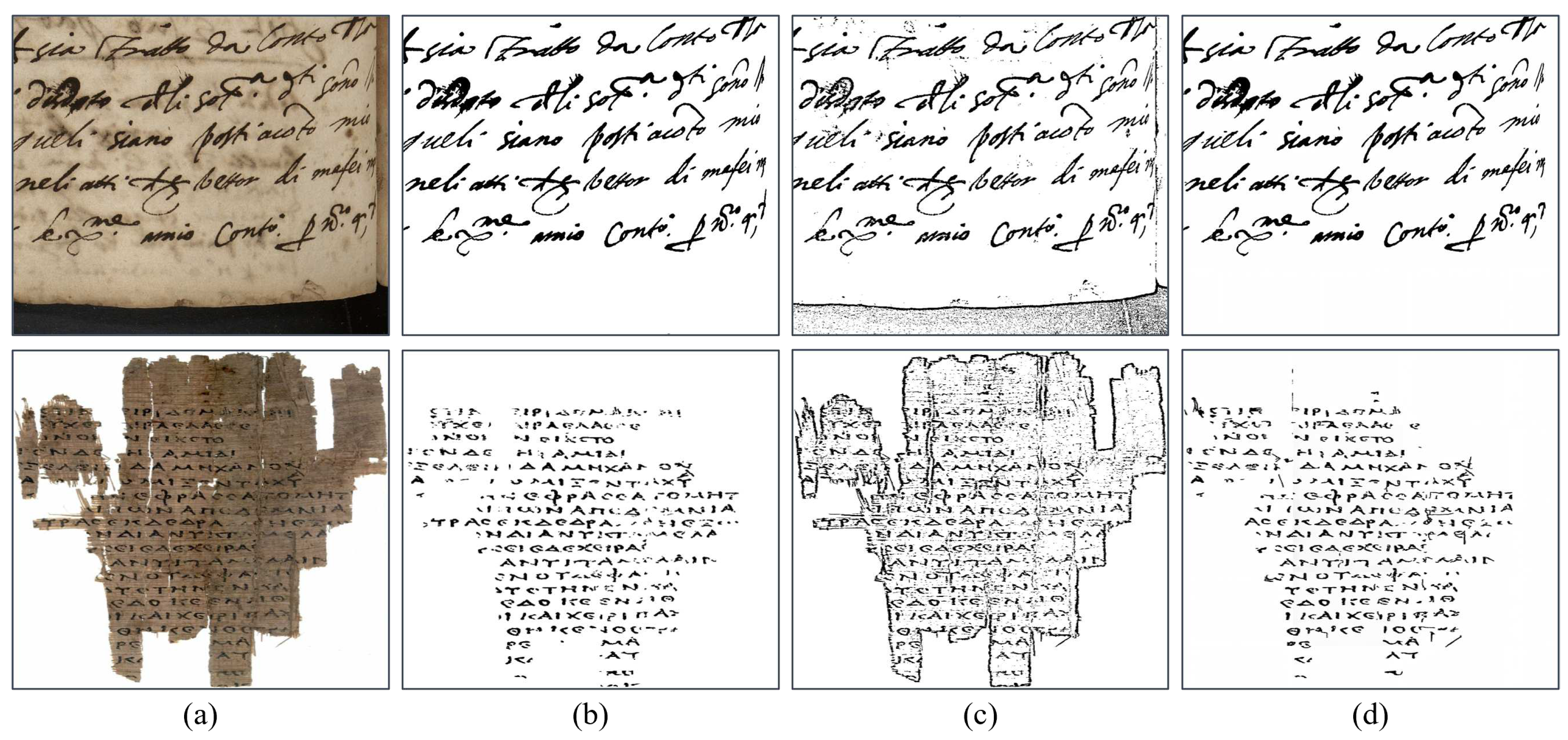

2.1. Document Binarization

2.2. Diffusion Models for Image-to-Image Tasks

2.3. Gated Convolutions

3. Method

3.1. Preliminaries

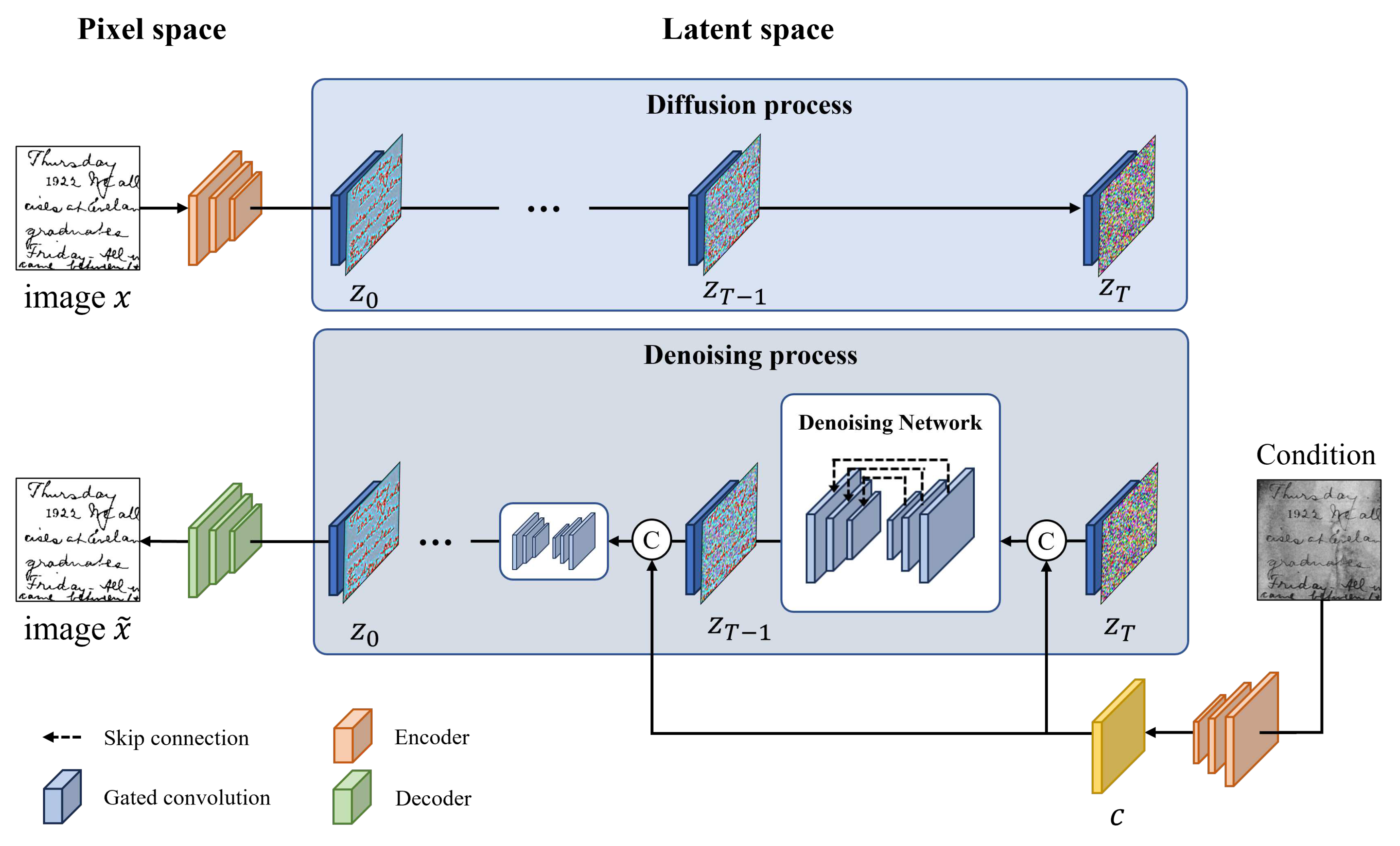

3.2. Network Architecture

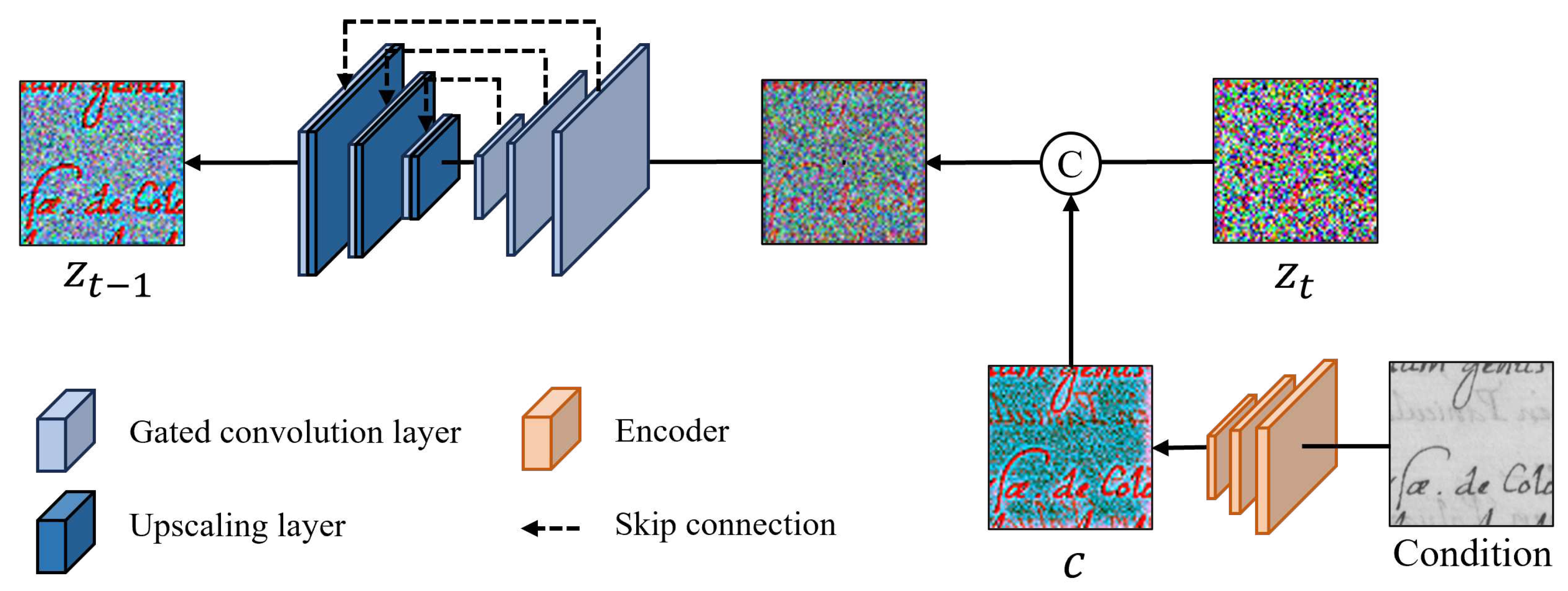

3.3. Document Diffusion-Denoising Network

3.3.1. Document Image Compression

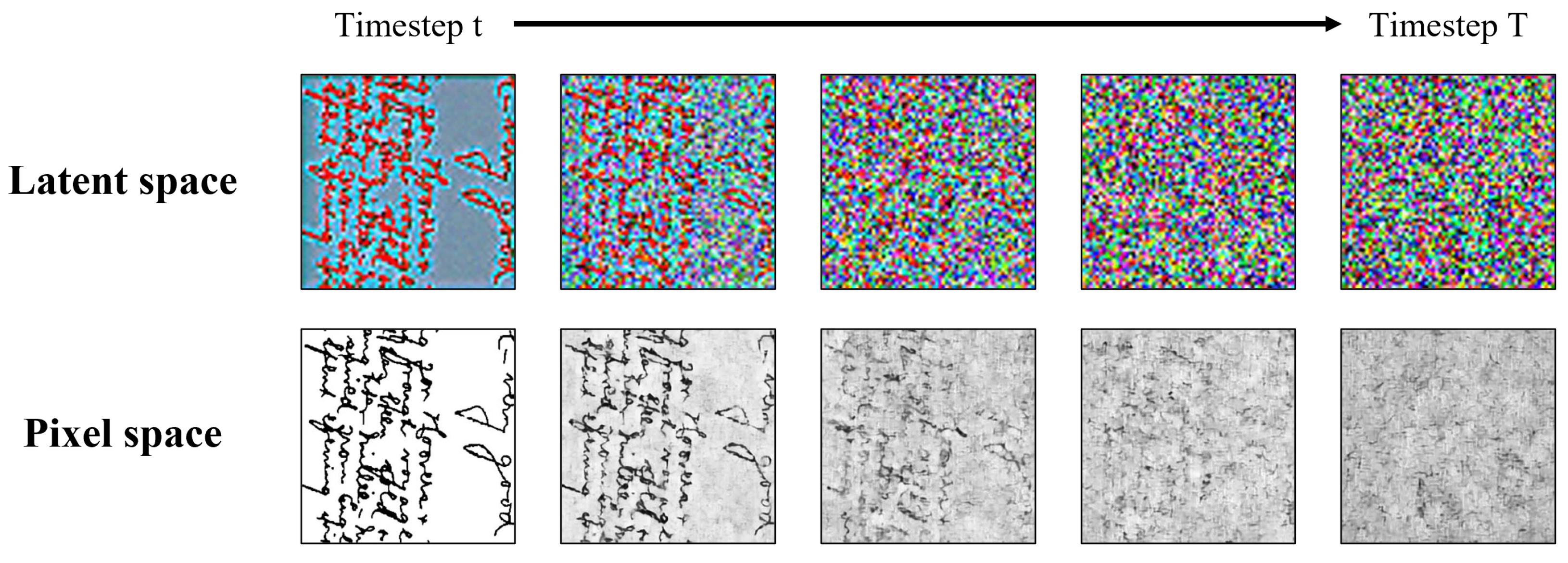

3.3.2. Diffusion-Denoising Process

3.4. Gated Convolution in Latent Space

4. Experiments and Results

4.1. Datasets and Implementation Details

4.2. Evaluation Metrics

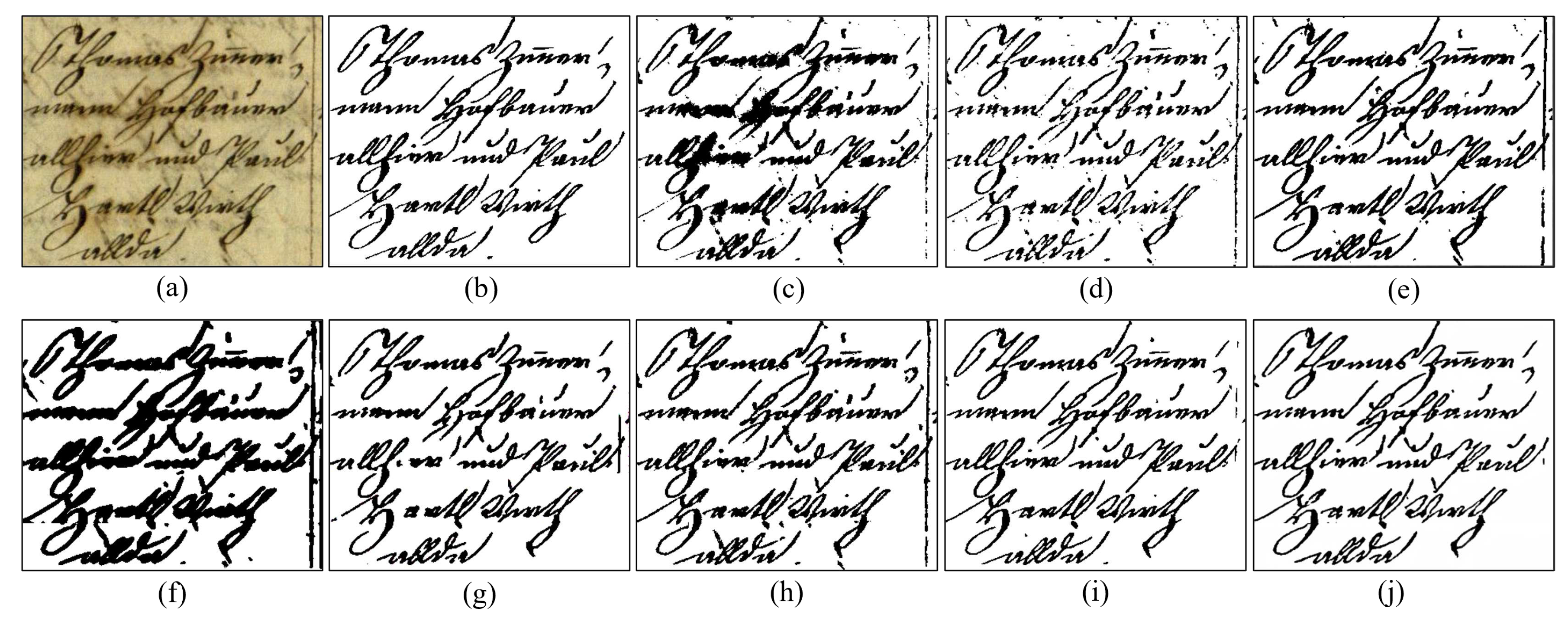

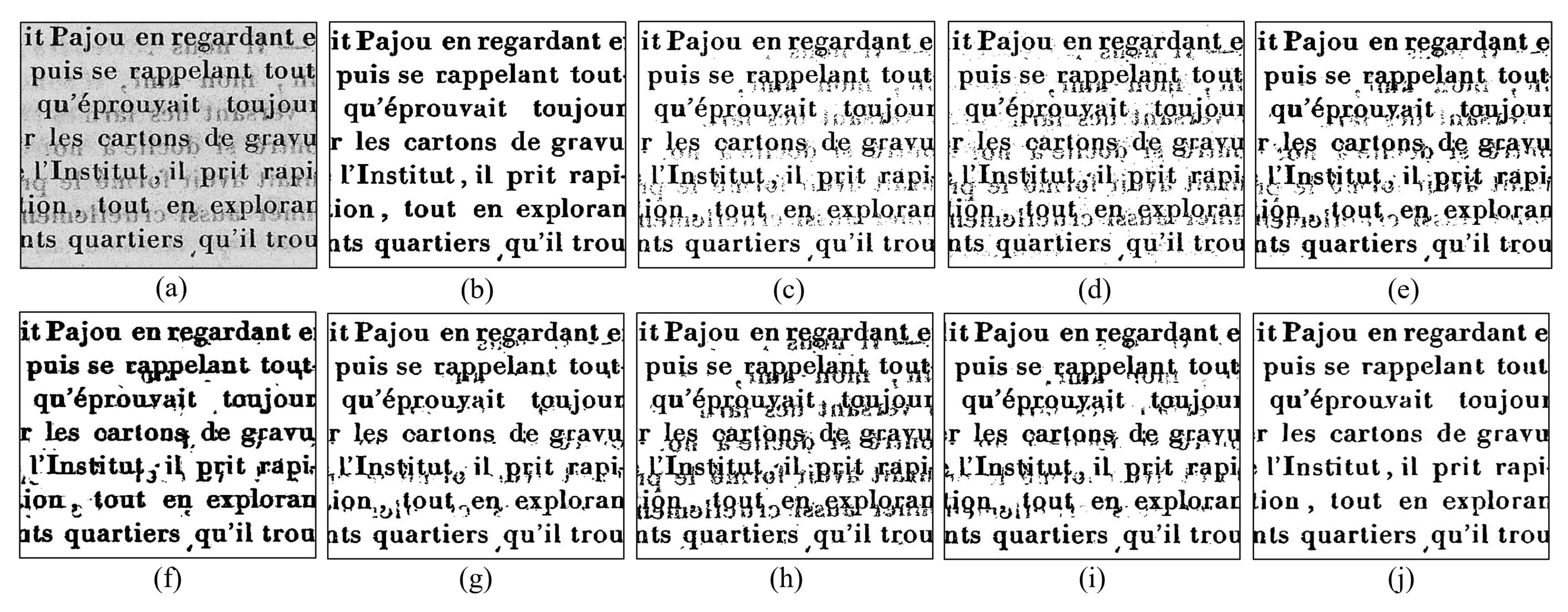

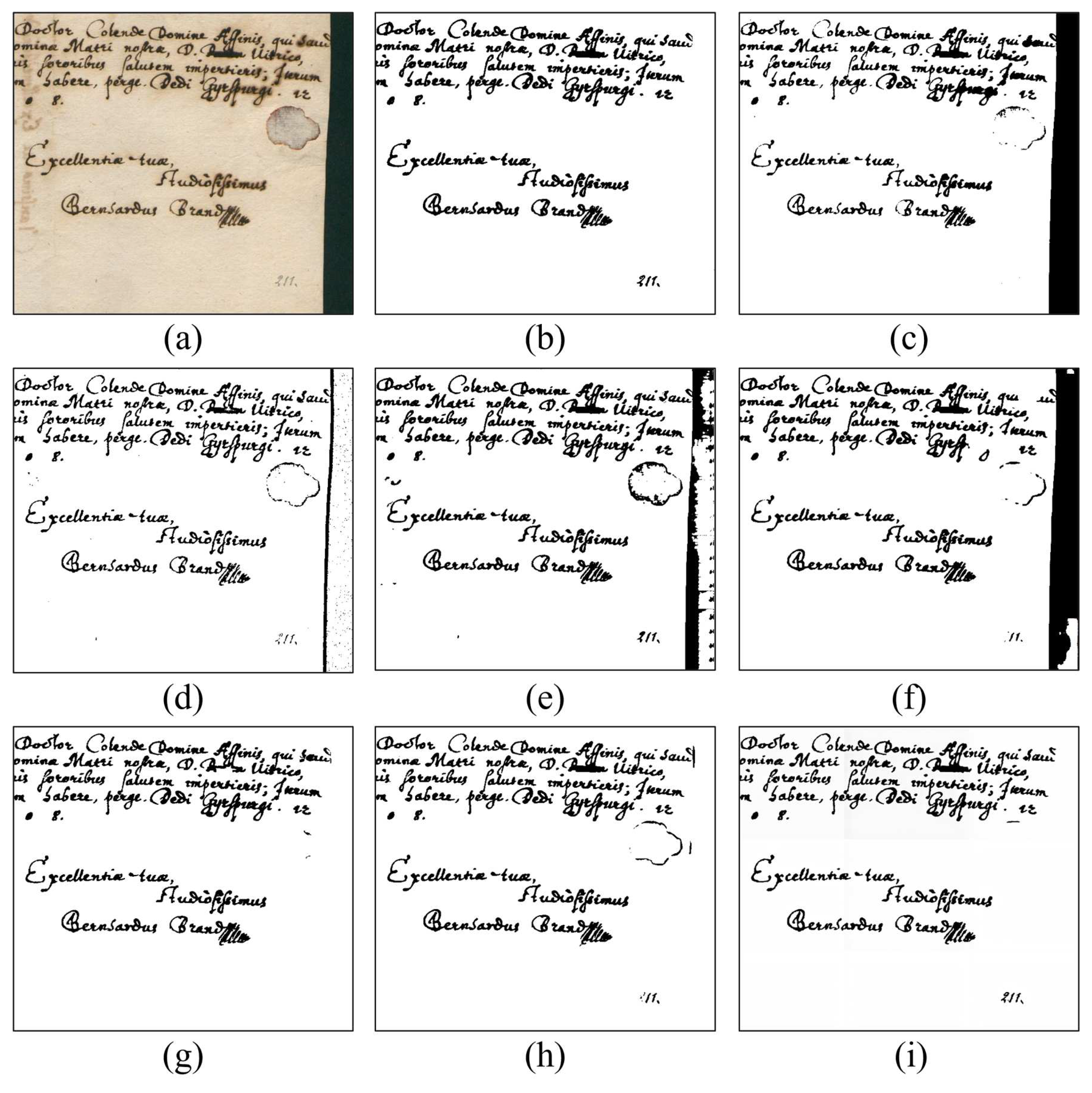

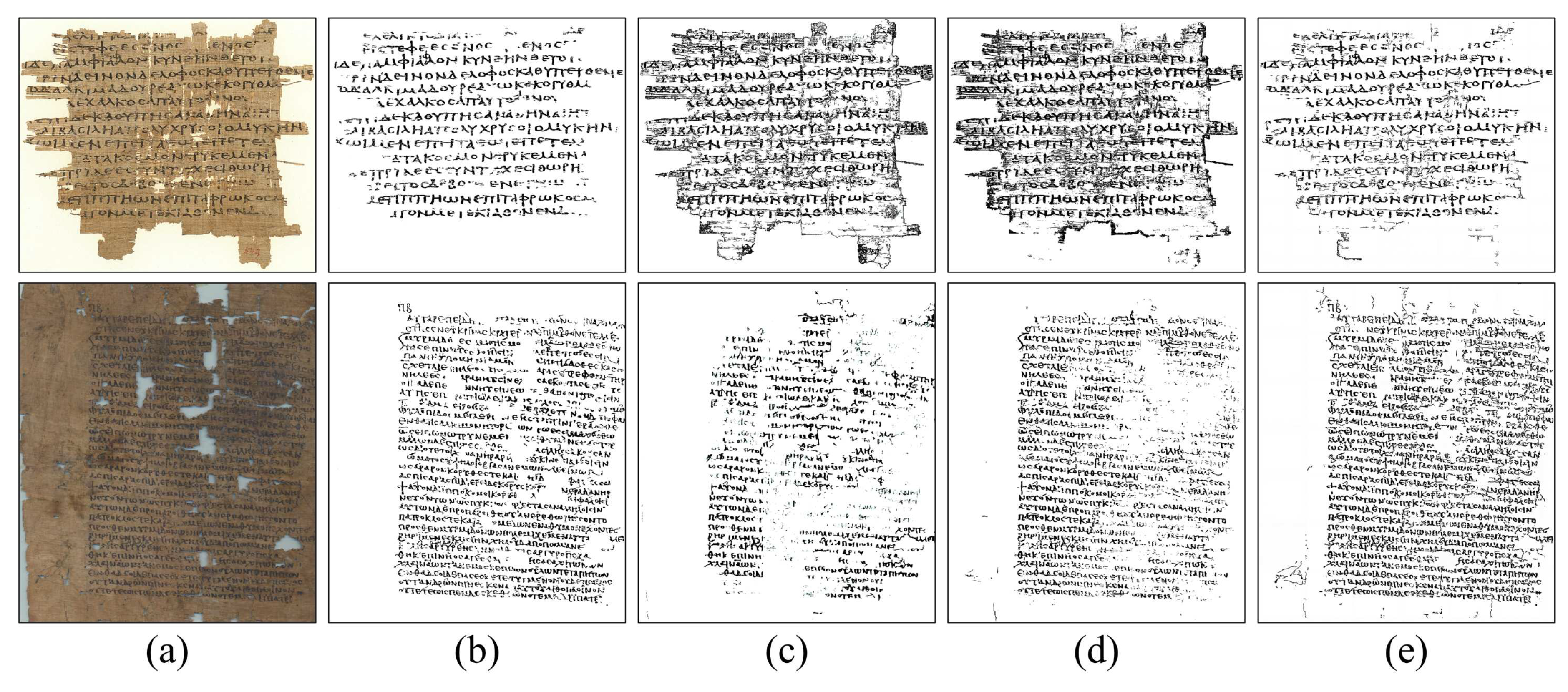

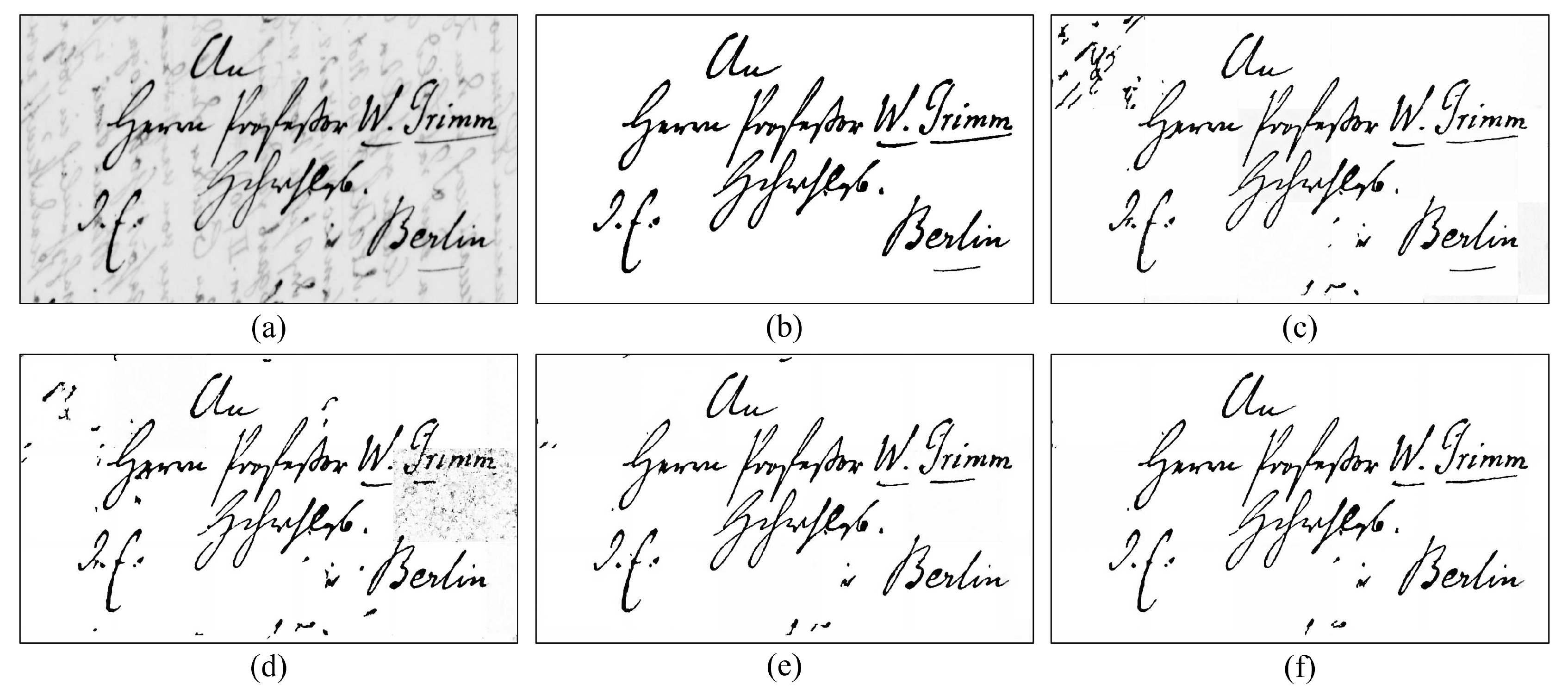

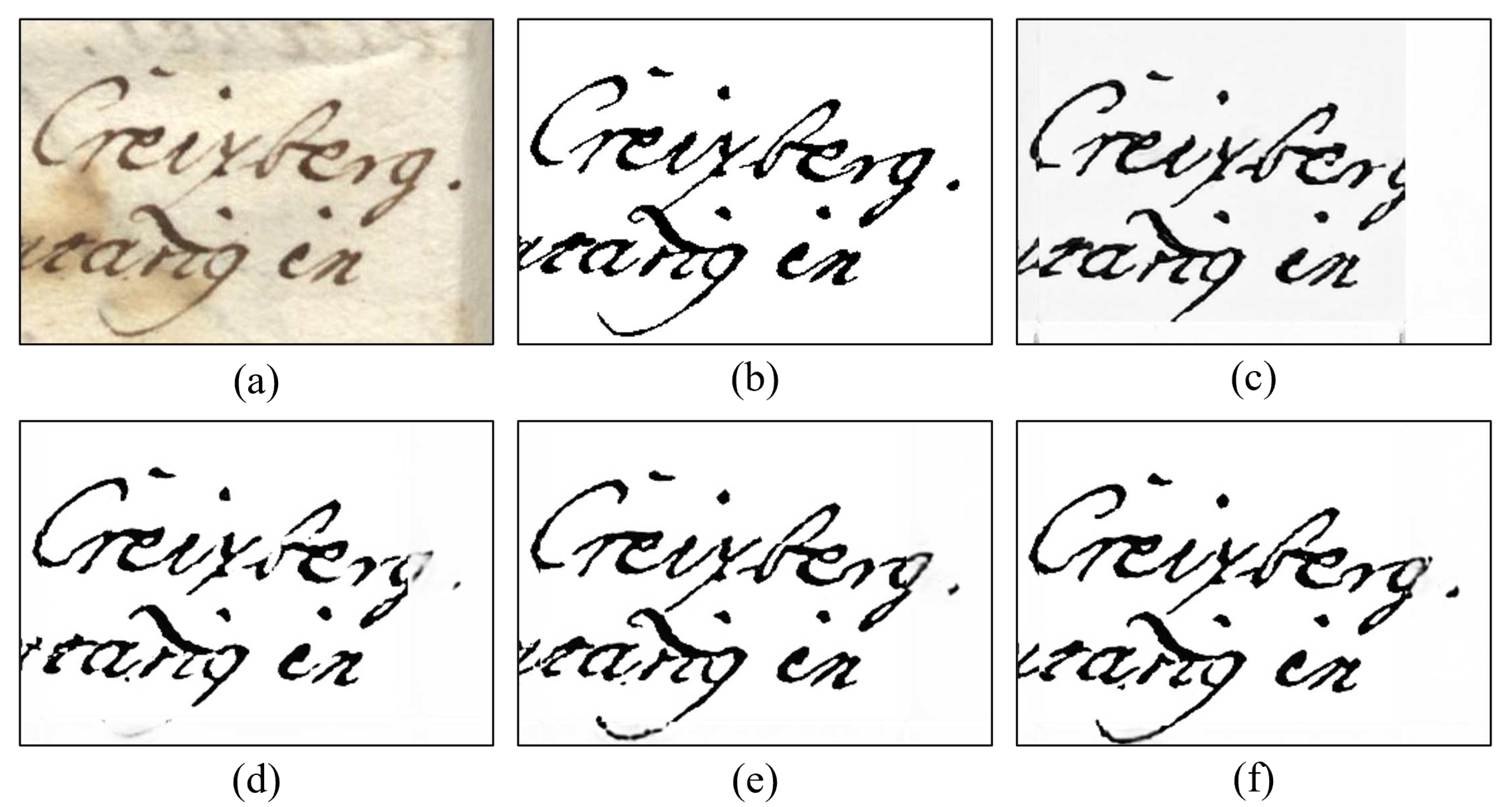

4.3. Quantitative and Qualitative Comparison

4.4. Ablation Study

5. Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Sulaiman, A.; Omar, K.; Nasrudin, M.F. Degraded historical document binarization: A review on issues, challenges, techniques, and future directions. Journal of Imaging 2019, 5, 48.

- Farahmand, A.; Sarrafzadeh, H.; Shanbehzadeh, J. Document image noises and removal methods 2013.

- Mustafa, W.A.; Kader, M.M.M.A. Binarization of document images: A comprehensive review. Journal of Physics: Conference Series. IOP Publishing, 2018, Vol. 1019, p. 012023.

- Chauhan, S.; Sharma, E.; Doegar, A.; et al. Binarization techniques for degraded document images—A review. 2016 5th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO). IEEE, 2016, pp. 163–166.

- Sauvola, J.; Seppanen, T.; Haapakoski, S.; Pietikainen, M. Adaptive document binarization. Proceedings of the fourth international conference on document analysis and recognition. IEEE, 1997, Vol. 1, pp. 147–152.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Transactions on Systems, Man, and Cybernetics 1979, 9, 62–66.

- Niblack, W. An introduction to digital image processing; Strandberg Publishing Company, 1985.

- He, S.; Schomaker, L. DeepOtsu: Document enhancement and binarization using iterative deep learning. Pattern Recognition 2019, 91, 379–390.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015, Proceedings, Part III 18. Springer, 2015, pp. 234–241.

- Westphal, F.; Lavesson, N.; Grahn, H. Document image binarization using recurrent neural networks. 2018 13th IAPR International Workshop on Document Analysis Systems (DAS). IEEE, 2018, pp. 263–268.

- Tensmeyer, C.; Martinez, T. Document image binarization with fully convolutional neural networks. 2017 14th IAPR international conference on document analysis and recognition (ICDAR). IEEE, 2017, Vol. 1, pp. 99–104.

- Zhao, J.; Shi, C.; Jia, F.; Wang, Y.; Xiao, B. Document image binarization with cascaded generators of conditional generative adversarial networks. Pattern Recognition 2019, 96, 106968.

- De, R.; Chakraborty, A.; Sarkar, R. Document image binarization using dual discriminator generative adversarial networks. IEEE Signal Processing Letters 2020, 27, 1090–1094.

- Suh, S.; Kim, J.; Lukowicz, P.; Lee, Y.O. Two-stage generative adversarial networks for binarization of color document images. Pattern Recognition 2022, 130, 108810.

- Dhariwal, P.; Nichol, A. Diffusion models beat GANs on image synthesis. Advances in Neural Information Processing Systems 2021, 34, 8780–8794.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2022, pp. 10684–10695.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems 2020, 33, 6840–6851.

- Yu, J.; Lin, Z.; Yang, J.; Shen, X.; Lu, X.; Huang, T.S. Free-form image inpainting with gated convolution. Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 4471–4480.

- Pratikakis, I.; Zagoris, K.; Barlas, G.; Gatos, B. ICFHR2016 handwritten document image binarization contest (H-DIBCO 2016). 2016 15th International Conference on Frontiers in Handwriting Recognition (ICFHR). IEEE, 2016, pp. 619–623.

- Pratikakis, I.; Zagoris, K.; Barlas, G.; Gatos, B. ICDAR2017 competition on document image binarization (DIBCO 2017). 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR). IEEE, 2017, Vol. 1, pp. 1395–1403.

- Pratikakis, I.; Zagori, K.; Kaddas, P.; Gatos, B. ICFHR 2018 Competition on Handwritten Document Image Binarization (H-DIBCO 2018). 2018 16th International Conference on Frontiers in Handwriting Recognition (ICFHR), 2018, pp. 489–493.

- Pratikakis, I.; Zagoris, K.; Karagiannis, X.; Tsochatzidis, L.; Mondal, T.; Marthot-Santaniello, I. ICDAR 2019 Competition on Document Image Binarization (DIBCO 2019). 2019 International Conference on Document Analysis and Recognition (ICDAR), 2019, pp. 1547–1556.

- Peng, X.; Cao, H.; Natarajan, P. Using convolutional encoder-decoder for document image binarization. 2017 14th IAPR international conference on document analysis and recognition (ICDAR). IEEE, 2017, Vol. 1, pp. 708–713.

- Calvo-Zaragoza, J.; Gallego, A.J. A selectional auto-encoder approach for document image binarization. Pattern Recognition 2019, 86, 37–47.

- Kang, S.; Iwana, B.K.; Uchida, S. Complex image processing with less data—Document image binarization by integrating multiple pre-trained U-Net modules. Pattern Recognition 2021, 109, 107577.

- Akbari, Y.; Al-Maadeed, S.; Adam, K. Binarization of degraded document images using convolutional neural networks and wavelet-based multichannel images. IEEE Access 2020, 8, 153517–153534.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 1125–1134.

- Souibgui, M.A.; Kessentini, Y. DE-GAN: A conditional generative adversarial network for document enhancement. IEEE Transactions on Pattern Analysis and Machine Intelligence 2020, 44, 1180–1191.

- Lin, Y.S.; Ju, R.Y.; Chen, C.C.; Lin, T.Y.; Chiang, J.S. Three-stage binarization of color document images based on discrete wavelet transform and generative adversarial networks. arXiv preprint arXiv:2211.16098, 2022.

- Song, J.; Meng, C.; Ermon, S. Denoising diffusion implicit models. arXiv preprint arXiv:2010.02502, 2020.

- Dhariwal, P.; Nichol, A. Diffusion models beat GANs on image synthesis. Advances in Neural Information Processing Systems 2021, 34, 8780–8794.

- Wolleb, J.; Sandkühler, R.; Bieder, F.; Valmaggia, P.; Cattin, P.C. Diffusion models for implicit image segmentation ensembles. International Conference on Medical Imaging with Deep Learning. PMLR, 2022, pp. 1336–1348.

- Kim, B.; Oh, Y.; Ye, J.C. Diffusion adversarial representation learning for self-supervised vessel segmentation. arXiv preprint arXiv:2209.14566, 2022.

- Chen, S.; Sun, P.; Song, Y.; Luo, P. DiffusionDet: Diffusion model for object detection. arXiv preprint arXiv:2211.09788, 2022.

- Duan, Y.; Guo, X.; Zhu, Z. DiffusionDepth: Diffusion denoising approach for monocular depth estimation. arXiv preprint arXiv:2303.05021, 2023.

- Li, X.; Zhao, H.; Han, L.; Tong, Y.; Tan, S.; Yang, K. Gated fully fusion for semantic segmentation. Proceedings of the AAAI conference on artificial intelligence, 2020, Vol. 34, pp. 11418–11425.

- Wang, H.; Wang, Y.; Zhang, Q.; Xiang, S.; Pan, C. Gated convolutional neural network for semantic segmentation in high-resolution images. Remote Sensing 2017, 9, 446.

- Dauphin, Y.N.; Fan, A.; Auli, M.; Grangier, D. Language modeling with gated convolutional networks. International conference on machine learning. PMLR, 2017, pp. 933–941.

- Lin, X.; Ma, L.; Liu, W.; Chang, S.F. Context-gated convolution. Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part XVIII 16. Springer, 2020, pp. 701–718.

- Zhang, Y.; Fang, J.; Chen, Y.; Jia, L. Edge-aware U-net with gated convolution for retinal vessel segmentation. Biomedical Signal Processing and Control 2022, 73, 103472.

- Kwon, M.; Jeong, J.; Uh, Y. Diffusion models already have a semantic latent space. arXiv preprint arXiv:2210.10960, 2022.

- Esser, P.; Rombach, R.; Ommer, B. Taming transformers for high-resolution image synthesis. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2021, pp. 12873–12883.

- Pratikakis, I.; Gatos, B.; Ntirogiannis, K. H-DIBCO 2010-handwritten document image binarization competition. 2010 12th International Conference on Frontiers in Handwriting Recognition. IEEE, 2010, pp. 727–732.

- Pratikakis, I.; Gatos, B.; Ntirogiannis, K. ICFHR 2012 competition on handwritten document image binarization (H-DIBCO 2012). 2012 International Conference on Frontiers in Handwriting Recognition. IEEE, 2012, pp. 817–822.

- Ntirogiannis, K.; Gatos, B.; Pratikakis, I. ICFHR2014 competition on handwritten document image binarization (H-DIBCO 2014). 2014 14th International conference on frontiers in handwriting recognition. IEEE, 2014, pp. 809–813.

- Gatos, B.; Ntirogiannis, K.; Pratikakis, I. ICDAR 2009 document image binarization contest (DIBCO 2009). 2009 10th International conference on document analysis and recognition. IEEE, 2009, pp. 1375–1382.

- Pratikakis, I.; Gatos, B.; Ntirogiannis, K. ICDAR 2011 Document Image Binarization Contest (DIBCO 2011). 2011 International Conference on Document Analysis and Recognition, 2011, pp. 1506–1510.

- Pratikakis, I.; Gatos, B.; Ntirogiannis, K. ICDAR 2013 document image binarization contest (DIBCO 2013). 2013 12th International Conference on Document Analysis and Recognition. IEEE, 2013, pp. 1471–1476.

- Deng, F.; Wu, Z.; Lu, Z.; Brown, M.S. BinarizationShop: a user-assisted software suite for converting old documents to black-and-white. Proceedings of the 10th annual joint conference on Digital libraries, 2010, pp. 255–258.

- Nafchi, H.Z.; Ayatollahi, S.M.; Moghaddam, R.F.; Cheriet, M. An efficient ground truthing tool for binarization of historical manuscripts. 2013 12th International Conference on Document Analysis and Recognition. IEEE, 2013, pp. 807–811.

- Hedjam, R.; Cheriet, M. Historical document image restoration using multispectral imaging system. Pattern Recognition 2013, 46, 2297–2312.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101, 2017.

- Ntirogiannis, K.; Gatos, B.; Pratikakis, I. Performance evaluation methodology for historical document image binarization. IEEE Transactions on Image Processing 2012, 22, 595–609.

- Lu, H.; Kot, A.C.; Shi, Y.Q. Distance-reciprocal distortion measure for binary document images. IEEE Signal Processing Letters 2004, 11, 228–231.

| Dataset | Metric | Otsu [6] | Sauvola [5] | Competition winner | SAE [25] | cGANs [13] | Akbari [27] | Souibgui [29] | Suh [15] | Ours |
|---|---|---|---|---|---|---|---|---|---|---|
| 2016 | FM | 86.64 | 79.57 | 88.72 | 88.11 | 91.67 | 90.48 | 84.45 | 91.11 | 88.95 |
| | pFM | 89.99 | 86.84 | 91.84 | 91.55 | 94.59 | 93.26 | 84.73 | 95.22 | 95.45 |
| | PSNR | 17.80 | 16.90 | 18.45 | 18.21 | 19.64 | 19.27 | 16.18 | 19.34 | 19.38 |
| | DRD | 5.52 | 6.76 | 3.86 | 4.51 | 2.82 | 3.94 | 7.25 | 3.25 | 3.74 |
| 2017 | FM | 80.63 | 73.86 | 91.04 | 85.72 | 90.73 | 85.59 | 80.63 | 89.33 | 89.36 |
| | pFM | 80.85 | 84.78 | 92.86 | 87.85 | 92.58 | 87.56 | 80.85 | 91.41 | 94.02 |
| | PSNR | 13.84 | 14.30 | 18.28 | 16.09 | 17.83 | 16.39 | 13.84 | 17.91 | 18.33 |
| | DRD | 9.85 | 8.30 | 3.40 | 6.53 | 3.58 | 7.99 | 9.85 | 3.83 | 3.83 |
| 2018 | FM | 51.56 | 64.04 | 88.34 | 75.77 | 87.73 | 76.51 | 77.59 | 91.86 | 88.43 |
| | pFM | 53.58 | 72.13 | 90.24 | 77.95 | 90.60 | 80.09 | 85.74 | 96.25 | 93.73 |
| | PSNR | 9.76 | 13.98 | 19.11 | 14.79 | 18.37 | 17.01 | 16.16 | 20.03 | 19.28 |
| | DRD | 59.07 | 13.96 | 4.92 | 13.30 | 4.58 | 8.11 | 7.93 | 2.60 | 3.95 |
| 2019-B | FM | 22.47 | 50.57 | 67.99 | 47.57 | 61.64 | 47.00 | 49.83 | 66.83 | 72.71 |
| | pFM | 22.47 | 54.48 | 67.88 | 48.55 | 62.52 | 47.60 | 49.97 | 68.32 | 75.24 |
| | PSNR | 2.61 | 10.85 | 12.14 | 10.84 | 11.77 | 9.18 | 8.55 | 12.91 | 14.37 |
| | DRD | 213.58 | 33.73 | 26.87 | 32.00 | 24.11 | 70.50 | 53.18 | 19.80 | 14.39 |
| Mean Values | FM | 59.61 | 67.01 | 84.02 | 74.29 | 82.94 | 74.90 | 71.64 | 84.78 | 84.86 |
| | pFM | 61.53 | 74.56 | 85.71 | 76.47 | 85.07 | 77.13 | 71.85 | 87.80 | 89.61 |
| | PSNR | 11.01 | 14.01 | 17.00 | 14.98 | 16.90 | 15.46 | 12.86 | 17.55 | 17.84 |
| | DRD | 73.42 | 15.69 | 9.76 | 14.09 | 8.77 | 22.65 | 23.43 | 7.37 | 6.48 |
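For readers reproducing the table, the FM and PSNR columns can be computed per image roughly as sketched below, following the usual (H-)DIBCO definitions: text pixels are the positive class for precision and recall, FM = 2 × Recall × Precision / (Recall + Precision), and PSNR = 10 log10(C² / MSE), where C is the difference between foreground and background (C = 1 for images with values in {0, 1}). Assumptions: images are nested lists with 1 = text and 0 = background (DIBCO ground-truth files store black text on a white background, so they would need inverting first), and the helper names are hypothetical. pFM and DRD, which rely on the pseudo-recall/pseudo-precision weighting of Ntirogiannis et al. and the distance-reciprocal weight matrix of Lu et al. (both in the reference list), are omitted.

```python
import math


def confusion_counts(pred, gt):
    """Count TP, FP, FN with text pixels (value 1) as the positive class."""
    tp = fp = fn = 0
    for prow, grow in zip(pred, gt):
        for p, g in zip(prow, grow):
            if p == 1 and g == 1:
                tp += 1
            elif p == 1 and g == 0:
                fp += 1
            elif p == 0 and g == 1:
                fn += 1
    return tp, fp, fn


def f_measure(pred, gt):
    """FM (%) = 2 * Recall * Precision / (Recall + Precision)."""
    tp, fp, fn = confusion_counts(pred, gt)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 100.0 * 2 * precision * recall / (precision + recall)


def psnr(pred, gt, c=1.0):
    """PSNR (dB) = 10 * log10(C^2 / MSE); C is the foreground/background difference."""
    n = sum(len(row) for row in gt)
    mse = sum((p - g) ** 2 for prow, grow in zip(pred, gt) for p, g in zip(prow, grow)) / n
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(c * c / mse)


# Hypothetical 2x4 example: 4 text pixels in the ground truth.
gt = [[1, 1, 0, 0],
      [1, 1, 0, 0]]
pred = [[1, 1, 1, 0],   # one false positive at (0, 2)
        [1, 0, 0, 0]]   # one false negative at (1, 1)
print(f"FM = {f_measure(pred, gt):.2f}, PSNR = {psnr(pred, gt):.2f} dB")
# FM = 75.00, PSNR = 6.02 dB
```

The sketch scores a single image; dataset-level figures such as those above are typically averages of per-image scores.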

| H-DIBCO 2016 | FM | pFM | PSNR | DRD |
|---|---|---|---|---|
| Baseline | 88.25 | 94.44 | 19.09 | 4.05 |
| Ours w/o | 88.13 | 95.03 | 19.08 | 4.05 |
| Ours w/o Gated U-Net | 88.48 | 95.03 | 19.08 | 3.93 |
| Ours | 88.93 | 95.44 | 19.37 | 3.74 |

| H-DIBCO 2016 / epoch 6 | FM | pFM |
|---|---|---|
| Baseline | 77.93 | 85.66 |
| Ours w/o | 79.53 | 85.84 |
| Ours w/o Gated U-Net | 85.93 | 93.60 |
| Ours | 86.15 | 94.17 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).