1. Introduction

Artificial intelligence has long been an active field of research, and neural networks have emerged as a prominent branch owing to their intelligent characteristics and their potential for real-world applications. Neural networks have transformed artificial intelligence by enabling computers to process and analyze large volumes of complex data with high accuracy. These models are inspired by the structure and processes of the brain, in which interconnected neurons communicate through electrical signals. A defining characteristic of neural networks is their ability to learn from data and improve their performance over time without being explicitly programmed. Various models have been developed to address different types of problems, for example Cohen-Grossberg neural networks [1], Hopfield neural networks [2], and cellular neural networks [3].

Kosko’s bidirectional associative memory neural networks (BAMNNs) are a noteworthy extension of traditional single-layer neural networks [4]. A BAMNN consists of two layers of neurons with no connections within a layer, while neurons in different layers are fully connected, allowing information to flow in both directions between the two layers. Because either layer can serve as the input or the output layer, BAMNNs provide powerful information storage and associative memory capabilities. In signal processing, BAMNNs can be used to filter signals or extract features; in pattern recognition, they can classify images or recognize speech; in optimization, they can be used to find optimal solutions; and in automatic control, they can be used to regulate or stabilize a system. Advances in artificial intelligence and the evolution of neural networks have created new opportunities to tackle intricate problems in diverse domains and have opened the way to problem-solving approaches that were previously unattainable. The unique architecture and capabilities of BAMNNs make them a powerful tool for engineering applications, and they are expected to remain important in future research and development and to continue contributing substantially to various fields.

The functions of artificial neural networks are greatly limited by network size and by the available synaptic elements. If the connection weights and self-feedback connection weights of BAMNNs are realized with memristors [5], the model can be implemented in a circuit. A memristor [6,7] is a nonlinear two-terminal element in electronic circuit theory. Its unique properties have led to wide use and potential applications in a variety of fields, including artificial intelligence, data storage, and neuromorphic computing. Incorporating memristors into neural networks makes it possible for artificial neural networks to emulate the human brain in circuitry, which makes research on memristive neural networks especially meaningful. Replacing the resistors in traditional BAMNNs with memristors yields memristor-based BAMNNs (MBAMNNs). Compared with traditional neural networks, memristive neural networks have stronger learning and associative memory abilities, allowing more efficient processing and storage of information and thereby improving the efficiency and accuracy of artificial intelligence. In addition, owing to the nonlinear characteristics of memristors and their circuit implementations, memristive neural networks offer lower energy consumption and higher speed. Memristive neural networks therefore have broad application prospects in artificial intelligence.

However, because of the finite switching speed of amplifiers, time delays are unavoidable in neural network systems. Research indicates that time delays are a significant source of complex dynamic behaviors such as instability and chaos [8]. To enhance the versatility and efficiency of BAMNNs, Ding and Huang [9] developed a novel BAMNN model in 2006 and analyzed the global exponential stability of its equilibrium point and related properties. Time-delayed BAMNNs have since been studied extensively [10,11,12,13].

Fractional calculus extends classical differentiation and integration to non-integer orders [14] and has been introduced into neural networks to capture memory and hereditary properties [15,16,17]. The emergence of fractional-order calculus has spurred the development of fractional-order neural networks [6,7,18,19], which have found applications in diverse areas, including signal detection, fault diagnosis, optimization analysis, associative memory, and risk assessment. Fractional-order memristive neural networks (FMNNs) are a specific type of fractional-order neural network whose stability properties have been widely studied. For instance, the asymptotic stability of FMNNs with delay has been investigated using Caputo fractional differentiation and properties of Filippov solutions [20], and also by combining properties of Filippov solutions with the Leibniz theorem [21].

Synchronization is a significant research direction in the field of nonlinear systems, and many types have been studied, including quasi-consistent synchronization [22], projective synchronization [23], full synchronization [24], Mittag-Leffler synchronization [25], and global synchronization [26]. Synchronization is widely used in cryptography [27], image encryption [28], and secure communication [29]. In engineering applications, synchronization is often required as quickly as possible, which motivated the concept of finite-time synchronization. Because it yields faster convergence in network systems, finite-time synchronization has become a crucial aspect of designing effective control strategies for achieving system stability or synchronization [30,31].

This paper addresses the problem of achieving finite-time synchronization. In this article, finite-time synchronization means that the synchronization error remains within a prescribed bound over a finite time interval. Handling the time-delay term in this setting is challenging. Previous studies have used the Hölder inequality [32] and the generalized Gronwall inequality [33,34] to address finite-time synchronization of fractional-order time-delay neural networks, providing valuable insights. This paper instead proposes a new criterion based on a quadratic fractional-order Gronwall inequality with time delay and the comparison principle, offering a fresh perspective on the problem.

This paper makes the following contributions to the study of finite-time synchronization in fractional-order stochastic MBAMNNs with time delay:

(1) We improve Lemma 2 in [34] by deriving a quadratic fractional-order Gronwall inequality with time delay, a key tool for analyzing finite-time synchronization in stochastic neural networks.

(2) We propose a novel criterion for finite-time synchronization that allows the required synchronization time T to be computed. This criterion provides a new approach to analyzing finite-time synchronization and may be widely applicable in neural network research.

The paper is structured as follows:

Section 2 introduces relevant concepts and presents the neural networks model used in this study.

Section 3 proposes a novel quadratic fractional-order Gronwall inequality with time delay. This inequality is used to study finite-time synchronization in fractional-order stochastic MBAMNNs with time delay, and, using differential inclusions and set-valued mapping theory, a new criterion for the required synchronization time T is derived.

Section 4 provides a numerical example that demonstrates the effectiveness of the proposed results. Finally, suggestions for future research are presented.

2. Preliminaries and model

This section reviews the preliminaries on fractional-order derivatives needed later and then describes the model of fractional-order stochastic MBAMNNs. Finally, the definition of finite-time synchronization is given.

2.1. Preliminaries

Notations: The norms and absolute values of vectors and matrices are defined as follows. Let $\mathbb{N}$ and $\mathbb{R}$ denote the sets of positive integers and real numbers, respectively.

The norm of a vector is given by . Similarly, the norm of a vector is defined as . The induced norm of a matrix is denoted by . The absolute value of a vector is defined as .

Next, we review several definitions and lemmas related to fractional calculus.

Definition 1.

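[35] The fractional-order integral of order α of a function $f$ is defined as

$$ I^{\alpha} f(t) = \frac{1}{\Gamma(\alpha)} \int_{t_0}^{t} (t-s)^{\alpha-1} f(s)\, ds, $$

where $t \ge t_0$ and $\alpha > 0$.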

Especially, for

Definition 2.

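[35] Suppose $f \in C^{\iota}([t_0, +\infty), \mathbb{R})$, where ι is a positive integer. The Caputo derivative of order α of the function $f$ is

$$ {}^{C}_{t_0}D^{\alpha}_{t} f(t) = \frac{1}{\Gamma(\iota-\alpha)} \int_{t_0}^{t} (t-s)^{\iota-\alpha-1} f^{(\iota)}(s)\, ds, $$

where $\iota - 1 < \alpha < \iota$.

For convenience, we write $D^{\alpha}$ for ${}^{C}_{t_0}D^{\alpha}_{t}$.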
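As a numerical companion to Definitions 1 and 2, the sketch below approximates the fractional integral and the Caputo derivative (for 0 < α < 1, i.e. ι = 1) on a uniform grid. The product-rectangle rule and the L1 scheme are standard discretizations chosen here for illustration. They are not taken from this paper, and the function names are hypothetical.

```python
import math

import numpy as np


def rl_integral(f, t0, t, alpha, n=1000):
    """Approximate the fractional integral of Definition 1,
    I^alpha f(t) = 1/Gamma(alpha) * int_{t0}^{t} (t - s)^(alpha - 1) f(s) ds,
    by product integration: f is frozen at the left end of each subinterval
    and the weakly singular kernel is integrated exactly."""
    s = np.linspace(t0, t, n + 1)
    # (1/Gamma(alpha)) * integral of (t - s)^(alpha - 1) over [s_j, s_{j+1}].
    w = ((t - s[:-1]) ** alpha - (t - s[1:]) ** alpha) / math.gamma(alpha + 1)
    return float(np.dot(w, f(s[:-1])))


def caputo_l1(f, t0, t, alpha, n=1000):
    """Approximate the Caputo derivative of Definition 2 for 0 < alpha < 1
    (iota = 1) with the L1 scheme, which interpolates f piecewise linearly."""
    h = (t - t0) / n
    s = np.linspace(t0, t, n + 1)
    df = np.diff(f(s))                               # f(s_{j+1}) - f(s_j)
    k = np.arange(n)
    b = (k + 1) ** (1 - alpha) - k ** (1 - alpha)    # L1 weights b_k
    # b_k multiplies the increment that ends at grid point n - k.
    return float(np.dot(b, df[::-1]) / (h ** alpha * math.gamma(2 - alpha)))
```

Because the L1 scheme is exact for piecewise-linear functions, `caputo_l1(lambda s: s, 0.0, 1.0, 0.5)` reproduces $1/\Gamma(1.5) \approx 1.1284$ up to rounding, and `rl_integral` is likewise exact for constant integrands.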

Lemma 1.

[36] Let , and satisfies

Then, we have

2.2. Model

We investigate a class of fractional-order differential equations describing the dynamics of fractional-order stochastic MBAMNNs with time delays. These equations are taken as the drive system (1), which models the interactions between neurons in MBAMNNs and accounts for the influence of discontinuous jumps and time delays. By examining the stability and solutions of these equations, this study aims to deepen the understanding of the behavior of MBAMNNs and to support more comprehensive analysis for practical applications of this model.

where

In this system, the positive parameters and represent the rates of neuron self-inhibition, while and denote the state variables of the -th and -th neuron, respectively. The activation functions without time delay are denoted by and , and those with time delay are denoted by and . The neural connection memristive weights matrices are represented by , , , and . Stochastic terms representing Brownian motion are denoted by and . The constant input vectors are represented by and . The time delay parameters and satisfy and , where is a constant.

The initial conditions of the fractional-order stochastic MBAMNNs (1) are given by

, where

and

. Here,

and

are continuous functions on

and

.

The response system corresponding to the drive system (1) is given by

The initial conditions of the corresponding system (2) are

;

and

are the following controllers:

where

and

are both positive constants, called the control gains.

The synchronization error between systems (1) and (2) can be expressed as:

The synchronization error is then , and we denote

Definition 3.

If there is a real number such that for any and , the synchronization error satisfies

then the drive system (1) and the response system (2) are said to achieve finite-time synchronization at T.

Remark 1. To obtain a sufficient condition for achieving finite-time synchronization between systems (1) and (2), it is necessary to identify an appropriate evaluation function . This function should satisfy

The next section applies Lemma 2, a quadratic fractional Gronwall inequality with time delay, to analyze the behavior of the MBAMNN system.

3. Main results

This section presents a novel approach to obtaining the evaluation function by improving the quadratic fractional Gronwall inequality with time delay. We then use Theorem 1 to convert this inequality into a form consistent with Lemma 2, which allows us to derive a finite-time synchronization criterion for the drive system (1) and the corresponding system (2). Applying Lemma 2 then yields the novel finite-time synchronization criterion.

The quadratic fractional Gronwall inequality with time delay is given below.

Lemma 2.

Let and , , , and be continuous functions that are nonnegative and defined on . Let be a nonnegative continuous function defined on and suppose

Assume and are nondecreasing on , is nondecreasing on and

(1) If then

(2) If then

where and they are all constants.

Proof. Define a function

by

From Equation (3), we get

When

and the function

, then

By inequalities (4) and (5), we have

Assume are nondecreasing on , is nondecreasing on and .

(1) If

, then

(2) If

, similar to case (1), we obtain

For

, we get

Then, by Lemma 1 and inequality (5), we arrive at

Similarly, assume are nondecreasing on , is nondecreasing on and .

(1) If

, then

(2) If

, then

Based on the above analysis, from Lemma 2 and when and are non-decreasing functions for , is a non-decreasing function for with we get the following results.

(1) If

then

(2) If

then

□
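Lemma 2 extends a linear fractional Gronwall inequality to a quadratic form with delay. The sketch below checks only the simpler classical, delay-free linear case with constant coefficients $a(t) \equiv a$ and $g(t) \equiv b$, in which $u(t) \le a + b\int_0^t (t-s)^{\beta-1}u(s)\,ds$ implies $u(t) \le a\,E_\beta(b\,\Gamma(\beta)\,t^\beta)$, with $E_\beta$ the Mittag-Leffler function. It is an illustration under those assumptions, not a check of Lemma 2 itself, and all function names are hypothetical.

```python
import math

import numpy as np


def mittag_leffler(beta, z, tol=1e-15, max_terms=500):
    """One-parameter Mittag-Leffler function E_beta(z) = sum_k z^k / Gamma(beta k + 1),
    summed from its power series (adequate for moderate |z|)."""
    if z == 0:
        return 1.0
    total = 0.0
    log_abs_z = math.log(abs(z))
    for k in range(max_terms):
        term = math.exp(k * log_abs_z - math.lgamma(beta * k + 1))
        if z < 0 and k % 2 == 1:
            term = -term
        total += term
        if k > 0 and abs(term) < tol * max(1.0, abs(total)):
            break
    return total


def solve_extremal_case(a, b, beta, t_end, n=2000):
    """Solve u(t) = a + b * int_0^t (t - s)^(beta - 1) u(s) ds (equality in the
    linear inequality) with a left-point product-rectangle rule."""
    t = np.linspace(0.0, t_end, n + 1)
    u = np.empty(n + 1)
    u[0] = a
    for i in range(1, n + 1):
        # Exact kernel integrals over [t_j, t_{j+1}] for j = 0..i-1.
        w = ((t[i] - t[:i]) ** beta - (t[i] - t[1:i + 1]) ** beta) / beta
        u[i] = a + b * np.dot(w, u[:i])
    return t, u


def gronwall_bound(a, b, beta, t):
    """Classical bound u(t) <= a * E_beta(b * Gamma(beta) * t^beta) for constant a, b > 0."""
    return a * mittag_leffler(beta, b * math.gamma(beta) * t ** beta)
```

For $a, b > 0$ the bound is itself the exact solution of the equality case and is increasing, so freezing $u$ at left endpoints can only underestimate the integral. The discrete solution therefore stays below `gronwall_bound` at every grid point and approaches it as the grid is refined.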

Theorem 1.

Assume and non-negative continuous functions , , , , and defined on . Let be a non-negative continuous function defined on and suppose

Assume and are nondecreasing functions on , and is a nondecreasing function on with .

(1) If then

(2) If then

where and such that and

Proof. By the Hölder inequality, it follows that

Let

then from Lemma 2 we obtain

The proof is completed. □
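For readers reconstructing the proof, one common way the Hölder inequality enters such arguments is sketched below. The exponents and the integrability condition are assumptions about the form used in Theorem 1, not a transcription of it. For $0 < \alpha < 1$, a nonnegative $u$, and $p, q > 1$ with $1/p + 1/q = 1$ and $p(\alpha - 1) + 1 > 0$:

```latex
\int_{0}^{t} (t-s)^{\alpha-1} u(s)\,ds
  \le \left( \int_{0}^{t} (t-s)^{p(\alpha-1)}\,ds \right)^{1/p}
      \left( \int_{0}^{t} u^{q}(s)\,ds \right)^{1/q}
  = \left( \frac{t^{\,p(\alpha-1)+1}}{p(\alpha-1)+1} \right)^{1/p}
      \left( \int_{0}^{t} u^{q}(s)\,ds \right)^{1/q}.
```

Raising both sides to the power $q$ moves the weakly singular kernel into an explicit factor of $t$, leaving an integral with a regular kernel to which a Gronwall-type argument can then be applied.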

To analyze the solutions of the discontinuous systems (1) and (2), Filippov regularization is used: the equations are transformed into differential inclusions by means of set-valued maps.

In particular, the drive system (1) can be expressed as a differential inclusion. This approach allows us to study the behavior of the system even when its right-hand side is discontinuous.

Overall, Filippov regularization allows the solutions of discontinuous systems such as (1) and (2) to be analyzed rigorously and systematically, providing insight into their behavior.

According to the definition of set-valued maps, we obtain

where the switching jumps are positive constants and the remaining parameters in these expressions are all constants. The resulting sets are all compact, closed, and convex.
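The set-valued maps above can be illustrated for a single connection weight. The sketch assumes the memristive model common in the literature, in which a weight takes one constant value when the neuron state lies within the switching jump and another value outside it. The threshold `jump` and the values `w_low` and `w_high` are hypothetical stand-ins for the switching jumps and constant weight parameters of system (1).

```python
def memristive_weight(x, jump, w_low, w_high):
    """Hypothetical state-dependent memristive weight with one switching jump:
    w_low when |x| <= jump and w_high when |x| > jump (an assumed form)."""
    return w_low if abs(x) <= jump else w_high


def filippov_set(x, jump, w_low, w_high):
    """Filippov regularization of that weight, returned as a closed interval (lo, hi).
    Away from the switching surface |x| = jump the set is a single point; on the
    surface it is the convex hull co{w_low, w_high}, which is compact and convex."""
    if abs(x) == jump:
        return (min(w_low, w_high), max(w_low, w_high))
    w = memristive_weight(x, jump, w_low, w_high)
    return (w, w)
```

Testing `abs(x) == jump` with exact floating-point equality is a simplification. In the analysis, only the fact that every admissible weight lies in $[\min(w_{low}, w_{high}), \max(w_{low}, w_{high})]$ is used.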

Rewriting the drive system (1) accordingly, we obtain

Similarly, let

Then, by the same method, the corresponding system (2) can be rewritten as follows:

Assumption A1.

Let the activation function satisfy the Lipschitz condition; that is, there exist positive constants such that

where are positive Lipschitz constants. The functions and are assumed to satisfy the same condition.
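Assumption A1 only requires a Lipschitz bound. As a quick sanity check for a candidate activation, the largest difference quotient on a fine grid gives a lower estimate of its Lipschitz constant. This estimate can only underestimate the true supremum. The two activations below are hypothetical examples commonly used in neural-network models, not necessarily those of system (1).

```python
import numpy as np


def estimate_lipschitz(f, lo=-5.0, hi=5.0, n=20001):
    """Lower estimate of the Lipschitz constant of a scalar function f on [lo, hi]:
    the largest difference quotient between neighbouring grid points."""
    x = np.linspace(lo, hi, n)
    y = f(x)
    return float(np.max(np.abs(np.diff(y)) / np.diff(x)))


def tanh_act(x):
    """Hypothetical smooth activation (Lipschitz constant 1)."""
    return np.tanh(x)


def pwl_act(x):
    """Hypothetical piecewise-linear activation 0.5 * (|x + 1| - |x - 1|)."""
    return 0.5 * (np.abs(x + 1.0) - np.abs(x - 1.0))
```

Both activations have Lipschitz constant 1, so the estimates come out just below or at 1. A grid check like this can flag an incorrect constant but does not replace an analytic bound.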

Assumption A2.

Lemma 3.

[37] Under Assumptions A1 and A2, for any

where

The synchronization error system, by Assumption A1 and Lemma 3, can be expressed as:

where denote

and

For convenience, we express inequality (8) as

where

and

Remark 2. To ensure that , it suffices to find an evaluation function that satisfies with . This guarantees that remains below ϵ and also ensures that is never less than
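Remark 2 turns the synchronization requirement into a condition on an evaluation function, from which the synchronization time T is read off. If that evaluation function is continuous and nondecreasing in t, as Mittag-Leffler-type Gronwall bounds typically are, T can be located numerically by bisection. The bound $V(t) = \delta\,E_\alpha(c\,t^\alpha)$ used here and all parameter values are hypothetical placeholders, not the evaluation function derived in this paper.

```python
import math


def mittag_leffler(beta, z, tol=1e-15, max_terms=500):
    """E_beta(z) = sum_k z^k / Gamma(beta k + 1), summed from its power series."""
    if z == 0:
        return 1.0
    total = 0.0
    log_abs_z = math.log(abs(z))
    for k in range(max_terms):
        term = math.exp(k * log_abs_z - math.lgamma(beta * k + 1))
        if z < 0 and k % 2 == 1:
            term = -term
        total += term
        if k > 0 and abs(term) < tol * max(1.0, abs(total)):
            break
    return total


def settling_horizon(bound, eps, t_max, iters=100):
    """Largest T in [0, t_max] with bound(T) < eps, found by bisection.
    Assumes bound(t) is continuous and nondecreasing, so bound(t) < eps for all
    t <= T. Returns None if even bound(0) >= eps."""
    if bound(0.0) >= eps:
        return None
    if bound(t_max) < eps:
        return t_max
    lo, hi = 0.0, t_max                  # invariant: bound(lo) < eps <= bound(hi)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if bound(mid) < eps:
            lo = mid
        else:
            hi = mid
    return lo


def hypothetical_bound(t):
    """Placeholder evaluation function V(t) = 0.01 * E_0.9(2 t^0.9)."""
    return 0.01 * mittag_leffler(0.9, 2.0 * t ** 0.9)
```

For instance, `settling_horizon(hypothetical_bound, 0.1, 10.0)` returns the longest horizon on which this placeholder bound stays below ε = 0.1.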

Lemma 4.

[38] [Burkholder-Davis-Gundy inequality] For any then

where .
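For p = 2, an inequality of this type can be made concrete with Doob's maximal inequality and the Itô isometry. For a deterministic integrand g, $\mathbb{E}\big[\sup_{s\le t}|\int_0^s g\,dW|^2\big] \le 4\int_0^t g(s)^2\,ds$. The Monte Carlo sketch below checks this numerically. The constant 4 is the Doob constant for p = 2 and need not coincide with the constant in Lemma 4. The integrand, grid, and sample sizes are illustrative assumptions.

```python
import numpy as np


def sup_second_moment(g, t_end=1.0, n_steps=500, n_paths=4000, seed=0):
    """Monte Carlo estimate of E[sup_{s<=t} |M_s|^2] for M_t = int_0^t g(s) dW_s
    (Ito sums on a uniform grid), returned with the p = 2 bound 4 * int_0^t g(s)^2 ds."""
    rng = np.random.default_rng(seed)
    dt = t_end / n_steps
    s = np.arange(n_steps) * dt                    # left endpoints (Ito convention)
    dW = rng.normal(0.0, np.sqrt(dt), size=(n_paths, n_steps))
    M = np.cumsum(g(s) * dW, axis=1)               # M at the right grid points
    lhs = float(np.mean(np.max(M ** 2, axis=1)))
    rhs = 4.0 * float(np.sum(g(s) ** 2) * dt)      # 4 * int g^2 ds (g deterministic)
    return lhs, rhs
```

Taking the supremum over grid points slightly underestimates the continuous-time supremum, so this is a sanity check rather than a proof. With `g = lambda s: np.ones_like(s)`, the estimate lies well below the bound 4.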

Theorem 2. Suppose that Assumptions A1 and A2 and the conditions of Remark 2 hold, and that the following conditions are satisfied.

(1) If then

(2) If then

where and ().

Then the drive system (1) and the corresponding system (2) are finite-time synchronized.

Proof.

By Definition 1, for

, we can obtain the following integral inequalities:

Taking norms on both sides of inequality (10), we obtain

Assuming

we can square both sides to obtain

. By using Lemma 4, we can then rewrite the expression as follows

To apply Lemma 2, we define the following functions: , , , and . The initial values are and . All of these functions are clearly nonnegative and continuous. In addition, and are nondecreasing on , is nondecreasing on and .

By using Lemma 2, we can obtain the following results.

(1) If

then

(2) If

then

where

Therefore, under the hypotheses of the theorem, systems (1) and (2) achieve finite-time synchronization. □

Hence, when , Remark 2 shows that the evaluation function can be determined as follows.

(1) If

then

(2) If

then

In Section 4, we present a numerical example that visually demonstrates the finite-time synchronization between systems (1) and (2).

4. Numerical examples

Compared with conventional neural networks, neural networks that incorporate stochasticity can be more adaptable and robust when finite-time synchronization is sought. Stochastic terms increase the complexity of the system, which can improve fault tolerance and adaptability and help the system adapt to diverse environments and application scenarios. Stochastic neural networks also have advantages in handling nonlinear and complex problems. In practice, the parameters and states of neural network systems are often uncertain, and stochastic terms model such uncertainty more faithfully, improving the reliability of the system and hence its performance in practical applications. Investigating neural networks with stochasticity may therefore help enhance their application capabilities and performance.

We illustrate the application of Theorem 2 to finite-time synchronization between systems (1) and (2) through a numerical example, which validates the effectiveness of the proposed synchronization method. The example simulates the behavior of the systems with varying initial conditions and analyzes the resulting trajectories, providing evidence of the practical relevance of the finite-time synchronization approach presented in this paper.
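The simulation workflow described above can be sketched in a much simpler setting: integrate a drive and a response system from different initial states, then inspect the error and the squared error. Everything in this sketch is a hypothetical stand-in, not the example system or the controller of this paper. It uses a deterministic BAM-type pair with one tanh neuron per layer, no memristive switching, no delay, no Brownian terms, a linear feedback controller with gain `K`, and an explicit fractional Euler (product-rectangle) solver.

```python
import math

import numpy as np


def fractional_euler(rhs, x0, alpha, t_end, n):
    """Explicit fractional Euler scheme for the Caputo system D^alpha x = rhs(t, x),
    x(0) = x0, with 0 < alpha < 1, on a uniform grid of n steps."""
    h = t_end / n
    t = np.linspace(0.0, t_end, n + 1)
    x = np.zeros((n + 1, len(x0)))
    x[0] = x0
    f_hist = np.zeros_like(x)
    c = h ** alpha / math.gamma(alpha + 1)
    for i in range(1, n + 1):
        f_hist[i - 1] = rhs(t[i - 1], x[i - 1])
        j = np.arange(i)
        w = (i - j) ** alpha - (i - j - 1) ** alpha   # weights for f_0 .. f_{i-1}
        x[i] = x0 + c * (w @ f_hist[:i])
    return t, x


# Hypothetical parameters: self-inhibition rates, cross-layer weights, inputs, gain.
A_X, A_Y, W_XY, W_YX, I_X, I_Y = 1.0, 1.0, 2.0, -1.5, 0.5, -0.3
K = 5.0


def coupled_rhs(t, z):
    """Drive state (x, y) and response state (xr, yr) with linear feedback."""
    x, y, xr, yr = z
    ux, uy = -K * (xr - x), -K * (yr - y)          # assumed linear controller
    return np.array([
        -A_X * x + W_XY * np.tanh(y) + I_X,
        -A_Y * y + W_YX * np.tanh(x) + I_Y,
        -A_X * xr + W_XY * np.tanh(yr) + I_X + ux,
        -A_Y * yr + W_YX * np.tanh(xr) + I_Y + uy,
    ])


t, z = fractional_euler(coupled_rhs, np.array([0.2, -0.4, 1.5, 1.0]), 0.9, 5.0, 2000)
err = z[:, 2:] - z[:, :2]                          # synchronization error (e_x, e_y)
sq_err = np.sum(err ** 2, axis=1)                  # squared error norm
```

With these hypothetical values, the feedback gain dominates the cross-layer coupling, so `sq_err` decays toward zero. Plotting `err` and `sq_err` against `t` gives curves analogous in spirit to the error and squared-error figures below.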

Example

Consider the fractional-order stochastic MBAMNNs with time delay

where

Let

Then, assume

and

in the corresponding system (2).

Let

such that

It is easy to calculate that

and

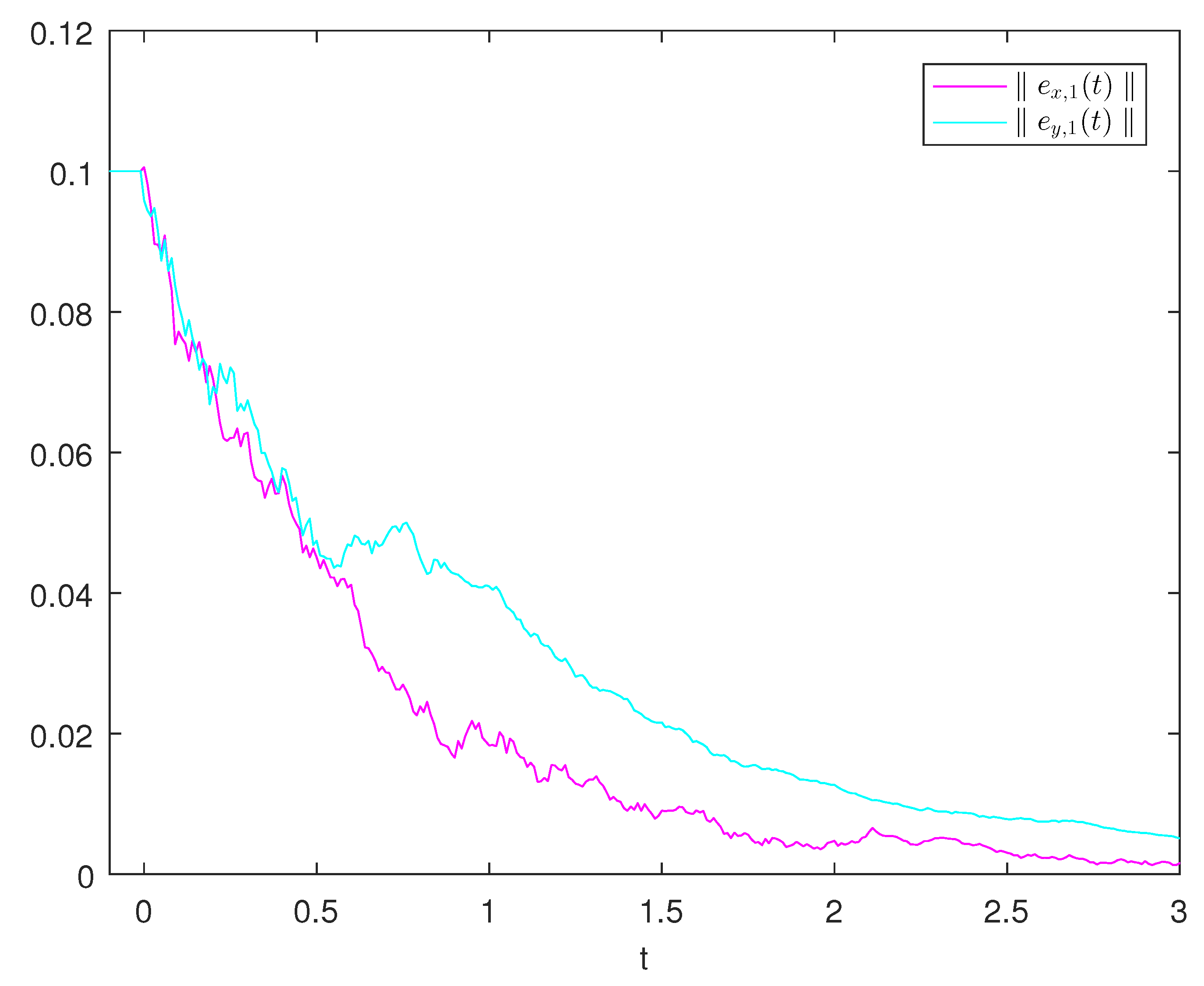

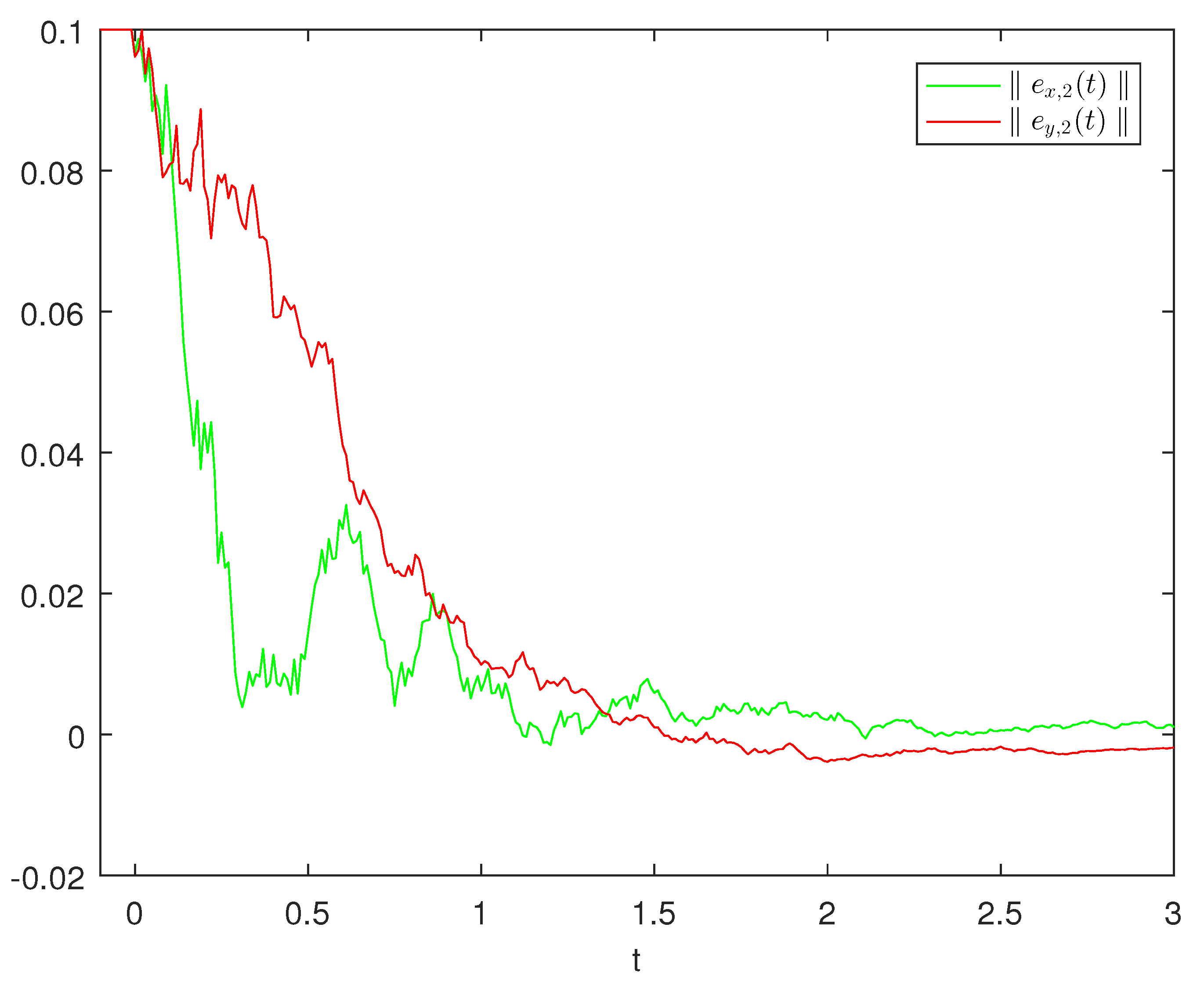

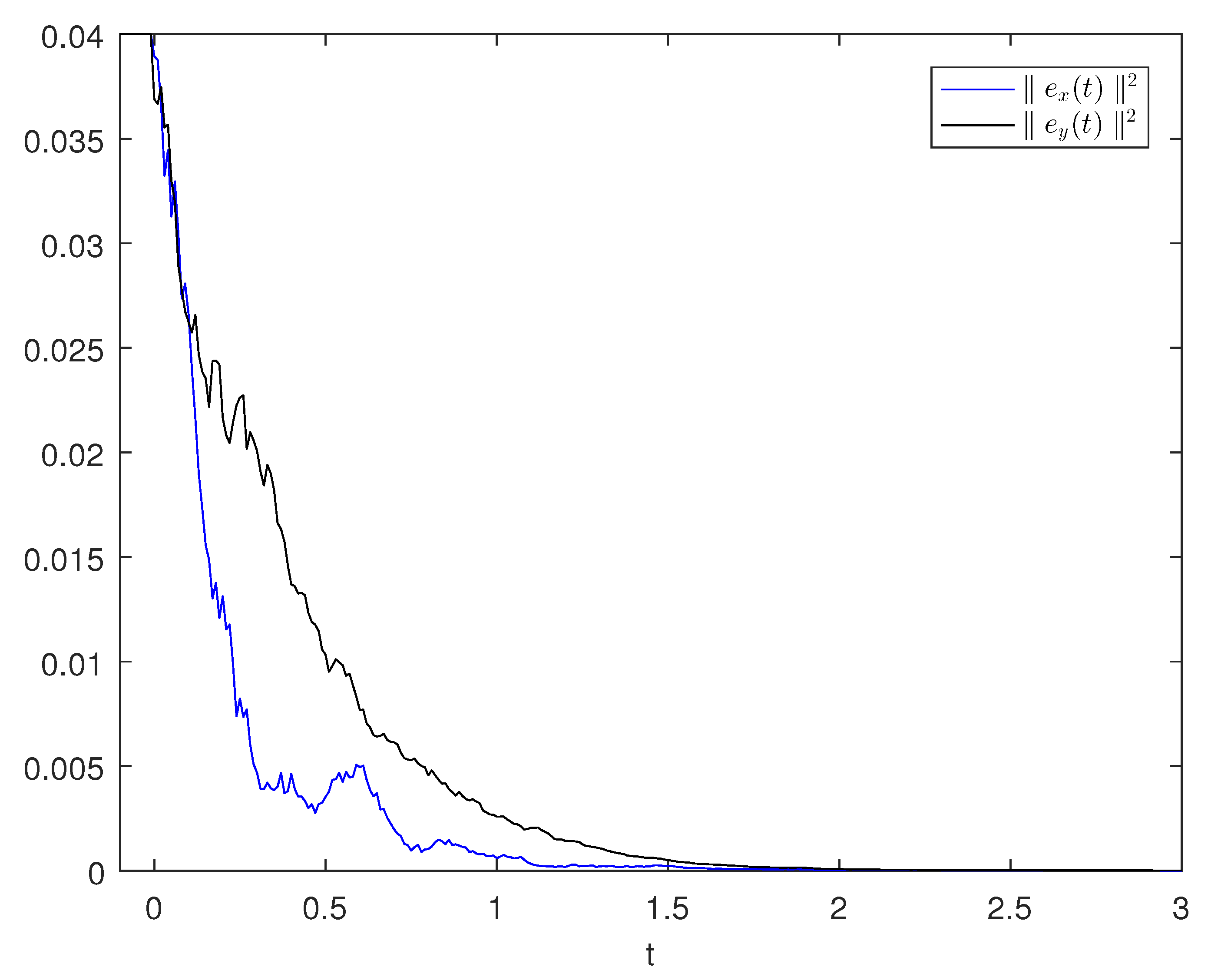

By analyzing

Figure 1 and

Figure 2, we can obtain the error components

and

of systems (

1) and (

2) when

and

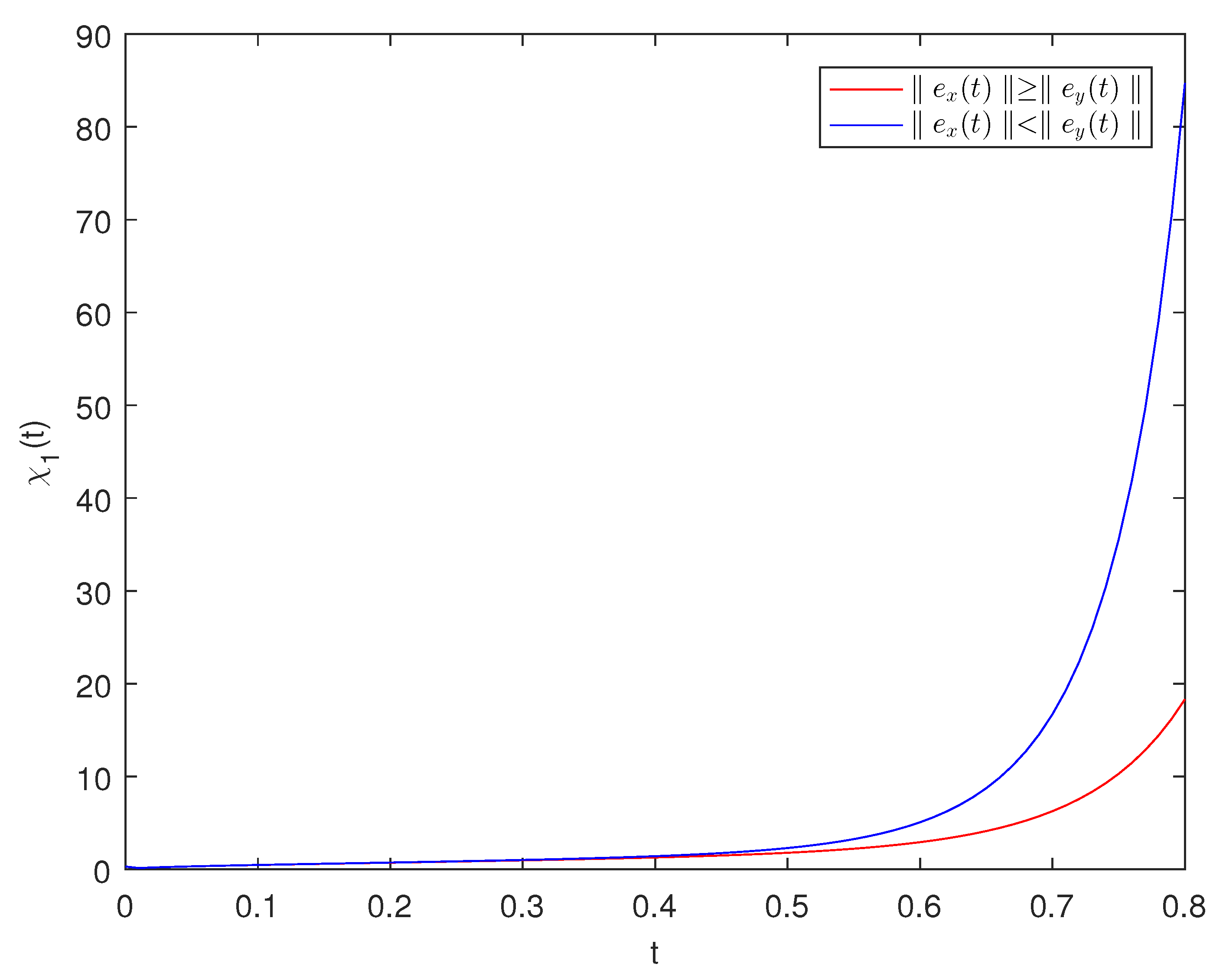

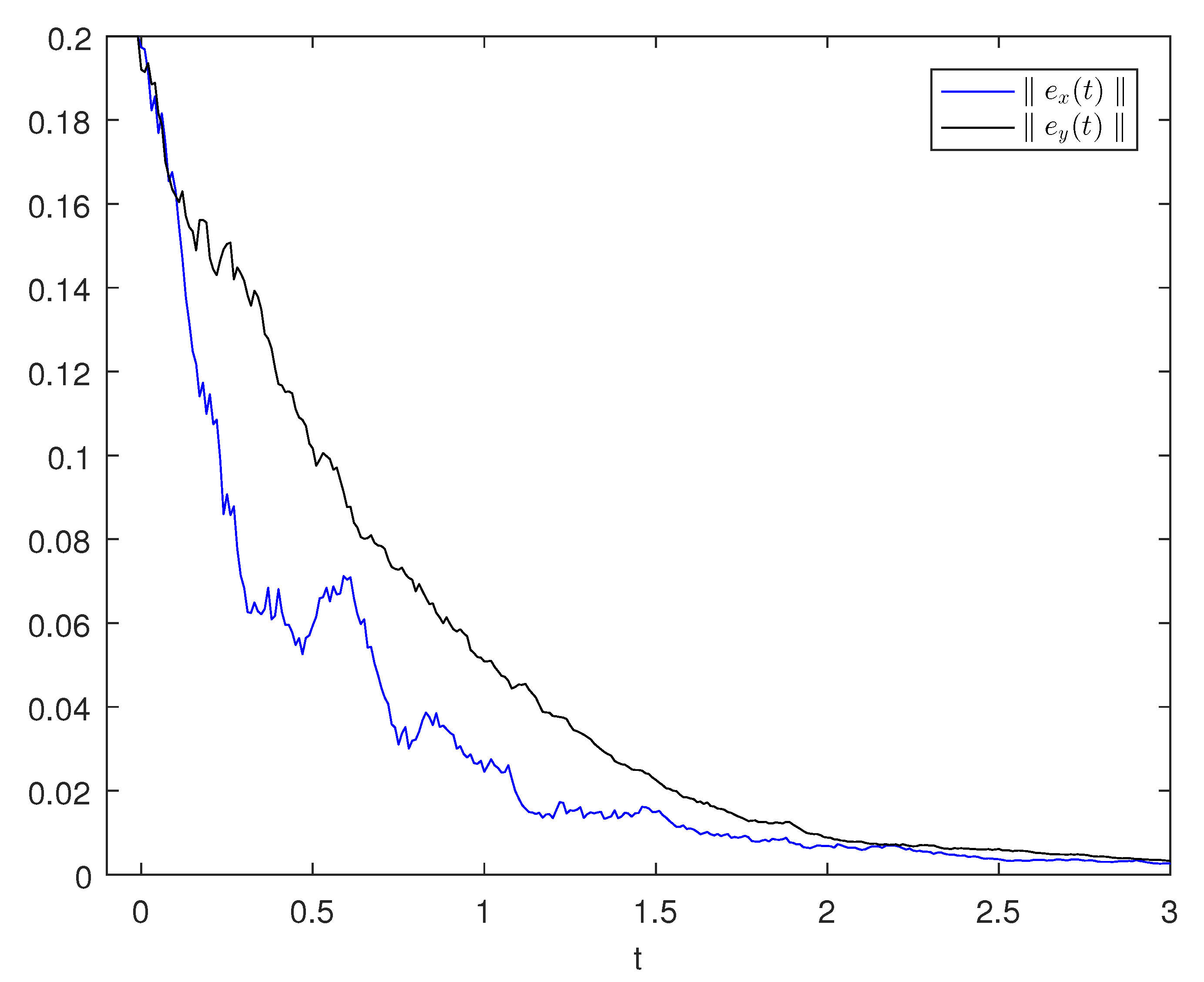

. By using

Figure 3 and

Figure 4, we can calculate the error and square of error of systems (

1) and (

2). Finally, based on

Figure 5 and Theorem 2, we can determine the finite-time synchronization time

.