Submitted: 09 August 2023

Posted: 09 August 2023


Abstract

Keywords:

1. Introduction

- We propose an improved adaptive activation function. Each layer of the network learns its own activation function, which improves the generalization performance of deep learning models and adapts well to different model architectures.

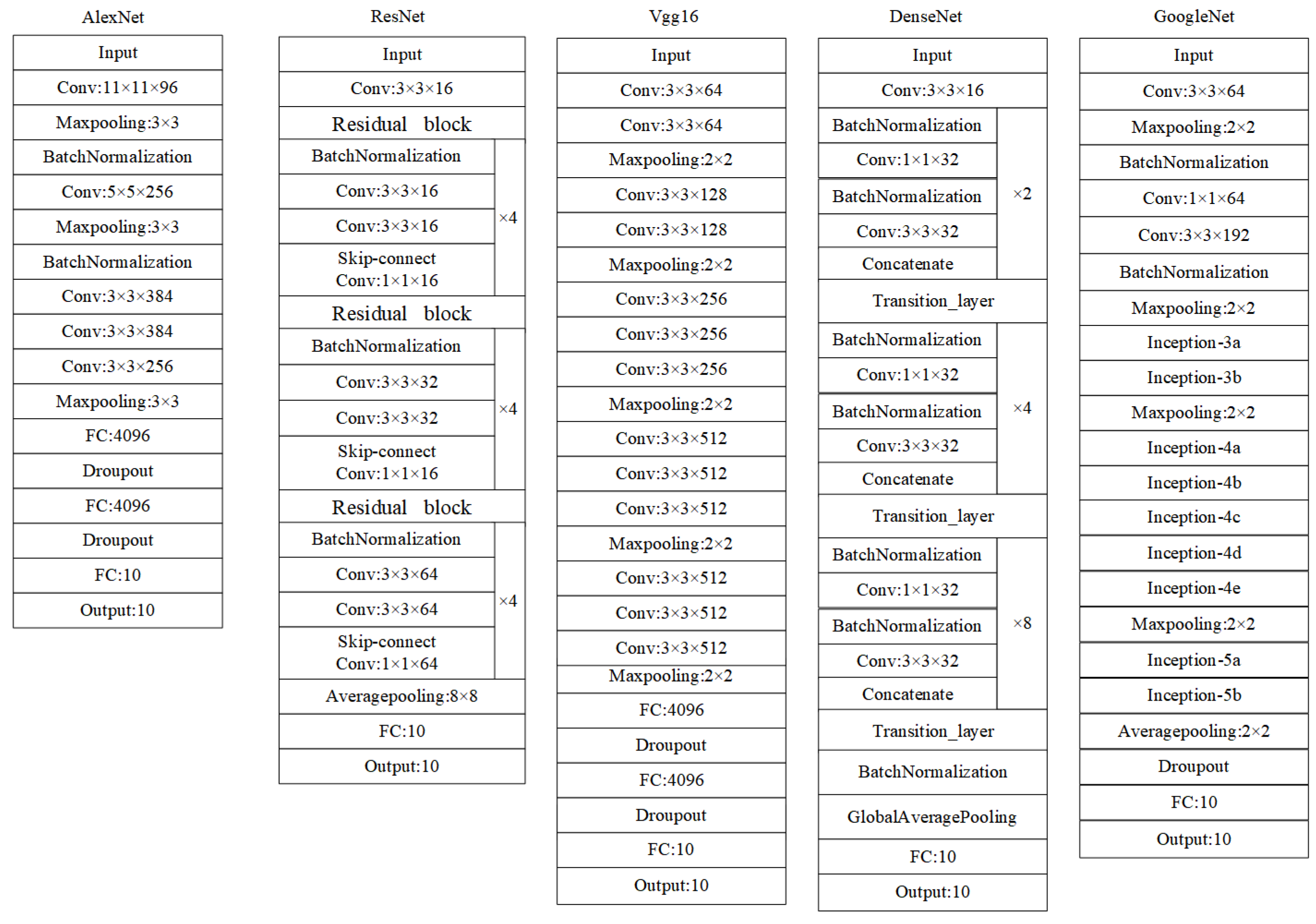

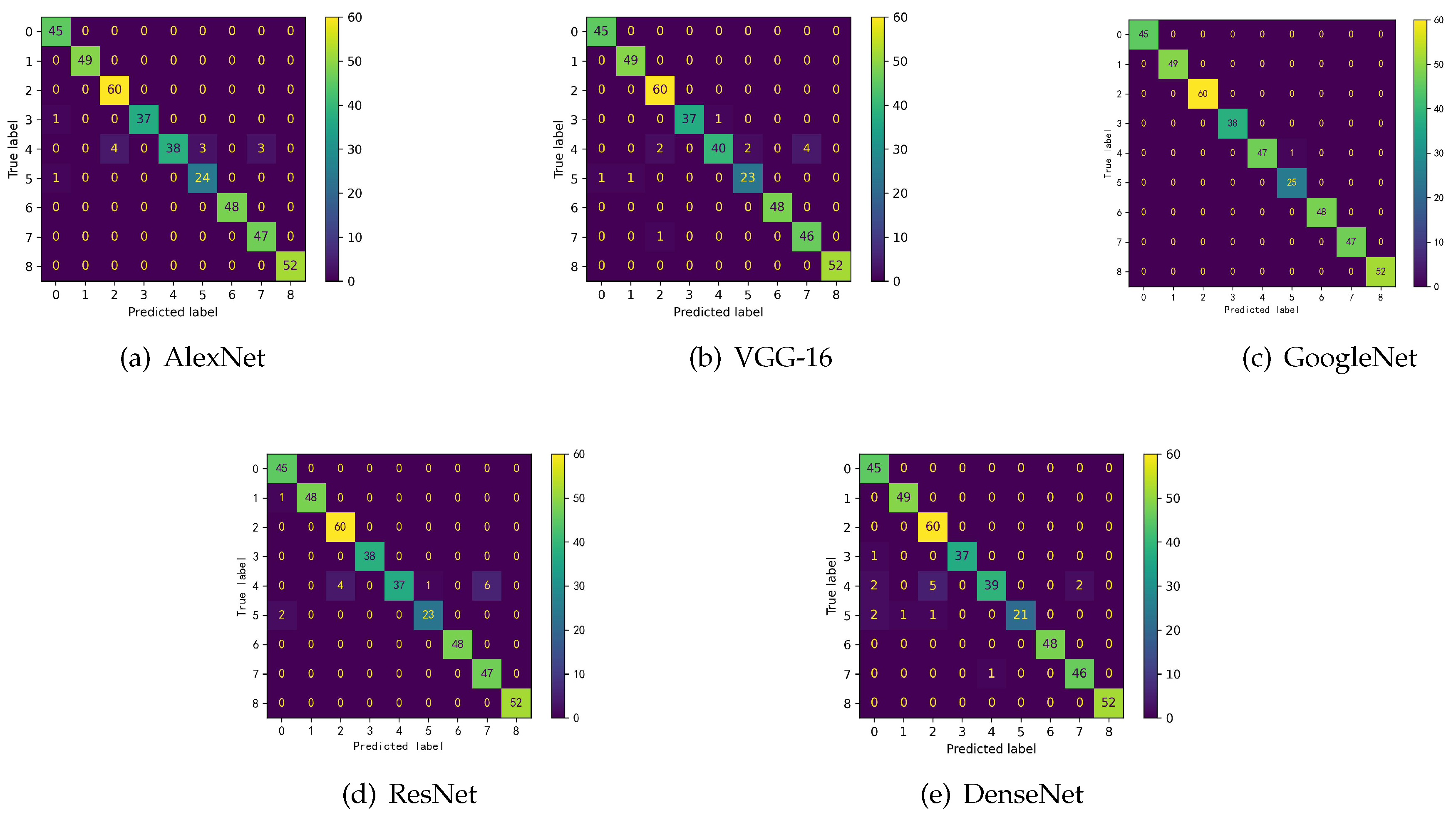

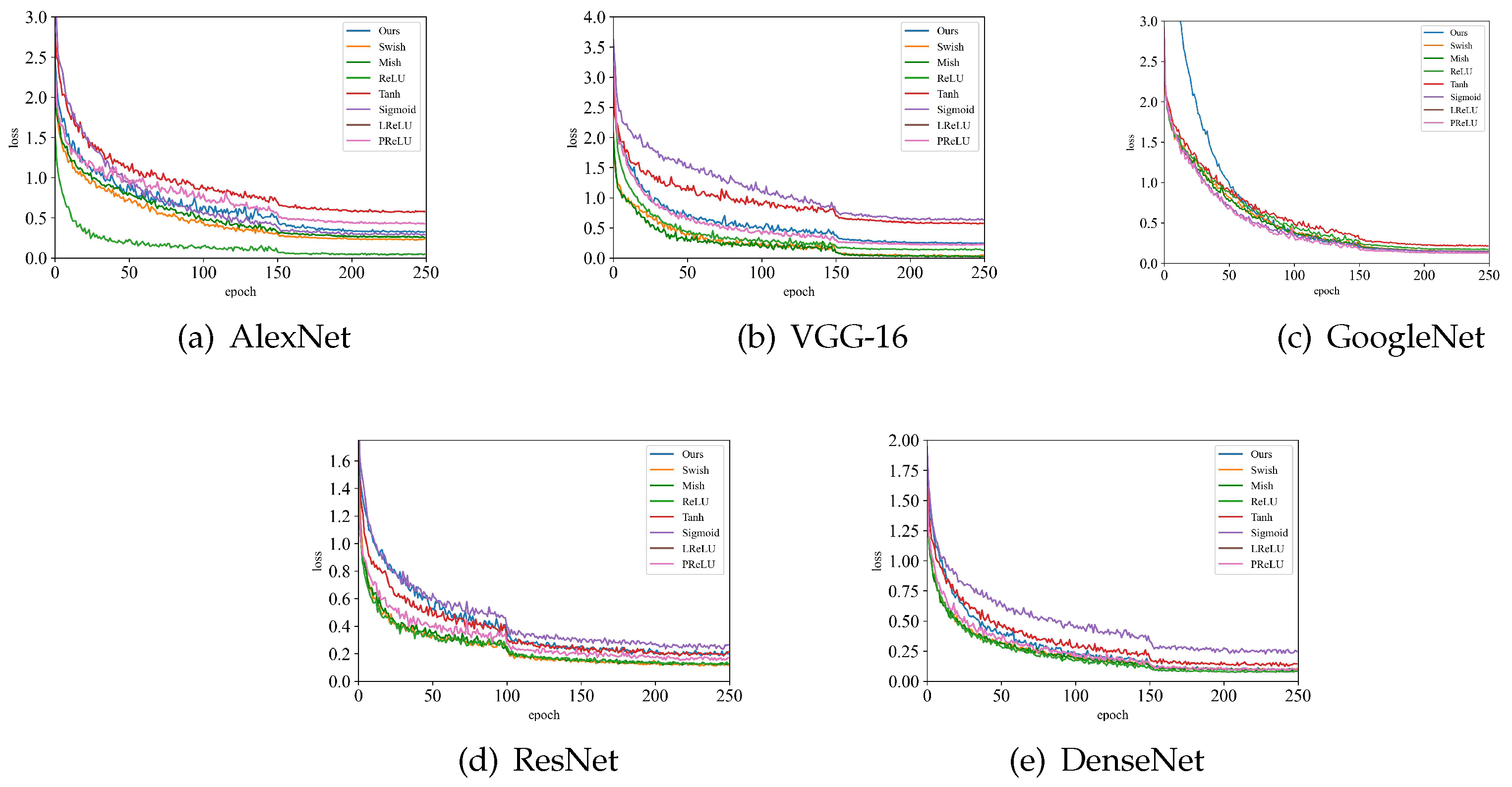

- We apply the proposed activation function to fault diagnosis of pumping units so that features are better extracted from the contours of the indicator diagram. The proposed activation function improves the accuracy of fault diagnosis and has better search ability, which is verified by comparison experiments on AlexNet [20], VGG-16 [21], GoogleNet [22], ResNet [23] and DenseNet [24].

- The proposed activation function is also evaluated on the public CIFAR10 dataset, which shows that it is suitable for, and generalizes to, tasks beyond pumping unit fault diagnosis.

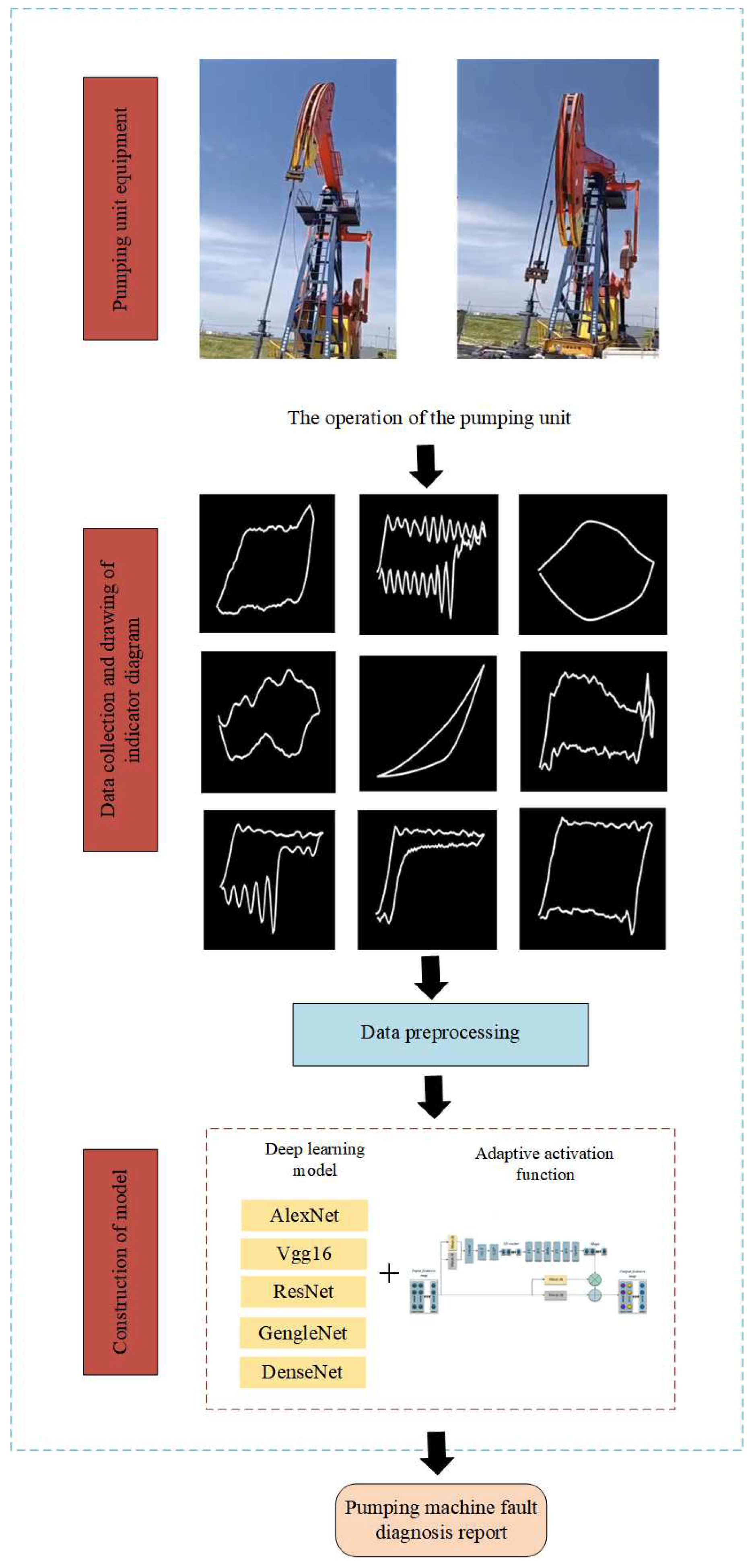
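
The first contribution describes an activation function whose shape is learned separately in each layer. As a minimal sketch of that idea, and not the function proposed in this paper, the Python below uses a PReLU-style activation (He et al., listed in the references) in which each layer owns one trainable negative-side slope. The class name `LayerActivation`, the initial slope of 0.25, and the learning rate are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LayerActivation:
    """PReLU-style stand-in: f(x) = x if x > 0 else slope * x.

    Hypothetical illustration only; the paper's own activation function
    is not reproduced here.
    """

    slope: float = 0.25  # trainable negative-side slope, one per layer (assumed init)

    def forward(self, xs: List[float]) -> List[float]:
        return [x if x > 0 else self.slope * x for x in xs]

    def slope_grad(self, xs: List[float], upstream: List[float]) -> float:
        # df/dslope equals x where x <= 0 and 0 elsewhere; chain with upstream grads.
        return sum(g * x for x, g in zip(xs, upstream) if x <= 0)

    def sgd_step(self, xs: List[float], upstream: List[float], lr: float = 0.1) -> None:
        # Plain gradient descent on this layer's own slope.
        self.slope -= lr * self.slope_grad(xs, upstream)


# One independent activation per layer, so each layer can learn a different shape.
layers = [LayerActivation() for _ in range(3)]
layers[0].sgd_step(xs=[-2.0, 3.0], upstream=[1.0, 1.0])
```

After the single update, only the first layer's slope has moved; the other layers keep their initial value until they receive their own gradients.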

2. Experiment Design and Measurement

2.1. Introduction to pumping unit

2.2. Fault types of pumping unit

- Normal: The pump work diagram (indicator diagram) for normal operation plots the displacement of the suspension point relative to the lower dead point on the horizontal axis and, on the vertical axis, the weight of the rod plus the cumulative load on the pump plunger. The resulting curve is approximately a parallelogram.

- Insufficient fluid supply: The well contains too little crude oil, so the plunger pump draws in a large amount of gas along with the crude oil on each stroke. As a result, the pump holds a large amount of gas and cannot work at full capacity.

- Contain sand: Because the well contains sand, the plunger meets additional resistance in certain regions of its travel. On the up stroke, this resistance increases the load at the suspension point; on the down stroke, at the same position, it reduces the load at the suspension point. Because sand particles are unevenly distributed in the pump barrel, their influence on the load varies greatly from place to place, which leads to severe load fluctuations within a short time.

- Piston stuck: When the pump plunger is stuck near the bottom dead point, the rod is stretched during both the up stroke and the down stroke. Because the whole stroke is then only elastic deformation of the rod, the work diagram is approximately an oblique line.

- Gas interference: Gas interference occurs when the percentage of gas in the oil of the pumping well is high while the percentage of crude oil is relatively low. The pump barrel then draws in mostly gas, which produces a significant difference between the actual load and the theoretical load.

- Pump down touch: When the anti-impact distance is too large, the piston approaches the upper dead point on its way up and, as it continues upward, collides with the moving valve. This causes sudden loading of the piston and bunching of the curve at the upper dead point.

- Pump up touch: When the anti-impact distance is too small, the piston comes too close to the lower dead point and, as it moves down, collides with the fixed valve. This causes sudden unloading of the piston and bunching of the curve at the lower dead point.

- Double valve leakage: The moving valve and the fixed valve leak at the same time. The leakage may be caused by a combination of multiple faults.

- Pumping rod detachment: Because the sucker rod has detached, the pumping unit's power cannot be transmitted to the pump, so no oil can be extracted.
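
For a classifier, the nine working conditions listed above are typically mapped to integer class labels. The following is a small sketch of such a mapping; the enum name `FaultType`, the index order, and the `one_hot` helper are illustrative assumptions and are not taken from the paper.

```python
from enum import IntEnum
from typing import List


class FaultType(IntEnum):
    # The index order is an arbitrary assumption made for illustration.
    NORMAL = 0
    INSUFFICIENT_FLUID_SUPPLY = 1
    CONTAIN_SAND = 2
    PISTON_STUCK = 3
    GAS_INTERFERENCE = 4
    PUMP_DOWN_TOUCH = 5
    PUMP_UP_TOUCH = 6
    DOUBLE_VALVE_LEAKAGE = 7
    PUMPING_ROD_DETACHMENT = 8


def one_hot(label: FaultType) -> List[int]:
    """Return a one-hot target vector with one slot per working condition."""
    return [1 if member == label else 0 for member in FaultType]


target = one_hot(FaultType.PISTON_STUCK)
```

Keeping the labels in a single enum makes it harder for the training code and the evaluation tables to disagree about which index belongs to which fault.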

3. Theoretical Analysis

3.1. Common adaptive activation functions

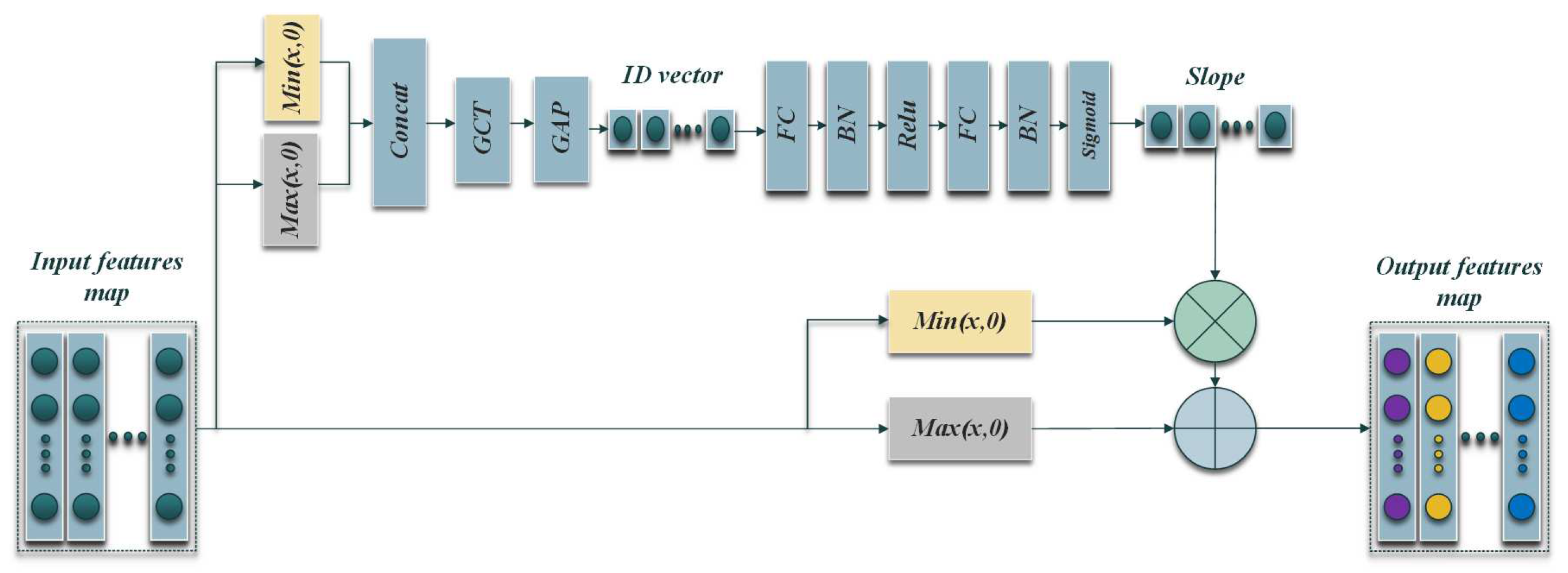

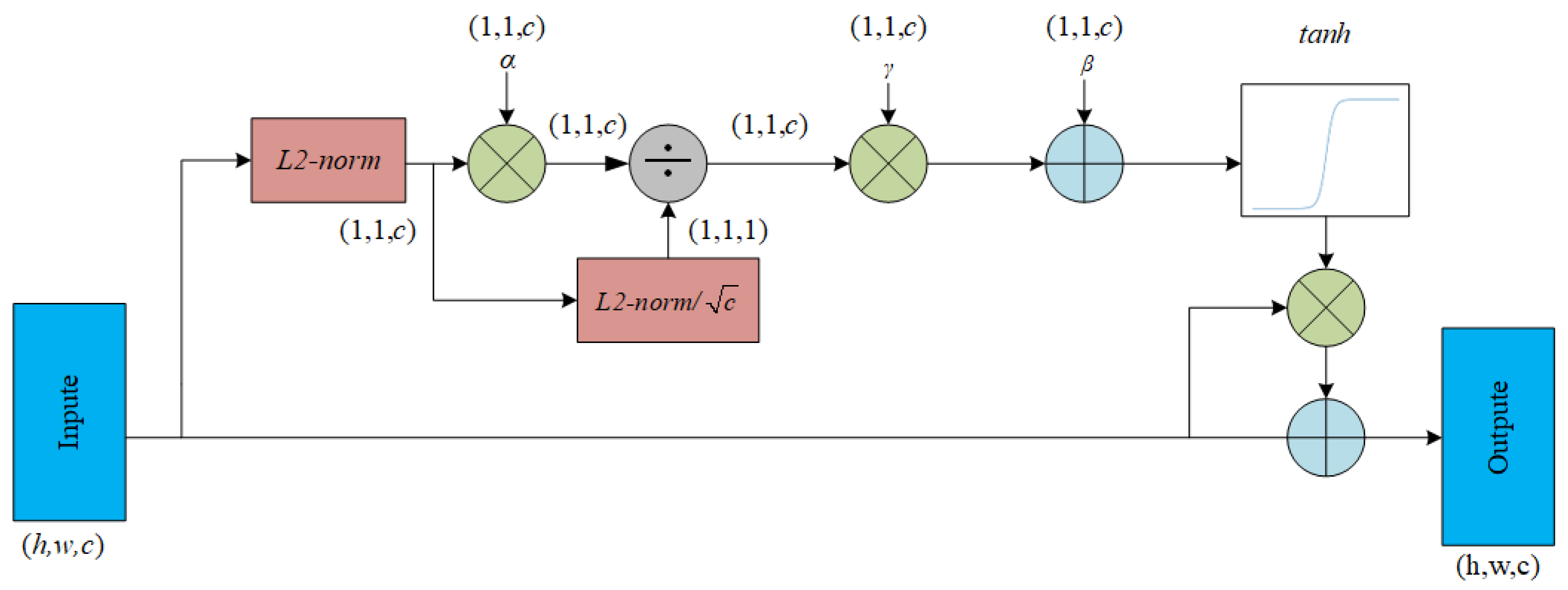

3.2. The structure of adaptive activation functions

4. Experimental simulation

4.1. The dataset of pumping

4.2. CIFAR10

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- J. Du, a. K. S. Zhigang Liu, and E. Yang, Fault diagnosis of pumping machine based on convolutional neural network, Journal of University of Electronic Science and Technology of China (2020), 751-757.

- Gao, Z.; Cecati, C.; Ding, S.X. A Survey of Fault Diagnosis and Fault-Tolerant Techniques—Part I: Fault Diagnosis With Model-Based and Signal-Based Approaches. IEEE Trans. Ind. Electron. 2015, 62, 3757–3767.

- Gao, Z.; Cecati, C.; Ding, S.X. A Survey of Fault Diagnosis and Fault-Tolerant Techniques Part II: Fault Diagnosis with Knowledge-Based and Hybrid/Active Approaches. IEEE Trans. Ind. Electron. 2015, 62, 1–1.

- Tang, A.; Zhao, W. A Fault Diagnosis Method for Drilling Pump Fluid Ends Based on Time–Frequency Transforms. Processes 2023, 11, 1996.

- Fu, Z.; Zhou, Z.; Yuan, Y. Fault Diagnosis of Wind Turbine Main Bearing in the Condition of Noise Based on Generative Adversarial Network. Processes 2022, 10, 2006.

- Agarwal, P.; Gonzalez, J.I.M.; Elkamel, A.; Budman, H. Hierarchical Deep LSTM for Fault Detection and Diagnosis for a Chemical Process. Processes 2022, 10, 2557.

- Xu, G.; Liu, M.; Jiang, Z.; Shen, W.; Huang, C. Online Fault Diagnosis Method Based on Transfer Convolutional Neural Networks. IEEE Trans. Instrum. Meas. 2019, 69, 509–520.

- Y. Duan et al., Improved alexnet model and its application in well dynamogram classification, Computer Applications and Software (2018), 226-230+272.

- Sang, J. Research on pump fault diagnosis based on pso-bp neural network algorithm. 2019, 1748–1752.

- Zhang, L.; Du, Q.; Liu, T.; Li, J. A Fault Diagnosis Model of Pumping Unit Based on BP Neural Network. 2020, 454–458.

- Hu, H.; Li, M.; Dang, C. Research on the fault identification method of oil pumping unit based on residual network. 2022, 940–943.

- Bai, T.; Li, X.; Ding, S. Research on Electrical Parameter Fault Diagnosis Method of Oil Well Based on TSC-DCGAN Deep Learning. 2022, 753–761.

- V. Nair and G. E. Hinton, Rectified linear units improve restricted boltzmann machines, International Conference on Machine Learning.

- L. Maas, Rectifier nonlinearities improve neural network acoustic models.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the International Conference on Computer Vision, Las Condes, Chile, 11–18 December 2015; pp. 1026–1034.

- P. Ramachandran, B. Zoph, and Q. V. Le, Swish: a self-gated activation function, arXiv: Neural and Evolutionary Computing (2017).

- Hu, H.; Liu, A.; Guan, Q.; Qian, H.; Li, X.; Chen, S.; Zhou, Q. Adaptively Customizing Activation Functions for Various Layers. IEEE Trans. Neural Networks Learn. Syst. 2022, 34, 6096–6107.

- Zhao, M.; Zhong, S.; Fu, X.; Tang, B.; Dong, S.; Pecht, M. Deep Residual Networks With Adaptively Parametric Rectifier Linear Units for Fault Diagnosis. IEEE Trans. Ind. Electron. 2020, 68, 2587–2597.

- Yang, Z.; Zhu, L.; Wu, Y.; Yang, Y. Gated Channel Transformation for Visual Recognition. 2020, 11791–11800.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Advances in neural information processing systems 2012, 25, 1097–1105.

- K. Simonyan and A. Zisserman, Very deep convolutional networks for large-scale image recognition, (2015).

- C. Szegedy et al., Going deeper with convolutions, 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2014), 1–9.

- K. He et al., Deep residual learning for image recognition, 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2015), 770–778.

- G. Huang, Z. Liu, and K. Q. Weinberger, Densely connected convolutional networks, 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016), 2261–2269.

- Cao, L.; Zhao, T. Pumping Unit Design and Control Research. 2019, 1738–1743.

- D. Misra, Mish: A self regularized non-monotonic neural activation function, ArXiv abs/1908.08681 (2019).

- M. Lin, Q. Chen, and S. Yan, Network in network, ArXiv abs/1312.4400 (2013).

- S. Ioffe and C. Szegedy, Batch normalization: Accelerating deep network training by reducing internal covariate shift, ArXiv abs/1502.03167 (2015).

| Methods | AlexNet (%) | VGG-16 (%) | GoogleNet (%) | ResNet (%) | DenseNet (%) |
|---|---|---|---|---|---|
| Ours | 97.91±0.4997 | 97.57±0.2658 | 99.13±0.1941 | 97.82±0.4061 | 97.52±0.4706 |
| ReLU | 97.33±0.5534 | 96.94±0.9537 | 98.56±0.3220 | 96.12±0.6140 | 96.89±0.0970 |
| Sigmoid | 94.51±0.4930 | 96.41±0.3567 | 96.17±0.2831 | 92.14±0.2145 | 94.17±0.6584 |
| Tanh | 96.41±0.3220 | 97.04±0.4706 | 97.91±0.3632 | 94.66±0.3070 | 95.05±0.4231 |
| LReLU | 97.48±0.8209 | 97.14±0.5405 | 98.74±0.2830 | 95.28±0.9029 | 96.36±0.5091 |
| PReLU | 97.43±0.6254 | 97.04±0.3220 | 98.74±0.6584 | 96.36±0.5091 | 97.04±0.3883 |
| Mish | 97.23±0.6063 | 96.02±0.6254 | 98.74±0.2830 | 96.07±0.3883 | 97.17±0.1144 |
| Swish | 97.72±0.3292 | 95.15±0.8547 | 98.74±0.1816 | 96.26±0.3943 | 96.75±0.5661 |
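
To read the table above at a glance, one can compute how far the "Ours" row sits above the ReLU baseline for each network. The sketch below does only that arithmetic on the mean accuracies copied from the table; it ignores the ± spread, so small gaps should not be read as significant. The variable names are illustrative.

```python
ARCHS = ["AlexNet", "VGG-16", "GoogleNet", "ResNet", "DenseNet"]

# Mean accuracies (%) copied from the "Ours" and "ReLU" rows of the table.
OURS = [97.91, 97.57, 99.13, 97.82, 97.52]
RELU = [97.33, 96.94, 98.56, 96.12, 96.89]

# Gain of the proposed activation over ReLU, in percentage points.
gains = {arch: round(o - r, 2) for arch, o, r in zip(ARCHS, OURS, RELU)}

# Network on which the gap to ReLU is largest.
largest_gain_arch = max(gains, key=gains.get)

for arch, gain in gains.items():
    print(f"{arch}: +{gain:.2f} percentage points over ReLU")
```

On these numbers the gap is largest for ResNet (1.70 points) and between 0.57 and 0.63 points for the other four networks.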

| The type of fault | AlexNet | VGG-16 | GoogleNet | ResNet | DenseNet |
|---|---|---|---|---|---|
| Pump up touch | 0.96 | 0.98 | 0.90 | 0.96 | 0.90 |
| Pumping rod detachment | 1.00 | 0.98 | 0.96 | 0.98 | 1.00 |
| Insufficient liquid supply | 0.94 | 0.95 | 0.93 | 0.95 | 0.91 |
| Contain sand | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Piston stuck | 1.00 | 0.98 | 0.98 | 1.00 | 0.97 |
| Gas influence | 0.89 | 0.92 | 0.98 | 0.92 | 1.00 |
| Double valve leakage | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Pump down touch | 0.94 | 0.92 | 0.93 | 0.90 | 0.96 |
| Normal | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
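
The per-fault scores in the table above can be summarized per network by an unweighted (macro) average and by the fault with the lowest score. The sketch below performs that arithmetic on the values copied from the table. The metric behind the scores is not named in this excerpt, so the code treats them as generic per-class scores, and the variable names are illustrative.

```python
FAULTS = [
    "Pump up touch", "Pumping rod detachment", "Insufficient liquid supply",
    "Contain sand", "Piston stuck", "Gas influence",
    "Double valve leakage", "Pump down touch", "Normal",
]

# Per-class scores copied column by column from the table (same order as FAULTS).
SCORES = {
    "AlexNet":   [0.96, 1.00, 0.94, 1.00, 1.00, 0.89, 1.00, 0.94, 1.00],
    "VGG-16":    [0.98, 0.98, 0.95, 1.00, 0.98, 0.92, 1.00, 0.92, 1.00],
    "GoogleNet": [0.90, 0.96, 0.93, 1.00, 0.98, 0.98, 1.00, 0.93, 1.00],
    "ResNet":    [0.96, 0.98, 0.95, 1.00, 1.00, 0.92, 1.00, 0.90, 1.00],
    "DenseNet":  [0.90, 1.00, 0.91, 1.00, 0.97, 1.00, 1.00, 0.96, 1.00],
}

# Macro average: every fault type counts equally, regardless of sample count.
macro_avg = {net: sum(vals) / len(vals) for net, vals in SCORES.items()}

# Fault type with the lowest score for each network (first one wins on ties).
hardest = {net: FAULTS[vals.index(min(vals))] for net, vals in SCORES.items()}

for net in SCORES:
    print(f"{net}: macro average {macro_avg[net]:.3f}, lowest on '{hardest[net]}'")
```

A macro average weights rare and common faults equally, which differs from overall accuracy when the classes are imbalanced.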

| Methods | AlexNet (%) | VGG-16 (%) | GoogleNet (%) | ResNet (%) | DenseNet (%) |
|---|---|---|---|---|---|
| Ours | 91.10±0.0445 | 93.86±0.0406 | 90.35±0.1070 | 91.73±0.0231 | 92.30±0.0681 |
| ReLU | 89.26±0.0576 | 90.76±0.0987 | 89.00±0.0337 | 90.27±0.0034 | 90.42±0.0846 |
| Sigmoid | 85.65±0.0365 | 89.32±0.1127 | 87.23±0.0485 | 88.06±0.0835 | 82.67±0.2110 |
| Tanh | 86.99±0.2432 | 89.41±0.0189 | 83.69±0.0402 | 88.68±0.0414 | 87.20±0.0745 |
| LReLU | 90.31±0.2147 | 93.38±0.0414 | 89.70±0.0527 | 91.24±0.1059 | 91.23±0.0684 |
| PReLU | 88.36±0.0436 | 91.84±0.0633 | 89.31±0.0454 | 91.08±0.0637 | 91.69±0.0755 |
| Mish | 89.07±0.0847 | 89.92±0.0577 | 88.64±0.0729 | 91.12±0.7960 | 91.97±0.0758 |
| Swish | 89.19±0.0628 | 89.19±0.0618 | 88.94±0.1161 | 90.93±0.0850 | 91.95±0.0893 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).