Submitted: 08 June 2023

Posted: 09 June 2023

Abstract

Keywords:

1. Introduction

2. Preliminaries

2.1. Lattice

2.2. Lattice basis reduction

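- ① (Size condition) $|\mu_{i,j}| \le \frac{1}{2}$, for all $1 \le j < i \le n$;

- ② (Lovász condition) $\delta \|\mathbf{b}_{i-1}^*\|^2 \le \|\mathbf{b}_i^*\|^2 + \mu_{i,i-1}^2 \|\mathbf{b}_{i-1}^*\|^2$, for all $2 \le i \le n$, where $\frac{1}{4} < \delta < 1$.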

- (1)

- ;

- (2)

- , for ;

- (3)

- , for ;
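The size and Lovász conditions of Section 2.2 can be checked mechanically for a given basis. The following is a minimal sketch, assuming the standard textbook form of both conditions ($|\mu_{i,j}| \le 1/2$ for all $j < i$, and $\|\mathbf{b}_i^*\|^2 \ge (\delta - \mu_{i,i-1}^2)\|\mathbf{b}_{i-1}^*\|^2$) with $\delta = 3/4$ as an illustrative default. The helper names `gram_schmidt` and `is_lll_reduced` are hypothetical and are not taken from the paper.

```python
from fractions import Fraction


def gram_schmidt(basis):
    """Return (b_star, mu) for the rows of `basis`, using exact rationals.

    Assumes the rows are linearly independent integer vectors.
    """
    b_star, mu = [], []
    for i, b in enumerate(basis):
        v = [Fraction(x) for x in b]
        mu_i = []
        for j in range(i):
            denom = sum(y * y for y in b_star[j])
            # mu_{i,j} = <b_i, b*_j> / <b*_j, b*_j>
            coeff = sum(Fraction(x) * y for x, y in zip(b, b_star[j])) / denom
            mu_i.append(coeff)
            v = [x - coeff * y for x, y in zip(v, b_star[j])]
        b_star.append(v)
        mu.append(mu_i)
    return b_star, mu


def is_lll_reduced(basis, delta=Fraction(3, 4)):
    """Check the size condition and the Lovász condition (assumed textbook form)."""
    b_star, mu = gram_schmidt(basis)
    norms = [sum(x * x for x in v) for v in b_star]
    # Size condition: |mu_{i,j}| <= 1/2 for all j < i.
    size_ok = all(abs(m) <= Fraction(1, 2) for row in mu for m in row)
    # Lovász condition: ||b*_i||^2 >= (delta - mu_{i,i-1}^2) ||b*_{i-1}||^2.
    lovasz_ok = all(
        norms[i] >= (delta - mu[i][i - 1] ** 2) * norms[i - 1]
        for i in range(1, len(basis))
    )
    return size_ok and lovasz_ok
```

Exact rational arithmetic is used here so that the check does not depend on floating-point rounding; practical lattice libraries typically use floating-point Gram-Schmidt coefficients for speed.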

3. Algorithms to solve the ACD problem

3.1. Simultaneous Diophantine approximation (SDA)

3.2. Orthogonal Lattice (OL) based approach

3.2.1. OL-∧ algorithm

- ①

- Let , then

- ②

- Using the BKZ- algorithm, reduce the lattice matrix . Let be the i-th reduced basis vector of , then

3.2.2. OL-∨ algorithm

- condition 1: N is a large random integer with bits;

- condition 2: ;

- condition 3: ;

- condition 4: ;

- condition 5: .

- ①

- Let , then

- ②

- Using the BKZ- algorithm, reduce the basis matrix . Let be the i-th reduced basis vector of , then

3.2.3. Recover or

3.2.4. An improved algorithm of OL-∨

Algorithm 1. An improved OL algorithm for GACD

Input: An appropriate positive integer and ACD samples .

Output: Integer p.

1. Randomly choose .

2. Reduce lattice by LLL algorithm with . Let the reduced basis be , where

3. If , where , then solve the integer linear system with t unknowns as follows

4. .

5. Compute . Return p.

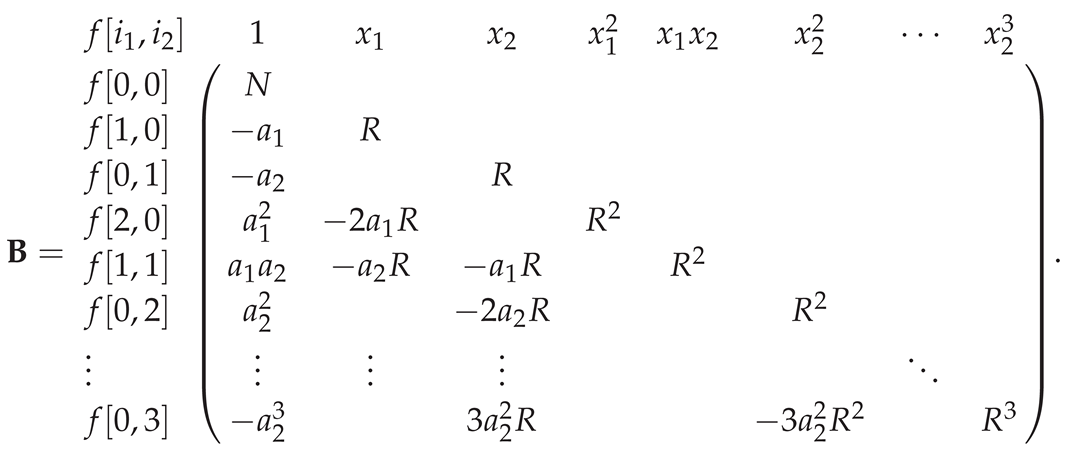

3.3. Multivariate polynomial (MP) equations method

3.3.1. The heuristic analysis results of the MP algorithm in [25]

3.3.2. The heuristic analysis results of the MP algorithm in [36]

3.3.3. The heuristic analysis results of the MP algorithm in [37]

3.4. Pre-processing of the ACD samples

3.4.1. Preserving the sample size

3.4.2. Aggressive shortening

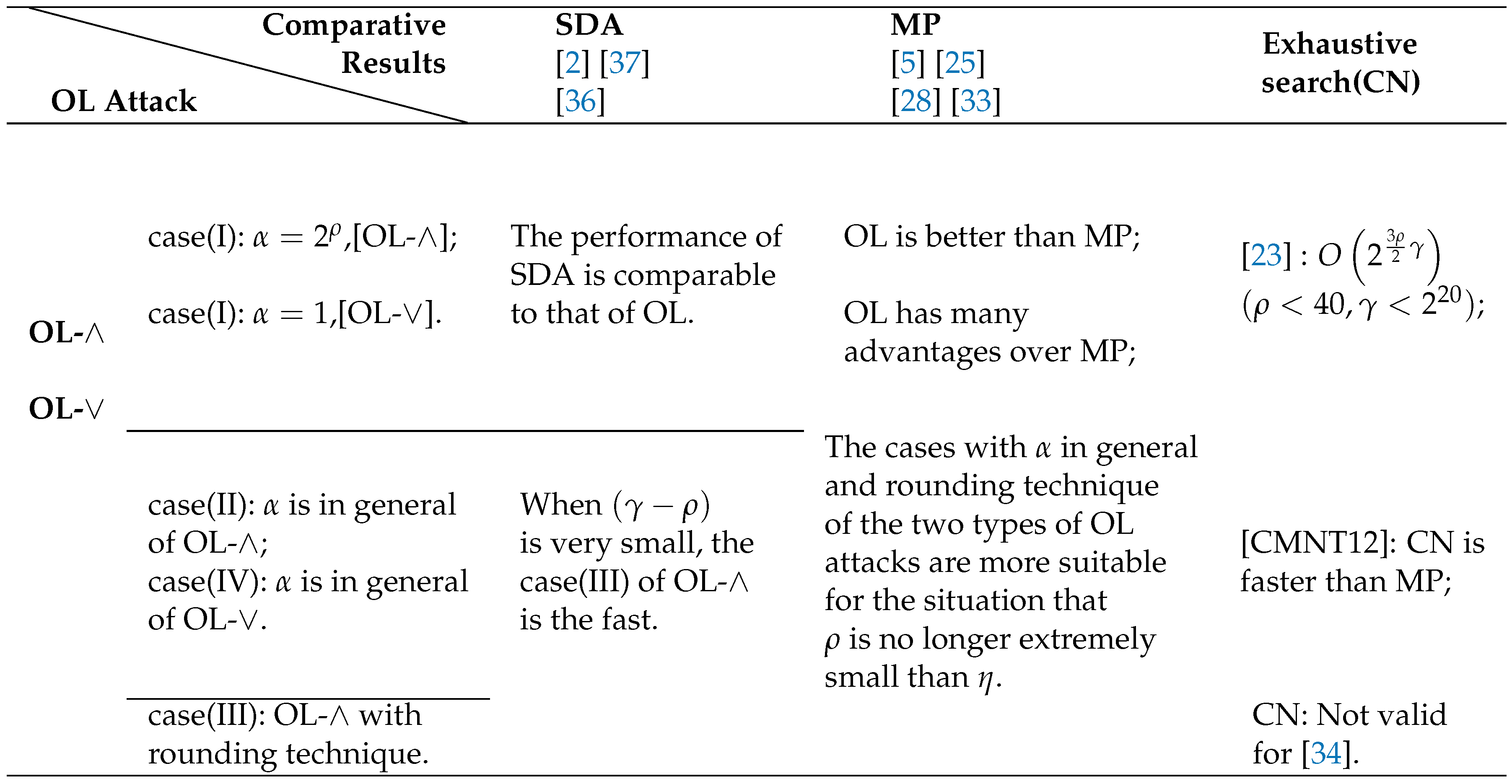

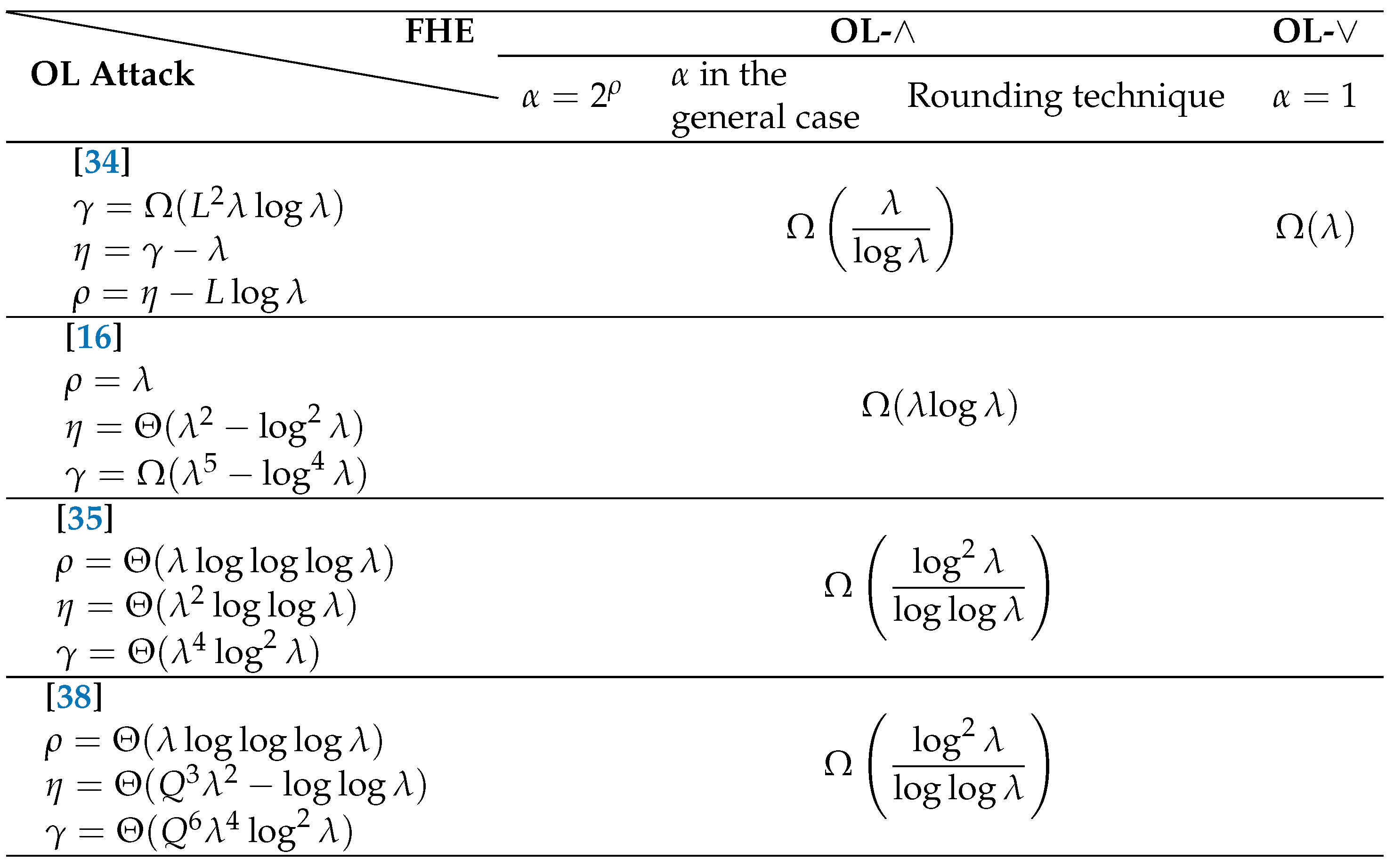

4. Comparisons of OL with SDA and MP algorithms

4.1. Comparison with the SDA algorithm

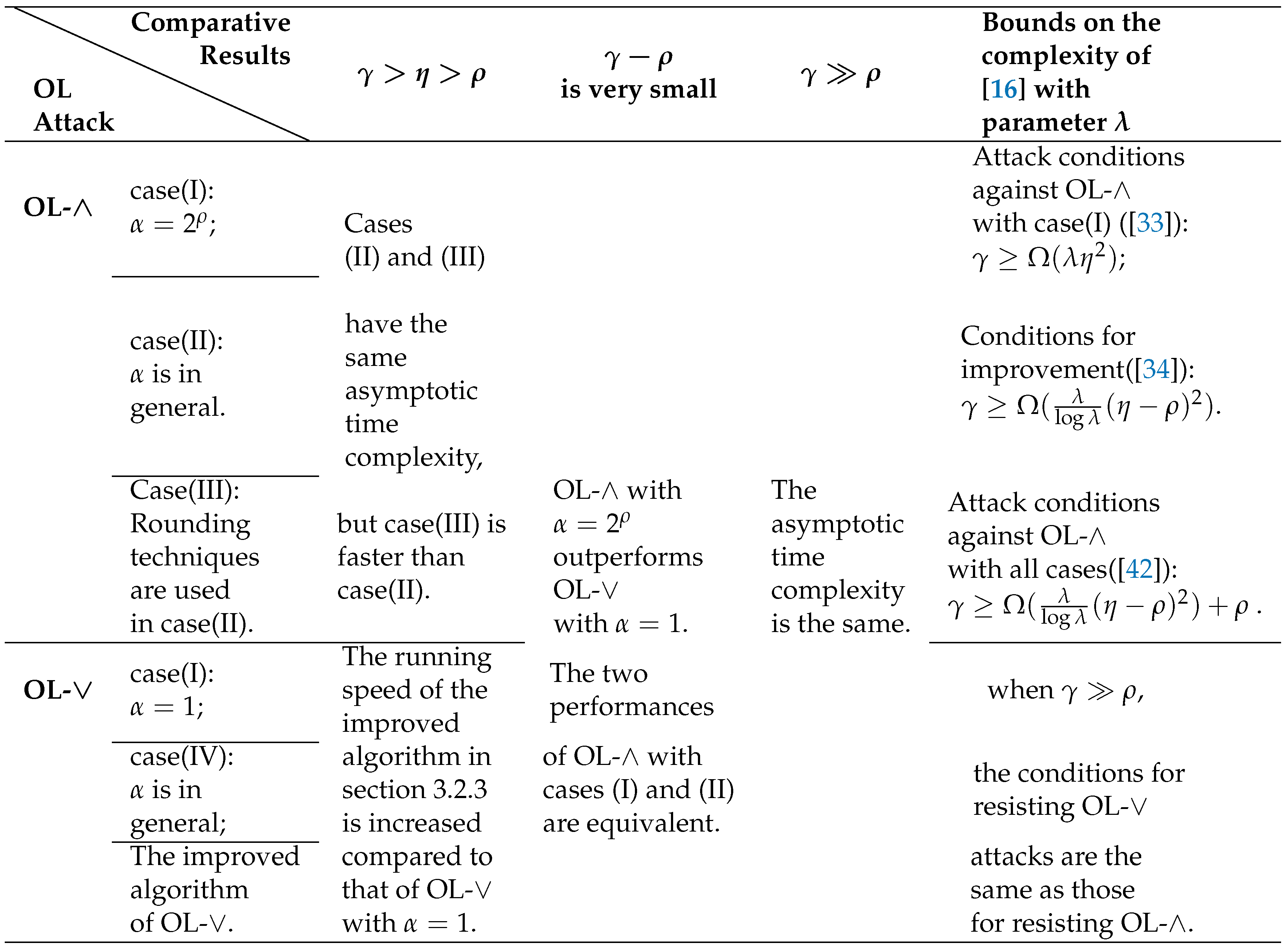

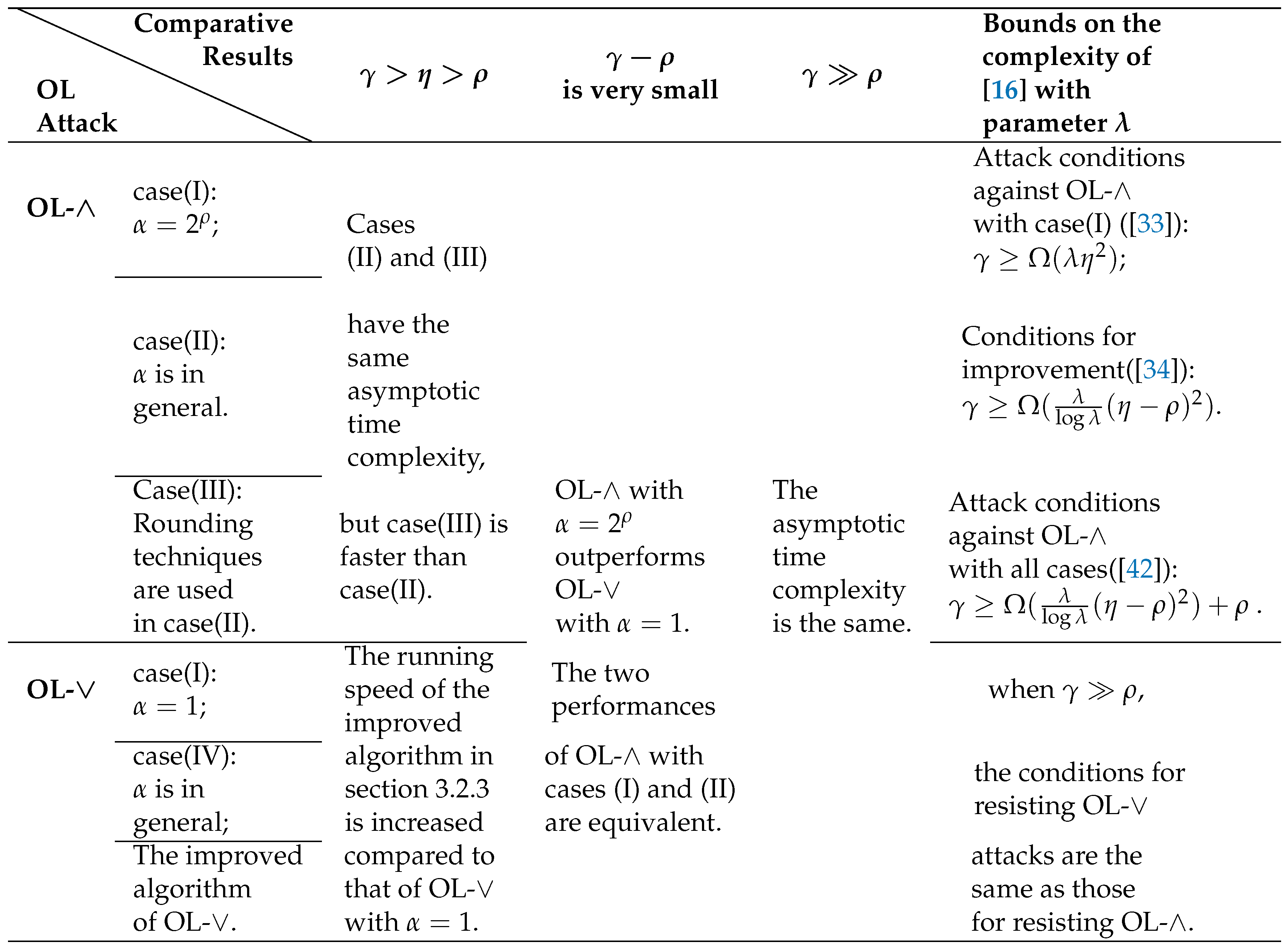

4.2. Comparison of the two types of OL attacks

4.3. Comparison with the MP algorithm

4.4. Brief summary

- ①

- The OL-∧ with : ;

- ②

- The OL-∨ with : .

5. Cryptanalysis of OL attacks in ACD-based FHE Schemes

6. Prospects

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- A. K. Lenstra, H. W. Lenstra, and L. Lovász. Factoring polynomials with rational coefficients. Math. Ann. 1982, 261(4): 515–534.

- J. C. Lagarias. The computational complexity of simultaneous Diophantine approximation problems. SIAM J. Comput. 1985, 14(1): 196–209.

- Claus-Peter Schnorr and M. Euchner. Lattice basis reduction: Improved practical algorithms and solving subset sum problems. Math. Program. 1994, 66: 181–199.

- D. E. Knuth. The art of computer programming, seminumerical algorithms. Software: Practice and Experience, 1982, 12(9): 883–884.

- N. Howgrave-Graham. Approximate integer common divisors. Cryptography and Lattices. Springer Berlin Heidelberg, 2001: 51–66.

- P. Q. Nguyen and Jacques Stern. The Two Faces of Lattices in Cryptology. In J. Silverman (ed.), Cryptography and Lattices, Springer LNCS 2146, 2001: 146–180.

- Avrim Blum, Adam Kalai and Hal Wasserman. Noise-tolerant learning, the parity problem, and the statistical query model. Journal of the ACM, 2003, 50(4): 506–519.

- Claus-Peter Schnorr. Lattice reduction by random sampling and birthday methods. In STACS 2003, 20th Annual Symposium on Theoretical Aspects of Computer Science, Berlin, Germany, February 27–March 1, Proceedings, 2003: 145–156.

- Phong Q. Nguyen and Damien Stehlé. LLL on the Average. In Florian Hess, Sebastian Pauli and Michael E. Pohst (eds.), ANTS-VII, Springer LNCS 4076, 2006: 238–256.

- V. Lyubashevsky. The Parity Problem in the Presence of Noise, Decoding Random Linear Codes, and the Subset Sum Problem. APPROX-RANDOM 2005, Springer LNCS 3624, 2005: 378–389.

- C. Gentry, C. Peikert, V. Vaikuntanathan. Trapdoors for hard lattices and new cryptographic constructions. In Proceedings of the fortieth annual ACM symposium on Theory of computing. ACM, 2008: 197–206.

- N. Gama and P. Q. Nguyen. Predicting lattice reduction. In Advances in Cryptology-EUROCRYPT 2008, 27th Annual International Conference on the Theory and Applications of Cryptographic Techniques, Istanbul, Turkey, April 13–17, 2008. Proceedings, 2008: 31–51.

- C. Gentry. A Fully Homomorphic Encryption Scheme. PhD thesis, The department of computer science, Stanford University, Stanford, CA, USA, 2009.

- P. Q. Nguyen, B. Vallée. The LLL algorithm: survey and applications. Springer Publishing Company, Incorporated, 2009.

- C. Gentry, Toward basing fully homomorphic encryption on worst-case hardness, in: T. Rabin (ed.), Advances in Cryptology-CRYPTO 2010, Lecture Notes in Comput. Sci. Springer, Berlin, Heidelberg, 2010, 6223: 116–137.

- M. Van Dijk, C. Gentry, S. Halevi, V. Vaikuntanathan, Fully homomorphic encryption over the integers, in: H. Gilbert (ed.), Advances in Cryptology—EUROCRYPT 2010, Lecture Notes in Comput. Sci. Springer, Berlin, Heidelberg, 2010, 6110: 24–43.

- G. Hanrot, X. Pujol, and D. Stehlé. Terminating BKZ. IACR Cryptology ePrint Archive, 2011: 198.

- H. Cohn, N. Heninger. Approximate common divisors via lattices. CoRR, abs/1108.2714, 2011.

- J. S. Coron, A. Mandal, D. Naccache, M. Tibouchi, Fully homomorphic encryption over the integers with shorter public keys, in: P. Rogaway (ed.), Advances in Cryptology-CRYPTO 2011, Lecture Notes in Comput. Sci, Springer, Berlin, Heidelberg, 2011, 6841: 487–504.

- A. Novocin, D. Stehlé, and G. Villard. An LLL-reduction algorithm with quasi-linear time complexity: extended abstract. In Proceedings of the 43rd ACM Symposium on Theory of Computing, 2011: 403–412.

- S. D. Galbraith, Mathematics of Public Key Cryptography. Cambridge University Press, 2012.

- Y. Ramaiah, G. Kumari, Efficient public key generation for homomorphic encryption over the integers. Third International Conference on Advances in Communication, Network and Computing. 2012.

- Y. Chen, P. Q. Nguyen. Faster algorithms for approximate common divisors: Breaking fully homomorphic encryption challenges over the integers. Advances in Cryptology-EUROCRYPT 2012. Springer Berlin Heidelberg, 2012: 502–519.

- J. S. Coron, D. Naccache, M. Tibouchi. Public Key Compression and Modulus Switching for Fully Homomorphic Encryption over the Integers. In D. Pointcheval and T. Johansson (eds.), EUROCRYPT’12, Springer LNCS, 2012, 7237: 446–464.

- H. Cohn, N. Heninger. Approximate common divisors via lattices. In proceedings of ANTS X, vol. 1 of The Open Book Series, 2013: 271–293.

- J. H. Cheon, J. S. Coron, J. Kim, M. S. Lee, T. Lepoint, M. Tibouchi, and A. Yun. Batch fully homomorphic encryption over the integers. In Proc. of EUROCRYPT, Springer LNCS, 2013, 7881: 315–335.

- Y. Chen. Réduction de réseau et sécurité concrète du chiffrement complètement homomorphe. PhD thesis, Paris 7, June 2013.

- Atsushi Takayasu and Noboru Kunihiro, Better Lattice Constructions for Solving Multivariate Linear Equations Modulo Unknown Divisors, IEICE Transactions 97-A, 2014, 6: 1259–1272.

- J. Hoffstein, J. Pipher, and J. H. Silverman. An Introduction to Mathematical Cryptography. Springer Publishing Company, 2nd edition, 2014.

- J. Ding, C. Tao. A New Algorithm for Solving the General Approximate Common Divisors Problem and Cryptanalysis of the FHE Based on the GACD problem. Cryptology ePrint Archive, Report 2014/042, 2014.

- J. Ding, C. Tao. A New Algorithm for Solving the Approximate Common Divisor Problem and Cryptanalysis of the FHE based on GACD. IACR Cryptol. ePrint Arch, 2014: 42.

- J. S. Coron, T. Lepoint, M. Tibouchi, Scale-Invariant Fully Homomorphic Encryption Over the Integers, in: H. Krawczyk (ed.), Public-Key Cryptography—PKC 2014, Lecture Notes in Comput. Sci. Springer, Berlin, Heidelberg, 2014, 8383: 311–328.

- T. Lepoint. Design and Implementation of Lattice-Based Cryptography. Cryptography and Security [cs.CR]. Ecole Normale Supérieure de Paris-ENS Paris, 2014.

- J. H. Cheon, D. Stehlé. Fully Homomorphic Encryption over the Integers Revisited. In E. Oswald and M. Fischlin (eds.), EUROCRYPT’15, Springer LNCS, 2015, 9056: 513–536.

- K. Nuida, K. Kurosawa. (Batch) Fully Homomorphic Encryption over Integers for Non-Binary Message Spaces. Springer, Berlin, Heidelberg, 2015.

- S. Gebregiyorgis. Algorithms for the Elliptic Curve Discrete Logarithm Problem and the Approximate Common Divisor Problem. PhD thesis, The University of Auckland, Auckland, New Zealand, 2016.

- S. Galbraith, S. Gebregiyorgis, S. Murphy. Algorithms for the approximate common divisor problem. LMS Journal of Computation and Mathematics, 2016, 19(A): 58–72.

- Eunkyung Kim and Mehdi Tibouchi. FHE over the integers and modular arithmetic circuits. In Cryptology and Network Security-15th International Conference, CANS 2016, Milan, Italy, November 14-16, 2016, Proceedings, 2016: 435–450.

- D. Benarroch, Z. Brakerski, T. Lepoint. FHE over the Integers: Decomposed and Batched in the Post-Quantum Regime. Springer, Berlin, Heidelberg, 2017.

- J. Dyer, M. Dyer, J. Xu. Order-preserving encryption using approximate integer common divisors. Data Privacy Management, Cryptocurrencies and Blockchain Technology: ESORICS 2017 International Workshops, DPM 2017 and CBT 2017, Oslo, Norway, September 14–15, 2017, Proceedings. Springer International Publishing, 2017: 257–274.

- Xiaoling Yu, Yuntao Wang, Chungen Xu, Tsuyoshi Takagi. Studying the Bounds on Required Samples Numbers for Solving the General Approximate Common Divisors Problem. 2018 5th International Conference on Information Science and Control Engineering.

- J. Xu, S. Sarkar, L. Hu, Revisiting orthogonal lattice attacks on approximate common divisor problems and their applications. Cryptology ePrint Archive, 2018.

- J. H. Cheon, W. Cho, M. Hhan, Algorithms for CRT-variant of approximate greatest common divisor problem. Journal of Mathematical Cryptology, 2020, 14(1): 397–413.

- W. Cho, J. Kim, C. Lee. Extension of simultaneous Diophantine approximation algorithm for partial approximate common divisor variants. IET Information Security, 2021, 15(6): 417–427.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).