1. Introduction

Digital watermarking [2,3,4,5] is the technique of inserting messages into multimedia content for various purposes, including copyright protection, authentication, access control, and broadcast monitoring. Depending on the message used as a watermark and the method of recovering it from the host signal, watermarking techniques can be categorized into two types, namely multibit and zero-bit watermarking. In multibit watermarking, the watermark is extracted as the bit string of the message, whereas in zero-bit watermarking, only the presence of a hidden watermark is checked. The former is termed watermark extraction, and the latter is termed watermark detection. Detection also matters for multibit schemes: an extractor outputs some message even if no watermark is embedded, so checking whether watermark information exists in the host signal is critical to prevent such incorrect extraction.

1.1. Background

Improvements in computer performance and the generation of large amounts of data have encouraged the development of deep learning technology. In deep neural networks (DNNs), training the weight parameters requires considerable computational resources and a large amount of data for the target application. Expert tuning of several hyperparameters is also required to obtain high performance. Therefore, such a DNN model should be regarded as the intellectual property of its owner and should be protected against violations of ownership and copyright. A promising approach is to embed a watermark into a DNN model [6]. Originally, watermarking was used for secretly embedding information in multimedia content. In watermark embedding, some forms of redundancy in the host signal are exploited such that the perceptual quality of the original content is not degraded. A similar technique is used for DNN watermarking, where the watermark should be embedded without considerably degrading DNN model performance.

A DNN model consists of several network layers with a large number of weight parameters, so several degrees of freedom exist in the choice of weights. The DNN watermarking technique is based on a slight modification of weight parameters sampled from many candidates. DNN watermarking is classified into white box and black box techniques based on the access granted to the watermark extractor [7]. In the white box technique, internal parameters that directly correspond to the weights of the DNN model are accessible. In the black box technique, only the final output of the DNN model is observed, and the watermark is extracted by querying the DNN model with a set of trigger inputs.

In the white box technique, the weight parameters can be accessed not only by the model owner but also by attackers, which renders protecting against external perturbations difficult. Typically, an attacker steals a DNN model and modifies its parameters or structure to fool watermark detection or cause extraction failure of hidden messages. The primary constraints for the attacker are that the modification of the model should not require more resources than training the DNN model from scratch, and the performance of the attacked model should not decrease considerably.

An example of perturbation is model pruning, in which redundant neurons are pruned without compromising the performance of the DNN model. Originally, pruning was intended to remove less important weight parameters, whose contribution to the loss is small, from the DNN model [8]. Attackers can prune some weight parameters to remove the watermark signal. Therefore, robustness against pruning attacks is an important requirement for the white box technique, which ensures that the watermarked parameters are relevant to the original task. In [9], the watermark does not disappear even after a pruning attack that prunes 65% of the parameters. Li et al. [10] achieved robustness against 60% pruning using the spread transform dither modulation watermarking technique. Zhao et al. [11] embedded watermarks during the pruning process. Tondi et al. [12] proposed a white box multibit watermarking algorithm that adopted the spread spectrum approach [4] to spread watermarks over several weight parameters in a DNN model. Prior to training, the amplitude of such parameters is controlled to be sufficiently large to survive retraining and other modifications.

From the perspective of a communication channel, pruning can be regarded as an erasure channel between the watermark transmitter and receiver. To prevent the erasure of symbols, the watermark information in [1] is encoded using a binary constant weight code (CWC) [13,14], which ensures robustness against weight-level pruning attacks. In this encoding method, symbol “0” is stable even if the corresponding weight parameter is pruned because a symbol is regarded as “0” when its weight is pruned or has a small absolute value. By biasing the numbers of symbols “1” and “0” in the codeword, high robustness can be achieved against pruning attacks. However, in the experiments of [1], the watermark was embedded only in the fully connected (FC) layer of a fine-tuning model. To adapt the method to any DNN model, its performance must be evaluated quantitatively when a watermark is embedded in a convolution layer. Furthermore, although the watermark detection approach has been discussed, a statistical model of the watermarked weight parameters is yet to be theoretically analyzed.

1.2. Our Contributions

In this study, we extend the embedding region of the conventional method [1] to any layer of the DNN model. A convolutional neural network consists of multiple types of layers, including convolution, pooling, dropout, and batch normalization. The suitability of these layer types for embedding watermarks was evaluated in terms of the transparency and secrecy of the hidden messages and the performance of the original task assigned to the DNN model.

We theoretically analyzed the distribution of the weight parameters and designed a detector to check for the presence of hidden watermarks for the DNN watermarking method [1]. The initial weight parameters of the DNN model were assumed to be random sequences following a uniform distribution, and their distributions were assumed to be approximately equivalent, even after model training. Furthermore, the distribution of the pretrained CNN models was approximately Gaussian. However, the distribution of the weight parameters selected for embedding the watermark was biased according to the CWC codeword because the embedding process was performed during DNN model training. In the proposed method, this bias is used to determine whether the selected weight parameters carry a CWC codeword or a random sequence.

The contributions of this study are summarized as follows:

In the DNN model, a suitable layer for embedding the watermark is quantitatively evaluated among multiple layers. Because the number of parameters for convolutional operations is considerably larger than for other operations, the secrecy of the choice of weight parameters can be controlled. Even if these weight parameters are modified by embedding the watermark at the initial setup and are fixed during the training phase, local minima whose model performance is close to other local minima can be determined.

Under the assumption that the weight parameters are uniformly distributed or Gaussian, if the watermark is encoded by CWC, the statistical bias of the weight parameters extracted from the watermarked and nonwatermarked DNN models is formulated in the analysis. Therefore, a simple threshold calculated from the statistical distribution can be used to determine the presence of a hidden message.

To protect against overwriting attacks, we introduced a nonfungible token (NFT) in the watermark. This token enables us to check the history of the hidden message; the tokenId of the NFT is encoded in the CWC codeword and embedded as a watermark.

1.3. Organization

The remainder of this paper is organized as follows. In Section 2, we provide an overview of DNN watermarking and its threats. In Section 3, we review the conventional method using the CWC. The proposed method is described in detail in Section 4, and the experimental results are presented in Section 5. Finally, Section 6 concludes the paper and highlights directions for future research.

2. Preliminaries

DNN watermarking is a method of embedding a digital watermark into a DNN model to safeguard intellectual property rights and deter unauthorized use or distribution. This section reviews DNN watermarking strategies and possible attack scenarios.

2.1. DNN Watermarking

Various watermarking techniques have been devised to protect multimedia content, such as audio, still images, video, and text. A watermark signal is inserted into the host signal selected from the multimedia content using a secret key. In the embedding process, the redundancy of the host signal is exploited without compromising perceptual quality. This technique can be extended to DNN models. During the training phase, the weight parameters are optimized to minimize a loss function that expresses the difference between the predicted class labels and the ground truth labels. Because of the large number of weight parameters in a DNN model, many degrees of freedom exist during parameter tuning in the training phase. This property enables inserting a watermark without compromising DNN model performance.

Watermarking techniques should control the tradeoff among the requirements of capacity, robustness, and fidelity [6]. For the DNN watermark, fidelity refers to the capability of the watermarked DNN model to accomplish its task.

In [9,15], a binary sequence of watermark information was embedded into the weight parameters of the convolution layer of a DNN model. The weight parameters were updated during training such that their product with a secret matrix approached the watermark, in parallel with the training of the model. However, such watermarks can be easily modified by overwriting [16].

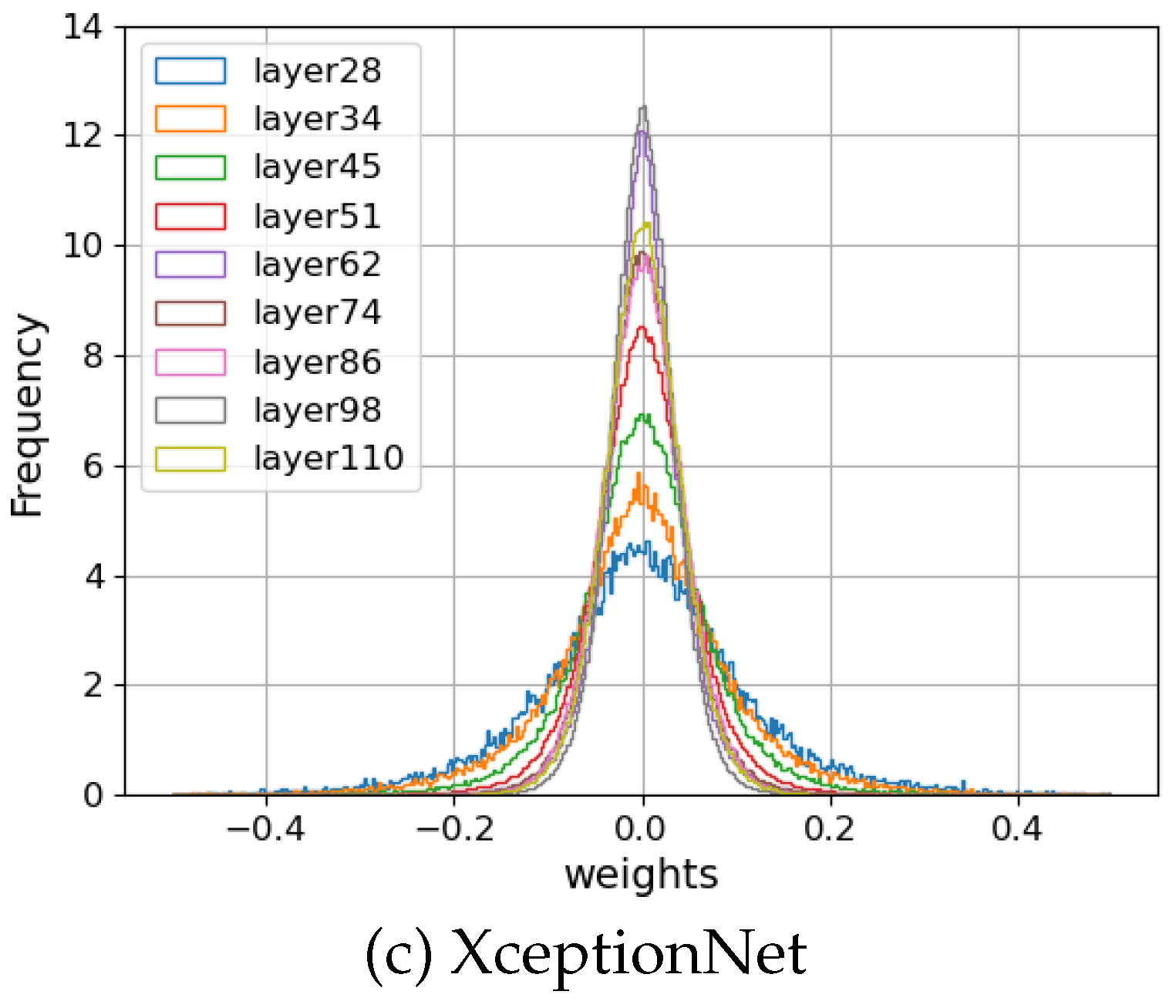

Generally, the weight parameters of the DNN model are initialized before the training process and refined to reach a local minimum after a series of epochs. With an increased number of parameters, a DNN model has many local minima with similar performances [17,18]. Based on these characteristics, in the method presented in [19], a watermark is embedded into the initial values of the selected weight parameters, and the selected parameters are not updated during the training phase. As displayed in Figure 1, the watermarking operation moves the initial point in the parameter space according to the secret key. The embedding operation, expressed as a constraint, is applied at the initial assignment of weight parameters to a DNN model, and the weight change at each epoch is corrected by iteratively performing the operation.

2.2. Threats of DNN Watermark

As in multimedia watermarking, robustness against potential attacks is required; that is, it should remain possible to extract or detect a watermark from a perturbed version of the host signal. For multimedia content, attacks include signal processing operations such as adding noise, filtering, and lossy compression. For DNN watermarking, threats such as fine-tuning, network pruning, and watermark overwriting, which remove watermarks while maintaining the performance of the DNN model, should be considered.

2.2.1. Transfer Learning and Fine-Tuning

Generally, training a DNN model is extremely expensive because it requires substantial computational resources and large training datasets. Therefore, DNN models that have already been trained are often reused. To adapt a model pretrained for one task to a new task, the pretrained layers are frozen, and the original FC layers are replaced with new FC layers. The new DNN model is then trained on the new dataset, updating only the trainable layers above the frozen layers.

If a watermark is embedded in an FC layer, then the watermark is completely removed when the fine-tuning model is created because watermarked weight parameters are replaced, and the weight parameters in the unfrozen layers change. Therefore, the watermark must be robust against the retraining of the unfrozen layer during fine-tuning.

2.2.2. Pruning DNN Model

Even if the DNN model is pretrained, considerable computational resources are required to perform the desired task, and removing redundant weight parameters from the DNN model can efficiently reduce the memory space and computation time [20]. Several heuristic pruning methods have been developed to identify unimportant components in DNN models and retrain pruned models to recover model performance. Therefore, to create robust DNN watermarking, considering the effects of pruning and of the changes during retraining is critical.

Pruning methods are roughly classified into three categories. The first is weight-level pruning, which sets less important weights to zero and does not change the network structure. The other two are channel- and layer-level pruning, which can change the network structure but require large computations to determine efficient network modifications with limited performance loss. Therefore, in this study, we focus on weight-level pruning, in which, after training, the DNN model is compressed by setting parameters whose absolute values are smaller than a threshold to zero. The threshold is set such that the accuracy of the model is not degraded considerably.
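Weight-level pruning as described above can be illustrated with a minimal sketch. The function name `prune_by_magnitude` and the choice of specifying a pruning rate (from which the threshold follows implicitly) are assumptions made for illustration; they are not taken from the cited pruning methods.

```python
def prune_by_magnitude(weights, rate):
    """Zero out the fraction `rate` of weights with the smallest absolute values.

    `rate` is a hypothetical parameter: the implied threshold is the largest
    absolute value among the pruned weights.
    """
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be in [0, 1]")
    n_prune = int(len(weights) * rate)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


example = prune_by_magnitude([0.5, -0.1, 0.3, -0.05], rate=0.5)
```

The network structure is unchanged: the list keeps its length, and only the smallest-magnitude entries become zero.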

2.2.3. Overwriting

A new watermark can be embedded in a previously watermarked model to overwrite the original watermark. In [6], overwriting was defined as an attack in which an additional watermark is inserted into the model to render the original watermark undetectable.

Even if a watermark is detectable, a DNN model may contain two signals. In such cases, disputes over the ownership of the model may occur. From a third-party perspective, determining the originality of a watermark is difficult.

3. DNN Watermarking Robust against Pruning

Yasui et al. [1] investigated a channel-coding approach for protecting DNN watermarking against pruning attacks. The $k$-bit watermark $\mathbf{b}$ is encoded into a binary codeword $\mathbf{c}$ using CWC. A CWC with parameters $\alpha$ and $L$ is a set of binary codewords of length $L$, all with weight $\alpha$; that is, the CWC has a fixed Hamming weight. Therefore, a codeword $\mathbf{c} = (c_0, c_1, \ldots, c_{L-1})$, $c_i \in \{0, 1\}$, of the CWC satisfies the condition that

$$\sum_{i=0}^{L-1} c_i = \alpha,$$

where $\alpha$ denotes a fixed constant. Because of its simplicity, Schalkwijk's algorithm [13] is used in [1], which does not restrict the use of other algorithms [14,21,22,23].
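To make the encoding step concrete, the following is a minimal sketch of enumerative coding into a constant weight code, in the spirit of Schalkwijk's algorithm. The function names, the lexicographic ordering of codewords, and the big-endian reading of the message bits are assumptions for illustration; the exact conventions used in [1] are not given in this section.

```python
from math import comb


def cwc_encode(bits, length, weight):
    """Map a list of message bits to a binary word with exactly `weight` ones."""
    index = int("".join(map(str, bits)), 2) if bits else 0
    if 2 ** len(bits) > comb(length, weight):
        raise ValueError("the code is too small to carry this many bits")
    word, ones_left = [], weight
    for pos in range(length):
        remaining = length - pos - 1
        # Number of valid codewords that place a 0 at this position.
        zero_count = comb(remaining, ones_left)
        if index < zero_count:
            word.append(0)
        else:
            word.append(1)
            index -= zero_count
            ones_left -= 1
    return word


def cwc_decode(word, n_bits):
    """Invert cwc_encode: recover the message bits from a codeword."""
    index, ones_left = 0, sum(word)
    for pos, symbol in enumerate(word):
        if symbol == 1:
            index += comb(len(word) - pos - 1, ones_left)
            ones_left -= 1
    return [int(ch) for ch in format(index, f"0{n_bits}b")] if n_bits else []


codeword = cwc_encode([1, 0, 1], length=5, weight=2)
```

Every codeword produced this way has the same Hamming weight, so the numbers of "1" and "0" symbols are fixed in advance regardless of the message.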

The weights corresponding to symbols "1" are made larger in absolute value than a higher threshold, whereas the weights corresponding to symbols "0" are made smaller than a lower threshold. When the watermarked DNN model is pruned, the latter weight parameters are pruned first because of their small values, which does not affect the judgment of symbol "0" in the codeword.

3.1. Embedding

At the initialization of the DNN model, $L$ weight parameters $\mathbf{w} = (w_0, w_1, \ldots, w_{L-1})$ were selected from $N$ candidates according to a secret key. Let $T_0$ and $T_1$ be the lower and higher thresholds, respectively. Subsequently, an encoded watermark $\mathbf{c}$ was embedded in $\mathbf{w}$ under the following constraints:

If $c_i = 1$, then $|w_i| \ge T_1$; otherwise, $|w_i| \le T_0$, where $T_0$ and $T_1$ are thresholds satisfying $0 < T_0 < T_1$.

During the training process of the DNN model, the weight parameters were iteratively updated to converge to a local minimum. In the proposed method, the changes in weights w selected for embedding c were controlled only by the restrictions during the training process.
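The constraint-based embedding can be sketched as follows. This is an illustrative assumption rather than the exact operation of [1]: the helper names `select_positions` and `embed`, the use of Python's `random` module for key-based selection, and the rule of clipping a violating weight to the nearest threshold while keeping its sign are all hypothetical choices.

```python
import random


def select_positions(n_candidates, length, key):
    """Pick `length` distinct positions out of `n_candidates` from a secret key."""
    rng = random.Random(key)
    return rng.sample(range(n_candidates), length)


def embed(weights, positions, codeword, t0, t1):
    """Enforce |w| >= t1 for symbol 1 and |w| <= t0 for symbol 0 (assumed clipping)."""
    marked = list(weights)
    for pos, symbol in zip(positions, codeword):
        w = marked[pos]
        sign = -1.0 if w < 0 else 1.0
        if symbol == 1 and abs(w) < t1:
            marked[pos] = sign * t1   # raise the magnitude to the higher threshold
        elif symbol == 0 and abs(w) > t0:
            marked[pos] = sign * t0   # lower the magnitude to the lower threshold
    return marked


marked = embed([0.9, -0.05, 0.3, -0.6], [0, 1, 2, 3], [1, 1, 0, 0], t0=0.1, t1=0.5)
```

In a training loop, the same function would be reapplied to the selected weights after each update so that the constraints continue to hold.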

First, a $k$-bit watermark $\mathbf{b}$ was encoded into the codeword $\mathbf{c}$. Here, parameters $\alpha$ and $L$ should satisfy the following condition:

$$2^k \le \binom{L}{\alpha}$$

During the embedding operation, weight parameters $\mathbf{w}$ selected from the DNN model were modified into $\mathbf{w}'$ using the two thresholds, namely $T_0$ and $T_1$.

where

3.2. Extraction

The distribution of the selected weights to be embedded is expected to be the same as the distribution of all candidates (Gaussian or uniform distribution). When embedding a watermark, the change in the distribution depends on thresholds $T_0$ and $T_1$ as well as the length $L$ of the encoded watermark.

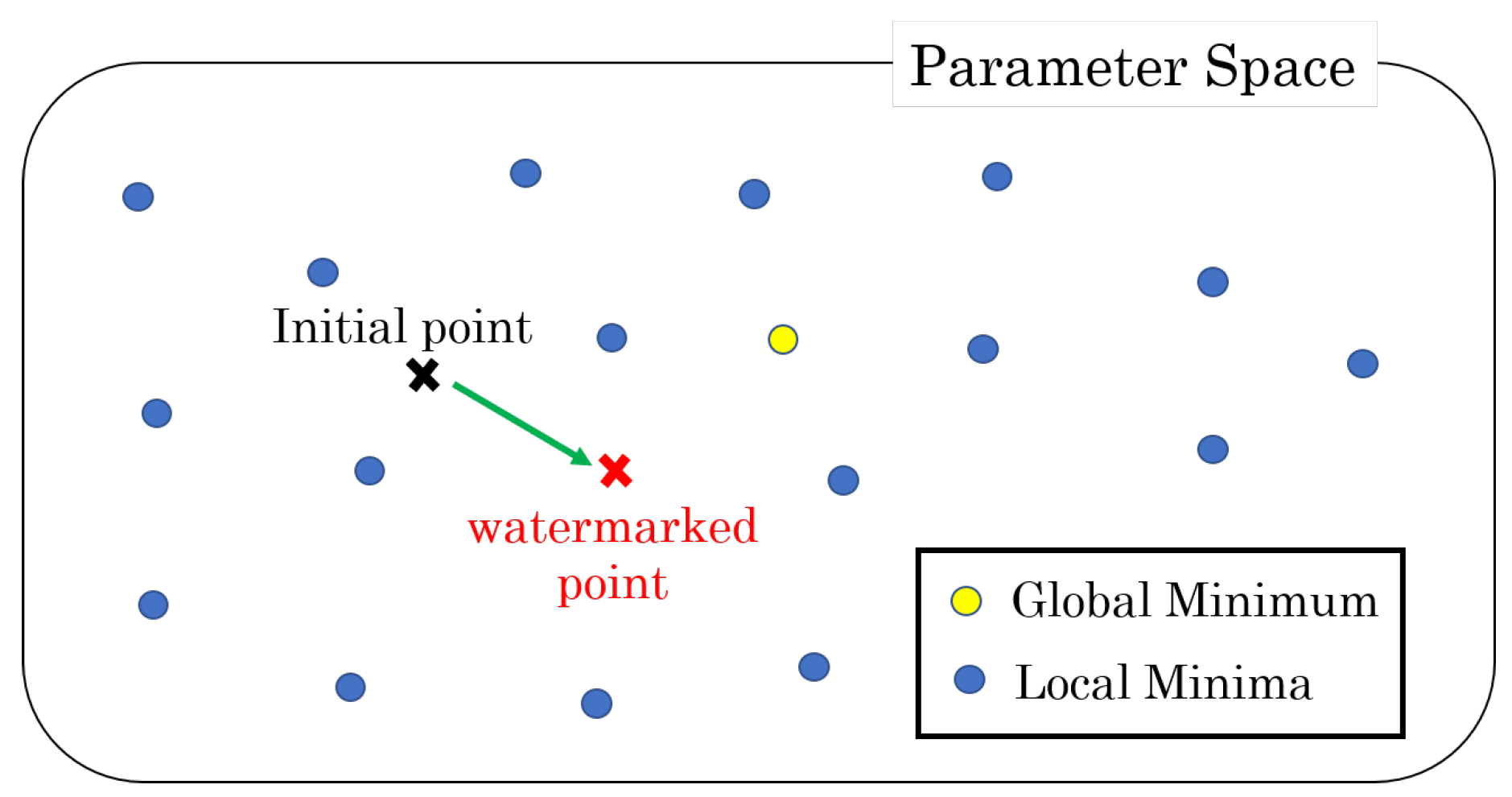

3.3. Design of Detector

If a watermark is embedded in the weight parameters

selected from the DNN model based on the secret key, then top

values and the remaining values follow various distributions. The top

values follow a uniform distribution in the range

because the weight parameters are modified based on the threshold value

.

Figure 2a displays the distribution of values when a watermark is embedded in the weight parameters, where

denotes a uniform distribution in the range

. However, weight parameters, except for the top

follow a uniform distribution in the range

because they are modified based on

. If the watermark is not embedded in the weight parameters, then it follows the original distribution, that is, a uniform distribution in the range

, as displayed in

Figure 2b.

A watermark detector was designed by focusing on the difference in the distribution of the weight parameters with and without the watermark. The distribution when the watermark was embedded in the weight parameters is displayed in

Figure 2a. Therefore, the average value of the top

weight parameters was

, whereas that of the

weight parameters, except for the top

, was

. The distribution when no watermark was embedded is displayed in

Figure 2b. The average value of the weight parameter is

. The proposed detector discriminates differences in the distribution of the weight parameter

extracted from the target DNN model by using the variation in the mean value presented in the previous section as an indicator. The variation index was calculated using the mean square error (MSE) as follows:

where

If the watermark is embedded in the weight parameters, the value of

is smaller; if not, then the value of

is larger.

3.4. Recovery of Watermark

First, the weight parameters were selected from the same positions of the DNN model, denoted by $\mathbf{w}' = (w'_0, w'_1, \ldots, w'_{L-1})$. Subsequently, the $\alpha$-th largest element $\bar{w}$ is determined from the absolute values of $\mathbf{w}'$, and codeword $\mathbf{c}'$ is constructed as follows:

$$c'_i = \begin{cases} 1 & \text{if } |w'_i| \ge \bar{w} \\ 0 & \text{otherwise} \end{cases}$$

where $0 \le i \le L-1$. Finally, watermark $\mathbf{b}'$ is reconstructed from the codeword $\mathbf{c}'$.

In this operation, the top-$\alpha$ symbols in $\mathbf{w}'$ are regarded as "1", and the others are "0". Even if the $L-\alpha$ symbols whose absolute values are smaller than those of the top-$\alpha$ symbols are pruned, the codeword can be correctly reconstructed from the weight parameters $\mathbf{w}'$ in the pruned DNN model. When the pruning rate $R$ satisfies the condition

$$R < \frac{L-\alpha}{L},$$

no statistical errors occur in the aforementioned extraction method. However, because the $L$ weight parameters $\mathbf{w}$ are sampled from $N$ candidates in a DNN model to embed the watermark, this condition does not coincide exactly with the robustness against a pruning attack at rate $R$.
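The recovery rule, in which the symbols with the largest absolute values are read as "1" and all others as "0", can be sketched as below. The function name `recover_codeword` and its parameter `weight` (the fixed Hamming weight of the code) are hypothetical names for illustration.

```python
def recover_codeword(selected_weights, weight):
    """Read the `weight` largest-magnitude positions as 1 and the rest as 0."""
    order = sorted(
        range(len(selected_weights)),
        key=lambda i: abs(selected_weights[i]),
        reverse=True,
    )
    codeword = [0] * len(selected_weights)
    for i in order[:weight]:
        codeword[i] = 1
    return codeword


# Watermarked weights carrying the codeword [1, 1, 0, 0].
before = recover_codeword([0.9, -0.5, 0.1, -0.1], weight=2)
# The same weights after the two smallest values were pruned to zero.
after = recover_codeword([0.9, -0.5, 0.0, 0.0], weight=2)
```

Both calls yield the same codeword, which illustrates why pruning the small weights that carry symbol "0" does not disturb extraction.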

4. Proposed DNN Watermarking Method

4.1. Embedding Layers

In a fine-tuning model, a new CNN-based architecture is designed by replacing the last few network layers, including the FC layers. Because several common architectures have been released as open-source software, the studies in [1,19] considered that a common architecture is not subject to protection and therefore targeted the FC layers for embedding the watermark. However, from the perspective of the intellectual property of the DNN model, the common architectures, and not only the FC layers, should be protected.

4.1.1. Characteristics of Convolution Layers

In contrast to the conventional studies in [1,19], we extended the network layer targeted for watermarking to any layer of the CNN model. Two factors should be considered in the context of imperceptibility: one is the secrecy of the choice of weight parameters, and the other is the effect on model performance for the original task.

Although the FC layers are composed of a large number of weight parameters, some components around the convolution layers, such as batch normalization, pooling, skip connections, and dropout, have a limited number of candidate parameters. By contrast, convolution layers such as Conv1D and Conv2D have sufficient parameters to be watermarked. For example, the number of parameters in VGG16 [24] is listed in Table 1. Therefore, if sufficient candidates exist, a watermark can be embedded in weight parameters selected from any convolution layer based on a secret key.

As reported in [17,18], a DNN model has many local minima that are almost optimal. Even if some parameters are changed slightly, their influence can be compensated by adjusting the remaining parameters. Thus, if the watermark is embedded in the convolution layer and the watermarked weight parameters are frozen during the retraining phase, a corresponding local minimum can be found by adjusting the other weight parameters without sacrificing model performance.

On publication of the pretrained model, a watermark is embedded in the weight parameters selected from the convolutional network according to a secret key, and these parameters are frozen during training. To ensure robustness against retraining attacks, these parameters should be selected from the early stages of the convolutional layer. For fine-tuning, the FC layer should be targeted to embed the watermark because it is a newly designed part of the overall DNN model. The FC layer of the fine-tuning model should be protected because the first common convolution layer should be derived from a model that is publicly available or may be licensed by its owner.

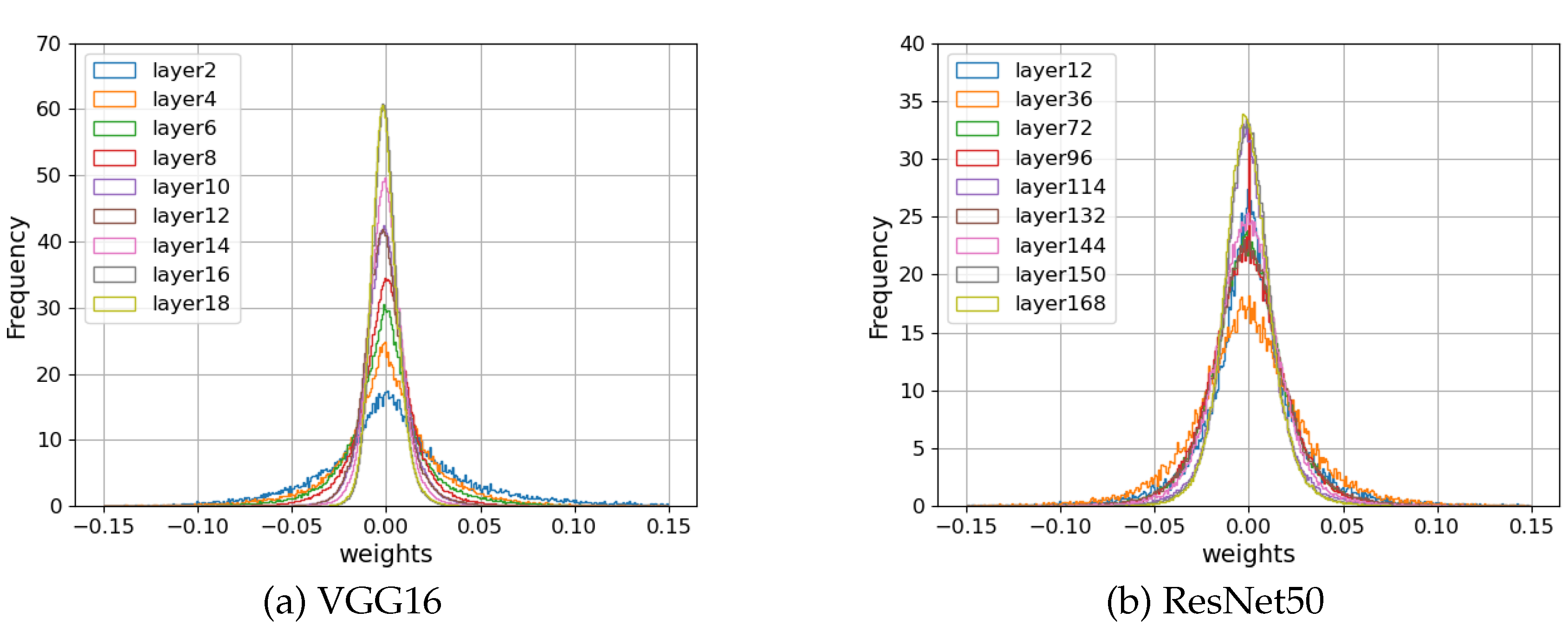

Figure 3 displays the statistical distribution of weight parameters in the three CNN models, VGG16 [24], ResNet50 [25], and XceptionNet [26], trained using the ImageNet dataset [27]. The distribution at each layer is close to Gaussian, and the variance decreases with the layer depth.

Figure 3. Comparison of the histogram of weight parameters in various convolution layers in pretrained CNN models.

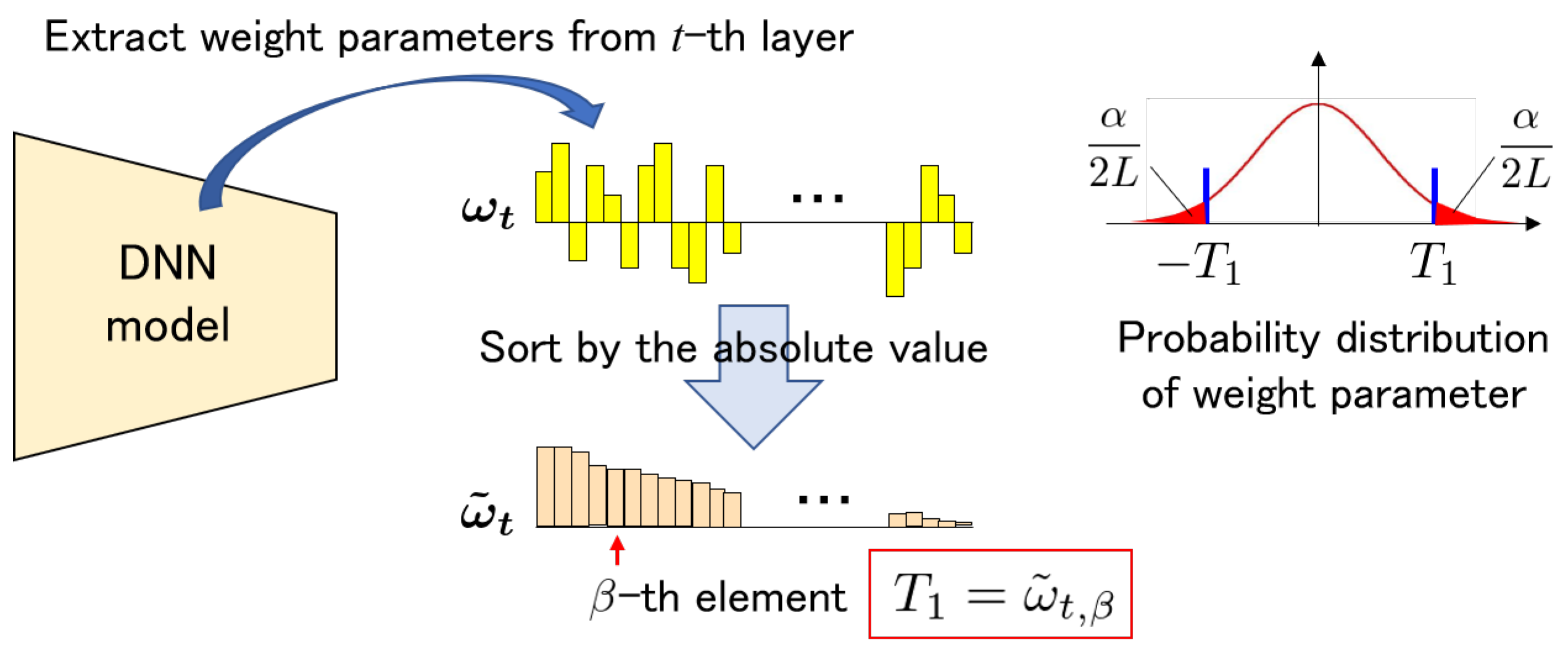

4.1.2. Design of Threshold

Because of the difference in statistical characteristics, the thresholds $T_0$ and $T_1$ are determined when the target convolution layer is selected. According to a secret key, we choose $L$ weight parameters and modify them by an embedding operation such that the absolute value of $\alpha$ elements is greater than $T_1$ and that of the others is less than $T_0$.

Let

be the total number of weight parameters in the

t-th layer. To avoid statistical irregularities, the absolute value of the top

weight parameters is managed to be greater than

, where

satisfies the following equation:

The weight parameters are

. These parameters are sorted by absolute values such that the order of their absolute values is from largest to smallest, as represented by

in the following equation:

Here,

for

. Then, threshold

is determined as follows:

Figure 4 illustrates the procedure for determining the threshold

. The other threshold

is calculated using rate

:

For simplicity,

is used in the following discussion.

From the secrecy perspective, attackers can calculate the threshold by observing the target convolution layer. However, without a secret key, determining the layer to be selected and identifying the elements that satisfy is difficult. Because the number of such weight parameters is , finding among them renders maintaining the model performance difficult. Another approach is to increase the values of selected weight parameters whose values are . Because of the large number of candidates, finding such weights without a secret key is challenging.

4.2. Detector

In the DNN watermarking method [1] explained in the previous section, the bias of the 0 and 1 symbols in a CWC codeword is exploited to check for the presence of a hidden watermark in a DNN model. However, a theoretical analysis is yet to be performed on detecting whether a watermark is embedded in the weight parameters selected from the DNN model based on the secret key. In this study, we theoretically explain the characteristics of the distribution of weight parameters with and without embedded watermarks and propose a detector that can detect the presence of watermarks.

4.2.1. Measurement

According to the secret key, $L$ weight parameters are extracted from the target DNN model. The embedding operations are given by Equation (3). The top $\alpha$ absolute values of the weight parameters are equal to or greater than $T_1$. Furthermore, when the symbol "0" of a CWC codeword is embedded, the weight parameters are modified to be less than or equal to $T_0$ in absolute value, and the number of such symbols is $L-\alpha$. If the watermark is embedded in the selected $L$ weight parameters, then their absolute values are not expected to lie in the range $(T_0, T_1)$. Therefore, we propose a novel measurement method for detecting watermarks.

For detection, we used the same secret key to select $L$ weight parameters from the specified convolution layer. Here, thresholds $T_1$ and $T_0$ are calculated from the selected weight parameters by observing only their distribution, given parameter $\alpha$ of the CWC codeword and the rate that relates the two thresholds. Specifically, $T_1$ is determined by the weight parameter with the $\alpha$-th largest absolute value, and $T_0$ is calculated using Equation (12).

The following figure illustrates how the watermark is detected using the proposed method.

If the watermark is not embedded, then the sorted weight parameters

should satisfy the following conditions:

During the embedding process, for

,

is maintained at less than

. Therefore,

should be close to 0 if the watermark is to be embedded in a DNN model.
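The idea behind the measurement can be illustrated with a simple stand-in statistic. The sketch below is not the measurement defined in this section; it only assumes, for illustration, that the higher threshold is the magnitude of the `weight`-th largest selected weight, that the lower threshold is a hypothetical `rate` times the higher one, and that the statistic is the fraction of selected weights whose magnitude falls strictly between the two.

```python
def gap_fraction(selected_weights, weight, rate):
    """Fraction of magnitudes lying strictly between the two thresholds."""
    magnitudes = sorted((abs(w) for w in selected_weights), reverse=True)
    t1 = magnitudes[weight - 1]   # magnitude of the weight-th largest element
    t0 = rate * t1                # assumed relation between the thresholds
    in_gap = sum(1 for m in magnitudes if t0 < m < t1)
    return in_gap / len(magnitudes)


# Weights carrying a codeword: nothing lies between the thresholds.
with_mark = gap_fraction([0.9, -0.8, 0.2, -0.1], weight=2, rate=0.5)
# Unconstrained weights: some magnitudes fall inside the gap.
without_mark = gap_fraction([0.9, -0.8, 0.6, -0.5, 0.1, 0.3], weight=2, rate=0.5)
```

A value near zero is consistent with an embedded codeword, whereas a clearly positive value suggests a random sequence; a practical detector would compare the statistic with a threshold derived from the assumed weight distribution.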

4.2.2. Uniform Distribution

The initial weight parameters in a DNN model are assumed to be uniformly distributed in the range , and these absolute values are considered for simplicity.

As displayed in

Figure 2a, if the watermark is embedded, then the probability in the range

should be zero. The theoretical expected value of

can be obtained using the following formula:

-

CWC codeword:

-

Random sequence:

-

If (i.e., , then .

4.2.3. Gaussian Distribution

After training, the weight parameters in the DNN model were expected to follow a Gaussian distribution with a mean of zero and variance . For simplicity, we considered the absolute values.

-

CWC codeword:

-

Random sequence:

If

, then we have the following equation:

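where $\operatorname{erfc}(\cdot)$ denotes the complementary error function defined by the following equation:

$$\operatorname{erfc}(x) = \frac{2}{\sqrt{\pi}} \int_x^{\infty} e^{-t^2}\,dt$$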
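As a numerical aid for the Gaussian case, the complementary error function gives the tail probability of the absolute value of a zero-mean Gaussian variable through the standard identity $P(|X| > t) = \operatorname{erfc}\bigl(t / (\sigma\sqrt{2})\bigr)$. The sketch below uses this identity only; it does not reproduce the expected values derived in this section, and the function names and the symbols `t0`, `t1`, and `sigma` are assumptions for illustration.

```python
from math import erfc, sqrt


def tail_probability(threshold, sigma):
    """P(|X| > threshold) for X ~ N(0, sigma^2)."""
    return erfc(threshold / (sigma * sqrt(2.0)))


def gap_probability(t0, t1, sigma):
    """P(t0 < |X| < t1): chance that an unmarked weight lies between thresholds."""
    return tail_probability(t0, sigma) - tail_probability(t1, sigma)


p_tail = tail_probability(1.0, 1.0)
p_gap = gap_probability(0.5, 1.0, 1.0)
```

For a non-watermarked model with Gaussian weights, `gap_probability` is strictly positive, whereas the embedding constraints leave no selected weight in that interval.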

4.3. Non-Fungible Token

Even if a malicious party attempts to overwrite the watermark, removing the hidden watermark from a watermarked DNN model without the secret key is difficult. When a new watermarked version is created using a different key and watermark, both watermarks therefore remain in the model. A nonfungible token (NFT) is introduced to check the operation history of the watermark.

Since the invention of Bitcoin [28], blockchain has been adopted in various new applications. Bitcoin uses a consensus algorithm called proof of work (PoW) to reach an agreement on transaction data in a decentralized network.

Once the shared data on the blockchain are confirmed by most of the distributed nodes, the stored data become immutable because any change would invalidate all subsequent data. The most popular blockchain platform for NFT schemes is Ethereum [29], which provides a secure environment for running smart contracts. In blockchain-based smart contracts, a Turing-complete scripting language is used to implement complex functionality, and state transitions are replicated through a consensus algorithm to achieve eventual consistency. The applications running on top of smart contracts are based on state-transition mechanisms. The state, including instructions and parameters, is shared by all participants, which guarantees transparency in instruction execution. Furthermore, the transitions between states should be the same across the distributed nodes; therefore, consistency is critical.

The NFT system [30] is a blockchain-based application that relies on smart contracts to ensure order-preserving operations. That is, the order of the contracts in a blockchain-based application is guaranteed once it is confirmed in the state transitions. Thus, the history of the NFT remains unchanged, and ownership is preserved.

A contract address is a unique identifier that comprises a fixed number of alphanumeric characters generated from a pair of public and private keys. To transfer NFTs, the owner sends a transaction that involves smart contracts in the ERC-777 standard (footnote 1). Among the token standards related to NFTs, ERC-721 (footnote 2) introduces an NFT standard in which every NFT has a uint256 variable called tokenId, and the pair of contract address and uint256 tokenId is globally unique. Thus, tokenId can be represented as a 256-bit binary sequence.

Because tokenId can be used as an input to generate special identifications, it can be connected to intellectual multimedia content and DNN models to be protected from unauthorized copying. When a DNN model is combined with an NFT, the corresponding tokenId is embedded as a watermark in our method. If two watermarks are extracted, then the watermark history can be checked by evaluating the NFTs corresponding to the tokenIds.
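The conversion between a tokenId and the 256-bit sequence that serves as the watermark message can be sketched as follows. The function names and the most-significant-bit-first ordering are assumptions for illustration; the bit order used in the experiments is not stated here.

```python
def token_id_to_bits(token_id):
    """Represent a uint256 tokenId as a list of 256 bits, most significant first."""
    if not 0 <= token_id < 2 ** 256:
        raise ValueError("tokenId must fit in an unsigned 256-bit integer")
    return [int(ch) for ch in format(token_id, "0256b")]


def bits_to_token_id(bits):
    """Inverse mapping: rebuild the integer tokenId from its bit list."""
    return int("".join(map(str, bits)), 2)


bits = token_id_to_bits(12345)
restored = bits_to_token_id(bits)
```

The resulting bit list is the message that would be passed to the CWC encoder before embedding, and an extracted bit list maps back to the tokenId to be looked up on the blockchain.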

5. Simulations

We encoded a watermark using CWC and then embedded the code word into DNN models to evaluate its effects on DNN models.

5.1. Experimental Conditions

Because the bit length of the NFT tokenId is 256, the length of the watermark is fixed at $k = 256$ bits in the experiments. Twenty randomly generated binary sequences of length 256 were encoded by the CWC, and the codewords were embedded as watermarks to measure the performance in terms of accuracy and loss. According to [1], the CWC parameters were selected as listed in Table 2. We calculated the threshold $T_1$ according to the method presented in Section 4.1.2 and fixed the other threshold $T_0$ at the embedding operation. At detection, these thresholds were calculated as explained in Section 4.2.1.

Using VGG16 [24], ResNet50 [25], and XceptionNet as pretrained models, we fine-tuned the models with a batch size of 32 by connecting new FC layers to the final convolutional layer, similar to the experiments in [1,19]. The number of nodes in the final convolutional layer is 25088 ($7 \times 7 \times 512$) in VGG16, 100352 ($7 \times 7 \times 2048$) in ResNet50, and 204800 ($10 \times 10 \times 2048$) in XceptionNet. These nodes are connected to new FC layers with 256 nodes. The fine-tuned models were trained using the 17 Category Flower Dataset (footnote 3) provided by the Visual Geometry Group of Oxford University; 62.5% of the images were used as training data, 12.5% as validation data, and 25.0% as test data. We used the stochastic gradient descent optimizer with a learning rate of

and categorical cross-entropy as the loss function in the experiments. Notably, an embedding loss was not used in the proposed method. The baseline performances of the fine-tuned models for these three pretrained models are listed in Table 3.

5.2. Dependency of Embedding Layer

To evaluate the sensitivity of model performance to watermark embedding, we conducted experiments by embedding watermarks into nine individual convolution layers of each model. The selected layers and their numbers of weight parameters are listed in Table 4.

Table 4.

Number of weight parameters involved in each selected layer.

Table 4.

Number of weight parameters involved in each selected layer.

(a) VGG16

| Layer | 2 | 4 | 6 | 8 | 10 | 12 | 14 | 16 | 18 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| #Param. | 36864 | 73728 | 147456 | 294912 | 589824 | 589824 | 1179648 | 2359296 | 2359296 |

(b) ResNet50

| Layer | 12 | 36 | 72 | 96 | 114 | 132 | 144 | 150 | 168 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| #Param. | 36864 | 36864 | 147456 | 147456 | 147456 | 147456 | 131072 | 589824 | 262144 |

(c) XceptionNet

| Layer | 28 | 34 | 45 | 51 | 62 | 74 | 86 | 98 | 110 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| #Param. | 32768 | 65536 | 186368 | 529984 | 529984 | 529984 | 529984 | 529984 | 529984 |
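The VGG16 counts in Table 4(a) are consistent with the weight count of a standard convolution, kernel × kernel × input channels × output channels, with biases excluded. The following Python sketch illustrates this; the channel pairs are assumptions chosen to match the usual 3 × 3 VGG16 layers and the tabulated values, and `conv_weights` is a hypothetical helper rather than part of the proposed method.

```python
def conv_weights(kernel, c_in, c_out):
    """Number of weights (biases excluded) in a kernel x kernel convolution."""
    return kernel * kernel * c_in * c_out


# Assumed (input channels, output channels) pairs for the nine selected
# 3x3 convolution layers of VGG16; they reproduce the row of Table 4(a).
VGG16_CHANNELS = [
    (64, 64), (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
]

VGG16_PARAMS = [conv_weights(3, c_in, c_out) for c_in, c_out in VGG16_CHANNELS]

for (c_in, c_out), n in zip(VGG16_CHANNELS, VGG16_PARAMS):
    print(f"3x3 conv, {c_in:>3} -> {c_out:>3} channels: {n} weights")
```

The ResNet50 and XceptionNet rows mix kernel sizes and layer types, so they are not reproduced here.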

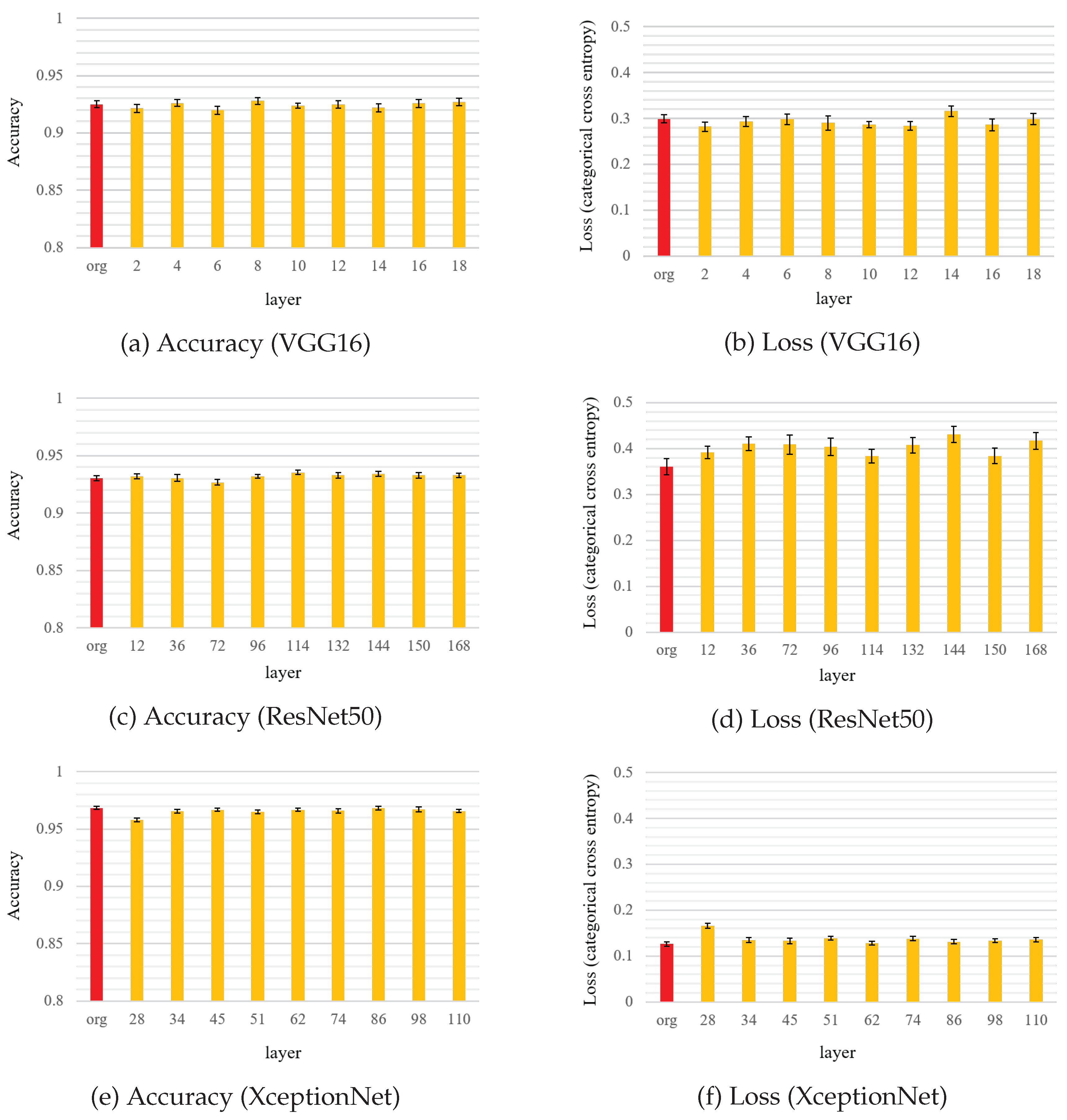

Figure 5 displays the accuracy and loss when the watermark is independently embedded in each of the nine convolution layers of the three DNN models. Compared with the baseline results, no clear difference in performance was observed when the watermark was embedded into the convolution layers of these DNN models. For XceptionNet, however, a slight decrease in accuracy was observed when the early convolutional layers were changed, so embedding a watermark in the first few layers should be avoided. Notably, these results were obtained with a loss function that does not account for the effect of watermark embedding on the training process.

Next, we compared the performance under different CWC encoding parameters; the results are displayed in

Figure 6. The figure reveals that the accuracy was close to that of the original model in all the cases, although the loss was slightly higher in the case of ResNet50. The difference was within the margin of error when the length of the codeword was changed.

Because many candidates exist for the convolution layers, the selection of weight parameters can be kept confidential. If the early convolution layers are frozen during fine-tuning, the embedded watermark can be correctly extracted. As mentioned in

Section 4.1.2, the secrecy of the weight parameters to be watermarked is managed by appropriately selecting threshold

. Although we used

in the experiments, it can be flexibly changed to control the secrecy and robustness of the watermark. With a decrease in

, the robustness against the addition of noise increases. Because the watermark weight parameter corresponding to symbol 0 is less than

, the noise amplitude should be greater than

to cause a bit flip at the symbol position in the codeword. By contrast, the performance of the DNN model may be degraded because most parameters then have small values, an effect similar to that of pruning.

All codewords embedded into the DNN models were correctly extracted even when pruning with a maximum rate of 99% was applied, owing to the CWC encoding at a rate . Even when the pruned models were retrained for a few epochs, the codewords survived because the changes in the weight parameters caused by retraining were considerably smaller than the gap in the experiments.
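The survival of the codewords under pruning and small weight changes can be illustrated with a toy experiment. The following Python sketch is not the authors' implementation: it assumes that symbol 0 and symbol 1 are represented by weight magnitudes below a lower threshold and above an upper threshold, respectively, and that extraction marks the largest-magnitude positions as 1. The names `t0`, `t1`, `alpha`, and `L`, their values, and the uniform perturbation standing in for retraining are all hypothetical.

```python
import numpy as np


def embed(weights, codeword, t0, t1):
    """Force |w| <= t0 at symbol-0 positions and |w| >= t1 at symbol-1 positions."""
    sign = np.where(weights >= 0, 1.0, -1.0)
    mag = np.abs(weights)
    mag = np.where(codeword == 1, np.maximum(mag, t1), np.minimum(mag, t0))
    return sign * mag


def prune(weights, rate):
    """Magnitude pruning: zero the fraction `rate` of weights with the smallest |w|."""
    pruned = weights.copy()
    k = int(round(rate * pruned.size))
    if k > 0:
        pruned[np.argsort(np.abs(pruned))[:k]] = 0.0
    return pruned


def extract(weights, alpha):
    """Mark the alpha largest-magnitude positions as symbol 1, the rest as 0."""
    code = np.zeros(weights.size, dtype=int)
    code[np.argsort(np.abs(weights))[-alpha:]] = 1
    return code


rng = np.random.default_rng(0)
L, alpha = 1000, 10          # hypothetical codeword length and Hamming weight
t0, t1 = 0.02, 0.10          # hypothetical thresholds; the gap is t1 - t0

# Constant-weight codeword: exactly alpha ones among L positions.
codeword = np.zeros(L, dtype=int)
codeword[rng.choice(L, size=alpha, replace=False)] = 1

host = rng.normal(0.0, 0.05, size=L)          # stand-in for selected weights
marked = embed(host, codeword, t0, t1)

# 99% pruning removes only symbol-0 positions because alpha / L = 1%.
pruned = prune(marked, rate=0.99)
recovered = extract(pruned, alpha)

# Perturbation with amplitude below half the gap cannot reorder the magnitudes.
perturbed = pruned + rng.uniform(-0.03, 0.03, size=L)
recovered_after_change = extract(perturbed, alpha)

print("after pruning:     ", np.array_equal(recovered, codeword))
print("after perturbation:", np.array_equal(recovered_after_change, codeword))
```

In this toy setting the codeword survives as long as the number of pruned weights does not exceed `L - alpha` and the perturbation stays below half the gap between the two thresholds.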

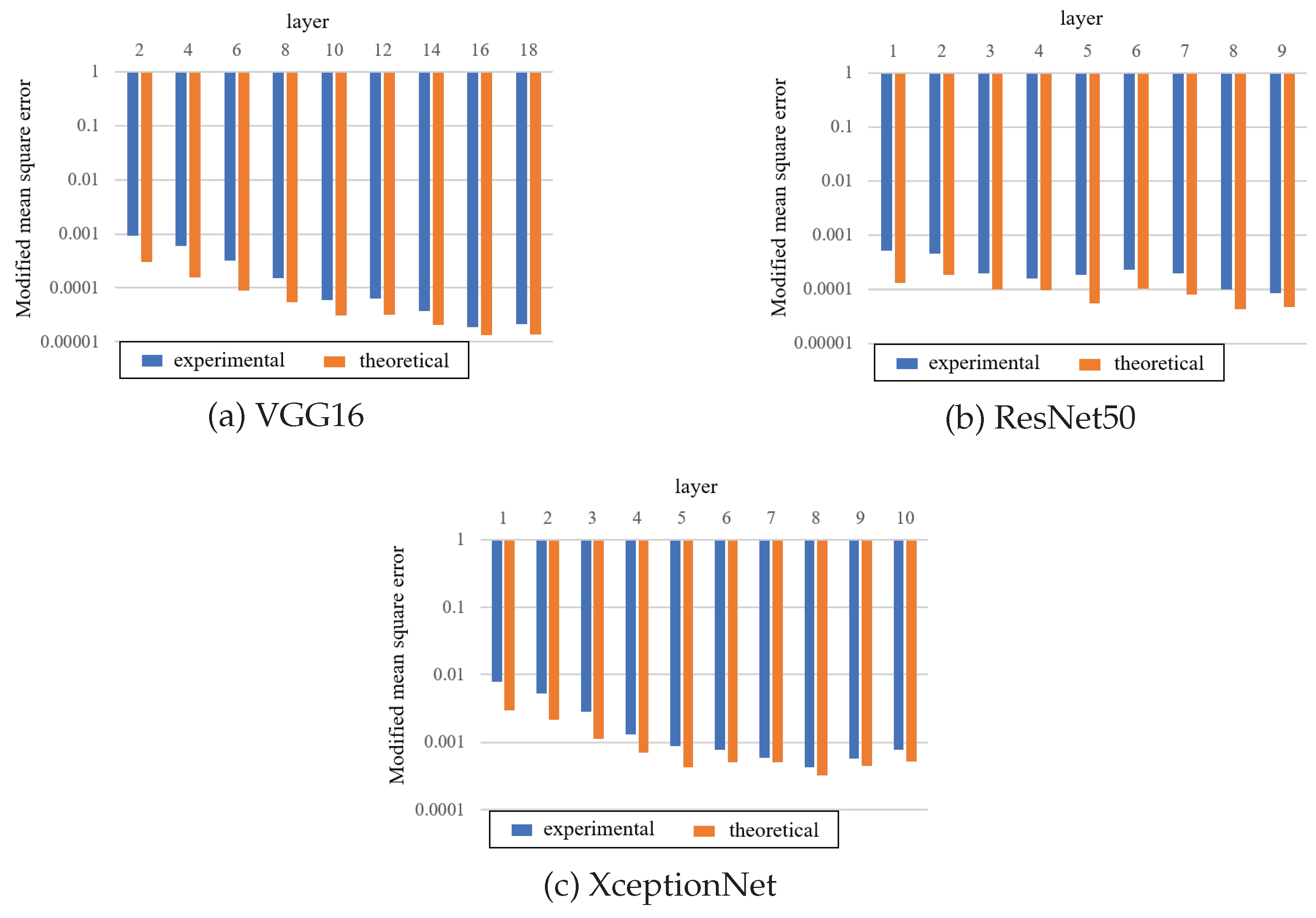

5.3. Detection

The modified

was measured for the fine-tuned model after training. When the watermark was embedded in the model,

in all cases. However, this value varied depending on the layer in which the watermark was embedded.

Figure 7 displays the experimental

and theoretical values

for each layer, where the standard deviation

is calculated from

L selected weight parameters. The experimental values were slightly lower than the theoretical values because the weight parameters were biased away from a Gaussian distribution. However, the difference between the values of the weight parameters with and without the watermark enables the detection of the presence of the watermark according to theoretical values.

5.4. Comparison

We compared the proposed method with conventional white-box DNN watermarking methods [

1,

9,

10,

12,

31,

32,

33]. Considering their robustness against transfer learning and fine-tuning, FC layers should be avoided for embedding watermarks because they are replaced by newly designed ones. With the exception of the method described in [

1], the conventional methods use an embedding loss function to control the distortion of the watermarked model. Therefore, they require more training epochs for the watermarked model to converge on the target task. To ensure robustness against pruning attacks, the proposed method can manage a tolerable pruning rate

by selecting

and

L for CWC.

If an attacker attempts to embed a new watermark, it is likely to overwrite and remove the existing watermark at the affected bit positions. Conventional methods address robustness against such attacks by making the extraction operation complex and the secret key difficult to guess. Even if the original watermark remains after overwriting, originality cannot be ensured because two individual watermarks are contained in the DNN model. In the proposed method, introducing an NFT to generate the watermark allows the time sequence of the watermarks and watermarked models to be checked.

Table 5.

Comparison with conventional methods.

| Method | Embedding Layer | Embedding Loss | Fine-Tuning | Pruning | Overwriting |
| --- | --- | --- | --- | --- | --- |
| Uchida et al. [9] | Conv. | Need | ∘ | | × |
| Li et al. [10] | Conv. | Need | ∘ | | × |
| Wang et al. [31] | MLP/Conv. | Need | ∘ | | × |
| Liu et al. [32] | Conv. | Need | ∘ | | ▵ |
| Wang et al. [33] | Conv. | Need | ∘ | | ▵ |
| Tondi et al. [12] | Conv. | Need | ∘ | | × |
| Yasui et al. [1] | FC | − | ∘ | | ▵ |
| Proposed | Conv. | − | ∘ | | ∘ |

6. Conclusions

In this study, we investigated the sensitivity of the performance of fine-tuned models to watermarks embedded in various convolutional layers, training the models with the watermark-embedded layer frozen. Because the variance of the selected weight parameters differs from layer to layer, the thresholds for embedding the watermark were determined using statistical analysis. Even though the loss function did not consider the effects of embedding the watermark, both the accuracy and loss of the watermarked model converged similarly to those of the original model, and no significant degradation in performance was observed in the experiments.

Assuming a Gaussian or uniform distribution of the weight parameters, we can estimate the theoretical value of the measurement . By analyzing the statistical distribution of selected weight parameters, the presence of a watermark can be detected using the watermark model. From the theoretical value of , an appropriate threshold can be determined to detect a watermark.

Author Contributions

M. Kuribayashi, T. Yasui, and A. Malik jointly completed the manuscript. All the authors have read and approved the final manuscript. Conceptualization and original draft preparation: M. Kuribayashi; Comparative analysis: T. Yasui and A. Malik; Writing, review, and editing: all authors.

Funding

This study was supported by the JSPS KAKENHI (22K19777), JST SICORP (JPMJSC20C3), JST CREST (JPMJCR20D3), and ROIS NII Open Collaborative Research (2022-22S1402), Japan.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Yasui, T.; Tanaka, T.; Malik, A.; Kuribayashi, M. Coded DNN Watermark: Robustness against Pruning Models Using Constant Weight Code. Journal of Imaging 2022, 8.

2. Cox, I.J.; Kilian, J.; Leighton, F.T.; Shamoon, T. Secure spread spectrum watermarking for multimedia. IEEE Trans. Image Processing 1997, 6, 1673–1687.

3. Chen, B.; Wornell, G.W. Quantization index modulation: A class of provably good methods for digital watermarking and information embedding. IEEE Trans. Inform. Theory 2001, 47, 1423–1443.

4. Cox, I.J.; Miller, M.L.; Bloom, J.A. Digital Watermarking; Vol. 53, Morgan Kaufmann Publishers Inc., 2002.

5. Cox, I.J.; Miller, M.L.; Bloom, J.A. Digital Watermarking and Steganography, 2nd ed.; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2008.

6. Li, Y.; Wang, H.; Barni, M. A survey of deep neural network watermarking techniques. Neurocomputing 2021, 461, 171–193.

7. Chen, H.; Rouhani, B.D.; Fan, X.; Kilinc, O.C.; Koushanfar, F. Performance Comparison of Contemporary DNN Watermarking Techniques. CoRR 2018, abs/1811.03713.

8. Liu, K.; Dolan-Gavitt, B.; Garg, S. Fine-Pruning: Defending Against Backdooring Attacks on Deep Neural Networks. Research in Attacks, Intrusions, and Defenses; Bailey, M., Holz, T., Stamatogiannakis, M., Ioannidis, S., Eds.; Springer International Publishing: Cham, 2018; pp. 273–294.

9. Uchida, Y.; Nagai, Y.; Sakazawa, S.; Satoh, S. Embedding watermarks into deep neural networks. Proc. ICMR’17, 2017, pp. 269–277.

10. Li, Y.; Tondi, B.; Barni, M. Spread-Transform Dither Modulation Watermarking of Deep Neural Network. Journal of Information Security and Applications 2021, 63, 103004.

11. Zhao, X.; Yao, Y.; Wu, H.; Zhang, X. Structural Watermarking to Deep Neural Networks via Network Channel Pruning. CoRR 2021, abs/2107.08688.

12. Tondi, B.; Costanzo, A.; Barni, M. Robust DNN Watermarking via Fixed Embedding Weights with Optimized Distribution. CoRR 2022, abs/2208.10973.

13. Schalkwijk, J.P.M. An algorithm for source coding. IEEE Trans. Information Theory 1972, IT-18, 395–399.

14. Brouwer, A.E.; Shearer, J.B.; Sloane, N.J.A.; Smith, W. A new table of constant weight codes. IEEE Trans. Information Theory 1990, 36, 1334–1380.

15. Nagai, Y.; Uchida, Y.; Sakazawa, S.; Satoh, S. Digital watermarking for deep neural networks. International Journal of Multimedia Information Retrieval 2018, 7, 3–16.

16. Wang, T.; Kerschbaum, F. Attacks on digital watermarks for deep neural networks. Proc. ICASSP’19, 2019, pp. 2622–2626.

17. Choromanska, A.; Henaff, M.; Mathieu, M.; Arous, G.B.; LeCun, Y. The loss surfaces of multilayer networks. Artificial Intelligence and Statistics, 2015.

18. Dauphin, Y.N.; Pascanu, R.; Gülçehre, Ç.; Cho, K.; Ganguli, S.; Bengio, Y. Identifying and attacking the saddle point problem in high-dimensional non-convex optimization. Proc. NIPS’14, 2014, pp. 2933–2941.

19. Kuribayashi, M.; Tanaka, T.; Suzuki, S.; Yasui, T.; Funabiki, N. White-box watermarking scheme for fully-connected layers in fine-tuning model. Proc. IH&MMSec’21, 2021, pp. 165–170.

20. Denil, M.; Shakibi, B.; Dinh, L.; Ranzato, M.A.; de Freitas, N. Predicting parameters in deep learning. Advances in Neural Information Processing Systems, 2013, pp. 2148–2156.

21. Nguyen, Q.A.; Gyorfi, L.; Massey, J.L. Constructions of binary constant-weight cyclic codes and cyclically permutable codes. IEEE Trans. Information Theory 1992, 38, 940–949.

22. Bitan, S.; Etzion, T. Constructions for optimal constant weight cyclically permutable codes and difference families. IEEE Trans. Information Theory 1995, 41, 77–87.

23. Etzion, T.; Vardy, A. A new construction for constant weight codes. Proc. ISITA’14, 2014, pp. 338–342.

24. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. Proc. ICLR’15, 2015.

25. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. Proc. CVPR’16, 2016.

26. Chollet, F. Xception: Deep learning with depthwise separable convolutions. Proc. CVPR’17, 2017.

27. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. Proc. CVPR’09, 2009, pp. 248–255.

28. Nakamoto, S. Bitcoin: A Peer-to-Peer Electronic Cash System, 2008.

29. Wood, G. Ethereum: A Secure Decentralised Generalised Transaction Ledger. Ethereum project yellow paper, 2014, Vol. 151, pp. 1–32.

30. Wang, Q.; Li, R.; Wang, Q.; Chen, S. Non-Fungible Token (NFT): Overview, Evaluation, Opportunities and Challenges. CoRR 2021, abs/2105.07447.

31. Wang, J.; Hu, H.; Zhang, X.; Yao, Y. Watermarking in Deep Neural Networks via Error Back-Propagation. IS&T Electronic Imaging, Media Watermarking, Security and Forensics, 2020.

32. Liu, H.; Weng, Z.; Zhu, Y. Watermarking Deep Neural Networks with Greedy Residuals. Proceedings of the 38th International Conference on Machine Learning; Meila, M.; Zhang, T., Eds. PMLR, 2021, Vol. 139, Proceedings of Machine Learning Research, pp. 6978–6988.

33. Wang, T.; Kerschbaum, F. RIGA: Covert and Robust White-Box Watermarking of Deep Neural Networks. Proceedings of the Web Conference 2021; Association for Computing Machinery: New York, NY, USA, 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).