Submitted: 16 May 2023

Posted: 17 May 2023

Abstract

Keywords:

- How do QKE and VQC algorithms compare to classical machine learning methods such as XGBoost, Ridge, Lasso, LightGBM, CatBoost, and MLP regarding accuracy and efficiency on simulated quantum circuits?

- To what extent can randomized search make the performance of quantum algorithms comparable to classical approaches?

- What are the limitations and challenges associated with the current state of quantum machine learning, and how can future research address these challenges to unlock the full potential of quantum computing in machine learning applications?

1. Related Work

2. Methodology

3. Supervised Machine Learning

3.1. Classical Supervised Machine Learning Techniques

- Lasso and Ridge Regression/Classification: Lasso (Least Absolute Shrinkage and Selection Operator) and Ridge Regression are linear regression techniques that incorporate regularization to prevent overfitting and improve model generalization [10,27]. Lasso uses L1 regularization, which tends to produce sparse solutions by driving some coefficients exactly to zero, while Ridge Regression uses L2 regularization, which shrinks coefficients and prevents them from becoming too large. Both of these regression algorithms can also be used for classification tasks.

- Multilayer Perceptron (MLP): MLP is a type of feedforward artificial neural network with multiple layers of neurons, including input, hidden, and output layers [14]. MLPs are capable of modeling complex non-linear relationships and can be trained using backpropagation.

- Support Vector Machines (SVM): SVMs are supervised learning models used for classification and regression tasks [28]. They work by finding the optimal hyperplane that separates the data into different classes, maximizing the margin between the classes.

- Gradient Boosting Machines: Gradient boosting is an ensemble learning method that combines a series of weak learners, typically decision trees, into a strong learner [29]. The weak learners are added to the model iteratively, each one fitted to reduce the loss of the current ensemble. Notable gradient boosting machines for classification tasks include XGBoost [9], CatBoost [13], and LightGBM [12]. These three algorithms introduce various improvements and optimizations to the original gradient boosting framework, such as efficient tree learning algorithms, native handling of categorical features, and reduced memory usage.
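The contrast between L1 and L2 regularization described in the list above can be illustrated with a minimal sketch. It assumes scikit-learn and a synthetic regression problem generated with `make_regression`; the data, the penalty strength `alpha=1.0`, and the variable names are illustrative choices and not settings taken from this study.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge

# Synthetic data (assumption for illustration): 20 features, only 3 informative.
X, y = make_regression(
    n_samples=200, n_features=20, n_informative=3, noise=1.0, random_state=0
)

lasso = Lasso(alpha=1.0).fit(X, y)  # L1 penalty on the coefficients
ridge = Ridge(alpha=1.0).fit(X, y)  # L2 penalty on the coefficients

# Count coefficients that were set exactly to zero by each model.
n_zero_lasso = int(np.sum(lasso.coef_ == 0))
n_zero_ridge = int(np.sum(ridge.coef_ == 0))

print("coefficients set to zero by Lasso:", n_zero_lasso)
print("coefficients set to zero by Ridge:", n_zero_ridge)
```

Lasso is expected to zero out uninformative coefficients, whereas Ridge only shrinks them. For the classification use mentioned above, scikit-learn also provides `RidgeClassifier`.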

3.2. Quantum Machine Learning

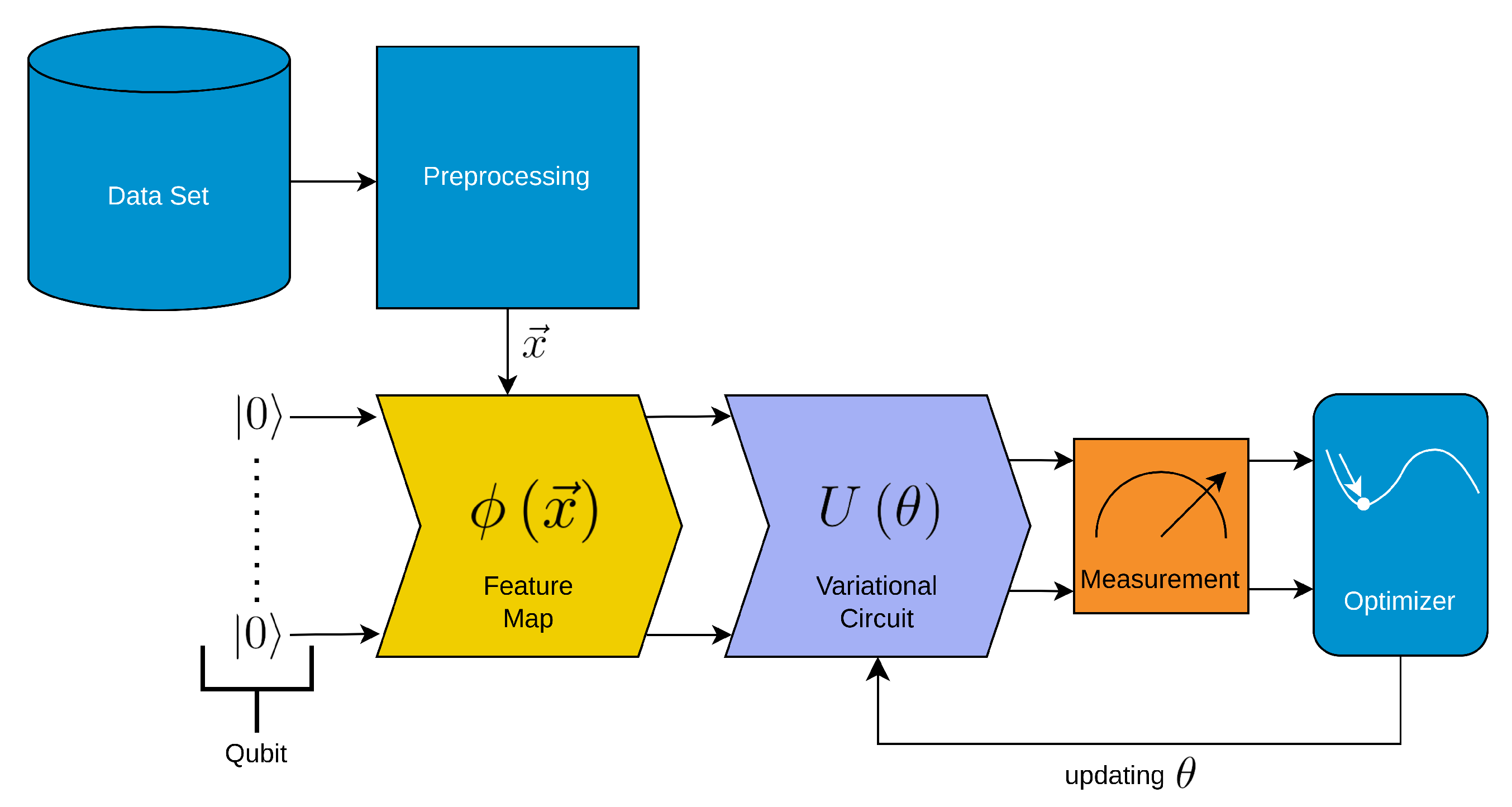

3.2.1. Variational Quantum Classifier (VQC):

- Preprocessing: The data is prepared and preprocessed before being encoded onto qubits.

- Feature Map Encoding (yellow in the figure): The preprocessed data is encoded onto qubits using a feature map.

- Variational Quantum Circuit (Ansatz) (steel-blue in the figure): The encoded data undergoes processing through the variational quantum circuit, also known as the Ansatz, which consists of a series of quantum gates and operations.

- Measurement (orange in the figure): The final state of the qubits is measured repeatedly, yielding estimated probabilities for the possible measurement outcomes, which are then mapped to class labels.

- Parameter Optimization: The variational quantum circuit is optimized by adjusting its parameters, such as the rotation angles of specific quantum gates, to improve the classification outcome.
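The steps above can be sketched end to end on a single simulated qubit. This is a toy NumPy simulation under stated assumptions, not the Qiskit implementation used in the study: the feature map is one RY(x) rotation, the Ansatz is one RY(θ) rotation, the loss is the mean squared error, and the gradient is obtained with the parameter-shift rule. The function names (`ry`, `prob_one`, `train`) and the synthetic data are hypothetical.

```python
import numpy as np

def ry(angle):
    """Matrix of a single-qubit RY rotation."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]])

def prob_one(x, theta):
    """Feature map RY(x), then Ansatz RY(theta), then P(measuring |1>)."""
    state = ry(theta) @ ry(x) @ np.array([1.0, 0.0])
    return state[1] ** 2

def train(xs, ys, theta=0.0, lr=0.5, steps=200):
    """Gradient descent on the mean squared error between P(1) and the label."""
    for _ in range(steps):
        p = np.array([prob_one(x, theta) for x in xs])
        # Parameter-shift rule: exact derivative of P(1) with respect to theta.
        dp = np.array([
            (prob_one(x, theta + np.pi / 2) - prob_one(x, theta - np.pi / 2)) / 2
            for x in xs
        ])
        theta -= lr * np.mean(2 * (p - ys) * dp)
    return theta

# Preprocessing (assumption): one feature already scaled to a small angle range.
xs = np.linspace(-1.2, 1.2, 40)
ys = (xs > 0).astype(float)  # class 1 if the feature is positive

theta = train(xs, ys)
preds = np.array([prob_one(x, theta) > 0.5 for x in xs])
accuracy = float(np.mean(preds == (ys == 1)))
print(f"theta = {theta:.4f}, training accuracy = {accuracy:.2f}")
```

On hardware the probability would be estimated from repeated measurements rather than read from the state vector, and a practical VQC would use several qubits with entangling gates.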

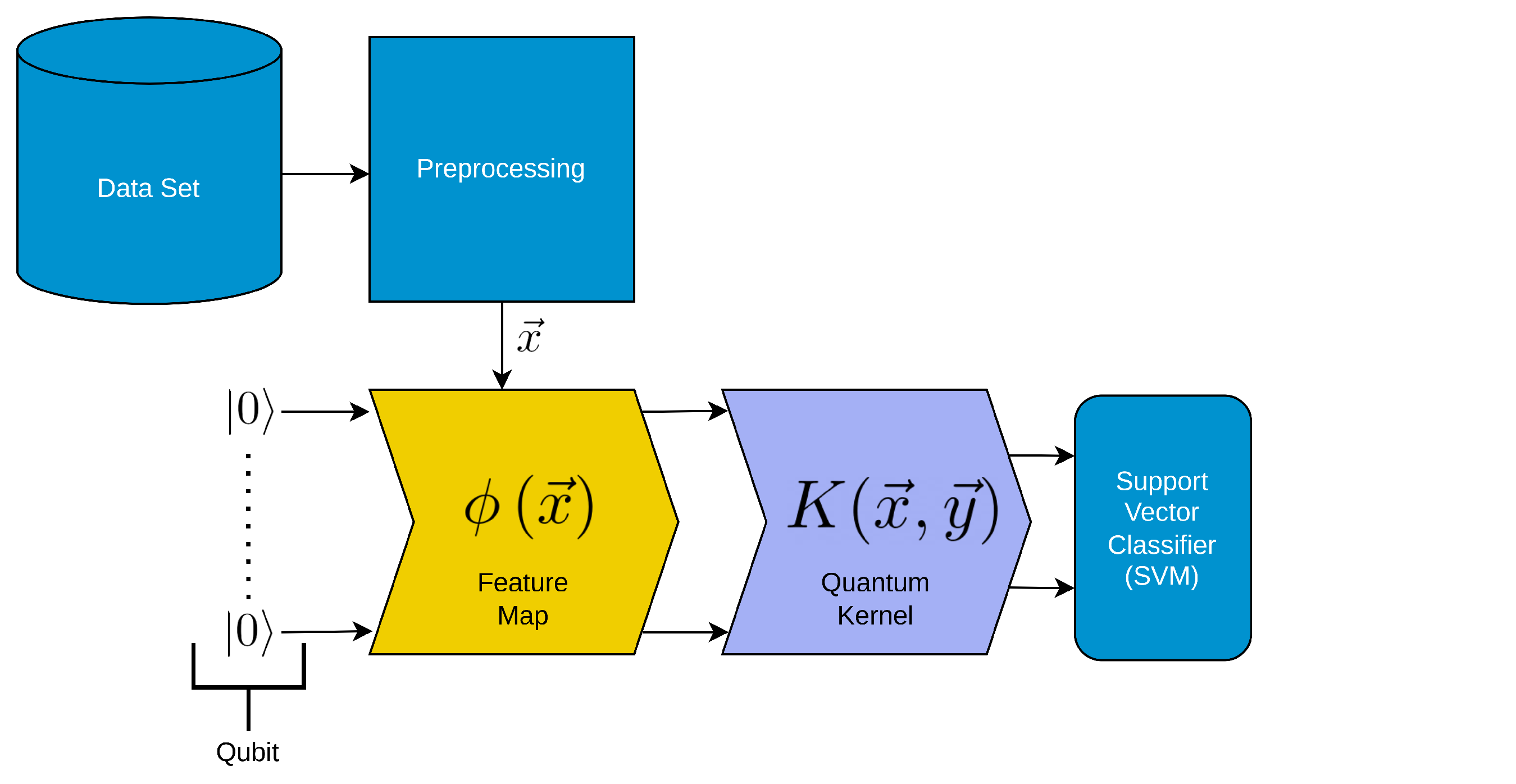

3.2.2. Quantum Kernel Estimator (QKE):

- Data Preprocessing: The input data is preprocessed, which may include tasks such as data cleaning, feature scaling, or feature extraction. This step ensures that the data is in an appropriate format for the following quantum feature maps.

- Feature Map Encoding (yellow in the figure): The preprocessed data is encoded onto qubits using a feature map.

- Kernel Computation (steel-blue in the figure): Instead of evaluating a classical kernel function on the original data, the kernel matrix is precomputed using the quantum computing capabilities: for each pair of data points, the inner product of the two encoded quantum states is estimated on a quantum simulator or quantum device. Each kernel entry captures the similarity between a pair of data points in a high-dimensional feature space.

- SVM Training: The precomputed kernel matrix is then used as input to the SVM algorithm for model training. The SVM aims to find an optimal hyperplane that separates the data points into different classes with the maximum margin.
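The QKE steps above can be sketched with a small state-vector simulation. This is a hedged illustration, not the study's Qiskit setup: it assumes a simple product feature map in which each feature rotates its own qubit by RY(x_i), a kernel defined as the squared overlap of two encoded states, and scikit-learn's `SVC` with `kernel="precomputed"`. The helper names `feature_state` and `quantum_kernel` are hypothetical.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import SVC

def feature_state(x):
    """Encode x as a product state: qubit i is RY(x_i)|0> = [cos(x_i/2), sin(x_i/2)]."""
    state = np.array([1.0])
    for angle in x:
        state = np.kron(state, np.array([np.cos(angle / 2), np.sin(angle / 2)]))
    return state

def quantum_kernel(A, B):
    """Kernel matrix K[i, j] = |<phi(a_i)|phi(b_j)>|^2 (overlap of encoded states)."""
    states_a = np.array([feature_state(a) for a in A])
    states_b = np.array([feature_state(b) for b in B])
    return (states_a @ states_b.T) ** 2

# Data preprocessing: split, then scale each feature to the angle range [0, pi].
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)
scaler = MinMaxScaler(feature_range=(0, np.pi)).fit(X_train)
X_train, X_test = scaler.transform(X_train), scaler.transform(X_test)

# Kernel computation, then SVM training on the precomputed kernel matrix.
K_train = quantum_kernel(X_train, X_train)
K_test = quantum_kernel(X_test, X_train)  # rows: test points, columns: training points
svm = SVC(kernel="precomputed").fit(K_train, y_train)
accuracy = svm.score(K_test, y_test)
print(f"test accuracy: {accuracy:.3f}")
```

A product feature map like this one is easy to simulate classically and serves only to show the data flow; on a quantum device each overlap would be estimated from measurement statistics, and entangling feature maps are typically used.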

3.3. Qiskit Machine Learning

3.4. Accuracy Score for Classification

3.5. Data Sets

- Iris Data Set: A widely known data set consisting of 150 samples of iris flowers, each with four features (sepal length, sepal width, petal length, and petal width) and one of three species labels (Iris Setosa, Iris Versicolor, or Iris Virginica). This data set is included in the Scikit-learn library [15].

- Wine Data Set: A popular data set for wine classification, which consists of 178 samples of wine, each with 13 features (such as alcohol content, color intensity, and hue) and one of three class labels (class 1, class 2, or class 3). This data set is also available in the Scikit-learn library [15].

- Indian Liver Patient Dataset (ILPD): This data set contains 583 records, with 416 liver patient records and 167 non-liver patient records [31]. The data set includes ten variables: age, gender, total bilirubin, direct bilirubin, total proteins, albumin, A/G ratio, SGPT, SGOT, and Alkphos. The primary task is to classify patients into liver or non-liver patient groups.

- Breast Cancer Coimbra Dataset: This data set consists of 10 quantitative predictors and a binary dependent variable, indicating the presence or absence of breast cancer [32]. The predictors are anthropometric data and parameters obtainable from routine blood analysis. Accurate prediction models based on these predictors can potentially serve as a biomarker for breast cancer.

- Teaching Assistant Evaluation Dataset: This data set includes 151 instances of teaching assistant (TA) assignments from the Statistics Department at the University of Wisconsin-Madison, with evaluations of their teaching performance over three regular semesters and two summer semesters [33,34]. The class variable is divided into three roughly equal-sized categories (“low”, “medium”, and “high”). There are six attributes, including whether the TA is a native English speaker, the course instructor, the course, the semester type (summer or regular), and the class size.

- Impedance Spectrum of Breast Tissue Dataset: This data set contains impedance measurements of freshly excised breast tissue at the following frequencies: 15.625, 31.25, 62.5, 125, 250, 500, and 1000 kHz [35,36]. The primary task is to classify samples into either the original six classes or four classes obtained by merging the fibro-adenoma, mastopathy, and glandular classes, whose discrimination is not crucial.
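As a quick check of the two data sets listed above as shipping with Scikit-learn, the following sketch loads Iris and Wine and reports their sizes. It assumes only scikit-learn's `load_iris` and `load_wine` loaders; the remaining data sets come from external repositories and are not loaded here. Note that Scikit-learn encodes the three class labels as 0, 1, and 2.

```python
import numpy as np
from sklearn.datasets import load_iris, load_wine

summary = {}
for name, loader in [("Iris", load_iris), ("Wine", load_wine)]:
    data = loader()
    n_samples, n_features = data.data.shape
    n_classes = len(np.unique(data.target))
    summary[name] = (n_samples, n_features, n_classes)
    print(f"{name}: {n_samples} samples, {n_features} features, {n_classes} classes")
```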

4. Experimental Design

4.1. Artificially Generated Data Sets

4.2. Benchmark Data Sets and Hyperparameter Optimization

5. Results

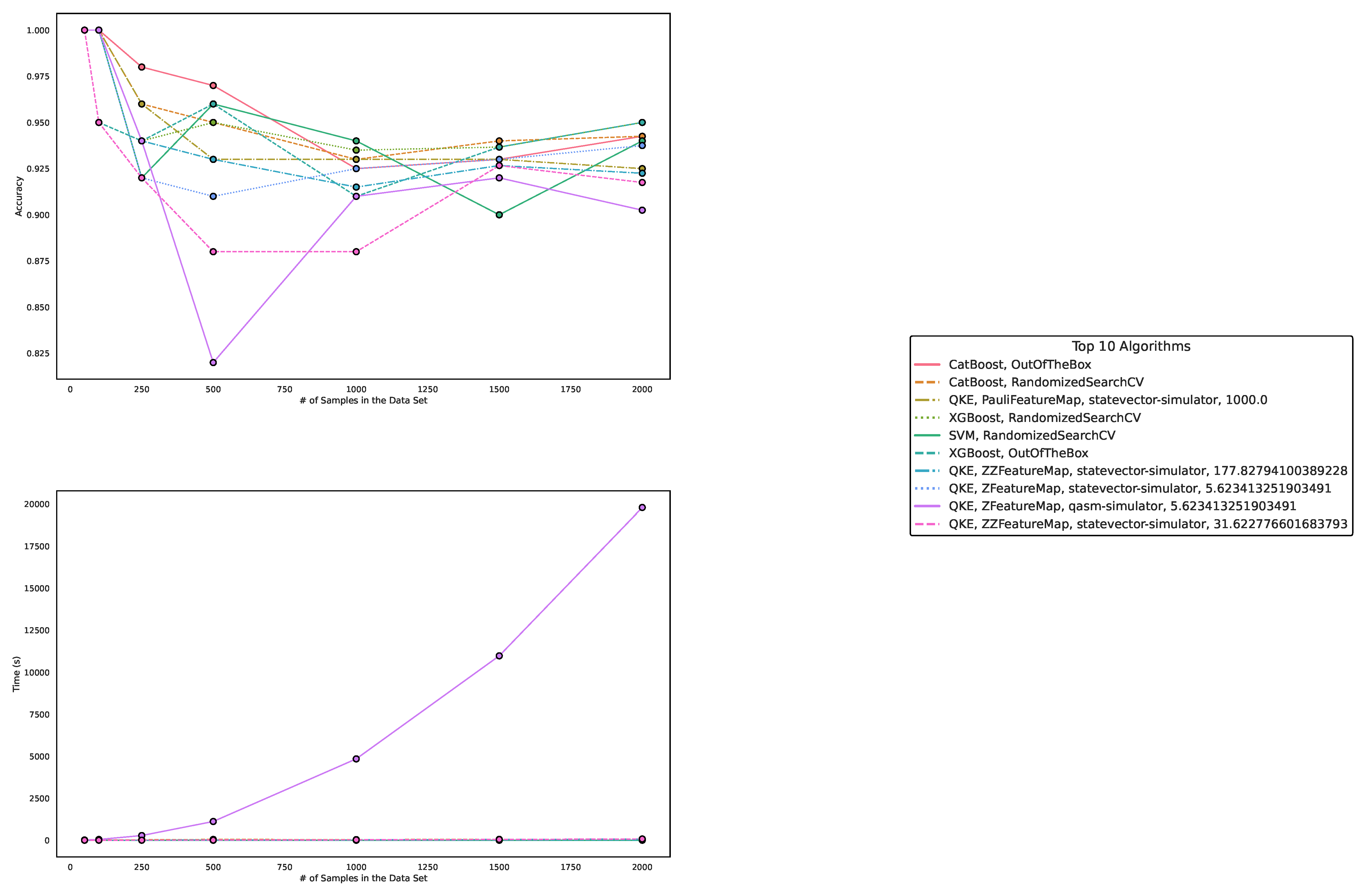

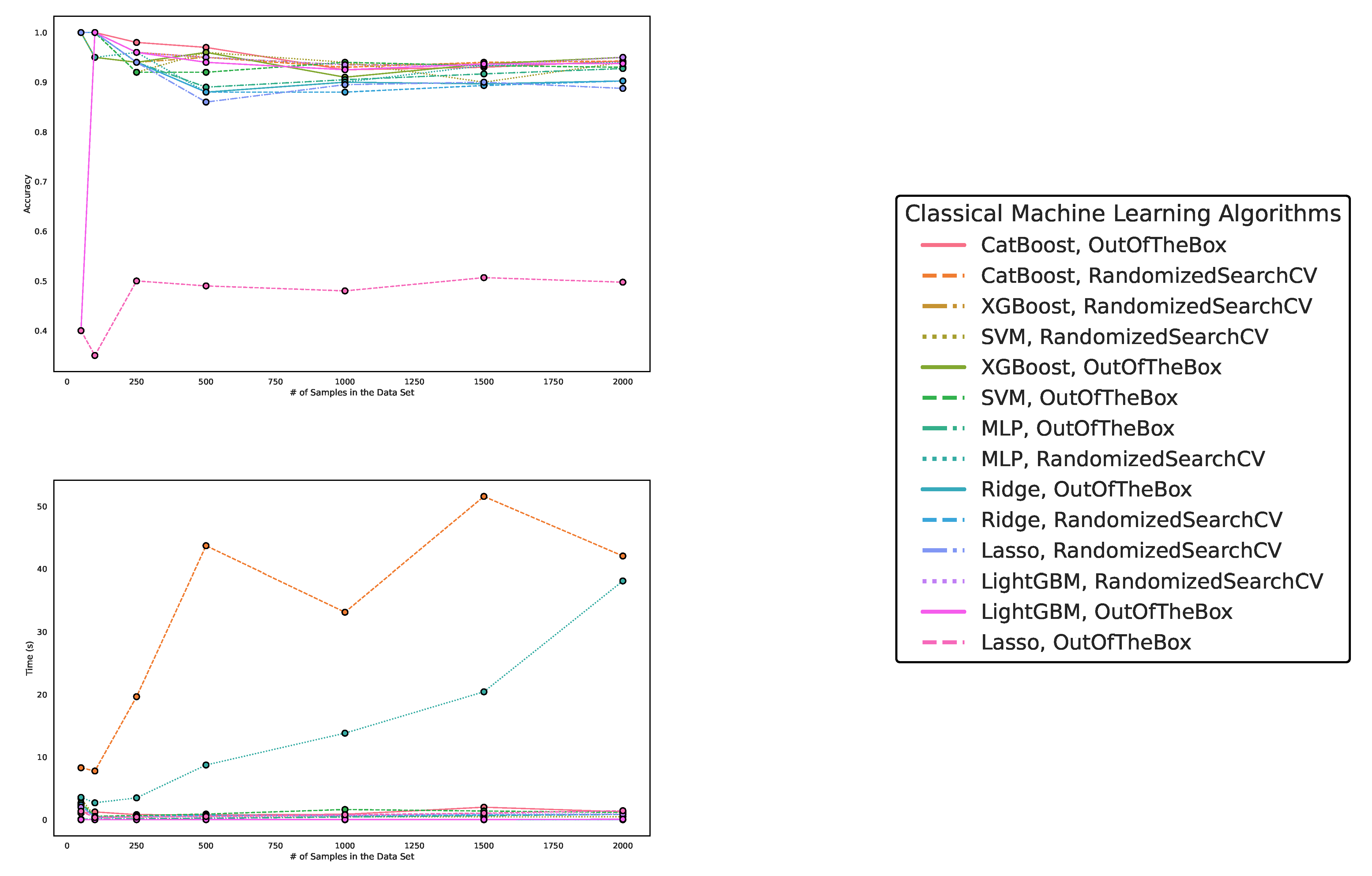

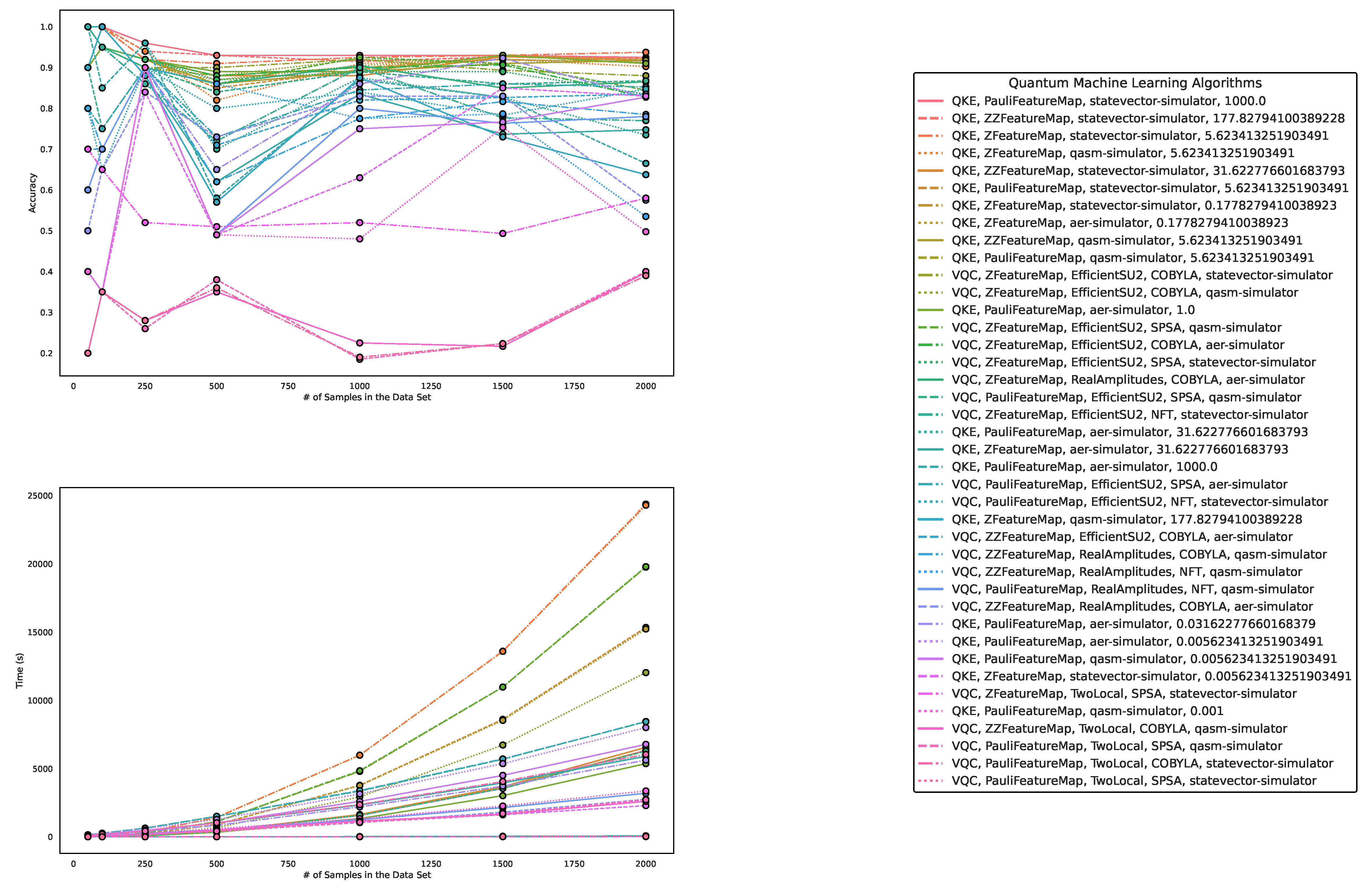

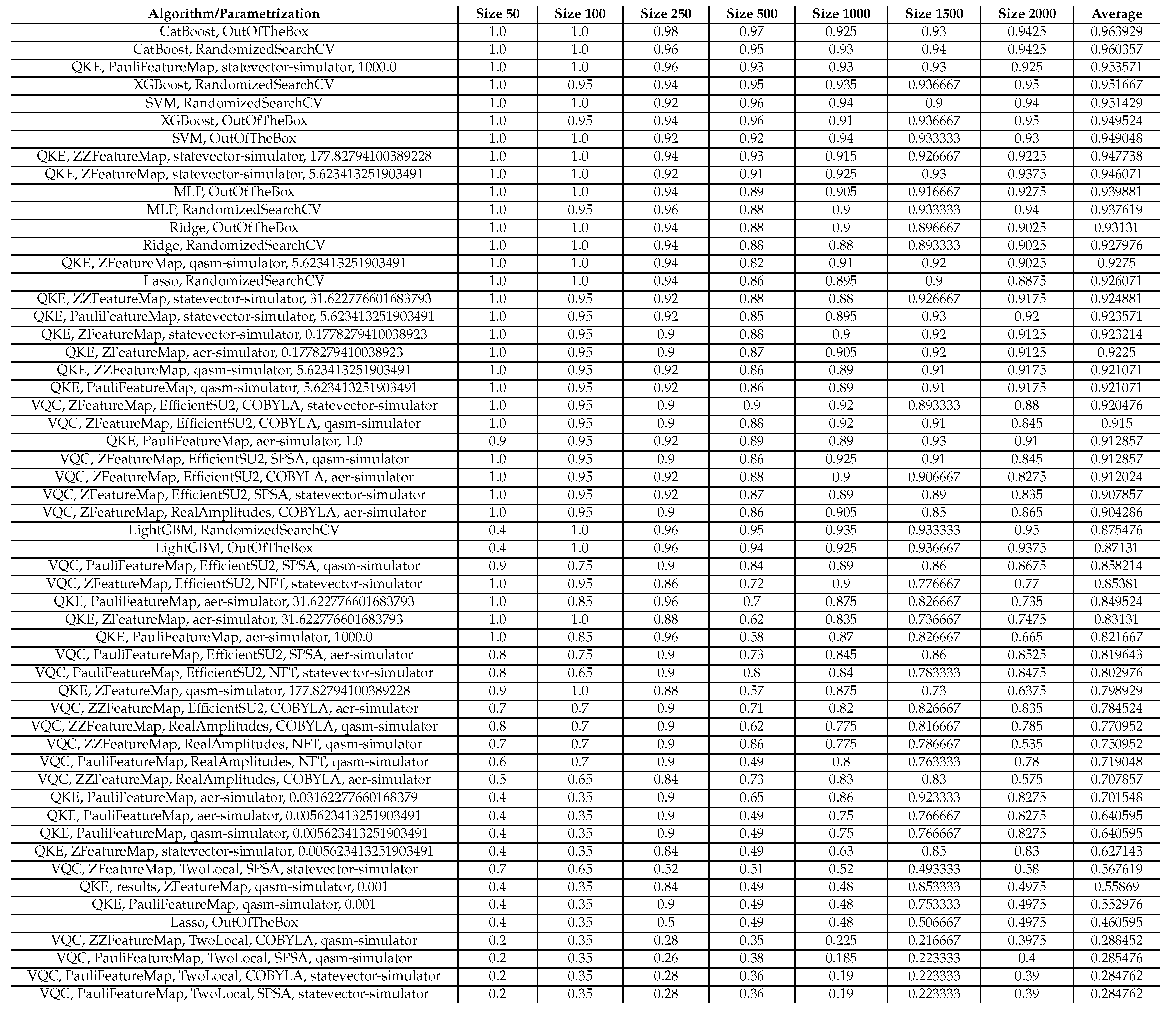

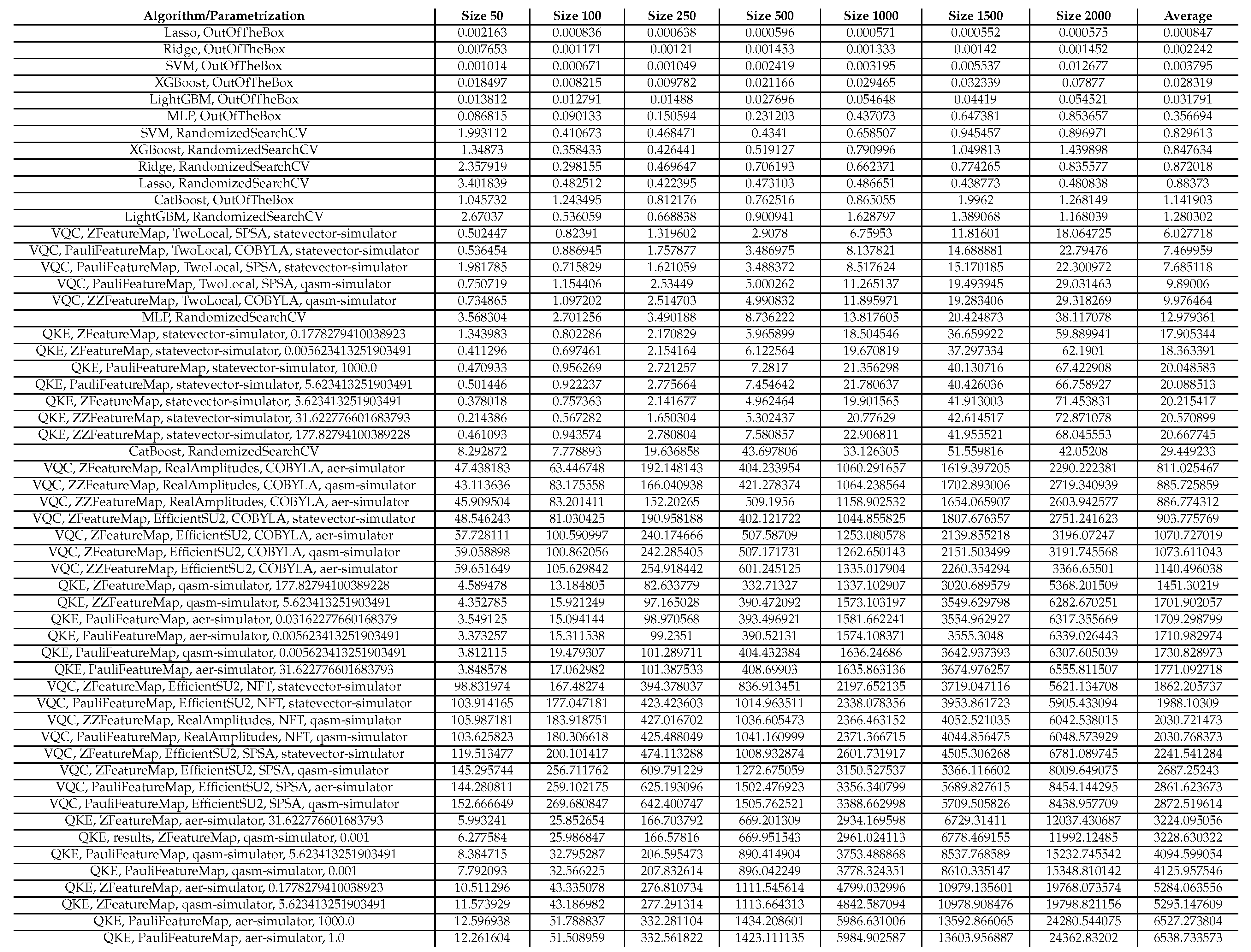

5.1. Performance on Artificially Generated Data Sets

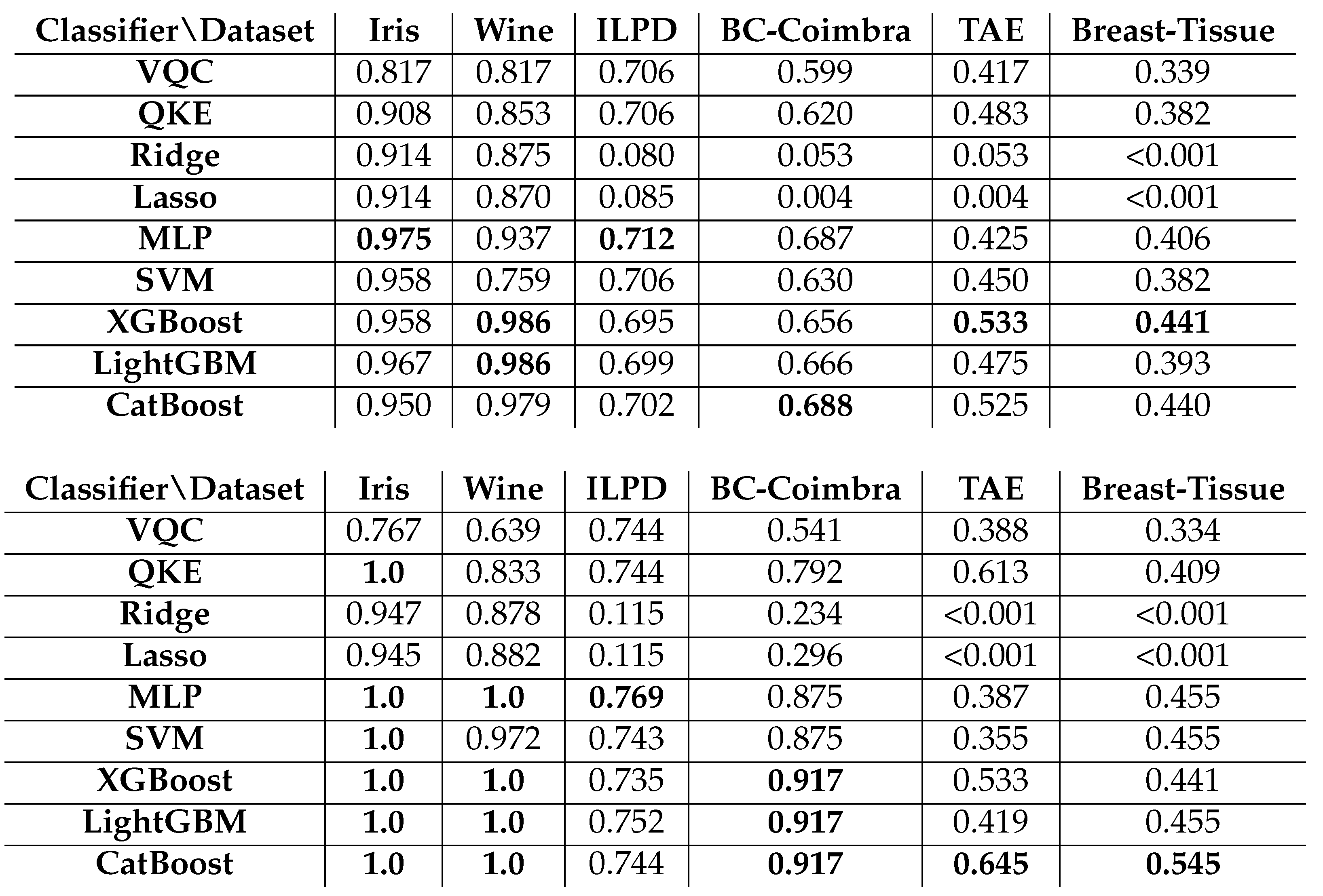

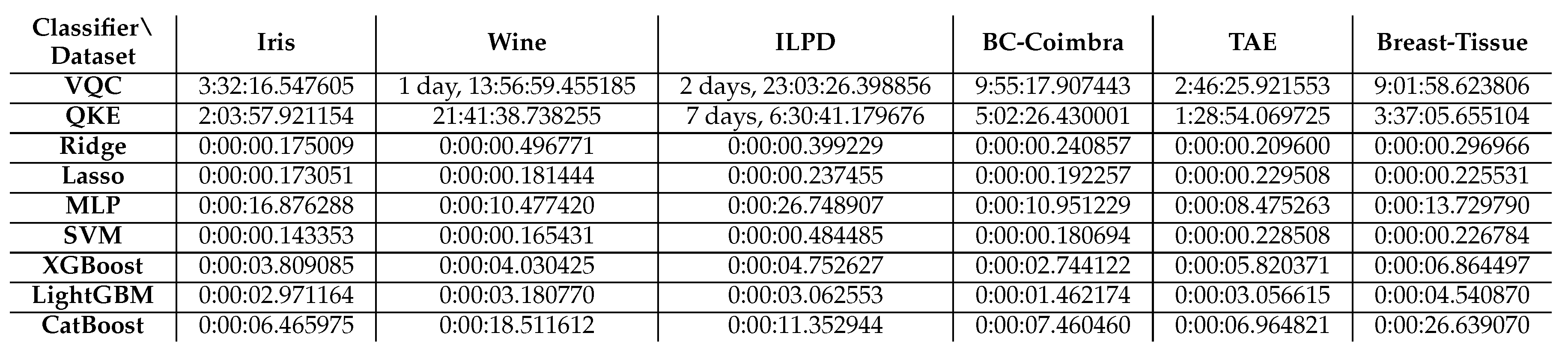

5.2. Results on Benchmark Data Sets

5.3. Comparison and Discussion

6. Conclusions

Acknowledgments

Appendix A. Parametrization

Appendix A.1. Ridge

Appendix A.2. Lasso

Appendix A.3. SVM

Appendix A.4. MLP

Appendix A.5. XGBoost

Appendix A.6. LightGBM

Appendix A.7. CatBoost

Appendix A.8. QKE

Appendix A.9. VQC

References

- Nielsen, M.A.; Chuang, I.L. Quantum Computation and Quantum Information: 10th Anniversary Edition, 10th ed.; Cambridge University Press: USA, 2011.

- Biamonte, J.; Wittek, P.; Pancotti, N.; Rebentrost, P.; Wiebe, N.; Lloyd, S. Quantum machine learning. Nature 2017, 549, 195–202.

- Schuld, M.; Sinayskiy, I.; Petruccione, F. An introduction to quantum machine learning. Contemp. Phys. 2015, 56, 172–185.

- Havlíček, V.; Córcoles, A.D.; Temme, K.; Harrow, A.W.; Kandala, A.; Chow, J.M.; Gambetta, J.M. Supervised learning with quantum-enhanced feature spaces. Nature 2019, 567, 209–212.

- Farhi, E.; Neven, H. Classification with quantum neural networks on near term processors. arXiv 2018, arXiv:1802.06002.

- Kuppusamy, P.; Yaswanth Kumar, N.; Dontireddy, J.; Iwendi, C. Quantum Computing and Quantum Machine Learning Classification – A Survey. 2022 IEEE 4th International Conference on Cybernetics, Cognition and Machine Learning Applications (ICCCMLA), 2022, pp. 200–204.

- Blance, A.; Spannowsky, M. Quantum machine learning for particle physics using a variational quantum classifier. J. High Energy Phys. 2021, 2021, 212.

- Abohashima, Z.; Elhoseny, M.; Houssein, E.H.; Mohamed, W.M. Classification with Quantum Machine Learning: A Survey. arXiv 2020, arXiv:2006.12270.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; ACM: New York, NY, USA, 2016; KDD ’16, pp. 785–794.

- Hoerl, A.E.; Kennard, R.W. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics 1970, 12, 55–67.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodological) 1996, 58, 267–288.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. Proceedings of the 31st International Conference on Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017; NIPS’17, pp. 3149–3157.

- Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased Boosting with Categorical Features. Proceedings of the 32nd International Conference on Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2018; NIPS’18, pp. 6639–6649.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning internal representations by error propagation. Technical report, California Univ San Diego La Jolla Inst for Cognitive Science, 1985.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Raubitzek, S. Quantum_Machine_Learning, 2023.

- Zeguendry, A.; Jarir, Z.; Quafafou, M. Quantum Machine Learning: A Review and Case Studies. Entropy 2023, 25.

- Mitarai, K.; Negoro, M.; Kitagawa, M.; Fujii, K. Quantum circuit learning. Phys. Rev. A 2018, 98, 032309.

- Rebentrost, P.; Mohseni, M.; Lloyd, S. Quantum support vector machine for big data classification. Phys. Rev. Lett. 2014, 113, 130503.

- Liu, D.; Rebentrost, P. Quantum machine learning for quantum anomaly detection. Phys. Rev. A 2019, 100, 042328.

- Broughton, M.; Verdon, G.; McCourt, T.; Martinez, A.J.; Yoo, J.H.; Isakov, S.V.; King, A.D.; Smelyanskiy, V.N.; Neven, H. TensorFlow Quantum: A Software Framework for Quantum Machine Learning. arXiv 2020, arXiv:2003.02989.

- Qiskit contributors. Qiskit: An Open-source Framework for Quantum Computing, 2023.

- Bishop, C.M. Pattern Recognition and Machine Learning (Information Science and Statistics); Springer-Verlag: Berlin, Heidelberg, 2006.

- Murphy, K.P. Machine learning: A probabilistic perspective; MIT Press: Cambridge, MA, USA, 2013.

- Kotsiantis, S.B. Supervised Machine Learning: A Review of Classification Techniques. Proceedings of the 2007 Conference on Emerging Artificial Intelligence Applications in Computer Engineering: Real Word AI Systems with Applications in EHealth, HCI, Information Retrieval and Pervasive Technologies; IOS Press: NLD, 2007; pp. 3–24.

- Liu, L.; Özsu, M.T. (Eds.) Encyclopedia of Database Systems; Springer Reference, Springer: New York, 2009.

- Tibshirani, R. Regression Shrinkage and Selection via the Lasso. J. R. Stat. Soc. Ser. B (Methodological) 1996, 58, 267–288.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Schuld, M.; Killoran, N. Quantum Machine Learning in Feature Hilbert Spaces. Phys. Rev. Lett. 2019, 122, 040504.

- Ramana, B.V.; Babu, M.S.P.; Venkateswarlu, N.B. ILPD (Indian Liver Patient Dataset) Data Set. https://archive.ics.uci.edu/ml/datasets/ILPD+(Indian+Liver+Patient+Dataset), 2012.

- Patrício, M.; Pereira, J.; Crisóstomo, J.; Matafome, P.; Gomes, M.; Seia, R.; Caramelo, F. Using Resistin, glucose, age and BMI to predict the presence of breast cancer. BMC Cancer 2018, 18, 29.

- Crisóstomo, J.; Matafome, P.; Santos-Silva, D.; Gomes, A.L.; Gomes, M.; Patrício, M.; Letra, L.; Sarmento-Ribeiro, A.B.; Santos, L.; Seiça, R. Hyperresistinemia and metabolic dysregulation: A risky crosstalk in obese breast cancer. Endocrine 2016, 53, 433–442.

- Loh, W.Y.; Shih, Y.S. Split Selection Methods for Classification Trees. Stat. Sin. 1997, 7, 815–840.

- Lim, T.S.; Loh, W.Y.; Shih, Y.S. A comparison of prediction accuracy, complexity, and training time of thirty-three old and new classification algorithms. Mach. Learn. 2000, 40, 203–228.

- Marques de Sá, J.; Jossinet, J. Breast Tissue Impedance Data Set. 2002. https://archive.ics.uci.edu/ml/datasets/Breast+Tissue.

- Estrela da Silva, J.; Marques de Sá, J.P.; Jossinet, J. Classification of breast tissue by electrical impedance spectroscopy. Med. Biol. Eng. Comput. 2000, 38, 26–30.

- Schuld, M.; Petruccione, F. Quantum ensembles of quantum classifiers. Sci. Rep. 2018, 8, 2772.

- Moiseyev, N. Non-Hermitian Quantum Mechanics; Cambridge University Press, 2011.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).