1. Introduction

This research aims to develop a system that extracts useful knowledge from childcare-related texts posted on social networking services (SNS) and presents it to people who are actually raising children. The information needed for childcare changes from moment to moment with the developmental stage of the child. Conventional media such as books and magazines provide a great deal of childcare information, but it is difficult to find exactly the information needed at a given time, so a system that presents appropriate information to the user is needed. To address this problem, we aim to develop a system that collects childcare information from SNS, especially Twitter, and presents it appropriately according to the user's situation.

Facebook contains many long-form posts about business information and more detailed status reports. By comparison, many people use Twitter to catch the latest information because its information is fresher. Users in their 20s to 40s, who are particularly sensitive to trends, use it because they can obtain real-time information. Since short posts of up to 140 characters can be tweeted as soon as something comes to mind, parents in their 20s to 40s who are raising children appear to post a large amount of real-time information about infants. Many firsthand accounts from parents who are actually raising children are posted on SNS, and many of these posts are likely to describe situations similar to the user's own. We expect that more useful knowledge can be presented to users by drawing on the actual experiences of parents in similar circumstances.

Few studies have addressed knowledge extraction focused on childcare. Related work includes research on predicting life events from SNS [1]. Saha et al. [2] contributed a codebook for identifying life event disclosures and built regression models of the factors that explain such disclosures, using self-reported life events and a Facebook dataset of 14,000 posts. Choudhury et al. [3] collected users who posted about their "engagement" on Twitter and analyzed changes in the words they used and in their posts. Burke et al. [4] recruited Facebook users who had experienced unemployment through advertisements or email and analyzed their Facebook activity in relation to changes in stress and to finding new jobs. In contrast, this research collects texts specific to childcare and aims to develop a more accurate method by analyzing them. As our method, we mainly use natural language processing techniques based on neural networks, which have developed rapidly in recent years.

Our contribution can be summarized as follows.

(a) We aim to develop a method that can perform more semantically accurate analysis by using techniques that can accurately handle numerical expressions related to childcare (“2-year-old child”, “37 ° C”, “100 ml”, etc.).

(b) By using the profile information of the user who posted the text together with the text and grasping the attribute information of the poster, we aim to develop a method that emphasizes the text that is closer to the user's situation.

We believe that these two points make the proposed method highly novel.

2. Related Work

The relationship between numerals and words in text data has received less attention than other areas of natural language processing. Both words and numerals are tokens found in almost every document, but they have different characteristics. In many systems, numbers are handled in an ad hoc way: they are treated as plain strings like words, normalized to zero, or simply ignored.

Information Extraction (IE) [5] can be viewed as a question-answering task with a question fixed a priori, such as "Extract all dates and event locations in a given document." Since much of the extracted information is numeric, special treatment of numbers often improves the performance of IE systems. For example, Bakalov et al. [6] proposed a system that extracts numerical attributes of objects given attribute names, seed entities, and related Web pages, and that properly distinguishes attributes having similar values.

BERT (Bidirectional Encoder Representations from Transformers) [7] is a language model in natural language processing that is pre-trained on a large text corpus and then fine-tuned for each task. The pre-trained BERT model can be fine-tuned with just one additional output layer to create state-of-the-art models for a wide range of tasks, such as question answering and language inference, without substantial task-specific architecture modifications. It can output a fixed-dimensional vector regardless of sentence length, so that information from every input word is reflected in the output vector. In recent years, it has attracted attention as a versatile language model because it has achieved top-level results on a wide range of natural language processing tasks. BERT is based on the Transformer, a neural network model that uses the attention mechanism. Manually building a large dataset with human attribute labels is expensive, whereas pre-training BERT does not require such human labels [8]. Zhang et al. [9] identify contextual information in pre-training and numeracy as two key factors affecting the performance of language embeddings, and show that the simple method of canonicalizing numbers can have a significant effect on the results.

3. Proposed Method

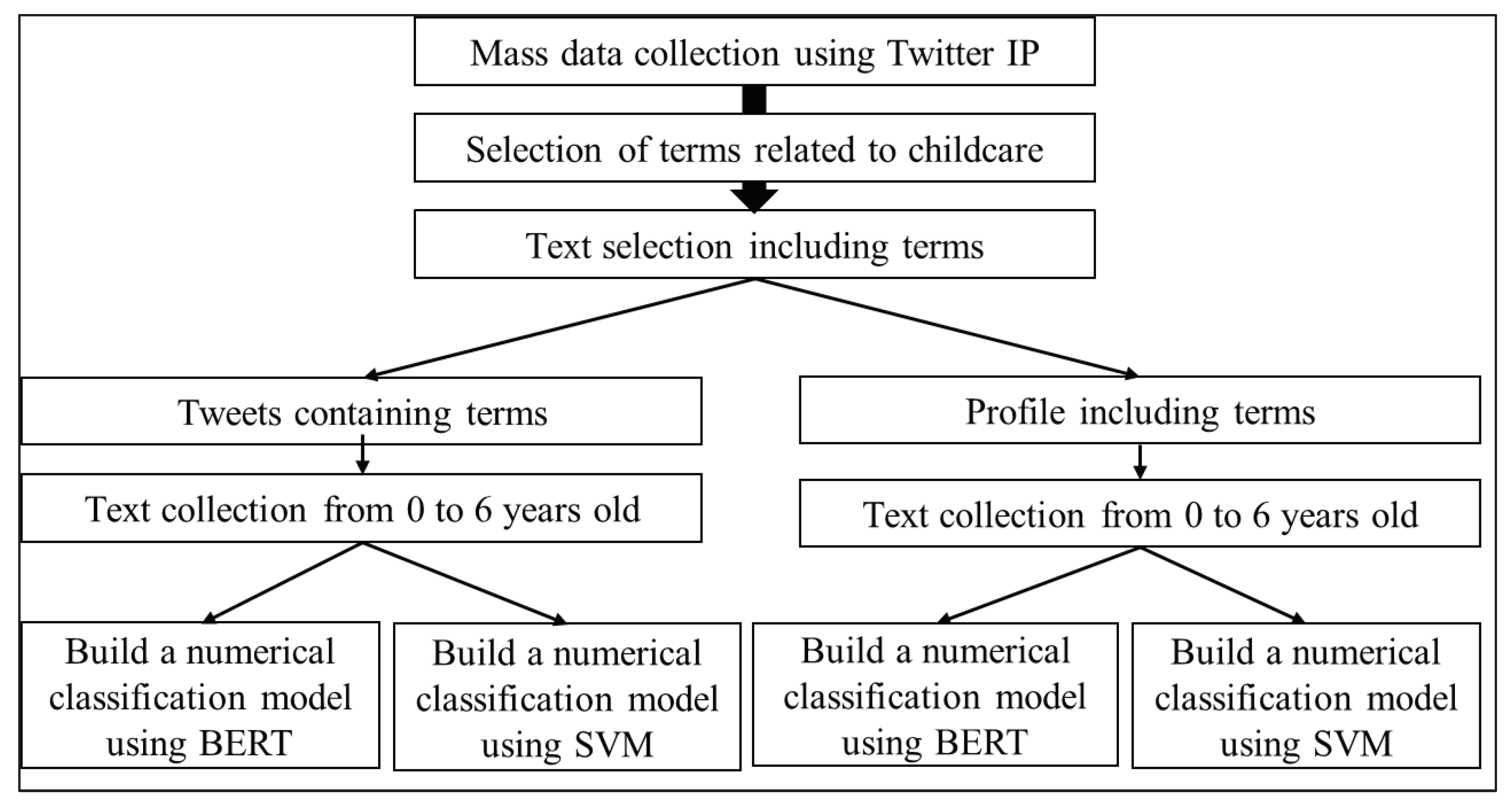

The method proposed in this research classifies tweets about child-rearing posted on Twitter into classes. The procedure is shown below.

● Acquire a large amount of Japanese text data from Twitter.

● Select terms related to childcare and collect two types of texts containing those terms: tweets and profiles. From these, create sets of texts divided by the child's developmental stage.

● Using SVMs and the neural language model BERT, build classification models that predict a label from "0" to "6" for each text.

Figure 1 shows a simple flow for classifying tweets related to child-rearing.

3.1. Data collection

In this research, we use Twitter's API to obtain texts containing child-rearing information from Twitter. Next, we select terms related to parenting and screen for texts containing those terms. The parenting terms are shown in Table 1.

Two text collections are formed: one in which the terms appear in tweets and one in which the terms appear in profiles. We then search for the character string "*-year-old child" and create sets of texts classified by the developmental stage of the child, from "0-year-old child" to "6-year-old child". Table 2 and Table 3 show part of the tweet text set and the profile set created according to these developmental stages.
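The labeling step in this subsection can be sketched as follows. This is only an illustration under stated assumptions, not the code used in the study: it assumes that the search string "*-year-old child" corresponds to the Japanese pattern 「N歳児」, and the names `AGE_PATTERN`, `label_age`, and `group_by_age` are hypothetical.

```python
import re

# Assumption: "*-year-old child" is written as "<digit>歳児" in the Japanese text.
# The lookbehind rejects multi-digit ages such as "10歳児".
AGE_PATTERN = re.compile(r"(?<!\d)([0-6０-６])歳児")


def label_age(text):
    """Return the developmental-stage label 0..6 found in the text, or None."""
    match = AGE_PATTERN.search(text)
    if match is None:
        return None
    # int() accepts both ASCII and full-width decimal digits.
    return int(match.group(1))


def group_by_age(texts):
    """Group texts into a dict {age label: [texts]}, dropping unlabeled texts."""
    groups = {age: [] for age in range(7)}
    for text in texts:
        age = label_age(text)
        if age is not None:
            groups[age].append(text)
    return groups
```

Applying `group_by_age` separately to the tweets and to the profiles would give the two text sets, each divided into the seven classes "0" to "6".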

3.2. Preprocessing

For the data, the tweet text and profile are used as they are. We have not done any processing such as removing hashtags. Also, information such as retweets and mentions is not used.

Data preprocessing is performed so that machine learning can be applied to the created sets of tweet texts and profiles. In preprocessing, morphological analysis is performed with MeCab, a morphological analyzer for Japanese, and each document is vectorized using TfidfVectorizer from the scikit-learn library. We used unigram features.

Morphological analysis is the task of segmenting a sentence into its smallest meaningful units (morphemes) and writing them with separators. Given a set of documents, TfidfVectorizer converts each document into a vector of TF-IDF values. TF (term frequency) represents how often a word occurs in a document. IDF (inverse document frequency) is a score that lowers the importance of words that appear in many documents. TF-IDF is the product of TF and IDF [2].
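To make the TF-IDF weighting concrete, the following is a minimal pure-Python sketch rather than the scikit-learn implementation itself. It assumes the documents have already been segmented into unigram tokens (for example by a morphological analyzer), and it uses the smoothed IDF and L2 normalization that, to our understanding, correspond to the default settings of TfidfVectorizer. The function name `tfidf_vectors` and the sample tokens are hypothetical.

```python
import math
from collections import Counter


def tfidf_vectors(tokenized_docs):
    """Compute L2-normalized TF-IDF vectors for pre-tokenized documents.

    TF is the raw term count, and IDF is the smoothed form
    idf(t) = ln((1 + n) / (1 + df(t))) + 1.
    """
    n_docs = len(tokenized_docs)
    vocab = sorted({tok for doc in tokenized_docs for tok in doc})
    # Document frequency: number of documents that contain each term.
    df = Counter(tok for doc in tokenized_docs for tok in set(doc))
    idf = {t: math.log((1 + n_docs) / (1 + df[t])) + 1.0 for t in vocab}

    vectors = []
    for doc in tokenized_docs:
        tf = Counter(doc)
        weights = [tf[t] * idf[t] for t in vocab]
        norm = math.sqrt(sum(w * w for w in weights))
        vectors.append([w / norm if norm > 0 else 0.0 for w in weights])
    return vocab, vectors


# Hypothetical unigram tokens, standing in for morphological analysis output.
docs = [["2歳児", "公園", "公園"], ["2歳児", "ミルク"], ["離乳食", "ミルク"]]
vocab, vectors = tfidf_vectors(docs)
```

In the first sample document, "公園" receives a larger weight than "2歳児" because it occurs twice there and appears in no other document.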

3.3. Classification Method

This research uses a fine-tuned BERT model, which is a neural supervised learning method. For comparison with the fine-tuned pre-trained BERT model, we used a supervised SVM model, because SVM is a standard non-neural algorithm.

Two classifiers are created with these methods: classifier A with SVM and classifier B with BERT.

3.3.1. SVM classification method

Classifier A is constructed using the data divided by the developmental stage of the child. For this text categorization task, we used the SVM algorithm as implemented in the Python machine learning library scikit-learn to build classifier A, which predicts the labels "0" to "6" from the sentences. Two variants of classifier A are created: Task Ts (tweet), which uses tweet sentences as data, and Task Ps (profile), which uses profiles.

3.3.2. Classification method by BERT

The neural language model BERT has been actively studied in recent years. To construct classifier B, we used BERT to classify texts into the labels "0" to "6". Two variants of classifier B are created: Task Tb (tweet), which uses tweet text as data, and Task Pb (profile), which uses profiles. Building a classifier requires a BERT model. However, it is difficult to prepare a dataset large enough to pre-train a model specialized for classification into "0" to "6", so we instead create the classifier by fine-tuning an existing pre-trained model.

In BERT, a model specialized for a specific task can be obtained by fine-tuning on supervised data for that task, so better performance can be expected than when a pre-trained model is applied as is. As the pre-trained model, we use BERT-Base, a large model with 110M parameters. The data used for fine-tuning are the tweet text set and the profile set created for the developmental stages of children from 0 to 6 years old: Task Tb is fine-tuned on the tweet text set and Task Pb on the profile set.

4. Experimental setup and results

4.1. Classification method by BERT

4.1.1. Data used in experiments

For the experiment, we used tweets about child-rearing collected using Twitter's API. The collection period was from July 19, 2017 to October 31, 2017. During this period, we collected tweet texts and profiles containing any of the words shown in Table 1.

The profile data consist of 1575 items (376 KB) with a vocabulary size of 78720 words. The tweet text data consist of 953 items (238 KB) with a vocabulary size of 55378 words. The number of items in each category of the tweet data and the profile data is shown in Table 4.

4.1.2. Experiment details

In the classification experiment, Tasks Tb and Pb described in Section 3.3.2 are used to classify the tweet texts and profiles described in Section 4.1.1, and their performance is compared with that of a classifier other than BERT.

Classifier B, which uses BERT, classifies the data for each task. Task Pb is fine-tuned on the profile set and classifies the profiles of users who are raising children aged 0 to 6 years. One eighth of the training data is used for validation and the rest for fine-tuning. We checked the classification results in each case. For each input, the BERT classifier outputs normalized probabilities for each label, each ranging from 0 to 1 and summing to 1. The label with the highest probability is taken as the classification result.
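The way a label is chosen from the classifier output can be illustrated with a short sketch. It assumes that the normalized probabilities are obtained by applying a softmax to seven raw scores, one per label; the source does not state this explicitly, and the function names and scores below are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that lie in [0, 1] and sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits, labels=("0", "1", "2", "3", "4", "5", "6")):
    """Return the label with the highest probability and the full distribution."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs


# Hypothetical raw scores for one input text (one score per age label).
label, probs = predict_label([0.1, 0.3, 2.0, 0.5, -1.0, 0.0, 0.2])
```

Here the third score is the largest, so the text would be assigned the label "2".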

We also use SVM classifier A for performance comparison. A nonlinear SVM maps data that are not linearly separable into a space in which they become linearly separable and separates them with a hyperplane. To process the Japanese sentences of the tweets to be classified, the input text is converted to vectors. When classifying with scikit-learn's SVM module, we first normalized the features with StandardScaler and then used the default parameters. The nonlinear SVM assigns each input one of the labels "0" to "6" according to the region of the feature space to which it belongs.

We split the profile data and the tweet body data into training and test sets and performed 5-fold cross-validation on the training set. Of the tweets and profiles used in the experiment, 80% were used as training/validation data and 20% as test data. Using stratified 5-fold cross-validation, the training data are divided into five folds so that the ratio of labels in each fold is the same as the overall ratio; four of the five folds are used as training data and one as validation data to train and evaluate the model.

Once training and evaluation are completed, the validation fold is exchanged with one of the four training folds, and the model is trained and evaluated again. Repeating this five times and taking the average of the five classification accuracies as the classification accuracy gives a robust evaluation that does not depend on how the data are divided.
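The stratified 5-fold procedure can be sketched in plain Python as follows. This is an illustration of the idea, not the implementation used in the experiments; the function names are hypothetical, and `evaluate` stands for any user-supplied routine that trains on the training indices and returns the accuracy on the validation indices.

```python
import random
from collections import defaultdict


def stratified_folds(labels, n_folds=5, seed=0):
    """Assign each sample index to a fold so that label ratios are preserved."""
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)

    rng = random.Random(seed)
    folds = [[] for _ in range(n_folds)]
    for indices in by_label.values():
        rng.shuffle(indices)
        # Deal the indices of each label round-robin across the folds.
        for position, index in enumerate(indices):
            folds[position % n_folds].append(index)
    return folds


def cross_validate(labels, evaluate, n_folds=5, seed=0):
    """Average the score of evaluate(train_indices, valid_indices) over folds."""
    folds = stratified_folds(labels, n_folds, seed)
    scores = []
    for k in range(n_folds):
        valid = folds[k]
        train = [i for j, fold in enumerate(folds) if j != k for i in fold]
        scores.append(evaluate(train, valid))
    return sum(scores) / n_folds
```

Each fold serves as validation data exactly once, and the returned value is the mean of the five scores.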

To tune the parameters of each machine learning method, the above cross-validation is performed for all combinations of parameters specified in advance (grid search), and the model with the parameters that show the best classification accuracy is generated. Finally, we tested how well each generated model could classify the test data.
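A grid search of this kind could be set up with scikit-learn roughly as shown below. This is a hedged sketch, not the authors' script: the feature matrix is synthetic stand-in data rather than the vectorized tweets or profiles, and the candidate values in `param_grid` are assumptions (the source reports only the selected values of `gamma` and `C`). With sparse TF-IDF input, `StandardScaler(with_mean=False)` would be needed instead of the default scaler.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, StratifiedKFold, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for the vectorized texts: 7 classes (0..6), 20 samples each.
rng = np.random.default_rng(0)
y = np.repeat(np.arange(7), 20)
X = rng.normal(size=(y.size, 10)) + y[:, None]

# 80% for training/validation, 20% held out as test data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Standardize the features, then fit an RBF-kernel (nonlinear) SVM.
pipeline = make_pipeline(StandardScaler(), SVC(kernel="rbf"))

# Hypothetical candidate values for the grid.
param_grid = {"svc__gamma": [0.001, 0.01, 0.1], "svc__C": [1, 10, 100]}

search = GridSearchCV(
    pipeline,
    param_grid,
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
    scoring="accuracy",
)
search.fit(X_train, y_train)

best_params = search.best_params_        # best parameter combination
validation_score = search.best_score_    # mean cross-validated accuracy
test_score = search.score(X_test, y_test)  # accuracy on the held-out test data
```

The three final values correspond to the kind of quantities reported for classifier A: the best validation score, the best parameters, and the test set score with those parameters.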

4.2. Experimental results

4.2.1. The classification results of the created classifier A.

Using the train-test split and 5-fold cross-validation on the training set for the profile data and the tweet body data, we obtained the following test set results:

Profile data test set results:

Best score on validation set: 0.5834370306801115

Best parameters: {'gamma': 0.01, 'C': 100}

Test set score with best parameters: 0.5736040609137056

Tweet body data test set results:

Best score on validation set: 0.27586920122131386

Best parameters: {'gamma': 0.01, 'C': 100}

Test set score with best parameters: 0.30962343096234307

The confusion matrix is shown in Table 5.

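Regarding the evaluation on the test data, we used three measures: accuracy, precision, and recall. With $TP$, $FP$, $TN$, and $FN$ denoting the numbers of true positives, false positives, true negatives, and false negatives, the standard definitions are $\mathrm{accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$, $\mathrm{recall} = \frac{TP}{TP + FN}$, and $\mathrm{precision} = \frac{TP}{TP + FP}$.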
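For a multi-class problem such as the seven labels used here, these measures are usually computed from the confusion matrix: accuracy is the fraction of correctly classified samples, and precision and recall are computed per class. The following sketch shows one way to do this; the function name and the small example matrix are hypothetical and are not taken from the tables of this paper.

```python
def per_class_metrics(confusion):
    """Compute accuracy plus per-class precision and recall.

    confusion[i][j] is the number of samples with true label i predicted as j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))
    accuracy = correct / total

    precision, recall = [], []
    for c in range(n_classes):
        tp = confusion[c][c]
        predicted_c = sum(confusion[i][c] for i in range(n_classes))  # TP + FP
        actual_c = sum(confusion[c])                                  # TP + FN
        precision.append(tp / predicted_c if predicted_c else 0.0)
        recall.append(tp / actual_c if actual_c else 0.0)
    return accuracy, precision, recall


# Hypothetical 3-class confusion matrix, for illustration only.
matrix = [[5, 1, 0],
          [2, 3, 1],
          [0, 1, 7]]
accuracy, precision, recall = per_class_metrics(matrix)
```

A class that is never predicted has no defined precision; this sketch reports 0.0 in that case.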

Table 6 shows the classification results of the created classifier A. The accuracy of the resulting model is measured using the entire test data. Classifying the tweet set with classifier A, we found that the average accuracy rate of Task Ts was 30%. Classifying the profile set with classifier A, we found that the average accuracy rate of Task Ps was 57%.

4.2.2. Results of classifier B using BERT

Table 7 shows the classification results of the created classifier B. Because the training, validation, and evaluation data of classifier B are divided randomly, the results change each time the program is run; Table 7 shows the results obtained after many runs. The accuracy of the resulting model is measured using the entire test data. Classification of the profile set was performed with classifier B; the average accuracy rate of Task Tb was 60%. We classified the tweet set with classifier B and found that the average accuracy rate of Task Pb was 32.6%.

From these results, it can be seen that the accuracy rate of classifier B is slightly higher than that of classifier A.

5. Conclusions

In this study, we found that classifying the set of tweet texts with BERT gave better results than classifying it with SVM, whereas classifying the set of profiles with SVM gave better results than classifying it with BERT.

To improve the accuracy rate, we plan to increase the amount of data and to remove noise during data preprocessing.

In recent years, there has been a great deal of research on visualizing what neural network models have learned (research on model explainability), which addresses the problem of how to present learned language models to users. By incorporating these research results, we plan to present learning results to users.

Our future plans are as follows. We will collect more information about infants from Twitter to increase the size of the data. In data preprocessing, we will denoise the text data and remove stopwords. We will also try applying more sophisticated models such as GPT for comparison.

References

- [1] Khodabakhsh, M.; Zarrinkalam, F.; Fani, H.; Bagheri, E. Predicting Personal Life Events from Streaming Social Content. The 27th ACM International Conference, October 2018.

- [2] Saha, K.; Seybolt, J.; Mattingly, S.M.; Aledavood, T.; Konjeti, C.; Martinez, G.J.; Grover, T.; Mark, G.; De Choudhury, M. What Life Events are Disclosed on Social Media, How, When, and By Whom? CHI '21: CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, May 8-13, 2021.

- [3] Choudhury, M.D.; Massimi, M. "She said yes!" Liminality and Engagement Announcements on Twitter. Proc. iConference 2015, Newport Beach, CA, USA, pp. 1-13 (2015).

- [4] Burke, M.; Kraut, R. Using Facebook after Losing a Job: Differential Benefits of Strong and Weak Ties. Proc. 2013 Conf. Computer Supported Cooperative Work and Social Computing (CSCW 2013), San Antonio, TX, USA, pp. 1419-1430 (2013).

- [5] Yoshida, M.; Kita, K. Mining Numbers in Text: A Survey. 2021.

- [6] Bakalov, A.; Fuxman, A.; Talukdar, P.P.; Chakrabarti, S. SCAD: Collective Discovery of Attribute Values. WWW 2011: 447-456.

- [7] Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv preprint arXiv:1810.04805, 2018.

- [8] Yamamoto, K.; Shimada, K. Acquisition of Periodic Events with Person Attributes. IALP 2020, Kuala Lumpur, Dec 4-6, 2020.

- [9] Zhang, X.; Ramachandran, D.; Tenney, I.; Elazar, Y.; Roth, D. Do Language Embeddings capture Scales? EMNLP (Findings) 2020: 4889-4896.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).