Submitted: 26 April 2023

Posted: 27 April 2023


Abstract

Keywords:

1. Introduction

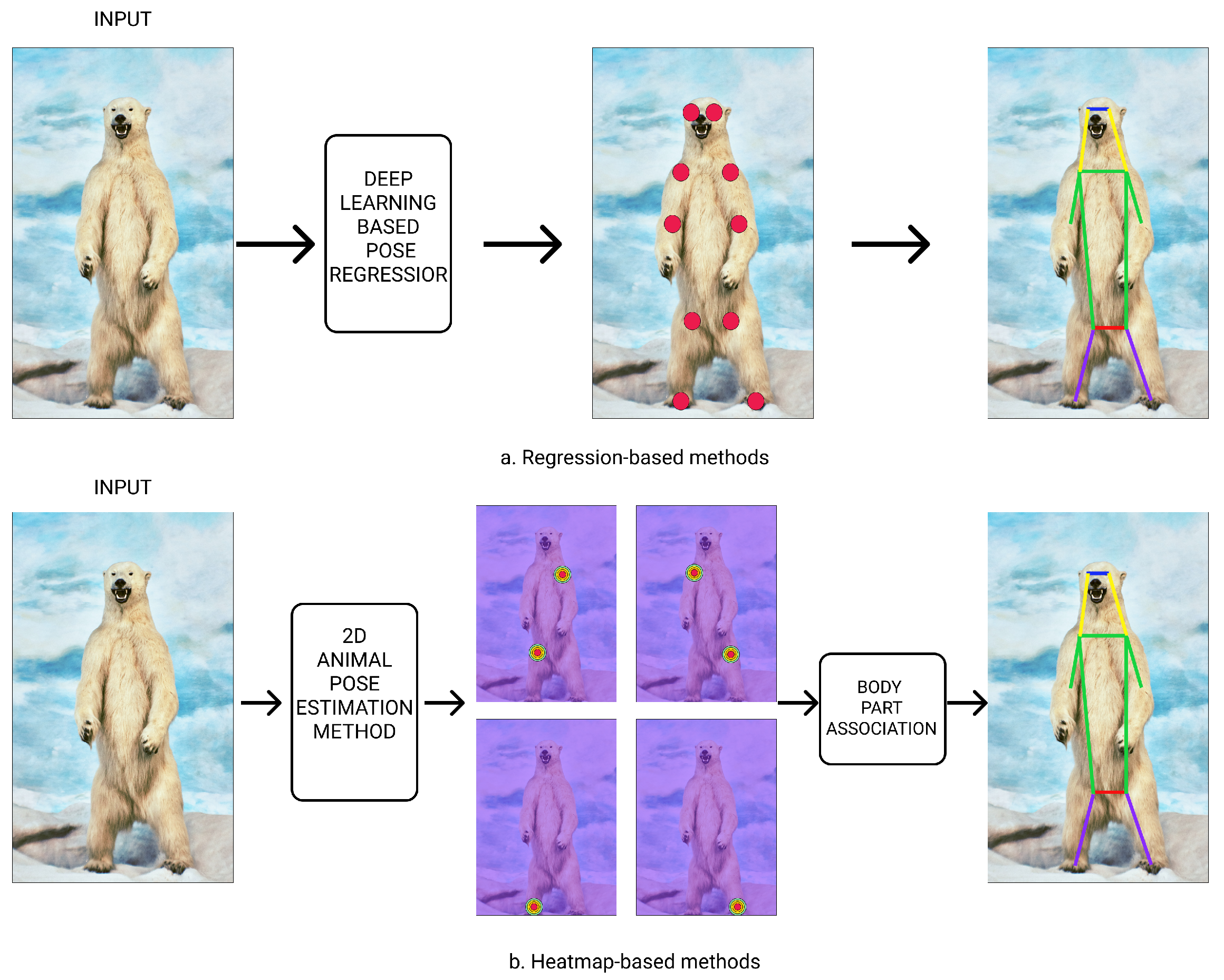

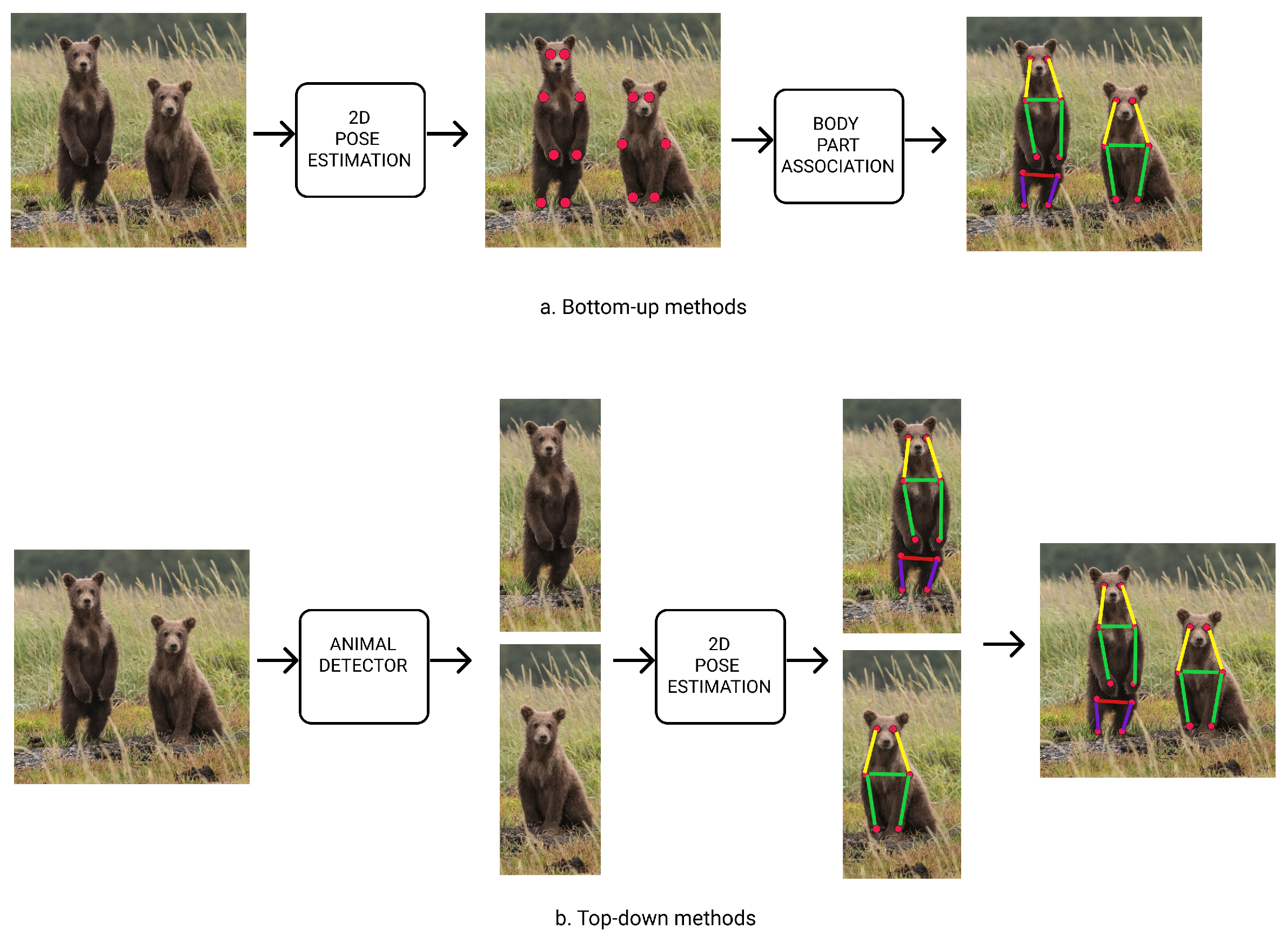

2. Related Work

3. An Overview of Proposed Deep Networks

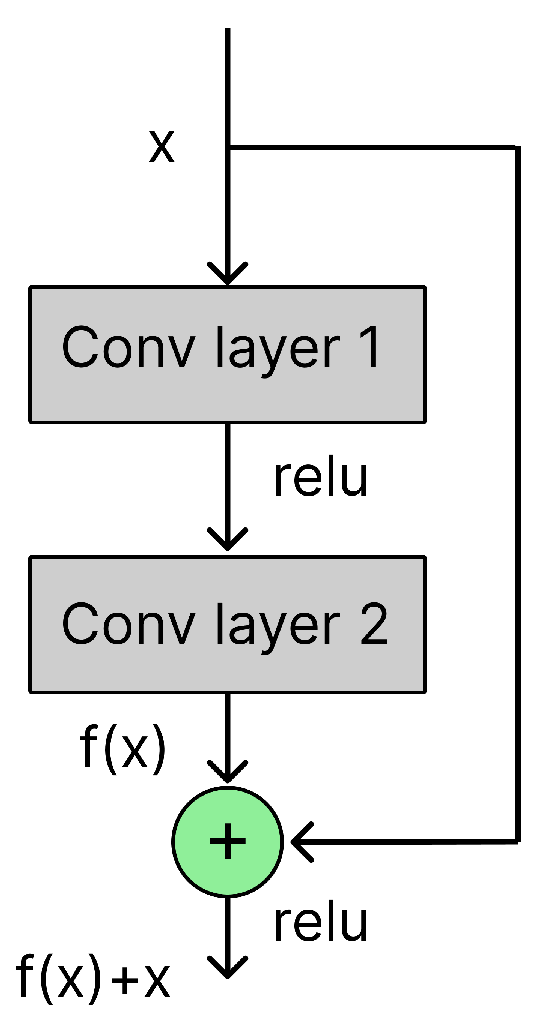

3.1. ResNet

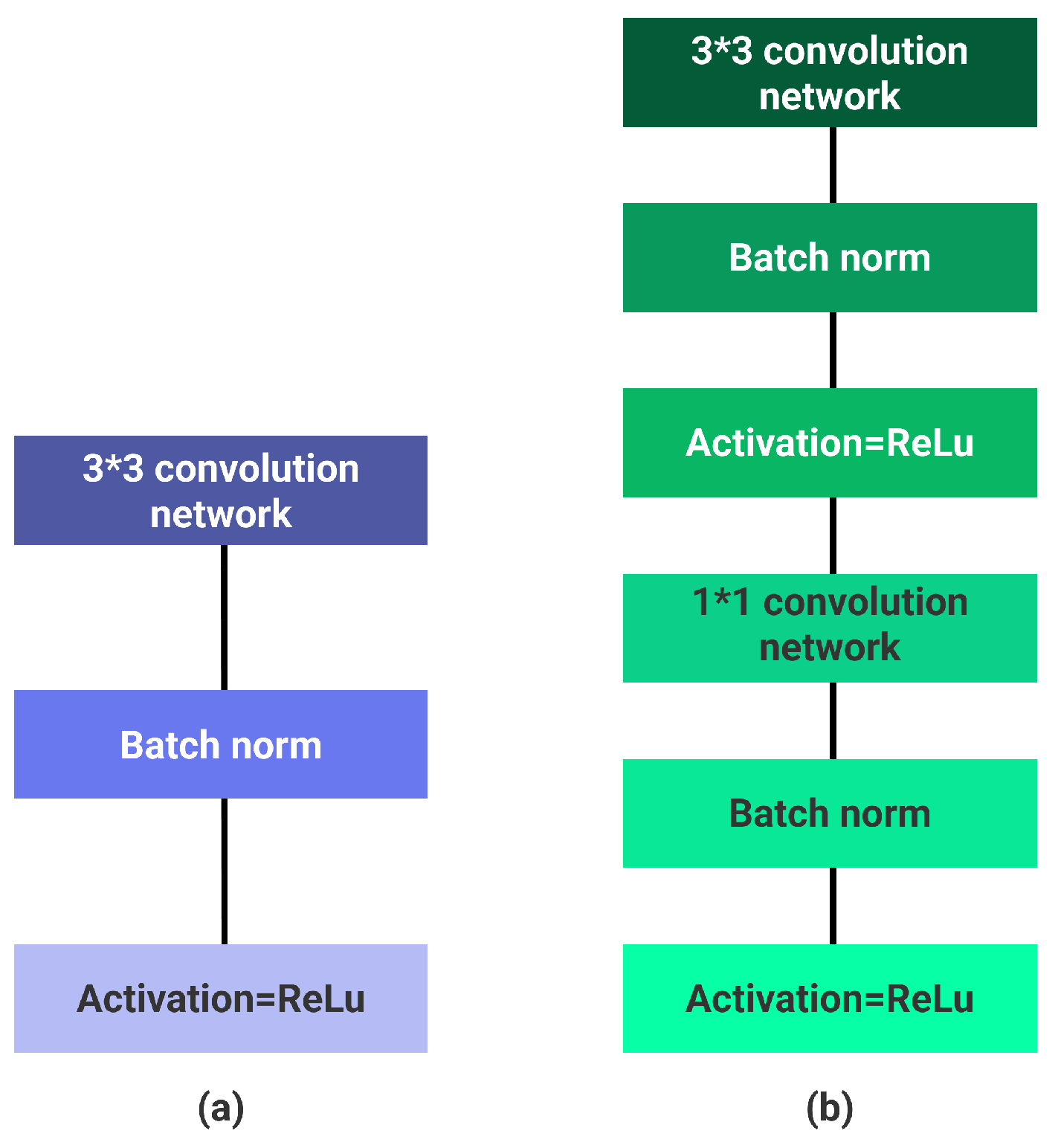
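As a rough illustration of the residual idea behind ResNet (He et al.), the sketch below implements a single residual block in NumPy, y = ReLU(x + F(x)) with F(x) = W2 · ReLU(W1 · x). It is a simplification under stated assumptions: fully connected weights stand in for the 3×3 convolutions, batch normalization is omitted, and the function names are hypothetical. It is not the backbone configuration used in these experiments.

```python
import numpy as np

def relu(x: np.ndarray) -> np.ndarray:
    """Element-wise rectified linear unit."""
    return np.maximum(x, 0.0)

def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Basic residual block: y = ReLU(x + F(x)), with F(x) = W2 @ ReLU(W1 @ x).

    Dense weight matrices replace the convolutions of a real ResNet block
    (assumption for brevity), so the identity shortcut is easy to see.
    """
    f = w2 @ relu(w1 @ x)   # residual branch F(x)
    return relu(x + f)      # identity shortcut added before the final ReLU

rng = np.random.default_rng(0)
d = 4
x = rng.standard_normal(d)
w1 = rng.standard_normal((d, d)) * 0.1
w2 = rng.standard_normal((d, d)) * 0.1
print(residual_block(x, w1, w2))

# With zero weights the residual branch vanishes and the block reduces to ReLU(x):
# the shortcut lets the input (and its gradient) pass through unchanged.
zeros = np.zeros((d, d))
print(np.allclose(residual_block(x, zeros, zeros), relu(x)))  # True
```

The zero-weight case shows why very deep residual networks remain trainable: each block only has to learn a correction to the identity mapping.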

3.2. MobileNet

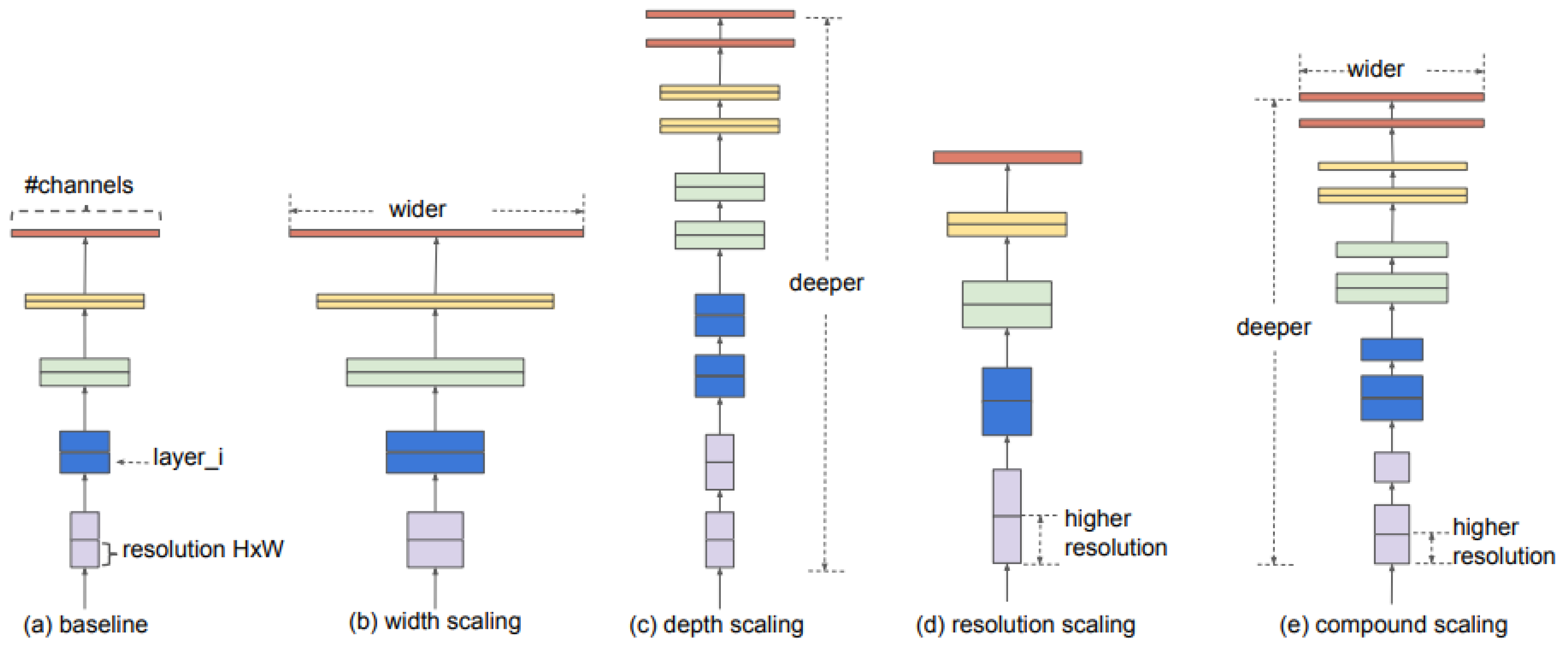
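MobileNet (Howard et al.) replaces standard convolutions with depthwise separable ones: a k×k depthwise convolution applied to each input channel separately, followed by a 1×1 pointwise convolution that mixes channels. The arithmetic below is a sketch that ignores biases and batch-normalization parameters and uses an arbitrary example layer (3×3 kernel, 32 input and 64 output channels, chosen only for illustration). It shows why the parameter count shrinks by a factor of 1/C_out + 1/k².

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise separable convolution (biases ignored)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise

k, c_in, c_out = 3, 32, 64     # hypothetical example layer
std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)
ratio = sep / std
print(std, sep, round(ratio, 3))  # 18432 2336 0.127
# The ratio equals 1/c_out + 1/k**2, i.e. roughly an 8x reduction for 3x3 kernels.
```

The same factor applies to multiply-add operations per output position, which is the reason MobileNet backbones are attractive for on-farm or embedded inference.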

3.3. EfficientNet
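EfficientNet (Tan and Le) scales network depth, width and input resolution together through one compound coefficient φ: depth grows as α^φ, width as β^φ and resolution as γ^φ, subject to α·β²·γ² ≈ 2, so that FLOPS grow by roughly 2^φ. The sketch uses the constants α = 1.2, β = 1.1, γ = 1.15 reported by Tan and Le for the B0 baseline; the function names are hypothetical, and the sketch does not indicate which EfficientNet variant was used in this study.

```python
# Constants reported by Tan and Le (2019) from a grid search on EfficientNet-B0.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15

def compound_scaling(phi: float) -> tuple[float, float, float]:
    """Depth, width and resolution multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi

def flops_multiplier(phi: float) -> float:
    """Approximate FLOPS growth: FLOPS scale with depth * width^2 * resolution^2."""
    depth, width, resolution = compound_scaling(phi)
    return depth * width ** 2 * resolution ** 2

for phi in range(4):
    d, w, r = compound_scaling(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPS x{flops_multiplier(phi):.2f}")
```

Because α·β²·γ² ≈ 1.92 rather than exactly 2, each unit increase of φ roughly, but not exactly, doubles the compute budget.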

3.4. DLCRNet

4. Experimental Setup

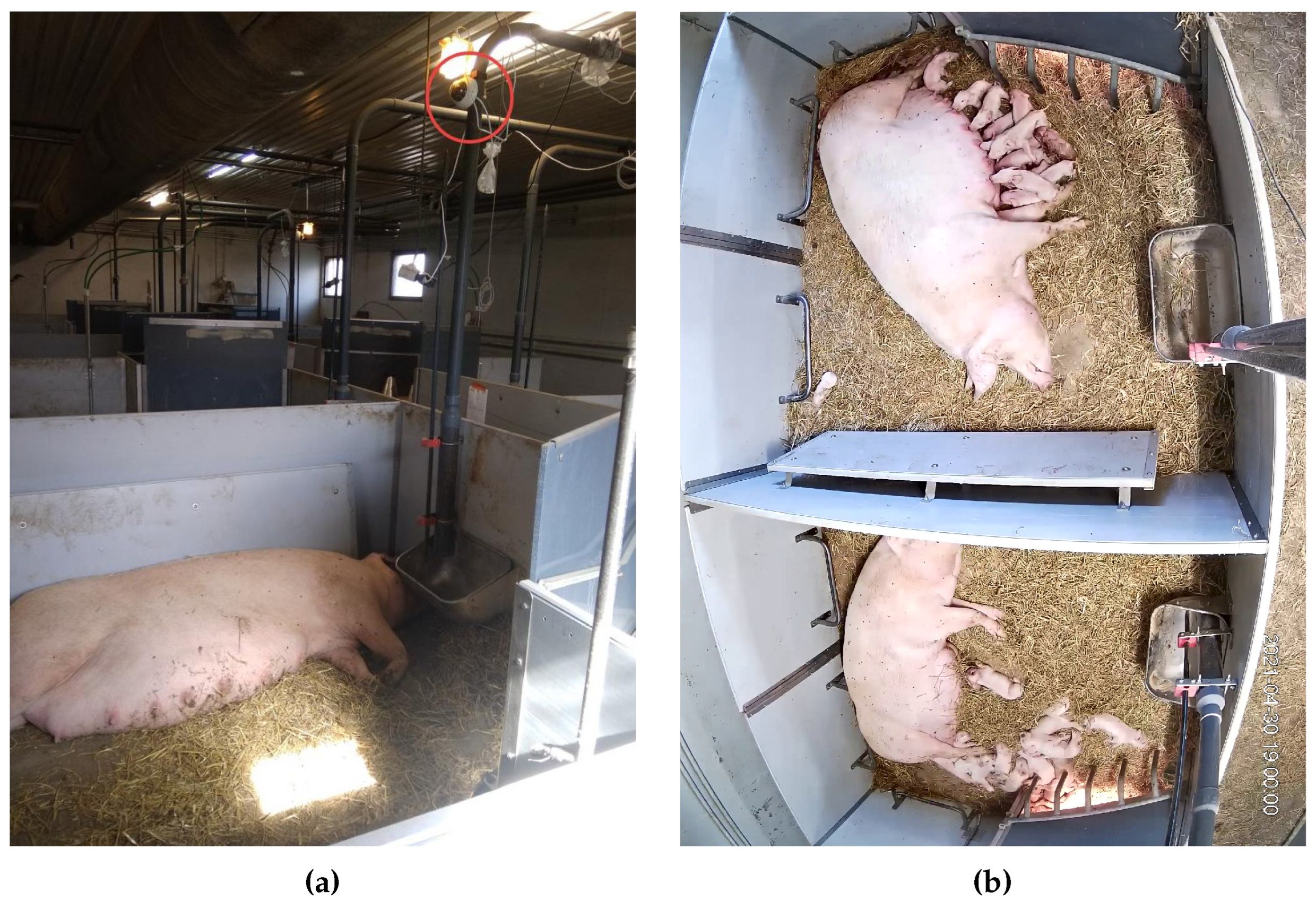

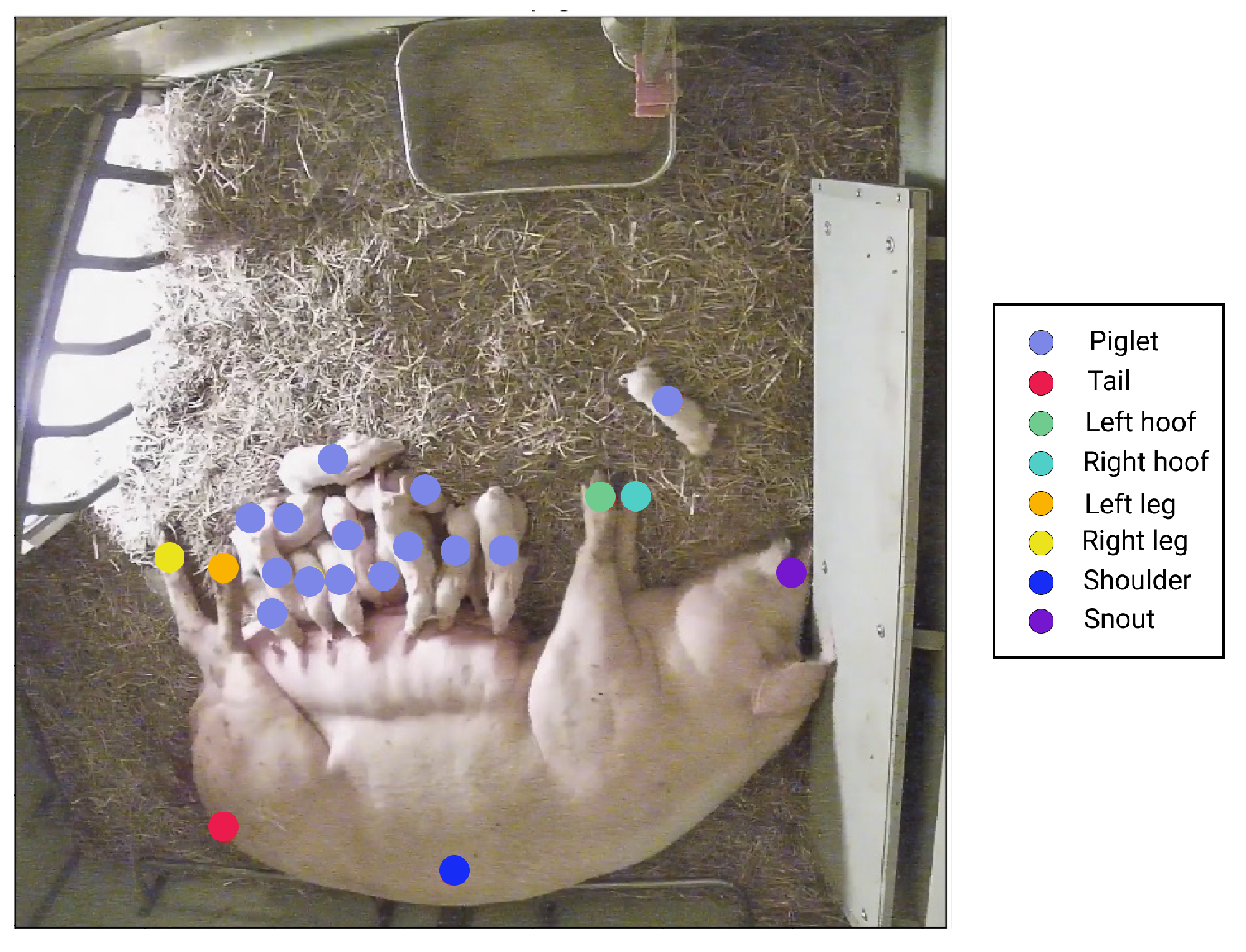

4.1. Dataset

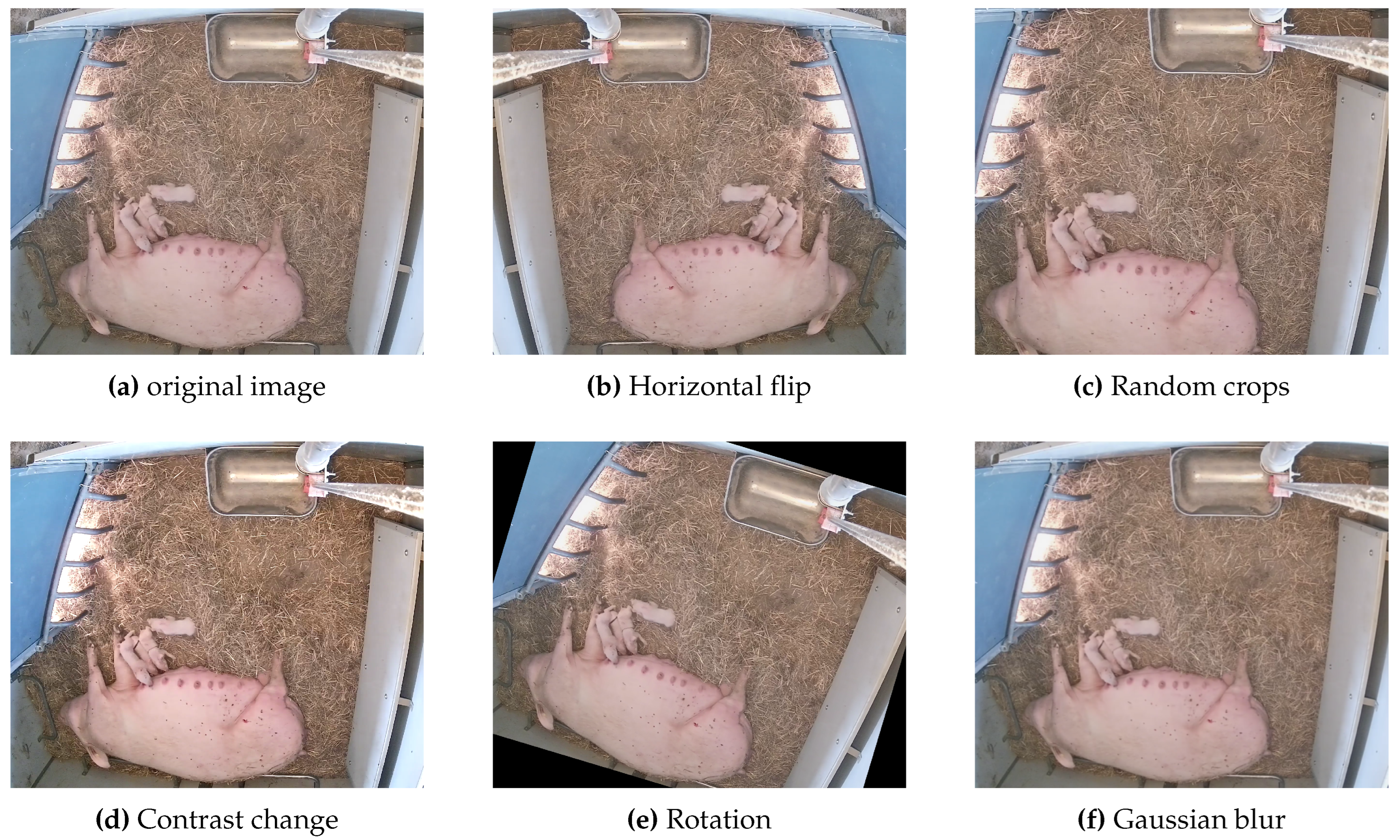

4.2. Implementation Details

5. Experimental Results

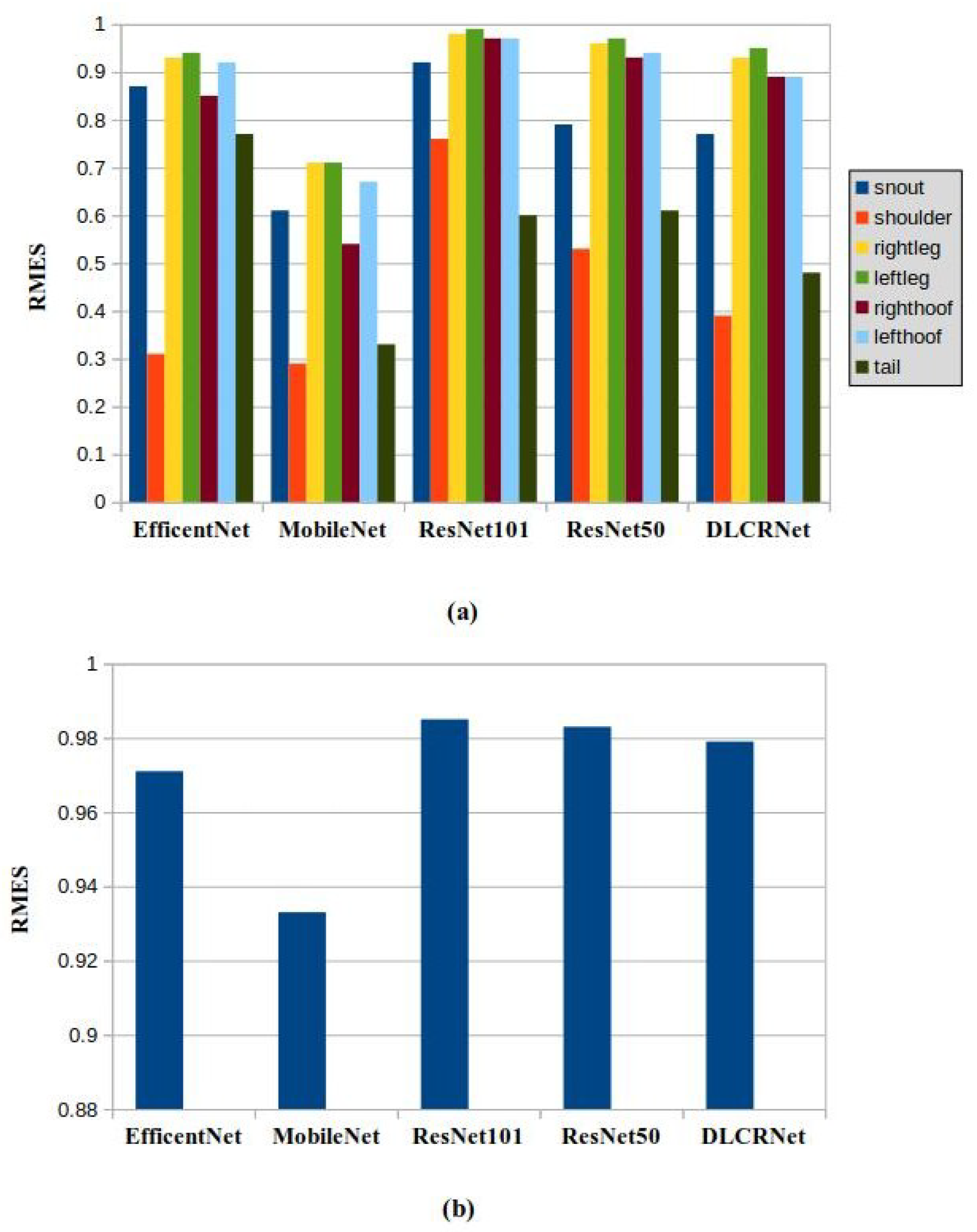

5.1. Root Mean Squared Error (RMSE)
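A minimal sketch of keypoint RMSE, assuming predictions and labels are stored as (images, keypoints, 2) pixel arrays with NaN marking unlabeled keypoints. The optional `p_cutoff` argument mirrors the "with p-cutoff" rows of the results table: predictions whose confidence falls below the threshold are excluded before averaging. The function name, the array layout, the example values and the pooling of all keypoints into a single mean are illustrative assumptions, not the evaluation code used in this study.

```python
import numpy as np

def keypoint_rmse(pred, gt, conf=None, p_cutoff=None) -> float:
    """RMSE (pixels) between predicted and ground-truth keypoints.

    pred, gt: arrays of shape (N, K, 2) holding (x, y) per image and keypoint.
    gt may contain NaN for unlabeled keypoints; those entries are skipped.
    conf: optional (N, K) prediction confidences; with p_cutoff set, predictions
    whose confidence is below p_cutoff are skipped as well.
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    sq_dist = np.sum((pred - gt) ** 2, axis=-1)   # (N, K) squared Euclidean distances
    valid = ~np.isnan(sq_dist)                    # drop unlabeled keypoints
    if conf is not None and p_cutoff is not None:
        valid &= np.asarray(conf) >= p_cutoff     # drop low-confidence predictions
    if not valid.any():
        return float("nan")
    return float(np.sqrt(sq_dist[valid].mean()))

# One hypothetical image with three keypoints.
pred = np.array([[[10.0, 10.0], [20.0, 20.0], [30.0, 30.0]]])
gt = np.array([[[13.0, 14.0], [20.0, 20.0], [40.0, 30.0]]])
conf = np.array([[0.9, 0.8, 0.3]])
print(keypoint_rmse(pred, gt))             # all keypoints
print(keypoint_rmse(pred, gt, conf, 0.6))  # low-confidence third keypoint ignored
```

Filtering by confidence removes the largest error in this toy example, which illustrates why the p-cutoff rows of the results table are consistently lower than the unfiltered ones.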

5.2. Error

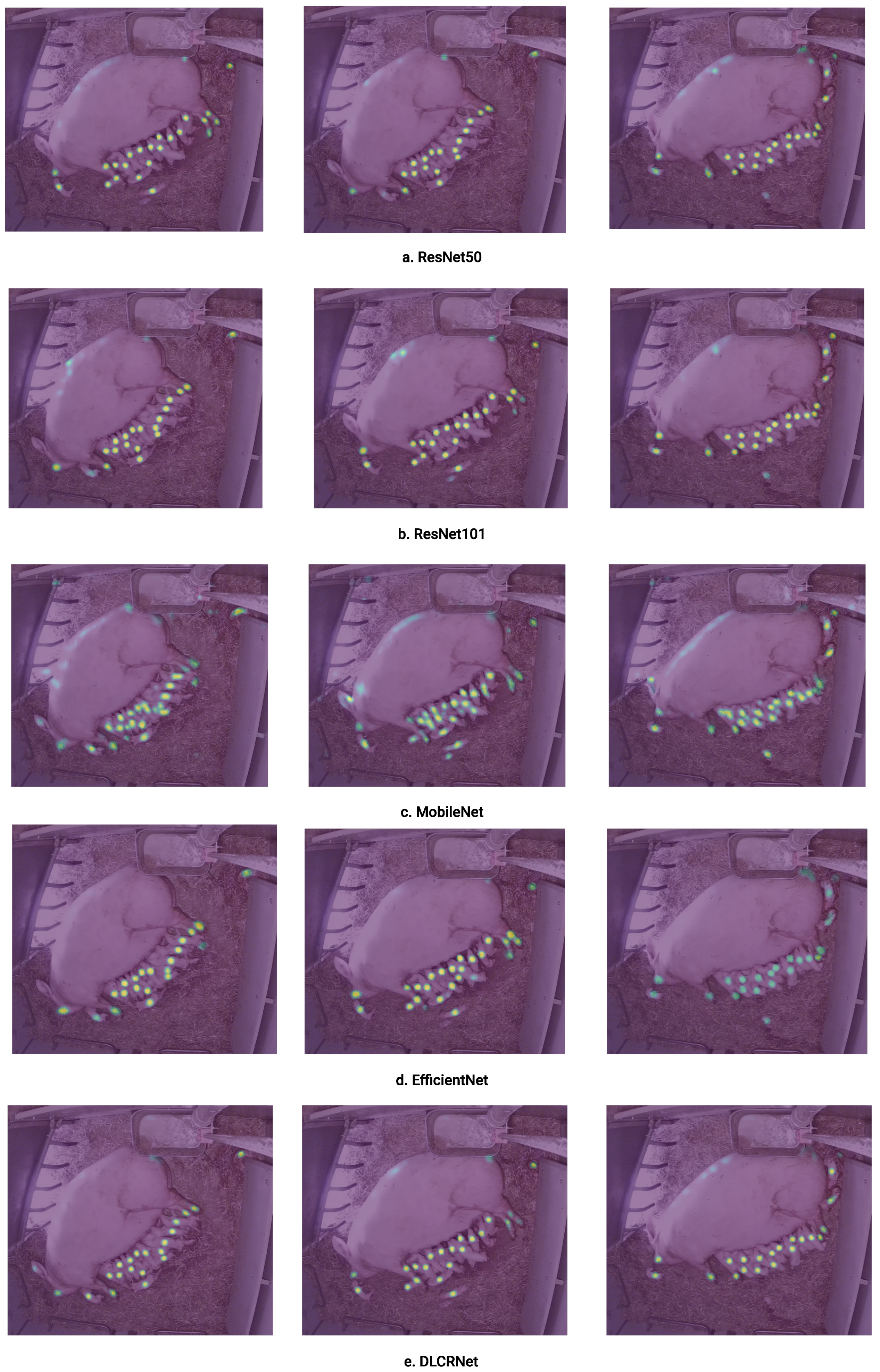

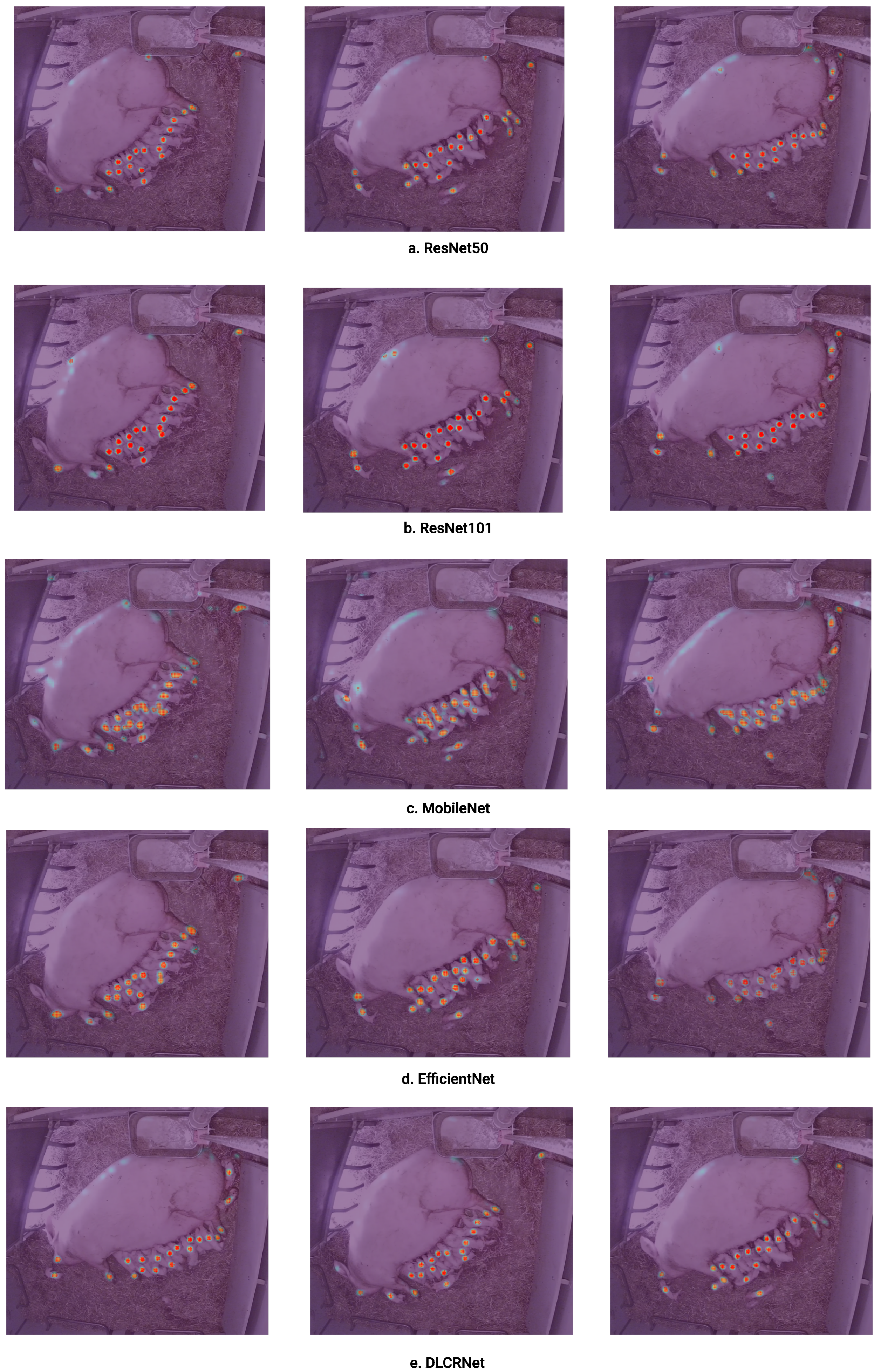

5.3. Score and Location Refinement Maps

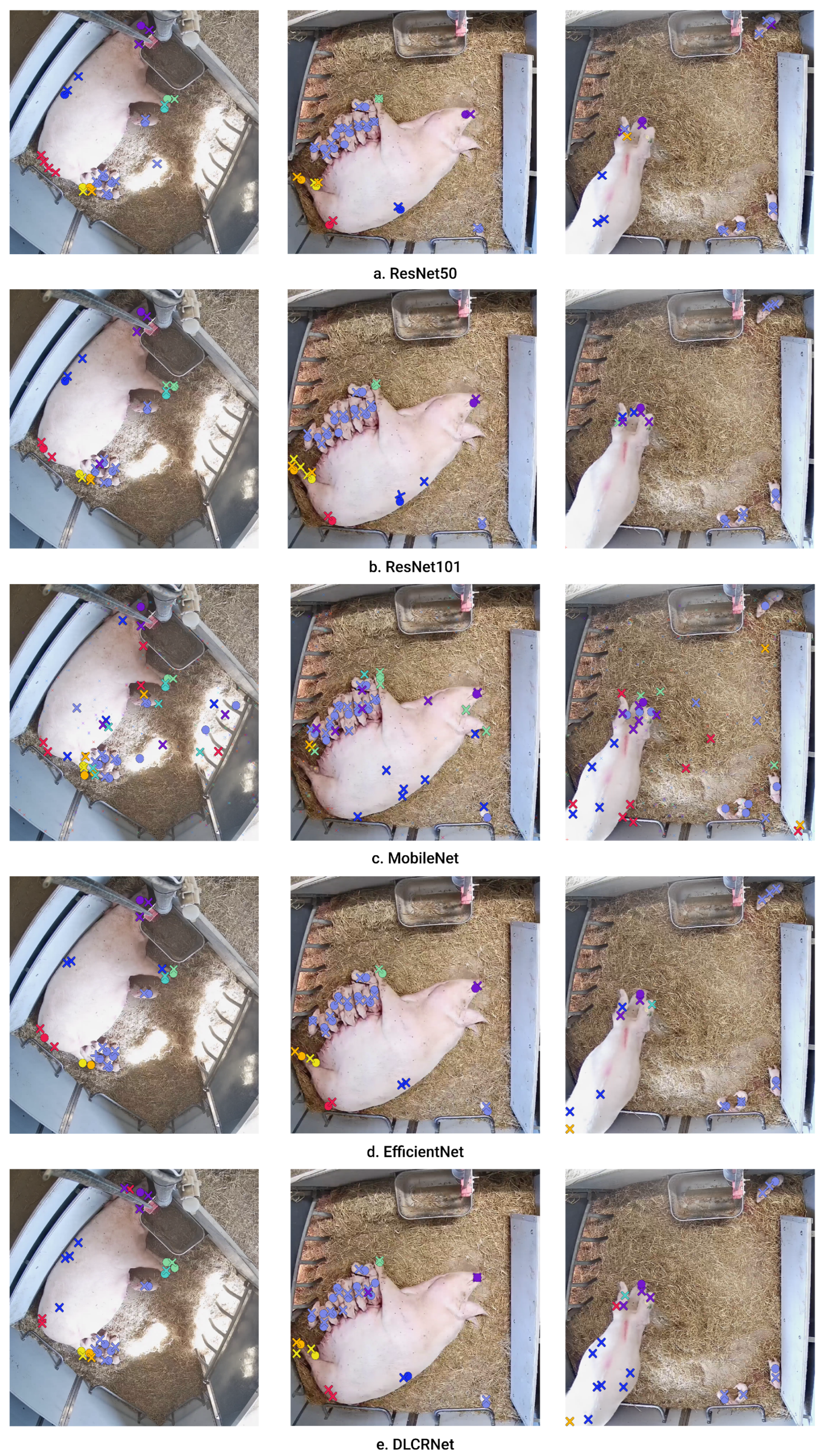
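In DeepLabCut-style models, which build on DeeperCut (Insafutdinov et al.), each keypoint has a score map over a coarse output grid and a location refinement map holding a sub-cell (dx, dy) offset. A keypoint is read out by taking the highest-scoring cell, mapping it back to image coordinates through the network stride, and adding the offset. The sketch below assumes a stride of 8 pixels and offsets already expressed in image pixels; the function name is hypothetical, and grouping keypoints into individual pigs, which the multi-animal setting requires, is not covered.

```python
import numpy as np

def decode_keypoints(score_maps: np.ndarray, locref: np.ndarray,
                     stride: float = 8.0) -> np.ndarray:
    """Single-animal readout of score and location refinement maps.

    score_maps: (H, W, K) per-keypoint confidence maps.
    locref: (H, W, K, 2) per-cell (dx, dy) offsets, assumed to be in image pixels.
    Returns a (K, 3) array of (x, y, confidence).
    """
    h, w, k = score_maps.shape
    out = np.zeros((k, 3))
    for j in range(k):
        row, col = np.unravel_index(np.argmax(score_maps[:, :, j]), (h, w))
        dx, dy = locref[row, col, j]
        # Centre of the winning grid cell in image coordinates plus the learned offset.
        x = col * stride + 0.5 * stride + dx
        y = row * stride + 0.5 * stride + dy
        out[j] = (x, y, score_maps[row, col, j])
    return out

# Hypothetical 4x4 output grid with a single keypoint peaking at row 2, column 1.
scores = np.zeros((4, 4, 1))
scores[2, 1, 0] = 0.95
offsets = np.zeros((4, 4, 1, 2))
offsets[2, 1, 0] = (1.5, -2.0)
print(decode_keypoints(scores, offsets))  # [[13.5 18.   0.95]]
```

Without the refinement offset, localization would be quantized to the grid spacing; the location refinement map recovers sub-cell precision at little extra cost.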

5.4. Qualitative Results

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Berckmans, D. Precision livestock farming technologies for welfare management in intensive livestock systems. Rev. Sci. Tech. 2014, 33, 189–196.

- Berckmans, D. General introduction to precision livestock farming. Animal Frontiers 2017, 7, 6–11.

- Malak-Rawlikowska, A.; Gębska, M.; Hoste, R.; Leeb, C.; Montanari, C.; Wallace, M.; de Roest, K. Developing a Methodology for Aggregated Assessment of the Economic Sustainability of Pig Farms. Energies 2021, 14.

- Kemp, B.; Da Silva, C.; Soede, N.M. Recent advances in pig reproduction: Focus on impact of genetic selection for female fertility. Reproduction in Domestic Animals 2018, 53, 28–36.

- Niemi, J.K.; Bergman, P.; Ovaska, S.; Sevón-Aimonen, M.L.; Heinonen, M. Modeling the costs of postpartum dysgalactia syndrome and locomotory disorders on sow productivity and replacement. Frontiers in Veterinary Science 2017, 4.

- Baxter, E.; Edwards, S. Piglet mortality and morbidity: Inevitable or unacceptable? In Advances in Pig Welfare; Spinka, M., Ed.; Elsevier: Netherlands, 2018; pp. 73–100.

- Oliviero, C.; Junnikkala, S.; Peltoniemi, O. The challenge of large litters on the immune system of the sow and the piglets. Reproduction in Domestic Animals 2019, 54, 12–21.

- Zheng, C.; Zhu, X.; Yang, X.; Wang, L.; Tu, S.; Xue, Y. Automatic recognition of lactating sow postures from depth images by deep learning detector. Computers and Electronics in Agriculture 2018, 147, 51–63.

- Peltoniemi, O.; Oliviero, C.; Yun, J.; Grahofer, A.; Björkman, S. Management practices to optimize the parturition process in the hyperprolific sow. Journal of Animal Science 2020, 98, S96–S106.

- Vranken, E.; Berckmans, D. Precision livestock farming for pigs. Animal Frontiers 2017, 7, 32–37.

- Gómez, Y.; Stygar, A.; Boumans, I.; Bokkers, E.; Pedersen, L.; Niemi, J.; Pastell, M.; Manteca, X.; Llonch, P. A Systematic Review on Validated Precision Livestock Farming Technologies for Pig Production and Its Potential to Assess Animal Welfare. Frontiers in Veterinary Science 2021, 8.

- Maselyne, J.; Adriaens, I.; Huybrechts, T.; De Ketelaere, B.; Millet, S.; Vangeyte, J.; Van Nuffel, A.; Saeys, W. Measuring the drinking behaviour of individual pigs housed in group using radio frequency identification (RFID). Animal 2015, 11, 1–10.

- Pray, I.; Swanson, D.; Ayvar, V.; Muro, C.; Moyano, L.M.; Gonzalez, A.; Garcia, H.H.; O’Neal, S. GPS Tracking of Free-Ranging Pigs to Evaluate Ring Strategies for the Control of Cysticercosis/Taeniasis in Peru. PLoS Neglected Tropical Diseases 2016, 10, e0004591.

- Escalante, H.J.; Rodriguez, S.V.; Cordero, J.; Kristensen, A.R.; Cornou, C. Sow-activity classification from acceleration patterns: A machine learning approach. Computers and Electronics in Agriculture 2013, 93, 17–26.

- Brown-Brandl, T.; Rohrer, G.; Eigenberg, R. Analysis of feeding behavior of group housed growing–finishing pigs. Computers and Electronics in Agriculture 2013, 96, 246–252.

- Kashiha, M.; Bahr, C.; Haredasht, S.A.; Ott, S.; Moons, C.P.; Niewold, T.A.; Ödberg, F.O.; Berckmans, D. The automatic monitoring of pigs water use by cameras. Computers and Electronics in Agriculture 2013, 90, 164–169.

- Viazzi, S.; Ismayilova, G.; Oczak, M.; Sonoda, L.; Fels, M.; Guarino, M.; Vranken, E.; Hartung, J.; Bahr, C.; Berckmans, D. Image feature extraction for classification of aggressive interactions among pigs. Computers and Electronics in Agriculture 2014, 104, 57–62.

- Kashiha, M.A.; Bahr, C.; Ott, S.; Moons, C.P.; Niewold, T.A.; Tuyttens, F.; Berckmans, D. Automatic monitoring of pig locomotion using image analysis. Livestock Science 2014, 159, 141–148.

- Jafarzadeh, P.; Virjonen, P.; Nevalainen, P.; Farahnakian, F.; Heikkonen, J. Pose Estimation of Hurdles Athletes using OpenPose. 2021 International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), 2021, pp. 1–6.

- Farahnakian, F.; Heikkonen, J. Deep Learning Based Multi-Modal Fusion Architectures for Maritime Vessel Detection. Remote Sensing 2020, 12.

- Jmour, N.; Zayen, S.; Abdelkrim, A. Convolutional neural networks for image classification. 2018 International Conference on Advanced Systems and Electric Technologies, 2018, pp. 397–402.

- Yan, K.; Huang, S.; Song, Y.; Liu, W.; Fan, N. Face recognition based on convolution neural network. 2017 36th Chinese Control Conference (CCC), 2017, pp. 4077–4081.

- Brünger, J.; Gentz, M.; Traulsen, I.; Koch, R. Panoptic Segmentation of Individual Pigs for Posture Recognition. Sensors 2020, 20.

- Nasirahmadi, A.; Sturm, B.; Edwards, S.; Jeppsson, K.H.; Olsson, A.C.; Müller, S.; Hensel, O. Deep Learning and Machine Vision Approaches for Posture Detection of Individual Pigs. Sensors 2019, 19.

- Farahnakian, F.; Heikkonen, J.; Björkman, S. Multi-pig Pose Estimation Using DeepLabCut. 2021 11th International Conference on Intelligent Control and Information Processing (ICICIP), 2021, pp. 143–148.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition, 2015.

- Mathis, A.; Mamidanna, P.; Cury, K.; Abe, T.; Murthy, V.; Mathis, M.; Bethge, M. DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nature Neuroscience 2018, 21.

- Zheng, C.; Wu, W.; Chen, C.; Yang, T.; Zhu, S.; Shen, J.; Kehtarnavaz, N.; Shah, M. Deep Learning-Based Human Pose Estimation: A Survey, 2020.

- Toshev, A.; Szegedy, C. DeepPose: Human Pose Estimation via Deep Neural Networks. 2014 IEEE Conference on Computer Vision and Pattern Recognition; IEEE, 2014.

- Dang, Q.; Yin, J.; Wang, B.; Zheng, W. Deep learning based 2D human pose estimation: A survey. Tsinghua Science and Technology 2019, 24, 663–676.

- Fang, H.S.; Xie, S.; Tai, Y.W.; Lu, C. RMPE: Regional Multi-person Pose Estimation. 2017 IEEE International Conference on Computer Vision (ICCV), 2017, pp. 2353–2362.

- Nasirahmadi, A.; Edwards, S.A.; Sturm, B. Implementation of machine vision for detecting behaviour of cattle and pigs. Livestock Science 2017, 202, 25–38.

- Mahfuz, S.; Mun, H.S.; Dilawar, M.A.; Yang, C.J. Applications of Smart Technology as a Sustainable Strategy in Modern Swine Farming. Sustainability 2022, 14.

- Chung, Y.; Kim, H.; Lee, H.; Park, D.; Jeon, T.; Chang, H.H. A Cost-Effective Pigsty Monitoring System Based on a Video Sensor. KSII Transactions on Internet and Information Systems 2014, 8, 1481–1498.

- Ott, S.; Moons, C.; Kashiha, M.A.; Bahr, C.; Tuyttens, F.; Berckmans, D.; Niewold, T.A. Automated video analysis of pig activity at pen level highly correlates to human observations of behavioural activities. Livestock Science 2014, 160, 132–137.

- Kashiha, M.A.; Bahr, C.; Ott, S.; Moons, C.; Niewold, T.A.; Tuyttens, F.; Berckmans, D. Automatic monitoring of pig locomotion using image analysis. Livestock Science 2014, 159, 141–148.

- Ahrendt, P.; Gregersen, T.; Karstoft, H. Development of a real-time computer vision system for tracking loose-housed pigs. Computers and Electronics in Agriculture 2011, 76, 169–174.

- Oczak, M.; Maschat, K.; Berckmans, D.; Vranken, E.; Baumgartner, J. Automatic estimation of number of piglets in a pen during farrowing, using image analysis. Biosystems Engineering 2016, 151, 81–89.

- Wutke, M.; Heinrich, F.; Das, P.P.; Lange, A.; Gentz, M.; Traulsen, I.; Warns, F.K.; Schmitt, A.O.; Gültas, M. Detecting Animal Contacts—A Deep Learning-Based Pig Detection and Tracking Approach for the Quantification of Social Contacts. Sensors 2021, 21.

- Psota, E.T.; Schmidt, T.; Mote, B.; Pérez, L.C. Long-Term Tracking of Group-Housed Livestock Using Keypoint Detection and MAP Estimation for Individual Animal Identification. Sensors 2020, 20.

- Zhang, Y.; Cai, J.; Xiao, D.; Li, Z.; Xiong, B. Real-Time Sow Behavior Detection Based on Deep Learning. Computers and Electronics in Agriculture 2019, 163.

- Liu, C.; Zhou, H.; Cao, J.; Guo, X.; Su, J.; Wang, L.; Lu, S.; Li, L. Behavior Trajectory Tracking of Piglets Based on DLC-KPCA. Agriculture 2021, 11, 1–22.

- Lao, F.; Brown-Brandl, T.; Stinn, J.; Liu, K.; Teng, G.; Xin, H. Automatic recognition of lactating sow behaviors through depth image processing. Computers and Electronics in Agriculture 2016, 125, 56–62.

- Yang, A.; Huang, H.; Zhu, X.; Yang, X.; Chen, P.; Li, S.; Xue, Y. Automatic recognition of sow nursing behaviour using deep learning-based segmentation and spatial and temporal features. Biosystems Engineering 2018.

- Leonard, S.; Xin, H.; Brown-Brandl, T.; Ramirez, B. Development and application of an image acquisition system for characterizing sow behaviors in farrowing stalls. Computers and Electronics in Agriculture 2019, 163, 104866.

- Küster, S.; Nolte, P.; Meckbach, C.; Stock, B.; Traulsen, I. Automatic behavior and posture detection of sows in loose farrowing pens based on 2D-video images. Frontiers in Animal Science 2021, 64.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 6517–6525.

- Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Advances in Neural Information Processing Systems 28 (NIPS 2015); Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R., Eds.; 2015; pp. 91–99.

- Basodi, S.; Ji, C.; Zhang, H.; Pan, Y. Gradient amplification: An efficient way to train deep neural networks. Big Data Mining and Analytics 2020, 3, 196–207.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications, 2017.

- Debnath, B.; O’Brien, M.; Yamaguchi, M.; Behera, A. Adapting MobileNets for mobile based upper body pose estimation. 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), 2018, pp. 1–6.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions, 2016.

- Huang, Y.; Cheng, Y.; Bapna, A.; Firat, O.; Chen, M.X.; Chen, D.; Lee, H.; Ngiam, J.; Le, Q.V.; Wu, Y.; Chen, Z. GPipe: Efficient Training of Giant Neural Networks using Pipeline Parallelism, 2018.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks, 2019.

- Chen, L.C.; Yang, Y.; Wang, J.; Xu, W.; Yuille, A.L. Attention to Scale: Scale-Aware Semantic Image Segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

- Lauer, J.; Zhou, M.; Ye, S.; Menegas, W.; Nath, T.; Rahman, M.M.; Di Santo, V.; Soberanes, D.; Feng, G.; Murthy, V.N.; Lauder, G.; Dulac, C.; Mathis, M.W.; Mathis, A. Multi-animal pose estimation and tracking with DeepLabCut. bioRxiv, 2021.

- Neverova, N.; Wolf, C.; Taylor, G.W.; Nebout, F. Multi-scale Deep Learning for Gesture Detection and Localization. Computer Vision – ECCV 2014 Workshops; Agapito, L., Bronstein, M.M., Rother, C., Eds.; Springer International Publishing: Cham, 2015; pp. 474–490.

- Eigen, D.; Fergus, R. Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 2650–2658.

- Farabet, C.; Couprie, C.; Najman, L.; LeCun, Y. Learning Hierarchical Features for Scene Labeling. IEEE Transactions on Pattern Analysis and Machine Intelligence 2013, 35, 1915–1929.

- Wang, L.; Yin, B.; Guo, A.; Ma, H.; Cao, J. Skip-Connection Convolutional Neural Network for Still Image Crowd Counting. Applied Intelligence 2018, 48, 3360–3371.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. International Conference on Learning Representations, 2014.

- Insafutdinov, E.; Pishchulin, L.; Andres, B.; Andriluka, M.; Schiele, B. DeeperCut: A Deeper, Stronger, and Faster Multi-Person Pose Estimation Model. CoRR 2016, abs/1605.03170.

| | EfficientNet | MobileNet | ResNet101 | ResNet50 | DLCRNet |
|---|---|---|---|---|---|
| Train error | 12.71 | 16.28 | 5.92 | 6.97 | 8.12 |
| Test error | 34.98 | 24.12 | 28.71 | 37.21 | 33.48 |
| Train error with p-cutoff | 9.12 | 12.2 | 4.72 | 4.96 | 5.04 |
| Test error with p-cutoff | 19.95 | 16.72 | 18.6 | 19.12 | 21.66 |
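To compare the models on the train/test error table's own numbers, the short script below computes how much the p-cutoff lowers each model's test error and which model has the lowest test error. Only the table values are used, in the table's units; the dictionary and function names are illustrative.

```python
# Test errors copied from the train/test error table.
test_error = {
    "EfficientNet": 34.98,
    "MobileNet": 24.12,
    "ResNet101": 28.71,
    "ResNet50": 37.21,
    "DLCRNet": 33.48,
}
test_error_pcutoff = {
    "EfficientNet": 19.95,
    "MobileNet": 16.72,
    "ResNet101": 18.6,
    "ResNet50": 19.12,
    "DLCRNet": 21.66,
}

def relative_reduction(before: float, after: float) -> float:
    """Percentage by which the error drops from `before` to `after`."""
    return 100.0 * (before - after) / before

for model in test_error:
    drop = relative_reduction(test_error[model], test_error_pcutoff[model])
    print(f"{model:>12}: {test_error[model]:6.2f} -> "
          f"{test_error_pcutoff[model]:6.2f} ({drop:4.1f}% lower)")

best_raw = min(test_error, key=test_error.get)
best_pcut = min(test_error_pcutoff, key=test_error_pcutoff.get)
print("Lowest test error:", best_raw, "| with p-cutoff:", best_pcut)
```

On these numbers MobileNet has the lowest test error both with and without the p-cutoff, while ResNet50 benefits most from it (about a 48.6% relative reduction).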

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).