1. Introduction

Over the past 20 years, Light Detection and Ranging (LiDAR) technology has developed rapidly. As an active remote sensing technology, LiDAR emits laser pulses and receives the signals reflected from objects, quickly and accurately obtaining the distance, location, reflectance, and other characteristics of surrounding objects [1]. However, a laser point cloud cannot capture the spectral information of target objects and is monochromatic, which hinders processing and interpretation. Conversely, optical images capture rich spectral information and texture details of the land surface, which enables rapid identification of surface features and provides better visual quality. Point clouds and optical images are therefore complementary representations of target objects. By registering and fusing three-dimensional (3D) point cloud data with two-dimensional (2D) image data [2], a colored point cloud with rich texture details can be obtained, enhancing the ability to discriminate between different objects. Colored point clouds can be widely applied in remote sensing fields such as 3D urban modeling, smart cities, urban planning, resource utilization, environmental monitoring, and disaster assessment.

Current mobile measurement systems are mainly of two types, airborne and vehicle-mounted, and integrate multiple devices such as LiDAR, cameras, the Global Positioning System (GPS), and an Inertial Measurement Unit (IMU). Although these systems achieve relatively high measurement accuracy, they are costly and complex. Therefore, in this article we establish an automatic point cloud coloring method for an integrated LiDAR and GoPro system. The aim is to color the point cloud automatically from video imagery without a position and orientation system (POS) or IMU. During the experiments, we encountered three key issues:

- (1) The video images contain blurred pixels because of significant deformation, motion blur, and trailing. Corresponding points between adjacent images must therefore be selected reliably for relative orientation.

- (2) The ground mobile LiDAR system without a POS is a loosely coupled integrated system. A specific and effective registration strategy is required to colorize the point cloud automatically from the video images.

- (3) During point cloud coloring, each 3D point corresponds to multiple video images. Obtaining a uniform and realistic color for each point is the third key issue addressed in this article.

Based on these three key issues, the remainder of this introduction briefly reviews related work and outlines our methods and processes.

1.1. Selecting reliable corresponding points

GoPro cameras are not professional surveying cameras. When they are used for mobile measurement, nonlinear distortion, motion blur, edge blurring, and other phenomena often occur in the video images. Therefore, we first calibrate the GoPro camera to compensate for nonlinear distortion. Typically, a distortion model is introduced into the central projection imaging equation, and correction coefficients are calculated from control points or by other methods to correct the image [3]. Brown [4] proposed the well-known Brown model in 1971, which includes radial and tangential distortion, both of which are nonlinear. Melen and Balchen [5] subsequently proposed an additional parameter to compensate for the linear distortion caused by non-perpendicular horizontal and vertical image axes, although this type of distortion is generally negligible [6]. Fraser [7] considered another type of distortion, prism distortion, which is mainly caused by imperfect lens design and manufacturing and can be compensated for by adding a linear term to the radial and tangential distortion models [8]. Building on these models, Gao et al. [9] proposed a tangential distortion model with higher-order and cross terms, which is suitable for more complex optical distortions. Among these types of distortion, radial and tangential distortion have a much greater impact than the others [10,11]. Therefore, in this article we use radial and tangential distortion models to calibrate the GoPro action camera from chessboard images extracted from video streams, effectively reducing the nonlinear distortion of the images.

After calibration, the video images may still suffer from trailing and edge blur, which greatly affect the matching of corresponding points. Therefore, we adopt feature matching [12] to select corresponding points. Common feature point extraction methods include the Moravec operator, the Harris operator [13], the Förstner operator, the Scale-Invariant Feature Transform (SIFT) [14], Speeded-Up Robust Features (SURF) [15], and Oriented FAST and Rotated BRIEF (ORB) [16]. Although the Moravec operator has a relatively simple extraction process, it performs poorly on edges and noise and requires manually set empirical thresholds. The Förstner operator is accurate and fast, but the algorithm is complex and difficult to implement, and repeated experiments are needed to determine the window of interest and the thresholds [17]. Harris is a signal-based point feature extraction operator [18] that extracts various types of corner points with high accuracy and reliability [19]. SIFT is a feature-based matching method with strong matching ability [20], stable features, and invariance to rotation, scale, and brightness; however, it is susceptible to noise and runs slowly. SURF improves feature extraction and description with techniques such as integral images and box filters [21], which convert the convolution of the image with a template into a few additions and subtractions [22]. This makes SURF more efficient, with detection more than three times as fast as SIFT. ORB uses Oriented FAST [23] for feature extraction to address speed and Rotated BRIEF [24] for feature description to reduce redundancy in the descriptors. ORB therefore combines speed with accuracy and is relatively stable; however, it is not sufficiently robust to rotation and scale changes. Based on this analysis, this article uses SURF matching combined with a distance restriction and random sample consensus (RANSAC). SURF is used for coarse matching of corresponding points; next, matches whose connecting lines are excessively long are deleted; finally, RANSAC eliminates the remaining mismatches to achieve precise matching. After these three steps, accurate corresponding points located in the central region are retained.

1.2. Registration strategy

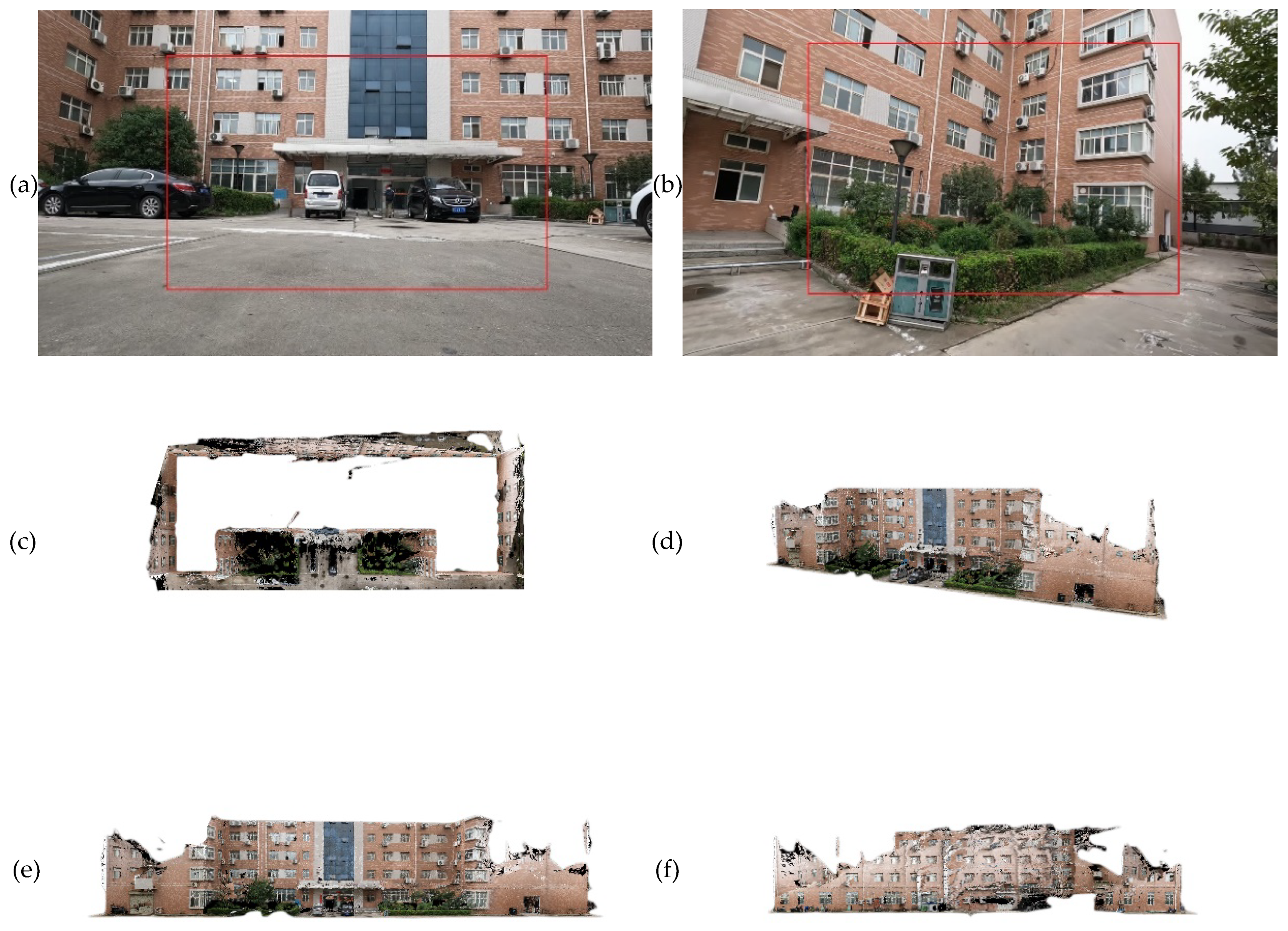

The video stream can be sliced into multiple sequential images, so this article focuses on the automatic registration between multiple sequential images and the point cloud. Without a POS or IMU, it is challenging to obtain the exterior orientation elements of each image quickly and accurately. Many methods exist for registering a point cloud with multiple images, but most are based on direct registration with an image-based 3D point cloud. Song and Liu [25] proposed a method that generates an image-based 3D point cloud from sequential images and obtains accurate exterior orientation elements for each image by registering the image-based point cloud with the LiDAR point cloud. Liu et al. [26] extended this approach by using the 3D-SIFT algorithm to extract feature points from both the LiDAR point cloud and the image-based point cloud, achieving precise registration of the two with the SICP algorithm. Generating an image-based point cloud is a feasible solution, but it requires high-quality image data, and the above methods usually use digital cameras. In this study, the video images captured by the GoPro camera often suffer from large boundary deformation, unstable interior orientation elements, and blurred pixels. As a result, the image-based point cloud contains many holes and deformations, as shown in Figure 1, and its quality is clearly poor. Registering it with the LiDAR point cloud is therefore not a suitable basis for colorization. Instead, we adopt a 1-to-N registration strategy: the point cloud is first registered with the first image to obtain its exterior orientation elements, and the exterior orientation elements of the remaining sequential images are then obtained through relative and absolute orientation.

1.2.1. Obtain the exterior orientation elements of the first image

Achieving high-precision automatic registration of the point cloud and the first image is therefore a key issue. In recent years, research on this problem has focused on feature-based registration methods. These methods generally project the point cloud onto a 2D plane to generate an intensity image or a depth image, extract stable and distinctive feature points from the images, and match features by computing their similarity [27]. Feature-based registration reduces the analysis of the entire image to the analysis of selected image features, which simplifies the process and provides good invariance to grayscale changes and occlusion. Fang [28] projected point cloud data with different projection methods to generate point cloud intensity images and then extracted feature points with the SIFT algorithm, achieving automatic registration between the point cloud intensity image and the optical image. Safdarinezhad et al. [29] used point cloud intensity and depth to generate an Optical Consistent LiDAR Product (OCLP) and registered it automatically with high-resolution satellite images using SIFT features. Pyeon et al. [30] performed coarse matching based on point cloud intensity images and the RANSAC algorithm, followed by fine matching with the iterative closest point (ICP) algorithm, which greatly improved efficiency. Ding et al. [31] used point and line feature constraints to register point cloud intensity images with aerial images automatically. Fan et al. [32] extracted the corner points of windows and doors from point cloud intensity images and optical images and matched them automatically with the correlation coefficient method. In these methods, converting the point cloud into image data reduces accuracy and requires the point cloud to be relatively flat with little noise. Therefore, in this paper we propose a registration method based on normalized Zernike moments, which is also a point feature-based method. Low-order Zernike moments mainly describe the overall shape of an image [33], whereas high-order Zernike moments mainly reflect texture details and other fine information [34]. Normalized Zernike moments capture features in multiple dimensions [35] and achieve good results even for low-quality GoPro action camera images and point cloud intensity images.

1.2.2. Obtain the exterior orientation elements of sequential images

After the first image has been registered with the point cloud, relative and absolute orientation are required to transfer exterior orientation elements to the remaining sequential images. Relative orientation computes the rotation matrix and displacement vector between the left and right images of a stereo pair [36] from several corresponding points, so that corresponding rays intersect [37]. Performing relative orientation continuously along the sequence therefore yields the relative positions of all stereo image pairs. In traditional photogrammetry, continuous relative orientation relies on initial assumptions: the three angular elements of the rotation matrix are initialized to 0, the first component of the baseline vector is set to 1, and the other two components are set to small values, which assumes that the stereo pairs were taken under approximately vertical photography [38]. However, digital close-range photogrammetry, including the GoPro camera setup used in this paper, mainly uses multi-baseline convergent photography. Moreover, our images are captured from video streams, so the right image of a stereo pair may be rotated by an arbitrary angle relative to the left image, and the angular elements and displacement vector can take complex and diverse forms. Traditional relative orientation methods therefore struggle to obtain correct results [39]. Many methods have been proposed to address this problem. Zhou et al. [40] proposed a hybrid genetic algorithm that represents the rotation matrix with unit quaternions and converges quickly without initial values. Li et al. [41] computed the essential matrix with the normalized eight-point algorithm and used Gauss-Newton iteration to solve for the two orthonormal matrices obtained by decomposing it, which improves the accuracy of relative orientation. Following this approach, we perform relative orientation based on essential matrix decomposition and nonlinear optimization: the essential matrix is first estimated, and its singular value decomposition (SVD) yields initial values [42]; nonlinear optimization then refines them to obtain an accurate solution.

The stereo model obtained from relative orientation is based on the image-space coordinate system, and its scale is arbitrary. To determine the true position of the stereo model in the point cloud coordinate system, the final step is to determine the transformation relationship between the image-space coordinate system and the point cloud coordinate system, that is, absolute orientation.

1.3. Realistic and accurate point cloud coloring method

In point cloud coloring, a LiDAR point often corresponds to multiple video images. Because the video images used in this paper are of low quality, with blurry pixels, trailing, and significant deformation, coloring the point cloud both realistically and uniformly is challenging. Vechersky et al. [43] modeled the set of colors corresponding to each 3D point as a Gaussian distribution: the mean and covariance of the weighted Gaussian distribution of the color set are estimated, and the mean is assigned as the color of the 3D point. Building on this, this paper proposes a Gaussian distribution point cloud coloring method with a center area restriction. We assume that the color set of each 3D point follows a Gaussian distribution; in addition, the positions of the contributing pixels are analyzed statistically, and only pixels within the central area of the image are treated as valid for coloring. This effectively avoids coloring with blurred edge pixels.
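The coloring step can be sketched as follows, assuming NumPy. This is not the paper's implementation: the central-area rule (a centered box spanning `center_fraction` of the image width and height) and the Gaussian fall-off weights controlled by `sigma` are hypothetical stand-ins for the statistics-based restriction and weighting, which this section does not specify.

```python
import numpy as np


def fuse_point_color(colors, pixels, image_size, center_fraction=0.6, sigma=0.25):
    """Fuse the colors one 3D point receives from several video frames.

    colors: (N, 3) RGB samples; pixels: (N, 2) pixel positions (u, v) of those
    samples; image_size: (width, height). Returns (mean, covariance) of the
    weighted Gaussian fitted to the valid samples, or None if none are valid.
    center_fraction and sigma are hypothetical tuning parameters.
    """
    colors = np.asarray(colors, dtype=float)
    pixels = np.asarray(pixels, dtype=float)
    w, h = image_size
    # Offsets from the image center, normalized by image width and height.
    du = (pixels[:, 0] - w / 2) / w
    dv = (pixels[:, 1] - h / 2) / h
    # Center-area restriction: keep only samples inside a centered box.
    valid = (np.abs(du) <= center_fraction / 2) & (np.abs(dv) <= center_fraction / 2)
    if not valid.any():
        return None
    c = colors[valid]
    # Hypothetical weights: samples nearer the image center count more.
    wts = np.exp(-(du[valid] ** 2 + dv[valid] ** 2) / (2 * sigma ** 2))
    wts /= wts.sum()
    mean = wts @ c                        # weighted Gaussian mean, used as the point color
    diff = c - mean
    cov = (wts[:, None] * diff).T @ diff  # weighted covariance of the color set
    return mean, cov


mean, cov = fuse_point_color(
    colors=[[100, 0, 0], [110, 0, 0], [0, 255, 0]],
    pixels=[[490, 250], [510, 250], [5, 5]],  # the third sample lies near a corner
    image_size=(1000, 500),
)
```

In the example, the sample near the image corner is rejected and the two central samples receive equal weight, so the fused color is their average, (105, 0, 0).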

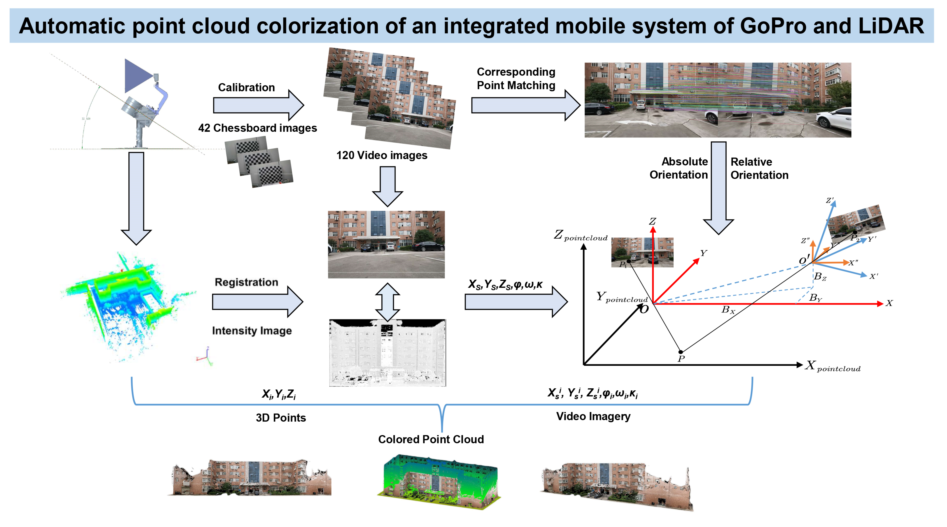

Given the above issues, this article is organized as follows. Section 1 introduces current research on registration between point clouds and images. Section 2 briefly introduces the ground mobile LiDAR system and the experimental data. Section 3 focuses on the point cloud coloring method based on video images without a POS: Section 3.1 addresses the nonlinear distortion of GoPro cameras, mainly using radial and tangential distortion models for correction; Section 3.2 describes the principle of automatic registration based on normalized Zernike moments, proceeding from Zernike polynomials to Zernike moments and then to the derivation of normalized Zernike moments; Section 3.3 explains the automatic registration of the point cloud and sequential images, including the choice of corresponding point matching method, relative orientation based on essential matrix decomposition and nonlinear optimization, and absolute orientation; and Section 3.4 describes the steps and strategies for coloring the point cloud in detail. Section 4 presents the experimental results and analysis, Section 5 discusses the results, and Section 6 concludes and outlines future work. The Graphical Abstract of the article is shown in Figure 2.

2. System and data

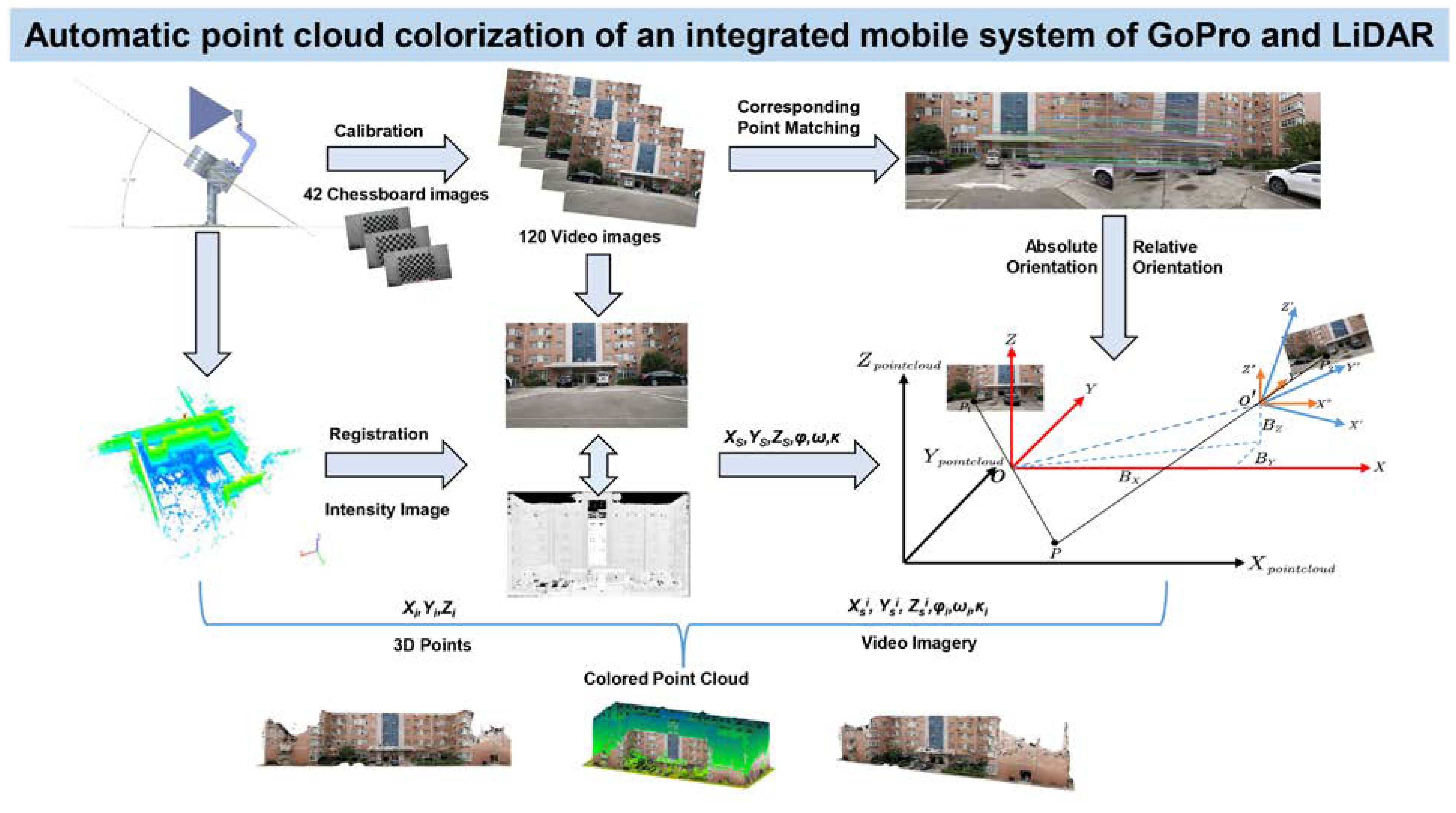

As shown in Figure 3, we built a simple ground mobile measurement system that integrates a LiDAR and a GoPro camera. To describe where the action camera is mounted, we take the center of the LiDAR as the origin, the horizontal direction as the y-axis, the vertical direction as the z-axis, and the x-axis perpendicular to the page and pointing outward. In this coordinate system, the lens center of the GoPro camera is at (20, 145.142, 201.825) cm, and the center of the lens field of view (FOV) points parallel to the y-axis and perpendicular to the x-axis.

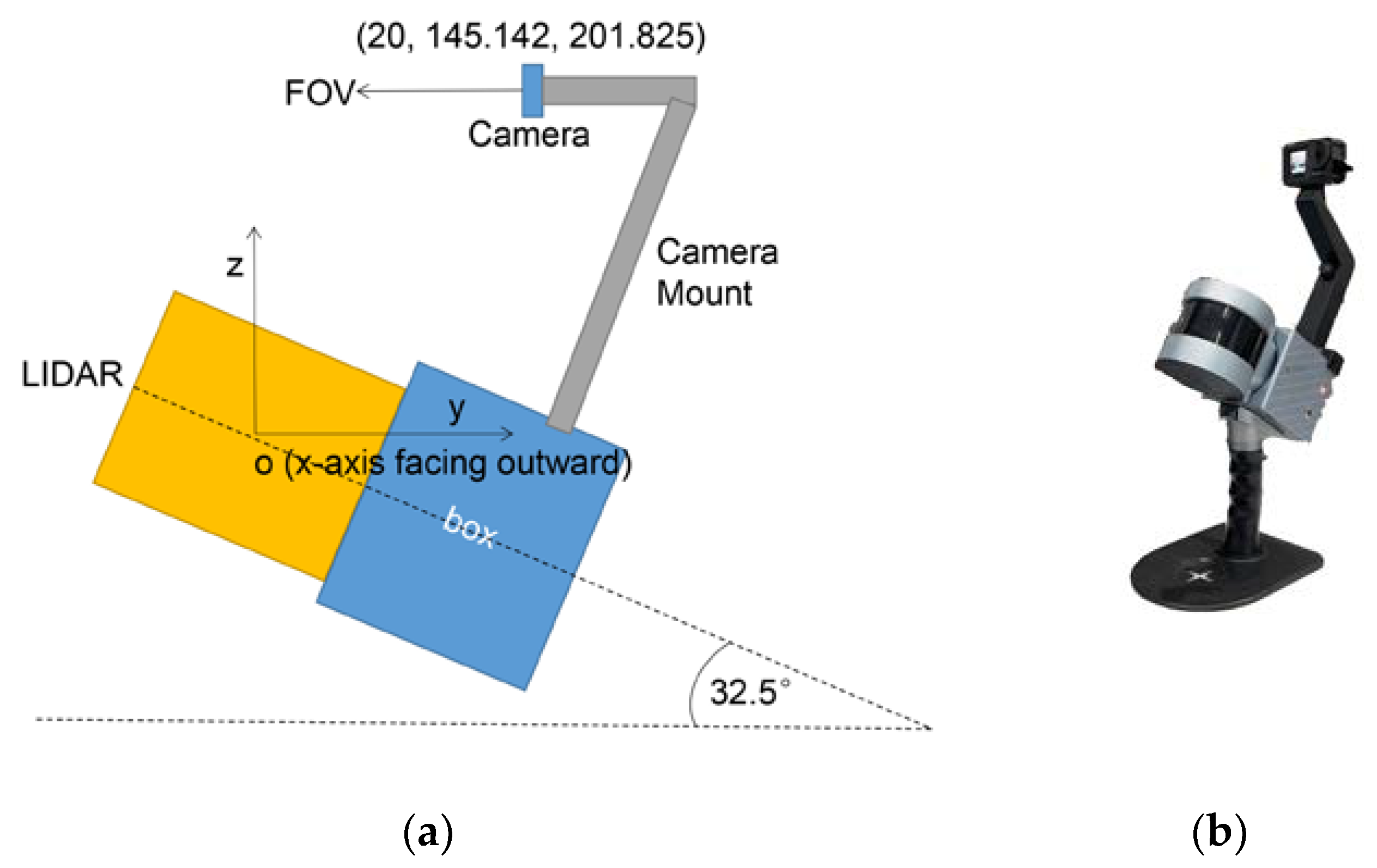

As shown in Figure 3, the central axis of the LiDAR transmitter is fixed at an inclination of 32.5° to the horizontal ground. While the system moves, the LiDAR transmitter rotates continuously about this central axis. At the same time, the GoPro action camera rotates around the camera mount to capture sufficient data for point cloud coloring. The initial point cloud and some of the captured video images are shown in Figure 4.

3. Methods

3.1. Camera Calibration

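The GoPro camera can be described by the pinhole camera model. Under this model, the transformation between the image pixel coordinate system and the camera coordinate system, together with its abbreviated form, is as follows:

$$
\lambda \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
= \begin{bmatrix} f/d_x & 0 & c_x \\ 0 & f/d_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix},
\qquad \lambda\,\mathbf{p} = \mathbf{K}\,\mathbf{P}_c
$$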

Where: p = (x, y): the pixel coordinates of point P in the image plane

cx, cy: the coordinates of the principal point in the pixel coordinate system

f: the camera focal length

dx, dy: the physical dimensions of a pixel in the x and y directions

λ: the scaling factor (equal to Zc)

Xc, Yc, Zc: the coordinates of point P in the camera coordinate system

Because the camera coordinate system and the world coordinate system are related by a 3D similarity transformation, the transformation can be written in abbreviated form as follows:

$$
\begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix}
= k\,\mathbf{R}\begin{bmatrix} X_w \\ Y_w \\ Z_w \end{bmatrix} + \mathbf{T}
$$

Where: R: the rotation matrix

T: the translation vector

Xw, Yw, Zw: the world coordinates of point P

k: the scaling factor between the two coordinate systems
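As a minimal sketch of the two transformations above (NumPy, lens distortion ignored), a world point can be projected to pixel coordinates as follows. It assumes the similarity transform takes the form P_c = k R P_w + T; the function name and the numeric values in the note are illustrative only.

```python
import numpy as np


def project_world_point(P_w, R, T, k, f, dx, dy, cx, cy):
    """Project a world point to pixel coordinates with an ideal pinhole camera.

    Assumes the similarity transform P_c = k * R @ P_w + T (world to camera)
    and ignores lens distortion. Returns the pixel coordinates (x, y).
    """
    P_c = k * np.asarray(R, float) @ np.asarray(P_w, float) + np.asarray(T, float)
    K = np.array([[f / dx, 0.0, cx],
                  [0.0, f / dy, cy],
                  [0.0, 0.0, 1.0]])
    xyw = K @ P_c                  # equals lambda * (x, y, 1) with lambda = Z_c
    if xyw[2] <= 0:
        raise ValueError("point is behind the camera")
    return xyw[:2] / xyw[2]
```

For example, with f = 3 mm, dx = dy = 1.5 µm, (cx, cy) = (960, 540), an identity pose, and k = 1, the point (1, 0, 5) projects to (1360, 540), because f/dx = 2000 and 2000 · 1/5 + 960 = 1360.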

When shooting with a GoPro action camera, significant non-linear distortion may occur.

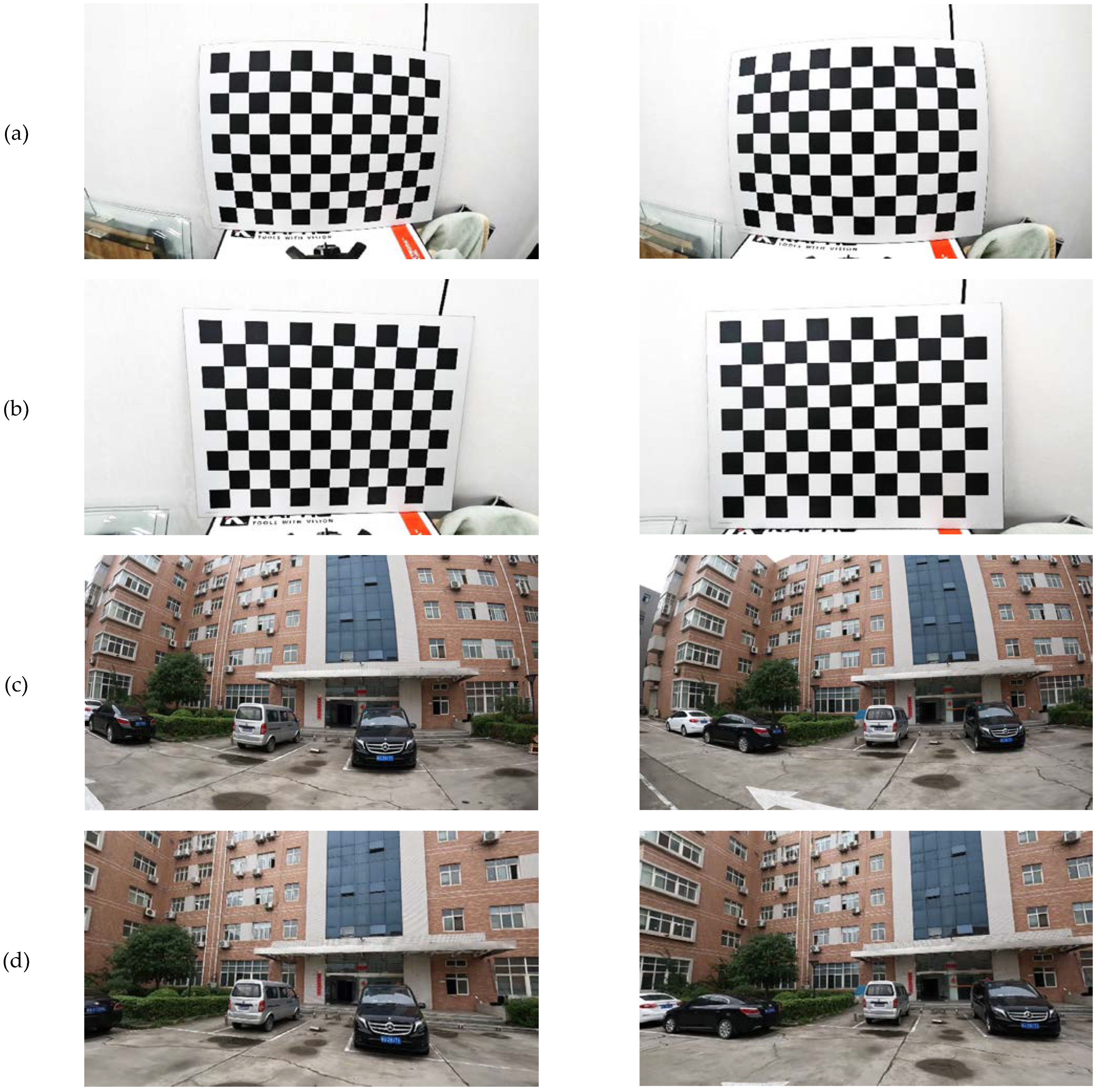

Figure 5a shows some of the chessboard images captured from the video stream; the chessboard is visibly distorted toward the image borders, indicating a substantial level of distortion.

Figure 5c shows video images in which the straight edges of houses appear curved, indicating significant deformation.

Manufacturing errors in the lens of the action camera affect the propagation of light through it, resulting in radial distortion. Radial distortion can be approximated by the first few terms of a Taylor series expansion around r = 0, where k1, k2, k3 are the radial distortion coefficients and r² = x² + y². Typically, only the first- and second-order terms need to be considered. The normalized coordinates after radial distortion are as follows:

$$
x_{\mathrm{r}} = x\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6), \qquad
y_{\mathrm{r}} = y\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6)
$$

In addition to the radial distortion introduced by the shape of the lens, the optical centers of the lens elements are not perfectly collinear after assembly, which introduces tangential distortion described by two additional parameters, the tangential distortion coefficients p1 and p2. The normalized coordinates after tangential distortion are as follows:

$$
x_{\mathrm{t}} = x + 2 p_1 x y + p_2 (r^2 + 2x^2), \qquad
y_{\mathrm{t}} = y + p_1 (r^2 + 2y^2) + 2 p_2 x y
$$

Based on this distortion model, we applied Zhang's camera calibration method to 42 images of a 12 × 9 chessboard to obtain the camera parameters and distortion coefficients listed in Table 1. The images before and after calibration are shown in Figure 5b,d.
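The radial and tangential terms are usually combined additively, as in Brown's model and in OpenCV's five-coefficient distortion model. The NumPy sketch below applies that combined model to normalized coordinates and inverts it by fixed-point iteration, which is one common way to undistort points; the coefficient values in the example are hypothetical, not the calibrated values of Table 1.

```python
import numpy as np


def distort(x, y, k1, k2, k3, p1, p2):
    """Apply radial (k1, k2, k3) and tangential (p1, p2) distortion to
    normalized image coordinates (x, y)."""
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return x_d, y_d


def undistort(x_d, y_d, k1, k2, k3, p1, p2, iters=20):
    """Invert `distort` by fixed-point iteration (adequate for moderate distortion)."""
    x, y = x_d, y_d
    for _ in range(iters):
        r2 = x * x + y * y
        radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
        # Tangential offset evaluated at the current estimate.
        dx_t = 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
        dy_t = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
        x = (x_d - dx_t) / radial
        y = (y_d - dy_t) / radial
    return x, y


coeffs = dict(k1=-0.1, k2=0.01, k3=0.0, p1=0.001, p2=-0.0005)  # hypothetical values
xd, yd = distort(0.3, -0.2, **coeffs)
x, y = undistort(xd, yd, **coeffs)  # recovers (0.3, -0.2) to high precision
```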

3.2. Registration based on normalized Zernike moments

Registration based on normalized Zernike moments is essentially point feature registration. First, the point cloud data are projected to generate a point cloud intensity image, as shown in Figure 6.

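Zernike [44] introduced in 1934 a set of complex-valued functions defined on the unit circle. These functions are complete and orthogonal, so they can represent any square-integrable function inside the unit disk. The Zernike polynomials can be expressed as follows:

$$
V_{pq}(x, y) = V_{pq}(\rho, \theta) = R_{pq}(\rho)\,e^{jq\theta}
$$

Where: ρ: the length of the vector from point (x, y) to the origin

θ: the counterclockwise angle between vector ρ and the x-axis

j: the imaginary unit

p, q: the order and repetition of the Zernike polynomial (p is a non-negative integer, q is an integer with |q| ≤ p, and p − |q| is even)

Rpq(ρ): a real-valued radial polynomial:

$$
R_{pq}(\rho) = \sum_{s=0}^{(p-|q|)/2} (-1)^s
\frac{(p-s)!}{s!\left(\frac{p+|q|}{2}-s\right)!\left(\frac{p-|q|}{2}-s\right)!}\,\rho^{p-2s}
$$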

Zernike polynomials are orthogonal over the unit disk:

$$
\iint_{x^2+y^2\le 1} V_{nm}^{*}(x, y)\,V_{pq}(x, y)\,dx\,dy = \frac{\pi}{p+1}\,\delta_{np}\,\delta_{mq}
$$

where * denotes complex conjugation and δ is the Kronecker delta.

Because Zernike polynomials are orthogonal and complete, any image f(x, y) within the unit circle can be expanded as follows:

$$
f(x, y) = \sum_{p=0}^{\infty} \sum_{q} Z_{pq}\,V_{pq}(\rho, \theta)
$$

where Zpq is the Zernike moment of order p and repetition q. Zernike moments can be computed up to arbitrarily high order, and their magnitudes are rotation invariant; they are widely used as shape descriptors. The Zernike moment is defined as follows:

$$
Z_{pq} = \frac{p+1}{\pi} \iint_{x^2+y^2\le 1} f(x, y)\,V_{pq}^{*}(\rho, \theta)\,dx\,dy
$$

Note that Equation (11) mixes two coordinate systems: the image f is expressed in Cartesian coordinates, whereas the Zernike polynomial is expressed in polar coordinates, so coordinates must be converted during computation. For discrete digital images, the integral is replaced by a summation:

$$
Z_{pq} = \frac{p+1}{\pi} \sum_{x} \sum_{y} f(x, y)\,V_{pq}^{*}(\rho, \theta), \qquad x^2 + y^2 \le 1
$$

It can be shown that rotating an image changes only the phase of its Zernike moments, while their amplitude remains unchanged: if the image is rotated by an angle α, then $Z'_{pq} = Z_{pq}\,e^{-jq\alpha}$. The amplitude of the Zernike moments can therefore be used as a rotation-invariant feature of the image. However, Zernike moments are rotation invariant but not translation or scale invariant, so the image must be normalized beforehand. The image is normalized using standard (geometric) moments, defined as follows:

$$
m_{pq} = \sum_{x} \sum_{y} x^p y^q f(x, y)
$$

The image centroid $(\bar{x}, \bar{y}) = (m_{10}/m_{00},\, m_{01}/m_{00})$ is obtained from the standard moments, and moving it to the center of the unit circle makes the moments translation invariant. Here m00 represents the image “area”, and transforming the image to $f(x/a,\, y/a)$, where $a = \sqrt{\beta / m_{00}}$ and β is a predetermined constant, gives all images a consistent size. If the image is transformed to $g(x, y) = f(x/a + \bar{x},\, y/a + \bar{y})$, the normalized Zernike moments of the resulting image are invariant to translation, scale, and rotation. In summary, Zernike moments are region-based shape descriptors built on the orthogonal Zernike polynomials, which form a complete orthogonal set within the unit circle. Zernike moments are complex-valued, and their amplitudes are generally used to describe the shape of an object; a small set of Zernike moments can represent the shape features of a target well. Low-order moments describe the overall shape of the image target, whereas high-order moments describe its details. In this paper, high-order moments are used for registration, and the registration steps are as follows:

1. Project the 3D point cloud to generate the point cloud intensity image.

2. Use the Harris corner detection algorithm to extract corner features from both the point cloud intensity image and the video image.

3. Take the region formed by each feature point and its neighboring pixels as the “target image”, center it at the feature point, map it into the unit circle in polar coordinates, and resample its pixels onto the unit circle.

4. Calculate the zero-order standard moment and the Zernike moments of various orders of the target image, and normalize the Zernike moments.

5. Calculate the amplitudes of the Zernike moments, which serve as invariant features, as discussed above.

6. Construct Zernike moment vectors for the feature points in the point cloud intensity image and in the video image, respectively, from the normalized Zernike moment amplitudes of orders 2 to 4, as shown in Equation (16).

7. Perform coarse matching of the feature points based on Euclidean distance, and then match them based on the absolute difference between the two feature vectors: a pair of feature points is considered matched when their absolute difference is the smallest among all candidates. Automatic registration is then performed from the matched corresponding feature points.
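Steps 3 to 7 can be sketched in NumPy as below. Several details are assumptions: the patch is a square array whose pixel grid is mapped onto [-1, 1]², the descriptor uses all amplitudes |Zpq| with q ≥ 0 for orders 2 to 4 divided by m00 (Equation (16) is not reproduced here, so the exact components may differ), and only the final minimum-absolute-difference matching is shown, not the coarse Euclidean-distance stage. Harris detection and the point cloud projection are omitted.

```python
import numpy as np
from math import factorial


def radial_poly(p, q, rho):
    """Zernike radial polynomial R_pq(rho); needs |q| <= p and p - |q| even."""
    q = abs(q)
    out = np.zeros_like(rho)
    for s in range((p - q) // 2 + 1):
        coef = ((-1) ** s * factorial(p - s)
                / (factorial(s) * factorial((p + q) // 2 - s) * factorial((p - q) // 2 - s)))
        out += coef * rho ** (p - 2 * s)
    return out


def zernike_moment(patch, p, q):
    """Discrete Z_pq of a square patch whose pixel grid is mapped onto [-1, 1]^2;
    only pixels inside the unit circle contribute."""
    n = patch.shape[0]
    c = (2.0 * np.arange(n) - (n - 1)) / (n - 1)   # pixel centers mapped to [-1, 1]
    x, y = np.meshgrid(c, c)                        # x along columns, y along rows
    rho, theta = np.hypot(x, y), np.arctan2(y, x)
    inside = rho <= 1.0
    v_conj = radial_poly(p, q, rho) * np.exp(-1j * q * theta)
    return (p + 1) / np.pi * np.sum(patch[inside] * v_conj[inside])


def zernike_descriptor(patch, orders=(2, 3, 4)):
    """Amplitudes |Z_pq| for the given orders (q >= 0, p - q even), divided by m00.
    The component list and the m00 normalization are assumptions."""
    m00 = patch.sum()                               # zero-order standard moment
    amps = [abs(zernike_moment(patch, p, q))
            for p in orders for q in range(p % 2, p + 1, 2)]
    return np.array(amps) / m00


def match_min_abs_difference(desc_a, desc_b):
    """For each descriptor in desc_a, index of the desc_b descriptor with the
    smallest summed absolute difference."""
    cost = np.abs(desc_a[:, None, :] - desc_b[None, :, :]).sum(axis=2)
    return cost.argmin(axis=1)


rng = np.random.default_rng(0)
patches = [rng.random((31, 31)) for _ in range(5)]
desc_a = np.array([zernike_descriptor(p) for p in patches])
# The same patches, rotated by 90 degrees and listed in reverse order.
desc_b = np.array([zernike_descriptor(np.rot90(p)) for p in patches[::-1]])
print(match_min_abs_difference(desc_a, desc_b))  # [4 3 2 1 0]
```

Because a 90° rotation maps this pixel grid onto itself, the descriptors of the rotated patches equal those of the originals up to rounding, which illustrates the rotation invariance of the amplitudes.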

3.3. Registration of point cloud and sequential video images

3.3.1. Selecting reliable corresponding points

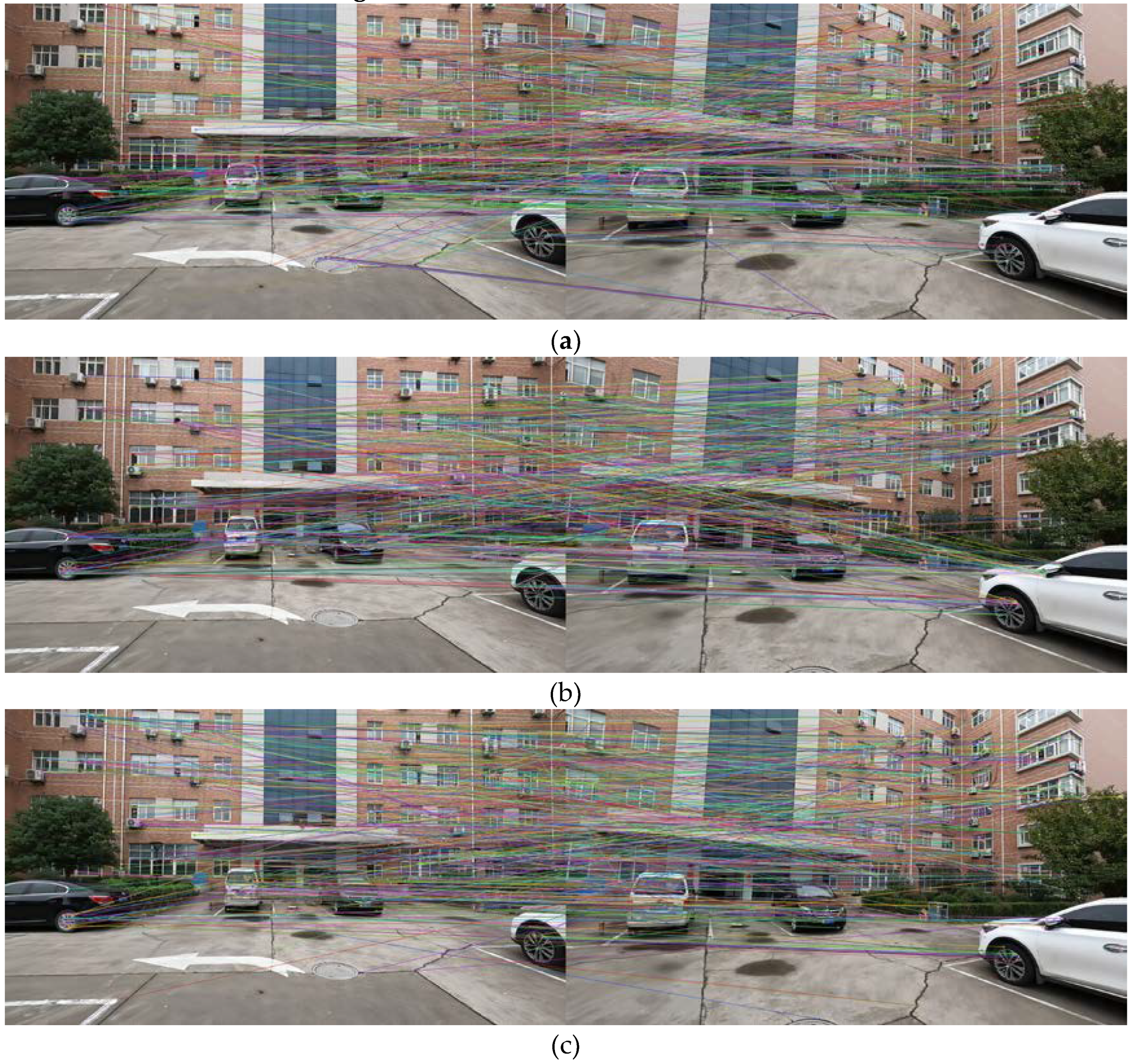

This article adopts a feature-based approach for selecting corresponding points. We first tested three algorithms, SIFT, ORB, and SURF; the results are shown in Figure 7.

For relative orientation, the main considerations are the number and accuracy of the selected corresponding points. Figure 7 shows that SIFT extracts sufficient corresponding points but with insufficient accuracy, ORB is more accurate but extracts fewer corresponding points, and SURF achieves both high accuracy and a sufficient number of corresponding points. Weighing accuracy against number, we chose SURF as the base algorithm and improved upon it. The corresponding point matching proceeds as follows:

- (1) SURF coarse matching. SURF is first used to coarsely match corresponding points in the stereo image pair.

- (2) Distance restriction. Because video images suffer from edge blur and trailing, a distance restriction is applied after SURF matching. The maximum length of the lines connecting corresponding points is computed, and only corresponding points whose connecting line is shorter than 0.6 times this maximum are retained. This filtering technique is used in remote sensing to discard corresponding points that lie too far apart.

- (3) RANSAC precise matching. Finally, the RANSAC [45] algorithm is applied after the distance restriction to remove incorrect matches.
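The distance restriction and RANSAC steps can be sketched in NumPy as follows, assuming the SURF matches are already available as two (N, 2) arrays of pixel coordinates. The RANSAC model here is a fundamental matrix estimated with the normalized eight-point algorithm and scored with the Sampson distance; the text does not state which model or threshold its RANSAC uses, so `thresh`, `iters`, and the model choice are assumptions. Only the 0.6 ratio comes from the text.

```python
import numpy as np


def distance_restriction(pts1, pts2, ratio=0.6):
    """Mask of matches whose connecting line is shorter than ratio * longest line."""
    lengths = np.linalg.norm(pts2 - pts1, axis=1)
    return lengths < ratio * lengths.max()


def _hartley_normalize(pts):
    """Translate points to zero mean and scale them to mean distance sqrt(2)."""
    c = pts.mean(axis=0)
    s = np.sqrt(2) / np.mean(np.linalg.norm(pts - c, axis=1))
    T = np.array([[s, 0, -s * c[0]], [0, s, -s * c[1]], [0, 0, 1.0]])
    return np.column_stack([pts, np.ones(len(pts))]) @ T.T, T


def eight_point(pts1, pts2):
    """Normalized eight-point estimate of F with x1^T F x2 = 0 (x1 left, x2 right)."""
    h1, T1 = _hartley_normalize(pts1)
    h2, T2 = _hartley_normalize(pts2)
    A = np.einsum("ni,nj->nij", h1, h2).reshape(-1, 9)
    F = np.linalg.svd(A)[2][-1].reshape(3, 3)
    U, S, Vt = np.linalg.svd(F)
    F = U @ np.diag([S[0], S[1], 0.0]) @ Vt      # enforce rank 2
    return T1.T @ F @ T2                          # undo the normalization


def sampson_error(F, pts1, pts2):
    """Squared Sampson distance of each match to the epipolar constraint."""
    x1 = np.column_stack([pts1, np.ones(len(pts1))])
    x2 = np.column_stack([pts2, np.ones(len(pts2))])
    Fx2 = x2 @ F.T                                # rows are F @ x2
    Ftx1 = x1 @ F                                 # rows are F.T @ x1
    num = np.sum(x1 * Fx2, axis=1) ** 2
    den = Fx2[:, 0] ** 2 + Fx2[:, 1] ** 2 + Ftx1[:, 0] ** 2 + Ftx1[:, 1] ** 2
    return num / den


def ransac_fundamental(pts1, pts2, thresh=1.0, iters=1000, seed=0):
    """RANSAC over eight-point samples; returns (F refit on inliers, inlier mask)."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(pts1), dtype=bool)
    with np.errstate(divide="ignore", invalid="ignore"):
        for _ in range(iters):
            idx = rng.choice(len(pts1), 8, replace=False)
            F = eight_point(pts1[idx], pts2[idx])
            inliers = sampson_error(F, pts1, pts2) < thresh ** 2
            if inliers.sum() > best.sum():
                best = inliers
    return eight_point(pts1[best], pts2[best]), best
```

In use, the stages would be chained: `keep = distance_restriction(p1, p2)`, then `ransac_fundamental(p1[keep], p2[keep])`. The final refit assumes that at least eight inliers survive.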

3.3.2. Relative orientation based on essential matrix decomposition and nonlinear optimization

The essential matrix [46] encodes the translation and rotation between two camera coordinate systems. When solving for this coordinate transformation, the essential matrix is typically computed first, and the rotation and translation parameters are then obtained by decomposing it. Our relative orientation follows the same route, combining essential matrix decomposition with nonlinear optimization.

First, we establish the relative orientation model shown in Figure 8. O-XYZ is the spatial rectangular coordinate system whose origin is the photographic center of the camera for the left image. O'-X'Y'Z' is the spatial rectangular coordinate system whose origin is the photographic center for the right image; its three rotation angles relative to the O-XYZ coordinate system are φ, ω, κ. O''-X''Y''Z'' is an auxiliary spatial rectangular coordinate system whose three axes are parallel to those of the O-XYZ coordinate system. OO' is the baseline, with displacement components BX, BY, BZ. Suppose that a ground point P has corresponding image points P1 and P2 in the left and right images, that the coordinates of P1 in the O-XYZ coordinate system are (x, y, z), that the coordinates of P2 in the O'-X'Y'Z' coordinate system are (x', y', z'), and that the coordinates of P2 in the O''-X''Y''Z'' auxiliary coordinate system are (x'', y'', z'').

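The coplanarity condition requires the baseline OO' and the rays OP1 and O'P2 to lie in one plane:

$$
F = \begin{vmatrix} B_X & B_Y & B_Z \\ x & y & z \\ x'' & y'' & z'' \end{vmatrix} = 0,
\qquad
\begin{bmatrix} x'' \\ y'' \\ z'' \end{bmatrix} = \mathbf{R}\begin{bmatrix} x' \\ y' \\ z' \end{bmatrix}
$$

Expanding the determinant gives:

$$
B_X\,(y z'' - z y'') - B_Y\,(x z'' - z x'') + B_Z\,(x y'' - y x'') = 0
$$

For any pair of corresponding image points in the stereo pair, Equation (21) can also be written as:

$$
\begin{bmatrix} x & y & z \end{bmatrix} [\mathbf{T}]_{\times}\,\mathbf{R} \begin{bmatrix} x' \\ y' \\ z' \end{bmatrix} = 0,
\qquad
[\mathbf{T}]_{\times} = \begin{bmatrix} 0 & -B_Z & B_Y \\ B_Z & 0 & -B_X \\ -B_Y & B_X & 0 \end{bmatrix}
$$

E = [T]×R is the essential matrix: [T]× contains the three translation parameters BX, BY, BZ, and R contains the nine direction cosines. The essential matrix therefore encodes the position and orientation of the right image relative to the left camera coordinate system.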

For a calibrated camera, the essential matrix E is generally computed in one of two ways. 1) Following Hartley et al. [47], the normalized eight-point algorithm is used to estimate the fundamental matrix F from multiple corresponding image points in the left and right images, and the essential matrix is then computed as $\mathbf{E} = \mathbf{K}_1^{\top}\mathbf{F}\mathbf{K}_2$, where K1 and K2 are the interior parameter matrices of the left and right cameras. In this paper they are equal, because the same GoPro camera captures both images. 2) The normalized camera coordinates of multiple corresponding image points in the left and right images are computed and corrected for lens distortion, and then substituted directly into Equation (22) to solve for the essential matrix.

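To extract the translation and rotation contained in the essential matrix, singular value decomposition (SVD) is applied to it:

$$
\mathbf{E} = \mathbf{U}\,\boldsymbol{\Sigma}\,\mathbf{V}^{\top}, \qquad \boldsymbol{\Sigma} \approx \mathrm{diag}(\sigma, \sigma, 0)
$$

The rotation matrix R and translation vector t can then be expressed as follows:

$$
\mathbf{R} = \mathbf{U}\mathbf{W}\mathbf{V}^{\top} \ \text{or}\ \mathbf{U}\mathbf{W}^{\top}\mathbf{V}^{\top},
\qquad \mathbf{t} = \alpha\,\mathbf{u}_3,
\qquad \mathbf{W} = \begin{bmatrix} 0 & -1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{bmatrix}
$$

where u3 is the third column of U (U and V are chosen with positive determinants so that R is a proper rotation) and α is any nonzero constant. As Equation (24) shows, there are four possible combinations of R and t: two rotations and two signs of t. To identify the correct one, we choose a pair of corresponding points and compute the 3D coordinates of the corresponding object point with each combination. Only the combination for which the object point lies in front of both cameras (positive Z coordinates in both the left and right camera coordinate systems) is correct; it provides the initial values of the rotation matrix R and translation vector t.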
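A NumPy sketch of the decomposition and the positive-depth check follows. It assumes the coplanarity convention above, in which left-camera coordinates equal t + R · right-camera coordinates (so E = [t]×R), and normalized camera coordinates with z = 1. As a small design choice, it counts positive-depth points over all supplied correspondences instead of just one; passing a single pair reduces it to the check described in the text.

```python
import numpy as np


def decompose_essential(E, p1, p2):
    """Recover (R, t) from E = [t]x R, assuming left = t + R @ right.

    p1, p2: (N, 3) normalized camera coordinates (z = 1) of corresponding
    points in the left and right images. Returns the candidate that puts the
    most points at positive depth in both cameras; t has unit length.
    """
    U, _, Vt = np.linalg.svd(E)
    # E is defined only up to sign, so flipping U or Vt keeps a valid
    # decomposition while making the recovered R a proper rotation.
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
    best, best_count = None, -1
    for R in (U @ W @ Vt, U @ W.T @ Vt):
        for t in (U[:, 2], -U[:, 2]):
            count = 0
            for a, b in zip(p1, p2):
                # Triangulate: solve z1 * a - z2 * (R @ b) = t for the depths z1, z2.
                A = np.column_stack([a, -(R @ b)])
                (z1, z2), *_ = np.linalg.lstsq(A, t, rcond=None)
                count += int(z1 > 0 and z2 > 0)
            if count > best_count:
                best, best_count = (R, t), count
    return best
```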

The initial values of R and t obtained from the decomposition of the essential matrix often have limited accuracy, because the feature points extracted from close-range images contain noise; even with a large number of points, this noise can significantly degrade the estimate. Nonlinear optimization is therefore commonly used to refine the initial values. It plays a central role in digital close-range photogrammetry, for example in camera calibration [48] and bundle adjustment [49]. In this study, we establish a nonlinear optimization objective function based on the coplanarity constraint equation, with the three rotation angles (φ, ω, κ) and two baseline components (BY, BZ) as the optimization variables. Only two baseline components are optimized because the third component, BX, can be fixed to 1 for computational convenience: changing the length of the baseline only scales the stereo model proportionally. For a pair of corresponding image points, the coplanarity constraint equation is:

$$F = \begin{vmatrix} B_X & B_Y & B_Z \\ X_1 & Y_1 & Z_1 \\ X_2 & Y_2 & Z_2 \end{vmatrix} = 0$$

where (X1, Y1, Z1) and (X2, Y2, Z2) are the image-space auxiliary coordinates of the left and right image points, the latter obtained by rotating the right image vector with R(φ, ω, κ).

By substituting the initial values of the five parameters obtained from the decomposition of the essential matrix and iterating with the Levenberg-Marquardt algorithm [50], the final, refined solution is obtained.
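The refinement can be sketched as a small Levenberg-Marquardt loop over the five parameters (φ, ω, κ, BY, BZ) with BX fixed to 1. This minimal NumPy sketch assumes the photogrammetric angle convention R = R_φ R_ω R_κ (rotations about the Y, X and Z axes), uses a forward-difference Jacobian instead of analytic derivatives, and uses hypothetical function names. The damping schedule (dividing or multiplying μ by 10) is one common choice, not necessarily the one in [50] or in the authors' code. Each residual is the coplanarity determinant for one pair of image vectors.

```python
import numpy as np

def rot_phi_omega_kappa(phi, omega, kappa):
    """R = R_phi (about Y) @ R_omega (about X) @ R_kappa (about Z); assumed convention."""
    cp, sp = np.cos(phi), np.sin(phi)
    co, so = np.cos(omega), np.sin(omega)
    ck, sk = np.cos(kappa), np.sin(kappa)
    r_phi = np.array([[cp, 0.0, -sp], [0.0, 1.0, 0.0], [sp, 0.0, cp]])
    r_omega = np.array([[1.0, 0.0, 0.0], [0.0, co, -so], [0.0, so, co]])
    r_kappa = np.array([[ck, -sk, 0.0], [sk, ck, 0.0], [0.0, 0.0, 1.0]])
    return r_phi @ r_omega @ r_kappa

def coplanarity_residuals(params, d1, d2):
    """One coplanarity determinant det[B; d1_i; R d2_i] per corresponding pair.

    params: (phi, omega, kappa, BY, BZ); BX is fixed to 1.
    d1, d2: (N, 3) image vectors of the left and right points; each row may
            carry any nonzero scale, which does not affect coplanarity.
    """
    phi, omega, kappa, by, bz = params
    B = np.array([1.0, by, bz])
    d2_rot = d2 @ rot_phi_omega_kappa(phi, omega, kappa).T  # right rays, left frame
    return np.cross(d1, d2_rot) @ B  # scalar triple product = determinant

def levenberg_marquardt(fun, p0, args=(), iters=100, mu=1e-3, h=1e-7):
    """Minimize sum(fun(p)**2) with a basic LM loop and a finite-difference Jacobian."""
    p = np.asarray(p0, dtype=float)
    r = fun(p, *args)
    cost = r @ r
    for _ in range(iters):
        J = np.column_stack([(fun(p + h * e, *args) - r) / h for e in np.eye(p.size)])
        A, g = J.T @ J, J.T @ r
        step = np.linalg.solve(A + mu * np.diag(np.diag(A)), -g)
        r_new = fun(p + step, *args)
        cost_new = r_new @ r_new
        if cost_new < cost:   # accept: behave more like Gauss-Newton
            p, r, cost, mu = p + step, r_new, cost_new, mu / 10.0
        else:                 # reject: damp more, closer to gradient descent
            mu *= 10.0
        if cost < 1e-24:
            break
    return p
```

With the essential-matrix initial values as `p0` and the top-ranked corresponding points as `d1`, `d2`, the call `levenberg_marquardt(coplanarity_residuals, p0, args=(d1, d2))` returns the refined parameters.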

3.3.3. Absolute orientation

A stereo model established through relative orientation of a stereo pair is defined in an image-space coordinate system with arbitrary scale. To place the stereo model correctly in the object-space coordinate system, the photogrammetric coordinates of the model points must be transformed into object-space coordinates, which requires ground control points to determine the transformation between the image-space and object-space coordinate systems. This is the concept of traditional absolute orientation. For point cloud coloring in this paper, the object-space coordinate system becomes the coordinate system of the point cloud data, and the ground control points are individual 3D points in the point cloud. Absolute orientation in this paper therefore determines the transformation between the image-space auxiliary coordinate system and the point cloud coordinate system.

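Let (X, Y, Z) denote the coordinates of an arbitrary model point in the image-space auxiliary coordinate system, and (XL, YL, ZL) the coordinates of the corresponding point in the point cloud coordinate system. The two sets of coordinates are related by a spatial similarity transformation:

$$\begin{bmatrix} X_L \\ Y_L \\ Z_L \end{bmatrix} = \lambda \begin{bmatrix} a_1 & a_2 & a_3 \\ b_1 & b_2 & b_3 \\ c_1 & c_2 & c_3 \end{bmatrix} \begin{bmatrix} X \\ Y \\ Z \end{bmatrix} + \begin{bmatrix} \Delta X \\ \Delta Y \\ \Delta Z \end{bmatrix}$$

where λ is the scale factor; ai, bi, ci (i = 1, 2, 3) are the nine direction cosines, which are functions of the three rotation angles Φ, Ω, K; and ΔX, ΔY, ΔZ are the components of the translation vector.

These seven parameters, λ, Φ, Ω, K, ΔX, ΔY, ΔZ, define the spatial similarity transformation, and absolute orientation is essentially the process of solving for them. Before solving, the coordinates are generally reduced to their centroids:

$$X_{Lg} = \frac{1}{n}\sum_{i=1}^{n} X_{L_i},\quad Y_{Lg} = \frac{1}{n}\sum_{i=1}^{n} Y_{L_i},\quad Z_{Lg} = \frac{1}{n}\sum_{i=1}^{n} Z_{L_i}$$

$$X_g = \frac{1}{n}\sum_{i=1}^{n} X_i,\quad Y_g = \frac{1}{n}\sum_{i=1}^{n} Y_i,\quad Z_g = \frac{1}{n}\sum_{i=1}^{n} Z_i$$

and each coordinate is replaced by its difference from the corresponding centroid (for example, XL - XLg and X - Xg). Here XLg, YLg, ZLg are the centroid coordinates of the point cloud control points; Xg, Yg, Zg are the centroid coordinates of the corresponding model points in the image-space auxiliary coordinate system; and n is the number of point cloud control points involved in the calculation.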

The purpose of reducing the coordinates to their centroids is twofold: first, it reduces the number of significant digits of the model point coordinates during the calculation, which preserves numerical accuracy; second, the centroid-reduced coordinates simplify the coefficients of the normal equations, which speeds up the calculation.

When Equation (28) is linearized by a Taylor series expansion, each full control point (with known planimetric and height coordinates) yields three error equations in the point cloud coordinates, whereas a height-only control point yields one. Therefore, with at least two full control points and one height control point (2 × 3 + 1 = 7 equations), the seven unknown parameters can be solved; when more control points are available, they are solved by the least-squares principle.
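The paper solves the seven parameters iteratively from the linearized error equations. To illustrate the same similarity model, including the centroid reduction, the sketch below instead uses a different, closed-form technique: Umeyama's SVD-based least-squares estimate of a similarity transformation, which could also supply initial values for the iterative adjustment. It requires at least three non-collinear full 3D control points, and it returns the rotation as a matrix rather than as the angles Φ, Ω, K. The function names are hypothetical, and the per-axis root-mean-square residual is only our assumption of how coordinate accuracy might be summarized (compare Table 6).

```python
import numpy as np

def similarity_transform(model_pts, cloud_pts):
    """Closed-form (lam, R, T) with cloud ~= lam * R @ model + T (Umeyama-style).

    model_pts, cloud_pts: (n, 3) corresponding points, n >= 3, not collinear.
    """
    X = np.asarray(model_pts, dtype=float)
    Y = np.asarray(cloud_pts, dtype=float)
    # Centroid reduction of both point sets.
    Xg, Yg = X.mean(axis=0), Y.mean(axis=0)
    Xc, Yc = X - Xg, Y - Yg
    # Cross-covariance between the centered point sets.
    C = Yc.T @ Xc / len(X)
    U, S, Vt = np.linalg.svd(C)
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[2, 2] = -1.0  # guard against a reflection
    R = U @ D @ Vt
    var_x = (Xc ** 2).sum() / len(X)
    lam = np.trace(np.diag(S) @ D) / var_x
    T = Yg - lam * R @ Xg
    return lam, R, T

def axis_rmse(model_pts, cloud_pts, lam, R, T):
    """Per-axis RMS of the residuals (transformed model minus point cloud)."""
    res = lam * np.asarray(model_pts, dtype=float) @ R.T + T - np.asarray(cloud_pts, dtype=float)
    return np.sqrt((res ** 2).mean(axis=0))
```

Unlike the linearized adjustment, this solution needs no initial values and no iteration, but it cannot use height-only control points.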

3.4. Point cloud coloring method

In this paper, we start from the point cloud to find the corresponding pixels and then assign their colors to the points. We start from the point cloud because the end of the point cloud traversal marks the end of coloring. Starting from the image pixels might speed up coloring, but it could leave some points uncolored, causing gaps and missing data in the colored point cloud. A 3D point in a LiDAR point cloud usually corresponds to pixels in multiple images, so coloring it correctly when some of these pixels are of poor quality is a critical issue. We therefore propose a Gaussian distribution-based point cloud coloring method with a central region restriction. The specific steps are as follows:

1) Finding the pixel set corresponding to each 3D point. Starting from the point cloud, we traverse every 3D point, each of which is visible in multiple images. Using the orientation results from the previous steps, the pixel coordinates of each 3D point are computed in each image; the nearest pixel is taken as the corresponding pixel, and its color and position are recorded.

2) Applying the central region restriction. Based on the positions recorded in step 1, only pixels within the central region of the image are considered valid for coloring the point cloud.

3) Coloring. We assume that, for the set of valid pixel colors corresponding to a 3D point, each RGB channel follows a Gaussian distribution. We estimate the mean of each channel's Gaussian distribution, use it as the color value of that channel, and assign the resulting RGB color to the 3D point.

4) Repeat steps 1 to 3 until all 3D points have been processed.
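The three steps can be sketched for a set of already oriented images. This minimal NumPy sketch assumes a pinhole projection (u, v, 1) ~ K(R·X + t) for each image with distortion already removed, defines the central region as a centered rectangle covering a hypothetical fraction `central_fraction` of the image width and height, and uses the per-channel sample mean, which is the maximum-likelihood estimate of a Gaussian mean. The function name is hypothetical, and the authors' estimator may include further steps such as outlier rejection.

```python
import numpy as np

def color_points(points, views, central_fraction=0.6):
    """Color 3D points from several oriented, undistorted images.

    points: (N, 3) LiDAR points in the point cloud frame.
    views: list of (image, K, R, t); image is (H, W, 3), and a point X projects
           to pixel (u, v) via (u, v, 1) ~ K @ (R @ X + t)  (assumed model).
    central_fraction: hypothetical fraction of width/height kept as the center.
    Returns (N, 3) float colors, NaN where no valid pixel was found.
    """
    points = np.asarray(points, dtype=float)
    samples = [[] for _ in range(len(points))]
    for image, K, R, t in views:
        H, W = image.shape[:2]
        # Step 1: project every point into this image.
        cam = points @ R.T + t
        uvw = cam @ K.T
        with np.errstate(divide="ignore", invalid="ignore"):
            u = uvw[:, 0] / uvw[:, 2]
            v = uvw[:, 1] / uvw[:, 2]
            # Step 2: keep points in front of the camera whose projection
            # falls inside the central rectangle of the image.
            ok = ((cam[:, 2] > 0)
                  & (np.abs(u - (W - 1) / 2) <= central_fraction * W / 2)
                  & (np.abs(v - (H - 1) / 2) <= central_fraction * H / 2))
        # Step 1 (cont.): nearest pixel to each remaining projection.
        cols = np.clip(np.rint(u[ok]).astype(int), 0, W - 1)
        rows = np.clip(np.rint(v[ok]).astype(int), 0, H - 1)
        for i, r, c in zip(np.flatnonzero(ok), rows, cols):
            samples[i].append(image[r, c])
    # Step 3: per-channel Gaussian mean (maximum-likelihood estimate = sample mean).
    colors = np.full((len(points), 3), np.nan)
    for i, s in enumerate(samples):
        if s:
            colors[i] = np.mean(np.asarray(s, dtype=float), axis=0)
    return colors
```

Iterating over points rather than pixels mirrors the design choice above: every point is visited exactly once, and points with no valid pixel are explicitly marked rather than silently skipped.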

4. Results

4.1. Registration based on normalized Zernike moments

Registration results based on the collinearity equation and on normalized Zernike moments are shown in Table 2, Table 3, and Figure 9. Table 2 lists the 3D coordinates of the point cloud control points and the registration accuracy of the collinearity equation. Table 3 lists the 3D coordinates of several Harris feature points in the point cloud and the registration accuracy of the normalized Zernike moments. Figure 9 shows screenshots of the colored point clouds obtained from the registration results, which also serve as a visual reference for registration accuracy.

As shown in Figure 9, the registration based on the collinearity equation is generally acceptable, but some areas near the edges are not accurately registered. This is caused by the system's speed of 20-30 km/h during mobile measurement, which produces blurred edges and distortion in the video images. In contrast, normalized Zernike moments use information from neighboring pixels when extracting features, so they describe features in more dimensions and capture the texture details of the image; the registration based on normalized Zernike moments is therefore better. According to Table 2 and Table 3, the registration accuracy based on normalized Zernike moments is around 0.5 pixels, 1-2 pixels better than that of the collinearity equation.

4.2. Corresponding point matching

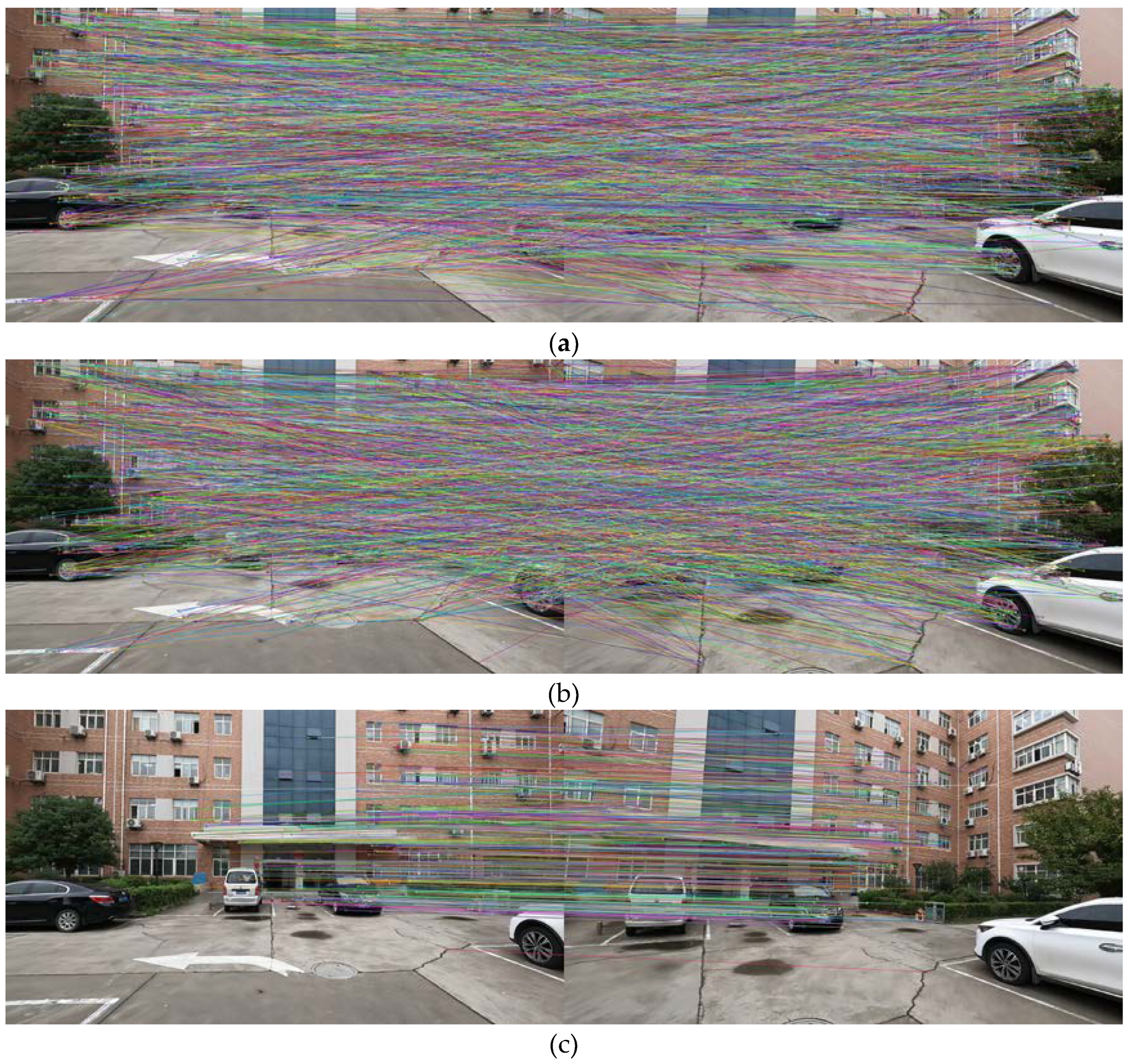

In this article, we uniformly adopt the SURF algorithm with distance restriction and RANSAC to select corresponding points. Taking a randomly selected stereo image pair (28-29) as an example, the results are shown in Figure 10.

As shown in

Figure 10a, there are 3867 corresponding points selected by the SURF coarse matching, among which there are many mismatched points and corresponding points located at the edges of the image. After applying distance restriction, the number of corresponding points decreased from 3867 to 2904, as shown in

Figure 10b. The corresponding points located at the edges of the image were reduced, but there were still many mismatches. After applying RANSAC, as shown in

Figure 10c, the number of corresponding points dropped sharply from 2904 to 582. The mismatches were mostly eliminated after the RANSAC process. It can be seen that after these three steps, the accuracy of the corresponding points is relatively high, and they are mostly located in the central area of the image, which meets the requirements well. However, after obtaining a series of corresponding points, selecting how many pairs for relative orientation becomes a problem. The number and accuracy of the selected corresponding points will definitely affect the accuracy of relative orientation. Therefore, we statistically analyze the data in

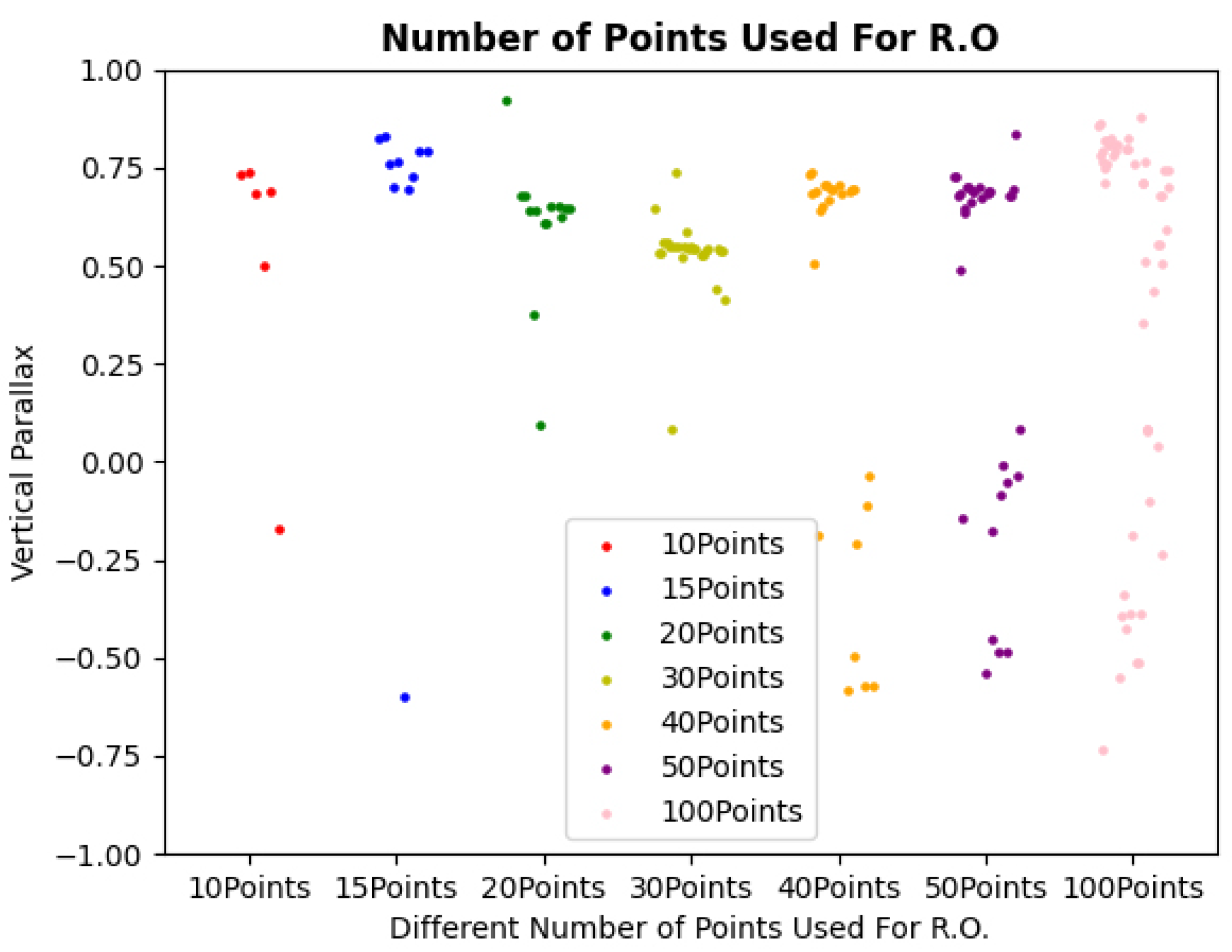

Figure 11 and

Table 4.

Figure 11 shows how the number and accuracy of the corresponding points affect the accuracy of the vertical parallax of relative orientation by selecting the top 10, 15, 20, 40, 60, 80 and 100 corresponding points.

Table 4 summarizes the situations of eight stereo image pairs.

In general, the more corresponding points are selected (from the top 10 up to the top 100), the lower the average matching accuracy of the selected set, and points with lower accuracy inevitably degrade the vertical parallax of relative orientation. However, selecting only the points with the highest matching accuracy does not necessarily yield the best relative orientation. For example, the results obtained with the top 10 corresponding points were worse than those obtained with the top 15, and the results with the top 15 were worse than those with the top 20. We speculate that nonlinear distortion, trailing, and blurred pixels in the video images cause significant errors when only a few corresponding points are used, and that using more points distributes these errors more evenly. Because more points also means lower average matching accuracy, we ultimately selected the top 20 corresponding points by matching accuracy for relative orientation.
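The text does not spell out the exact form of the distance restriction. One plausible reading, sketched below under that assumption, discards matches whose keypoints lie farther than a given radius from the image center in either image, then ranks the remaining matches by descriptor distance (taken here as the measure of matching accuracy) and keeps the top 20. The radius fraction, the ranking criterion, and the function name are hypothetical, and the RANSAC step that the paper applies between these two stages is omitted for brevity.

```python
import numpy as np

def restrict_and_rank(pts1, pts2, desc_dist, image_size, max_radius_frac=0.4, top_k=20):
    """Keep matches near the image center, then return the top_k best-ranked ones.

    pts1, pts2: (N, 2) pixel coordinates of matched keypoints (left, right).
    desc_dist: (N,) descriptor distances; smaller means a better match.
    image_size: (width, height) in pixels.
    max_radius_frac: hypothetical radius limit as a fraction of the image diagonal.
    Returns indices of the selected matches, best first.
    """
    w, h = image_size
    center = np.array([(w - 1) / 2.0, (h - 1) / 2.0])
    limit = max_radius_frac * np.hypot(w, h)
    r1 = np.linalg.norm(np.asarray(pts1, dtype=float) - center, axis=1)
    r2 = np.linalg.norm(np.asarray(pts2, dtype=float) - center, axis=1)
    # Distance restriction: both keypoints must lie inside the radius.
    keep = np.flatnonzero((r1 <= limit) & (r2 <= limit))
    # Rank the survivors by descriptor distance (stable for ties).
    order = np.argsort(np.asarray(desc_dist)[keep], kind="stable")
    return keep[order][:top_k]
```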

4.3. Relative orientation

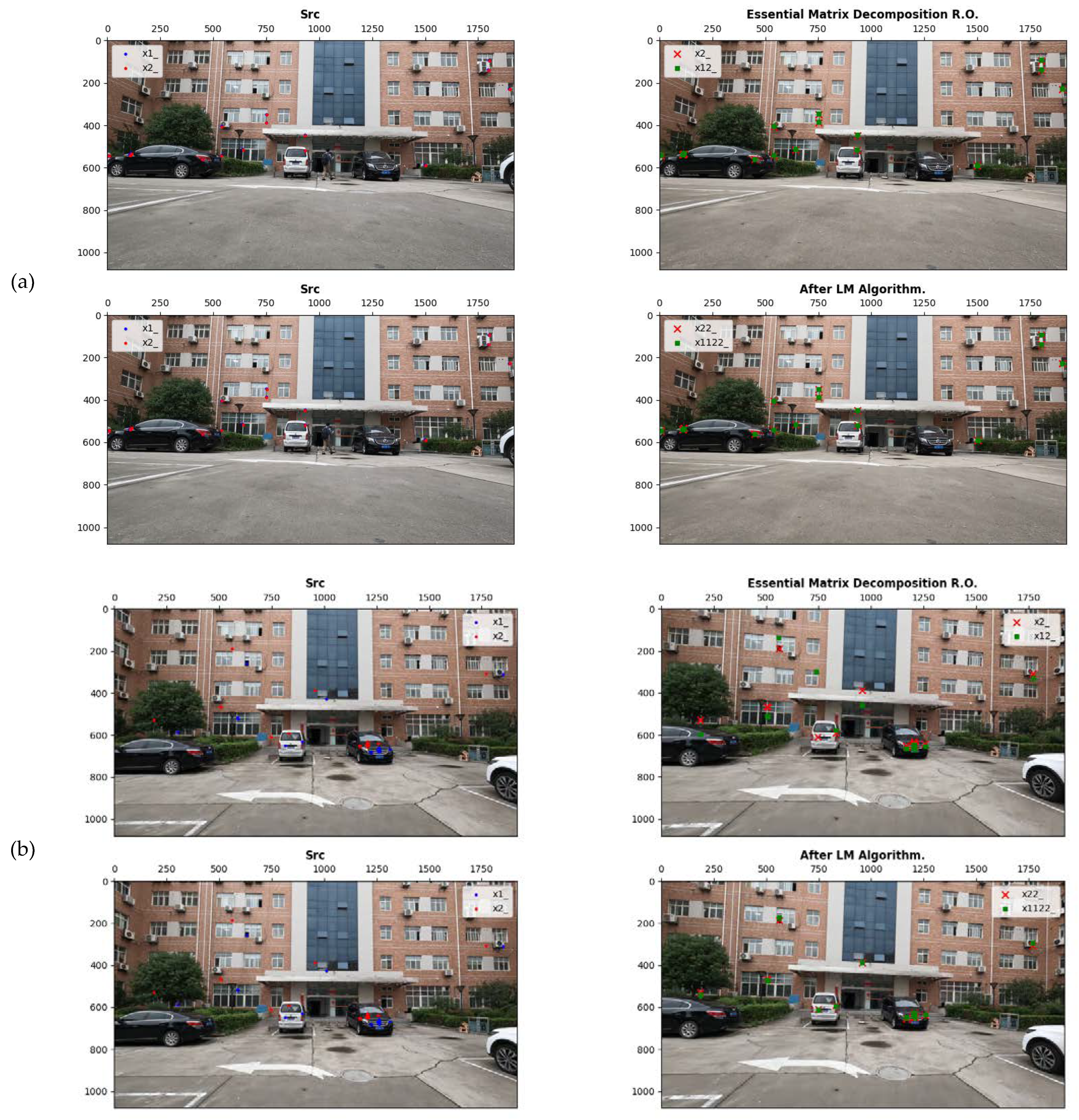

The results of relative orientation based on essential matrix decomposition and nonlinear optimization are shown in Figure 12.

Figure 12a–c show the results for three stereo image pairs; in each panel, the left image of the stereo pair is shown on the left and the right image on the right. The method yields good results in different situations: small rotation angles (Figure 12a), large rotation angles (Figure 12b), and both large rotation angles and large displacements (Figure 12c).

4.4. Absolute orientation

Absolute orientation is a 3D spatial similarity transformation between the coordinate system of the relatively oriented stereo model and the point cloud coordinate system. Its accuracy therefore depends mainly on the accuracy of the relatively oriented stereo model and on the accuracy of the corresponding points between the two coordinate systems. Moreover, because the sequential images are frames captured from a video stream while the system is moving, parts of the images are blurred, which introduces further measurement errors. The main error sources of absolute orientation are thus the error of the relatively oriented stereo model and the measurement error of the point cloud control points. To evaluate the accuracy of absolute orientation, we use the differences between the coordinates obtained by absolute orientation and the coordinates of the corresponding control points in the point cloud, together with the mean square error (MSE) of each relative orientation element. The results are shown in Table 6.

4.5. Point cloud colorization

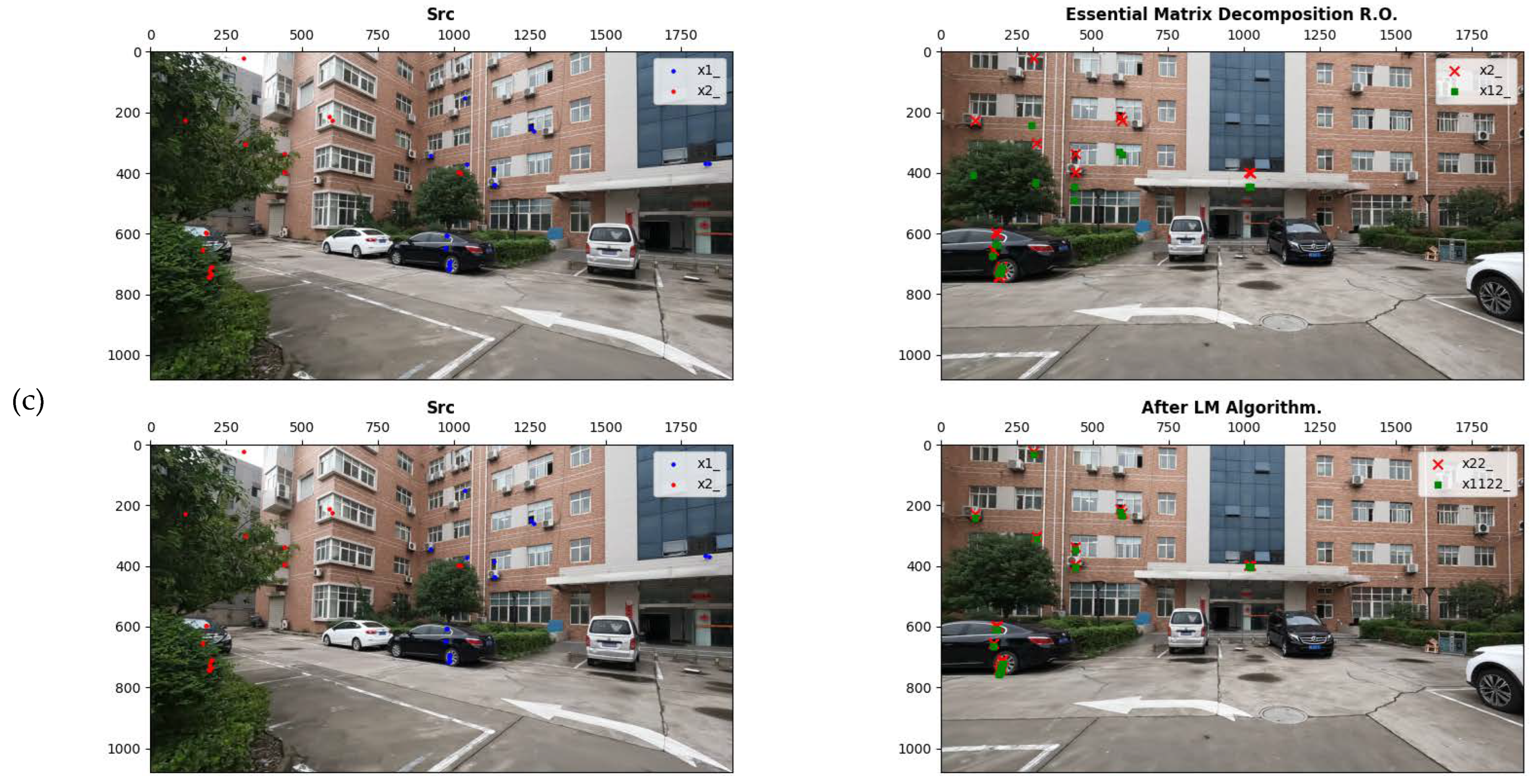

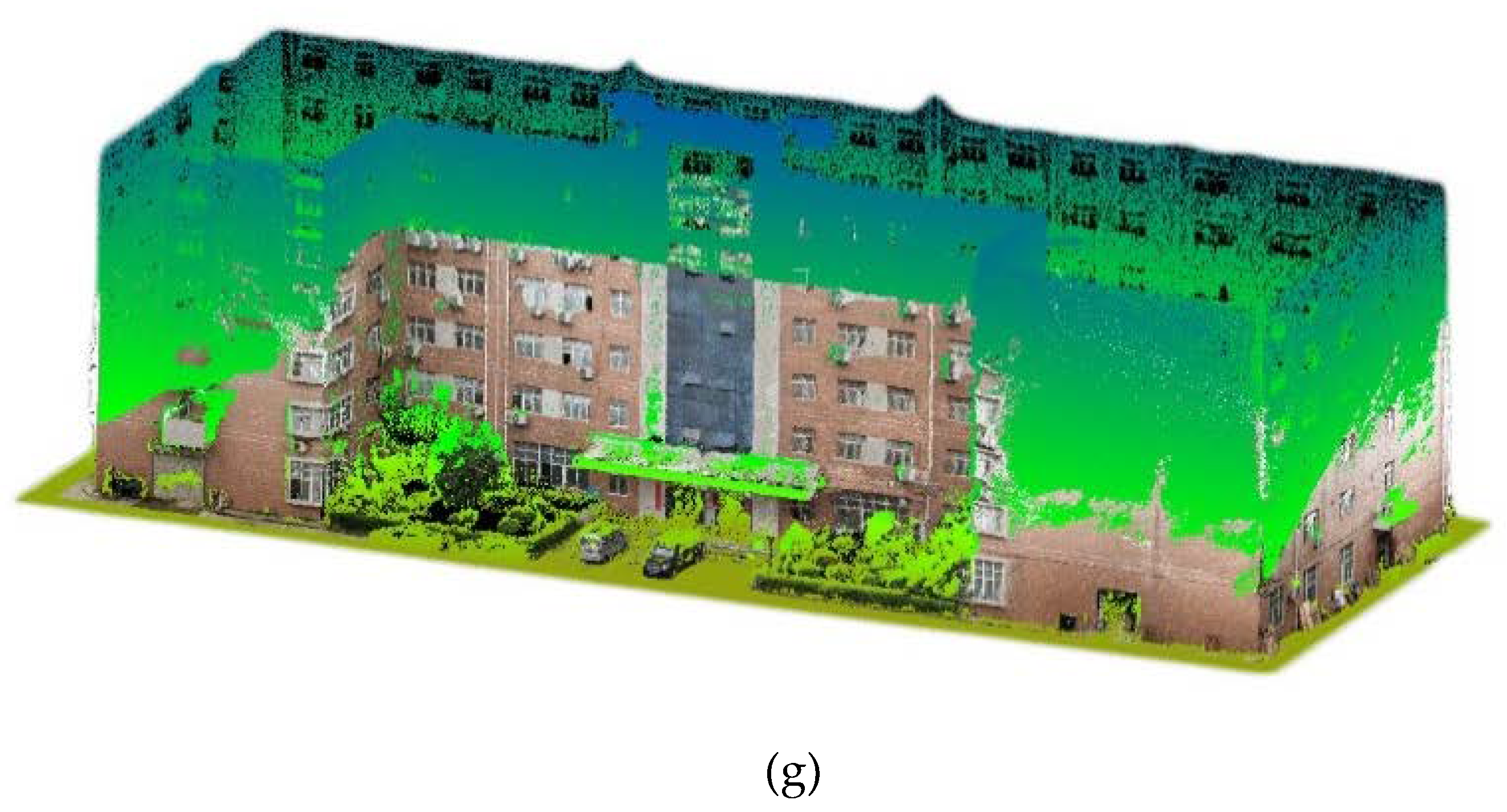

The point cloud coloring results are shown in Figure 13.

Figure 13a,b demonstrate the importance of the central region restriction in the Gaussian distribution coloring method. Even after calibration, the edge regions of the video images still exhibit pixel blurring and residual, uncorrected distortion. The central region restriction is therefore critical: only the pixels within the red box are considered valid for coloring the point cloud.

Figure 13c–g show the point cloud coloring results, including the top view, side view, front view, back view and total view.

5. Discussion

To achieve the automatic coloring of point cloud in our system, we went through several steps: 1) Camera calibration. 2) Registration based on normalized Zernike moments. 3) Corresponding point matching with distance restriction. 4) Relative and absolute orientation. 5) Gaussian distribution point cloud coloring with center region restriction. In the experimental process, each step is crucial and the accuracy of each intermediate result will directly or indirectly affect the subsequent results.

Because the video images used in this paper were captured with a GoPro camera during mobile measurement, they suffer from significant distortion. Zhang's camera calibration method was therefore used to correct the nonlinear distortion. As shown in Figure 5a,b and Figure 5c,d, the edges of the chessboard and the edges of the house are well corrected. However, this method considers only the radial and tangential distortion models, which have the greatest impact, and does not consider other types of distortion [5, 6, 7, 8].
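The radial and tangential model can be written out as a short NumPy sketch. It assumes the widely used Brown-Conrady form (the form adopted by OpenCV), with radial coefficients k1, k2, k3 and tangential coefficients p1, p2 named as in Table 1, applied to normalized image coordinates; how each coefficient in Table 1 maps onto these terms is our assumption. The function names are hypothetical, and the fixed-point inversion is adequate only for moderate distortion away from the extreme image corners.

```python
import numpy as np

def distort(xy, k1, k2, k3, p1, p2):
    """Apply radial (k1..k3) and tangential (p1, p2) distortion to normalized coords."""
    x, y = np.asarray(xy, dtype=float).T
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return np.column_stack([xd, yd])

def undistort(xy_d, k1, k2, k3, p1, p2, iters=50):
    """Invert distort() by fixed-point iteration (moderate distortion only)."""
    xd, yd = np.asarray(xy_d, dtype=float).T
    x, y = xd.copy(), yd.copy()
    for _ in range(iters):
        r2 = x * x + y * y
        radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
        dx = 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
        dy = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
        x = (xd - dx) / radial
        y = (yd - dy) / radial
    return np.column_stack([x, y])

def to_pixels(xy, fx, fy, x0, y0):
    """Map normalized coordinates to pixels with focal lengths and principal point."""
    xy = np.asarray(xy, dtype=float)
    return np.column_stack([fx * xy[:, 0] + x0, fy * xy[:, 1] + y0])
```

Any distortion term outside this form, such as the other types mentioned above, is not modeled and remains as residual error.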

Registration based on normalized Zernike moments is essentially registration based on point features [27, 28, 29, 30, 31, 32], and its accuracy depends mainly on the selection of feature points and on the descriptor of the feature region. Because the registration area is the front of a house with many windows and glass panes, this paper uses the Harris operator to extract corner points as feature points. Because of the blurred edges in video images, normalized Zernike moments are used to describe the feature region. Low-order Zernike moments describe the overall shape of the image, while high-order Zernike moments reflect its texture details [33, 34, 35]. Normalized Zernike moments are invariant to rotation, translation, and scaling, which makes them suitable as the basis for registration. According to Table 2 and Table 3, the registration in this paper is highly accurate, with errors of 0.5-1 pixels, far better than registration based on the collinearity equation. Unfortunately, because of the low accuracy of the video images, direct registration between the image-based point cloud and the laser point cloud cannot be achieved [25, 26]. In addition, to avoid complex computation and long running times, this paper uses only normalized Zernike moments of orders 2-4 as the region descriptor; higher orders could be explored to improve the results further.
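A descriptor of this kind can be sketched as follows. The sketch maps a square patch centered on a Harris corner onto the unit disk, computes the Zernike moments Z_nm of orders n = 2, 3, 4 (with m ≥ 0 and n - m even, giving seven values), and keeps their magnitudes, which are invariant to rotation of the patch. Normalizing the magnitude vector to unit length (which removes a global intensity scale) is our assumption, since the exact normalization is not given here, and the function names are hypothetical.

```python
import numpy as np
from math import factorial

def radial_poly(n, m, rho):
    """Zernike radial polynomial R_nm(rho), for n - |m| even."""
    m = abs(m)
    out = np.zeros_like(rho)
    for s in range((n - m) // 2 + 1):
        c = (-1) ** s * factorial(n - s) / (
            factorial(s) * factorial((n + m) // 2 - s) * factorial((n - m) // 2 - s))
        out += c * rho ** (n - 2 * s)
    return out

def zernike_descriptor(patch, orders=(2, 3, 4)):
    """Unit-length vector of |Z_nm| (m >= 0) for a square grey-level patch.

    The patch is mapped onto the unit disk; pixels outside the disk are ignored.
    """
    patch = np.asarray(patch, dtype=float)
    N = patch.shape[0]
    coords = (2 * np.arange(N) - (N - 1)) / N   # pixel centers, symmetric about 0
    x, y = np.meshgrid(coords, coords)          # x along columns, y along rows
    rho = np.hypot(x, y)
    theta = np.arctan2(y, x)
    inside = rho <= 1.0
    feats = []
    for n in orders:
        for m in range(n % 2, n + 1, 2):        # m >= 0 with n - m even
            V_conj = radial_poly(n, m, rho) * np.exp(-1j * m * theta)
            Z = (n + 1) / np.pi * np.sum(patch[inside] * V_conj[inside]) * (2.0 / N) ** 2
            feats.append(abs(Z))
    v = np.array(feats)
    norm = np.linalg.norm(v)
    return v / norm if norm > 0 else v
```

Two candidate feature points can then be compared by the Euclidean distance between their descriptors; adding higher orders only requires extending `orders`, at the cost of more computation.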

Because our system has no POS or IMU, the exterior orientation elements of the images cannot be obtained directly. We therefore adopt a 1-to-N registration strategy, in which the exterior orientation elements are propagated through relative and absolute orientation [36, 37]. Relative orientation requires matched corresponding points. As shown in Figure 7, we chose the SURF algorithm as the best balance between accuracy and the number of matches. Because of edge blurring and similar phenomena in video images, corresponding points in the edge regions should be avoided, so we propose a SURF matching method with a distance restriction. As shown in Figure 10a,b, the distance restriction significantly reduces the number of corresponding points near the image edges, although many mismatched points remain in the edge region. After RANSAC (Figure 10c), high-precision corresponding points located in the central region of the image are obtained. We also analyzed how different numbers of top-ranked corresponding point pairs (from the top 10 to the top 100) influence relative orientation. As shown in Figure 11 and Table 4, selecting the top 20 points yields the smallest vertical parallax and the highest accuracy. One area for improvement is that, for convenience, we uniformly selected the top 20 points for every stereo image pair. In principle, each stereo image pair has its own optimal number of corresponding points, but determining it for each pair would increase complexity and computation time.

The essence of relative orientation is solving for the essential matrix [39, 40, 41]. On this basis, this paper performs relative orientation using essential matrix decomposition and nonlinear optimization [42], as shown in Figure 12. This method is more widely applicable than traditional relative orientation, which assumes approximately vertical photography and converges to the correct result only when the image rotation angles are small. As shown in Figure 12a–c, good results are obtained for the general case, for large rotation angles, and for large displacements. The figure also shows the importance of nonlinear optimization: after optimization, the corresponding points of the left and right images almost coincide. Relative orientation is followed by absolute orientation, whose accuracy depends on the accuracy of the stereo model and the precision of control point selection. Provided the previous steps are accurate, absolute orientation also gives good results: as shown in Table 6, using 15 image points from a stereo image pair, the MSEs of X, Y, and Z are all at the centimeter level.

Edge blurring in the video images affects the entire experimental process and cannot be ignored even in the final step of point cloud coloring. This paper therefore adopts a Gaussian distribution point cloud coloring method with a central region restriction. As shown in Figure 13g, the coloring results have high overall accuracy: building textures are clear, house corners are distinct, and targets such as windows, grass, trees, and vehicles can be clearly distinguished. The main drawback is that, because of the limited field of view of the video images, the upper parts of buildings, the crowns of trees, and the roofs of cars are not colored. Future research will therefore address coloring such elevated points to obtain a complete colored point cloud.

6. Conclusions

In this article, we propose an automatic point cloud colorization method for a ground-based mobile LiDAR measurement system without POS. The system integrates a LiDAR and a GoPro camera and is simple, low-cost, lightweight, and portable. As a loosely coupled integrated system, it is suitable for industrial mass production and can complete automatic point cloud registration and colorization without POS. The method consists of five main steps: calibration, registration, relative orientation, absolute orientation, and colorization. To address video motion blur, pixel blur, and the nonlinear distortion of the GoPro, we correct the camera in preprocessing using radial and tangential distortion models estimated from 42 chessboard images extracted from the video streams. To register the image sequence without POS, we propose a 1-to-N registration strategy: only the first video image is registered to the point cloud, and relative and absolute orientation are used to transfer the exterior orientation elements to all subsequent images. For registration, we propose a method based on normalized Zernike moments that achieves high registration accuracy even for blurry video images. For corresponding point matching, we propose a SURF matching method with a distance restriction and RANSAC to eliminate corresponding points with blurred edges and mismatches. Finally, for point cloud colorization, we propose a Gaussian distribution coloring method with a central region restriction, which colors the point cloud realistically and evenly. The final colorization results demonstrate the feasibility of the proposed method, which provides a reference for future point cloud colorization with simple mobile LiDAR measurement systems.

Because the reliability of corresponding points is questionable for blurry video images, future research will: (1) use features such as tie lines or parallel lines to achieve registration between point clouds; (2) establish a joint calibration [51] field for the action camera and the LiDAR to solve for the rigid transformation between them and achieve automatic matching of the two data sets; and (3) adopt more accurate and faster methods for relative and absolute orientation. For example, Li et al. [52] proposed a hybrid conjugate gradient algorithm for the relative orientation of stereo pairs with large rotation angles, which is independent of initial values and achieves high accuracy with fewer iterations. Deng et al. [53] proposed an absolute orientation algorithm based on line features, which reduces the need for control points and tie points and improves accuracy and stability through joint adjustment.

Author Contributions

All authors contributed in a substantial way to the manuscript. Software, Chen Qian; Writing – original draft, Junhao Xu and Jie Wang; Writing – review & editing, Chunjing Yao and Hongchao Ma.

Funding

This research is funded and supported by National Key R&D Program of China (2018YFB0504500), National Natural Science Foundation of China (No. 41101417) and National High Resolution Earth Observations Foundation (11-H37B02-9001-19/22).

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, J. Deformation Monitoring of Wooden Frame Relics Based on 3D Laser Scanning Technology. Beijing Surveying and Mapping.

- Zhang, J.; Jiang, W.S. Registration between Laser Scanning Point Cloud and Optical Images: Status and Trends. Journal of Geo-information Science.

- Yang, B.W.; Guo, X.S. Overview of Nonlinear Distortion Correction of Camera Lens. Journal of Image and Graphics.

- Brown, D.C. Close-range camera calibration. Photogrammetric Engineering.

- Melen, T.; Balchen, J.G. Modeling and calibration of video cameras. Other Conferences, 2357.

- Heikkilä, J.; Silvén, O. A four-step camera calibration procedure with implicit image correction. Proceedings of IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 1106.

- Fraser, C.S. Digital camera self-calibration. ISPRS Journal of Photogrammetry and Remote Sensing.

- Hu, H.; Liang, J.; Tang, Z.Z.; Guo, X. Global calibration for multi-camera videogrammetric system with large-scale field-of-view. Optics and Precision Engineering.

- Gao, Z.Y.; Gu, Y.Y.; Liu, Y.H.; Xu, Z.B.; Wu, Q.W. Self-calibration based on simplified Brown non-linear camera model and modified BFGS algorithm. Optics and Precision Engineering, 2532.

- Zou, P.P.; Zhang, Z.L.; Wang, P.; Wang, Q.Y.; Zhou, W.H. Binocular Camera Calibration Based on Collinear Vector and Plane Homography. Acta Optica Sinica.

- Xie, Z.X.; Chi, S.K.; Wang, X.M.; Pan, C.C.; Wei, Z. Calibration Method for Structure-Light Auto-Scanning Measurement System Based on Coplanarity. Chinese Journal of Lasers.

- Yu, J.P.; Lin, J.H.; Zhan, S.H.; Yao, N.W. A Comparative Study of Close-Range Image Feature Points Matching Method. Journal of Guangdong University of Technology.

- Harris, C.G.; Stephens, M.J. A Combined Corner and Edge Detector. Alvey Vision Conference, 5244.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. International Journal of Computer Vision, 60.

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. European Conference on Computer Vision.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In 2011 International Conference on Computer Vision. IEEE, 2011, 2564-2571.

- Zeng, F.Y.; Gu, A.H.; Ma, Y.J.; Xiang, H.D. Analysis and Comparison of several kinds of Feature Points Extraction Operator. Modern Surveying and Mapping.

- Liu, D.; Wang, Y.M.; Wang, G.L. Feature Point Extraction of Color Digital Image Based on Harris Algorithm. Journal of Beijing University of Civil Engineering and Architecture.

- Cui, L.; Li, C.; Li, Y. Implementation and Analysis of Harris Algorithm and Susan Algorithm. Computer & Digital Engineering, 2396.

- Jia, F.M.; Kang, Z.Z.; Yu, P. A SIFT and Bayes Sampling method for Image Matching. Acta Geodaetica et Cartographica Sinica.

- Cao, N.; Cai, Y.Y.; Li, X.Y.; Li, L. Research on Feature Point Automatic Matching Method for High-resolution Remote Sensing Images. Geospatial Information.

- Zhang, Y. Research on Point Feature Matching Algorithms of UAV Remote Sensing Images. PLA Information Engineering University.

- Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, May 7-13, 2006, Proceedings, Part I (pp. 430-443). Springer Berlin Heidelberg.

- Yao, S.K.; Liu, M. ORB feature point extraction and matching study. Electronic Design Engineering.

- Liu, S.Y. Research on Laser Scanning Point Cloud Data and Digital Image Sequence Registration. Urban Geotechnical Investigation & Surveying.

- Liu, X.W.; Fu, L.N.; Xu, G. Laser point cloud and image registration method based on 3D-SIFT and SICP. Beijing Surveying and Mapping.

- Ruan, Q.; Peng, G.; Li, R. Study on image registration and mosaic technology based on SURF feature. Computer & Digital Engineering.

- Fang, W. Research on automatic texture mapping of terrestrial laser scanning data combining photogrammetry techniques. Wuhan: Wuhan University, 2014.

- Safdarinezhad, A.; Mokhtarzade, M.; Valadan Zoej, M.J. An automatic method for precise 3D registration of high resolution satellite images and Airborne LiDAR Data. International Journal of Remote Sensing, 9460.

- Eo, Y.D.; Pueon, M.W.; Kim, S.W.; Kim, J.R.; Han, D.Y. Coregistration of terrestrial lidar points by adaptive scale-invariant feature transformation with constrained geometry. Automation in Construction.

- Ding, Y.Z.; Feng, F.J.; Li, J.P.; Wang, G.S.; Liu, X.Y. Automatic registration of airborne LiDAR data and aerial images constrained by point and line features. Journal of China University of Mining & Technology, 1207.

- Fan, S.H.; Wang, Q.; Gou, Z.Y.; Feng, C.Y.; Xia, C.Q.; Jin, H. A simple automatic registration method for Lidar point cloud and optical image. Laser Journal.

- Zhang, P.Y. Research on Zernike moment in edge detection of high-resolution remote sensing images. Kunming: Kunming University of Science and Technology.

- Toharia, P.; Robles, O.D.; Suárez, R.; Bosque, J.L.; Pastor, L. Shot boundary detection using Zernike moments in multi-GPU multi-CPU architectures. Journal of Parallel and Distributed Computing, 1127.

- Jin, L.Q.; Huang, H.; Liu, W.W. Registration of LiDAR point cloud with optical images using Zernike polynomial. Science of Surveying and Mapping.

- Horn, B.K.P. Relative orientation. International Journal of Computer Vision, 4(1), 59-78.

- Wang, J.; Dong, M.L.; Li, W.; Sun, P. Camera relative orientation in Large-scale photogrammetry. Optical Technique.

- Luhmann, T.; Robson, S.; Kyle, S.; Harley, I. Close range photogrammetry: principles, techniques and applications (Vol. 3). Dunbeath: Whittles Publishing, 2006.

- Lu, J.; Chen, Y.; Zheng, B. Research on Dependent Relative Orientation in Multi-baseline Close range Photogrammetry. Journal of Tongji University (Natural Science).

- Zhou, Y.J.; Deng, C.H. A New Method for Relative Orientation with Hybrid Genetic Algorithm and Unit Quaternion. Geomatics and Information Science of Wuhan University.

- Li, W.; Dong, M.L.; Sun, P.; Wang, J.; Yan, B.X. Relative orientation method for large-scale photogrammetry with local parameter optimization. Chinese Journal of Scientific Instrument, 2053.

- Li, Y.L.; Jiang, L.B. Relative orientation algorithm based on essential matrix decomposition in close-range photogrammetry. Journal of Shandong University of Technology (Natural Science Edition).

- Vechersky, P.; Cox, M.; Borges, P.; Lowe, T. Colourising Point Clouds Using Independent Cameras. IEEE Robotics and Automation Letters, 3575.

- Zernike, F. Beugungstheorie des Schneidenverfahrens und seiner verbesserten Form, der Phasenkontrastmethode. Physica.

- Matas, J.; Chum, O. Randomized RANSAC with sequential probability ratio test. Tenth IEEE International Conference on Computer Vision (ICCV'05), Beijing, China, 2005, Vol. 2, pp. 1727-1732.

- Yu, Y.; Huang, G.P.; Xing, K. Direct closed-form solution to general relative orientation. Proceedings of the 2009 Symposium on Advanced Optical Technologies and Their Applications, 2009.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision. Cambridge University Press, 2003.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1330.

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment: a modern synthesis. In Vision Algorithms: Theory and Practice: International Workshop on Vision Algorithms, Corfu, Greece, September 21-22, 1999, Proceedings (pp. 298-372). Springer Berlin Heidelberg, 2000.

- Madsen, K.; Nielsen, H.B.; Tingleff, O. Methods for non-linear least squares problems (2nd ed.), 2004.

- Li, H.S.; Luo, X.N.; Deng, C.G.; Zhong, Y.R. Joint calibration of sports camera and lidar based on LM algorithm. Journal of Guilin University of Electronic Technology.

- Li, J.T.; Wang, C.C.; Jia, C.L.; Niu, Y.R.; Wang, Y.; Zhang, W.J.; Wu, H.J.; Li, J. A hybrid conjugate gradient algorithm for solving relative orientation of big rotation angle stereo pair. Acta Geodaetica et Cartographica Sinica.

- Deng, F.; Yang, L.H.; Yan, Q.S. An absolute orientation method for close-range images with line feature. Science of Surveying and Mapping.

Figure 1.

Image-based 3D point cloud.


Figure 2.

Graphical abstract.


Figure 3.

System schematic diagram. (a): Digital model; (b): Physical model.


Figure 4.

The data samples used in this paper. (a), (b): LiDAR images; (c), (d): Video images.


Figure 5.

Sample images before and after calibration. (a), (b): Chessboard images before and after calibration; (c), (d): Video images before and after calibration.


Figure 6.

Point cloud intensity image.


Figure 7.

Result of corresponding point matching. (a): SIFT; (b): ORB; (c): SURF.


Figure 8.

Relative orientation model.


Figure 9.

Registration result. (a): Colored point cloud image registered with the collinearity equation; (b): Colored point cloud image registered with normalized Zernike moments.

Figure 10.

Result of corresponding point matching. (a): SURF coarse matching; (b): Distance restriction; (c): RANSAC.
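The filtering chain in Figure 10 can be illustrated with a short sketch. This section does not define the distance restriction, so the code below assumes the widely used heuristic of keeping a tentative match only if its descriptor distance is below a multiple of the smallest distance in the set, with a small absolute floor. The function name `distance_restriction` and the defaults `factor=2.0` and `floor=0.02` are hypothetical, not values from the paper.

```python
import numpy as np


def distance_restriction(distances, factor=2.0, floor=0.02):
    """Return indices of tentative matches that pass a min-distance filter.

    distances: one descriptor distance per tentative match (smaller is better).
    A match is kept when its distance is below max(factor * d_min, floor).
    factor and floor are illustrative defaults, not values from the paper.
    """
    d = np.asarray(distances, dtype=float)
    threshold = max(factor * d.min(), floor)  # floor guards against d_min ~ 0
    return np.flatnonzero(d < threshold)


# Example: d_min = 0.05, so the threshold is max(0.10, 0.02) = 0.10.
kept = distance_restriction([0.05, 0.08, 0.12, 0.30])
print(kept)  # [0 1]
```

The surviving matches would then be passed to a robust estimator for panel (c); with OpenCV, for instance, `cv2.findFundamentalMat(pts1, pts2, cv2.FM_RANSAC)` returns the fundamental matrix together with an inlier mask.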

Figure 11.

Vertical parallax for different numbers of corresponding points.

Figure 12.

Result of relative orientation based on essential matrix decomposition and nonlinear optimization. (a), (b), (c): results for three different stereo image pairs.
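The first step named in Figure 12, decomposing the essential matrix, can be sketched with the textbook SVD factorisation (as given, for example, by Hartley and Zisserman). The sketch assumes the convention E = [t]x R with X2 = R X1 + t for normalised image coordinates and returns all four (R, t) candidates; it leaves out both the cheirality test that selects one candidate and the subsequent nonlinear refinement. The function names are illustrative, not the authors' code.

```python
import numpy as np


def skew(t):
    """Cross-product matrix [t]x, so that skew(t) @ v == np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


def decompose_essential(E):
    """Return the four (R, t) candidates encoded by an essential matrix E.

    With E = U diag(1, 1, 0) V^T, the rotation is U W V^T or U W^T V^T and the
    baseline direction is +/- the last column of U (scale is unrecoverable).
    The valid candidate is normally picked by a cheirality test.
    """
    U, _, Vt = np.linalg.svd(E)
    # E is defined only up to sign, so flipping U or Vt keeps a valid
    # factorisation while making the recovered matrices proper rotations.
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0],
                  [1.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0]])
    R1 = U @ W @ Vt
    R2 = U @ W.T @ Vt
    t = U[:, 2]
    return [(R1, t), (R1, -t), (R2, t), (R2, -t)]


# Usage: build E from a known pose and check that its rotation is recovered.
a = 0.3
R_true = np.array([[np.cos(a), 0.0, np.sin(a)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(a), 0.0, np.cos(a)]])
t_true = np.array([1.0, 0.2, 0.1]) / np.linalg.norm([1.0, 0.2, 0.1])
candidates = decompose_essential(skew(t_true) @ R_true)
print(any(np.allclose(R, R_true) for R, _ in candidates))  # True
```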

Figure 13.

Result of point cloud coloring. (a), (b): Central region restriction; (c): Top view; (d): Side view; (e): Front view; (f): Back view; (g): Total view.
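A minimal sketch of the coloring step shown in Figure 13 follows. It assumes undistorted frames (compare Figure 5), a camera pose (R, t) already available from the orientation steps, and a lookup of the pixel containing each projection, without occlusion handling. The "central region restriction" is interpreted here, as an assumption, as sampling colors only from a centred window of each frame, where lens distortion is usually smallest; `color_points` and `central_fraction` are hypothetical names.

```python
import numpy as np


def color_points(points, image, K, R, t, central_fraction=0.6):
    """Assign RGB colors to 3-D points by projecting them into one frame.

    points: (N, 3) world coordinates; image: (H, W, 3) array;
    K: 3x3 intrinsic matrix; R, t: world-to-camera pose (X_cam = R X + t).
    Only projections inside the centred window spanning central_fraction of
    the width and height are colored; other points keep the color -1 so a
    later frame can color them. Occlusion is not handled.
    """
    points = np.asarray(points, dtype=float)
    h, w = image.shape[:2]
    cam = points @ R.T + t                    # world -> camera coordinates
    colors = -np.ones((len(points), 3), dtype=int)
    in_front = np.flatnonzero(cam[:, 2] > 0)  # skip points behind the camera
    uvw = cam[in_front] @ K.T                 # homogeneous pixel coordinates
    u = uvw[:, 0] / uvw[:, 2]
    v = uvw[:, 1] / uvw[:, 2]
    mx = 0.5 * (1.0 - central_fraction) * w   # horizontal margin in pixels
    my = 0.5 * (1.0 - central_fraction) * h   # vertical margin in pixels
    inside = (u >= mx) & (u < w - mx) & (v >= my) & (v < h - my)
    cols = np.floor(u[inside]).astype(int)    # pixel containing the projection
    rows = np.floor(v[inside]).astype(int)
    colors[in_front[inside]] = image[rows, cols]
    return colors
```

Running the function over successive frames and keeping the first valid color per point is one simple way to combine frames; other fusion rules are equally possible.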

Table 1.

Camera parameters and distortion coefficients.

| Camera parameter | Value (pixels) | Distortion coefficient | Value |
|---|---|---|---|
| fx | 872.339 | k1 | -0.274753 |
| fy | 872.737 | k2 | 0.121296 |
| x0 | 965.446 | k3 | -0.000277 |
| y0 | 541.649 | p1 | -0.000245 |
| | | p2 | -0.031056 |
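Table 1 does not state which distortion model the coefficients belong to. Assuming the Brown–Conrady model in the form used by OpenCV's `calibrateCamera` (three radial terms k1, k2, k3 and two tangential terms p1, p2), the mapping from normalised camera coordinates to pixel coordinates with the Table 1 values can be sketched as follows. OpenCV stores these coefficients in the order (k1, k2, p1, p2, k3), unlike the row order of Table 1; `distort_and_project` is a hypothetical name.

```python
def distort_and_project(x, y, fx=872.339, fy=872.737, x0=965.446, y0=541.649,
                        k1=-0.274753, k2=0.121296, k3=-0.000277,
                        p1=-0.000245, p2=-0.031056):
    """Map normalised camera coordinates (x, y) = (Xc/Zc, Yc/Zc) to pixels.

    Assumed Brown-Conrady model (OpenCV convention); defaults are Table 1.
    """
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    # Radial scaling plus the two tangential (decentering) terms.
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return fx * xd + x0, fy * yd + y0


print(distort_and_project(0.0, 0.0))  # (965.446, 541.649), the principal point
```

Because k1 is negative and dominates, a point away from the centre is pulled inwards (barrel distortion), which is consistent with the curved chessboard lines corrected in Figure 5.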

Table 2.

Registration accuracy based on the collinearity equation.

| No. | Control point X | Control point Y | Control point Z | Image point x | Image point y | dx | dy |
|---|---|---|---|---|---|---|---|
| 0 | 4.58 | -20.79 | 7.39 | 851.0 | 313.0 | -0.6 | 0.3 |
| 1 | -8.07 | -20.88 | 7.30 | 1374.0 | 348.0 | 0.6 | -0.8 |
| 2 | 5.35 | -17.47 | 3.79 | 774.0 | 426.0 | 1.0 | -0.3 |
| 3 | -10.41 | -17.47 | 3.66 | 1561.0 | 483.0 | -0.8 | -2.1 |
| 4 | -8.07 | -20.81 | 10.82 | 1380.0 | 205.0 | -0.3 | -1.1 |
| 5 | 4.68 | -20.77 | 10.88 | 861.7 | 173.9 | 2.6 | -1.9 |
| 6 | 0.64 | -20.54 | 2.70 | 996.0 | 516.0 | -1.6 | 4.2 |
| 7 | -8.11 | -20.89 | 3.09 | 1367.0 | 525.0 | -0.7 | 2.1 |
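The check in Table 2 amounts to projecting each LiDAR control point with the collinearity equation and comparing the result with the measured image point. The sketch below writes the collinearity condition in the computer-vision sign convention (camera looking along +Z, image y growing downwards), which differs from the classical photogrammetric form only in axis and sign conventions. It assumes a known camera centre S and world-to-camera rotation R, undistorted image coordinates, and (dx, dy) taken as measured minus projected; all function names are hypothetical.

```python
import numpy as np


def collinearity_project(X, S, R, fx, fy, x0, y0):
    """Project world point X through a camera with centre S and rotation R.

    R maps world axes to camera axes; the camera looks along +Z and pixel y
    grows downwards (computer-vision convention).
    """
    d = R @ (np.asarray(X, float) - np.asarray(S, float))
    return x0 + fx * d[0] / d[2], y0 + fy * d[1] / d[2]


def registration_residuals(control_xyz, image_xy, S, R, fx, fy, x0, y0):
    """Per point, (dx, dy) = measured image point minus projected control point."""
    res = []
    for X, (x, y) in zip(control_xyz, image_xy):
        u, v = collinearity_project(X, S, R, fx, fy, x0, y0)
        res.append((x - u, y - v))
    return np.array(res)


def rms(values):
    """Root-mean-square of each column (e.g. of the dx and dy residuals)."""
    return np.sqrt(np.mean(np.square(np.asarray(values, float)), axis=0))
```

For reference, `rms` applied to the listed differences gives about 1.24 (dx) and 2.00 (dy) for Table 2, compared with about 0.33 and 0.31 for Table 3.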

Table 3.

Registration accuracy based on Zernike moments.

| No. | Feature point X | Feature point Y | Feature point Z | Image point x | Image point y | dx | dy |
|---|---|---|---|---|---|---|---|
| 0 | 5.36 | -17.39 | 4.24 | 55.3 | 363.7 | -0.3 | 0.3 |
| 1 | -10.42 | -17.43 | 4.09 | 1044.1 | 478.0 | 0.7 | -0.4 |
| 2 | -2.04 | -15.09 | 0.08 | 646.5 | 684.4 | 0.0 | -0.4 |
| 3 | -5.26 | -20.66 | 6.29 | 776.2 | 372.6 | -0.0 | 0.4 |
| 4 | 3.27 | -20.77 | 7.37 | 307.2 | 247.8 | -0.3 | 0.0 |
| 5 | -9.43 | -20.80 | 14.39 | 949.4 | 86.2 | 0.1 | 0.0 |
| 6 | -13.00 | -20.87 | 10.79 | 1058.6 | 255.0 | 0.2 | 0.3 |
| 7 | -14.30 | -20.87 | 7.29 | 1094.5 | 389.0 | -0.4 | -0.3 |
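Table 3 relies on normalized Zernike moments, which this section does not define. As a generic illustration only, the sketch below computes the complex Zernike moment Z_nm = (n + 1)/π · Σ f(x, y) V*_nm(ρ, θ) ΔA of a square grey-level patch mapped onto the unit disk. In the continuous formulation the magnitude |Z_nm| does not change when the patch is rotated, which makes such moments attractive for matching differently oriented images. The normalization used in the paper and the way the moments yield feature points are not shown; the function names are hypothetical.

```python
import numpy as np
from math import factorial


def radial_poly(n, m, rho):
    """Zernike radial polynomial R_n^m(rho); needs n >= |m| and n - |m| even."""
    m = abs(m)
    out = np.zeros_like(rho)
    for s in range((n - m) // 2 + 1):
        c = ((-1) ** s * factorial(n - s)
             / (factorial(s) * factorial((n + m) // 2 - s)
                * factorial((n - m) // 2 - s)))
        out += c * rho ** (n - 2 * s)
    return out


def zernike_moment(img, n, m):
    """Complex Zernike moment Z_nm of a square image mapped onto the unit disk.

    Pixel centres are mapped to [-1, 1] x [-1, 1]; pixels outside the unit
    circle are ignored.
    """
    size = img.shape[0]
    coords = (2.0 * np.arange(size) - (size - 1)) / (size - 1)
    x, y = np.meshgrid(coords, coords)      # x along columns, y along rows
    rho = np.hypot(x, y)
    theta = np.arctan2(y, x)
    inside = rho <= 1.0
    basis_conj = radial_poly(n, m, rho) * np.exp(-1j * m * theta)
    pixel_area = (2.0 / (size - 1)) ** 2    # area of one pixel cell on the disk
    return (n + 1) / np.pi * np.sum(img[inside] * basis_conj[inside]) * pixel_area
```

On this symmetric grid, rotating a patch by 90 degrees with `np.rot90` only multiplies Z_nm by a unit phase factor, so |Z_nm| is preserved to floating-point precision.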

Table 4.

Vertical parallax of different stereo image pairs when using corresponding points of different accuracy.

| Stereo image pair | Top 10 | Top 15 | Top 20 | Top 30 | Top 40 | Top 50 | Top 100 |
|---|---|---|---|---|---|---|---|
| 1 | 1.25 | 1.10 | 0.88 | 1.34 | 1.29 | 1.58 | 4.49 |
| 2 | 1.10 | 1.37 | 0.76 | 1.35 | 1.62 | 1.40 | 4.70 |
| 3 | 1.39 | 1.56 | 1.07 | 1.47 | 1.70 | 1.89 | 4.56 |
| 4 | 1.30 | 1.25 | 1.04 | 1.45 | 1.49 | 1.93 | 3.89 |
| 5 | 1.27 | 1.27 | 0.90 | 1.43 | 1.48 | 1.56 | 4.79 |
| 6 | 1.33 | 1.31 | 0.97 | 1.40 | 1.59 | 1.78 | 3.97 |
| 7 | 1.08 | 1.13 | 0.68 | 1.37 | 1.68 | 1.80 | 4.03 |
| Average | 1.24 | 1.28 | 0.90 | 1.40 | 1.55 | 1.71 | 4.34 |
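Table 4 reports the vertical parallax obtained when only the best 10 to 100 corresponding points are used. Assuming that matches are ranked by descriptor distance and that vertical parallax is measured as the root-mean-square difference between the row coordinates of corresponding points in the relatively oriented (epipolar) geometry, one pair's value could be computed as below. Both the ranking criterion and the RMS aggregate are assumptions, and `vertical_parallax` is a hypothetical name.

```python
import numpy as np


def vertical_parallax(y_left, y_right, distances, top_n):
    """RMS vertical parallax over the top_n best-ranked matches of one pair.

    y_left, y_right: row coordinates of corresponding points after the pair
    has been brought into its relatively oriented (epipolar) geometry, where a
    perfect orientation gives identical rows. distances: ranking scores for
    the matches (smaller is better).
    """
    best = np.argsort(distances)[:top_n]
    q = np.asarray(y_left, float)[best] - np.asarray(y_right, float)[best]
    return float(np.sqrt(np.mean(q ** 2)))
```

The Average row appears to be the arithmetic mean over the seven pairs (up to rounding); for the Top 20 column, (0.88 + 0.76 + 1.07 + 1.04 + 0.90 + 0.97 + 0.68) / 7 = 6.30 / 7 = 0.90.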

Table 5.

Result and accuracy of absolute orientation.

| No. | Model point X | Model point Y | Model point Z | Control point X | Control point Y | Control point Z | Difference X | Difference Y | Difference Z | MSE |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 5.86 | -19.48 | 4.72 | 5.36 | -17.39 | 4.24 | 0.50 | -2.09 | 0.48 | lX: 0.23 |
| 2 | -10.57 | -17.78 | 4.11 | -10.42 | -17.43 | 4.09 | -0.15 | -0.35 | 0.02 | lY: 0.44 |
| 3 | -2.78 | -14.90 | 0.10 | -2.04 | -15.09 | 0.09 | -0.74 | 0.19 | 0.01 | lZ: 0.14 |
| 4 | -5.04 | -18.48 | 5.83 | -5.26 | -18.66 | 6.29 | 0.22 | 0.18 | -0.45 | λ: 0.007 |
| 5 | 2.89 | -19.72 | 7.32 | 3.27 | -20.77 | 7.37 | -0.38 | 1.05 | -0.05 | Φ: 0.50 |
| 6 | -9.47 | -20.94 | 14.37 | -9.43 | -20.80 | 14.39 | -0.04 | -0.14 | -0.02 | Ω: 0.19 |
| 7 | -12.79 | -21.28 | 10.79 | -13.00 | -20.87 | 10.79 | 0.21 | -0.41 | 0.00 | K: 0.61 |
| 8 | 6.36 | -20.71 | 3.68 | 5.36 | -20.39 | 4.24 | 1.00 | -0.32 | -0.56 | -- |
| 9 | -12.51 | -20.00 | 6.35 | -12.42 | -19.45 | 6.10 | -0.09 | -0.55 | 0.25 | -- |
| 10 | -4.33 | -15.07 | 3.13 | -4.04 | -15.10 | 3.09 | -0.29 | 0.03 | 0.04 | -- |
| 11 | -5.84 | -17.27 | 8.01 | -5.26 | -17.66 | 7.29 | -0.58 | 0.39 | 0.72 | -- |
| 12 | 4.14 | -19.53 | 7.32 | 3.27 | -19.77 | 7.37 | 0.87 | 0.24 | -0.05 | -- |
| 13 | -8.67 | -19.72 | 11.06 | -8.43 | -19.80 | 11.39 | -0.23 | 0.08 | -0.33 | -- |
| 14 | -11.89 | -20.60 | 8.23 | -12.00 | -20.87 | 8.79 | 0.11 | 0.27 | -0.56 | -- |
| 15 | -13.08 | -20.99 | 6.77 | -13.40 | -20.87 | 7.29 | 0.32 | -0.12 | -0.52 | -- |
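Absolute orientation in Table 5 estimates a seven-parameter spatial similarity transformation (three translations, the scale λ and the rotations Φ, Ω, K). The classical photogrammetric solution linearizes these parameters and iterates; the sketch below instead swaps in Umeyama's closed-form SVD solution for the same transformation and is not the authors' implementation. In Table 5 the Difference columns equal Model point minus Control point, and `per_axis_mse` averages their squares per axis; the function names are hypothetical.

```python
import numpy as np


def similarity_transform(model, control):
    """Closed-form 7-parameter fit: control ~ s * R @ model + T (Umeyama, 1991)."""
    P = np.asarray(model, float)
    Q = np.asarray(control, float)
    mu_p, mu_q = P.mean(axis=0), Q.mean(axis=0)
    Pc, Qc = P - mu_p, Q - mu_q
    cov = Qc.T @ Pc / len(P)            # cross-covariance, control vs model
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0                  # keep R a rotation, not a reflection
    R = U @ S @ Vt
    s = np.trace(np.diag(D) @ S) / np.mean(np.sum(Pc ** 2, axis=1))
    T = mu_q - s * R @ mu_p
    return s, R, T


def apply_transform(model, s, R, T):
    """Transform model points into the control (object) frame."""
    return s * np.asarray(model, float) @ R.T + T


def per_axis_mse(differences):
    """Mean of the squared differences along X, Y and Z."""
    return np.mean(np.square(np.asarray(differences, float)), axis=0)
```

Applied to the Difference columns of Table 5, `per_axis_mse` returns approximately 0.23, 0.44 and 0.14, matching the lX, lY and lZ entries of the MSE column; those entries therefore appear to be per-axis mean squared differences over all 15 points.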

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).