1. Introduction

Waste is a global concern, and its volume is rising with the growth of urban areas and population; projections show a potential increase of 70% by 2050 if no measures are taken to address it [1]. The increasing complexity of waste composition and the absence of a standardized waste classification system make waste identification challenging, resulting in disparities in waste generation and management practices across regions [2,3].

Understanding household solid waste management practices is essential for advancing integrated solid waste management [4]. Waste identification plays a pivotal role in the waste management process: it enables facilities to manage, recycle, and reduce waste appropriately while ensuring regulatory compliance and monitoring progress over time.

Various studies have applied deep learning models to efficient waste sorting and management, which can contribute to a more sustainable environment. Models such as RWNet [5], Garbage Classification Net [6], Faster Region-Based Convolutional Neural Network [7], and ConvoWaste [8] have been proposed and evaluated, achieving high accuracy and precision in waste classification. These studies also highlight the importance of accurate waste disposal in fighting climate change and reducing greenhouse gas emissions. Some studies also incorporate IoT [9] and waste grid segmentation mechanisms [10] to classify and segregate waste items in real time.

By integrating machine learning models with mobile devices, waste management efforts can be made more precise, efficient, and effective. One study uses an app that applies optimized deep learning techniques to instantly classify waste into trash, recycling, and compost, with an accuracy of 0.881 on the test set [11]. While this shows promise, the approach still needs benchmarking against other state-of-the-art models, and it is limited to three waste categories. In response, we introduce MWaste, a mobile app that uses computer vision and deep learning to classify waste materials as trash, plastic, paper, metal, glass, or cardboard. The app gives users suggestions on how to manage waste in a more straightforward and fun way.

The app was tested with various neural network architectures and on real-world images, achieving a highest precision of 92% on the test set. It can function with or without an internet connection and rewards users by mapping the carbon footprint of the waste they manage. By facilitating efficient waste processing and minimizing the greenhouse gas emissions that arise from improper waste disposal, the app can help combat climate change. It can also provide valuable data for tracking the waste managed and the carbon footprint saved.

The rest of this paper is structured as follows: Section 2 explains the system architecture of MWaste, Sections 3 and 4 detail the training procedure and the experimental evaluation, and Section 5 summarizes the findings of this research.

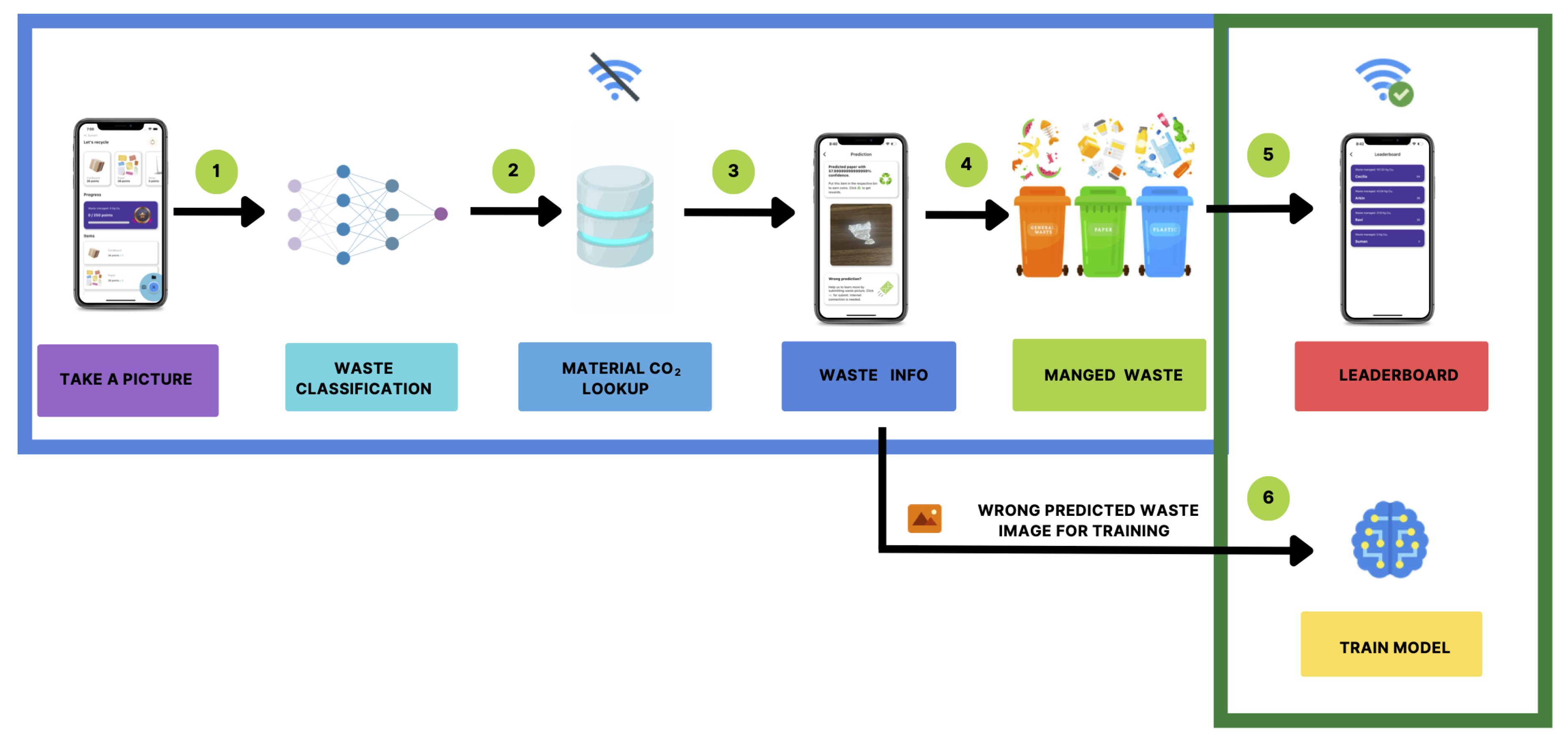

Figure 1. System architecture of MWaste


2. Methods

Classifying waste with machine learning is challenging because the material properties that determine whether an item is recyclable or compostable are hard to detect from images. Waste also takes on many shapes and forms, so the classification technique must handle this variability. Moreover, the recyclability of waste depends on the capabilities of the local recycling center, which the app must take into account.

With these considerations in mind, the app is designed to collect feedback from users and to operate smoothly with or without an internet connection. A waste image is obtained from the gallery or the camera and passed through the waste classification model, which is trained to categorize the waste.

The classification model is obtained by training a specific CNN on a dataset of labeled images. Several state-of-the-art convolutional neural network architectures are tested in this research, including Inception V3 [12], MobileNet V2 [13], Inception ResNet V2 [14], ResNet 50 [15], MobileNet [16], and Xception [17].

The trained model is then converted into a TensorFlow Lite model, since TensorFlow Lite models are highly optimized, efficient, and versatile, which makes them well suited to real-time predictions on mobile devices [18]. Once the waste is identified, the app calculates the carbon emissions associated with the material and provides waste management recommendations. If an item is misclassified, the user can submit its image for further analysis. Managing waste earns reward points, and the amount of carbon footprint saved is also tracked. An internet connection is required to submit misclassified waste images and to sync accumulated points.
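The offline behaviour described above can be sketched as follows. This is a minimal illustration, not the MWaste implementation: the label order in `CLASSES`, the `OfflineStore` class, its method names, and the `upload` callback are all hypothetical, and the model's softmax output is assumed to arrive as a plain list of six probabilities. Classification and point accumulation happen locally, while misclassification reports and earned points wait in a queue until a connection is available.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical label order for the model's six outputs; the real app may differ.
CLASSES = ["cardboard", "glass", "metal", "paper", "plastic", "trash"]


def predict_label(probabilities: List[float]) -> str:
    """Return the class with the highest softmax probability (works offline)."""
    if len(probabilities) != len(CLASSES):
        raise ValueError("expected one probability per class")
    best = max(range(len(CLASSES)), key=lambda i: probabilities[i])
    return CLASSES[best]


@dataclass
class OfflineStore:
    """Hypothetical local store for data that needs a connection to upload."""

    pending_reports: List[Dict[str, str]] = field(default_factory=list)
    unsynced_points: int = 0

    def record_managed_waste(self, points: int) -> None:
        # Points are earned offline and kept locally until the next sync.
        self.unsynced_points += points

    def report_misclassification(self, image_path: str, correct_label: str) -> None:
        # The user's correction is queued rather than sent immediately.
        self.pending_reports.append({"image": image_path, "label": correct_label})

    def sync(self, is_online: bool, upload: Callable[[dict], None]) -> bool:
        """Send queued reports and points via `upload` if online.

        Returns False, keeping everything queued, when offline."""
        if not is_online:
            return False
        while self.pending_reports:
            # Upload before removing, so an exception leaves the report queued.
            upload({"type": "report", **self.pending_reports[0]})
            self.pending_reports.pop(0)
        if self.unsynced_points:
            upload({"type": "points", "points": self.unsynced_points})
            self.unsynced_points = 0
        return True
```

For example, a prediction vector whose largest entry is at index 4 maps to `"plastic"`; calling `sync(is_online=False, ...)` leaves the queue untouched, and a later `sync(is_online=True, ...)` flushes the queued report and the accumulated points in one pass.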

3. Training

This section describes the training procedure and parameter settings used in this research.

3.1. Datasets

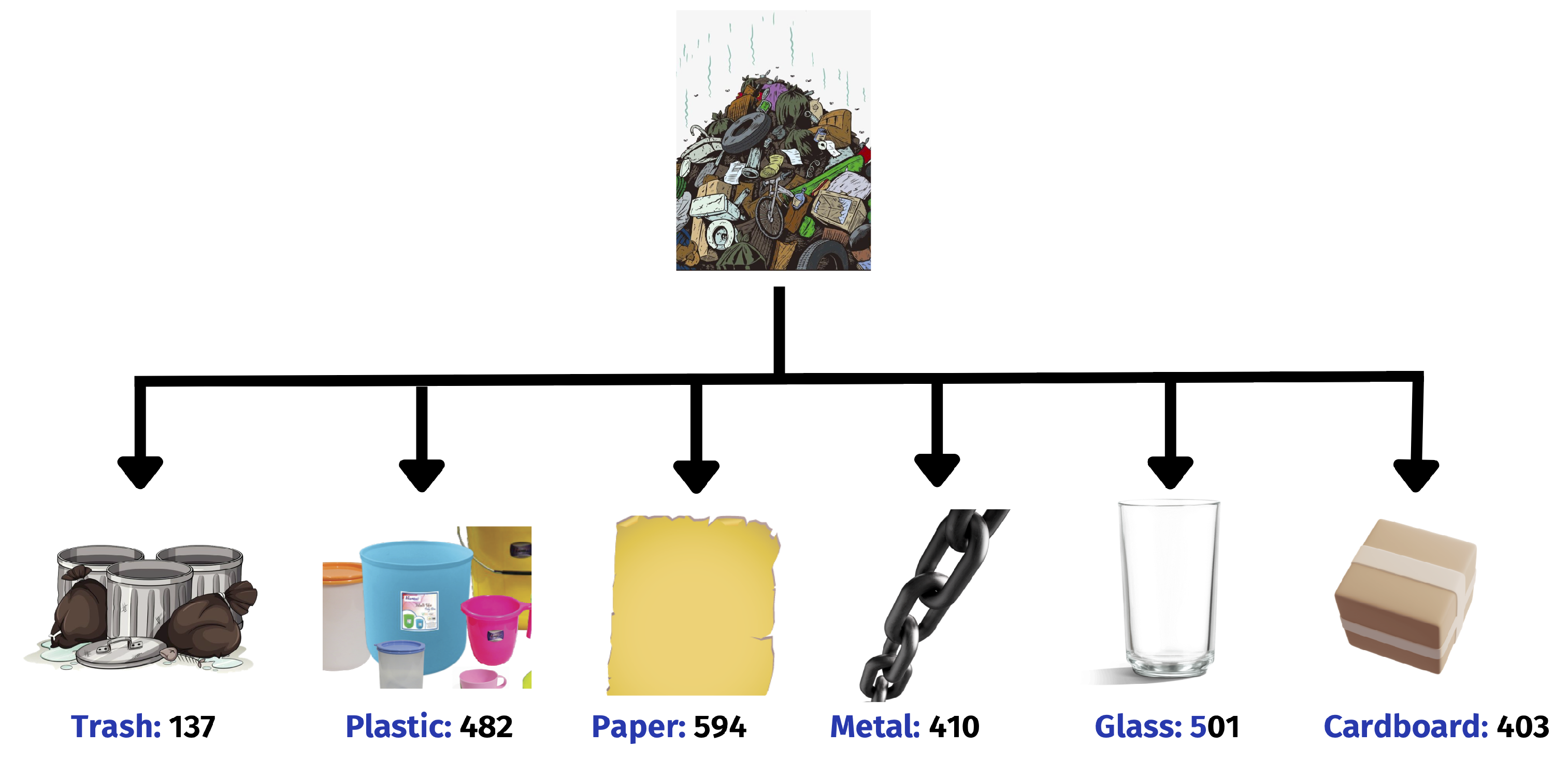

This research uses the publicly available TrashNet dataset [19], which consists of 2,527 images across six classes: glass, paper, cardboard, plastic, metal, and trash. The images were captured with an Apple iPhone 7 Plus, an Apple iPhone 5S, and an Apple iPhone SE, with the objects placed on a white posterboard in sunlight or room lighting. The dataset was annotated by experts. To ensure robustness, 60% of the images were used for training, 13% for testing, and 17% for validation.

Figure 2. Number of images per class in the dataset

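The paper does not say how the subsets were drawn. One common approach is a per-class (stratified) random split, which keeps the class proportions similar in every subset so that the smallest class still appears in each of them. A minimal sketch, assuming samples are given as hypothetical `(image_path, label)` pairs; the fractions are parameters, and whatever remains after the training and validation shares goes to the test set.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[str, str]  # (image_path, label), a hypothetical representation


def stratified_split(
    samples: List[Sample],
    train_frac: float,
    val_frac: float,
    seed: int = 42,
) -> Dict[str, List[Sample]]:
    """Split each class separately so every subset keeps the class proportions."""
    if train_frac < 0 or val_frac < 0 or train_frac + val_frac > 1:
        raise ValueError("fractions must be non-negative and sum to at most 1")

    by_class: Dict[str, List[Sample]] = defaultdict(list)
    for path, label in samples:
        by_class[label].append((path, label))

    rng = random.Random(seed)  # fixed seed for a reproducible split
    splits: Dict[str, List[Sample]] = {"train": [], "val": [], "test": []}
    for label in sorted(by_class):
        items = by_class[label]
        rng.shuffle(items)
        n_train = round(len(items) * train_frac)
        n_val = round(len(items) * val_frac)
        splits["train"].extend(items[:n_train])
        splits["val"].extend(items[n_train:n_train + n_val])
        splits["test"].extend(items[n_train + n_val:])  # remainder goes to test
    return splits
```

Because each class is shuffled and cut independently, a small class is divided in the same proportions as a large one instead of possibly being left out of a subset by chance.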

3.2. Procedure

The dataset is passed through each model while all training settings are kept constant, and the outcomes are then analyzed. Categorical cross-entropy is used as the loss function because it is suited to multiclass problems [20]. Accuracy serves as the metric, and Adam is the optimizer of choice because it combines momentum with adaptive gradients to compute an individual learning rate for each parameter [21].
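The loss and optimizer named above can be written out in a few lines. This is a plain-Python sketch for illustration only; in practice a deep learning framework's built-in loss and optimizer would be used, and the default hyperparameters below are the ones suggested by Kingma and Ba [21], not values reported in this paper.

```python
import math
from typing import List


def categorical_cross_entropy(y_true: List[float], y_pred: List[float], eps: float = 1e-7) -> float:
    """Loss for one sample: -sum_k y_k * log(p_k), with y_true one-hot.

    Predictions are clipped to [eps, 1] so log(0) never occurs."""
    return -sum(t * math.log(min(max(p, eps), 1.0)) for t, p in zip(y_true, y_pred))


class Adam:
    """Minimal Adam optimizer for a flat list of parameters."""

    def __init__(self, n_params: int, lr: float = 1e-3, beta1: float = 0.9,
                 beta2: float = 0.999, eps: float = 1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # first moment: running mean of gradients (momentum)
        self.v = [0.0] * n_params  # second moment: running mean of squared gradients
        self.t = 0  # step counter used for bias correction

    def step(self, params: List[float], grads: List[float]) -> List[float]:
        """Return updated parameters after one Adam step."""
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            # Bias correction compensates for initialising m and v at zero.
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            # Each parameter's step is scaled by its own gradient history.
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated
```

With a one-hot target whose true class is predicted with probability 0.7, the loss is -log(0.7), about 0.357. On the first Adam step the bias-corrected ratio m_hat / sqrt(v_hat) equals the sign of the gradient, so each parameter moves by roughly `lr` regardless of the gradient's magnitude.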

Global average pooling is added after the final convolutional layer; it averages each feature map into a single value, producing a compact feature vector for the classification task [22]. Three dense layers are then employed to learn complex functions and improve classification accuracy. To avoid overfitting, dropout is added as a regularization technique [23]. Softmax is used as the activation function of the output layer to convert the output values into class probabilities [24].
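A plain-Python sketch of the forward pass through such a classification head follows. The paper does not report layer widths, hidden activations, the dropout rate, or where dropout is placed; this sketch assumes ReLU hidden layers with dropout after each, a hypothetical default rate of 0.5, and weights passed in as nested lists. In the real model these layers would sit on top of a pretrained backbone and be trained with the loss and optimizer described above.

```python
import math
import random
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def global_average_pool(feature_maps: List[Matrix]) -> List[float]:
    """Average each H x W feature map to one number: C maps -> C features."""
    return [sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0])) for fmap in feature_maps]


def dense(x: List[float], weights: Matrix, bias: List[float], relu: bool = True) -> List[float]:
    """Fully connected layer; weights[j] holds the input weights of unit j."""
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]
    return [max(0.0, o) for o in out] if relu else out


def dropout(x: List[float], rate: float, training: bool,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: during training, zero each unit with probability `rate`
    and rescale survivors by 1 / (1 - rate); at inference, pass x through."""
    if not training or rate == 0.0:
        return list(x)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [xi / keep if rng.random() < keep else 0.0 for xi in x]


def softmax(logits: List[float]) -> List[float]:
    """Convert raw scores to probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classification_head(
    feature_maps: List[Matrix],
    hidden_layers: List[Tuple[Matrix, List[float]]],
    output_layer: Tuple[Matrix, List[float]],
    dropout_rate: float = 0.5,
    training: bool = False,
    rng: Optional[random.Random] = None,
) -> List[float]:
    x = global_average_pool(feature_maps)  # one value per feature map
    for weights, bias in hidden_layers:
        x = dropout(dense(x, weights, bias), dropout_rate, training, rng)
    logits = dense(x, *output_layer, relu=False)  # one raw score per class
    return softmax(logits)  # class probabilities that sum to 1
```

Note that dropout is only active when `training=True`; at prediction time, as on the phone, the head is deterministic and the softmax output can be read directly as per-class probabilities.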

4. Evaluation

This section describes the evaluation metrics and compares the models' results on them.

4.1. Evaluation Metrics

Evaluation metrics summarize the performance of the models. This study uses the accuracy score and the F1 score as evaluation metrics.

4.1.1. Accuracy Score

Classification accuracy is defined as the percentage of correct predictions out of the total number of samples analyzed. It is calculated by dividing the number of correct predictions by the total number of predictions and multiplying the resulting fraction by 100 [25]. The formula for the accuracy score is as follows:

$$\text{Accuracy} = \frac{\text{Number of correct predictions}}{\text{Total number of predictions}} \times 100$$
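The formula translates directly into code. A minimal sketch, assuming predictions and ground-truth labels are given as equal-length sequences of class names:

```python
from typing import Sequence


def accuracy_score(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Percentage of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("need two non-empty label sequences of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true) * 100
```

For instance, three correct predictions out of four samples give an accuracy of 75.0.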

4.1.2. F1 Score

Comparing two models with contrasting levels of precision and recall, such as one with poor precision but strong recall, can be challenging, because improving precision may reduce recall and vice versa, which can cause confusion [26]. Hence, the F1 score, the harmonic mean of precision and recall, is used to compare such models, as it evaluates precision and recall simultaneously.

The F1 score is also useful when the class distribution is imbalanced [27]. Because most real-world classification problems involve uneven class distributions, the F1 score is often a more suitable metric than accuracy for evaluating a model.
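For a single class, precision is P = TP / (TP + FP), recall is R = TP / (TP + FN), and F1 = 2PR / (P + R). The paper does not state how F1 is averaged over the six classes; the sketch below assumes macro averaging (the unweighted mean of per-class scores), which is one common choice for imbalanced data because a rare class counts as much as a frequent one.

```python
from typing import Dict, Sequence


def per_class_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """F1 for each class, treating that class as 'positive' and all others as 'negative'."""
    scores: Dict[str, float] = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        # Harmonic mean of precision and recall; 0 when both are 0.
        scores[c] = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return scores


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 scores."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores)
```

With nine paper items and one trash item all predicted as paper, accuracy is 90%, yet the macro F1 is about 0.47 because the trash class scores 0. This is the imbalance effect the text describes.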

4.2. Model Evaluation

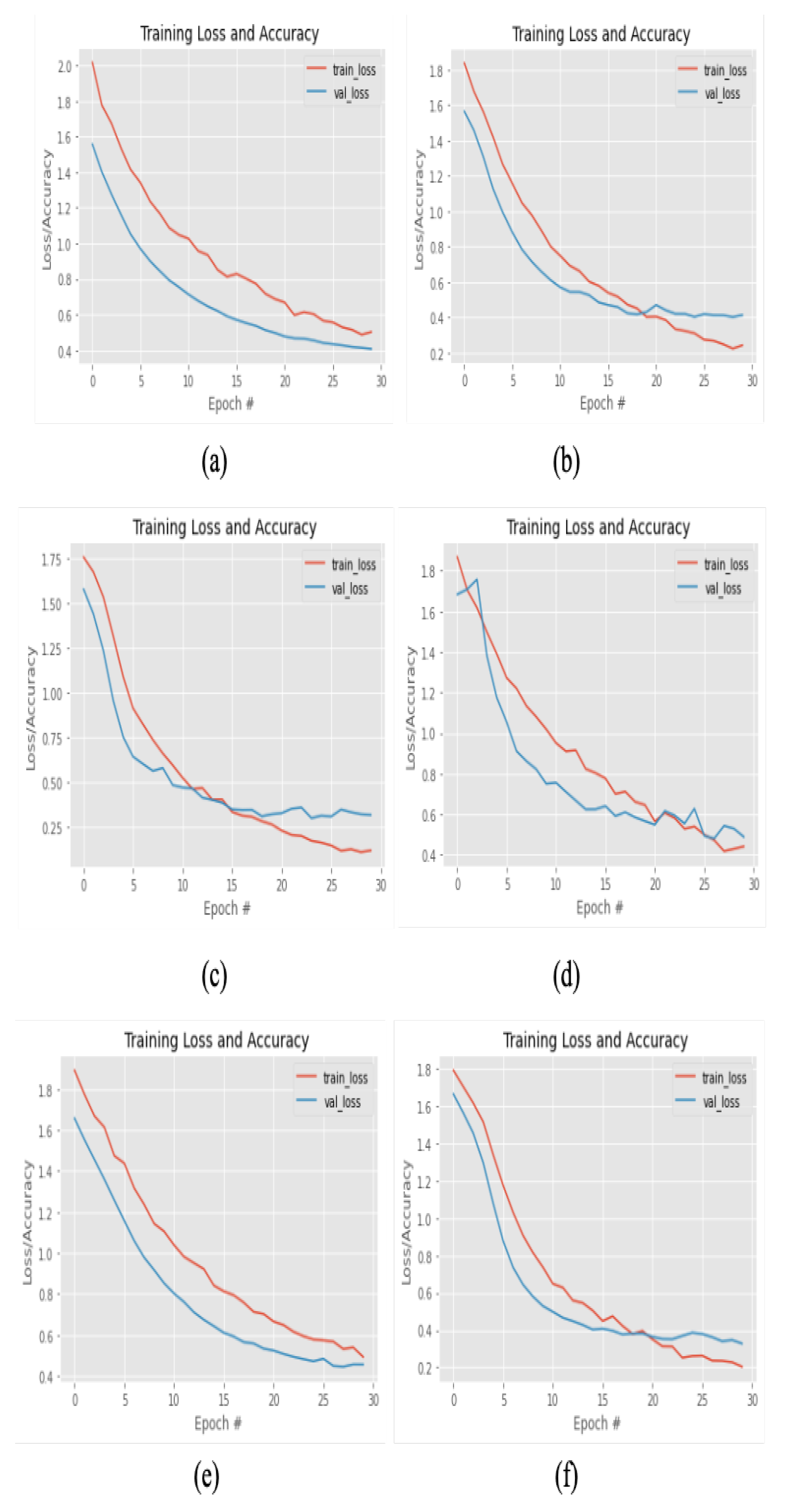

All models are evaluated with the same settings, and their outputs are measured using the accuracy score and the F1 score. As shown in Table 1, InceptionResNetV2 and Xception achieve higher accuracy, but the loss is higher for the InceptionResNetV2 and Inception V3 models.

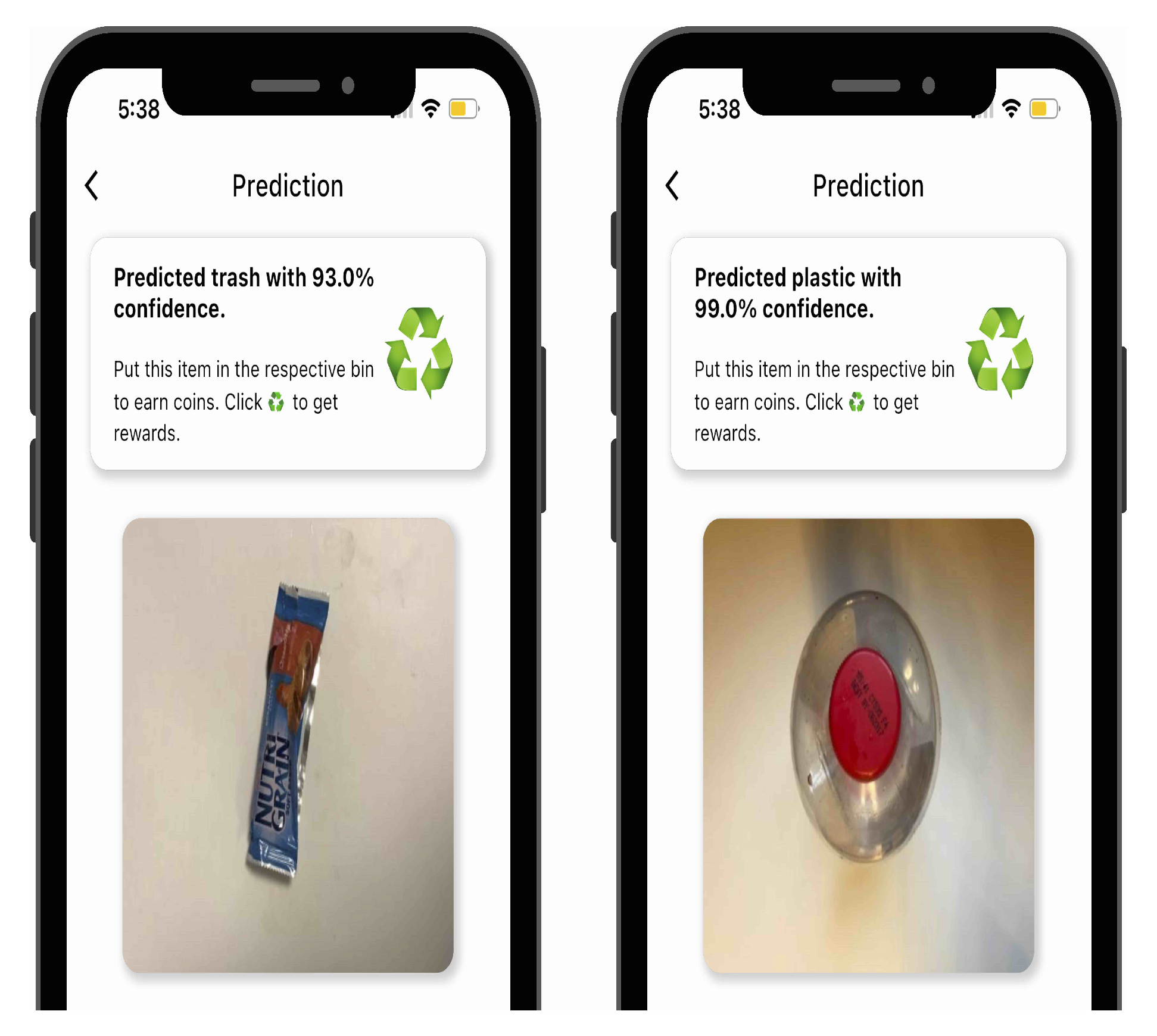

Figure 3 shows the classification result for a waste item from the training set.

The accuracy and loss of each model during training are shown in Figure 4.

5. Conclusion and Future Work

This study presents a mobile application that uses deep learning techniques to classify waste in real time. The app categorizes waste into six groups (plastic, paper, metal, glass, cardboard, and trash) and is publicly available together with a trained model (https://github.com/sumn2u/deep-waste-app). The app incorporates gamification strategies, such as a leaderboard based on waste management points, to motivate users to dispose of waste properly.

The team plans to improve the accuracy of the classification system, form partnerships with local recycling companies, and expand the dataset to raise awareness of environmental impacts and reduce incorrect waste disposal.

Acknowledgments

My heartfelt appreciation goes out to Gary Thung and Mindy Yang for sharing the TrashNet dataset on GitHub for public use. This dataset has proven to be an invaluable asset for my research on waste management and classification, and I am deeply thankful for their hard work in gathering and disseminating this information to a wider audience.

References

- S. Kaza, L. C. Yao, P. Bhada-Tata, and F. Van Woerden, What a Waste 2.0. Washington, DC: World Bank, Sep. 2018. [Online]. Available: http://hdl.handle.net/10986/30317.

- N. Ferronato and V. Torretta, “Waste mismanagement in developing countries: a review of global issues,” International Journal of Environmental Research and Public Health, vol. 16, no. 6, p. 1060, Mar. 2019. [Online]. Available: https://www.mdpi.com/1660-4601/16/6/1060.

- M. N. Shazia Iqbal, Tayyaba Naz, “Challenges and opportunities linked with waste management under global perspective: a mini review,” Journal of Quality Assurance in Agricultural Sciences, pp. 9–13, Aug. 2021. [Online]. Available: http://isciencepress.com/index.php/Jqaas/article/view/5.

- W. Fadhullah, N. I. N. Imran, S. N. S. Ismail, M. H. Jaafar, and H. Abdullah, “Household solid waste management practices and perceptions among residents in the East Coast of Malaysia,” BMC Public Health, vol. 22, no. 1, p. 1, Dec. 2022. [Online]. Available: https://bmcpublichealth.biomedcentral.com/articles/10.1186/s12889-021-12274-7.

- K. Lin, Y. Zhao, X. Gao, M. Zhang, C. Zhao, L. Peng, Q. Zhang, and T. Zhou, “Applying a deep residual network coupling with transfer learning for recyclable waste sorting,” Environmental Science and Pollution Research, vol. 29, no. 60, pp. 91081–91095, Dec. 2022. [Online]. Available: https://link.springer.com/10.1007/s11356-022-22167-w.

- W. Liu, H. Ouyang, Q. Liu, S. Cai, C. Wang, J. Xie, and W. Hu, “Image recognition for garbage classification based on transfer learning and model fusion,” Mathematical Problems in Engineering, vol. 2022, pp. 1–12, Aug. 2022. [Online]. Available: https://www.hindawi.com/journals/mpe/2022/4793555/.

- J. Rashida, R. Hamzah, K. A. Fariza Abu Samah, and S. Ibrahim, “Implementation of faster region-based convolutional neural network for waste type classification,” in 2022 International Conference on Computer and Drone Applications (IConDA), 2022, pp. 125–130.

- M. S. Nafiz, S. S. Das, M. K. Morol, A. A. Juabir, and D. Nandi, “Convowaste: An automatic waste segregation machine using deep learning,” 2023 3rd International Conference on Robotics, Electrical and Signal Processing Techniques (ICREST), pp. 181–186, 2023.

- S. M. Cheema, A. Hannan, and I. M. Pires, “Smart waste management and classification systems using cutting edge approach,” Sustainability, 2022.

- S. M, N. V., J. Katyal, and R. R, “Technical solutions for waste classification and management: A mini-review.” Waste management & research : the journal of the International Solid Wastes and Public Cleansing Association, ISWA, p. 734242X221135262, 2022.

- Y. Narayan, “Deepwaste: Applying deep learning to waste classification for a sustainable planet,” 2021.

- J.-w. Feng and X.-y. Tang, “Office garbage intelligent classification based on inception-v3 transfer learning model,” Journal of Physics: Conference Series, vol. 1487, no. 1, p. 012008, Mar. 2020. [Online]. Available: https://iopscience.iop.org/article/10.1088/1742-6596/1487/1/012008.

- L. Yong, L. Ma, D. Sun, and L. Du, “Application of MobileNetV2 to waste classification,” PLOS ONE, vol. 18, no. 3, p. e0282336, Mar. 2023. [Online]. Available: https://dx.plos.org/10.1371/journal.pone.0282336.

- S.-W. Lee, “Novel classification method of plastic wastes with optimal hyperparameter tuning of Inception_resnetv2,” in 2021 4th International Conference on Information and Communications Technology (ICOIACT). Yogyakarta, Indonesia: IEEE, Aug. 2021, pp. 274–279. [Online]. Available: https://ieeexplore.ieee.org/document/9563917/.

- A. S. Girsang, R. Yunanda, M. E. Syahputra, and E. Peranginangin, “Convolutional neural network using res-net for organic and anorganic waste classification,” in 2022 IEEE International Conference of Computer Science and Information Technology (ICOSNIKOM), 2022, pp. 01–06.

- I. F. Nurahmadan, R. M. Arjuna, H. D. Prasetyo, P. A. Hogantara, I. N. Isnainiyah, and R. Wirawan, “A mobile based waste classification using mobilenets-v1 architecture,” in 2021 International Conference on Informatics, Multimedia, Cyber and Information System (ICIMCIS), 2021, pp. 279–284.

- Rismiyati, S. N. Endah, Khadijah, and I. N. Shiddiq, “Xception architecture transfer learning for garbage classification,” in 2020 4th International Conference on Informatics and Computational Sciences (ICICoS), 2020, pp. 1–4.

- “Tensorflow lite.” [Online]. Available: https://www.tensorflow.org/lite/guide.

- G. Thung and M. Yang, “Trashnet,” 2017. [Online]. Available: https://github.com/garythung/trashnet.

- Y. Ho and S. Wookey, “The real-world-weight cross-entropy loss function: modeling the costs of mislabeling,” IEEE Access, vol. 8, pp. 4806–4813, 2020. [Online]. Available: https://ieeexplore.ieee.org/document/8943952/.

- D. P. Kingma and J. Ba, “Adam: a method for stochastic optimization,” Jan. 2017, arXiv:1412.6980 [cs]. [Online]. Available: http://arxiv.org/abs/1412.6980.

- B. Zhang, Q. Zhao, W. Feng, and S. Lyu, “Alphamex: A smarter global pooling method for convolutional neural networks,” Neurocomputing, vol. 321, pp. 36–48, 2018. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0925231218310610.

- H. Salehinejad and S. Valaee, “Ising-dropout: A regularization method for training and compression of deep neural networks,” in ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2019, pp. 3602–3606.

- Y. Zhou, X. Wang, M. Zhang, J. Zhu, R. Zheng, and Q. Wu, “Mpce: a maximum probability based cross entropy loss function for neural network classification,” IEEE Access, vol. 7, pp. 146331–146341, 2019. [Online]. Available: https://ieeexplore.ieee.org/document/8862886/.

- J. Li, M. Gao, and R. D’Agostino, “Evaluating classification accuracy for modern learning approaches,” Statistics in Medicine, vol. 38, no. 13, pp. 2477–2503, Jun. 2019. [Online]. Available: https://onlinelibrary.wiley.com/doi/10.1002/sim.8103.

- H. Uzen, M. Turkoglu, and D. Hanbay, “Surface defect detection using deep u-net network architectures,” in 2021 29th Signal Processing and Communications Applications Conference (SIU). Istanbul, Turkey: IEEE, Jun. 2021, pp. 1–4. [Online]. Available: https://ieeexplore.ieee.org/document/9477790/.

- N. W. S. Wardhani, M. Y. Rochayani, A. Iriany, A. D. Sulistyono, and P. Lestantyo, “Cross-validation metrics for evaluating classification performance on imbalanced data,” in 2019 International Conference on Computer, Control, Informatics and its Applications (IC3INA). Tangerang, Indonesia: IEEE, Oct. 2019, pp. 14–18. [Online]. Available: https://ieeexplore.ieee.org/document/8949568/.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).