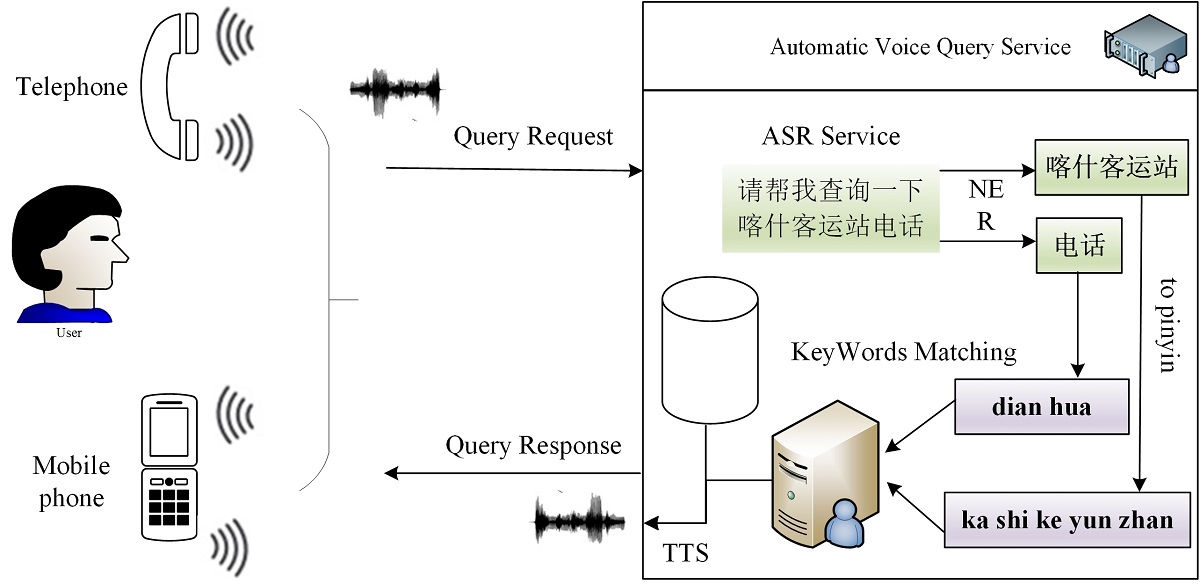

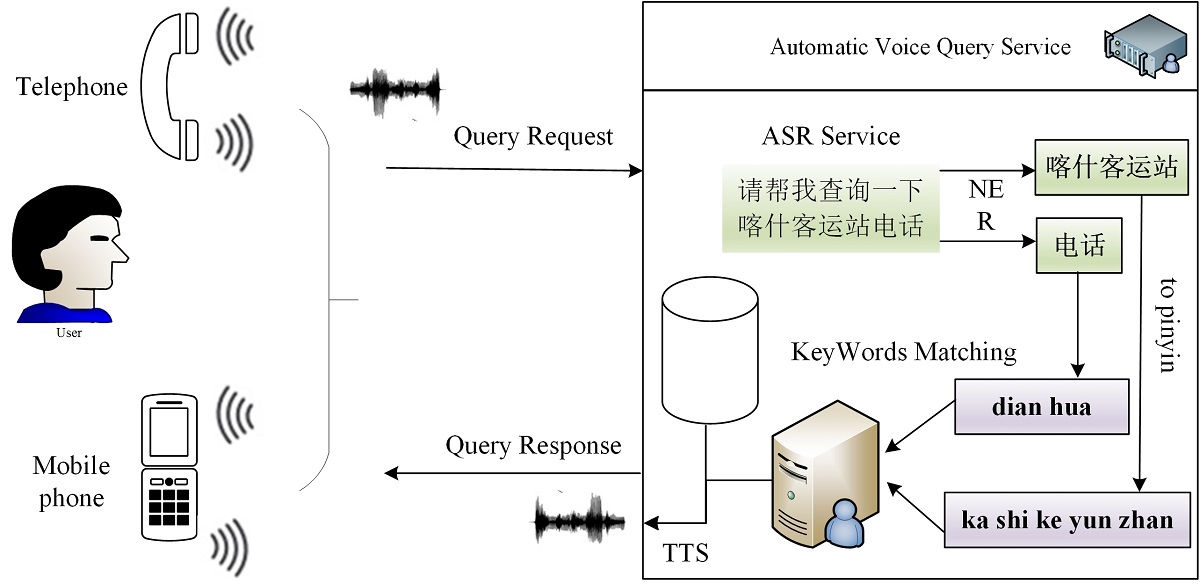

An automatic voice query service (AVQS) can greatly reduce labor costs and improve response efficiency for users. Automatic speech recognition (ASR) is one of the key components of an AVQS. However, because China has many dialect regions, an AVQS must serve multi-accented Mandarin speakers with a single acoustic model, which severely limits ASR accuracy on multi-accented speech. In this paper, a new AVQS framework is proposed to improve response accuracy. First, a fused feature combining i-vectors and filterbank acoustic features is used to train a Transformer-CTC model. Second, the Transformer-CTC model is used to build an end-to-end ASR system. Finally, a keyword matching algorithm for AVQS based on fuzzy mathematics is proposed to further improve response accuracy. The results show that the proposed framework reaches a final accuracy of 91.5%. The proposed framework can meet the service requirements of different regions of mainland China. This research is significant for exploring the application value of artificial intelligence in real-world scenarios.
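
As an illustration of the fused feature, one common way to combine an utterance-level i-vector with frame-level filterbank features is to append the same i-vector to every frame. The sketch below assumes that scheme; the abstract does not state how the fusion is performed, and the function name `fuse_features` and the toy dimensions are hypothetical.

```python
from typing import List


def fuse_features(
    fbank_frames: List[List[float]], ivector: List[float]
) -> List[List[float]]:
    """Append one utterance-level i-vector to every filterbank frame.

    Assumption: fusion is plain per-frame concatenation. The source
    does not specify the fusion scheme.
    """
    if not fbank_frames:
        return []
    dim = len(fbank_frames[0])
    for frame in fbank_frames:
        if len(frame) != dim:
            raise ValueError("all filterbank frames must have the same dimension")
    # Each fused frame has dimension len(frame) + len(ivector).
    return [list(frame) + list(ivector) for frame in fbank_frames]


# Toy example: 2 frames of 3 filterbank coefficients, 2-dimensional i-vector.
fbank = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
ivec = [1.0, -1.0]
fused = fuse_features(fbank, ivec)
```

In this sketch the speaker and accent information carried by the i-vector is constant across the utterance, while the filterbank part varies frame by frame; the fused frames would then be the input to the acoustic model.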