Submitted: 25 December 2025

Posted: 23 January 2026


Abstract

Keywords:

1. Introduction

2. Literature Review

3. Methodology

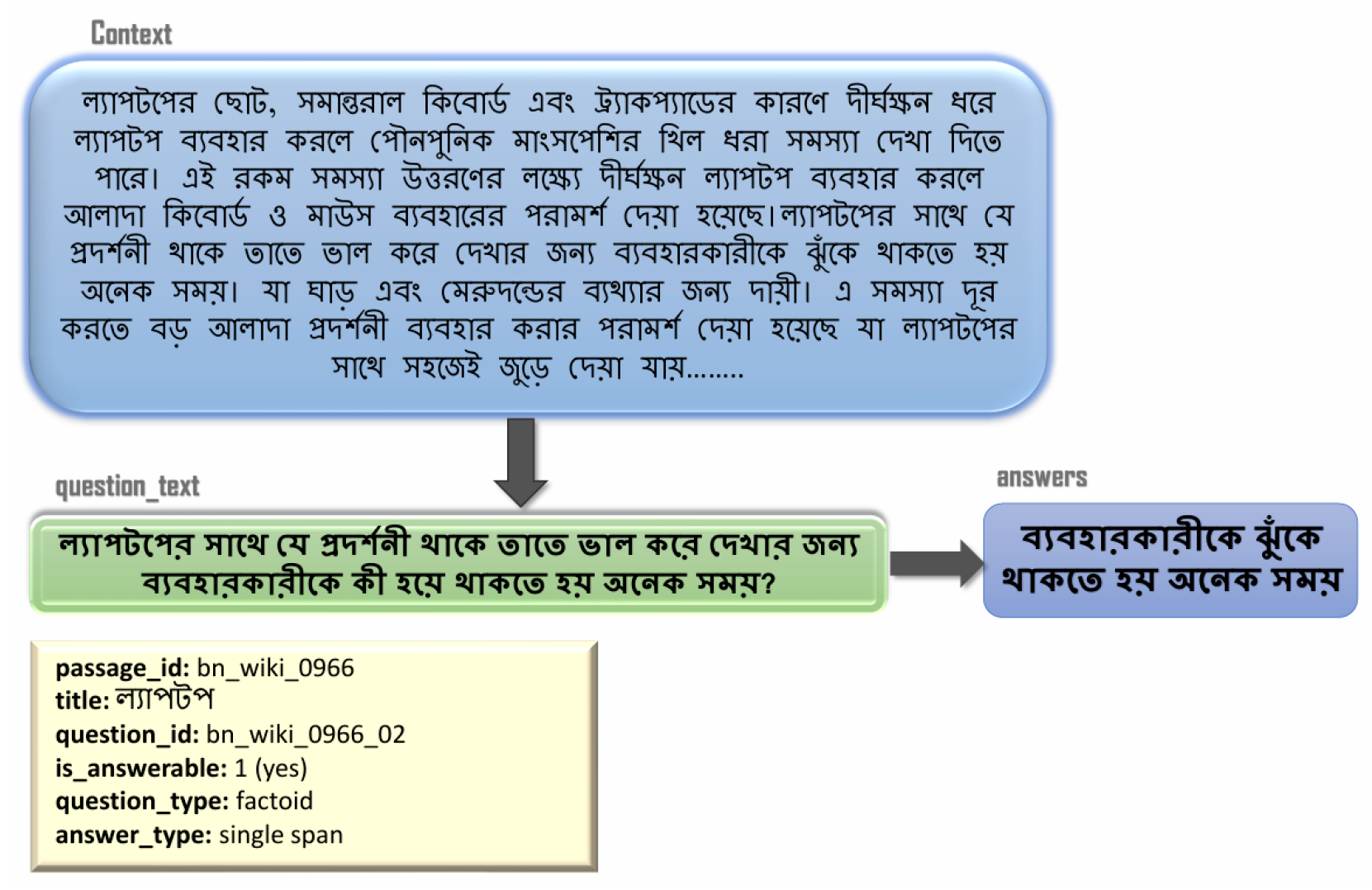

3.1. Dataset Overview

- Context Passages: The foundation comprises 3,000 text passages from Bangla Wikipedia, selected for broad domain coverage and cleaned for quality and consistency by removing hyperlinks, citations, and non-Bangla text.

- Question Types: The passages yielded 14,889 questions, categorized into four types that address different challenges:

  - Factoid: Questions that ask for specific facts, often beginning with interrogatives such as "কী (What)", "কে (Who)", "কখন (When)", "কোথায় (Where)", or "কোনটি (Which)".

  - Causal: Questions that ask about reasons or methods, typically starting with "কেন (Why)" or "কিভাবে (How)".

  - Confirmation: Questions that can be answered definitively with either "হ্যাঁ (Yes)" or "না (No)", often requiring inference.

  - List: Questions that require enumerating multiple items or facts from the passage. They contain keywords such as "কী কী/কোনগুলো (What are)" and "কারা কারা (Who are)".

  Crucially, 3,631 of the 14,889 questions are unanswerable: the context passage does not contain the information required to answer them.

- Answer Types: Answers were collected by human annotators and fall into four categories. For answerable questions, the answers take one of three formats:

  - Single-Span: A single, contiguous segment of text extracted directly from the passage.

  - Multiple Spans: An answer composed of several text segments from different parts of the passage, joined with semicolons as separators.

  - Yes/No: A direct binary response of either "হ্যাঁ (Yes)" or "না (No)".
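The passage-cleaning step (removing hyperlinks, citations, and non-Bangla text) could be approximated with a few regular expressions. This is a minimal sketch under assumed rules, not the authors' pipeline: the patterns, and the choice of which characters count as "Bangla" (the Unicode Bengali block U+0980 to U+09FF plus the danda marks, zero-width joiners, whitespace, ASCII digits, and basic punctuation), are hypothetical.

```python
import re

# Hypothetical cleaning rules; the paper does not publish its exact patterns.
URL_RE = re.compile(r"https?://\S+|www\.\S+")
# Bracketed numeric citations such as "[12]"; \d also matches Bengali digits.
CITATION_RE = re.compile(r"\[\s*\d+\s*\]")
# Drop anything outside the Bengali block (U+0980-U+09FF), the danda marks
# (U+0964, U+0965), zero-width (non-)joiners, whitespace, ASCII digits and basic punctuation.
NON_BANGLA_RE = re.compile(r"[^\u0980-\u09FF\u0964\u0965\u200c\u200d\s0-9.,;:!?()'-]")


def clean_passage(text: str) -> str:
    """Strip URLs, citation markers and non-Bangla characters, then tidy whitespace."""
    text = URL_RE.sub(" ", text)
    text = CITATION_RE.sub("", text)
    text = NON_BANGLA_RE.sub("", text)
    return re.sub(r"\s+", " ", text).strip()


print(clean_passage("ঢাকা বাংলাদেশের রাজধানী।[1] See https://bn.wikipedia.org"))
```

Here the call prints `ঢাকা বাংলাদেশের রাজধানী।`: the URL, the `[1]` marker, and the Latin word are removed. URLs are stripped before the character filter so that their Latin fragments do not leave stray punctuation behind.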
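The annotation scheme can be summarized as a small data model. The sketch below is a hypothetical Python representation, not the dataset's released format: the field names, the empty-string convention for unanswerable questions, and the assumption that the fourth answer category is "unanswerable" are illustrative. Only the four question types, the Yes/No answer values, and the semicolon separator for multiple spans come from the dataset description.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class QuestionType(Enum):
    FACTOID = "factoid"
    CAUSAL = "causal"
    CONFIRMATION = "confirmation"
    LIST = "list"


class AnswerType(Enum):
    SINGLE_SPAN = "single_span"
    MULTIPLE_SPANS = "multiple_spans"
    YES_NO = "yes_no"
    UNANSWERABLE = "unanswerable"  # assumed label for the fourth category


YES_NO_VALUES = {"হ্যাঁ", "না"}  # "Yes" and "No"


@dataclass
class QARecord:
    # Hypothetical field names; not the dataset's published schema.
    passage: str
    question: str
    question_type: QuestionType
    answer: str  # assumption: an empty string marks an unanswerable question

    def spans(self) -> List[str]:
        """Split the answer on semicolons into its non-empty, stripped spans."""
        return [part.strip() for part in self.answer.split(";") if part.strip()]

    def answer_type(self) -> AnswerType:
        """Infer the answer format from the answer string."""
        spans = self.spans()
        if not spans:
            return AnswerType.UNANSWERABLE
        if len(spans) > 1:
            return AnswerType.MULTIPLE_SPANS
        if spans[0] in YES_NO_VALUES:
            return AnswerType.YES_NO
        return AnswerType.SINGLE_SPAN


record = QARecord(passage="...", question="...", question_type=QuestionType.LIST,
                  answer="ঢাকা; চট্টগ্রাম")
print(record.spans(), record.answer_type())
```

Inferring the format from the string is a simplification: a genuine single-span answer consisting only of "না" would be labelled Yes/No, so a real release would more likely store the answer type explicitly.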

3.2. Models

- GPT-4-Turbo: A proprietary model in OpenAI's GPT-4 family, designed for a wide range of language understanding and generation tasks.

- Meta-Llama-3.1-8B: A compact, 8-billion-parameter model from Meta. Its architecture uses 32 attention heads and an expanded vocabulary of about 128,000 tokens.

- Qwen2.5-72B-Instruct: Alibaba's large language model with 72 billion parameters, instruction-tuned to follow task prompts.

- Mixtral-8x22B-Instruct-v0.1: An instruction-tuned sparse mixture-of-experts (SMoE) model from Mistral AI. Each layer contains eight expert feed-forward networks, and a router activates two of them for each token, so only about 39 billion of the model's roughly 141 billion parameters are used per token.

- Google-Gemma-7B: Google's 7-billion-parameter model offers balanced performance on language understanding and generation tasks. Its compact architecture, relative to larger models, makes it practical to adapt to domain-specific applications.

- Mistral-7B-v0.1: This foundational model from Mistral AI (7 billion parameters) serves as a capable base for various language processing applications.
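Mixtral-8x22B is a sparse mixture-of-experts model whose router sends each token to two of its eight experts. The toy sketch below illustrates that routing; the scalar "experts" and the router logits are hypothetical stand-ins (in the real model each expert is a feed-forward network and the logits come from a learned gating layer). It only demonstrates the mechanism: keep the two highest-scoring experts, apply a softmax to their logits, and return the weighted sum of those two experts' outputs.

```python
import math
from typing import Callable, Sequence


def top2_moe(x: float, router_logits: Sequence[float],
             experts: Sequence[Callable[[float], float]]) -> float:
    """Combine the outputs of the two highest-scoring experts for one token."""
    if len(router_logits) != len(experts):
        raise ValueError("need one router logit per expert")
    # Indices of the two largest router logits (ties keep the lower index first).
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:2]
    # Softmax over the selected logits only, so the two weights sum to 1.
    m = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Only the selected experts are evaluated; the remaining six are skipped.
    return sum(w * experts[i](x) for w, i in zip(weights, top))


# Eight hypothetical scalar "experts": expert k multiplies its input by k + 1.
experts = [lambda v, k=k: (k + 1) * v for k in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.3, 0.2]
print(top2_moe(1.0, logits, experts))
```

With the tied top logits (2.0 for experts 1 and 4), both experts receive weight 0.5, so the call returns 0.5 × 2 + 0.5 × 5 = 3.5. Because the other six experts are never evaluated, a sparse model can hold far more parameters than it uses for any single token.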

3.3. Prompt Design and Evaluation

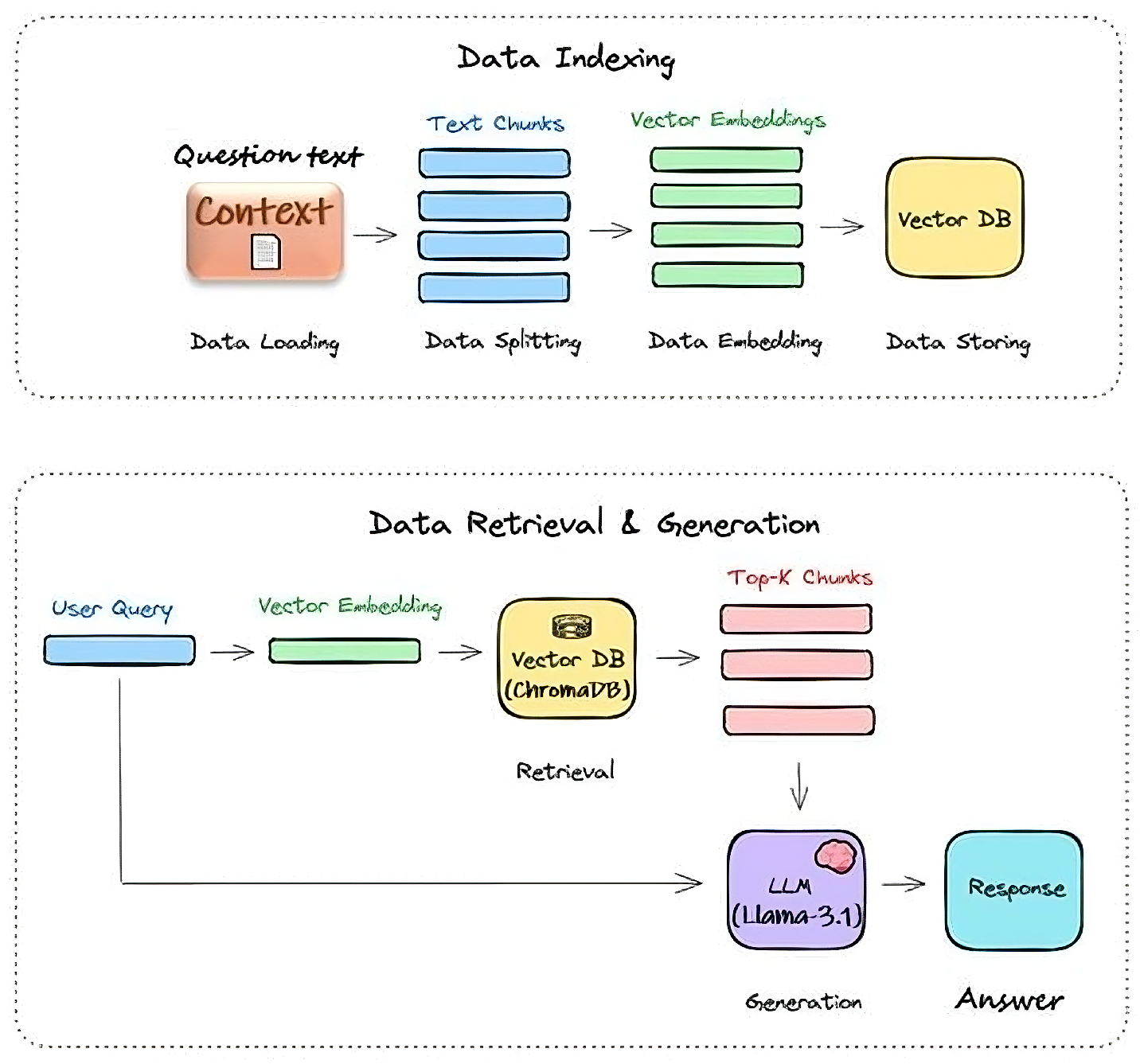

3.4. Retrieval-Augmented Generation (RAG) Framework
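The retrieval step of a generic RAG pipeline can be sketched as follows, assuming dense passage embeddings ranked by cosine similarity (a vector store such as Chroma can run this kind of nearest-neighbour search at scale). The embedding vectors, the value of k, and the prompt template are hypothetical and are not the paper's configuration.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec: Sequence[float],
             passages: List[Tuple[str, Sequence[float]]],
             k: int = 2) -> List[str]:
    """Return the texts of the k passages whose embeddings are most similar to the query."""
    ranked = sorted(passages,
                    key=lambda item: cosine_similarity(query_vec, item[1]),
                    reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, contexts: List[str]) -> str:
    """Hypothetical zero-shot RAG prompt: retrieved passages first, then the question."""
    context_block = "\n\n".join(contexts)
    return f"Context:\n{context_block}\n\nQuestion: {question}\nAnswer:"


# Toy corpus with made-up 3-dimensional embeddings.
corpus = [
    ("passage A", [1.0, 0.0, 0.0]),
    ("passage B", [0.0, 1.0, 0.0]),
    ("passage C", [0.7, 0.7, 0.0]),
]
top_passages = retrieve([1.0, 0.1, 0.0], corpus, k=2)
print(top_passages)
print(build_prompt("hypothetical question", top_passages))
```

For the query vector above, passage A (similarity about 0.995) and passage C (about 0.774) are selected ahead of passage B (about 0.10). The prompt that reaches the language model then contains only those two passages.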

4. Results and Discussion

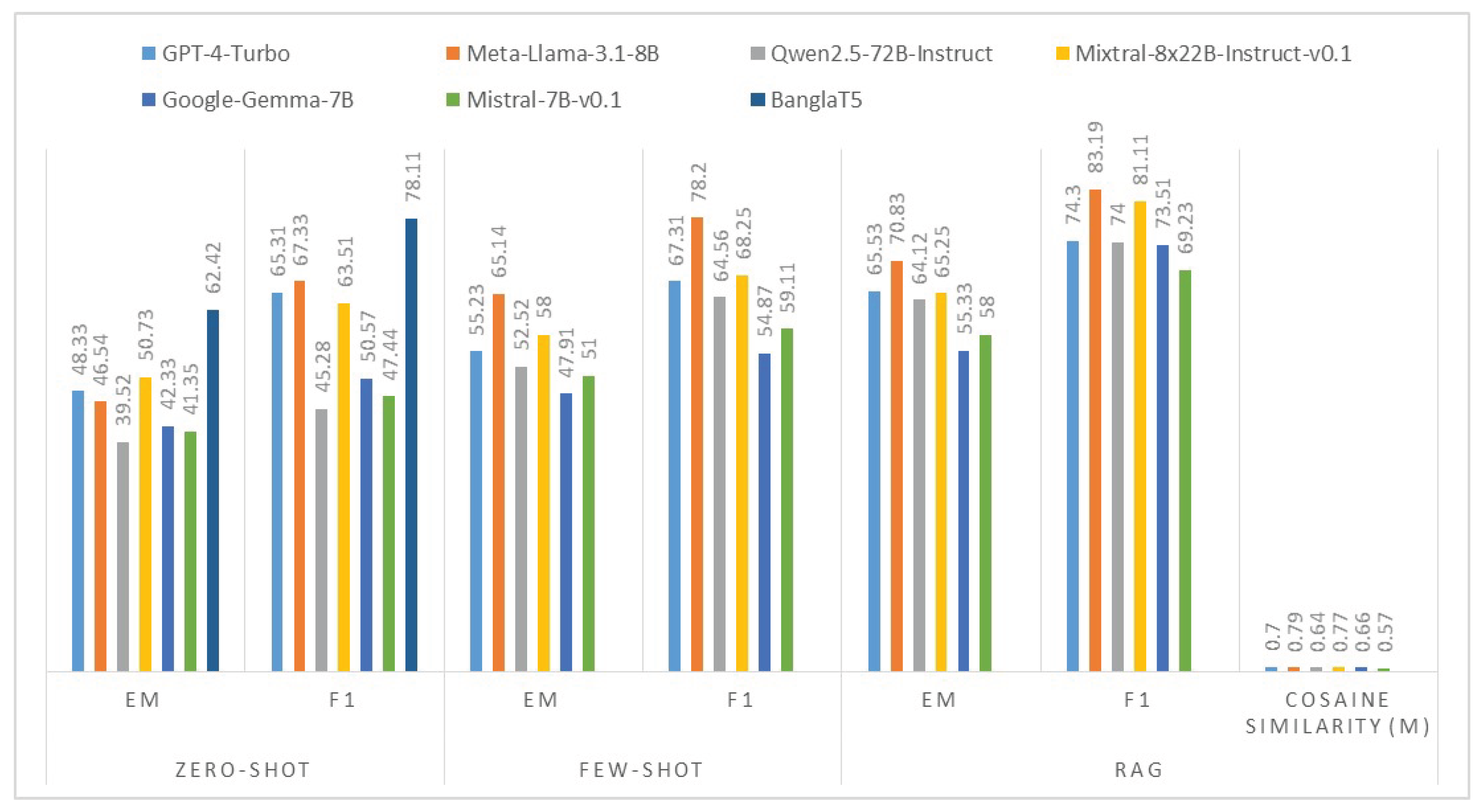

4.1. Performance Evaluation (BHRE and RAG)

4.2. Performance on Different Types of Questions

4.3. Performance on Different Types of Answers

4.4. Impact of RAG Components

5. Conclusions

References

- Rajpurkar, P.; Zhang, J.; Lopyrev, K.; Liang, P. SQuAD: 100,000+ questions for machine comprehension of text. arXiv 2016, arXiv:1606.05250.

- Yang, Z.; Qi, P.; Zhang, S.; Bengio, Y.; Cohen, W. W.; Salakhutdinov, R.; Manning, C. D. HotpotQA: A dataset for diverse, explainable multi-hop question answering. arXiv 2018, arXiv:1809.09600.

- Raihan, N.; Zampieri, M. TigerLLM: A family of Bangla large language models. arXiv 2025, arXiv:2503.10995.

- Mahfuz, T.; Dey, S. K.; Naswan, R.; Adil, H.; Sayeed, K. S.; Shahgir, H. S. Too late to train, too early to use? A study on necessity and viability of low-resource Bengali LLMs. arXiv 2024, arXiv:2407.00416.

- Ekram, S. M. S.; Rahman, A. A.; Altaf, M. S.; Islam, M. S.; Rahman, M. M.; Rahman, M. M.; Hossain, M. A.; Kamal, A. R. M. BanglaRQA: A benchmark dataset for under-resourced Bangla language reading comprehension-based question answering with diverse question-answer types. In Findings of the Association for Computational Linguistics: EMNLP 2022; Association for Computational Linguistics, 2022; pp. 2518–2532.

- Bhattacharjee, A.; Hasan, T.; Ahmad, W. U.; Shahriyar, R. BanglaNLG and BanglaT5: Benchmarks and resources for evaluating low-resource natural language generation in Bangla. arXiv 2022, arXiv:2205.11081.

- Xue, L.; Constant, N.; Roberts, A.; Kale, M.; Al-Rfou, R.; Siddhant, A.; Barua, A.; Raffel, C. mT5: A massively multilingual pre-trained text-to-text transformer. arXiv 2020, arXiv:2010.11934.

- OpenAI. GPT-4 Turbo documentation. 2025. Available online: https://platform.openai.com/docs/models/gpt-4-turbo.

- Meta. Llama-3.1-8B. 2025. Available online: https://huggingface.co/meta-llama/Llama-3.1-8B (accessed on 11 September 2025).

- Qwen Team. Qwen2.5-72B-Instruct. 2025. Available online: https://huggingface.co/Qwen/Qwen2.5-72B-Instruct (accessed on 11 September 2025).

- Mistral AI. Mixtral-8x22B-Instruct-v0.1. 2025. Available online: https://huggingface.co/mistralai/Mixtral-8x22B-Instruct-v0.1 (accessed on 11 September 2025).

- Google. Gemma-7b-it. 2025. Available online: https://huggingface.co/google/gemma-7b-it (accessed on 11 September 2025).

- Mistral AI. Mistral-7B-v0.1. 2025. Available online: https://huggingface.co/mistralai/Mistral-7B-v0.1 (accessed on 11 September 2025).

- Sultana, S.; Muna, S. S.; Samarukh, M. Z.; Abrar, A.; Chowdhury, T. M. BanglaMedQA and BanglaMMedBench: Evaluating retrieval-augmented generation strategies for Bangla biomedical question answering. arXiv 2025, arXiv:2511.04560.

- Chang, C.-C.; Li, C.-F.; Lee, C.-H.; Lee, H.-S. Enhancing low-resource minority language translation with LLMs and retrieval-augmented generation for cultural nuances. In Intelligent Systems Conference; Springer, 2025; pp. 190–204.

- Abrar, A.; Oeshy, N. T.; Maheru, P.; Tabassum, F.; Chowdhury, T. M. Faithful summarization of consumer health queries: A cross-lingual framework with LLMs. arXiv 2025, arXiv:2511.10768.

- Abrar, A.; Oeshy, N. T.; Kabir, M.; Ananiadou, S. Religious bias landscape in language and text-to-image models: Analysis, detection, and debiasing strategies. arXiv 2025, arXiv:2501.08441.

- Rony, M. R. A. H.; Shaha, S. K.; Hasan, R. A.; Dey, S. K.; Rafi, A. H.; Sirajee, A. H.; Lehmann, J. BanglaQuAD: A Bengali open-domain question answering dataset. arXiv 2024, arXiv:2410.10229.

- Veturi, S.; Vaichal, S.; Jagadheesh, R. L.; Tripto, N. I.; Yan, N. RAG based question-answering for contextual response prediction system. arXiv 2024, arXiv:2409.03708.

- Faieaz, W. W.; Jannat, S.; Mondal, P. K.; Khan, S. S.; Karmaker, S.; Rahman, M. S. Advancing Bangla NLP: Transformer-based question generation using fine-tuned LLM. In 2025 International Conference on Electrical, Computer and Communication Engineering (ECCE); IEEE, 2025; pp. 1–7.

- Ipa, A. S.; Rony, M. A. T.; Islam, M. S. Empowering low-resource languages: Trase architecture for enhanced retrieval-augmented generation in Bangla. In Proceedings of the 1st Workshop on Language Models for Underserved Communities (LM4UC 2025), 2025; pp. 8–15.

- Shafayat, S.; Hasan, H.; Mahim, M.; Putri, R.; Thorne, J.; Oh, A. BEnQA: A question answering benchmark for Bengali and English. In Findings of the Association for Computational Linguistics: ACL 2024; 2024; pp. 1158–1177.

- Fahad, A. R.; Al Nahian, N.; Islam, M. A.; Rahman, R. M. Answer agnostic question generation in Bangla language. International Journal of Networked and Distributed Computing 2024, 12, 82–107.

- Roy, S. C.; Manik, M. M. H. Question-answering system for Bangla: Fine-tuning BERT-Bangla for a closed domain. arXiv 2024, arXiv:2410.03923.

- Rakibul Hasan, M.; Majid, R.; Tahmid, A. Bangla-Bayanno: A 52k-pair Bengali visual question answering dataset with LLM-assisted translation refinement. arXiv e-prints 2025, arXiv–2508.

- Eden AI. Generative AI API. 2025. Available online: https://www.edenai.co/technologies/generative-ai (accessed on 11 September 2025).

- Chroma. Chroma: An open-source search and retrieval database for AI applications. 2025. Available online: https://www.trychroma.com/ (accessed on 13 September 2025).

| Statistic | Value |
|---|---|
| Context passages | 3000 |
| Question-answer pairs | 14889 |
| Average word count | 215 |
| Factoid (69.8%) | 10388 |
| Causal (9.6%) | 1433 |
| Confirmation (10.3%) | 1531 |
| List (10.3%) | 1537 |
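The percentages in the question-type rows follow directly from the counts: each count is divided by the 14,889 question-answer pairs. The short check below reproduces them and, using the 3,631 unanswerable questions reported in Section 3.1, also derives the unanswerable share.

```python
# Question-type counts from the dataset statistics table.
counts = {"Factoid": 10388, "Causal": 1433, "Confirmation": 1531, "List": 1537}
UNANSWERABLE = 3631  # from the dataset description

total = sum(counts.values())
shares = {name: round(100 * n / total, 1) for name, n in counts.items()}

print(total)   # 14889
print(shares)  # {'Factoid': 69.8, 'Causal': 9.6, 'Confirmation': 10.3, 'List': 10.3}
print(round(100 * UNANSWERABLE / total, 1))  # 24.4
```

The four type counts sum exactly to the 14,889 pairs, and roughly a quarter of all questions (24.4%) are unanswerable.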

| Model Name | Zero-shot EM | Zero-shot F1 | Few-shot EM | Few-shot F1 | RAG zero-shot EM | RAG zero-shot F1 | Cosine Similarity |
|---|---|---|---|---|---|---|---|
| GPT-4-Turbo | 48.33 | 65.31 | 55.23 | 67.31 | 65.53 | 74.30 | 0.70 |
| Meta-Llama-3.1-8B | 46.54 | 67.33 | 65.14 | 78.20 | 70.83 | 83.19 | 0.79 |
| Qwen2.5-72B-Instruct | 39.52 | 45.28 | 52.52 | 64.56 | 64.12 | 74.00 | 0.64 |
| Mixtral-8x22B-Instruct-v0.1 | 50.73 | 63.51 | 58.00 | 68.25 | 65.25 | 81.11 | 0.77 |
| Google-Gemma-7B | 42.33 | 50.57 | 47.91 | 54.87 | 55.33 | 73.51 | 0.66 |
| Mistral-7B-v0.1 | 41.35 | 47.44 | 51.00 | 59.11 | 58.00 | 69.23 | 0.57 |
| BanglaT5 | 62.42 | 78.11 | – | – | – | – | – |
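The EM and F1 columns are standard extractive-QA metrics. The sketch below assumes the SQuAD-style definitions (EM: the normalized prediction equals the normalized gold answer; F1: the harmonic mean of token-level precision and recall). The normalization used here (lowercasing, stripping ASCII punctuation and the Bangla danda "।", whitespace tokenization) and the rule that an empty prediction matches an empty gold answer are simplifying assumptions, not necessarily the paper's exact procedure.

```python
import string
from collections import Counter
from typing import List

# Assumed normalization: lowercase, drop ASCII punctuation and the Bangla danda.
_PUNCT = set(string.punctuation) | {"।"}


def normalize(text: str) -> List[str]:
    """Lowercase, remove punctuation, and split on whitespace."""
    cleaned = "".join(ch for ch in text.lower() if ch not in _PUNCT)
    return cleaned.split()


def exact_match(prediction: str, gold: str) -> float:
    """1.0 if the normalized token sequences are identical, else 0.0."""
    return float(normalize(prediction) == normalize(gold))


def f1_score(prediction: str, gold: str) -> float:
    """Token-overlap F1 between a prediction and a single gold answer."""
    pred_tokens = normalize(prediction)
    gold_tokens = normalize(gold)
    if not pred_tokens or not gold_tokens:
        # Both empty (e.g. a correct abstention on an unanswerable question) counts as a match.
        return float(pred_tokens == gold_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("১৯৭১ সালে", "১৯৭১"), f1_score("১৯৭১ সালে", "১৯৭১"))
```

For the prediction "১৯৭১ সালে" against the gold answer "১৯৭১", EM is 0.0 while F1 is 2/3 (precision 1/2, recall 1), which is why F1 typically exceeds EM. Averaging per-example scores over the test set and multiplying by 100 gives values on the same scale as the table.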

| Metric | Factoid | Causal | Confirmation | List |
|---|---|---|---|---|
| EM (RAG) | 77.30 | 68.53 | 91.88 | 52.50 |
| F1 (RAG) | 85.67 | 80.31 | 91.88 | 76.66 |

| Metric | Single span | Multiple spans | Yes/No |
|---|---|---|---|
| EM (RAG) | 76.66 | 51.31 | 92.21 |
| F1 (RAG) | 86.33 | 71.22 | 92.21 |
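The question-type and answer-type tables break the RAG scores down by category. A minimal way to produce such a breakdown, assuming per-example EM and F1 scores in [0, 1] and a category label for each example, is a group-by mean scaled to percent. The labels and scores below are invented for illustration and are not results from the paper.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def per_category_means(rows: Iterable[Tuple[str, float, float]]) -> Dict[str, Tuple[float, float]]:
    """Average (EM, F1) per category, reported as percentages.

    Each row is (category, em, f1) with em and f1 in [0, 1].
    """
    # Running totals per category: [sum of EM, sum of F1, number of examples].
    sums: Dict[str, List[float]] = defaultdict(lambda: [0.0, 0.0, 0.0])
    for category, em, f1 in rows:
        acc = sums[category]
        acc[0] += em
        acc[1] += f1
        acc[2] += 1
    return {
        cat: (100 * em_sum / n, 100 * f1_sum / n)
        for cat, (em_sum, f1_sum, n) in sums.items()
    }


# Made-up per-example scores, not results from the paper.
rows = [
    ("single span", 1.0, 1.0),
    ("single span", 0.0, 0.5),
    ("yes/no", 1.0, 1.0),
    ("yes/no", 0.0, 0.0),
]
print(per_category_means(rows))
```

Under token-overlap F1, a one-token prediction scored against a one-token gold answer such as "হ্যাঁ" or "না" gets an F1 of either 0 or 1, exactly like EM. This is consistent with the identical EM and F1 values for the Yes/No (92.21) and Confirmation (91.88) categories.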

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.