1. Introduction

Object detection, the ability of machines to see and comprehend their surroundings by recognizing and locating objects within images or video streams, is fundamental to modern Artificial Intelligence (AI) [1]. In the rapidly advancing field of computer vision, it has emerged as a cornerstone task with significant implications across numerous domains, from autonomous driving and surveillance to healthcare and robotics [2]. Its central goal is to accurately locate and classify objects within an image or video stream, a task once dominated by computationally intensive algorithms dependent on handcrafted features [3].

In its early stages, object detection relied heavily on feature-engineering techniques such as Scale-Invariant Feature Transform (SIFT) and Histogram of Oriented Gradients (HOG), paired with classical classifiers like Support Vector Machines (SVMs) [4]. These methods struggled with common challenges such as occlusion, scale variation, background clutter, and lighting changes. Despite incremental improvements, the pipeline remained modular, involving distinct stages for region proposal, feature extraction, and classification, each contributing to inefficiency and latency. The advent of deep learning, and particularly Convolutional Neural Networks (CNNs), marked a turning point. Models such as R-CNN, Fast R-CNN, and Faster R-CNN significantly enhanced accuracy and learning capability by leveraging CNNs for automatic feature extraction and, increasingly, joint end-to-end optimization [5,6]. However, these region proposal-based approaches still involved computationally intensive steps that limited their suitability for real-time applications.

A paradigm shift occurred with the introduction of the “You Only Look Once” (YOLO) framework by Redmon et al. [7], a watershed moment for real-time object detection. Unlike its predecessors, YOLO approached detection as a regression problem, unifying object classification and localization into a single neural network. The model divided the image into a grid, where each cell was responsible for predicting bounding boxes and class probabilities. This single-stage architecture eliminated the costly region proposal step and enabled real-time inference, achieving up to 45 frames per second on benchmarks like PASCAL VOC without compromising significantly on accuracy [7]. YOLO’s real-time capability quickly positioned it as a preferred choice for latency-sensitive domains, such as autonomous navigation, drone vision, and live surveillance. Its architectural simplicity and speed-accuracy trade-off inspired further innovations that gradually enhanced robustness, accuracy, and scalability.

Over time, the YOLO framework evolved through a series of meticulously engineered upgrades. YOLOv2 and YOLOv3 tackled scale variance and feature resolution with multi-scale predictions and residual connections [8]. YOLOv4 and YOLOv5 introduced CSPDarknet and novel data augmentation strategies like Mosaic, pushing the envelope of performance and generalization. Further advancements in YOLOv6 and YOLOv7 incorporated deeper backbones, quantization-aware training, and Transformer-inspired components, enhancing detection robustness and hardware adaptability [8]. With YOLOv8, the architecture adopted an anchor-free design, integrated a more efficient CSPNet backbone, and utilized an FPN+PAN neck to bolster multi-scale detection [9]. The innovation continued with YOLOv9, which addressed information loss in deep networks via Programmable Gradient Information (PGI) and the lightweight yet powerful GELAN backbone [10]. YOLOv10 advanced efficiency on edge devices by eliminating the need for non-maximum suppression (NMS) through dual-consistent assignments, while embracing a holistic, unified architecture [11].

Most recently, YOLOv12 represents the cutting edge in the series. Integrating attention mechanisms, Transformer-CNN hybrids, and cross-scale fusion, YOLOv12 achieves a delicate balance between precision and speed. It excels in small-object detection, improves dynamic label assignment, and pushes the limits of real-time detection capabilities, demonstrating how far YOLO has come from its origins [12,13].

This review aims to provide a comprehensive exploration of the YOLO algorithm’s journey, beginning with its foundational design principles and traversing through its evolution up to the state-of-the-art YOLOv12. In addition to dissecting architectural advancements, we will analyze comparative benchmarks, latency-accuracy trade-offs, and practical deployments in real-world settings. By reflecting on the trajectory from YOLOv1 to YOLOv12, this article not only chronicles a technological evolution but also highlights the underlying scientific innovations that continue to drive forward the capabilities of real-time object detection. The literature indicates that YOLO’s sustained prominence in academic and industrial domains stems from its balance between architectural simplicity and performance sophistication. Whether used for pedestrian detection in autonomous vehicles or embedded within mobile applications for precision agriculture, YOLO continues to demonstrate versatility and robustness across diverse contexts.

This review comprehensively examines the evolution and advancement of the YOLO algorithms. Section 2 establishes the fundamentals of object detection and the context of traditional models that preceded the deep learning revolution. Section 3 presents the core principles that define the YOLO approach. Section 4, the heart of the review, traces the detailed evolution of the YOLO family, analyzing the key architectural innovations, training methodologies, and performance improvements introduced in each major version from YOLOv1 to the latest YOLOv12. Section 6 explores the landscape of real-world applications where YOLO algorithms are driving tangible impact, demonstrating how this once-novel approach has become an indispensable tool for enabling machines to perceive and interact with the world in real time. Section 7 closes with concluding remarks, including our goal of providing both a technical and contextual understanding of how YOLO has evolved into a benchmark framework that encapsulates the aspirations of real-time computer vision systems.

2. Object Detection Fundamentals

The evolution of object detection over the last two decades is widely conceptualized in the academic literature as a paradigm shift marked by the advent of deep learning methodologies (Figure 1). This progression is broadly categorized into two epochs: the traditional era (pre-2014), characterized by handcrafted feature engineering and heuristic-driven methods, and the deep learning revolution (post-2014), marked by data-driven, end-to-end learning frameworks that redefined performance benchmarks and scalability [14].

During the traditional era, foundational approaches relied on manually engineered features such as Haar-like cascades [15], Histogram of Oriented Gradients [16], and Scale-Invariant Feature Transform [17], combined with sliding-window detection pipelines. These methods, though theoretically interpretable, were constrained by their computational inefficiency, limited robustness to scale variation and occlusion, and dependence on domain-specific expertise for feature design. Detection frameworks like Deformable Part Models [18] exemplified iterative refinements within this paradigm but struggled to generalize across diverse real-world scenarios.

The deep learning revolution, catalyzed by breakthroughs in convolutional neural networks [19], precipitated a tectonic shift. Architectures such as Region-based CNNs [20], Faster R-CNN [21], and Single Shot MultiBox Detectors [22] introduced end-to-end trainable systems that autonomously learned hierarchical feature representations. The introduction of You Only Look Once (YOLO) [7] further democratized real-time detection by unifying localization and classification into a single regression task. These models achieved unprecedented gains in accuracy, speed, and adaptability, driven by scalable optimization on large annotated datasets (e.g., ImageNet, COCO) and hardware acceleration (e.g., GPUs). Critically, this era redefined the role of human intervention, transitioning from feature engineering to architectural innovation and loss function design.

Academic discourse attributes this paradigm shift to the confluence of algorithmic advancements, data availability, and computational infrastructure. The post-2014 landscape has since been shaped by iterative refinements in attention mechanisms, transformer-based architectures [23], and self-supervised learning, further solidifying deep learning’s dominance in both theoretical and applied contexts. This transition underscores a broader trend in computer vision: the ascendancy of data-centric, learnable systems over heuristic-driven methodologies.

2.1. Traditional Object Detection Models

The development of real-time object detection frameworks underwent significant advancements in the early 2000s. Viola and Jones (2001) pioneered a breakthrough in real-time face detection by introducing a cascaded classifier architecture that eliminated reliance on restrictive heuristics such as skin-color segmentation. Their algorithm, optimized for a 700 MHz Pentium III processor, demonstrated computational efficiency surpassing contemporary methods by orders of magnitude while maintaining competitive accuracy. This innovation marked a paradigm shift in real-time detection, balancing computational tractability with robust performance despite the hardware constraints of the era [15].

Subsequent work by Dalal and Triggs (2005) introduced the Histogram of Oriented Gradients (HOG) descriptor, which employed overlapping contrast normalization and a dense grid of cells to achieve invariance to geometric transformations (e.g., translation, scaling). HOG’s focus on pedestrian detection popularized multi-scale detection pipelines, where images were resampled while maintaining fixed window sizes to localize objects across varying scales. This approach became a cornerstone of traditional detection frameworks due to its robustness and reproducibility [16].

Further advancements emerged with Deformable Part Models (DPM), a hierarchical, sliding-window framework proposed by Felzenszwalb et al. (2010). DPM decomposed detection into modular stages: (1) extraction of fixed feature representations (e.g., HOG), (2) classification of candidate regions using latent SVM, and (3) refinement of bounding boxes through spatial constraints on part-based components. While DPM achieved state-of-the-art performance on benchmarks such as PASCAL VOC, its reliance on handcrafted features and multi-stage optimization limited scalability [18].

2.2. Deep Learning Revolutions

Following the stagnation of handcrafted feature-based methods after 2010 (Zou et al., 2023) [14], the advent of convolutional neural networks (CNNs) (Krizhevsky et al., 2012) [19] catalyzed a paradigm shift in object detection. This era split detectors into two-stage and one-stage architectures, each addressing distinct trade-offs between accuracy and computational efficiency.

2.2.1. Two-Stage Architectures

Two-stage frameworks, exemplified by R-CNN [20], Fast R-CNN [6], Faster R-CNN [21], and Feature Pyramid Networks (FPN) [24], decompose detection into sequential stages: (1) generating region proposals and (2) refining classification and localization.

R-CNN (Girshick et al., 2014) [20] pioneered the use of region proposals (via Selective Search; Uijlings et al., 2013 [25]) and CNN-based feature extraction. However, its multi-stage pipeline, which combined CNNs, SVMs, and bounding-box regression, suffered from slow inference (measured in seconds per image) and calibration complexity.

Fast R-CNN (Girshick, 2015) streamlined computation by introducing RoI pooling, enabling shared feature extraction and reducing inference time [6].

Faster R-CNN (Ren et al., 2015) unified proposal generation and refinement via a Region Proposal Network (RPN), achieving near real-time performance (17 FPS) while maintaining high accuracy on PASCAL VOC [21].

FPN (Lin et al., 2017) enhanced multi-scale detection through a top-down architecture with lateral connections, improving Faster R-CNN’s COCO mAP by leveraging hierarchical feature fusion [24].

While two-stage detectors excel in accuracy, particularly for small objects, their sequential pipelines incur computational latency and limit real-time applicability [21]. This limitation motivates one-stage architectures, which target practical, latency-sensitive applications.

2.2.2. One-Stage Architectures

One-stage detectors, such as SSD [22] and YOLO [7], prioritize speed by unifying localization and classification into a single forward pass.

SSD (Liu et al., 2016) introduced multi-scale feature map predictions, enabling efficient detection across object sizes without region proposals [22].

YOLO (Redmon et al., 2016) redefined real-time detection by processing entire images in a single pass at 45 FPS [7]. Its grid-based design traded marginal accuracy for unprecedented speed, outperforming traditional methods like DPM (Sadeghi et al., 2014), which achieved a lower mAP at 30 FPS [26].

YOLO’s efficiency (e.g., Fast YOLO at 155 FPS) made it ideal for latency-sensitive applications like autonomous driving, though its accuracy lagged behind two-stage counterparts [14].

3. The YOLO Algorithm: An Overview

Convolutional Neural Networks (CNNs), a class of deep learning models optimized for grid-structured data [27], revolutionized computer vision through hierarchical feature extraction. By preserving spatial relationships via convolutional operations, CNNs autonomously learn low-to-high-level features, enabling breakthroughs in tasks like object detection [28]. Among CNN-based detectors, You Only Look Once (YOLO) [7] redefined real-time performance by framing detection as a unified regression problem.

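A CNN processes input tensors (e.g., $X \in \mathbb{R}^{H \times W \times C}$) through convolutional layers that apply learnable filters $F$ via:

$$(X * F)(i, j) = \sum_{m=0}^{k-1} \sum_{n=0}^{k-1} \sum_{c=1}^{C} X(i+m,\, j+n,\, c)\, F(m, n, c)$$

where $k$ defines the kernel size. Non-linear activations (e.g., ReLU [29]), pooling layers, and fully connected (FC) layers follow, culminating in task-specific outputs [30].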
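As a rough illustration of the convolution-then-activation step described above, the following minimal sketch applies one filter to a single-channel image in pure Python. The function names `conv2d_valid` and `relu`, the absence of padding and stride, and the single-channel simplification are assumptions made for brevity, not details taken from the cited works.

```python
def conv2d_valid(image, kernel):
    """Slide a k x k kernel over a 2D image (no padding, stride 1).

    Like most deep learning frameworks, this computes a cross-correlation,
    i.e. the kernel is not flipped.
    """
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            out[i][j] = sum(
                image[i + m][j + n] * kernel[m][n]
                for m in range(k)
                for n in range(k)
            )
    return out


def relu(feature_map):
    """Element-wise ReLU: negative responses are clipped to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical 3x3 input and a 2x2 diagonal-difference filter.
image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0], [0, -1]]
features = conv2d_valid(image, kernel)
activated = relu(features)
```

A 3×3 input with a 2×2 kernel yields a 2×2 feature map, which is the usual (H − k + 1) × (W − k + 1) output size for an unpadded, stride-1 convolution.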

Neural networks minimize loss functions (e.g., cross-entropy) via backpropagation [31]. Regularization techniques like dropout [32] mitigate overfitting by randomly deactivating neurons during training.

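YOLO [7] partitions input images into $S \times S$ grids, where each cell predicts $B$ bounding boxes with confidence scores and $C$ class probabilities. The final output tensor of size $S \times S \times (5B + C)$ combines localization and classification, with each box confidence defined as:

$$\text{Confidence} = \Pr(\text{Object}) \times \text{IOU}^{\text{truth}}_{\text{pred}}$$

where IOU refers to Intersection Over Union.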
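To make the Intersection Over Union term and the size of the output tensor concrete, here is a minimal sketch. The corner-format boxes `(x1, y1, x2, y2)` and the helper names `iou` and `yolo_output_shape` are illustrative assumptions; the values S = 7, B = 2 and C = 20 correspond to the PASCAL VOC setting of the original YOLO paper.

```python
def iou(box_a, box_b):
    """Intersection over Union for two boxes given as (x1, y1, x2, y2)."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def yolo_output_shape(S, B, C):
    """Each cell holds B boxes (x, y, w, h, confidence) plus C class scores."""
    return (S, S, B * 5 + C)


overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))   # intersection 1, union 7
shape = yolo_output_shape(S=7, B=2, C=20)   # (7, 7, 30)
```

With these settings the network emits 7 × 7 × 2 = 98 candidate boxes per image.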

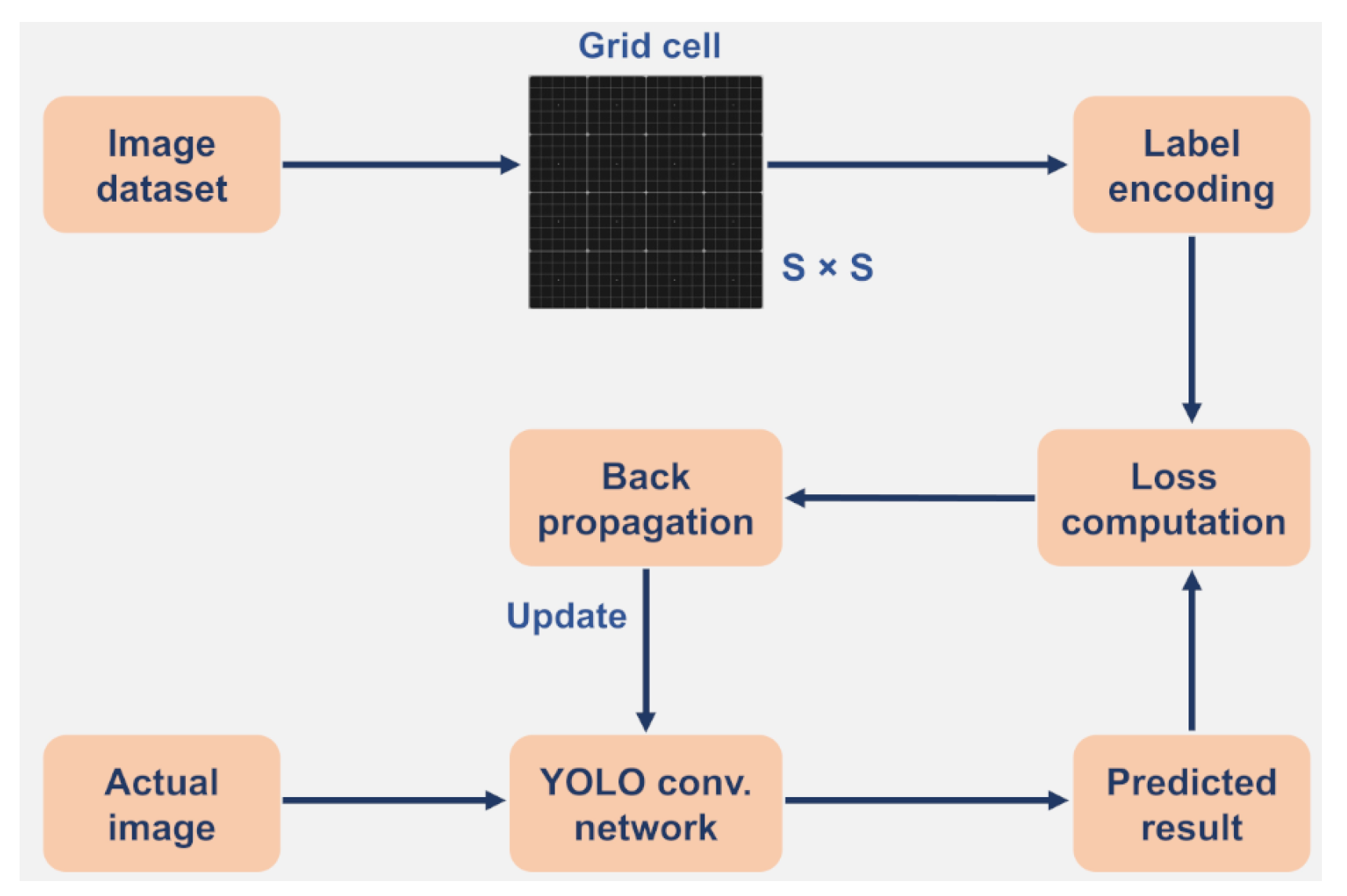

Figure 2.

YOLO’s grid-based detection workflow. Each cell predicts bounding boxes and class probabilities conditioned on object presence.


On PASCAL VOC [33], YOLOv1 ran at 45 FPS and outperformed DPM (30 FPS) in mAP while leveraging global context to halve background errors compared to region-based methods like Fast R-CNN. Its compact architecture (24 convolutional + 2 FC layers) enables real-time inference on GPUs, critical for autonomous systems [7].

YOLO’s regression-based approach eliminates multi-stage pipelines, reaching 155 FPS with the Fast YOLO variant. However, its spatial grid constraints limit accuracy for small objects and dense scenes. Subsequent iterations (e.g., YOLOv2–YOLOv8) address these via anchor boxes and multi-scale predictions [14].

4. Evolution of YOLO: From YOLOv1 to YOLOv12

4.1. YOLOv1 (2015)

YOLOv1 [7], a unified framework for real-time object detection first released in 2015 and published in 2016, revolutionized object detection by reformulating it as a single regression task, eliminating the computational bottlenecks of multi-stage pipelines like R-CNN [20]. By processing the entire image in a single forward pass, YOLOv1 achieved real-time inference speeds while leveraging global contextual information, significantly reducing background false positives compared to region-based methods. Its regression-based design also enhanced generalizability, enabling robust performance across diverse domains.

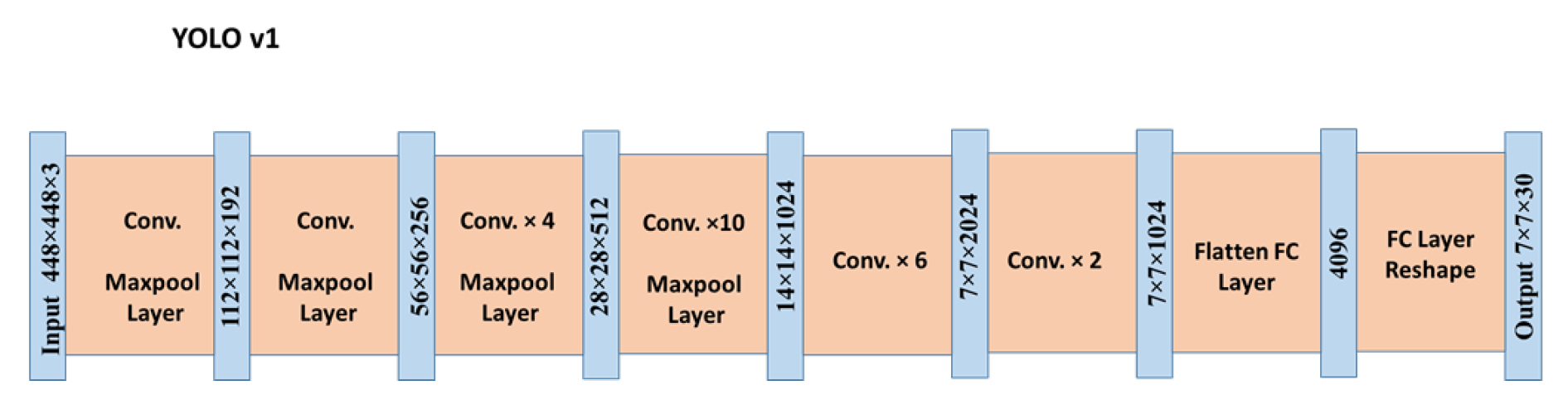

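The architecture and methodology are as follows.

Grid-Based Detection: YOLOv1 partitions input images into an $S \times S$ grid ($S = 7$ for PASCAL VOC [33]), where each cell predicts $B$ bounding boxes (coordinates $x, y, w, h$) and confidence scores $\Pr(\text{Object}) \times \text{IOU}^{\text{truth}}_{\text{pred}}$. Class probabilities $\Pr(\text{Class}_i \mid \text{Object})$ are conditioned on object presence, yielding a final output tensor of size $7 \times 7 \times 30$ for 20-class VOC detection.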
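The grid assignment can be sketched as follows: the cell containing an object's center is the one responsible for predicting it, and the center is encoded as an offset within that cell. The function name `responsible_cell` and the 448-pixel image size in the usage line are illustrative assumptions.

```python
def responsible_cell(cx, cy, img_w, img_h, S=7):
    """Map an object center (in pixels) to its grid cell and in-cell offsets.

    Returns (row, col, x_offset, y_offset), where the offsets lie in [0, 1)
    relative to the top-left corner of the responsible cell.
    """
    gx = cx / img_w * S            # center in grid units along x
    gy = cy / img_h * S            # center in grid units along y
    col = min(int(gx), S - 1)      # clamp so a center on the border stays in range
    row = min(int(gy), S - 1)
    return row, col, gx - col, gy - row


# An object centered in a hypothetical 448 x 448 image falls in the middle cell.
cell = responsible_cell(224, 224, 448, 448)   # (3, 3, 0.5, 0.5)
```

Because only one cell owns each object, two objects whose centers fall in the same cell compete for the same predictions, which is one source of the difficulty with dense scenes.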

Network Design: The architecture comprises 24 convolutional layers for hierarchical feature extraction, followed by 2 fully connected layers for regression (Figure 3). Inspired by GoogLeNet, it employs $1 \times 1$ reduction layers to minimize computational overhead.

Limitations and trade-offs: despite its speed (45 FPS on a Titan X GPU), YOLOv1 exhibited three key limitations:

Spatial Coarseness: The grid struggled with small objects and dense scenes due to limited spatial resolution.

Localization Errors: The squared error loss equally penalizes misalignments in large/small boxes, impairing precise localization.

Scale Sensitivity: Fixed grid cells hindered the detection of objects with extreme aspect ratios.
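The localization issue listed above can be seen numerically. In this minimal sketch, the same 10-pixel width error is applied to a large and a small box: the plain squared error is identical, while the IoU drops far more for the small box. Regressing the square root of width and height, as the original YOLO paper does, only partially restores that asymmetry. The box sizes and helper names are illustrative assumptions.

```python
import math


def aligned_iou(w_true, h_true, w_pred, h_pred):
    """IoU of two boxes that share the same top-left corner."""
    inter = min(w_true, w_pred) * min(h_true, h_pred)
    union = w_true * h_true + w_pred * h_pred - inter
    return inter / union


def squared_error(a, b):
    return (a - b) ** 2


def sqrt_squared_error(a, b):
    return (math.sqrt(a) - math.sqrt(b)) ** 2


# Same absolute width error (10 px) on a large and a small box.
large = {"true": 200.0, "pred": 210.0}
small = {"true": 20.0, "pred": 30.0}

plain_large = squared_error(large["true"], large["pred"])       # 100.0
plain_small = squared_error(small["true"], small["pred"])       # 100.0
root_large = sqrt_squared_error(large["true"], large["pred"])
root_small = sqrt_squared_error(small["true"], small["pred"])
iou_large = aligned_iou(200.0, 200.0, 210.0, 200.0)
iou_small = aligned_iou(20.0, 20.0, 30.0, 20.0)
```

Here `plain_large` equals `plain_small`, whereas `root_small` exceeds `root_large` and `iou_small` is well below `iou_large`, which is the mismatch between the loss and the evaluation metric that later IoU-based losses target.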

Fast YOLO: Optimized for Speed. To further accelerate inference, Fast YOLO [7] reduced the original network to 9 convolutional layers, achieving 155 FPS with a modest accuracy loss relative to YOLOv1 on PASCAL VOC. This trade-off prioritized latency-critical applications like video surveillance.

4.2. YOLOv2 (2016)

YOLOv2 [34], an incremental improvement for scalable real-time detection, systematically addressed limitations of YOLOv1 through four key innovations while maintaining real-time efficiency:

Batch normalization and high-resolution fine-tuning: layer-wise batch normalization [35] stabilized training by reducing internal covariate shift. This eliminated dropout while improving mean Average Precision (mAP) on PASCAL VOC [33]. Subsequent fine-tuning at a higher input resolution boosted mAP further through enhanced spatial reasoning.
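For reference, the standard batch normalization transform can be sketched on a single feature across a mini-batch. This is the generic formulation (normalize by batch mean and variance, then scale and shift), not YOLOv2-specific code; the parameter names `gamma`, `beta` and `eps` follow common convention and their defaults are assumptions.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over a mini-batch, then scale and shift.

    values: activations of a single feature for every sample in the batch.
    gamma, beta: learnable scale and shift (fixed here for illustration).
    """
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n   # biased batch variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


normalized = batch_norm([1.0, 2.0, 3.0, 4.0])
```

After normalization the batch has approximately zero mean and unit variance, which keeps the input distribution of each layer stable as earlier layers change during training.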

Anchor boxes and feature fusion: YOLOv2 replaced fully connected layers with anchor boxes [21], predicting substantially more boxes per image than YOLOv1’s 98. A passthrough layer fused fine-grained features with semantic abstractions. This increased recall from 81% to 88% while maintaining 67 FPS.

Multi-scale training: dynamic input scaling (320–608 px) every 10 batches enhanced robustness. YOLOv2 retained high mAP on VOC 2007 while processing images at 67 FPS [34].
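The multi-scale schedule can be sketched as a sampler that picks a new input size every 10 batches. The 320–608 px range and the 10-batch interval come from the text above; restricting sizes to multiples of 32 is an assumption based on the network's downsampling factor, and the function names and seed are hypothetical.

```python
import random


def sample_input_size(rng, min_size=320, max_size=608, stride=32):
    """Pick a square input size from {320, 352, ..., 608}."""
    return rng.choice(range(min_size, max_size + 1, stride))


def size_schedule(num_batches, every=10, seed=0):
    """Return the input size used for each batch, resampled every `every` batches."""
    rng = random.Random(seed)
    current = sample_input_size(rng)
    sizes = []
    for batch_idx in range(num_batches):
        if batch_idx > 0 and batch_idx % every == 0:
            current = sample_input_size(rng)
        sizes.append(current)
    return sizes


schedule = size_schedule(num_batches=30)
```

Because the network is fully convolutional, the same weights can be applied at every sampled resolution, so one trained model can trade speed for accuracy at test time by changing the input size.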

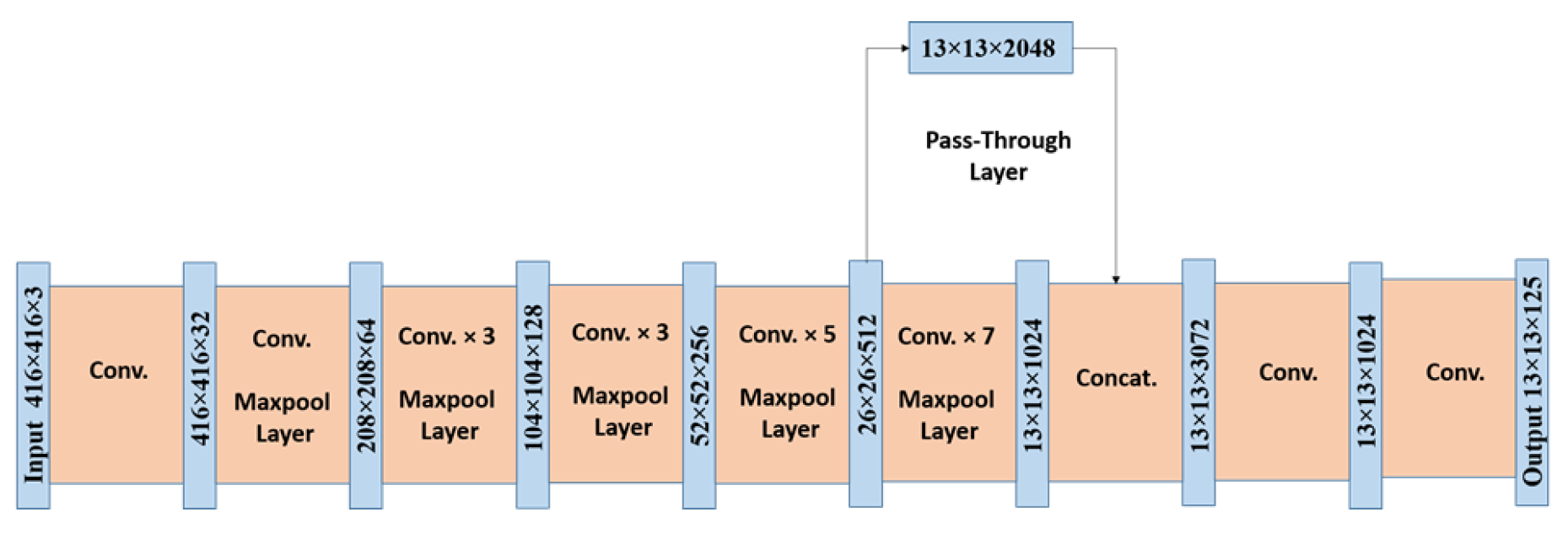

Figure 4.

YOLOv2 architecture (Normal).


4.2.1. Faster YOLOv2: Darknet-19

The Darknet-19 backbone [34], an efficient backbone for multi-scale learning, reduced computational complexity to

billion FLOPS compared to GoogLeNet’s

billion [

36] through:

where

= kernel size,

= input/output channels, and

= max-pooling overhead. Global average pooling replaced FC layers, reducing parameters by 92% versus VGG-16 [

37].

According to the multi-phase training,

According to the YOLO9000: Hierarchical multi-task learning,

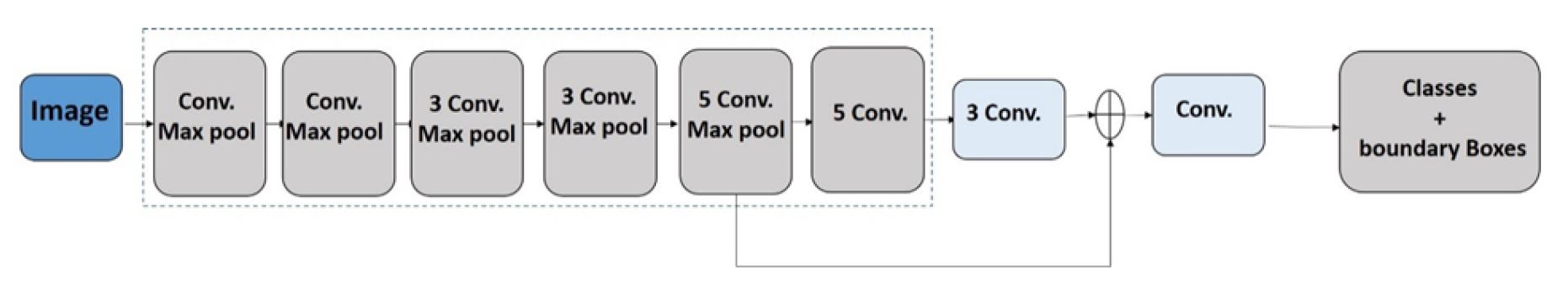

4.3. YOLOv3 (2018)

YOLOv3 [40], an evolutionary leap in multi-scale real-time detection, introduced Darknet-53, a 53-layer network leveraging residual connections [41] and strided convolutions, achieving higher FPS than ResNet backbones [41] at comparable accuracy. Batch normalization [35] and Leaky ReLU activations stabilize training.

Multi-scale detection: YOLOv3 employs three detection heads (52×52, 26×26, 13×13) with anchor boxes clustered via k-means ($k = 9$) [40]. Each head predicts 3 anchors, enabling detection across 9 scale/aspect ratio combinations (Figure 6).
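Anchor clustering of this kind can be sketched with a small k-means over ground-truth box sizes. Using IoU between width-height pairs as the similarity measure follows the approach described for YOLOv2; the function names, iteration cap, seed, and toy box list are assumptions for illustration only.

```python
import random


def wh_iou(a, b):
    """IoU of two boxes given only as (width, height), aligned at one corner."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(boxes, k, iters=50, seed=0):
    """Cluster (width, height) pairs into k anchors, assigning by highest IoU."""
    rng = random.Random(seed)
    centroids = rng.sample(boxes, k)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            best = max(range(k), key=lambda c: wh_iou(box, centroids[c]))
            clusters[best].append(box)
        updated = []
        for c, members in enumerate(clusters):
            if members:
                mean_w = sum(w for w, _ in members) / len(members)
                mean_h = sum(h for _, h in members) / len(members)
                updated.append((mean_w, mean_h))
            else:
                updated.append(centroids[c])   # keep an empty cluster's centroid
        if updated == centroids:
            break
        centroids = updated
    return sorted(centroids, key=lambda wh: wh[0] * wh[1])


# Hypothetical box sizes: three small and three large objects.
boxes = [(10, 10), (12, 12), (11, 9), (100, 100), (110, 90), (95, 105)]
anchors = kmeans_anchors(boxes, k=2)
```

In a three-head detector the nine resulting anchors would be sorted by area and split three per head, with the smallest anchors going to the highest-resolution feature map.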

Performance and limitations: on MS COCO [39], YOLOv3 ran at 20 FPS, outperforming SSD [22] by 3× in speed with comparable accuracy. However, RetinaNet [42] retained higher precision at lower speeds (5 FPS). The multi-scale design improved small-object recall versus YOLOv2 [34], though medium/large object localization errors persisted.

Variants and adaptations:

Enhanced Precision Variant: Triki et al. [43] modified YOLOv3’s spatial pyramid pooling (SPP) [44] layer, increasing mAP on the DHS dataset through feature map upsampling.

YOLOv3-Tiny: Adarsh et al. [45] pruned Darknet-53 to 23 layers, achieving 140 FPS on embedded devices.

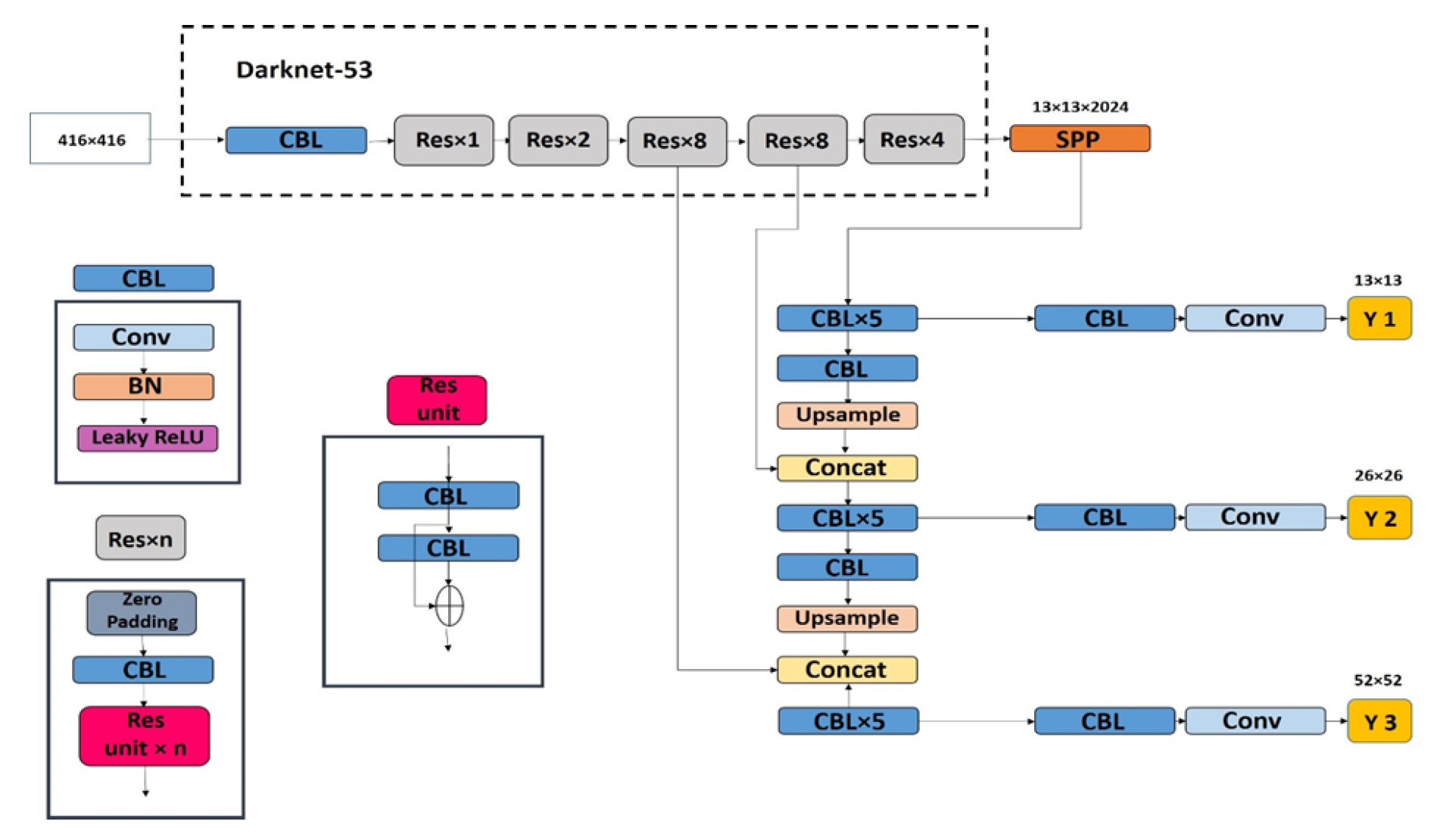

4.4. YOLOv4 (2020)

YOLOv4 [46], which targets an optimal speed-accuracy trade-off for production-grade detectors, introduces CSPDarknet53 with cross-stage partial (CSP) connections [47]:

where

represents dense block operations and ⊕ denotes channel-wise concatenation. This reduces computation by 20% while maintaining

M parameters.

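Neck and head enhancements: the neck combines spatial pyramid pooling (SPP) [44] with a path aggregation network (PANet) [48].

The head employs complete-IoU loss [49]:

$$\mathcal{L}_{\text{CIoU}} = 1 - \text{IoU} + \frac{\rho^{2}(b, b^{gt})}{c^{2}} + \alpha v$$

where $\rho$ is the distance between the predicted and ground-truth box centers, $c$ the diagonal length of the smallest box enclosing both, $v$ the aspect ratio consistency term, and $\alpha$ its trade-off weight.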
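A minimal sketch of the complete-IoU loss follows, using the standard formulation with a center-distance penalty and an aspect-ratio term. Boxes in corner format `(x1, y1, x2, y2)` and the function name `ciou_loss` are assumptions; a production implementation would operate on batched tensors and add numerical-stability terms.

```python
import math


def ciou_loss(pred, target):
    """Complete-IoU loss for two boxes given as (x1, y1, x2, y2)."""
    # Plain IoU.
    ix1, iy1 = max(pred[0], target[0]), max(pred[1], target[1])
    ix2, iy2 = min(pred[2], target[2]), min(pred[3], target[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    w_p, h_p = pred[2] - pred[0], pred[3] - pred[1]
    w_t, h_t = target[2] - target[0], target[3] - target[1]
    union = w_p * h_p + w_t * h_t - inter
    iou = inter / union

    # Squared distance between box centers (rho^2).
    rho2 = ((pred[0] + pred[2]) / 2 - (target[0] + target[2]) / 2) ** 2 \
         + ((pred[1] + pred[3]) / 2 - (target[1] + target[3]) / 2) ** 2

    # Squared diagonal of the smallest enclosing box (c^2).
    cw = max(pred[2], target[2]) - min(pred[0], target[0])
    ch = max(pred[3], target[3]) - min(pred[1], target[1])
    c2 = cw ** 2 + ch ** 2

    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = (4 / math.pi ** 2) * (math.atan(w_t / h_t) - math.atan(w_p / h_p)) ** 2
    alpha = v / ((1 - iou) + v) if v > 0 else 0.0

    return 1 - iou + rho2 / c2 + alpha * v


perfect = ciou_loss((0, 0, 2, 2), (0, 0, 2, 2))     # identical boxes
disjoint = ciou_loss((0, 0, 1, 1), (2, 2, 3, 3))    # no overlap
```

Unlike plain IoU loss, the center-distance term still provides a useful gradient when the boxes do not overlap at all, as in the `disjoint` case.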

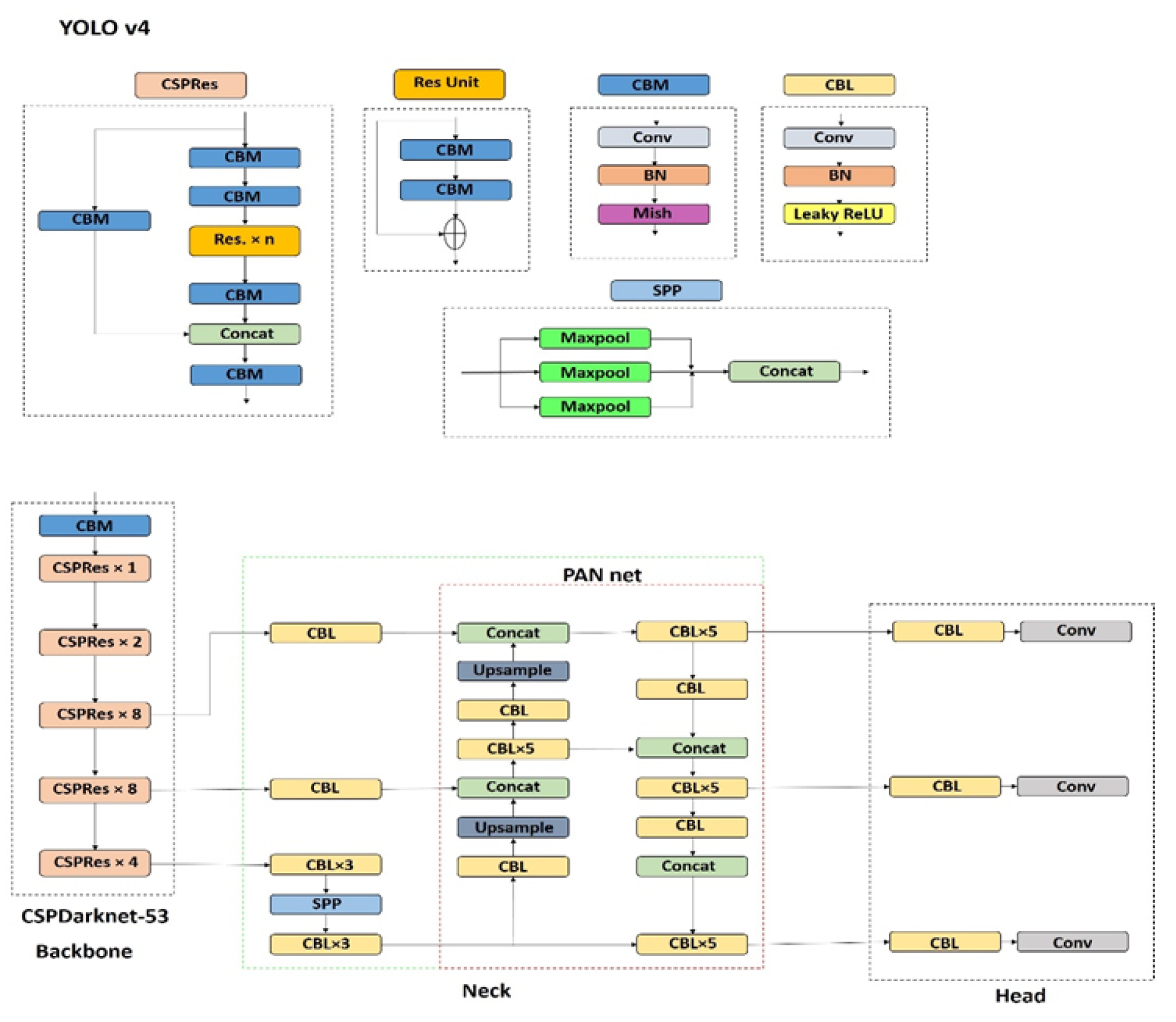

Figure 7.

YOLOv4 architecture with CSPDarknet53 backbone, SPP-PANet neck, and CIoU-optimized head.


The training methodology combines two groups of techniques:

- Bag of Freebies (BoF)
  - Mosaic augmentation [46]: multi-image mosaic with random scaling
  - DropBlock [50]: Structured channel dropout
  - CIoU-aware label smoothing [49]
- Bag of Specials (BoS)
  - Mish activation [51]: $f(x) = x \cdot \tanh(\ln(1 + e^{x}))$
  - Modified SAM [46]: Spatial attention module
  - DIoU-NMS [49]: Distance-aware suppression

Performance analysis: Table 1 summarizes YOLOv4’s results on MS COCO [39]. YOLOv4 improves AP over YOLOv3 with faster inference [46].

According to YOLOv4-Tiny for edge deployment, the tiny variant [

52] employs:

CSPDarknet53-Tiny: 12 CSP layers with LeakyReLU

Simplified PANet: Single-scale feature fusion

Anchor pruning: 3 anchors per scale vs. 9 in full version

and achieves 270 FPS on Jetson Xavier with mAP.

4.4.1. Scaled YOLOv4 (2021)

Scaled YOLOv4 [53], a compound scaling approach for object detection, introduces a principled scaling strategy that optimizes depth ($d$), width ($w$), resolution ($r$), and structure through:

where

denotes performance metric (AP) and

computational complexity. This compound scaling outperforms EfficientDet’s [

54] neural architecture search (NAS) through:

Depth scaling via CSPDarknet blocks [

47]

Width scaling through channel expansion factors ()

Resolution scaling (px) with aspect ratio preservation

The following architectural innovations are explored as per the literature:

CSP-PAN with Mish Activation: The neck combines cross-stage partial connections with Mish activation [

51]:

where

enhances gradient flow.

Unified Multi-Resolution Training: Contrary to single-scale paradigms, Scaled YOLOv4 employs resolution-agnostic weights:

where

= 384, 512, 768, 1024 and DFL = dynamic focal loss.

As per the performance analysis, benchmark comparisons are as shown in

Table 2:

As per efficiency gains,

× faster training convergence vs YOLOv4

higher AP/layer than EfficientDet at comparable FPS

× FPS improvement for tiny variant with TensorRT

4.4.2. PP-YOLO (2020)

PP-YOLO [55], a pragmatic enhancement of YOLOv3 for industrial applications, refines YOLOv3 through systematic integration of production-oriented enhancements while maintaining real-time efficiency:

According to backbone modifications,

According to detection head enhancements,

IoU-aware loss branch [

57]:

CoordConv [

58] spatial encoding:

Matrix NMS [

57] for parallel suppression:

According to training methodology, built on PaddlePaddle framework [

59], PP-YOLO employs:

As per performance analysis, on MS COCO [

39] test-dev shown in

Table 3:

Here the key advantages are

higher AP than YOLOv4 with faster inference

× FPS advantage over RetinaNet

M parameters vs YOLOv4’s M

4.5. YOLOv5 (2020)

YOLOv5 [62], a PyTorch-based paradigm for accessible real-time detection, transitions from Darknet to PyTorch, enabling:

CSPDarknet53 backbone with SiLU activation [

63]:

SPPF layer with parallel max-pooling:

Scalable variants (n,s,m,l,x) via compound scaling [

64]

As per the optimized detection head,

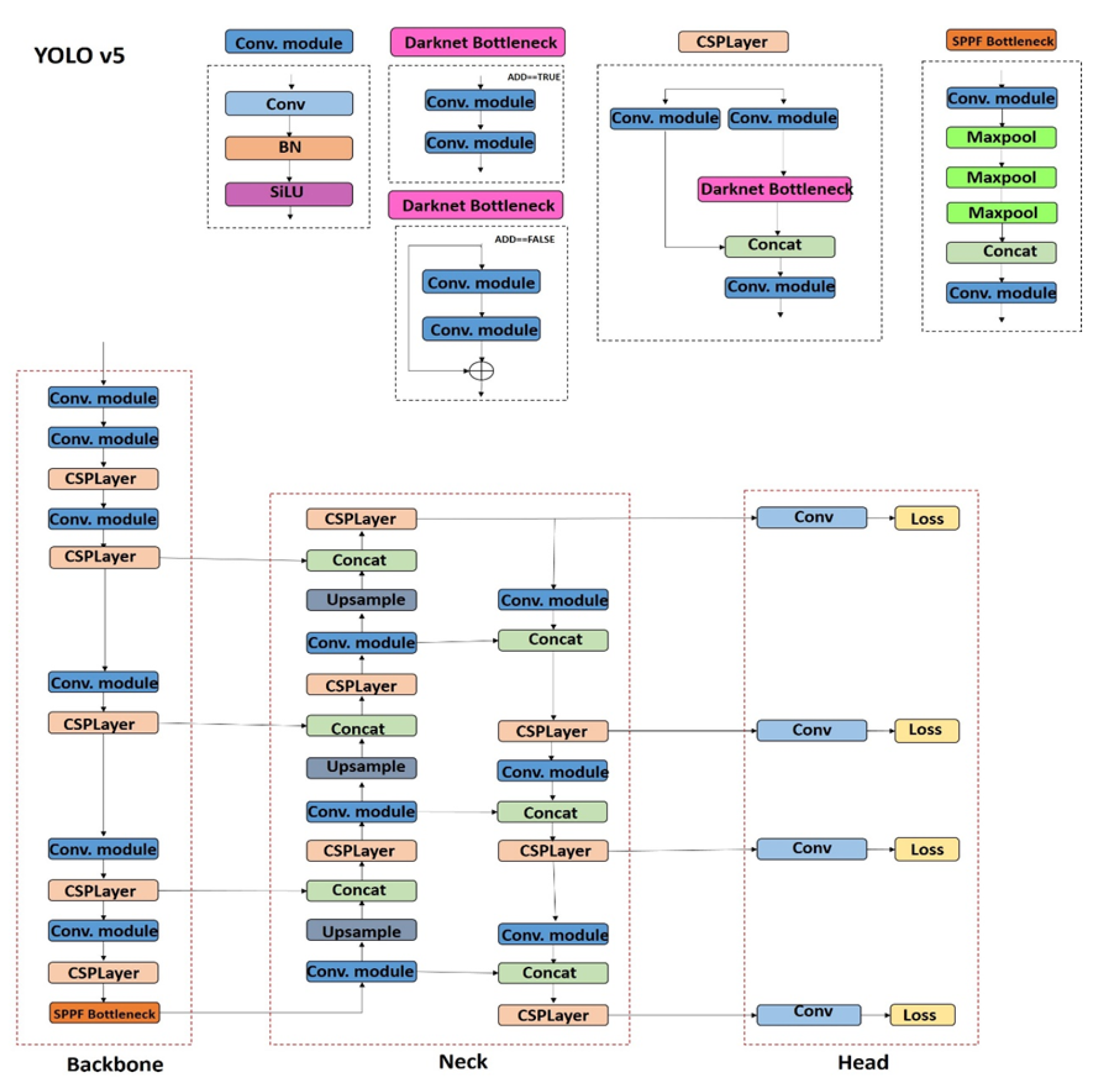

Figure 8.

YOLOv5 architecture featuring modified CSPDarknet53 backbone, SPPF layer, and AutoAnchor-optimized detection head.


According to the training methodology for the data augmentation pipeline,

As per the optimization strategy,

Hyperparameter evolution with generation search

EMA weight averaging ()

Multi-scale training (px) with probability
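The EMA weight averaging listed above can be sketched as follows. The update rule is the standard exponential moving average; the decay value, the flat list representation of weights, and the function name `ema_update` are hypothetical, since the source does not preserve the setting used.

```python
def ema_update(ema_weights, model_weights, decay=0.999):
    """Blend the running average with the current weights.

    A decay close to 1 makes the average change slowly, smoothing out
    step-to-step noise in the trained weights.
    """
    return [decay * e + (1.0 - decay) * w for e, w in zip(ema_weights, model_weights)]


# Toy run: the average moves toward constant weights [1.0, 2.0] over three steps.
ema = [0.0, 1.0]
for step_weights in ([1.0, 2.0], [1.0, 2.0], [1.0, 2.0]):
    ema = ema_update(ema, step_weights, decay=0.5)
```

The averaged copy, rather than the raw weights from the final training step, is typically the one evaluated and exported.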

Performance analysis: MS COCO benchmarks are summarized in Table 4.

Table 4. Performance comparison at 640px resolution (batch=32).

| Model | AP | AP50 | FPS (V100) |
|---|---|---|---|
| YOLOv5n | 28.4 | 46.7 | 1426 |
| YOLOv5s | 37.4 | 56.8 | 869 |
| YOLOv5m | 45.4 | 64.1 | 365 |
| YOLOv5l | 49.0 | 67.3 | 204 |
| YOLOv5x | 50.7 | 68.9 | 140 |
| YOLOv4 [46] | 43.5 | 65.7 | 62 |
| EfficientDet-D2 [54] | 43.0 | 62.3 | 56 |

According to the deployment flexibility,

The limitations and considerations are

4.5.1. PP-YOLOE (2022)

PP-YOLOE [70], an enhanced anchor-free detector built on task-aligned learning, introduces RepResBlocks combining residual and dense connections from TreeNet [71]:

where

denotes compressed residual path and

parallel residual branches.

According to the task-aligned learning, the ET-head employs task-alignment learning [

72] with dynamic label assignment:

where

are classification/regression scores and

balance factor.

The loss formulation combines Varifocal Loss [

73] and Distribution Focal Loss [

74]:

The scalable architecture design as follows in

Table 5:

Performance analysis on MS COCO [39] test-dev is given in Table 6:

The key advantages are

% faster than YOLOX-X with equivalent accuracy

% AP improvement over PP-YOLOv2

45% reduction in training iterations vs anchor-based variants

4.5.2. YOLO-NAS (2023)

YOLO-NAS [77], a hardware-aware neural architecture search for quantization-robust object detection, employs Deci’s Automated Neural Architecture Construction (AutoNAC) [78] to optimize the Pareto frontier between accuracy and latency:

where

denotes target hardware constraints and

tradeoff coefficient.

Quantization-aware building blocks:

Quantization-Scale Propagation (QSP) blocks [

79]:

Quantization-Centric Initialization (QCI) [

79]

RepVGG [

80] structural re-parameterization:

As per the training methodology for the multi-stage learning,

Phase 1: Objects365 [

81] pretraining (

M images)

Phase 2: COCO [

39] fine-tuning with hybrid quantization:

Phase 3: Per-tensor AdaRound [

82] optimization

According to the performance analysis in the quantization robustness shown in

Table 7,

Its comparative benchmarking is as below in

Table 8:

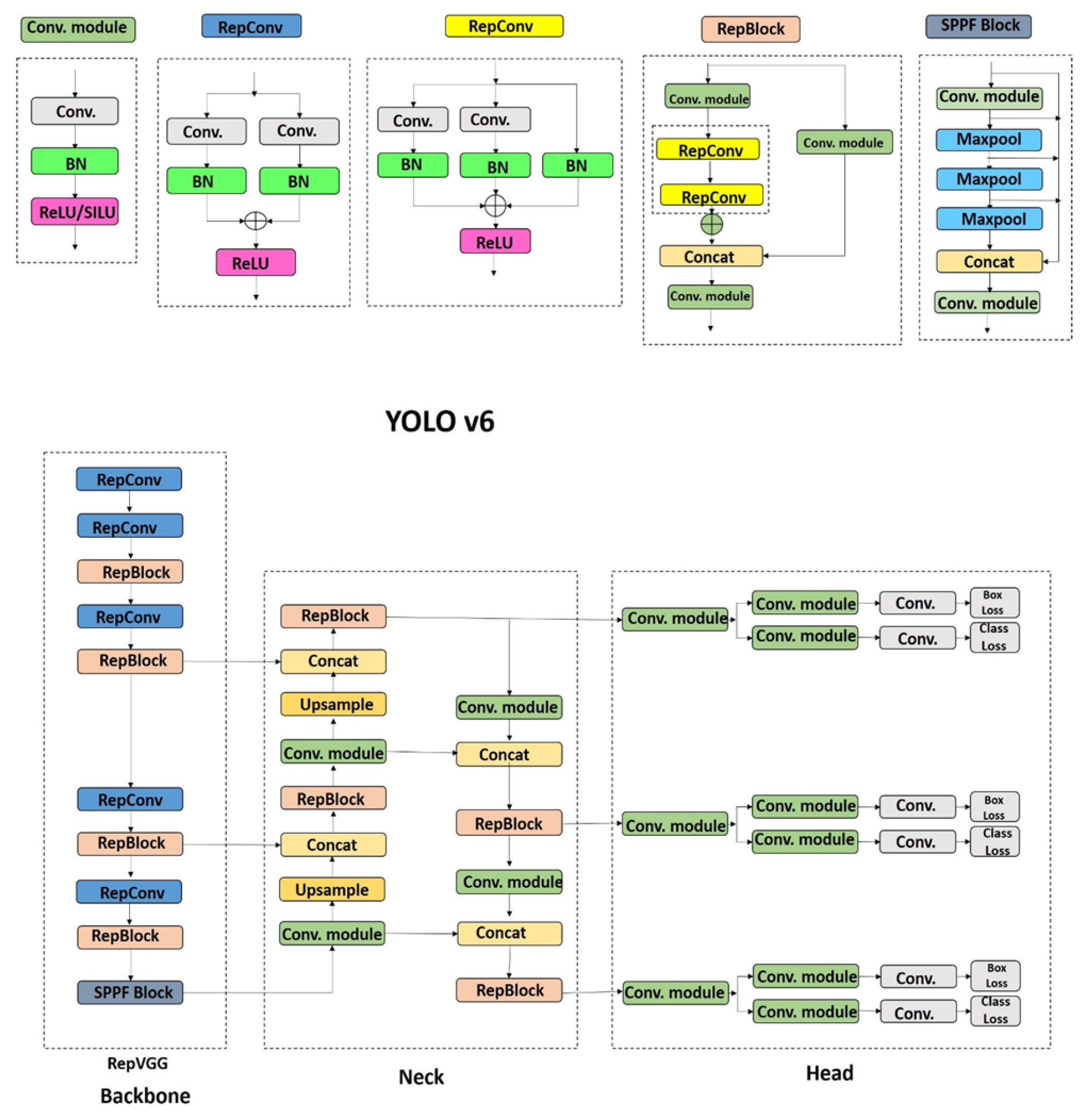

4.6. YOLOv6 (2022)

YOLOv6 [84], a hardware-oriented architecture design for efficient object detection, employs RepBlocks during training with structural re-parameterization for inference:

Large models use CSPStackRep blocks [

85] combining cross-stage partial connections:

According to the decoupled head optimization, the hybrid-channel head reduces parameters through depth pruning:

where

are classification/regression kernels.

Figure 9.

YOLOv6 architecture with EfficientRep backbone and decoupled head design.


Training methodology: the loss formulation combines task-aligned learning [72] with SIoU [86]:

where

.

The quantization strategy is as below:

RepOptimizer [

87]: Gradient reparameterization

Channel-wise distillation [

88]:

According to the performance analysis shown in

Table 9,

The limitations are:

latency variance across GPU architectures

AP:FPS ratio degradation from N to L6 variants

Requires per-hardware fine-tuning for optimal quantization

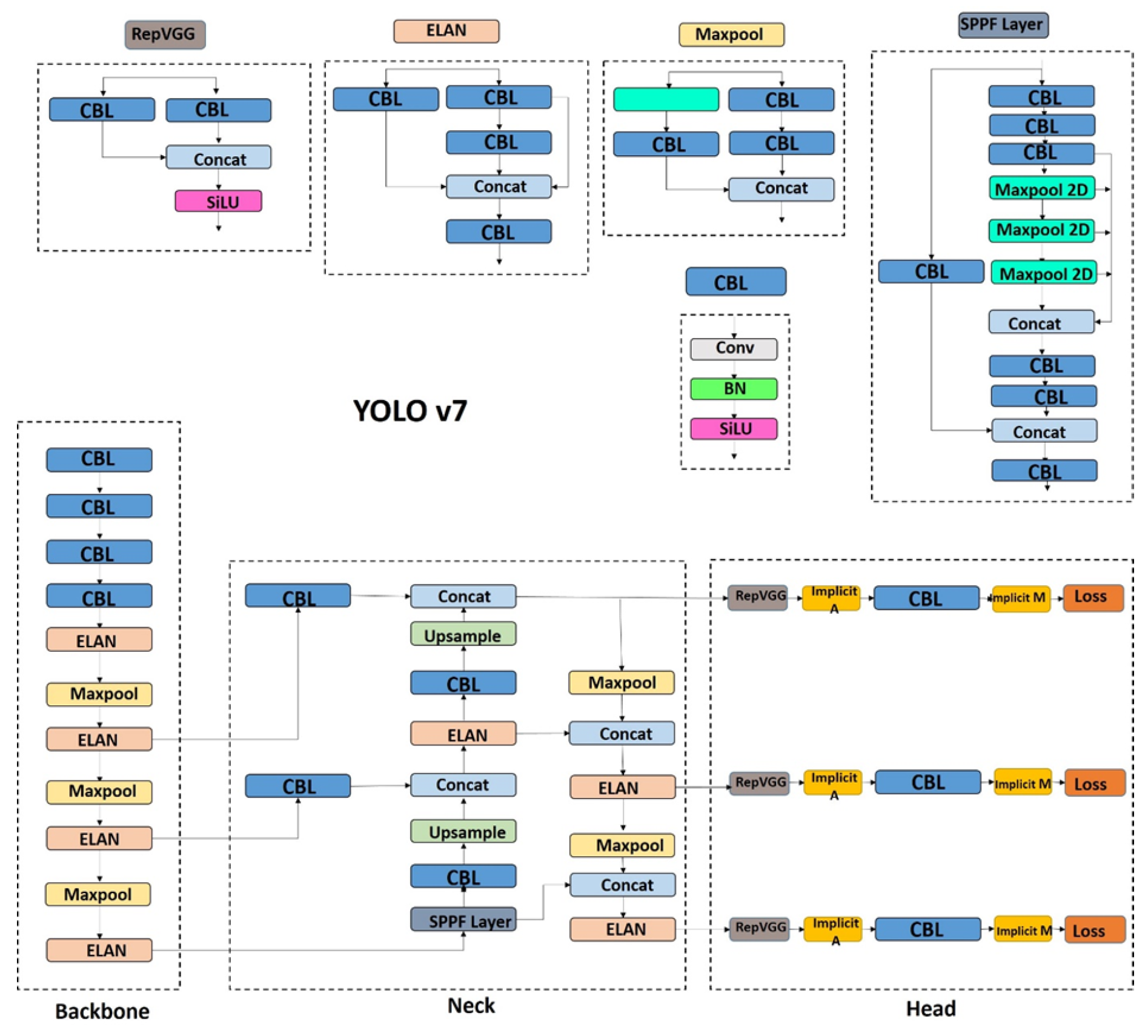

4.7. YOLOv7 (2022)

YOLOv7 [89], an extended-ELAN architecture with compound scaling for state-of-the-art object detection, extends ELAN through controlled cardinality expansion:

where

are parallel computation branches and

gradient-preserving aggregation.

According to the compound scaling strategy, balanced width/depth scaling maintains channel equilibrium:

According to the trainable bag-of-freebies,

RepConvN: Pruned re-parameterization [

80]

Coarse-to-fine label assignment:

Implicit knowledge integration from YOLOR [

90]

Figure 10.

YOLOv7 architecture featuring E-ELAN backbone and compound-scaled detection heads.


As per the performance analysis on MS COCO benchmarks in

Table 10,

The following efficiency gains are observed

36% fewer FLOPS vs YOLOv4 at % higher AP

43% parameter reduction vs YOLOR with AP gain

15% computation reduction in tiny variant

The limitations and considerations of this version are

× longer training time vs YOLOv5

18% AP variance across GPU architectures

AP:FPS ratio degradation for X variant

Requires × more tuning for INT8 quantization

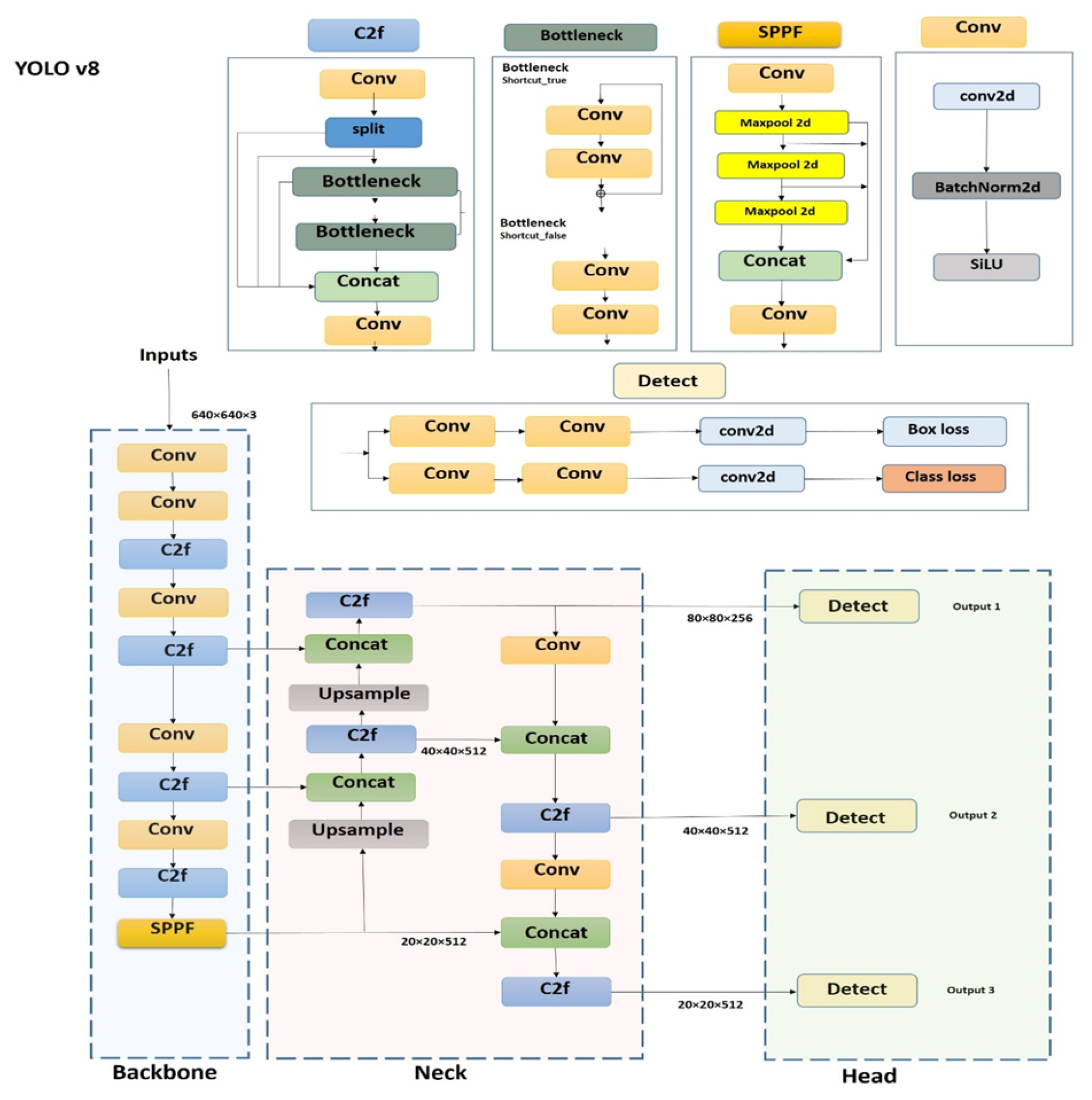

4.8. YOLOv8 (2023)

YOLOv8 [83], a unified architecture for multi-task visual perception, enhances CSPDarknet through Contextual Cross-Fusion (C2f) blocks:

where

are bottleneck blocks with residual connections.

According to the decoupled anchor-free head,

Task-specific branches for classification/regression:

Distribution Focal Loss [

74]:
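In heads trained with Distribution Focal Loss, each box side is commonly represented as a discrete probability distribution over distance bins and decoded as its expected value. The sketch below shows only that decoding step; the number of bins, the example logits, and the function names are hypothetical, and the loss itself is not reproduced here.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_distance(logits):
    """Decode a side distance as the expectation over integer bins 0..n-1."""
    probs = softmax(logits)
    return sum(index * p for index, p in enumerate(probs))


uniform = expected_distance([0.0, 0.0, 0.0, 0.0])     # midpoint of bins 0..3
peaked = expected_distance([0.0, 0.0, 50.0, 0.0])     # almost exactly bin 2
```

Predicting a distribution rather than a single number lets the head express uncertainty about ambiguous box edges while still producing a continuous distance.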

From the multi-task extensions, YOLOv8-seg introduces dual segmentation heads:

Performance analysis: MS COCO benchmarks are summarized in Table 11.

Table 11. Object detection performance on COCO val2017 [39].

| Model | AP | AP50 | FPS (A100) |
|---|---|---|---|
| YOLOv8n | 37.3 | 53.2 | 1230 |
| YOLOv8x | 53.9 | 70.4 | 280 |
| YOLOv5x [62] | 50.7 | 68.9 | 140 |
| YOLOv7-X [89] | 51.2 | 69.7 | 110 |
| EfficientDet-D7 [54] | 52.2 | 70.4 | 23 |

According to the segmentation capabilities,

The limitations and considerations of this version are

latency variance across GPU architectures

AP:FPS ratio degradation from nano to x variants

Requires × more tuning for INT8 quantization vs YOLOv5

Sparse documentation for novel C2f modules

Figure 11.

YOLOv8 architecture with C2f backbone and decoupled task heads.


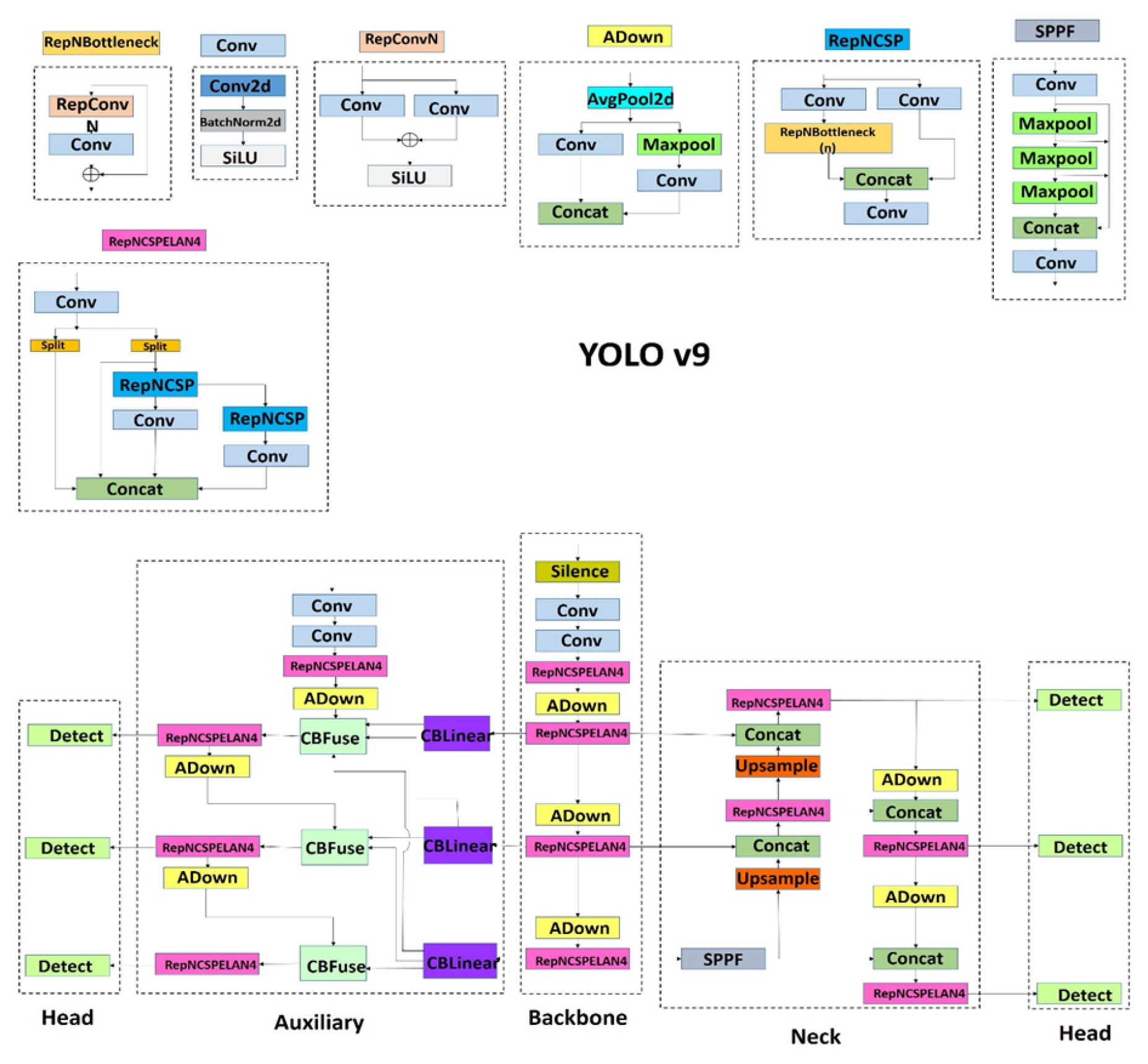

4.9. YOLOv9 (2024)

YOLOv9 [10], a lightweight object detector built on programmable gradient information (PGI) and generalized efficient layer aggregation, introduces PGI to mitigate information bottlenecks in deep networks:

where

controls gradient contribution from layer

l,

is the auxiliary supervision mask, and ⊕ denotes programmable fusion.

Generalized Efficient Layer Aggregation (GELAN) enables dynamic feature fusion across heterogeneous computation blocks:

where

represents diverse computation branches and

is the adaptive aggregation operator.

The performance on the MS COCO benchmark is as follows:

Table 12. Performance comparison on COCO val2017 [39].

| Model | AP | Params (M) | FLOPs (B) |
| --- | --- | --- | --- |
| YOLOv9-Nano | 39.1 | 2.1 | 4.3 |
| YOLOv9-Lite | 46.7 | 5.8 | 12.1 |
| YOLOv8-Nano [83] | 38.5 | 3.2 | 8.7 |
| EfficientDet-Lite3 [54] | 40.3 | 4.9 | 15.6 |
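Relative gains between rows of Table 12 can be derived directly from the tabulated values. The sketch below shows one way to do that. The helper names are illustrative, and the resulting percentages are simple arithmetic on the table, so they need not match figures quoted elsewhere in the text.

```python
# (AP, parameters in millions, FLOPs in billions) copied from Table 12.
TABLE_12 = {
    "YOLOv9-Nano": (39.1, 2.1, 4.3),
    "YOLOv9-Lite": (46.7, 5.8, 12.1),
    "YOLOv8-Nano": (38.5, 3.2, 8.7),
    "EfficientDet-Lite3": (40.3, 4.9, 15.6),
}


def relative_change(new, old):
    """Signed relative change of `new` with respect to `old`, in percent."""
    return 100.0 * (new - old) / old


def compare(model, baseline, table=TABLE_12):
    """Relative AP, parameter, and FLOPs change of `model` vs `baseline`."""
    ap, params, flops = table[model]
    b_ap, b_params, b_flops = table[baseline]
    return {
        "ap_points": ap - b_ap,
        "ap_pct": relative_change(ap, b_ap),
        "params_pct": relative_change(params, b_params),
        "flops_pct": relative_change(flops, b_flops),
    }
```

For example, `compare("YOLOv9-Nano", "YOLOv8-Nano")` gives a gain of 0.6 AP points with about 34% fewer parameters, while the comparison against EfficientDet-Lite3 shows roughly 72% fewer FLOPs at 1.2 AP points lower accuracy.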

According to the efficiency gains,

% AP improvement over YOLOv8 with fewer parameters

reduction in FLOPS vs EfficientDet at comparable accuracy

× faster inference on Jetson Nano vs YOLOv8

The limitations of this network are

accuracy variance across ARM-based edge devices

Requires × more training iterations than YOLOv8

Limited support for ultra-high resolution (4K+) inputs

Early-stage quantization support (INT8 accuracy drop: )

Figure 12. YOLOv9 architecture with PGI framework and GELAN feature pyramid.

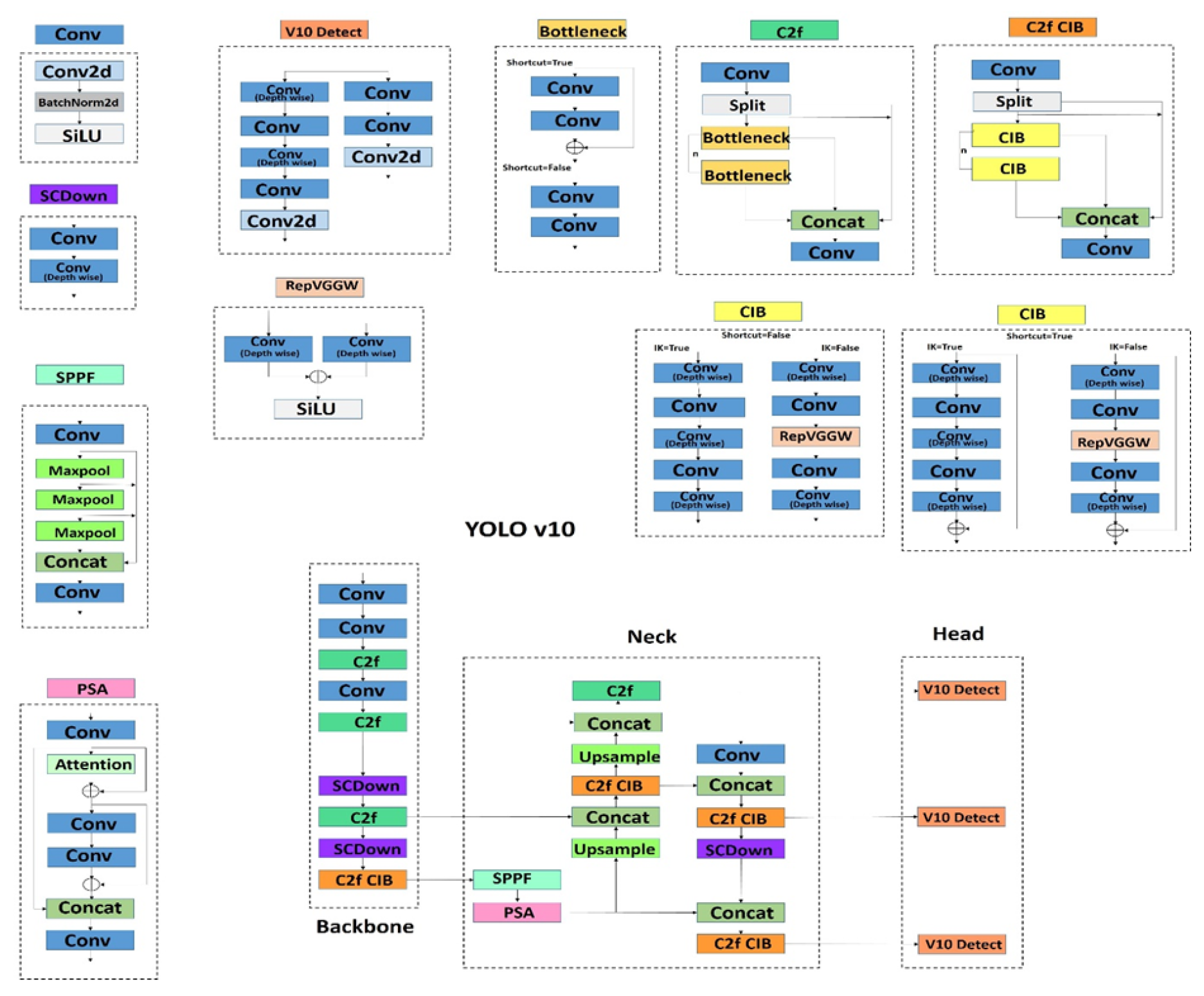

4.10. YOLOv10 (2024)

YOLOv10, an NMS-free architecture for real-time edge applications [11], eliminates its dependence on post-processing through dual assignment supervision:

where

optimizes prediction confidence ordering without NMS.
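To make concrete what removing NMS saves at inference time, the sketch below contrasts classical greedy non-maximum suppression with the plain score-based selection that an NMS-free head can use. It is a minimal pure-Python illustration. The function names and the default thresholds are assumptions, and the top-k path presumes a head trained with one-to-one assignment so that duplicate predictions are already rare.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Classical post-processing: keep a box only if it does not overlap
    an already kept, higher-scoring box by more than `iou_threshold`."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


def top_k_without_nms(scores, k, score_threshold=0.25):
    """NMS-free selection: keep the k highest scores above a threshold.
    No pairwise IoU computation is needed."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [i for i in order if scores[i] >= score_threshold][:k]
```

The greedy loop is quadratic in the number of candidate boxes in the worst case and depends on a hand-tuned IoU threshold, whereas the NMS-free path reduces to a sort and a cut-off.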

Spatial-channel decoupled downsampling shows

Rank-guided block design is

where

denotes grouped convolution and

sigmoid gating.
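The benefit of spatial-channel decoupled downsampling can be illustrated with a weight count. The sketch below assumes the decoupled variant is a pointwise (1×1) convolution that changes the channel count, followed by a depthwise k×k stride-2 convolution that reduces resolution, and compares it with a single dense k×k stride-2 convolution. The function names are illustrative, biases and normalization layers are ignored, and the layer ordering is an assumption, not a detail confirmed by the text above.

```python
def standard_downsample_params(c_in, c_out, k=3):
    """Weights of one dense k x k stride-2 convolution (bias ignored)."""
    return k * k * c_in * c_out


def decoupled_downsample_params(c_in, c_out, k=3):
    """Weights of a pointwise conv (channel change) followed by a
    depthwise k x k stride-2 conv (spatial reduction), bias ignored."""
    pointwise = c_in * c_out   # 1 x 1 conv mixes channels only
    depthwise = k * k * c_out  # one k x k filter per output channel
    return pointwise + depthwise
```

For a stage that doubles the channels from 64 to 128, the dense layer has 73,728 weights and the decoupled pair has 9,344.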

The performance analysis based on MS COCO benchmarks is provided in Table 13.

The technical advancements are

faster inference than YOLOv9 at comparable accuracy

parameter reduction vs YOLO-nano

ms end-to-end latency for 640px inputs

× energy efficiency improvement on edge TPUs

The limitations are

accuracy variance across ARM architectures

longer training convergence time

Limited to 1280px input resolution

Requires custom TensorRT plugins for deployment

Figure 13. YOLOv10 architecture with NMS-free dual-head design and rank-guided blocks.

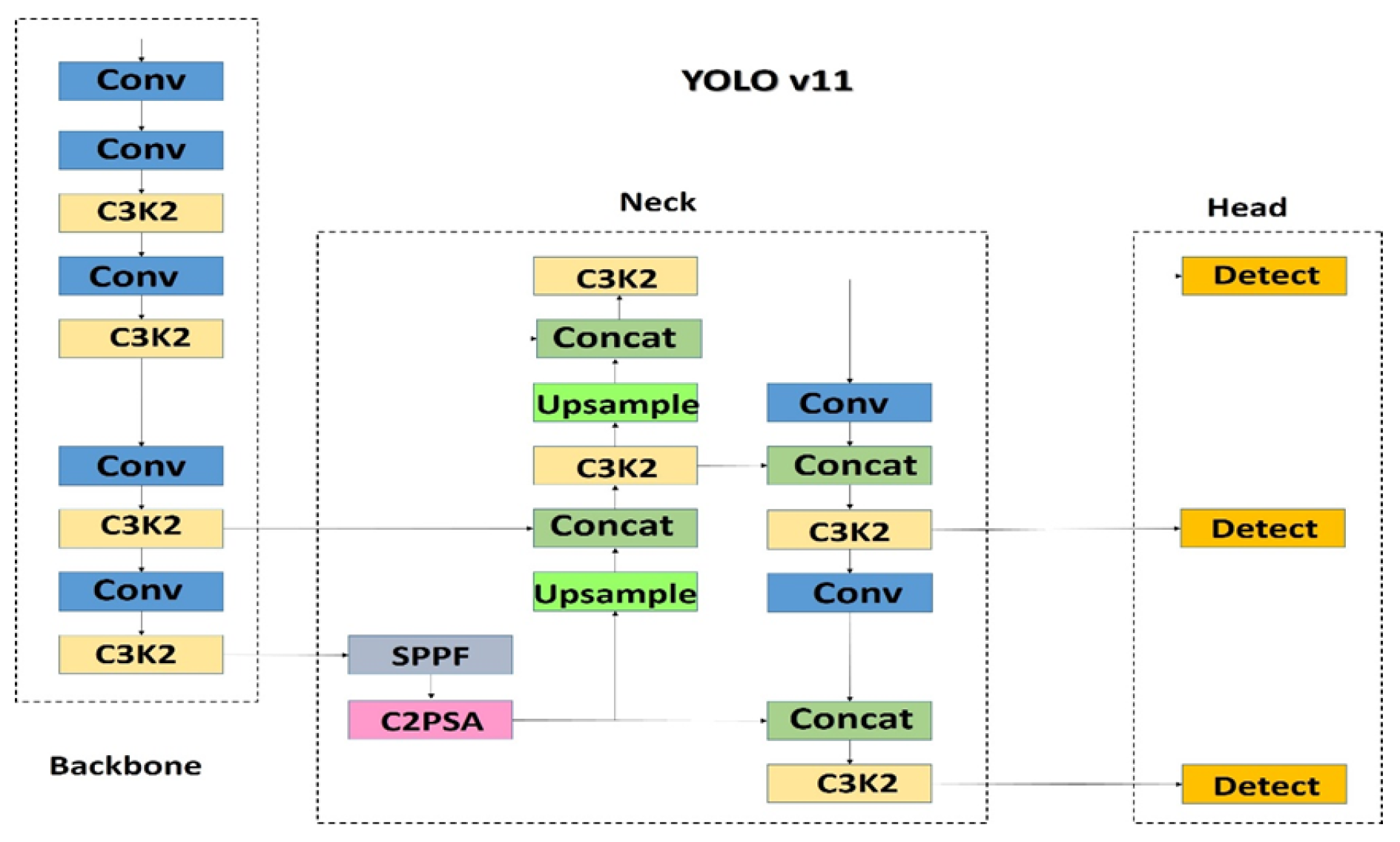

4.11. YOLOv11 (2024)

YOLOv11, a unified architecture for multi-task vision with enhanced spatial attention [92], introduces compressed C3k2 blocks that replace the C2f modules:

SPPF variant uses parallel max-pooling with kernel progression .
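SPPF-style layers are usually implemented as a short chain of identical small max-pools whose intermediate outputs are concatenated, which reproduces parallel pooling with growing kernels at lower cost. The 1-D toy sketch below checks that equivalence. The kernel sizes (5, 9, 13) and the three-stage chain are assumptions borrowed from common SPP/SPPF implementations, and the function names are illustrative.

```python
def max_pool_1d(values, kernel):
    """Stride-1 max pooling with 'same' output length.
    Positions outside the input are ignored (equivalent to -inf padding)."""
    half = kernel // 2
    n = len(values)
    return [max(values[max(0, i - half): min(n, i + half + 1)]) for i in range(n)]


def spp_parallel(values, kernels=(5, 9, 13)):
    """Parallel branches: one pooling pass per kernel size on the same input."""
    return [max_pool_1d(values, k) for k in kernels]


def sppf_sequential(values, kernel=5, repeats=3):
    """Sequential chain: the same small pool applied repeatedly,
    keeping every intermediate output."""
    outputs, current = [], values
    for _ in range(repeats):
        current = max_pool_1d(current, kernel)
        outputs.append(current)
    return outputs
```

Two stacked 5-wide pools cover the same window as one 9-wide pool, and three cover a 13-wide window, so both functions return identical outputs for any input while the sequential form only ever scans 5 elements per position.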

C2PSA attention mechanism is

where

denotes sigmoid activation for attention gating.

Figure 14. YOLOv11 architecture with C3k2 backbone and C2PSA-enhanced neck.

The multi-task benchmarking results and the efficiency gains are as follows.

Multi-task benchmarking is shown in Table 14.

Efficiency gains are

parameter reduction vs YOLOv8m (→M)

faster inference than YOLOv10-S at equivalent accuracy

lower VRAM consumption during training

The limitations are

longer training convergence vs YOLO

accuracy variance across edge AI accelerators

Requires CUDA for optimal performance

Limited documentation for OBB extensions

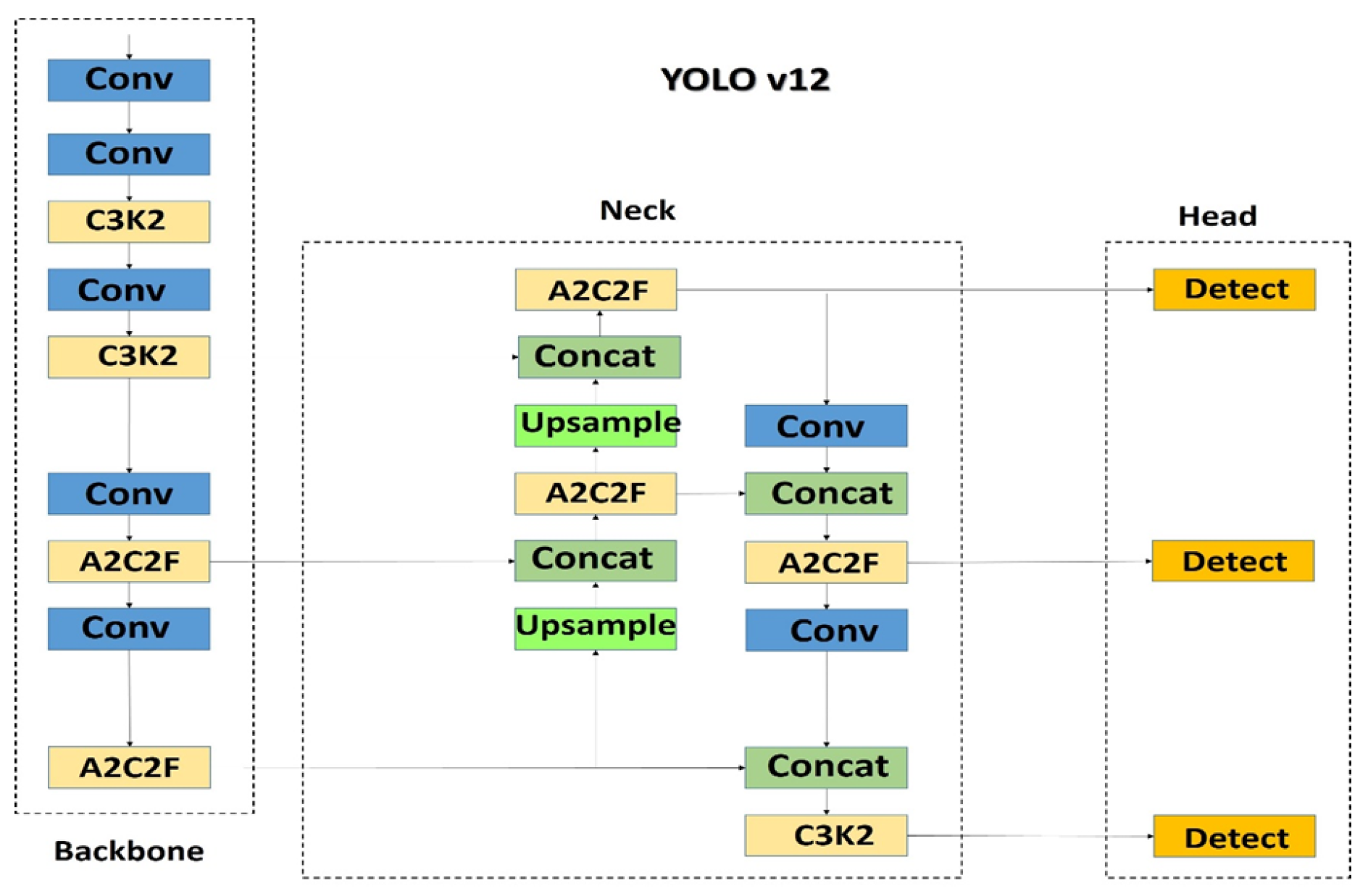

4.12. YOLOv12 (2025)

YOLOv12, an attention-optimized real-time detector built on area attention and residual ELAN [93], introduces area attention with linear complexity:

where

is learnable area projection (

).

Residual ELAN (R-ELAN) is

where

are bottleneck blocks with depthwise separable convolutions.

Figure 15. YOLOv12 architecture featuring area attention and R-ELAN blocks.

The performance analysis based on MS COCO benchmarks is shown in Table 15.

The technical advancements are

faster than RT-DETR-R18 with mAP

FLOPs reduction vs YOLOv11-S

fewer memory accesses than vanilla attention

× speedup from FlashAttention [95]

The limitations are

Requires Turing/Ampere GPUs for optimal performance

longer training time vs YOLOv11

mAP variance across ARM Mali GPUs

No official TensorRT support for area attention

5. Comparative Analysis of the YOLO Models to Date

The YOLO (You Only Look Once) architecture has undergone significant evolution since its inception. The original YOLOv1 [7] revolutionized real-time object detection through a unified CNN approach with 24 convolutional layers, achieving

mAP on PASCAL VOC at 45 FPS. YOLOv2 [34] advanced this foundation with Darknet-19 and anchor boxes, boosting performance to

mAP. Subsequent iterations introduced critical innovations: YOLOv3 [40] implemented multi-scale predictions via Darknet-53 and FPN, while YOLOv4 [46] combined CSPDarknet53 with SPP/PANet modules and BoF techniques to reach

AP on COCO. The PyTorch-based YOLOv5 [62] introduced auto-anchor generation and C3 blocks, achieving 280 FPS on A100 GPUs. The industry-focused YOLOv6 [84] optimized hardware performance through Rep-PAN neck designs, matching YOLOv4’s accuracy with 22% fewer parameters. YOLOv7 [89] further enhanced multi-scale detection through E-ELAN architectures and coarse-to-fine labeling. Current versions demonstrate expanding capabilities: YOLOv8 [83] supports multi-task learning with

AP, while YOLOv9 [10] implements programmable gradient information for enhanced feature preservation. Cutting-edge developments include YOLOv10’s [11] NMS-free architecture (

ms latency) and YOLOv11’s [92] C2PSA attention modules for multi-task learning. The latest YOLOv12 [93] pioneers attention optimization through Area Attention mechanisms and FlashAttention integration, achieving

AP with

fewer memory operations than transformers. A summary of the YOLO evolution, including architectural changes and performance metrics, is shown in Table 16.

7. Conclusions

The YOLO framework has fundamentally transformed real-time object detection through its continuous architectural evolution spanning twelve generations. From YOLOv1’s groundbreaking unification of localization and classification to YOLOv12’s attention-based cross-scale fusion, this review has documented how successive innovations systematically addressed core challenges: overcoming information loss via PGI (v9), eliminating NMS bottlenecks (v10), and enhancing small-object detection through transformer-CNN hybrids (v12). Benchmark analyses confirm YOLO’s dominance in balancing speed ( FPS) and accuracy ( AP on COCO), achieving 47× faster inference than region-based predecessors while maintaining competitive precision.

Our exploration reveals three critical success factors: 1) Architectural simplicity enabling hardware-aware optimizations, 2) Progressive feature hierarchy refinements for multi-scale robustness, and 3) Strategic adoption of attention mechanisms without compromising throughput. These advancements have propelled YOLO’s adoption across autonomous systems, medical diagnostics, industrial automation, and precision agriculture—domains where latency-accuracy tradeoffs are mission-critical.

Future research should prioritize: 1) Lightweight architectures for ultra-edge deployment, 2) Self-supervised adaptation to long-tail distributions, and 3) Unified frameworks for multimodal detection. As object detection evolves toward embodied AI applications, YOLO’s design philosophy—maximal performance through minimal computational complexity—remains an enduring blueprint for real-time perception systems. This review provides both technical reference and historical context to guide next-generation innovations building upon YOLO’s foundational legacy.