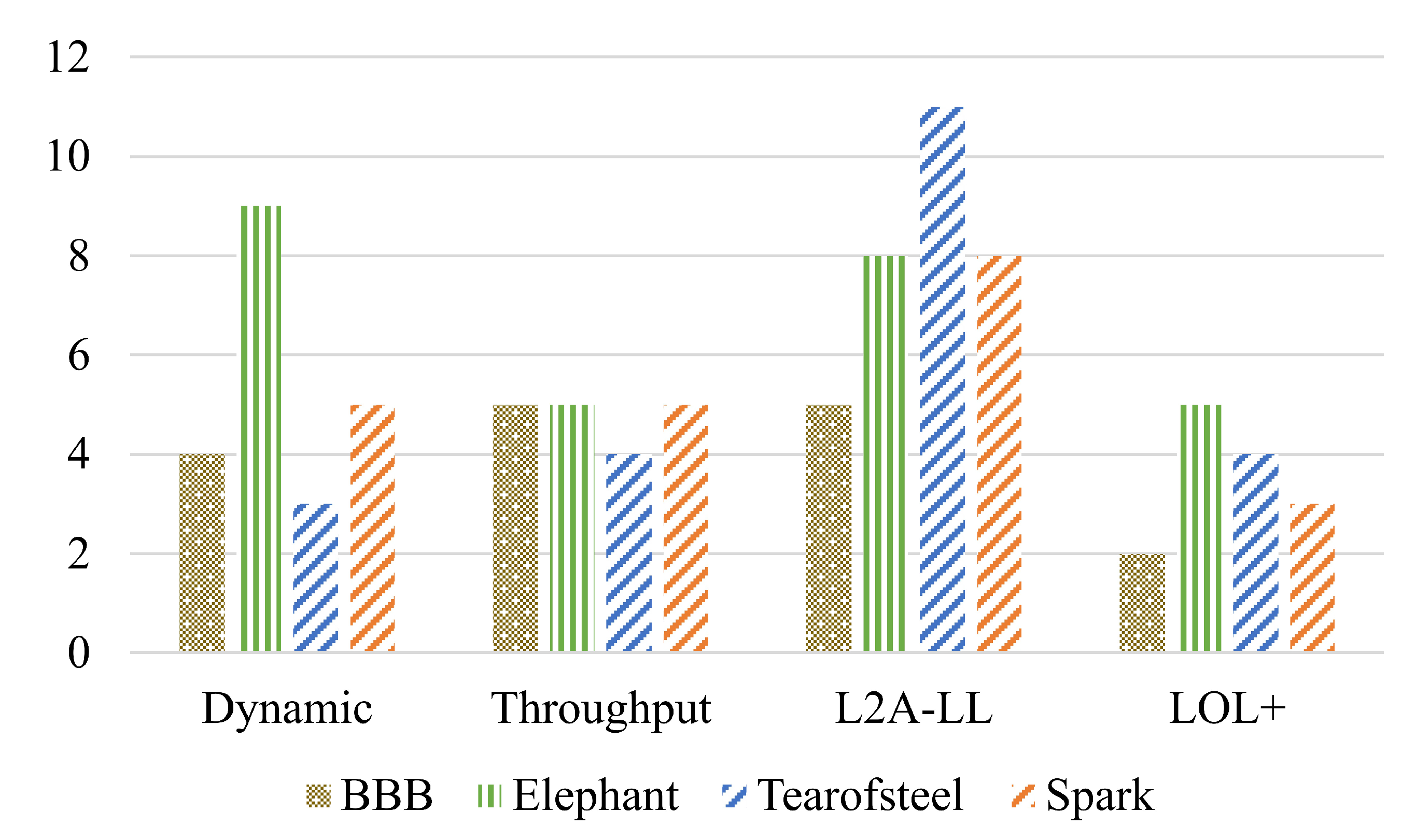

This section focuses on the subjective evaluation of the ABR algorithms. The test content was generated based on the quality levels determined by the ABR algorithms discussed in the preceding section.

4.2.5. Mean Opinion Score

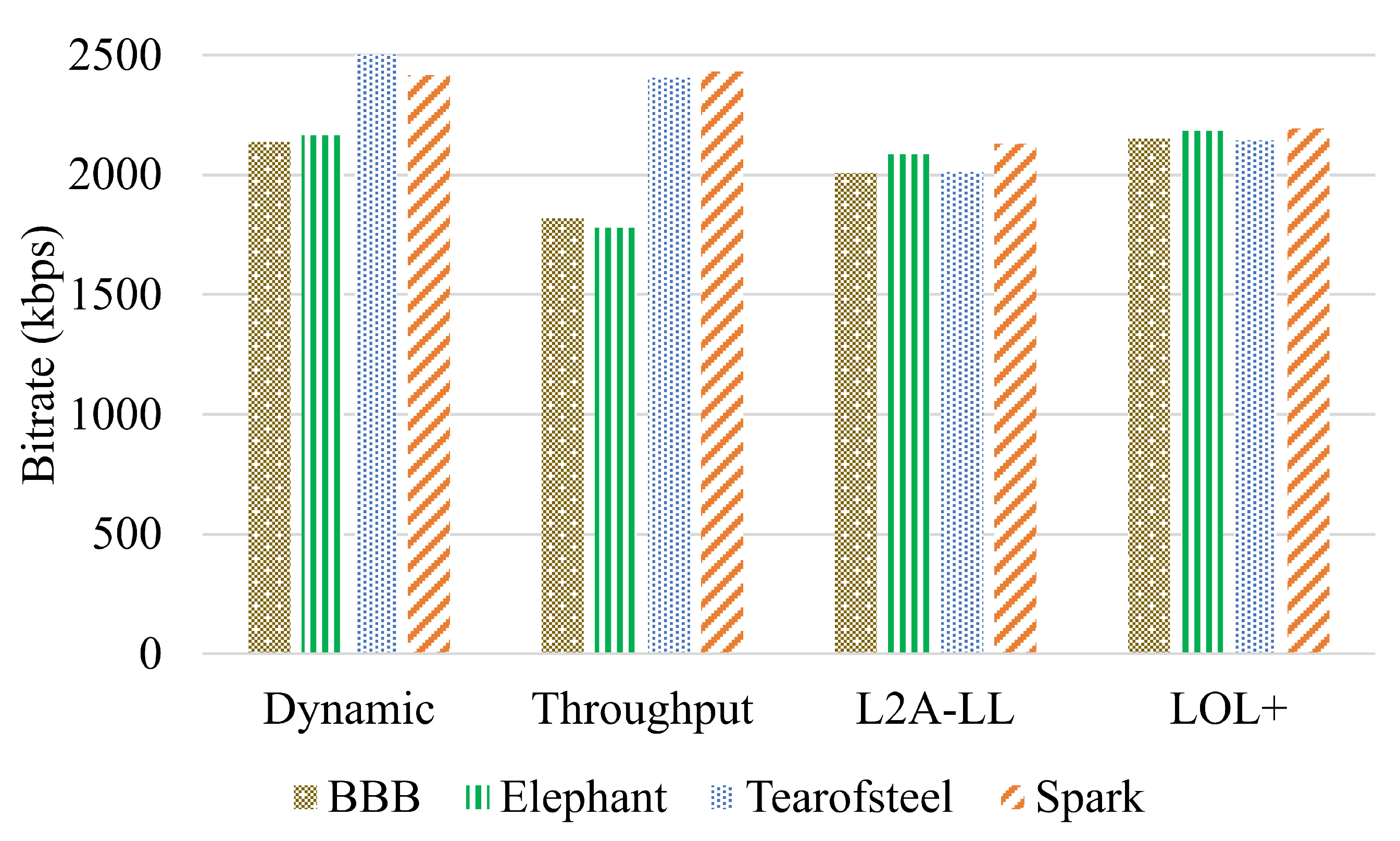

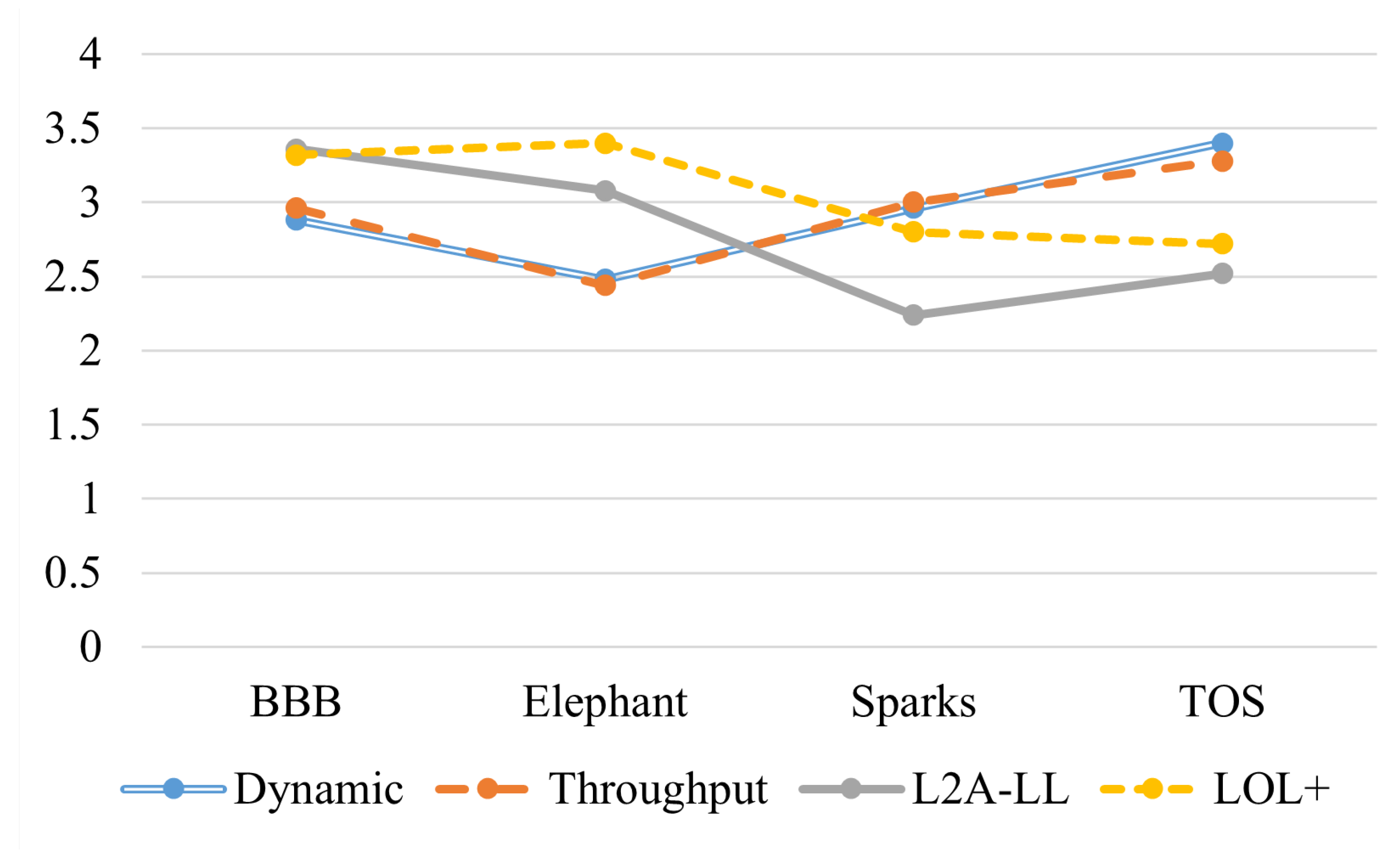

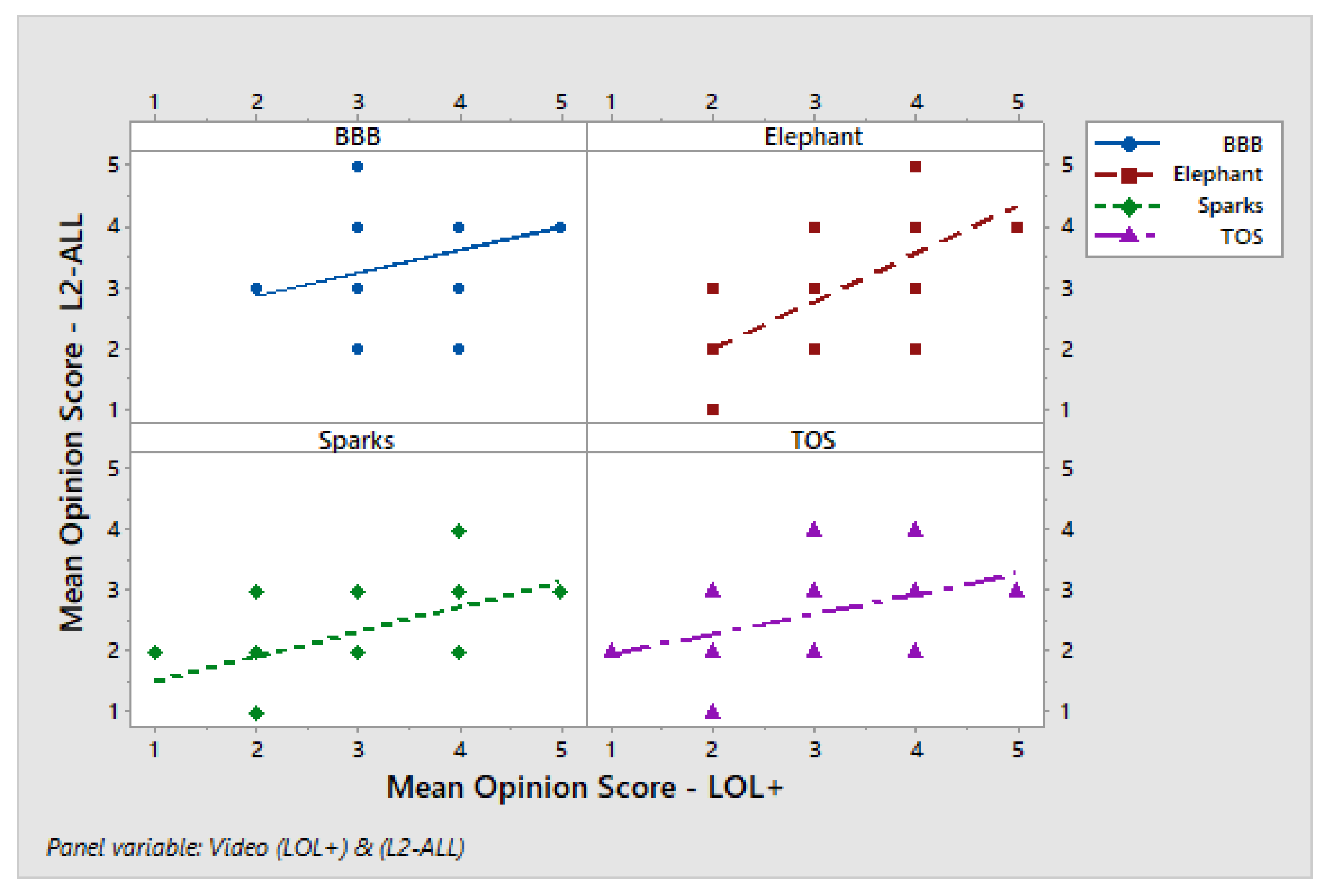

Figure 11 shows the MOS scores for the algorithms evaluated across four video sequences: Big Buck Bunny (BBB), Elephant, Sparks, and Tears of Steel (TOS). The figure shows that the Dynamic and Throughput algorithms perform comparably, maintaining moderate perceptual quality across all videos, with slightly higher ratings for TOS. L2A-LL exhibits the lowest MOS values, particularly for Sparks, indicating instability in quality adaptation and reduced robustness under fluctuating conditions. In contrast, LOL+ consistently achieves the highest MOS scores, especially for BBB and Elephant, suggesting that it sustains high visual quality while minimizing playback degradation.

The results suggest that algorithms emphasizing latency reduction, such as L2A-LL, may compromise perceptual quality when network variability is high. Conversely, LOL+ demonstrates a favorable balance between responsiveness and visual stability, leading to superior user-perceived quality. These findings underscore the importance of designing adaptive algorithms that optimize both QoE and stability rather than focusing solely on latency minimization.

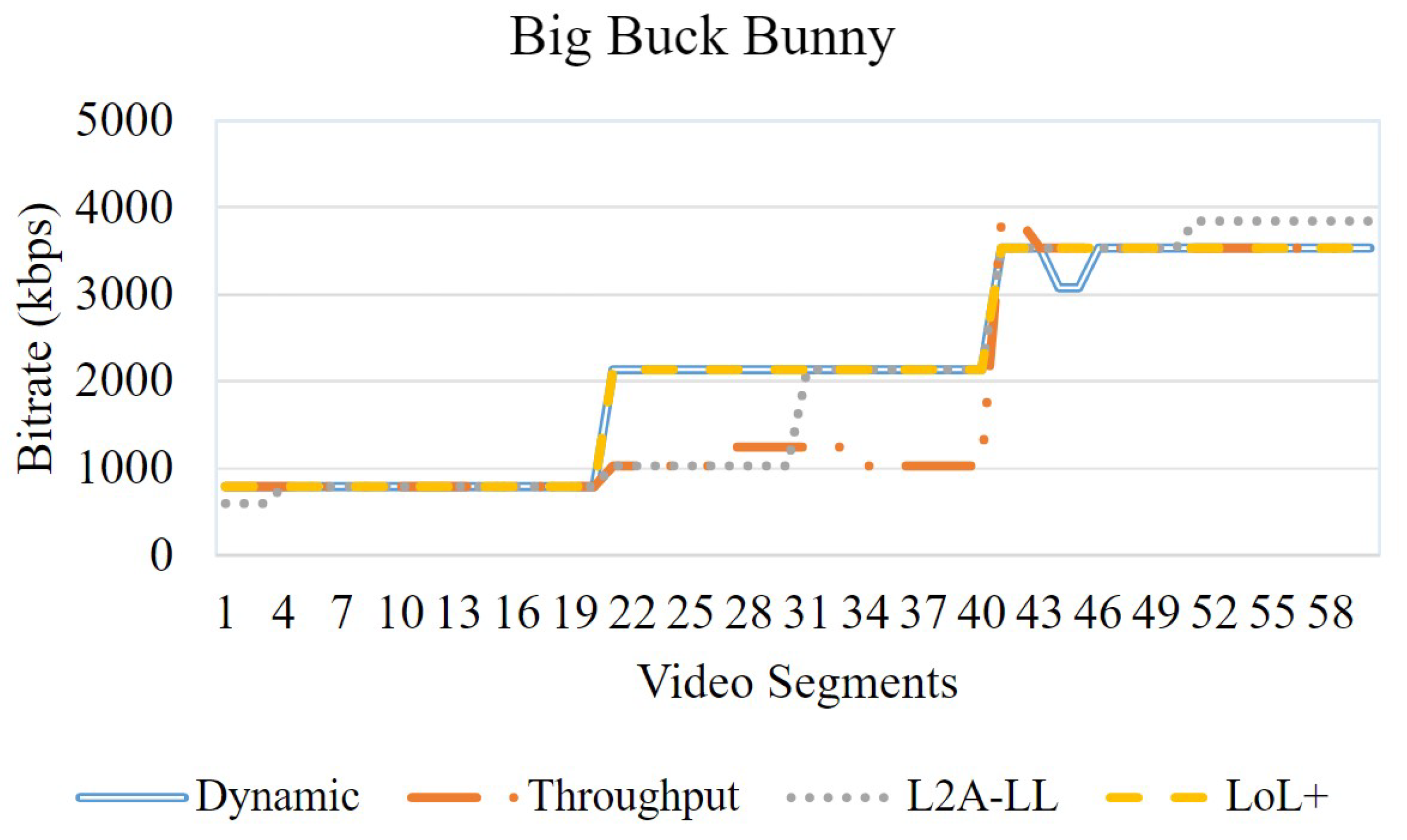

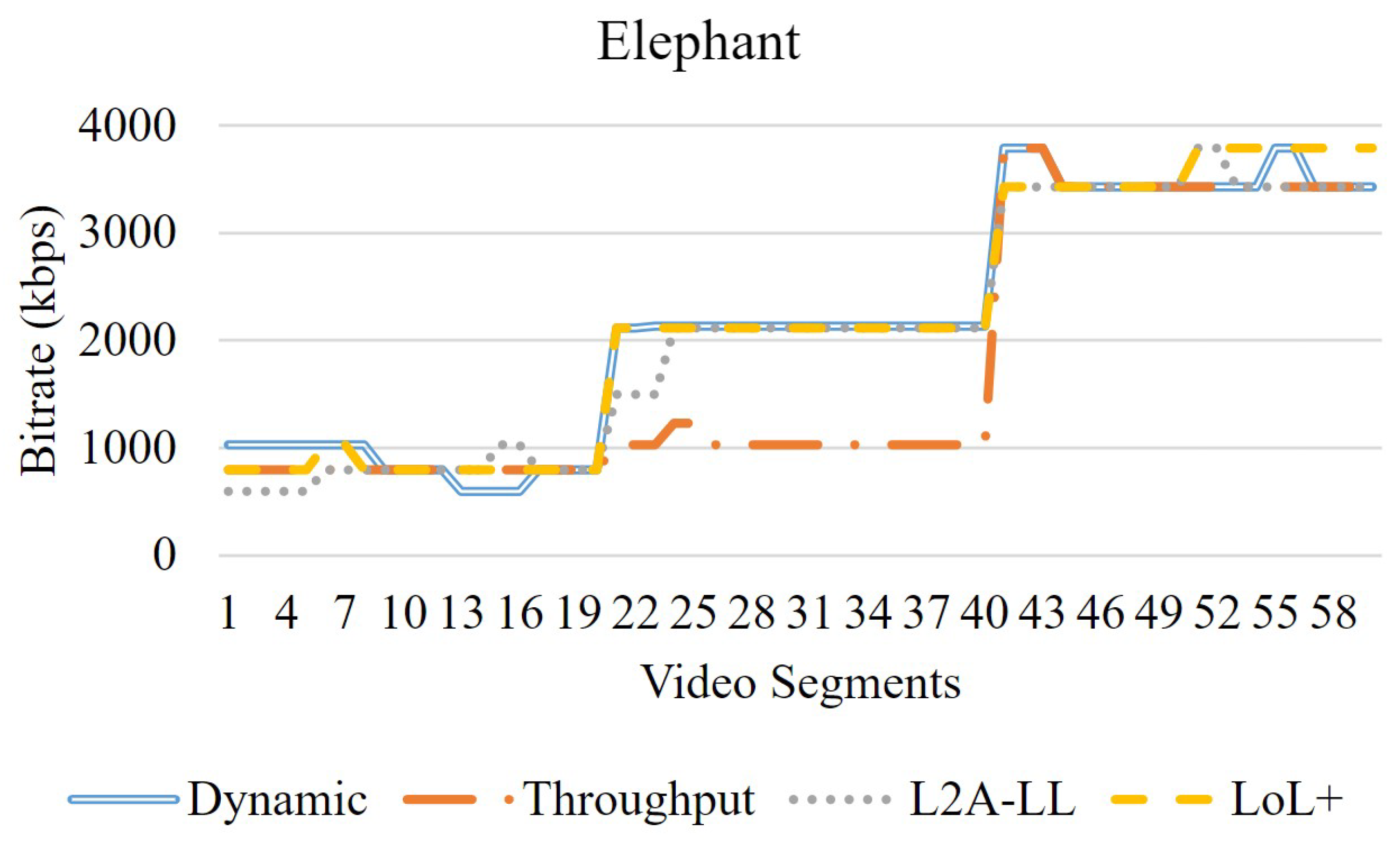

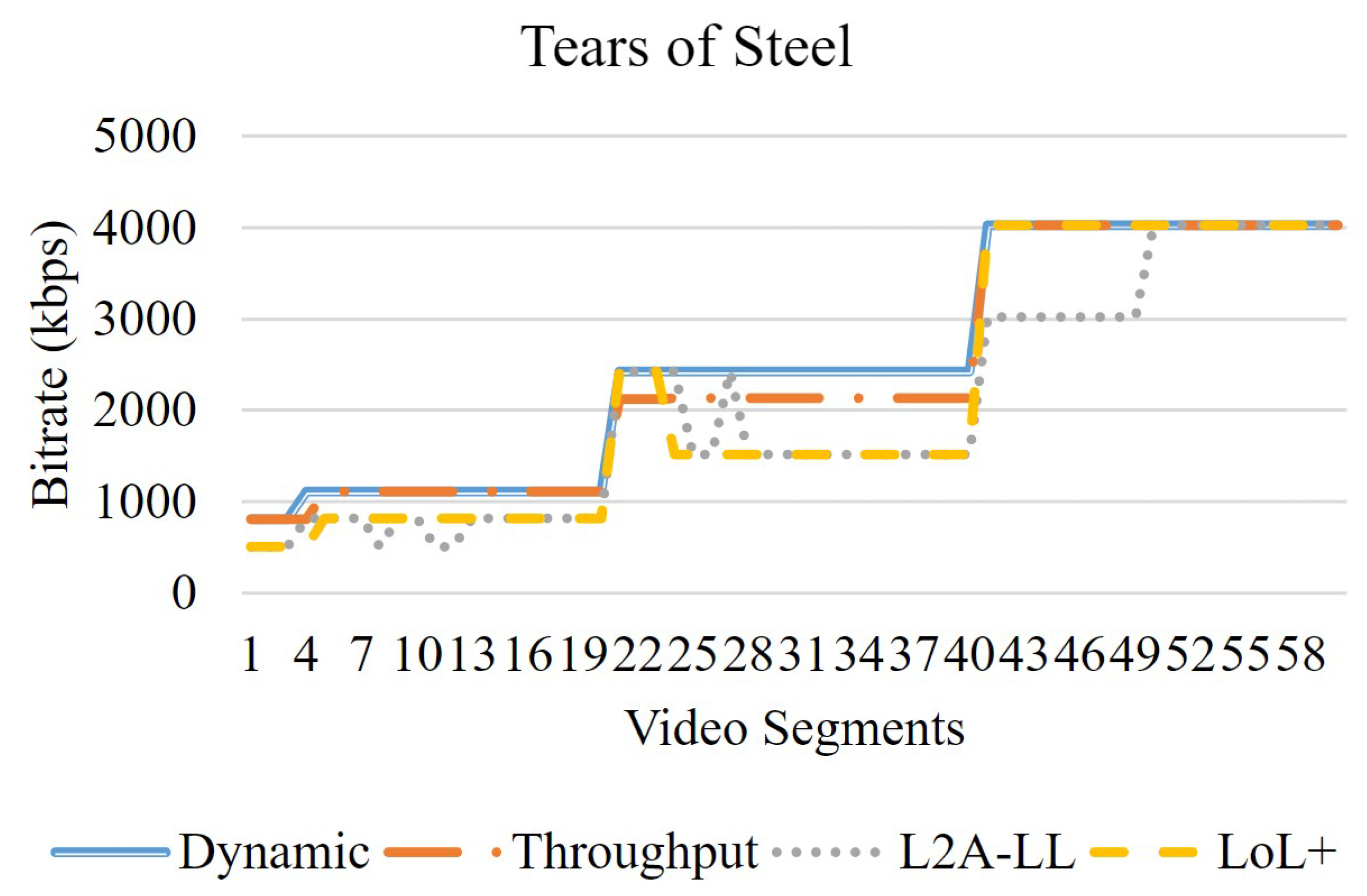

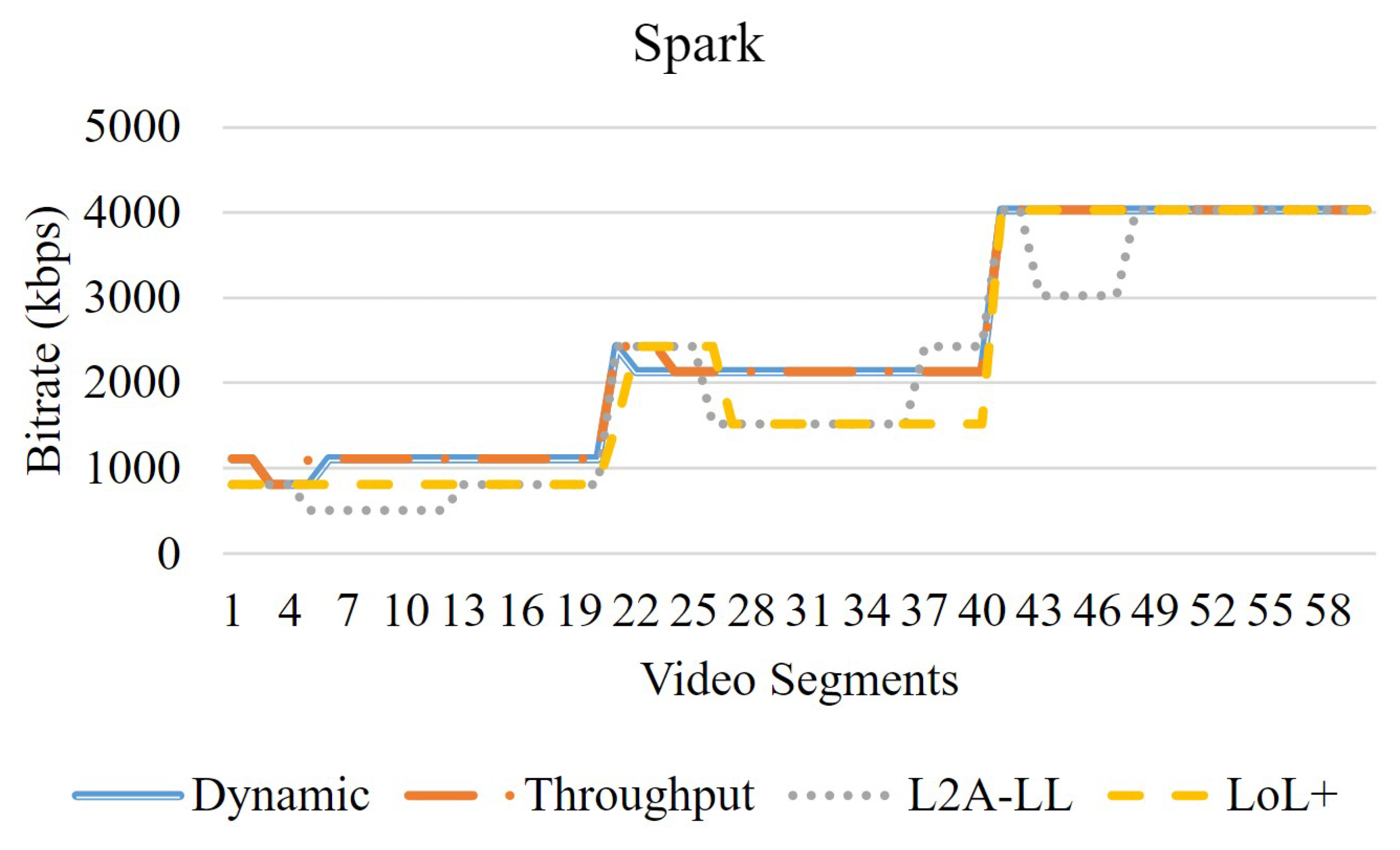

The results are consistent with the findings from our objective evaluation. As illustrated in Fig. 9, the frequent bitrate switches observed in the L2A-LL algorithm correspond to lower MOS values. This indicates that abrupt quality variations negatively affect user perception. Similarly, the lower average video quality produced by the Throughput algorithm results in reduced MOS scores for the Big Buck Bunny and Elephant sequences. Interestingly, despite exhibiting favorable objective metrics, such as high average quality and minimal switching, the LOL+ algorithm attains a relatively low MOS for Tears of Steel. This suggests that content characteristics or temporal quality perception factors may influence subjective ratings beyond purely objective parameters.

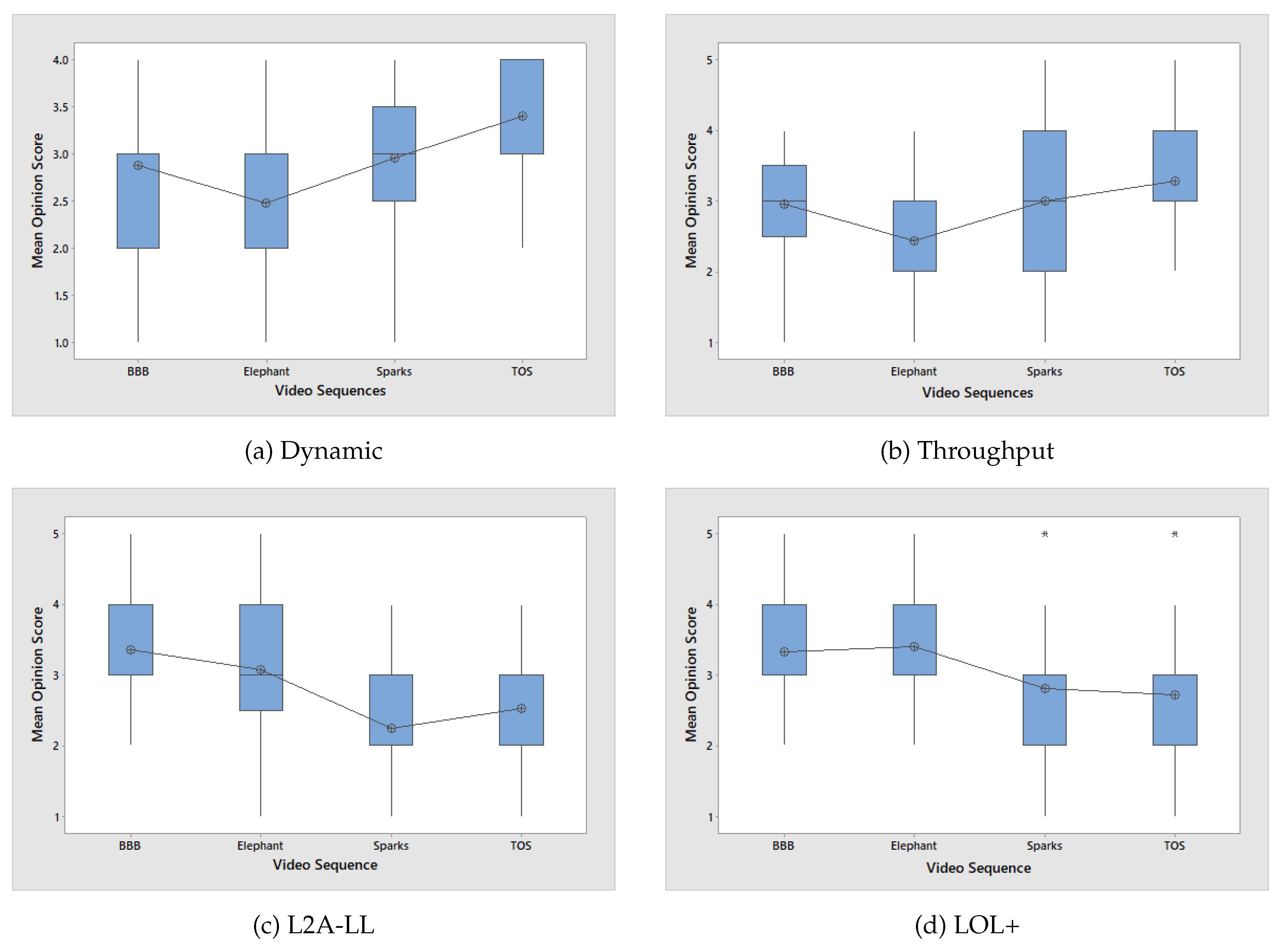

Figure 12 presents the MOS distributions for the four ABR algorithms: Dynamic, Throughput, L2A-LL, and LOL+. The box plots provide insight into both the central tendency and the variability of user ratings. The Dynamic and Throughput algorithms exhibit relatively narrow interquartile ranges with consistent median MOS values around 3, indicating stable performance and limited perceptual fluctuation across the different video contents. In contrast, the L2A-LL algorithm shows a larger spread of scores, particularly for Sparks and TOS, suggesting greater inconsistency in perceived quality. This variability can be attributed to its latency-oriented adaptation strategy, which may result in more frequent bitrate changes and visual fluctuations.

The LOL+ algorithm achieves higher median MOS scores for BBB and Elephant, indicating strong perceptual quality under favorable conditions. However, its increased score dispersion and the presence of outliers for Sparks and TOS suggest sensitivity to complex or high-motion content, where the algorithm may struggle to maintain a consistent user experience despite favorable objective metrics. Overall, the results indicate that Dynamic and Throughput provide more stable subjective quality, while L2A-LL and LOL+ exhibit greater variability. This highlights the trade-off between achieving low latency and maintaining consistent perceptual quality across diverse video content.
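The quantities read off a box plot (median, interquartile range, and outliers) can be sketched as follows. This is a minimal illustration, assuming the common 1.5 × IQR rule for flagging outliers; the ratings are hypothetical and are not the study's data, and `box_summary` is an illustrative helper name.

```python
from statistics import quantiles

def box_summary(scores):
    """Return the median, quartiles, IQR, and 1.5*IQR outliers of a list of ratings."""
    # "inclusive" treats the data as the full set of observed ratings.
    q1, q2, q3 = quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence = q1 - 1.5 * iqr
    upper_fence = q3 + 1.5 * iqr
    outliers = [s for s in scores if s < lower_fence or s > upper_fence]
    return {"median": q2, "q1": q1, "q3": q3, "iqr": iqr, "outliers": outliers}

# Hypothetical 5-point opinion scores from 25 viewers (NOT the study's data).
ratings = [1] + [2] * 5 + [3] * 9 + [4] * 8 + [5] * 2

summary = box_summary(ratings)
print(summary)
```

A narrow IQR with few outliers corresponds to the stable behavior described for Dynamic and Throughput, whereas a wide IQR or many flagged points corresponds to the dispersion described for L2A-LL and LOL+.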

Figure 12. Comparison of video score distributions across four ABR algorithms: (a) Dynamic, (b) Throughput, (c) L2A-LL, and (d) LOL+.

Table 3. Descriptive Statistics of Mean Opinion Scores - Dynamic.

| Video | N | Mean | StdDev | 95% CI |
| --- | --- | --- | --- | --- |
| BBB | 25 | 2.880 | 0.781 | (2.594, 3.166) |
| Elephant | 25 | 2.480 | 0.714 | (2.194, 2.766) |
| Sparks | 25 | 2.960 | 0.790 | (2.674, 3.246) |
| TOS | 25 | 3.400 | 0.577 | (3.114, 3.686) |

Table 4. Descriptive Statistics of Mean Opinion Scores - Throughput.

| Video | N | Mean | StdDev | 95% CI |
| --- | --- | --- | --- | --- |
| BBB | 25 | 2.96 | 0.790 | (2.641, 3.279) |
| Elephant | 25 | 2.44 | 0.651 | (2.121, 2.759) |
| Sparks | 25 | 3.00 | 0.913 | (2.681, 3.319) |
| TOS | 25 | 3.28 | 0.843 | (2.961, 3.599) |

The descriptive statistics presented in Tables 3–6 reveal that the Dynamic and Throughput algorithms exhibit relatively low standard deviations (0.58–0.91) and narrow confidence intervals, indicating stable perceptual quality. This consistency suggests that both algorithms deliver smooth visual experiences with minimal perceptual fluctuations across diverse content types. In contrast, the L2A-LL algorithm demonstrates higher variability, with standard deviations reaching up to 0.997 for the Elephant sequence and wider confidence intervals across all videos. These results imply less stable performance and greater perceptual inconsistency, which is likely due to its aggressive latency-oriented adaptation strategy that leads to frequent bitrate changes and visible quality oscillations. The LOL+ algorithm, on the other hand, shows narrow confidence intervals for low-motion content such as Big Buck Bunny and Elephant, reflecting a high level of user consensus and stable visual quality. However, slightly broader intervals are observed for more complex content, such as Tears of Steel. This suggests that scene dynamics and motion intensity may still influence the perceived quality.

Overall, the statistical dispersion and confidence analyses indicate that Dynamic and Throughput achieve steady perceptual outcomes, L2A-LL introduces instability under fluctuating conditions, and LOL+ sustains high but content-sensitive consistency in subjective quality assessments.
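As a worked example of how a 95% confidence interval relates to the tabulated means and standard deviations, the sketch below reproduces the Dynamic intervals of Table 3. It rests on two assumptions that the text does not state: that the intervals are based on a standard deviation pooled across the four videos (suggested by the identical interval widths within each table), and that the two-sided 95% t critical value for 96 degrees of freedom is approximately 1.985. The function names are illustrative.

```python
import math

# Values copied from Table 3 (Dynamic); N = 25 ratings per video.
N_PER_VIDEO = 25
MEANS = {"BBB": 2.880, "Elephant": 2.480, "Sparks": 2.960, "TOS": 3.400}
STD_DEVS = {"BBB": 0.781, "Elephant": 0.714, "Sparks": 0.790, "TOS": 0.577}

# ASSUMPTION: two-sided 95% t critical value for 4 * (25 - 1) = 96 degrees of freedom.
T_CRIT_96 = 1.985

def pooled_std(std_devs):
    """Pooled standard deviation for groups of equal size."""
    std_devs = list(std_devs)
    return math.sqrt(sum(s ** 2 for s in std_devs) / len(std_devs))

def pooled_ci(mean, pooled_sd, n, t_crit):
    """Confidence interval for one group mean using the pooled standard deviation."""
    half_width = t_crit * pooled_sd / math.sqrt(n)
    return (mean - half_width, mean + half_width)

sd_pooled = pooled_std(STD_DEVS.values())
intervals = {
    video: pooled_ci(mean, sd_pooled, N_PER_VIDEO, T_CRIT_96)
    for video, mean in MEANS.items()
}

for video, (low, high) in intervals.items():
    print(f"{video}: ({low:.3f}, {high:.3f})")
```

Under these assumptions the half-width is the same for every video within one algorithm, so differences in interval width arise between algorithms rather than between videos.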

Table 5. Descriptive Statistics of Mean Opinion Scores - L2A-LL.

| Video | N | Mean | StdDev | 95% CI |
| --- | --- | --- | --- | --- |
| BBB | 25 | 3.36 | 0.810 | (3.035, 3.685) |
| Elephant | 25 | 3.08 | 0.997 | (2.755, 3.405) |
| Sparks | 25 | 2.24 | 0.723 | (1.915, 2.565) |
| TOS | 25 | 2.52 | 0.714 | (2.195, 2.845) |

Table 6. Descriptive Statistics of Mean Opinion Scores - LOL+.

| Video | N | Mean | StdDev | 95% CI |
| --- | --- | --- | --- | --- |
| BBB | 25 | 3.32 | 0.748 | (2.970, 3.670) |
| Elephant | 25 | 3.40 | 0.866 | (3.050, 3.750) |
| Sparks | 25 | 2.80 | 0.913 | (2.450, 3.150) |
| TOS | 25 | 2.72 | 0.980 | (2.370, 3.070) |

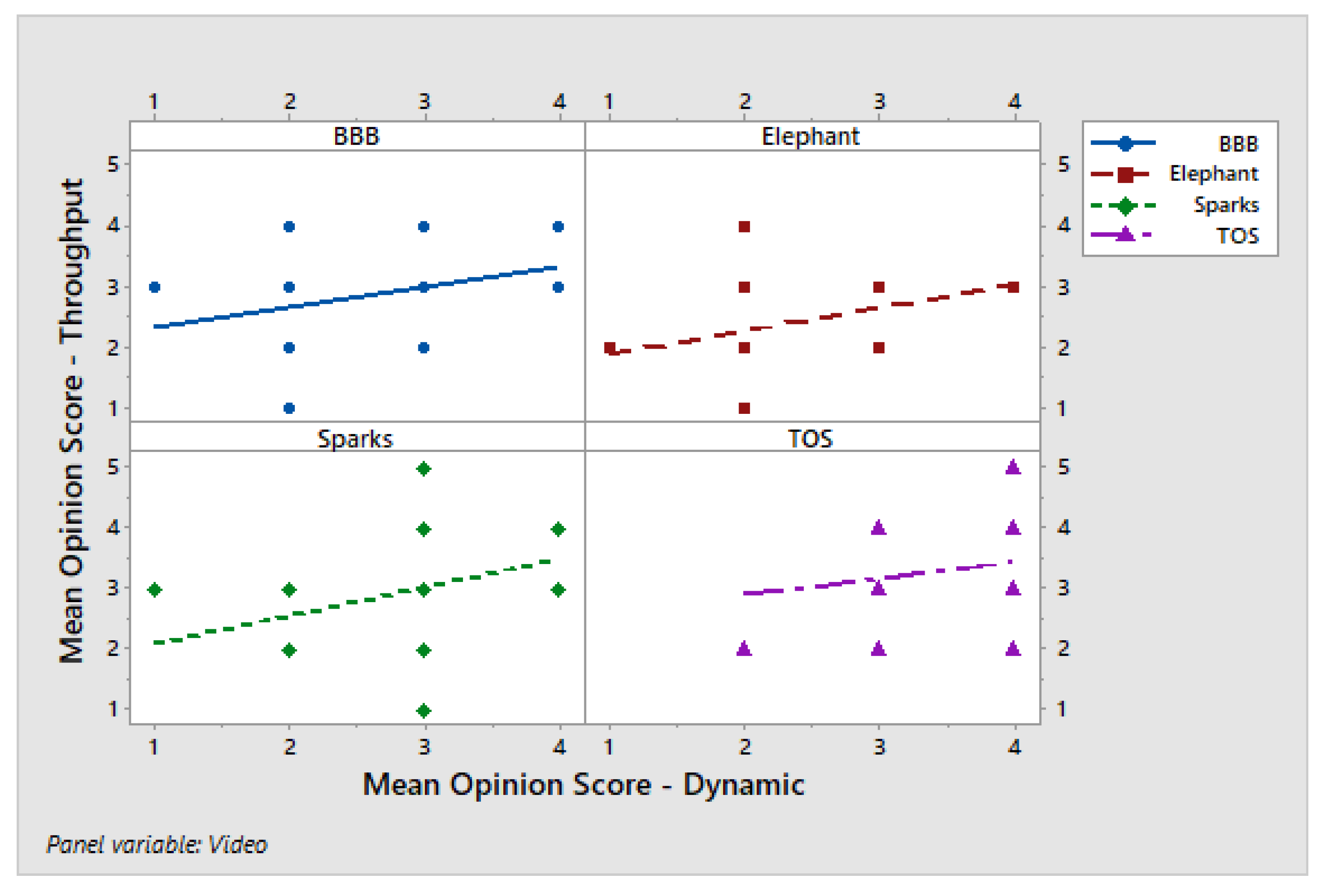

4.2.7. Regression Analysis of Low-Latency Algorithms

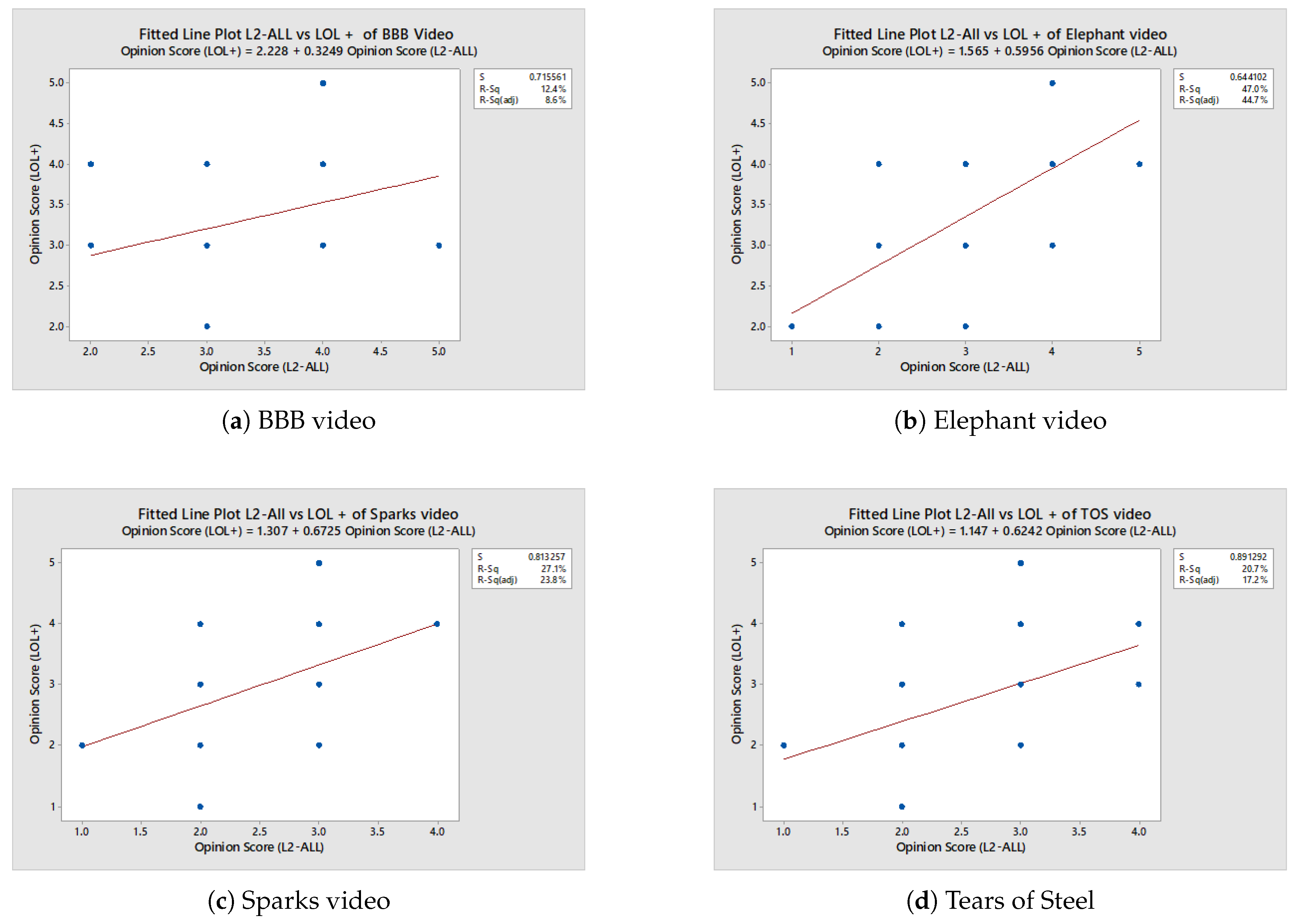

To further assess the relationship between the two low-latency algorithms, a linear regression analysis was conducted comparing the opinion scores of L2A-LL and LOL+ for all videos.

Figure 15a illustrates the regression relationship between the two algorithms, while Table 7 summarizes the corresponding statistical parameters. First, a linear regression relationship is demonstrated between the opinion scores obtained from L2A-LL and LOL+ for the Big Buck Bunny (BBB) video. The regression yielded the following model:

The equation above indicates that increases in L2A-LL scores are accompanied by only slight increases in LOL+ scores. This suggests a weak positive association that lacks strong predictive capability. The presence of outliers highlights inconsistencies in participant evaluations, reflecting the inherent subjectivity and variability of user perception. These findings suggest the need to consider additional predictors, such as content complexity, motion characteristics, and participant demographics, to explain differences in perceived QoE more accurately.
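For readers unfamiliar with the procedure, a simple linear regression of one set of opinion scores on another can be sketched with ordinary least squares as follows. The paired scores are hypothetical and chosen only to keep the arithmetic easy to follow; they are not the study's ratings, and `fit_line` is an illustrative helper name.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-deviations and sum of squared deviations of the predictor.
    s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    s_xx = sum((xi - mean_x) ** 2 for xi in x)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical paired opinion scores (NOT the study's data):
# each position holds one viewer's rating under each algorithm.
l2a_scores = [1, 2, 3, 4, 5]
lol_scores = [2, 2, 3, 4, 4]

slope, intercept = fit_line(l2a_scores, lol_scores)
print(f"LOL+ = {intercept:.2f} + {slope:.2f} * L2A-LL")
```

The slope is the expected change in the LOL+ score per one-point change in the L2A-LL score, which is how the slopes in this subsection are interpreted.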

Table 7. Statistical Analysis of Variance - BBB.

| Source | DF | SS | MS | F | P |
| --- | --- | --- | --- | --- | --- |
| Regression | 1 | 1.66 | 1.66 | 3.25 | 0.085 |
| Error | 23 | 11.77 | 0.51 | | |
| Total | 24 | 13.44 | | | |
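The entries of an analysis-of-variance table for a simple regression are linked by standard identities: each mean square is the sum of squares divided by its degrees of freedom, F is the ratio of the two mean squares, and the coefficient of determination is the regression share of the total sum of squares. The sketch below applies these identities to the BBB values in Table 7; the function name is illustrative, and the R² value is derived here rather than reported in the text.

```python
def anova_summary(ss_regression, ss_error, df_regression, df_error):
    """Return (F statistic, R squared) for a simple regression ANOVA table."""
    ms_regression = ss_regression / df_regression
    ms_error = ss_error / df_error
    f_stat = ms_regression / ms_error
    # The total sum of squares is the regression part plus the error part.
    r_squared = ss_regression / (ss_regression + ss_error)
    return f_stat, r_squared

# Sums of squares and degrees of freedom copied from Table 7 (BBB).
f_bbb, r2_bbb = anova_summary(ss_regression=1.66, ss_error=11.77,
                              df_regression=1, df_error=23)
print(f"F = {f_bbb:.2f}, R^2 = {r2_bbb:.3f}")
```

With these inputs F comes out close to the tabulated 3.25, and the regression explains only about 12% of the variance in the LOL+ scores, in line with the weak association described for BBB.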

Next, we assess the relationship between the two low-latency algorithms for the Elephant video. Figure ?? illustrates a clear upward trend, indicating that users provide consistent ratings when evaluating this video. A detailed analysis is presented in Table 8. The relationship between the two sets of scores is modeled by the regression equation:

This implies that for every one-point increase in the L2A-LL score, the LOL+ score is expected to increase by approximately 0.60 units.

Table 8. Statistical Analysis of Variance - Elephant Dream.

| Source | DF | SS | MS | F | P |
| --- | --- | --- | --- | --- | --- |
| Regression | 1 | 8.45 | 8.45 | 20.39 | 0.00 |
| Error | 23 | 9.45 | 0.41 | | |
| Total | 24 | 18.00 | | | |

Next, the Sparks video sequence was presented to users for subjective rating. The figure reveals a clear trend line that indicates a moderate to strong positive correlation. The corresponding analysis of variance is summarized in Table 9. Based on the regression analysis, the following regression equation was derived:

This implies that for every one-unit increase in the L2A-LL score, the LOL+ score is expected to increase by approximately 0.67 units. The positive slope confirms a positive association between the two sets of ratings. However, the noticeable scatter around the regression line indicates variability. This suggests that while the overall trend holds, individual respondents’ ratings often deviate from the predicted values.

Table 9. Statistical Analysis of Variance - Sparks.

| Source | DF | SS | MS | F | P |
| --- | --- | --- | --- | --- | --- |
| Regression | 1 | 8.45 | 8.45 | 20.39 | 0.00 |
| Error | 23 | 9.45 | 0.41 | | |
| Total | 24 | 18.00 | | | |

Finally, we assess the relationship for the TOS video. As shown in Figure ??, the trend line indicates a moderate to strong positive relationship in users’ opinion scores for this content. The detailed analysis is summarized in Table ??. Based on the regression analysis, the following equation was derived:

The regression equation shows a positive slope of 0.6242. This indicates that for each one-unit increase in the L2A-LL score, the LOL+ score is expected to increase by approximately 0.62 units, reflecting a moderate positive association in respondent opinions across the two algorithms. For the TOS content, the relationship between the L2A-LL and LOL+ scores is both moderate and statistically significant: participants tended to rate the video in a similar manner under both algorithms. However, the strength of agreement was lower than that observed for the Elephant content, with noticeable individual differences remaining. This variability implies that respondent ratings may have been influenced by contextual factors or subjective interpretation, rather than by the adaptation algorithm alone.

Table 10. Statistical Analysis of Variance - Tears of Steel.

| Source | DF | SS | MS | F | P |
| --- | --- | --- | --- | --- | --- |
| Regression | 1 | 4.76 | 4.76 | 6.00 | 0.022 |
| Error | 23 | 18.27 | 0.79 | | |
| Total | 24 | 23.04 | | | |
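The slopes quoted for the Elephant and TOS sequences can be cross-checked against the tabulated values. In a simple linear regression the regression sum of squares equals b² · S_xx, where S_xx = (N − 1) · s_x² is the sum of squared deviations of the predictor, so the magnitude of the slope b follows from the ANOVA table and the predictor's standard deviation. The sketch below assumes that L2A-LL is the predictor and that the StdDev column of Table 5 holds sample standard deviations; the helper name is illustrative.

```python
import math

def slope_magnitude(ss_regression, predictor_std, n):
    """Recover |slope| from SS_regression = slope**2 * S_xx (the sign is not recoverable)."""
    s_xx = (n - 1) * predictor_std ** 2
    return math.sqrt(ss_regression / s_xx)

N = 25  # ratings per video

# SS_regression from Tables 8 and 10; L2A-LL standard deviations from Table 5.
slope_elephant = slope_magnitude(ss_regression=8.45, predictor_std=0.997, n=N)
slope_tos = slope_magnitude(ss_regression=4.76, predictor_std=0.714, n=N)

print(f"Elephant: |slope| = {slope_elephant:.3f}")
print(f"TOS:      |slope| = {slope_tos:.3f}")
```

Under these assumptions the recovered magnitudes agree, to rounding, with the slopes of approximately 0.60 (Elephant) and 0.6242 (TOS) stated in the text.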