2.2. Development of a Semantic Facial Image Segmentation Model

Using the author's previous work on the application of neural networks to image segmentation [35, 36] as a prototype, it was determined that, in the basic case, the model of semantic segmentation of a facial image during biometric authentication at critical infrastructure facilities ($M_{SS}$) can be described by expressions of the form:

$$M_{SS}:\; P = F(X), \quad X = \{x_{i,j,c}\} \in \mathbb{R}^{H \times W \times C}, \quad P = \{p_{i,j,k}\} \in \mathbb{R}^{H \times W \times K}, \qquad (1)$$

where $X$ is the input face image to be segmented; $P$ is the probability map of pixel classes; $F$ is the segmentation function ($F: \mathbb{R}^{H \times W \times C} \to \mathbb{R}^{H \times W \times K}$); $\mathbb{R}$ is the set of real numbers; $H$ and $W$ are the height and width of the image; $C$ is the number of color channels in the image; $K$ is the number of segmentation classes; $x_{i,j,c}$ is the intensity of the $c$-th color channel of the pixel with coordinates $(i, j)$; and $p_{i,j,k}$ is the probability that the pixel with coordinates $(i, j)$ belongs to the $k$-th class.

Note that in common image representation formats, the brightness values of each color channel are stored as integers. For example, in the RGB format the brightness of each channel of a pixel is commonly recorded as an 8-bit number. This means that $x_{i,j,c} \in \mathbb{Z}$, where $\mathbb{Z}$ is the set of integers, and the brightness of each color channel of an arbitrary pixel is an integer from 0 to 255. In practice, however, the standard procedure for preparing an image before it is fed to a neural network involves scaling the brightness of each color channel to the range $[0, 1]$ by dividing the initial brightness value of each pixel by the maximum possible brightness value, for example, by 255 for 8-bit images. Therefore, in expression (1), $x_{i,j,c} \in [0, 1]$.
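A minimal sketch of this scaling step, assuming an 8-bit image stored as nested Python lists of shape H×W×C (the helper name `normalize_8bit` is hypothetical):

```python
def normalize_8bit(image, max_value=255):
    """Scale integer channel intensities x_{i,j,c} in [0, max_value] to [0, 1]."""
    return [[[channel / max_value for channel in pixel] for pixel in row]
            for row in image]

# A 1x2 RGB image: a black pixel and a pixel with mixed intensities
img = [[[0, 0, 0], [255, 51, 102]]]
print(normalize_8bit(img))  # [[[0.0, 0.0, 0.0], [1.0, 0.2, 0.4]]]
```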

Considering that, according to [25, 35, 37], a neural network model with an encoder-decoder architecture is advisable for semantic segmentation, expression (1) can be modified as follows:

$$P = D(E(X)),$$

where $E(\cdot)$ is the function that describes the result of applying the neural network encoder, and $D(\cdot)$ is the function that describes the result of applying the neural network decoder.

Using the results of [37, 38, 39], it was determined that in the basic case the functioning of the neural network encoder and the neural network decoder can be described by expressions of the form (5) and (6), respectively. Note that, according to [27, 30, 40, 41, 42], it is advisable to use neural network models such as VGG, ResNet, MobileNet, EfficientNet and HRNet as the basis of the encoder and decoder. The most thoroughly tested encoders and decoders are considered to be those based on VGG models, which are used in U-Net-like semantic segmentation models, possibly with some subsampling layers omitted and with a variable number of convolution layers.

$$Z = E(X), \quad Z \in \mathbb{R}^{h \times w \times d}, \qquad (5)$$

$$P = D(Z), \qquad (6)$$

where $Z$ is the feature tensor of the encoded image; $h \times w$ is the size of the convolution/subsampling maps at the output of the encoder; and $d$ is the number of convolution/subsampling maps at the output of the encoder (the depth of the feature space).

The functioning of the encoder (5) is detailed by expressions (7, 8), and that of the decoder (6) by expressions (9, 10).

where $A_c^{(l)}$ is the two-dimensional feature matrix at the output of the $c$-th convolution map in the $l$-th convolutional layer ($l = 1, \dots, L$); $L$ is the number of convolution layers in the encoder; $S_s$ is the two-dimensional feature matrix at the output of the $s$-th subsampling map; $W_c^{(1)}$ are the weights corresponding to the $c$-th convolution map in the first convolutional layer; $\mathrm{pool}(\cdot)$ is the subsampling operation; $\varphi^{(1)}(\cdot)$ is the activation function in the first convolutional layer of the encoder.
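To make one encoder level of (7, 8) concrete, the sketch below processes a single-channel feature matrix in plain Python: a valid 2D convolution (implemented as cross-correlation, as most deep learning frameworks do), a ReLU activation, and 2×2 max pooling as the subsampling operation. ReLU and max pooling are assumptions chosen for illustration; the text leaves the activation function and the subsampling operation generic, and the function names are hypothetical.

```python
def conv2d_valid(a, kernel, bias=0.0):
    """Valid 2D cross-correlation of matrix a with kernel (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(a) - kh + 1, len(a[0]) - kw + 1
    return [[sum(a[i + m][j + n] * kernel[m][n]
                 for m in range(kh) for n in range(kw)) + bias
             for j in range(out_w)]
            for i in range(out_h)]

def relu(a):
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in a]

def max_pool2d(a, size=2):
    """Non-overlapping max pooling (subsampling) with a size x size window."""
    return [[max(a[i + m][j + n] for m in range(size) for n in range(size))
             for j in range(0, len(a[0]) - size + 1, size)]
            for i in range(0, len(a) - size + 1, size)]

def encoder_step(a, kernel, bias=0.0):
    """One encoder level: convolution -> activation -> subsampling."""
    return max_pool2d(relu(conv2d_valid(a, kernel, bias)))

x = [[1, 2, 3, 0, 1],
     [0, 1, 2, 3, 1],
     [1, 0, 1, 2, 2],
     [2, 1, 0, 1, 3],
     [0, 2, 1, 0, 1]]
k = [[1, 0], [0, -1]]  # diagonal-difference kernel
print(encoder_step(x, k))  # [[0.0, 1.0], [0.0, 0.0]]
```

A 5×5 input shrinks to 4×4 after the valid convolution and to 2×2 after pooling, which is the step-by-step spatial compression that the decoder later has to undo.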

where $B_c^{(n)}$ is the two-dimensional feature matrix corresponding to the $c$-th convolution map in the $n$-th convolutional layer of the decoder ($n = 1, \dots, N_D$); $N_D$ is the number of convolution layers in the decoder; $\oplus$ denotes concatenation (skip connection) with the feature maps from the corresponding encoder level.

In this case, according to [43, 44], upsampling is a procedure that increases the spatial resolution of the feature tensor. It is performed in the neural network decoder to restore the original image size step by step after the downsampling, i.e. the compression that occurs as the input image passes through the encoder. The upsampling procedure can be described as follows:

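where $U$ is the upsampling result; $h$ and $w$ are the height and width of the input feature tensor $Z$; $k_h$ and $k_w$ are the height and width of the upsampling kernel $G$; $s$ is the upsampling scale factor; $\lfloor \cdot \rfloor$ is the floor function (the largest integer not exceeding its argument); $G(m, n)$ is the upsampling kernel value at position $(m, n)$.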

The values of $G(m, n)$ depend on the interpolation method used and are determined by expressions of the form:

where $\delta$ is the Kronecker delta function; $a$ is a fixed parameter of the bicubic kernel; $\theta(m, n)$ is a function that defines $G(m, n)$ as a result of training the neural network model.

Expression (12) is used for nearest-neighbor interpolation, expression (13) for two-dimensional (bilinear) interpolation, expressions (14, 15) for bicubic interpolation, and expression (16) when the upsampling kernel is determined by training the neural network model.
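The nearest-neighbor case (12) and the bilinear case (13) can be sketched for a single feature map and an integer scale factor $s$ as follows. This is an illustrative implementation, not the paper's exact kernel formulation. In particular, the bilinear version assumes a corner-aligned sampling grid, which is only one of several conventions used in practice; the function names are hypothetical.

```python
import math

def upsample_nearest(z, s):
    """Nearest-neighbour upsampling by an integer factor s: U[i][j] = z[floor(i/s)][floor(j/s)]."""
    h, w = len(z), len(z[0])
    return [[z[i // s][j // s] for j in range(w * s)] for i in range(h * s)]

def upsample_bilinear(z, s):
    """Bilinear upsampling by an integer factor s on a corner-aligned sampling grid."""
    h, w = len(z), len(z[0])
    out_h, out_w = h * s, w * s
    out = []
    for i in range(out_h):
        # fractional source row; the corners of the input and output grids coincide
        y = i * (h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = math.floor(y)
        y1, dy = min(y0 + 1, h - 1), y - y0
        row = []
        for j in range(out_w):
            x = j * (w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = math.floor(x)
            x1, dx = min(x0 + 1, w - 1), x - x0
            top = z[y0][x0] * (1 - dx) + z[y0][x1] * dx
            bottom = z[y1][x0] * (1 - dx) + z[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out

print(upsample_nearest([[1, 2], [3, 4]], 2))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```

Nearest-neighbor upsampling only copies values, while bilinear upsampling produces intermediate values between them. A learned kernel (16) would replace these fixed weights with trainable ones, as a transposed convolution does.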

Integrating expressions (5-16), taking into account the functioning of a decoder based on a convolutional neural network, gives the resulting expression for the pixel class probability map:

where $W^{(out)}$ are the weight coefficients of the last convolutional layer of the neural network decoder; $\mathrm{Up}(\cdot)$ is a function describing the upsampling procedure in the neural network decoder.

In the most common case, when a Softmax activation function is used in the neurons of the decoder output layer, the probability that the pixel with coordinates $(i, j)$ belongs to the $k$-th class can be calculated as follows:

$$p_{i,j,k} = \frac{\exp(z_{i,j,k})}{\sum_{m=1}^{K} \exp(z_{i,j,m})},$$

where $z_{i,j,k}$ is the decoder output signal (logit), a non-normalized score of the pixel with coordinates $(i, j)$ belonging to the $k$-th class.

We also note that recognizing the face of a staff member of a critical infrastructure facility requires the result of semantic segmentation to be obtained not only as a probability map of pixel classes, but also as a segmented face image that shows the natural boundaries of the face, the boundaries of the eyes and the boundaries of interference. Taking into account [35, 38], such a segmented image can be obtained by assigning each pixel to the class with the highest probability. That is:

$$c_{i,j} = \arg\max_{k \in \{1, \dots, K\}} p_{i,j,k}, \qquad S = \{c_{i,j}\},$$

where $c_{i,j}$ is the class to which the image pixel with coordinates $(i, j)$ belongs; $p_{i,j,k}$ is the probability of assigning the pixel with coordinates $(i, j)$ to the $k$-th class; $S$ is the segmented image.
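The Softmax step and the arg-max assignment of each pixel to its most probable class can be combined in a short sketch. It uses plain Python, with nested lists standing in for the $H \times W \times K$ tensors; the function names and the example class meanings are hypothetical. Class indices here are 0-based, whereas the text numbers classes from 1 to $K$.

```python
import math

def softmax(logits):
    """Convert a list of K logits z_{i,j,k} into probabilities p_{i,j,k}."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def segment(logit_map):
    """Return (probability map, label map) for an H x W x K nested list of logits."""
    prob_map = [[softmax(px) for px in row] for row in logit_map]
    labels = [[max(range(len(p)), key=p.__getitem__) for p in row]
              for row in prob_map]
    return prob_map, labels

# 1 x 2 image, K = 3 classes (e.g. background, face, eyes)
logits = [[[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]]]
probs, labels = segment(logits)
print(labels)  # [[0, 2]]
```

Subtracting the maximum logit does not change the Softmax result but prevents overflow in `math.exp` for large logits.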

Taking into account the results of [42, 43, 44], an analysis of the developed mathematical support (1-20) of the neural network model for semantic segmentation of the face image of a critical infrastructure facility staff member, built on a U-Net-like architecture, shows that the design parameters that allow the model to be adapted to the expected application conditions include: the number of convolution layers, the number of convolution maps, and the size and stride of the convolution kernel in each encoder and decoder layer; the number and placement of the encoder subsampling layers; the number and placement of the upsampling layers; the kernel size, scale factor and interpolation method of each upsampling layer; the number of skip connections, together with the encoder tap point and the decoder insertion point of each skip connection; the number of aggregation levels of skip-connection features; and the skip-connection aggregation variant. When alternative architectures (ResNet, VGG, MobileNet, EfficientNet, HRNet) are used as the basis of the semantic segmentation model, the list of design parameters is additionally extended with the encoder type, the parameters of multi-scale context modules, attention blocks, strategies for combining multi-level features and semantic post-processing methods. For example, for an encoder and decoder that use the Residual blocks characteristic of ResNet, whose functioning is described by expressions (21, 22), the design parameters should include the number of stages, the number of blocks in each stage, the convolution parameters of each block and the parameters of the projecting convolution.

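where $R_j^{(1)}$ is the $j$-th Residual block at the first stage; $X^{(1)}$ is the input tensor of the first stage; $N_{st}$ is the number of stages; $\mathcal{F}(\cdot)$ is the sequence of convolution/upsampling operations in the Residual block; $\Theta$ is the set of parameters of the convolution/upsampling layers; $\Theta_p$ is the set of convolution/upsampling parameters used to bring the dimension of the block input to the dimension of the block output.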

In addition, based on [45, 46, 47], the list of design parameters includes parameters that characterize the training procedure: the optimizer type (for example, Adam or SGD), the mini-batch size, the learning rate, the learning rate scheduling strategy, the stopping conditions (early stopping, number of epochs), regularization methods (dropout, batch normalization, etc.) and the type of loss function. Using the data of [31, 35, 48], it was determined that the following loss functions are typically used in neural network models of semantic segmentation: Categorical Cross-Entropy, Binary Cross-Entropy, Dice Loss, Jaccard Loss, Focal Loss and Tversky Loss. Categorical Cross-Entropy is defined by expression (23), Binary Cross-Entropy by (24), Dice Loss by (25, 26), Jaccard Loss by (27, 28), Focal Loss by (29) and Tversky Loss by (30, 31).

where $N$ is the number of pixels in the image; $K$ is the number of classes; $\hat{Y}$ is the resulting (predicted) segmentation mask; $Y$ is the true segmentation mask; $TP$, $FP$ and $FN$ are the sets of pixels that fall into the true positive, false positive and false negative categories; $p_{n,k}$ is the predicted probability that pixel $n$ belongs to class $k$; $y_{n,k}$ is the true label; $\varepsilon$ is a small coefficient used to avoid division by zero; $\alpha_k$ is the weighting factor for class $k$; $\gamma$ is the focusing parameter; $\alpha$ and $\beta$ are the weights for pixels belonging to the sets $FP$ and $FN$, respectively.
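Because expressions (23-31) are not reproduced in this text, the sketch below uses the widely used textbook forms of these losses. They may differ in detail from the paper's versions, for example in where $\varepsilon$ is placed or in averaging the overlap losses over classes, which is an assumption here. Soft counts $TP = \sum p y$, $FP = \sum p(1-y)$ and $FN = \sum (1-p)y$ are computed from probabilities. Dice and Jaccard are written as the special cases $\alpha = \beta = 0.5$ and $\alpha = \beta = 1$ of the Tversky index, which holds up to the placement of $\varepsilon$. No default values are given for $\gamma$, $\alpha$ and $\beta$.

```python
import math

EPS = 1e-7  # smoothing / clipping constant (epsilon in the text)

def _clip(p):
    return min(max(p, EPS), 1.0 - EPS)

def categorical_ce(p, y):
    """p, y: N x K lists of predicted probabilities and one-hot labels."""
    n = len(p)
    return -sum(y[i][k] * math.log(_clip(p[i][k]))
                for i in range(n) for k in range(len(p[i]))) / n

def binary_ce(p, y):
    """p, y: length-N lists for a single foreground class."""
    return -sum(t * math.log(_clip(q)) + (1 - t) * math.log(1 - _clip(q))
                for q, t in zip(p, y)) / len(p)

def focal_loss(p, y, class_weights, gamma):
    """Focal loss; class_weights[k] plays the role of alpha_k, gamma is the focusing parameter."""
    n = len(p)
    return -sum(class_weights[k] * (1 - _clip(p[i][k])) ** gamma
                * y[i][k] * math.log(_clip(p[i][k]))
                for i in range(n) for k in range(len(p[i]))) / n

def tversky_loss(p, y, alpha, beta):
    """1 - Tversky index averaged over classes; alpha weights FP, beta weights FN."""
    n_classes = len(p[0])
    total = 0.0
    for k in range(n_classes):
        tp = sum(pi[k] * yi[k] for pi, yi in zip(p, y))
        fp = sum(pi[k] * (1 - yi[k]) for pi, yi in zip(p, y))
        fn = sum((1 - pi[k]) * yi[k] for pi, yi in zip(p, y))
        total += (tp + EPS) / (tp + alpha * fp + beta * fn + EPS)
    return 1.0 - total / n_classes

def dice_loss(p, y):
    return tversky_loss(p, y, 0.5, 0.5)   # alpha = beta = 0.5 gives the Dice coefficient

def jaccard_loss(p, y):
    return tversky_loss(p, y, 1.0, 1.0)   # alpha = beta = 1 gives IoU (Jaccard index)
```

With $\gamma = 0$ and unit class weights, the focal loss reduces to categorical cross-entropy. This is a quick sanity check when tuning $\gamma$.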

According to [48], the remaining loss function parameters are fixed at the values given in that work, and the class weight $\alpha_k$ is set inversely proportional to the fraction of pixels of class $k$ in the training data set. Since building neural network tools for semantic segmentation relies on specific indicators that reflect the features of the training sample, the training process and the segmentation results, the mathematical support of the semantic segmentation model includes the training sample imbalance coefficient, the variance of the loss function gradients, the coefficient of deviation of the boundaries of the selected object from the true boundaries, the coefficient of the relative size of the object mask, the average value of the standard deviation of brightness and the range RV of the average brightness values of the images in the training sample. These coefficients are calculated using expressions (32-40). The training sample imbalance coefficient is calculated as follows:

where $N_k$ is the number of pixels of class $k$ in the whole sample.

Note that . In case when , the sample is considered balanced, and the case corresponds to a significant imbalance in the training sample.
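Expression (32) is not reproduced here, so the sketch below assumes one common form of the imbalance coefficient. It is the ratio of the pixel count of the rarest class to that of the most frequent class, taking values in $(0, 1]$, with 1 for a perfectly balanced sample. This reading is consistent with rule R1, where a coefficient below 0.2 indicates high imbalance, but it is an assumption. The function name is hypothetical.

```python
from collections import Counter

def imbalance_coefficient(label_maps):
    """Hypothetical form of (32): min_k N_k / max_k N_k over all pixels of the sample.

    label_maps: iterable of H x W nested lists of integer class labels.
    Classes that never occur in the sample are not counted.
    """
    counts = Counter(label for lm in label_maps for row in lm for label in row)
    return min(counts.values()) / max(counts.values())

sample = [[[0, 0, 0, 1]], [[0, 0, 0, 1]]]  # two 1x4 label maps
print(imbalance_coefficient(sample))       # 6 background vs 2 foreground pixels
```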

The variance of the loss function gradients is calculated as the relative standard deviation (coefficient of variation) of the loss function gradient $g$ computed over several consecutive epochs:

where $\sigma_g$ and $\mu_g$ are the standard deviation and the mean value of $g$ over $T$ training epochs; $g = \nabla L$ is the gradient of the loss function $L$.
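The relative standard deviation $\sigma_g / \mu_g$ can be sketched as follows. The text does not say how the gradient vector is reduced to one number per epoch, nor whether the population or the sample standard deviation is used. This sketch assumes the L2 norm of a per-epoch gradient vector and the population standard deviation. The helper names are hypothetical.

```python
import math
import statistics

def grad_norm(grad):
    """L2 norm of a flattened gradient vector."""
    return math.sqrt(sum(g * g for g in grad))

def gradient_cv(norms):
    """Relative standard deviation sigma/mu of per-epoch gradient norms over T epochs."""
    return statistics.pstdev(norms) / statistics.fmean(norms)

per_epoch_norms = [grad_norm(g) for g in ([3.0, 4.0], [6.0, 8.0], [3.0, 4.0])]
print(gradient_cv(per_epoch_norms))
```

Rules R4.2 and R6.2 below compare this value with the thresholds 0.3 and 0.2.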

The coefficient of deviation of the boundaries of the selected object from the true boundaries is calculated using the expressions:

where $C_k^{pred}$ is the set of contour pixels of the predicted mask for the $k$-th object; $C_k^{true}$ is the set of contour pixels of the true mask for the $k$-th object; $N_C$ is the total number of pixels in the predicted contours of all objects (normalization factor); $d(q, C_k^{true})$ is the minimum Euclidean distance from pixel $q$ to the nearest pixel of the true contour $C_k^{true}$.
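Following the definitions above, the coefficient averages the distance from every predicted contour pixel to the nearest pixel of the true contour. The sketch below assumes that a contour pixel is a mask pixel with at least one 4-neighbor outside the mask, which is one of several possible contour definitions, and that every true contour is non-empty. It uses a brute-force nearest-neighbor search. For full-size images, a Euclidean distance transform such as `scipy.ndimage.distance_transform_edt` would be faster. The function names are hypothetical.

```python
import math

def contour(mask):
    """Pixels of a binary mask that have at least one 4-neighbour outside the mask."""
    h, w = len(mask), len(mask[0])
    pts = set()
    for i in range(h):
        for j in range(w):
            if not mask[i][j]:
                continue
            for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ni, nj = i + di, j + dj
                if not (0 <= ni < h and 0 <= nj < w) or not mask[ni][nj]:
                    pts.add((i, j))
                    break
    return pts

def boundary_deviation(pred_masks, true_masks):
    """Mean distance from predicted contour pixels to the nearest true contour pixel.

    pred_masks, true_masks: lists of binary masks, one pair per object k.
    """
    total, n_pixels = 0.0, 0
    for pm, tm in zip(pred_masks, true_masks):
        cp, ct = contour(pm), contour(tm)
        for q in cp:
            total += min(math.dist(q, t) for t in ct)
        n_pixels += len(cp)
    return total / n_pixels

# A one-pixel object predicted one column to the right of its true position
print(boundary_deviation([[[0, 1], [0, 0]]], [[[1, 0], [0, 0]]]))  # 1.0
```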

The coefficient of the relative size of the object mask is calculated as:

where $M$ is the number of objects in the sample; $|m_{i,j}|$ is the number of pixels in the mask of the $j$-th object in the $i$-th image; $|I_i|$ is the total number of pixels in the $i$-th image; $M_i$ is the number of objects in the $i$-th image.
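Assuming the coefficient is the mean, over all $M$ objects in the sample, of the ratio $|m_{i,j}| / |I_i|$, it can be computed directly from binary object masks. This averaging form is inferred from the symbol definitions above. The function name is hypothetical.

```python
def relative_mask_size(samples):
    """samples: list of images, each given as a list of binary object masks (H x W).

    Returns the mean, over all objects in the sample, of |mask| / |image|.
    """
    ratios = []
    for masks in samples:
        for mask in masks:
            image_pixels = len(mask) * len(mask[0])
            object_pixels = sum(sum(row) for row in mask)
            ratios.append(object_pixels / image_pixels)
    return sum(ratios) / len(ratios)

# Image 1: one object covering 1 of 4 pixels; image 2: one object covering the whole image
print(relative_mask_size([[[[1, 0], [0, 0]]], [[[1, 1], [1, 1]]]]))  # 0.625
```

In rule R1, an object counts as small if this ratio is below 0.1.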

The average value of the standard deviation of brightness $\bar{\sigma}_V$ and the range RV of the average brightness values of the HSV images in the training sample are calculated by expressions (37-40). Here the brightness (channel V) is an integer from 0 to 255.

where $N_{tr}$ is the number of examples (images) in the training sample; $\sigma_n$ is the standard deviation of the brightness values in the $n$-th image; $V_n$ is the array of brightness channel values in the $n$-th image; $\mu_n$ is the average brightness value of the $n$-th image; $W$ and $H$ are the width and height of the image.
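A sketch of these two statistics, assuming that the per-image standard deviation is normalized by $W \cdot H$ (population form) and that RV is the difference between the largest and the smallest per-image mean brightness. The function name is hypothetical.

```python
import statistics

def brightness_stats(v_channels):
    """v_channels: list of H x W nested lists of V-channel values (0..255).

    Returns (mean of per-image standard deviations, range RV of per-image means).
    """
    stds, means = [], []
    for v in v_channels:
        values = [x for row in v for x in row]
        means.append(statistics.fmean(values))
        stds.append(statistics.pstdev(values))
    return statistics.fmean(stds), max(means) - min(means)

dark = [[10, 20], [30, 40]]
bright = [[200, 210], [220, 230]]
print(brightness_stats([dark, bright]))  # equal spreads, means 25 and 215
```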

In conclusion, expressions (1-40) form the basis of the mathematical apparatus of the semantic segmentation model. Because the model relies on an encoder-decoder neural network architecture whose design parameters can be adapted to the application conditions, it makes it possible to develop an effective method for determining the architectural parameters of the neural network model for semantic segmentation of a face image during biometric authentication at critical infrastructure facilities.

2.3. Formation of Rules for Determining Architectural Parameters of a Neural Network Model of Semantic Segmentation

Since, according to [35, 36, 39], it is advisable to define the mechanism for forming the set of admissible architectures and training parameters outside the semantic segmentation model, the next step of the research is to form a set of rules (R1-R8) that regulate the relevant aspects of the development of neural network tools.

R1. Rule for forming the set of admissible basic architectures

The set of basic architectures is formed by sequentially analyzing the key conditions of the semantic image segmentation problem, namely computational constraints, allowable segmentation time, image characteristics, class imbalance, variability of conditions and accuracy requirements:

- In the case of limited segmentation time and computational resources (allowable segmentation time of 100 ms, available memory for storing model parameters below 30 MB) - MobileNet, EfficientNet (B0-B1).

- For images in RGB format up to 256×256 - VGG (U-Net, U-Net++, or Attention U-Net++), MobileNet.

- If the number of segmentation classes does not exceed 3 - MobileNet, VGG (U-Net), and otherwise - VGG (U-Net++ or Attention U-Net++), ResNet, HRNet.

- If small objects must be segmented (an object occupies less than 0.1 of the input image area) and the training sample shows a high imbalance of the object examples to be segmented (imbalance coefficient below 0.2) - VGG (U-Net++ or Attention U-Net++), HRNet.

- With high variability of lighting and angle of video recording of the face image after preprocessing (the ranges of lighting and angle of video recording exceed the threshold values defined in [111], in the first approximation 0.5) - VGG (U-Net++ or Attention U-Net++), ResNet, EfficientNet, HRNet.

- If it is necessary to achieve high accuracy (IoU > 0.85) - VGG (U-Net++ or Attention U-Net++), ResNet, HRNet.

- If the total number of training examples , and the allowable number of training epochs - priority is given to models that achieve fast convergence on small samples (MobileNet, EfficientNet, ResNet-18, VGG (U-Net)).

- If - priority is given to models that are characterized by high initial convergence and stability of loss dynamics (ResNet-34, EfficientNet, VGG (U-Net++)).

- In cases where none of the basic architectures satisfies all the requirements, the use of MobileNet is envisaged as a compromise model that provides the optimal balance between processing speed and segmentation quality.

Using the results of [22, 38, 42], it was determined that, depending on the number of weight coefficients, it is appropriate to distinguish three complexity classes of neural network architectures: low, medium and high complexity, delimited by threshold values of the number of weight coefficients. In particular, low-complexity models include MobileNet and EfficientNet-B0; medium-complexity models include ResNet-18, ResNet-34, U-Net (VGG) and Attention U-Net; and high-complexity models include ResNet-101, HRNet, U-Net++ and Attention U-Net++.

R2. Training data augmentation rule.

The rule defines the mechanism for augmenting the training data, which is used to increase the generalization ability of the model. In the basic version, the following augmentation mechanisms are used:

- Random Crop, which randomly crops the image to 90% of its original size, allowing the model to focus better on local areas of the face;

- Gaussian Noise with a standard deviation σ < 0.01, which simulates the noise typical of camera sensors and increases robustness to interference in the input image.

In addition, if significant variability of brightness characteristics is detected in the training sample, Random Brightness/Contrast augmentation is applied: a random change of brightness and contrast with an amplitude within ±15%. Significant variation is considered present if the average value of the standard deviation of brightness or the range RV, calculated by expressions (37, 38), exceeds its threshold value.
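The augmentation rule can be sketched on a [0, 1]-scaled H×W×C image as follows. Several details are assumptions. "90% of its original size" is read as 90% of each side. The noise level 0.005 is just one value satisfying σ < 0.01. Brightness is applied as a multiplicative factor and contrast as scaling around the image mean. Results are clipped to [0, 1]. All function names are hypothetical, and a production pipeline would normally use an image augmentation library instead.

```python
import random

def random_crop(img, fraction=0.9, rng=random):
    """Randomly crop an H x W x C nested-list image to `fraction` of its height and width."""
    h, w = len(img), len(img[0])
    ch, cw = max(1, int(h * fraction)), max(1, int(w * fraction))
    top, left = rng.randint(0, h - ch), rng.randint(0, w - cw)
    return [row[left:left + cw] for row in img[top:top + ch]]

def gaussian_noise(img, sigma=0.005, rng=random):
    """Add zero-mean Gaussian noise (sigma < 0.01 per rule R2), then clip to [0, 1]."""
    return [[[min(1.0, max(0.0, c + rng.gauss(0.0, sigma))) for c in px] for px in row]
            for row in img]

def brightness_contrast(img, amplitude=0.15, rng=random):
    """Random brightness/contrast change within +/- amplitude (15% in rule R2)."""
    b = 1.0 + rng.uniform(-amplitude, amplitude)  # multiplicative brightness factor
    c = 1.0 + rng.uniform(-amplitude, amplitude)  # contrast factor around the mean
    flat = [v for row in img for px in row for v in px]
    mean = sum(flat) / len(flat)
    return [[[min(1.0, max(0.0, ((v - mean) * c + mean) * b)) for v in px] for px in row]
            for row in img]

def augment(img, significant_brightness_variation, rng=random):
    """Basic R2 pipeline; brightness/contrast only when the sample shows significant variation."""
    out = gaussian_noise(random_crop(img, rng=rng), rng=rng)
    if significant_brightness_variation:
        out = brightness_contrast(out, rng=rng)
    return out

image = [[[0.5, 0.5, 0.5] for _ in range(10)] for _ in range(10)]
augmented = augment(image, True, rng=random.Random(0))
print(len(augmented), len(augmented[0]))  # 9 9
```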

R3. Loss function selection rule

The loss function is selected from the list defined by expressions (23-31), taking into account the number of segmentation classes $K$, the training sample imbalance coefficient, the coefficient of deviation of the boundaries of the selected object from the true boundaries and the coefficient of the relative size of the object mask, which are calculated by expressions (32, 34-36).

- At . If Binary Cross-Entropy is used, if and - Focal Loss, and otherwise Dice Loss.

- At . If and - Jaccard Loss, and if - Dice Loss. If - Tversky Loss.

- At . If , Categorical Cross-Entropy is used; if or , Tversky Loss; in other cases, Dice Loss.

- With insufficient information or conflicting requirements - Dice Loss.

R4. Optimizer selection rule.

The rule defines the algorithm for optimizing the neural network weight coefficients during training so as to ensure stable convergence of the loss function, maintain stable parameter-update dynamics throughout training, and achieve high segmentation quality under constraints on the training sample size and model complexity. In addition, the dynamics of the validation loss is analyzed during training to confirm the effectiveness of the selected algorithm or to decide on its correction. Accordingly, rule R4 covers two levels of decision-making: the initial selection of the optimization algorithm (R4.1) and its adaptation during training (R4.2).

R4.1. Initial choice of optimization algorithm

- For models of medium and high complexity (in particular ResNet, HRNet, U-Net++ and Attention U-Net) trained on limited samples, the Adam optimizer is advisable, since it provides adaptive gradient scaling and promotes stable convergence even with noisy or non-uniform gradients.

- For the same models of medium and high complexity, at the SGD optimizer with momentum (momentum=0.9) is used. This approach ensures stable convergence of the loss function and, provided there is a sufficient amount of data, allows achieving higher segmentation accuracy on validation data compared to adaptive methods.

- For models based on MobileNet and EfficientNet-B0 and at AdamW is used as a compromise solution between convergence speed and control over regularization.

R4.2. Optimization algorithm adaptation

- If a high dispersion of the loss function gradients or unstable loss dynamics is observed during training, the optimizer is switched to Adam. The gradient dispersion is considered high if the value calculated by expression (33) exceeds 0.3 for at least 5 consecutive training epochs. The loss dynamics is considered unstable if the total decrease of the loss over 5 consecutive epochs is less than 1% or the amplitude of its oscillations exceeds 20% of its average value over this interval.
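Rule R4.2 can be sketched as two checks over the most recent epochs. The thresholds (0.3, 5 epochs, 1%, 20%) come from the rule. The measure of "oscillation amplitude" is an assumption: the largest deviation of the loss from the straight line joining the first and last values of the window, so that a steady decrease is not mistaken for an oscillation. The function names are hypothetical.

```python
def high_gradient_dispersion(cv_history, threshold=0.3, window=5):
    """True if the gradient CV from expression (33) exceeded threshold in each of the last `window` epochs."""
    recent = cv_history[-window:]
    return len(recent) == window and all(v > threshold for v in recent)

def unstable_loss(loss_history, window=5, min_drop=0.01, max_amplitude=0.2):
    """True if, over the last `window` epochs, the loss fell by less than 1% or
    oscillated around its trend by more than 20% of its mean value."""
    recent = loss_history[-window:]
    if len(recent) < window:
        return False
    relative_drop = (recent[0] - recent[-1]) / recent[0]
    # Assumed oscillation measure: largest deviation from the straight line
    # joining the first and last loss values of the window.
    trend = [recent[0] + (recent[-1] - recent[0]) * t / (window - 1) for t in range(window)]
    amplitude = max(abs(v - tr) for v, tr in zip(recent, trend))
    mean = sum(recent) / window
    return relative_drop < min_drop or amplitude > max_amplitude * mean

def choose_optimizer(current, cv_history, loss_history):
    """R4.2: switch to Adam when gradients are too dispersed or the loss is unstable."""
    if current != "Adam" and (high_gradient_dispersion(cv_history)
                              or unstable_loss(loss_history)):
        return "Adam"
    return current

print(choose_optimizer("SGD", [0.1] * 5, [1.0, 0.9, 0.8, 0.7, 0.6]))  # SGD
```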

R5. The rule for determining the learning rate parameters

The rule determines the learning rate parameters of the neural network model: the initial value of the learning rate and the strategy for changing it during training. This ensures stable convergence of the loss function and stable optimization dynamics throughout training. Since the initial value is determined by the complexity of the architecture, while the rate change strategy is selected according to the training sample size, the permissible number of training epochs and the convergence behavior of the loss function, rule R5 covers three levels of decision-making: determining the initial value of the learning rate (R5.1), the initial selection of the learning rate change strategy (R5.2), and adaptation of the strategy parameters to intermediate training indicators (R5.3).

R5.1. Determining the initial value of the learning rate

The initial value of the learning rate is chosen to ensure the stability of the training process, which, according to [14, 16, 27], largely depends on the complexity of the neural network model, determined mainly by the number of weight coefficients. Models of low complexity have limited depth and a small number of weight coefficients, so the loss gradients computed at each iteration usually have a smaller norm, i.e. the absolute weight changes are relatively small. This reduces the risk of destabilization during parameter updates even at an increased learning rate. In other words, small models are less sensitive to gradient fluctuations and can be trained effectively with a larger update step, which speeds up convergence in the initial phases of training. For such models, learning rate = 0.001. Models of medium complexity have more layers and weight coefficients, which leads to the accumulation of gradient errors in deeper layers. In such networks, the gradient norm (for example, in the L2 sense) can change from layer to layer: it can decrease (vanishing gradients) or increase (exploding gradients). Under these conditions, a high learning rate can cause unstable oscillations in the optimization process, so it needs to be reduced somewhat. Therefore, for models of medium complexity, learning rate = 0.0007. Models of high complexity have a large depth and a complex connection structure (including residual blocks, attention mechanisms, etc.), which increases the probability of nonlinear growth of gradient norms in different parts of the network. This makes them especially sensitive to a large update step, since an excessive learning rate can cause unstable weight updates, divergence or an explosion of activation values. Accordingly, for high-complexity models, learning rate = 0.0005. The difference between the recommended values for medium- and high-complexity models is empirical and is intended primarily for adaptive optimizers (Adam or AdamW). Thus,

- For neural network models based on MobileNet or EfficientNet-B0, which are low-complexity models, learning rate = 0.001.

- For neural network models based on ResNet-18, ResNet-34, U-Net (VGG) and Attention U-Net, which are medium-complexity models, learning rate = 0.0007.

- For neural network models based on ResNet-101, HRNet and models such as U-Net++ and Attention U-Net++, which are high-complexity models, learning rate = 0.0005.
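Rule R5.1 reduces to a lookup from architecture to complexity class to initial learning rate. A minimal sketch that encodes the mapping exactly as listed above (the dictionary and function names are hypothetical):

```python
INITIAL_LR = {"low": 1e-3, "medium": 7e-4, "high": 5e-4}

# Architecture-to-complexity mapping as listed in R1 and R5.1
COMPLEXITY = {
    "MobileNet": "low", "EfficientNet-B0": "low",
    "ResNet-18": "medium", "ResNet-34": "medium",
    "U-Net (VGG)": "medium", "Attention U-Net": "medium",
    "ResNet-101": "high", "HRNet": "high",
    "U-Net++": "high", "Attention U-Net++": "high",
}

def initial_learning_rate(architecture):
    """Initial learning rate for an architecture according to its complexity class."""
    return INITIAL_LR[COMPLEXITY[architecture]]

print(initial_learning_rate("HRNet"))  # 0.0005
```

Keeping the class mapping separate from the rate table allows the same `COMPLEXITY` dictionary to be reused for the batch size rule R6.1.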

R5.2. Choosing a learning rate change strategy

The learning rate change strategy is chosen to provide adaptive control of the learning rate, which allows the available computational resources to be used effectively while maintaining stable optimization of the loss function. The strategy is selected taking into account the permissible number of training epochs and the total number of training examples.

- If , then the Cosine Annealing strategy is used: the learning rate is gradually decreased along a cosine trajectory. This strategy is the most suitable under limited training time, since it passes quickly through the coarse optimization phase and then gradually moves on to fine local minimization.

- If , then the Step Decay strategy is used, with a 10-fold decrease of the learning rate every 10 epochs, which helps avoid overfitting on a limited training sample.

- In other cases, the ReduceLROnPlateau strategy is used.

R5.3. Adaptation of the learning rate change strategy

- When the Cosine Annealing strategy is used, if during the first third of the specified number of training epochs the loss decreases by less than 0.5% over 5 epochs, the strategy parameter that defines the full length of the cosine phase is reduced by 20%, which accelerates the decrease of the learning rate.

- In the case of using the Step Decay strategy, if after the first learning rate decrease (i.e. after 10 epochs) the loss function value increases or remains practically unchanged (decrease <1%) over the next 5 epochs, then the interval between learning rate decreases is reduced to 5 epochs.

- When the ReduceLROnPlateau strategy is used, if the validation loss does not decrease by more than 0.001 over 5 consecutive epochs, the current learning rate is halved.

At the same time, R5.3 takes into account the permissible limits of change of the parameters of these learning rate strategies, which can be determined using the results of [15, 35].
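The three strategies of R5.2 and their adaptations in R5.3 can be sketched framework-independently. The cosine schedule below uses the common form $\eta_t = \eta_{min} + \tfrac{1}{2}(\eta_0 - \eta_{min})(1 + \cos(\pi t / T))$, with $\eta_{min} = 0$ assumed by default. The plateau reducer treats "not decreased by more than 0.001" as an absolute threshold. Both are assumptions, and the function and class names are hypothetical. In PyTorch, `torch.optim.lr_scheduler.CosineAnnealingLR`, `StepLR` and `ReduceLROnPlateau` offer comparable behavior, although their patience and threshold semantics may differ slightly from this sketch.

```python
import math

def cosine_annealing(epoch, lr0, t_max, lr_min=0.0):
    """Learning rate at `epoch` on a cosine trajectory from lr0 down to lr_min over t_max epochs."""
    t = min(epoch, t_max)
    return lr_min + 0.5 * (lr0 - lr_min) * (1 + math.cos(math.pi * t / t_max))

def shorten_cosine_phase(t_max):
    """R5.3: shorten the full cosine phase by 20%."""
    return max(1, round(0.8 * t_max))

def step_decay(epoch, lr0, step=10, factor=0.1):
    """Divide the learning rate by 10 every `step` epochs (R5.2); R5.3 may shorten step to 5."""
    return lr0 * factor ** (epoch // step)

class PlateauReducer:
    """Halve the LR when validation loss has not dropped by more than min_delta for `patience` epochs."""

    def __init__(self, lr, patience=5, min_delta=1e-3, factor=0.5):
        self.lr, self.patience, self.min_delta, self.factor = lr, patience, min_delta, factor
        self.best, self.bad_epochs = math.inf, 0

    def step(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best, self.bad_epochs = val_loss, 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr *= self.factor
                self.bad_epochs = 0
        return self.lr

reducer = PlateauReducer(7e-4)
for loss in [0.50, 0.50, 0.50, 0.50, 0.50, 0.50]:
    lr = reducer.step(loss)
print(lr)  # halved after five epochs without improvement
```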

Note that in rule R5 the type of input images and the architecture of the model are not directly taken into account, since these characteristics have already been used in the rule for selecting the basic architecture.

R6. Batch size selection rule.

The batch size is selected taking into account the available computational resources (the amount of available GPU video memory, in bytes), the architectural complexity of the neural network model, the input image size and the dispersion of the gradients. This allows efficient use of the hardware and an optimal balance between the speed and the stability of loss function convergence. Accordingly, rule R6 covers two levels of decision-making: selection of the batch size at the beginning of training (R6.1) and adaptation of the batch size during training (R6.2).

R6.1. Choosing the initial batch size

For neural network models based on ResNet-18, ResNet-34, U-Net (VGG) and Attention U-Net, which are medium complexity models with an image size of 256×256 pixels:

- If , then batch size = 4.

- If , then batch size = 8.

- If , then batch size = 16.

- If , then batch size = 32.

For neural network models based on MobileNet or EfficientNet-B0, which are low-complexity models, the batch size is doubled, and for models based on ResNet-101, HRNet and models such as U-Net++ and Attention U-Net++, which are high-complexity models, the batch size is halved.

The batch size is also adjusted to the image size: for 512×512 images it is divided by 4, for 384×384 images it is halved, and for 128×128 images it is doubled.

R6.2. Batch size adaptation

If, during training, the variance of the loss function gradients calculated by expression (33) stays above 0.2 but below 0.3 for 5 consecutive epochs, the batch size is doubled, provided that sufficient computing resources are available.
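Rules R6.1 and R6.2 can be combined into a small sketch. The GPU-memory thresholds of R6.1 are not reproduced in this text, so the memory condition is passed in as a tier index 0-3 that selects one of the base sizes 4, 8, 16 or 32. Rounding down and the lower bound of 1 are assumptions. The names are hypothetical.

```python
BASE_BATCH_SIZES = (4, 8, 16, 32)  # medium-complexity model, 256 x 256 input (R6.1)

COMPLEXITY_FACTOR = {"low": 2.0, "medium": 1.0, "high": 0.5}
IMAGE_SIZE_FACTOR = {128: 2.0, 256: 1.0, 384: 0.5, 512: 0.25}

def initial_batch_size(memory_tier, complexity, image_side):
    """memory_tier: index 0..3 of the GPU-memory interval from R6.1 (thresholds not reproduced here)."""
    size = (BASE_BATCH_SIZES[memory_tier] * COMPLEXITY_FACTOR[complexity]
            * IMAGE_SIZE_FACTOR[image_side])
    return max(1, int(size))

def adapt_batch_size(batch_size, cv_history, memory_allows, window=5):
    """R6.2: double the batch size if 0.2 < CV < 0.3 held for `window` consecutive epochs."""
    recent = cv_history[-window:]
    if memory_allows and len(recent) == window and all(0.2 < v < 0.3 for v in recent):
        return batch_size * 2
    return batch_size

bs = initial_batch_size(2, "high", 384)  # 16 * 0.5 * 0.5
print(bs, adapt_batch_size(bs, [0.25] * 5, memory_allows=True))  # 4 8
```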

R7. Regularization mechanism selection rule

The regularization mechanism is adapted to the conditions of the segmentation problem in order to balance training speed, stability of loss function convergence and the risk of overfitting. The type and parameters of the regularization mechanism are selected taking into account the number of training examples and the complexity of the model architecture, in particular the number of weight coefficients:

- In the case when the training sample size , is used to prevent overtraining, as well as L2-regularization with .

- For compact models based on the MobileNet or EfficientNet-B0 architectures, Dropout can negatively affect the convergence and efficiency of training. In this case, Dropout is not used, and only L2 regularization with is applied to ensure uniform convergence dynamics and training speed.

- To reduce the risk of overfitting and stabilize the weight coefficients when training models with more than parameters, such as ResNet (ResNet-34 and larger configurations), HRNet, Attention U-Net and U-Net++, Dropout with probability 0.3 is used together with L2 regularization with .

Note that the restriction to Dropout and L2 regularization is explained by their proven effectiveness in neural network semantic image segmentation under limited computing resources.

R8. Rule for determining the number of training epochs

The number of training epochs is determined so as to avoid overfitting while making it possible to achieve the required segmentation accuracy within the limited training time. This takes into account the convergence dynamics of the loss function, the maximum permissible number of training epochs, and the size and balance of the training sample.

R8.1. Determining the minimum number of training epochs

- If or the average number of examples of each class is less than 500, or (calculated according to (32)), then the minimum number of training epochs is 20. In other cases, the minimum number of training epochs is 30.

- If , then .

R8.2. Determining the conditions for stopping and continuing training

Training is stopped if, after the minimum number of training epochs, the validation loss has not improved by more than 0.02 over the last 5 consecutive epochs, or if the predetermined segmentation accuracy has been achieved. Otherwise, provided that , training continues.
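Rule R8 can be sketched as a stopping check called once per epoch. The sketch makes several assumptions. "Improvement" is the absolute decrease of the best validation loss in the last 5 epochs relative to the best loss before them. Reaching the target accuracy stops training immediately. The upper limit on epochs is passed in as `max_epochs`. The conditions that select 20 or 30 minimum epochs are evaluated elsewhere. The names are hypothetical.

```python
def min_epochs(small_or_imbalanced):
    """R8.1: 20 epochs for small or imbalanced samples, otherwise 30."""
    return 20 if small_or_imbalanced else 30

def should_stop(val_losses, epoch_min, max_epochs, target_reached=False,
                window=5, min_improvement=0.02):
    """R8.2: decide whether to stop after the epochs recorded in val_losses."""
    epoch = len(val_losses)
    if epoch >= max_epochs or target_reached:
        return True
    if epoch < max(epoch_min, window + 1):
        return False
    best_before = min(val_losses[:-window])
    best_recent = min(val_losses[-window:])
    return best_before - best_recent <= min_improvement

losses = [2.0 - 0.05 * i for i in range(25)]            # still improving steadily
print(should_stop(losses, min_epochs(True), 100))       # False
print(should_stop([1.0] * 25, min_epochs(True), 100))   # True
```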

Note that the threshold values of the parameters used in the above rules are based on practical experience and on data from the literature [15, 21, 35]. They serve as initial guidelines that can be refined at later stages of the study.

2.4. Development of a Method for Determining the Architectural Parameters of a Neural Network Model for Semantic Segmentation of Facial Images During Biometric Authentication at Critical Infrastructure Facilities

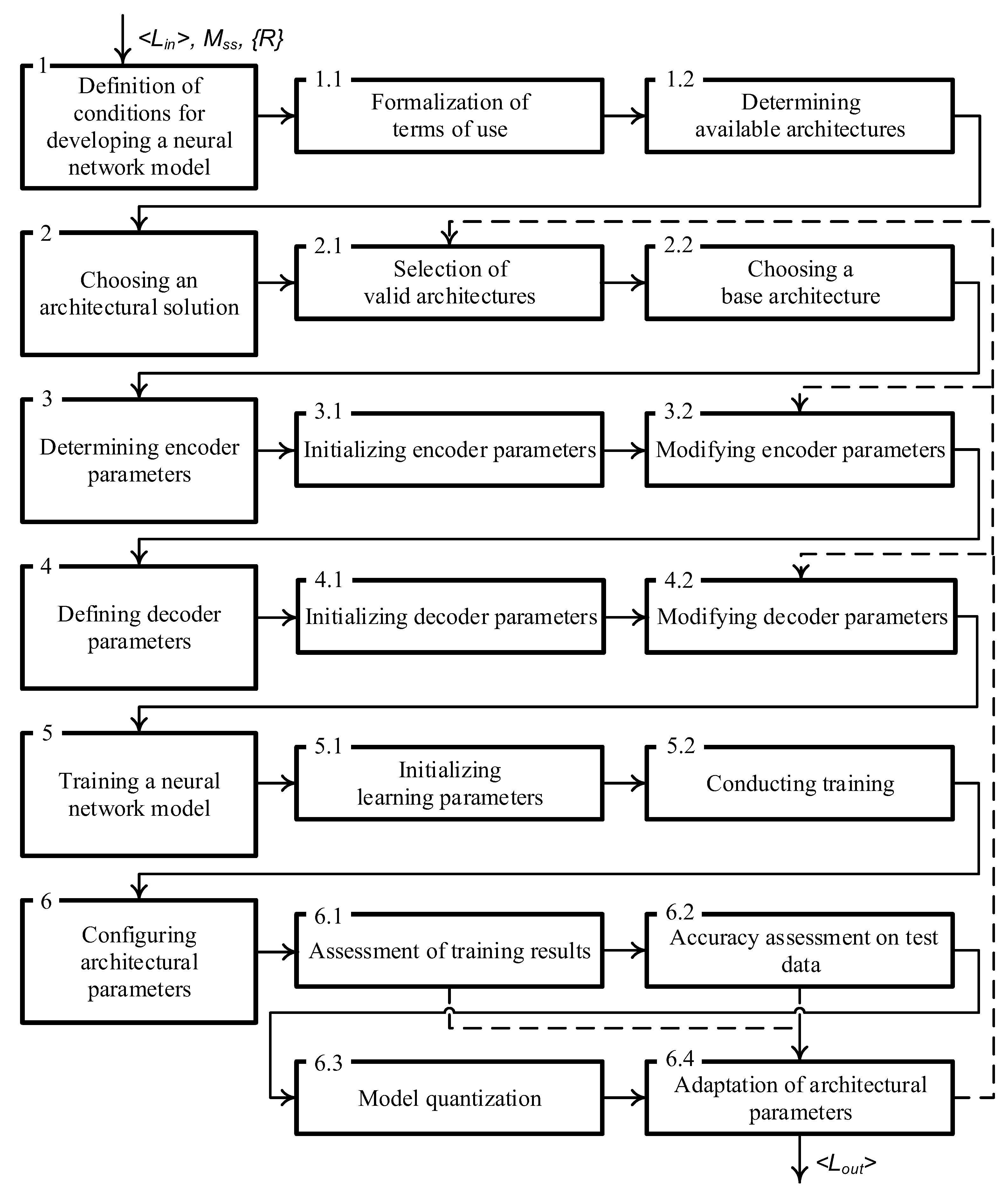

Using the semantic image segmentation method described in [35] as a prototype, and based on the proposed semantic segmentation model (1-40) and the formed set of rules for determining architectural parameters, the proposed method for determining the architectural parameters of a neural network model of semantic segmentation of a face image during biometric authentication at critical infrastructure facilities is divided into six stages. Taking into account the need to adapt to variable application conditions and the results of [39, 42], the method is based on the principle of phased adaptation of the architecture, including the training parameters. First, the maximum permissible values for a given type of neural network model are initialized. The model parameters are then gradually modified taking into account the achieved accuracy, resource consumption and stability of the training process.

Stage 1. Determination of the conditions for developing a neural network model. The input of the stage is a tuple of parameters describing the conditions of the biometric authentication task, the regulatory requirements for the biometric authentication system, the characteristics of the available training data set, a description of the developed semantic segmentation model, and the formed set of rules that regulate the development of neural network tools.

Step 1.1. Formalization of application conditions. Based on expert evaluation of the input parameter tuple, a tuple of parameters is determined that characterizes: the size of the input image and the number of color channels (), the number of segmentation classes (), the total number of training examples (), the number of training examples for each segmentation element (), the maximum available memory for storing model parameters (), the acceptable memory size for storing model parameters (), the allowable duration of the semantic segmentation process (), the type and minimum allowable value of the segmentation accuracy indicator (), the allowable number of training epochs (), as well as the training sample imbalance coefficient (), the coefficient of deviation of the boundaries of the selected object from the true boundaries (), the coefficient of the relative size of the object mask (), and the average standard deviation of brightness and the range of average brightness values of the images in the training sample, which are calculated using expressions (32, 34, 36–38), respectively.
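Expressions (36–38) are not reproduced in this section, so the following is only a sketch of the last two characteristics under stated assumptions: each training image is given as a flat list of 8-bit grayscale values, scaled to [0, 1] by dividing by 255 as in the preprocessing described earlier; an image's brightness is summarized by the mean and population standard deviation of its pixel values; and the "range of average brightness values" is taken as the maximum minus the minimum of the per-image means. The function names are hypothetical.

```python
import statistics
from typing import List, Sequence, Tuple


def image_brightness(pixels: Sequence[int], max_value: int = 255) -> Tuple[float, float]:
    """Mean and population standard deviation of one image's brightness,
    after scaling the 8-bit values to [0, 1]."""
    scaled = [p / max_value for p in pixels]
    return statistics.fmean(scaled), statistics.pstdev(scaled)


def sample_brightness_stats(images: List[Sequence[int]]) -> Tuple[float, float]:
    """Return (average per-image standard deviation,
    range of per-image mean brightness) over the training sample."""
    means, stds = zip(*(image_brightness(img) for img in images))
    return statistics.fmean(stds), max(means) - min(means)
```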

Step 1.2. Determination of available architectures. Based on expert evaluation, a set of available basic neural network architectures () is determined. By default, this set includes VGG-based architectures (U-Net, U-Net++, Attention U-Net++) and architectures such as ResNet, MobileNet, EfficientNet and HRNet. Also by expert evaluation, taking into account the results obtained during the development of the semantic segmentation model, the design parameters of each architecture included in this set are determined, together with their ranges of values, given by the sets of minimum () and maximum () values. The expert evaluation procedures are planned to be implemented using the methods applied in [10, 47].

The output of the stage is a tuple of parameter values characterizing the restrictions imposed on the segmentation tools, the set of available basic architectures, and the set of design parameters of each basic architecture. Note that the description of the developed semantic segmentation model and the formed set of rules received as input to the first stage are also used in stages 2–6.

Stage 2. Selection of an architectural solution. The input to the stage is the tuple determined in stage 1.

Step 2.1. Selection of admissible architectures. The selection follows rule R1, taking into account the set of available architectures, computational constraints, image characteristics, class imbalance, variability of conditions and the accuracy requirements defined in stage 1.

Step 2.2. Selection of the base architecture. This step selects the architecture to be used as the base. If the previous step yields several architectures, the one whose software and hardware implementation is least complex is selected as the base (A_{NB}). In addition, for the selected architecture type, a set of architectural parameters () is determined with a view to achieving maximum segmentation accuracy.

The output of stage 2 is given by the expression .
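Steps 2.1 and 2.2 can be pictured as a small selection routine. In this sketch, rule R1 is represented by a caller-supplied predicate `is_admissible`, implementation complexity is reduced to a single hypothetical number per architecture, and, following the phased-adaptation principle, the base architecture's design parameters start at the maximum values of their ranges. All class, field and function names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Architecture:
    name: str
    complexity: float  # hypothetical proxy for implementation complexity
    param_ranges: Dict[str, Tuple[int, int]] = field(default_factory=dict)


def select_base(available: List[Architecture],
                is_admissible: Callable[[Architecture], bool]
                ) -> Tuple[Optional[Architecture], Dict[str, int]]:
    """Step 2.1: keep the architectures accepted by the R1 predicate.
    Step 2.2: pick the least complex one and start every design parameter
    at the maximum of its admissible range."""
    admissible = [a for a in available if is_admissible(a)]
    if not admissible:
        return None, {}
    base = min(admissible, key=lambda a: a.complexity)
    params = {name: hi for name, (lo, hi) in base.param_ranges.items()}
    return base, params
```

Returning `(None, {})` when no architecture is admissible mirrors the outcome described in step 6.4, where the method concludes that no suitable model can be built.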

Stage 3. Determination of encoder parameters. The input of the stage consists of , , and , the last of which is the correspondence between the permissible and the achieved values of the accuracy indicator, determined as a result of stage 6. When stage 3 is performed immediately after stage 2, it is assumed that .

Step 3.1. Initialization of encoder parameters. The step is performed in the case . The values of the encoder design parameters (5, 7, 8, 21, 22) are set equal to the values of the corresponding elements of the set for , i.e., , where is the number of the design parameter.

Step 3.2. Modification of encoder parameters. The step is performed in the case . In this case, the depth of the encoder (number of convolutional layers/number of stacks) changes according to expressions (41, 42).

where is the number of the design parameter corresponding to the encoder depth.

The other encoder parameters are adjusted so as to keep the encoder output signal compatible with the decoder input signal. The output of stage 3 is a set of values of the encoder design parameters, .
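Because expressions (41, 42) are not reproduced here, the sketch below replaces them with the simplest assumed update: the encoder depth is decreased by one level per adaptation cycle and never drops below its minimum admissible value. Step 3.1 appears as initialization at the maximum values. The compatibility adjustment of the remaining parameters is left out, and the parameter names (`depth`, `filters`) are hypothetical.

```python
from typing import Dict, Optional, Tuple

Ranges = Dict[str, Tuple[int, int]]


def init_encoder(ranges: Ranges) -> Dict[str, int]:
    """Step 3.1: every encoder design parameter starts at its maximum value."""
    return {name: hi for name, (lo, hi) in ranges.items()}


def reduce_encoder_depth(params: Dict[str, int], ranges: Ranges,
                         depth_key: str = "depth") -> Optional[Dict[str, int]]:
    """Step 3.2 sketch: one level shallower, never below the minimum depth.
    Returns None when the depth cannot be reduced any further."""
    min_depth, _ = ranges[depth_key]
    if params[depth_key] <= min_depth:
        return None
    reduced = dict(params)
    reduced[depth_key] -= 1
    return reduced
```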

Stage 4. Determination of decoder parameters. The input of the stage is , , , and . When stage 4 is performed immediately after stage 3, it is assumed that .

Step 4.1. Initialization of decoder parameters. The step is performed in the case . The values of the decoder design parameters (6, 9–16, 21, 22) are set equal to the values of the corresponding encoder design parameters, i.e., , where is the design parameter number. In this case, the maximum number of skip connections and attention modules is used, if they are available in the basic architecture.

Step 4.2. Modification of decoder parameters. The step is performed in the case .

The modification consists in sequentially removing attention modules, skip connections and upsampling layers in accordance with (43, 44), starting from the deepest level of the decoder, which corresponds to the layer with the smallest feature map size.

The other decoder parameters are adjusted so as to keep the encoder output signal compatible with the decoder input signal. The output of stage 4 is a set of values of the decoder design parameters, .
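One possible reading of step 4.2 is sketched below. The decoder is modeled as a list of levels, the last being the deepest, and each step of the generator removes one component: first the attention module, then the skip connection, then the upsampling layer of the deepest level that still has any, before moving one level up. The exact schedule is defined by expressions (43, 44), which are not reproduced here, so this ordering is an assumption; the all-`True` starting configuration corresponds to step 4.1. Class and function names are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Iterator, List


@dataclass(frozen=True)
class DecoderLevel:
    attention: bool = True
    skip: bool = True
    upsample: bool = True


def decoder_reductions(levels: List[DecoderLevel]) -> Iterator[List[DecoderLevel]]:
    """Step 4.2 sketch: yield progressively lighter decoder configurations.

    levels[-1] is the deepest level (smallest feature map). Within a level,
    attention is removed first, then the skip connection, then upsampling."""
    current = list(levels)  # the caller's configuration is left untouched
    for i in reversed(range(len(current))):
        for component in ("attention", "skip", "upsample"):
            if getattr(current[i], component):
                current[i] = replace(current[i], **{component: False})
                yield list(current)  # snapshot of this candidate configuration
```

Each yielded configuration would be trained and evaluated in stages 5 and 6; when the generator is exhausted, all decoder combinations in this ordering have been tried.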

Stage 5. Training the neural network model

The input to the stage is , , , , and . When performing stage 5 immediately after stage 4, it is assumed that .

Step 5.1. Initialization of the training parameters that regulate how the weight coefficients of synaptic connections are determined during training of the neural network model. The step is performed in the case and follows rules R2, R3, R4.1, R5.1, R5.2, R6.1, R7 and R8.1. The weights of neurons in convolutional and fully connected layers with the ReLU activation function are initialized using the He Normal (Kaiming Normal) scheme, while Xavier Uniform initialization is used for neurons of the output layer with the Softmax or Sigmoid activation function.
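The two initialization schemes named in step 5.1 have standard closed forms: He (Kaiming) normal draws weights from N(0, 2/fan_in), and Xavier (Glorot) uniform draws from U(-a, a) with a = sqrt(6/(fan_in + fan_out)). The framework-free sketch below builds a fan_out × fan_in weight matrix with each scheme; for a convolutional layer, fan_in would be in_channels × kernel height × kernel width. The function names are illustrative; in PyTorch the same schemes are available as `torch.nn.init.kaiming_normal_(w, nonlinearity="relu")` and `torch.nn.init.xavier_uniform_(w)`.

```python
import math
import random
from typing import List


def he_normal(fan_in: int, fan_out: int, rng: random.Random) -> List[List[float]]:
    """He/Kaiming normal for ReLU layers: N(0, std^2) with std = sqrt(2 / fan_in)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


def xavier_uniform(fan_in: int, fan_out: int, rng: random.Random) -> List[List[float]]:
    """Xavier/Glorot uniform for Softmax or Sigmoid outputs:
    U(-a, a) with a = sqrt(6 / (fan_in + fan_out))."""
    a = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-a, a) for _ in range(fan_in)] for _ in range(fan_out)]
```

The factor 2 in the He variance compensates for ReLU zeroing roughly half of its inputs, whereas Xavier balances forward and backward variance for saturating activations such as Sigmoid.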

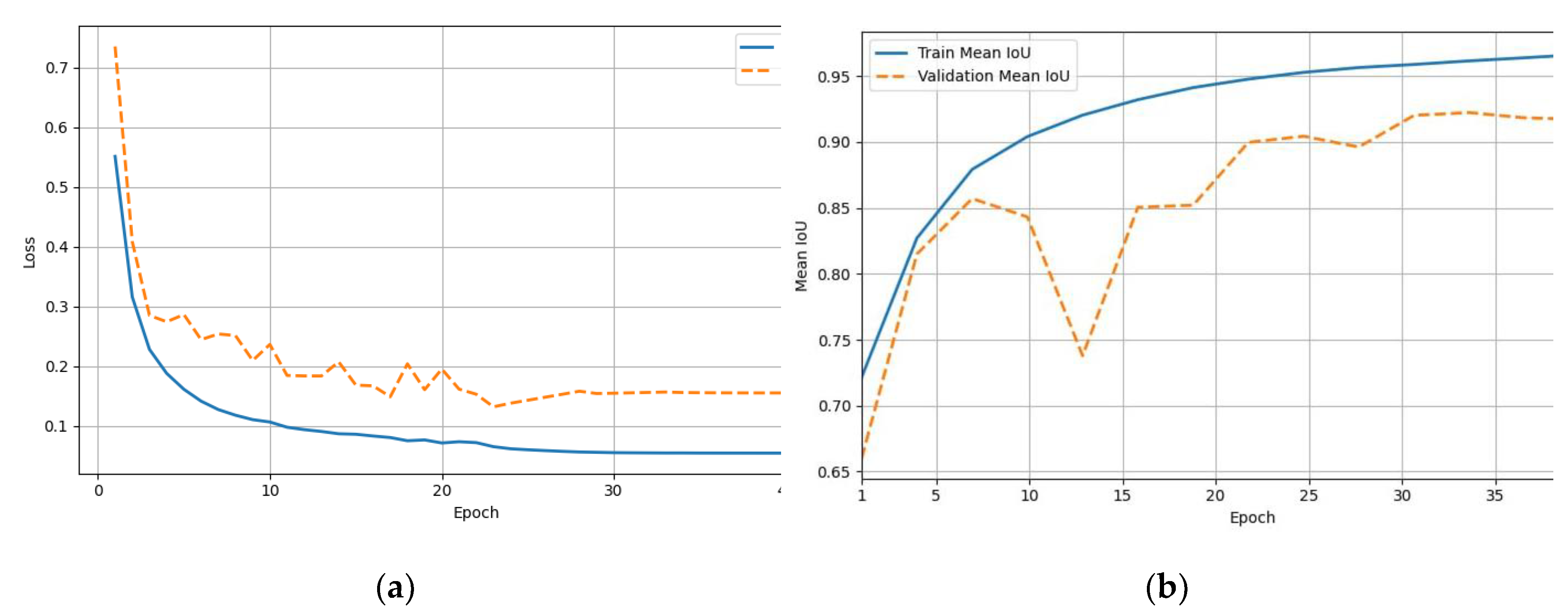

Step 5.2. Training. At each training epoch, the value of the loss function and its variance are estimated according to (23–31, 33); these estimates are used in rules R4.2, R5.3, R6.2 and R8.2 to decide whether to modify the training parameters or terminate training.

If the training accuracy indicators meet the permissible values, the method proceeds to step 6.1; otherwise, it proceeds to step 6.4.

The output of step 5.2 and stage 5 as a whole is: the values of the accuracy indicators at each training epoch on the training and validation samples () and the values of the weight coefficients of synaptic connections obtained as a result of training ().

Stage 6. Setting architectural parameters. The following are fed to the stage input: , , , , , , , and .

Step 6.1. Evaluation of training results. The values of the accuracy indicators achieved at the last training epoch are compared with the permissible ones. If the achieved indicators meet the permissible ones, that is, , the method proceeds to step 6.2; otherwise, when , it proceeds to step 6.4.

Step 6.2. Accuracy assessment on test data. The values of the accuracy indicators () obtained by applying the trained neural network model to segment test-sample images that were not used in training are compared with the permissible values. If the segmentation accuracy does not meet the specified level, then and the method proceeds to step 6.4. Otherwise, and the method proceeds to step 6.3.

Step 6.3. Model quantization. This step converts the numerical representation of the weight coefficients to a format with a lower bit depth, reducing the memory required to store the model parameters. The step is executed only in the case , provided that the resource intensity of the model is higher than . In the basic version, the weight coefficients are stored as 8-bit integers. Quantization yields a modified matrix of weight coefficients, which is used to recompute and evaluate the accuracy of the model on the test sample. If the accuracy of the quantized model is sufficient, the modified matrix is used from then on; otherwise, the matrix obtained in step 5.2 is used.
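Step 6.3 specifies only 8-bit integers, not a particular quantization scheme. A common choice, assumed here, is symmetric per-tensor quantization: each weight w is stored as an integer q in [-127, 127] together with one floating-point scale s, so that w ≈ s · q. The callable `evaluate`, which would return test-sample accuracy for a given set of weights, is hypothetical, as are the function names.

```python
from typing import Callable, List, Optional, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to 8-bit integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Approximate reconstruction w ≈ scale * q used to re-evaluate the model."""
    return [scale * v for v in q]


def select_weights(weights: Sequence[float],
                   evaluate: Callable[[List[float]], float],
                   required_accuracy: float) -> Tuple[list, Optional[float]]:
    """Keep the 8-bit weights only if the dequantized model is still accurate
    enough; otherwise fall back to the full-precision weights from step 5.2."""
    q, scale = quantize_int8(weights)
    if evaluate(dequantize(q, scale)) >= required_accuracy:
        return q, scale
    return list(weights), None
```

Storing one scale per tensor keeps the overhead negligible; per-channel scales are a finer-grained alternative when a single scale loses too much accuracy.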

Step 6.4. Adaptation of architectural parameters. This step modifies the architectural parameters of the neural network model depending on their current values and on the evaluation results obtained, according to the transition made, either from step 5.2 or from steps 6.1–6.3. In this case:

- If , the method returns to step 2.1, which changes the type of the basic neural network model; the architecture type just considered is removed from the set of admissible ones. If all architectures from the set of admissible basic architectures have been investigated and none of them achieves the specified values of the efficiency indicators, it is concluded that a neural network model providing effective semantic segmentation of the face image under the specified application conditions cannot be built.

- If and the resource intensity of the model is higher than , the method proceeds to step 4.2, which modifies the decoder parameters so as to reduce its resource intensity. Once all possible combinations of the decoder's architectural parameter values have been investigated, the method proceeds to step 3.2, which modifies the encoder parameters to reduce its resource intensity, provided that the encoder parameters remain within acceptable limits.
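The branching in step 6.4 can be summarized as a decision function. Because the conditions that trigger each branch are not recoverable from this text, they are passed in as hypothetical boolean flags. In addition, the text does not state what happens when neither the decoder nor the encoder can be reduced further; the sketch assumes that the method then falls back to changing the architecture type. All names are illustrative.

```python
from enum import Enum


class NextStep(Enum):
    CHANGE_ARCHITECTURE = "go to step 2.1"
    MODIFY_DECODER = "go to step 4.2"
    MODIFY_ENCODER = "go to step 3.2"
    INFEASIBLE = "no admissible model can be built"
    FINISH = "architecture determined"


def step_6_4(switch_architecture: bool, untried_architectures: int,
             over_resource_limit: bool, decoder_exhausted: bool,
             encoder_within_limits: bool) -> NextStep:
    """Choose the next transition of the method (all flags are hypothetical)."""
    if over_resource_limit and not switch_architecture:
        if not decoder_exhausted:
            return NextStep.MODIFY_DECODER
        if encoder_within_limits:
            return NextStep.MODIFY_ENCODER
        switch_architecture = True  # assumed fallback: try another base type
    if switch_architecture:
        if untried_architectures > 0:
            return NextStep.CHANGE_ARCHITECTURE
        return NextStep.INFEASIBLE
    return NextStep.FINISH
```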

The method terminates once architectural parameters of the decoder and encoder have been determined that, with an acceptable amount of computing resources, provide sufficient accuracy of face image segmentation for a representative of the personnel of a critical infrastructure facility. The output of step 6.4, stage 6 and the method as a whole is a tuple of parameters , which characterize the architecture (), the weight coefficients of synaptic connections () and the training results () of the neural network model of semantic segmentation of the face image of a representative of the personnel of a critical infrastructure facility. A generalized diagram of the proposed method, illustrating the procedure for its implementation, is shown in Figure 1.

The direction of method execution follows the arrows in the diagram. Sequential transitions are marked with solid lines, and alternative transitions, which are taken depending on the results of the condition checks in steps 6.1, 6.2 and 6.4, are marked with dotted lines.

Step 6.1 checks whether the neural network model is sufficiently accurate on the training data, step 6.2 checks whether it is sufficiently accurate on the test data, and step 6.4 checks the conditions for modifying the basic architecture and the encoder and decoder parameters.

It should be noted that checking the feasibility of quantizing the model in step 6.3 and checking the fulfillment of the conditions associated with the modification of the training parameters in step 5.2 do not change the sequence of the method execution.