1. Introduction

The United Nations' Sustainable Development Goals (SDGs) for 2030 emphasize the importance of efficient mobility and transportation in pursuing long-term sustainable global development. Urban infrastructure development plans must align with this initiative as the global population is forecast to surpass 9 billion by 2040 [1]. Traffic congestion in urban areas has worsened significantly due to increasing rates of private vehicle ownership brought about by population growth, urbanization, industrialization, and economic progression [2, 3]. Increased urbanization and traffic challenges must be addressed by relevant local community and national stakeholders. Governments must promptly implement novel and advanced technologies that aim to optimize logistical efficiency, manage traffic, and promote sustainable transportation alternatives [4] by integrating solutions mainly designed for sustainable urban mobility of drivers, passengers, and pedestrians [5, 6]. Making these tools accessible to the public is crucial for reducing congestion and improving overall mobility efficiency [7]. The effective integration of technology-based systems into transportation and mobility is commonly referred to as intelligent transportation systems (ITS) [8, 9]. Many stakeholders are continually integrating different emerging technologies in information and communications technology (ICT) [10], Internet of Things (IoT) [11, 12], and artificial intelligence (AI) [1, 13] to address problems in the ITS field.

An abundant body of ITS literature remains heavily concentrated on traffic control and management applications. Studies concentrate on using smart applications to solve traffic problems on public roads. Fewer studies use smart applications to solve parking facility management problems [2, 14]. This research imbalance exacerbates prevailing parking-related challenges, notably the scarcity of available parking spaces in urban areas, which significantly contributes to traffic congestion [15, 16]. Recent research indicates that non-optimal cruising while searching for vacant parking spaces significantly worsens urban congestion. This is because restricted facility entry, especially during peak hours, frequently forces vehicles to queue on public roads, adding further to traffic congestion. Furthermore, the average cruising distance during peak hours is 2.7 times longer than the optimal distance, increasing CO2 emissions [17]. These inefficiencies underscore the need for more intelligent parking solutions to address operational and environmental challenges [18]. These oversights can be resolved by systematically implementing smart parking management systems (SPMS) capable of real-time vehicle profiling and activity monitoring within parking facilities [2, 19]. Although existing research has investigated various aspects of smart parking management, there is still a gap in the transition of these practices to emergent trends, particularly the integration of smart cyber-physical systems (CPS). Developments are further stalled by evolving data privacy laws that increasingly require explicit consent for the collection and processing of personal information, complicating the integration of new technologies with legal requirements.

SPMS implementations present substantial ethical and legal obstacles, particularly in user consent and data privacy. AI-driven surveillance, license plate recognition, and video footage collection raise significant concerns over data collection [20, 21]. In contrast to traditional implied data collection consent in retail, which assumes a user's presence signifies agreement [22], modern data protection regulations such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) mandate explicit consent for collecting and processing personal data [20, 21]. Users should be explicitly informed regarding the data collected and its intended purposes and must give clear, affirmative consent [22]. Despite the tension between emerging data privacy concerns and the need to introduce new technologies for system efficiency and security, smart parking technology development continues through strict adherence to transparent data policies, anonymization techniques, and stringent access controls [23]. Data privacy regulations have not impeded innovation. Rather, they have necessitated the development of more secure and privacy-conscious novel solutions that comply with industry standards. SPMS are advancing to incorporate more frameworks that improve operational efficiency and ensure compliance with data privacy laws [24, 25].

Most CPS research leans towards developing digital twin (DT) models through a Building Information Modeling (BIM) framework for parking facilities. Current SPMS-BIM implementations face challenges in delivering an intuitive and encompassing visualization platform that effectively reflects the real-time status of the facility [26]. Conventional monitoring systems depend on surveillance camera networks to provide comprehensive coverage throughout the facility. While camera networks allow managers to monitor vehicle parking activities remotely, they require manual switching between various camera feeds, rendering the process laborious and inefficient, especially in facilities with extensive camera networks. DT models address this challenge by integrating real-time data into a cohesive visualization platform to help improve decision-making capabilities [27].

DT models come in two forms: 2D and 3D. Although 2D models offer an organized framework of understanding, they often oversimplify geometric features. Their lower level of detail requires personnel to mentally interpret flat and simplified 2D representations [28]. In parking facility management contexts, this makes it harder to evaluate real-time occupancy, vehicle flow, and congestion points, thereby slowing down monitoring operations and reducing work efficiency. In contrast, 3D DT models closely represent the actual facility layout and provide a more user-friendly and immersive depiction. 3D models can optimize facility management by combining real-time data streams from smart applications such as machine vision and sensor data. Such a configuration improves situational awareness and lowers cognitive burden [29, 30].

This study focuses on developing a framework for SPMS development integrated with a 3D BIM interface for 3D spatial understanding of the built environment. The system is a dynamic and interactive 3D BIM model integrated with video data streams, enabling precise monitoring and decision-making for parking facilities. This can streamline and improve the workflows of facility managers, thereby decreasing the chances of errors and inefficiencies and increasing facility productivity and resource turnover.

The primary contribution of this study is creating a dynamic DT interface to demonstrate a proof-of-concept remote parking occupancy monitoring system through a 3D BIM visualization interface. The developed SPMS combines object detection, scene text recognition, and data processing algorithms to profile vehicles and analyze occupancy statistics from surveillance video footage. A LiDAR-based point cloud model was used to create a 3D Revit model, ensuring an accurate geometric depiction of the parking facility environment. The Dynamo plugin in Autodesk Revit dynamically updates the model by attaching it to the system's backend, allowing recorded occupancy changes to be visually represented. This study demonstrates how several smart applications can be used to improve facility management operations. The findings create a scalable framework for smart infrastructure applications to help urban planners and engineers optimize resource usage, improve commuter experiences, and advance sustainability goals.

2. Smart Parking Management Systems

The design and implementation of an SPMS attempts to resolve vehicle congestion issues caused by the inefficiencies of manually operated parking facilities [

31]. By integrating advanced technologies, parking processes are optimized to ensure that the timely service of providing parking spaces to vehicles is made more efficient and straightforward [

32]. Smart parking facilities provide occupancy statistics on mobile applications, online digital viewing platforms, or public displays. These features improve the user experience of drivers browsing for parking spaces. The commuting public can access the data, navigate the parking area or nearby facilities, and seek available slots using their devices [

33,

34]. The information provision service will simplify locating vacant parking spaces by providing vehicles with information regarding the number of available spaces and their location [

2,

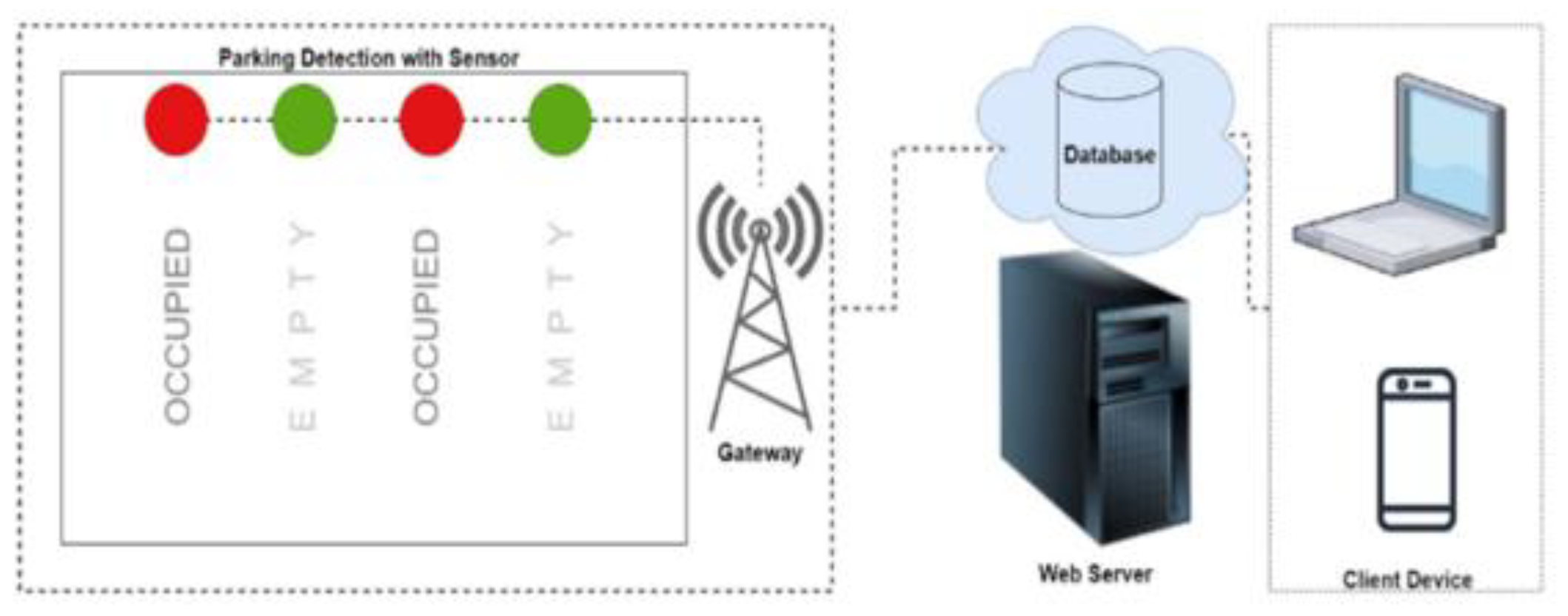

3]. The framework for developing smart parking facilities adheres to a generic framework, as shown in

Figure 1. Typically, facilities equipped with an SPMS infrastructure is comprised of hardware and software components. Examples of hardware components are low-cost or advanced sensor technologies that aid in the data collection process of parking activity data. The software infrastructure is often manifested in frontend and backend platforms. Frontend examples include a graphical user interface that provides a simplified or complex representation of the parking space in a client device-accessible graphical user interface (GUI). The backend component is the platform that contains data from which the information from the GUI is sourced. Data can be housed either locally or through a cloud web-based server [

31].

There are several approaches to developing SPMSs. Incorporating AI through machine learning and machine vision is one of the most common methods. Vision-driven systems acquire a scene understanding of vehicle parking activity events that are occurring by utilizing real-time or recorded video streams. This procedure may be referred to as automated parking facility activity monitoring [

35,

36]. Computer vision techniques, such as vehicle detection [

37,

38], vehicle tracking [

33,

39], and LPR [

36,

40], are used to automate the monitoring procedures. Research literature on integrating and applying these techniques is exceedingly saturated [

39,

41]. Computer vision tools can expedite facility monitoring and management processes, provided that the built environment is equipped with the appropriate hardware. Otherwise, these systems may be hindered by unfavorable external conditions, ranging from insufficient illumination and unoptimized camera positioning to communication latencies caused by signal disruptions [

40,

42]. The digital infrastructure of the parking facility processes the inference data acquired from these computer vision systems [

16]. Object detection architectures, which detect and classify objects in images, are widely applied in parking management systems. Vehicle detection is a common technique integrated into these systems [

33,

37,

39]. Through vehicle detection, video feed obtained from surveillance cameras can be used by the system to detect vehicle presence [

6,

43]. Vision system inference data can assist facility managers in making decisions that will optimize operations and more effectively manage demand [

31,

44].

Several object detection architectures are available. They are grouped into two types: single-stage and two-stage detectors [45]. Single-stage detector architectures include EfficientDet, RetinaNet [45], SSD MobileNet [48], and the YOLO architectures [46]. Two-stage detectors include Faster R-CNN [47] and Mask R-CNN [38]. The two types differ primarily in how they perform inference. Single-stage detectors process an entire image in a single pass. They divide the image into a grid, generate anchor boxes of varying sizes and aspect ratios, score each box for object presence, and localize detections as bounding boxes, directly predicting and classifying objects. This approach is more efficient than that of two-stage detectors, which require a distinct region proposal phase. Two-stage detectors must first propose regions where objects are possibly located in an image. After determining which regions are highly likely to contain objects of interest, a second network stage classifies the objects in these regions and refines their bounding boxes [6, 36, 45]. While two-stage detectors are more precise, the performance of single-stage detectors is sufficient for real-time contexts, where their inference speed is highly effective. Various studies have reported that in system deployments, their minor precision deficiencies are outweighed by their speed, rendering them suitable for real-time applications [45, 46].

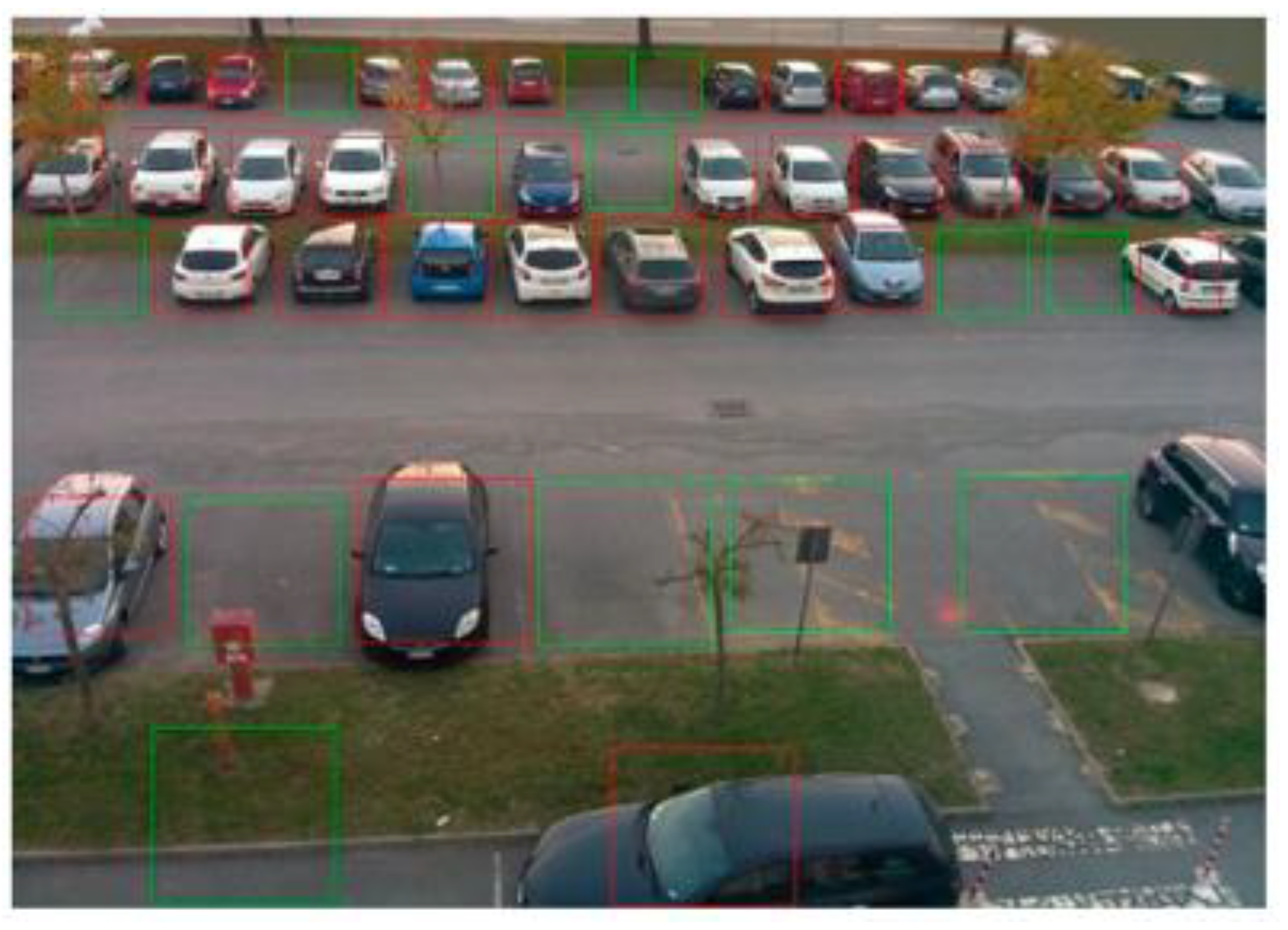

There are two object detection-based methods for determining parking occupancy. One approach is to develop a model capable of distinguishing between occupied and vacant parking spaces. Automated occupancy determination is facilitated by this method, eliminating the need for predefined parking spaces. Another method is to first predefine the regions in an image frame where parking spaces are located. Vehicle detection models will be used to detect vehicles, which are then passed to an algorithm to validate if the detected vehicle is located within the tolerance bounds of the predefined parking spaces.

Figure 2 shows a sample implementation of object detection [49].

System designers find the first method highly convenient because it eliminates the need to predefine parking spaces. However, this approach is highly restrictive if the parking facility's management body intends to scale the system [50]. The first approach is a two-step process in which parking spaces are detected and their occupancy state is then classified as either vacant or occupied. While this is functional, performing vehicle profiling on parked vehicles is at times imperative. Facility managers may need more information on the vehicle to meet the operational and management requirements of parking facilities [51]. An example would be fee differentials based on the type of vehicle, such as motorcycles, public utility vehicles, and private vehicles [52]. The second approach addresses this limitation by using vehicle detection and classification in conjunction with algorithms that account for known parking space regions, which also accommodates potential future features. These systems involve three steps: retaining the specified parking space regions, or regional ground truths, in memory; comparing these ground truths with the bounding box inferences from object detection; and classifying each space's occupancy based on bounding box region overlaps or intersections. A sample implementation of this three-step process is shown in Figure 3 [50].

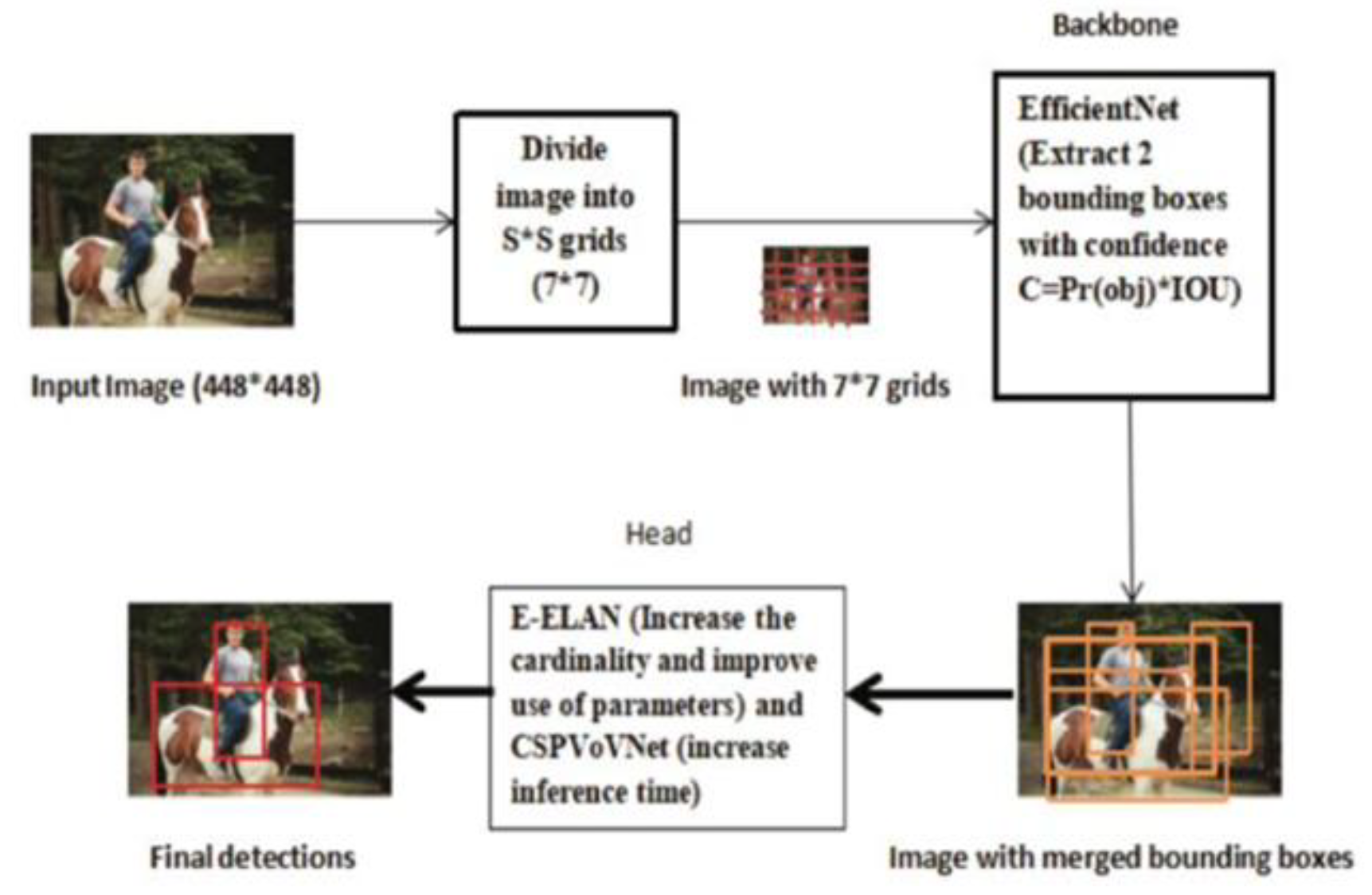
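The three-step process described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the cited implementation: the `Box` type, the `classify_occupancy` function, the 0.5 coverage threshold, and the sample coordinates are all hypothetical, and parking spaces are assumed to be axis-aligned rectangles with non-zero area.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in pixel coordinates, (x1, y1) to (x2, y2)."""
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def intersection_area(a: Box, b: Box) -> float:
    """Area shared by two boxes; 0.0 when they do not overlap."""
    w = min(a.x2, b.x2) - max(a.x1, b.x1)
    h = min(a.y2, b.y2) - max(a.y1, b.y1)
    return max(0.0, w) * max(0.0, h)


def classify_occupancy(spaces, detections, min_overlap=0.5):
    """Label each predefined space as 'occupied' or 'vacant'.

    spaces: dict of space id -> Box (step 1: stored ground-truth regions).
    detections: list of Box from a vehicle detector (step 2).
    A space counts as occupied when a single detection covers at least
    `min_overlap` of the space's area (step 3). The threshold is an
    illustrative assumption, not a value from the source.
    """
    status = {}
    for space_id, region in spaces.items():
        covered = max(
            (intersection_area(region, det) / region.area() for det in detections),
            default=0.0,
        )
        status[space_id] = "occupied" if covered >= min_overlap else "vacant"
    return status


# Hypothetical layout: two adjacent spaces and one detected vehicle.
spaces = {"A1": Box(0, 0, 100, 200), "A2": Box(110, 0, 210, 200)}
detections = [Box(10, 20, 95, 190)]
print(classify_occupancy(spaces, detections))
# {'A1': 'occupied', 'A2': 'vacant'}
```

Measuring coverage relative to the parking space, rather than using a symmetric intersection-over-union, is one possible design choice: it keeps a small vehicle inside a large space from being scored as a weak match.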

The YOLOv7 object detection architecture has become widely used in ITS solutions. The YOLO model architecture line has undergone continual development, resulting in 11 major releases [53]. Each version has sub-releases that are specifically designed to meet the requirements of various hardware and computing systems [54]. In real-time applications, such as vehicle detection and classification in parking management systems, YOLOv7 is preferable due to its well-validated performance in object detection [55]. Its architecture comprises the input, backbone, and head networks, as shown in Figure 4 [56].

The input network divides an image into a 7x7 grid, generating multiple bounding boxes per grid cell. This grid-based method eliminates the need for region proposals, which are required by two-stage detectors such as Faster R-CNN. Additionally, it preprocesses images to uniform dimensions to guarantee consistent data handling throughout the model. The CBS composite module, the Efficient Layer Aggregation Network (ELAN) module, and the MP module are the three primary modules that the backbone network employs to perform feature extraction. By halving the spatial dimensions of the feature map and doubling the number of channels, these modules enhance the network's capacity to represent intricate features. The backbone effectively scales these features to optimize precision without sacrificing efficiency. Output from the backbone is then used as the input of the head network. The head constructs three feature maps of varying scales. It employs 1x1 convolutions for the objectness, class prediction, and bounding box prediction tasks. The extended ELAN (E-ELAN) module and cardinality operations are integrated to improve feature accuracy and representation while optimizing parameter efficiency throughout the model [46, 54].
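The halve-and-double behaviour attributed to the backbone modules can be made concrete with simple shape arithmetic. The sketch below is only illustrative: the `downsample_shapes` helper, the 640x640 input size, and the starting channel count of 32 are assumptions chosen for readability and are not taken from the YOLOv7 configuration.

```python
def downsample_shapes(height, width, channels, stages):
    """Return the (H, W, C) feature-map shape after each downsampling stage.

    Each stage halves the spatial dimensions and doubles the channel count,
    which is the behaviour the text describes for the backbone modules.
    """
    shapes = []
    for _ in range(stages):
        height, width, channels = height // 2, width // 2, channels * 2
        shapes.append((height, width, channels))
    return shapes


# Hypothetical starting point: a 640x640 feature map with 32 channels.
for shape in downsample_shapes(640, 640, 32, 3):
    print(shape)
# (320, 320, 64)
# (160, 160, 128)
# (80, 80, 256)
```

The total number of activations halves at every stage (one quarter of the positions, twice the channels), which is one way to see how deeper layers trade spatial resolution for richer per-location features.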

3. Vehicle Profiling for Intelligent Systems

The capability to recognize alphanumeric characters and symbols on license plates is essential to profiling vehicles. For parking management systems, this enables the facility's smart infrastructure to obtain distinctive vehicle identifiers, which are crucial for security, invoicing, and tracking purposes [

8,

36]. In ITS research, License Plate Recognition is a critical and prominent implementation of machine vision and machine learning [

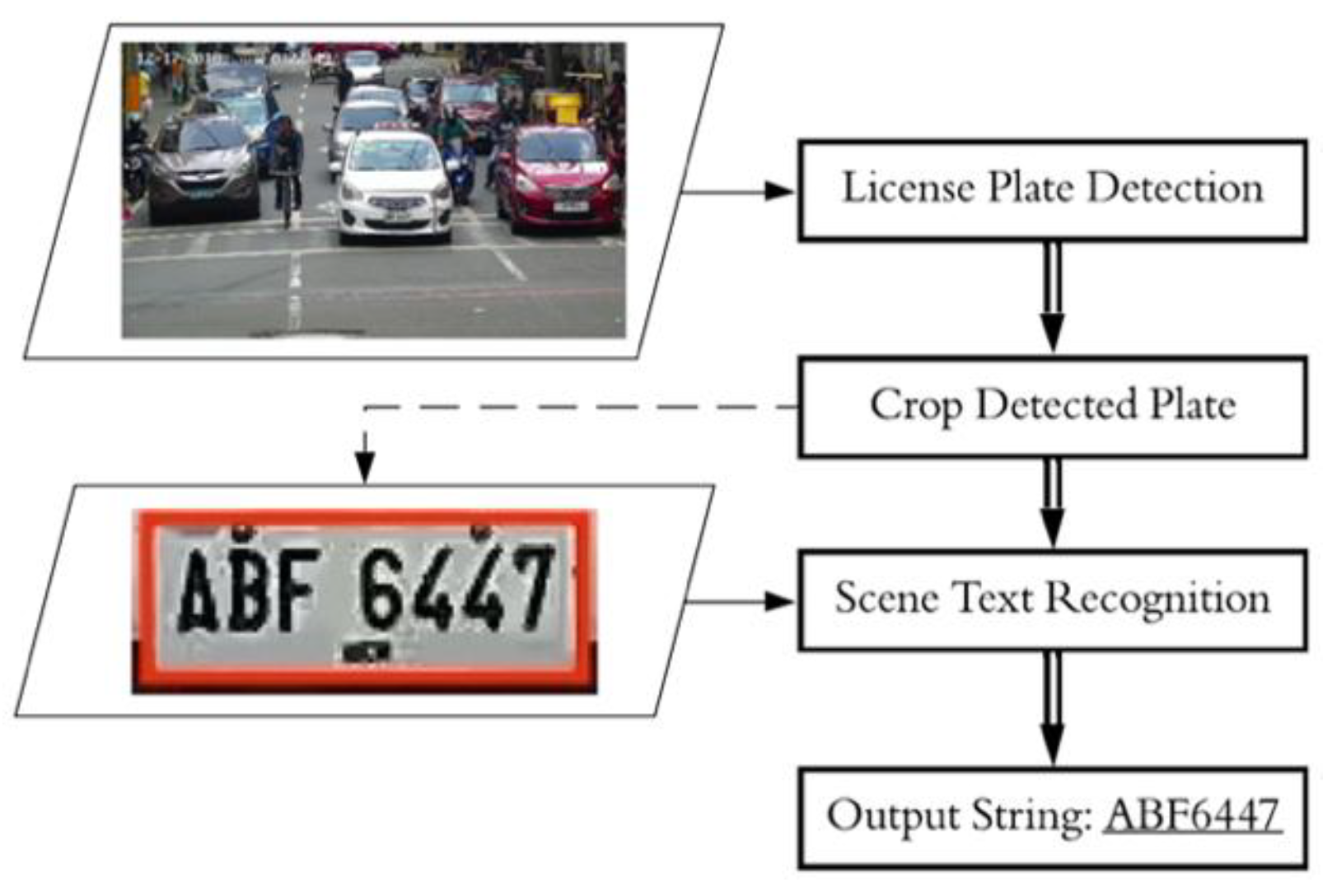

40]. The standard architecture of LPR systems, as referred to in

Figure 5, comprises four critical stages: (1) the detection of the license plate, (2) digital isolation of the detected license plate, (3) character recognition, and (4) generating the output string [

36].

LPR's initial phase is to detect license plates using machine vision techniques such as object detection and instance segmentation. Aside from classifying objects, these model architectures generate bounding box inferences around detected vehicle license plates. The next phase uses the license plate detection (LPD) inferences to determine which region of the image is retained and which is cropped out. The retained region is the license plate on which character recognition must be performed [36, 40]. During text recognition, the characters and symbols within the cropped license plate first undergo character segmentation. This procedure isolates letters and symbols as individual character objects so that the system performs character recognition on each segmented character within the license plate. Once character recognition has been performed, the expected output of an LPR system is a collection of characters and symbols assembled into a string. This string is the resulting profiled vehicle information of the LPR system [36, 40]. The variation in license plate designs across different countries is a significant factor in developing LPR systems. This necessitates the training of localized models to manage a variety of formats, symbols, and character types [57, 58].
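Two of the stages described above, isolating the plate region and assembling the output string, are simple enough to sketch directly. The example below is a hedged illustration only: `crop_plate` and `assemble_plate_string` are hypothetical helper names, the image is a stand-in 2D list rather than real pixel data, and the detection and recognition models themselves are assumed to exist elsewhere. The plate text is a placeholder and does not follow any particular national format.

```python
def crop_plate(image, box):
    """Keep only the detected plate region of an image.

    image: 2D list of pixel rows.
    box: (x1, y1, x2, y2) from a plate detector, end-exclusive.
    """
    x1, y1, x2, y2 = box
    return [row[x1:x2] for row in image[y1:y2]]


def assemble_plate_string(recognized_chars):
    """Order per-character results left to right and join them.

    recognized_chars: list of (x_position, character) pairs, in any order,
    as they might come out of character segmentation and recognition.
    """
    return "".join(ch for _, ch in sorted(recognized_chars))


frame = [[0] * 8 for _ in range(6)]        # stand-in 6x8 "image"
plate = crop_plate(frame, (2, 1, 6, 4))    # hypothetical detector output
print(len(plate), len(plate[0]))           # 3 4

# Hypothetical recognition results, deliberately out of order.
chars = [(30, "C"), (10, "A"), (50, "1"), (20, "B"), (60, "2"), (70, "3")]
print(assemble_plate_string(chars))        # ABC123
```

Sorting by horizontal position assumes a single-row plate; two-row plate layouts would need the characters grouped by row first.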

Image and video materials fed to LPR systems must be of high quality to ensure clear and accurate inferences for SPMS [59]. LPR systems must be tailored to the contextual requirements of the country where the system is deployed. For instance, Philippine license plates are visually distinct from those of other countries in their design, as shown in Figure 6 [57]. There are four major license plate types in the Philippines: the 1981 series, the 2003 series, the 2014 series, and placeholder license plates that are based on the conduction stickers of vehicles upon purchase. Image datasets for training these models must include Philippine-specific license plate images, with precise annotation and labeling, to guarantee the accuracy and reliability of LPR systems [57, 60].

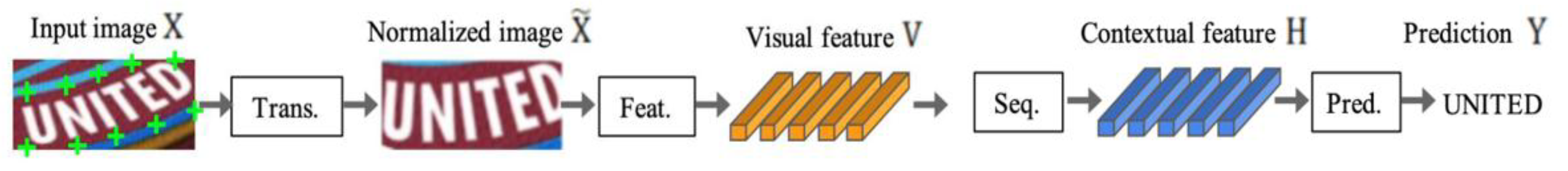

In commercial environments such as parking facilities, distorted images of vehicle license plates are often captured. These plates may be curved, tilted, or warped. Conventional optical character recognition (OCR) systems will not suffice as they only perform well on undistorted images. In parking facilities, captured plates are often affected by occlusions, sunlight, weather conditions, and other external factors. These conditions present substantial challenges for machine vision and machine learning models, leading to inaccurate character recognition inferences [59]. Noise distortions are frequently the result of suboptimal conditions that occur when acquiring images or videos [42, 61]. Alternative methods, such as scene text recognition (STR), have been devised to circumvent these limitations. STR provides a more resilient approach to text recognition by integrating sequence prediction and object detection architectures. STR models can accurately read text under noise-filled conditions by using convolutional neural networks (CNN) and recurrent neural networks. Deep Text Recognition (DTR), an STR architecture, extracts alphanumeric characters from objects found in noise-filled environments [62, 63]. DTR applies four sequential stages (transformation, feature extraction, sequence modeling, and prediction) to produce precise predictions in low-quality, high-noise scenarios, as shown in Figure 7 [62].

The transformation stage employs a thin-plate spline, a type of spatial transformation network, to normalize the input image through fiducial point-based alignment. This procedure ensures uniformity in character regions, irrespective of distortions or irregularities in the input, and improves the precision of subsequent stages by converting the image to a standardized format. A CNN extracts essential character-specific features from the image during the feature extraction stage while suppressing irrelevant features such as font, color, and background. This stage generates a feature map in which each column corresponds to a horizontal section of the image, enabling the precise identification of text features. The extracted features are subsequently reorganized into a sequential format during the sequence modeling stage, preserving contextual relationships between characters. This is accomplished by utilizing bidirectional long short-term memory (BiLSTM) networks, improving the model's capacity to capture the order of the sequence and the relationships between characters. The result is produced in the prediction stage by employing attention-based sequence prediction or Connectionist Temporal Classification (CTC). The attention mechanism dynamically concentrates on specific segments of the sequence to generate a more refined character output, whereas CTC predicts a character for each feature column and then collapses repeated characters and removes blanks to form the final string [62, 63].
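The CTC decoding rule mentioned above (merge consecutive repeats, then drop blanks) can be illustrated with a short greedy decoder. This is a minimal sketch that assumes the most likely symbol has already been chosen for each feature column; the `-` blank symbol, the function name, and the sample sequence are illustrative choices rather than details from the cited DTR implementation.

```python
from itertools import groupby

BLANK = "-"  # illustrative blank symbol; real models reserve a class index


def ctc_greedy_decode(column_predictions, blank=BLANK):
    """Collapse per-column predictions into a final string.

    column_predictions: one predicted symbol per feature-map column.
    Consecutive duplicates are merged first, then blanks are removed, so a
    blank between two identical symbols keeps them as separate characters.
    """
    collapsed = [symbol for symbol, _ in groupby(column_predictions)]
    return "".join(symbol for symbol in collapsed if symbol != blank)


# Thirteen columns of hypothetical per-column predictions.
print(ctc_greedy_decode(list("AA-BB--B-11-2")))  # ABB12
```

The order of the two steps matters: removing blanks before merging repeats would turn the two separate B characters in the example into one.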

4. Assessment of Global and Local (Philippines) ITS Research

Traffic control and parking management are the two primary ITS applications [

64]. The aim of traffic control research is to reduce congestion through the development and integration of intelligent systems that seek to optimize signal timing and regulate vehicle traffic flow [

8,

57]. In contrast, SPMS applications differ in system design and implementation. SPMS help optimize space utilization, monitor occupancy, and enable automated vehicle profiling during entry and exit in a parking facility [

16,

30].

Table 1 provides a comprehensive summary of relevant ITS literature. The ratio of traffic control studies to SPMS studies suggests that research efforts are skewed towards traffic control topics. In high-impact journals, traffic control topics were more abundant. In contrast, parking management studies were predominantly found in conference proceedings, potentially indicating that researchers prioritize traffic control topics for high-impact publications.

The Philippines is a late adopter of emergent technologies, frequently incorporating advancements only after they have matured in more developed countries. This delay is attributed to a lack of readiness to adopt new technologies due to insufficient human and material resources. Technology adoption challenges are evident in research outputs, which indicate a nation's readiness to implement, develop, and innovate new technologies [73]. The Philippines' current ITS research landscape is characterized by an imbalance, with substantially greater emphasis on traffic control than on optimizing parking facilities [16, 74]. Notably, significantly fewer studies address parking management. As in other countries, there is a preference for developing novel technologies for traffic control and monitoring applications. While this pursuit is not misguided, the consequences of neglecting parking management can become pronounced, as inefficient parking strategies in parking facilities directly contribute to traffic congestion on public roads [3, 37]. Optimizing vehicle flow and improving parking administration are both necessary for successfully mitigating traffic. Transport planners can potentially reduce the number of vehicles adding to traffic by creating facilities and spaces that can efficiently serve drivers who wish to park. Merely increasing the number of parking spaces is regarded as an unviable and unsustainable solution [3].

The literature review highlights a key finding: most ITS research is conducted outside the Philippines. Although developing ITS solutions is a global endeavor, not all international implementations can be transferred directly to other countries. Effective ITS implementation necessitates localized adaptations, which are achieved by fine-tuning model architectures using country-specific datasets. To optimize intelligent applications for local conditions, datasets must accurately reflect the unique road infrastructure, vehicle classifications, and license plate formats [

71,

75]. While it is possible to use pre-trained public models trained using international datasets, their ability to identify country-specific vehicle and plate characteristics remains uncertain. The scarcity of Philippine-based studies in comparison to global research further emphasizes the necessity of specialized, localized research, particularly in smart parking management systems [

57,

76].

5. Building Information Management and Digital Twins

DT research has been an emerging trend in recent years. CPS-based technology has gained prominence in various fields, especially in computer science and engineering applications that model built environments. Across several studies, DT models exhibit varying features and modeling complexities [

77,

78]. However, there are common baselines.

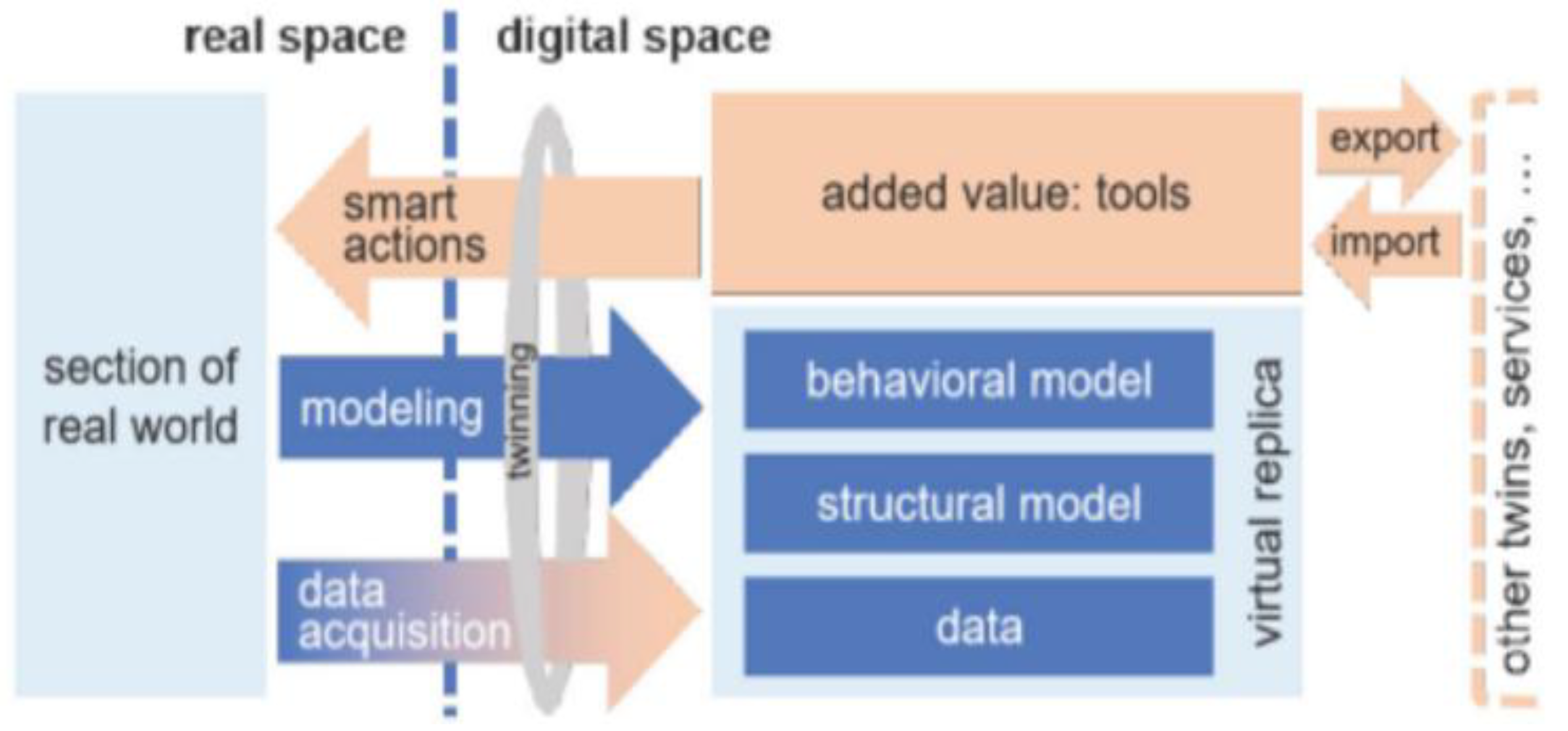

Figure 8 shows a framework for designing and developing digital twins [

78].

Figure 4. Digital twin design and development framework. DT serves as a virtual replica of a real-world system, with the physical and digital models synchronized through a continuous data flow. A "twinning mechanism" ensures that real-time updates are reflected between the digital model and the physical system [78].

A DT is a representation of a system that resides in the digital space and mirrors the physical system of interest in the real world. To produce a dynamic model of the physical system in the digital space, a twinning mechanism is needed to keep both spaces in sync with one another. The virtual twin commonly includes behavioral and structural dynamic CAD models. Analysis, forecasting, and optimization are made possible by constantly updating these models using information from the real world [78, 79].
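The idea of a twinning mechanism can be illustrated with a small state-synchronization sketch. It is a simplified assumption of how such a mechanism might behave, not the mechanism used in any cited work: the twin and the observed facility state are modeled as plain dictionaries keyed by hypothetical slot ids, and `diff_states` and `synchronize` are illustrative names.

```python
def diff_states(twin_state, observed_state):
    """Return the slots whose observed status differs from the twin's record."""
    return {
        slot: status
        for slot, status in observed_state.items()
        if twin_state.get(slot) != status
    }


def synchronize(twin_state, observed_state):
    """Apply only the changed slots to the twin and report what changed."""
    changes = diff_states(twin_state, observed_state)
    twin_state.update(changes)
    return changes


# Hypothetical digital state and a newer observation from the physical facility.
twin = {"A1": "vacant", "A2": "occupied", "A3": "vacant"}
observed = {"A1": "occupied", "A2": "occupied", "A3": "vacant"}

print(synchronize(twin, observed))  # {'A1': 'occupied'}
print(twin == observed)             # True
```

Pushing only the differences, rather than rewriting the whole model on every cycle, is one way to keep updates cheap when a visualization layer has to redraw each changed element.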

The four DT categories are as follows: (1) Component Digital Twins (C-DT), which model individual machine parts or sensors to improve their performance and maintenance; (2) Asset or Machine Digital Twins (AM-DT), which offer a digital representation of entire machines or equipment, allowing for improvements in operational efficiency and predictive maintenance; (3) System or Plant Digital Twins (SP-DT), which simulate a network of interconnected assets that are not limited to a factory production line; and (4) Enterprise-wide Digital Twins (EW-DT), which are high-level organizational models intended to provide end-to-end visibility into operational metrics, resource allocation, and overall business performance. EW-DTs combine many digital and physical processes, offering a thorough business intelligence framework for data-driven decision-making [

80].

A 3D BIM-based SPMS is an EW-DT based on functional design behavior. The BIM unifies several process metrics into a single, interactive platform, including vehicle movement analysis, occupancy tracking, and facility-wide performance indicators. The solution provides facility managers with a comprehensive operational picture through integrating data analytics with a 3D model. This enables managers to formulate and enforce policy compliance, simulate alternative management techniques, and maximize parking space use. Parking management practices have shifted from a passive monitoring approach to a more proactive decision-making framework due to this end-to-end visibility, thereby guaranteeing improved facility operations through efficient remote monitoring [

30,

79].

Designing, developing, and integrating EW-DT technology into SPMSs have been the subject of numerous research endeavors to resolve the inefficiencies of conventional parking strategies. Although numerous parking facilities already rely on a variety of hardware to report the availability of parking spaces, these systems often provide shallow data analytics [2, 81]. DT technology facilitates a better understanding of collected data. DT models reflect real-time events in parking facilities and provide a means of simulating a specific set of proposed actions based on calculated facility metrics. Comprehensive insights into traffic flow optimization, demand forecasting, and space utilization can be acquired. Facility managers can exploit automated data pipelines that provide a more comprehensive understanding of parking activity patterns to facilitate strategic decision-making and predictive analysis [44, 82].

DT models have the potential to enhance the user experience for drivers by customizing data presentations, enabling them to swiftly locate available spaces through displays. The operational efficiency of parking facilities and users' convenience are improved by integrating advanced analytics and smart infrastructure [29, 30]. The visual representation of a digital parking facility twin model is a critical design factor to consider. DT data dashboards based on key performance indicators (KPIs) lack the intuitive clarity that visual models can offer [78]. Facility managers may encounter difficulty interpreting data dashboards, particularly in high-pressure situations where prompt decision-making is essential to resolve problems. The likelihood of misinterpretation is elevated when only a single form of data representation is employed [81]. This risk of misinterpretation can be minimized by pursuing a balanced approach of creating DT models that incorporate both visual representations and data analytics. Data dashboards can be coupled with either a 2D or 3D model of the parking facility showing parking occupancy and the location of cruising vehicles in no-park zones. Such a representation provides a more complete picture of the parking facility's status. Facility managers can make more informed decisions and better contextualize the data by associating critical metrics with a visual 2D or 3D model [30, 79].

2D digital twin models that represent parking occupancy and facility layout provide a clear and concise overview of available parking spaces. This prevents users or facility administrators from being overwhelmed by excessive detail. For less complex parking systems, this clarity is especially advantageous [

29,

33]. In contrast, more intricate facilities necessitate a more extensive modeling approach. A 3D BIM approach circumvents the main constraint of 2D modeling, namely its tendency to oversimplify the geometrical and contextual features of an environment or space [

30]. During the modeling process, these 3D models accurately replicate the structure and environment of the parking facility by capturing intricate details with scanning technologies, such as laser scanning and LiDAR, that produce point cloud data [

30,

83]. The BIM workflow employs these generated 3D models stored within BIM software that utilizes a programmable twinning mechanism [

84]. This mechanism integrates information with the data processed within the SPMS's backend data warehouse. It updates the model regularly to account for any new developments [

30,

85]. Facility administrators can improve their comprehension of operations and devise more effective management strategies by visualizing the layout, real-time parking events, and traffic patterns through a dynamic 3D digital twin, supplemented by the key performance indicators in their data dashboards [

79].
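The twinning update described above can be illustrated with a minimal Python sketch. It assumes a hypothetical SQLite table named `slot_status` with `slot_id` and `occupied` columns (the actual SPMS schema is not reproduced here) and derives, for each parking space, the boolean visibility flag that a 3D model could consume. The function names are illustrative only and are not taken from the system's code.

```python
import sqlite3


def load_occupancy(conn):
    """Return {slot_id: occupied flag} from the hypothetical slot_status table."""
    rows = conn.execute("SELECT slot_id, occupied FROM slot_status ORDER BY slot_id")
    return {slot_id: bool(occupied) for slot_id, occupied in rows}


def diff_states(previous, current):
    """Return only the slots whose flag changed, so model updates stay minimal."""
    return {slot: flag for slot, flag in current.items() if previous.get(slot) != flag}


# In-memory stand-in for the backend data warehouse (assumed schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE slot_status (slot_id INTEGER PRIMARY KEY, occupied INTEGER)")
conn.executemany("INSERT INTO slot_status VALUES (?, ?)", [(1, 0), (2, 1), (3, 0)])

before = load_occupancy(conn)
conn.execute("UPDATE slot_status SET occupied = 1 WHERE slot_id = 3")  # a car parks in slot 3
after = load_occupancy(conn)

# Only slot 3 changed, so only that parking space needs to be redrawn.
changes = diff_states(before, after)
print(changes)
```

Pushing only the changed slots to the model, rather than redrawing every space on each cycle, is one plausible way to keep regular updates lightweight.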

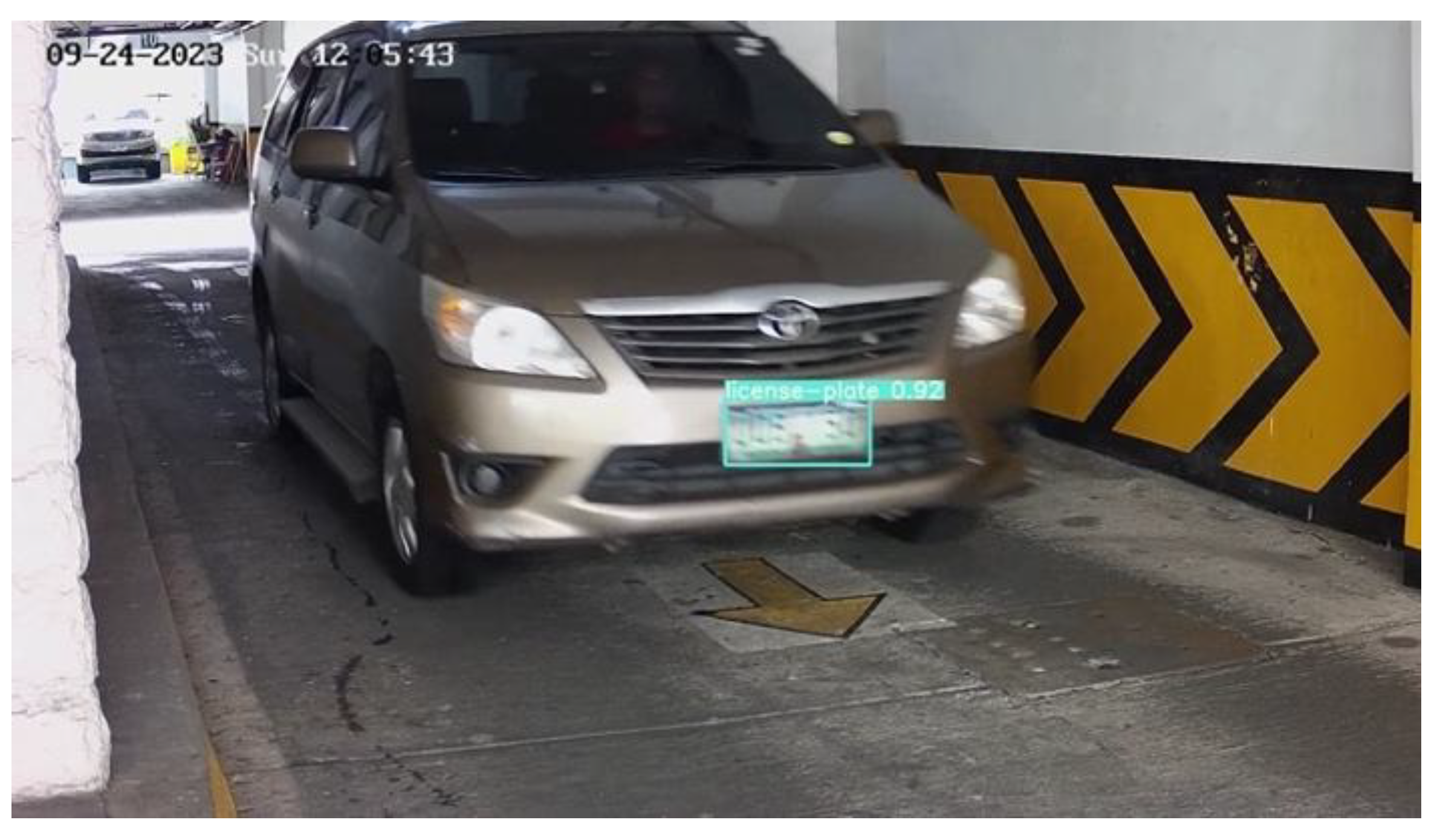

9. Design and Implementation Challenges for the System

The feature systems developed in this study are fully functional and achieve their intended design. In conjunction with the SM and DTM, the digital twinning mechanism effectively replicates dynamic changes from the video broadcast on the 3D BIM DT model and the data dashboard. Nevertheless, errors in the data persist even though the I2M system executes the relevant algorithms and conducts its intended intercommunication tasks with other modules. Two primary factors are responsible for these discrepancies: hardware limitations and the misalignment between current facility operations and the optimal conditions necessary for AI-driven operational automation. During the design, development, and testing of the proposed smart parking management system, these factors gave rise to three primary issues: (1) low-FPS hardware combined with barrierless vehicle profiling, which allowed vehicles to pass without stopping and prevented the cameras from capturing clear license plate images; (2) camera placement that exposed the system to intense sunlight glare, making license plates unreadable; and (3) inaccurate LPR inference caused by passing people obstructing vehicle license plates, which affected the POD algorithms. All of these affect the performance and reliability of the system, in addition to the challenges the system faces in scalability and commercialization.

9.1. Low Camera FPS and Barrierless Vehicle Profiling at Entrance and Exit Driveways

A data disparity was revealed by the facility's occupancy measurements, which showed that over 30 vehicles were using a parking lot that could only accommodate 30 vehicles. As a consequence, reported parking occupancy rates exceeded 100%. As mentioned in previous sections, the erroneous data presented in the DTM data dashboard stem from limitations in the system's current hardware configuration, along with the absence of dedicated infrastructure equipment designed to maximize the value of the newly designed LPR parking entry and exit monitoring feature of the smart parking management system. The camera's 30 fps capture rate restricts its ability to capture sharp images of fast-moving vehicles, resulting in motion blur that renders consistent LPR-based vehicle profiling nearly impossible, especially when vehicles enter and exit the parking facility at high speeds (

Figure 39). This limitation poses challenges for the system, which relies on precise LPR readings to accurately log vehicle entries and exits.

The system could overcome some hardware constraints by adding gate barriers that compel vehicles to pause momentarily. For instance, setting gate barriers at entry and exit points would minimize motion distortion and enable more accurate vehicle profiling regardless of the camera's frame rate. There are currently no gate barriers in the facility, so vehicles leave without stopping for a clear capture, resulting in incomplete or unsuccessful LPR readings. This can inflate occupancy statistics and increase errors in real-time occupancy data. Alternatively, purchasing cameras with a higher frame rate could improve the quality of the captured images of fast-moving vehicles. However, the system design and ongoing maintenance costs would rise if the cameras were upgraded to models with better technical specifications. Installing barriers and speed bumps may be more economical. They are generally cheaper and offer a practical way to compel drivers to slow down during entry and exit, enabling the system to capture clear, unblurred license plates and obtain precise LPR readings.
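The effect of missed exit readings on occupancy figures can be illustrated with a short Python sketch. It assumes, for illustration only, that occupancy is computed as logged entries minus logged exits; the function name, event format, and sample counts are hypothetical and are not taken from the system's code.

```python
def occupancy_rate(events, capacity):
    """Compute occupancy (%) as logged entries minus logged exits over capacity."""
    entries = sum(1 for kind, logged in events if kind == "entry" and logged)
    exits = sum(1 for kind, logged in events if kind == "exit" and logged)
    return 100.0 * (entries - exits) / capacity


CAPACITY = 30  # the lot in this study accommodates 30 vehicles

# Each event is (kind, logged); logged=False models a blurred, unreadable plate.
# Made-up ground truth: 40 vehicles entered and 12 left, so 28 are parked.
all_read = [("entry", True)] * 40 + [("exit", True)] * 12
missed_exits = [("entry", True)] * 40 + [("exit", True)] * 7 + [("exit", False)] * 5

print(occupancy_rate(all_read, CAPACITY))      # below 100 %
print(occupancy_rate(missed_exits, CAPACITY))  # above 100 %, an impossible reading
```

In this toy case, five unlogged exits are enough to push the computed rate past full capacity, which mirrors the kind of over-100% reading observed on the dashboard.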

9.2. Sunlight Glare and Camera Placement for LPR-based POD Algorithm Feature

On the ground floor of the building complex, the parking facility monitored by the LPR-based POD system is exposed to natural sunlight. During the daytime and in fair weather, the surfaces of license plates reflect this intense sunlight and produce glare. Because of the optical occlusions induced by such glare, the I2M's trained DTR model has trouble performing LPR readings reliably. Examples of situations where the system's performance is impacted by severe sunlight are shown in

Figure 38.

9.3. Inaccuracy of Output LPR-Based POD Data due to License Plate Occlusions

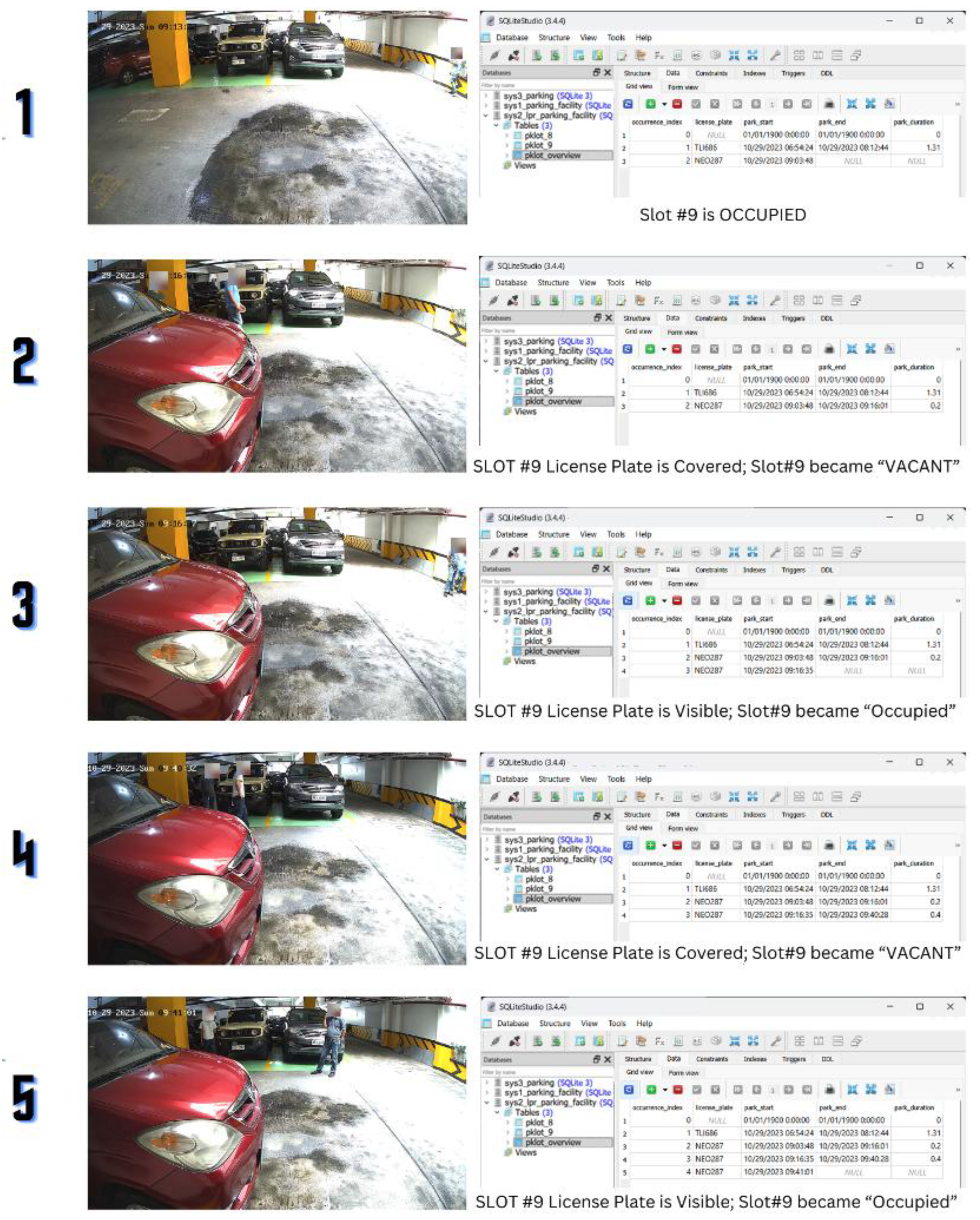

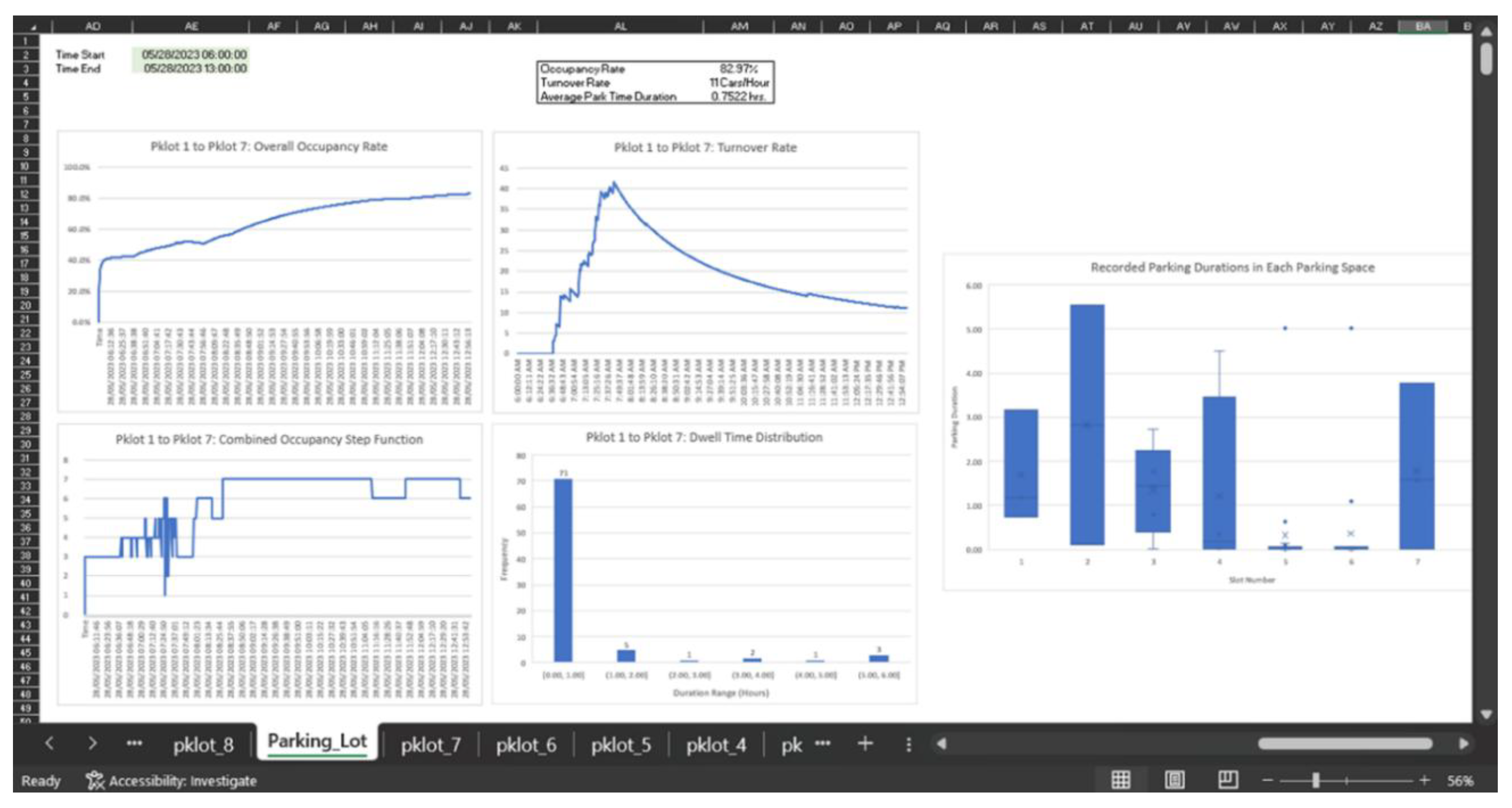

There are instances in the data dashboard where parking occupancy data suggest that several parking space turnovers occurred within short periods. These appear as rapid consecutive turnovers in the turnover graph and as short-lived occupancy durations in the step function graph for individual parking slots. This is especially true of processed data from the LPR-based POD feature system. When the system designers cross-checked the raw video footage, they discovered that although only one vehicle had occupied the parking space for an extended period, the same vehicle was recorded as entering and exiting the parking space multiple times in succession. An account of the events is shown in

Figure 39.

Figure 39.

Visual Representation of Events with Accompanying Database View for Parking Spaces exhibiting Rapid Turnover with Short-lived Parking Occupancy Durations.

The investigation revealed that pedestrians briefly block license plates while walking past cars, leading to falsely recorded turnovers. When a passing pedestrian momentarily obscures the license plate, the LPR-based POD system incorrectly interprets this as the vehicle leaving. Once the person moves on and the license plate becomes visible again, the system records the same vehicle as reentering the space.

A more reliable way to address this problem would be to switch from an occupancy model that relies on LPR to one based on vehicle detection. Occupancy detection becomes more reliable because, unlike a license plate, the vehicle body remains largely visible even when a person walks in front of it. In this alternative method, vehicle detection would verify that a parking space is occupied. License plate recognition would be a conditional process that is only used to profile a parked vehicle when it is initially detected in a new parking space. This system adjustment could preserve the LPR function for precise vehicle profiling while removing mistakes caused by fleeting license plate occlusions.
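The proposed adjustment can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the system's implementation: `SlotTracker` and `fake_lpr` are hypothetical names, each frame is reduced to a single boolean indicating whether a vehicle was detected in the space, and the plate string is made up.

```python
class SlotTracker:
    """Track one parking space by vehicle presence; run LPR only to profile it."""

    def __init__(self, read_plate):
        self.read_plate = read_plate  # callable returning a plate string or None
        self.occupied = False
        self.plate = None
        self.turnovers = 0

    def update(self, vehicle_detected):
        if not vehicle_detected:
            if self.occupied:
                # The vehicle itself is gone: count one turnover, clear the profile.
                self.occupied, self.plate = False, None
                self.turnovers += 1
            return
        self.occupied = True
        if self.plate is None:
            # LPR is attempted only until the parked vehicle has been profiled.
            self.plate = self.read_plate()


# The plate is readable on the first frame, then hidden by a pedestrian.
plate_reads = iter(["ABC1234", None, None, "ABC1234"])
lpr_calls = []


def fake_lpr():
    lpr_calls.append(1)
    return next(plate_reads)


tracker = SlotTracker(fake_lpr)
for vehicle_visible in [True, True, True, True]:  # the car never leaves
    tracker.update(vehicle_visible)

print(tracker.occupied, tracker.plate, tracker.turnovers, len(lpr_calls))
```

Because occupancy depends only on the vehicle detection flag, the frames in which the plate is hidden do not register as a departure, and LPR is invoked once rather than on every frame.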

The placement of the camera is another problem associated with occlusion. Due to the low camera positioning, the system may lose track of occupancy if pedestrians or passing cars block the view of parked cars. Even if the system were to switch to a vehicle detection-based POD algorithm, passing people and vehicles would still cause errors whenever the view of the parked car is obscured. Hence, optimal camera placement is necessary to minimize these mistakes. To ensure an unhindered field of view and reduce the possibility of the view being blocked by passing objects or people, cameras should be installed at higher elevations near the ceiling. A common situation where low camera placement results in object occlusion, with a parked car either partially or fully vanishing from the camera's field of view, is shown in

Figure 40 below.

9.4. System Scalability Challenges

The 3D BIM model cannot comprehensively monitor all vehicle activity and parking occupancy changes due to limited camera coverage and insufficient high-performance computational resources. This study has presented a framework for integrating a BIM model into a smart parking management system, demonstrating the feasibility of incorporating digital twinning technology in smart parking facilities through a proof-of-concept prototype. As observed, its performance and reliability are limited to regulated settings where the actions of vehicles, drivers, and pedestrians are predictable. In practical deployment settings, uncontrolled variables introduce noise into the system's data stream, diminishing accuracy and complicating efforts to scale. Commercial parking facilities introduce additional complexities that necessitate specific design considerations beyond those applicable to controlled environments. Addressing scalability challenges is imperative, as such systems aim to increase business value.

Commercial parking facilities differ from controlled environments due to their design and complicated management operations, including but not limited to multiple entry and exit points, varied parking orientations, and multi-level structures. The variability in foot traffic, inconsistent vehicle movements, facility-specific operational policies, and diverse customer behaviors complicate understanding how the system should work. Moreover, technical challenges such as variable vehicle flows, physical obstructions, inconsistent lighting conditions, and regulatory constraints impede the accuracy of system modeling. Improving monitoring capabilities necessitates a comprehensive camera network throughout the facility and a strategic placement method to enhance visibility and inference accuracy in the presence of pedestrian and vehicle occlusion interferences. Optimal camera placement is crucial for achieving high-quality visual inputs in machine vision models that facilitate object detection and tracking. In addition to hardware factors, computational optimizations significantly enhance system responsiveness and reliability.

Integrating multiprocessing techniques in intelligent systems improves real-time inference by allowing simultaneous processing of multiple camera streams and image-based occupancy detection tasks. Conventional sequential processing techniques frequently result in latency during vehicle movement analysis, causing delays in decision-making. Multiprocessing enhances computational efficiency by distributing tasks across multiple CPU cores, significantly decreasing processing time for vehicle detection, license plate recognition, and space occupancy assessment. This is advantageous in commercial parking settings, where extensive monitoring requires ongoing data collection from various sources. Multiprocessing enhances load balancing across different image-processing tasks, ensuring optimal computational resource utilization. The system achieves real-time responsiveness under high-demand conditions by concurrently executing occupancy detection, vehicle tracking, and anomaly identification. Process synchronization and data-sharing protocols mitigate errors in concurrent execution while ensuring consistency in identified occupancy states. Multiprocessing offers practical advantages such as enhanced system scalability, accommodating growing data volumes through additional processing cores, and improved operational efficiency by reducing inference delays [

88].
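The idea of distributing per-camera work across CPU cores can be sketched with Python's standard `concurrent.futures` module. The example below is a simplified illustration rather than the system's implementation: `count_occupied` is a hypothetical stand-in for per-frame inference, and each "frame" is just a list of 0/1 slot flags so that the sketch needs no image or model dependencies.

```python
from concurrent.futures import ProcessPoolExecutor


def count_occupied(frame):
    """Stand-in for per-frame inference: count slots flagged as occupied.

    A real worker would run a detector on image data; here a frame is a
    list of 0/1 slot flags so the example stays dependency-free.
    """
    return sum(frame)


def process_sequential(frames):
    """Baseline: handle each camera frame one after another."""
    return [count_occupied(frame) for frame in frames]


def process_streams(frames, max_workers=None):
    """Handle one frame per camera stream in parallel worker processes."""
    with ProcessPoolExecutor(max_workers=max_workers) as pool:
        # map() preserves input order, so results line up with camera indices.
        return list(pool.map(count_occupied, frames))
```

When run as a script, `process_streams` should be called from inside an `if __name__ == "__main__":` guard, since worker processes may re-import the main module on some platforms. For a workload this small, process start-up overhead outweighs any gain; the benefit is expected only when per-frame inference is computationally heavy.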

In addition to computational optimizations, system reliability is contingent upon addressing environmental factors that influence model performance. Lighting inconsistencies can be mitigated through the installation of supplementary lighting to achieve uniform illumination, the augmentation of datasets with diverse lighting conditions, and the application of image processing techniques to improve visibility before inferencing [

35]. These optimizations enhance the DT's capacity for accurate occupancy monitoring and facilitate informed decision-making and management operations in practical applications.
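As one illustration of image processing applied before inference, the sketch below brightens an underexposed grayscale frame with gamma correction. Gamma correction is chosen here only as an example technique and is an assumption; the text above does not specify which preprocessing method is used. The function names and pixel values are hypothetical.

```python
def gamma_lut(gamma):
    """Build a 256-entry lookup table; gamma < 1 brightens, gamma > 1 darkens."""
    return [round(255 * (level / 255) ** gamma) for level in range(256)]


def apply_gamma(image, gamma):
    """Apply gamma correction to an 8-bit grayscale image given as nested lists."""
    lut = gamma_lut(gamma)
    return [[lut[pixel] for pixel in row] for row in image]


dark_frame = [[0, 16, 64], [32, 128, 255]]  # made-up pixel values
brightened = apply_gamma(dark_frame, gamma=0.5)
print(brightened)
```

Black and white endpoints are preserved while mid-tones are lifted, which is the behavior that makes this kind of correction a candidate for dim or unevenly lit scenes.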

10. Cost-Benefit Analysis

The feasibility of adopting smart parking management systems must be evaluated beyond their development expenses. For potential adopters, this involves assessing whether deployment in commercial environments would function as a revenue-generating asset or a financial liability. A cost-benefit analysis can offer significant insight by comparing the advantages and disadvantages of the developed system with a conventional, non-technological option.

The upfront investment cost for system development, as

Table 5 has previously outlined, amounts to PHP 202,058.93 (USD 3,477.78). Furthermore, in 2024, Meralco, which is the largest private electric distribution utility in the Philippines, imposed an average fee of PHP 11.4377 per kWh (USD 0.20 per kWh) for electric consumption cost [

89].

Table 16 delineates the monthly operational expenses incurred in the system's operation.

The investment cost for a traditional setup lacking smart parking management hardware upgrades is reduced by eliminating two monitors, as dedicated displays for the 3D digital twin and data dashboard are not required. A single monitor suffices for the observation of surveillance footage. Furthermore, expenses associated with an external hard drive, a laptop, a high-end smartphone equipped with LiDAR scanning capabilities, and a subscription for a LiDAR scanning application are removed, as these devices are solely necessary for integrating smart technology. Essential hardware components, including security cameras, an NVR, and lighting fixtures, are required for surveillance, irrespective of machine vision implementation.

Table 17 presents the alternative investment costs for a traditional system, amounting to only PHP 30,545.90 (USD 525.73).

Foregoing a smart parking management infrastructure leads to reduced electricity consumption costs, as fewer monitors are necessary for operation. Without automated tools for vehicle entry and exit management, station cashiers are required to facilitate driver interactions and process payments. A smart parking system facilitates self-payment kiosks or cashless transactions, resulting in a fully autonomous and contactless experience. The employment of cashiers incurs supplementary labor expenses, with the average monthly salary for a cashier in the Philippines estimated at PHP 18,122.42 (USD 311.92), as the Economic Research Institute reported [

90].

Table 18 presents a detailed analysis of monthly expenses, considering the employment of two cashiers.

Simulations of the smart parking management system during operational hours over a day reveal a total gross profit of PHP 1,550.00 (see Table XVI). With an assumption of 30 business days per month, the monthly profit totals PHP 46,500.00, resulting in an annual profit of PHP 558,000. The return on investment (ROI) is defined by the breakeven point, indicating the duration necessary for the system to recover its initial investment through accumulated profits. The breakeven period is determined using Equation (22).

Upon computation, the expected breakeven period of an SPMS-equipped parking facility is 4.73 months, while a facility with none of these upgrades has a breakeven period of 4.55 months. Both options therefore break even in almost 5 months. Although the breakeven periods are nearly identical, the SPMS leads to significantly lower monthly expenses, so its financial benefit becomes evident in the long run through sustained cost savings. The monthly cost savings can be computed using Equation (23), while Equation (24) details the yearly cost savings.

Analysis indicates that implementing a smart parking management system reduces manual labor expenses, leading to significant financial advantages. The monthly and annual cost savings are detailed in

Table 19 and

Table 20, respectively. Calculations show that monthly costs can be reduced by ₱35,997.7 (USD 619.58), resulting in annual cost savings of ₱431,973.36 (USD 7,435.00).

The initial investment of ₱202,058.93 can be recovered in approximately 5.6 months. This figure is the ratio of the total investment cost to the monthly cost savings. Exceeding the breakeven point results in sustained cost reductions, improved profitability, and potentially better operational system efficiency. The findings highlight the compelling financial rationale for potential adopters to pursue building smart parking systems, which facilitate ongoing savings and enhanced service quality over time.
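The recovery period stated above can be checked with a few lines of Python using the figures reported in this section. One assumption is made and labeled in the code: the monthly savings are taken as the reported annual savings divided by 12, which agrees with the reported monthly figure up to rounding. Variable names are illustrative.

```python
INVESTMENT_PHP = 202_058.93      # upfront SPMS investment reported above
ANNUAL_SAVINGS_PHP = 431_973.36  # annual cost savings reported above

# Assumption: monthly savings = annual savings / 12, matching the reported
# monthly figure up to rounding.
monthly_savings = ANNUAL_SAVINGS_PHP / 12

# Recovery period = total investment cost / monthly cost savings.
payback_months = INVESTMENT_PHP / monthly_savings

print(f"Monthly savings: PHP {monthly_savings:,.2f}")
print(f"Recovery period: {payback_months:.1f} months")
```

The result is consistent with the approximately 5.6 months stated in the text.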

11. Conclusion and Recommendations

This study presented a smart parking management system development framework that used machine vision, machine learning, and digital twinning to dynamically model vehicle parking activity within a parking facility. Using YOLOv7 for vehicle detection and LPD, and DTR for LPR, the system demonstrated reliable modeling performance under varying situations, showing the promise of digital twins in merging facility surveillance with modern data analytics. The enterprise-wide 3D digital twin BIM model developed in Autodesk Revit offers a visually intuitive, data-driven viewing interface that informs users of parking activity in each parking facility. Because the model is in 3D, viewers need not mentally translate 2D information into a 3D spatial understanding. The DT model is geometrically similar to the built environment, allowing information to be understood intuitively. This can improve the decision-making processes of parking facility managers and serve as a baseline model for comparable deployments in future smart city initiatives involving intelligent systems and parking management. A summary of the key performance metrics of the developed system and its capabilities is provided in

Table 21.

A critical insight for deploying new technologies in operational settings is the need for reciprocal flexibility between the technology introduced and its operating environment. Integrating new technologies should not disrupt existing workflows or compromise the current level of operational stability and efficiency. At the same time, facilities should be flexible enough to adjust their processes so that newly integrated technologies can function optimally. To maximize the added value of SPMSs, it is critical to create conditions conducive to reliable outputs, such as even ambient lighting for LPR and camera locations that minimize occlusion. Adjustments such as building speed bumps or installing gate barriers to make vehicles stop for a clear license plate capture improve the precision of calculated system metrics and enable a smooth integration without jeopardizing present operations.

Alternative digital twinning platforms such as Unity, which may provide more flexibility and long-term system support, should be investigated in future studies. Unity is an attractive alternative platform for 3D BIM environment models because of its backward compatibility. Unlike Autodesk software, for which developer support for older versions is eventually dropped, Unity Engine is expected to retain continuous developer support, reducing the backward compatibility issues that BIM developers may face in the future. Furthermore, expanding 3D BIM modeling beyond parking management to other facility and infrastructure domains, such as building energy management, manufacturing process optimization, centralized airflow systems, and predictive maintenance, could demonstrate BIM technology's versatility and scalability in various applications. Integrating BIM into these areas increases the ability to maintain optimum conditions for facility operations, giving meaningful insights and improving decision-making in various settings. To increase system robustness in various settings, better object detection and scene text recognition models can be explored. Incorporating more powerful computing resources, such as edge computing devices and cloud computing, can also help maintain system health and avoid performance throttling, so that the parking management system remains dependable and expandable as it develops.

Figure 1.

A smart parking management system uses sensors and equipment to collect vehicle activity data, which is processed and stored on a centralized server. This data is then made accessible to client devices, such as mobile apps or display systems [

31].

Figure 2.

This method focuses on identifying parking space status rather than detecting individual vehicles. It classifies spaces as either "Occupied" or "Vacant" using images for inference. The dataset utilized for this approach is the PKLot online dataset [

49].

Figure 3.

Dual object detection implementation for parking space occupancy determination. (a) The pixel coordinate locations of parking spaces were determined using parking space object detection. (b) The presence of vehicles was subsequently detected through vehicle detection. (c) The parking space is deemed occupied if the algorithm determines that the detected vehicles are within the parking space. Otherwise, the parking space is deemed vacant [

50].

Figure 4.

YOLOv7 Architecture. The architecture has three elements: The Input, the Backbone Network, and the Head Network [

56].

Figure 5.

LPR architecture. There are four phases in an LPR system: (1) license plate detection, (2) the digital cropping and isolation of the license plate, (3) character recognition, and (4) concatenating together extracted alphanumeric text into a string for the LPR reading [

36].

Figure 6.

Philippine License Plates. These are the four variations of Philippine license plates for cars. (a-c) The first three are standard-issue license plates of the Philippine Land Transportation Office (LTO). (d) The last is a temporary license plate based on the vehicle's conduction sticker [

57].

Figure 7.

Deep text recognition architecture. There are four stages that the input image undergoes: Transformation, Feature Extraction, Sequential Modeling, and Prediction [

62].

Figure 9.

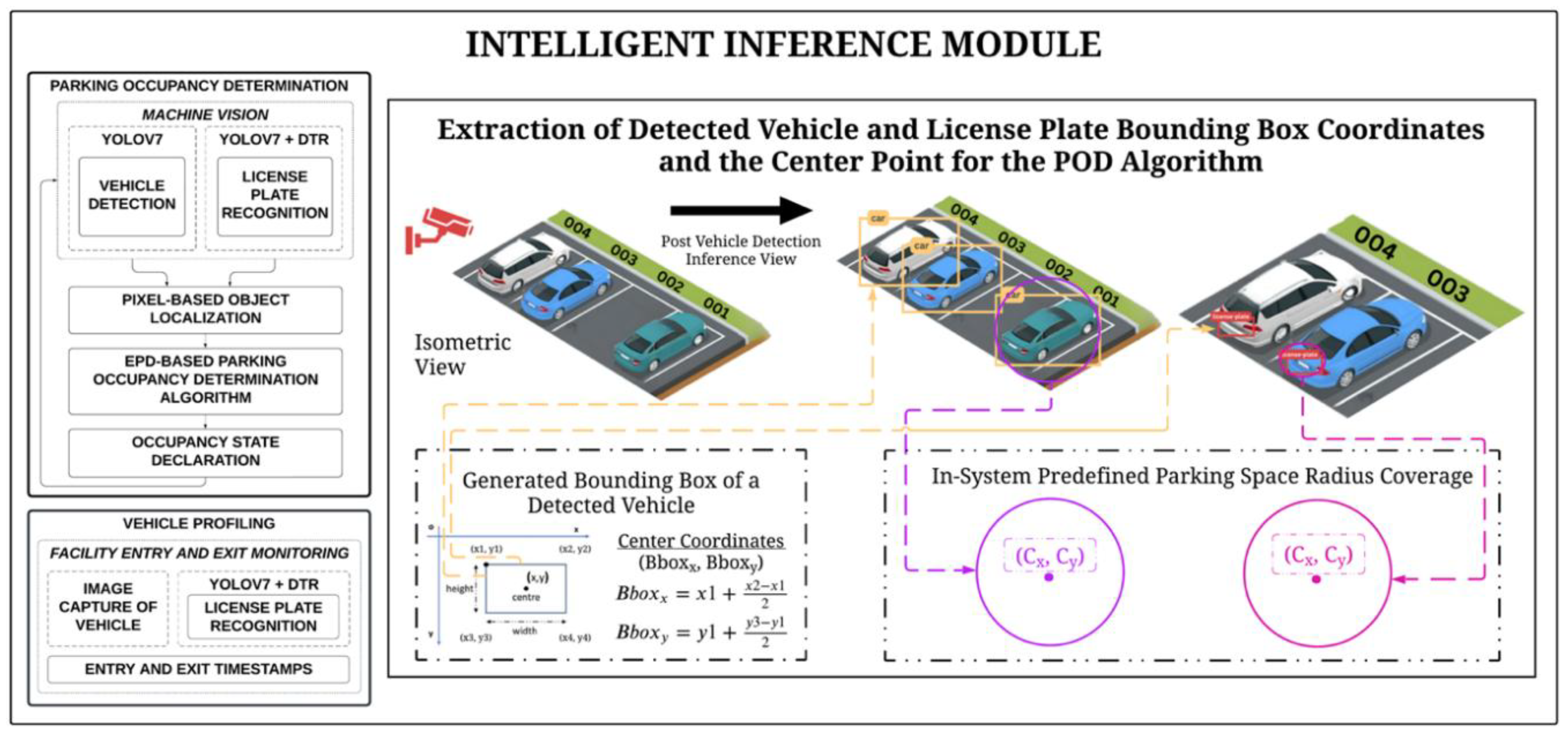

Intelligent Inference Module of the Smart Parking Management System. The module has two primary functions: (1) parking occupancy determination using vehicle detection or license plate recognition; and (2) vehicle profiling for facility entry and exit monitoring.

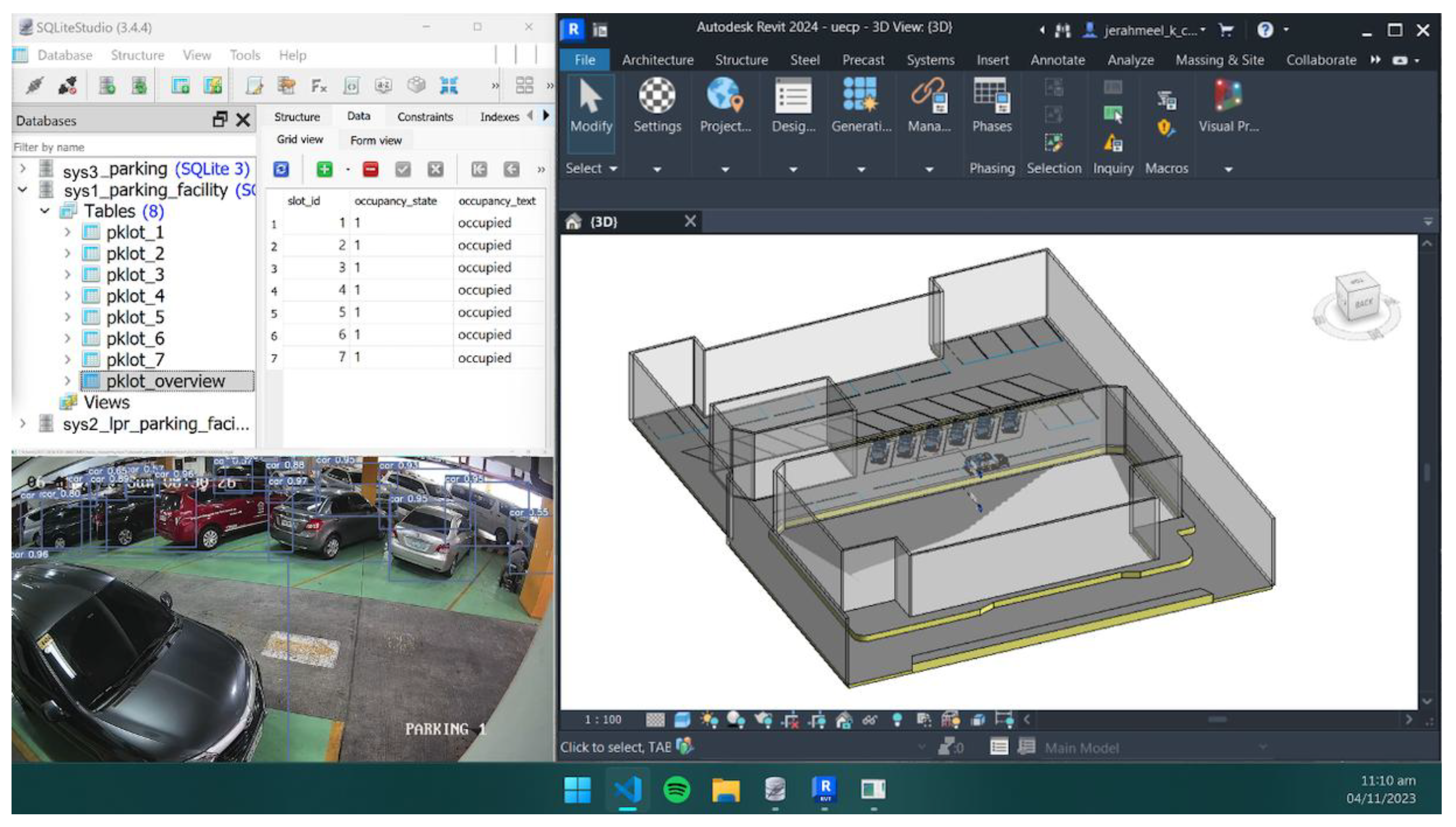

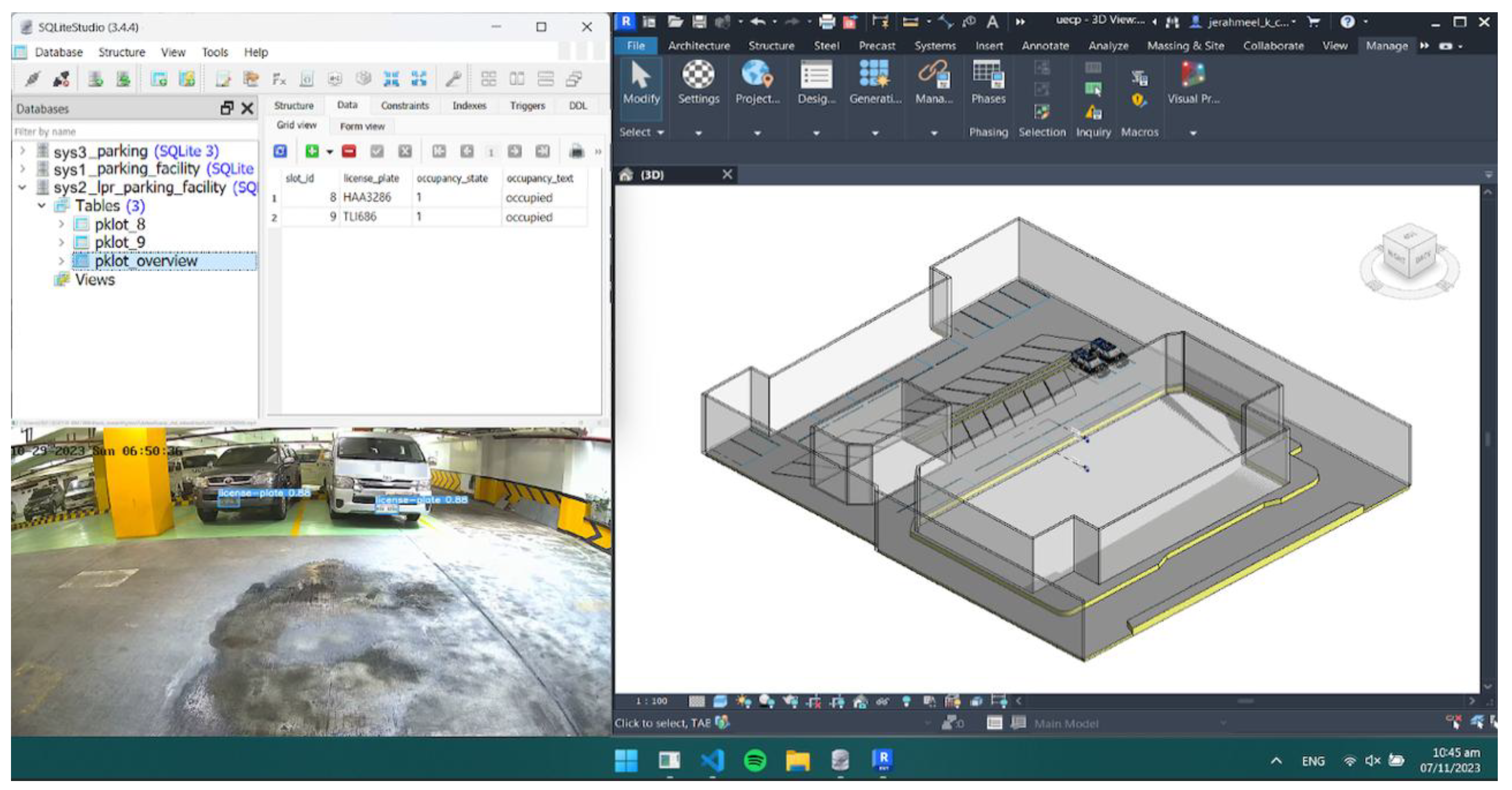

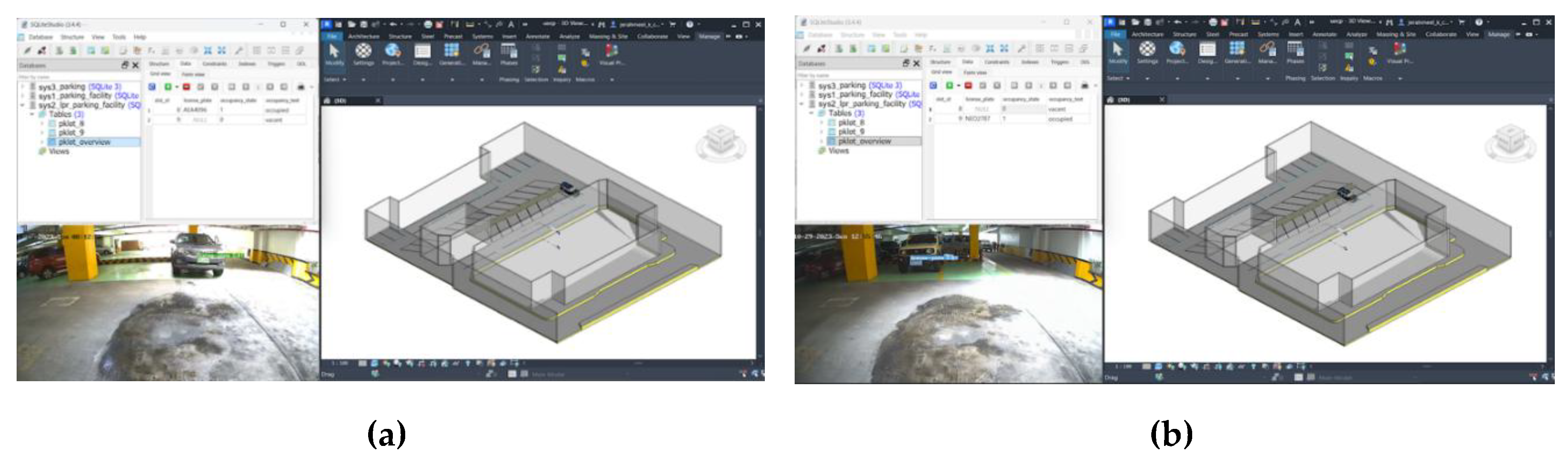

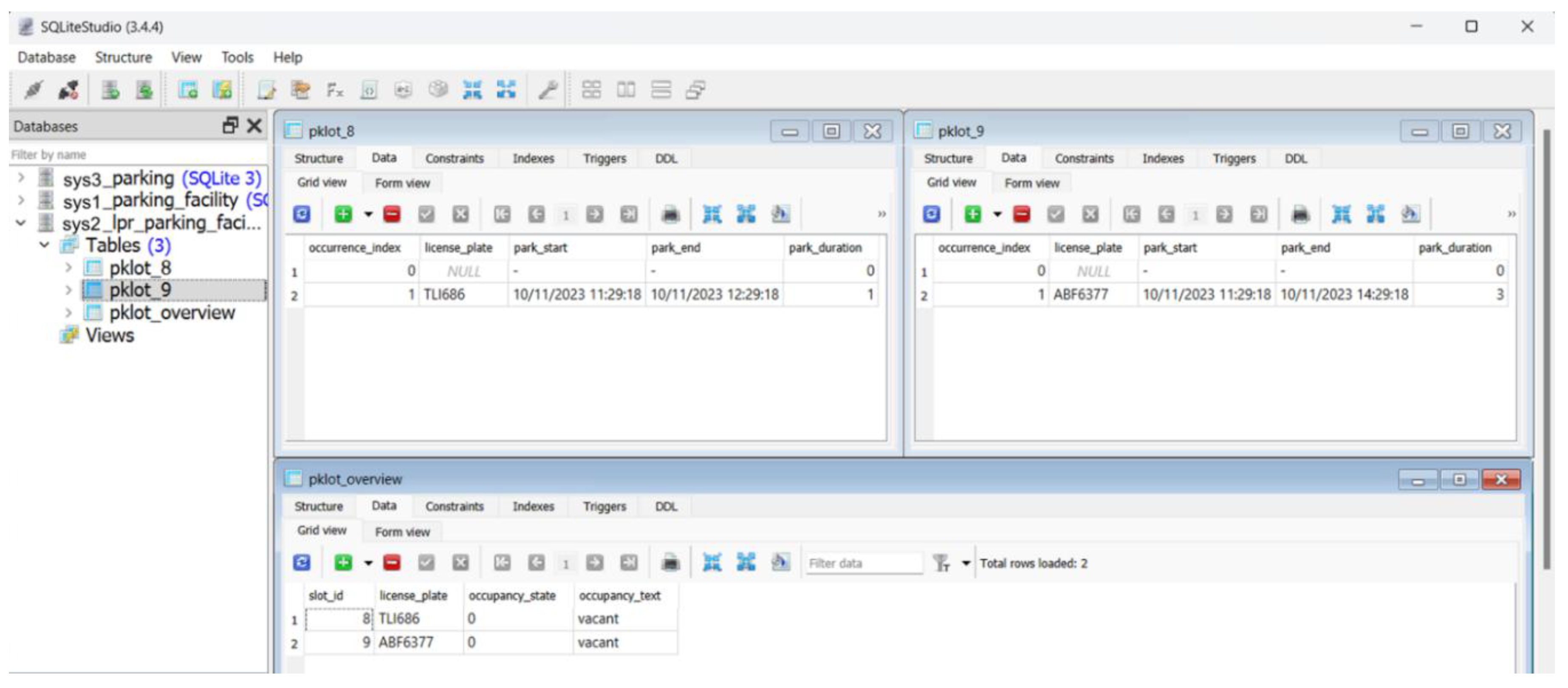

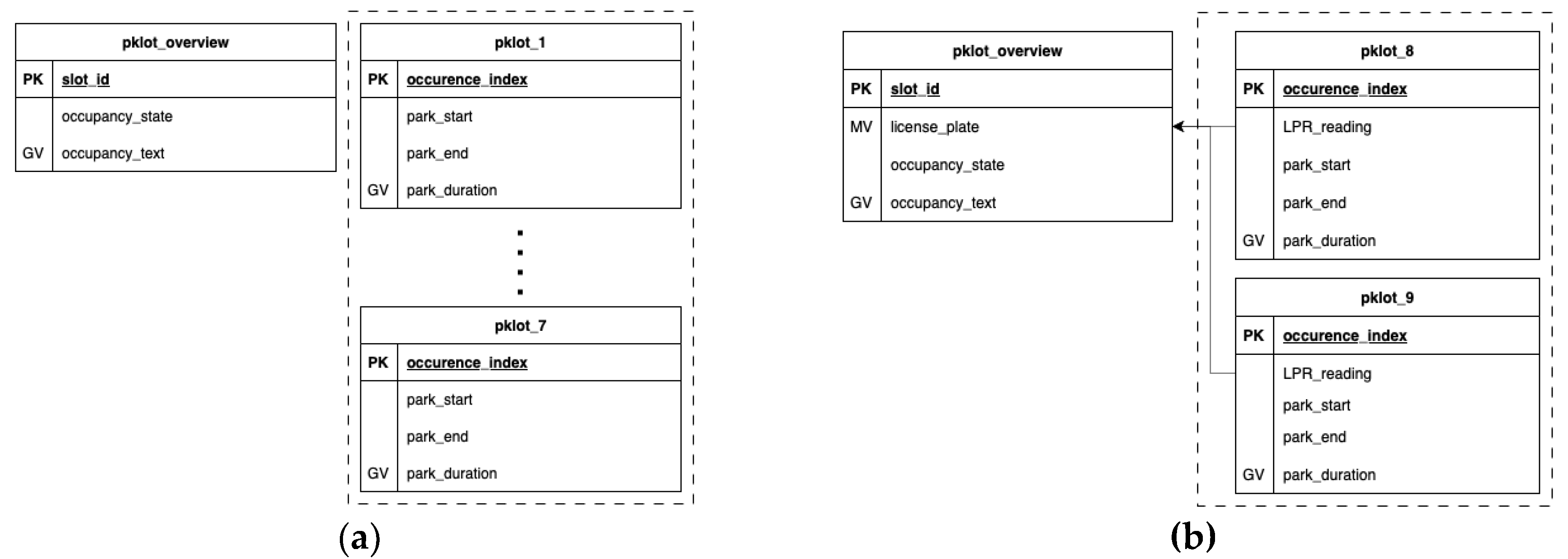

Figure 10.

SQL DB schema for two system features. (a) Tracks occupancy of seven parking spaces with individual tables storing vehicle start/end times and computed duration in hours. (b) Extends (a) with additional LPR_reading and license_plate fields in the pklot_overview and pklot_event tables for LPR.

Figure 11.

SQL database schema for the second system feature. The schema is nearly identical in structure to the first database. The difference is the inclusion of the LPR_reading and license_plate data attributes in the pklot_overview and pklot_event tables.

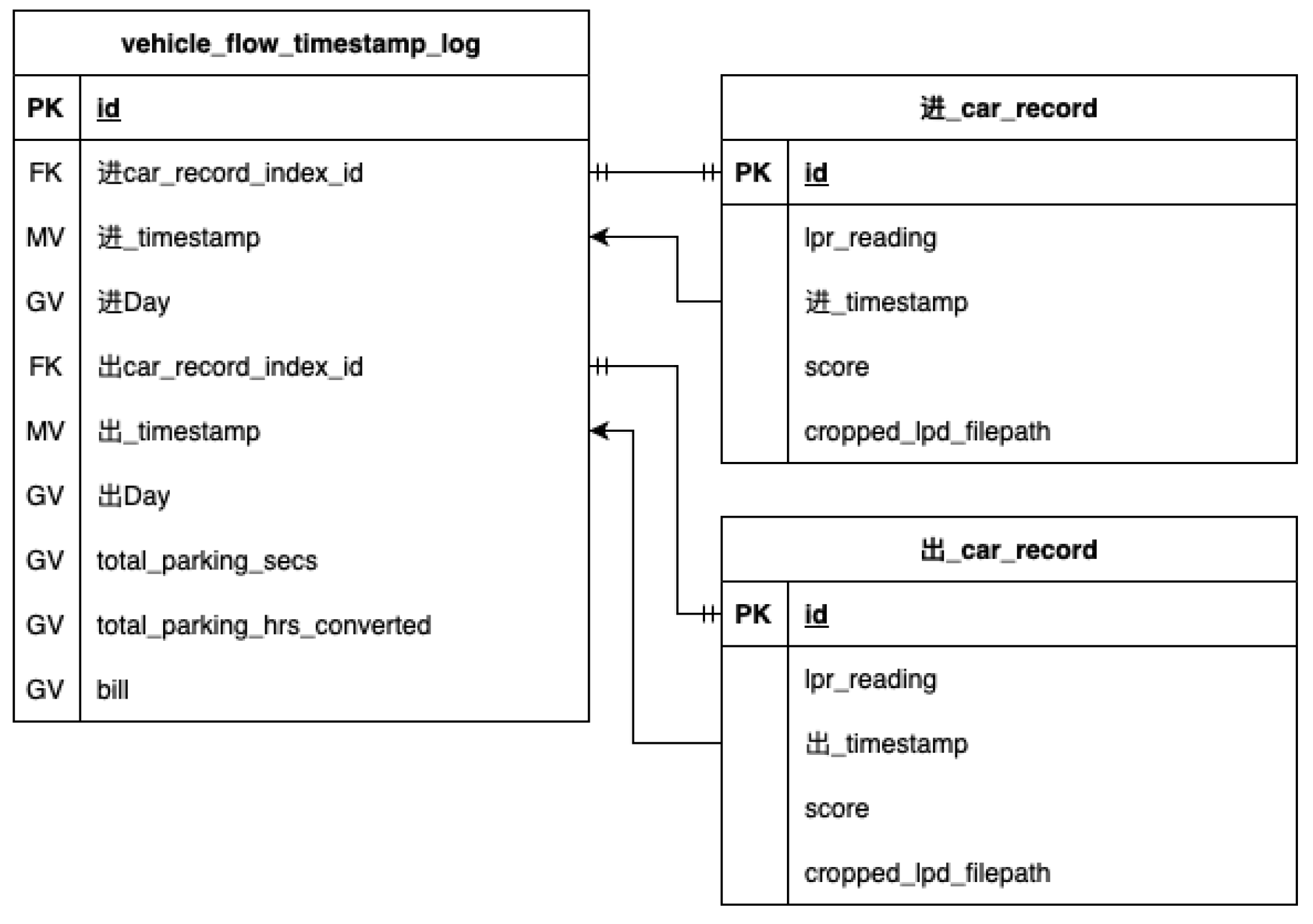

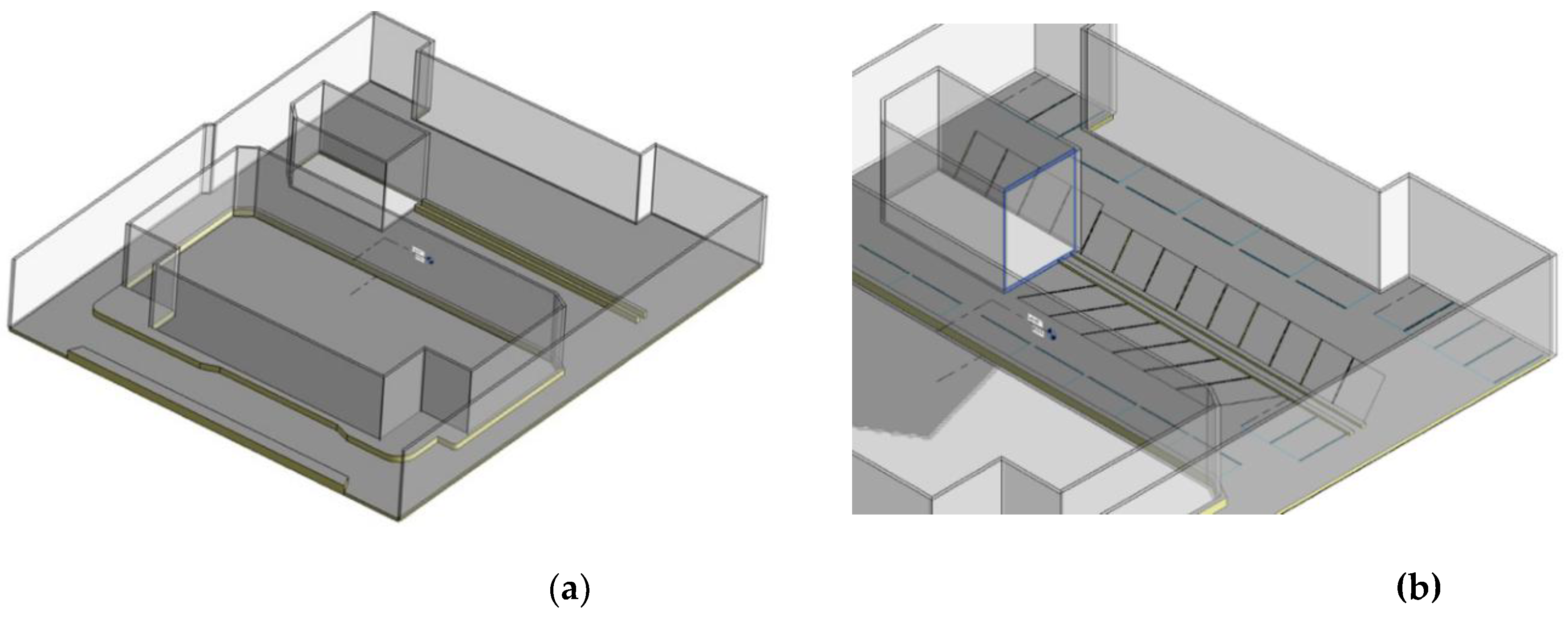

Figure 12.

Digital twin module of the smart parking management system. The development process is divided into two design phases: the initial phase is dedicated to 3D modeling, while the subsequent phase entails the integration of the model with other system module components.

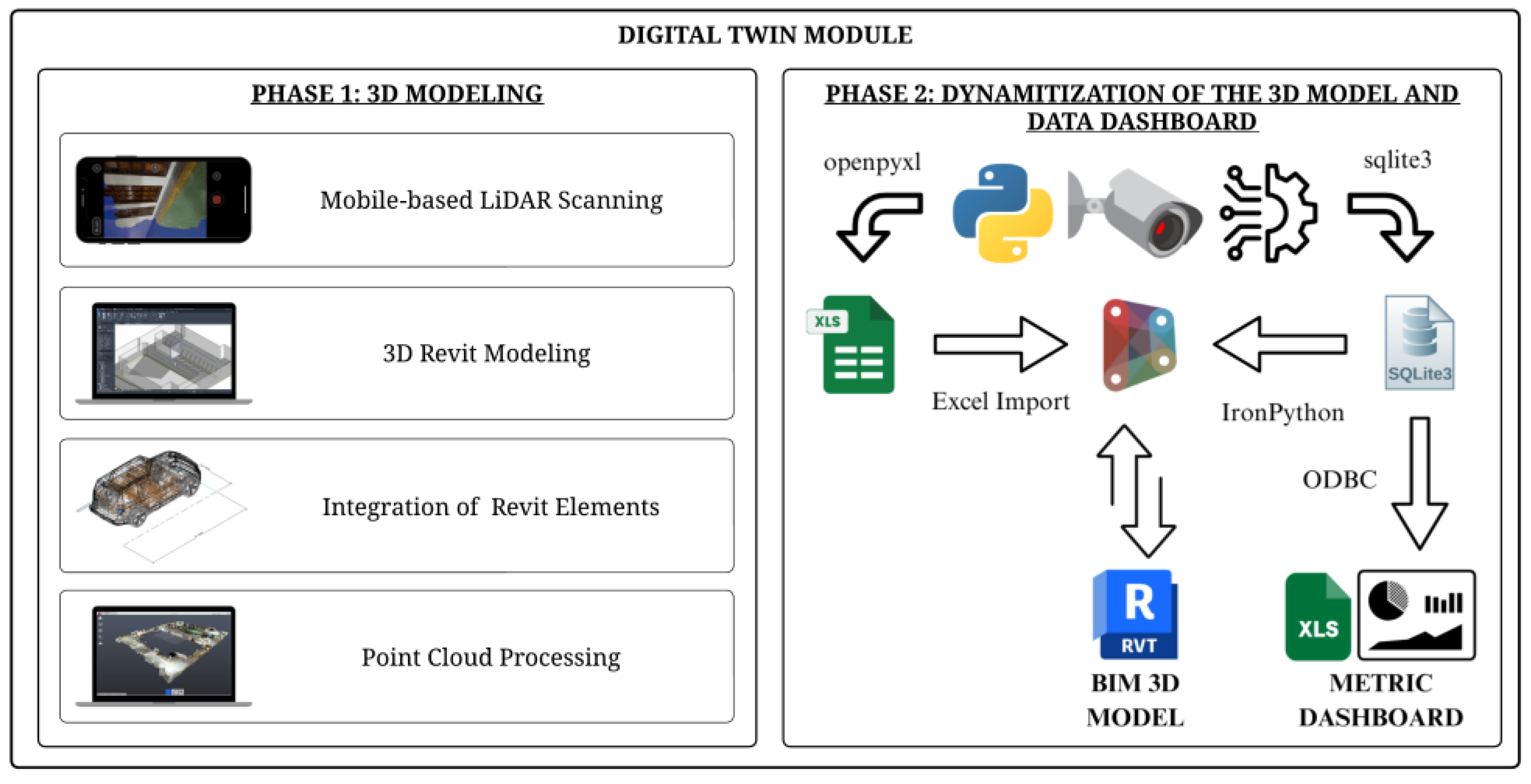

Figure 13.

Processed point cloud data from Autodesk Recap Pro of the Parking Facility Research Built Environment.

Figure 14.

Digital twin module model. (a) 3D model created in Autodesk Revit. (b) Zoomed-in view of the 3D model showing parking spaces within the parking facility.

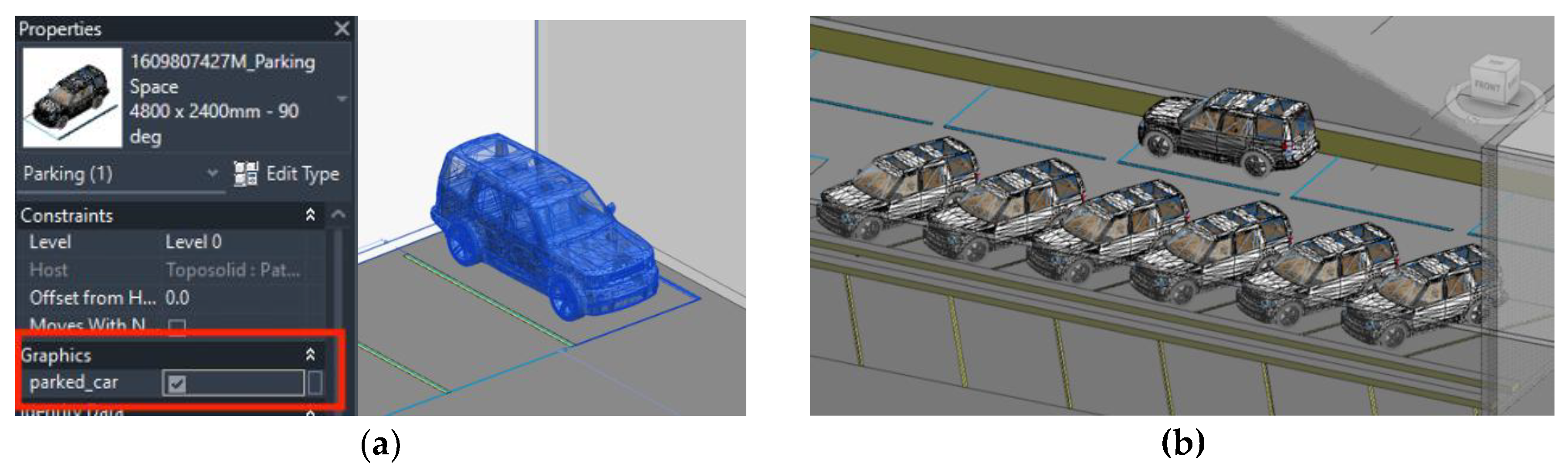

Figure 15.

Snapshots of Revit 3D modeling workspace. (a) Example showing a visible Land Rover SUV with the parked_car attribute set to True in a parking space Revit Family. (b) Close-up of parking spaces: occupied spaces have parked_car = True, while empty ones are set to False.

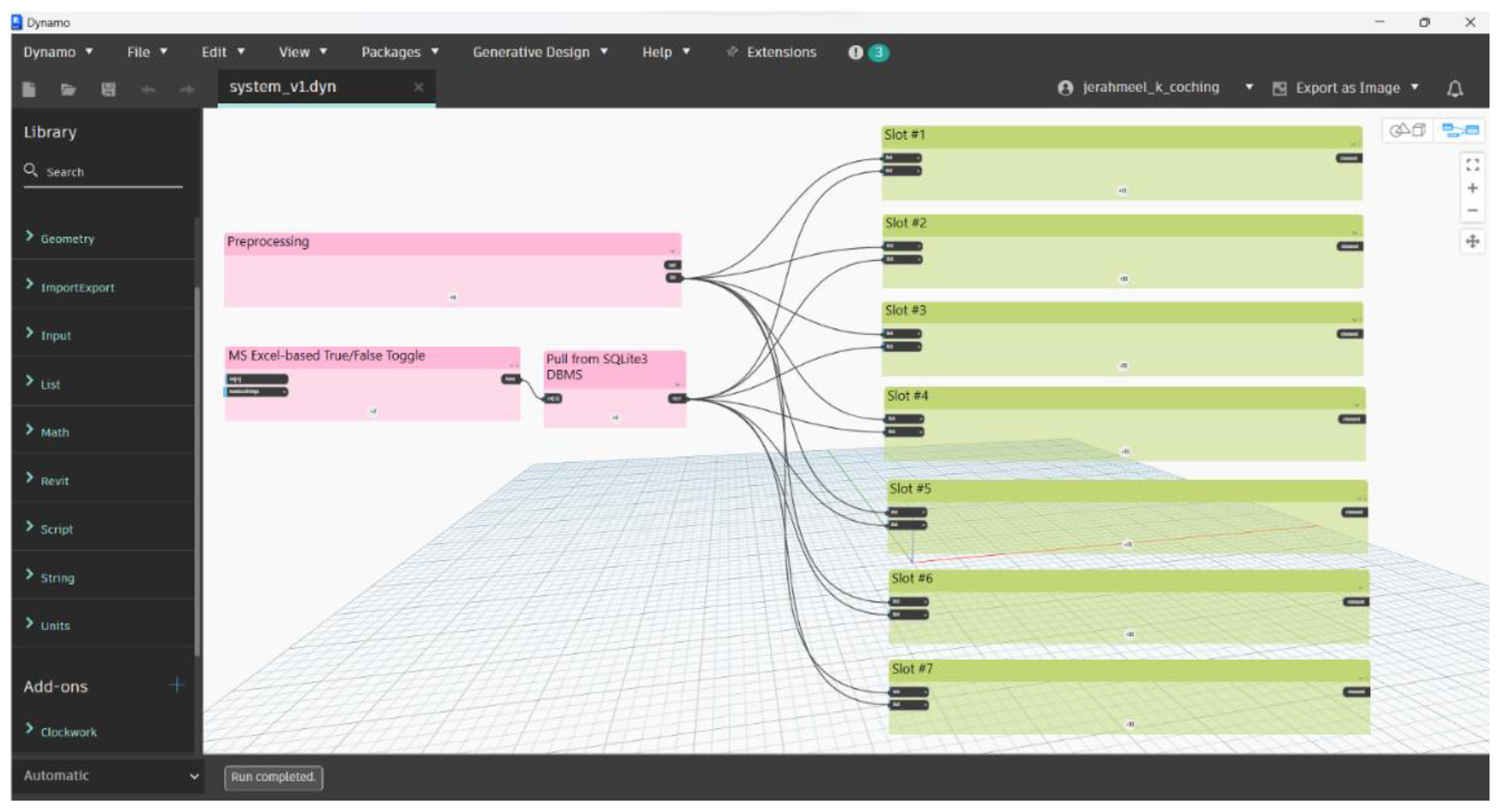

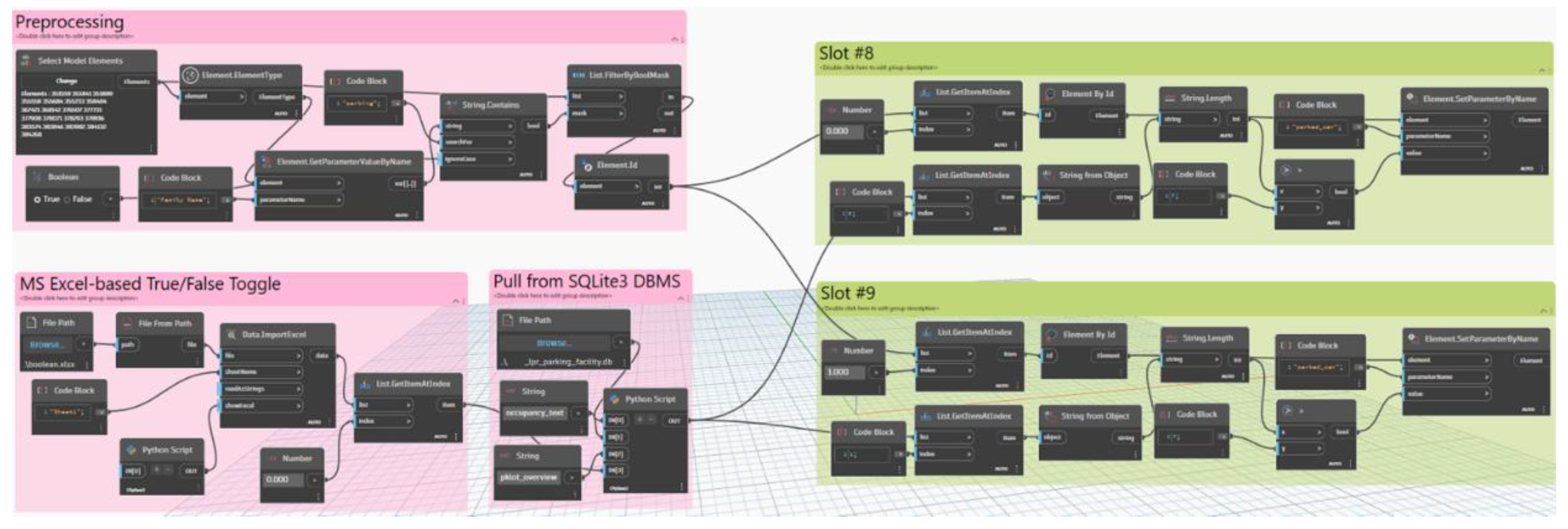

Figure 16.

General structure of the developed Revit Dynamo script for the Digital Twin module. The script supports the first feature system using a vehicle detection-based POD algorithm. For the second feature, only Slots #8 and #9 are considered. Pink node groups handle data processing; green nodes control vehicle visibility in parking spaces.

Figure 17.

Revit Dynamo for the Second Feature System Supporting the LPR-based POD Algorithm.

Figure 18.

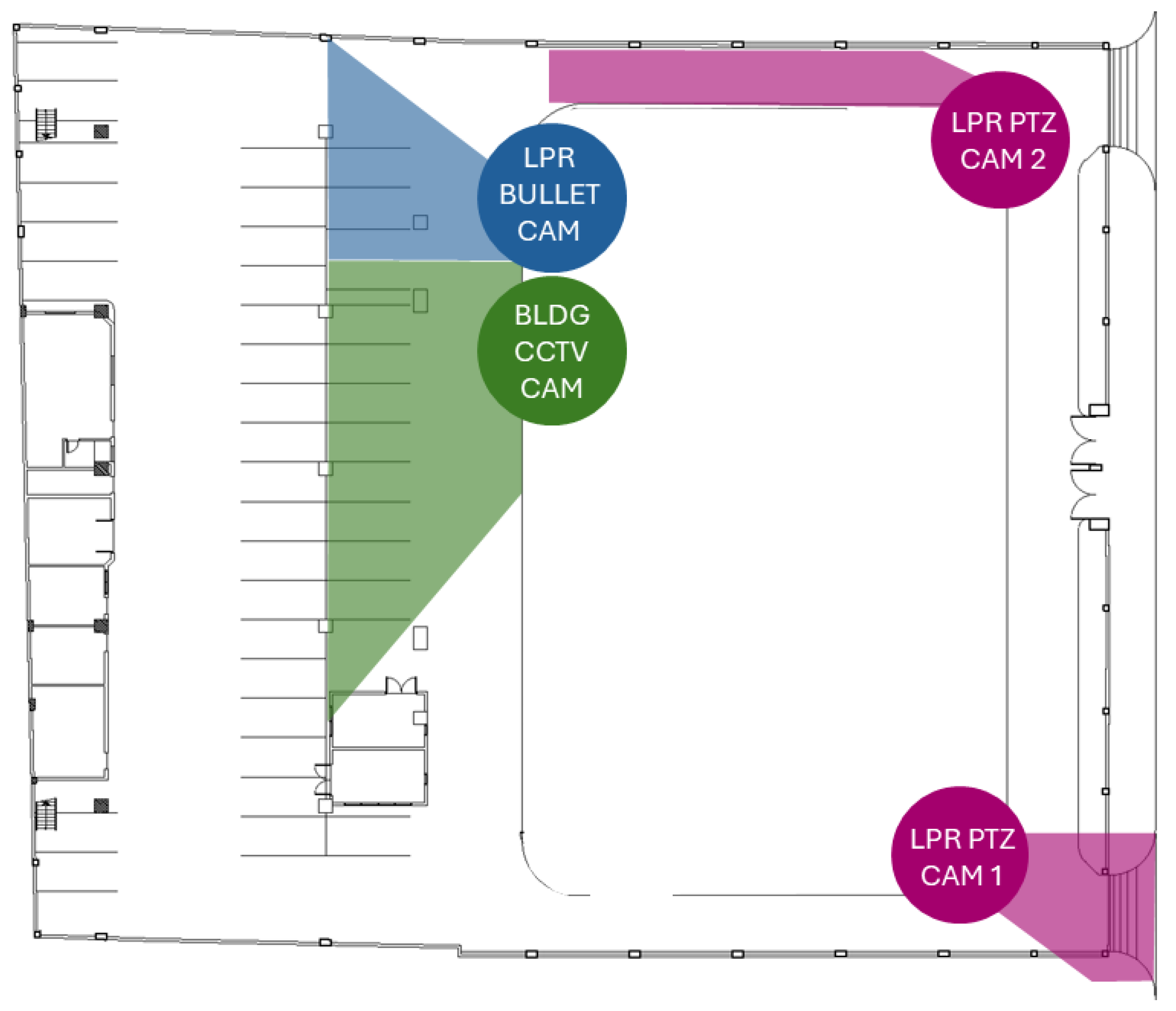

Floor plan of the building complex with camera positions provided. Each camera is color-coded to provide information on which system feature it belongs to.

Figure 19.

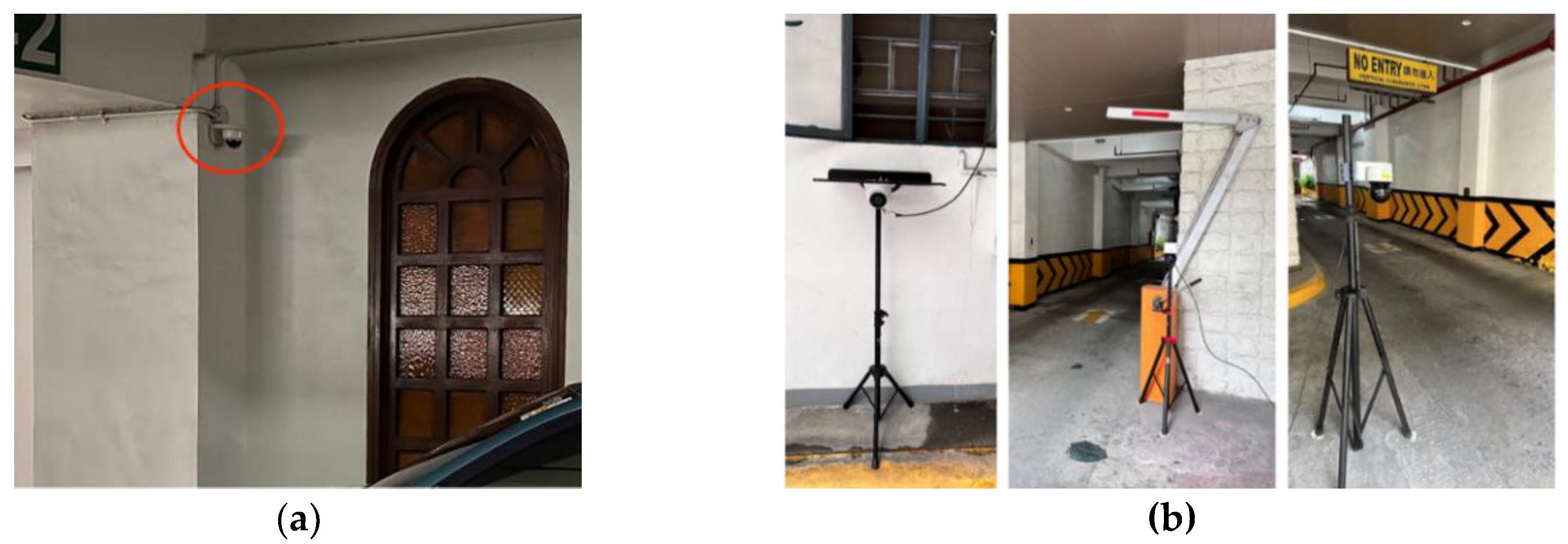

Security cameras installed within the building complex: (a) a fish-eye lens camera for the first system feature; (b) from left to right, a fixed bullet turret camera for the second system feature, and PTZ cameras for monitoring vehicle entry and exit for the third feature.

Figure 20.

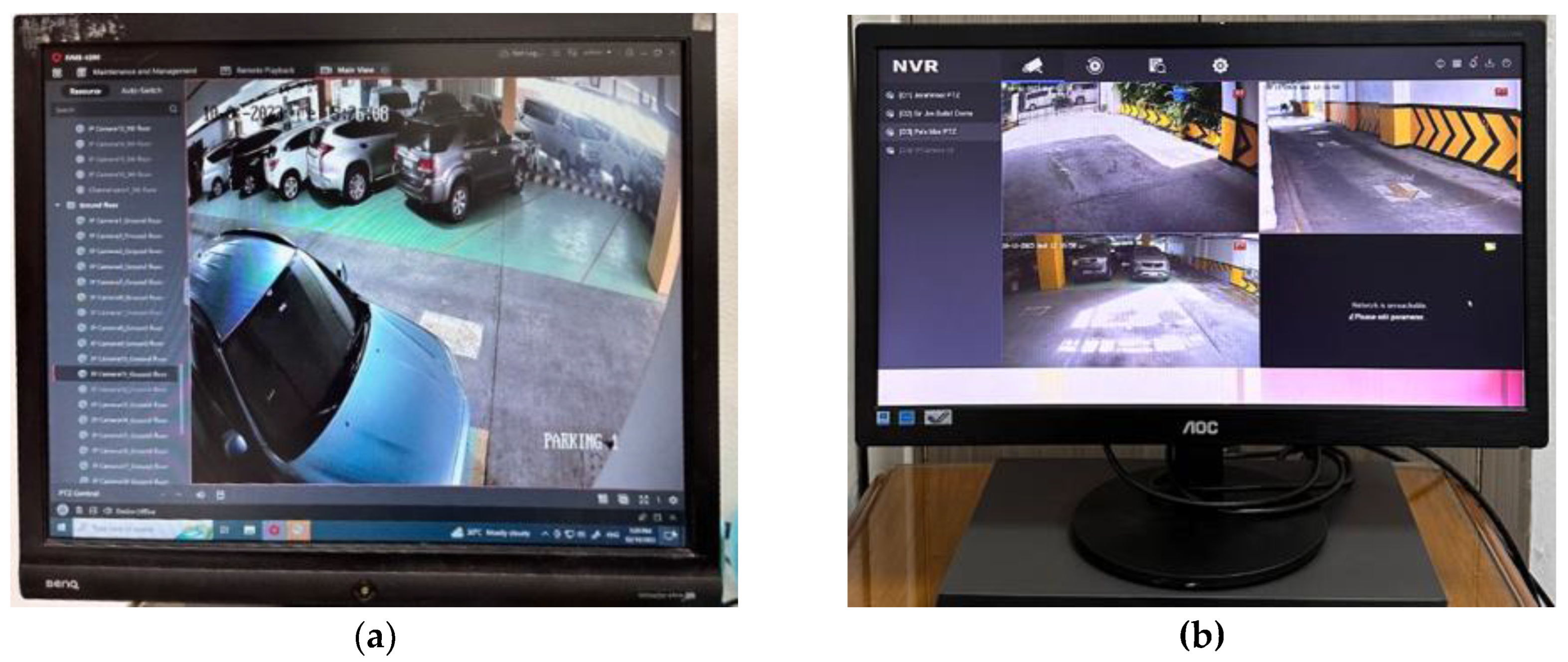

Security camera video feeds within the building complex. (a) First monitor shows most parking spaces, while (b) shows the entrance and exit driveways, and selected parking spaces.

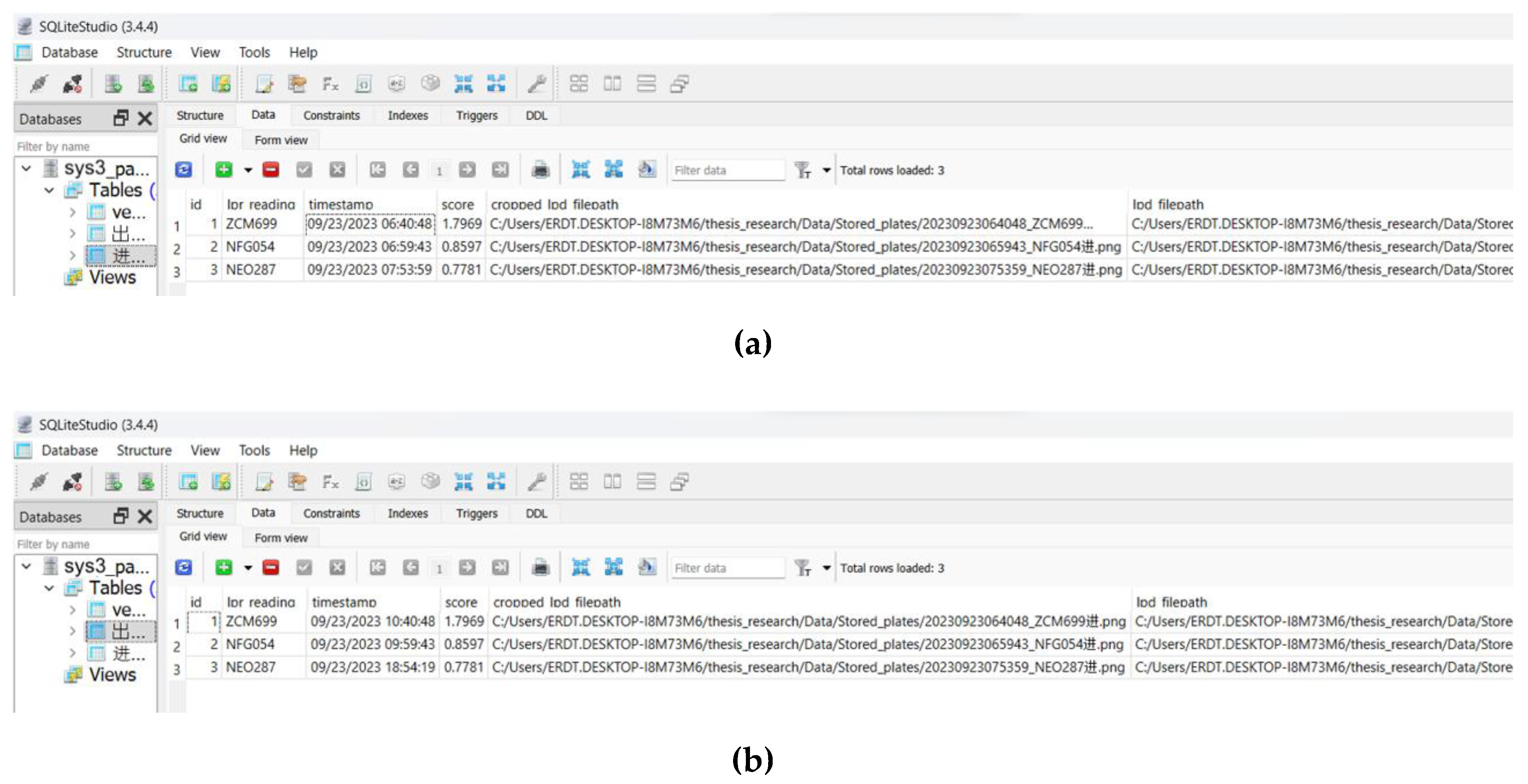

Figure 21.

Sample image from the CATCH-ALL dataset by DLSU ISL. This dataset shows a street with vehicles in Manila, Philippines.

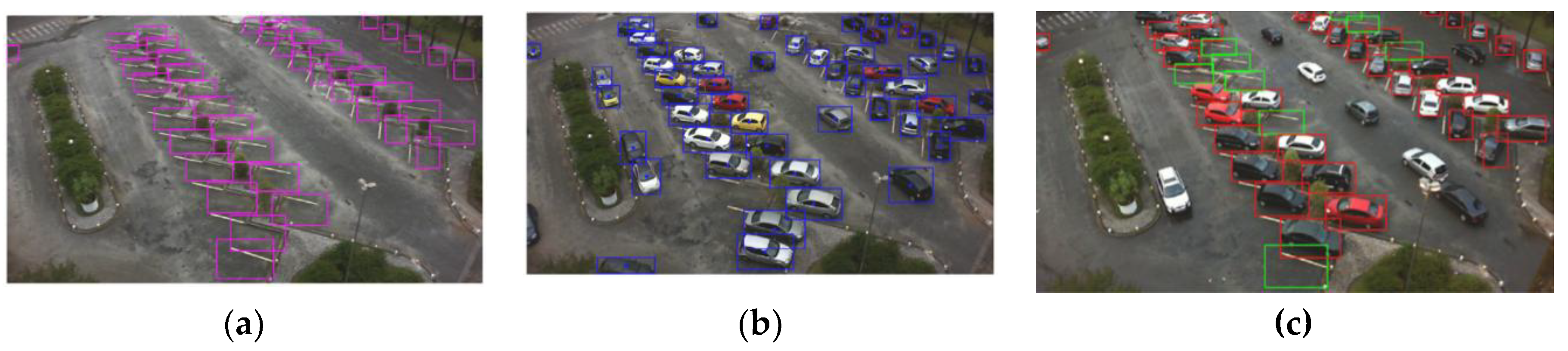

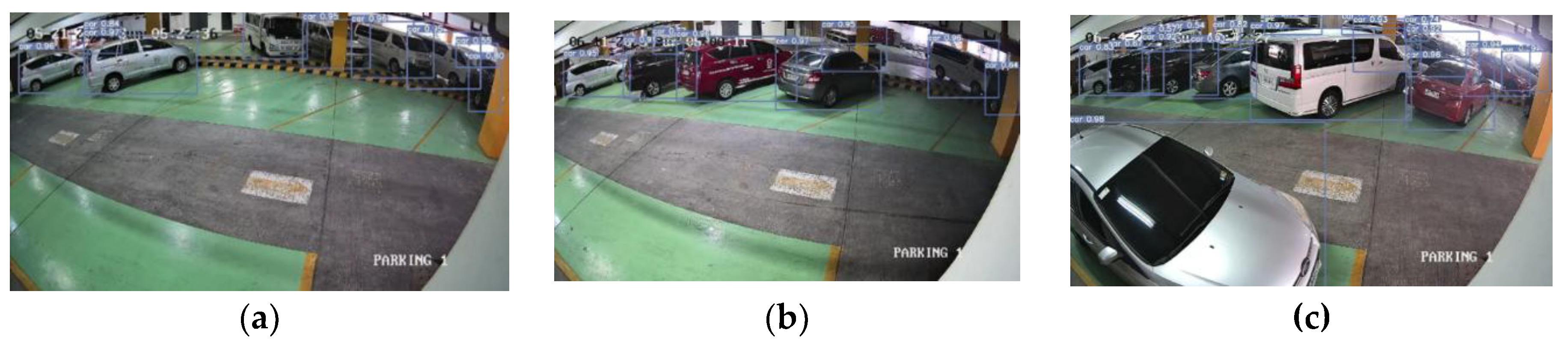

Figure 22.

YOLOv7-x Base Model Vehicle Detection Inferences on Video Footage Frames Showing Different Capacities: (a) Nearly Empty, (b) Moderately Filled, and (c) Fully Occupied.

Figure 23.

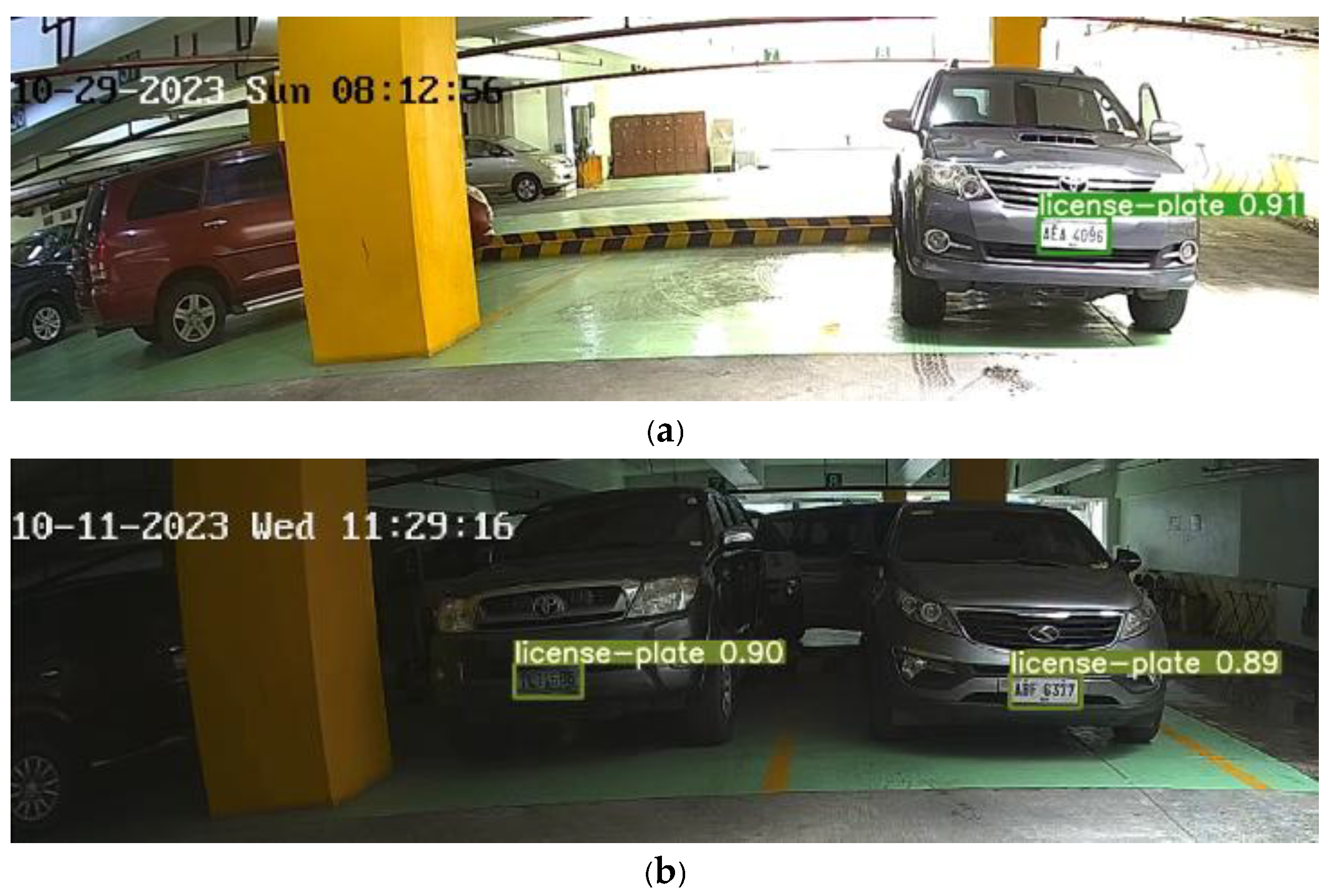

LPD inference using the fine-tuned YOLOv7 LPD model on parking facility video frames, showing: (a) one of two parking spaces occupied, and (b) both spaces occupied.

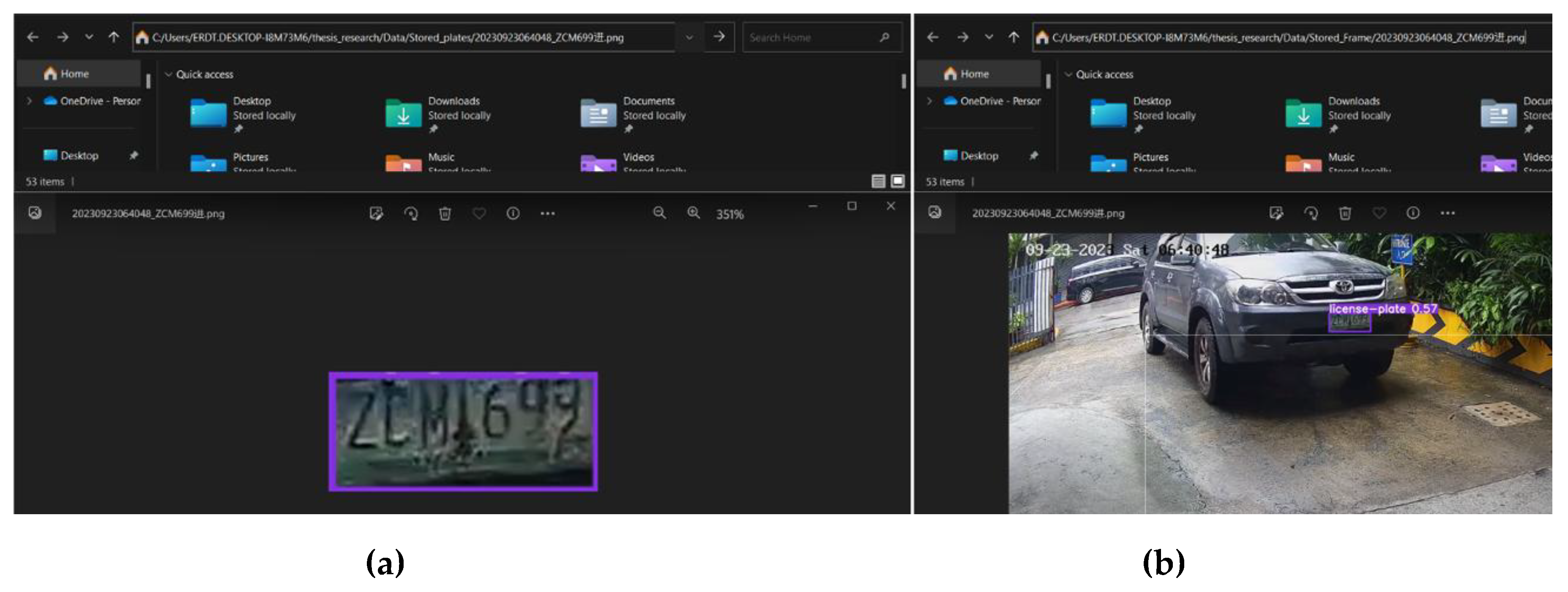

Figure 24.

Sample LPR Inference performed on video frame of parking facility footage. (a) Clear zoomed-in snapshot of the image frame, (b) Sample LPR reading stored in SQLite3 Database.

Figure 25.

Sample Output LPD Inferences taken from the (a) Entrance Driveway, and (b) the Exit Driveway during different times of the day.

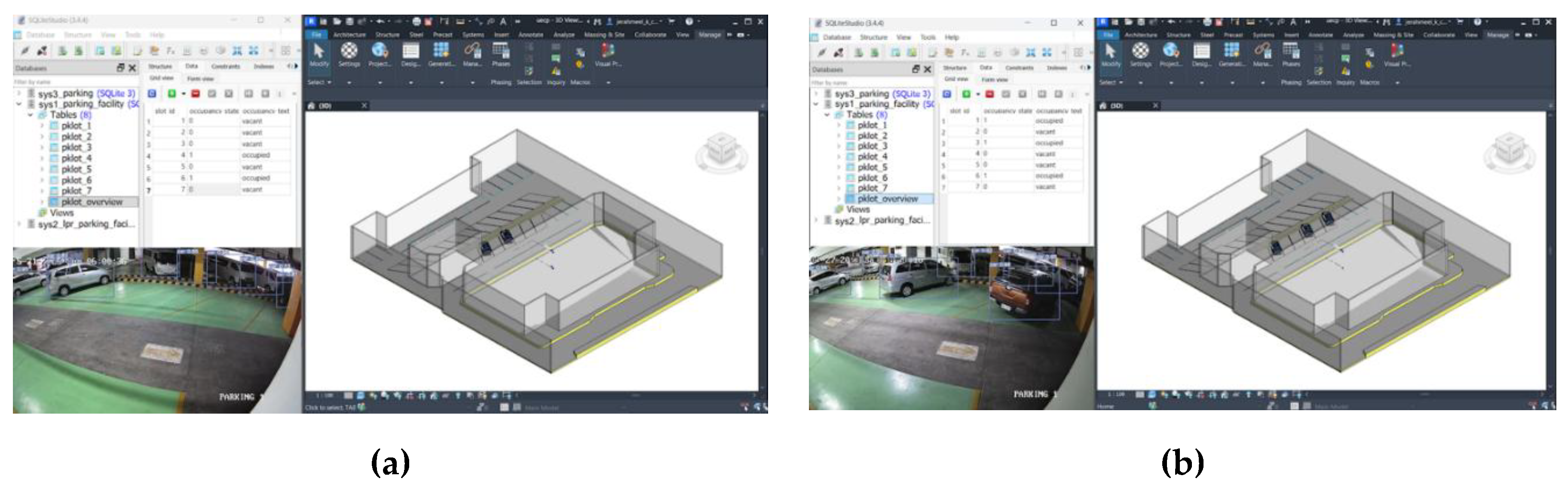

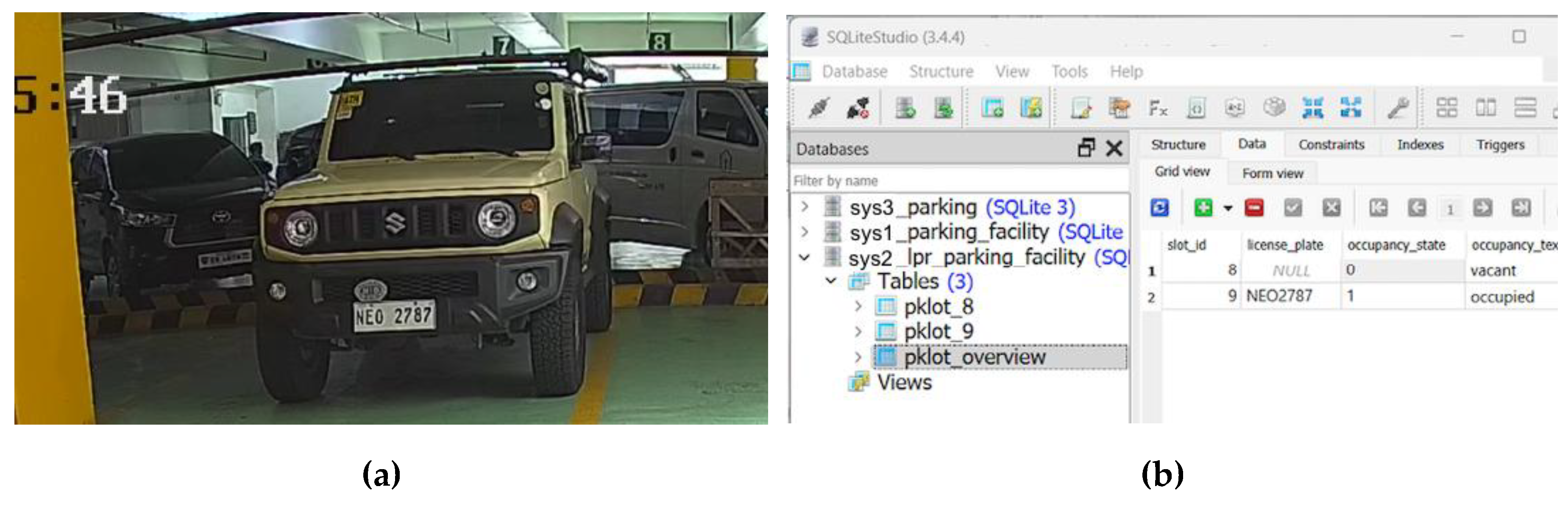

Figure 26.

Parking Space Numbering System for Database and BIM Model Reference. (a) The numbering system shown is for the vehicle detection-based POD feature system. (b) The numbering system for the LPR-based POD feature system.

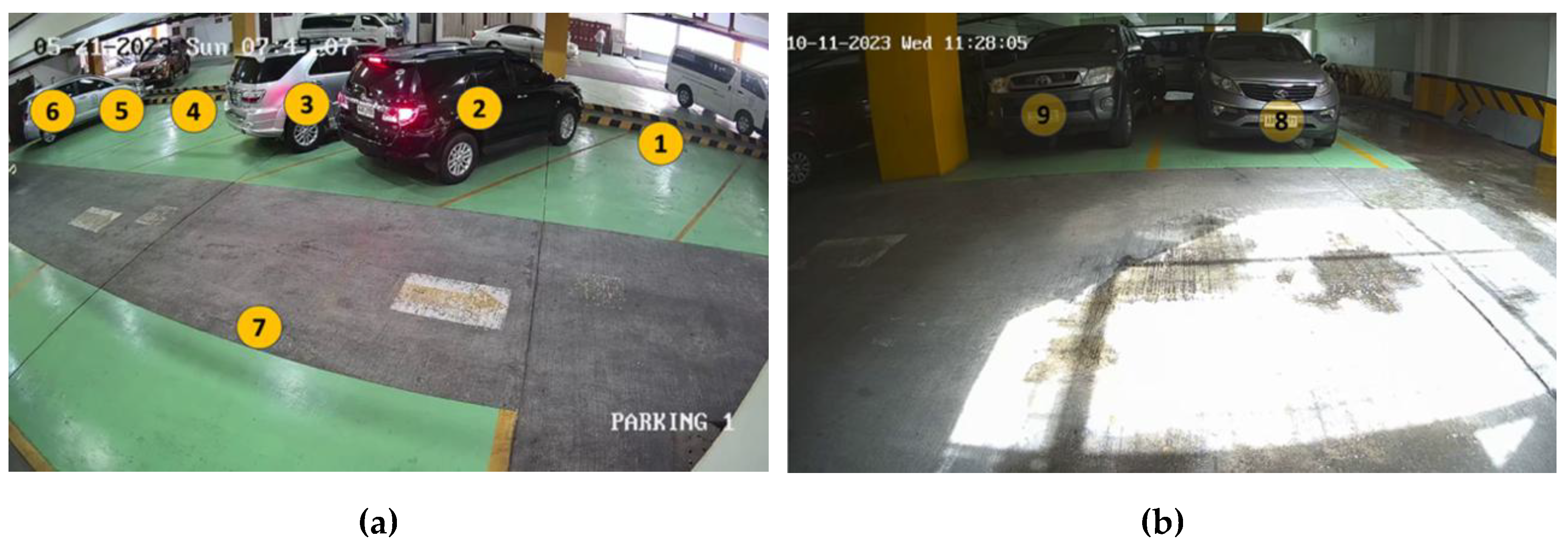

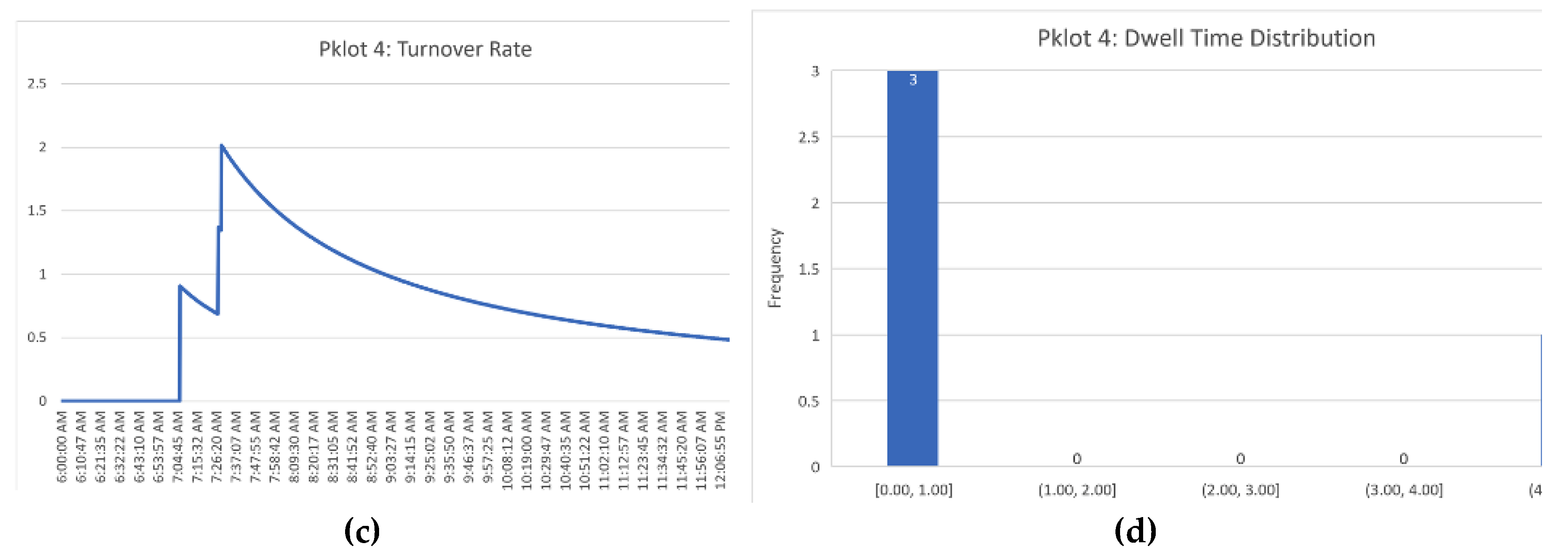

Figure 34.

DTM Data Dashboard Macro-Level Overview for the First Feature System. Metrics are displayed for the filter timestamps specified in the green-hued cells. Metrics are summarized at the top-middle of the dashboard, with various graphs provided below. These metrics describe vehicle parking activity for the whole parking facility based on vehicle occupancy in parking spaces.

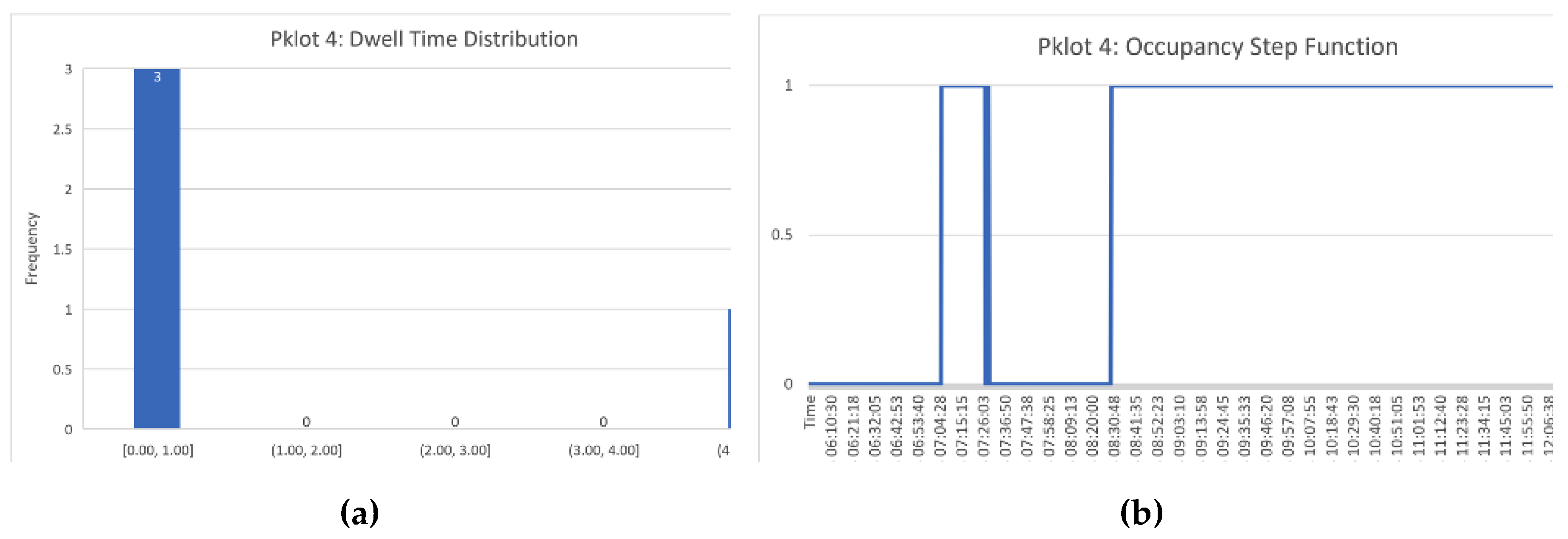

Figure 35.

Example sub-dashboard graph for Parking Slot #4. These graphs provide insights into parking activity behavior per parking slot.

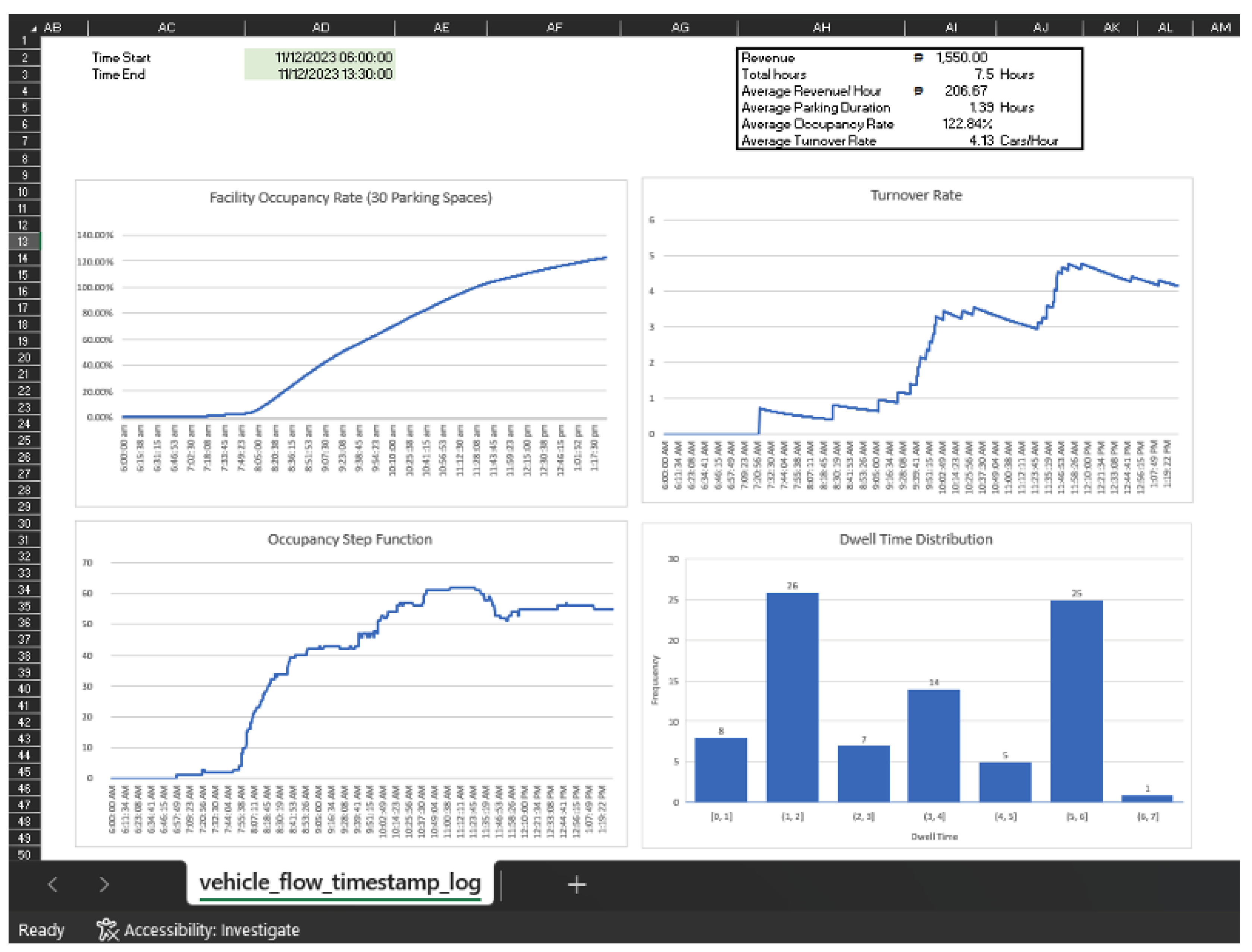

Figure 36.

DTM Data Dashboard Macro-Level Overview for the Third Feature System. Metrics are displayed for the filter timestamps specified in the green-hued cells. Metrics are summarized at the top-middle of the dashboard, with various graphs provided below that describe vehicle parking activity in the parking facility based on vehicle entry and exit activity recorded by the parking management system.

Figure 14.

Sample detected blurred license plate. Although LPD was performed successfully, the trained DTR model is unable to extract text from the blurred license plate.

Figure 38.

Intense Sunlight Being Reflected on the Vehicle License Plates of Toyota Fortuner SUVs (right) in Two Separate Instances.

Figure 40.

Example Occluded Field of View of a Surveillance Camera.

Table 1.

Literature Summary for ITS Research.

| Cluster | Smart Application | Publication Type | Model Architecture | Country | Reference Number |
| --- | --- | --- | --- | --- | --- |
| Traffic Control Management Applications | Vehicle Detection (10) | Journal (3) | YOLO | Taiwan | [52,65] |
| | | | Mask R-CNN | USA | [19] |
| | | Conference (7) | Deep CNN | | [19] |
| | | | ANN | Philippines | [66] |
| | | | CNN | | [59] |
| | | | OpenCV | Malaysia | [67] |
| | | | YOLO | Italy | [61] |
| | | | | Vietnam | [68] |
| | | | | Philippines | [69] |
| | License Plate Detection (8) | Journal (2) | Inception V2 | | [70] |
| | | | Faster R-CNN | | [57] |
| | | Conference (6) | | Bangladesh | [71] |
| | | | SSD | | |
| | | | | India | [72] |
| | | | | Jordan | [40] |
| | | | YOLO | Italy | [61] |
| | | | | Philippines | [36] |
| | License Plate Text Recognition (7) | Journal (2) | Faster R-CNN | | [57] |
| | | | Inception V2 | | |
| | | Conference (5) | ANN | | [8] |
| | | | | Jordan | [40] |
| | | | | Italy | [61] |
| | | | SSD | Bangladesh | [71] |
| | | | OpenCV | Korea | [43] |
| Smart Parking Management Applications | Vehicle Detection (5) | Journal (3) | YOLO | Croatia | [50] |
| | | | | Korea | [33] |
| | | | MobileNetV3 | | [49] |
| | | Conference (2) | Deep CNN | India | [37] |
| | | | Mask R-CNN | China | [38] |
| | License Plate Detection (6) | Journal (1) | YOLO | Korea | [33] |
| | | Conference (5) | SSD | Jordan | [40] |
| | | | | Russia | [58] |
| | | | YOLOR | Philippines | [6,16] |
| | | | Faster R-CNN | | [60] |
| | License Plate Text Recognition (6) | Journal (1) | OpenCV | Korea | [33] |
| | | Conference (5) | ANN | Jordan | [40] |
| | | | ResNet-18 | Russia | [58] |
| | | | EasyOCR | India | [72] |
| | | | | Philippines | [6,16] |
| | | | Deep Text Recognition | | [36] |

Table 2.

Vehicle Detection-based POD Algorithm.

| Step | Description | Eq. No. |
| --- | --- | --- |
| Step 1: | Perform vehicle detection on video frame. | (1) |
| Step 2: | Generate the accounting for seven parking spaces. | (2), (3) |
| Step 3: | Perform NumPy thresholding (80 pixels) to obtain the . | (4), (5) |
| Step 4: | Generate the Flattened 1D Occ State Array for the current frame. | (6) |
| Step 5: | Determine if the current inference is the first detection since initialization. IF first, THEN ELSE WHERE: | (7), (8) |
| Step 6: | Examine all column elements in the to determine which parking spaces had changes in their occupancy state. | |
| Step 7: | Push updated data to the database depending on each value in the . | |
| Step 8: | Return to Step 1. | |
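
The equations referenced in Table 2 are not reproduced in this text, so the following is only a minimal sketch of how Steps 2 to 6 might be realized with NumPy. It assumes that the thresholded quantity is the Euclidean pixel distance between each detected bounding-box centre and a fixed reference point per parking space, and that the first inference reports every space as changed. The reference coordinates and the names `occupancy_state` and `changed_spaces` are hypothetical.

```python
import numpy as np

THRESHOLD_PX = 80  # pixel threshold stated in Table 2, Step 3


def occupancy_state(det_centres, space_refs, threshold=THRESHOLD_PX):
    """Return a flattened 1D array: 1 for occupied spaces, 0 for vacant ones.

    det_centres: (N, 2) detected bounding-box centres (assumed input form).
    space_refs:  (M, 2) parking-space reference points (assumed input form).
    """
    det_centres = np.asarray(det_centres, dtype=float).reshape(-1, 2)
    space_refs = np.asarray(space_refs, dtype=float).reshape(-1, 2)
    if det_centres.shape[0] == 0:
        return np.zeros(space_refs.shape[0], dtype=int)
    # Pairwise distances: rows are detections, columns are parking spaces.
    diff = det_centres[:, None, :] - space_refs[None, :, :]
    dist = np.linalg.norm(diff, axis=2)
    # Thresholding: a detection matches a space when it is close enough.
    hits = dist <= threshold
    # A space is occupied if any detection matches it.
    return hits.any(axis=0).astype(int)


def changed_spaces(prev_state, curr_state):
    """Indices of spaces whose state differs from the previous frame.

    Assumption: on the first inference (prev_state is None) every space is
    reported so that the database receives an initial state.
    """
    if prev_state is None:
        return np.arange(curr_state.size)
    return np.flatnonzero(prev_state != curr_state)


# Seven hypothetical reference points along one row of parking spaces.
refs = np.array([[100 + 150 * i, 400] for i in range(7)])
frame_1 = occupancy_state([[110, 395], [705, 410]], refs)
frame_2 = occupancy_state([[110, 395]], refs)
print(frame_1)                           # [1 0 0 0 1 0 0]
print(changed_spaces(frame_1, frame_2))  # [4]
```

Only the indices returned by `changed_spaces` would need to be pushed to the database in Step 7, which keeps writes proportional to the number of state changes rather than to the frame rate.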

Table 3.

License Plate Recognition-based POD Algorithm.

| Step | Description |
| --- | --- |
| Step 1: | Perform license plate detection on video frame. |
| Step 2: | Generate the for the two parking spaces. |
| Step 3: | Perform NumPy thresholding (70 pixels) to obtain the . |
| Step 4: | Generate the for the current frame. |
| Step 5: | Determine if the current inference is the first detection since system initialization. IF first, THEN ELSE |
| Step 6: | Examine all column elements in the to determine which parking spaces had changes in their occupancy state. |
| Step 7: | Perform corresponding action for each element found in the . IF THEN Perform License Plate Recognition and Extract LPR Reading. ELSE: Do not perform LPR. |
| Step 8: | Return to Step 1. |
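
The condition in Step 7 of Table 3 is not legible in this text. As an illustration only, the sketch below assumes that LPR is triggered when a parking space changes from vacant to occupied, and that on the first inference every occupied space counts as newly occupied. The function `handle_transitions` and the stand-in reader `fake_lpr` are hypothetical names, not part of the described system.

```python
from typing import Callable, Dict, List, Optional


def handle_transitions(
    prev_state: Optional[List[int]],
    curr_state: List[int],
    read_plate: Callable[[int], str],
) -> Dict[int, str]:
    """Run LPR only for spaces that have just become occupied.

    prev_state is None on the first inference after start-up; every occupied
    space is then treated as newly occupied (an assumption).
    Returns a mapping of space index to plate reading.
    """
    readings: Dict[int, str] = {}
    for idx, occupied in enumerate(curr_state):
        was_occupied = 0 if prev_state is None else prev_state[idx]
        if occupied == 1 and was_occupied == 0:
            # Vacant -> occupied: a vehicle has arrived, so read its plate.
            readings[idx] = read_plate(idx)
        # Occupied -> vacant, or unchanged: LPR is skipped.
    return readings


def fake_lpr(space_idx: int) -> str:
    """Stand-in for the real LPR model; returns a fixed string per space."""
    return f"PLATE-{space_idx}"


first = handle_transitions(None, [1, 0], fake_lpr)
second = handle_transitions([1, 0], [1, 1], fake_lpr)
third = handle_transitions([1, 1], [0, 1], fake_lpr)
print(first, second, third)  # {0: 'PLATE-0'} {1: 'PLATE-1'} {}
```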

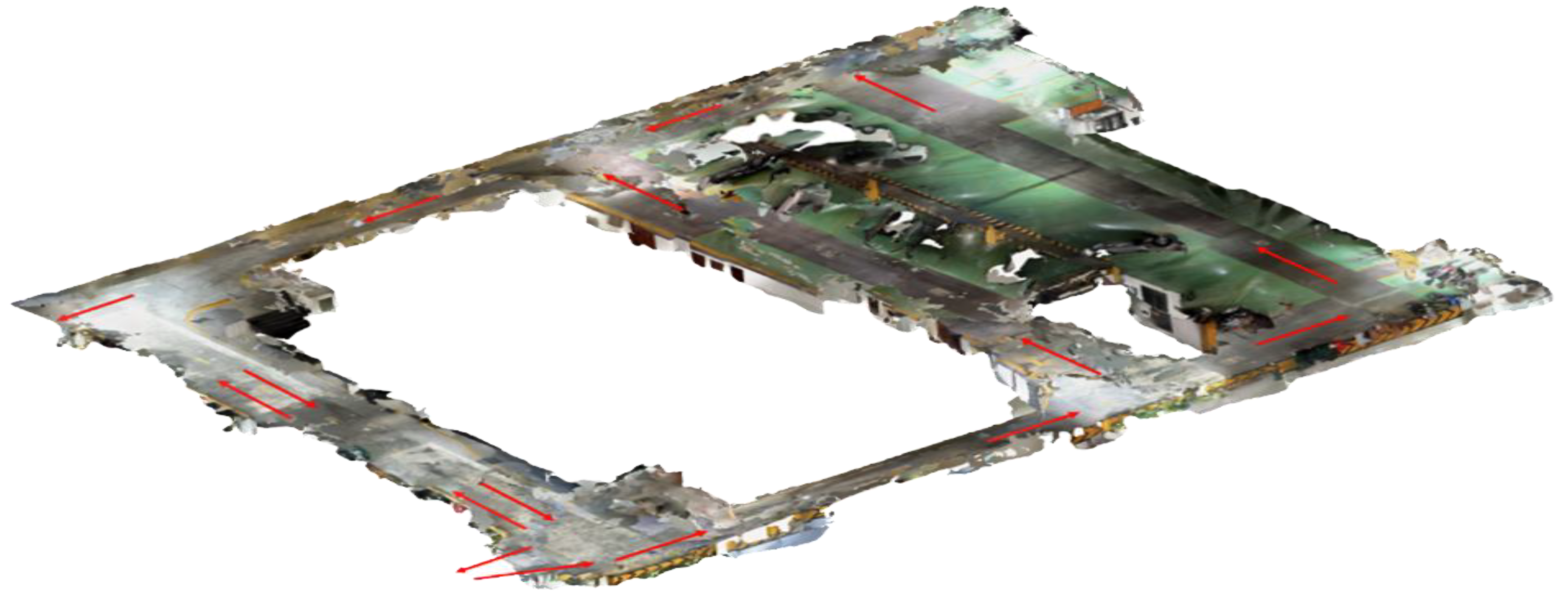

Table 4.

LPR Entry/Exit DBMS Data Processing Algorithm.

| Step | Action | Description |
| --- | --- | --- |
| Step 1: | LPR during Vehicle Entry | The vehicle is subjected to LPR upon entry. |
| Step 2: | Entry Record Creation | A new row is generated in the 进_car_record table to record the entry, which includes the entry timestamp, the LPR reading, and the reading score output by the system's model. |
| Step 3: | Creation of Flow Log | In the vehicle_flow_timestamp_log table, a row is generated to start the cycle record of the vehicle's activity within the facility. |
| Step 4: | Mirrored Entry Timestamp and Foreign Key | The 进_timestamp from 进_car_record is mirrored in vehicle_flow_timestamp_log, which also stores the 进_car_record primary key (PK) as a foreign key (FK). |
| Step 5: | Vehicle Exit and LPR | When the vehicle departs, the system records an exit LPR reading and timestamp in a new 出_car_record table row. |
| Step 6: | Entry Record Matching | The system retrieves the latest matching PK from the 进_car_record table based on the vehicle's LPR reading at exit, ensuring accurate tracking of the most recent entry, even with multiple visits per day. |
| Step 7: | Linking to Flow Log | The PK identified in the 进_car_record table is then used to locate the row in the vehicle_flow_timestamp_log table that stores it as the entry FK. This ensures the entry and exit data belong to the same vehicle instance. |
| Step 8: | Mirroring Exit Timestamp and Foreign Key | The exit record's PK is stored as an FK in the vehicle_flow_timestamp_log table, and the exit timestamp (出_timestamp) is mirrored into the same row. |
| Step 9: | Automatic Calculations | SQLite3 value expressions compute total parking duration (in seconds and hours) and invoicing based on pricing, using timestamps from the vehicle_flow_timestamp_log table. |
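
The nine steps in Table 4 can be sketched with Python's built-in `sqlite3` module. This is an illustrative schema, not the system's actual one: `entry_car_record` and `exit_car_record` are English stand-ins for the 进_car_record and 出_car_record tables, all column names are assumed, and the hourly rate is a hypothetical value because the pricing is not stated here.

```python
import sqlite3

HOURLY_RATE_PHP = 50.0  # hypothetical tariff; not taken from the source

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE entry_car_record (
    id INTEGER PRIMARY KEY, entry_timestamp TEXT, plate TEXT, score REAL);
CREATE TABLE exit_car_record (
    id INTEGER PRIMARY KEY, exit_timestamp TEXT, plate TEXT, score REAL);
CREATE TABLE vehicle_flow_timestamp_log (
    id INTEGER PRIMARY KEY,
    entry_id INTEGER REFERENCES entry_car_record(id),
    entry_timestamp TEXT,
    exit_id INTEGER REFERENCES exit_car_record(id),
    exit_timestamp TEXT);
""")


def record_entry(plate, ts, score):
    # Steps 1-4: store the entry reading, then open a flow-log row that
    # mirrors the timestamp and keeps the entry PK as an FK.
    cur = conn.execute(
        "INSERT INTO entry_car_record (entry_timestamp, plate, score) "
        "VALUES (?, ?, ?)", (ts, plate, score))
    conn.execute(
        "INSERT INTO vehicle_flow_timestamp_log (entry_id, entry_timestamp) "
        "VALUES (?, ?)", (cur.lastrowid, ts))


def record_exit(plate, ts, score):
    # Step 5: store the exit reading.
    cur = conn.execute(
        "INSERT INTO exit_car_record (exit_timestamp, plate, score) "
        "VALUES (?, ?, ?)", (ts, plate, score))
    # Step 6: find the most recent entry with the same plate reading.
    row = conn.execute(
        "SELECT id FROM entry_car_record WHERE plate = ? "
        "ORDER BY entry_timestamp DESC, id DESC LIMIT 1", (plate,)).fetchone()
    if row is None:
        return  # no matching entry; not handled in this sketch
    # Steps 7-8: close the flow-log row that references that entry.
    conn.execute(
        "UPDATE vehicle_flow_timestamp_log "
        "SET exit_id = ?, exit_timestamp = ? WHERE entry_id = ?",
        (cur.lastrowid, ts, row[0]))


def parking_summary(plate):
    # Step 9: duration (seconds, hours) and fee computed by SQLite expressions.
    return conn.execute(
        """
        SELECT secs, secs / 3600.0 AS hours, secs / 3600.0 * :rate AS fee
        FROM (
            SELECT f.id AS flow_id,
                   CAST(strftime('%s', f.exit_timestamp) AS INTEGER)
                 - CAST(strftime('%s', f.entry_timestamp) AS INTEGER) AS secs
            FROM vehicle_flow_timestamp_log AS f
            JOIN entry_car_record AS e ON e.id = f.entry_id
            WHERE e.plate = :plate AND f.exit_timestamp IS NOT NULL
        )
        ORDER BY flow_id
        """,
        {"rate": HOURLY_RATE_PHP, "plate": plate},
    ).fetchall()


# Two visits by the same (made-up) plate on one day.
record_entry("ABC1234", "2024-01-01 08:00:00", 0.95)
record_exit("ABC1234", "2024-01-01 10:30:00", 0.93)
record_entry("ABC1234", "2024-01-01 14:00:00", 0.97)
record_exit("ABC1234", "2024-01-01 15:00:00", 0.96)
summary = parking_summary("ABC1234")
print(summary)  # [(9000, 2.5, 125.0), (3600, 1.0, 50.0)]
```

Selecting the latest entry by timestamp in Step 6 is what keeps the second exit from being attached to the first visit.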

Table 5.

Budget Expenditure for Research Materials.

| Quantity | Description | Price (PHP) |
| --- | --- | --- |
| 1 | ASUS ROG Strix G713QM-HX073T | 97,920.00 |
| 1 | HIK VISION DS-2DE3A404IW-DE/W Outdoor PTZ Camera | 7,875.90 |
| 1 | HIK VISION DS-Outdoor PT Camera | 11,960.00 |
| 2 | HIK VISION HiWatch Series E-HWIT Exir Fixed Turret Network Camera | 3,500.00 |
| 3 | 20 Inch 60Hz LED Monitor | 6,147.00 |
| 1 | iPhone 14 Pro (LIDAR Scanning Device) | 65,000.00 |
| 1 | HIK VISION DS-7604NI-Q1/4P POE NVR | 5,161.00 |
| 1 | Seagate ST1000VX005 1TB Skyhawk HDD | 2,479.00 |
| 2 | Monthly Polycam Subscription (USD 17.99/Month) | 2,016.03 |
| | TOTAL | 202,058.93 |

Table 6.

Applied Image Augmentations to the Vehicle Detection Dataset.

| Augmentation Operation | Value |
| --- | --- |
| Crop | [0%, 5%] |
| Rotation | [-10°, 10°] |
| Shear | [±5° Horizontal, ±5° Vertical] |
| Grayscale | 20% of Images |
| Saturation | [-20%, 20%] |
| Brightness | [-25%, 25%] |
| Exposure | [-10%, 10%] |
| Blur | Up to 2.5 px |
| Noise | Up to 1% |
| Bbox Shear | [±5° Horizontal, ±5° Vertical] |
| Bbox Brightness | [-10%, 10%] |
| Bbox Exposure | [-10%, 10%] |
| Bbox Blur | Up to 2 px |
| Bbox Noise | Up to 1% |
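
To make the image-level ranges in Table 6 concrete, the sketch below draws one set of augmentation parameters per image using Python's standard `random` module. Uniform sampling within each range is an assumption, since the table gives only the bounds. The dictionary keys and the function `sample_augmentation` are hypothetical, and the bounding-box-level rows are omitted for brevity.

```python
import random

# Bounds copied from Table 6; units follow the table (percent, degrees, pixels).
IMAGE_LEVEL_RANGES = {
    "crop_pct": (0.0, 5.0),
    "rotation_deg": (-10.0, 10.0),
    "shear_h_deg": (-5.0, 5.0),
    "shear_v_deg": (-5.0, 5.0),
    "saturation_pct": (-20.0, 20.0),
    "brightness_pct": (-25.0, 25.0),
    "exposure_pct": (-10.0, 10.0),
    "blur_px": (0.0, 2.5),
    "noise_pct": (0.0, 1.0),
}
GRAYSCALE_PROBABILITY = 0.20  # "20% of Images" in Table 6


def sample_augmentation(rng: random.Random) -> dict:
    """Draw one set of image-level augmentation parameters.

    Uniform sampling is an assumption; the table states only the bounds.
    """
    params = {
        name: rng.uniform(low, high)
        for name, (low, high) in IMAGE_LEVEL_RANGES.items()
    }
    # Each image is converted to grayscale with the stated probability.
    params["grayscale"] = rng.random() < GRAYSCALE_PROBABILITY
    return params


rng = random.Random(0)  # fixed seed so that runs are repeatable
sample = sample_augmentation(rng)
for name, value in sample.items():
    print(name, value)
```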

Table 7.

Applied Image Augmentations to the Two LPD Datasets.

| Augmentation Operation | CATCH-ALL | CUSTOM-LPD |
| --- | --- | --- |
| Saturation | [-50%, 50%] | [-50%, 50%] |
| Brightness | [-30%, 30%] | [-30%, 30%] |
| Exposure | [-20%, 20%] | [-20%, 20%] |
| Blur | Up to 2 px | Up to 2 px |
| Noise | Up to 1% | Up to 1% |
| Rotation | N/A | [-5°, 5°] |
| Shear | N/A | [±5° Horizontal, ±5° Vertical] |
| Bbox Brightness | [-30%, 30%] | [-30%, 30%] |
| Bbox Exposure | [-20%, 20%] | [-20%, 20%] |
| Bbox Blur | Up to 2.5 px | Up to 2.5 px |
| Bbox Noise | Up to 1% | Up to 1% |
| Bbox Rotation | N/A | [-5°, 5°] |
| Bbox Shear | N/A | [±5° Horizontal, ±5° Vertical] |

Table 8.

Hardware Specifications of the Local Machine for Training.

| Hardware |

Technical Specification |

| GPU |

NVIDIA RTX 3060 Laptop GPU |

| CPU |