1. Introduction

With the advent of the information age, cybersecurity has become a significant global challenge. Government agencies, enterprises, and individuals all face increasingly complex cyber threats and attacks. Traditional measures such as rule-based firewalls and intrusion detection systems (IDS) can address known threats to some extent, but they often fall short against complex, evolving, and previously unseen attacks. As attack methods become more intelligent and automated, defenses face stricter real-time response requirements and greater technical challenges. Large Language Models (LLMs), a class of deep learning models, have achieved breakthroughs in natural language processing (NLP): they can understand and generate natural language and show strong learning and generalization abilities. As LLM technology advances, a growing body of research is exploring its applications in cybersecurity. LLMs can help analyze massive volumes of security event logs, detect anomalies, and predict potential network threats from large amounts of historical data, which gives them a potentially important role in threat detection, malicious activity identification, and security incident response. This study explores the application of LLMs in cybersecurity and analyzes their innovations and advantages over traditional security measures, especially in automated defense and malicious activity prediction. By comparing LLM-based approaches with existing traditional security technologies, this paper focuses on the potential and challenges of LLMs in strengthening security, optimizing incident response, and achieving adaptive defense. It also examines the technical bottlenecks that arise in practical deployment and proposes future development directions and research priorities.

2. Overview of Large Language Models

2.1. Basic Principles of Large Language Models

Large Language Models (LLMs) are deep learning models for natural language processing (NLP) that are trained on large-scale datasets and possess powerful language understanding and generation capabilities. Their core idea is to use neural networks, particularly those based on the Transformer architecture, to model the syntax, semantics, and context of language. By learning relationships between words from large text corpora, LLMs capture long-range dependencies, which makes them highly effective in NLP tasks such as text generation, machine translation, and sentiment analysis.

The Transformer architecture, which underlies current LLMs, introduced the self-attention mechanism and significantly improved the ability of models to handle long texts and complex contexts [1,2,3]. Self-attention computes the relationship between each word and every other word in the input and dynamically weights their importance, so the model can take the whole context into account when processing a sentence. Unlike Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs), which process tokens one at a time, Transformer models can process all positions of a sequence in parallel during training, greatly improving computational efficiency and training speed.

LLMs are typically trained in two stages: pre-training and fine-tuning [4]. During pre-training, the model learns basic language regularities from large amounts of unlabeled text through self-supervised objectives such as Masked Language Modeling (MLM), which predicts masked-out tokens from their surrounding context, and Causal Language Modeling (CLM), which predicts each token from the tokens that precede it. After pre-training, the model is fine-tuned on specific tasks, such as sentiment analysis or question answering, to improve its performance in practical applications.

Much of the strength of LLMs comes from their scale; GPT-3, for example, contains 175 billion parameters. More parameters generally bring stronger performance, but they also demand substantial computational resources for training and inference. Training data typically includes diverse text from the internet, books, and articles, which helps the model generalize across domains and tasks.

In summary, LLMs combine deep learning, the Transformer architecture, and self-attention to achieve deep understanding and generation of language. They perform well on traditional NLP tasks and support emerging applications, particularly in cybersecurity, automated customer service, and intelligent translation [5,6,7].
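To make the self-attention step concrete, here is a minimal single-head sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, the formulation used in the original Transformer. It is written in plain Python so it runs without dependencies; the toy embeddings and identity projection matrices are arbitrary illustrative choices, and multi-head projections, masking, and positional encodings are deliberately omitted.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # Plain nested-list matrix product: (n x k) @ (k x m) -> (n x m).
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x is a list of n token vectors (n x d_model); w_q, w_k, w_v are projection
    matrices (d_model x d_k, d_model x d_k, d_model x d_v).
    Returns the attended outputs (n x d_v) and the n x n attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # score of every token against every other token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# Toy example: three 2-dimensional "token embeddings" and identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and describes how much one token draws on every other token; the third token weights itself most heavily because its dot product with itself is the largest.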

2.2. Technological Progress and Trends in Large Language Models

In recent years, LLM technology has made significant progress and become a major research focus in artificial intelligence. From early statistical language models to today's deep learning models, continuous innovation has allowed LLMs to excel in numerous NLP tasks, with breakthroughs in machine translation, sentiment analysis, and text generation. As computing power grows and data resources accumulate, the scale and scope of LLMs continue to expand.

A key advance was the Transformer architecture, which significantly improved the efficiency and accuracy of LLMs in processing language data. Since the Transformer was introduced in 2017, its self-attention mechanism has become the core of LLMs [8,9]. By computing relationships between all pairs of words, self-attention captures long-range dependencies and overcomes the limitations of RNNs and LSTMs on long sequences. Parallel computation and better handling of long texts have greatly shortened training times and made training on large-scale data feasible.

The pre-training and fine-tuning paradigm has further propelled LLM progress. Pre-training lets the model learn basic language patterns from vast amounts of unlabeled data, while fine-tuning adapts it to specific tasks. Objectives such as Masked Language Modeling (MLM) and Causal Language Modeling (CLM) enable the model to capture semantic relationships and deeper language structure.

As models have grown, their performance has also improved [10]. OpenAI's GPT-3, for example, has 175 billion parameters, and later models such as GPT-4 show greater precision and flexibility on complex tasks (OpenAI has not disclosed GPT-4's parameter count). However, the computational resources and time required for training and inference have risen sharply with model size, making training cost and energy consumption pressing issues. Researchers are exploring more efficient training and optimization strategies, such as mixed-precision training, model compression, and knowledge distillation, to address these challenges.

Looking ahead, LLM development is likely to focus on two areas. First, model scale and performance will continue to increase, with new architectures and algorithms further improving reasoning ability and broadening the range of applications. Second, energy efficiency and computational efficiency will become key research priorities: reducing resource consumption and environmental impact while maintaining high performance will be an important direction for future LLMs.

In conclusion, progress in LLM technology has made its application increasingly widespread, and model accuracy and efficiency are expected to keep improving. In the future, LLMs are likely to play a key role not only in traditional NLP tasks but also in emerging areas such as multimodal learning, intelligent decision-making, and automated analysis [11].
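Knowledge distillation, one of the efficiency strategies mentioned in this section, trains a small student model to imitate a large teacher. The sketch below assumes the widely used formulation introduced by Hinton and colleagues: a weighted sum of ordinary cross-entropy on the true label and a KL-divergence term between temperature-softened teacher and student distributions. The logits, temperature, and weight `alpha` are illustrative values, and in a real system these losses would be computed inside an autodiff framework rather than in plain Python.

```python
import math

def softmax(logits, temperature=1.0):
    # Temperature > 1 flattens the distribution, exposing how the teacher ranks wrong classes.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; terms with p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, true_label, temperature=2.0, alpha=0.5):
    """Weighted sum of hard-label cross-entropy and temperature-softened KL to the teacher."""
    soft_term = temperature ** 2 * kl_divergence(
        softmax(teacher_logits, temperature), softmax(student_logits, temperature)
    )  # the T^2 factor keeps this term's gradient scale comparable as T changes
    hard_term = -math.log(softmax(student_logits)[true_label])  # standard cross-entropy at T = 1
    return alpha * hard_term + (1 - alpha) * soft_term

teacher = [4.0, 1.0, 0.5]  # hypothetical logits from a large teacher model
student = [2.5, 1.5, 0.2]  # hypothetical logits from a smaller student model
print(round(distillation_loss(student, teacher, true_label=0), 4))
```

When the student already matches the teacher, the soft term vanishes and only the ordinary cross-entropy remains.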

3. Application of Large Language Models in Cybersecurity

3.1. Threat Detection and Intrusion Defense

As cyberattack methods continue to evolve, traditional security mechanisms face new challenges, and applying Large Language Models (LLMs) to threat detection and intrusion defense is emerging as a promising way to counter complex threats. With their natural language understanding and generation capabilities, LLMs can process large amounts of network data, identify potential attack patterns, and automatically generate security responses, improving the efficiency and accuracy of threat detection.

First, LLMs can quickly surface signs of attack by analyzing large volumes of text-like data from network traffic, log files, emails, and other communication channels. For example, by analyzing abnormal patterns, malicious code, and suspicious activity in network traffic, LLMs can help security systems detect intrusions and malicious attacks in real time [12]. Having learned both known attack patterns and normal behavior, these models can pick up subtle anomalies that rule-based methods may miss.

In intrusion defense, LLMs can work alongside Intrusion Detection Systems (IDS) to improve attack detection and response. Traditional IDS typically rely on rule matching or signature recognition, which is often ineffective against zero-day attacks and other new threats. Because LLMs learn general representations through self-supervised pre-training, they may recognize attack patterns that are absent from signature databases. By learning attacker behavior patterns, they can also anticipate likely attack actions from context, enabling more proactive defense against unknown threats. For example, for SQL injection or cross-site scripting (XSS) attacks on websites, an LLM can analyze request data, identify abnormal inputs, and trigger a block promptly.

LLMs can also generate defense strategies through natural language generation, strengthening the automated response capabilities of intrusion defense systems. Against complex cyberattacks, rapid and automated response is critical: LLMs can propose emergency measures such as blocking specific IP addresses, halting the spread of malware, or updating firewall configurations, so that defensive action can be taken quickly. Overall, applying LLMs to threat detection and intrusion defense lets security systems handle multi-dimensional data, recognize complex attack behavior, and respond more intelligently and automatically, improving both detection accuracy and resilience to new types of threats.
With continued technological development, LLMs are expected to play an increasingly important role in cybersecurity and to become an essential part of defending against future cyberattacks [13].
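The automated-blocking workflow described in this section is safest when the model only proposes an action and ordinary code validates it before anything is enforced. The sketch below assumes a hypothetical `classify` callable that wraps whatever LLM endpoint is in use and returns a JSON string; `ALLOWED_ACTIONS`, `build_prompt`, and the `fake_model` stub are illustrative names, not part of any specific product. Any model output that cannot be parsed or falls outside the allowlist is downgraded to human review.

```python
import ipaddress
import json
from typing import Callable

# Hypothetical action vocabulary: a real deployment would map these onto its own WAF or firewall API.
ALLOWED_ACTIONS = {"allow", "block_ip", "flag_for_review"}

def build_prompt(method: str, path: str, body: str) -> str:
    """Pack the request fields into a prompt that asks the model for a JSON verdict."""
    return (
        "Classify the HTTP request below as benign, sqli, xss, or other_attack. "
        'Reply only with JSON of the form {"label": ..., "action": ..., "reason": ...}, '
        'where action is one of "allow", "block_ip", "flag_for_review".\n'
        f"METHOD: {method}\nPATH: {path}\nBODY: {body}"
    )

def screen_request(client_ip: str, method: str, path: str, body: str,
                   classify: Callable[[str], str]) -> dict:
    """Ask the model for a verdict, then validate it before anything is enforced."""
    raw = classify(build_prompt(method, path, body))
    try:
        verdict = json.loads(raw)
    except json.JSONDecodeError:
        # Unparseable output never triggers an automated block.
        return {"action": "flag_for_review", "reason": "unparseable model output"}
    if not isinstance(verdict, dict):
        return {"action": "flag_for_review", "reason": "verdict is not a JSON object"}
    action = verdict.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        return {"action": "flag_for_review", "reason": f"disallowed action {action!r}"}
    if action == "block_ip":
        try:
            ip = str(ipaddress.ip_address(client_ip))
        except ValueError:
            return {"action": "flag_for_review", "reason": "invalid client IP"}
        return {"action": "block_ip", "ip": ip, "reason": verdict.get("reason", "")}
    return {"action": action, "reason": verdict.get("reason", "")}

def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call, used only to exercise the pipeline."""
    if "' OR 1=1" in prompt:
        return '{"label": "sqli", "action": "block_ip", "reason": "tautology in query string"}'
    return '{"label": "benign", "action": "allow", "reason": "no indicators found"}'

print(screen_request("203.0.113.7", "GET", "/items?id=1' OR 1=1--", "", fake_model))
```

Keeping the model's output on a short allowlist is a design choice: it limits what a mistaken or manipulated verdict can do, at the cost of routing ambiguous cases to analysts.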

3.2. Anomaly Detection and Malicious Activity Prediction

As cyberattack methods grow more complex, traditional anomaly detection and malicious activity prediction methods often fail to address new types of threats. Large Language Models (LLMs) show considerable potential here because of their strong data processing and pattern recognition abilities. By learning from large amounts of data, LLMs can help identify abnormal user or system behavior and predict potential malicious activity, strengthening network defenses.

In anomaly detection, LLMs can learn normal network behavior patterns and flag deviations from them. Unlike traditional rule-based or statistical methods, LLMs extract features from data automatically and can capture subtler and more complex behavior patterns [14]. For example, by analyzing user login patterns, network requests, and system operation logs, a model can identify atypical behavior such as account takeover or the spread of malware. LLMs can also judge how suspicious a behavior is from its context, further improving detection accuracy. Because the model can adapt to new attack patterns and previously unseen anomalies, a detection system can both identify known threats and respond in real time to unknown ones.

In malicious activity prediction, LLMs benefit from their ability to process and learn from large-scale data. Trained on historical data, network events, and attack logs, a model can recognize early signs of attack and predict likely malicious activity. For DDoS attacks, for instance, the model can anticipate large-scale traffic floods by analyzing historical attack data, traffic patterns, and system load, so that preventive measures can be taken in advance. The model can also integrate external threat intelligence, such as the activities of hacker groups or known vulnerabilities, to predict likely attack methods and targets and help organizations prepare defenses proactively.

Applying LLMs to anomaly detection and malicious activity prediction raises the intelligence of security defense systems. Compared with traditional rule-based systems, LLMs can capture complex attack behavior more accurately and provide real-time predictions and responses. Through continual learning and adaptation, they can address known threats and react promptly to new, unknown attack methods, offering more efficient and comprehensive protection across network environments and helping to safeguard data and system stability.
As LLM technology evolves, its use in anomaly detection and malicious activity prediction will become more refined, especially in handling large data volumes, identifying novel attacks, and automating responses. Combining LLMs with complementary techniques, such as other machine learning and deep learning models and big data analytics, should further strengthen their capabilities and bring more innovation to the cybersecurity field [15,16,17].
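One common way to turn "learning normal behavior patterns" into an anomaly score is to measure how surprising a new log line is under a language model trained only on normal logs, using the average negative log-likelihood per token (the logarithm of perplexity). The sketch below substitutes a tiny add-one-smoothed bigram model for an LLM so that it runs standalone; the sample log lines and the threshold of 2.0 are made up, and in practice the threshold would be calibrated on held-out normal data.

```python
import math
from collections import Counter

class BigramLogModel:
    """Add-one-smoothed bigram model over whitespace tokens of normal log lines.

    A deliberately tiny stand-in for an LLM's next-token probabilities.
    """

    def __init__(self, normal_lines):
        self.context = Counter()  # how often each token appears as the left side of a bigram
        self.bigrams = Counter()
        vocab = set()
        for line in normal_lines:
            tokens = ["<s>"] + line.split()
            vocab.update(tokens[1:])
            self.context.update(tokens[:-1])
            self.bigrams.update(zip(tokens, tokens[1:]))
        self.vocab_size = len(vocab) + 1  # one extra slot shared by all unseen tokens

    def avg_nll(self, line):
        """Mean negative log-likelihood per token; higher means less like the training logs."""
        tokens = ["<s>"] + line.split()
        nll = 0.0
        for prev, cur in zip(tokens, tokens[1:]):
            p = (self.bigrams[(prev, cur)] + 1) / (self.context[prev] + self.vocab_size)
            nll -= math.log(p)
        return nll / max(len(tokens) - 1, 1)

def flag_anomalies(model, lines, threshold):
    """Return (line, score) for every line whose score exceeds the threshold."""
    flagged = []
    for line in lines:
        score = model.avg_nll(line)
        if score > threshold:
            flagged.append((line, round(score, 3)))
    return flagged

normal = [
    "sshd accepted password for alice from 10.0.0.5",
    "sshd accepted password for bob from 10.0.0.6",
    "sshd accepted password for alice from 10.0.0.5",
    "cron started job backup",
]
model = BigramLogModel(normal)
suspicious = "sshd failed password for root from 198.51.100.9"
print(flag_anomalies(model, [normal[0], suspicious], threshold=2.0))
```

Only the failed root login is flagged here, because most of its token transitions never occurred in the normal logs.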

3.3. Security Incident Response and Automation

As cyber threats evolve, traditional security incident response methods are increasingly inadequate for complex and changing attack scenarios. Applying Large Language Models (LLMs) to security incident response and automation offers a new way to improve the efficiency and accuracy of emergency response. Using the natural language processing capabilities of LLMs, security teams can analyze, respond to, and handle incidents more effectively, and automated defenses can substantially shorten response times and reduce errors caused by manual intervention [18].

LLMs can automate the analysis of security event logs and help security personnel quickly identify potential threats. Traditional log analysis typically requires manual inspection of large volumes of logs, which is time-consuming and prone to missing important information. LLMs can extract key information from large amounts of log data, identify abnormal behavior or signs of attack, and classify findings by priority. This lets security teams concentrate on the most urgent threats and speeds up incident response considerably.

During response, the natural language generation ability of LLMs plays a crucial role. When an incident occurs, the model can generate detailed incident reports, emergency response plans, and follow-up recommendations from its analysis. These reports support decision-making by security teams and help management understand the nature, impact, and handling of the incident. The model can also recommend response strategies suited to different attack types, such as blocking specific IPs, modifying firewall rules, or isolating affected systems, enabling rapid and effective defense.

With LLM-driven automation, security systems can also launch automated response procedures soon after an incident occurs, reducing the need for human intervention. Traditional security systems often require manually written rules and strategy updates, whereas LLMs can generate and adjust security policies in real time and trigger appropriate defensive measures. For example, when the model detects a SQL injection attack, it can activate database security policies, block suspicious connections, and prevent the attack from spreading.
This automated response improves defensive efficiency and reduces the delays and errors that manual handling can introduce. LLMs also offer significant advantages in coordinating incident response across systems. In large enterprises and organizations, incidents often span multiple systems and platforms; LLMs can coordinate the flow of information and operational commands between them so that security modules act in sync. Automated cross-system collaboration lets security teams decide and act faster and avoids information silos and response delays between systems. In conclusion, applying LLMs to security incident response and automation provides more efficient and intelligent cybersecurity solutions: it improves the accuracy of threat detection and event analysis and makes defense more automated and real-time. As the technology advances, LLMs will play an increasingly important role in incident response, driving cybersecurity management toward greater efficiency and intelligence [19,20].
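The triage and recommendation steps described in this section need deterministic scaffolding around the model: a priority queue so that the most severe incidents are handled first, and a vetted playbook against which model-generated recommendations can be checked. The sketch below covers only that scaffolding and leaves the LLM call out; the severity scale, the `PLAYBOOK` entries (drawn from the examples in the text), and the event format are all hypothetical.

```python
import heapq
from dataclasses import dataclass, field

# Lower number = handled first.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}

# Hypothetical playbook assembled from the example actions in the text;
# a real one would come from the organization's own runbooks.
PLAYBOOK = {
    "sql_injection": ["block suspicious connections", "tighten database access policy"],
    "ddos": ["rate-limit or block source IPs", "notify upstream provider"],
    "malware": ["isolate affected host", "collect forensic evidence"],
}

@dataclass(order=True)
class Incident:
    rank: int  # primary sort key: severity rank
    seq: int   # tie-breaker: arrival order
    attack_type: str = field(compare=False)
    host: str = field(compare=False)
    severity: str = field(compare=False)

def triage(events):
    """Yield incidents most severe first, keeping arrival order within a severity level."""
    queue = []
    for seq, ev in enumerate(events):
        heapq.heappush(queue, Incident(SEVERITY_RANK[ev["severity"]], seq,
                                       ev["attack_type"], ev["host"], ev["severity"]))
    while queue:
        yield heapq.heappop(queue)

def draft_report(incident):
    """Structured skeleton that an LLM-written narrative could be attached to and checked against."""
    return {
        "host": incident.host,
        "attack_type": incident.attack_type,
        "severity": incident.severity,
        "recommended_actions": PLAYBOOK.get(incident.attack_type, ["escalate to an analyst"]),
    }

events = [
    {"attack_type": "malware", "host": "ws-12", "severity": "medium"},
    {"attack_type": "sql_injection", "host": "db-01", "severity": "critical"},
    {"attack_type": "ddos", "host": "web-03", "severity": "high"},
]
for incident in triage(events):
    print(draft_report(incident))
```

Attack types missing from the playbook fall back to human escalation rather than to an automated action.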

4. Data Analysis and Experimental Results

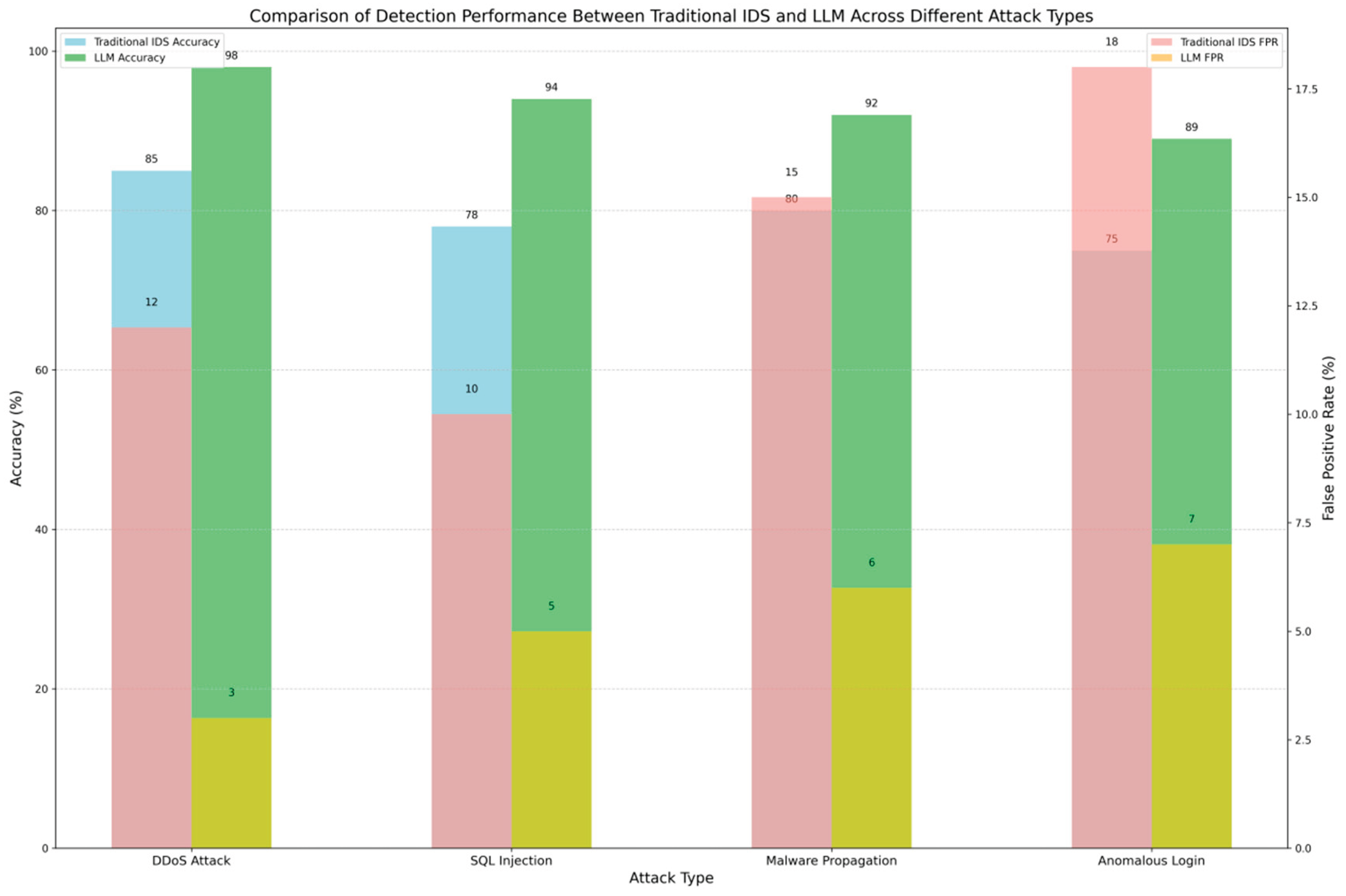

To validate the effectiveness of Large Language Models (LLMs) in cybersecurity, particularly in threat detection, anomalous behavior recognition, and security event response, we conducted multiple experiments comparing traditional methods with LLM-based security protection systems in real network environments. The experimental data came primarily from simulated network attacks and abnormal behavior scenarios, including DDoS attacks, SQL injection, and malware propagation. We analyze key performance indicators, namely detection accuracy, false positive rate, and response time, to assess the advantages of LLMs in improving cybersecurity defense. We first collected network traffic data, system logs, and user behavior data from multiple real environments, preprocessed the data, and fed it into the LLM. The experiments ran both a traditional Intrusion Detection System (IDS) and an LLM-based detection system over multiple rounds of tests covering common network attacks and abnormal user behavior, such as DDoS attacks, SQL injection, and anomalous login activity. For each attack type, we measured detection accuracy, false positive rate, and response time. To assess the effectiveness of LLMs, we compared them with a traditional signature-based IDS; Figure 1 shows the detection performance of both systems across the attack types [21,22,23].
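The text does not spell out how detection accuracy and false positive rate were computed. Assuming the standard confusion-matrix definitions, accuracy = (TP + TN) / (TP + FP + TN + FN) and false positive rate = FP / (FP + TN), they can be computed as in this sketch. The counts are invented for illustration and are not the results reported in this paper.

```python
from dataclasses import dataclass

def _ratio(num, den):
    # Return 0.0 instead of raising when the denominator is empty.
    return num / den if den else 0.0

@dataclass
class ConfusionCounts:
    tp: int  # attacks correctly flagged
    fp: int  # benign events wrongly flagged
    tn: int  # benign events correctly passed
    fn: int  # attacks missed

    def accuracy(self) -> float:
        return _ratio(self.tp + self.tn, self.tp + self.fp + self.tn + self.fn)

    def false_positive_rate(self) -> float:
        # Fraction of benign events that raised an alert.
        return _ratio(self.fp, self.fp + self.tn)

    def detection_rate(self) -> float:
        # Recall: fraction of attacks that were flagged.
        return _ratio(self.tp, self.tp + self.fn)

# Made-up counts for illustration only; these are not the paper's results.
example = ConfusionCounts(tp=90, fp=5, tn=95, fn=10)
print(example.accuracy(), example.false_positive_rate(), example.detection_rate())
```

Returning 0.0 for an empty denominator is an arbitrary convention that keeps the sketch total; an evaluation harness might prefer to raise an error instead.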

Figure 1 shows that LLMs outperformed the traditional IDS on all tested attack types, especially DDoS and SQL injection attacks. The false positive rate of the LLM-based system was also significantly lower, meaning it raised fewer unnecessary alerts on benign activity. Beyond detection performance, we also evaluated the response time of both systems. Response time is a critical indicator of defensive capability because it directly determines how quickly cybersecurity incidents can be addressed. Table 1 compares the average response times of the two systems for different security events [24].
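The paper reports average response times without defining where timing starts and stops. This sketch assumes that response time means the wall-clock time from handing an event to a detection-and-response handler until the handler returns; `hypothetical_handler` is a placeholder for the system under test. It also reports a 95th-percentile latency alongside the mean, an addition not in the original, because tail latency can matter as much as the average for incident response.

```python
import math
import statistics
import time
from typing import Callable, Iterable

def measure_response_times(handle: Callable[[dict], object], events: Iterable[dict]) -> dict:
    """Time each call to `handle` and summarize the latencies in milliseconds."""
    latencies = []
    for event in events:
        start = time.perf_counter()
        handle(event)
        latencies.append((time.perf_counter() - start) * 1000.0)
    if not latencies:
        return {"n": 0, "mean_ms": None, "p95_ms": None}
    latencies.sort()
    # Nearest-rank 95th percentile: the ceil(0.95 * n)-th smallest value.
    p95 = latencies[max(math.ceil(0.95 * len(latencies)) - 1, 0)]
    return {"n": len(latencies), "mean_ms": statistics.fmean(latencies), "p95_ms": p95}

def hypothetical_handler(event: dict) -> str:
    """Placeholder for a detector plus response step; replace with the system under test."""
    return "block" if event.get("suspicious") else "allow"

events = [{"suspicious": i % 3 == 0} for i in range(100)]
print(measure_response_times(hypothetical_handler, events))
```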

Table 1 shows that the LLM-based system achieved faster response times for all tested security events, especially DDoS and SQL injection attacks. This suggests that, beyond improving detection, LLMs can quickly generate and execute defense strategies, making incident response more efficient and timely. Overall, the experimental results indicate that LLMs hold significant advantages over traditional methods in detection accuracy, false positive rate, and response time. LLMs can handle complex cybersecurity data, identify new attack patterns, and automate response actions during security incidents [25,26]. These features make LLMs an important tool for improving network security and dealing with evolving attack methods. LLM performance may be enhanced further through continued model training and richer data, and combining LLMs with other advanced techniques such as reinforcement learning, automated configuration, and cross-system collaboration could enable them to play an even greater role in cybersecurity [27,28,29].

5. Challenges and Limitations of Large Language Models in Cybersecurity

Although Large Language Models (LLMs) have shown great potential in cybersecurity, their application also faces several challenges and limitations.

First, data privacy and security are significant concerns. Training LLMs for cybersecurity requires vast amounts of network data, which often contains sensitive information such as user behavior and access logs. If this data is not handled properly, it could lead to privacy breaches or misuse. Ensuring data security and privacy, especially for sensitive information, is therefore a key issue in applying LLMs.

Second, computational resources and cost are a major obstacle. LLMs typically require extensive computation for training and inference, especially on complex cybersecurity tasks. Even for large enterprises and organizations, the hardware and energy costs of training and operating these models are high, and they may be prohibitive for small and medium-sized businesses.

Third, generalization and false positives remain limitations. Although LLMs perform well in threat detection and malicious activity prediction, they depend on large amounts of training data and their ability to generalize is still limited. Faced with unknown types of attack, a model may raise false positives or miss threats, making the defense system less reliable.

Finally, transparency and interpretability are important technical challenges. LLMs are often regarded as "black boxes" whose decision-making is difficult to understand and explain. In cybersecurity, especially where legal and compliance requirements are increasing, this lack of interpretability may restrict their use.
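One mitigation for the privacy concern raised in this section is to pseudonymize sensitive fields before logs leave the organization, for example before they are sent to an externally hosted model. The sketch below is an illustration under stated assumptions rather than a method from this paper: its regular expressions cover only e-mail addresses and IPv4 addresses, and the locally kept `mapping` gives the same value the same placeholder across lines so that a model can still correlate repeated sources.

```python
import re

# Illustrative patterns only: production redaction also needs IPv6, hostnames,
# usernames, API tokens, and so on.
PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("IPV4", re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")),
]

def pseudonymize(line: str, mapping: dict) -> str:
    """Replace sensitive values with stable placeholders such as <IPV4_1>.

    `mapping` (original value -> placeholder) stays on the local side, so the same
    IP keeps the same placeholder across lines and analysts can reverse it later.
    """
    for label, pattern in PATTERNS:
        def substitute(match, label=label):
            value = match.group(0)
            if value not in mapping:
                used = sum(1 for p in mapping.values() if p.startswith(f"<{label}_"))
                mapping[value] = f"<{label}_{used + 1}>"
            return mapping[value]
        line = pattern.sub(substitute, line)
    return line

mapping = {}
print(pseudonymize("login failed for alice@example.com from 10.0.0.5", mapping))
print(pseudonymize("retry from 10.0.0.5 then 10.0.0.9", mapping))
```

Because placeholders are stable, a model can still notice that one source address appears in several failed logins without ever seeing the real address.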
In summary, although LLMs hold great promise in cybersecurity, they still face many challenges in areas such as data privacy protection, computational resource demands, model generalization ability, and interpretability. With ongoing technological advancements, it is expected that these issues will be addressed in the future through algorithm optimization, improved model efficiency, and enhanced security measures.

6. Conclusion

Large Language Models (LLMs) have demonstrated tremendous potential in cybersecurity, particularly in areas such as threat detection, anomaly behavior recognition, and security event response. Through their powerful data processing capabilities and self-learning mechanisms, LLMs can significantly improve the accuracy and speed of cybersecurity defenses. However, despite their significant advantages, the application of LLMs still faces challenges related to data privacy, computational resources, model generalization, and interpretability. In the future, with continued optimization of technology, LLMs are expected to play an increasingly important role in cybersecurity, especially in intelligent defense and automated response, helping to evolve cybersecurity systems towards more efficient and precise protection mechanisms.

References

- Xiang A, Qi Z, Wang H, et al. A multimodal fusion network for student emotion recognition based on transformer and tensor product. 2024 IEEE 2nd International Conference on Sensors, Electronics and Computer Engineering (ICSECE). IEEE, 2024: 1-4.

- Diao, Su, et al. "Ventilator pressure prediction using recurrent neural network." arXiv preprint arXiv:2410.06552 (2024).

- Yang, Haowei, et al. "Optimization and Scalability of Collaborative Filtering Algorithms in Large Language Models." arXiv preprint arXiv:2412.18715 (2024).

- Yin J, Wu X, Liu X. Multi-class classification of breast cancer gene expression using PCA and XGBoost. Theoretical and Natural Science, 2025, 76: 6-11.

- Tan, Chaoyi, et al. "Generating Multimodal Images with GAN: Integrating Text, Image, and Style." arXiv preprint arXiv:2501.02167 (2025).

- Yang, Haowei, et al. "Analysis of Financial Risk Behavior Prediction Using Deep Learning and Big Data Algorithms." arXiv preprint arXiv:2410.19394 (2024).

- Tang, Xirui, et al. "Research on heterogeneous computation resource allocation based on data-driven method." 2024 6th International Conference on Data-driven Optimization of Complex Systems (DOCS). IEEE, 2024.

- Mo K, Chu L, Zhang X, et al. Dral: Deep reinforcement adaptive learning for multi-uavs navigation in unknown indoor environment. arXiv preprint arXiv:2409.03930, 2024.

- Lin, Xueting, et al. "Enhanced Recommendation Combining Collaborative Filtering and Large Language Models." arXiv preprint arXiv:2412.18713 (2024).

- Shi X, Tao Y, Lin S C. Deep Neural Network-Based Prediction of B-Cell Epitopes for SARS-CoV and SARS-CoV-2: Enhancing Vaccine Design through Machine Learning. arXiv preprint arXiv:2412.00109, 2024.

- Xiang A, Huang B, Guo X, et al. A neural matrix decomposition recommender system model based on the multimodal large language model. Proceedings of the 2024 7th International Conference on Machine Learning and Machine Intelligence (MLMI). 2024: 146-150.

- Wang T, Cai X, Xu Q. Energy Market Price Forecasting and Financial Technology Risk Management Based on Generative AI. Applied and Computational Engineering, 2024, 100: 29-34.

- Shen, Jiajiang, Weiyan Wu, and Qianyu Xu. "Accurate prediction of temperature indicators in eastern China using a multi-scale CNN-LSTM-attention model." arXiv preprint arXiv:2412.07997 (2024).

- Xiang A, Zhang J, Yang Q, et al. Research on splicing image detection algorithms based on natural image statistical characteristics. arXiv preprint arXiv:2404.16296, 2024.

- Wang, H., Zhang, G., Zhao, Y., Lai, F., Cui, W., Xue, J., ... & Lin, Y. (2024, December). Rpf-eld: Regional prior fusion using early and late distillation for breast cancer recognition in ultrasound images. 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM): 2605-2612.

- Yu Q, Wang S, Tao Y. Enhancing anti-money laundering detection with self-attention graph neural networks. SHS Web of Conferences. EDP Sciences, 2025, 213: 01016.

- Min, Liu, et al. "Financial Prediction Using DeepFM: Loan Repayment with Attention and Hybrid Loss." 2024 5th International Conference on Machine Learning and Computer Application (ICMLCA). IEEE, 2024.

- Ge, Ge, et al. "A review of the effect of the ketogenic diet on glycemic control in adults with type 2 diabetes." Precision Nutrition 4.1 (2025): e00100.

- Xiang A, et al. User Behavior Analysis in Privacy Protection with Large Language Models: A Study on Privacy Preferences with Limited Data. arXiv preprint arXiv:2505.06305, 2025.

- Li, Xiangtian, et al. "Artistic Neural Style Transfer Algorithms with Activation Smoothing." arXiv preprint arXiv:2411.08014 (2024).

- Guo H, Zhang Y, Chen L, et al. Research on vehicle detection based on improved YOLOv8 network. arXiv preprint arXiv:2501.00300, 2024.

- Huang B, Lu Q, Huang S, et al. Multi-modal clothing recommendation model based on large model and VAE enhancement. arXiv preprint arXiv:2410.02219, 2024.

- Tan, Chaoyi, et al. "Real-time Video Target Tracking Algorithm Utilizing Convolutional Neural Networks (CNN)." 2024 4th International Conference on Electronic Information Engineering and Computer (EIECT). IEEE, 2024.

- Yang, Haowei, et al. "Research on the Design of a Short Video Recommendation System Based on Multimodal Information and Differential Privacy." arXiv preprint arXiv:2504.08751 (2025).

- Ziang H, Zhang J, Li L. Framework for lung CT image segmentation based on UNet++. arXiv preprint arXiv:2501.02428, 2025.

- Qi, Zhen, et al. "Detecting and Classifying Defective Products in Images Using YOLO." arXiv preprint arXiv:2412.16935 (2024).

- Yin Z, Hu B, Chen S. Predicting employee turnover in the financial company: A comparative study of catboost and xgboost models. Applied and Computational Engineering, 2024, 100: 86-92.

- Shih K, Han Y, Tan L. Recommendation System in Advertising and Streaming Media: Unsupervised Data Enhancement Sequence Suggestions. arXiv preprint arXiv:2504.08740, 2025.

- Lv, Guangxin, et al. "Dynamic covalent bonds in vitrimers enable 1.0 W/(m K) intrinsic thermal conductivity." Macromolecules 56.4 (2023): 1554-1561.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).