1. Introduction

Deep learning, a pivotal subset of machine learning, has emerged as a cornerstone of modern artificial intelligence (AI), enabling machines to process vast datasets and perform tasks once exclusive to human intelligence. While the conceptual roots of AI trace back to ancient philosophical inquiries into cognition, the formal field began in the mid-20th century. This survey provides a comprehensive overview of deep learning techniques, their applications across industries such as healthcare, finance, manufacturing, and environmental science, and the ethical challenges they pose. Current AI systems excel in specific domains like automated client interactions, voice recognition, and visual pattern recognition, yet challenges in sensing, reasoning, and innovation persist, promising future advancements.

1.1. Brief History and Importance in AI

Since its inception in the 1950s, artificial intelligence (AI) has advanced through a series of milestones that shaped its impact across diverse fields (Russell & Norvig, 2020). AI was formally recognized as a discipline at the Dartmouth Conference in 1956, which laid the foundation for decades of research (McCarthy et al., 2006). During the periods known as "AI winters", funding declined because early rule-based systems could not handle complex tasks (Crevier, 1993). The 2000s marked a resurgence driven by machine learning, and particularly deep learning, which reignited interest in AI research by leveraging vast datasets to identify complex patterns (LeCun et al., 2015).

AI technologies hold promise for enhancing decision-making, boosting productivity, and solving previously intractable problems (Agrawal et al., 2018). Yet they also raise ethical concerns regarding privacy, bias, and job displacement, necessitating careful consideration and regulation (Bostrom & Yudkowsky, 2014). It is crucial for researchers and practitioners to understand AI’s capabilities, limitations, and societal implications as it integrates further into various aspects of society (Jordan & Mitchell, 2015). Deep learning, in particular, stands out as a transformative area with the potential to revolutionize numerous industries.

1.2. Deep Learning

Deep learning, a subset of machine learning, automatically extracts representations or patterns from data such as images, videos, or text, without requiring human domain knowledge or manually crafted rules. For instance, while traditional machine learning might rely on predefined features like "lemons are round and yellow" to detect a lemon on a production line, deep learning independently identifies relevant characteristics from raw data. Its primary advantage lies in eliminating the need for manual feature extraction, enabling models to learn directly from complex datasets.

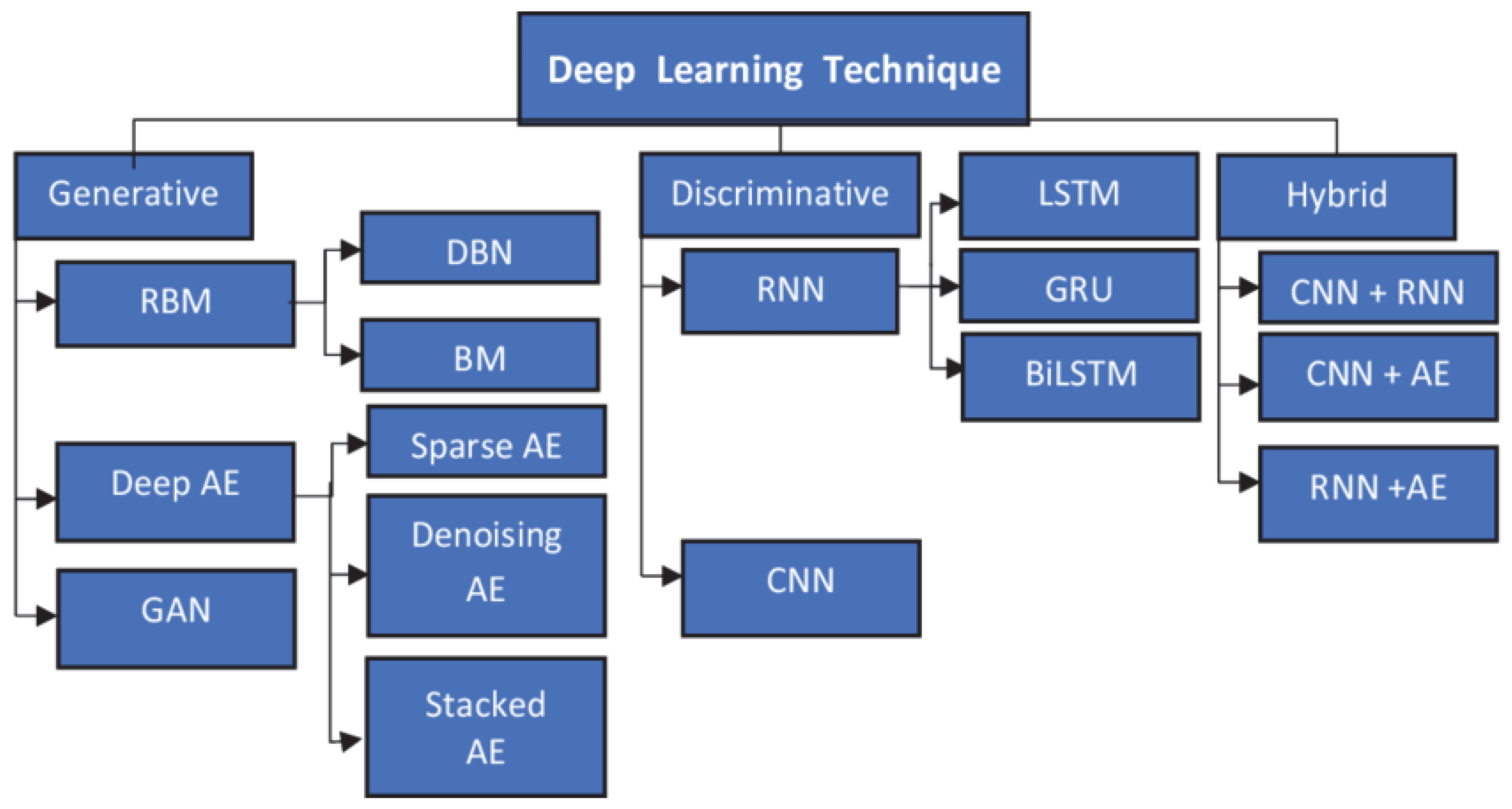

Deep learning typically needs less human involvement than traditional machine learning, but it requires more powerful hardware with many processing units to achieve a high level of parallelism. Deep learning models also take longer to build than traditional machine learning models, although once trained they generate results quickly, and the quality of those results tends to improve as more training data becomes available. Deep learning algorithms handle high-dimensional unstructured data by using neural networks that learn and extract relevant features by themselves, whereas in traditional machine learning the outcome depends on the programmer's ability to decide which features should be learned. A deep learning algorithm can be trained on a dataset of images or other instances to build a predictive model, which can then be used to make predictions on new images or instances. Because deep learning models are built on artificial neural networks (ANNs), deep learning is also referred to as deep neural learning or deep neural networking. An overview of key deep learning architectures and their typical applications is presented in Figure 1.

1.3. Deep Learning Methods

Several approaches are used to build robust deep learning models, including training from scratch, transfer learning, learning rate decay, and dropout.

Training from scratch: This technique needs a large labelled dataset and a network architecture designed to learn the features and the model. It is particularly suitable for new applications and for applications with many output categories. It is used less often than the other methods because training can take days or weeks and requires large volumes of data.

Transfer learning: This procedure fine-tunes an existing, previously trained model and requires access to the internals of the established network. The existing network is first fed new data containing previously unseen classes. After fine-tuning, the network can perform the new task with improved classification ability. Most importantly, this method requires less data, which reduces training time considerably.

Learning rate decay: This technique starts with a large learning rate and reduces it several times during training, which supports both optimization and generalization. The learning rate is a hyperparameter that determines the step size of each iteration while moving towards a minimum of the loss function. It controls how quickly the model learns, that is, how strongly newly acquired information overrides old information. An excessively high learning rate makes training unstable, whereas a very small learning rate prolongs training and may leave the optimizer stuck.
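The idea of learning rate decay can be illustrated with a minimal sketch. The step schedule, the function names, and the toy loss f(w) = (w - 3)^2 below are assumptions chosen for illustration; they are not taken from any specific framework.

```python
def step_decay(initial_lr, decay_factor, steps_per_drop, step):
    """Learning rate after `step` updates: multiplied by decay_factor every steps_per_drop steps."""
    drops = step // steps_per_drop
    return initial_lr * (decay_factor ** drops)


def minimise_quadratic(initial_lr=0.4, decay_factor=0.5, steps_per_drop=10, n_steps=40):
    """Gradient descent on the toy loss f(w) = (w - 3)^2 with a decaying learning rate."""
    w = 0.0
    for step in range(n_steps):
        grad = 2.0 * (w - 3.0)  # derivative of (w - 3)^2
        lr = step_decay(initial_lr, decay_factor, steps_per_drop, step)
        w -= lr * grad          # large steps early, smaller steps later
    return w


w_final = minimise_quadratic()  # approaches the minimiser w = 3
```

Early iterations take large steps towards the minimum, while the later, smaller steps refine the estimate.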

Dropout: Deep learning networks are artificial neural networks with many layers of neurons between input and output. Dropout ignores randomly selected neurons during training, that is, the neurons are temporarily detached from the network. In other words, instead of updating all the weights in the network at every step, dropout updates only the portion that belongs to the active neurons. A different group of neurons is active in every iteration, which prevents a few neurons from dominating the model. This helps to reduce overfitting and enables deeper networks to make good predictions on new data. Overfitting occurs when a model fits its training samples too closely, often because there are too few of them, so that it generalizes poorly and performs badly on new data.
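A minimal sketch of dropout applied to a list of activations is given below. It assumes the common "inverted dropout" variant, in which surviving activations are rescaled by 1 / (1 - drop_prob) during training so that no rescaling is needed at prediction time. The rescaling and the function name are illustrative assumptions, not details stated in the text above.

```python
import random


def dropout(activations, drop_prob, training=True, rng=None):
    """Inverted dropout: zero each activation with probability drop_prob
    during training and rescale the survivors by 1 / (1 - drop_prob)."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return list(activations)  # all neurons are used at prediction time
    rng = rng or random.Random()
    keep_prob = 1.0 - drop_prob
    # A fresh random subset of neurons is kept on every call.
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


hidden = [0.2, 1.5, -0.7, 0.9]
train_out = dropout(hidden, drop_prob=0.5, rng=random.Random(0))
eval_out = dropout(hidden, drop_prob=0.5, training=False)  # unchanged copy
```

Because a different subset is dropped on each call, no single neuron can be relied upon throughout training.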

1.4. Overview of Key Deep Learning Techniques

Artificial intelligence has been transformed by deep learning, a subset of machine learning that enables machines to learn hierarchical representations of data (LeCun et al., 2015). The basis of deep learning is artificial neural networks with several layers that automatically extract features from raw data (Goodfellow et al., 2016). Krizhevsky et al. (2012) showed that CNNs perform strongly on image-related tasks, setting new benchmarks in computer vision. RNNs and variants such as the LSTM have performed well on sequential data in speech recognition and natural language processing (Hochreiter & Schmidhuber, 1997). The ability of deep learning models to capture long-range dependencies in sequential data has been improved by attention mechanisms and the transformer model (Vaswani et al., 2017). Generative models such as generative adversarial networks (GANs) and variational autoencoders (VAEs) have opened up new sources of data (Goodfellow et al., 2014; Kingma & Welling, 2013). By integrating deep neural networks with reinforcement learning methods, deep reinforcement learning has achieved breakthroughs in gaming and other dynamic decision-making problems (Mnih et al., 2015). Transfer learning and fine-tuning reduce the need for large labelled datasets (Pan & Yang, 2009). Self-supervised learning has brought further gains in representation learning from unlabelled data (Jing & Tian, 2020).

2. Fundamentals of Deep Learning

Deep learning is a branch of machine learning that has developed rapidly in recent years and has brought radical shifts in artificial intelligence and the ADA framework. At the core of these innovations are neural network architectures, which have demonstrated remarkable results in automatic feature extraction and have contributed substantially to progress on machine learning tasks (Alom et al., 2019; Farsal et al., 2018).

2.1. Neural Networks Basics

At the centre of deep learning is the neural network, a computational model loosely based on the neural structure of the human brain. A neural network consists of interconnected layers of artificial neurons, each of which processes information and passes it on. Neurons are the basic units of the network: mathematical functions that take inputs, combine them, and produce an output. Neural networks are highly flexible because they can learn non-linear functions by adjusting the weights between neurons during training. This allows them to learn patterns from data and makes them suitable for tasks such as image recognition, natural language processing, and predictive modelling.

The architecture of a neural network has a direct impact on its performance. Core architectures, including convolutional neural networks and recurrent neural networks, have proven effective and efficient in delivering high accuracy in many application areas. They exploit specific properties of the data, namely local connectivity and sequential structure, to solve various tasks (Mazurowski et al., 2018; LeCun et al., 2015).

2.2. Training Process Overview

Training a deep learning model generally involves several stages (Shickel et al., 2017). The first stage is to choose a network type, such as a convolutional or recurrent network, according to the requirements of the task, which determines the model structure (Guo et al., 2015). The model is then trained on a large set of sample data: an optimization loop adjusts the model parameters to minimize a loss function and thereby increase accuracy. During training, the model learns to extract patterns from the data and to make accurate predictions. Training large and complex models is computationally intensive and time-consuming, and it usually relies on GPUs or distributed systems (Tang et al., 2020).
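The optimization loop described above can be sketched on a deliberately small problem. The example assumes a linear model with two parameters, a mean squared error loss, and plain gradient descent; real deep learning models have many more parameters and compute gradients by backpropagation, but the loop has the same forward, backward, and update structure. All names and data are hypothetical.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=500):
    """Fit y ~ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    losses = []
    for _ in range(epochs):
        # Forward pass: predictions and loss with the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        losses.append(sum(e * e for e in errors) / n)
        # Backward pass: gradients of the loss with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Update step: move the parameters against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, losses


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1
w, b, losses = train_linear_model(xs, ys)
```

The recorded losses decrease over the epochs as the parameters approach the values that generated the data.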

2.3. Common Challenges for Beginners

Beginners in deep learning face a number of obstacles that can slow their progress, including issues with data and with technical implementation.

Data Challenges

Data Preparation: Data preparation takes a substantial amount of time in deep learning, from data acquisition through to data validation. Beginners often struggle with biased or noisy data, which can have a substantial impact on the model (Whang & Lee, 2020).

Data Quantity and Quality: Deep learning models depend heavily on large volumes of high-quality data, which beginners may find hard to access and manage (Whang & Lee, 2020).

Technical Challenges

Program Crashes and Debugging: Beginners frequently encounter program crashes and have limited debugging support, which can be frustrating and time-consuming (Zhang et al., 2019).

Model Migration and Implementation: Beginners are often unsure how to move models from one framework to another correctly. Related problems include API misuse and the wrong selection of hyperparameters (Zhang et al., 2019).

Computational Resources: Common difficulties include making use of GPU computation and working with static computation graphs.

Learning and Understanding

Complex Concepts: The mathematics and model structures behind neural networks can be difficult to grasp. This includes understanding how CNNs, RNNs, and more complicated networks work (Rivas, 2020).

Framework Setup: Setting up and using popular deep learning frameworks like TensorFlow and Keras can be challenging without prior experience (Rivas, 2020).

In summary, beginners in deep learning face challenges in data handling, implementation, and conceptual understanding. To overcome them, they need to become proficient at handling data, master deep learning frameworks, and gain a good understanding of the mathematics used in deep learning.

3. Convolutional Neural Networks (CNNs)

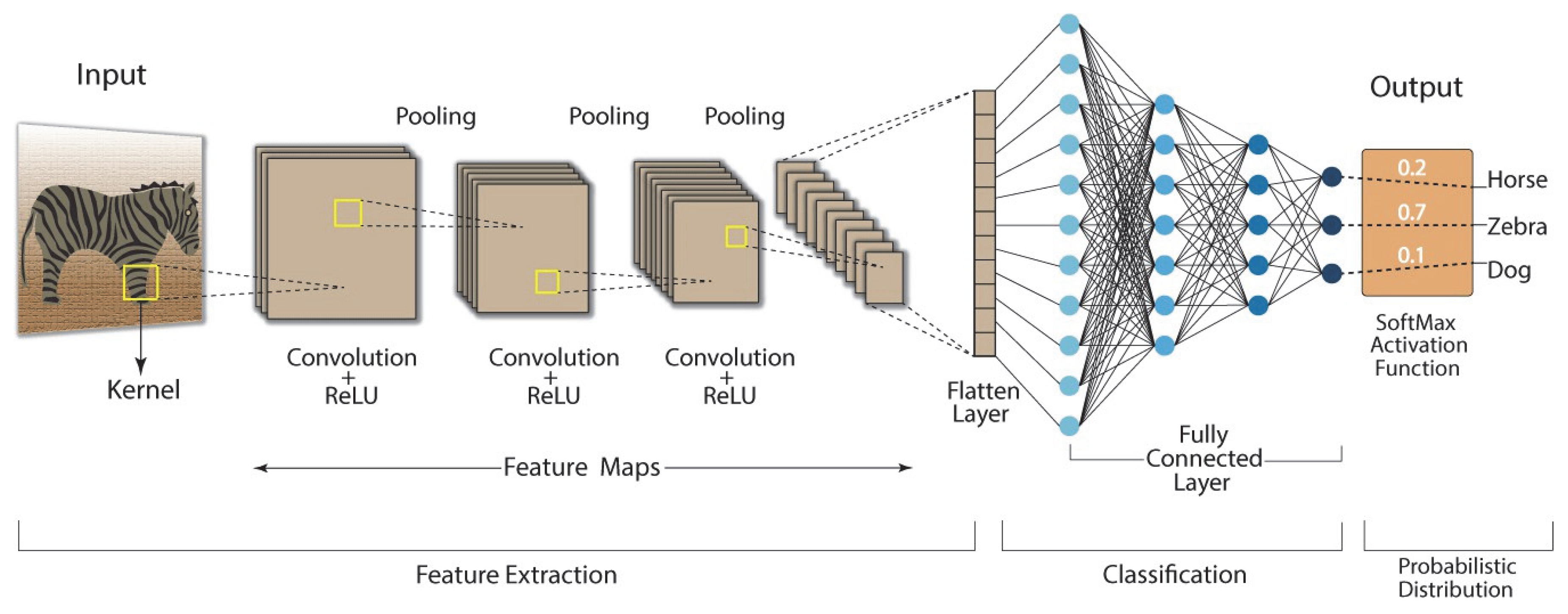

Convolutional Neural Networks (CNNs) took computer vision to a new level while also making natural language processing and speech recognition tasks easier to solve. Inspired by animal vision systems, CNNs learn features directly from data, which makes them powerful for visual tasks such as image classification, object detection, and video processing.

3.1. Basic Architecture

CNNs are built from a small set of layer types that transform the input data and extract information from it.

Convolutional Layers: These layers apply filters to the input data through a process known as convolution, extracting features such as edges, textures, and shapes (Khan et al., 2019; Ghosh et al., 2019; Bhatt et al., 2021).

Pooling Layers: These layers reduce the spatial dimensions of the data, which lowers processing requirements and provides a degree of translation invariance. The two standard pooling operations in CNNs are average pooling and max pooling (Ghosh et al., 2019; Bhatt et al., 2021).

Fully Connected Layers: These layers combine the extracted features to make the final predictions (Albelwi & Mahmood, 2017).

Figure 2 provides a visual representation of a typical CNN architecture, showing convolutional, pooling, and fully connected layers in sequence.
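The convolution and pooling operations described in this section can be sketched in a few lines. The example assumes a single-channel image, no padding, a stride of one, and a hand-written edge filter; in a trained CNN the filter values are learned rather than specified, and the function names here are illustrative.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical hand-written vertical-edge filter; a CNN would learn its filters.
kernel = [[-1, 1], [-1, 1]]
features = conv2d(image, kernel)  # 4x4 feature map, non-zero only at the edge
pooled = max_pool2d(features)     # 2x2 map that still records the edge
```

The pooled map is a quarter of the size of the feature map but keeps the strongest edge response in each window.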

3.2. Function and Applications

One of the main reasons for the broad success of CNNs across application domains is their ability to learn structured, hierarchical information from data. CNNs are widely used in image and video processing because they produce state-of-the-art results on benchmark evaluations for tasks such as object detection and video analysis. CNNs are also applied in natural language processing and speech recognition, for example for sentiment analysis and for converting speech to text (Khan et al., 2019; Bhatt et al., 2021; Albelwi & Mahmood, 2017).

3.3. Architectural Innovations

Recent work on CNN architectures aims to increase representational capacity and efficiency. Techniques that exploit spatial and channel information, deeper and wider networks, and multi-path processing all improve CNN performance. Methods such as genetic algorithms and Cartesian genetic programming have been used to design CNN architectures automatically, reducing the need for human expertise (Suganuma et al., 2020; Sun et al., 2018; Sun et al., 2020).

3.4. Applications of CNN

Convolutional Neural Networks (CNNs) are a cornerstone technology in deep learning and have substantially changed diverse fields by enabling automatic feature detection and classification.

Land Cover Mapping: CNNs are widely used for land cover classification, where the network detects the critical features without human guidance. Different CNN architectures have been developed to improve performance, but no single model performs best in all scenarios. Current work aims to overcome these problems and to find new potential in land cover mapping (Kotaridis & Lazaridou, 2023).

Industrial Defect Detection: In industrial settings, CNNs have revolutionized quality inspection and defect detection by providing accurate, real-time detection of surface defects. Nevertheless, scarce datasets and computational complexity remain challenges, calling for more research to refine CNN applications in industry (Khanam et al., 2024).

Smart Home Applications: Alongside Recurrent Neural Networks (RNNs), CNNs are increasingly used in smart homes to provide personalized functionality. They perform particularly well at activity recognition, an important application area. Integrating CNNs into smart home systems makes it possible to provide more efficient and easier-to-use services (Yu et al., 2022).

Network Governance: CNNs are applied in network governance to improve performance, security, and resource allocation. They increase the accuracy with which network applications are identified and classified, providing sustainable solutions for better performance and security (Gadhiya et al., 2024).

Visual Sentiment Analysis: CNNs can be tailored for visual sentiment analysis of social media content, specifically in cloud environments. Integrating Gabor filters with CNNs is a cost-effective way to increase accuracy, which makes the approach suitable for cloud-based applications (Urolagin et al., 2022).

4. Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are versatile models for analysing sequential data and are widely used in applications such as time series analysis and natural language processing. They are especially valued for their ability to capture temporal dependencies in data.

Figure 2 depicts the unrolled form of an RNN, showing how sequential input is processed across time steps. RNNs also face challenges, such as prediction uncertainty, handling heterogeneous data, and difficulties with modelling long-term memory.
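Unrolling an RNN across time steps can be sketched as a simple loop. The example assumes a scalar Elman-style recurrence with a tanh activation, h_t = tanh(w_x * x_t + w_h * h_{t-1} + b); practical RNNs use weight matrices and vector-valued states, and the parameter names and values here are illustrative.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Unroll a scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = h0
    hidden_states = []
    for x in inputs:
        # The same weights are reused at every time step; only h carries memory.
        h = math.tanh(w_x * x + w_h * h + b)
        hidden_states.append(h)
    return hidden_states


# A single impulse followed by zeros: later states are non-zero only
# because the hidden state remembers the first input.
states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
```

The hidden state at each step depends on the current input and on the previous state, which is how temporal dependencies enter the model.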

4.1. Structure and Capability with Sequential Data

Uncertainty and Robustness in RNNs: RNNs are commonly deployed in environments characterized by noisy and heterogeneous data, so prediction uncertainty is a significant concern. Traditional RNNs have difficulty estimating this uncertainty. The TRUST framework propagates uncertainty through an RNN by placing a Gaussian prior over the network parameters and computing the moments of the Gaussian variational distribution. This approach improves the robustness of RNNs to noise and adversarial attacks by providing a self-assessment mechanism that reports greater uncertainty as the noise level increases (Dera et al., 2024).

Universality and Approximation Capabilities: The universality of RNNs has been proven for deep narrow structures, indicating that they can approximate any continuous function under specific conditions on the width. This universality is independent of the length of the data, which is important for practical use. The same study extends universality to other recurrent networks, such as bidirectional RNNs, filling the modelling gap between multi-layer perceptrons and RNNs (Song et al., 2022).

Handling Heterogeneous and Sensitive Data: RNNs have difficulty handling heterogeneous sequential data, especially in IoT applications where data privacy is paramount. The DPTRNN addresses these problems by combining a tensor-based RNN model with a privacy-preserving approach to back-propagation during training. This method maintains data privacy while achieving acceptable accuracy, which makes it suitable for sensitive IoT data (Feng et al., 2024).

Memory and Temporal Dependencies: RNNs can model temporal dependencies, but their training suffers from the vanishing and exploding gradient problem, which limits their capacity to learn long-term dependencies. The proposed DMU improves temporal modelling by distributing input information directly across time. This approach reduces the complexity of the network dynamics and improves performance with fewer parameters (Sun et al., 2023).

Quantum and Fourier Approaches: Recent advances in RNN architectures draw on quantum computing and Fourier transforms. Quantum RNNs (QRNNs) use quantum devices to deliver more efficient predictions and to handle sequential data better than classical RNNs. Similarly, Fourier-RNNs combine Fourier Neural Operators with an RNN architecture to model noisy, non-Markovian data more effectively than traditional RNNs (Gopakumar et al., 2023; Li et al., 2023).

4.2. Applications of RNN

The design of Recurrent Neural Networks (RNNs) allows them to recognize patterns in sequential data and to process such data with high performance. Because they retain information over time, these networks are applicable in many areas.

Language Modelling and Processing: RNNs are effective language models because they can detect long-range dependencies in sequential text. Extensions and optimizations have been developed to address their time-intensive training and their limits on context length (Mulder et al., 2015; Yu et al., 2019).

Time Series Forecasting: RNNs perform well at predicting future values in temporal data, including forecasts of environmental factors. Their dynamics allow them to capture different temporal patterns, which makes them useful across many engineering and scientific applications (Chen et al., 2018).

Speech and Audio Processing: RNNs, particularly the Long Short-Term Memory (LSTM) architecture, process speech and audio effectively because they handle sequence data and long-term dependencies (Yu et al., 2019; Lipton, 2015).

Image and Video Analysis: RNNs analyse sequences of image frames and videos to produce descriptive outputs that capture temporal changes in these media (Lipton, 2015).

Quantum Computing: Quantum Recurrent Neural Networks (QRNNs) show promise as future models for quantum sequential learning, with reported accuracy and efficiency exceeding those of classical RNNs (Li et al., 2023).

Adaptive Control Systems: Control systems employ RNNs to predict and generate complex temporal patterns that respond to changes in the environment, providing higher stability and improved operational performance (Pourcel, 2024).

5. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a type of deep learning model that trains two neural networks, a generator and a discriminator, simultaneously. The paradigm is based on an adversarial process in which the generator produces synthetic data samples that try to mimic the distribution of the original data, while the discriminator evaluates samples to determine whether they are synthetic or original (Goodfellow et al., 2014; Goodfellow et al., 2020).

5.1. Concept of Generator and Discriminator

The generator is a neural network that takes a random noise vector as input and generates fake data resembling real data. Its goal is to “fool” the discriminator into classifying its output as real by generating data that is indistinguishable from real data. In this minimax two-player game, the generator tries to maximise the probability that the discriminator makes a mistake. The discriminator is a neural network that acts as a classifier. It evaluates input data to decide whether it is real or was produced by the generator, and its task is to distinguish original from generated data. Its feedback helps the generator improve its output (Goodfellow et al., 2014; Goodfellow et al., 2020).

Figure 4 shows the adversarial training loop between the generator and discriminator networks.
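The two sides of the minimax game can be made concrete by writing out the losses. The sketch below assumes the value function of Goodfellow et al. (2014), V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], and takes the discriminator's output probabilities as plain lists; the networks themselves are omitted and the function names are illustrative.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Negative of V(D, G); the discriminator minimises this to maximise V.

    d_real: discriminator outputs D(x) on real samples, each in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Minimax generator loss: the mean of log(1 - D(G(z))), which the generator minimises."""
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)


# An undecided discriminator outputs 0.5 everywhere; a confident one
# scores real samples high and generated samples low.
undecided = discriminator_loss([0.5, 0.5], [0.5, 0.5])
confident = discriminator_loss([0.9, 0.9], [0.1, 0.1])
```

The generator's loss falls as the discriminator assigns higher probabilities to generated samples, which is the sense in which the generator tries to make the discriminator err.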

5.2. Applications of GAN

Generative Adversarial Networks (GANs) have improved considerably since their introduction in 2014, with major advances in architecture and use. Key innovations have improved the stability, diversity, and realism of generated outputs. New GAN variants and uses have emerged in various fields, and work has also addressed data scarcity and ethical issues.

Computer Vision and Image Processing: GANs are used extensively for image generation, style transfer, and super-resolution. CycleGANs have been used for unpaired image-to-image translation, with strong results in style transfer (Zhu et al., 2021). GANs also perform well at video prediction because they improve the modelling of temporal progression and spatial relationships in videos (Aigner & Körner, 2022).

Natural Language Processing: GANs can be used to generate coherent, contextually relevant text (Zhang et al., 2022). Research on GANs in NLP continues, focusing mainly on improving text quality and preventing mode collapse.

Emerging Domains: GANs are gaining popularity in materials science for modelling material properties and designing new materials (Jiang et al., 2024).

Industry Adoption: GANs are applied in industry for purposes such as digital editing software, healthcare diagnostic tools, and advertising platforms. Their deployment in security-sensitive business domains is constrained by concerns about deepfake abuse and data security.

6. Transformer Models

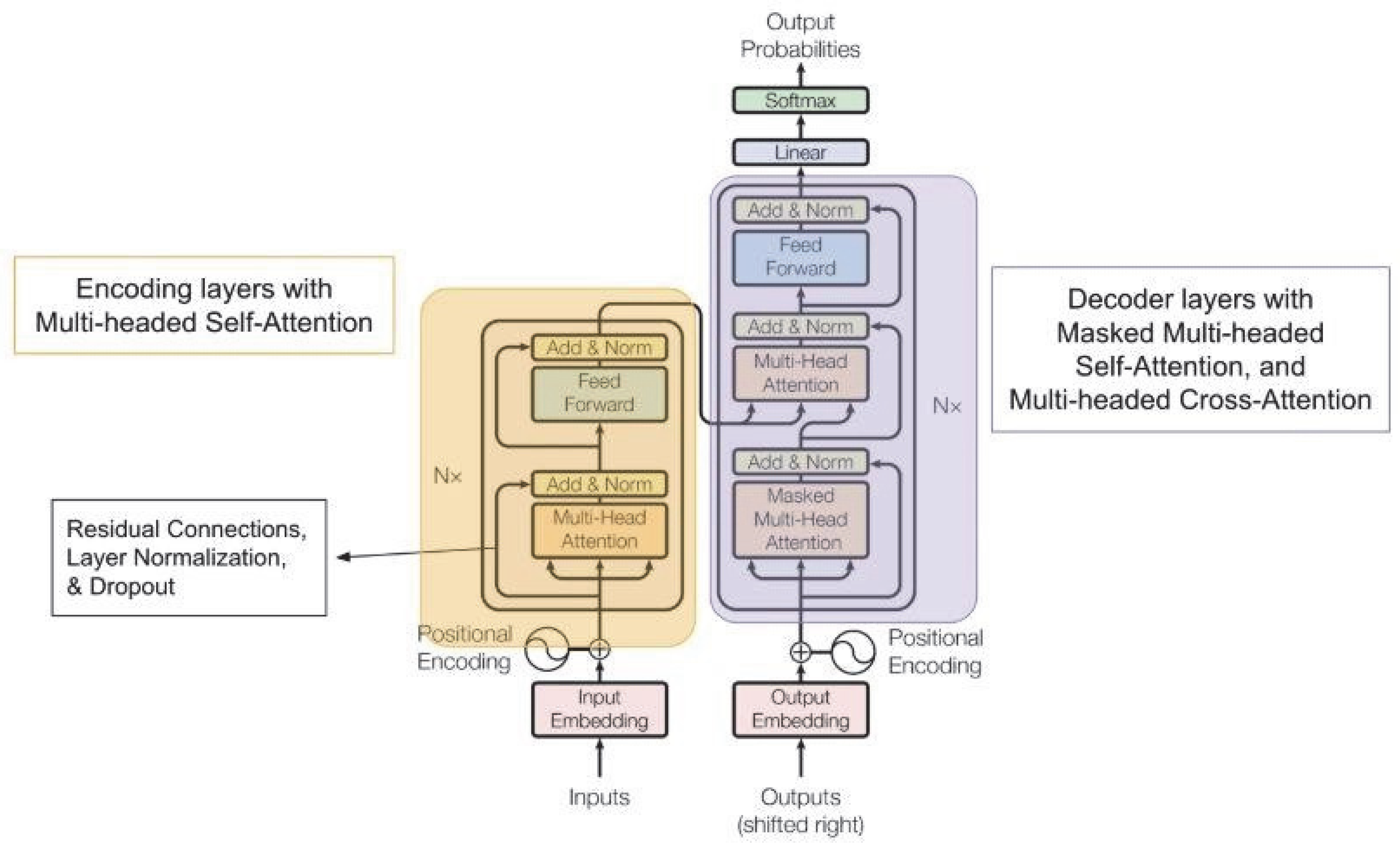

Vaswani et al. (2017) proposed the transformer model, which has since become one of the most influential architectures in AI, especially in NLP, owing to its use of the self-attention mechanism to process sequences. The architecture has been developed and extended ever since.

6.1. Basic Architecture and Attention Mechanism

Transformer models are built on the self-attention mechanism, which allows the model to assess the relative importance of different parts of the input. Attention scores are computed between each element of the sequence and all the others, which makes the model more aware of context and long-range dependencies than traditional recurrent neural networks (RNNs) (Brown et al., 2020).
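The scoring step of self-attention can be sketched as scaled dot-product attention. The example assumes that queries, keys, and values are all the raw token vectors; in an actual transformer they are produced by learned linear projections, and several attention heads run in parallel. Function names and the toy vectors are illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this element against every element of the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to one and records how strongly one element attends to every other element, regardless of their distance in the sequence.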

The Non-Stationary Transformer architecture integrates mechanisms that account for non-stationary factors, so it learns better than the standard transformer architecture and provides a strong foundation for handling dynamic data (Liu et al., 2024). Hybrid LSTM-Transformer architectures use Transformers to capture context and LSTMs to model sequences, merging the strengths of both. Combined with online learning and knowledge distillation, they have been applied to real-time prediction in engineering systems (Cao et al., 2024).

Modular and multi-task learning frameworks are a recent advance that simplifies the management of multiple tasks and improves model versatility (Cao et al., 2024). Because transformer-based language models can represent long-range connections in biological sequences, they have been used in bioinformatics for gene expression prediction and protein sequence analysis (Zhang et al., 2023). Hybrid LSTM-Transformer models have also proven able to adapt to complex data and operating environments, supporting real-time prediction in smart manufacturing and renewable energy management (Cao et al., 2024). The Transformer architecture uses stacked self-attention and point-wise fully connected layers in both its encoder and decoder, as illustrated in the left and right halves of Figure 3, respectively.

6.2. Applications of Transformer Models

The transformer is a neural network architecture that uses self-attention mechanisms to process sequential data, and it has revolutionized artificial intelligence, particularly NLP (Vaswani et al., 2017). Transformers were initially developed for NLP applications, but the architecture has since been adapted for robotics, computer vision, healthcare, and finance.

Natural Language Processing (NLP): Transformers are used successfully for machine translation, sentiment analysis, and question answering. Pre-trained models such as BERT and GPT can be adapted efficiently to specific tasks (Brown et al., 2020; Carion et al., 2020).

Computer Vision: Transformers are used in computer vision for object detection and image segmentation. The Vision Transformer (ViT) model processes medical images efficiently because it applies hierarchical frameworks (Dosovitskiy et al., 2021).

Healthcare: Transformers are used in medicine to analyse images, detect anomalies, and segment brain tumours. They make medical data analysis more effective because they can model both local and global relationships within the data. For example, a hybrid CNN-Transformer model applied to CT scan data can predict N-staging and survival rates for patients with non-small cell lung cancer (Wang et al., 2024).

7. Deep Reinforcement Learning

Deep reinforcement learning (DRL) combines reinforcement learning with deep learning. An agent takes successive actions in an environment and learns, through interaction, to maximize its accumulated reward. These agent-environment interactions, which are the basis of reinforcement learning, are formalized as Markov Decision Processes (MDPs).

7.1. Concept of Agents and Environments

The agent is the decision-making entity in DRL: it observes states and applies a policy to select actions. Its primary aim is to maximize the total reward over the long term. Deep neural networks serve as approximators of the value function and the policy, which makes complex environments tractable. Each action the agent executes changes the environment and yields a reward as feedback. Over time, the agent learns an optimal policy by balancing exploration and exploitation, using deep learning components such as convolutional or recurrent neural networks.
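The agent-environment loop can be sketched with tabular Q-learning on a small chain environment. This is a deliberate simplification: a lookup table stands in for the deep neural network that a DRL agent would use to approximate the value function, and the environment, reward, and hyperparameters are hypothetical choices made for illustration.

```python
import random

N_STATES = 5        # states 0..4; reaching state 4 ends the episode
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Hypothetical chain environment: reward 1.0 only on reaching the last state."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon (or on ties), otherwise exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate towards reward plus discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy action in each non-terminal state after training.
greedy_policy = [ACTIONS[0] if row[0] > row[1] else ACTIONS[1] for row in q_table[:-1]]
```

After training, the greedy policy moves right in every non-terminal state, which is the reward-maximizing behaviour in this environment.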

Recent DRL work has changed how researchers analyse and implement agent-environment interaction. The Self-Predictive Representations architecture of Schwarzer et al. (2021) enables agents to build durable internal models from limited interaction data, achieving strong results on the Atari 100k benchmark. Dennis et al. (2021) use adversarial, neural-network-based environment generation to produce increasingly complex environments that expose the limitations of an agent's policy. Such adaptive environments make learning agents more robust and speed up learning compared with training on fixed environments.

The “Plan to Explore” method of Sekar et al. (2021) treats exploration as a planning problem: agents estimate the expected information gain of candidate actions, which addresses the exploration-exploitation trade-off in DRL. Papoudakis et al. (2021) advanced the study of multi-agent systems by developing benchmarks that establish standard evaluation methods for cooperative tasks. Dulac-Arnold et al. (2021) focused on real-world industrial control problems and proposed a comprehensive framework for practical application development. The framework uses “Constrained Reality MDPs”, which model safety constraints as part of the environment rather than as objectives for the agent to pursue.

7.2. Applications of DRL

DRL has moved well beyond gaming and now influences many industries. This section draws on recent research to examine the effect of DRL applications in several domains.

Autonomous Systems and Robots: DRL has improved both robotic manipulation and autonomous navigation. Lee et al. (2022) showed that their algorithms enable four-legged robots to traverse rugged terrain. Their system used transfer protocols to determine which policies learned in simulation carried over to real-world hardware. DRL is also useful for the navigation problems that autonomous cars must solve: Kiran et al. (2021) observe that deep reinforcement learning strategies handle the intricate driving decisions required of autonomous vehicles in constantly changing environments.

Medicine and Medical Care: In healthcare, DRL is applied to patient-specific treatment planning and to medical imaging. In their survey of reinforcement learning for the long-term treatment of disease, Yu et al. (2021) found that these methods outperformed traditional approaches. According to the survey, DRL can draw on biometric data and patient histories to plan personalized treatment.

Finance and Economics: Financial organizations use DRL for portfolio management, algorithmic trading, and risk assessment. Zhang et al. (2020) reported that DRL is highly effective at exploiting trading opportunities in financial markets.

Entertainment and Gaming: Gaming was the subject of early DRL research, and recent work has continued to extend its capabilities. Berner et al. (2021) presented OpenAI Five, which defeated the human world champions in the complex multiplayer game Dota 2. The work showed that multi-agent reinforcement learning can produce advanced coordination and strategy.

8. Practical Applications Across Industries

Deep learning, a branch of machine learning, drives industrial transformation by combining large-scale computational power with large volumes of data. The technology creates new operational capabilities and improves business efficiency. It is used to optimize operations in healthcare, finance, environmental science, and manufacturing. In each sector, applications provide specific solutions to industry demands and deliver measurable gains in efficiency, accuracy, and cost-effectiveness. A summary of deep learning applications across various industries is presented in Table 1, which illustrates the transformative capabilities of deep learning across sectors.

8.1. Healthcare

Besides structural modelling, deep learning and traditional ML approaches such as random forest have been applied to sequence-based prediction of functional protein sites, including metal ion binding (Kumar, 2017). Deep learning has fundamentally transformed medical image analysis by enabling it to serve as a diagnostic tool for identifying disease. Deep learning models demonstrated strong diagnostic capability for COVID-19 from chest X-rays (Ahuja et al., 2021; Simbun & Kumar, 2025), and the COVID-19 pandemic accelerated the adoption of AI-based diagnostic systems. These models can reduce diagnosis time while approaching the reliability of expert radiologists. Deep learning has also significantly accelerated drug development, a process that normally takes multiple years. Scientists can now predict protein structures with AlphaFold, which shortens the development of precise therapeutics (Jumper et al., 2021; Kumar, 2024). Recent surveys also highlight the growing role of deep learning in computational biology, showcasing its utility in genomics, protein modeling, and biomarker discovery (Kumar et al., 2023).

8.2. Finance

Deep learning gives financial organizations one of their most effective fraud detection tools. Neural networks can identify complex fraud patterns in transaction data, which improves detection rates and reduces false positives (Roy et al., 2022). These networks evaluate many patterns simultaneously. Market prediction algorithms use recurrent neural networks and Transformer architectures for their ability to model sequences. Financial institutions deploy deep learning to extract subtle market signals from multiple datasets, which improves trading performance during periods of volatility (Jiang, 2021).

8.3. Environmental Science

Deep learning has substantially improved climate modelling. Neural networks that analyse environmental sensor data have produced better weather and climate predictions (Shi et al., 2023). For extreme weather patterns, these forecasting techniques have outperformed traditional physics-based methods used on their own. Deep learning also enables automated systems that track wildlife populations. Computer vision systems applied to camera-trap footage can now detect wildlife accurately in challenging field settings (Beery et al., 2021).

8.4. Manufacturing

Deep learning applied to computer vision has significantly improved quality control. In their industrial study, Tao et al. (2022) found that AI inspection systems are faster and more precise than human operators and also surpass traditional computer vision methods at fault detection. Predictive maintenance offers further substantial opportunities for cost reduction. The deep learning systems described by Diez-Olivan et al. (2022) use data from multiple sensors to estimate maintenance costs and anticipate system failures by predicting equipment degradation proactively across manufacturing facilities.

Table 1. Applications of Deep Learning Across Industries.

| Industry | DL Method Used | Example Application | Outcome / Impact |
| --- | --- | --- | --- |
| Healthcare | CNN, RNN | Medical image analysis (e.g., tumor detection, X-ray scans) | Improved diagnostic accuracy, early disease detection |
| Finance | LSTM, Transformer | Fraud detection, stock price prediction | Reduced financial losses, better risk management |
| Manufacturing | GAN, CNN | Defect detection in quality control | Enhanced product quality, lower operational costs |
| Environment | CNN, RNN | Remote sensing for land use classification | Better climate monitoring, optimized resource usage |
| Retail | CNN, Transformer | Personalized product recommendations | Increased customer engagement, higher sales conversion |
| Agriculture | CNN, LSTM | Crop health monitoring using drone imagery | Improved yield prediction, targeted interventions |
| Autonomous Driving | CNN, RNN | Object detection and lane tracking | Enhanced safety, real-time decision-making |

8.5. Critical Evaluation of Existing Methods

Deep learning has revolutionized multiple domains, but each method has its limitations. Here, we provide a comparative evaluation of key deep learning architectures.

Table 2 compares different deep learning model types in terms of requirements, accuracy, and trade-offs:

Convolutional Neural Networks (CNNs): Highly efficient for image processing tasks because they exploit spatial hierarchies, but they struggle with temporal dependencies and require large labeled datasets. CNNs also face challenges in explainability and computational cost.

Recurrent Neural Networks (RNNs) & Long Short-Term Memory (LSTM): Effective for sequential data such as speech and language modeling, but RNNs suffer from vanishing gradients, which makes long-range dependencies difficult to learn. Transformers have largely replaced RNNs in modern NLP applications.

Generative Adversarial Networks (GANs): Powerful for generating realistic images and for data augmentation, yet training is often unstable and prone to mode collapse, and results are difficult to control.

Transformers: State-of-the-art in NLP and increasingly applied to vision tasks (e.g., Vision Transformers). However, they demand massive computational resources and extensive pre-training datasets.

Deep Reinforcement Learning (DRL): Useful for decision-making tasks in robotics and autonomous systems, but it requires extensive training, struggles to generalize across tasks, and often lacks interpretability (Table 2).
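The vanishing-gradient limitation noted for RNNs in the comparison above can be illustrated numerically. The sketch below assumes a deliberately simplified scalar recurrence, h_t = tanh(w * h_{t-1}), with an arbitrary weight and starting value; real RNNs use weight matrices and inputs at each step, so this only conveys the mechanism.

```python
import math


def gradient_through_time(weight, steps, h0=0.5):
    """Return |d h_T / d h_0| for the scalar recurrence h_t = tanh(weight * h_{t-1})."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(weight * h)
        # Chain rule: d tanh(w * h_prev) / d h_prev = w * (1 - tanh(w * h_prev) ** 2)
        grad *= weight * (1.0 - h * h)
    return abs(grad)


for steps in (1, 10, 50):
    print(steps, gradient_through_time(weight=0.9, steps=steps))
```

Because every factor in the product has magnitude below 1 in this setting, the gradient with respect to the initial state shrinks roughly geometrically with sequence length, which is why information from distant time steps has little influence on learning.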

Table 2. Comparison of Deep Learning Model Types: Requirements, Performance, and Trade-offs.

| Model Type | Dataset Requirements | Accuracy | Computational Complexity | Strengths | Weaknesses |
| --- | --- | --- | --- | --- | --- |
| CNN (Convolutional Neural Network) | Large labeled datasets, mostly images | High for image-related tasks | Moderate to high (requires GPUs) | Excellent for image processing, spatial feature extraction | Poor for sequential data, requires a lot of labeled data |
| RNN (Recurrent Neural Network) | Moderate, but benefits from large sequential datasets | Moderate for short sequences, struggles with long-term dependencies | High (due to sequential processing) | Good for time-series and sequential data (e.g., NLP, speech) | Vanishing gradient problem, training inefficiencies |
| Transformer | Large-scale pretraining datasets (e.g., BERT, GPT) | Very high (outperforms RNNs in NLP tasks) | Very high (requires substantial computing power) | Best for NLP, long-range dependencies, parallelizable training | Expensive training, high memory consumption |
| GAN (Generative Adversarial Network) | Large training datasets required for stable convergence | High, but depends on discriminator strength | Very high (training instability, mode collapse issues) | Generates high-quality synthetic data, useful in augmentation and deepfake detection | Difficult to train, mode collapse issues |
| DRL (Deep Reinforcement Learning) | Requires simulated environments and reward-based feedback data | High, especially in decision-making tasks | Extremely high (requires large-scale training, often on GPUs/TPUs) | Excels in real-time decision-making (e.g., robotics, gaming, autonomous driving) | Training inefficiencies, high resource consumption, difficult hyperparameter tuning |

8.6. Gaps in Current Research

Despite these advancements, deep learning still faces several unresolved challenges:

Model Interpretability: Many deep learning models, particularly CNNs and Transformers, function as "black boxes," making it difficult to understand decision-making processes.

Data Efficiency: Current deep learning approaches rely heavily on large-scale annotated datasets, which are expensive and time-consuming to create.

Generalization Across Domains: Models trained on specific datasets often fail to generalize well to unseen data, requiring retraining with domain-specific fine-tuning.

Ethical Concerns: Bias in training data results in unfair AI decision-making, particularly in healthcare and finance. Additionally, privacy concerns arise due to large-scale data collection.

Computational Cost: Many cutting-edge models require substantial GPU/TPU resources and are therefore out of reach for researchers and institutions with limited computational capacity.

Key challenges in deep learning and the proposed solutions are summarized in Table 3.

Table 3. Challenges in Deep Learning and Corresponding Solutions.

| Challenge | Description | Affected Area | Proposed Solutions / Techniques |
| --- | --- | --- | --- |
| Overfitting | Model performs well on training data but poorly on unseen data | Training | Dropout, L2 Regularization, Early Stopping, Data Augmentation |
| Vanishing/Exploding Gradients | Gradients become too small or large during backpropagation | Training | LSTM/GRU units, Gradient Clipping, Batch Normalization |
| High Computational Cost | Requires large-scale hardware for training deep models | Training, Deployment | Model Pruning, Quantization, Efficient Architectures (e.g., MobileNet) |
| Lack of Interpretability | Black-box nature makes decision-making hard to explain | Ethics, Compliance | Explainable AI (XAI), SHAP, LIME, Saliency Maps |
| Bias in Data | Models reflect societal or sample biases | Ethics, Fairness | Fairness-aware learning, Data balancing, Bias audits |
| Limited Labeled Data | Insufficient annotated data for supervised learning | Training | Transfer Learning, Self-Supervised Learning, Data Synthesis |
| Scalability Issues | Difficulty in scaling to real-time or big-data scenarios | Deployment | Distributed Training, Model Compression |
| Security Vulnerabilities | Susceptible to adversarial attacks | Deployment, Safety | Adversarial Training, Input Sanitization, Robust Architecture |
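Two of the training-stage remedies listed in Table 3, gradient clipping and early stopping, are simple enough to sketch directly. The function names, the `patience` parameter, and the example numbers below are illustrative assumptions; deep learning frameworks provide their own versions of both techniques.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale a flat list of gradient values so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads), norm
    scale = max_norm / norm
    return [g * scale for g in grads], norm


def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch index at which training stops, or the last epoch if it never does."""
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            # Validation loss improved: remember it and reset the counter.
            best = loss
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return len(val_losses) - 1


clipped, original_norm = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print(original_norm, clipped)
print(early_stopping_epoch([1.0, 0.8, 0.7, 0.75, 0.72, 0.71], patience=2))
```

Clipping bounds the size of each update when gradients explode, while early stopping halts training once the validation loss has failed to improve for `patience` consecutive epochs, which limits overfitting.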

8.7. Future Directions

Addressing these challenges requires innovation in multiple areas:

Self-Supervised and Few-Shot Learning: Reducing reliance on labelled datasets by leveraging self-supervised learning and meta-learning.

Hybrid Architectures: Combining the strengths of different model families, for instance CNNs and Transformers, to improve both spatial and sequential understanding.

Efficient AI Models: Developing energy-efficient, lightweight architectures and applying techniques such as pruning and quantization to reduce computational requirements.

Explainable AI (XAI): Enhancing interpretability through techniques such as attention maps, model distillation, and counterfactual explanations.

Ethical AI and Governance Frameworks: Implementing AI regulatory standards that address bias, fairness, and privacy.

By closing these gaps, future research can refine deep learning methods to be more effective, transparent, and broadly applicable.
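The pruning and quantization techniques mentioned under efficient AI models can be sketched on a plain list of weights. This is a simplified illustration under stated assumptions: magnitude-based pruning and symmetric 8-bit quantization with a single shared scale are only one variant of each technique, and the function names and weight values are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest absolute value."""
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by ascending magnitude; the first n_prune are removed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric uniform quantization to integers in [-127, 127] with one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [int(round(w / scale)) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [v * scale for v in quantized]


weights = [0.02, -0.5, 0.1, 0.9, -0.03, 0.4]
print(magnitude_prune(weights, sparsity=0.5))
codes, scale = quantize_int8(weights)
print(codes, dequantize(codes, scale))
```

Pruning reduces the number of non-zero parameters, and quantization reduces the number of bits stored per parameter; in both cases the trade-off is a small approximation error in exchange for lower memory and compute requirements.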

9. Ethical Considerations and Challenges

Deep learning technologies have had a substantial industrial impact, which has raised numerous ethical problems in decision-making. These include privacy issues, bias in AI systems, and the wider social effects of deep learning applications. AI systems trained on biased data can amplify social inequalities, which makes bias an important concern. Deep learning models also raise privacy concerns because they process sensitive personal data, with implications for surveillance and data protection. Deep learning has extensive social effects as well: it alters power dynamics and social relationships and influences the distribution of work. This literature review is intended as a foundation for scholars and professionals developing solutions to these ethical problems.

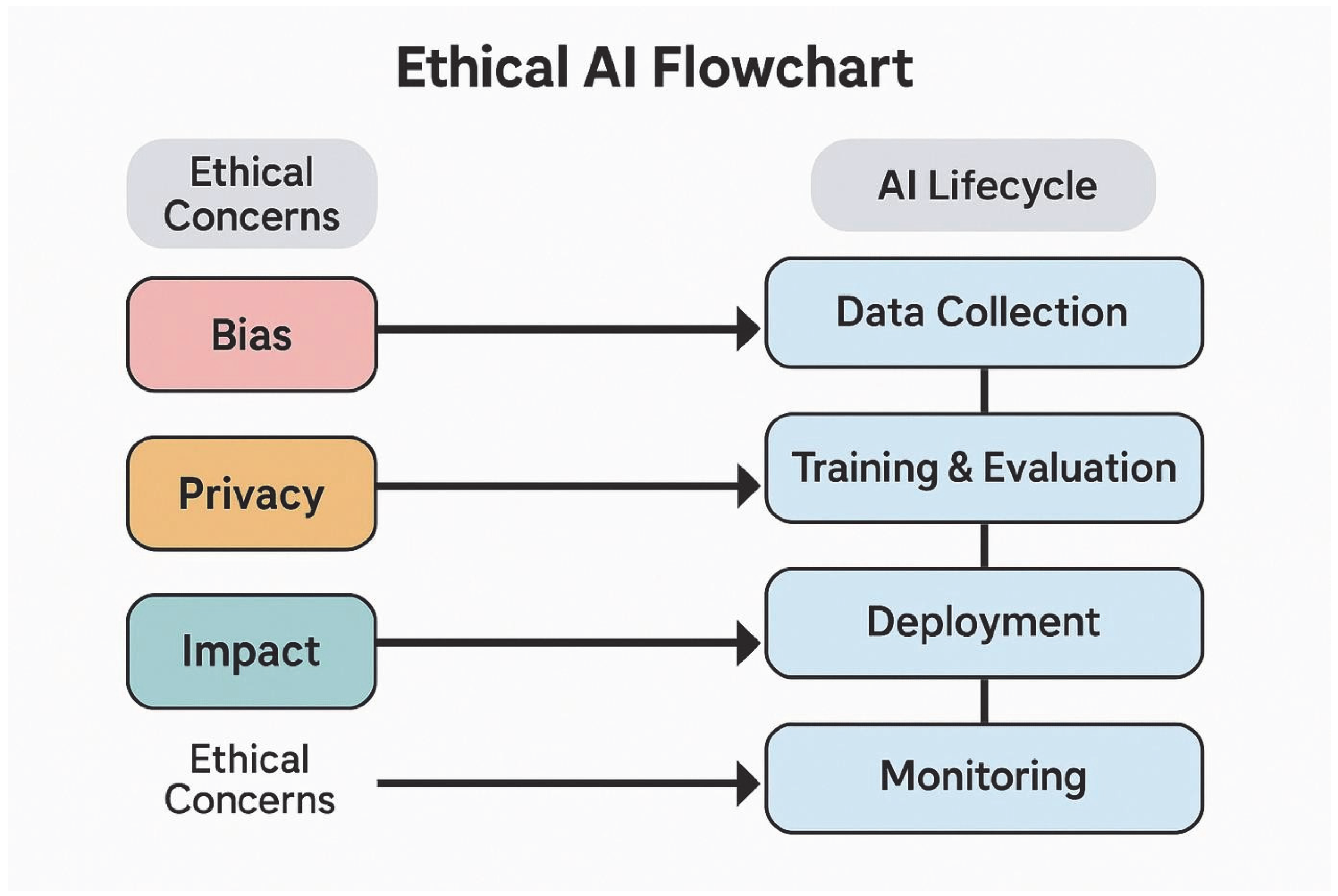

Figure 4 illustrates how ethical concerns such as bias, privacy, and societal impact propagate throughout the AI development lifecycle.

Figure 4. Ethical AI Flowchart. A visual representation of how ethical concerns (bias, privacy, impact) propagate through the AI lifecycle.

9.1. Bias in AI Systems

Mehrabi et al. (2021) conducted an extensive survey of bias in machine learning systems. They examined ten fairness measures and identified twenty-three types of bias, including measurement bias, sampling bias, and aggregation bias. The authors argue for joint socio-technical approaches across disciplines, since deep-seated social prejudices cannot be resolved by technological solutions alone. Researchers use this taxonomy as a primary instrument for developing fair algorithms. Raji et al. (2022) created an end-to-end auditing process that enables organizations to evaluate algorithms for bias before deployment. They emphasized that effective auditing requires teams that combine technical expertise with legal and domain-specific experience. Their work has contributed to new policies regulating artificial intelligence technologies.
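To make the idea of a fairness measure concrete, the sketch below computes the demographic parity difference, a widely used criterion that compares the rate of positive predictions across groups. It is offered as one example of such a measure rather than as the specific set examined in the cited survey; the predictions and group labels are invented for illustration.

```python
def positive_rate(predictions, groups, group):
    """Fraction of positive (1) predictions among members of one group."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups, plus the rates."""
    rates = {g: positive_rate(predictions, groups, g) for g in set(groups)}
    return max(rates.values()) - min(rates.values()), rates


# Invented binary predictions for two hypothetical groups, "a" and "b".
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap, rates = demographic_parity_difference(predictions, groups)
print(gap, rates)
```

A gap of zero means every group receives positive predictions at the same rate. A bias audit would typically report several such measures side by side, since different fairness criteria can conflict with one another.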

9.2. Privacy Concerns

Papernot et al. (2022) developed tempered sigmoid activations for differentially private training, a major advance in privacy-preserving machine learning. The method reported 95% precision at a differential privacy level of ε = 3, indicating that privacy-preserving frameworks can retain utility. The authors also developed techniques that address gradient instability when deep neural networks are trained under differential privacy constraints. Veale and Zuiderveen Borgesius (2021) offered a critical analysis of the EU AI Act, which aims to restrict access to unsafe AI systems. Their study shows a wide gap between legal standards and the technical characteristics of accountability methods, because the Act contains imprecise technical rules that are difficult to implement. The authors highlighted regulatory weaknesses, particularly in enforcement processes and organizational jurisdiction, that need revision before legal standards can be integrated smoothly into technical systems.
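The interaction between differential privacy and gradients can be illustrated with the generic recipe commonly known as DP-SGD: clip each example's gradient, sum, add Gaussian noise, and average. The sketch below is that generic recipe under stated assumptions, not the tempered sigmoid method of the cited work. It performs no privacy accounting, so it does not compute an ε value, and the function name, `clip_norm`, and `noise_multiplier` are illustrative.

```python
import math
import random


def dp_average_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """Clip each example's gradient to clip_norm, sum, add Gaussian noise, then average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        norm = math.sqrt(sum(g * g for g in grad))
        # Per-example clipping bounds how much any single record can influence the update.
        scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for i, g in enumerate(grad):
            total[i] += g * scale
    # Noise standard deviation is proportional to the clipping bound.
    sigma = noise_multiplier * clip_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [v / len(per_example_grads) for v in noisy]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]
print(dp_average_gradient(grads, clip_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping and noise both distort the gradient signal, which is one source of the training instability discussed above. Choices such as bounded activation functions are aimed at keeping gradient norms small so that less signal is lost to clipping.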

9.3. Societal Impact of Deep Learning

Weidinger et al. (2022) developed a detailed taxonomy of large language model risks with six categories: environmental and economic harms, malicious uses, information hazards, misinformation harms, discrimination and exclusion harms, and human-computer interaction harms. The authors indicate that the risks posed by language models grow with their capabilities, so that continued development creates new ethical challenges. Both research evaluation and policy work rely on this assessment to understand the full range of threats that language models pose.

Mohamed et al. (2022) propose a decolonial framework for AI ethics informed by the experience of disadvantaged and underdeveloped regions of the Global South. They argue that, from its outset, the AI ethics debate has been shaped by the Western philosophical tradition and by corporate interests, which has confined it to a narrow set of value systems and epistemologies. Having analysed the colonial structures embedded in AI technology, the authors develop design principles of reciprocity combined with redistributive justice.

10. Conclusion

Deep learning has become a core part of modern artificial intelligence and has transformed numerous fields through its ability to process, analyse, and learn from huge datasets. This review has outlined the major deep learning techniques in detail: Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Generative Adversarial Networks (GANs), Transformer models, and Deep Reinforcement Learning (DRL). Each approach has distinctive strengths and a demonstrated ability to transform disciplines such as healthcare, finance, manufacturing, and environmental science. Despite this success, a number of challenges remain, including data dependence, model interpretability, training efficiency, bias, privacy, and societal impact. Overcoming them calls for an interdisciplinary approach, advances in self-supervised learning and explainable AI, and energy-efficient and ethical deep learning frameworks. Looking ahead, the goal for deep learning is to produce models that are not only accurate but also accountable, accessible, and responsible. Current progress in hybrid architectures, transfer learning, and few-shot learning may make it possible to design adaptable AI systems that can be applied across different tasks and domains. Deep learning offers vast opportunities for newcomers and experienced researchers alike. By fostering curiosity, ethical awareness, and a spirit of collaboration, the next generation of AI practitioners can make an important contribution to technology for the benefit of humanity.

Acknowledgements

The authors would like to express their sincere gratitude to Management and Science University (MSU) for providing the facilities and support required to complete this study. Special thanks to the Artificial Intelligence and Cyber Security Centre and the Faculty of Health and Life Sciences at MSU for their encouragement and technical assistance.

Author Contributions

Asif Iqbal Hajamydeen and Suresh Kumar contributed equally to the conceptualization, research design, literature review, and manuscript preparation. Asif Iqbal Hajamydeen led the sections related to deep learning models and architectures, while Suresh Kumar contributed significantly to the practical applications and ethical considerations. Both authors reviewed and approved the final version of the manuscript.

References:

- Agrawal, A., Gans, J., & Goldfarb, A. (2018). Prediction machines: The simple economics of artificial intelligence. Harvard Business Review Press.

- Bostrom, N., & Yudkowsky, E. (2014). The ethics of artificial intelligence. In K. Frankish & W. M. Ramsey (Eds.), The Cambridge handbook of artificial intelligence (pp. 316-334). Cambridge University Press.

- Crevier, D. (1993). AI: The tumultuous history of the search for artificial intelligence. Basic Books.

- Deng, L., & Yu, D. (2014). Deep learning: Methods and applications. Foundations and Trends in Signal Processing, 7(3-4), 197-387.

- Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning. MIT Press.

- Jordan, M. I., & Mitchell, T. M. (2015). Machine learning: Trends, perspectives, and prospects. Science, 349(6245), 255-260.

- LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436-444.

- McCarthy, J., Minsky, M. L., Rochester, N., & Shannon, C. E. (2006). A proposal for the Dartmouth summer research project on artificial intelligence, August 31, 1955. AI Magazine, 27(4), 12-14.

- Russell, S., & Norvig, P. (2020). Artificial intelligence: A modern approach (4th ed.). Pearson.

- Goodfellow, I. J., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., & Bengio, Y. (2014). Generative adversarial nets. In Advances in Neural Information Processing Systems (pp. 2672-2680).

- Hochreiter, S., & Schmidhuber, J. (1997). Long short-term memory. Neural Computation, 9(8), 1735-1780.

- Jing, L., & Tian, Y. (2020). Self-supervised visual feature learning with deep neural networks: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(11), 4037-4058.

- Kingma, D. P., & Welling, M. (2013). Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114.

- Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems (pp. 1097-1105).

- Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., Graves, A., Riedmiller, M., Fidjeland, A. K., Ostrovski, G., Petersen, S., Beattie, C., Sadik, A., Antonoglou, I., King, H., Kumaran, D., Wierstra, D., Legg, S., & Hassabis, D. (2015). Human-level control through deep reinforcement learning. Nature, 518(7540), 529-533.

- Pan, S. J., & Yang, Q. (2009). A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering, 22(10), 1345-1359.

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., & Polosukhin, I. (2017). Attention is all you need. In Advances in Neural Information Processing Systems (pp. 5998-6008).

- Alom, M. Z., Taha, T. M., Yakopcic, C., Westberg, S., Sidike, P., Nasrin, M. S., Hasan, M., Essen, B. C. V., Awwal, A. A. S., & Asari, V. K. (2019). A State-of-the-Art Survey on Deep Learning Theory and Architectures. In Electronics (Vol. 8, Issue 3, p. 292). Multidisciplinary Digital Publishing Institute. [CrossRef]

- Farsal, W., Anter, S., & Ramdani, M. (2018). Deep Learning (p. 1). [CrossRef]

- Mazurowski, M. A., Buda, M., Saha, A., & Bashir, M. R. (2018). Deep learning in radiology: An overview of the concepts and a survey of the state of the art with focus on MRI. Journal of Magnetic Resonance Imaging, 49(4), 939. Wiley. [CrossRef]

- Guo, Y., Liu, Y., Oerlemans, A., Lao, S., Wu, S., & Lew, M. S. (2015). Deep learning for visual understanding: A review [Review of Deep learning for visual understanding: A review]. Neurocomputing, 187, 27. Elsevier BV. [CrossRef]

- Shickel, B., Tighe, P., Bihorac, A., & Rashidi, P. (2017). Deep EHR: A Survey of Recent Advances in Deep Learning Techniques for Electronic Health Record (EHR) Analysis [Review of Deep EHR: A Survey of Recent Advances in Deep Learning Techniques for Electronic Health Record (EHR) Analysis]. IEEE Journal of Biomedical and Health Informatics, 22(5), 1589. Institute of Electrical and Electronics Engineers. [CrossRef]

- Tang, Z., Shi, S., Chu, X., Wang, W., & Li, B. (2020). Communication-Efficient Distributed Deep Learning: A Comprehensive Survey. In arXiv (Cornell University). Cornell University. [CrossRef]

- Whang, S., & Lee, J. (2020). Data collection and quality challenges for deep learning. Proceedings of the VLDB Endowment, 13, 3429 - 3432. [CrossRef]

- Zhang, T., Gao, C., , L., Lyu, M., & Kim, M. (2019). An Empirical Study of Common Challenges in Developing Deep Learning Applications. 2019 IEEE 30th International Symposium on Software Reliability Engineering (ISSRE), 104-115. [CrossRef]

- Rivas, P. (2020). Deep Learning for Beginners: A beginner's guide to getting up and running with deep learning from scratch using Python. Packt Publishing Ltd.

- Khan, A., Sohail, A., Zahoora, U., & Qureshi, A., 2019. A survey of the recent architectures of deep convolutional neural networks. Artificial Intelligence Review, 53, pp. 5455 - 5516. [CrossRef]

- Ghosh, A., Sufian, A., Sultana, F., Chakrabarti, A., & De, D., 2019. Fundamental Concepts of Convolutional Neural Network., pp. 519-567. [CrossRef]

- Bhatt, D., Patel, C., Talsania, H., Patel, J., Vaghela, R., Pandya, S., Modi, K., & Ghayvat, H., 2021. CNN Variants for Computer Vision: History, Architecture, Application, Challenges and Future Scope. Electronics. [CrossRef]

- Suganuma, M., Kobayashi, M., Shirakawa, S., & Nagao, T., 2020. Evolution of Deep Convolutional Neural Networks Using Cartesian Genetic Programming. Evolutionary Computation, 28, pp. 141-163. [CrossRef]

- Albelwi, S., & Mahmood, A., 2017. A Framework for Designing the Architectures of Deep Convolutional Neural Networks. Entropy, 19, pp. 242. [CrossRef]

- Sun, Y., Xue, B., Zhang, M., & Yen, G., 2020. Completely Automated CNN Architecture Design Based on Blocks. IEEE Transactions on Neural Networks and Learning Systems, 31, pp. 1242-1254. [CrossRef]

- Sun, Y., Xue, B., Zhang, M., Yen, G., & Lv, J., 2018. Automatically Designing CNN Architectures Using the Genetic Algorithm for Image Classification. IEEE Transactions on Cybernetics, 50, pp. 3840-3854. [CrossRef]

- Kotaridis, I., & Lazaridou, M., 2023. Cnns in land cover mapping with remote sensing imagery: a review and meta-analysis. International Journal of Remote Sensing, 44, pp. 5896 - 5935. [CrossRef]

- Khanam, R., Hussain, M., Hill, R., & Allen, P., 2024. A Comprehensive Review of Convolutional Neural Networks for Defect Detection in Industrial Applications. IEEE Access, 12, pp. 94250-94295. [CrossRef]

- Yu, J., De Antonio Jiménez, A., & Villalba, E., 2022. Deep Learning (CNN, RNN) Applications for Smart Homes: A Systematic Review. Comput., 11, pp. 26. [CrossRef]

- Gadhiya, U., Faldu, P., Darji, K., Obaidat, M., Gupta, R., & Tanwar, S., 2024. CNN-based Application Recognition to Enhance Network Governance for Financial Networks. 2024 International Conference on Computer, Information and Telecommunication Systems (CITS), pp. 1-5. [CrossRef]

- Urolagin, S., Nayak, J., & Member, I., 2022. Gabor CNN Based Intelligent System for Visual Sentiment Analysis of Social Media Data on Cloud Environment. IEEE Access, 10, pp. 132455-132471. [CrossRef]

- Dera, D., Ahmed, S., Bouaynaya, N., & Rasool, G., 2024. TRustworthy Uncertainty Propagation for Sequential Time-Series Analysis in RNNs. IEEE Transactions on Knowledge and Data Engineering, 36, pp. 882-896. [CrossRef]

- Song, C., Hwang, G., Lee, J., & Kang, M., 2022. Minimal Width for Universal Property of Deep RNN. J. Mach. Learn. Res., 24, pp. 121:1-121:41. [CrossRef]

- Feng, J., Yang, L., Ren, B., Zou, D., Dong, M., & Zhang, S. (2024). Tensor Recurrent Neural Network With Differential Privacy. IEEE Transactions on Computers, 73, 683-693. [CrossRef]

- Gopakumar, V., Pamela, S., & Zanisi, L. (2023). Fourier-RNNs for Modelling Noisy Physics Data. ArXiv, abs/2302.06534. [CrossRef]

- Sun, P., Wu, J., Zhang, M., Devos, P., & Botteldooren, D. (2023). Delayed Memory Unit: Modelling Temporal Dependency Through Delay Gate. ArXiv, abs/2310.14982. [CrossRef]

- Li, Y., Wang, Z., Han, R., Shi, S., Li, J., Shang, R., Zheng, H., Zhong, G., & Gu, Y. (2023). Quantum Recurrent Neural Networks for Sequential Learning. Neural Networks, 166, 148-161. [CrossRef]

- De Mulder, W., Bethard, S., & Moens, M. (2015). A survey on the application of recurrent neural networks to statistical language modeling. Comput. Speech Lang., 30, 61-98. [CrossRef]

- Chen, Y., Cheng, Q., Cheng, Y., Yang, H., & Yu, H. (2018). Applications of Recurrent Neural Networks in Environmental Factor Forecasting: A Review. Neural Computation, 30, 2855-2881. [CrossRef]

- Yu, Y., Si, X., Hu, C., & Zhang, J. (2019). A Review of Recurrent Neural Networks: LSTM Cells and Network Architectures. Neural Computation, 31, 1235-1270. [CrossRef]

- Lipton, Z. (2015). A Critical Review of Recurrent Neural Networks for Sequence Learning. ArXiv, abs/1506.00019.

- Pourcel, G., Goldmann, M., Fischer, I., & Soriano, M. (2024). Adaptive control of recurrent neural networks using conceptors. Chaos, 34(10). [CrossRef]

- Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., ... & Bengio, Y. (2020). Generative adversarial networks. Communications of the ACM, 63(11), 139-144.

- Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., ... & Bengio, Y. (2014). Generative adversarial nets. Advances in neural information processing systems, 27.

- Aigner, P., & Körner, M. (2022). FutureGAN: Anticipating the Future Frames of Video Sequences Using Spatio-Temporal 3D Convolutions in Progressively Growing GANs. arXiv preprint arXiv:2203.14053.

- Agarwal, S., Farid, H., & Gu, M. (2022). Protecting World Leaders Against Deep Fakes. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 13142–13151.

- Jiang, Y., Li, J., Yang, X., & Yuan, R. (2024). Applications of generative adversarial networks in materials science. Materials Genome Engineering Advances, 2(1), e30.

- Zhu, J. Y., Park, T., Isola, P., & Efros, A. A. (2021). Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(5), 1535–1548.

- Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P.,... & Amodei, D. (2020). Language models are few-shot learners. Advances in neural information processing systems, 33, 1877-1901.

- Carion, N., Massa, F., Synnaeve, G., Usunier, N., Kirillov, A., & Zagoruyko, S. (2020, August). End-to-end object detection with transformers. In European conference on computer vision (pp. 213-229). Cham: Springer International Publishing.

- Lin, X., Yu, L., Cheng, K. T., & Yan, Z. (2023). The lighter the better: rethinking transformers in medical image segmentation through adaptive pruning. IEEE transactions on medical imaging, 42(8), 2325-2337.

- Liu, Y., Li, G., Payne, T. R., Yue, Y., & Man, K. L. (2024). Non-stationary Transformer Architecture: A Versatile Framework for Recommendation Systems. Electronics, 13(11), 2075.

- Cao, K., Zhang, T., & Huang, J. (2024). Advanced hybrid LSTM-transformer architecture for real-time multi-task prediction in engineering systems. Scientific Reports, 14(1), 4890.

- Zhang, S., Fan, R., Liu, Y., Chen, S., Liu, Q., & Zeng, W. (2023). Applications of transformer-based language models in bioinformatics: a survey. Bioinformatics Advances, 3(1), vbad001.

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., ... & Polosukhin, I. (2017). Attention Is All You Need. Advances in Neural Information Processing Systems (NIPS), 5998–6008.

- Wang, L., Li, R., Zhang, C., Fang, S., Duan, C., Meng, X., & Atkinson, P. M. (2022). UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS Journal of Photogrammetry and Remote Sensing, 190, 196-214.

- Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T.,... & Houlsby, N. (2021). An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 20621–20630.

- Liu, Z., Lin, Y., Cao, Y., Hu, H., Wei, Y., Zhang, Z.,... & Guo, B. (2021). Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 10012-10022).

- Wang, L., Zhang, C., & Li, J. (2024). A hybrid CNN-Transformer Model for Predicting N staging and survival in Non-small Cell Lung Cancer patients based on CT-Scan. Tomography, 10(10), 1676-1693.

- Dennis, M., Jaques, N., Vinitsky, E., Bayen, A., Russell, S., Critch, A., & Levine, S. (2021). Emergent Complexity and Zero-shot Transfer via Unsupervised Environment Design. In Proceedings of the 35th Conference on Neural Information Processing Systems (NeurIPS 2021) (pp. 13049-13061). [CrossRef]

- Dulac-Arnold, G., Levine, N., Mankowitz, D. J., Li, J., Paduraru, C., Gowal, S., & Hester, T. (2021). Challenges of Real-World Reinforcement Learning: Definitions, Benchmarks and Analysis. Machine Learning, 110(9), 2419-2468. [CrossRef]

- Papoudakis, G., Christianos, F., Schäfer, L., & Albrecht, S. V. (2021). Benchmarking Multi-Agent Deep Reinforcement Learning Algorithms in Cooperative Tasks. In Proceedings of the 35th Conference on Neural Information Processing Systems (NeurIPS 2021) (pp. 12588-12600). [CrossRef]

- Schwarzer, M., Anand, A., Goel, R., Hjelm, R. D., Courville, A., & Bachman, P. (2021). Data-Efficient Reinforcement Learning with Self-Predictive Representations. In Proceedings of the 9th International Conference on Learning Representations (ICLR 2021). [CrossRef]

- Sekar, R., Rybkin, O., Daniilidis, K., Abbeel, P., Hafner, D., & Pathak, D. (2021). Planning to Explore via Self-Supervised World Models. In Proceedings of the 37th International Conference on Machine Learning (ICML 2021) (pp. 9459-9468). [CrossRef]

- Berner, C., Brockman, G., Chan, B., Cheung, V., Dębiak, P., Dennison, C., Farhi, D., Fischer, Q., Hashme, S., Hesse, C., Józefowicz, R., Gray, S., Olsson, C., Pachocki, J., Petrov, M., Pinto, H. P., Raiman, J., Salimans, T., Schlatter, J., Zhang, S. (2021). Dota 2 with Large Scale Deep Reinforcement Learning. arXiv. https://arxiv.org/abs/1912.06680.

- Kiran, B. R., Sobh, I., Talpaert, V., Mannion, P., Al Sallab, A. A., Yogamani, S., & Pérez, P. (2021). Deep reinforcement learning for autonomous driving: A survey. IEEE Transactions on Intelligent Transportation Systems, 23(6), 4909-4926. [CrossRef]

- Lee, J., Hwangbo, J., Wellhausen, L., Koltun, V., & Hutter, M. (2022). Learning quadrupedal locomotion over challenging terrain. Science Robotics, 5(47), eabc5986. [CrossRef]

- Yu, C., Liu, J., & Nemati, S. (2021). Reinforcement learning in healthcare: A survey. ACM Computing Surveys, 55(1), 1-36. [CrossRef]

- Zhang, Z., Zohren, S., & Roberts, S. (2020). Deep reinforcement learning for trading. The Journal of Financial Data Science, 2(2), 25-40. [CrossRef]

- Ahuja, S., Panigrahi, B. K., Dey, N., Rajinikanth, V., & Gandhi, T. K. (2021). Deep transfer learning-based automated detection of COVID-19 from lung CT scan slices. Applied Intelligence, 51(1), 571-585. [CrossRef]

- Beery, S., Cole, E., & Gjoka, A. (2021). The iWildCam 2020 competition dataset. arXiv preprint arXiv:2105.03494. [CrossRef]

- Diez-Olivan, A., Del Ser, J., Galar, D., & Sierra, B. (2022). Data fusion and machine learning for industrial prognosis: Trends and perspectives towards Industry 4.0. Information Fusion, 50, 92-111. [CrossRef]

- Jiang, W. (2021). Applications of deep learning in stock market prediction: Recent progress. Expert Systems with Applications, 184, 115537. [CrossRef]

- Jumper, J., Evans, R., Pritzel, A., Green, T., Figurnov, M., Ronneberger, O., Tunyasuvunakool, K., Bates, R., Žídek, A., Potapenko, A., Bridgland, A., Meyer, C., Kohl, S.A., Ballard, A. J., Cowie, A., Romera-Paredes, B., Nikolov, S., Jain, R., Adler, J.,... Hassabis, D. (2021). Highly accurate protein structure prediction with AlphaFold. Nature, 596(7873), 583-589. [CrossRef]

- Roy, A., Sun, J., Mahoney, R., Alonzi, L., Adams, S., & Beling, P. (2022). Deep learning detecting fraud in credit card transactions. Systems Engineering, 25(1), 137-156. [CrossRef]

- Shi, X., Gao, Z., Lausen, L., Wang, H., Yeung, D.-Y., Wong, W.-K., & Woo, W.-C. (2023). Deep learning for precipitation nowcasting: A benchmark and a new model. Neural Networks, 148, 430-440. [CrossRef]

- Tao, F., Qi, Q., Liu, A., & Kusiak, A. (2022). Data-driven smart manufacturing. Journal of Manufacturing Systems, 48, 157-169. [CrossRef]

- Mehrabi, N., Morstatter, F., Saxena, N., Lerman, K., & Galstyan, A. (2021). A survey on bias and fairness in machine learning. ACM Computing Surveys, 54(6), 1-35. [CrossRef]

- Mohamed, S., Png, M. T., & Isaac, W. (2022). Decolonial AI: Decolonial theory as sociotechnical foresight in artificial intelligence. Philosophy & Technology, 35(2), 1-26. [CrossRef]

- Papernot, N., Thakurta, A., Song, S., Chien, S., & Erlingsson, Ú. (2022). Tempered sigmoid activations for deep learning with differential privacy. Proceedings of the 39th International Conference on Machine Learning, 17298-17316. https://proceedings.mlr.press/v162/papernot22a.html.

- Raji, I. D., Smart, A., White, R. N., Mitchell, M., Gebru, T., Hutchinson, B., Smith-Loud, J., Theron, D., & Barnes, P. (2022). Closing the AI accountability gap: Defining an end-to-end framework for internal algorithmic auditing. Proceedings of the 2022 Conference on Fairness, Accountability, and Transparency, 33-44. [CrossRef]

- Veale, M., & Zuiderveen Borgesius, F. (2021). Demystifying the Draft EU Artificial Intelligence Act. Computer Law Review International, 22(4), 97-112. [CrossRef]

- Weidinger, L., Mellor, J., Rauh, M., Griffin, C., Uesato, J., Huang, P.-S., Cheng, M., Glaese, M., Balle, B., Kasirzadeh, A., Kenton, Z., Brown, S., Hawkins, W., Stepleton, T., Biles, C., Birhane, A., Haas, J., Rimell, L., Hendricks, L. A., Gabriel, I. (2022). Taxonomy of risks posed by language models. Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, 214-229. [CrossRef]

- Simbun, A., & Kumar, S. (2025, March 17). Artificial Intelligence-Driven Prognostic Classification of COVID-19 Using Chest X-rays: A Deep Learning Approach. arXiv preprint arXiv:2503.13277.

- Kumar, S. (2024, August 17). Prediction of oligomeric status of quaternary protein structure by using sequential minimal optimization for support vector machine. bioRxiv. [CrossRef]

- Kumar, S., Guruparan, D., Aaron, P., Telajan, P., Mahadevan, K., Davagandhi, D., & Yue, O. X. (2023, October 2). Deep learning in computational biology: Advancements, challenges, and future outlook. arXiv preprint arXiv:2310.03086.

- Kumar, S. (2017, December 29). Prediction of metal ion binding sites in proteins from amino acid sequences by using simplified amino acid alphabets and random forest model. Genomics & Informatics, 15(4), 162. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).