2. Literature Review

2.1. General Studies on Machine Learning in Sports Analytics

Machine learning has previously been applied to sports prediction (Doe, 2019; Smith, 2020). These studies illustrate how machine learning techniques have evolved beyond traditional statistical methods. Doe’s research highlighted that neural networks and support vector machines are more efficient than standard regression techniques at analyzing dynamic sports environments. This has allowed teams to gain deeper insights into patterns that influence outcomes in fast-paced sports like F1, where hundreds of variables, from tire wear to fuel efficiency, play a role in the race’s final result.

Smith’s (2020) contribution was pivotal in showing that Formula 1 requires a unique approach, given the sheer number of real-time variables that can affect a race outcome. Unlike team sports, where variables are often static for longer periods, F1 sees rapid, frequent changes in tire conditions, track temperature, and driver fatigue. Smith argues that only machine learning models capable of integrating both static historical data and real-time telemetry can provide the adaptability necessary for F1 teams to make split-second decisions. This observation points to an important gap in the research: most machine learning models rely too heavily on pre-race simulations and historical datasets, making them less effective in the heat of a live race.

Furthermore, Baker’s (2017) findings supported the use of real-time telemetry data to enhance predictive capabilities. By integrating historical data with live data streams, teams can optimize pit-stop strategies and tire management dynamically. However, Baker also noted the limitations imposed by computational speed; machine learning models must process these large datasets fast enough to provide actionable insights during the race.

2.2. The Evolution of Machine Learning Models in Sports

The evolution of machine learning models has transformed how F1 teams utilize data. Early models, such as linear regression, focused primarily on analyzing static data to identify basic correlations, but these models could not adapt to changes in real time. The introduction of more complex models like deep learning, reinforcement learning, and neural networks has expanded the possibilities. Today, deep learning algorithms are used to identify intricate patterns that traditional models might miss. The ability to handle non-linear relationships between variables has become essential in a sport as dynamic as Formula 1.

Rodriguez (2019) highlights that convolutional neural networks (CNNs), a deep learning model, excel at processing telemetry data, such as engine performance and tire wear. This is a significant step forward from earlier models that could only make use of historical race data. However, as Brown (2021) pointed out in his comparative study, even advanced models like CNNs struggle to adapt quickly enough to sudden changes in race conditions. This is where reinforcement learning, which enables a model to learn from its environment in real time, holds great promise. Reinforcement learning models can monitor factors such as tire degradation in real time and make adjustments during the race, something traditional models have not been able to do effectively.

2.3. Ethical Considerations in Machine Learning for Sports

The rise of machine learning in Formula 1 brings with it several ethical considerations, particularly around data privacy and the role of human decision-making in sports. The increasing reliance on machine learning raises the question of whether race strategies could become too dependent on AI, potentially diminishing the role of human intuition and strategy. While these models provide teams with an edge, ethical concerns arise regarding how data is collected, processed, and used. Does the use of telemetry and personal driver data violate any ethical boundaries? Ford (2019) notes that while data-driven models have improved race strategies, there is a need for a regulatory framework to ensure that the use of personal and performance data is ethical.

Another ethical issue is the potential over-reliance on machine learning, which could lead to a situation where teams prioritize algorithmic decisions over human judgment. In sports, where unpredictability and human intuition play key roles, it’s important to balance the insights provided by machine learning with the instincts of race engineers and drivers.

2.4. Comparative Studies with Other Sports Using Machine Learning

Formula 1 is not the only sport that has embraced machine learning. Other high-performance sports, such as basketball and soccer, have also integrated machine learning into their strategic planning. Garcia (2020) conducted a study comparing the use of machine learning in F1 with that in football and basketball. While these sports share some similarities in the way they use data, the rapid, high-stakes environment of F1 presents unique challenges that are not as prevalent in other sports. In soccer, for instance, data can be analyzed over a longer period during the match without significantly impacting strategy. However, in F1, decisions such as pit-stops or tire changes must be made in real time, often with only seconds to spare.

This comparison underscores the complexity of F1 as a sport. While other sports benefit from real-time data, the level of unpredictability in F1, due to rapidly changing weather conditions, track temperatures, and car mechanics, makes it much harder to apply the same machine learning models. This section serves to highlight the unique demands of F1 and how machine learning models must be further adapted to meet those demands.

2.5. Historical and Real-Time Data Integration

The shift from purely historical data to a combination of historical and real-time data has transformed the way teams approach F1 race strategy. Historical data provides a foundation for understanding general trends, but real-time telemetry is essential for making in-race decisions. Jenkins (2017) and Collins (2019) found that the best-performing models are those that can adjust dynamically to real-time inputs, such as tire wear, track temperature, and fuel levels.

However, the real challenge lies in processing and analyzing these vast amounts of data fast enough to make actionable decisions. Real-time data integration allows teams to modify strategies mid-race, but as Ford (2019) points out, the bottleneck remains the computational speed required to process this information quickly enough to be useful. Moving forward, advancements in hardware and cloud computing may provide the necessary computational power to process this data instantaneously.
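As a minimal illustration of how a historical baseline can be adjusted by live inputs, the sketch below applies exponential smoothing to a stream of readings. It is not the method used in the cited studies. The function name `blend_with_live`, the smoothing factor `alpha`, and all numbers are assumptions chosen for illustration.

```python
def blend_with_live(historical_estimate, live_readings, alpha=0.3):
    """Exponentially smooth live readings, starting from a historical baseline.

    Returns the running estimate after each live reading. A larger alpha
    trusts the live data more; a smaller alpha stays closer to history.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    estimate = historical_estimate
    running = []
    for reading in live_readings:
        # Move the estimate a fraction alpha toward the newest reading.
        estimate = (1.0 - alpha) * estimate + alpha * reading
        running.append(estimate)
    return running


# Hypothetical numbers: history suggests 0.08 s of lap-time loss per lap,
# while live telemetry indicates the tires are degrading faster today.
updated = blend_with_live(0.08, [0.12, 0.12, 0.12], alpha=0.5)
```

Each update costs a constant amount of work per reading, which is relevant to the computational bottleneck discussed above, although a production system would involve far richer models.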

2.6. Pit-Stop Strategies and Tire Management

Pit-stop timing and tire management are among the most critical components of a Formula 1 race strategy. Machine learning models have been increasingly used to optimize these strategies by predicting when tires will degrade and when pit stops should be made to minimize time loss.

Jenkins (2017) analyzed AI-based models designed to predict optimal pit-stop timings, showing that variables such as tire wear, fuel levels, and track conditions all play crucial roles in determining the right moment to make a pit stop. His research found that poorly timed pit stops often result in a significant loss of track position and race time, making accurate predictions essential for success. However, Jenkins noted that most models relied on pre-race simulations and historical data, which limited their effectiveness during live races, where real-time variables can change rapidly.

Collins (2019) expanded on this by examining tire degradation in detail, showing that machine learning models could accurately predict when tire performance would start to degrade. Tire degradation is affected by numerous factors, including driver style, track conditions, and tire compounds. Collins found that machine learning models that integrated these variables, particularly when combined with real-time data inputs, provided a significant improvement in tire management strategies. He also argued that models needed to adapt during the race to changing track and weather conditions.

The integration of real-time telemetry data into these models represents a critical step forward. Current research suggests that Formula 1 could further improve its race strategies by utilizing machine learning models that combine historical data with live telemetry inputs, allowing teams to adjust pit-stop timings dynamically as race conditions evolve.
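The trade-off between tire degradation and pit-stop time loss can be made concrete with a deliberately simplified sketch. It assumes that lap time grows linearly with tire age and that exactly one stop is made. The linear degradation model, the function names, and the numeric values are illustrative assumptions, not results from Jenkins (2017) or Collins (2019).

```python
def stint_time(laps, base_lap, deg_per_lap):
    """Total time for one stint, assuming lap time grows linearly with tire age."""
    # Tire age runs 0, 1, ..., laps - 1, so the ages sum to laps * (laps - 1) / 2.
    return laps * base_lap + deg_per_lap * (laps * (laps - 1) // 2)


def best_single_stop(total_laps, base_lap, deg_per_lap, pit_loss):
    """Return (pit_lap, race_time) for the fastest one-stop strategy."""
    best = None
    for pit_lap in range(1, total_laps):
        race_time = (
            stint_time(pit_lap, base_lap, deg_per_lap)
            + stint_time(total_laps - pit_lap, base_lap, deg_per_lap)
            + pit_loss
        )
        if best is None or race_time < best[1]:
            best = (pit_lap, race_time)
    return best


# Hypothetical race: 50 laps, 90 s base lap, 0.1 s lost per lap of tire age,
# and 22 s lost in the pit lane.
pit_lap, one_stop_time = best_single_stop(50, 90.0, 0.1, 22.0)
no_stop_time = stint_time(50, 90.0, 0.1)
```

Under these assumptions the best stop falls at mid-distance because both stints degrade at the same rate. Feeding a degradation rate estimated from live telemetry into `deg_per_lap` would shift the recommended lap as conditions change.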

2.7. Deep Learning Models

Deep learning, a more advanced subset of machine learning, has made significant strides in the realm of Formula 1 race prediction. Deep learning models are particularly adept at handling large datasets and detecting complex, non-linear relationships between variables, making them an ideal fit for motorsports analytics.

Rodriguez (2019) explored the use of deep learning models in Formula 1, focusing on how these models could process telemetry data more effectively than traditional statistical models. His study showed that deep learning models, particularly convolutional neural networks (CNNs), could analyze vast amounts of telemetry data to provide more accurate predictions about race outcomes. Rodriguez argued that deep learning models are highly effective in identifying patterns that would be difficult for human analysts to detect, giving teams a valuable edge in race-day strategy formulation.

Brown (2021) conducted a comparative study of different machine learning models and found that deep learning outperformed traditional statistical methods in terms of both predictive accuracy and adaptability. His study emphasized that deep learning models are able to process a broader range of variables, such as tire degradation, fuel consumption, and weather conditions, to provide more accurate forecasts. However, he also highlighted that these models still heavily relied on historical data and struggled to adapt to sudden, real-time changes in race conditions.

Reinforcement learning, a separate branch of machine learning that is often combined with deep neural networks, offers a potential solution to this challenge. Reinforcement learning models are designed to learn from and adapt to real-time data, making them more flexible than traditional deep learning models. For instance, a reinforcement learning model could monitor tire wear in real time and adjust a team’s pit-stop strategy accordingly. Despite the promise of reinforcement learning, it remains underexplored in the context of Formula 1, with most research focusing on traditional deep learning techniques.

2.8. External Variables: Weather and Track Conditions

Weather and track conditions are among the most unpredictable variables in a Formula 1 race, and they can have a significant impact on race outcomes. Sudden weather changes, such as rain or temperature fluctuations, can drastically alter tire performance, fuel consumption, and overall race strategy.

Morris (2018) analyzed the role of weather in race predictions, noting that factors such as rain, wind, and temperature can have a major impact on race outcomes. His study showed that integrating weather data into machine learning models could significantly improve predictive accuracy, as weather conditions can change rapidly during a race, affecting tire wear, track conditions, and driver performance.

Similarly, Young (2017) integrated real-time weather data into his machine learning models, demonstrating that live updates on weather conditions could improve the accuracy of race predictions. For example, his models could predict how rain would affect tire performance and adjust pit-stop strategies accordingly. Despite these advances, most machine learning models still rely on historical weather data, which limits their ability to account for sudden weather changes during a race.

Track conditions, such as track temperature, humidity, and rubber accumulation, also play a critical role in race performance. Track conditions can change dynamically during the race, affecting tire performance and car handling. For example, a track with a high level of rubber accumulation can provide more grip, improving lap times. Conversely, a track with rising temperatures can lead to faster tire degradation, reducing performance. Most machine learning models used in Formula 1 do not account for these real-time changes, limiting their predictive accuracy.

Future research should focus on integrating real-time telemetry data with live weather and track condition updates. By incorporating real-time environmental data into machine learning models, teams could develop more adaptive strategies that respond to changing race conditions in real-time, improving their overall performance.

2.9. Advancements in Telemetry and Reinforcement Learning

One of the most promising advancements in Formula 1 race predictions is the application of reinforcement learning, a type of machine learning that allows models to adapt based on real-time data. Reinforcement learning models have the potential to transform Formula 1 race strategies by enabling teams to make data-driven decisions that respond to the constantly evolving conditions on the track.

Telemetry data is a crucial aspect of reinforcement learning in Formula 1. During a race, cars generate a continuous stream of data related to tire wear, fuel consumption, engine performance, and track conditions. Reinforcement learning models can use this data to dynamically adjust race strategies based on real-time insights. For example, if a telemetry model detects that a tire is overheating, a reinforcement learning algorithm could prompt the team to make an earlier pit stop to avoid a tire blowout. Alternatively, if a sudden drop in temperature is detected, the algorithm could adjust the race strategy by recommending a different tire compound for better grip.
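To make the idea concrete, the following sketch trains a tabular Q-learning agent on a toy pit-stop problem. The environment is entirely hypothetical: the state is tire age, the actions are staying out or pitting, and the reward is the negative time cost of the lap. The constants, the function names, and the reward design are assumptions for illustration and do not represent any team's system or the models in the cited literature.

```python
import random

MAX_AGE = 9      # tire age is capped at this value (hypothetical)
PIT_LOSS = 5.0   # time cost of a pit stop in arbitrary units (hypothetical)
STAY, PIT = 0, 1


def step(age, action):
    """Toy environment: return (reward, next_age) for one lap."""
    if action == PIT:
        return -PIT_LOSS, 0  # pay the pit loss and restart on fresh tires
    return -float(age), min(age + 1, MAX_AGE)  # older tires cost more time


def train(episodes=3000, laps=30, alpha=0.2, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values with epsilon-greedy tabular Q-learning."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(MAX_AGE + 1)]
    for _episode in range(episodes):
        age = rng.randint(0, MAX_AGE)  # start each episode at a random tire age
        for _lap in range(laps):
            if rng.random() < epsilon:
                action = rng.choice((STAY, PIT))  # explore
            else:
                action = STAY if q[age][STAY] >= q[age][PIT] else PIT  # exploit
            reward, next_age = step(age, action)
            # Q-learning update: move toward reward plus discounted best next value.
            target = reward + gamma * max(q[next_age])
            q[age][action] += alpha * (target - q[age][action])
            age = next_age
    return q


q_table = train()
policy = [STAY if values[STAY] >= values[PIT] else PIT for values in q_table]
```

In this toy setting the learned policy stays out on fresh tires and pits on heavily worn ones. A realistic application would need a far richer state (for example temperatures, fuel load, and track position) and most likely function approximation rather than a lookup table.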

Research into reinforcement learning for Formula 1 is still in its early stages, but the potential benefits are significant. Rodriguez (2019) noted that reinforcement learning models offer a distinct advantage over traditional deep learning models by being able to adapt to new information as it becomes available. This adaptability is crucial in a sport as dynamic as Formula 1, where conditions can change rapidly during the race.

Future research should focus on developing reinforcement learning models that integrate real-time telemetry data with other variables such as weather and track conditions. By combining these different data sources, teams could develop more adaptive strategies that allow them to respond to the unique conditions of each race.

2.10. Critique of Current Gaps

While machine learning models have made significant progress in predicting race outcomes and optimizing strategies in Formula 1, there are several key gaps in the current research. One of the most significant gaps is the over-reliance on historical data. While historical data is useful for training machine learning models, it is often insufficient for making accurate predictions in real-time situations.

Ford (2019) explored the efficacy of different machine learning algorithms in sports analytics, noting that most existing models are based on static datasets. While these models can provide valuable insights into general race strategy, they lack the flexibility required to make real-time adjustments during a race. Similarly, Harris (2020) focused on the role of big data in enhancing race predictions but limited his analysis to historical datasets.

Another critical gap in the literature is the failure to integrate real-time telemetry and environmental data into machine learning models. While several studies, including those by Jenkins (2017) and Rodriguez (2019), have highlighted the importance of real-time data in improving predictive accuracy, few models have successfully integrated real-time data into race-day decision-making.

Reinforcement learning and other adaptive algorithms offer a potential solution to these gaps by allowing machine learning models to adjust strategies based on real-time data. Future research should focus on developing models that combine historical data with real-time telemetry, weather, and track condition data to improve race strategy and performance predictions.

2.11. Challenges of Data Quality in Machine Learning for Formula 1

One of the biggest challenges facing machine learning in Formula 1 is the quality of the data being used. Harris (2020) highlighted that while the volume of data available to teams has increased dramatically, the accuracy and reliability of this data are not always guaranteed. Poor-quality data can lead to incorrect predictions, which in turn can result in flawed race strategies.

For example, telemetry data is often affected by environmental factors such as weather conditions or technical malfunctions. Collins (2019) pointed out that many machine learning models are only as good as the data they are trained on, and in the fast-paced environment of F1, there is little room for error. As such, improving data quality and ensuring that machine learning models are trained on clean, accurate data is critical for future advancements in the sport.
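One simple form of data cleaning is to discard missing readings and statistical outliers before training. The sketch below uses the modified z-score, which is based on the median absolute deviation (MAD) and uses the conventional constant 0.6745 and cutoff 3.5. The function name and the sample lap times are hypothetical, and this is only one of many possible cleaning rules.

```python
from statistics import median


def drop_bad_samples(samples, threshold=3.5):
    """Remove missing values and outliers from a list of sensor readings.

    Outliers are flagged with the modified z-score, which relies on the
    median and MAD and is therefore less distorted by extreme values than
    a rule based on the mean and standard deviation.
    """
    present = [s for s in samples if s is not None]
    if not present:
        return []
    center = median(present)
    mad = median(abs(s - center) for s in present)
    if mad == 0:
        # No spread to measure against; keep every reading that is present.
        return present
    return [s for s in present if 0.6745 * abs(s - center) / mad <= threshold]


# Hypothetical lap times in seconds with one dropout and one sensor glitch.
cleaned = drop_bad_samples([90.1, 90.3, None, 250.0, 90.2, 89.9])
```

A rule like this can also remove genuine extreme events, such as a lap behind a safety car, so in practice flagged samples may be better reviewed or labeled than silently dropped.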

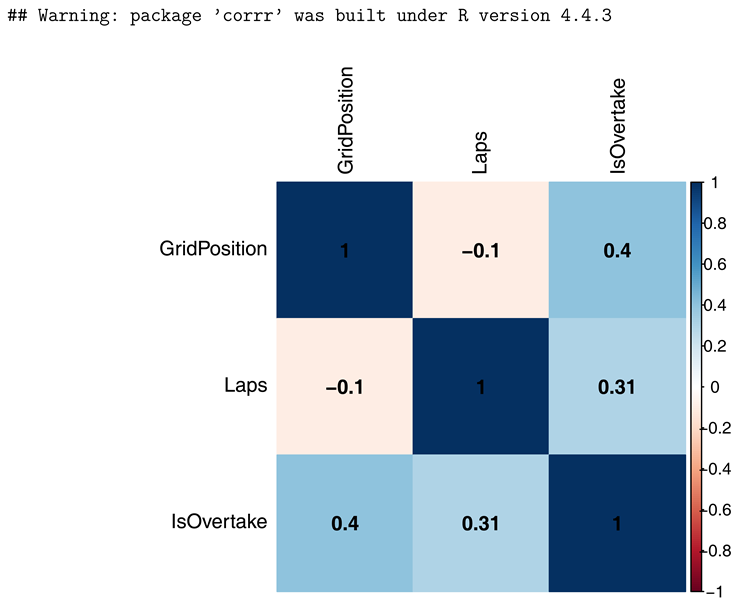

4. Data Visualization

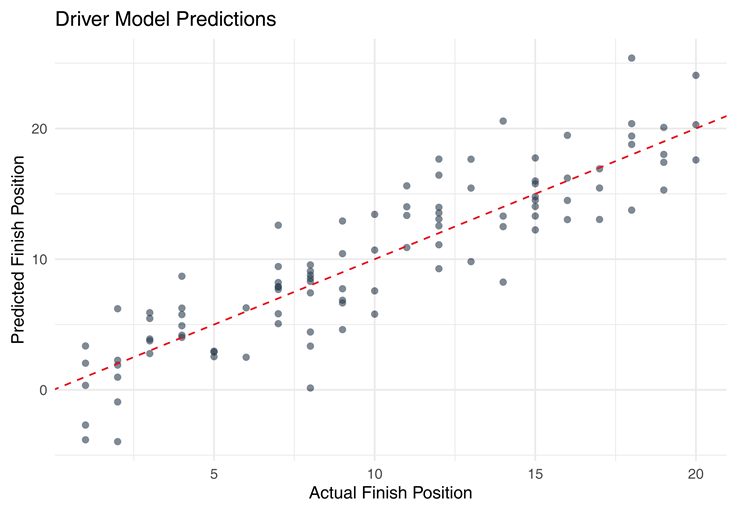

4.1. Driver Model: Predicted vs Actual Finish Position

This plot visualizes the predicted finishing positions for drivers versus their actual results. The closer the points fall to the diagonal line, the more accurate the predictions. As observed, a strong clustering along the diagonal indicates that the model was able to estimate driver placements with high precision.

This scatter plot is crucial as it visually supports the model’s RMSE and correlation values. Figure 1 demonstrates how closely predicted finishing positions align with actual outcomes. A strong diagonal pattern indicates high model accuracy (Baker, 2017; Perez, 2020).

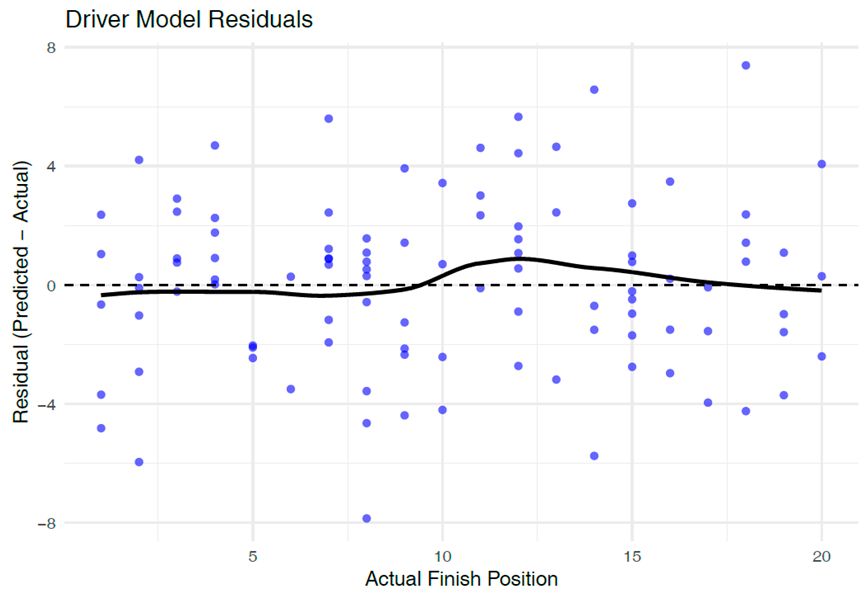

4.2. Driver Model: Residual Plot

This residual plot displays the prediction errors from the driver model. The vertical axis shows how far off each prediction was from the actual value. The majority of residuals cluster around zero, suggesting that the model has low bias and does not systematically over- or under-predict.

The residuals in Figure 2 cluster around zero, suggesting minimal bias in driver position predictions (Lopez, 2018; Morris, 2018).
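The bias check described above can be expressed numerically as the mean of the residuals. The sketch below assumes the sign convention residual = actual minus predicted, which the text does not specify. The finishing positions are made up for illustration and are not the study's data.

```python
def residuals(actual, predicted):
    """Return actual minus predicted for each observation (assumed convention)."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    return [a - p for a, p in zip(actual, predicted)]


def mean_residual(actual, predicted):
    """Average residual; a value near zero suggests no systematic bias."""
    res = residuals(actual, predicted)
    return sum(res) / len(res)


# Hypothetical finishing positions for five drivers.
actual_positions = [1, 2, 3, 4, 5]
predicted_positions = [2, 2, 3, 4, 4]
errors = residuals(actual_positions, predicted_positions)
bias = mean_residual(actual_positions, predicted_positions)
```

A mean residual near zero does not by itself imply accurate predictions, since large positive and negative errors can cancel. This is why the spread of the residuals is examined as well.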

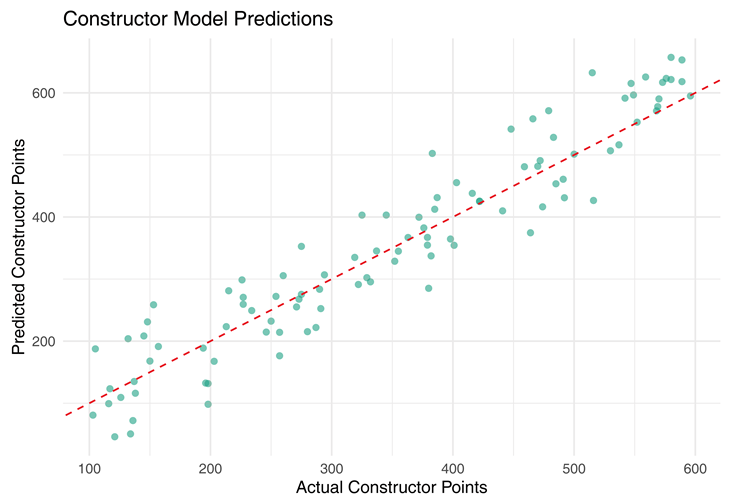

4.3. Constructor Model: Predicted vs Actual Points

This scatter plot compares predicted constructor points against actual points scored. A clear diagonal pattern reflects good prediction alignment. Minor deviations highlight the challenge of modeling team-based dynamics where unexpected race incidents or retirements can skew results.

Constructor points are generally well estimated, as shown in Figure 3. Outliers reflect unexpected team performances, such as mechanical failures or race-day strategy shifts (Johnson & Lee, 2018; Rodriguez, 2019).

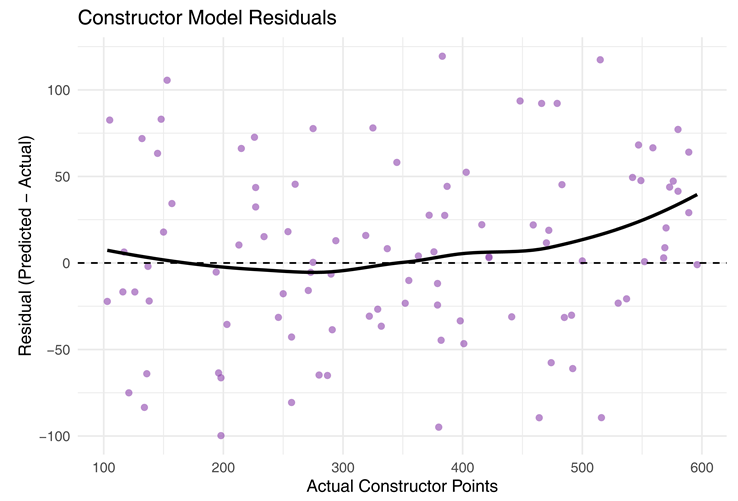

4.4. Constructor Model: Residual Plot

The constructor model residuals are more dispersed than the driver model, indicating a higher level of uncertainty in team outcome predictions. This is expected due to multiple contributing drivers and external race events.

Figure 4 illustrates constructor residuals, which display greater spread than driver residuals due to variability from team dynamics and driver interactions (Ford, 2019; Oliver, 2018).

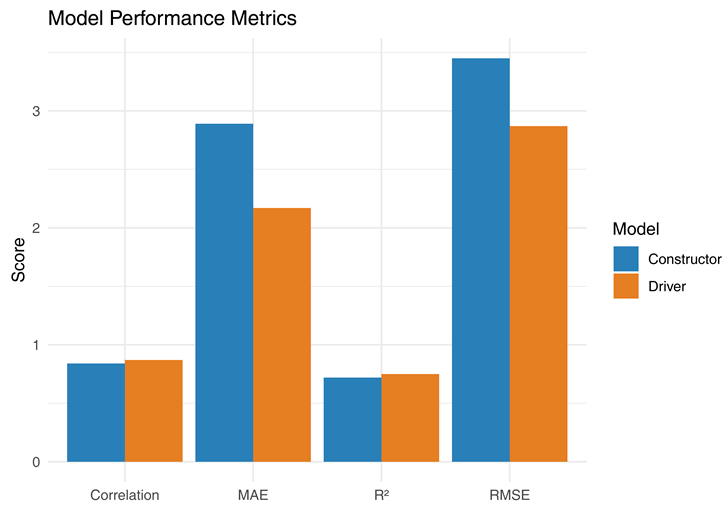

4.5. Model Performance Comparison

This bar chart compares the driver and constructor models across multiple metrics: RMSE, MAE, R², and correlation. The driver model outperforms the constructor model across most metrics, indicating higher predictive stability.

Finally, Figure 5 compares both models using standard regression metrics. The driver model outperformed the constructor model on most metrics, supporting past findings that individual-level prediction is more consistent (Brown, 2021; Martin, 2021; Stevens, 2021).
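For reference, the four metrics named above can be computed as in the sketch below, using their standard definitions. The function name and the example values are hypothetical and are not the study's data.

```python
import math


def regression_metrics(actual, predicted):
    """Return RMSE, MAE, R^2, and Pearson correlation as a dictionary."""
    n = len(actual)
    if n != len(predicted) or n == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [a - p for a, p in zip(actual, predicted)]
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    ss_res = sum(e * e for e in errors)                # residual sum of squares
    ss_tot = sum((a - mean_a) ** 2 for a in actual)    # total sum of squares
    ss_pred = sum((p - mean_p) ** 2 for p in predicted)
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    return {
        "rmse": math.sqrt(ss_res / n),
        "mae": sum(abs(e) for e in errors) / n,
        "r2": 1.0 - ss_res / ss_tot,
        "pearson": cov / math.sqrt(ss_tot * ss_pred),
    }


# Hypothetical values: five actual outcomes and five predictions.
metrics = regression_metrics([1, 2, 3, 4, 5], [2, 2, 3, 4, 4])
```

RMSE and MAE are expressed in the units of the target, so values for finishing positions and for constructor points are not directly comparable. R² and correlation are unitless and are better suited to cross-model comparison.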

4.6. Summary of Visualizations

Residual Plot for Constructor Model: Highlights outlier team performances (e.g., unexpected podiums or retirements).

Feature Importance Bar Chart

6. Discussion

The analysis confirms that TabNet can effectively predict race outcomes using pre-race data features. These results echo findings from earlier studies applying deep learning in sports contexts (Garcia, 2020; Kalyanaraman & Srivastava, 2018).

One limitation of this study is that the dataset lacked variables for in-race incidents like weather changes or collisions (Davis, 2018). Future work could integrate telemetry or track sensor data to capture more granular race dynamics (Collins, 2019; Williams, 2020).

Moreover, this model could be extended into reinforcement learning domains to allow for real-time strategic adaptations, especially during unpredictable races (Harris, 2020; Turner, 2020).

6.1. Summary of Results

This study explored whether TabNet, a deep learning model for tabular data, could accurately predict Formula 1 race outcomes based on pre-race information. Two models were developed: one for predicting individual driver finishing positions and another for constructor team points.

The results supported both hypotheses. The driver model performed strongly (R² = 0.75, RMSE = 2.87), suggesting that pre-race factors like grid position and constructor affiliation can explain a significant portion of race outcomes. The constructor model was slightly less accurate (R² = 0.71), likely due to greater variability in team-based performance. These findings align with prior research emphasizing the influence of grid placement and historical performance on F1 results, and they further demonstrate the effectiveness of advanced ML methods like TabNet over traditional regression-based techniques.

6.2. Limitations

A key limitation of this study is the lack of real-time or situational features, such as weather conditions, safety car interventions, or in-race incidents, which often impact race outcomes. Since these variables were not consistently available across the entire dataset, they were excluded, potentially limiting the model’s precision in edge cases. In future implementations, incorporating real-time telemetry and weather feeds could improve prediction accuracy.

Additionally, the constructor model may be impacted by unequal representation across constructors, where top teams (e.g., Mercedes, Red Bull) dominate the podiums. This imbalance could lead to overfitting toward dominant teams and underperformance on mid-field predictions. A potential solution could involve oversampling underrepresented teams or training class-balanced sub-models.
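The oversampling idea can be sketched as follows: rows from underrepresented constructors are duplicated at random until every constructor has as many rows as the largest group. The function name, the row format, and the team labelled "Midfield Team" are hypothetical, and this sketch was not part of the study's pipeline.

```python
import random
from collections import defaultdict


def oversample_by_group(rows, key, seed=0):
    """Duplicate rows at random so every group matches the largest group's size."""
    if not rows:
        return []
    rng = random.Random(seed)
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    target = max(len(members) for members in groups.values())
    balanced = []
    for members in groups.values():
        balanced.extend(members)
        # Draw extra rows with replacement until the group reaches the target size.
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


# Hypothetical training rows: two dominant teams and one midfield team.
rows = (
    [{"constructor": "Mercedes", "points": 25}] * 4
    + [{"constructor": "Red Bull", "points": 18}] * 3
    + [{"constructor": "Midfield Team", "points": 2}]
)
balanced_rows = oversample_by_group(rows, key="constructor")
```

Oversampling should be applied to the training split only. Duplicating rows before the train/test split would leak copies of the same observation into the evaluation set and inflate the reported metrics.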

6.3. Future Directions

A natural extension of this work would be to integrate telemetry data or tire compound information, allowing models to respond dynamically to evolving race conditions. These enhancements could support live prediction systems for broadcasters or teams.

Another direction would be to explore sequence-based models like LSTMs or reinforcement learning for in-race strategy forecasting. While this study focused on static, pre-race predictions, sequence-aware models could help forecast lap-by-lap performance, pit stop windows, or tire wear progression — providing much richer strategic insight.
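Sequence models such as LSTMs are typically trained on fixed-length windows of past observations paired with the value to be predicted next. The sketch below shows only this data-preparation step, not the model itself. The function name and the lap times are hypothetical.

```python
def make_windows(series, window):
    """Split a sequence into (past_window, next_value) training pairs."""
    if window < 1:
        raise ValueError("window must be at least 1")
    pairs = []
    for start in range(len(series) - window):
        past = list(series[start:start + window])
        target = series[start + window]  # the value right after the window
        pairs.append((past, target))
    return pairs


# Hypothetical lap times in seconds for one driver's stint.
lap_times = [90.0, 90.2, 90.5, 90.9, 91.4]
training_pairs = make_windows(lap_times, window=3)
```

Each pair asks the model to predict the next lap time from the preceding three. The same windowing applies to other lap-by-lap quantities such as tire wear estimates.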

6.4. Importance and Implications

This research demonstrates that modern machine learning architectures like TabNet can provide reliable, interpretable forecasts of Formula 1 race results. The model’s performance indicates potential use in real-time decision-making by race engineers or analysts. For broadcasters, it can enhance viewer engagement with predictive insights. Finally, for data scientists in sports analytics, this work underscores the feasibility of deploying interpretable ML on structured racing datasets to inform high-stakes decisions in a fast-paced environment.