Submitted: 02 April 2025

Posted: 02 April 2025

Abstract

Keywords:

1. Introduction

- Review the architectural components and core concepts of MCP.

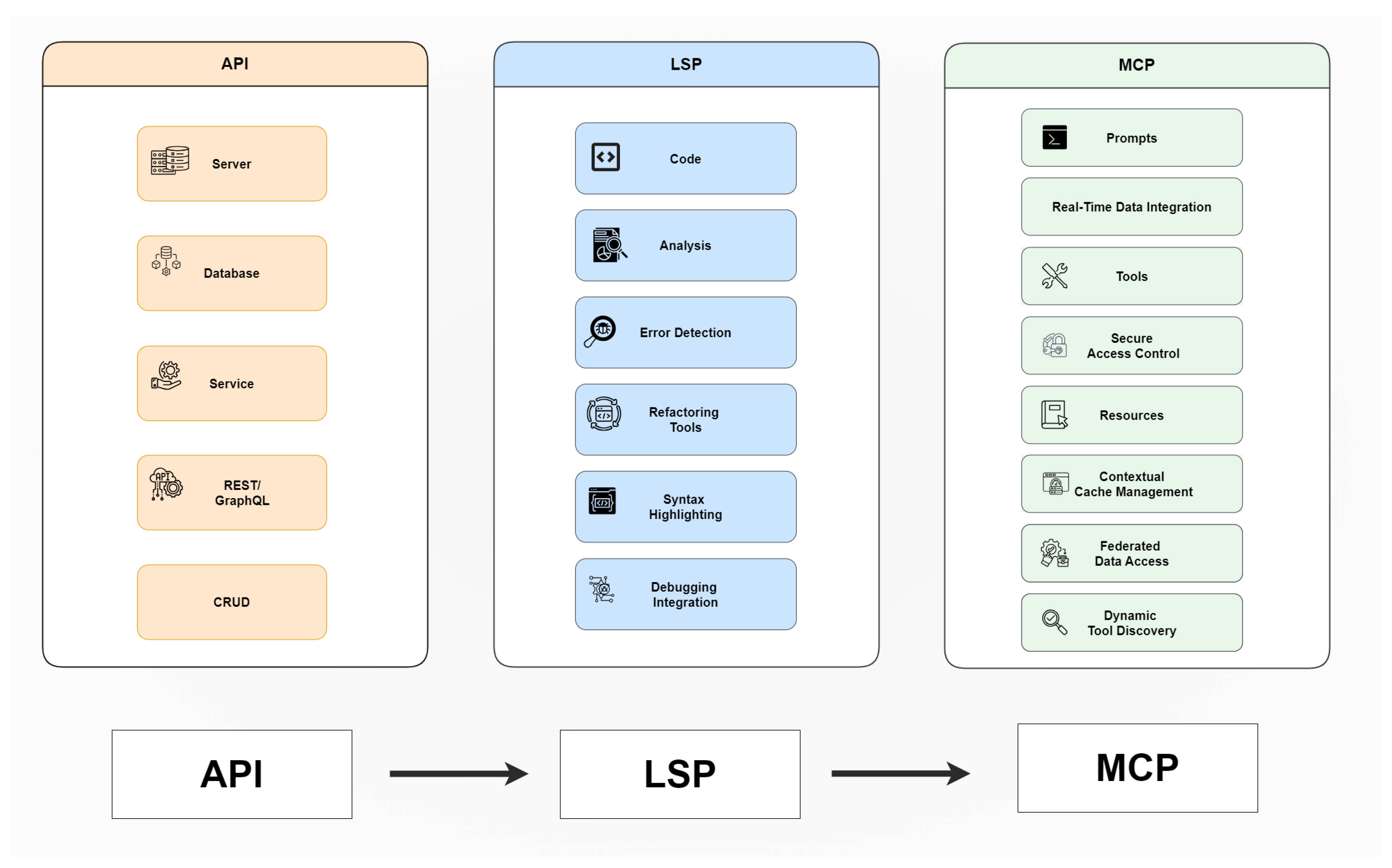

- Critically assess how MCP compares with conventional API integrations, as in Table 1.

- Discuss potential applications across various industries.

- Identify challenges and propose directions for future work, acknowledging that empirical validation is still emerging.

2. Background

- Knowledge Staleness: LLMs frequently lack up-to-date information, causing inaccuracies or irrelevant outputs when responding to queries related to recent developments.

- Contextual Limitations: Static models are often unable to adapt dynamically to changing contexts or effectively integrate external real-time information, limiting their contextual responsiveness.

- Integration Complexity: Building diverse API integrations requires repeated effort, extending development cycles.

- Scalability Issues: Adding new data sources or tools is cumbersome, limiting scalability and adaptability [6].

- Interoperability Barriers: The absence of standardized protocols hinders reuse across AI models, leading to redundancy.

- Security Risks: Custom API integrations often lack consistent security measures, increasing the risk of data breaches [7].

- Prompts: Standardizing the provision and formatting of input context to AI models [10].

- Tools: Establishing consistent methodologies for dynamic tool discovery, integration, and usage by AI agents [11].

- Resources: Defining standardized access to and utilization of external data and auxiliary resources for contextual enrichment.

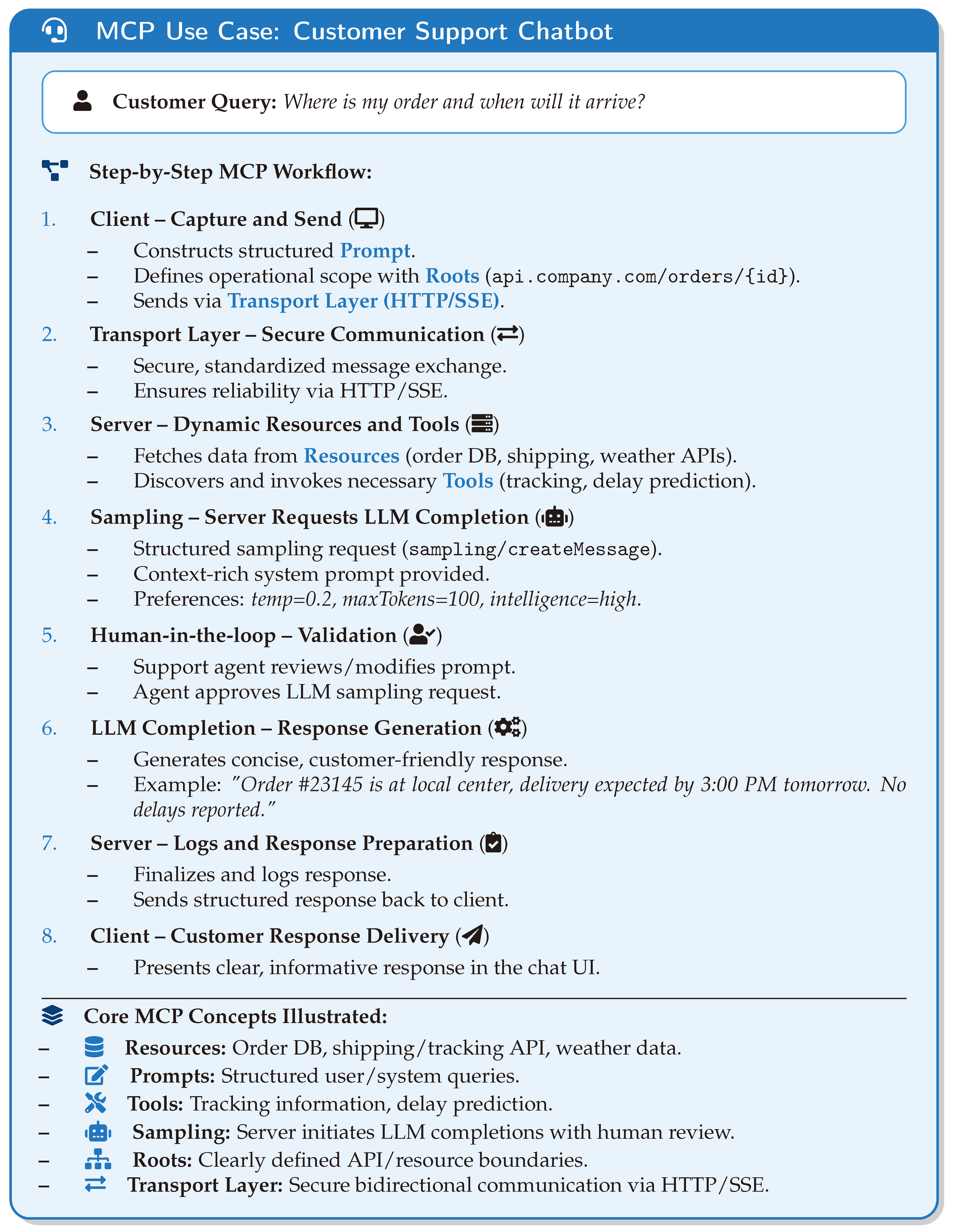

3. Architecture of MCP

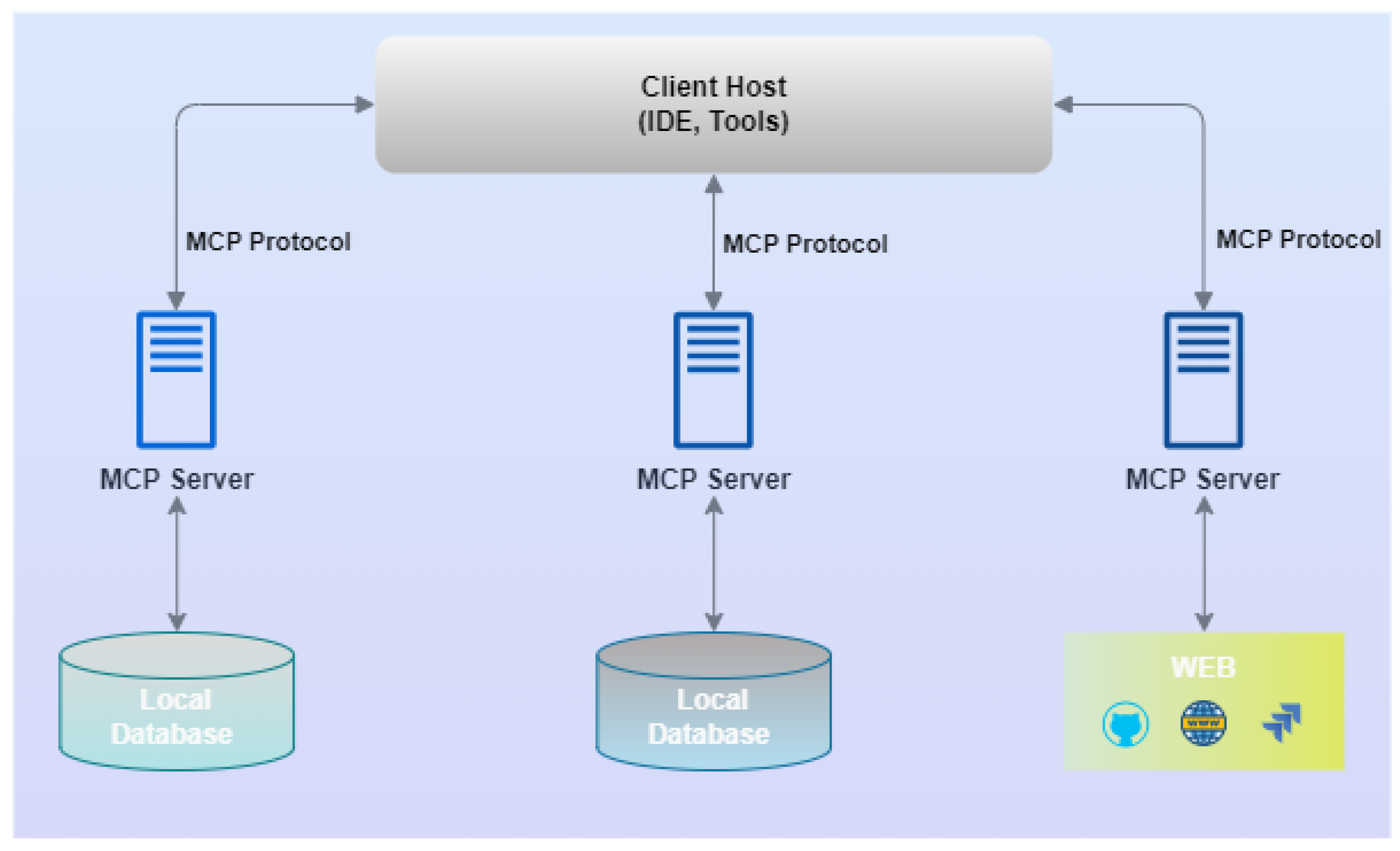

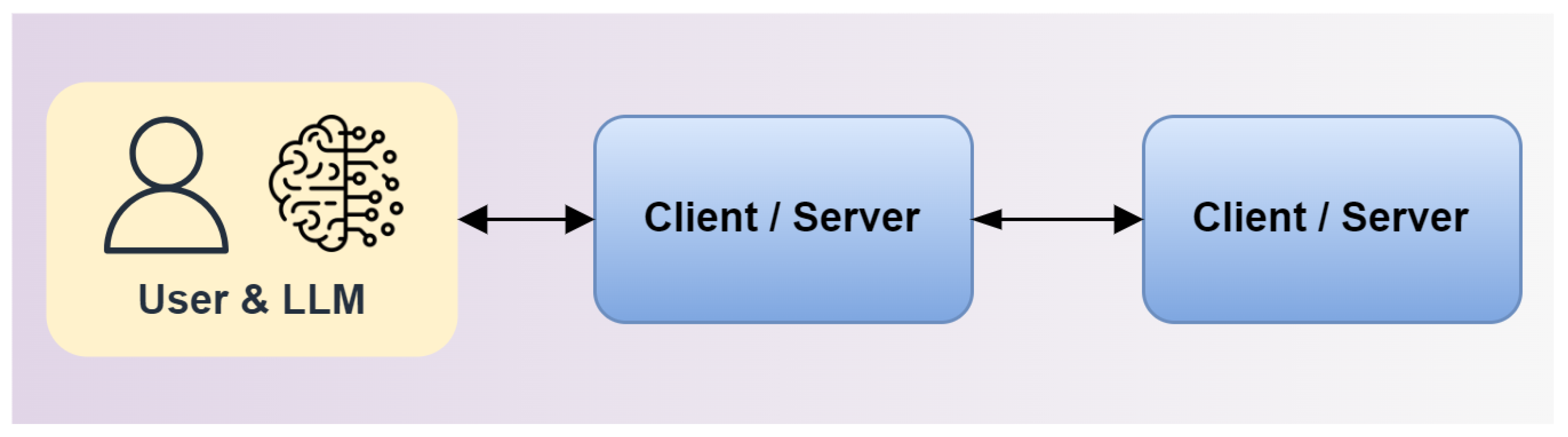

3.1. Client-Server Architecture

- Hosts refer to LLM applications, such as IDEs or platforms like Claude Desktop, that initiate communication.

- Clients establish dedicated one-to-one connections with servers within these host applications.

- Servers provide essential contextual data, tools, and prompts to enhance the client’s functionality.
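The relationship among the three roles can be illustrated with a small sketch. The class names `Host`, `Client`, and `Server` below are hypothetical stand-ins chosen for illustration, not types from any MCP SDK; the only property the sketch is meant to show is that a host owns one dedicated client per connected server.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """Hypothetical stand-in for an MCP server exposing named tools."""
    name: str
    tools: list[str] = field(default_factory=list)


@dataclass
class Client:
    """One client wraps exactly one server connection (one-to-one)."""
    server: Server


@dataclass
class Host:
    """The LLM application; it owns one client per connected server."""
    clients: dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> Client:
        # Refuse a second connection to the same server name.
        if server.name in self.clients:
            raise ValueError(f"already connected to {server.name!r}")
        client = Client(server)
        self.clients[server.name] = client
        return client

    def all_tools(self) -> list[str]:
        # Aggregate what every connected server offers, prefixed by server name.
        return [
            f"{name}/{tool}"
            for name, client in self.clients.items()
            for tool in client.server.tools
        ]


host = Host()
host.connect(Server("files", ["read_file"]))
host.connect(Server("search", ["web_search"]))
print(host.all_tools())
```

Keeping one client per server means a misbehaving server can be disconnected without affecting the host's other connections.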

3.2. Protocol Layer

3.3. Transport Layer

- Standard Input/Output (Stdio): Ideal for local and command-line based interactions, efficient in same-machine scenarios.

- HTTP with Server-Sent Events (SSE): Suitable for remote interactions and streaming scenarios, building on widely adopted web standards.
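For the stdio case, a minimal sketch of message framing is given below. It assumes newline-delimited JSON messages, which is how the MCP specification describes the stdio transport; the helper names `write_message` and `read_messages` are hypothetical, and an in-memory buffer stands in for the real standard input and output streams.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    # json.dumps escapes newlines inside strings, so one message is one line.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream):
    # Yield one decoded message per non-empty line.
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# An in-memory buffer stands in for a subprocess pipe (assumption for the demo).
buffer = io.StringIO()
write_message(buffer, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(buffer, {"jsonrpc": "2.0", "method": "notifications/initialized"})
buffer.seek(0)
received = list(read_messages(buffer))
print(received)
```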

3.4. Message Types

- Requests: Initiated by a client expecting a response.

- Results: Successful responses to requests.

- Errors: Responses signaling failures in request processing.

- Notifications: One-way informational messages that do not require responses.
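The four message types can be told apart by their fields alone. The sketch below assumes the JSON-RPC 2.0 envelope that the MCP specification builds on; the function name `classify` and the sample payloads are illustrative assumptions.

```python
def classify(message: dict) -> str:
    """Return which of the four message types a JSON-RPC 2.0 message is."""
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in message:
        # A method with an id expects a response; without one it is one-way.
        return "request" if "id" in message else "notification"
    if "error" in message:
        return "error"
    if "result" in message:
        return "result"
    raise ValueError("unrecognized message shape")


samples = [
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list"},
    {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}},
    {"jsonrpc": "2.0", "id": 2, "error": {"code": -32601, "message": "Method not found"}},
    {"jsonrpc": "2.0", "method": "notifications/progress"},
]
kinds = [classify(m) for m in samples]
print(kinds)
```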

4. Fundamental MCP Concepts

4.1. Resources

- Text Resources: These resources consist of UTF-8 encoded textual data, making them ideal for sharing source code, configuration files, JSON or XML data, and plain text documents. Textual resources facilitate straightforward integration with LLM workflows for tasks like summarization, data extraction, and code analysis.

- Binary Resources: Binary resources comprise data encoded in base64 format, suitable for multimedia content such as images, audio, video, PDF documents, and other non-textual formats. Binary resources extend the capability of LLMs to interact with richer, multimedia-based contexts, supporting advanced tasks such as image recognition, document analysis, or multimedia summarization.

- Direct Resource Listing: Servers explicitly list available resources through the resources/list endpoint, providing metadata including the resource URI, descriptive name, optional detailed description, and MIME type.

- Resource Templates: Servers can define dynamic resources via URI templates following RFC 6570 standards. Templates enable clients to construct specific resource URIs dynamically based on contextual requirements or parameters.
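The resource concepts above can be sketched in a few lines. The example assumes the field names used in the MCP documentation for resource contents (`uri`, `mimeType`, and either `text` or `blob`); the helper names, URIs, and the `logs://` template are hypothetical. The template expander handles only simple `{name}` expressions (RFC 6570 Level 1), not the full standard.

```python
import base64
import re
from urllib.parse import quote


def make_contents(uri: str, mime_type: str, data) -> dict:
    """Build a resource-contents entry: `text` for str, base64 `blob` for bytes."""
    entry = {"uri": uri, "mimeType": mime_type}
    if isinstance(data, str):
        entry["text"] = data
    else:
        entry["blob"] = base64.b64encode(data).decode("ascii")
    return entry


def expand(template: str, variables: dict) -> str:
    """Expand simple `{name}` expressions only (RFC 6570 Level 1)."""
    def substitute(match):
        # Percent-encode everything outside the unreserved character set.
        return quote(str(variables[match.group(1)]), safe="")
    return re.sub(r"\{(\w+)\}", substitute, template)


text_entry = make_contents("file:///app/config.json", "application/json", '{"debug": true}')
binary_entry = make_contents("file:///app/logo.png", "image/png", b"\x89PNG")
log_uri = expand("logs://{service}/{date}", {"service": "billing api", "date": "2025-04-02"})
print(text_entry, binary_entry, log_uri)
```

A client that needs the original bytes of a binary resource would reverse the encoding with `base64.b64decode`.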

4.2. Prompts

- Standardization of Interactions: Prompts define structured formats that can include dynamic arguments, allowing consistent interactions regardless of the specific application context or LLM used.

- Dynamic Context Integration: Prompts can integrate content from external resources dynamically, enriching the interaction context available to LLMs, which facilitates more informed and relevant model responses.

- Workflow Automation and Composability: Prompts can encapsulate multi-step interaction workflows, enabling automated or semi-automated processes that guide users and models through complex tasks systematically.

- Name and Description: Clearly identifies the prompt, providing descriptive context for easy discovery and selection.

- Arguments: Optionally includes parameters defined by schema, specifying required or optional inputs from users or automated processes.
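As a rough illustration of a prompt with arguments, the sketch below defines a hypothetical `summarize_file` prompt and a `render` helper that checks required arguments before producing a message list. The field names follow the shape described in the MCP prompts documentation; the prompt itself, its wording, and the default audience are assumptions made for the example.

```python
PROMPT = {
    "name": "summarize_file",  # hypothetical prompt, for illustration only
    "description": "Summarize a file for a given audience.",
    "arguments": [
        {"name": "path", "description": "File to summarize", "required": True},
        {"name": "audience", "description": "Intended reader", "required": False},
    ],
}


def render(prompt: dict, args: dict) -> list[dict]:
    """Check required arguments, then fill the prompt into a message list."""
    missing = [
        a["name"]
        for a in prompt["arguments"]
        if a.get("required") and a["name"] not in args
    ]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    audience = args.get("audience", "a general reader")
    text = f"Summarize the file at {args['path']} for {audience}."
    return [{"role": "user", "content": {"type": "text", "text": text}}]


messages = render(PROMPT, {"path": "README.md"})
print(messages[0]["content"]["text"])
```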

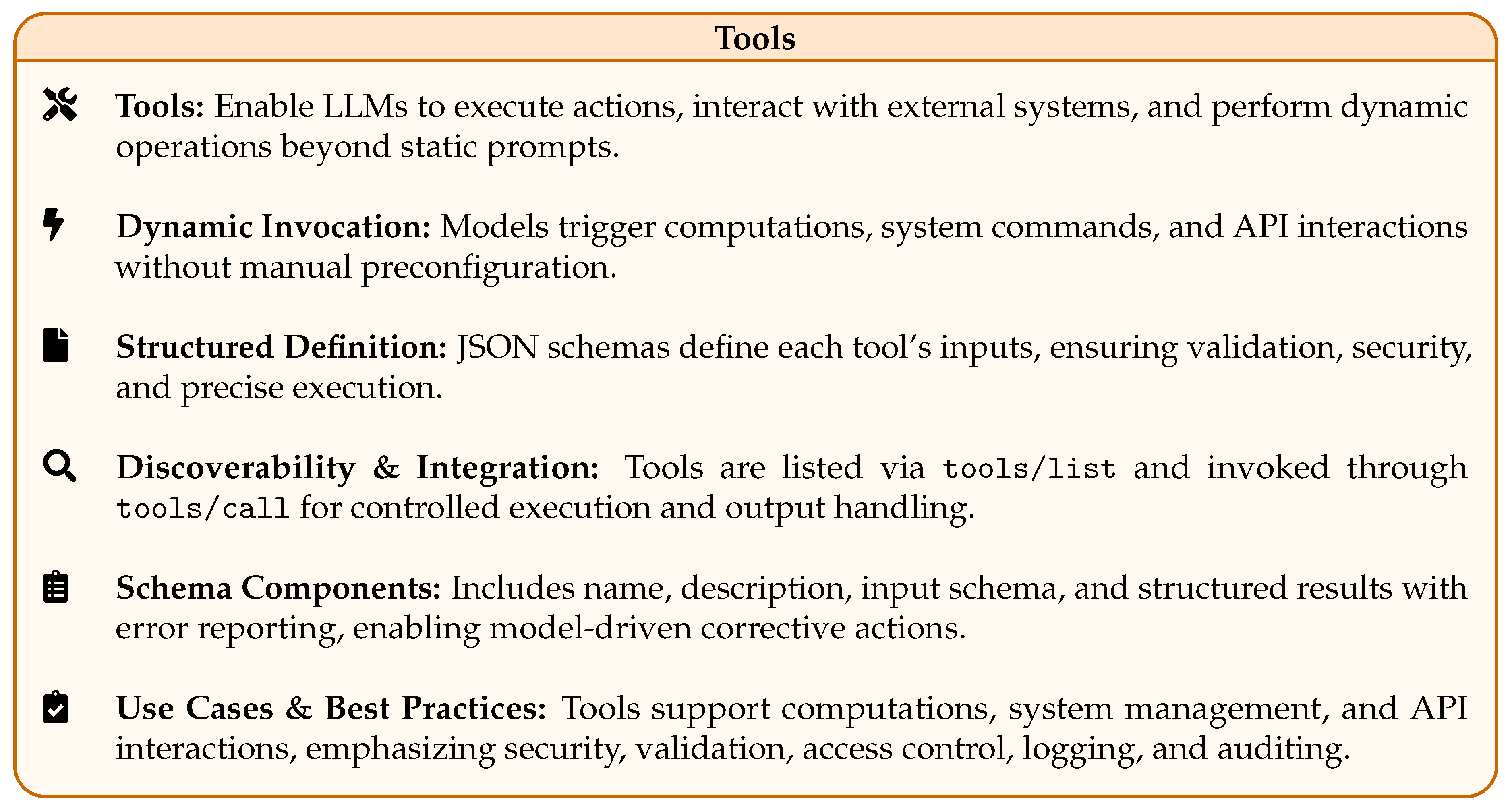

4.3. Tools

- Dynamic Action Invocation: Tools allow models to trigger operations at runtime, such as computations, system commands, or API calls, without manual preconfiguration.

- Structured Tool Definition: Each tool is defined through a JSON Schema that specifies its inputs, which enables precise validation and safer invocation by stating the required parameters and expected data formats.

- Discoverability and Integration: Clients discover tools through the tools/list endpoint, retrieving descriptive metadata and schemas. Invocation occurs via the structured tools/call mechanism, which facilitates clear, controlled tool execution and output handling.

- Name and Description: Clearly identifying each tool and detailing its functionality.

- Input Schema: Precisely describing required inputs, parameter types, and validation constraints to prevent erroneous or unsafe executions.

- Structured Results and Error Reporting: Tools explicitly communicate execution outcomes, including successful results or structured errors, thereby enabling model-driven error handling or corrective actions.
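The tool lifecycle described above (definition, input validation, and structured results) might look as follows. The `add` tool is hypothetical, and `validate` is a deliberately small check covering only required fields and numeric types, not a full JSON Schema validator. The result shape with `content` and `isError` follows the MCP tools documentation.

```python
TOOL = {
    "name": "add",  # hypothetical tool, for illustration only
    "description": "Add two numbers.",
    "inputSchema": {
        "type": "object",
        "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
        "required": ["a", "b"],
    },
}


def validate(schema: dict, arguments: dict) -> list[str]:
    """Minimal check: required fields present, declared numbers are numeric."""
    problems = [
        f"missing argument: {name}"
        for name in schema.get("required", [])
        if name not in arguments
    ]
    for name, spec in schema["properties"].items():
        if name in arguments and spec["type"] == "number":
            value = arguments[name]
            # bool is a subclass of int in Python, so exclude it explicitly.
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                problems.append(f"{name} must be a number")
    return problems


def call_tool(arguments: dict) -> dict:
    """Handle a tools/call for `add`, reporting failures inside the result."""
    problems = validate(TOOL["inputSchema"], arguments)
    if problems:
        return {"content": [{"type": "text", "text": "; ".join(problems)}], "isError": True}
    total = arguments["a"] + arguments["b"]
    return {"content": [{"type": "text", "text": str(total)}], "isError": False}


ok = call_tool({"a": 2, "b": 3})
bad = call_tool({"a": 2})
print(ok, bad)
```

Returning the failure inside the result, rather than as a protocol-level error, lets the model see what went wrong and retry with corrected arguments.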

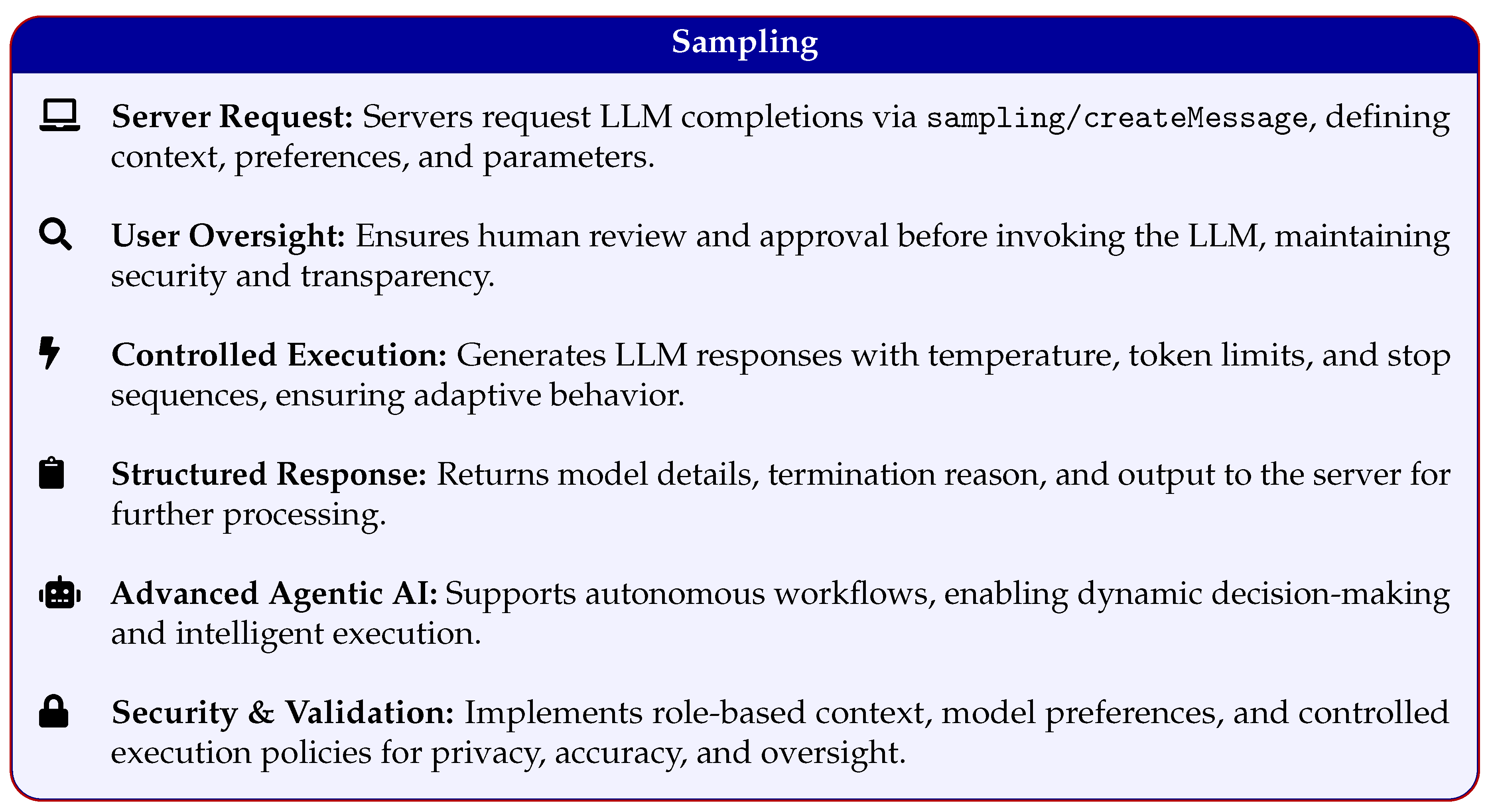

4.4. Sampling

- Server Initiates Request: The server initiates a sampling request through the sampling/createMessage endpoint, providing structured input, including the message context, optional model preferences (e.g., desired cost, latency, or capabilities), and sampling parameters such as temperature, token limits, and stop sequences.

- Client Review and Approval: Before invoking the LLM, clients typically allow users to review, adjust, or approve the proposed message, thereby maintaining human-in-the-loop oversight and security.

- Model Execution and Completion Generation: Upon user approval, the client interacts with the selected LLM to generate a completion, considering the provided sampling parameters to control response variability and specificity.

- Return and Handling of Completions: The generated completion is returned to the initiating server through a structured response, clearly identifying the model used, the reason for completion termination, and the resultant output (textual or multimodal).

- Contextual Messages: Input messages structured with roles (e.g., user or assistant) and content type (text or images), guiding model responses clearly and effectively.

- Model Preferences: Providing hints or priorities for cost-efficiency, response speed, and model intelligence, assisting clients in optimal model selection and use.

- Context Inclusion Policies: Defining the scope of additional context to be included in the sampling request, such as data from the current or connected servers, enhancing the relevance and accuracy of model completions.
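The sampling flow above can be sketched end to end. The parameter names (`messages`, `modelPreferences`, `includeContext`, `temperature`, `maxTokens`, `stopSequences`) and the result fields (`model`, `stopReason`) follow the MCP sampling documentation; the helper functions, the `approve` and `generate` callbacks, the model name, and the error code are assumptions standing in for a real user interface and a real LLM.

```python
def build_sampling_request(request_id: int, prompt: str) -> dict:
    """Server side: ask the client for a completion."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sampling/createMessage",
        "params": {
            "messages": [{"role": "user", "content": {"type": "text", "text": prompt}}],
            "modelPreferences": {
                "costPriority": 0.3,
                "speedPriority": 0.2,
                "intelligencePriority": 0.8,
            },
            "includeContext": "thisServer",
            "temperature": 0.2,
            "maxTokens": 200,
            "stopSequences": ["\n\n"],
        },
    }


def handle_sampling(request: dict, approve, generate) -> dict:
    """Client side: human review first, then model execution."""
    params = request["params"]
    if not approve(params):
        # The error code is an arbitrary placeholder, not taken from the spec.
        error = {"code": -1, "message": "User rejected sampling request"}
        return {"jsonrpc": "2.0", "id": request["id"], "error": error}
    result = {
        "role": "assistant",
        "content": {"type": "text", "text": generate(params)},
        "model": "stub-model",  # hypothetical model name
        "stopReason": "endTurn",
    }
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


request = build_sampling_request(7, "Summarize the open tickets.")
approved = handle_sampling(request, lambda p: True, lambda p: "Three tickets are open.")
denied = handle_sampling(request, lambda p: False, lambda p: "unused")
print(approved, denied)
```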

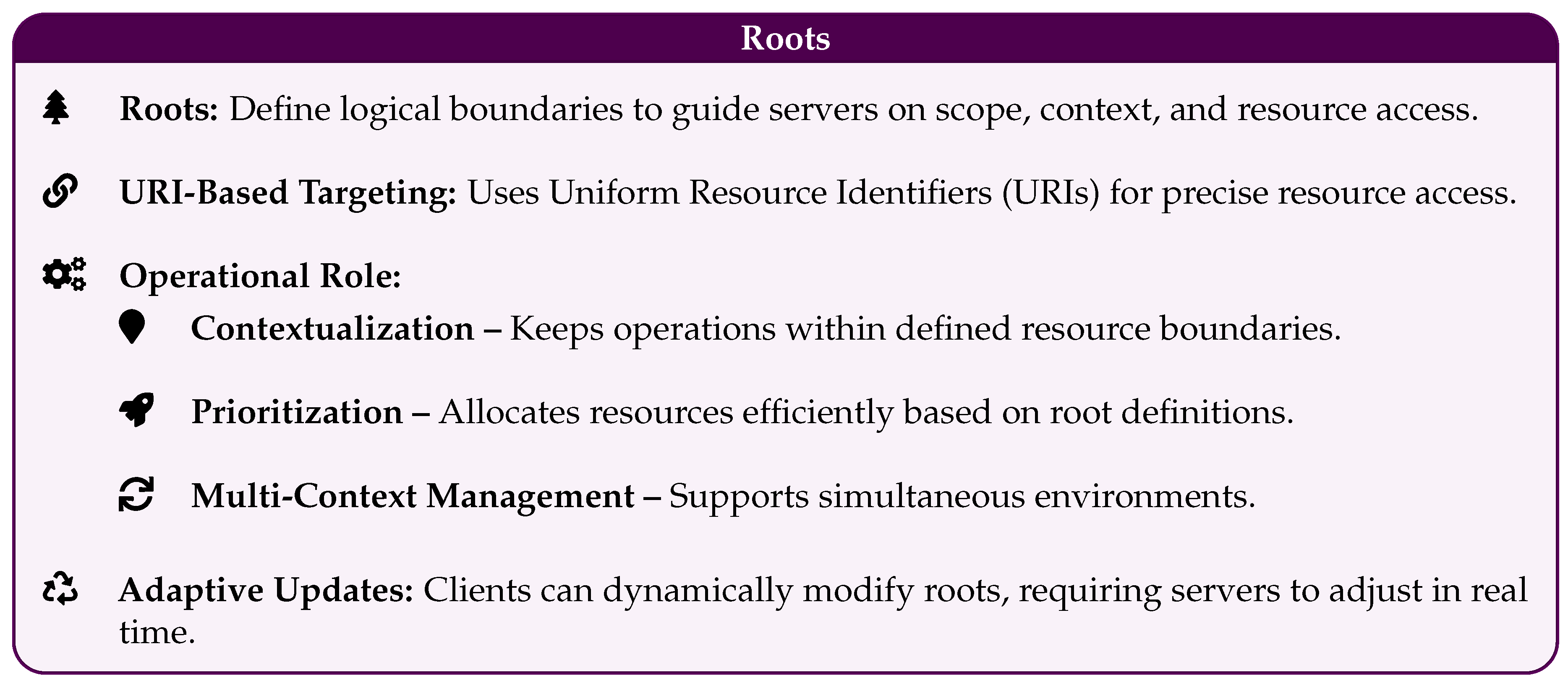

4.5. Roots

- Local directories, for example: file:///home/user/projects/app

- API endpoints, for instance: https://api.example.com/v1

- Repository locations, configuration paths, or resource identifiers within distinct operational domains.

- Contextualize Operations: Focus their data retrieval or tool invocation strictly within relevant resource boundaries.

- Prioritize Activities: Allocate resources and execution priority based on the specified roots, improving operational efficiency.

- Manage Multi-Context Environments: Seamlessly support scenarios involving multiple simultaneous operational contexts, such as multiple project directories or diverse remote services.
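One way a server could keep its operations inside the declared roots is a containment check on URIs, sketched below. The roots reuse the two example URIs above; the function name `within_roots` and the policy of rejecting `..` segments are assumptions for illustration rather than behaviour mandated by MCP.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse

ROOTS = [
    {"uri": "file:///home/user/projects/app", "name": "App"},
    {"uri": "https://api.example.com/v1", "name": "API"},
]


def within_roots(uri: str, roots: list[dict]) -> bool:
    """Return True if `uri` equals a root or lies beneath one."""
    target = urlparse(uri)
    target_path = PurePosixPath(target.path)
    if ".." in target_path.parts:
        # This sketch does not resolve parent references, so it rejects them.
        return False
    for root in roots:
        base = urlparse(root["uri"])
        if (target.scheme, target.netloc) != (base.scheme, base.netloc):
            continue
        base_path = PurePosixPath(base.path)
        # Compare whole path segments so "app-secrets" does not match "app".
        if target_path == base_path or base_path in target_path.parents:
            return True
    return False


print(within_roots("file:///home/user/projects/app/src/main.py", ROOTS))
print(within_roots("file:///home/user/projects/app-secrets/key", ROOTS))
```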

5. Building Effective Agents with MCP

5.1. Sampling: Federated Intelligence Requests

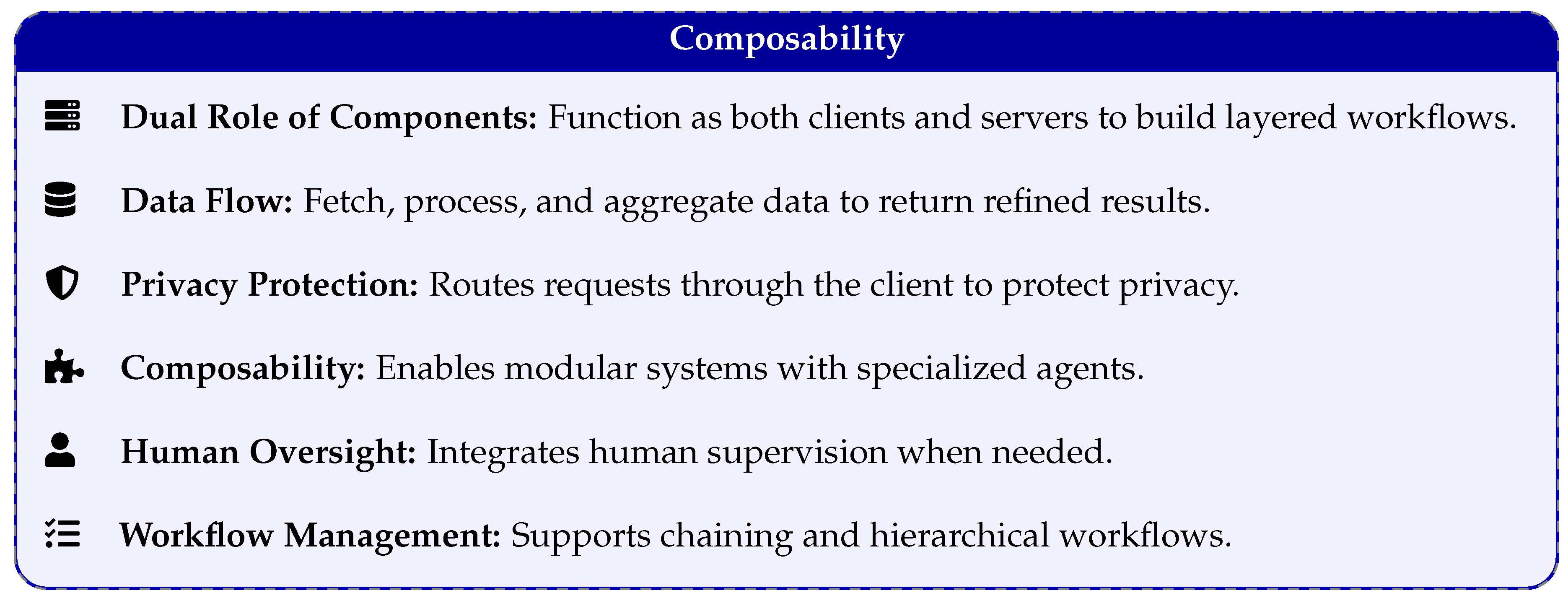

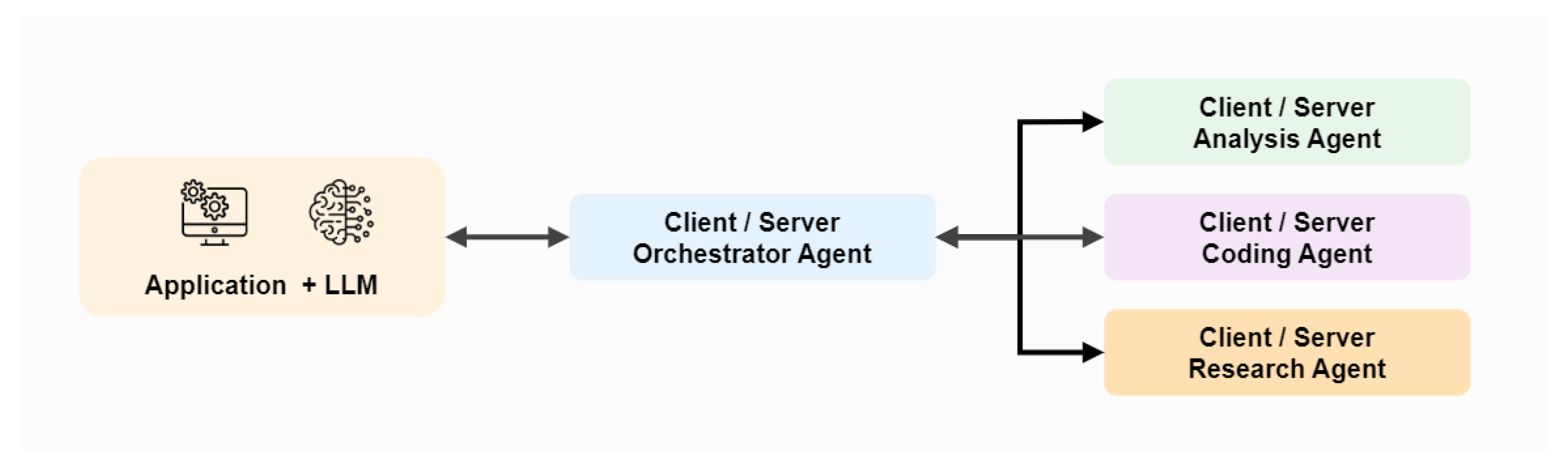

5.2. Composability: Logical Separation and Agent Chaining

- Modular design of complex agent systems

- Clear task specialization among agents

- Scalability in multi-agent systems

5.3. Combined Sampling and Composability

- Centralized control and orchestration

- Secure and flexible agent interactions

- Enhanced capabilities for complex task management

6. Applications and Impact

6.1. Real-World Applications

- Finance: Automated financial analysis, fraud detection, and personalized financial advice become more efficient through real-time data integration. For instance, the Stripe or PayPal MCP servers (see Table 2) allow LLMs to handle secure transactions and financial workflows, mitigating the fragmentation of traditional financial APIs.

- Healthcare: By integrating diverse healthcare data via specialized MCP servers, automated diagnosis, personalized treatments, and drug discovery become feasible. Although FHIR-based integrations are not yet available, they offer a promising path to standardize patient data access and bolster security.

- Education: Through personalized learning experiences, automated grading, and research assistance, MCP can enhance educational outcomes. Integrations like Canvas and Blackboard servers (see Table 2) streamline content delivery and user management, fostering adaptive learning environments.

- Scientific Research: By automating data analysis, literature review, and hypothesis generation, MCP supports faster, more thorough research processes. Tools such as PostHog or Supabase provide real-time analytics and manage large datasets efficiently, reducing time-to-insight in research workflows.

- Customer Service: MCP facilitates the development of intelligent chatbots and recommendation systems that deliver automated, personalized support and issue resolution. For example, Salesforce or Zendesk servers (see Table 2) enable LLMs to handle customer tickets, escalate complex queries, and securely manage user data, resulting in improved response times and customer satisfaction.

- Marketing and Social Media: Platforms like Mailchimp, LinkedIn, or Reddit can be integrated via MCP to automate campaign management, lead generation, and real-time audience engagement, thereby reducing manual overhead and ensuring up-to-date marketing efforts.

- E-commerce: Using Shopify or WooCommerce servers, LLMs can process orders, track inventory, and manage customer queries in a unified environment, making e-commerce operations more adaptive and efficient.

- Collaboration and Project Management: MCP servers for Jira, Asana, or Trello simplify workflow automation, task delegation, and real-time progress tracking, ensuring that teams can leverage AI to coordinate projects more effectively.

7. Challenges

- Adoption and Standardization: Establishing MCP as the industry standard requires active community involvement.

- Security and Privacy: Implementing comprehensive security mechanisms is crucial.

- Scalability and Performance: Ensuring low-latency communication as the system scales is essential.

- Ecosystem Development: Building a robust ecosystem of compatible tools and services is necessary.

8. Conclusions

References

1. Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A Survey of Large Language Models, 2025, [arXiv:cs.CL/2303.18223].

2. Perron, B.E.; Luan, H.; Qi, Z.; Victor, B.G.; Goyal, K. Demystifying Application Programming Interfaces (APIs): Unlocking the Power of Large Language Models and Other Web-based AI Services in Social Work Research, 2024, [arXiv:cs.SE/2410.20211].

3. Anthropic. Model Context Protocol. https://modelcontextprotocol.io/introduction, 2024. Accessed: 2025-03-18.

4. Model Context Protocol. https://www.anthropic.com/news/model-context-protocol, 2024. Accessed: 2025-03-18.

5. Kaddour, J.; Harris, J.; Mozes, M.; Bradley, H.; Raileanu, R.; McHardy, R. Challenges and Applications of Large Language Models, 2023, [arXiv:cs.CL/2307.10169].

6. Macvean, A. API Usability at Scale. https://ppig.org/files/2016-PPIG-27th-Macvean.pdf, 2016. Accessed: 2025-03-18.

7. Software Engineering Institute, Carnegie Mellon University. API Vulnerabilities and Risks. https://insights.sei.cmu.edu/documents/5908/api-vulnerabilities-and-risks-2024sr004-1.pdf. Accessed: 2025-03-18.

8. Chen, X.; Gao, C.; Chen, C.; Zhang, G.; Liu, Y. An Empirical Study on Challenges for LLM Application Developers, 2025, [arXiv:cs.SE/2408.05002].

9. Kjær Rask, J.; Palludan Madsen, F.; Battle, N.; Daniel Macedo, H.; Gorm Larsen, P. The Specification Language Server Protocol: A Proposal for Standardised LSP Extensions. Electronic Proceedings in Theoretical Computer Science 2021, 338, 3–18.

10. White, J.; Fu, Q.; Hays, S.; Sandborn, M.; Olea, C.; Gilbert, H.; Elnashar, A.; Spencer-Smith, J.; Schmidt, D.C. A Prompt Pattern Catalog to Enhance Prompt Engineering with ChatGPT, 2023, [arXiv:cs.SE/2302.11382].

11. Qin, Y.; Liang, S.; Ye, Y.; Zhu, K.; Yan, L.; Lu, Y.; Lin, Y.; Cong, X.; Tang, X.; Qian, B.; et al. ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs, 2023, [arXiv:cs.AI/2307.16789].

12. Protocol Layer - Model Context Protocol. https://modelcontextprotocol.io/docs/concepts/architecture#protocol-layer, 2025. Accessed: 2025-03-18.

13. Resources - Model Context Protocol. https://modelcontextprotocol.io/docs/concepts/resources, 2025. Accessed: 2025-03-18.

14. Prompts - Model Context Protocol. https://modelcontextprotocol.io/docs/concepts/prompts, 2025. Accessed: 2025-03-18.

15. Tools - Model Context Protocol. https://modelcontextprotocol.io/docs/concepts/tools, 2025. Accessed: 2025-03-18.

16. Sampling - Model Context Protocol. https://modelcontextprotocol.io/docs/concepts/sampling, 2025. Accessed: 2025-03-18.

17. Roots - Model Context Protocol. https://modelcontextprotocol.io/docs/concepts/roots, 2025. Accessed: 2025-03-18.

| Feature | API | MCP |
|---|---|---|
| Primary Focus | Application-to-application communication, specific function execution. | LLM-to-external resource communication, contextual data delivery, dynamic tool use, and standardized resource access. |
| Integration Approach | Fragmented, custom-built integrations. | Standardized, unified protocol for diverse resources and tools. |
| Context Handling | Limited, requires explicit context passing. | Built-in mechanisms for contextual data delivery, metadata, and prompt-based context communication. |
| Tool/Data Discovery | Manual endpoint discovery, requires prior knowledge. | Dynamic discovery through server queries, metadata, and tool introspection. |
| Security | Varies widely, depending on API design. | Standardized security mechanisms, including authentication, authorization, and data encryption. |
| LLM Specificity | General-purpose, used across various applications. | Designed specifically for LLM integration, agentic capabilities, and resource/tool management. |
| Tool Usage | Requires custom-built coding for tool access. | Standardized tool usage via tool metadata, dynamic invocation, and prompt-based interaction. |
| Resource Access | Limited, requires specific API endpoints. | Standardized resource access through addressable entities and defined protocols. |
| Prompt Interaction | Typically simple text-based requests. | Structured prompts for detailed data and function requests, supporting complex interactions. |
| Transports | Varies, often HTTP/HTTPS. | Standardized transports for reliable communication, supporting diverse environments. |
| Sampling | Implementation varies, often manual. | Standardized sampling mechanism through which servers request LLM completions via the client. |

| Category | Providers |
|---|---|
| Productivity & Project Management | Airtable, Google Tasks, Monday, ClickUp, Trello |
| Design & Creative Tools | Figma, Canva |
| Marketing & Social Media | LinkedIn, X, Mailchimp, Ahrefs, Reddit |
| Productivity | Asana, Jira, Bolna |
| Entertainment & Media | YouTube |
| CRM | Salesforce, HubSpot, Pipedrive, Apollo |
| Education & LMS | Canvas, Blackboard, D2L Brightspace |
| Other | Browserbase, WeatherMap, Google Maps, HackerNews |
| Explore the Complete List of MCP Providers at: | awesome-mcp-servers (GitHub), mcp.composio.dev, mcp.so, github.com/modelcontextprotocol/servers |

| Aspect | Impact on Agentic AI |
|---|---|
| Contextual Awareness | Improves LLM understanding by incorporating contextual data for informed decisions. |
| Dynamic Tool Utilization | Allows LLMs to dynamically discover and use tools, enabling adaptation and complex task performance. |
| Scalability & Interoperability | Promotes standardized frameworks, enhancing robustness, versatility, scalability, and interoperability. |
| Secure Data Exchange | Ensures secure interaction between LLMs and external resources, enhancing trust and supporting sensitive applications. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).