Submitted: 27 January 2025

Posted: 28 January 2025

Abstract

Keywords:

1. Introduction

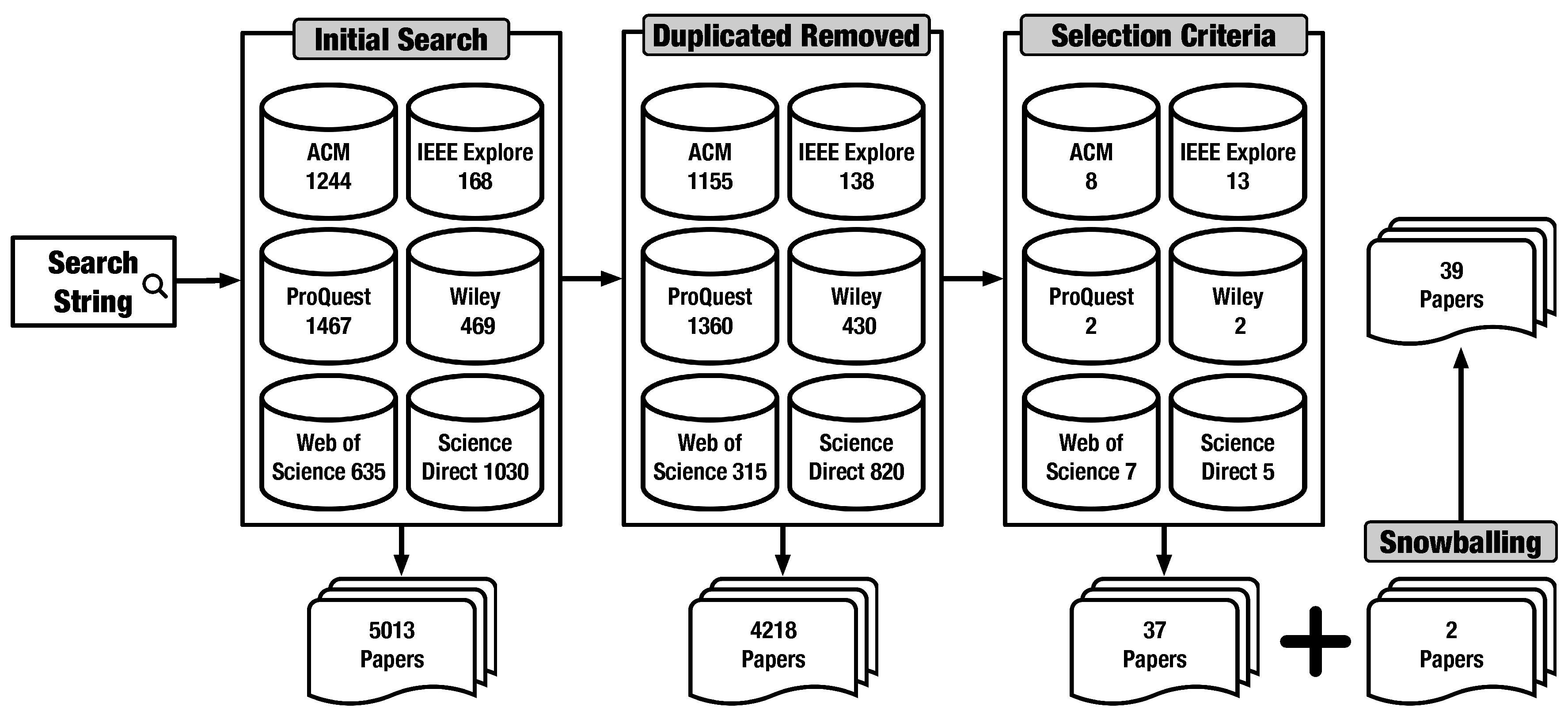

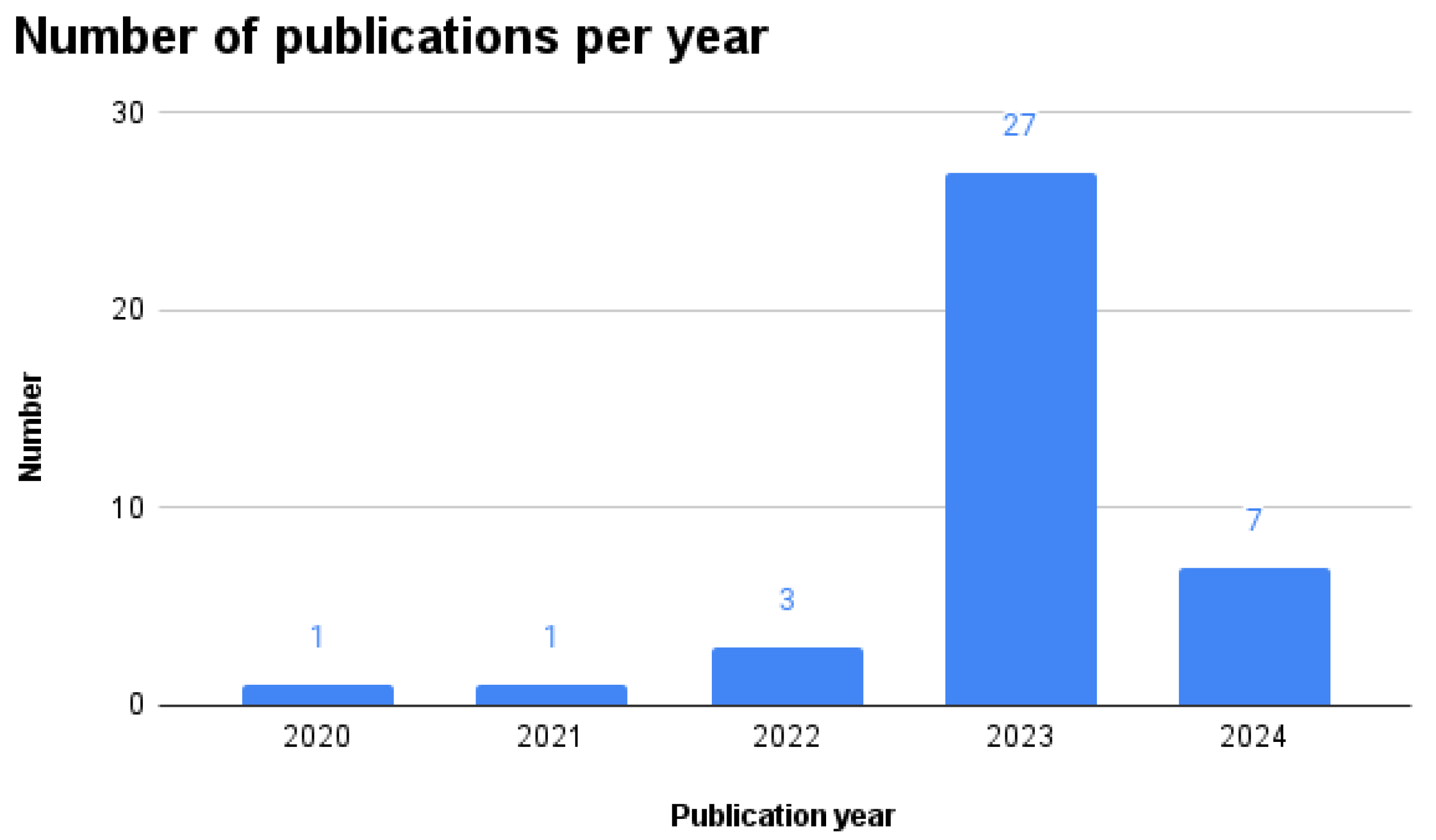

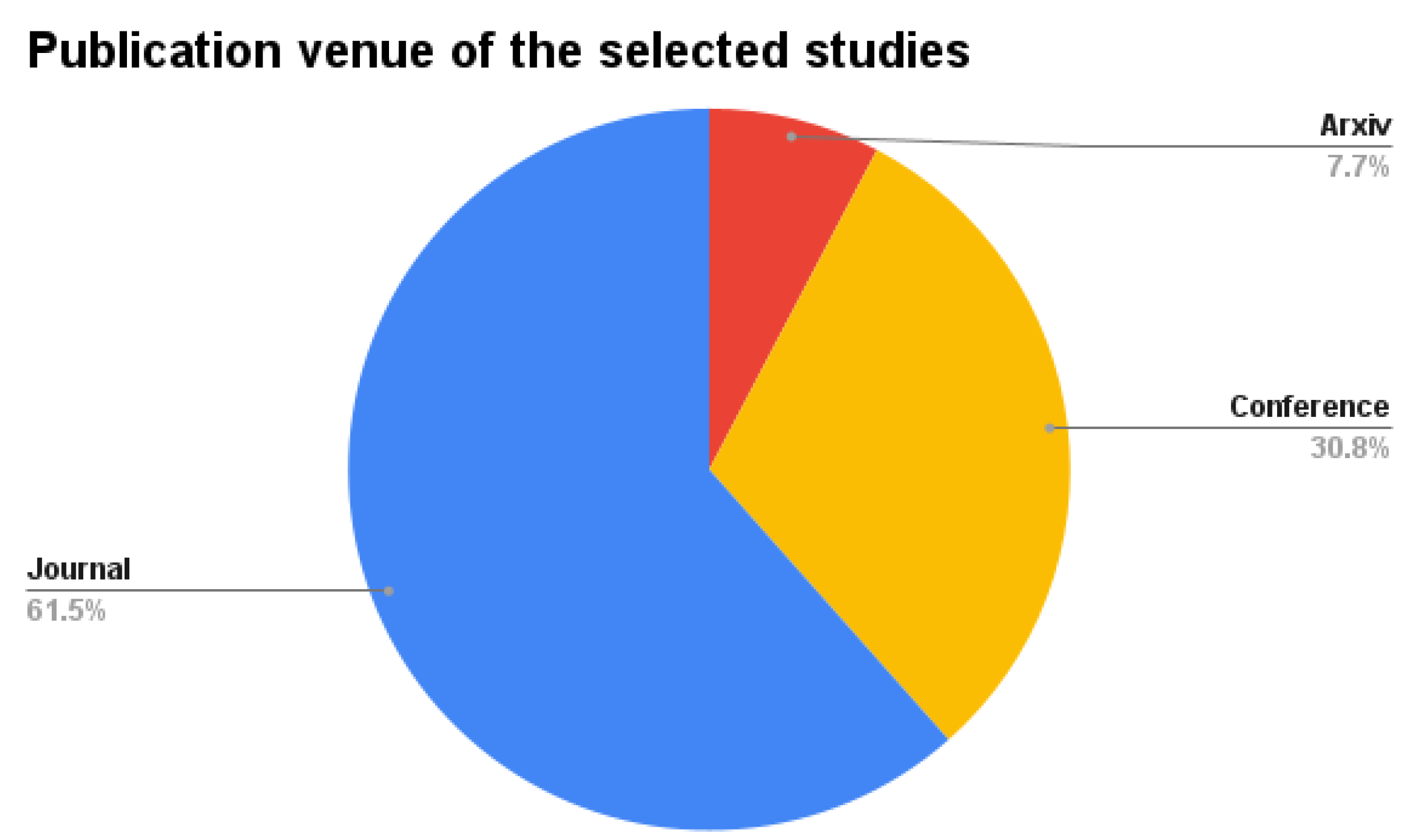

- We identified a list of 39 primary studies that focus on the ethical concerns of using generative AI. All the studies identified various ethical concerns related to specific ethical dimensions and provided strategies to address these concerns.

- Of these 39 papers, 13 conducted some form of empirical evaluation to either investigate or validate a proposed strategy. The remaining 26 are conceptual papers that propose strategies without empirical evaluation, in some cases because such evaluation is not feasible.

- We identified the most prominent ethical dimensions where ethical concerns are concentrated and the ethical dimensions that require more focused attention.

- We identified a set of key research directions needed to address ethical issues with using LLMs in practice.

2. Background

2.1. Terminology

2.2. Evolution and Advancements of Generative AI and LLMs

2.2.1. OpenAI’s GPT Series

2.3. Ethical Implications of LLMs

2.4. Regulatory and Policy Frameworks

2.5. Industry and Governmental Guidelines Selected

2.6. Existing Mitigation Strategies for Ethical Concerns

2.7. Related Systematic Reviews

3. Methodology

3.1. Research Questions

3.2. Search Strategy

3.2.1. Search String Formulation

3.2.2. Automated Search and Filtering

4. Results

4.1. Selected Studies

- General AI: Papers categorized under “General AI” address AI’s broad concepts and applications without focusing on a specific domain. They discuss relevant ethical challenges across multiple sectors where AI is deployed.

- Healthcare: Healthcare is a domain that frequently engages with ethical concerns such as privacy, safety, and bias, especially with the increasing use of AI and LLMs for medical applications. The papers in this domain discuss how AI-driven systems can both enhance and complicate healthcare practices, with patient confidentiality, data security, and algorithmic fairness being recurring themes. This domain is highly sensitive due to the direct impact on human well-being and medical decision-making.

- Legal: Papers focusing on the legal domain highlight the intersection of AI with law, exploring issues like accountability, transparency, and bias in legal AI systems. The integration of AI in legal contexts raises concerns about fairness, potential racial biases in predictive policing tools, and the transparency of AI-driven legal decisions.

- Education: The educational domain explores the ethical use of AI in learning environments, where concerns include privacy, fairness, and the transparency of AI systems in assessing student performance or providing educational content.

- Public safety: This domain involves the use of AI in public safety systems, where the ethical dimensions center on accountability, bias, and safety. AI systems used in policing, emergency response, and public surveillance must be scrutinized for fairness and transparency to avoid unintended harm to communities, especially marginalized groups.

- Societal Impact: Papers in this domain examine how LLMs interact with broader societal issues, such as their role in shaping public discourse, policy, and societal norms. Ethical concerns focus on the responsibility of those deploying LLMs to consider their social impact, including how they influence public opinion, access to information, and equality.

- Cybersecurity: This domain mainly highlights privacy concerns related to systems that use AI components or LLMs. Papers here focus on the protection of personal data, the risks of data leakage, and how AI systems, especially data-hungry models like LLMs, can be designed to respect user privacy.

- Economics: Papers in this domain focus on the economic impact and ethical concerns surrounding AI and LLMs, particularly in relation to automation, job displacement, and the ethical use of AI in economic decision-making. The economic dimension of LLMs also brings up issues of accessibility and fairness in how AI systems are distributed and who benefits from their deployment.

| Model or System | Number of Mentions |
|---|---|
| ChatGPT | 18 |
| Conversational Agents | 6 |
| DALL-E | 1 |
| EduLLMs | 1 |
| Gemini (Bard) | 2 |
| GPT-2 | 1 |
| General LLMs | 8 |
| LLM-based Chatbots | 1 |
| LLM Virtual Assistants | 1 |
| RoBERTa | 1 |
| Transformers | 1 |

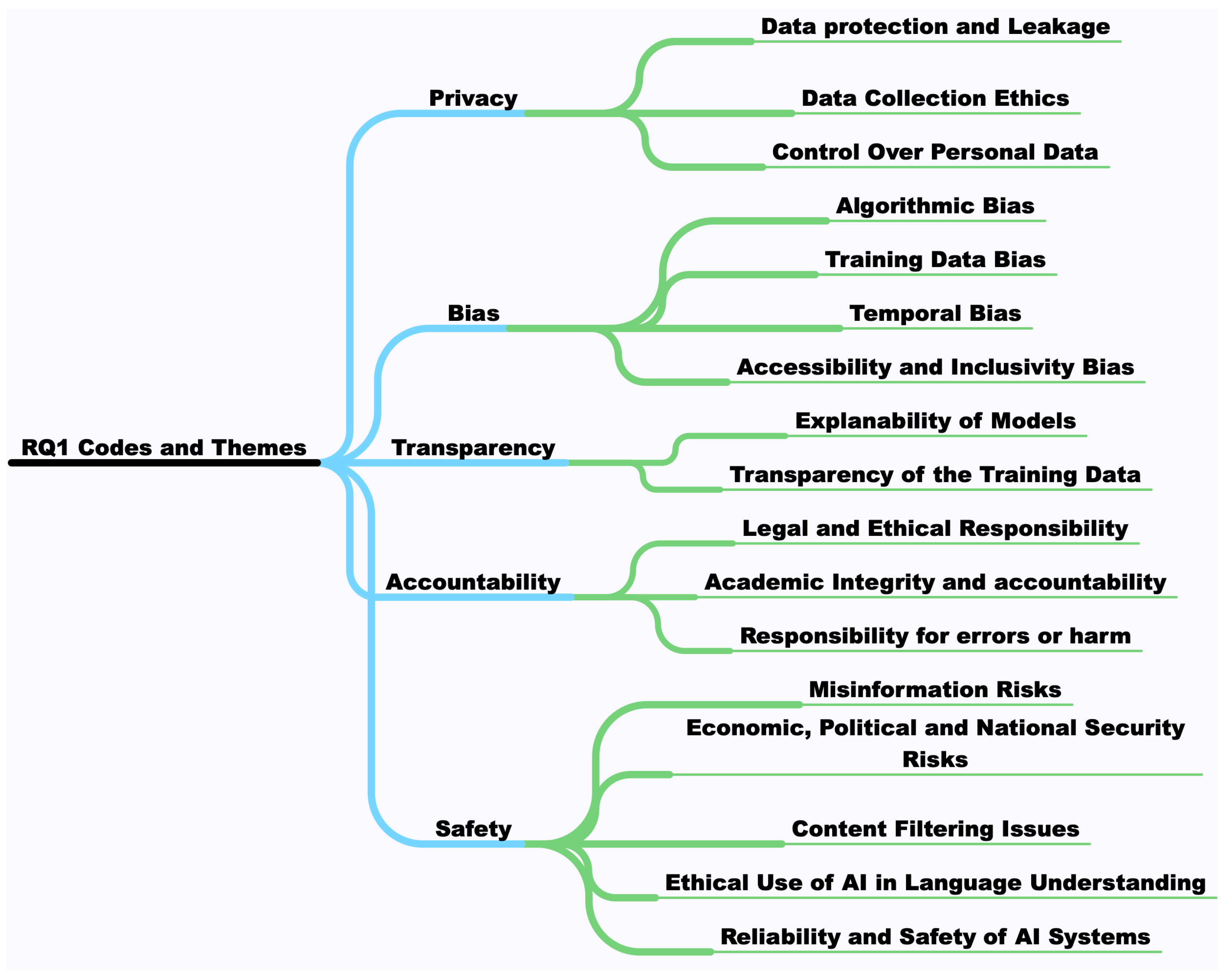

4.2. RQ1 Results

4.2.1. Safety

4.2.2. Privacy

4.2.3. Bias

4.2.4. Accountability

4.2.5. Transparency

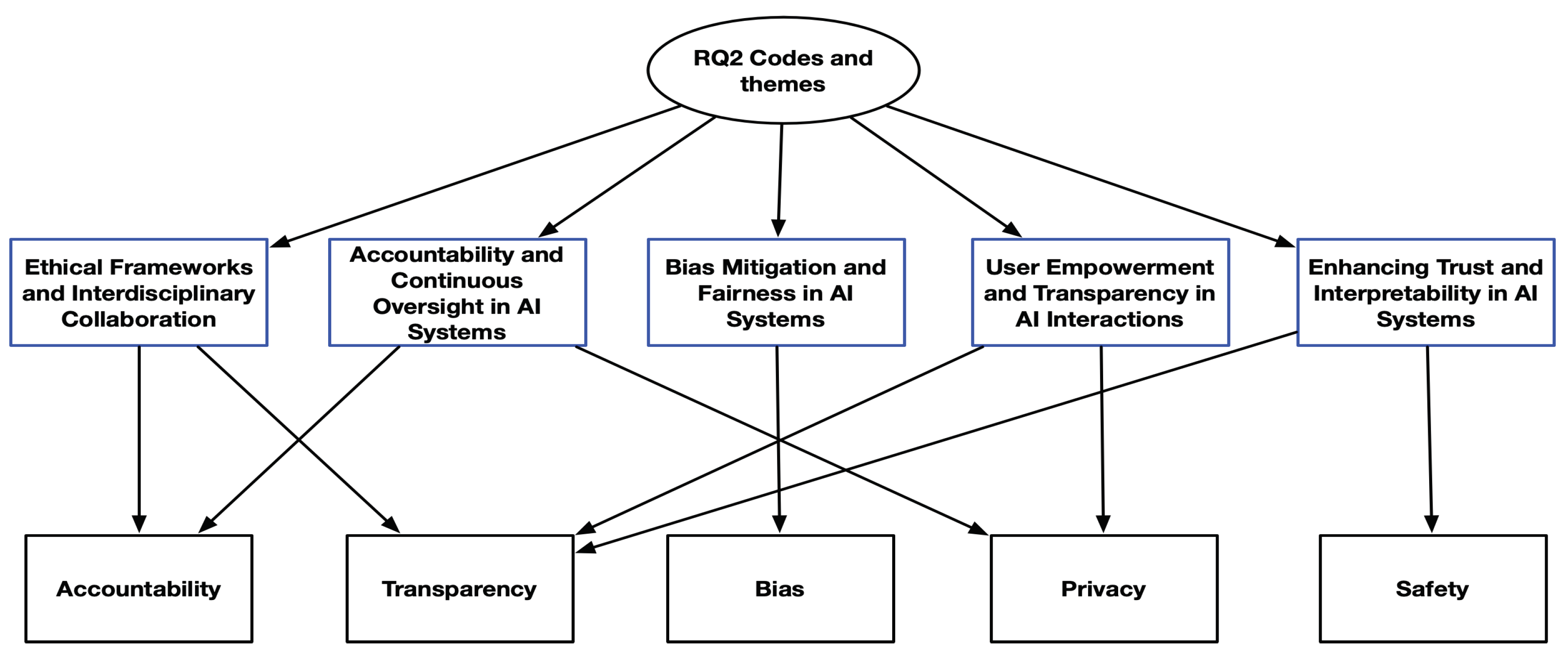

4.3. RQ2 Results

- Ethical Frameworks and Interdisciplinary Collaboration: Establishing ethical guidelines for LLMs requires input from diverse disciplines to address the complex ethical challenges they present. This theme is covered in P1, P2, P4, P6, P16, P18, P24, P25, P30, P35, P37, and P38. P1 recommends establishing ethical guidelines and regulatory frameworks for AI in healthcare, advocating interdisciplinary collaboration so that ethical standards are comprehensive and adaptable to advances in technology. This collaboration is essential to create frameworks that are both effective and applicable across various domains. P2 proposes a Machine Ethics approach, in which ethical standards and reasoning are embedded directly within AI systems. This approach would enable AI to make ethically informed decisions autonomously, integrating ethical principles into the core of AI functionality. P6 focuses on the legal dimensions of AI, suggesting that the training phase of AI may be covered under fair use; however, clearer guidelines are needed to inform system users. These examples illustrate the necessity of establishing ethical frameworks that are continuously refined through interdisciplinary cooperation, ensuring that LLMs operate ethically and are aligned with societal expectations.

- Bias Mitigation and Fairness in AI Systems: Addressing biases and promoting fairness in LLMs requires a multifaceted approach, with strategies implemented at various stages of the AI development process. This theme is covered in P1, P2, P3, P4, P6, P8, P16, P19, P25, P28, and P35. P3 applies a fill-in-the-blank method to a GPT-2 model so that context is carefully considered during predictions, focusing on binary racial decisions between “White” and “Black.” The method examines racial bias by masking racial references within the context, prompting the model to assign probabilities to each option, and comparing those probabilities to reveal potential biases. P4 combines techniques to handle biases systematically: pre-processing methods transform input data to reduce biases before training, while in-processing techniques modify learning algorithms to eliminate discrimination during training. Additionally, post-processing methods adjust the model’s output after training, treating the model as a black box, which is particularly useful when retraining is not an option. These strategies showcase a comprehensive effort to mitigate biases at different levels of the AI development pipeline, aiming to create fairer and more equitable AI systems.

- Enhancing Trust and Interpretability in AI Systems: Building trust in LLMs relies heavily on making AI systems transparent and understandable to users, especially in critical fields like healthcare. This theme is covered in P1, P7, P9, P15, P20, P24, and P30. P1 recommends improving the interpretability of AI algorithms so that healthcare professionals can clearly understand and trust the decisions generated by these systems. This involves making the decision-making processes of AI transparent, allowing professionals to verify and rely on AI outputs confidently. In P7, a mitigation strategy involves using leading questions to guide ChatGPT when it fails to generate valid responses, enabling users to iteratively refine the system’s outputs until they are accurate. This interaction fosters trust by giving users greater control over the AI’s response quality. In P9, the HCMPI method is recommended to reduce data dimensions, extracting only the relevant K-dimensional information for healthcare chatbot systems. This reduction makes the AI’s reasoning clearer and more interpretable, allowing users to follow the underlying logic without being overwhelmed by excessive data. These strategies aim to enhance AI systems by making them more interactive and interpretable.

- User Empowerment and Transparency in AI Interactions: Empowering users and ensuring transparency in AI systems are critical for fostering ethical interactions and trust. This theme is covered in P1, P2, P5, P8, P10, P11, P12, P13, P16, P17, P24, P28, P29, P30, P31, and P33. P2 recommends emphasizing critical reflection throughout the design and development phases of conversational AI. This includes giving users more control over their interactions with AI agents and being transparent about the AI’s non-human nature and limitations. Such transparency allows users to understand the AI’s capabilities and limitations, enabling more informed decision-making. In P8, a mitigation strategy involves techniques like logit output verification and proactive detection of hallucinations. These techniques are paired with participatory design, where users actively shape AI systems, ensuring that the AI’s responses remain accurate and meaningful. In P30, further mitigation strategies include functionality audits, which assess whether LLM applications meet their intended goals, and impact audits, which evaluate how AI affects users, specific groups, and the broader environment. These strategies prioritize user involvement and clarity, fostering a transparent and user-centered AI ecosystem.

- Accountability and Continuous Oversight in AI Systems: Ensuring that AI systems are accountable and continuously monitored is essential to maintain ethical standards and protect users. This theme is covered in P5, P7, P9, P10, P11, P12, P13, P20, P24, P25, P30, P31, P32, and P35. P24 recommends that AI tools like ChatGPT be evaluated by regulators, specifically in healthcare settings, to ensure safety, efficacy, and reliable performance. This highlights the importance of oversight from regulatory bodies to ensure that AI applications adhere to established standards. In P9, a mitigation strategy involves the Healthcare Chatbot-based Zero Knowledge Proof (HCZKP) method, which enables the use of data without making it visible. This approach reduces the need for extensive data collection, safeguarding privacy and ensuring ethical data handling. Additionally, the strategy recommends adopting data minimization principles by collecting only essential data and decentralizing patient data during feedback training. These strategies emphasize the need for ongoing oversight and accountability in how AI systems manage and utilize data, particularly in sensitive environments.
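The fill-in-the-blank probing described for P3 under bias mitigation can be illustrated with a minimal sketch. This is not P3's implementation: it assumes only that some scoring function returns a probability for each candidate word in a masked slot. The names `toy_scorer` and `probability_gap`, the `[MASK]` marker, the invented cue weights, and the neutral labels `group_a` and `group_b` are all hypothetical stand-ins for a real GPT-2 model and real group terms.

```python
from typing import Callable, Dict, Sequence

MASK = "[MASK]"  # hypothetical blank marker; not taken from P3

# A scorer maps (template, candidates) to one probability per candidate.
Scorer = Callable[[str, Sequence[str]], Dict[str, float]]


def toy_scorer(template: str, candidates: Sequence[str]) -> Dict[str, float]:
    """Stand-in for a language model. The weights below are invented for
    illustration only and do not come from any real model or dataset."""
    cue_weights = {"suspect": {"group_a": 3.0, "group_b": 1.0}}
    weights = {c: 1.0 for c in candidates}  # neutral default
    for cue, table in cue_weights.items():
        if cue in template.lower():
            weights = {c: table.get(c, 1.0) for c in candidates}
    total = sum(weights.values())
    # Normalise so the candidate probabilities sum to 1.
    return {c: w / total for c, w in weights.items()}


def probability_gap(template: str, first: str, second: str,
                    scorer: Scorer) -> float:
    """Return P(first) - P(second) for the single blank in the template.
    A value near 0 suggests no preference; a large magnitude flags a
    learned association worth inspecting."""
    if template.count(MASK) != 1:
        raise ValueError("template must contain exactly one blank")
    probs = scorer(template, [first, second])
    return probs[first] - probs[second]


if __name__ == "__main__":
    for sentence in ("The [MASK] person was named as a suspect.",
                     "The [MASK] person bought groceries."):
        gap = probability_gap(sentence, "group_a", "group_b", toy_scorer)
        print(f"{gap:+.2f}  {sentence}")
```

In practice the scorer would wrap a real model's next-token or masked-token probabilities, and the gap would be averaged over many templates rather than read from a single sentence.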

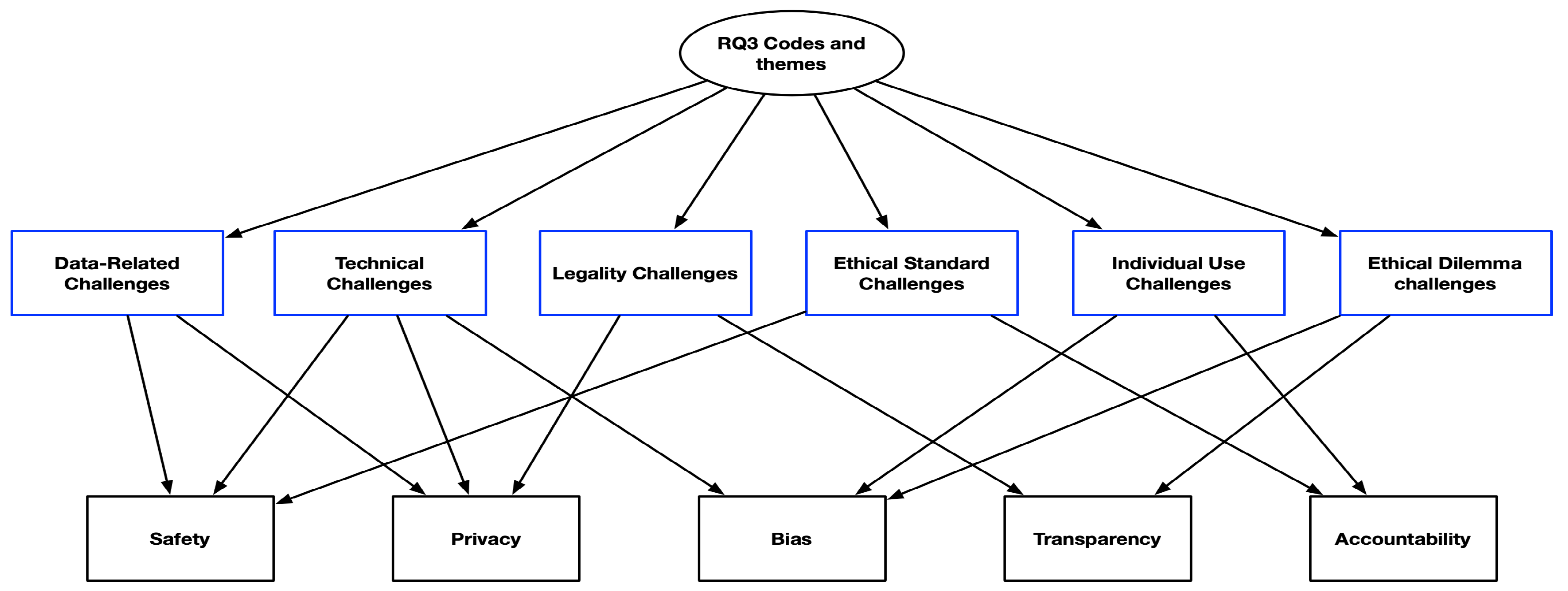

4.4. RQ3 Results

- Technical Challenges: Technical challenges in implementing mitigation strategies for LLMs often revolve around the complexity of integrating advanced techniques within diverse and sensitive contexts. This theme is covered in P1, P2, P4, P7, P10, P11, P12, P14, P20, P21, P22, P25, P28, P29, P30, P32, and P33. In P1, the use of Natural Language Processing (NLP) on Electronic Health Records (EHR) data highlights multiple challenges, such as accurately identifying clinical entities, ensuring privacy protection, handling spelling errors, and managing the de-identification of sensitive information. These challenges are compounded by the lack of labeled data, the difficulty of detecting negations, and the complexity of deciphering numerous medical abbreviations. In P2, Gonen and Goldberg (2019) critique current debiasing methods applied to word vectors. While these techniques produce high scores on self-defined metrics, they often only superficially hide biases rather than genuinely eliminating them. P25 emphasizes that Red Teaming, a method used to identify security vulnerabilities, must be a continuous process and not a one-time solution. It requires a persistent commitment to security throughout the development and operation of AI systems, with careful consideration of both legal and ethical implications. These examples demonstrate the multifaceted technical hurdles that complicate the practical application of ethical mitigation strategies in LLMs.

- Ethical Standard Challenges: Implementing ethical standards in the context of LLMs is another challenging task, particularly due to the dynamic and diverse regulatory and cultural environments in which these technologies operate. This theme is covered in P2, P8, P10, P30, P32, and P34. In P10, the proposed recommendations are based on an analysis of the current regulatory landscape and of regulations anticipated from the European Commission. However, as regulations continue to evolve, these recommendations may need to be revised to remain applicable and relevant. This highlights the importance of staying updated with the latest legal requirements in each jurisdiction to ensure ongoing compliance. P34 underscores the significance of incorporating a user-centered approach in cybersecurity, recognizing that addressing human factors can enhance the effectiveness of these measures. Yet, as cybersecurity threats evolve, strategies must be developed continuously to stay ahead of emerging challenges. P8 notes that a one-size-fits-all approach to ethical standards is unlikely to be effective, as LLMs span diverse cultural and global contexts. The absence of standardized or universally regulated frameworks for LLMs adds to the complexity, requiring adaptable and context-sensitive solutions. These examples illustrate the challenges of creating consistent and effective ethical standards in a rapidly changing and diverse landscape.

- Ethical Dilemma Challenges: Ethical dilemmas in the implementation of LLMs often arise from conflicting values and considerations, especially when navigating fairness, transparency, and privacy. This theme is covered in P3, P5, P8, P9, and P10. In P3, the issue of learned associations within AI models highlights a significant ethical dilemma. For example, language models trained on data that frequently describe suspects and criminals as “black males” may reinforce stereotypes, raising concerns about legal equality. This brings up the ethical question of whether such associations, which exist in the training data, should be allowed in AI systems deployed for legal purposes, where fairness and equality are paramount. In P5, another ethical dilemma is identified in balancing transparency against the protection of non-public information. While transparency is crucial for understanding an AI system’s behavior and decision-making process, companies often prioritize commercial secrecy to protect proprietary technology and business models. These conflicts illustrate the complexities of ethical decision-making in AI, where satisfying one ethical principle may compromise another, necessitating careful consideration and context-specific solutions.

- Individual Use Challenges: Implementing mitigation strategies for LLMs encounters specific challenges related to how individuals use and interpret AI-generated content. This theme is covered in P13, P25, P27, and P30. P13 notes that, even with established guidelines and recommendations, reports have surfaced in the healthcare and wellness sectors of individuals consulting LLMs like ChatGPT on health-related matters and taking their advice without proper scrutiny. Despite mitigation efforts, the widespread sharing of such AI-generated content on social media demonstrates the difficulty of ensuring that users critically assess AI advice. In P25, engaging multiple stakeholders introduces further complications, even when measures are in place to involve diverse perspectives. Some stakeholders may use their involvement to advance personal agendas, reinforce existing biases, or misuse sensitive data, undermining transparency and ethical intentions. These challenges illustrate the complexities of managing individual behavior and ensuring responsible AI use, despite mitigation strategies aimed at fostering ethical and informed engagement with LLMs.

- Legality Challenges: Legal challenges in implementing mitigation strategies for LLMs often involve navigating the complexities of intellectual property, censorship, and transparency. This theme is covered in P7, P8, and P30. In P7, one significant concern is the potential leakage of business secrets and proprietary information when users interact with LLMs like ChatGPT. If proprietary code is inadvertently shared during AI interactions, it may become part of the chatbot’s knowledge base, raising issues of copyright infringement and the preservation of business confidentiality. This poses a risk for organizations that depend on protecting sensitive information. In P8, censorship within LLMs, while intended to prevent harmful outputs, introduces legal dilemmas. There is often no clear or objective standard for determining what content is harmful, which can lead to the suppression of free speech or creative expression. Overly restrictive censorship may also hinder important debates, while a lack of transparency around censorship policies can create distrust in the AI system. These examples underscore the legal complexities of implementing effective mitigation strategies, where balancing ethical considerations with regulatory compliance is a persistent challenge.

- Data-related Challenges: Data-related challenges are a critical factor in the implementation of mitigation strategies for LLMs, as they affect the quality, availability, and reliability of the datasets used in AI development. This theme is covered in P11, P12, P28, and P32. P28 raises concerns about future limits on data collection and usage in machine learning. Research indicates that high-quality language data could be exhausted by 2026, with lower-quality data potentially running out by 2060. This forecast suggests that the limited availability of suitable datasets may constrain the future development and improvement of LLMs, affecting their ability to perform effectively and ethically. In P32, the quality of data is further questioned, as a substantial portion of source material comes from preprint servers that lack rigorous peer review. This reliance on unverified data can limit the generalizability and reliability of LLMs, particularly when data is drawn from diverse and variable contexts. These examples highlight the significant data challenges faced when attempting to implement mitigation strategies, where the quality, scope, and future availability of data play a crucial role in shaping the effectiveness of LLM interventions.

5. Discussion

5.1. Contextual Significance of Ethical Dimensions Across Different Domains

5.2. The Need for Continuous Evaluation and Iterative Improvements

5.3. Achieving Cross-Framework Consistency

5.4. The Lack of Scalability of Mitigations

5.5. Engaging with End Users and Interdisciplinary Collaboration

6. Threats to Validity

7. Conclusion

Acknowledgments

Appendix A. Selected Primary Studies

Appendix B. Database Search Strings

| Database | Search String |
|---|---|
| IEEE | ((“Large Language Model*” OR LLMs) AND (guideline* OR standard* OR framework* OR compliance OR principles OR practices OR governance OR impact OR oversight OR algorithmic OR policy OR policies) AND (development OR deployment OR use OR design OR implementation) AND (ethics OR ethical OR moral OR bias OR fairness OR transparency OR accountability OR privacy OR security OR sustainability OR responsible OR trustworthiness OR equit* OR inclus* OR diversity OR legal OR rights OR cultural)) |
| ACM | (([All: “large language model*”] OR [All: llms]) AND ([All: guideline*] OR [All: standard*] OR [All: framework*] OR [All: compliance] OR [All: principles] OR [All: practices] OR [All: governance] OR [All: impact] OR [All: oversight] OR [All: algorithmic] OR [All: policy] OR [All: policies]) AND ([All: development] OR [All: deployment] OR [All: use] OR [All: design] OR [All: implementation]) AND ([All: ethics] OR [All: ethical] OR [All: moral] OR [All: bias] OR [All: fairness] OR [All: transparency] OR [All: accountability] OR [All: privacy] OR [All: security] OR [All: sustainability] OR [All: responsible] OR [All: trustworthiness] OR [All: equit*] OR [All: inclus*] OR [All: diversity] OR [All: legal] OR [All: rights] OR [All: cultural])) |
| ProQuest | ((noft(“Large Language Model*”) OR noft(LLMs)) AND (noft(guideline*) OR noft(standard*) OR noft(framework*) OR noft(compliance) OR noft(principles) OR noft(practices) OR noft(governance) OR noft(impact) OR noft(oversight) OR noft(algorithmic) OR noft(policy) OR noft(policies)) AND (noft(development) OR noft(deployment) OR noft(use) OR noft(design) OR noft(implementation)) AND (noft(ethics) OR noft(ethical) OR noft(moral) OR noft(bias) OR noft(fairness) OR noft(transparency) OR noft(accountability) OR noft(privacy) OR noft(security) OR noft(sustainability) OR noft(responsible) OR noft(trustworthiness) OR noft(equit*) OR noft(inclus*) OR noft(diversity) OR noft(legal) OR noft(rights) OR noft(cultural))) |
| Web of Science | (("Large Language Model*" OR llms) AND (guideline* OR standard* OR framework* OR compliance OR principles OR practices OR governance OR impact OR oversight OR algorithmic OR policy OR policies) AND (development OR deployment OR use OR design OR implementation) AND (ethics OR ethical OR moral OR bias OR fairness OR transparency OR accountability OR privacy OR security OR sustainability OR responsible OR trustworthiness OR equit* OR inclus* OR diversity OR legal OR rights OR cultural)) |
| Wiley Online Library | (("Large Language Model*" OR llms) AND (guideline* OR standard* OR framework* OR compliance OR principles OR practices OR governance OR impact OR oversight OR algorithmic OR policy OR policies) AND (development OR deployment OR use OR design OR implementation) AND (ethics OR ethical OR moral OR bias OR fairness OR transparency OR accountability OR privacy OR security OR sustainability OR responsible OR trustworthiness OR equit* OR inclus* OR diversity OR legal OR rights OR cultural)) |
| Science Direct | (“Large Language Model” OR “LLMs”) AND (“guideline” OR “standard”) AND (“development” OR “design”) AND (“ethics” OR “moral”) |
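The strings above share one structure: four groups of OR-joined terms, AND-joined together, with database-specific field wrappers around each term. The following minimal sketch shows how such strings could be generated from shared term lists so that the variants stay consistent. It is an illustration, not the procedure the authors used; the function names (`or_group`, `build_query`, `proquest`, `acm`) are hypothetical, and the term lists are abbreviated from the table for brevity.

```python
from typing import Callable, List, Sequence

# Abbreviated term groups; the full lists appear in the table above.
GROUPS: List[List[str]] = [
    ['"Large Language Model*"', "LLMs"],
    ["guideline*", "standard*", "framework*"],
    ["development", "deployment", "use"],
    ["ethics", "ethical", "moral"],
]

Wrap = Callable[[str], str]


def plain(term: str) -> str:
    """No field wrapper (IEEE, Web of Science, Wiley style)."""
    return term


def proquest(term: str) -> str:
    """Wrap a term in the noft(...) field code seen in the ProQuest row."""
    return f"noft({term})"


def acm(term: str) -> str:
    """Wrap a lowercased term in the [All: ...] form seen in the ACM row."""
    return f"[All: {term.lower()}]"


def or_group(terms: Sequence[str], wrap: Wrap) -> str:
    """Join one group's terms with OR inside parentheses."""
    return "(" + " OR ".join(wrap(t) for t in terms) + ")"


def build_query(groups: Sequence[Sequence[str]], wrap: Wrap = plain) -> str:
    """AND together the OR-groups, wrapped in an outer pair of parentheses."""
    return "(" + " AND ".join(or_group(g, wrap) for g in groups) + ")"


if __name__ == "__main__":
    for name, wrap in (("plain", plain), ("ProQuest", proquest), ("ACM", acm)):
        print(f"{name}: {build_query(GROUPS, wrap)}")
```

Generating every variant from one set of term lists makes it easier to check that each database received the same terms. A shortened string such as the Science Direct one would need its own, smaller term lists.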

References

- M. Alawida, S. Mejri, A. Mehmood, B. Chikhaoui, O. Isaac Abiodun, A comprehensive study of chatgpt: advancements, limitations, and ethical considerations in natural language processing and cybersecurity, Information 14 (8) (2023) 462. [CrossRef]

- C. Arora, J. Grundy, M. Abdelrazek, Advancing requirements engineering through generative ai: Assessing the role of llms, in: Generative AI for Effective Software Development, Springer, 2024, pp. 129–148. [CrossRef]

- A. A. Linkon, M. Shaima, M. S. U. Sarker, N. Nabi, M. N. U. Rana, S. K. Ghosh, M. A. Rahman, H. Esa, F. R. Chowdhury, et al., Advancements and applications of generative artificial intelligence and large language models on business management: A comprehensive review, Journal of Computer Science and Technology Studies 6 (1) (2024) 225–232. [CrossRef]

- D. H. Hagos, R. Battle, D. B. Rawat, Recent advances in generative ai and large language models: Current status, challenges, and perspectives, IEEE Transactions on Artificial Intelligence (2024). [CrossRef]

- Bengesi, H. El-Sayed, M. K. Sarker, Y. Houkpati, J. Irungu, T. Oladunni, Advancements in generative ai: A comprehensive review of gans, gpt, autoencoders, diffusion model, and transformers., IEEE Access (2024). [CrossRef]

- S. Reddy, Generative ai in healthcare: an implementation science informed translational path on application, integration and governance, Implementation Science 19 (1) (2024) 27. [CrossRef]

- Y. J. P. Bautista, C. Theran, R. Aló, Ethical considerations of generative ai: A survey exploring the role of decision makers in the loop, in: Proceedings of the AAAI Symposium Series, Vol. 3, 2024, pp. 391–398. [CrossRef]

- N. Bontridder, Y. Poullet, The role of artificial intelligence in disinformation, Data & Policy 3 (2021) e32. [CrossRef]

- F. Germani, G. Spitale, N. Biller-Andorno, The dual nature of ai in information dissemination: Ethical considerations, JMIR AI 3 (2024) e53505. [CrossRef]

- T. W. Sanchez, M. Brenman, X. Ye, The ethical concerns of artificial intelligence in urban planning, Journal of the American Planning Association (2024) 1–14. [CrossRef]

- A. Rezaei Nasab, M. Dashti, M. Shahin, M. Zahedi, H. Khalajzadeh, C. Arora, P. Liang, Fairness concerns in app reviews: A study on ai-based mobile apps, ACM Transactions on Software Engineering and Methodology (2024). [CrossRef]

- B. Kitchenham, L. Madeyski, D. Budgen, Segress: Software engineering guidelines for reporting secondary studies, IEEE Transactions on Software Engineering 49 (3) (2022) 1273–1298. [CrossRef]

- K. Petersen, S. Vakkalanka, L. Kuzniarz, Guidelines for conducting systematic mapping studies in software engineering: An update, Information and software technology 64 (2015) 1–18. [CrossRef]

- J. Dewey, J. H. Tufts, Ethics, DigiCat, 2022.

- S. Sivasubramaniam, D. H. Dlabolová, V. Kralikova, Z. R. Khan, Assisting you to advance with ethics in research: an introduction to ethical governance and application procedures, International Journal for Educational Integrity 17 (2021) 1–18. [CrossRef]

- D. C. Poff, Academic ethics and academic integrity, in: Encyclopedia of business and professional ethics, Springer, 2023, pp. 11–16.

- A. Jobin, M. Ienca, E. Vayena, The global landscape of ai ethics guidelines, Nature machine intelligence 1 (9) (2019) 389–399. [CrossRef]

- S. L. Anderson, M. Anderson, Ai and ethics, AI and Ethics 1 (1) (2021) 27–31. [CrossRef]

- J.-C. Põder, Ai ethics–a review of three recent publications (2021). [CrossRef]

- E. Kazim, A. S. Koshiyama, A high-level overview of ai ethics, Patterns 2 (9) (2021).

- M. Coeckelbergh, AI ethics, Mit Press, 2020.

- L. Floridi, J. Cowls, M. Beltrametti, R. Chatila, P. Chazerand, V. Dignum, C. Luetge, R. Madelin, U. Pagallo, F. Rossi, et al., Ai4people—an ethical framework for a good ai society: opportunities, risks, principles, and recommendations, Minds and machines 28 (2018) 689–707. [CrossRef]

- R. Eitel-Porter, Beyond the promise: implementing ethical ai, AI and Ethics 1 (1) (2021) 73–80. [CrossRef]

- E. Hickman, M. Petrin, Trustworthy ai and corporate governance: the eu’s ethics guidelines for trustworthy artificial intelligence from a company law perspective, European Business Organization Law Review 22 (2021) 593–625. [CrossRef]

- I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, Y. Bengio, Generative adversarial nets, Advances in neural information processing systems 27 (2014).

- A. Vaswani, Attention is all you need, Advances in Neural Information Processing Systems (2017).

- T. B. Brown, Language models are few-shot learners, arXiv preprint arXiv:2005.14165 (2020).

- V. Alto, Modern Generative AI with ChatGPT and OpenAI Models: Leverage the capabilities of OpenAI’s LLM for productivity and innovation with GPT3 and GPT4, Packt Publishing Ltd, 2023.

- E. M. Bender, T. Gebru, A. McMillan-Major, S. Shmitchell, On the dangers of stochastic parrots: Can language models be too big?, in: Proceedings of the 2021 ACM conference on fairness, accountability, and transparency, 2021, pp. 610–623. [CrossRef]

- C. Rudin, Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead, Nature machine intelligence 1 (5) (2019) 206–215. [CrossRef]

- R. Shokri, M. Stronati, C. Song, V. Shmatikov, Membership inference attacks against machine learning models, in: 2017 IEEE symposium on security and privacy (SP), IEEE, 2017, pp. 3–18. [CrossRef]

- J. Li, W. Xiao, C. Zhang, Data security crisis in universities: identification of key factors affecting data breach incidents, Humanities and Social Sciences Communications 10 (1) (2023) 1–18. [CrossRef]

- T. Madiega, Artificial intelligence act, European Parliament: European Parliamentary Research Service (2021).

- E. Jillson, Aiming for truth, fairness, and equity in your company’s use of ai, Federal Trade Commission 19 (2021).

- N. AI, Artificial intelligence risk management framework: Generative artificial intelligence profile (2024). [CrossRef]

- S. Migliorini, China’s interim measures on generative ai: Origin, content and significance, Computer Law & Security Review 53 (2024) 105985 (2024) 105985. [CrossRef]

- I. S. Association, et al., The ieee global initiative on ethics of autonomous and intelligent systems. ieee. org. retrieved march 12, 2021 (2017). [CrossRef]

- S. Pichai, Ai at google: our principles, The Keyword 7 (2018) (2018) 1–3.

- OpenAI, Openai safety standards, https://openai.com/safety-standards/ (2024).

- M. C. Buiten, Towards intelligent regulation of artificial intelligence, European Journal of Risk Regulation 10 (1) (2019) 41–59. [CrossRef]

- IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, Ethically aligned design: A vision for prioritizing human well-being with autonomous and intelligent systems, version 2, http://standards.ieee.org/wp-content/uploads/import/documents/other/ead_v2.pdf (2017). [CrossRef]

- N. AI, Artificial intelligence risk management framework (ai rmf 1.0) (2023).

- Microsoft, Microsoft responsible ai standard, version 2: General requirements, https://blogs.microsoft.com/wp-content/uploads/prod/sites/5/2022/06/Microsoft-Responsible-AI-Standard-v2-General-Requirements-3.pdf (2022).

- A. Oketunji, M. Anas, D. Saina, Large language model (llm) bias index—llmbi, Data & Policy (2023). [CrossRef]

- M. T. Ribeiro, S. Singh, C. Guestrin, " why should i trust you?" explaining the predictions of any classifier, in: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, 2016, pp. 1135–1144. [CrossRef]

- B. McMahan, E. Moore, D. Ramage, S. Hampson, B. A. y Arcas, Communication-efficient learning of deep networks from decentralized data, in: Artificial intelligence and statistics, PMLR, 2017, pp. 1273–1282.

- P. on AI, Annual report 2021 (2021).URL https://partnershiponai.org/resource/annual-report-2021/.

- F. Li, N. Ruijs, Y. Lu, Ethics & ai: A systematic review on ethical concerns and related strategies for designing with ai in healthcare, Ai 4 (1) (2022) 28–53. [CrossRef]

- E. Atlam, M. Almaliki, A. Alfahaid, I. Gad, G. Elmarhomy, M. Alwateer, A. Ahmed, Slm-aie: A systematic literature map of artificial intelligence ethics (2024). [CrossRef]

- G. Palumbo, D. Carneiro, V. Alves, Objective metrics for ethical ai: a systematic literature review, International Journal of Data Science and Analytics (2024) 1–21. [CrossRef]

- D. Leslie, Understanding artificial intelligence ethics and safety, arXiv preprint arXiv:1906.05684 (2019).

- L. Floridi, J. Cowls, A unified framework of five principles for ai in society, Machine learning and the city: Applications in architecture and urban design (2022) 535–545. [CrossRef]

- V. Dignum, Responsible artificial intelligence: how to develop and use AI in a responsible way, Vol. 2156, Springer, 2019.

- T. Hagendorff, The ethics of AI ethics: An evaluation of guidelines, Minds and Machines 30 (1) (2020) 99–120.

- J. Morley, L. Floridi, L. Kinsey, A. Elhalal, From what to how: an initial review of publicly available AI ethics tools, methods and research to translate principles into practices, Science and Engineering Ethics 26 (4) (2020) 2141–2168.

- A. Anawati, H. Fleming, M. Mertz, J. Bertrand, J. Dumond, S. Myles, J. Leblanc, B. Ross, D. Lamoureux, D. Patel, et al., Artificial intelligence and social accountability in the Canadian health care landscape: A rapid literature review, PLOS Digital Health 3 (9) (2024) e0000597. [CrossRef]

- H. Smith, Clinical AI: opacity, accountability, responsibility and liability, AI & Society 36 (2) (2021) 535–545.

- N. H. Shah, M. A. Pfeffer, M. Ghassemi, The need for continuous evaluation of artificial intelligence prediction algorithms, JAMA Network Open 7 (9) (2024) e2433009–e2433009. [CrossRef]

- R. Ortega-Bolaños, J. Bernal-Salcedo, M. Germán Ortiz, J. Galeano Sarmiento, G. A. Ruz, R. Tabares-Soto, Applying the ethics of AI: a systematic review of tools for developing and assessing AI-based systems, Artificial Intelligence Review 57 (5) (2024) 110. [CrossRef]

- S. Kijewski, E. Ronchi, E. Vayena, The rise of checkbox AI ethics: a review, AI and Ethics (2024) 1–10. [CrossRef]

- M. Srikumar, R. Finlay, G. Abuhamad, C. Ashurst, R. Campbell, E. Campbell-Ratcliffe, H. Hongo, S. R. Jordan, J. Lindley, A. Ovadya, et al., Advancing ethics review practices in AI research, Nature Machine Intelligence 4 (12) (2022) 1061–1064. [CrossRef]

- Y. Li, S. Goel, Making it possible for the auditing of AI: A systematic review of AI audits and AI auditability, Information Systems Frontiers (2024) 1–31. [CrossRef]

- E. Prem, From ethical AI frameworks to tools: a review of approaches, AI and Ethics 3 (3) (2023) 699–716.

- A. A. Khan, M. A. Akbar, M. Fahmideh, P. Liang, M. Waseem, A. Ahmad, M. Niazi, P. Abrahamsson, AI ethics: an empirical study on the views of practitioners and lawmakers, IEEE Transactions on Computational Social Systems 10 (6) (2023) 2971–2984. [CrossRef]

- V. Vakkuri, K.-K. Kemell, Implementing AI ethics in practice: An empirical evaluation of the RESOLVEDD strategy, in: Software Business: 10th International Conference, ICSOB 2019, Jyväskylä, Finland, November 18–20, 2019, Proceedings 10, Springer, 2019, pp. 260–275.

- C. Deng, Y. Duan, X. Jin, H. Chang, Y. Tian, H. Liu, H. P. Zou, Y. Jin, Y. Xiao, Y. Wang, et al., Deconstructing the ethics of large language models from long-standing issues to new-emerging dilemmas, arXiv preprint arXiv:2406.05392 (2024).

- A. A. Khan, S. Badshah, P. Liang, M. Waseem, B. Khan, A. Ahmad, M. Fahmideh, M. Niazi, M. A. Akbar, Ethics of AI: A systematic literature review of principles and challenges, in: Proceedings of the 26th International Conference on Evaluation and Assessment in Software Engineering, 2022, pp. 383–392. [CrossRef]

- J. Fjeld, N. Achten, H. Hilligoss, A. Nagy, M. Srikumar, Principled artificial intelligence: Mapping consensus in ethical and rights-based approaches to principles for AI, Berkman Klein Center Research Publication (2020-1) (2020).

- R. Binns, Fairness in machine learning: Lessons from political philosophy, in: Conference on fairness, accountability and transparency, PMLR, 2018, pp. 149–159.

- W. Hoffmann-Riem, Artificial intelligence as a challenge for law and regulation, Regulating artificial intelligence (2020) 1–29. [CrossRef]

- C. Cath, Governing artificial intelligence: ethical, legal and technical opportunities and challenges, Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 376 (2133) (2018) 20180080. [CrossRef]

- K. Murphy, E. Di Ruggiero, R. Upshur, D. J. Willison, N. Malhotra, J. C. Cai, N. Malhotra, V. Lui, J. Gibson, Artificial intelligence for good health: a scoping review of the ethics literature, BMC Medical Ethics 22 (2021) 1–17. [CrossRef]

- A. Hagerty, I. Rubinov, Global AI ethics: a review of the social impacts and ethical implications of artificial intelligence, arXiv preprint arXiv:1907.07892 (2019).

- S. Pink, E. Quilty, J. Grundy, R. Hoda, Trust, artificial intelligence and software practitioners: an interdisciplinary agenda, AI & Society (2024) 1–14. [CrossRef]

- M. Al-kfairy, D. Mustafa, N. Kshetri, M. Insiew, O. Alfandi, Ethical challenges and solutions of generative AI: An interdisciplinary perspective, in: Informatics, Vol. 11, MDPI, 2024, p. 58. [CrossRef]

- S. Keles, Navigating in the moral landscape: analysing bias and discrimination in AI through philosophical inquiry, AI and Ethics (2023) 1–11. [CrossRef]

- R. Gianni, S. Lehtinen, M. Nieminen, Governance of responsible AI: From ethical guidelines to cooperative policies, Frontiers in Computer Science 4 (2022) 873437. [CrossRef]

| Criteria ID | Criterion |
|---|---|
| Inclusion Criteria | |
| I01 | Papers discussing ethical concerns in the use of Generative AI. |
| I02 | Full text of the article is available. |
| I03 | Peer-reviewed studies and sector-specific studies. |
| I04 | Papers written in English. |
| Exclusion Criteria | |
| E01 | Papers about GenAI that do not discuss ethical concerns. |
| E02 | Papers about ethical concerns that are not in the field of GenAI. |
| E03 | Papers that are less than four pages in length. |
| E04 | Conference or workshop papers if an extended journal version of the same paper exists. |
| E05 | Papers with inadequate information to extract relevant data. |
| E06 | Vision papers, books (chapters), posters, discussions, opinions, keynotes, magazine articles, experience, and comparison papers. |

| Domain | Papers |
|---|---|
| Cybersecurity | [P1, P23, P27, P29, P31, P33] |
| Education | [P14, P16, P26] |
| General AI | [P2, P4, P5, P15, P17, P20, P28, P30, P35, P37, P39] |
| Healthcare | [P1, P9, P10, P11, P13, P21, P22, P24, P32, P34] |
| Societal Impact | [P2, P25, P38] |
| Legal | [P3, P6, P8] |
| Public Safety | [P7, P18, P19, P27] |

| Ethical Dimension | Definition | Paper IDs |
|---|---|---|
| Safety | Generative AI systems must operate safely and securely, minimizing risks and issues. | P2, P6, P7, P8, P9, P10, P12, P13, P14, P18, P19, P21, P22, P25, P27, P28, P30, P32, P34, P38 |
| Privacy | The information and privacy of individuals must be protected when generative AI systems are used. | P1, P2, P5, P7, P8, P9, P10, P11, P12, P14, P16, P17, P22, P23, P24, P25, P26, P27, P28, P29, P31, P33, P34, P37, P38, P39 |
| Transparency | The operations and decisions of generative AI systems must be open and understandable to stakeholders. | P2, P5, P6, P8, P10, P11, P12, P13, P15, P16, P19, P21, P22, P24, P34, P38, P39 |
| Bias | Biases in the system must be addressed because they may affect the fairness and equity of the AI system. | P1, P3, P4, P5, P6, P8, P10, P11, P12, P14, P15, P16, P17, P18, P19, P20, P22, P24, P25, P28, P30, P34, P35, P36, P38 |
| Accountability | AI systems must be accountable, with actions that can be traced and justified. | P1, P5, P11, P16, P17, P21, P24, P34, P37, P38 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).