I. Introduction

LLMSeamCarver is an image resizing method designed to maintain important image details during resizing. By integrating large language models (LLMs), LLMSeamCarver goes beyond traditional methods, enabling a smarter, context-aware resizing process. LLMs enhance tasks such as region prioritization, interpolation, edge detection, and energy calculation by analyzing image context or textual inputs. This allows LLMSeamCarver to preserve crucial image features like faces and text while optimizing resizing for different scenarios.

This paper explores how LLMs improve the accuracy and efficiency of image resizing.

II. Background

i. Image Resizing and LLMs

SeamCarver enhances the traditional seam carving method by incorporating LLMs. The integration of LLMs allows for dynamic resizing of images based on real-time context and user input, rather than relying on fixed parameters. The LLM dynamically optimizes the energy functions that determine how seams are selected and removed, making the resizing process adaptive and context-sensitive. This approach allows for superior preservation of image details and better quality when resizing for specific tasks, such as creating thumbnails or preparing images for various screen sizes.

LLMs contribute by adjusting the parameters based on the content of the image and the desired effect, improving the quality of resized images while providing flexibility. The dynamic optimization of the resizing process using LLMs represents a major leap in the flexibility and efficiency of image resizing techniques.

ii. LLM-Related Work

Recent research in Large Language Models (LLMs) has demonstrated their potential in a variety of domains, including text, image, and video generation.

Recently, LLMs have shown their potential to enhance traditional image processing workflows through advanced model architectures and optimization techniques. For instance, Li et al. (2024) demonstrated how LLMs can optimize convolutional neural network (CNN) layers for feature extraction in image processing tasks, improving the performance of deep learning models in image classification and segmentation [1]. Zhang et al. (2024) explored the use of LLMs in multi-modal fusion networks, enabling the integration of both visual and textual information, which enhances image analysis tasks [2,3,4,5].

Additionally, work on efficient algorithm design [5,6,7,8,9,10,11,12] and efficient LLMs [13,14] has shown promising prospects for efficient model design with LLMs. Through dynamic optimization, LLMs allow for more context-aware resizing by adjusting energy functions during the process. This flexibility helps preserve fine-grained details, which is especially important for content-aware resizing techniques such as SeamCarver. Studies on text-to-image models have demonstrated how LLMs can modify images based on contextual prompts [1,15,16,17,18,19,20], providing further advances relevant to content-aware image resizing.

These advancements highlight the increasing role of LLMs in enhancing traditional image processing tasks and their ability to contribute significantly to content-aware resizing techniques like SeamCarver.

iii. Image and Vision Generation Work

The application of deep learning techniques in image and vision generation has also seen significant advancements [4,11,21,22,23,24,25,26]. Deep convolutional networks, for instance, have been used for texture classification (Bu et al., 2019), which is directly relevant to tasks like energy function optimization in SeamCarver [10]. These methods help improve detail preservation during image resizing by ensuring that textures and edges are maintained throughout the process.

Furthermore, multi-modal fusion networks and techniques for image-driven predictions (as demonstrated by Dan et al., 2024) offer important insights into how AI can be used to process and modify images in real time [11,21]. In addition, model compression is currently attracting attention from both the model-design and system-design perspectives [27,28]. These innovations align closely with SeamCarver’s goal of dynamic, user-controlled image resizing, making them valuable for future developments in image resizing technology.

iv. Image Resizing and Seam Carving Research

SeamCarver builds upon earlier work in image resizing techniques. In addition to the foundational work by Avidan and Shamir (2007), other studies have contributed to enhancing seam carving methods. Kiess (2014) introduced improved edge preservation methods within seam carving [29], which is crucial for ensuring that resized images do not suffer from visible distortions along object boundaries. Zhang et al. (2015) compared classic image resizing methods and found that seam carving provided superior results compared with simpler resizing techniques, particularly in terms of detail preservation [30].

Frankovich (2011) further advanced seam carving by integrating energy gradient functionals to enhance the carving process, providing more control over the resizing operation [31]. These improvements are incorporated into SeamCarver, which leverages LLMs to further optimize the parameter tuning and energy functions during resizing.

v. Impact of SeamCarver and Future Directions

The development of SeamCarver represents a significant step forward in content-aware image resizing. By leveraging LLMs, this approach enables adaptive resizing that maintains image quality across a variety of use cases. As machine learning continues to evolve, future versions of SeamCarver could integrate more advanced techniques, such as generative models for higher-quality resizing and multi-task learning to tailor resizing to specific contexts.

Moreover, SeamCarver provides an excellent example of how LLMs can be used to enhance traditional image processing tasks, enabling more intelligent and user-driven modifications to images. This work will likely spur further research into dynamic image resizing and contribute to more versatile, AI-enhanced image editing tools in the future.

III. Functionality

SeamCarver leverages LLM-Augmented methods to ensure adaptive, high-quality image resizing while preserving both structural and semantic integrity. The key functionalities are:

- **LLM-Augmented Region Prioritization:** LLMs analyze semantics or textual inputs to prioritize key regions, ensuring critical areas (e.g., faces, text) are preserved.
- **LLM-Augmented Bicubic Interpolation:** LLMs optimize bicubic interpolation for high-quality enlargements, adjusting parameters based on context or user input.
- **LLM-Augmented LC Algorithm:** LLMs adapt the LC (Loyalty-Clarity) algorithm by adjusting weights, helping preserve important image features during resizing.
- **LLM-Augmented Canny Edge Detection:** LLMs guide Canny edge detection to refine boundaries, enhancing clarity and accuracy based on contextual analysis.
- **LLM-Augmented Hough Transformation:** LLMs strengthen the Hough transformation, detecting structural lines and helping preserve geometric features.
- **LLM-Augmented Absolute Energy Function:** LLMs dynamically adjust energy maps to improve seam selection for more precise resizing.
- **LLM-Augmented Dual Energy Model:** LLMs refine energy functions, enhancing flexibility and supporting effective seam carving across various use cases.
- **LLM-Augmented Performance Evaluation:** CNN-based classification experiments on CIFAR-10 are enhanced with LLM feedback to fine-tune resizing results.

IV. LLM-Guided Region Prioritization

To enhance the seam carving process, we integrate Large Language Models (LLMs) to guide region prioritization during image resizing. Traditional seam carving typically removes seams based on an energy map derived from pixel-level intensity or gradient differences. However, this method may struggle to preserve regions with semantic significance, such as faces, text, or objects, which require more context-aware resizing. Our approach introduces LLMs to assign semantic importance to different regions of the image, modifying the energy map to prioritize the preservation of these crucial regions.

i. Method Overview

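Given an image $I$, the initial energy map $E(x,y)$ is computed using standard seam carving techniques, typically relying on pixel-based features such as intensity gradients and contrast:

$$E(x,y) = \left|\frac{\partial I}{\partial x}(x,y)\right| + \left|\frac{\partial I}{\partial y}(x,y)\right|$$

where $\frac{\partial I}{\partial x}(x,y)$ and $\frac{\partial I}{\partial y}(x,y)$ represent the gradient values of the image $I$ at pixel $(x,y)$ in the $x$- and $y$-directions, respectively.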
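The energy map above can be sketched in plain Python for a grayscale image stored as a list of rows. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses central differences in the interior and one-sided differences at the borders (the text does not specify border handling), and the helper names are hypothetical.

```python
# Illustrative sketch; function names are hypothetical.

def finite_diff(values, i):
    """Derivative estimate of a 1-D sequence at index i."""
    n = len(values)
    if n < 2:
        return 0.0
    if i == 0:
        return float(values[1] - values[0])       # forward difference at the first border
    if i == n - 1:
        return float(values[i] - values[i - 1])   # backward difference at the last border
    return (values[i + 1] - values[i - 1]) / 2.0  # central difference in the interior


def energy_map(img):
    """E(x, y) = |dI/dx| + |dI/dy| for a grayscale image given as a list of rows."""
    h, w = len(img), len(img[0])
    columns = [[img[r][c] for r in range(h)] for c in range(w)]
    return [
        [abs(finite_diff(img[r], c)) + abs(finite_diff(columns[c], r)) for c in range(w)]
        for r in range(h)
    ]


if __name__ == "__main__":
    # A vertical step edge: energy peaks in the two columns next to the step.
    image = [[0, 0, 10, 10]] * 3
    print(energy_map(image))  # [[0.0, 5.0, 5.0, 0.0], [0.0, 5.0, 5.0, 0.0], [0.0, 5.0, 5.0, 0.0]]
```

High-energy pixels sit on the edge, so a minimum-energy seam would pass through the flat columns instead.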

Next, a Large Language Model (LLM) is employed to analyze either the image content directly or a user-provided textual description of the regions to prioritize. For example, a user might specify that "faces should be preserved" or "text should remain readable." This description is processed by the LLM, which assigns an importance score $S(x,y)$ to each pixel based on its semantic relevance. The function that generates these scores is denoted as:

$$S(x,y) = \mathrm{LLM}(I, D)$$

where $\mathrm{LLM}(I, D)$ represents the output of the LLM processing both the image $I$ and a description $D$. The LLM interprets the description $D$ through its internal knowledge of language and context, identifying which parts of the image correspond to higher-priority regions (e.g., faces, text, objects).

The LLM’s understanding of the image is derived using techniques such as **transformer architectures** [32] and **contextual embeddings** [33], which allow the model to capture both local and global relationships within the image so that important features are recognized and prioritized. For example, the LLM might recognize that a region containing a face is more important than a background area when performing resizing.

ii. Energy Map Adjustment

To modify the energy map, the semantic importance scores $S(x,y)$ are combined with the original energy map $E(x,y)$. The modified energy map $E'(x,y)$ is calculated as follows:

$$E'(x,y) = E(x,y) + \lambda\, S(x,y)$$

where $\lambda \ge 0$ is a scalar weight that determines the influence of the LLM-based importance scores on the energy map. By incorporating $S(x,y)$, the energy map becomes content-aware: regions with higher semantic importance (e.g., faces, text) receive higher energy values. Because seam carving removes the seams with the lowest cumulative energy, these regions become less likely to be removed during the seam carving process.

iii. Pseudocode

Algorithm 1: LLM-Guided Region Prioritization for Seam Carving

Initialize:

Compute the initial energy map $E(x,y)$ for the image $I$;

Obtain semantic importance scores $S(x,y)$ from the LLM based on the image content or the user description $D$;

Normalize the importance scores to a suitable range (e.g., $[0, 1]$).

Adjustment:

1. Set $\lambda$ to control the influence of semantic importance on the energy map.

2. For each pixel $(x,y)$, compute the adjusted energy $E'(x,y) = E(x,y) + \lambda\, S(x,y)$.

Output:

The adjusted energy map $E'$ for guiding seam carving.
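Algorithm 1 can be sketched in Python as follows. Min-max normalization to [0, 1] is one possible reading of "a suitable range", and the score grid is a placeholder for whatever the LLM produces (for example a hypothetical face or text mask); neither detail is specified by the source, and the function names are illustrative.

```python
# Illustrative sketch of Algorithm 1; names are hypothetical.

def normalize_scores(scores):
    """Min-max normalize a 2-D grid of scores to [0, 1]; a constant grid maps to zeros."""
    flat = [v for row in scores for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0] * len(row) for row in scores]
    return [[(v - lo) / (hi - lo) for v in row] for row in scores]


def prioritize_regions(energy, scores, lam=1.0):
    """E'(x, y) = E(x, y) + lam * S(x, y), with S normalized to [0, 1].

    `scores` stands in for the LLM output S(x, y); it is any 2-D grid of
    numbers here, not a real model call.
    """
    s = normalize_scores(scores)
    return [[e + lam * sv for e, sv in zip(e_row, s_row)] for e_row, s_row in zip(energy, s)]


if __name__ == "__main__":
    energy = [[1.0, 1.0], [1.0, 1.0]]
    face_mask = [[0, 10], [0, 10]]   # hypothetical importance: right column is a "face"
    print(prioritize_regions(energy, face_mask, lam=5.0))  # [[1.0, 6.0], [1.0, 6.0]]
```

Raising the energy of important pixels, rather than lowering it, is what makes minimum-energy seams steer around them.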

V. LLM-Augmented Bicubic Interpolation

LLMSeamCarver integrates an LLM-augmented bicubic interpolation method for image resizing. Bicubic interpolation smooths pixel values when an image is enlarged, and the LLM is used to improve the visual quality of the result. However, traditional bicubic interpolation does not account for the semantic importance of image regions. To address this limitation, we augment the standard interpolation with semantic guidance from LLMs, so that regions of high importance, such as faces, text, and objects, are preserved more effectively during enlargement.

The traditional bicubic interpolation algorithm uses the 4×4 pixel grid surrounding the target position to calculate the new pixel value, weighting each neighbor according to its distance from that position so that the interpolated surface varies smoothly. In contrast, our approach leverages LLMs to assign semantic importance scores to each pixel, reflecting its contextual significance. These importance scores are derived from the image content or a user-provided description, and they adjust the interpolation weights, guiding the resizing process to preserve critical regions.

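The bicubic interpolation formula for a pixel at position $(x,y)$ is based on calculating the weighted sum of the 4×4 neighborhood of surrounding pixels. Traditionally, the interpolation weights $W(x)$ and $W(y)$ are determined by the relative distance between the target pixel and its neighbors. These weights can be defined with the cubic convolution kernel:

$$W(t) = \begin{cases} (a+2)|t|^3 - (a+3)|t|^2 + 1, & |t| \le 1 \\ a|t|^3 - 5a|t|^2 + 8a|t| - 4a, & 1 < |t| < 2 \\ 0, & \text{otherwise} \end{cases}$$

where $a$ is commonly set to $-0.5$. Then, the new pixel value $B(X,Y)$ at position $(X,Y)$ is computed by summing the contributions of the surrounding 16 pixels:

$$B(X,Y) = \sum_{i=0}^{3}\sum_{j=0}^{3} p_{ij}\, W(X - x_i)\, W(Y - y_j)$$

where $p_{ij}$ is the value of the neighbor at $(x_i, y_j)$.

For a floating-point pixel coordinate $(x,y)$, the interpolation involves considering the 4×4 neighborhood $(x_i, y_j)$, where $i, j = 0, 1, 2, 3$, and calculating the new pixel value as follows:

$$f(x,y) = \sum_{i=0}^{3}\sum_{j=0}^{3} f(x_i, y_j)\, W(x - x_i)\, W(y - y_j)$$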

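In the augmented version, the weights $W(x)$ and $W(y)$ are modified based on the importance scores $S(x,y)$ derived from the LLM. For each neighbor $(x_i, y_j)$, we compute the adjusted interpolation weight $W'$ as:

$$W'(x_i, y_j) = W(x - x_i)\, W(y - y_j)\,\bigl(1 + \gamma\, S(x_i, y_j)\bigr)$$

where $\gamma$ is a scalar factor that controls the influence of the semantic importance score. The interpolated value is then

$$f'(x,y) = \frac{\sum_{i,j} W'(x_i, y_j)\, f(x_i, y_j)}{\sum_{i,j} W'(x_i, y_j)}$$

Dividing by the sum of the adjusted weights keeps them summing to one, so uniform importance scores leave the result unchanged. By incorporating these adjusted weights into the interpolation process, regions deemed important by the LLM receive greater priority during resizing, resulting in enlargements that better preserve semantic content.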
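A minimal Python sketch of the interpolation described above, assuming a grayscale image as a list of rows, border clamping, and $a = -0.5$. With `scores=None` it performs ordinary bicubic sampling; with scores it applies the $(1 + \gamma S)$ weight adjustment. Dividing by the weight sum is an assumption of this sketch, added so that the adjusted weights still sum to one, and the function names are hypothetical.

```python
import math

# Illustrative sketch; function names are hypothetical.


def cubic_kernel(t, a=-0.5):
    """Cubic convolution kernel W(t); a = -0.5 is a common choice."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t**3 - (a + 3) * t**2 + 1
    if t < 2:
        return a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return 0.0


def bicubic_sample(img, x, y, scores=None, gamma=0.0):
    """Interpolate a grayscale image at float position (x = column, y = row).

    If `scores` (a placeholder for the LLM's S grid) is given, each neighbor
    weight is multiplied by (1 + gamma * S) and the result is divided by the
    weight sum.
    """
    h, w = len(img), len(img[0])
    x0, y0 = math.floor(x), math.floor(y)
    num = den = 0.0
    for j in range(y0 - 1, y0 + 3):        # the 4 neighboring rows
        for i in range(x0 - 1, x0 + 3):    # the 4 neighboring columns
            r = min(max(j, 0), h - 1)      # clamp indices at the image border
            c = min(max(i, 0), w - 1)
            wt = cubic_kernel(x - i) * cubic_kernel(y - j)
            if scores is not None:
                wt *= 1.0 + gamma * scores[r][c]
            num += wt * img[r][c]
            den += wt
    return num / den


if __name__ == "__main__":
    ramp = [[4 * r + c for c in range(4)] for r in range(4)]
    print(bicubic_sample(ramp, 1.0, 1.0))   # 5.0: grid points are reproduced exactly
    print(bicubic_sample(ramp, 1.5, 1.5))   # 7.5: linear ramps are reproduced
```

Because the Keys kernel weights already sum to one, the division only matters once importance scores make the weights unequal; it then pulls the sample toward highly scored neighbors without shifting overall brightness.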

The incorporation of LLMs significantly improves the ability of bicubic interpolation to perform content-aware resizing, ensuring that important regions, such as faces, text, or other key objects, are preserved with higher fidelity. The LLM’s ability to interpret the image context or a user’s textual description enables a more adaptive resizing strategy, where the image can be enlarged in a way that prioritizes and preserves the most semantically relevant regions.

In conclusion, this approach not only enhances the visual quality of enlarged images by preserving important areas but also allows for a more flexible and context-aware image resizing process. The integration of LLMs elevates bicubic interpolation from a purely geometric operation to a more intelligent, context-sensitive method, improving overall resizing performance.

VI. LLM-Augmented LC (Loyalty-Clarity) Policy

LLMSeamCarver also uses an LLM-augmented LC (Loyalty-Clarity) policy to resize images. Traditionally, the LC policy evaluates each pixel’s contrast relative to the entire image, focusing on maintaining the most visually significant elements. By incorporating LLMs, we enhance this method with semantic understanding, allowing the system to prioritize image regions based not only on visual contrast but also on their semantic importance, as inferred from contextual descriptions or image content analysis.

i. Global Contrast Calculation with LLM Influence

The traditional LC policy computes the global contrast of a pixel by summing the distance between the pixel in question and all other pixels in the image. This measure indicates the pixel’s relative importance in terms of visual contrast. In our **LLM-augmented** approach, the global contrast is modified by considering semantic relevance, as dictated by the LLM’s analysis of the image or user-provided description.

For instance, if a user indicates that the image contains important "faces" or "text," the LLM assigns higher weights to these regions, increasing their importance in the contrast calculation. The LLM’s guidance is integrated into the contrast calculation as follows:

$$\mathrm{LC}(I_k) = \sum_{i=1}^{N} \left| I_k - I_i \right| \cdot \left(1 + \alpha\, W_r\right)$$

In this formulation:

- $I_k$ is the intensity of the pixel being analyzed, and $I_i$ ranges over the intensities of all $N$ pixels in the image.
- $W_r$ is the weight assigned by the LLM to the region $r$ containing pixel $k$, based on its semantic importance (e.g., prioritizing faces or text).
- $\alpha$ is a scaling factor that controls the influence of the LLM’s weighting on the global contrast calculation.

By adjusting $W_r$ based on the LLM-driven understanding of important regions, the algorithm prioritizes the preservation of semantically significant areas.
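The weighted global contrast can be sketched directly from its definition. This is an illustrative sketch under the assumption that pixels are given as a flat list of gray levels and that the LLM output has already been turned into one region weight $W_r$ per pixel; the names are hypothetical. It costs $O(N^2)$, which is fine for checking the formula but not for full-size images.

```python
# Illustrative O(N^2) sketch of the LLM-weighted LC contrast; names are hypothetical.

def lc_contrast(intensities, region_weight, alpha=1.0):
    """LC(I_k) = sum_i |I_k - I_i| * (1 + alpha * W_r) for every pixel k.

    intensities   : flat list of gray levels, one per pixel
    region_weight : W_r for the region containing each pixel (a placeholder
                    for the LLM's output; any non-negative numbers work)
    """
    return [
        sum(abs(ik - ii) for ii in intensities) * (1.0 + alpha * region_weight[k])
        for k, ik in enumerate(intensities)
    ]


if __name__ == "__main__":
    pixels = [0, 10, 10]
    weights = [0.0, 0.0, 1.0]   # hypothetical: the LLM marks the last pixel as a "face"
    print(lc_contrast(pixels, weights))  # [20.0, 10.0, 20.0]
```

The two pixels with intensity 10 have the same raw contrast, but the one in the LLM-weighted region ends up twice as salient.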

ii. Frequency-Based Refinement with LLM Augmentation

To further refine the contrast measure, we incorporate the frequency distribution of intensity values in the image. The traditional frequency-based contrast is enhanced with the LLM’s semantic input, which guides how regions of different intensities should be prioritized.

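In the standard approach, the global contrast for a pixel $k$ is computed from the intensity histogram as:

$$\mathrm{LC}(I_k) = \sum_{n=0}^{255} f_n \left| I_k - a_n \right|$$

where $f_n$ is the frequency of the intensity value $a_n$, $I_k$ is the intensity of pixel $k$, and $a_n$ ranges over the possible intensity levels (0 to 255 for an 8-bit image).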

In the LLM-augmented approach, the LLM provides additional weighting for specific regions, emphasizing the importance of certain intensities based on semantic input. The modified calculation is:

$$\mathrm{LC}(I_k) = \sum_{n=0}^{255} f_n \left| I_k - a_n \right| \cdot \left(1 + \alpha\, W_r\right)$$

Here, $W_r$ adjusts the weight of the frequency term for pixels in semantically significant regions, as determined by the LLM. This allows for a more refined, context-aware adjustment of the image’s contrast, so that the most relevant image areas are preserved during the resizing process.
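The practical benefit of the frequency-based form is that the histogram sum depends only on a pixel's gray level, so it can be computed once per level instead of once per pixel pair. A minimal sketch, assuming 8-bit integer gray levels in a flat list and per-pixel region weights standing in for the LLM output (names are hypothetical):

```python
# Illustrative sketch of the histogram-based, LLM-weighted LC contrast; names are hypothetical.

def lc_contrast_histogram(pixels, region_weight, alpha=1.0, levels=256):
    """Histogram form of the LLM-weighted LC contrast for integer gray levels.

    sum_n f_n * |I_k - a_n| depends only on the gray level I_k, so it is
    computed once per level (O(levels^2)) instead of once per pixel pair (O(N^2)).
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1                                   # f_n: frequency of level n
    level_contrast = [
        sum(f * abs(v - n) for n, f in enumerate(hist)) for v in range(levels)
    ]
    return [
        level_contrast[p] * (1.0 + alpha * region_weight[k])   # per-pixel LLM weighting
        for k, p in enumerate(pixels)
    ]


if __name__ == "__main__":
    print(lc_contrast_histogram([0, 10, 10], [0.0, 0.0, 1.0]))  # [20.0, 10.0, 20.0]
```

It returns the same values as the direct pairwise sum, while its cost no longer grows with the square of the pixel count.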

iii. Application in Image Resizing

By incorporating LLM-guided adjustments into the LC policy, SeamCarver becomes significantly more content-aware. The LLM allows the software to prioritize critical regions (such as human faces, text, or objects) based on user input or semantic analysis of the image. This semantic understanding helps key features remain sharp and well-defined during resizing, while less important regions are adjusted more freely.

For example, if a user specifies that "faces" should be preserved, the LLM ensures that these areas have a higher weight during the resizing process, while the surrounding less important areas can be resized with minimal distortion. This LLM-augmented LC Policy thus improves the visual integrity of resized images, making the process more adaptable to both user needs and semantic context.

Figure 1. Outlier detected by the LC algorithm

VII. LLM-Augmented Canny Edge Detection

In LLMSeamCarver, the LLM-augmented Canny edge detection algorithm enhances edge and structural feature preservation during image resizing. By incorporating Large Language Models (LLMs), the edge detection process is guided semantically to prioritize regions that are critical to image content, such as faces and text.

i. Algorithm Overview

The Canny Edge Detection algorithm detects edges by analyzing intensity gradients. The standard method detects edges using the first derivative of the image’s intensity, but the LLM-augmented approach incorporates semantic information, adjusting the edge detection for important regions identified by the LLM.

ii. Gaussian Filter Application

The image is first smoothed using a Gaussian filter to reduce noise. The filter is represented as:

$$G_\sigma(x,y) = \frac{1}{2\pi\sigma^2} \exp\left(-\frac{x^2 + y^2}{2\sigma^2}\right)$$

where $\sigma$ controls the amount of smoothing. This step prepares the image for the gradient calculation while minimizing false edges. In the LLM-augmented process, the filter may be adapted based on the semantic regions detected by the LLM, ensuring more precise edge detection in critical areas.
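The Gaussian filter above is usually applied as a small sampled kernel. The sketch below builds such a kernel in plain Python; it assumes an odd kernel size and normalizes by the sum of the samples, which makes the $1/(2\pi\sigma^2)$ constant cancel. The source does not say how the LLM adapts the filter, so only the standard filter is shown, and the function name is hypothetical.

```python
import math

# Illustrative sketch; the function name is hypothetical.


def gaussian_kernel(size, sigma):
    """Sample G_sigma(x, y) on an odd size x size grid centred at (0, 0).

    The samples are divided by their sum, so the constant 1 / (2*pi*sigma^2)
    cancels out and the filter preserves overall brightness.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    half = size // 2
    raw = [
        [math.exp(-(x * x + y * y) / (2.0 * sigma * sigma)) for x in range(-half, half + 1)]
        for y in range(-half, half + 1)
    ]
    total = sum(sum(row) for row in raw)
    return [[v / total for v in row] for row in raw]


if __name__ == "__main__":
    for row in gaussian_kernel(3, 1.0):
        print([round(v, 4) for v in row])
```

A larger `sigma` spreads the weight toward the kernel edges and suppresses more noise, at the cost of blurring fine edges.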

iii. Gradient Calculation with LLM Augmentation

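After Gaussian filtering, the gradient at each pixel $(x,y)$ is calculated using the Sobel operator:

$$g(x,y) = \sqrt{G_x^2 + G_y^2}, \qquad \theta(x,y) = \arctan\!\left(\frac{G_y}{G_x}\right)$$

where $G_x$ and $G_y$ are the derivatives in the horizontal and vertical directions, respectively, and $\theta$ is the gradient direction used by non-maximum suppression.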

In the LLM-augmented method, the gradients are modified by the semantic importance $S(x,y)$ of each region, as identified by the LLM. The semantic importance adjusts the gradient magnitude, giving higher weight to edges in critical areas:

$$g'(x,y) = g(x,y) \cdot \bigl(1 + S(x,y)\bigr)$$

Here, $S(x,y)$ is the semantic score assigned by the LLM, where higher values correspond to regions that are semantically more important (e.g., faces, text).
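The Sobel step and the semantic scaling can be sketched as follows. The sketch assumes the adjustment $g'(x,y) = g(x,y)\,(1 + S(x,y))$, which is one reading of "the semantic importance adjusts the gradient magnitude"; the score grid is a placeholder for the LLM output, only interior pixels are handled, and the function names are hypothetical.

```python
import math

# Illustrative sketch; names are hypothetical.
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel(img, r, c):
    """Return (G_x, G_y) at interior pixel (r, c); kernels applied as correlation."""
    gx = gy = 0.0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            v = img[r + dr][c + dc]
            gx += SOBEL_X[dr + 1][dc + 1] * v
            gy += SOBEL_Y[dr + 1][dc + 1] * v
    return gx, gy


def semantic_gradient(img, scores, r, c):
    """Magnitude scaled by (1 + S(x, y)) and the unchanged gradient direction."""
    gx, gy = sobel(img, r, c)
    magnitude = math.hypot(gx, gy)
    direction = math.atan2(gy, gx)   # used later by non-maximum suppression
    return magnitude * (1.0 + scores[r][c]), direction


if __name__ == "__main__":
    step = [[0, 0, 10]] * 3                    # vertical edge to the right of the centre
    importance = [[0, 0, 0], [0, 0.5, 0], [0, 0, 0]]
    print(semantic_gradient(step, importance, 1, 1))  # (60.0, 0.0)
```

Only the magnitude is scaled; keeping the direction unchanged means non-maximum suppression still thins edges along the true gradient.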

iv. Edge Enhancement

The Canny algorithm applies non-maximum suppression and hysteresis thresholding to refine the detected edges. In the LLM-augmented process, the suppression threshold $T$ is adapted based on the importance scores:

$$T'(x,y) = T \cdot \bigl(1 - \kappa\, S(x,y)\bigr), \qquad S(x,y) \in [0,1],\; 0 \le \kappa < 1$$

By incorporating the LLM, edges in semantically significant regions (e.g., faces or objects) face a lower threshold and are therefore preserved more reliably, while edges in less important areas must pass the full threshold.
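Hysteresis thresholding with an importance-dependent threshold can be sketched as below. This assumes the rule $T'(x,y) = T\,(1 - \kappa S(x,y))$ with $S \in [0,1]$ and $0 \le \kappa < 1$, applied to both the high and low thresholds; the source does not give the exact rule. The input is the gradient magnitude after non-maximum suppression (not shown), and the function name is hypothetical.

```python
from collections import deque

# Illustrative sketch; the function name and the threshold rule are assumptions.


def adaptive_hysteresis(mag, scores, low, high, kappa=0.5):
    """Double thresholding + hysteresis with importance-lowered thresholds.

    mag    : gradient magnitudes after non-maximum suppression (list of rows)
    scores : S(x, y) in [0, 1], a stand-in for the LLM importance map
    Each threshold T becomes T * (1 - kappa * S(x, y)), with 0 <= kappa < 1.
    """
    h, w = len(mag), len(mag[0])

    def passes(r, c, t):
        return mag[r][c] >= t * (1.0 - kappa * scores[r][c])

    edges = [[False] * w for _ in range(h)]
    queue = deque()
    for r in range(h):
        for c in range(w):
            if passes(r, c, high):               # strong edge pixels seed the search
                edges[r][c] = True
                queue.append((r, c))
    while queue:                                 # grow into 8-connected weak pixels
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                rr, cc = r + dr, c + dc
                if 0 <= rr < h and 0 <= cc < w and not edges[rr][cc] and passes(rr, cc, low):
                    edges[rr][cc] = True
                    queue.append((rr, cc))
    return edges


if __name__ == "__main__":
    mag = [[10, 6, 0, 6, 8]]
    print(adaptive_hysteresis(mag, [[0, 0, 0, 0, 0]], low=5, high=10))  # [[True, True, False, False, False]]
    print(adaptive_hysteresis(mag, [[0, 0, 0, 0, 1]], low=5, high=10))  # [[True, True, False, True, True]]
```

In the usage example, the right-hand edge segment (magnitudes 6 and 8) is discarded without semantic input but survives once its last pixel is marked important, because that pixel now clears the lowered high threshold and seeds the hysteresis search.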

v. Significance in Image Resizing

The LLM-augmented Canny edge detection improves the image resizing process by ensuring that the edges and features critical to the image’s content are better preserved. This is especially important when resizing images with significant content like faces or text, where traditional methods might fail to preserve important details.

Figure 3. Edges Detected by the Canny Detector (with LLM Augmentation)

VIII. LLM-Augmented Hough Transformation

LLMSeamCarver integrates the LLM-augmented Hough transformation to enhance line detection during image resizing. This method incorporates semantic guidance from Large Language Models (LLMs) to preserve key structural features, such as text or faces, during resizing. The LLM augments the traditional Hough transformation by adjusting the accumulator based on the semantic importance of regions within the image.

i. Algorithm Overview

The Hough Transformation detects lines by mapping points from the image domain to the Hough space. In the traditional approach, collinear points converge to peaks in Hough space, indicating the presence of a line. The LLM-Augmented Hough Transformation introduces semantic weighting to this process, ensuring that semantically significant lines (e.g., those in text or faces) are prioritized during line detection.
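A weighted Hough accumulator can be sketched using the standard line parametrization $\rho = x\cos\theta + y\sin\theta$. How the LLM weighting enters the accumulator is not specified in the text; this sketch assumes each edge point votes with weight $1 + S$, where $S$ is a placeholder importance score. The function name is hypothetical, and $\theta$ is sampled in whole degrees with $\rho$ rounded to the nearest integer.

```python
import math
from collections import defaultdict

# Illustrative sketch; the function name and the 1 + S vote weight are assumptions.


def weighted_hough(points, scores, theta_step=1):
    """Hough accumulator over (theta in degrees, rounded rho).

    Each edge point (x, y) votes for every line rho = x*cos(theta) + y*sin(theta)
    through it, with vote weight 1 + S (S: hypothetical LLM importance score).
    """
    acc = defaultdict(float)
    for (x, y), s in zip(points, scores):
        for deg in range(0, 180, theta_step):
            t = math.radians(deg)
            rho = round(x * math.cos(t) + y * math.sin(t))
            acc[(deg, rho)] += 1.0 + s
    return acc


if __name__ == "__main__":
    horizontal = [(0, 0), (10, 0), (20, 0), (30, 0)]   # 4 unimportant points on y = 0
    vertical = [(50, 40), (50, 140), (50, 240)]        # 3 important points on x = 50
    acc = weighted_hough(horizontal + vertical, [0.0] * 4 + [1.0] * 3)
    print(acc[(90, 0)], acc[(0, 50)])                  # 4.0 6.0
```

Without weighting, the four-point horizontal line would give the stronger peak. With weighting, the shorter but semantically important vertical line wins, which is the prioritization effect the section describes.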

IX. LLM-Augmented Absolute Energy Equation

The LLM-Augmented Absolute Energy Equation refines energy calculations by integrating semantic weights derived from Large Language Models (LLMs). This ensures seam carving preserves critical features such as text and faces.

i. Semantic Weighting

The LLM assigns a semantic score $S(x,y)$ to each pixel:

$$S(x,y) = \mathrm{LLM}(I, \text{context})$$

where $I$ is the input image and the context includes features such as object and text importance. $S(x,y)$ scales pixel importance, with higher values for semantically significant regions.

ii. Gradient Refinement

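The original energy gradient:

$$E(x,y) = \left|\frac{\partial I}{\partial x}\right| + \left|\frac{\partial I}{\partial y}\right|$$

is modified as:

$$E'(x,y) = w_x \left|\frac{\partial I}{\partial x}\right| + w_y \left|\frac{\partial I}{\partial y}\right|$$

where $w_x$ and $w_y$ are direction-specific weights computed by the LLM.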

iii. Cumulative Energy Update

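The cumulative energy function integrates $S(x,y)$ into the seam carving process, with $i$ indexing rows and $j$ indexing columns:

$$M(i,j) = E'(i,j) + \lambda\, S(i,j) + \min\bigl(M(i-1,j-1),\, M(i-1,j),\, M(i-1,j+1)\bigr)$$

Here, the cumulative energies $M(i,j)$ are adjusted by $S(i,j)$ to prioritize semantically significant pixels: a larger $S$ raises the cost of any seam passing through that pixel, so the minimum-energy seam tends to avoid it.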
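The cumulative-energy recurrence can be sketched as standard seam-carving dynamic programming followed by backtracking. This sketch assumes the importance term is added as $\lambda S(i,j)$ to the per-pixel energy. The energy and score grids are plain lists of rows (the scores are a placeholder for the LLM output), and the function name is hypothetical.

```python
# Illustrative sketch; the function name and the lam * S term are assumptions.

def find_vertical_seam(energy, scores=None, lam=1.0):
    """Return the column index of the minimum-energy vertical seam in each row.

    M(i, j) = E'(i, j) + lam * S(i, j) + min(M(i-1, j-1), M(i-1, j), M(i-1, j+1))
    """
    h, w = len(energy), len(energy[0])
    cost = [
        [energy[i][j] + (lam * scores[i][j] if scores is not None else 0.0) for j in range(w)]
        for i in range(h)
    ]
    m = [cost[0][:]]                                   # first row: M = cost
    for i in range(1, h):
        prev = m[-1]
        m.append([cost[i][j] + min(prev[max(j - 1, 0):j + 2]) for j in range(w)])
    j = min(range(w), key=lambda k: m[-1][k])          # cheapest cell in the last row
    seam = [j]
    for i in range(h - 1, 0, -1):                      # walk back up through the 3 parents
        candidates = range(max(j - 1, 0), min(j + 2, w))
        j = min(candidates, key=lambda k: m[i - 1][k])
        seam.append(j)
    return seam[::-1]


if __name__ == "__main__":
    flat = [[1.0] * 3] * 3
    print(find_vertical_seam(flat))                            # [0, 0, 0]
    print(find_vertical_seam(flat, [[1, 0, 0]] * 3, lam=5.0))  # [1, 1, 1]
```

On a uniform image the first column is as good as any other, but marking that column as important makes every seam through it more expensive, so the seam moves away.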

X. LLM-Augmented Dual Gradient Energy Equation

The proposed LLM-Augmented Dual Gradient Energy Equation utilizes Large Language Models (LLMs) to refine edge detection by dynamically adjusting numerical differentiation and gradient computation. LLMs provide context-aware corrections for each computational step.

i. Numerical Differentiation with LLM Adjustments

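Taylor expansions are dynamically adjusted with LLM corrections. The forward expansion is expressed as:

$$f(x+h) = f(x) + h f'(x) + \frac{h^2}{2} f''(x) + \frac{h^3}{6} f'''(x) + \epsilon_{\mathrm{LLM}}^{+}$$

where $\epsilon_{\mathrm{LLM}}^{+}$ includes context-aware corrections predicted by the LLM. Similarly, for the backward expansion:

$$f(x-h) = f(x) - h f'(x) + \frac{h^2}{2} f''(x) - \frac{h^3}{6} f'''(x) + \epsilon_{\mathrm{LLM}}^{-}$$

LLMs adapt $\epsilon_{\mathrm{LLM}}^{\pm}$ dynamically based on local image gradients and refine higher-order terms to reduce numerical error.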
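Subtracting the backward expansion from the forward one shows why the central difference used for the gradient approximation is second-order accurate. The derivation below writes both expansions up to the third-order term, denotes the LLM correction terms $\epsilon_{\mathrm{LLM}}^{+}$ and $\epsilon_{\mathrm{LLM}}^{-}$, and sets $h = 1$ (one pixel) in the last line. Reading the leftover terms as what an LLM-predicted correction is meant to absorb is our interpretation, not a statement from the method description.

$$\begin{aligned}
f(x+h) - f(x-h) &= 2h\, f'(x) + \frac{h^3}{3} f'''(x) + \left(\epsilon_{\mathrm{LLM}}^{+} - \epsilon_{\mathrm{LLM}}^{-}\right) \\
f'(x) &= \frac{f(x+h) - f(x-h)}{2h} - \frac{h^2}{6} f'''(x) - \frac{\epsilon_{\mathrm{LLM}}^{+} - \epsilon_{\mathrm{LLM}}^{-}}{2h} \\
f'(x)\big|_{h=1} &= \frac{f(x+1) - f(x-1)}{2} - \frac{1}{6} f'''(x) - \frac{\epsilon_{\mathrm{LLM}}^{+} - \epsilon_{\mathrm{LLM}}^{-}}{2}
\end{aligned}$$

The $f(x)$ and $f''(x)$ terms cancel, so the plain central difference has an $O(h^2)$ truncation error, compared with $O(h)$ for a one-sided difference.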

ii. Gradient Approximation with Adaptive Refinements

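Gradient approximations in the $x$- and $y$-directions incorporate corrections from LLMs:

$$\frac{\partial I}{\partial x} \approx \frac{I(x+1,y) - I(x-1,y)}{2} + \delta_x, \qquad \frac{\partial I}{\partial y} \approx \frac{I(x,y+1) - I(x,y-1)}{2} + \delta_y$$

Here, $\delta_x$ and $\delta_y$ are LLM-predicted corrections based on local edge strength and texture complexity. The LLM also adapts to handle regions with high-gradient variations.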

iii. Energy Calculation with LLM Refinements

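The energy of a pixel is computed using the LLM-enhanced gradients for each RGB channel. For the $x$-direction:

$$\Delta_x^2(x,y) = R_x(x,y)^2 + G_x(x,y)^2 + B_x(x,y)^2$$

and similarly for the $y$-direction:

$$\Delta_y^2(x,y) = R_y(x,y)^2 + G_y(x,y)^2 + B_y(x,y)^2$$

where $R_x, G_x, B_x$ and $R_y, G_y, B_y$ are the corrected central differences of the red, green, and blue channels in each direction. The dual gradient energy of the pixel is then $e(x,y) = \sqrt{\Delta_x^2(x,y) + \Delta_y^2(x,y)}$. LLM contributions include predicting $\delta_x$ and $\delta_y$ to improve accuracy and dynamically adjusting channel weights for better feature preservation.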
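A minimal Python sketch of the dual gradient energy at one interior pixel. The corrections `delta_x` and `delta_y` and the per-channel `weights` stand in for the LLM-predicted quantities and are hypothetical inputs. Applying the same correction to every channel is an assumption of this sketch. With the defaults, the function reduces to the plain dual gradient energy using halved central differences.

```python
import math

# Illustrative sketch; the function name and its correction/weight inputs are hypothetical.


def dual_gradient_energy(rgb, r, c, delta_x=0.0, delta_y=0.0, weights=(1.0, 1.0, 1.0)):
    """e(x, y) = sqrt(Dx^2 + Dy^2) at an interior pixel (row r, column c).

    rgb[r][c] is an (R, G, B) tuple. delta_x / delta_y stand in for the
    LLM-predicted corrections and `weights` for per-channel weights.
    """
    left, right = rgb[r][c - 1], rgb[r][c + 1]
    up, down = rgb[r - 1][c], rgb[r + 1][c]
    # Weighted sum over channels of the squared, corrected central differences.
    dx2 = sum(wt * ((b - a) / 2.0 + delta_x) ** 2 for wt, a, b in zip(weights, left, right))
    dy2 = sum(wt * ((b - a) / 2.0 + delta_y) ** 2 for wt, a, b in zip(weights, up, down))
    return math.sqrt(dx2 + dy2)


if __name__ == "__main__":
    black = (0, 0, 0)
    image = [
        [black, black, black],
        [black, (9, 9, 9), (2, 4, 4)],
        [black, black, black],
    ]
    print(dual_gradient_energy(image, 1, 1))  # 3.0
```

The centre pixel's own color does not enter the result; only its four neighbors do, which is why the dual gradient energy responds to local structure rather than to absolute intensity.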

XI. Result Evaluation

To evaluate the effectiveness of the image resizing methods in LLMSeamCarver, we conducted an experiment using Convolutional Neural Networks (CNNs) for image classification. The goal was to compare how different resizing techniques impact classification accuracy. Additionally, we explored the role of LLM-augmented approaches in enhancing image feature preservation and improving classification outcomes after resizing.

i. Experimental Setup

In the experiment, images were resized using various methods implemented in LLMSeamCarver, including traditional methods and LLM-augmented approaches. The resized images were then fed into a CNN model to assess how well each resizing method preserved image features essential for accurate classification. The CIFAR-10 dataset, a well-known benchmark in image classification, was used for this experiment.

ii. Methodology

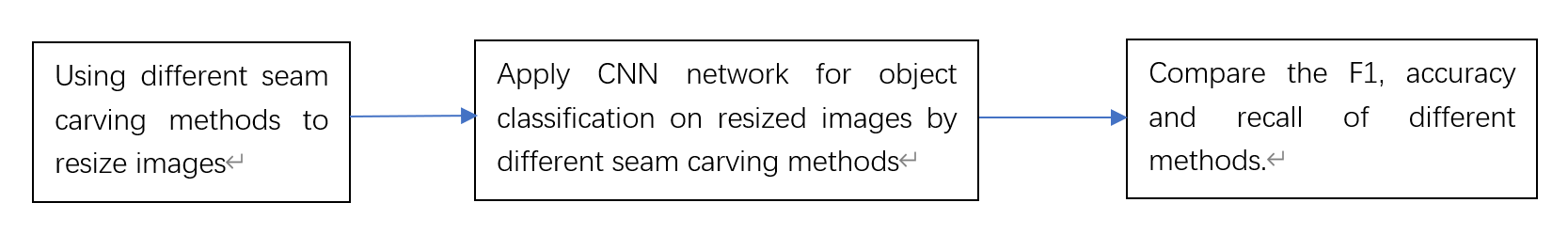

The workflow of the experiment is as follows:

Figure 7. Workflow of the experiment, including LLM-augmented methods for image resizing

The CNN model was first trained on the original CIFAR-10 images, and subsequently, the same model was used to classify images that had been resized using different methods in LLMSeamCarver. This allowed us to evaluate how each resizing method influenced the model’s ability to recognize key features. Additionally, LLM-augmented methods were used to improve the preservation of important image details during resizing, enhancing classification accuracy.

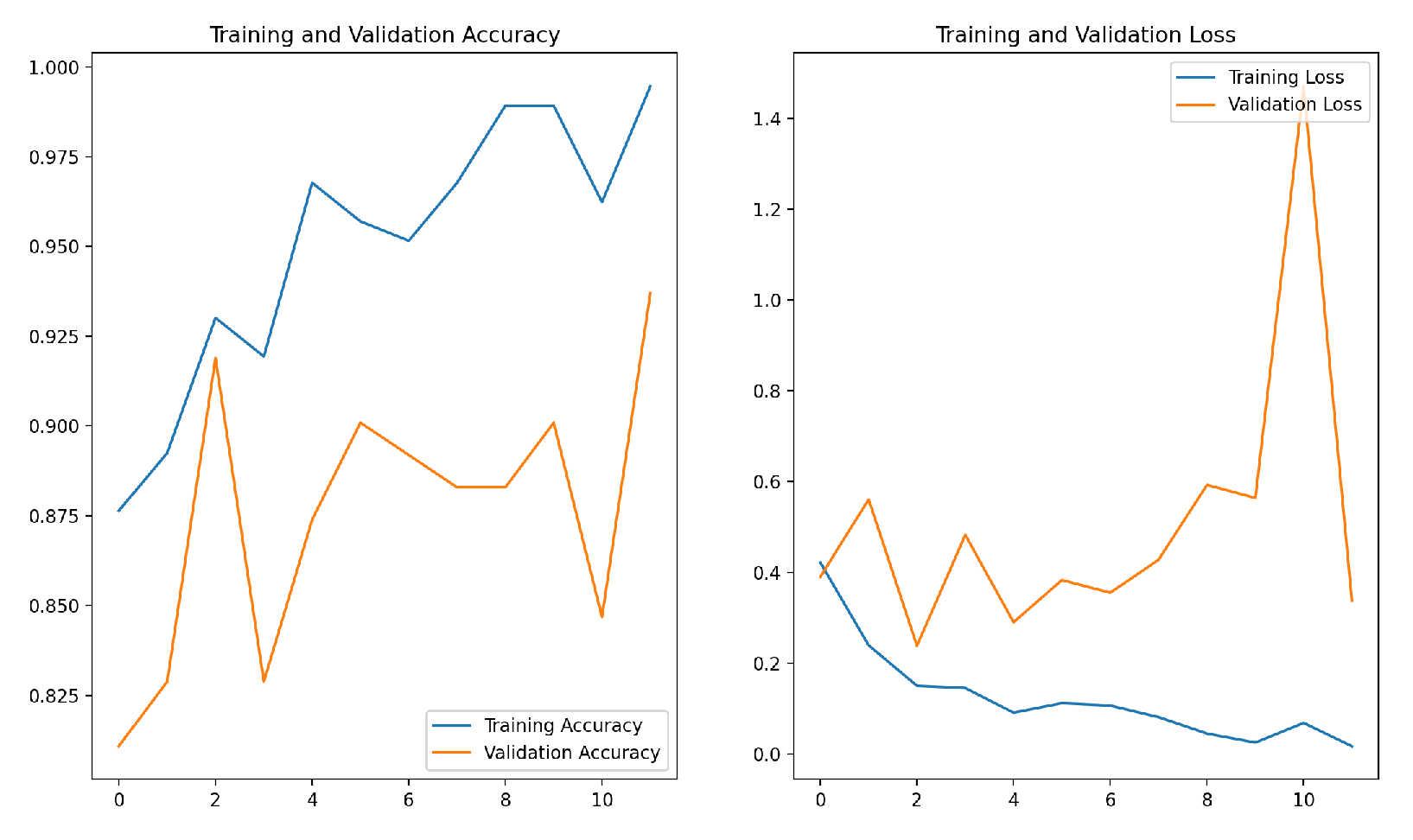

iii. Results and Discussion

The results, including accuracy metrics and error rates for each sub-experiment, are provided below. The experiment revealed that LLM-augmented resizing methods led to superior performance in image classification, particularly in cases where maintaining fine image details was critical.

Figure 8. Error and accuracy of a sub-experiment showing the improvements from LLM-augmented methods

Illustrative examples of images processed by different methods, including LLM-augmented techniques, are shown below, highlighting the visual differences in the resized images and how LLM-augmented methods contribute to better feature preservation.

Figure 9. Image processed by the Bicubic Method

Figure 10. Image processed by the Absolute Energy Method

Figure 11. Image processed by the Canny Edge Detection Method

Figure 12. Image processed by the Dual Gradient Energy Method

Figure 13. Image processed by the Hough Transformation Method

Figure 14. Image processed by the LC Method

iv. Conclusion

This experiment highlights the significant improvements brought by LLM-augmented methods in image resizing. By integrating LLM-augmented techniques, LLMSeamCarver can preserve finer image details, resulting in improved performance for image classification tasks. These findings emphasize the importance of selecting the right resizing method in applications where image recognition accuracy is crucial.

References

1. Li, K.; Liu, L.; Chen, J.; Yu, D.; Zhou, X.; Li, M.; Wang, C.; Li, Z. Research on reinforcement learning based warehouse robot navigation algorithm in complex warehouse layout. arXiv 2024, arXiv:2411.06128.

2. Hu, Z.; Lei, F.; Fan, Y.; Ke, Z.; Shi, G.; Li, Z. Research on Financial Multi-Asset Portfolio Risk Prediction Model Based on Convolutional Neural Networks and Image Processing. arXiv 2024, arXiv:2412.03618.

3. Ke, Z.; Yin, Y. Tail Risk Alert Based on Conditional Autoregressive VaR by Regression Quantiles and Machine Learning Algorithms. arXiv 2024.

4. Xiang, A.; Qi, Z.; Wang, H.; Yang, Q.; Ma, D. A Multimodal Fusion Network For Student Emotion Recognition Based on Transformer and Tensor Product. arXiv 2024, arXiv:2403.08511 [cs.CV].

5. Wu, C.; Yu, Z.; Song, D. Window views psychological effects on indoor thermal perception: A comparison experiment based on virtual reality environments. E3S Web of Conferences 2024, 546, 02003.

6. Ke, Z.; Xu, J.; Zhang, Z.; Cheng, Y.; Wu, W. A Consolidated Volatility Prediction with Back Propagation Neural Network and Genetic Algorithm. arXiv 2024, arXiv:2412.07223.

7. Ke, Z.; Yin, Y. Tail Risk Alert Based on Conditional Autoregressive VaR by Regression Quantiles and Machine Learning Algorithms. arXiv 2024, arXiv:2412.06193.

8. Guo, F.; Mo, H.; Wu, J.; Pan, L.; Zhou, H.; Zhang, Z.; Li, L.; Huang, F. A hybrid stacking model for enhanced short-term load forecasting. Electronics 2024, 13, 2719.

9. Zhang, Z.; Li, P.; Al Hammadi, A.Y.; Guo, F.; Damiani, E.; Yeun, C.Y. Reputation-based federated learning defense to mitigate threats in EEG signal classification. In Proceedings of the 2024 16th International Conference on Computer and Automation Engineering (ICCAE); IEEE, 2024; pp. 173–180.

10. Bu, X.; Wu, Y.; Gao, Z.; Jia, Y. Deep convolutional network with locality and sparsity constraints for texture classification. Pattern Recognition 2019, 91, 34–46.

11. Dan, H.C.; Lu, B.; Li, M. Evaluation of asphalt pavement texture using multiview stereo reconstruction based on deep learning. Construction and Building Materials 2024, 412, 134837.

12. Xiang, J.; Chen, J.; Liu, Y. Hybrid Multiscale Search for Dynamic Planning of Multi-Agent Drone Traffic. Journal of Guidance, Control, and Dynamics 2023, 46, 1963–1974.

13. Liu, D.; Lai, Z.; Wang, Y.; Wu, J.; Yu, Y.; Wan, Z.; Lengerich, B.; Wu, Y.N. Efficient Large Foundation Model Inference: A Perspective From Model and System Co-Design. arXiv 2024, arXiv:2409.01990 [cs.DC].

14. Liu, D.; Pister, K. LLMEasyQuant – An Easy to Use Toolkit for LLM Quantization. arXiv 2024, arXiv:2406.19657 [cs.LG].

15. Cao, H.; Zhang, Z.; Li, X.; Wu, C.; Zhang, H.; Zhang, W. Mitigating Knowledge Conflicts in Language Model-Driven Question Answering. arXiv 2024, arXiv:2411.11344 [cs.CL].

16. Lai, Z.; Wu, J.; Chen, S.; Zhou, Y.; Hovakimyan, N. Residual-based Language Models are Free Boosters for Biomedical Imaging. arXiv 2024, arXiv:2403.17343 [cs.CV].

17. Xin, W.; Wang, K.; Fu, Z.; Zhou, L. Let Community Rules Be Reflected in Online Content Moderation. arXiv 2024, arXiv:2408.12035 [cs.SI].

18. Li, K.; Wang, J.; Wu, X.; Peng, X.; Chang, R.; Deng, X.; Kang, Y.; Yang, Y.; Ni, F.; Hong, B. Optimizing automated picking systems in warehouse robots using machine learning. arXiv 2024, arXiv:2408.16633.

19. Li, S.; Sun, K.; Lai, Z.; Wu, X.; Qiu, F.; Xie, H.; Miyata, K.; Li, H. ECNet: Effective Controllable Text-to-Image Diffusion Models. arXiv 2024, arXiv:2403.18417 [cs.CV].

20. Guo, Y.; Chen, S.; Zhan, R.; Wang, W.; Zhang, J. LMSD-YOLO: A lightweight YOLO algorithm for multi-scale SAR ship detection. Remote Sensing 2022, 14, 4801.

21. Dan, H.C.; Huang, Z.; Lu, B.; Li, M. Image-driven prediction system: Automatic extraction of aggregate gradation of pavement core samples integrating deep learning and interactive image processing framework. Construction and Building Materials 2024, 453, 139056.

22. Peng, J.; Bu, X.; Sun, M.; Zhang, Z.; Tan, T.; Yan, J. Large-scale object detection in the wild from imbalanced multi-labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020; pp. 9709–9718.

23. Fang, X.; Si, S.; Sun, G.; Sheng, Q.Z.; Wu, W.; Wang, K.; Lv, H. Selecting workers wisely for crowdsourcing when copiers and domain experts co-exist. Future Internet 2022, 14, 37.

24. Wu, W. Alphanetv4: Alpha Mining Model. arXiv 2024, arXiv:2411.04409.

25. Hu, Y.; Cao, H.; Yang, Z.; Huang, Y. Improving text-image matching with adversarial learning and circle loss for multi-modal steganography. In Proceedings of the International Workshop on Digital Watermarking; Springer, 2020; pp. 41–52.

26. Cheng, Y.; Yang, Q.; Wang, L.; Xiang, A.; Zhang, J. Research on Credit Risk Early Warning Model of Commercial Banks Based on Neural Network Algorithm. arXiv 2024, arXiv:2405.10762 [q-fin.RM].

27. Liu, D.; Waleffe, R.; Jiang, M.; Venkataraman, S. GraphSnapShot: Graph Machine Learning Acceleration with Fast Storage and Retrieval. arXiv 2024, arXiv:2406.17918 [cs.LG].

28. Liu, D.; Yu, Y. MT2ST: Adaptive Multi-Task to Single-Task Learning. arXiv 2024, arXiv:2406.18038 [cs.LG].

29. Kiess, H. Improved Edge Preservation in Seam Carving for Image Resizing. Computer Graphics Forum 2014, 33, 421–429.

30. Zhang, W.; Wu, C.; Li, X. Comparison of Image Resizing Techniques: A Case Study of Seam Carving vs. Traditional Resizing Methods. Journal of Visual Communication and Image Representation 2015, 29, 149–158.

31. Frankovich, R. Enhanced Seam Carving: Energy Gradient Functionals and Resizing Control. In Proceedings of the IEEE International Conference on Image Processing (ICIP), 2011; pp. 2157–2160.

32. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Advances in Neural Information Processing Systems 2017, 30.

33. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of NAACL-HLT, 2019.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).