Submitted:

25 July 2024

Posted:

26 July 2024

Abstract

Keywords:

I. Introduction

A. Background

B. Research Problem

- How to build a large-scale annotated dataset containing rich vehicle types and complex traffic scenes to train and validate classification models?

- How to design a deep learning model to effectively extract the global and local features of a vehicle and improve its adaptability to occlusion and complex backgrounds?

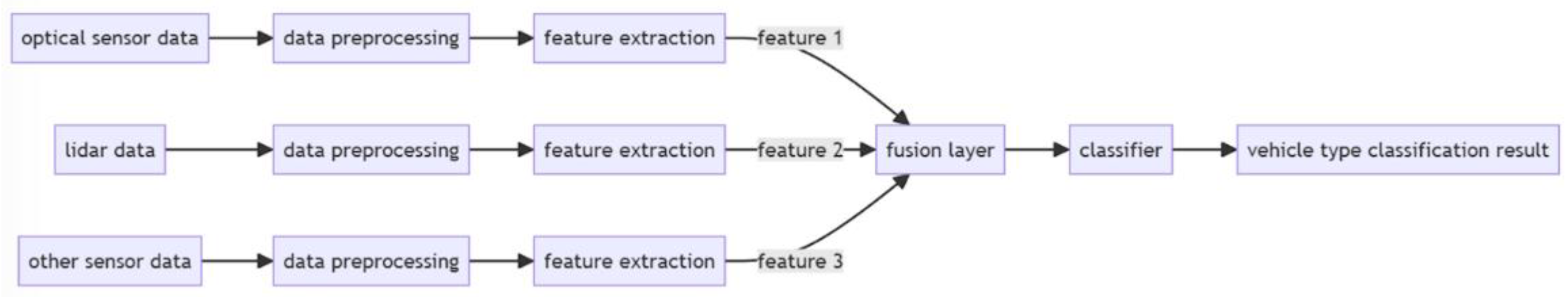

- How to fuse data from different sensors, such as cameras and LiDAR, to enhance the consistency and reliability of classification results?

- How to design an effective ensemble learning method to optimize and fuse the outputs of multiple classifiers to achieve the best classification performance?

- Dataset construction: Collect and label large-scale vehicle images and LiDAR data, covering different vehicle types, perspectives, and traffic scenarios, to improve the generalization capability of our model.

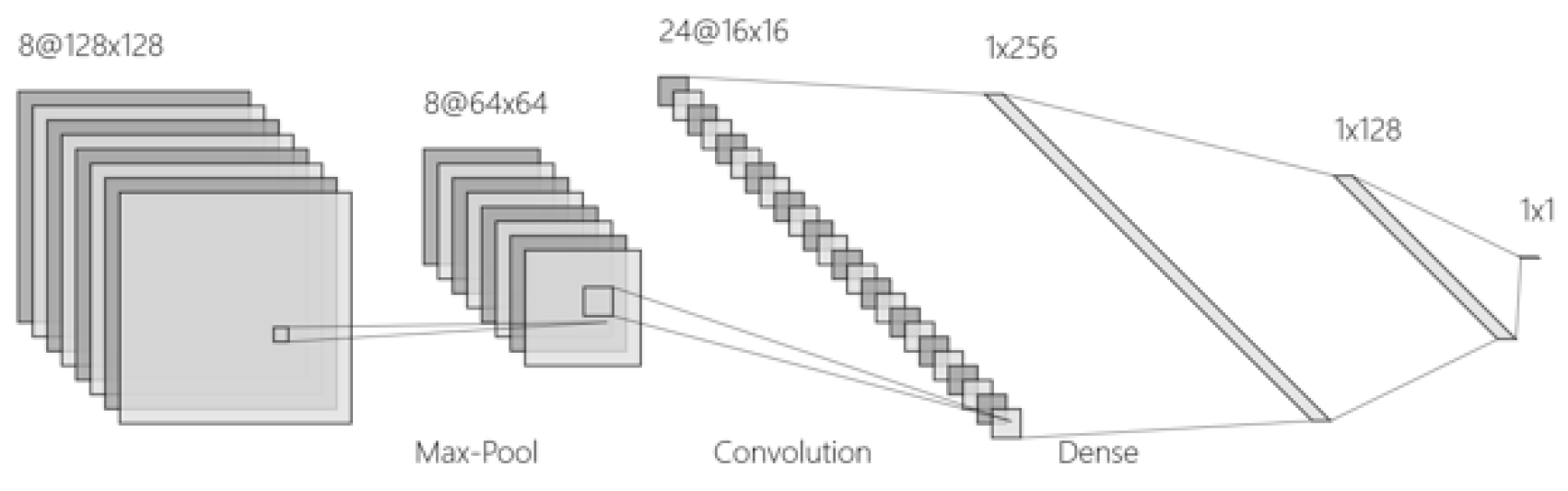

- Feature extraction: Design two convolutional neural network (CNN) models based on different architectures to extract the global and local features of the vehicle, respectively, improving the ability to identify vehicles despite changes in appearance.

- Multi-sensor fusion: Use LiDAR sensors to capture the spatial structure of the vehicle and combine it with the output of the CNN models to improve classification performance under occlusion and in complex scenes.

- Ensemble learning: Use a gradient boosting decision tree (GBDT) as the ensemble learning algorithm to fuse the classification results of the CNN models and the LiDAR sensors and optimize the final classification decision.
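The fusion and ensemble steps above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a binary truck-versus-car task, a fused feature vector made of one CNN probability plus two LiDAR box dimensions, and a small hand-written booster of decision stumps standing in for a full GBDT library. The helper names (`lidar_box_dims`, `fit_gbdt`, `predict_proba`) and all data values are hypothetical.

```python
import math

def lidar_box_dims(points):
    """Length, width, height of the axis-aligned box around (x, y, z) points."""
    xs, ys, zs = zip(*points)
    return [max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_stump(X, residuals):
    """Single-split regression stump with the least squared error on the residuals."""
    best = None
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2.0
            left = [r for row, r in zip(X, residuals) if row[j] <= thr]
            right = [r for row, r in zip(X, residuals) if row[j] > thr]
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, j, thr, lm, rm)
    return best[1:]

def fit_gbdt(X, y, n_rounds=20, lr=0.5):
    """Boost stumps on the negative gradient of the log-loss, y - sigmoid(score)."""
    scores, stumps = [0.0] * len(X), []
    for _ in range(n_rounds):
        residuals = [yi - sigmoid(s) for yi, s in zip(y, scores)]
        j, thr, lm, rm = fit_stump(X, residuals)
        stumps.append((j, thr, lm, rm))
        scores = [s + lr * (lm if row[j] <= thr else rm) for row, s in zip(X, scores)]
    return stumps

def predict_proba(stumps, row, lr=0.5):
    """Probability of the positive class for one fused feature vector."""
    score = sum(lr * (lm if row[j] <= thr else rm) for j, thr, lm, rm in stumps)
    return sigmoid(score)

# Hypothetical fused rows: [CNN probability of "truck", LiDAR length (m), LiDAR height (m)]
X = [
    [0.90, 12.0, 3.8],
    [0.70, 9.5, 3.5],
    [0.40, 10.2, 3.9],  # CNN unsure (e.g. occlusion); LiDAR still shows a long, tall vehicle
    [0.20, 4.3, 1.5],
    [0.10, 4.6, 1.4],
    [0.55, 4.1, 1.6],   # CNN misled by background; LiDAR dimensions indicate a car
]
y = [1, 1, 1, 0, 0, 0]  # 1 = truck, 0 = car

stumps = fit_gbdt(X, y)
predictions = [int(predict_proba(stumps, row) > 0.5) for row in X]
```

In this toy data the CNN probability alone does not separate the two classes, while the booster can split on the LiDAR dimensions, which is the kind of complementarity the fusion step is meant to exploit. A multi-class setting would need one score per class, which this sketch omits.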

C. Innovation Points

- A vehicle classification method combining deep learning and multi-sensor data is proposed, which effectively improves the accuracy and robustness of classification.

- A model targeting both the global and local characteristics of the vehicle is designed, improving the identification of different vehicle types.

- The fusion strategy of LiDAR sensors and deep learning models is fine-tuned to optimize the system's adaptability to complex scenes.

- Ensemble learning technology is applied to further improve classification performance by optimizing the output of multiple classifiers.

II. Related Works

III. Method

A. DeepVehiSense

B. CohereSenseAggregator

C. IntelliFeatureExtractor

- Multi-scale feature fusion

- Attention mechanism
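The two components listed above can be sketched as follows. The text does not specify either design, so this example makes assumptions: multi-scale fusion is shown as global average pooling of each scale's feature map followed by concatenation, and attention is shown as scaled dot-product self-attention with identity query, key, and value projections. All function names and values are hypothetical.

```python
import math

def global_average_pool(feature_map):
    """Average a feature map given as a list of per-position channel vectors."""
    n = len(feature_map)
    return [sum(pos[c] for pos in feature_map) / n for c in range(len(feature_map[0]))]

def fuse_multi_scale(feature_maps):
    """Pool each scale's feature map and concatenate the pooled vectors."""
    fused = []
    for fmap in feature_maps:
        fused.extend(global_average_pool(fmap))
    return fused

def softmax(values):
    m = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(features):
    """Scaled dot-product self-attention with identity projections (an assumption)."""
    d = len(features[0])
    weights = []
    for q in features:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in features]
        weights.append(softmax(scores))
    attended = [
        [sum(w * v[c] for w, v in zip(row, features)) for c in range(d)]
        for row in weights
    ]
    return attended, weights

# Two hypothetical scales: a fine map with 4 positions and a coarse map with 1 position.
fine = [[1.0, 0.0], [3.0, 2.0], [1.0, 0.0], [3.0, 2.0]]
coarse = [[4.0, 6.0]]
fused = fuse_multi_scale([fine, coarse])

# Three hypothetical region descriptors; each row of `weights` sums to 1.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended, weights = self_attention(features)
```

Each row of `weights` indicates how much every region contributes to the updated descriptor of one region, which is one way to read the claim that the module learns which features matter most.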

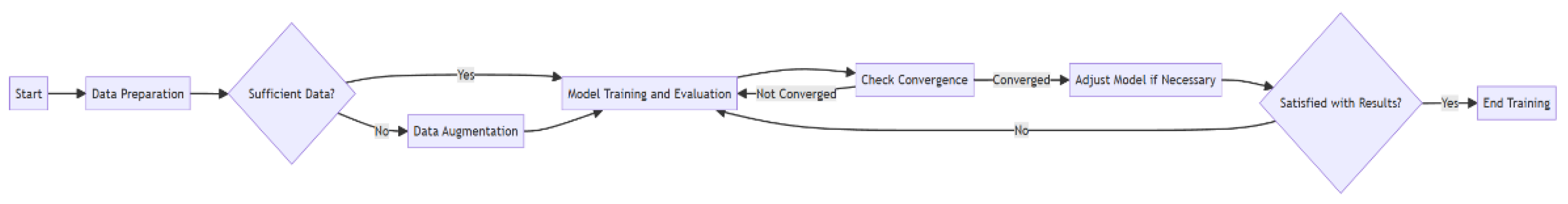

D. OptiTrain Dynamics

- Multi-scale training: The network is trained on images of different scales to improve the recognition of vehicles of different sizes. Multi-scale training enables the model to better understand and generalize the characteristics of vehicles of different sizes, making it more flexible and accurate in practical applications [23].

- End-to-end attention mechanism: The attention module is integrated into the network and trained end to end with the other layers, so that it learns directly from the data which features are most important for the classification task, thereby improving the efficiency and accuracy of feature extraction.
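Multi-scale training as described above can be sketched in a few lines. The example assumes that one input size is drawn at random per batch and that images are resized by nearest-neighbour sampling; the scale set, the batch size, and the function names are hypothetical, since the text does not state them.

```python
import random

def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of a 2D grid of pixel values."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]

def multi_scale_batches(images, scales, batch_size, seed=0):
    """Yield (size, batch) pairs; every image in a batch shares one random scale."""
    rng = random.Random(seed)
    for start in range(0, len(images), batch_size):
        size = rng.choice(scales)
        batch = images[start:start + batch_size]
        yield size, [resize_nearest(img, size, size) for img in batch]

# Four hypothetical 4x4 grayscale images.
images = [[[i + r + c for c in range(4)] for r in range(4)] for i in range(4)]
batches = list(multi_scale_batches(images, scales=[2, 4, 8], batch_size=2))
```

Keeping one scale per batch lets all images in the batch share a tensor shape, while the network still sees vehicles at several apparent sizes over the course of training.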

E. Model Fusion with Gradient Boosting

IV. Experiments

A. Dataset Description

B. Model Configuration

- Optimizer: Adam with a learning rate of 0.001.

- Loss function: Categorical cross-entropy loss.

- Evaluation metrics: Accuracy, F1 score, recall, and precision.
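The configuration above can be made concrete with a short sketch of the three ingredients: one Adam update, the categorical cross-entropy loss, and the four evaluation metrics. Only the learning rate of 0.001 comes from the text. The remaining Adam hyperparameters are the commonly used defaults, macro averaging of precision, recall, and F1 is an assumption, and the labels are made up for illustration.

```python
import math

def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)  # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)  # bias-corrected second moment
    return param - lr * m_hat / (math.sqrt(v_hat) + eps), m, v

def categorical_cross_entropy(probs, labels, eps=1e-12):
    """Mean negative log-probability assigned to the true class."""
    return -sum(math.log(max(p[y], eps)) for p, y in zip(probs, labels)) / len(labels)

def classification_metrics(y_true, y_pred, n_classes):
    """Accuracy plus macro-averaged precision, recall, and F1 (averaging is assumed)."""
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    precisions, recalls, f1s = [], [], []
    for c in range(n_classes):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n_classes,
        "recall": sum(recalls) / n_classes,
        "f1": sum(f1s) / n_classes,
    }

# Hypothetical labels for three vehicle classes (0, 1, 2).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 2]
metrics = classification_metrics(y_true, y_pred, n_classes=3)

loss = categorical_cross_entropy([[0.5, 0.5], [0.25, 0.75]], [0, 1])
new_param, m, v = adam_step(param=1.0, grad=2.0, m=0.0, v=0.0, t=1)
```

On the first step the bias-corrected Adam update has magnitude close to the learning rate regardless of the gradient scale, which is why `new_param` moves by roughly 0.001 here.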

C. Experimental Results

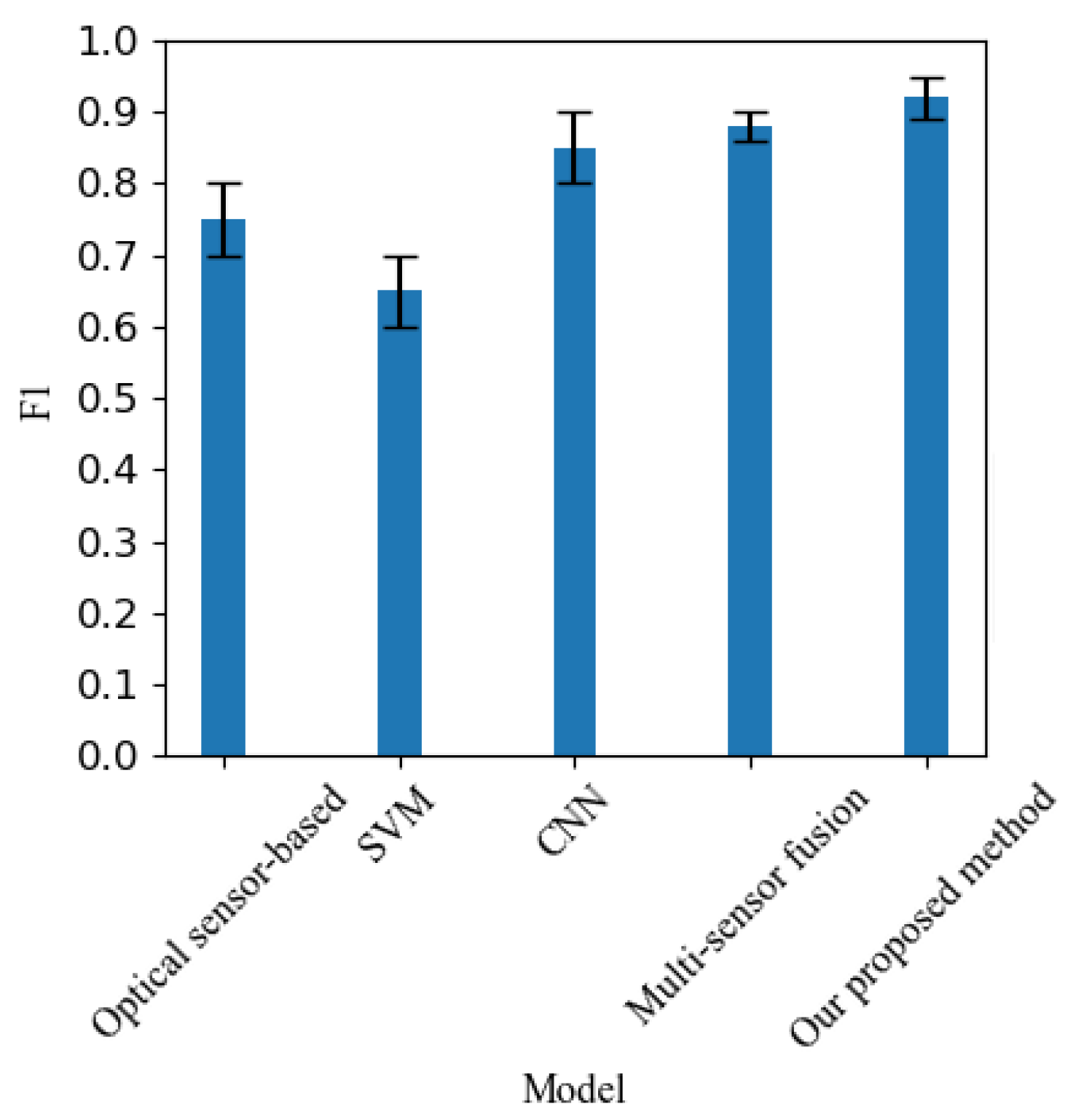

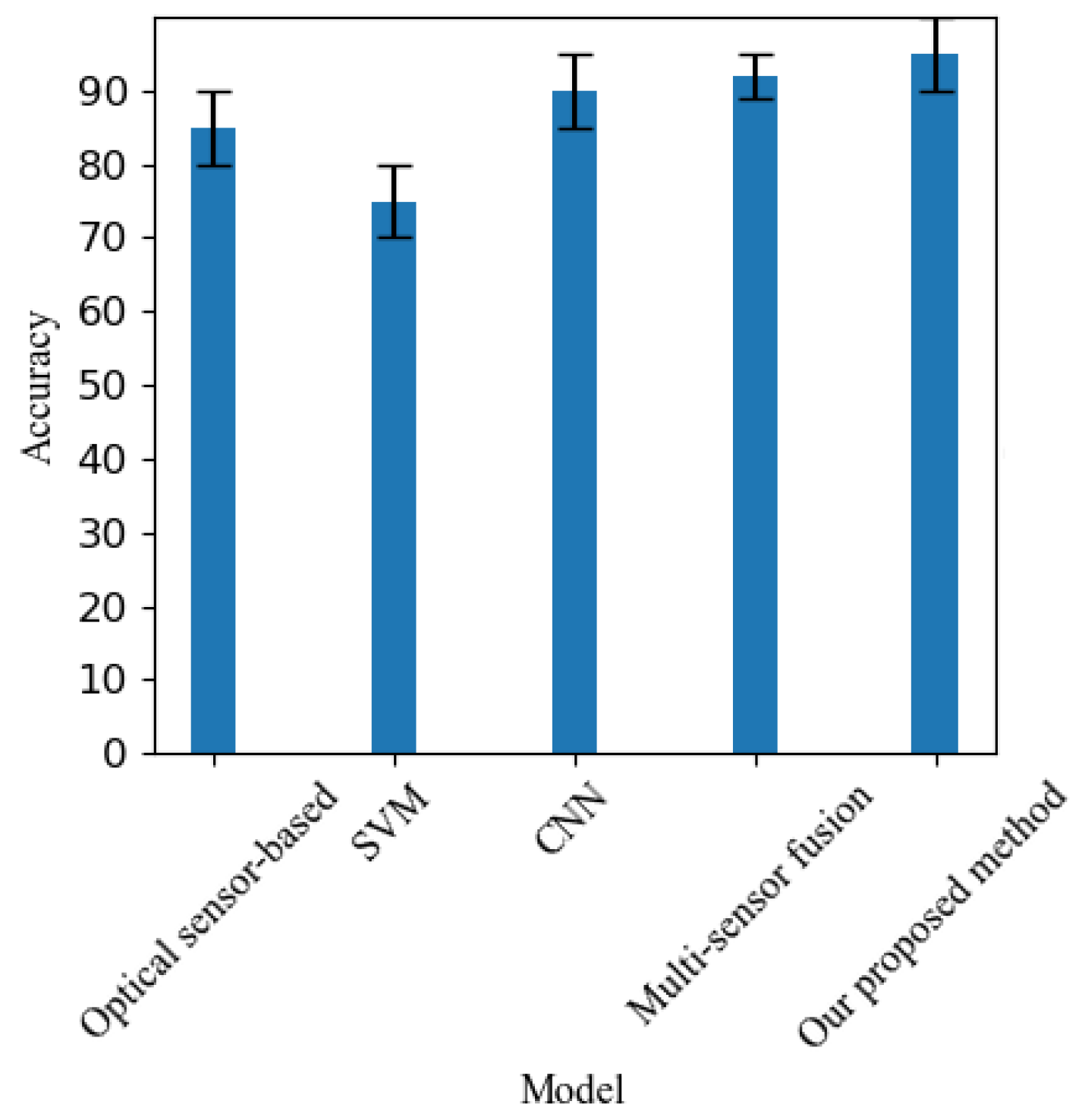

| Method | Accuracy (%), mean | Accuracy (%), min-max | F1 score, mean | F1 score, min-max | Recall (%), mean | Recall (%), min-max |
| --- | --- | --- | --- | --- | --- | --- |
| Optical sensor-based methods | 85 | 80-90 | 0.75 | 0.70-0.80 | 80 | 75-85 |
| Traditional machine learning methods | 75 | 70-80 | 0.65 | 0.60-0.70 | 70 | 65-75 |
| Deep learning method (CNN) | 90 | 85-95 | 0.85 | 0.80-0.90 | 85 | 80-90 |
| Multi-sensor fusion method | 92 | 85-95 | 0.88 | 0.85-0.90 | 90 | 85-95 |
| Our proposed method | 95 | 90-100 | 0.92 | 0.90-0.95 | 95 | 90-100 |

V. Discussion

VI. Conclusions

A. Key Findings

- DeepVehiSense architecture: By combining an improved VGG-16 architecture with a self-attention module, DeepVehiSense effectively extracts the key features of vehicles and improves classification accuracy.

- Multi-scale feature fusion: This technique significantly improves the recognition of vehicles of different sizes and under occlusion.

- Attention mechanism: The self-attention module lets the model concentrate on the image regions that are most critical for classification, further improving classification accuracy.

- Experimental results: On the dataset provided by VINCI Autoroutes, DeepVehiSense significantly outperforms existing systems and other methods.

B. Effectiveness of Method

C. Impact on Future Work

References

- Zhao, P.; Qi, C.; Liu, D. Resource-constrained hierarchical task network planning under uncontrollable durations for emergency decision-making. Journal of Intelligent & Fuzzy Systems 2017, 33, 3819–3834.

- Lin, Z.; Wang, Z.; Zhu, Y.; Li, Z.; Qin, H. Text sentiment detection and classification based on integrated learning algorithm. Applied Science and Engineering Journal for Advanced Research 2024, 3, 27–33.

- Li, M.; Tong, L.; Zhou, Y.; Li, Y.; Yang, X. Scattering from fractal surfaces based on decomposition and reconstruction theorem. IEEE Transactions on Geoscience and Remote Sensing 2021, 60, 1–12.

- Lyu, W.; Zheng, S.; Ling, H.; Chen, C. Backdoor attacks against transformers with attention enhancement. In Proceedings of the ICLR 2023 Workshop on Backdoor Attacks and Defenses in Machine Learning; 2023.

- Yabo, A.; Arroyo, S.I.; Safar, F.G.; Oliva, D. Vehicle classification and speed estimation using computer vision techniques. In Proceedings of the XXV Congreso Argentino de Control Automático (AADECA 2016) (Buenos Aires, 2016); 2016.

- Jahan, N.; Islam, S.; Foysal, M.F.A. Real-time vehicle classification using cnn. In Proceedings of the 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT); IEEE, 2020; pp. 1–6.

- Chen, J.; Xu, W.; Wang, J. Prediction of car purchase amount based on genetic algorithm optimised bp neural network regression algorithm. Preprints, June 2024.

- Asvadi, A.; Garrote, L.; Premebida, C.; Peixoto, P.; Nunes, U.J. Multimodal vehicle detection: fusing 3d-lidar and color camera data. Pattern Recognition Letters 2018, 115, 20–29.

- Feng, D.; Haase-Schutz, C.; Rosenbaum, L.; Hertlein, H.; Glaeser, C.; Timm, F.; Wiesbeck, W.; Dietmayer, K. Deep multi-modal object detection and semantic segmentation for autonomous driving: Datasets, methods, and challenges. IEEE Transactions on Intelligent Transportation Systems 2020, 22, 1341–1360.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition; 2015; pp. 1–9.

- Lin, Z.; Wang, C.; Li, Z.; Wang, Z.; Liu, X.; Zhu, Y. Neural radiance fields convert 2d to 3d texture. Applied Science and Biotechnology Journal for Advanced Research 2024, 3, 40–44.

- Tan, C.; Wang, C.; Lin, Z.; He, S.; Li, C. Editable neural radiance fields convert 2d to 3d furniture texture. International Journal of Engineering and Management Research 2024, 14, 62–65.

- Wang, J.; Zheng, H.; Huang, Y.; Ding, X. Vehicle type recognition in surveillance images from labeled web-nature data using deep transfer learning. IEEE Transactions on Intelligent Transportation Systems 2017, 19, 2913–2922.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proceedings of the IEEE 2020, 109, 43–76.

- Haghighat, A.K.; Ravichandra-Mouli, V.; Chakraborty, P.; Esfandiari, Y.; Arabi, S.; Sharma, A. Applications of deep learning in intelligent transportation systems. Journal of Big Data Analytics in Transportation 2020, 2, 115–145.

- Engel, E.; Engel, N. A review on machine learning applications for solar plants. Sensors 2022, 22, 9060.

- Wang, T.; Zhu, Z. Multimodal and multi-task audio-visual vehicle detection and classification. In Proceedings of the 2012 IEEE Ninth International Conference on Advanced Video and Signal-Based Surveillance; IEEE, 2012; pp. 440–446.

- Zhou, C.; Zhao, Y.; Cao, J.; Shen, Y.; Cui, X.; Cheng, C. Optimizing search advertising strategies: Integrating reinforcement learning with generalized second-price auctions for enhanced ad ranking and bidding. 2024.

- Dang, B.; Zhao, W.; Li, Y.; Ma, D.; Yu, Q.; Zhu, E.Y. Real-time pill identification for the visually impaired using deep learning. 2024.

- Yan, C.; Qiu, Y.; Zhu, Y. Predict oil production with lstm neural network. Unknown Journal 2021, 357–364.

- Li, L.; Li, Z.; Guo, F.; Yang, H.; Wei, J.; Yang, Z. Prototype comparison convolutional networks for one-shot segmentation. IEEE Access 2024, 12, 54978–54990.

- Zhao, G.; Li, P.; Zhang, Z.; Guo, F.; Huang, X.; Xu, W.; Wang, J.; Chen, J. Towards sar automatic target recognition multicategory sar image classification based on light weight vision transformer. 2024.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? the kitti vision benchmark suite. In Proceedings of the 2012 IEEE conference on computer vision and pattern recognition; IEEE, 2012; pp. 3354–3361.

- Wang, S.; Liu, Z.; Peng, B. A self-training framework for automated medical report generation. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing; 2023; pp. 16443–16449.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).