Submitted:

23 June 2024

Posted:

24 June 2024

Abstract

Keywords:

1. Introduction

- Complexity of Real-World Scenes: Real-world environments are highly variable and unpredictable. Objects can appear in various orientations, scales, and lighting conditions, making it difficult for a detection algorithm to generalize and maintain accuracy [8].

- Occlusions and Clutter: Objects may be partially obscured by other objects, leading to incomplete information that must be accurately interpreted [9].

- Speed and Efficiency: Many applications, such as autonomous driving and real-time surveillance, require rapid processing of visual data to make timely decisions, demanding both high accuracy and low latency from detection algorithms [10].

1.1. Traditional Approaches

- Correlation Filters: Used to detect objects by correlating a filter with the image, often struggling with variations in object appearance [12].

- Gabor Features: Extract texture features using Gabor filters, which represent texture effectively but are computationally intensive [13].

- Histogram of Oriented Gradients (HOG): Captures edge or gradient structures that characterize the shape of objects, typically combined with Support Vector Machines (SVM) for classification [14].

- Local Binary Patterns (LBP): Utilizes pixel intensity comparisons to form a binary pattern, used in texture classification and face recognition [15].

- SVM and Multilayer Perceptrons (MLP): Traditional classifiers used in conjunction with the aforementioned features to detect and classify objects [16].
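To make the pixel-comparison idea behind LBP in the list above concrete, the following is a minimal sketch of the basic 3×3 operator in plain Python. The function names `lbp_codes` and `lbp_histogram`, the neighbor ordering, and the `>=` comparison rule are illustrative assumptions rather than details taken from the cited works; practical pipelines typically rely on an optimized library implementation.

```python
def lbp_codes(image):
    """Return the basic 8-neighbor LBP code for every interior pixel.

    `image` is a 2-D list of grayscale intensities (rows of equal length).
    """
    # Neighbor offsets, clockwise from the top-left (an arbitrary but fixed order).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    height, width = len(image), len(image[0])
    codes = []
    for y in range(1, height - 1):
        row = []
        for x in range(1, width - 1):
            center = image[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                # Set the bit when the neighbor is at least as bright as the center.
                if image[y + dy][x + dx] >= center:
                    code |= 1 << bit
            row.append(code)
        codes.append(row)
    return codes


def lbp_histogram(image):
    """Normalized 256-bin histogram of LBP codes, usable as a texture descriptor."""
    hist = [0] * 256
    for row in lbp_codes(image):
        for code in row:
            hist[code] += 1
    total = sum(hist)
    return [count / total for count in hist] if total else hist
```

In a traditional pipeline of the kind described above, a histogram like this would be the feature vector passed to a classifier such as an SVM.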

1.2. Emergence of Convolutional Neural Networks

- Hierarchical Feature Learning: CNNs learn to extract low-level features (e.g., edges, textures) in early layers and high-level features (e.g., object parts, shapes) in deeper layers, facilitating robust object representation [19].

- Spatial Invariance: Convolutional layers enable CNNs to recognize objects regardless of their position within the image, enhancing detection robustness [20].

- Scalability: CNNs can be scaled to handle larger datasets and more complex models, improving performance on a wide range of tasks [21].
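The spatial-invariance property in the list above rests on the fact that a convolutional filter responds identically to a pattern wherever it appears, so a shifted input yields a correspondingly shifted feature map. The sketch below illustrates this with a toy 2×2 pattern and a hand-written "valid" cross-correlation (the operation deep learning frameworks usually call convolution). All names, sizes, and values are hypothetical and chosen only for illustration.

```python
def correlate2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding) and return the response map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def peak(feature_map):
    """Return (max response, (row, col)) for the strongest activation."""
    return max((v, (y, x)) for y, row in enumerate(feature_map) for x, v in enumerate(row))


def place_pattern(size, pattern, top, left):
    """Draw `pattern` onto an all-zero size x size canvas at (top, left)."""
    canvas = [[0] * size for _ in range(size)]
    for i, row in enumerate(pattern):
        for j, value in enumerate(row):
            canvas[top + i][left + j] = value
    return canvas


pattern = [[1, 0], [0, 1]]  # toy "object": a 2x2 diagonal
kernel = pattern            # a filter tuned to that object

a = correlate2d_valid(place_pattern(6, pattern, 1, 1), kernel)
b = correlate2d_valid(place_pattern(6, pattern, 3, 2), kernel)

# Same peak strength in both maps; only the peak location moves with the object.
print(peak(a), peak(b))
```

Strictly speaking this shows translation equivariance; pooling and striding in deeper layers are what turn it into the approximate position invariance referred to above.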

1.3. The R-CNN

1.4. You Only Look Once Approach

1.5. Motivation and Organization of the Study

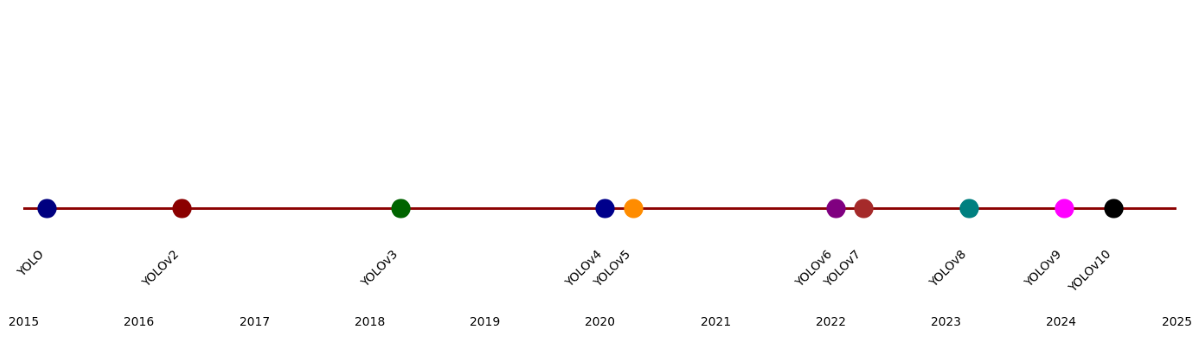

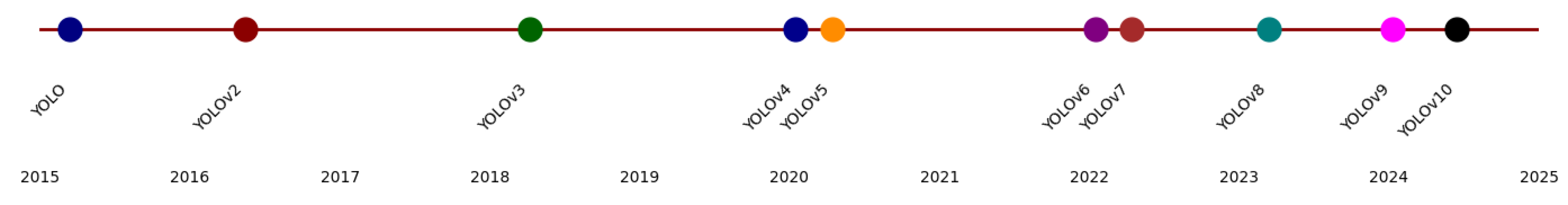

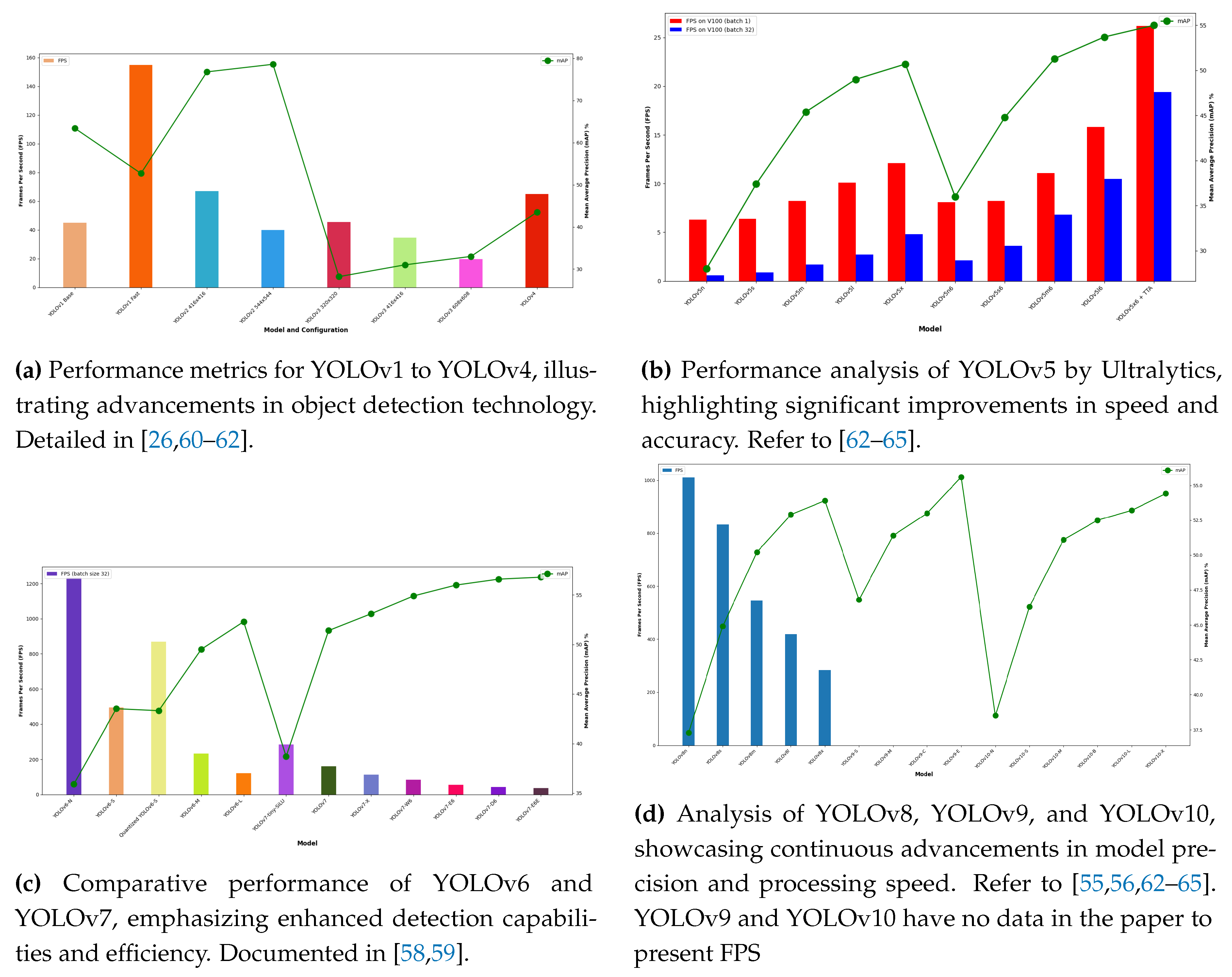

2. YOLO Trajectory

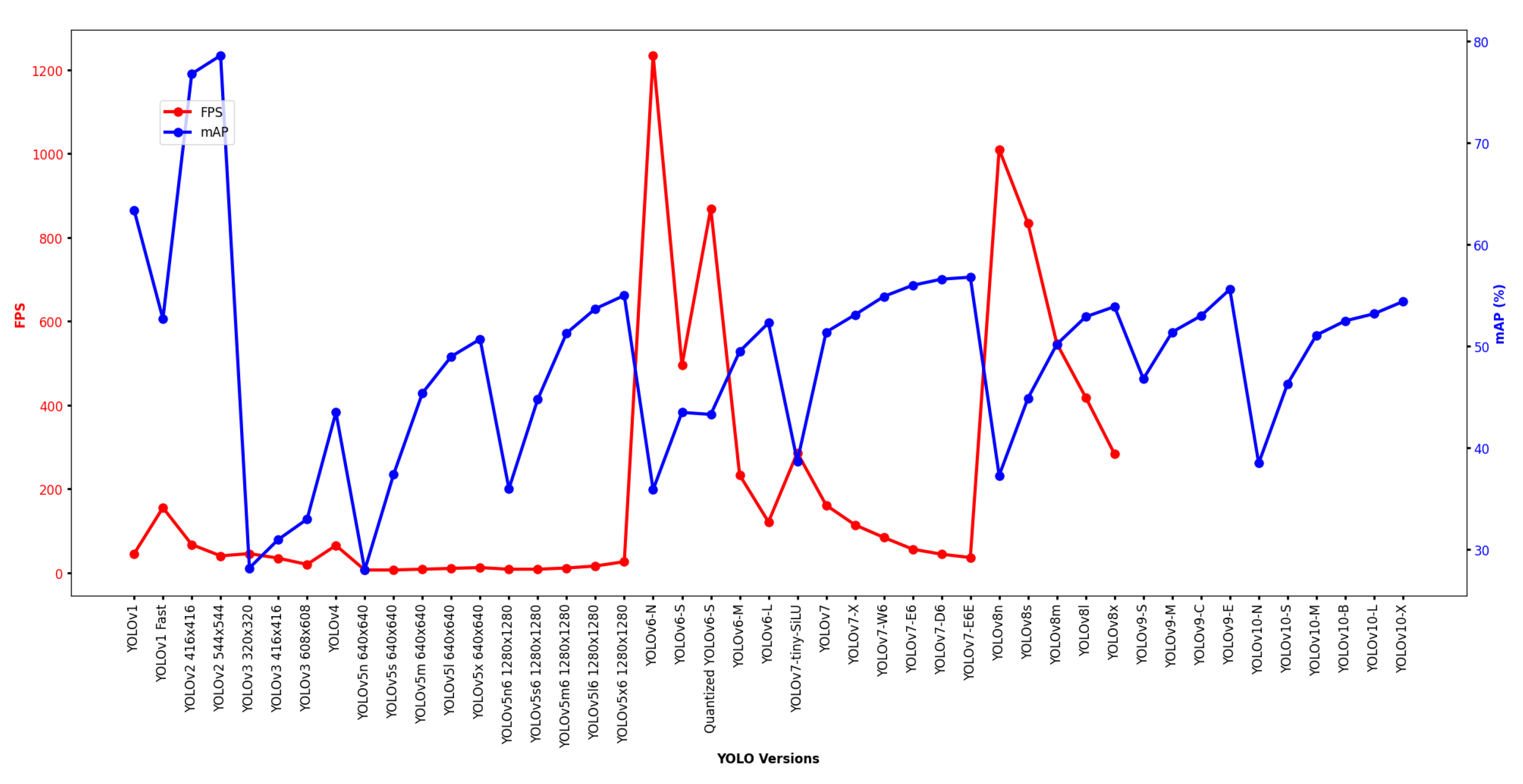

2.1. Significance of Latency and mAP Scores in YOLO

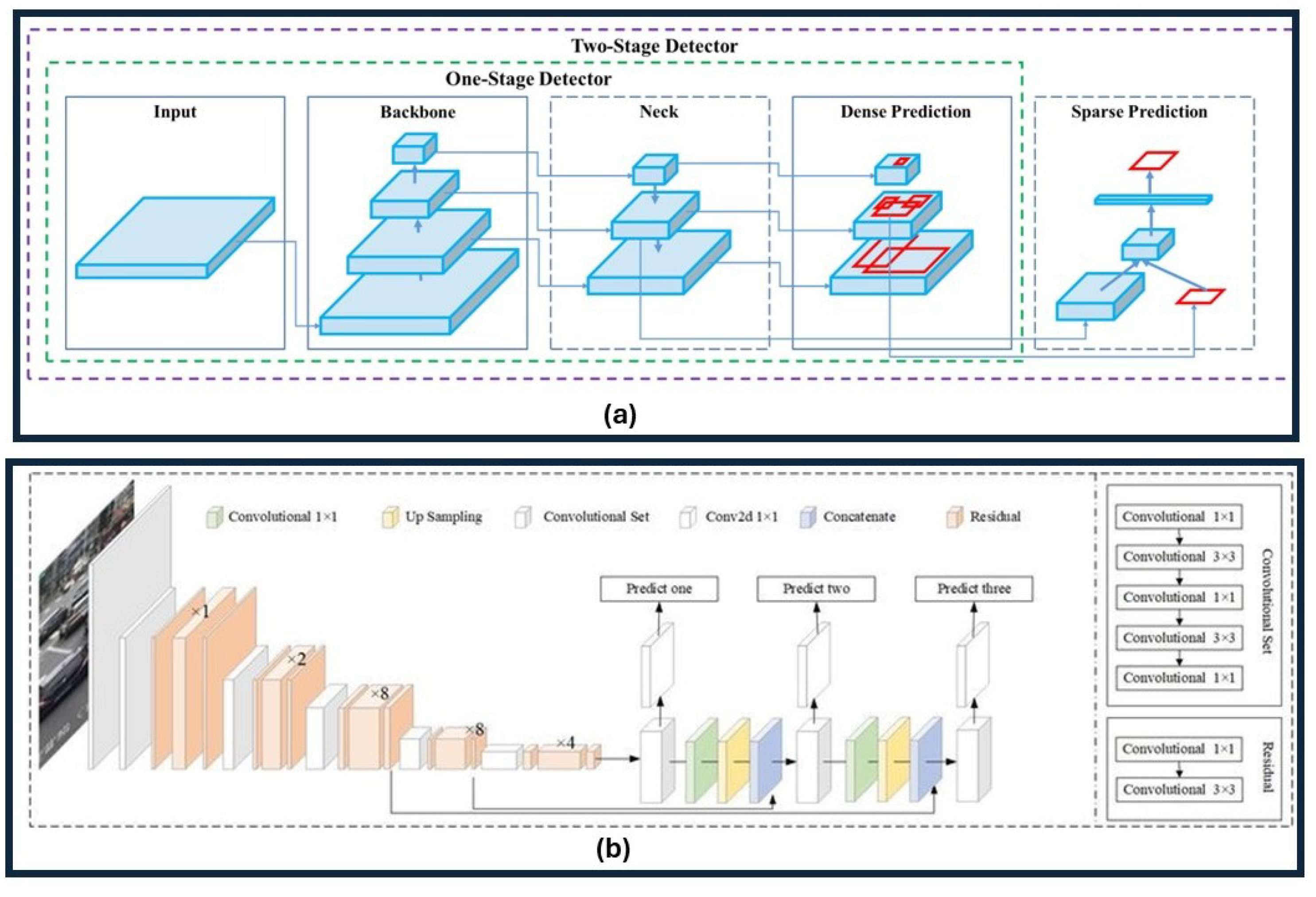

2.2. Single Stage Detection in YOLO

3. Prior YOLO literature: Context and Distinctions

- "A Review of YOLO Algorithm Developments" by Peiyuan Jiang et al. [82] provides an overview of the development of the YOLO algorithm and its evolution across versions. The authors analyze the fundamental aspects of YOLO’s approach to object detection, comparing its various iterations with traditional CNNs. They emphasize the ongoing improvements in YOLO, particularly in target recognition and feature extraction. The review also discusses YOLO’s application in specific fields such as finance, highlighting its practical implications for feature extraction in image-based news analysis [82].

- "A Comprehensive Systematic Review of YOLO for Medical Object Detection (2018 to 2023)" by Ragab et al. [83] presents a systematic review of YOLO’s application in the medical field, analyzing how different variants, particularly YOLOv7 and YOLOv8, have been employed for various medical detection tasks. The authors highlight the algorithm’s strong performance in lesion detection, skin lesion classification, and other critical areas, demonstrating YOLO’s superiority over traditional methods in terms of accuracy and computational efficiency. Despite these successes, the review identifies challenges such as the need for well-annotated datasets and the high computational demands of YOLO implementations. The paper suggests directions for future research to optimize YOLO’s application in medical object detection [83].

- "A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS" by Terven et al. [84] provides an extensive analysis of the evolutionary trajectory of the YOLO algorithm, detailing how each iteration has contributed to advancements in real-time object detection. Their review covers the significant architectural and training enhancements from YOLOv1 through YOLOv8 and introduces YOLO-NAS and YOLO with Transformers. This study serves as a valuable resource for understanding the progression in network architecture, which has progressively improved YOLO’s efficacy in diverse applications such as robotics and autonomous driving.

- "YOLOv1 to v8: Unveiling Each Variant–A Comprehensive Review of YOLO" by Hussain [57] provides an in-depth analysis of the internal components and architectural innovations of each YOLO variant. It examines the structural details and incremental improvements that have marked the evolution of YOLO, presenting a well-structured analysis complete with performance benchmarks. This approach not only highlights the capabilities of each variant but also discusses their practical impact across different domains, suggesting the potential for future enhancements such as federated learning to improve privacy and model generalization [57].

- "YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection" by Muhammad Hussain [85] reviews the rapid progression of the YOLO variants, focusing on their critical role in industrial applications, specifically defect detection in manufacturing. Starting with YOLOv1 and extending through YOLOv8, the paper illustrates how each version has been optimized to meet the demanding needs of real-time, high-accuracy defect detection on constrained devices. Hussain’s work not only examines the technical advancements within each YOLO iteration but also validates their practical efficacy through deployment scenarios in the manufacturing sector, emphasizing YOLO’s alignment with industrial needs [85].

4. Review of YOLO Versions

4.1. YOLOv10, YOLOv9 and YOLOv8

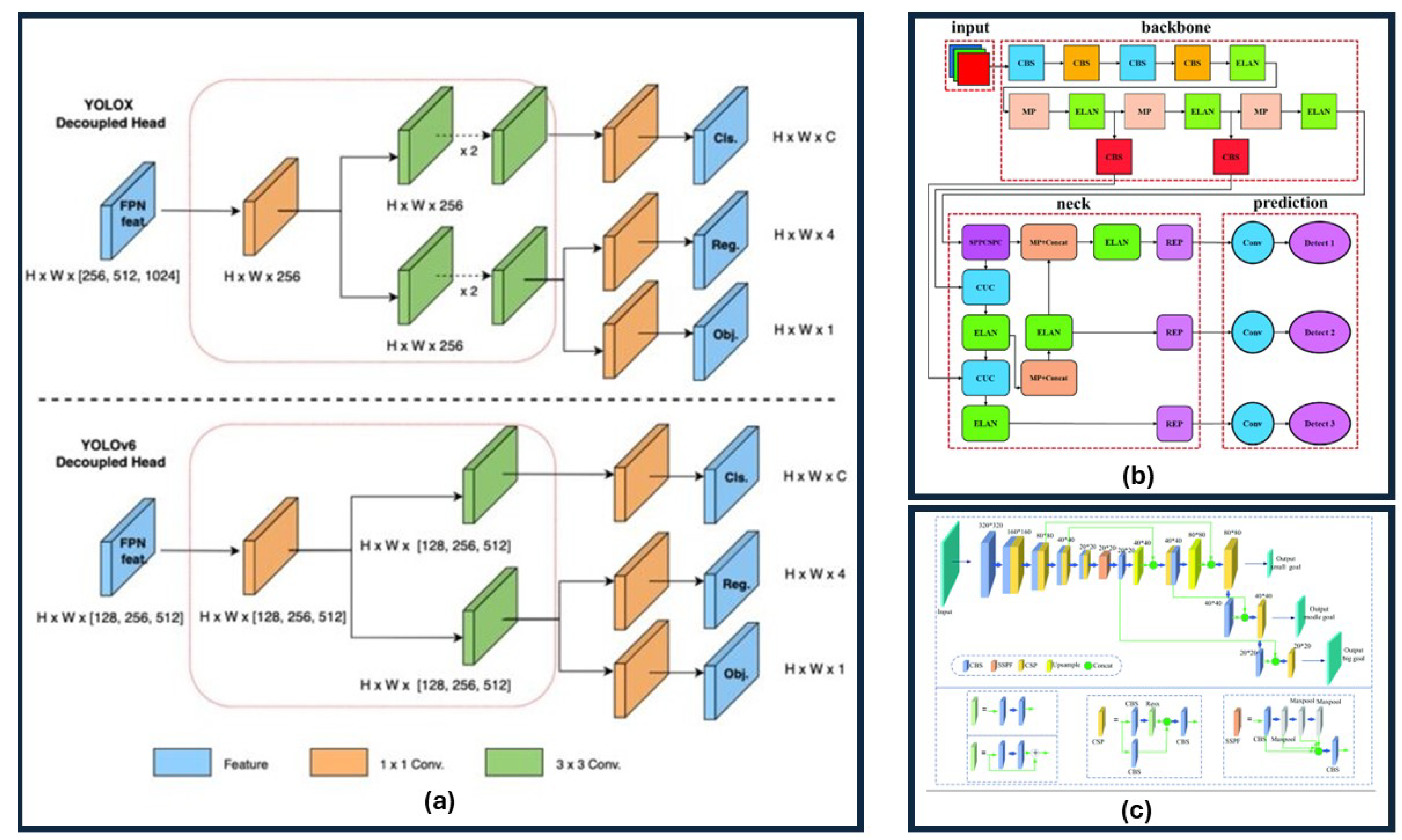

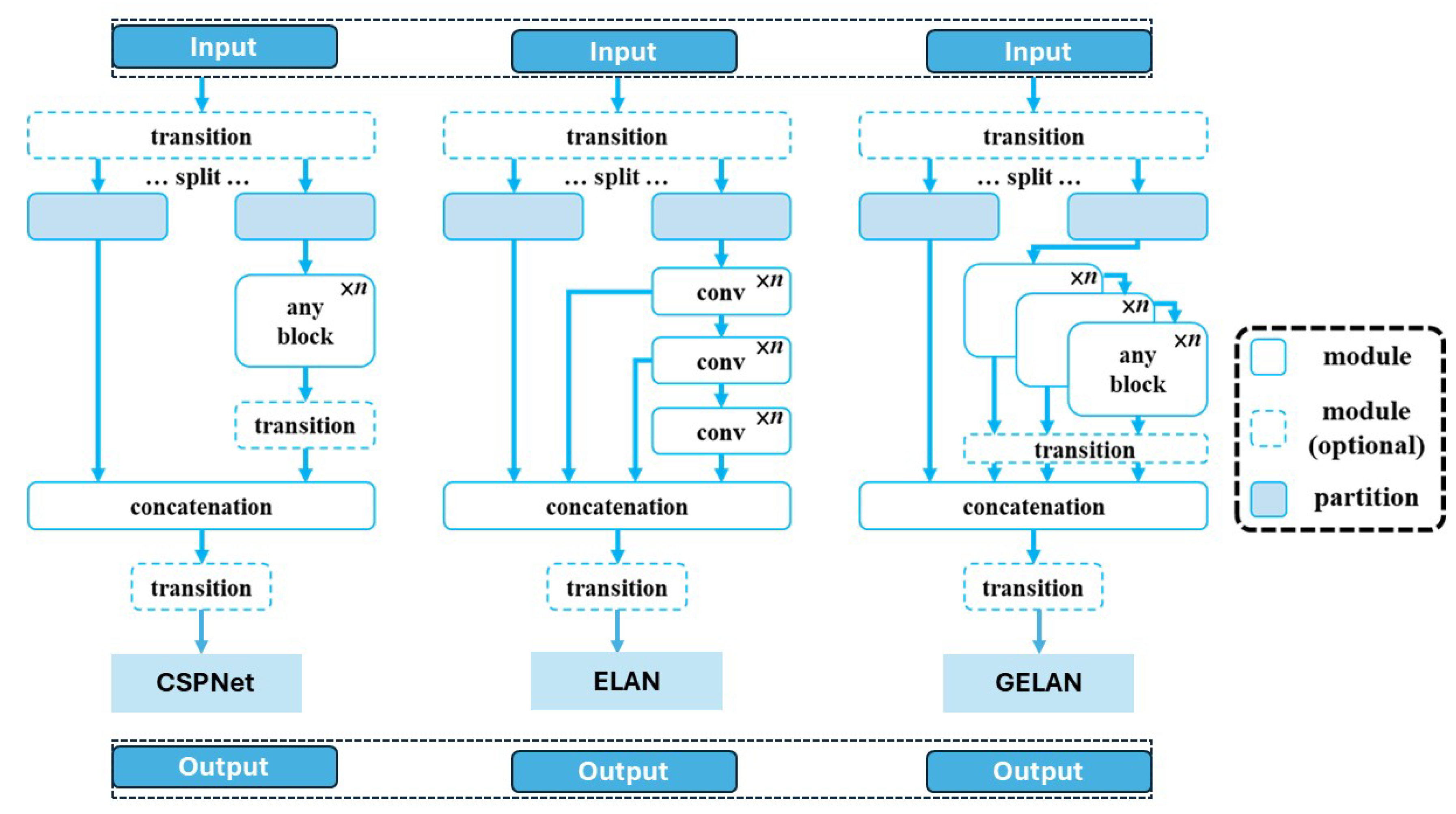

4.2. YOLOv7, YOLOv6 and YOLOv5

4.3. YOLOv4, YOLOv3, YOLOv2 and YOLOv1

5. Applications

5.1. Autonomous Vehicles

5.2. Healthcare and Medical Imaging

5.3. Security and Surveillance

| Title of Paper | Description of Work | Purpose and YOLO Usage | Version | Ref. and Year |
|---|---|---|---|---|
| "Efficient Skin Lesion Detection using YOLOv9 Network" | Utilized YOLOv9 for advanced skin lesion detection, leveraging deep learning to enhance diagnostic accuracy and speed. | Developed improved skin lesion identification using YOLOv9, showcasing significant advances in detection performance. | YOLOv9 | [145], 2023 |
| "Fracture detection in pediatric wrist trauma X-ray images using YOLOv8 algorithm" | Employed YOLOv8 with data augmentation on the GRAZPEDWRI-DX dataset for detecting fractures in pediatric wrist X-ray images. | Enhanced fracture detection in pediatric wrist trauma using YOLOv8, achieving superior mAP compared to previous versions. Designed an app for surgical use. | YOLOv8 | [145], 2023 |
| "Chapter 4 - Medical image analysis of masses in mammography using deep learning model for early diagnosis of cancer tissues" | Utilizes YOLOv7 to detect and diagnose cancerous tissues in mammogram images, leveraging advancements in deep learning for early cancer detection. | Aims to enhance early detection of breast cancer using YOLOv7, improving diagnostic accuracy with deep learning integration. Performance measured by Precision, Recall, and F1-score. | YOLOv7 | [168], 2024 |
| "Improving YOLOv6 using advanced PSO optimizer for weight selection in lung cancer detection and classification" | Enhanced YOLOv6 with Particle Swarm Optimization for weight optimization in lung cancer detection from CT scans. | Utilized advanced PSO to optimize YOLOv6 for higher accuracy in detecting lung cancer, significantly outperforming previous methods on the LUNA 16 Dataset. | YOLOv6 | [150], 2024 |
| "One-Stage methods of computer vision object detection to classify carious lesions from smartphone imaging" | Utilized YOLO v5, YOLO v5X, and YOLO v5M to detect and classify carious lesions from smartphone images. | Aimed to automate caries detection with enhanced accuracy using YOLO. mAP, P, and R metrics validated performance. | YOLOv5, YOLOv5X, YOLOv5M | [169], 2023 |
| "An Improved Method of Polyp Detection Using Custom YOLOv4-Tiny" | Customized YOLOv4-tiny with Inception-ResNet-A block for enhanced detection of polyps in wireless endoscopic images. | Developed to improve polyp detection performance using a modified YOLOv4-tiny. Demonstrated significant performance improvement. | YOLOv4-Tiny | [170], 2022 |
| "Detection of dental caries in oral photographs taken by mobile phones based on the YOLOv3 algorithm" | Utilized YOLOv3 for detecting dental caries from mobile phone images, employing image augmentation and enhancement for improved accuracy. | Enhanced detection and diagnosis of dental caries using YOLOv3, with evaluation of diagnostic precision, recall, and F1-score across different datasets. | YOLOv3 | [171], 2021 |
| "Automatic thyroid nodule recognition and diagnosis in ultrasound imaging with the YOLOv2 neural network" | Employed YOLOv2 for automatic detection and diagnosis of thyroid nodules in ultrasound images, enhancing diagnostic precision. | Compared AI performance with radiologists using YOLOv2, showing improved accuracy and specificity in thyroid nodule diagnosis. ROC curve analysis confirms effectiveness. | YOLOv2 | [172], 2019 |
| "Real-Time Facial Features Detection from Low Resolution Thermal Images with Deep Classification Models" | Developed a method to localize facial features from low-resolution thermal images by modifying existing deep classification networks for real-time detection. | Demonstrates how spatial information can be restored and utilized from classification models for facial feature detection, significantly reducing dataset preparation time while maintaining high precision. | Custom Deep Classification Model and YOLO | [173], 2018 |

5.4. Manufacturing

| Title of Paper | Description of Work | Purpose and YOLO Usage | Version | Ref. and Year |
|---|---|---|---|---|
| "YOLOv9-Enabled Vehicle Detection for Urban Security and Forensics Applications" | Implements YOLOv9 for aerial vehicle detection via UAVs, enhancing urban security and forensic capabilities. | Focuses on utilizing YOLOv9 for real-time vehicle monitoring, facilitating efficient law enforcement and forensic analysis in urban settings. | YOLOv9 | [166], 2024 |
| "SC-YOLOv8: A Security Check Model for the Inspection of Prohibited Items in X-ray Images" | Developed a custom YOLOv8 model for X-ray image analysis to detect prohibited items. Enhanced model accuracy using a novel backbone structure and data augmentation. | Aimed to improve security screening effectiveness and reduce error rates in detecting prohibited items. Showcases an innovative use of YOLOv8 in security applications. | YOLOv8 | [195], 2023 |
| "Detection of Prohibited Items Based upon X-ray Images and Improved YOLOv7" | Improved YOLOv7 with spatial attention for contraband detection in X-ray images. Implemented large kernel attention mechanisms to improve texture and feature extraction to boost accuracy. | Aims to automate security inspections and enhance public safety by improving prohibited item detection with modified YOLOv7. Demonstrates YOLOv7’s adaptability in security systems. | YOLOv7 | [196], 2022 |
| "Suspicious Activity Trigger System using YOLOv6 Convolutional Neural Network" | Implements YOLOv6 to detect and classify suspicious activities in CCTV footage, enhancing home surveillance systems. Utilizes deep learning to automatically trigger alerts, improving response times and security effectiveness. | Aims to reduce property theft by integrating YOLOv6 into home security systems to auto-detect suspicious behavior and alert users. Demonstrates YOLOv6’s effectiveness in real-world security applications. | YOLOv6 | [197], 2023 |
| "Real-time Object Detection for Substation Security Early-warning with Deep Neural Network based on YOLO-V5" | Utilizes YOLO-v5 to enhance substation security by detecting multiple threats like fire, unauthorized entry, and vehicle misplacement in real-time. Combines deep learning with video surveillance to reduce the need for extra hardware. | Designed to improve substation security management without costly additional equipment by detecting various security threats simultaneously using YOLO-v5. Demonstrates the application of YOLO-v5 in critical infrastructure protection. | YOLOv5 | [198], 2022 |
| "Fighting against terrorism: A real-time CCTV autonomous weapons detection based on improved YOLO v4" | Improved YOLOv4 with SCSP-ResNet backbone and F-PaNet module for detecting weapons in CCTV footage, integrating synthetic and real-world data to enhance detection. | Aims to bolster security and counter-terrorism efforts by accurately identifying weapons in CCTV using an advanced YOLOv4 architecture, demonstrating significant performance improvements. | YOLOv4 | [199], 2023 |
| "Automatic tracking of objects using improvised Yolov3 algorithm and alarm human activities in case of anomalies" | Utilizes an enhanced YOLOv3 model to automatically track objects and alert for anomalies in live video feeds, comparing performance with CNNs and decision trees. | Designed to enhance surveillance systems by detecting and alerting on anomalies like bag stealing and lock-breaking, demonstrating rapid processing and high detection accuracy. | YOLOv3 | [200], 2022 |
| "Multi-Object Detection using Enhanced YOLOv2 and LuNet Algorithms in Surveillance Videos" | Employs a novel YOLOv2-LuNet combination for efficient multi-object tracking in video surveillance, enhancing feature extraction and object detection accuracy. | Designed to improve real-time surveillance by enabling robust multi-object tracking in challenging conditions. Highlights the effectiveness of combined YOLOv2 and LuNet approach. | YOLOv2 | [201], 2024 |
| "From Silence to Propagation: Understanding the Relationship between ’Stop Snitchin’ and ’YOLO’" | Examines the cultural shift from ’Stop Snitchin’ to ’YOLO’ in urban hip-hop culture, highlighting the role of social media in promoting individualism and exceptionalism. | Aims to explore how social media influences criminal behavior and public perception, applying cultural criminology to assess changes in social interactions and deviance. | N/A | [202], 2015 |

5.5. Agriculture

| Title of Paper | Description of Work | Purpose and YOLO Usage | Version | Ref. and Year |
|---|---|---|---|---|
| "YOLO-IMF: An Improved YOLOv8 Algorithm for Surface Defect Detection in Industrial Manufacturing Field" | Proposes an enhanced YOLOv8, YOLO-IMF, for surface defect detection on aluminum plates. Replaces CIOU with EIOU loss function to better handle small and irregularly shaped targets, achieving significant improvements in precision. | Demonstrates YOLOv8’s extended applicability in industrial settings by enhancing accuracy and defect detection capabilities. | YOLOv8 | [190], 2023 |
| "YOLOv7-SiamFF: Industrial Defect Detection Algorithm Based on Improved YOLOv7" | Introduces YOLOv7-SiamFF, an advanced defect detection framework employing YOLOv7 with Siamese network enhancements for superior defect identification and background noise suppression. | Enhances industrial defect detection by integrating attention mechanisms and feature fusion modules, achieving higher accuracy in pinpointing defect locations. | YOLOv7 | [185], 2024 |
| "A Novel Finetuned YOLOv6 Transfer Learning Model for Real-Time Object Detection" | Enhances real-time object detection by integrating a transfer learning approach with a pruned and finetuned YOLOv6 model, significantly boosting detection accuracy and speed. | Focuses on improving YOLOv6 for efficient object detection in embedded systems, using advanced pruning techniques for reduced model size without sacrificing performance. | YOLOv6 | [228], 2023 |
| "Real-time Tool Detection in Smart Manufacturing Using YOLOv5" | Utilizes YOLOv5 for advanced real-time tool detection in manufacturing environments, optimizing object detection capabilities for precise tool localization. | Aims to enhance smart manufacturing by leveraging YOLOv5 for accurate and real-time detection of various tools, contributing significantly to Industry 4.0 initiatives. | YOLOv5 | [229], 2023 |
| "Efficient Automobile Assembly State Monitoring System Based on Channel-Pruned YOLOv4" | Implements a channel-pruned YOLOv4 algorithm to optimize monitoring in automobile assembly, enhancing detection speed without compromising accuracy. | Designed to streamline assembly monitoring in industrial environments, showcasing YOLOv4’s utility in enhancing operational efficiency and deployment readiness. | YOLOv4 | [230], 2024 |
| "YOLO V3 + VGG16-based Automatic Operations Monitoring in Manufacturing Workshop" | Utilizes a combined YOLO V3 and VGG16 framework to recognize and monitor industrial operations accurately for Industry 4.0 manufacturing workshops. | Aims to enhance production efficiency and quality by automating action analysis and process monitoring using advanced YOLO V3 and VGG16 technologies. | YOLO V3, VGG16 | [231], 2022 |
| "Improvements of Detection Accuracy by YOLOv2 with Data Set Augmentation" | Employs YOLOv2 with an innovative data set augmentation method to enhance the detection accuracy and confidence in identifying defective areas in industrial products. | Seeks to optimize defect detection and visualization on production lines, demonstrating YOLOv2’s effectiveness with limited data augmentation options. | YOLOv2 | [232], 2023 |

6. Challenges, Limitations and Future Directions

- As the latest version in the YOLO series, YOLOv10 has not yet seen widespread adoption in published research. Its release promises cutting-edge improvements in object detection capabilities, but the lack of extensive testing and real-world application data makes it difficult to ascertain its full potential and limitations.

- Preliminary evaluations suggest that while YOLOv10 might offer advancements in speed and accuracy, integrating it into existing systems could present challenges due to compatibility and computational demands. Potential users may hesitate to adopt this version until more comprehensive studies and benchmarks that articulate its advantages over previous models become available.

- The expectation with YOLOv10, much like its predecessors, is that it will drive further research in object detection technologies. Its eventual widespread implementation could pave the way for addressing complex detection scenarios with higher accuracy, particularly in dynamic environments. However, as with any new technology, the adaptation phase will be crucial in understanding its practical limitations and operational challenges.

- Despite YOLOv9’s enhancements in detection capabilities, it has only been featured in a handful of studies, which limits a comprehensive understanding of its performance across diverse applications. This lack of extensive validation may deter organizations from adopting it until more empirical evidence and comparative analyses establish its efficacy and efficiency over earlier versions.

- While YOLOv9 improves upon the speed and accuracy of its predecessors, it may still struggle with detecting small or overlapping objects in cluttered scenes. This is a recurring challenge in high-density environments like crowded urban areas or complex natural scenes, where precise detection is critical for applications such as autonomous driving and wildlife monitoring.

- Future developments for YOLOv9 could focus on enhancing its robustness in adverse conditions, such as varying weather, lighting, or occlusions. Integrating more adaptive and context-aware mechanisms could help in mitigating false positives and improving the reliability of the system under different operational conditions. The implementation of advanced training techniques such as federated learning could also be explored to enhance its adaptability and learning efficiency from decentralized data sources.

- YOLOv8 has shown significant improvements in object detection tasks, particularly in real-time applications. However, it continues to face challenges in terms of computational efficiency and resource consumption when deployed on lower-end hardware [260]. This can limit its applicability in resource-constrained environments where deploying advanced hardware solutions is not feasible [132].

- The future direction for YOLOv8 could involve optimizing its architectural design to reduce computational load without compromising detection accuracy. Enhancing its scalability to efficiently process images of varying resolutions and conditions can broaden its application scope. Moreover, incorporating adaptive scaling and context-aware training methods could potentially address the detection challenges in complex scenes, making it more robust against diverse operational challenges.

- Although YOLOv7 introduces significant improvements in detection accuracy and speed, its adoption across varied real-world applications reveals a persistent challenge in handling highly dynamic scenes. For instance, in environments with rapid motion or in scenarios involving occlusions, YOLOv7 can still experience drops in performance. The algorithm’s ability to generalize across different types of blur and motion artifacts remains an area for further research and enhancement.

- The complexity of YOLOv7’s architecture, while beneficial for accuracy, imposes a substantial computational burden. This makes it less ideal for deployment on edge devices or platforms with limited processing capabilities, where maintaining a balance between speed and power efficiency is crucial [161,261]. Efforts to streamline the model for such applications without significant loss of performance are necessary.

- Looking forward, there is significant potential in expanding YOLOv7’s capabilities through the integration of semi-supervised or unsupervised learning paradigms. This would enable the model to leverage unlabeled data effectively, addressing a common challenge in the real world, where annotated datasets are often scarce or expensive to produce. Additionally, enhancing the model’s resilience to adversarial attacks and variability in data quality could further solidify its utility in security-sensitive applications like surveillance and fraud detection.

- One of the notable challenges with YOLOv6 is its handling of scale variability within images, which can affect its efficacy in environments where objects appear at diverse distances from the camera. While YOLOv6 shows improved accuracy and speed over its predecessors, it sometimes struggles with small or partially occluded objects, which are common in crowded scenes or complex industrial environments [151,262]. This limitation can be critical in applications such as automated surveillance or advanced manufacturing monitoring.

- YOLOv6, while efficient, still requires considerable computational resources when compared to other models optimized for edge devices. Its deployment in resource-constrained environments such as mobile or embedded systems often requires a trade-off between detection performance and operational efficiency. Further optimizations and model pruning are necessary to achieve the best of both worlds—real-time performance with reduced computational demands.

- Future enhancements for YOLOv6 could focus on incorporating more advanced feature extraction techniques that improve its robustness to variations in object appearance and environmental conditions. Additionally, integrating more adaptive and context-aware learning mechanisms could help overcome some of the challenges related to background clutter and similar adversities. Enhancing the model’s capacity to learn from a limited number of training samples, through techniques such as few-shot learning or transfer learning, could address the scarcity of labeled training data in specialized applications.

- YOLOv5 has made significant strides in improving detection speed and accuracy, but it faces challenges in consistently detecting small objects due to its spatial resolution constraints. This is particularly evident in fields like medical imaging or satellite image analysis, where precision is crucial for identifying fine details. Techniques such as spatial pyramid pooling or enhanced up-sampling may be needed to increase the receptive field and improve the detection of smaller objects without compromising the model’s efficiency [120,263,264].

- While YOLOv5 offers faster training and inference times compared to previous versions, its deployment on edge devices is limited by high memory and processing requirements [127,265]. Although optimized models like YOLOv5s provide a solution, they sometimes do so at the cost of detection accuracy. Optimizing network architecture through neural architecture search (NAS) could potentially offer a more balanced solution, enhancing both performance and efficiency for real-time object detection applications.

- The adaptability of YOLOv5 to varied environmental conditions and different types of data distribution remains an area for development. Future research could focus on enhancing the robustness of YOLOv5 through advanced data augmentation techniques and domain adaptation strategies. This would enable the model to maintain high accuracy levels across diverse application settings, from urban surveillance to complex natural environments, effectively handling variations in lighting, weather, and seasonal changes.

- The advancements in YOLOv4 brought significant improvements in speed and accuracy, but the model’s performance remains inconsistent across datasets, especially under class imbalance and in rare-object recognition. Its computational demand limits its practical deployment on low-power devices. Efforts to enhance model compression and environmental adaptability could further broaden its utility in real-world applications.

- YOLOv3 improved upon the balance of speed and accuracy, yet it struggles with small object detection due to its grid limitation. Its computational cost poses challenges for deployment in resource-constrained environments, prompting research towards optimization techniques to improve efficiency without sacrificing performance. Additionally, enhancing the model’s robustness to environmental variations could improve its reliability for applications like autonomous driving and urban surveillance.

- Despite the incremental improvements introduced in YOLOv2, it faces challenges in detecting small objects, balancing speed with accuracy, and maintaining relevance with the advent of more capable successors. This version’s reliance on a fixed grid system hampers its ability to perform in high-precision detection tasks. Future developments may shift towards adapting YOLOv2’s core strengths in new architectures that enhance its spatial resolution and dynamic scaling capabilities.

- The potential for YOLOv4, YOLOv3, and YOLOv2 in future research involves exploring adaptive mechanisms that can tailor learning rates and augment data to better handle diverse operational scenarios. Integrating these models with newer technologies like model pruning and feature fusion may address existing inefficiencies and extend their applicability to a wider range of applications.

- YOLOv1 was revolutionary for its time, introducing real-time object detection by processing the entire image at once as a single regression problem. However, it faces significant challenges in dealing with small objects because each grid cell predicts only two boxes and a single set of class probabilities. This structure often leads to poor performance on groups of small objects that are close together, such as flocks of birds or traffic scenes with multiple vehicles at a distance. Improvements in subsequent models focus on increasing the number of predictions per grid cell and incorporating finer-grained feature maps to enhance small object detection.

- Another limitation of YOLOv1 is the spatial constraints of its bounding boxes. Since each cell in the grid can only predict two boxes and has limited context about its neighboring cells, the precision in localizing objects, especially those with complex or irregular shapes, is often compromised. This challenge is particularly evident in medical imaging and satellite image analysis, where the exact contours of the objects are crucial. Advances in convolutional neural network designs and cross-layer feature integration in later versions seek to address these drawbacks.

- Despite the foundational advancements introduced by YOLOv1, its direct application has waned over the years, superseded by more robust iterations like YOLOv2 and YOLOv3. These later versions build upon the core principles of YOLOv1 but offer refined mechanisms for handling varied object sizes and aspect ratios. Future research directions are less likely to focus on YOLOv1 itself but may explore its integration into hybrid models or specialized adaptations that can leverage its speed for real-time applications where latency is critical, albeit with compensations in detection accuracy and granularity.

- Future iterations could focus on dynamic grid systems, lighter network architectures, and advanced scaling features to tackle the challenges of small object detection and computational limitations. These improvements could enhance their deployment in emerging areas such as edge computing, where real-time processing and low power consumption are crucial.

- As newer models like YOLOv8 and YOLOv9 continue to evolve, the foundational aspects of YOLOv4, YOLOv3, and YOLOv2 can still offer valuable insights for developing hybrid models or specialized applications. Research may increasingly focus on leveraging these older versions for their speed attributes while compensating for their detection limitations through composite and hybrid modeling approaches.
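The YOLOv1 grid constraint discussed in the list above can be made concrete with a little arithmetic. The sketch below assumes the configuration reported in the original YOLOv1 paper for PASCAL VOC (a 7×7 grid, two boxes per cell, 20 classes), which is not stated in this review, and uses hypothetical helper names. It shows the size of the prediction tensor and how several nearby object centers can land in the same cell, where they compete for a single class prediction.

```python
def yolo_v1_output_shape(grid_size=7, boxes_per_cell=2, num_classes=20):
    """Shape of the YOLOv1 prediction tensor.

    Each box contributes 5 values (x, y, w, h, confidence); each cell
    contributes one shared set of class probabilities.
    """
    return (grid_size, grid_size, boxes_per_cell * 5 + num_classes)


def responsible_cell(cx, cy, grid_size=7):
    """Grid cell (row, col) containing a normalized object center in [0, 1]."""
    col = min(int(cx * grid_size), grid_size - 1)
    row = min(int(cy * grid_size), grid_size - 1)
    return row, col


def count_cell_collisions(centers, grid_size=7):
    """Count objects whose center falls in a cell already claimed by another object."""
    seen = set()
    collisions = 0
    for cx, cy in centers:
        cell = responsible_cell(cx, cy, grid_size)
        if cell in seen:
            collisions += 1
        seen.add(cell)
    return collisions


# Three small, closely spaced objects (hypothetical normalized centers).
flock = [(0.45, 0.45), (0.55, 0.45), (0.45, 0.55)]

print(yolo_v1_output_shape())            # (7, 7, 30)
print(count_cell_collisions(flock, 7))   # 2: all three centers share one cell
print(count_cell_collisions(flock, 14))  # 0: a finer grid separates them
```

The last two lines illustrate why later versions moved toward finer-grained feature maps and more predictions per location: the same three objects no longer compete for one cell once the grid is denser.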

6.1. YOLO and Artificial General Intelligence (AGI)

6.1.1. YOLO as the “Neural Network That Can Do”

6.2. YOLO on Edge Devices

6.3. Future Prospects

6.4. Challenges in Statistical Metrics for Evaluation

7. Conclusion

Author Contributions: Ranjan Sapkota

References

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. International journal of computer vision 2020, 128, 261–318.

- Fernandez, R.A.S.; Sanchez-Lopez, J.L.; Sampedro, C.; Bavle, H.; Molina, M.; Campoy, P. Natural user interfaces for human-drone multi-modal interaction. 2016 International Conference on Unmanned Aircraft Systems (ICUAS). IEEE, 2016, pp. 1013–1022.

- Wang, R.J.; Li, X.; Ling, C.X. Pelee: A real-time object detection system on mobile devices. Advances in neural information processing systems 2018, 31.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Advances in neural information processing systems 2015, 28.

- Flippo, D.; Gunturu, S.; Baldwin, C.; Badgujar, C. Tree Trunk Detection of Eastern Red Cedar in Rangeland Environment with Deep Learning Technique. Croatian journal of forest engineering 2023, 44, 357–368.

- Guerrero-Ibáñez, J.; Zeadally, S.; Contreras-Castillo, J. Sensor technologies for intelligent transportation systems. Sensors 2018, 18, 1212.

- Hussain, R.; Zeadally, S. Autonomous cars: Research results, issues, and future challenges. IEEE Communications Surveys & Tutorials 2018, 21, 1275–1313.

- Kaushal, M.; Khehra, B.S.; Sharma, A. Soft Computing based object detection and tracking approaches: State-of-the-Art survey. Applied Soft Computing 2018, 70, 423–464.

- Khan, S.M.; Shah, M. Tracking multiple occluding people by localizing on multiple scene planes. IEEE transactions on pattern analysis and machine intelligence 2008, 31, 505–519.

- Gupta, A.; Anpalagan, A.; Guan, L.; Khwaja, A.S. Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array 2021, 10, 100057.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proceedings of the IEEE 2023, 111, 257–276.

- Liu, S.; Liu, D.; Srivastava, G.; Połap, D.; Woźniak, M. Overview and methods of correlation filter algorithms in object tracking. Complex & Intelligent Systems 2021, 7, 1895–1917.

- Hu, X.d.; Wang, X.q.; Meng, F.j.; Hua, X.; Yan, Y.j.; Li, Y.y.; Huang, J.; Jiang, X.l. Gabor-CNN for object detection based on small samples. Defence Technology 2020, 16, 1116–1129.

- Surasak, T.; Takahiro, I.; Cheng, C.h.; Wang, C.e.; Sheng, P.y. Histogram of oriented gradients for human detection in video. 2018 5th International conference on business and industrial research (ICBIR). IEEE, 2018, pp. 172–176.

- Karis, M.S.; Razif, N.R.A.; Ali, N.M.; Rosli, M.A.; Aras, M.S.M.; Ghazaly, M.M. Local Binary Pattern (LBP) with application to variant object detection: A survey and method. 2016 IEEE 12th international colloquium on signal processing & its applications (CSPA). IEEE, 2016, pp. 221–226.

- Chiu, H.J.; Li, T.H.S.; Kuo, P.H. Breast cancer–detection system using PCA, multilayer perceptron, transfer learning, and support vector machine. IEEE Access 2020, 8, 204309–204324.

- Xiang, Y.; Mottaghi, R.; Savarese, S. Beyond pascal: A benchmark for 3d object detection in the wild. IEEE winter conference on applications of computer vision. IEEE, 2014, pp. 75–82.

- Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 3520–3529.

- Li, X.; Song, D.; Dong, Y. Hierarchical feature fusion network for salient object detection. IEEE Transactions on Image Processing 2020, 29, 9165–9175. [Google Scholar] [CrossRef] [PubMed]

- Crawford, E.; Pineau, J. Spatially invariant unsupervised object detection with convolutional neural networks. Proceedings of the AAAI Conference on Artificial Intelligence, 2019, Vol. 33, pp. 3412–3420.

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 10781–10790.

- Zhiqiang, W.; Jun, L. A review of object detection based on convolutional neural network. 2017 36th Chinese control conference (CCC). IEEE, 2017, pp. 11104–11109.

- Teutsch, M.; Kruger, W. Robust and fast detection of moving vehicles in aerial videos using sliding windows. Proceedings of the IEEE conference on computer vision and pattern recognition workshops, 2015, pp. 26–34.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J.; Mercan, E. R-CNN for object detection. IEEE Conference, 2014.

- Girshick, R. Fast r-cnn. Proceedings of the IEEE international conference on computer vision, 2015, pp. 1440–1448.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 779–788.

- Badgujar, C.M.; Poulose, A.; Gan, H. Agricultural Object Detection with You Look Only Once (YOLO) Algorithm: A Bibliometric and Systematic Literature Review. arXiv 2024, arXiv:2401.10379. [Google Scholar]

- Patel, G.S.; Desai, A.A.; Kamble, Y.Y.; Pujari, G.V.; Chougule, P.A.; Jujare, V.A. Identification and separation of medicine through Robot using YOLO and CNN Algorithms for Healthcare. 2023 International Conference on Artificial Intelligence for Innovations in Healthcare Industries (ICAIIHI). IEEE, 2023, Vol. 1, pp. 1–5.

- Ajayi, O.G.; Ashi, J.; Guda, B. Performance evaluation of YOLO v5 model for automatic crop and weed classification on UAV images. Smart Agricultural Technology 2023, 5, 100231. [Google Scholar] [CrossRef]

- Morbekar, A.; Parihar, A.; Jadhav, R. Crop disease detection using YOLO. 2020 international conference for emerging technology (INCET). IEEE, 2020, pp. 1–5.

- Li, D.; Ahmed, F.; Wu, N.; Sethi, A.I. Yolo-JD: A Deep Learning Network for jute diseases and pests detection from images. Plants 2022, 11, 937. [Google Scholar] [CrossRef] [PubMed]

- Cheeti, S.; Kumar, G.S.; Priyanka, J.S.; Firdous, G.; Ranjeeva, P.R. Pest detection and classification using YOLO AND CNN. Annals of the Romanian Society for Cell Biology 2021, pp.15295–15300.

- Pham, M.T.; Courtrai, L.; Friguet, C.; Lefèvre, S.; Baussard, A. YOLO-Fine: One-stage detector of small objects under various backgrounds in remote sensing images. Remote Sensing 2020, 12, 2501. [Google Scholar] [CrossRef]

- Cheng, L.; Li, J.; Duan, P.; Wang, M. A small attentional YOLO model for landslide detection from satellite remote sensing images. Landslides 2021, 18, 2751–2765. [Google Scholar] [CrossRef]

- Chen, C.; Zheng, Z.; Xu, T.; Guo, S.; Feng, S.; Yao, W.; Lan, Y. Yolo-based uav technology: A review of the research and its applications. Drones 2023, 7, 190. [Google Scholar] [CrossRef]

- Luo, X.; Wu, Y.; Zhao, L. YOLOD: A target detection method for UAV aerial imagery. Remote Sensing 2022, 14, 3240. [Google Scholar] [CrossRef]

- Prinzi, F.; Insalaco, M.; Orlando, A.; Gaglio, S.; Vitabile, S. A YOLO-based model for breast cancer detection in mammograms. Cognitive Computation 2024, 16, 107–120. [Google Scholar] [CrossRef]

- Aly, G.H.; Marey, M.; El-Sayed, S.A.; Tolba, M.F. YOLO based breast masses detection and classification in full-field digital mammograms. Computer methods and programs in biomedicine 2021, 200, 105823. [Google Scholar] [CrossRef] [PubMed]

- Ünver, H.M.; Ayan, E. Skin lesion segmentation in dermoscopic images with combination of YOLO and grabcut algorithm. Diagnostics 2019, 9, 72. [Google Scholar] [CrossRef] [PubMed]

- Tan, L.; Huangfu, T.; Wu, L.; Chen, W. Comparison of RetinaNet, SSD, and YOLO v3 for real-time pill identification. BMC medical informatics and decision making 2021, 21, 1–11. [Google Scholar] [CrossRef]

- Suksawatchon, U.; Srikamdee, S.; Suksawatchon, J.; Werapan, W. Shape Recognition Using Unconstrained Pill Images Based on Deep Convolution Network. 2022 6th International Conference on Information Technology (InCIT). IEEE, 2022, pp. 309–313.

- Arroyo, M.A.; Ziad, M.T.I.; Kobayashi, H.; Yang, J.; Sethumadhavan, S. YOLO: frequently resetting cyber-physical systems for security. Autonomous Systems: Sensors, Processing, and Security for Vehicles and Infrastructure 2019. SPIE, 2019, Vol. 11009, pp. 166–183.

- Bordoloi, N.; Talukdar, A.K.; Sarma, K.K. Suspicious activity detection from videos using yolov3. 2020 IEEE 17th India Council International Conference (INDICON). IEEE, 2020, pp. 1–5.

- Gorave, A.; Misra, S.; Padir, O.; Patil, A.; Ladole, K. Suspicious activity detection using live video analysis. Proceeding of International Conference on Computational Science and Applications: ICCSA 2019. Springer, 2020, pp. 203–214.

- Kolpe, R.; Ghogare, S.; Jawale, M.; William, P.; Pawar, A. Identification of face mask and social distancing using YOLO algorithm based on machine learning approach. 2022 6th International conference on intelligent computing and control systems (ICICCS). IEEE, 2022, pp. 1399–1403.

- Bashir, S.; Qureshi, R.; Shah, A.; Fan, X.; Alam, T. YOLOv5-M: A deep neural network for medical object detection in real-time. 2023 IEEE Symposium on Industrial Electronics & Applications (ISIEA). IEEE, 2023, pp. 1–6.

- Pham, D.L.; Chang, T.W.; others. A YOLO-based real-time packaging defect detection system. Procedia Computer Science 2023, 217, 886–894. [Google Scholar]

- Klarák, J.; Andok, R.; Malík, P.; Kuric, I.; Ritomskỳ, M.; Klačková, I.; Tsai, H.Y. From Anomaly Detection to Defect Classification. Sensors 2024, 24, 429. [Google Scholar] [CrossRef] [PubMed]

- Li, R.; Yang, J. Improved YOLOv2 object detection model. 2018 6th international conference on multimedia computing and systems (ICMCS). IEEE, 2018, pp. 1–6.

- Nakahara, H.; Yonekawa, H.; Fujii, T.; Sato, S. A lightweight YOLOv2: A binarized CNN with a parallel support vector regression for an FPGA. Proceedings of the 2018 ACM/SIGDA International Symposium on field-programmable gate arrays, 2018, pp. 31–40.

- Kim, K.J.; Kim, P.K.; Chung, Y.S.; Choi, D.H. Performance enhancement of YOLOv3 by adding prediction layers with spatial pyramid pooling for vehicle detection. 2018 15th IEEE international conference on advanced video and signal based surveillance (AVSS). IEEE, 2018, pp. 1–6.

- Nepal, U.; Eslamiat, H. Comparing YOLOv3, YOLOv4 and YOLOv5 for autonomous landing spot detection in faulty UAVs. Sensors 2022, 22, 464. [Google Scholar] [CrossRef]

- Sozzi, M.; Cantalamessa, S.; Cogato, A.; Kayad, A.; Marinello, F. Automatic bunch detection in white grape varieties using YOLOv3, YOLOv4, and YOLOv5 deep learning algorithms. Agronomy 2022, 12, 319. [Google Scholar] [CrossRef]

- Mohod, N.; Agrawal, P.; Madaan, V. YOLOv4 vs YOLOv5: Object detection on surveillance videos. International Conference on Advanced Network Technologies and Intelligent Computing. Springer, 2022, pp. 654–665.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458. [Google Scholar]

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616. [Google Scholar]

- Hussain, M. YOLOv1 to v8: Unveiling Each Variant–A Comprehensive Review of YOLO. IEEE Access 2024, 12, 42816–42833. [Google Scholar] [CrossRef]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2023, pp. 7464–7475.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W. ; others. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: better, faster, stronger. Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 7263–7271.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Ultralytics. Home — docs.ultralytics.com. https://docs.ultralytics.com/. [Accessed 28-05-2024].

- Ultralytics. Comprehensive Guide to Ultralytics YOLOv5 — docs.ultralytics.com. https://docs.ultralytics.com/yolov5/. [Accessed 28-05-2024].

- GitHub - ultralytics/yolov5: YOLOv5 in PyTorch > ONNX > CoreML > TFLite — github.com. https://github.com/ultralytics/yolov5. [Accessed 28-05-2024].

- Tang, S.; Yuan, Y. Object detection based on convolutional neural network. International Conference-IEEE–2016, 2015.

- Mao, H.; Yang, X.; Dally, W.J. A delay metric for video object detection: What average precision fails to tell. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 573–582.

- Chen, B.; Ghiasi, G.; Liu, H.; Lin, T.Y.; Kalenichenko, D.; Adam, H.; Le, Q.V. Mnasfpn: Learning latency-aware pyramid architecture for object detection on mobile devices. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 13607–13616.

- Pestana, D.; Miranda, P.R.; Lopes, J.D.; Duarte, R.P.; Véstias, M.P.; Neto, H.C.; De Sousa, J.T. A full featured configurable accelerator for object detection with YOLO. IEEE Access 2021, 9, 75864–75877. [Google Scholar] [CrossRef]

- Zhou, P.; Ni, B.; Geng, C.; Hu, J.; Xu, Y. Scale-transferrable object detection. proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 528–537.

- Hall, D.; Dayoub, F.; Skinner, J.; Zhang, H.; Miller, D.; Corke, P.; Carneiro, G.; Angelova, A.; Sünderhauf, N. Probabilistic object detection: Definition and evaluation. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2020, pp. 1031–1040.

- Goutte, C.; Gaussier, E. A probabilistic interpretation of precision, recall and F-score, with implication for evaluation. European conference on information retrieval. Springer, 2005, pp. 345–359.

- Liang, Z.; Zhang, Z.; Zhang, M.; Zhao, X.; Pu, S. Rangeioudet: Range image based real-time 3d object detector optimized by intersection over union. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 7140–7149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14. Springer, 2016, pp. 21–37. 11 October.

- Fu, C.Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. Dssd: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659. [Google Scholar]

- Zhang, S.; Wen, L.; Bian, X.; Lei, Z.; Li, S.Z. Single-shot refinement neural network for object detection. Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 4203–4212.

- Cui, L.; Ma, R.; Lv, P.; Jiang, X.; Gao, Z.; Zhou, B.; Xu, M. MDSSD: multi-scale deconvolutional single shot detector for small objects. arXiv 2018, arXiv:1805.07009. [Google Scholar]

- Lin, T.; Zhao, X.; Shou, Z. Single shot temporal action detection. Proceedings of the 25th ACM international conference on Multimedia, 2017, pp. 988–996.

- Tang, X.; Du, D.K.; He, Z.; Liu, J. Pyramidbox: A context-assisted single shot face detector. Proceedings of the European conference on computer vision (ECCV), 2018, pp. 797–813.

- Li, Z.; Yang, L.; Zhou, F. FSSD: feature fusion single shot multibox detector. arXiv 2017, arXiv:1712.00960. [Google Scholar]

- Jiang, J.; Xu, H.; Zhang, S.; Fang, Y. Object detection algorithm based on multiheaded attention. Applied Sciences 2019, 9, 1829. [Google Scholar] [CrossRef]

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo algorithm developments. Procedia computer science 2022, 199, 1066–1073. [Google Scholar] [CrossRef]

- Ragab, M.G.; Abdulkader, S.J.; Muneer, A.; Alqushaibi, A.; Sumiea, E.H.; Qureshi, R.; Al-Selwi, S.M.; Alhussian, H. A Comprehensive Systematic Review of YOLO for Medical Object Detection (2018 to 2023). IEEE Access 2024. [Google Scholar] [CrossRef]

- Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A comprehensive review of yolo architectures in computer vision: From yolov1 to yolov8 and yolo-nas. Machine Learning and Knowledge Extraction 2023, 5, 1680–1716. [Google Scholar] [CrossRef]

- Hussain, M. YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines 2023, 11, 677. [Google Scholar] [CrossRef]

- Rothe, R.; Guillaumin, M.; Van Gool, L. Non-maximum suppression for object detection by passing messages between windows. Computer Vision–ACCV 2014: 12th Asian Conference on Computer Vision, Singapore, Singapore, November 1-5, 2014, Revised Selected Papers, Part I 12. Springer, 2015, pp. 290–306.

- Li, S.; Li, M.; Li, R.; He, C.; Zhang, L. One-to-few label assignment for end-to-end dense detection. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2023, pp. 7350–7359.

- Bhagat, S.; Kokare, M.; Haswani, V.; Hambarde, P.; Kamble, R. WheatNet-lite: A novel light weight network for wheat head detection. Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 1332–1341.

- Hu, Y.; Tan, W.; Meng, F.; Liang, Y. A Decoupled Spatial-Channel Inverted Bottleneck For Image Compression. 2023 IEEE International Conference on Image Processing (ICIP). IEEE, 2023, pp. 1740–1744.

- Yang, G.; Wang, J.; Nie, Z.; Yang, H.; Yu, S. A lightweight YOLOv8 tomato detection algorithm combining feature enhancement and attention. Agronomy 2023, 13, 1824. [Google Scholar] [CrossRef]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13. Springer, 2014, pp. 740–755.

- Jocher, G.; others. YOLOv8: A Comprehensive Improvement of the YOLO Object Detection Series. https://docs.ultralytics.com/yolov8/, 2022. Accessed: 2024-06-05.

- Tishby, N.; Zaslavsky, N. Deep learning and the information bottleneck principle. 2015 ieee information theory workshop (itw). IEEE, 2015, pp. 1–5.

- Zhang, B.; Li, J.; Bai, Y.; Jiang, Q.; Yan, B.; Wang, Z. An Improved Microaneurysm Detection Model Based on SwinIR and YOLOv8. Bioengineering 2023, 10, 1405. [Google Scholar] [CrossRef]

- Chien, C.T.; Ju, R.Y.; Chou, K.Y.; Chiang, J.S. YOLOv9 for Fracture Detection in Pediatric Wrist Trauma X-ray Images. arXiv 2024, arXiv:2403.11249. [Google Scholar] [CrossRef]

- YOLOv8 Object Detection Model: What is, How to Use — roboflow.com. https://roboflow.com/model/yolov8. [Accessed 28-05-2024].

- Ultralytics. Ultralytics YOLOv8 Solutions: Quick Walkthrough — ultralytics.medium.com. https://ultralytics.medium.com/ultralytics-yolov8-solutions-quick-walkthrough-b802fd6da5d7. [Accessed 28-05-2024].

- Du, S.; Zhang, B.; Zhang, P.; Xiang, P. An improved bounding box regression loss function based on CIOU loss for multi-scale object detection. 2021 IEEE 2nd International Conference on Pattern Recognition and Machine Learning (PRML). IEEE, 2021, pp. 92–98.

- Xu, S.; Wang, X.; Lv, W.; Chang, Q.; Cui, C.; Deng, K.; Wang, G.; Dang, Q.; Wei, S.; Du, Y. others. PP-YOLOE: An evolved version of YOLO. arXiv 2022, arXiv:2203.16250. [Google Scholar]

- Yue, X.; Qi, K.; Na, X.; Zhang, Y.; Liu, Y.; Liu, C. Improved YOLOv8-Seg Network for Instance Segmentation of Healthy and Diseased Tomato Plants in the Growth Stage. Agriculture 2023, 13, 1643. [Google Scholar] [CrossRef]

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 2778–2788.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. Proceedings of the European conference on computer vision (ECCV), 2018, pp. 3–19.

- Hnewa, M.; Radha, H. Integrated multiscale domain adaptive yolo. IEEE Transactions on Image Processing 2023, 32, 1857–1867. [Google Scholar] [CrossRef] [PubMed]

- Bai, Z.; Pei, X.; Qiao, Z.; Wu, G.; Bai, Y. Improved YOLOv7 Target Detection Algorithm Based on UAV Aerial Photography. Drones 2024, 8, 104. [Google Scholar] [CrossRef]

- Jocher, G.; Team, U. YOLOv5. https://github.com/ultralytics/yolov5, 2020. Accessed: 2024-06-05.

- Team, M. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. https://github.com/meituan/YOLOv6, 2022. Accessed: 2024-06-05.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696. [Google Scholar]

- Sirisha, U.; Praveen, S.P.; Srinivasu, P.N.; Barsocchi, P.; Bhoi, A.K. Statistical analysis of design aspects of various YOLO-based deep learning models for object detection. International Journal of Computational Intelligence Systems 2023, 16, 126. [Google Scholar] [CrossRef]

- Wang, K.; Liew, J.H.; Zou, Y.; Zhou, D.; Feng, J. Panet: Few-shot image semantic segmentation with prototype alignment. proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 9197–9206.

- Zhang, Z.; Lu, X.; Cao, G.; Yang, Y.; Jiao, L.; Liu, F. ViT-YOLO: Transformer-based YOLO for object detection. Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 2799–2808.

- Mahasin, M.; Dewi, I.A. Comparison of cspdarknet53, cspresnext-50, and efficientnet-b0 backbones on yolo v4 as object detector. International Journal of Engineering, Science and Information Technology 2022, 2, 64–72. [Google Scholar] [CrossRef]

- Misra, D. Mish: A self regularized non-monotonic activation function. arXiv 2019, arXiv:1908.08681. [Google Scholar]

- Yun, S.; Han, D.; Oh, S.J.; Chun, S.; Choe, J.; Yoo, Y. Cutmix: Regularization strategy to train strong classifiers with localizable features. Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 6023–6032.

- Ghiasi, G.; Lin, T.Y.; Le, Q.V. Dropblock: A regularization method for convolutional networks. Advances in neural information processing systems 2018, 31. [Google Scholar]

- Müller, R.; Kornblith, S.; Hinton, G.E. When does label smoothing help? Advances in neural information processing systems 2019, 32. [Google Scholar]

- Zhang, Z.; He, T.; Zhang, H.; Zhang, Z.; Xie, J.; Li, M. Bag of freebies for training object detection neural networks. arXiv 2019, arXiv:1902.04103. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640. [Google Scholar]

- Santurkar, S.; Tsipras, D.; Ilyas, A.; Madry, A. How does batch normalization help optimization? Advances in neural information processing systems 2018, 31. [Google Scholar]

- Vijayakumar, A.; Vairavasundaram, S. Yolo-based object detection models: A review and its applications. Multimedia Tools and Applications.

- Benjumea, A.; Teeti, I.; Cuzzolin, F.; Bradley, A. YOLO-Z: Improving small object detection in YOLOv5 for autonomous vehicles. arXiv 2021, arXiv:2112.11798. [Google Scholar]

- Sarda, A.; Dixit, S.; Bhan, A. Object detection for autonomous driving using yolo [you only look once] algorithm. 2021 Third international conference on intelligent communication technologies and virtual mobile networks (ICICV). IEEE, 2021, pp. 1370–1374.

- Cai, Y.; Luan, T.; Gao, H.; Wang, H.; Chen, L.; Li, Y.; Sotelo, M.A.; Li, Z. YOLOv4-5D: An effective and efficient object detector for autonomous driving. IEEE Transactions on Instrumentation and Measurement 2021, 70, 1–13. [Google Scholar] [CrossRef]

- Zhao, J.; Hao, S.; Dai, C.; Zhang, H.; Zhao, L.; Ji, Z.; Ganchev, I. Improved vision-based vehicle detection and classification by optimized YOLOv4. IEEE Access 2022, 10, 8590–8603. [Google Scholar] [CrossRef]

- Woo, J.; Baek, J.H.; Jo, S.H.; Kim, S.Y.; Jeong, J.H. A study on object detection performance of YOLOv4 for autonomous driving of tram. Sensors 2022, 22, 9026. [Google Scholar] [CrossRef] [PubMed]

- Ye, C.; Wang, Y.; Wang, Y.; Tie, M. Steering angle prediction YOLOv5-based end-to-end adaptive neural network control for autonomous vehicles. Proceedings of the Institution of Mechanical Engineers, Part D: Journal of Automobile Engineering 2022, 236, 1991–2011. [Google Scholar] [CrossRef]

- Mostafa, T.; Chowdhury, S.J.; Rhaman, M.K.; Alam, M.G.R. Occluded object detection for autonomous vehicles employing YOLOv5, YOLOX and Faster R-CNN. 2022 IEEE 13th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON). IEEE, 2022, pp. 0405–0410.

- Jia, X.; Tong, Y.; Qiao, H.; Li, M.; Tong, J.; Liang, B. Fast and accurate object detector for autonomous driving based on improved YOLOv5. Scientific reports 2023, 13, 9711. [Google Scholar] [CrossRef]

- Chen, Z.; Wang, X.; Zhang, W.; Yao, G.; Li, D.; Zeng, L. Autonomous Parking Space Detection for Electric Vehicles Based on Improved YOLOV5-OBB Algorithm. World Electric Vehicle Journal 2023, 14, 276. [Google Scholar] [CrossRef]

- Liu, X.; Yan, W.Q. Vehicle-related distance estimation using customized YOLOv7. International Conference on Image and Vision Computing New Zealand. Springer, 2022, pp. 91–103.

- Mehla, N.; Ishita.; Talukdar, R.; Sharma, D.K. Object Detection in Autonomous Maritime Vehicles: Comparison Between YOLOV8 and Efficient Det. International Conference on Data Science and Network Engineering. Springer, 2023, pp. 125–141.

- Patel, P.; Vekariya, V.; Shah, J.; Vala, B. Detection of traffic sign based on YOLOv8. AIP Conference Proceedings. AIP Publishing, 2024, Vol. 3107.

- Soylu, E.; Soylu, T. A performance comparison of YOLOv8 models for traffic sign detection in the Robotaxi-full scale autonomous vehicle competition. Multimedia Tools and Applications 2024, 83, 25005–25035. [Google Scholar] [CrossRef]

- Wang, H.; Liu, C.; Cai, Y.; Chen, L.; Li, Y. YOLOv8-QSD: An Improved Small Object Detection Algorithm for Autonomous Vehicles Based on YOLOv8. IEEE Transactions on Instrumentation and Measurement 2024. [Google Scholar] [CrossRef]

- Kumar, D.; Muhammad, N. Object detection in adverse weather for autonomous driving through data merging and YOLOv8. Sensors 2023, 23, 8471. [Google Scholar] [CrossRef] [PubMed]

- Oh, G.; Lim, S. One-Stage Brake Light Status Detection Based on YOLOv8. Sensors 2023, 23, 7436. [Google Scholar] [CrossRef]

- Afdhal, A.; Saddami, K.; Sugiarto, S.; Fuadi, Z.; Nasaruddin, N. Real-Time Object Detection Performance of YOLOv8 Models for Self-Driving Cars in a Mixed Traffic Environment. 2023 2nd International Conference on Computer System, Information Technology, and Electrical Engineering (COSITE). IEEE, 2023, pp. 260–265.

- Bakirci, M.; Bayraktar, I. Transforming Aircraft Detection Through LEO Satellite Imagery and YOLOv9 for Improved Aviation Safety. 2024 26th International Conference on Digital Signal Processing and its Applications (DSPA). IEEE, 2024, pp. 1–6.

- Wibowo, A.; Trilaksono, B.R.; Hidayat, E.M.I.; Munir, R. Object Detection in Dense and Mixed Traffic for Autonomous Vehicles With Modified Yolo. IEEE Access 2023, 11, 134866–134877. [Google Scholar] [CrossRef]

- Kaur, R.; Singh, J. Local Regression Based Real-Time Traffic Sign Detection using YOLOv6. 2022 4th International Conference on Advances in Computing, Communication Control and Networking (ICAC3N). IEEE, 2022, pp. 522–526.

- Mahaur, B.; Mishra, K. Small-object detection based on YOLOv5 in autonomous driving systems. Pattern Recognition Letters 2023, 168, 115–122. [Google Scholar] [CrossRef]

- Dewi, C.; Chen, R.C.; Jiang, X.; Yu, H. Deep convolutional neural network for enhancing traffic sign recognition developed on Yolo V4. Multimedia Tools and Applications 2022, 81, 37821–37845. [Google Scholar] [CrossRef]

- Zaghari, N.; Fathy, M.; Jameii, S.M.; Shahverdy, M. The improvement in obstacle detection in autonomous vehicles using YOLO non-maximum suppression fuzzy algorithm. The Journal of Supercomputing 2021, 77, 13421–13446. [Google Scholar] [CrossRef]

- Hung, W.C.W.; Zakaria, M.A.; Ishak, M.; Heerwan, P. Object Tracking for Autonomous Vehicle Using YOLO V3. In Enabling Industry 4.0 through Advances in Mechatronics: Selected Articles from iM3F 2021, Malaysia; Springer, 2022; pp. 265–273.

- Pandey, S.; Chen, K.F.; Dam, E.B. Comprehensive multimodal segmentation in medical imaging: Combining yolov8 with sam and hq-sam models. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 2592–2598.

- Ju, R.Y.; Cai, W. Fracture detection in pediatric wrist trauma X-ray images using YOLOv8 algorithm. Scientific Reports 2023, 13, 20077. [Google Scholar] [CrossRef]

- Inui, A.; Mifune, Y.; Nishimoto, H.; Mukohara, S.; Fukuda, S.; Kato, T.; Furukawa, T.; Tanaka, S.; Kusunose, M.; Takigami, S.; others. Detection of elbow OCD in the ultrasound image by artificial intelligence using YOLOv8. Applied Sciences 2023, 13, 7623. [Google Scholar] [CrossRef]

- Luo, Y.; Zhang, Y.; Sun, X.; Dai, H.; Chen, X.; others. Intelligent solutions in chest abnormality detection based on YOLOv5 and ResNet50. Journal of healthcare engineering 2021, 2021. [Google Scholar] [CrossRef] [PubMed]

- Wu, B.; Pang, C.; Zeng, X.; Hu, X. Me-yolo: Improved yolov5 for detecting medical personal protective equipment. Applied Sciences 2022, 12, 11978. [Google Scholar] [CrossRef]

- Zhao, X.; Wang, Q.; Zhang, M.; Wei, Z.; Ku, R.; Zhang, Z.; Yu, Y.; Zhang, B.; Liu, Y.; Wang, C. CSFF-YOLOv5: Improved YOLOv5 based on channel split and feature fusion in femoral neck fracture detection. Internet of Things 2024, 26, 101190. [Google Scholar] [CrossRef]

- Goel, L.; Patel, P. Improving YOLOv6 using advanced PSO optimizer for weight selection in lung cancer detection and classification. Multimedia Tools and Applications 2024, pp.1–34.

- Norkobil Saydirasulovich, S.; Abdusalomov, A.; Jamil, M.K.; Nasimov, R.; Kozhamzharova, D.; Cho, Y.I. A YOLOv6-based improved fire detection approach for smart city environments. Sensors 2023, 23, 3161. [Google Scholar] [CrossRef] [PubMed]

- Zou, J.; Arshad, M.R. Detection of whole body bone fractures based on improved YOLOv7. Biomedical Signal Processing and Control 2024, 91, 105995. [Google Scholar] [CrossRef]

- Salinas-Medina, A.; Neme, A. Enhancing Hospital Efficiency Through Web-Deployed Object Detection: A YOLOv8-Based Approach for Automating Healthcare Operations. 2023 Mexican International Conference on Computer Science (ENC). IEEE, 2023, pp. 1–6.

- Razaghi, M.; Komleh, H.E.; Dehghani, F.; Shahidi, Z. Innovative Diagnosis of Dental Diseases Using YOLO V8 Deep Learning Model. 2024 13th Iranian/3rd International Machine Vision and Image Processing Conference (MVIP). IEEE, 2024, pp. 1–5.

- Pham, T.L.; Le, V.H. Ovarian Tumors Detection and Classification from Ultrasound Images Based on YOLOv8. Journal of Advances in Information Technology 2024, 15. [Google Scholar] [CrossRef]

- Krishnamurthy, V.; Balasubramanian, S.; Kanmani, R.S.; Srividhya, S.; Deepika, J.; Nimeshika, G.N. Endoscopic Surgical Operation and Object Detection Using Custom Architecture Models. International Conference on Human-Centric Smart Computing. Springer, 2023, pp. 637–654.

- Palanivel, N.; Deivanai, S.; Sindhuja, B. ; others. The Art of YOLOv8 Algorithm in Cancer Diagnosis using Medical Imaging. 2023 International Conference on System, Computation, Automation and Networking (ICSCAN). IEEE, 2023, pp. 1–6.

- Karaköse, M.; Yetış, H.; Çeçen, M. A New Approach for Effective Medical Deepfake Detection in Medical Images. IEEE Access 2024. [Google Scholar] [CrossRef]

- Bhojane, R.; Chourasia, S.; Laddha, S.V.; Ochawar, R.S. Liver Lesion Detection from MR T1 In-Phase and Out-Phase Fused Images and CT Images Using YOLOv8. International Conference on Data Science and Applications. Springer, 2023, pp. 121–135.

- Majeed, F.; Khan, F.Z.; Nazir, M.; Iqbal, Z.; Alhaisoni, M.; Tariq, U.; Khan, M.A.; Kadry, S. Investigating the efficiency of deep learning based security system in a real-time environment using YOLOv5. Sustainable Energy Technologies and Assessments 2022, 53, 102603. [Google Scholar] [CrossRef]

- AFFES, N.; KTARI, J.; BEN AMOR, N.; FRIKHA, T.; HAMAM, H. Comparison of YOLOV5, YOLOV6, YOLOV7 and YOLOV8 for Intelligent Video Surveillance. Journal of Information Assurance & Security 2023, 18. [Google Scholar]

- Cao, F.; Ma, S. Enhanced Campus Security Target Detection Using a Refined YOLOv7 Approach. Traitement du Signal 2023, 40. [Google Scholar] [CrossRef]

- Chatterjee, N.; Singh, A.V.; Agarwal, R. You Only Look Once (YOLOv8) Based Intrusion Detection System for Physical Security and Surveillance. 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions)(ICRITO). IEEE, 2024, pp. 1–5.

- Sandhya; Kashyap, A. Real-time object-removal tampering localization in surveillance videos by employing YOLO-V8. Journal of Forensic Sciences 2024.

- Bakirci, M.; Bayraktar, I. Boosting aircraft monitoring and security through ground surveillance optimization with YOLOv9. 2024 12th International Symposium on Digital Forensics and Security (ISDFS). IEEE, 2024, pp. 1–6.

- Bakirci, M.; Bayraktar, I. YOLOv9-Enabled Vehicle Detection for Urban Security and Forensics Applications. 2024 12th International Symposium on Digital Forensics and Security (ISDFS). IEEE, 2024, pp. 1–6.

- Chakraborty, S.; Zahir, S.; Orchi, N.T.; Hafiz, M.F.B.; Shamsuddoha, A.; Dipto, S.M. Violence Detection: A Multi-Model Approach Towards Automated Video Surveillance and Public Safety. 2024 International Conference on Advances in Computing, Communication, Electrical, and Smart Systems (iCACCESS). IEEE, 2024, pp. 1–6.

- Julia, R.; Prince, S.; Bini, D. Medical image analysis of masses in mammography using deep learning model for early diagnosis of cancer tissues. In Computational Intelligence and Modelling Techniques for Disease Detection in Mammogram Images; Elsevier, 2024; pp. 75–89.

- Salahin, S.S.; Ullaa, M.S.; Ahmed, S.; Mohammed, N.; Farook, T.H.; Dudley, J. One-stage methods of computer vision object detection to classify carious lesions from smartphone imaging. Oral 2023, 3, 176–190.

- Doniyorjon, M.; Madinakhon, R.; Shakhnoza, M.; Cho, Y.I. An improved method of polyp detection using custom YOLOv4-tiny. Applied Sciences 2022, 12, 10856.

- Ding, B.; Zhang, Z.; Liang, Y.; Wang, W.; Hao, S.; Meng, Z.; Guan, L.; Hu, Y.; Guo, B.; Zhao, R.; et al. Detection of dental caries in oral photographs taken by mobile phones based on the YOLOv3 algorithm. Annals of Translational Medicine 2021, 9.

- Wang, L.; Yang, S.; Yang, S.; Zhao, C.; Tian, G.; Gao, Y.; Chen, Y.; Lu, Y. Automatic thyroid nodule recognition and diagnosis in ultrasound imaging with the YOLOv2 neural network. World Journal of Surgical Oncology 2019, 17, 1–9.

- Kwaśniewska, A.; Rumiński, J.; Czuszyński, K.; Szankin, M. Real-time facial features detection from low resolution thermal images with deep classification models. Journal of Medical Imaging and Health Informatics 2018, 8, 979–987.

- Chen, G.; Du, W.; Xu, T.; Wang, S.; Qi, X.; Wang, Y. Investigating Enhanced YOLOv8 Model Applications for Large-Scale Security Risk Management and Drone-Based Low-Altitude Law Enforcement. Highlights in Science, Engineering and Technology 2024, 98, 390–396.

- Pashayev, F.; Babayeva, L.; Isgandarova, Z.; Kalejahi, B.K. Face Recognition in Smart Cameras by Yolo8. Khazar Journal of Science and Technology (KJSAT), 2023; 67.

- Kaç, S.B.; Eken, S.; Balta, D.D.; Balta, M.; İskefiyeli, M.; Özçelik, İ. Image-based security techniques for water critical infrastructure surveillance. Applied Soft Computing, 2024; 111730.

- Gao, Q.; Deng, H.; Zhang, G. A Contraband Detection Scheme in X-ray Security Images Based on Improved YOLOv8s Network Model. Sensors 2024, 24, 1158.

- Antony, J.C.; Chowdary, C.L.S.; Murali, E.; Mayan, A.; et al. Advancing Crowd Management through Innovative Surveillance using YOLOv8 and ByteTrack. 2024 International Conference on Wireless Communications Signal Processing and Networking (WiSPNET). IEEE, 2024, pp. 1–6.

- Zhang, D. A Yolo-based Approach for Fire and Smoke Detection in IoT Surveillance Systems. International Journal of Advanced Computer Science & Applications 2024, 15.

- Khin, P.P.; Htaik, N.M. Gun Detection: A Comparative Study of RetinaNet, EfficientDet and YOLOv8 on Custom Dataset. 2024 IEEE Conference on Computer Applications (ICCA). IEEE, 2024, pp. 1–7.

- Nkuzo, L.; Sibiya, M.; Markus, E.D. A Comprehensive Analysis of Real-Time Car Safety Belt Detection Using the YOLOv7 Algorithm. Algorithms 2023, 16, 400.

- Chang, R.; Zhang, B.; Zhu, Q.; Zhao, S.; Yan, K.; Yang, Y.; et al. FFA-YOLOv7: Improved YOLOv7 Based on Feature Fusion and Attention Mechanism for Wearing Violation Detection in Substation Construction Safety. Journal of Electrical and Computer Engineering 2023, 2023.

- Ahmad, H.M.; Rahimi, A. Deep learning methods for object detection in smart manufacturing: A survey. Journal of Manufacturing Systems 2022, 64, 181–196.

- Pendse, R.; Rajput, H.; Saraf, S.; Sarwate, A.; Jadhav, J.; et al. Defect Detection in Manufacturing using YOLOv7. IJRAR-International Journal of Research and Analytical Reviews (IJRAR) 2023, 10, 179–185.

- Yi, F.; Zhang, H.; Yang, J.; He, L.; Mohamed, A.S.A.; Gao, S. YOLOv7-SiamFF: Industrial defect detection algorithm based on improved YOLOv7. Computers and Electrical Engineering 2024, 114, 109090.

- Wang, H.; Xu, X.; Liu, Y.; Lu, D.; Liang, B.; Tang, Y. Real-time defect detection for metal components: a fusion of enhanced Canny–Devernay and YOLOv6 algorithms. Applied Sciences 2023, 13, 6898.

- Ludwika, A.S.; Rifai, A.P. Deep learning for detection of proper utilization and adequacy of personal protective equipment in manufacturing teaching laboratories. Safety 2024, 10, 26.

- Beak, S.; Han, Y.H.; Moon, Y.; Lee, J.; Jeong, J. YOLOv7-Based Anomaly Detection Using Intensity and NG Types in Labeling in Cosmetic Manufacturing Processes. Processes 2023, 11, 2266.

- Zhao, H.; Wang, X.; Sun, J.; Wang, Y.; Chen, Z.; Wang, J.; Xu, X. Artificial intelligence powered real-time quality monitoring for additive manufacturing in construction. Construction and Building Materials 2024, 429, 135894.

- Liu, Z.; Ye, K. YOLO-IMF: an improved YOLOv8 algorithm for surface defect detection in industrial manufacturing field. International Conference on Metaverse. Springer, 2023, pp. 15–28.

- Wen, Y.; Wang, L. Yolo-sd: simulated feature fusion for few-shot industrial defect detection based on YOLOv8 and stable diffusion. International Journal of Machine Learning and Cybernetics, 2024; 1–13.

- Karna, N.; Putra, M.A.P.; Rachmawati, S.M.; Abisado, M.; Sampedro, G.A. Towards Accurate Fused Deposition Modeling 3D Printer Fault Detection using Improved YOLOv8 with Hyperparameter Optimization. IEEE Access 2023.

- Li, W.; Solihin, M.I.; Nugroho, H.A. RCA: YOLOv8-Based Surface Defects Detection on the Inner Wall of Cylindrical High-Precision Parts. Arabian Journal for Science and Engineering, 2024; 1–19.

- Hu, Y.; Wang, J.; Wang, X.; Sun, Y.; Yu, H.; Zhang, J. Real-time evaluation of the blending uniformity of industrially produced gravelly soil based on Cond-YOLOv8-seg. Journal of Industrial Information Integration 2024, 39, 100603.

- Han, L.; Ma, C.; Liu, Y.; Jia, J.; Sun, J. SC-YOLOv8: A Security Check Model for the Inspection of Prohibited Items in X-ray Images. Electronics 2023, 12, 4208.

- Yuan, J.; Zhang, N.; Xie, Y.; Gao, X. Detection of Prohibited Items Based upon X-ray Images and Improved YOLOv7. Journal of Physics: Conference Series; 3rd International Conference on Advanced Materials and Intelligent Manufacturing (ICAMIM 2022), 2022; Vol. 2390, p. 012114.

- Awang, S.; Rokei, M.Q.R.; Sulaiman, J. Suspicious Activity Trigger System using YOLOv6 Convolutional Neural Network. 2023 International Conference on Artificial Intelligence in Information and Communication (ICAIIC). IEEE, 2023, pp. 527–532.

- Xiao, Y.; Chang, A.; Wang, Y.; Huang, Y.; Yu, J.; Huo, L. Real-time object detection for substation security early-warning with deep neural network based on YOLO-V5. 2022 IEEE IAS Global Conference on Emerging Technologies (GlobConET). IEEE, 2022, pp. 45–50.

- Wang, G.; Ding, H.; Duan, M.; Pu, Y.; Yang, Z.; Li, H. Fighting against terrorism: A real-time CCTV autonomous weapons detection based on improved YOLO v4. Digital Signal Processing 2023, 132, 103790.

- Kashika, P.; Venkatapur, R.B. Automatic tracking of objects using improvised Yolov3 algorithm and alarm human activities in case of anomalies. International Journal of Information Technology 2022, 14, 2885–2891.

- Mohandoss, T.; Rangaraj, J. Multi-Object Detection using Enhanced YOLOv2 and LuNet Algorithms in Surveillance Videos. e-Prime-Advances in Electrical Engineering, Electronics and Energy 2024, 8, 100535.

- Smiley, C.J. From silence to propagation: Understanding the relationship between “Stop Snitchin” and “YOLO”. Deviant Behavior 2015, 36, 1–16.

- Yang, S.; Zhang, Z.; Wang, B.; Wu, J. DCS-YOLOv8: An Improved Steel Surface Defect Detection Algorithm Based on YOLOv8. Proceedings of the 2024 7th International Conference on Image and Graphics Processing, 2024, pp. 39–46.

- Wang, X.; Gao, H.; Jia, Z.; Li, Z. BL-YOLOv8: An improved road defect detection model based on YOLOv8. Sensors 2023, 23, 8361.

- Luo, B.; Kou, Z.; Han, C.; Wu, J. A “Hardware-Friendly” Foreign Object Identification Method for Belt Conveyors Based on Improved YOLOv8. Applied Sciences 2023, 13, 11464.

- Wang, C.; Sun, Q.; Dong, X.; Chen, J. Automotive adhesive defect detection based on improved YOLOv8. Signal, Image and Video Processing, 2024; 1–13.

- Wu, Q.; Kuang, X.; Tang, X.; Guo, D.; Luo, Z. Industrial equipment object detection based on improved YOLOv7. International Conference on Computer, Artificial Intelligence, and Control Engineering (CAICE 2023). SPIE, 2023, Vol. 12645, pp. 600–608.

- Kim, O.; Han, Y.; Jeong, J. Real-time Inspection System Based on Moire Pattern and YOLOv7 for Coated High-reflective Injection Molding Product. WSEAS Transactions on Computer Research 2022, 10, 120–125.

- Chen, J.; Bai, S.; Wan, G.; Li, Y. Research on YOLOv7-based defect detection method for automotive running lights. Systems Science & Control Engineering 2023, 11, 2185916.

- Hussain, M.; Al-Aqrabi, H.; Munawar, M.; Hill, R.; Alsboui, T. Domain feature mapping with YOLOv7 for automated edge-based pallet racking inspections. Sensors 2022, 22, 6927.

- Zhu, B.; Xiao, G.; Zhang, Y.; Gao, H. Multi-classification recognition and quantitative characterization of surface defects in belt grinding based on YOLOv7. Measurement 2023, 216, 112937.

- Wang, Y.; Yang, L.; Chen, H.; Hussain, A.; Ma, C.; Al-gabri, M. Mushroom-YOLO: A deep learning algorithm for mushroom growth recognition based on improved YOLOv5 in agriculture 4.0. 2022 IEEE 20th International Conference on Industrial Informatics (INDIN). IEEE, 2022, pp. 239–244.

- Badgujar, C.M.; Armstrong, P.R.; Gerken, A.R.; Pordesimo, L.O.; Campbell, J.F. Real-time stored product insect detection and identification using deep learning: System integration and extensibility to mobile platforms. Journal of Stored Products Research 2023, 104, 102196.

- Bhat, S.; Shenoy, K.A.; Jain, M.R.; Manasvi, K. Detecting Crops and Weeds in Fields Using YOLOv6 and Faster R-CNN Object Detection Models. 2023 International Conference on Recent Advances in Information Technology for Sustainable Development (ICRAIS). IEEE, 2023, pp. 43–48.

- Bist, R.B.; Subedi, S.; Yang, X.; Chai, L. A novel YOLOv6 object detector for monitoring piling behavior of cage-free laying hens. AgriEngineering 2023, 5, 905–923.

- Jiang, K.; Xie, T.; Yan, R.; Wen, X.; Li, D.; Jiang, H.; Jiang, N.; Feng, L.; Duan, X.; Wang, J. An attention mechanism-improved YOLOv7 object detection algorithm for hemp duck count estimation. Agriculture 2022, 12, 1659.

- Kumar, P.; Kumar, N. Drone-based apple detection: Finding the depth of apples using YOLOv7 architecture with multi-head attention mechanism. Smart Agricultural Technology 2023, 5, 100311.

- Chen, G.; Hou, Y.; Cui, T.; Li, H.; Shangguan, F.; Cao, L. YOLOv8-CML: A lightweight target detection method for Color-changing melon ripening in intelligent agriculture. ResearchSquare 2023.

- Sapkota, R.; Ahmed, D.; Karkee, M. Synthetic Meets Authentic: Leveraging Text-to-Image Generated Datasets for Apple Detection in Orchard Environments. Available at SSRN 4770722 2024.

- Ahmed, D.; Sapkota, R.; Churuvija, M.; Karkee, M. Machine vision-based crop-load estimation using yolov8. arXiv 2023, arXiv:2304.13282.

- Sapkota, R.; Ahmed, D.; Karkee, M. Comparing YOLOv8 and Mask RCNN for object segmentation in complex orchard environments. arXiv 2023, arXiv:2312.07935.

- Zhang, L.; Ding, G.; Li, C.; Li, D. DCF-Yolov8: An Improved Algorithm for Aggregating Low-Level Features to Detect Agricultural Pests and Diseases. Agronomy 2023, 13, 2012.

- Badgujar, C.M.; Poulose, A.; Gan, H. Agricultural object detection with You Only Look Once (YOLO) Algorithm: A bibliometric and systematic literature review. Computers and Electronics in Agriculture 2024, 223, 109090.

- Junior, L.C.M.; Ulson, J.A.C. Real time weed detection using computer vision and deep learning. 2021 14th IEEE International Conference on Industry Applications (INDUSCON). IEEE, 2021, pp. 1131–1137.

- Yu, X.; Yin, D.; Xu, H.; Pinto Espinosa, F.; Schmidhalter, U.; Nie, C.; Bai, Y.; Sankaran, S.; Ming, B.; Cui, N.; et al. Maize tassel number and tasseling stage monitoring based on near-ground and UAV RGB images by improved YoloV8. Precision Agriculture, 2024; 1–39.

- Khalid, M.; Sarfraz, M.S.; Iqbal, U.; Aftab, M.U.; Niedbała, G.; Rauf, H.T. Real-time plant health detection using deep convolutional neural networks. Agriculture 2023, 13, 510.