Submitted: 11 January 2024

Posted: 11 January 2024

Abstract

Keywords:

1. Introduction

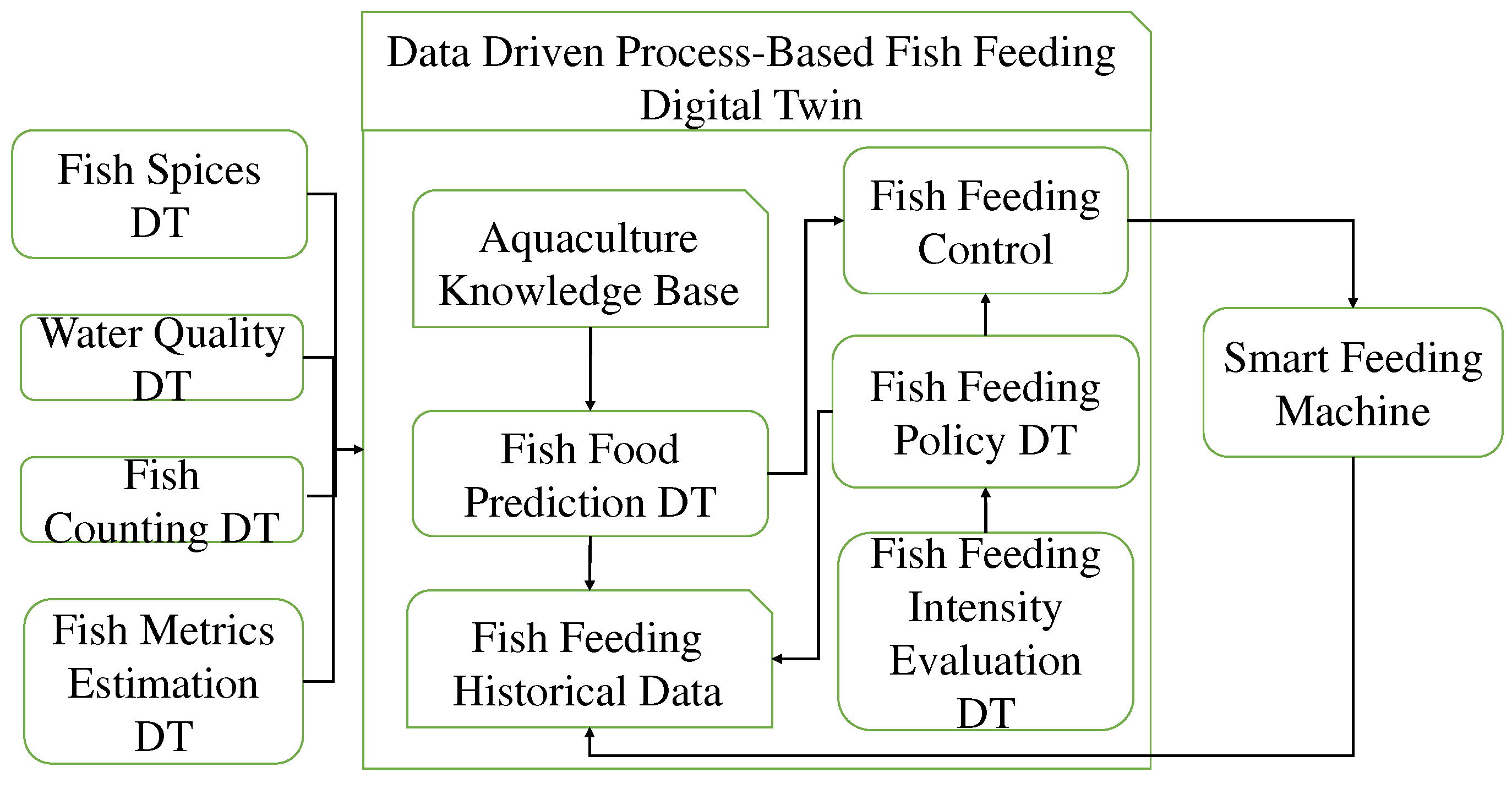

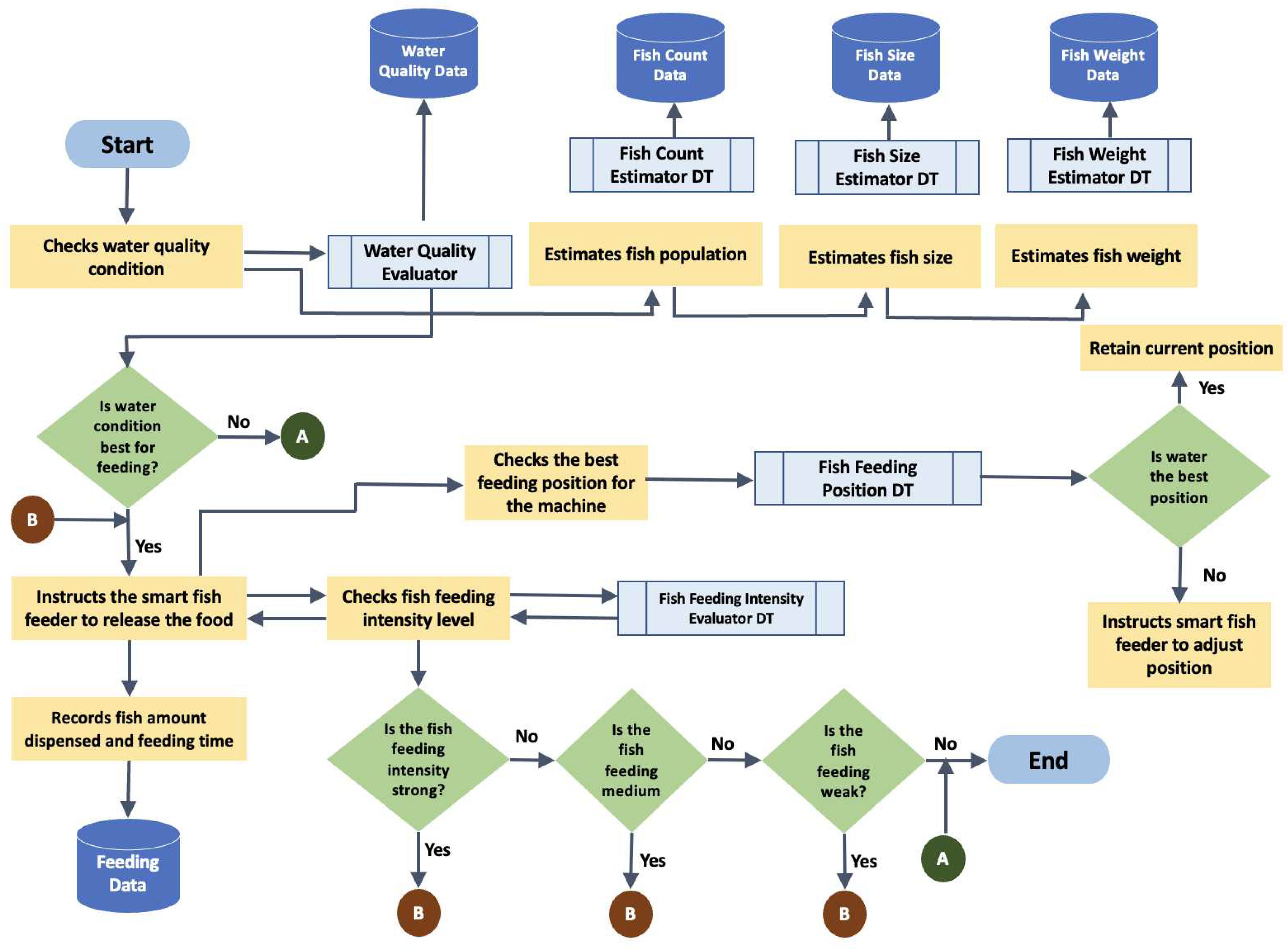

- Fish Species Input: This input specifies the type of fish to be bred and retrieves a set of genetic parameters from the aquaculture knowledge base. These parameters characterize the fish's growth model and are determined by genetics, independent of environmental factors. Initially defined by aquaculture experts, these parameters are subject to calibration and refinement through machine learning algorithms using historical fish feeding input and output data.

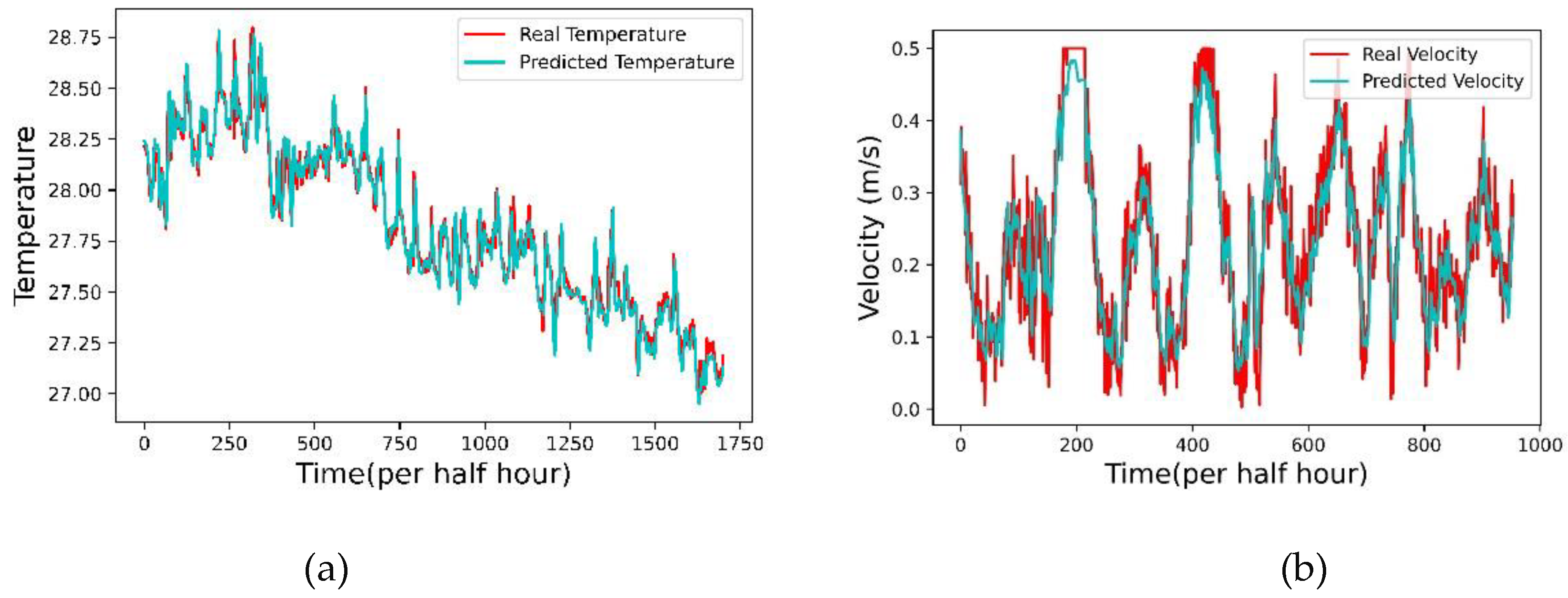

- Environment Input: The environment input comprises a set of continuously collected environmental data, including temperature, water velocity, dissolved oxygen, salinity, etc. These data are acquired using the deployed water quality DT object.

- Fish Metrics Input: This input consists of fish monitoring results, including current fish count, length, width, and weight, obtained through pre-trained deep learning prediction models analyzing data from RGB-D cameras or sonar imaging devices. The related DT objects encompass fish counting DT and fish metrics estimation DT.

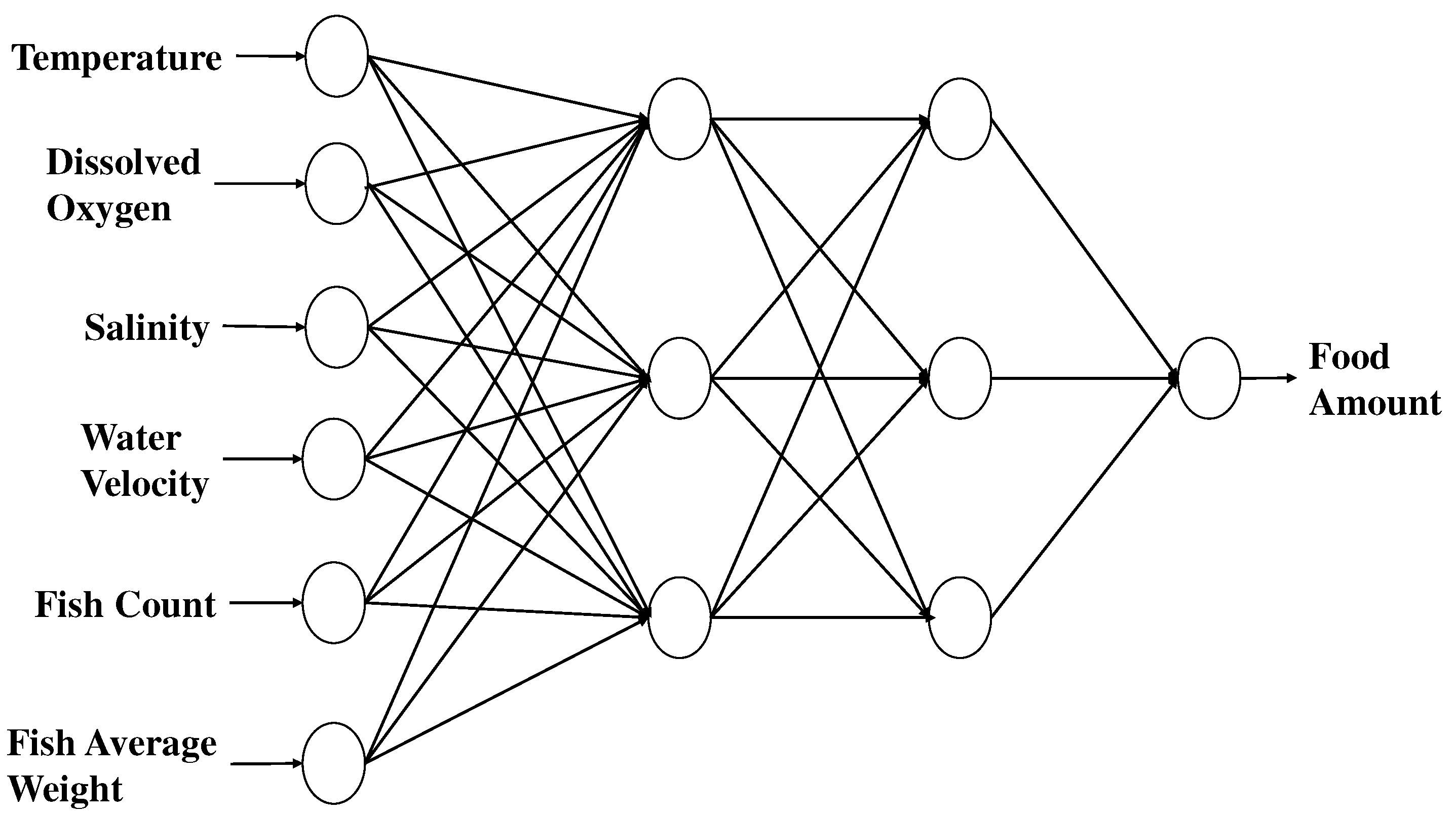

- Fish Food Prediction Model: A neural network predicts the quantity of food to be dispensed into the fish cages by remotely controllable smart feeding machines, in accordance with our fish feeding policy. This model is incorporated into the fish food prediction DT, which takes inputs from the water quality DT, fish counting DT, and fish metrics estimation DT to determine the daily fish feeding amount.

- Fish Feeding Intensity Evaluation DT: This deep learning model predicts the level of fish feeding intensity. The predicted level is the input to the fish feeding policy DT, which selects the actions that control the smart feeding machine so as to minimize residual food during daily feeding.
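The inputs listed above can be pictured as two small records that are flattened into one feature vector for the food prediction model. The following is a minimal sketch under stated assumptions: the class names, field names, units, and the `build_feature_vector` helper are hypothetical and do not come from the system described here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EnvironmentInput:
    """Readings from the water quality DT (field names and units are assumed)."""
    temperature_c: float
    water_velocity_m_s: float
    dissolved_oxygen_mg_l: float
    salinity_psu: float


@dataclass
class FishMetricsInput:
    """Outputs of the fish counting DT and the fish metrics estimation DT."""
    fish_count: int
    mean_length_cm: float
    mean_width_cm: float
    mean_weight_g: float


def build_feature_vector(env: EnvironmentInput, fish: FishMetricsInput) -> List[float]:
    """Flatten both inputs into the vector a food prediction model could consume."""
    return [
        env.temperature_c,
        env.water_velocity_m_s,
        env.dissolved_oxygen_mg_l,
        env.salinity_psu,
        float(fish.fish_count),
        fish.mean_length_cm,
        fish.mean_width_cm,
        fish.mean_weight_g,
    ]


# Hypothetical readings, for illustration only.
env = EnvironmentInput(26.5, 0.12, 6.8, 33.0)
fish = FishMetricsInput(1200, 24.0, 9.5, 210.0)
features = build_feature_vector(env, fish)
```

Keeping the genetic parameters out of this vector mirrors the description above, where they characterize the growth model rather than the daily observations.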

2. Materials and Methods

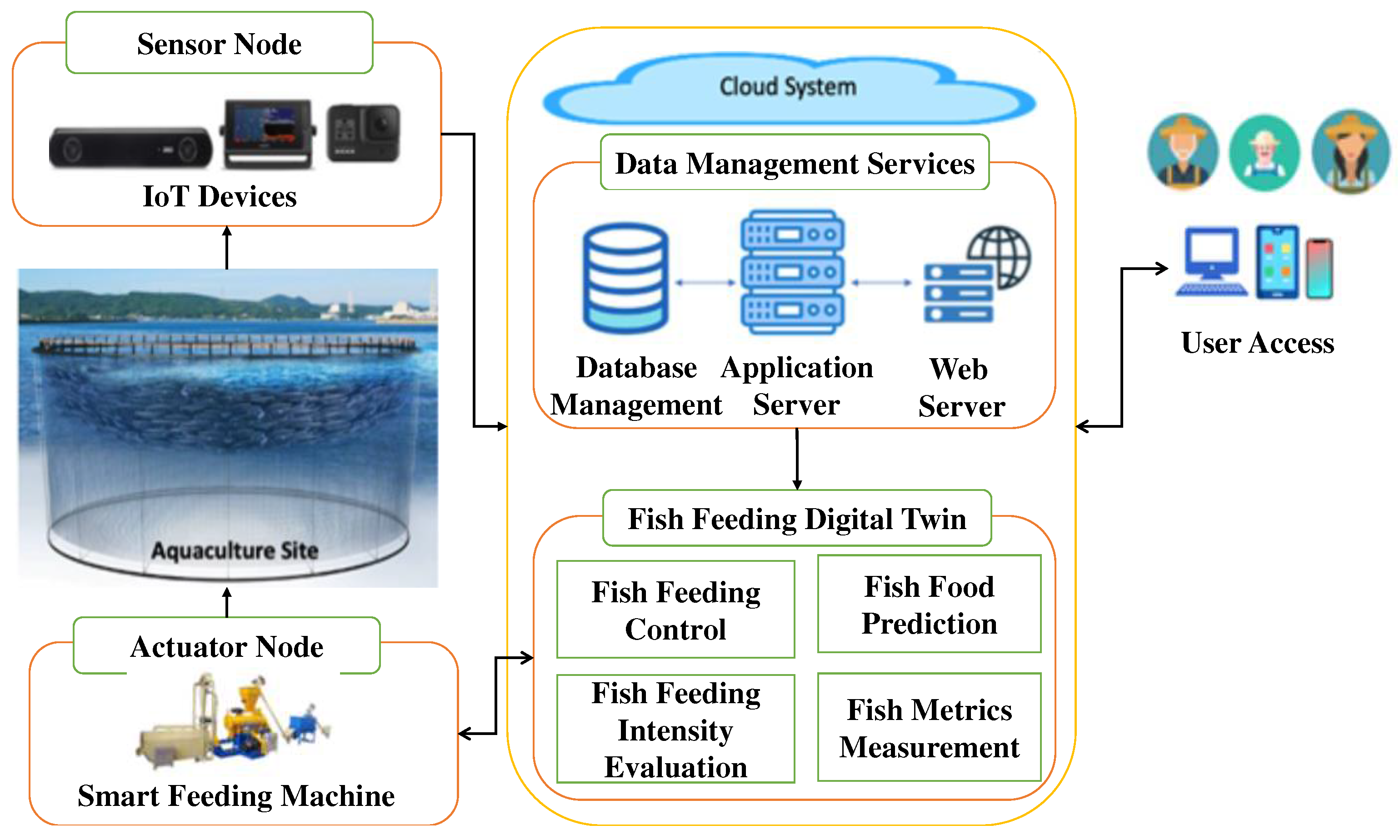

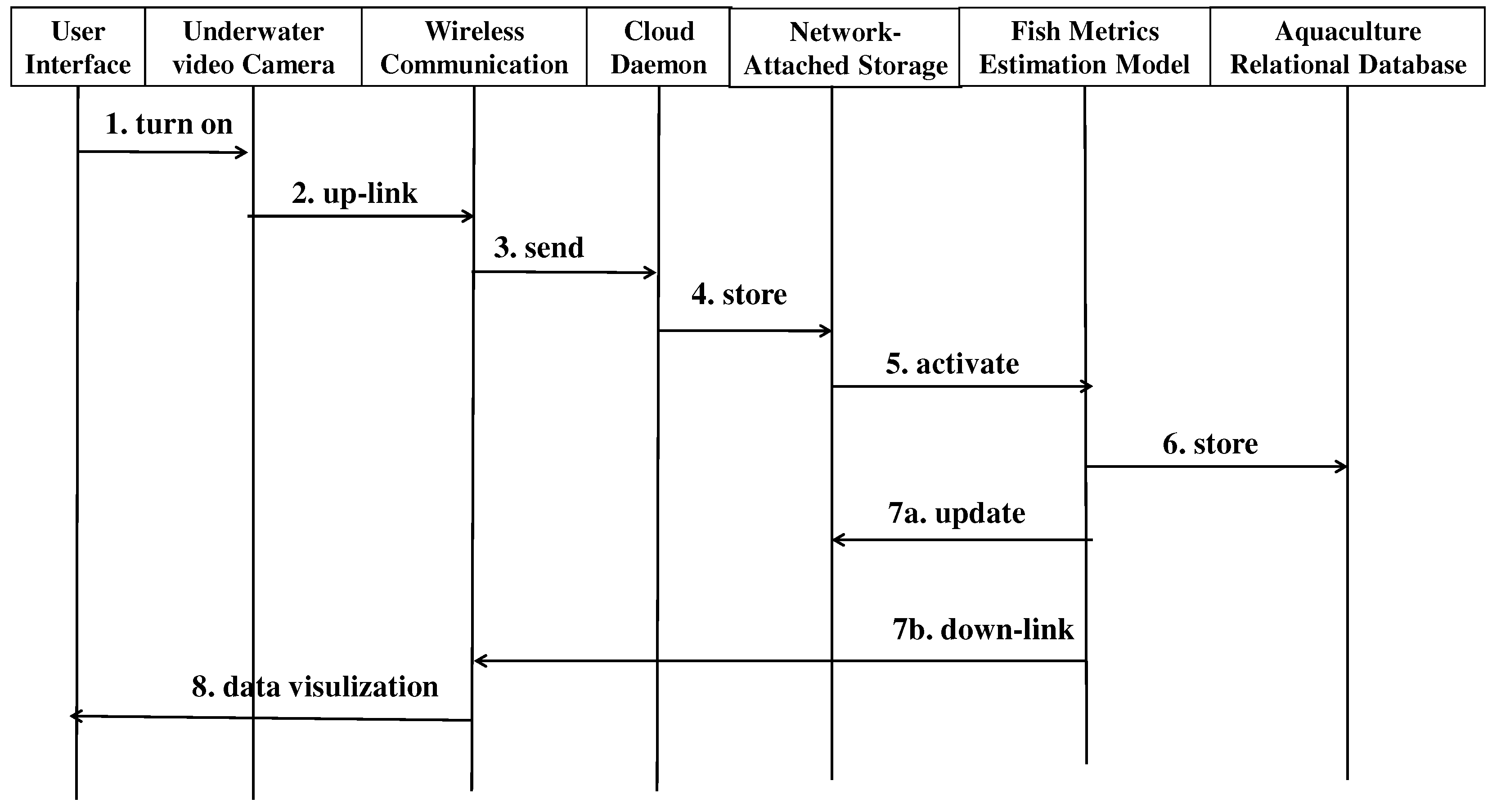

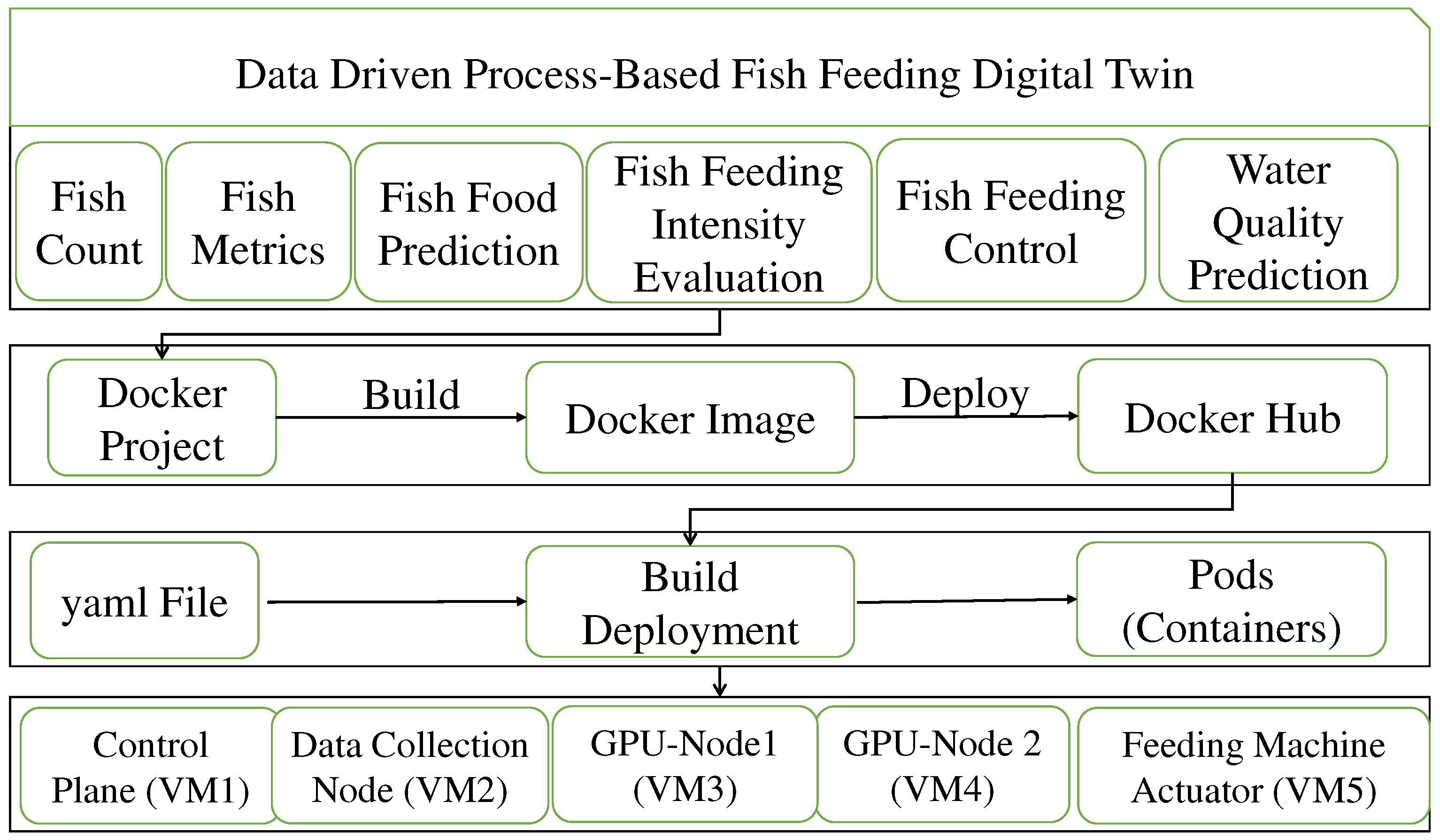

2.1. Cloud-Based Digital Twin Architecture

2.2. The Digital Twin for Fish Feeding Management

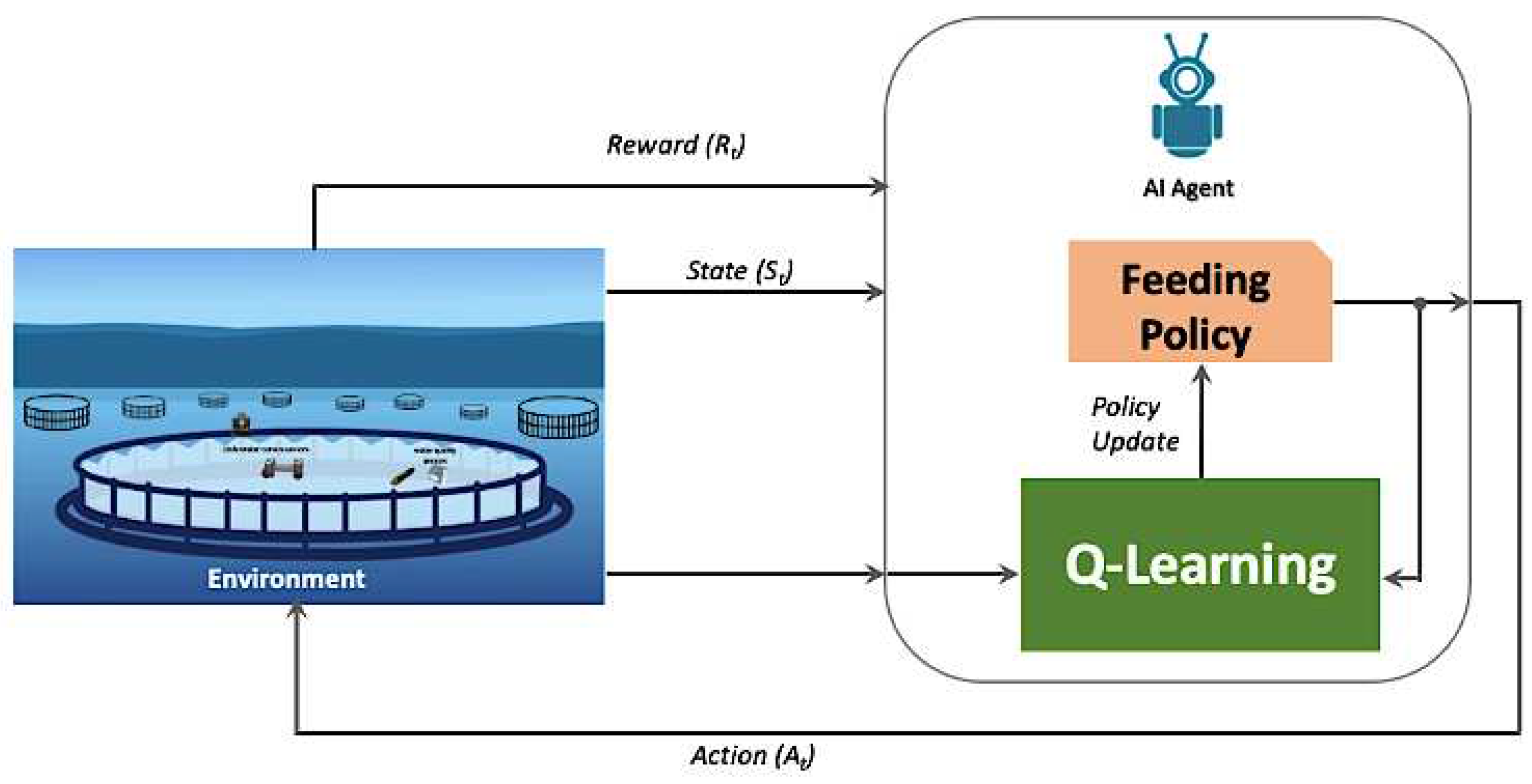

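| Algorithm 1: Q-Learning Policy Control Algorithm |
|---|
| Initialization: $Q(s, a)$ arbitrarily for all states $s$ and actions $a$, and $Q(\text{terminal state}, \cdot) = 0$ |
| Repeat (for each episode): |
| &nbsp;&nbsp;Initialize $s$ |
| &nbsp;&nbsp;Repeat (for each step of the episode): |
| &nbsp;&nbsp;&nbsp;&nbsp;Choose $a$ from $s$ using the policy derived from $Q$ (e.g., $\epsilon$-greedy) |
| &nbsp;&nbsp;&nbsp;&nbsp;Take action $a$, observe $r$, $s'$ |
| &nbsp;&nbsp;&nbsp;&nbsp;$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$ |
| &nbsp;&nbsp;&nbsp;&nbsp;$s \leftarrow s'$ |
| &nbsp;&nbsp;Until $s$ is terminal |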
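The control loop of Algorithm 1 can be sketched as tabular Q-learning in a few lines of Python. This is an illustrative sketch, not the feeding controller itself: the `step` callback, the toy corridor environment, the random tie-breaking, and all hyperparameter values are assumptions chosen only to make the example runnable.

```python
import random
from collections import defaultdict


def q_learning(step, actions, start_state, episodes=500,
               alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning.

    step(state, action) -> (reward, next_state, done) is a user-supplied
    environment function; the hyperparameter defaults are illustrative.
    """
    rng = random.Random(seed)
    q = defaultdict(float)  # Q(s, a), initialised to 0 for every pair

    for _ in range(episodes):
        state, done = start_state, False
        while not done:
            # Epsilon-greedy selection derived from Q, ties broken at random.
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                best = max(q[(state, a)] for a in actions)
                action = rng.choice([a for a in actions if q[(state, a)] == best])
            reward, next_state, done = step(state, action)
            # A terminal state contributes no future value.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in actions)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


def corridor_step(state, action):
    """Toy environment: a 5-cell corridor; reaching cell 4 ends the episode with reward 1."""
    next_state = min(4, max(0, state + action))
    done = next_state == 4
    return (1.0 if done else 0.0), next_state, done


q_table = q_learning(corridor_step, actions=[-1, 1], start_state=0)
greedy_policy = {s: max([-1, 1], key=lambda a: q_table[(s, a)]) for s in range(4)}
```

In the toy corridor the learned greedy policy moves right in every non-terminal cell. In a feeding setting, the state would instead encode the observed feeding intensity and the actions would be feeding machine commands.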

3. Results

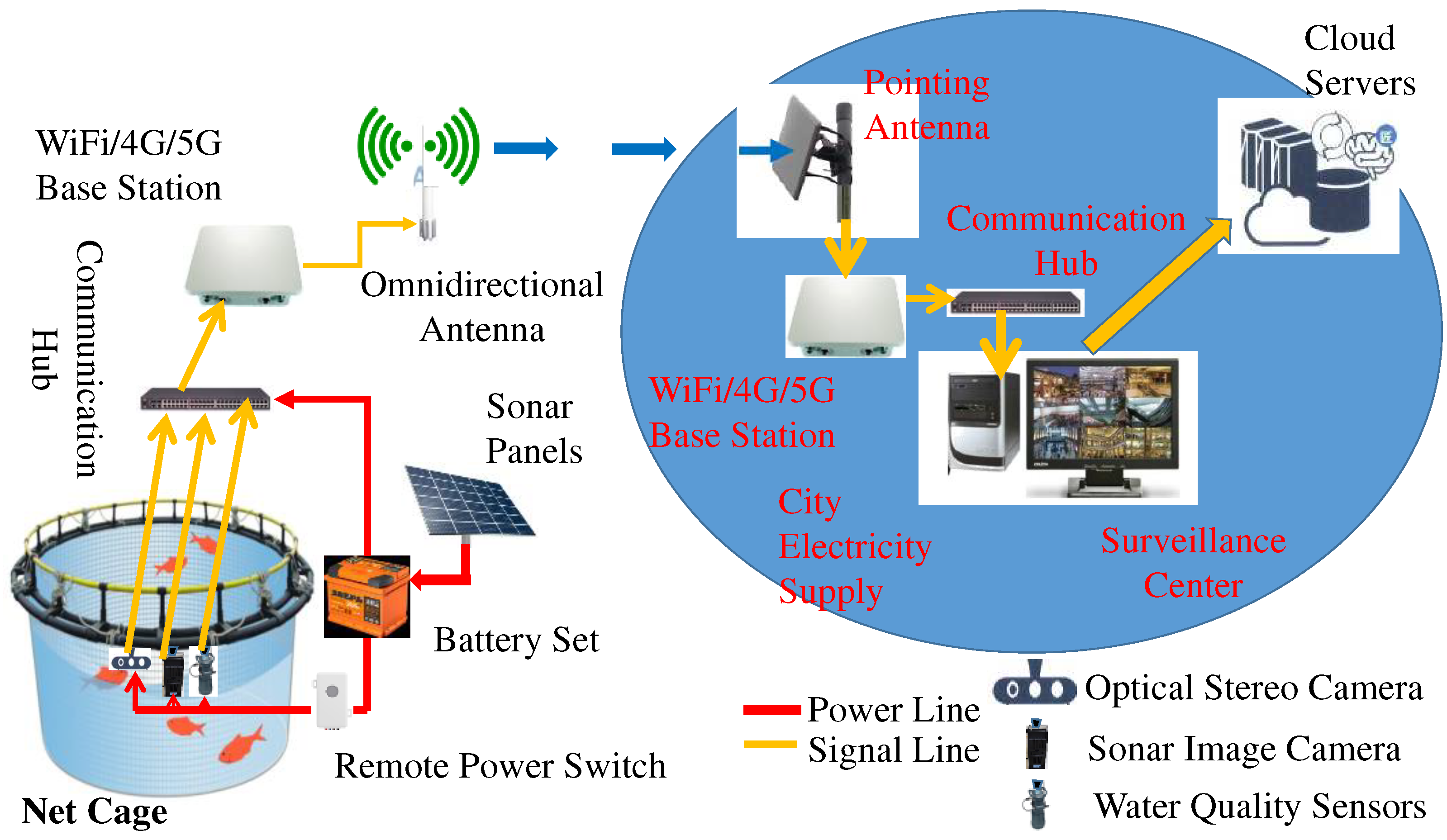

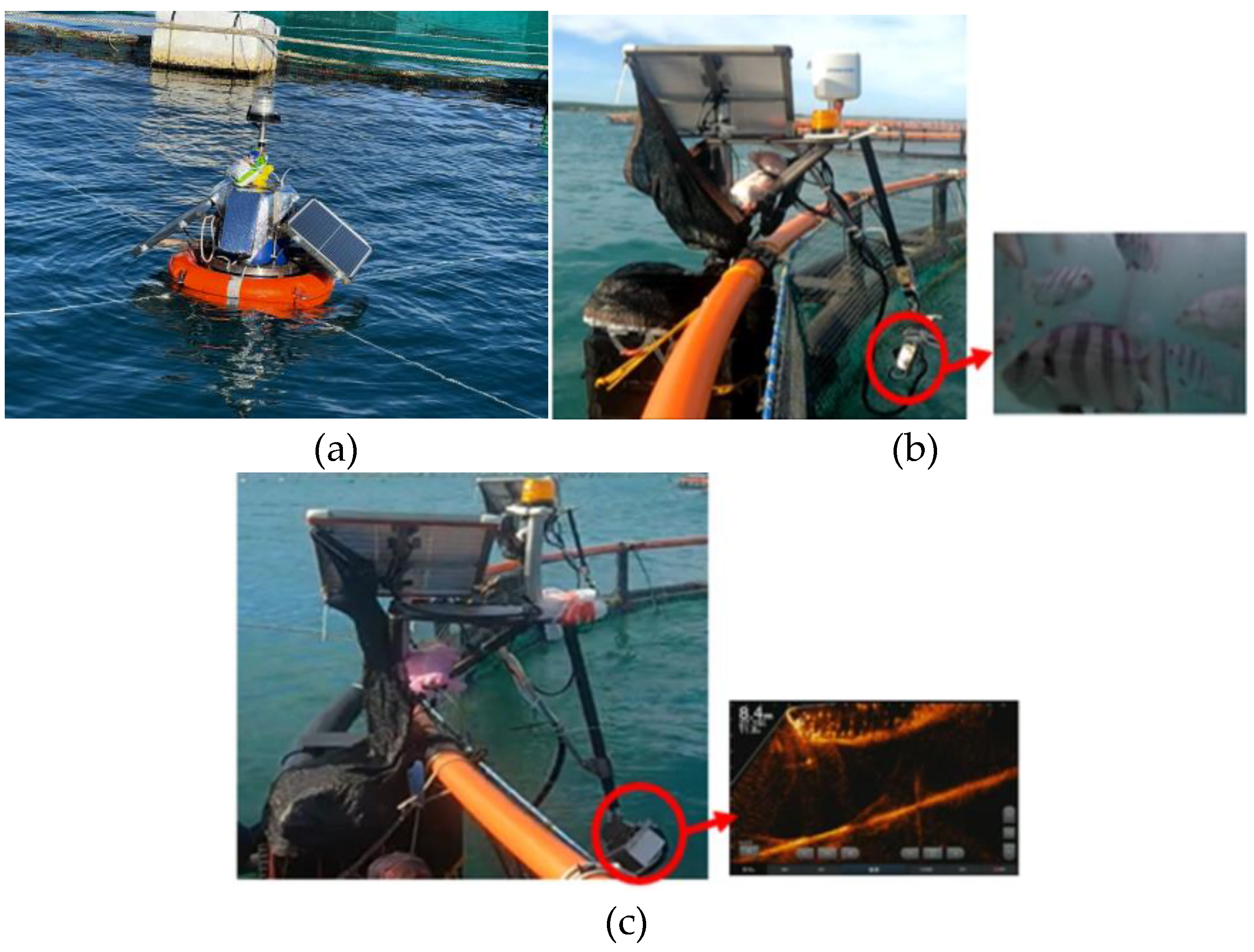

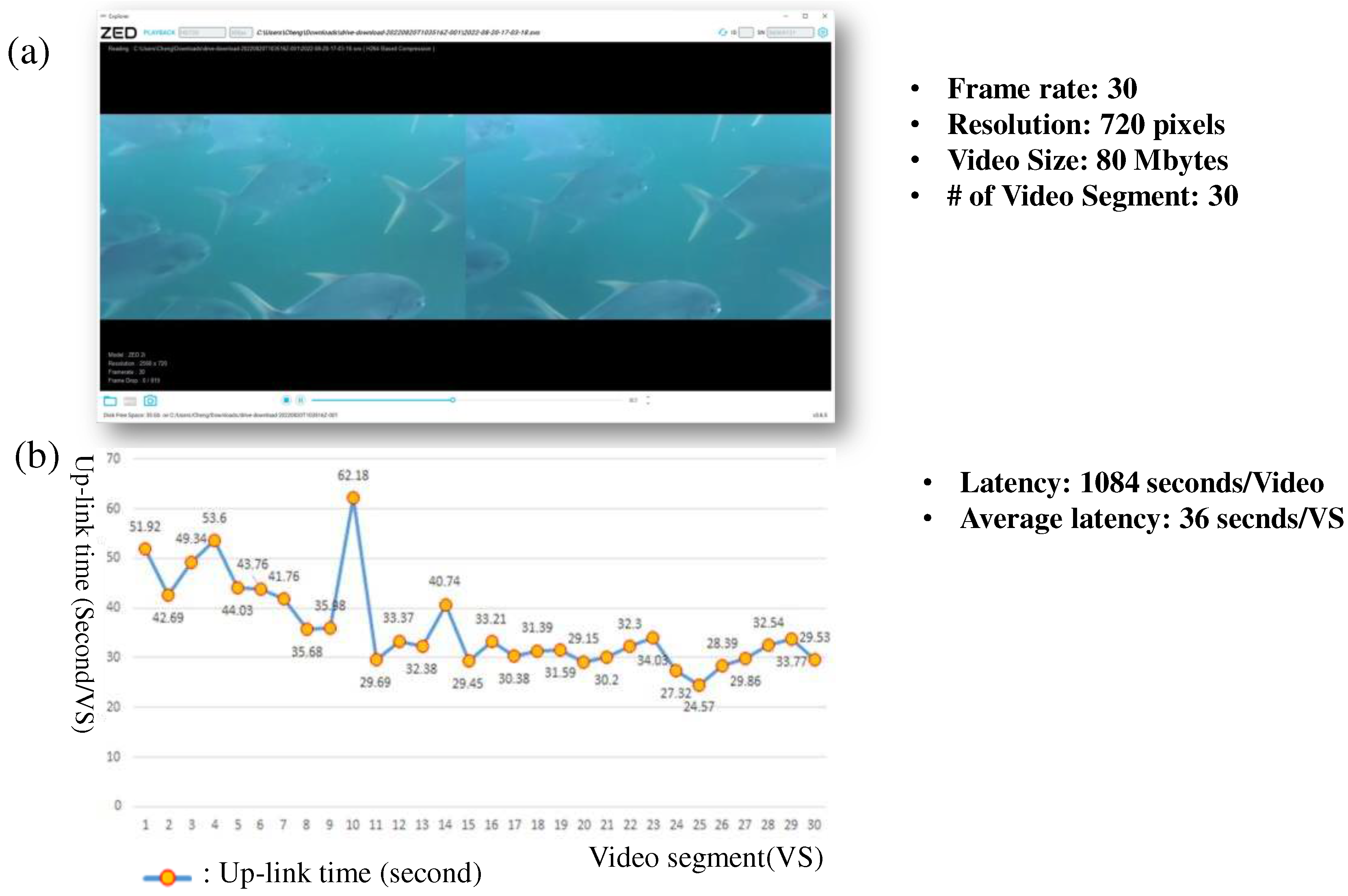

3.1. Experimental Devices and Set-up

| DT Object | Machine Learning and Deep Learning Models | Physical/DT Objects |
|---|---|---|
| Water Quality Prediction | LSTM [37] | Salinity meter, dissolved oxygen meter, temperature sensor, water velocity meter, pH level meter |
| Fish Counting | YOLOv7 [38], MLP [36] | RGB Camera |
| Fish Length/Weight Estimation | Mask R-CNN [39], Optical Flow [40], K-NN Regression [41], Principal Component Analysis (PCA) [42] | RGB Stereo Camera |
| Food Prediction | MLP [36] | Water Quality Prediction, Fish Counting, Fish Length/Weight Estimation |
| Fish Feeding Intensity Evaluation | Optical Flow [40], I3D Action Recognition [43] | RGB Stereo Camera |
| Fish Feeding Control | Q-Learning [32] | Automatic Feeding Machine, Fish Feeding Intensity Evaluation |

3.2. Implementation of the Cloud Computing Framework

3.3. Experimental Results

| Strong | Medium | Weak | Normal |
|---|---|---|---|
| 1.0 | 0.7 | 0.6 | 0.2 |

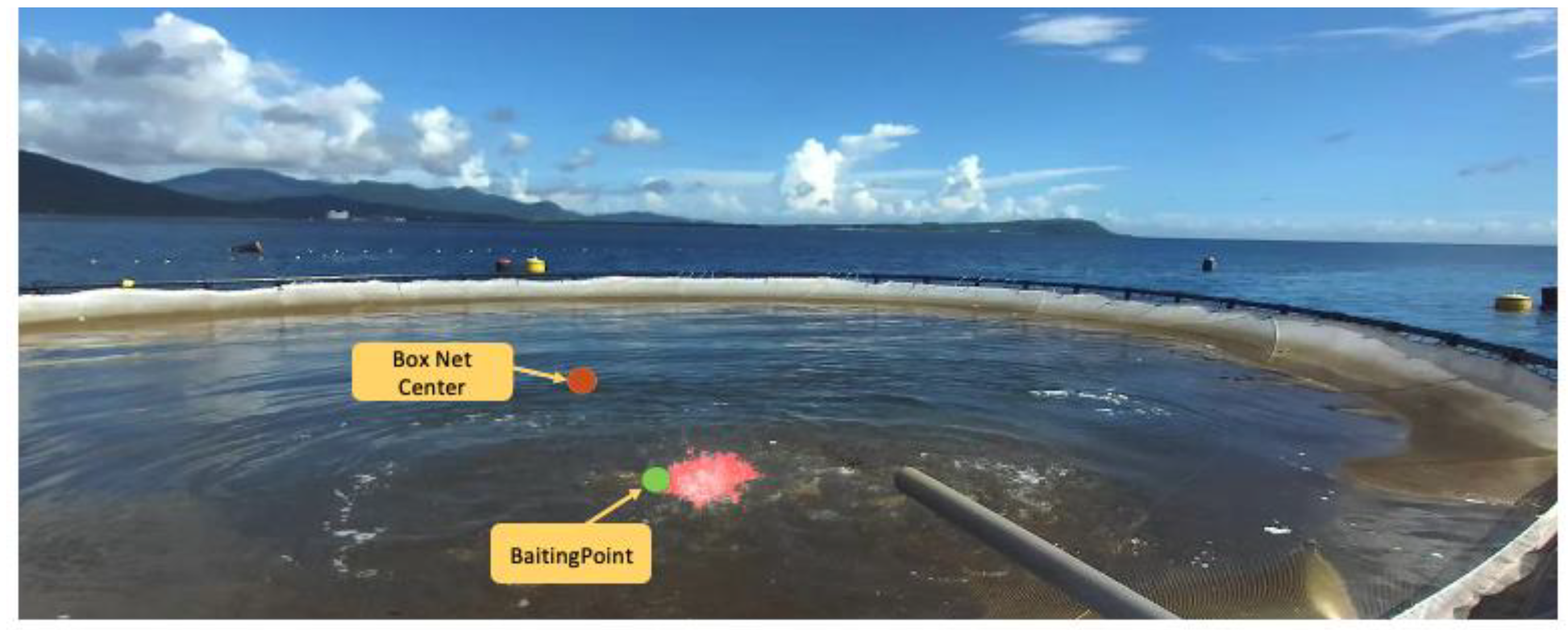

- The Fish Counting DT exhibits a 4% error rate. This DT module takes optical underwater video as input and uses the YOLOv7 deep learning object detector to identify fish in individual frames. It then feeds the time series of per-frame fish counts into an MLP regression model to predict the fish count. Accurate predictions require that the initial fish count and the number of dead fish be recorded accurately. If the ground-truth fish count changes, the MLP regression model is retrained accordingly.

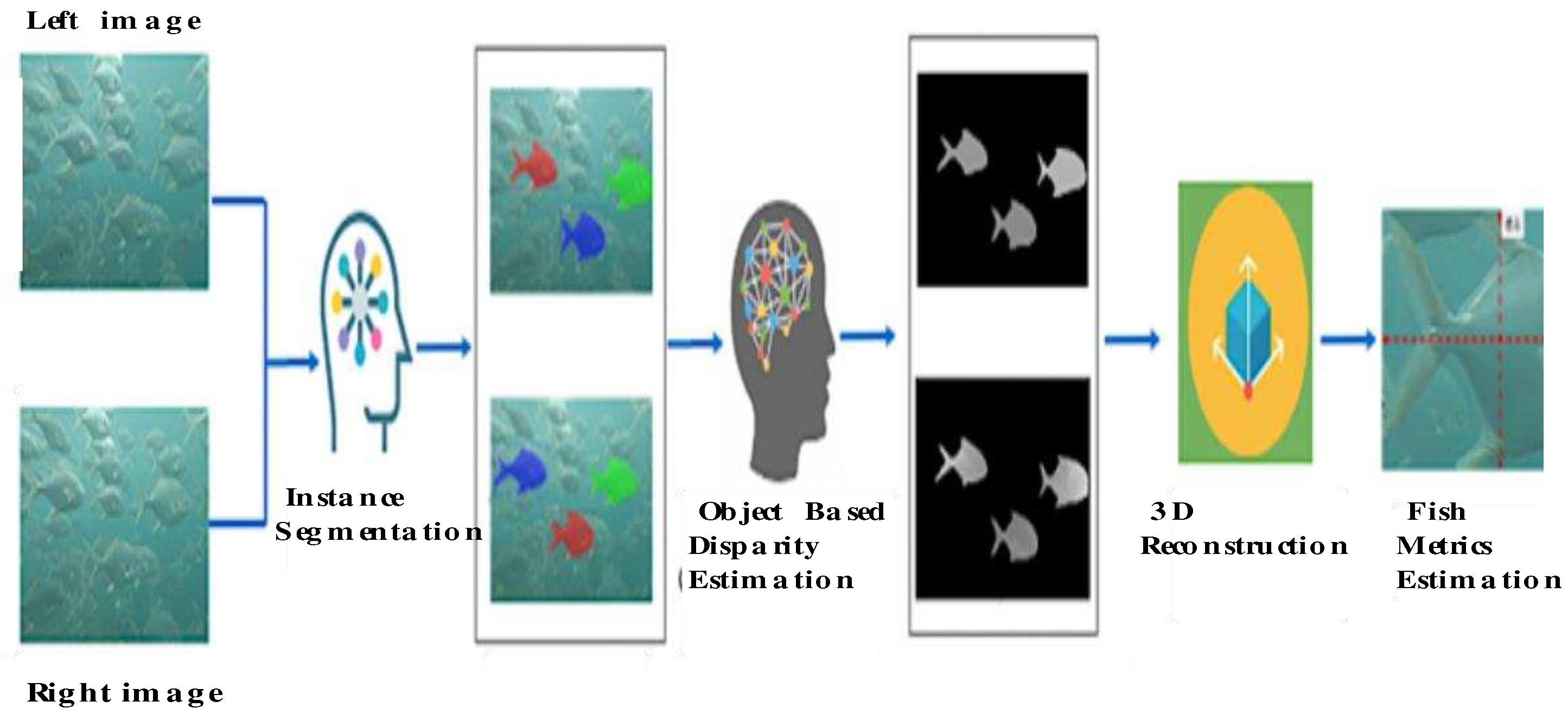

- The Fish Metrics DT modules estimate fish length and height from the input stereo video. The intrinsic and extrinsic camera parameters are first obtained with a camera calibration algorithm [44], and fish objects are then segmented in the rectified left and right frames using Mask R-CNN [39]. The segmented objects in the left image are matched with the corresponding objects in the right image, and an optical flow deep learning model [40] establishes pixel correspondences for each matched object pair. Pixel-wise disparity and depth values are computed from the calibrated camera parameters, which enables 3D reconstruction of each fish object. Finally, Principal Component Analysis (PCA) [42] is applied to estimate fish length and height. This study also includes a human-labeled dataset in which each record contains fish length, height, and weight. Using the estimated fish length and height together with this dataset as inputs, a K-NN regression model [41] predicts fish weight. The error rate of fish weight estimation is approximately 12%.

- The Fish Food DT employs an MLP regression model, as depicted in Figure 8, to forecast the daily food requirement for the fish feeding process. Its training dataset pairs the outputs of the DT objects described above with the actual daily food consumption recorded during real-world feeding operations. Empirically, the food prediction model exhibits an error rate of approximately 2%.
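The weight prediction step of the Fish Metrics DT, which maps an estimated length and height to a weight through K-NN regression over labeled records, can be illustrated with a short sketch. The records below are hypothetical, and the Euclidean distance, the unweighted mean, and `k=3` are assumptions rather than the settings used in this study.

```python
import math


def knn_predict_weight(length, height, labeled, k=3):
    """Average the weights of the k labeled fish nearest in (length, height) space."""
    if not 1 <= k <= len(labeled):
        raise ValueError("k must be between 1 and the number of labeled records")
    nearest = sorted(
        labeled,
        key=lambda rec: math.hypot(rec[0] - length, rec[1] - height),
    )[:k]
    return sum(weight for _, _, weight in nearest) / k


# Hypothetical human-labeled records: (length in cm, height in cm, weight in g).
labeled_fish = [
    (20.0, 8.0, 150.0),
    (22.0, 9.0, 200.0),
    (25.0, 10.0, 280.0),
    (30.0, 12.0, 450.0),
    (35.0, 14.0, 700.0),
]

estimate = knn_predict_weight(24.0, 9.5, labeled_fish, k=3)
print(estimate)  # 210.0, the mean of the three nearest records
```

Because the prediction is an average of labeled neighbors, its quality depends directly on how well the labeled dataset covers the range of fish sizes seen in the cage.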

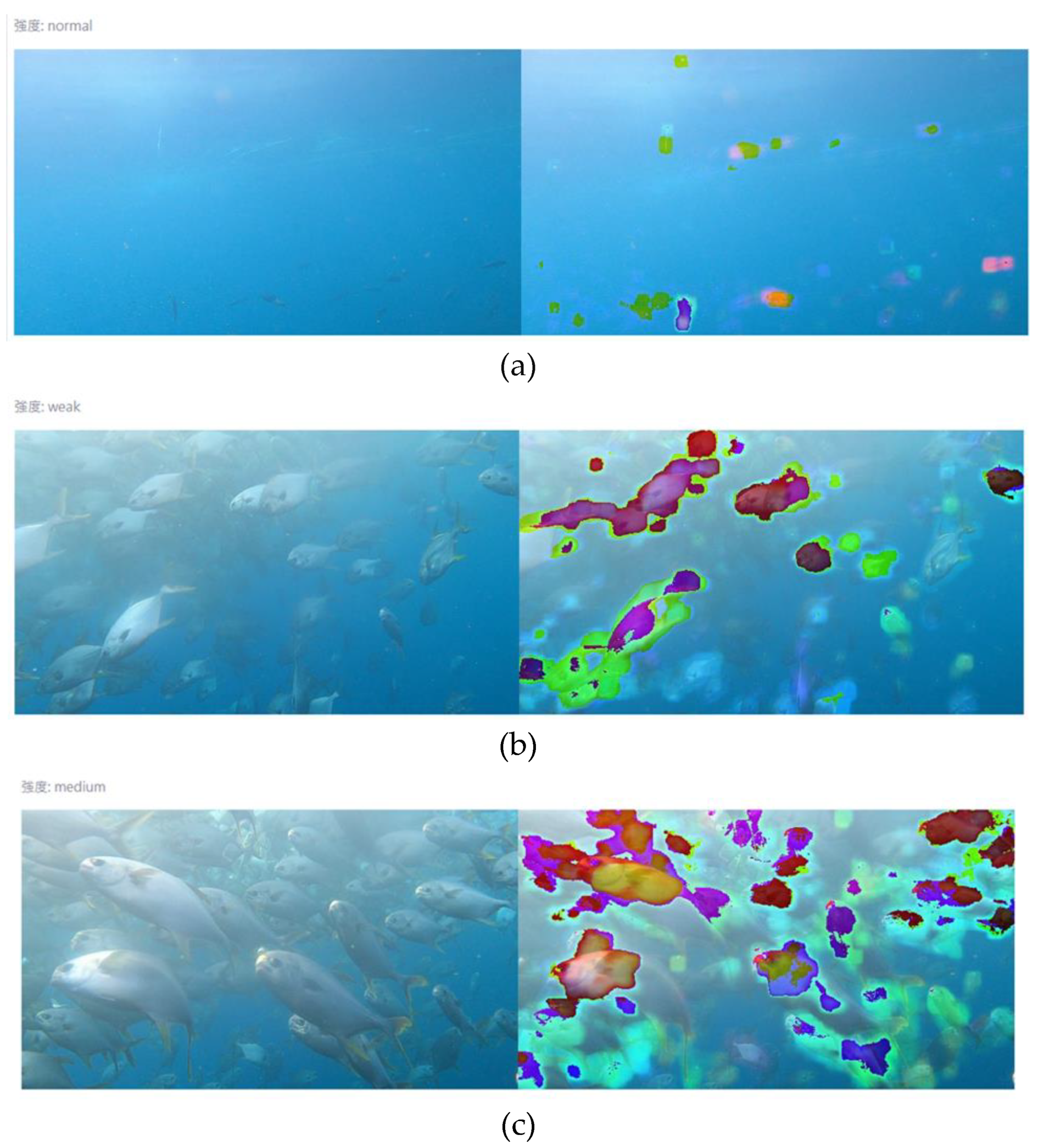

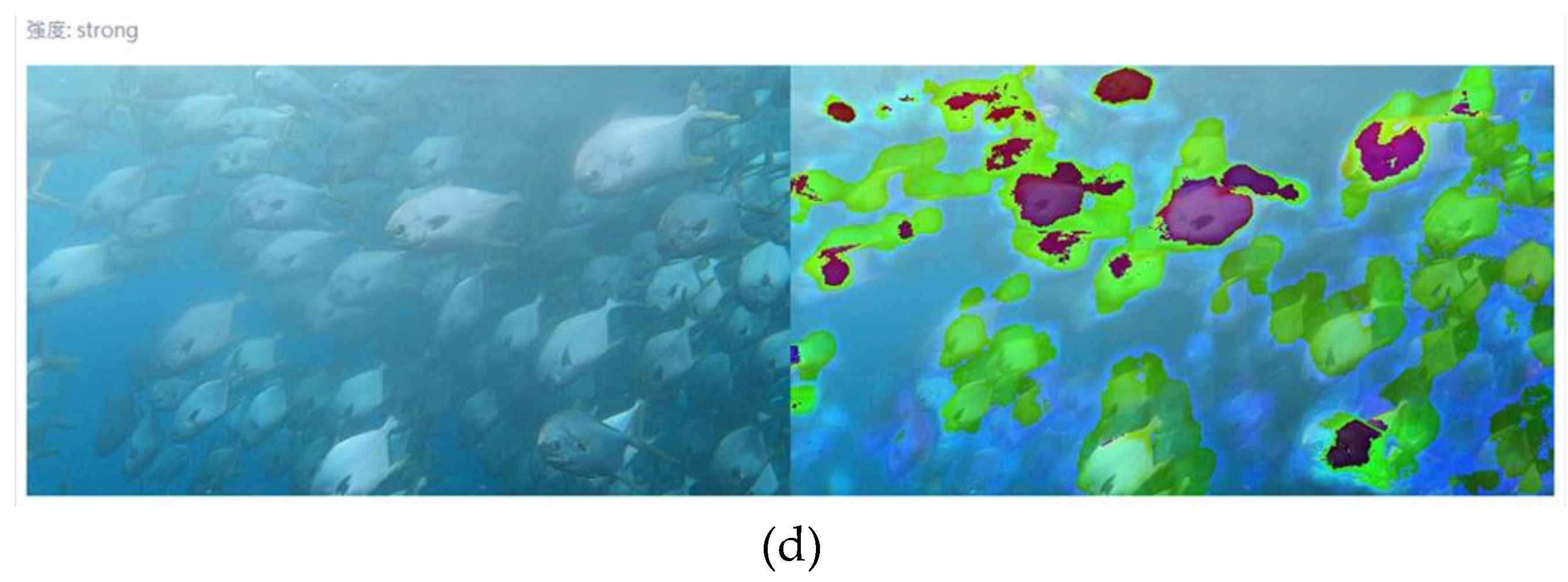

- The DT object for fish feeding intensity evaluation supports the fish feeding control algorithm in minimizing residual food. In practice, the daily fish food is first divided into 5 to 10 parts. The fish feeding control algorithm then uses our smart feeding machine to dispense the food into the cage part by part. After each part is fed, the fish feeding intensity is evaluated and the scaling factor for the next part is looked up in Table 3. The results are also monitored across frames in Figure 14, which shows how the fish movement intensity varies from frame to frame. When feed is dispensed, the fish initially move faster to grab food at the water's surface. Their movement then slows or stops as their appetite declines and they approach satiation. The speed of fish movement is therefore used to define the feeding level. In this work, we classify fish feeding intensity into four levels: weak, normal, medium, and strong, as presented in Figure 15. The left image is the frame captured at the aquaculture farm, and the right image is the result of the fish motion analysis. The second image, with fewer color masks, shows normal fish movement, that is, no feeding being performed. Details on how fish feeding intensity is modeled and implemented are described in our other work on fish feeding intensity evaluation [12]. The accuracy of the fish feeding intensity evaluation model is about 95.8% on the human-labeled dataset.
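The part-by-part procedure described above can be sketched as a loop that scales each part by the factor looked up from Table 3. This is a simplified sketch under assumptions: the equal split of the daily ration, the unscaled first part, and the `evaluate_intensity` callback standing in for the feeding intensity DT are all hypothetical.

```python
# Scaling factors from Table 3, keyed by feeding intensity level.
SCALING_FACTOR = {"strong": 1.0, "medium": 0.7, "weak": 0.6, "normal": 0.2}


def feed_in_parts(daily_food_kg, num_parts, evaluate_intensity):
    """Dispense the daily ration part by part and return the amounts dispensed.

    evaluate_intensity(part_index) stands in for the feeding intensity DT and
    returns one of the Table 3 labels after the given part has been fed.
    """
    base_part = daily_food_kg / num_parts  # equal split (assumption)
    scale = 1.0  # the first part is dispensed unscaled (assumption)
    dispensed = []
    for part_index in range(num_parts):
        dispensed.append(base_part * scale)
        # The intensity observed after this part sets the scale of the next one.
        scale = SCALING_FACTOR[evaluate_intensity(part_index)]
    return dispensed


# Hypothetical sequence of observed intensities for a five-part feeding.
observed = ["strong", "strong", "medium", "weak", "normal"]
amounts = feed_in_parts(10.0, 5, lambda i: observed[i])
withheld_kg = 10.0 - sum(amounts)
```

With this sequence the last two parts shrink as the fish slow down, so part of the planned ration is withheld rather than left in the cage as residual food.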

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, H.; Chatzifotis, S.; Lian, G.; Duan, Y.; Li, D.; Chen, T. Mechanistic Model based Optimization of Feeding Practices in Aquaculture. Aquacultural Engineering 2022, 97, 102245. [CrossRef]

- Flinn, S. A.; Midway, S. R. Trends in Growth Modeling in Fisheries Science. Fishes 2021, 6(1), 1. [CrossRef]

- DeVries, D.A.; Grimes, C.B. Spatial and Temporal Variation in Age and Growth of King Mackerel, Scomberomorus Cavalla, 1977–1992. Fish. B-NOAA 1997, 95, pp. 694–708. [CrossRef]

- Helser, T.E.; Lai, H.L. A Bayesian Hierarchical Meta-analysis of Fish growth: With an Example for North American largemouth Bass, Micropterus salmoides. Ecol. Model. 2004, 178, pp. 399–416. [CrossRef]

- Midway, S.R.; Wagner, T.; Arnott, S.A.; Biondo, P.; Martinez-Andrade, F.; Wadsworth, T.F. Spatial and Temporal Variability in Growth of Southern Flounder (Paralichthys lethostigma). Fish. Res. 2015, 167, pp. 323–332. [CrossRef]

- Ubina, N. A.; Lan, H.-Y.; Cheng, S.-C.; Chang, C.-C.; Lin, S.-S.; Zhang, K.-X.; Lu, H.-Y.; Cheng, C.-Y.; Hsieh, Y.-Z. Digital Twin-based Intelligent Fish Farming with Artificial Intelligence Internet of Things (AIoT). Smart Agricultural Technology 2023, 5, 100285. [CrossRef]

- Ubina, N.; Cheng, S.-C. A review of unmanned system technologies with its application to aquaculture farm monitoring and management. Drones 2022, 6(1), 12. [CrossRef]

- Manoharan, H.; Teekaraman, Y.; Kshirsagar, P. R.; Sundaramurthy, S.; Manoharan, A. Examining the Effect of Aquaculture Using Sensor-based Technology with Machine Learning Algorithm. Aquaculture Research 2020, 51(11), 4748–4758. [CrossRef]

- Yang, X. T.; Zhang, S.; Liu, J. T.; Gao, Q. F.; Dong, S. L.; Zhou, C. Deep Learning for Smart Fish Farming: Applications, Opportunities and Challenges. Rev Aquaculture 2021, 13 (1), pp. 66-90. [CrossRef]

- Ubina, N. A.; Cheng, S.-C.; Chen, H.-Y.; Chang, C.-C.; Lan, H.-Y. A Visual Aquaculture System Using a Cloud-based Autonomous Drones. Drones 2021, 5(4), 109. [CrossRef]

- Chang, C.-C.; Wang, Y.-P.; Cheng, S.-C. Fish Segmentation in Sonar Images by Mask R-CNN on Feature Maps of Conditional Random Fields. Sensors 2021, 21(22), 7625. [CrossRef] [PubMed]

- Ubina, N.; Cheng, S.-C.; Chang, C.-C.; Chen, H.-Y. Evaluating Fish Feeding Intensity in Aquaculture with Convolutional Neural Networks. Aquac. Eng. 2021, 94, 102178. [CrossRef]

- Lu, H.-Y.; Cheng, C.-Y.; Cheng, S.-C.; Cheng, Y.-H.; Lo, W.-C.; Jiang, W.-L.; Nan, F.-H.; Chang, S.-H.; Ubina, N. A. Low-cost AI Buoy System for Monitoring Water Quality at Offshore Aquaculture Cages. Sensors 2022, 22(11), 4078. [CrossRef] [PubMed]

- Ubina, N. A.; Cheng, S.-C.; Chang, C.-C.; Cai, S.-Y.; Lan, H.-Y.; Lu, H.-Y. Intelligent Underwater Stereo Camera Design for Fish Metric Estimation Using Reliable Object Matching. IEEE Access 2022, 10, pp. 74605-74619. [CrossRef]

- Li, D.; Wang, Q.; Li, X.; Niu, M.; Wang, H.; Liu, C. Recent Advances of Machine Vision Technology in Fish Classification. ICES J. Mar. Sci. 2022, 79, pp. 263–284. [CrossRef]

- Kuswantori, A.; Suesut, T.; Tangsrirat, W.; Schleining, G.; Nunak, N. Fish Detection and Classification for Automatic Sorting System with an Optimized YOLO Algorithm. Appl. Sci. 2023, 13, 3812. [CrossRef]

- Ferko, E.; Bucaioni, A.; Beham, M. Architecting Digital Twins. IEEE Access 2022, 10, pp. 50335-50350. [CrossRef]

- Jacoby, M.; Usländer, T. Digital twin and Internet of Things—Current standards landscape. Applied Sciences 2020, 10(18), 6519. [CrossRef]

- Minerva, R.; Lee, G. M.; Crespi, N. Digital Twin in the IoT Context: A Survey on Technical Features, Scenarios, and Architectural Models. Proceedings of the IEEE 2020, 108(10), pp. 1785-1824. [CrossRef]

- Nasirahmadi, A.; Hensel, O. Toward the Next Generation of Digitalization in Agriculture Based on Digital Twin Paradigm. Sensors 2022, 22(2), 498. [CrossRef]

- Chang, C.-C.; Ubina, N.A.; Cheng, S.-C.; Lan, H.-Y.; Chen, K.-C.; Huang, C.-C. A Two-Mode Underwater Smart Sensor Object for Precision Aquaculture Based on AIoT Technology. Sensors 2022, 22(19), 7603. [CrossRef]

- Wang, C.; Li, Z.; Wang, T.; Xu, X.; Zhang, X.; Li, D. Intelligent fish farm—the future of aquaculture. Aquaculture International 2021, 29, pp. 2681-2711. [CrossRef] [PubMed]

- Lima, A.; Royer, E.; Bolzonella, M.; Pastres, R. Digital Twins for Land-based Aquaculture: A Case Study for Rainbow Trout (Oncorhynchus mykiss). Open Research Europe 2023, 28(2), 16. [CrossRef]

- Lan, H.-Y.; Afero, F.; Huang, C.-T.; Chen, B.-Y.; Huang, P.-L.; Hou, Y.-L. Investment Feasibility Analysis of Large Submersible Cage Culture in Taiwan: A Case Study of Snubnose Pompano (Trachinotus anak) and Cobia (Rachycentron canadum). Fishes 2022, 7, 151. [CrossRef]

- Somers, I. F. On a Seasonally Oscillating Growth Function. Fishbyte 1988, 6 (1), pp. 8-11.

- Gompertz, B. On the Nature of the Function Expressive of the Law of Human Mortality, and on a New Mode of Determining the Value of Life Contingencies. Philosophical Transactions of the Royal Society of London 1825, 115, pp. 513-583. [CrossRef]

- Lipkin, L.; Smith, D. Logistic Growth Model - Background: Logistic Modeling. Mathematical Association of America. https://www.maa.org/press/periodicals/loci/joma/logistic-growth-model-background-logistic-modeling (accessed March 22, 2023).

- Quinn, T. J.; Deriso, R. B. Quantitative Fish Dynamics (Biological Resource Management). Oxford University Press, 1999.

- Pauly, D. The Relationships between Gill Surface Area and Growth Performance in Fish: a Generalization of von Bertalanffy's Theory of Growth. Meeresforsch 1981, 28, pp. 251-282.

- Ursin, E. A Mathematical Model of Some Aspects of Fish Growth, Respiration, and Mortality. Journal of the Fisheries Research Board of Canada 1967, 24, pp. 2355-245. [CrossRef]

- Yang, Y. A Bioenergetics Growth Model for Nile Tilapia (Oreochromis Niloticus) Based on Limiting Nutrients and Fish Standing Crop in Fertilized Ponds. Aquacult Eng. 1998, 18 (3), pp. 157-173. [CrossRef]

- Chahid, A.; N'Doye, I.; Majoris, J. E.; Berumen, M. L.; Laleg-Kirati, T.-M. Fish Growth Trajectory Tracking Using Q-learning in Precision Aquaculture. Aquaculture 2022, 550, 737838. [CrossRef]

- Liu, C.; Ding, J.; Sun, J. Reinforcement Learning Based Decision Making of Operational Indices in Process Industry Under Changing Environment. IEEE Transactions on Industrial Informatics 2021, 17 (4), pp. 2727-2736. [CrossRef]

- Jananpa, P. C.; Takada, R.; de Freitas, T. M.; Pereira, M.M.B.; Sá-Freire, L.; Lugert, V.; Sartuuri, C.; Pereira, M. M. Nonlinear Regression Analysis of Length Growth in Cultured Rainbow Trout. Arquivo Brasileiro de Medicina Veterinária e Zootecnia 72(5):1778-1788. [CrossRef]

- Zhou, H. et al. Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting. The Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI-21), February 2-9, 2021. [CrossRef]

- Qin, Y.; Li, C.; Shi, X.; Wang, W. MLP-Based Regression Prediction Model For Compound Bioactivity. Front Bioeng Biotechnol. 2022, 10, 946329. [CrossRef]

- Hochreiter, S.; Schmidhuber, J. Long Short-term Memory. Neural computation 1997, 9, pp. 1735-1780. [CrossRef]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, M. H.-Y. YOLOv7: Trainable Bag-of-freebies Sets New State-of-the-art for Real-time Object Detectors. Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2023. [CrossRef]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. Proc. IEEE International Conference on Computer Vision 2017.

- Teed, Z.; Deng, J. RAFT: Recurrent All-Pairs Field Transforms for Optical Flow. Proc. of the Thirtieth International Joint Conference on Artificial Intelligence 2021. [CrossRef]

- Campos, G. O.; Zimek, A.; Sander, J.; Campello, R. J. G. B.; Micenková, B.; Schubert, E.; Assent, I.; Houle, M. E. On the Evaluation of Unsupervised Outlier Detection: Measures, Datasets, and an Empirical Study. Data Mining and Knowledge Discovery 2016, 30 (4), pp. 891–927. [CrossRef]

- Jolliffe, I. T. Principal Component Analysis, Springer-Verlag New York, 2002.

- Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2017.

- Ding, W.; Liu, X.; Xu, D.; Zhang, D.; Zhang, Z. A Robust Detection Method of Control Points for Calibration and Measurement with Defocused Images. IEEE Transactions on Instrumentation and Measurement 2017, 66(10), pp. 2725–2735. [CrossRef]

- Lall, S. P.; Tibbetts, S. M. Nutrition, Feeding, and Behavior of Fish. Veterinary Clinics of North America: Exotic Animal Practice 2009, 12 (2), pp. 361-372. [CrossRef]

| Name | Processor | Motherboard | Graphics Card | Memory | Storage |
|---|---|---|---|---|---|
| Master 01, Worker 01, Worker 02 | Intel i9-10900F | MSI MAG B460M MORTAR WIFI | GTX 1060 6G | 16 GB × 2 | 1 TB SSD |

| Survival Rate | # of Cages | Food Cost | BCR | NPV | IRR | Payback Period |
|---|---|---|---|---|---|---|
| 70% | 10 | -10% | 1.99 | 1,592 | 13.38% | 4.63 |
| 70% | 10 | -20% | 2.07 | 2,079 | 14.39% | 4.33 |
| 70% | 10 | -30% | 2.14 | 2,566 | 15.39% | 3.98 |
| 70% | 20 | -10% | 2.99 | 25,427 | 58.05% | 1.33 |
| 70% | 20 | -20% | 3.15 | 26,400 | 59.80% | 1.44 |
| 70% | 20 | -30% | 3.33 | 27,374 | 61.54% | 1.45 |
| 80% | 10 | -10% | 2.18 | 5,048 | 20.37% | 3.60 |
| 80% | 10 | -20% | 2.27 | 5,605 | 21.47% | 3.43 |
| 80% | 10 | -30% | 2.36 | 6,162 | 22.55% | 3.20 |
| 80% | 20 | -10% | 3.21 | 32,339 | 70.40% | 1.03 |
| 80% | 20 | -20% | 3.40 | 33,453 | 72.38% | 1.17 |
| 80% | 20 | -30% | 3.60 | 34,566 | 74.36% | 1.22 |
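For readers unfamiliar with the indicators in this table, the sketch below computes the benefit-cost ratio (BCR), net present value (NPV), internal rate of return (IRR), and payback period using their standard textbook definitions. The cash flows and the 10% discount rate are hypothetical and are not the data behind the table; the exact definitions used for the table may differ.

```python
def npv(rate, cash_flows):
    """Net present value of yearly cash flows, with cash_flows[0] at year 0."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows, lo=-0.99, hi=10.0, tol=1e-9):
    """Internal rate of return by bisection; assumes one sign change of NPV in [lo, hi]."""
    f_lo = npv(lo, cash_flows)
    if f_lo * npv(hi, cash_flows) > 0:
        raise ValueError("NPV does not change sign on the search interval")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        f_mid = npv(mid, cash_flows)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return (lo + hi) / 2


def bcr(rate, benefits, costs):
    """Benefit-cost ratio: discounted benefits divided by discounted costs."""
    return npv(rate, benefits) / npv(rate, costs)


def payback_period(cash_flows):
    """Years until cumulative undiscounted cash flow reaches zero (linear within a year)."""
    cumulative = cash_flows[0]
    if cumulative >= 0:
        return 0.0
    for year in range(1, len(cash_flows)):
        cf = cash_flows[year]
        if cumulative + cf >= 0:
            return (year - 1) + (-cumulative) / cf
        cumulative += cf
    return None  # the investment is never recovered within the horizon


# Hypothetical project: invest 1000 at year 0, then earn 400 per year for 4 years.
benefits = [0.0, 400.0, 400.0, 400.0, 400.0]
costs = [1000.0, 0.0, 0.0, 0.0, 0.0]
flows = [b - c for b, c in zip(benefits, costs)]

project_npv = npv(0.10, flows)
project_irr = irr(flows)
project_bcr = bcr(0.10, benefits, costs)
project_payback = payback_period(flows)
```

For this hypothetical project the payback period is 2.5 years and the IRR lies between 21% and 22%, which shows how a lower food cost, by raising yearly net benefits, would raise NPV and IRR.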

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).