1. Introduction

Clouds play a critical role in regulating the Earth's climate system [1]. They reflect incoming solar shortwave radiation, reducing the amount of heat absorbed at the surface; they also absorb outgoing longwave radiation from the surface, producing a greenhouse effect [2]. In essence, clouds act as an important "sunshade" that helps maintain the balance of the greenhouse effect and prevents overheating of the Earth [3]. However, cloud types at different altitudes affect the climate system differently. For instance, high-level cirrus clouds mainly contribute to reflection and scattering, while low-level stratus and cumulus clouds contribute more to the greenhouse effect [4]. Accurately determining cloud types, distributions and evolution is vital for long-term climate change monitoring and forecasting [5]. Moreover, cloud amount varies considerably between regions, as do regional climate characteristics, which makes precise cloud quantification even more important. Accurate cloud detection can provide critical climate change information that advances our understanding of the climate system from multiple dimensions [6]; it also helps validate climate model predictions and furnishes input parameters for climate sensitivity research [7]. Accurate quantitative cloud observation is therefore of great significance for climate change science, and it motivates this study's effort to achieve precise cloud quantification through image processing techniques.

Accurate cloud typing and quantification still face difficulties and limitations. For cloud classification, common approaches include manual identification, threshold segmentation, texture feature extraction, satellite remote sensing, ground-based cloud radar detection, and aircraft sounding observations [8,9,10,11,12]. Manual visual identification relies on the experience of professional meteorological observers, who discern cloud shapes, colors, boundaries and other features to categorize cloud types. This method has long been widely used, but it depends heavily on the individual observer, lacks consistency, and is inefficient [13]. Threshold segmentation sets thresholds on RGB values, brightness and other image parameters to extract pixel features corresponding to different cloud types. It is sensitive to illumination conditions and ineffective at distinguishing transitional cloud zones [14]. Texture feature analysis uses measurements of roughness, contrast, directionality and other metrics to identify clouds from combined features, but it adapts poorly to both tenuous and thick clouds [15]. Satellite remote sensing discerns cloud types from spectral features in different bands combined with temperature retrieval results, but it has low resolution and recognizes small low-level clouds inaccurately [16]. Ground-based cloud radar differentiates water and ice clouds using measured Doppler velocity and other parameters, but it detects high thin clouds inadequately [17]. Aircraft sounding observations synthesize multiple parameters to make judgments, but have limited coverage and observation time.

For cloud quantification, prevalent methods include laser radar (lidar) measurement, satellite remote sensing retrieval, ground-based cloud radar, and whole-sky image recognition [18]. Lidar emits sequences of laser pulses and estimates cloud vertical structure and optical depth from the backscatter to quantify cloud amount directly, but the equipment is large and costly, covers a limited area, and cannot produce cloud distribution maps. Satellite remote sensing retrieval combines parameters such as cloud top temperature and optical depth with inversion algorithms to obtain the cloud amount distribution. However, restricted by resolution, it recognizes local clouds poorly [11]. Ground-based cloud radar measures backscatter signals at different altitudes to determine the layered cloud distribution, but return signals from high thin clouds are weak, so such clouds are detected inadequately. With multiple cloud layers, it also struggles to differentiate between levels, which hinders accurate quantification [19]. Conventional whole-sky image segmentation uses fisheye cameras installed at ground stations to acquire whole-sky images, then segments the images based on color thresholds or texture features and converts the pixel proportions of the various cloud types to cloud cover. Image acquisition with this method is easy and economical, but illumination changes can degrade the segmentation, and small or high clouds are recognized poorly [13]. In summary, current techniques for cloud classification and quantification lack accuracy, cannot precisely calculate regional cloud information, and need improved stability and reliability. They fall short of the demand in climate change science for massive fine-grained cloud datasets.

In recent years, advances in computer vision and machine learning have brought more sophisticated techniques into cloud classification and recognition, with significant progress. For instance, deep learning based cloud image classification has become a research hotspot. Deep learning can automatically learn feature representations from complex data and build models that jointly assess visual information such as cloud shapes, boundaries and textures to distinguish cloud types [20]. Meanwhile, unsupervised learning methods like k-means clustering are also widely applied in cloud segmentation and recognition. K-means can discover the inherent category structure of the data without manual annotation, enabling cloud image partitioning and greatly simplifying the workflow [21]. Some studies use k-means models to rapidly cluster cloud and clear-sky regions in whole-sky images, improving the speed and efficiency of cloud quantification. It is foreseeable that combining deep learning with unsupervised clustering for cloud recognition will find expanded applications in meteorology. We also hope that this detection approach lays the groundwork for revealing the circulation characteristics, radiative effects and climate impacts of different cloud types.

Despite this progress in cloud recognition algorithms, numerous challenges remain. First, accurately identifying different cloud types is still difficult, especially indistinct high-altitude cirrus clouds and transitional mixed cloud types [10]. Second, changes in illumination can drastically affect recognition, leading to high misjudgment rates in situations like polarization and shadowing. To address these issues, we propose an end-to-end cloud recognition framework focused on accurate classification of cirrus, clear sky, cumulus and stratus, with particular attention to the traditionally challenging cirrus clouds. Building on the classification, we design adaptive dehazing algorithms and k-means clustering with finite element segmentation to enhance recognition of cloud edges and tenuous regions. We hope that this optimized framework design helps resolve long-standing issues of cloud typing and fine-grained quantification and significantly improves ground-based cloud detection and quantification, providing solid data support for related climate studies. The structure of this paper is as follows. Section 2 introduces the study area, data acquisition, and construction of the cloud classification dataset. Section 3 elaborates the methodologies, including neural networks, image enhancement, adaptive processing algorithms, and evaluation metrics. Finally, Section 4, Section 5 and Section 6 present the results, discussion, and conclusions, respectively.

3. Materials and Methods

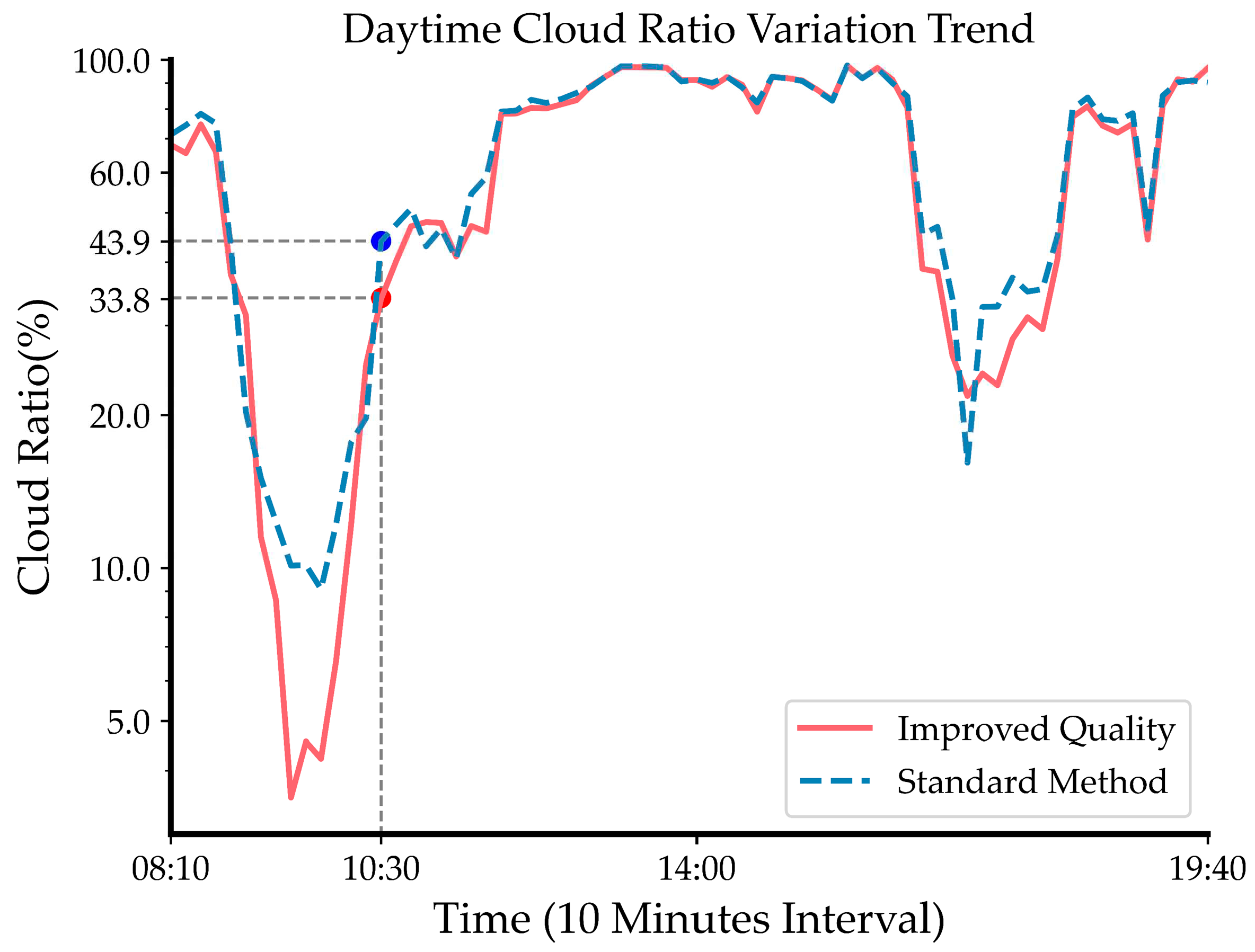

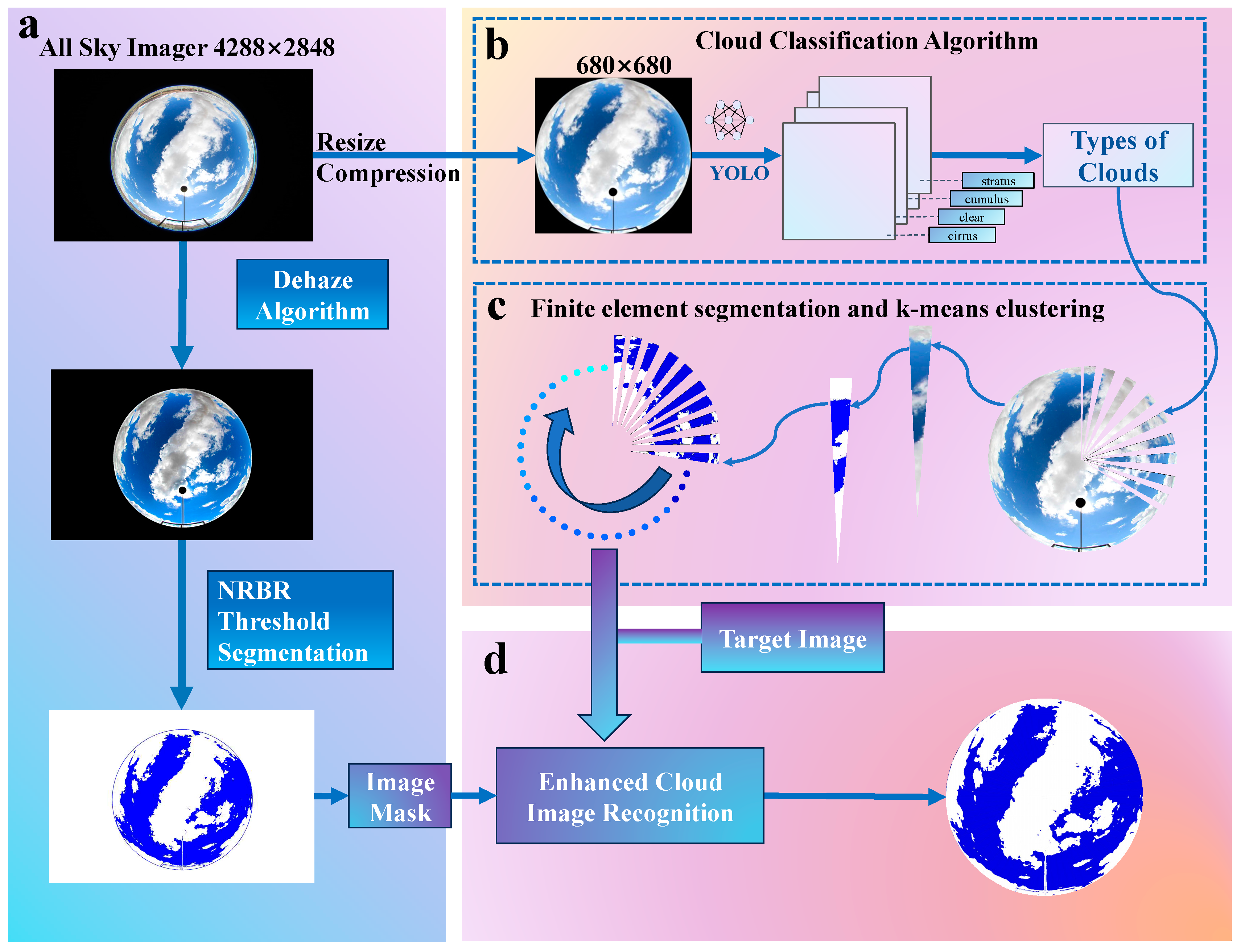

The framework proposed in this study is illustrated in

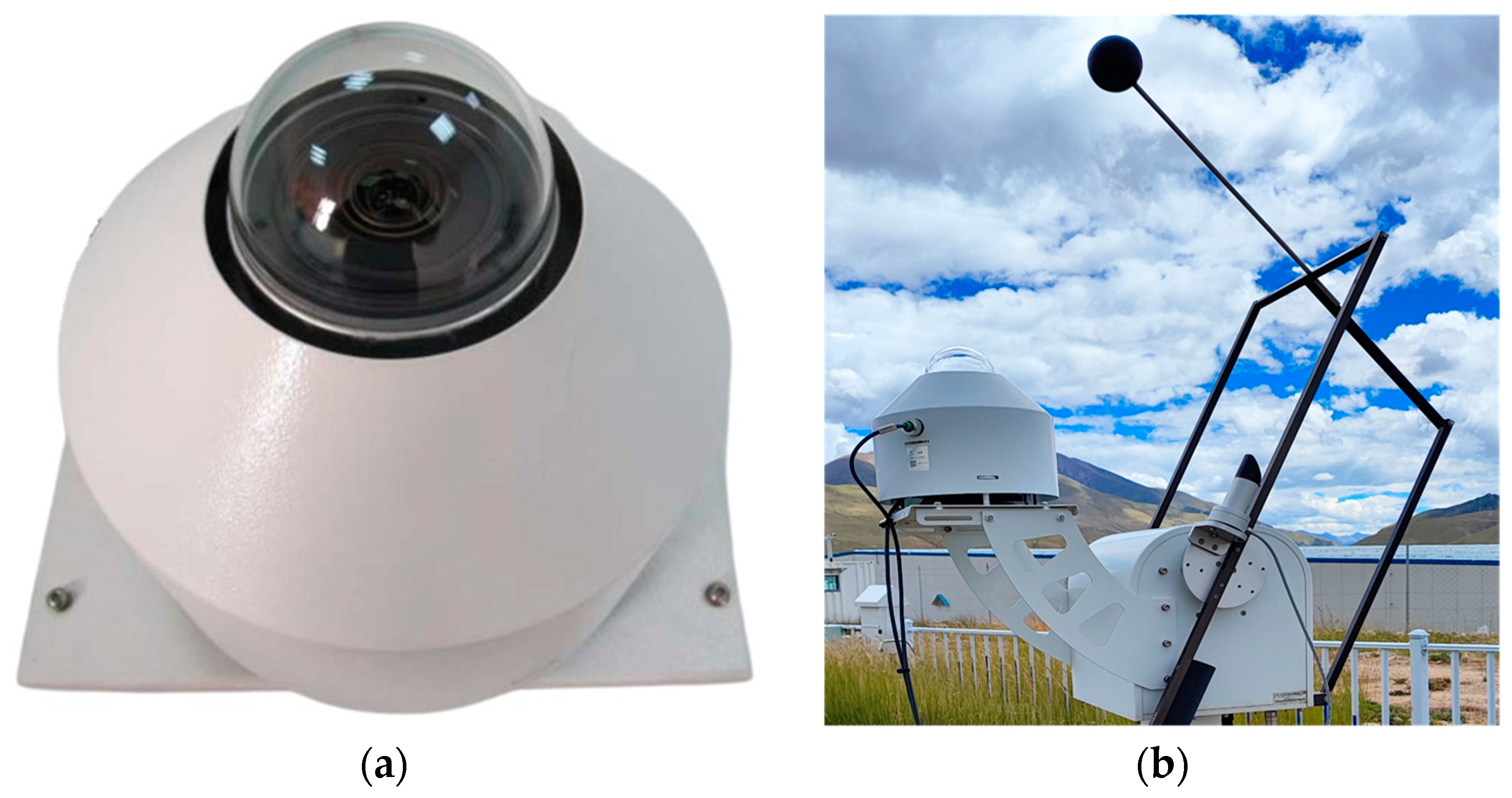

Figure 2. It can be summarized into the following steps:(1) Data quality control and preprocessing. First, quality control is performed on the collected raw all-sky images to remove distorted images caused by occlusion or sensor issues. Then, image size and resolution are standardized.(2) Deep neural network classification and evaluation metrics. The YOLOv8 deep neural network is utilized to categorize the cloud images, judging which of the four types (cirrus, clear sky, cumulus, and stratus) each image belongs to. Precision, recall, and F1-scores are used to evaluate the classification performance.(3) Adaptive enhancement. Different image enhancement strategies are adopted according to cloud type to selectively perform operations like dehazing, contrast adjustment etc. to improve image quality.(4) Finite element segmentation and K-means clustering. K-means clustering based on finite element segmentation is conducted on the enhanced images by category to extract cloud features and obtain accurate cloud detection results.

3.1. Quality Control and Preprocessing

Irrelevant ground objects may occlude the edge areas of the original all-sky images, so training directly on the raw images could let unrelated ground targets interfere with the learning of cloud features and reduce the model's ability to recognize cloud regions [23]. We therefore cropped the edges of the original images: taking the geometric center of the all-sky image as the circle center, we calculated the circular coverage corresponding to a 26° zenith angle and clipped out this circular area. The cropping removed ground objects that could negatively affect cloud classification, leaving circular image regions containing only sky elements. To facilitate subsequent processing while preserving image detail, the cropped images were resized to a target resolution of 680×680 pixels. Compared with the original 4288×2848 pixels, the adjusted resolution retained the main detail features of the cloud areas but significantly reduced the file size, easing loading and computation during network training. Finally, a standardized dataset was constructed by cloud type: the resized images were divided into four folders for cirrus, clear sky, cumulus, and stratus, with 1000 pre-processed images in each folder. This yielded a standardized all-sky image dataset containing diverse cloud morphologies.
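The cropping and resizing steps can be sketched as follows. This is a minimal illustration rather than the processing code used in the study. It assumes the image is a NumPy array and that the crop radius in pixels has already been derived from the zenith-angle limit (that mapping depends on the fisheye lens model, which is not specified here). The function names `crop_circle` and `resize_nearest` are hypothetical, and nearest-neighbour sampling stands in for whichever interpolation was actually applied.

```python
import numpy as np


def crop_circle(image: np.ndarray, radius: int) -> np.ndarray:
    """Keep a centered disk of the image and black out everything outside it."""
    h, w = image.shape[:2]
    cy, cx = h // 2, w // 2  # geometric center, assumed to coincide with the zenith
    if radius > min(cy, cx):
        raise ValueError("radius exceeds image bounds")
    # Square bounding box of the disk.
    patch = image[cy - radius:cy + radius, cx - radius:cx + radius].copy()
    yy, xx = np.ogrid[:2 * radius, :2 * radius]
    outside = (yy - radius + 0.5) ** 2 + (xx - radius + 0.5) ** 2 > radius ** 2
    patch[outside] = 0  # pixels beyond the disk become black background
    return patch


def resize_nearest(image: np.ndarray, size: int = 680) -> np.ndarray:
    """Resize an image to size x size pixels with nearest-neighbour sampling."""
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return image[rows][:, cols]
```

Applying `resize_nearest(crop_circle(raw, radius))` to a raw frame would give a 680×680 image with a black background outside the sky disk.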

3.2. Deep Neural Network Classification

3.2.1. Network Structure Design

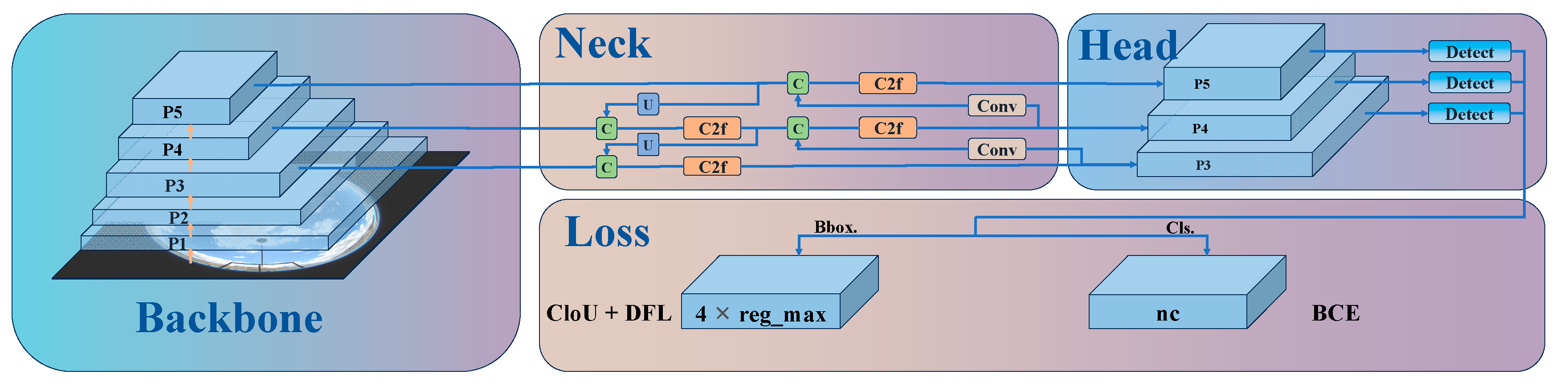

This study employs YOLOv8 as the base model architecture for the cloud classification task. As illustrated in Figure 3, the YOLOv8 network structure consists primarily of the Backbone, Neck, Head and Loss components. The Backbone of YOLOv8 uses the Darknet-53 network, with all C3 modules replaced by C2f modules, which draw design inspiration from C3 modules and ELAN to extract richer gradient flow information [24]. The Neck implements a PAN-FPN structure to make the model lightweight while retaining the original performance level. The detection Head uses a decoupled structure with two convolution branches responsible for classification and regression, respectively [25]. For the classification task, binary cross-entropy loss (BCE Loss) is used, while distribution focal loss (DFL) and complete IoU (CIoU) loss are used for bounding box regression. This detection structure can improve detection accuracy and accelerate model convergence [26]. Considering the limited scale of cloud datasets, we initialize from the YOLOv8-X-cls model pre-trained on ImageNet, which has 57.4M parameters. Through these module designs, the pre-trained initialization and the configuration of training parameters, an end-to-end cloud classification network is constructed.

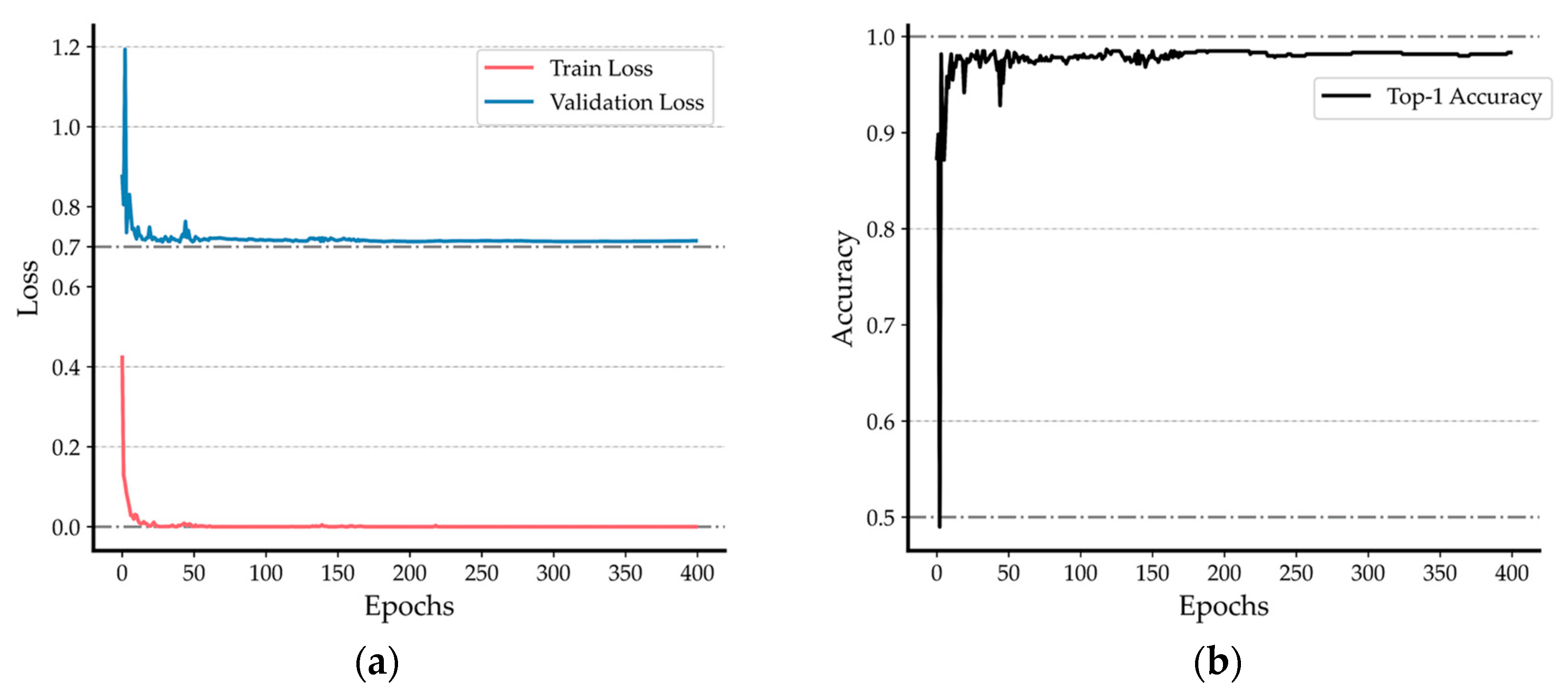

3.2.2. Experimental Parameter Settings

After constructing the model architecture, we trained the model on the previously prepared classification dataset containing images of multiple cloud types. During training, the input image size was set to 680×680, the maximum number of training epochs to 400, and the batch size to 32. To prevent overfitting, momentum and weight decay terms were added to the optimizer, and the patience parameter was set to 50. To augment the sample space, data augmentation techniques such as random horizontal flipping (probability 0.5) and mosaic (probability 1.0) were employed. The SGD optimizer was chosen because its stochastic sampling and parameter updates provide opportunities to escape local optima, helping the search reach better optima over a wider region. The initial learning rate was set to 0.01 and gradually decayed during training to enable finer optimization of the model parameters in the later stages of convergence.
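For reference, the settings above could be collected roughly as follows. The keyword names are assumed to match the training arguments of the Ultralytics YOLOv8 package; the momentum and weight decay values are not stated in the text and are therefore left out, and the dataset path in the commented usage is a placeholder.

```python
# Training settings stated in the text, collected in one place.
# Key names are assumed to follow the Ultralytics training arguments.
train_settings = {
    "imgsz": 680,        # input image size
    "epochs": 400,       # maximum number of training epochs
    "batch": 32,         # samples per iteration
    "patience": 50,      # patience parameter
    "optimizer": "SGD",  # optimizer choice
    "lr0": 0.01,         # initial learning rate
    "fliplr": 0.5,       # random horizontal flip probability
    "mosaic": 1.0,       # mosaic augmentation probability
}

# Hypothetical usage, assuming the `ultralytics` package is installed and
# "path/to/cloud_dataset" (a placeholder) is a classification dataset in the
# layout that package expects:
#
#   from ultralytics import YOLO
#   model = YOLO("yolov8x-cls.pt")  # ImageNet-pretrained classification weights
#   model.train(data="path/to/cloud_dataset", **train_settings)
```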

3.2.3. Cloud Classification Evaluation Indicators

To comprehensively evaluate the cloud classification performance of the model, a combined qualitative and quantitative analysis scheme was adopted. Qualitatively, we inspected the model's ability to categorize different cloud types, boundaries, and detail structures by comparing classification differences between the validation set and test set. Quantitatively, precision, recall and F1-score were used to assess the model. Precision is the proportion of true positives among the samples predicted as positive, and is calculated as:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

Recall is the fraction of correctly classified positive examples among all positive samples, and is calculated as:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

The F1-score combines precision and recall via the formula:

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

Here, TP stands for true positives, TN for true negatives, FP for false positives, and FN for false negatives. Through this combined qualitative and quantitative evaluation system, the cloud classification performance can be fully examined.
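As a small worked illustration of these metrics, the sketch below computes them for one cloud type treated as the positive class. The helper names and the toy labels are hypothetical and are not results from this study.

```python
def per_class_counts(y_true: list[str], y_pred: list[str], label: str) -> tuple[int, int, int]:
    """Count TP, FP and FN with `label` treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)
    fp = sum(t != label and p == label for t, p in pairs)
    fn = sum(t == label and p != label for t, p in pairs)
    return tp, fp, fn


def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (precision, recall, F1); a ratio with a zero denominator is reported as 0."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Toy example: five images, one cirrus image misclassified as cumulus.
y_true = ["cirrus", "cirrus", "cumulus", "stratus", "cirrus"]
y_pred = ["cirrus", "cumulus", "cumulus", "stratus", "cirrus"]
tp, fp, fn = per_class_counts(y_true, y_pred, "cirrus")
precision, recall, f1 = precision_recall_f1(tp, fp, fn)
```

In this toy case the cirrus class has TP = 2, FP = 0 and FN = 1, so precision is 1, recall is 2/3 and the F1-score is 0.8.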

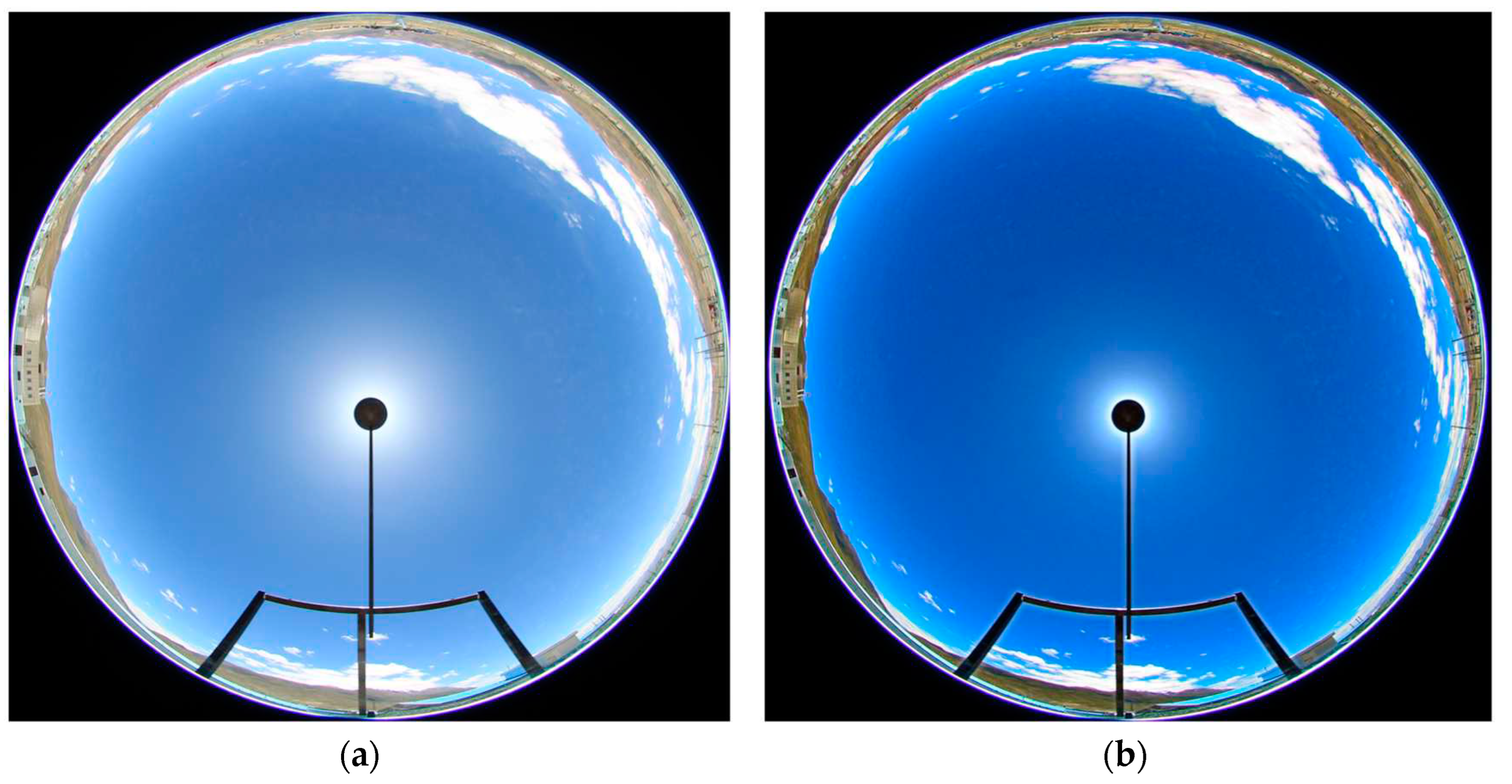

3.3. Adaptive Enhancement Algorithm

When processing all-sky images, we face visual blurring and low contrast caused by overexposure and haze interference. To address this, a dark channel prior algorithm is adopted in this study. Its core idea is to estimate and remove haze based on the dark channel image [27]. The dark channel image takes the minimum value among the RGB channels at each pixel location, thus reflecting the minimum brightness within the pixel area. In haze-free regions the dark channel tends to be close to zero, so elevated dark channel values provide clues for haze. Based on the dark channel prior assumption, the image enhancement algorithm proceeds as follows. First, calculate the dark channel for each pixel of the input image by taking the minimum value across the three RGB color channels. Next, estimate the global atmospheric light A using the non-zero minimums of the dark channel. Then, based on the atmospheric scattering model, obtain the transmission t for each pixel, which indicates the visibility of that pixel. Finally, apply the formula:

$$J(x) = \frac{I(x) - A}{t(x)} + A$$

where J is the recovered haze-free image, I is the original input image, A is the global atmospheric light, and t is the transmission. The dehazing algorithm can effectively remove haze from the images and yield more discernible cloud and sky boundaries, facilitating the subsequent generation of high-quality cloud cover results.
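The dehazing steps can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the implementation used in the study: the dark channel is the per-pixel channel minimum as described above, the atmospheric light `A` is passed in as a parameter, the transmission is estimated with the commonly used form t = 1 − ω · dark(I/A), and the values of `omega` and the lower bound `t_min` are illustrative choices that do not come from the text.

```python
import numpy as np


def dehaze(image: np.ndarray, A: float, omega: float = 0.95, t_min: float = 0.1) -> np.ndarray:
    """Dark-channel-prior dehazing of an RGB image with values in [0, 1]."""
    I = image.astype(np.float64)
    # Dark channel of the normalized image: per-pixel minimum over R, G, B.
    dark = (I / A).min(axis=2)
    # Transmission from the scattering model I = J * t + A * (1 - t).
    # t_min is an assumed safeguard against division by very small values.
    t = np.clip(1.0 - omega * dark, t_min, 1.0)
    # Recover the scene radiance: J = (I - A) / t + A.
    J = (I - A) / t[..., None] + A
    return np.clip(J, 0.0, 1.0)
```

With this formulation, a hazy pixel I = (0.5, 0.65, 0.8) under A = 1 and ω = 1 has a transmission of 0.5 and is restored to J = (0.0, 0.3, 0.6).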

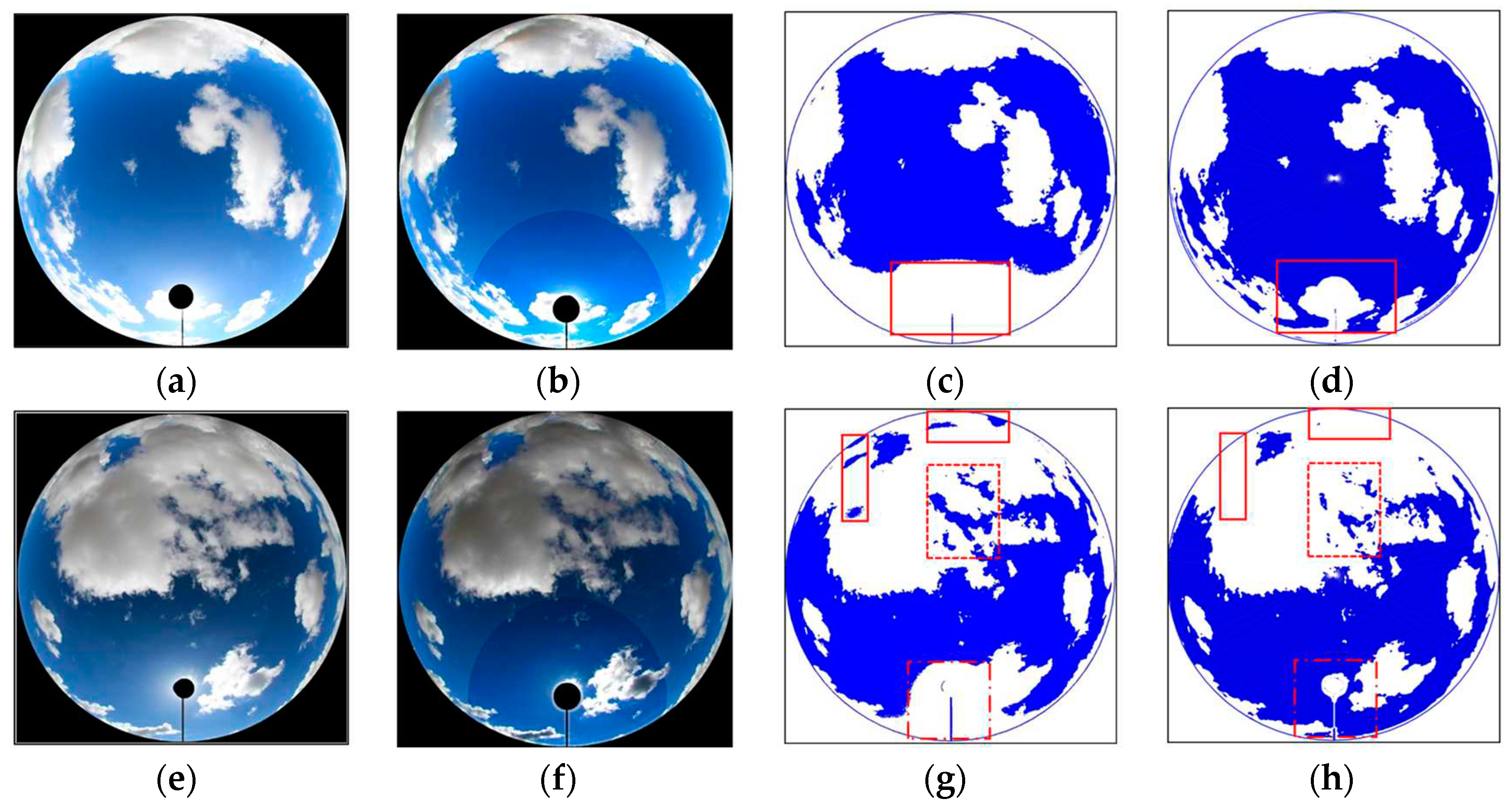

In the image enhancement algorithm, the atmospheric light value A directly controls the intensity of dehazing, so adaptive enhancement strategies are designed according to cloud type. For relatively thin cirrus, excessive enhancement may filter the clouds out, hence smaller A values are chosen to preserve details. Thicker cumulus and stratus, as well as clear sky images, allow larger A values that strengthen haze removal and eliminate overexposed areas around the sun and at the edges, giving more uniform sky distributions. We focused our analysis on two types of easily misclassified areas: the region surrounding the sun, where overexposure often leads to misjudgments, and the white glow at the sky edges, which can also readily cause errors. To improve segmentation quality, additional processing is applied to these two areas. The sun's position can be determined by locating the occulting disk, which allows enhanced dehazing to be applied to this circular region to reduce white glow. For the sky edge, after ground elements are removed, an annular region along the edge is designated for strengthened haze removal to reduce the influence of white glow on identification. With these designs, misjudgments around the sun and at the edges can be effectively controlled, improving cloud segmentation quality.
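One way to delineate the two specially treated regions is with boolean masks, as in the sketch below. The function names, the assumption that the sun center is already known (for example from the occulting disk position), and all radii are hypothetical and serve only as illustration.

```python
import numpy as np


def disk_mask(shape: tuple[int, int], center: tuple[float, float], radius: float) -> np.ndarray:
    """Boolean mask of the pixels within `radius` of `center`, given as (row, col)."""
    yy, xx = np.ogrid[:shape[0], :shape[1]]
    return (yy - center[0]) ** 2 + (xx - center[1]) ** 2 <= radius ** 2


def annulus_mask(shape: tuple[int, int], r_inner: float, r_outer: float) -> np.ndarray:
    """Boolean mask of the ring between two radii around the image center."""
    center = ((shape[0] - 1) / 2, (shape[1] - 1) / 2)
    return disk_mask(shape, center, r_outer) & ~disk_mask(shape, center, r_inner)
```

The union of a sun disk and an edge annulus could then select where a more strongly dehazed version of the image replaces the normally enhanced one, for example with `np.where(mask[..., None], strong, normal)`.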

3.4. Finite Element Segmentation and K-means Clustering

Based on the cloud type classification results, we propose an adaptive image segmentation method tailored to cloud morphology. Different cloud types exhibit different shapes and therefore need customized segmentation strategies. Specifically, cirrus clouds appear faint against the sky with indistinct edges, requiring finer regional divisions. Clear sky images contain only blue sky and black background, so a few sectors suffice. Cumulus has discernible but potentially unevenly lit edges, warranting more sectors. Finally, stratus images primarily show clouds and black background, so fewer sectors suffice. These differentiated approaches are formulated per cloud type to extract representative features for improved cloud quantification.

With the images segmented adaptively by cloud type, a K-means clustering algorithm is then applied in each sector to extract further cloud information. It first randomly initializes K cluster centers, then assigns each sample to the nearest center based on distance, and then recalculates the cluster centers [28]. This iterative process converges when the centers no longer move. A suitable K value is chosen per sector to partition it into sky, cloud and background. Each region is then recolored according to the clustering outcome to obtain a preliminary cloud detection image. This design uses the clustering capability of K-means to distinguish sky and cloud elements in each sector automatically.
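The sector-wise clustering can be sketched as follows. This is a minimal illustration, assuming that sectors are equal angular wedges around the image center (the exact sector geometry is not defined here) and using a plain NumPy K-means with a fixed random seed. All function names are hypothetical.

```python
import numpy as np


def sector_labels(shape: tuple[int, int], n_sectors: int) -> np.ndarray:
    """Assign every pixel to one of n_sectors equal angular wedges."""
    yy, xx = np.indices(shape)
    cy, cx = (shape[0] - 1) / 2, (shape[1] - 1) / 2
    angle = np.arctan2(yy - cy, xx - cx)  # in [-pi, pi]
    idx = np.floor((angle + np.pi) / (2 * np.pi) * n_sectors).astype(int)
    return np.minimum(idx, n_sectors - 1)  # angle == pi goes to the last wedge


def kmeans(points: np.ndarray, k: int, n_iter: int = 100, seed: int = 0) -> tuple[np.ndarray, np.ndarray]:
    """Plain K-means on an (n, d) array; returns (labels, centers)."""
    rng = np.random.default_rng(seed)
    # Randomly pick k samples as initial centers (requires at least k samples).
    centers = points[rng.choice(len(points), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        # Assign each sample to its nearest center.
        dist = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        # Recompute centers; an empty cluster keeps its previous center.
        new_centers = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break  # centers no longer move: converged
        centers = new_centers
    return labels, centers


def cluster_by_sector(image: np.ndarray, n_sectors: int, k: int) -> np.ndarray:
    """Run K-means on the RGB values of each sector independently."""
    sectors = sector_labels(image.shape[:2], n_sectors)
    out = np.zeros(image.shape[:2], dtype=int)
    for s in range(n_sectors):
        mask = sectors == s
        labels, _ = kmeans(image[mask].astype(float), k)
        out[mask] = labels
    return out
```

Cluster indices are arbitrary within each sector, so mapping them to sky, cloud and background would need an additional step, such as comparing the colors of the cluster centers.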

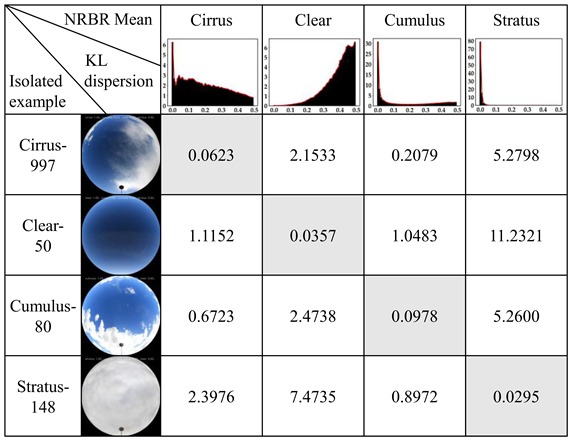

In traditional cloud segmentation, the Normalized Red/Blue Ratio (NRBR) method has certain shortcomings. First, it struggles to distinguish the intense white light around the sun, often misclassifying these overexposed areas as cloud. Second, it fails to handle the bottoms of thick cloud layers, which appear dark because little light penetrates and may be erroneously classified as clear sky. Both misclassifications stem from the NRBR method relying too heavily on RGB color features without considering lighting conditions. Under atypical lighting distributions, cloud and sky are hard to separate from red/blue ratio values alone. Therefore, after obtaining the initial cloud segmentation results, we propose a mask-based refined segmentation method to improve them further.

The approach first extracts the predicted sky regions from the initial segmentation results and uses them as a mask template. Each sector then undergoes k-means clustering to identify blue sky and white clouds, and after the sectors are concatenated the result is restricted to the blue-sky region defined by the mask. This process yields more nuanced identification results. By conducting the secondary segmentation only on key areas and reusing the results of the adaptive k-means extraction, a finer segmentation is achieved. Building on the initial segmentation, this approach reduces misclassifications at cloud edges and generates more accurate final cloud detection results. This design, guided by prior masks for localized refinement, enhances the quality of cloud segmentation.
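The two ideas in this subsection can be illustrated with a short sketch. The NRBR definition used here, (R − B)/(R + B), is one common form and is an assumption, since the ratio is not defined explicitly in the text. The refinement rule, which accepts second-pass cloud pixels only inside the initially predicted sky mask, is likewise one possible reading of the mask-based procedure. Function names are hypothetical.

```python
import numpy as np


def nrbr(image: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Normalized red/blue ratio (R - B) / (R + B), computed per pixel."""
    r = image[..., 0].astype(float)
    b = image[..., 2].astype(float)
    return (r - b) / (r + b + eps)  # eps avoids division by zero on black pixels


def refine_within_sky(initial_cloud: np.ndarray, initial_sky: np.ndarray,
                      second_pass_cloud: np.ndarray) -> np.ndarray:
    """Keep the initial cloud pixels and add second-pass cloud pixels
    only where the initial segmentation predicted sky."""
    return initial_cloud | (second_pass_cloud & initial_sky)
```

Blue sky pixels give strongly negative NRBR values while white cloud and overexposed pixels both lie near zero, which is why the ratio alone cannot separate glare around the sun from cloud.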

5. Discussion

Cloud detection and identification has long been a research focus and challenge in meteorology and remote sensing. Current mainstream ground-based cloud detection methods fall into two categories: traditional image processing approaches and deep learning based techniques [31]. Traditional methods like threshold segmentation and texture analysis rely on manually extracted features and adapt poorly to atypical cases, whereas deep learning can learn features automatically and achieve superior performance. This study belongs to the latter category, using the YOLOv8 model for cloud categorization to exploit deep learning's strength in visual feature extraction. Compared with other deep learning based cloud detection studies, the innovations of this research are threefold: (1) An adaptive segmentation strategy tailored to different cloud types was designed, with segmentation parameters set according to cloud morphology to extract representative traits, improving partitioning accuracy. (2) Adaptive image enhancement algorithms were introduced, which markedly improved detection in regions strongly affected by illumination, such as the solar vicinity, over conventional NRBR segmentation. (3) Multi-level refinement was adopted to better capture cloud edges and bottoms. These aspects enhanced adaptivity to various cloud types under complex illumination. Limitations of this study include: (1) the small dataset scale, containing only samples from the Yangbajing area due to geographic and instrumentation constraints; (2) the substantial computational resources demanded by model training and tuning; and (3) room for further improving adaptability to overexposed regions. Future work may address these deficiencies via larger samples, cloud computing resources, and more powerful models.

Although validated only at the Yangbajing Comprehensive Atmospheric Observatory in Tibet, this approach is designed to be scalable and versatile. First, the end-to-end recognition framework has generalization capability: with appropriate fine-tuning, it can adapt to cloud morphological traits in other regions. Second, the adaptive image enhancement strategy does not depend on specific lighting conditions and is hence applicable to diverse environments; the finite element segmentation with k-means clustering can likewise generalize to cloud quantification at other sites, and regions with more prevalent haze would benefit in particular. The modular design makes individual components easy to upgrade. Therefore, the proposed technique can be transferred within a cloud monitoring network to enable coordinated high-precision multi-regional recognition, providing a reference paradigm for cloud detection tasks under other challenging illumination conditions.

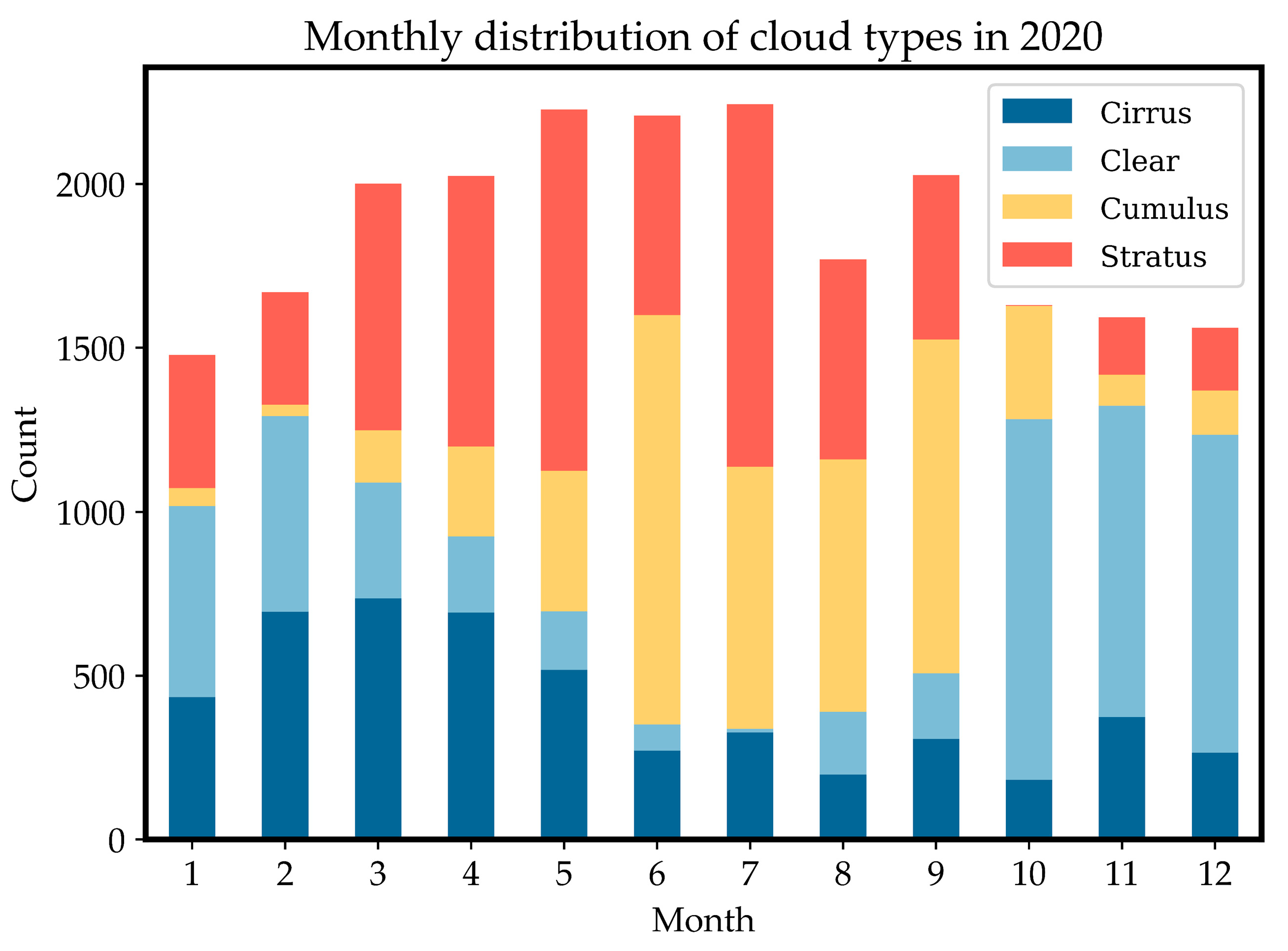

An additional crucial area of research is the correlation with solar radiation. We noticed that different cloud types relate to radiation differently throughout the day: occurrences of stratus and cumulus clouds are closely associated with radiation, concentrating in the higher-insolation afternoon periods, while clear sky and cirrus clouds vary less with radiation trends. Solar radiation is the vital energy source driving weather processes, directly influencing the generation, evolution and dissipation of various cloud types [32]. For the Tibetan region, solar radiation largely shapes the climate characteristics [33]. Abundant insolation provides the energy for water vapor to fuel convective activity, which favors thick cloud buildup. Additionally, solar radiation is closely linked with glacier ablation, ecological transformations and other changes over the Qinghai-Tibet Plateau [34]. Looking ahead, with intensifying global warming, solar intensity may continue strengthening, potentially increasing stratus and cumulus clouds over Tibet and further affecting climate via cloud fraction changes. Hence, persistent monitoring of the relationships between cloud types and solar radiation is significant for predicting future regional climate change tendencies. Our study lays favorable groundwork for constructing coupled models between cloud amount and radiation.

6. Conclusions

This research proposes a deep learning based whole-sky image cloud detection solution. A 4000-image dataset spanning cirrus, clear sky, cumulus and stratus categories was constructed, and the method achieved markedly improved recognition and quantification outcomes in Tibet's Yangbajing area. Specifically, an end-to-end cloud recognition framework was built, first using the YOLOv8 model to classify cloud types with over 95% accuracy. Building on this, adaptive segmentation strategies were designed for different cloud shapes, notably enhancing segmentation precision by extracting representative traits, especially for indistinct cirrus clouds. Moreover, adaptive image enhancement algorithms were introduced to significantly improve detection in illumination-challenging areas around the sun. Finally, multi-level refinement modules based on finite element techniques further improved the identification of cloud edges and details. Validation on the 2020 annual Yangbajing dataset shows that stratus clouds constitute the predominant type, appearing in 30% of daytime cloud images, which delivers valuable data support for regional climate studies. In conclusion, by integrating modules for classification, adaptive segmentation, and image enhancement, this framework significantly raises the automation level of ground-based cloud quantification and creates a strong technological foundation for research on climate change. It also offers a reference paradigm for other cloud recognition tasks under complex lighting environments.

Author Contributions

Conceptualization, Y.W. and J.L.; methodology, Y.W. and J.L.; software, Y.W. and J.L.; validation, Y.W. and J.L.; formal analysis, Y.W., J.L., D.L. and D.S.; investigation, Y.W., J.L. and Y.P.; resources, Y.W., J.L., Y.P., L.W., W.Z., J.Z., D.S. and D.L.; data curation, Y.W., J.L., Y.P., L.W., W.Z., X.H., Z.Q. and J.Z.; writing - original draft preparation, J.L.; writing - review and editing, all authors; funding acquisition, Y.W. All authors have read and agreed to the published version of the manuscript.