Submitted: 02 November 2023

Posted: 02 November 2023

Abstract

Keywords:

1. Introduction

2. Related Work

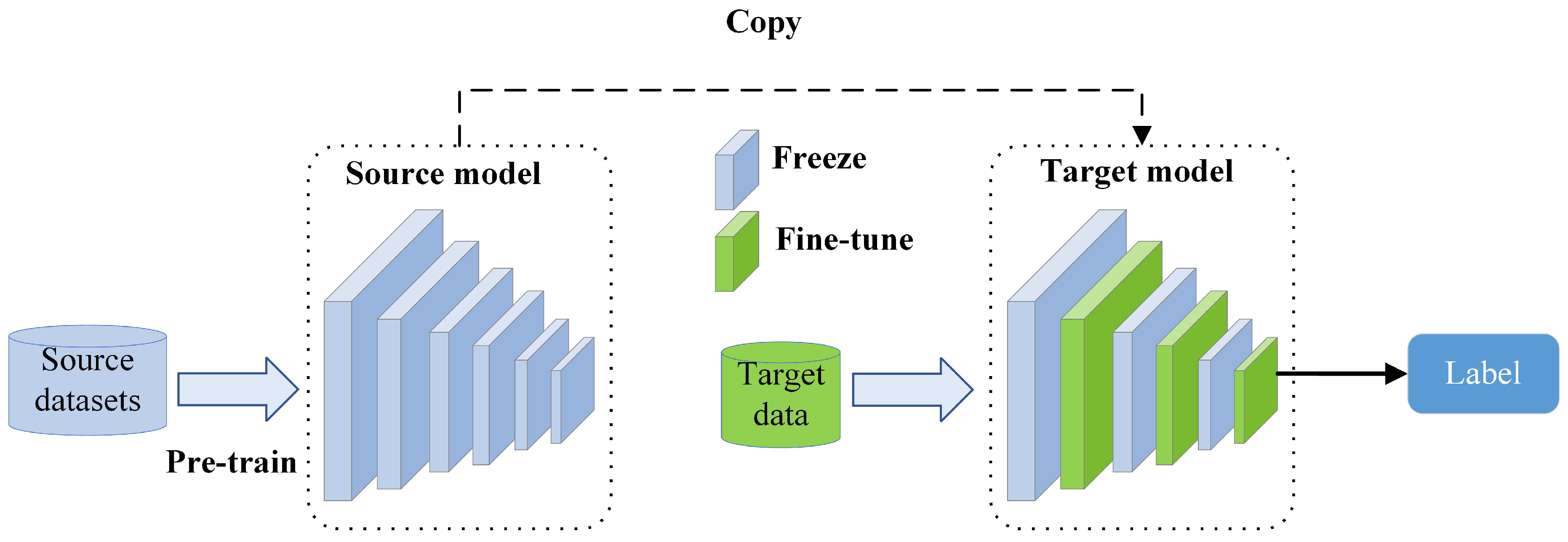

2.1. Traditional Fine-tuning Methods

2.2. Optimization-based Fine-tuning Methods

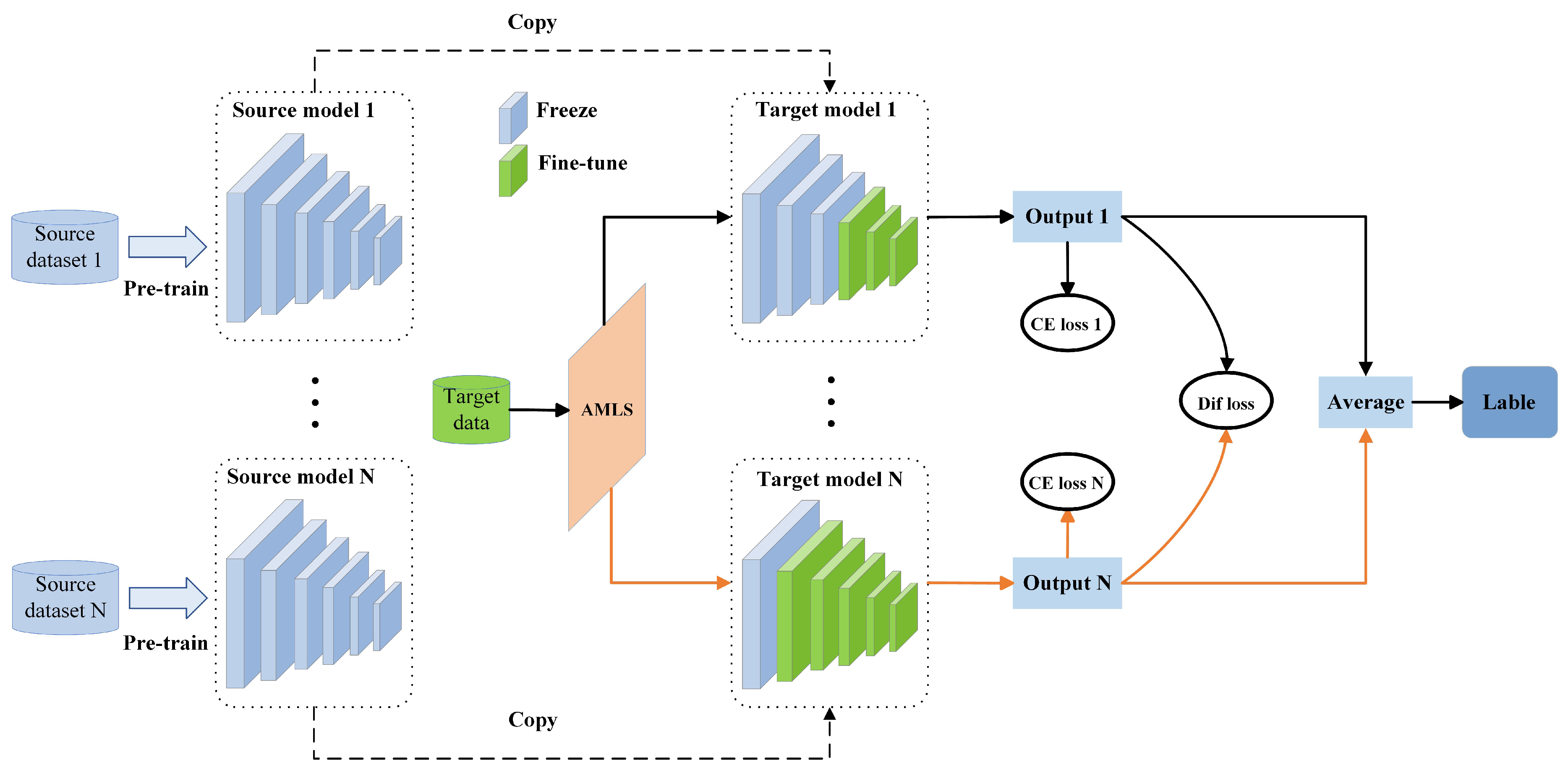

3. Adaptive Multi-source Domain Collaborative Fine-tuning Framework

3.1. Adaptive Multi-source Domain Layer Selection Strategy

| Algorithm 1: Adaptive multi-source domain layer selection strategy. |
|---|
| Input: The source-domain models; target data; population size n; the maximum number of iterations G of the population. |
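The inputs listed for Algorithm 1 (a population size n and a maximum number of iterations G) suggest a population-based search over which layers to fine-tune. The following is a minimal sketch of such a search, not the authors' algorithm: the binary mask encoding, the truncation selection, the one-point crossover, the bit-flip mutation, and the names `select_layers`, `fitness`, and `mutation_rate` are all assumptions made for illustration. In practice, `fitness` would presumably score a mask by fine-tuning the selected layers of the source-domain models on the target data and measuring validation accuracy.

```python
import random


def select_layers(num_layers, fitness, n=8, G=10, mutation_rate=0.1, seed=0):
    """Search binary layer masks (1 = fine-tune the layer, 0 = keep it frozen).

    Hypothetical sketch: `fitness` maps a mask to a score to maximize.
    """
    if num_layers < 2 or n < 2:
        raise ValueError("need at least two layers and two individuals")
    rng = random.Random(seed)
    # Random initial population of n masks.
    population = [[rng.randint(0, 1) for _ in range(num_layers)] for _ in range(n)]
    best = max(population, key=fitness)
    for _ in range(G):
        # Truncation selection: the better half become parents.
        parents = sorted(population, key=fitness, reverse=True)[: max(2, n // 2)]
        children = []
        while len(children) < n:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, num_layers)  # one-point crossover
            child = a[:cut] + b[cut:]
            # Bit-flip mutation with probability `mutation_rate` per layer.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = children
        candidate = max(population, key=fitness)
        if fitness(candidate) > fitness(best):
            best = candidate
    return best


# Toy usage: the fitness rewards agreement with a made-up "ideal" mask.
ideal = [1, 0, 1, 1, 0, 0]
toy_fitness = lambda mask: sum(int(a == b) for a, b in zip(mask, ideal))
print(select_layers(len(ideal), toy_fitness, n=8, G=10, seed=1))
```

Because each fitness evaluation would involve a fine-tuning run, a real implementation would likely cache scores per mask rather than recompute them as this sketch does.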

3.2. Multi-source Collaborative Loss Function

4. Experimental Design and Analysis of the Obtained Results

4.1. Experimental Setup

- Train-From-Scratch: This method trains the model anew using randomly initialized weights without applying any transfer learning methods.

- Standard Fine-Tuning: This method fine-tunes all the parameters of the source-domain model on the target dataset [12].

- L2-SP: This regularized fine-tuning method uses an L2 penalty in the loss function to ensure that the fine-tuned model is similar to the pre-trained model [13].

- Child-Tuning: This method selects a child network in the source-domain model for fine-tuning through a Bernoulli distribution [15].

- AdaFilter: This method uses a policy network to determine which filter parameters need to be fine-tuned [20].

- ALS: This method uses a genetic algorithm to automatically select an effective update layer for transfer learning [18].
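Two of the baselines above can be illustrated with a few lines of code. The sketch below shows an L2 penalty toward the pre-trained weights, as in L2-SP, and a Bernoulli-sampled update mask, as in Child-Tuning. It operates on flat lists of floats rather than real model tensors; the function names, the coefficient `alpha`, and the parameter `keep_prob` are assumptions for illustration, and details of the original methods (such as the separate penalty on newly added layers in L2-SP or gradient rescaling in Child-Tuning) are omitted.

```python
import random


def l2_sp_penalty(weights, pretrained, alpha=0.01):
    """L2 penalty that pulls fine-tuned weights toward the pre-trained ones."""
    return 0.5 * alpha * sum((w - w0) ** 2 for w, w0 in zip(weights, pretrained))


def bernoulli_child_mask(num_params, keep_prob, seed=0):
    """Sample the child network: 1 = parameter is updated, 0 = frozen."""
    rng = random.Random(seed)
    return [1 if rng.random() < keep_prob else 0 for _ in range(num_params)]


def masked_sgd_step(weights, grads, mask, lr=0.1):
    """Plain SGD step applied only to the parameters selected by the mask."""
    return [w - lr * g * m for w, g, m in zip(weights, grads, mask)]


# Toy usage with made-up numbers.
pretrained = [0.0, 1.0, -1.0, 0.5]
weights = [0.2, 0.9, -1.0, 0.7]
grads = [1.0, -1.0, 0.5, 0.0]

print(l2_sp_penalty(weights, pretrained, alpha=0.1))
mask = bernoulli_child_mask(len(weights), keep_prob=0.5, seed=42)
print(mask, masked_sgd_step(weights, grads, mask))
```

The penalty is zero when the fine-tuned weights equal the pre-trained ones and grows quadratically with the distance between them, which is what keeps the fine-tuned model close to its starting point.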

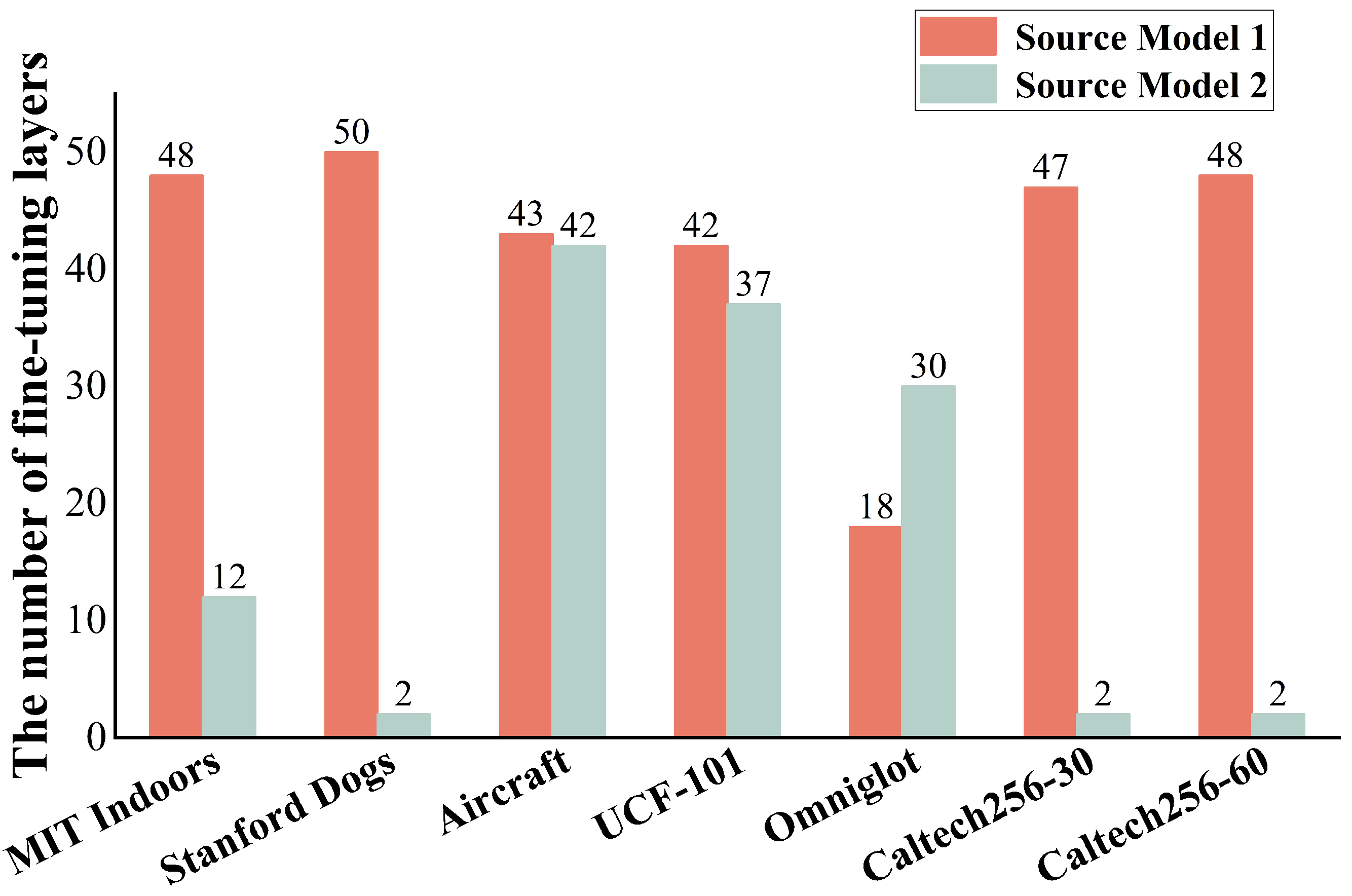

4.2. Effectiveness Analysis of the Adaptive Multi-source Domain Layer Selection Strategy

4.3. Effectiveness Analysis of the Multi-source Collaborative Loss Function

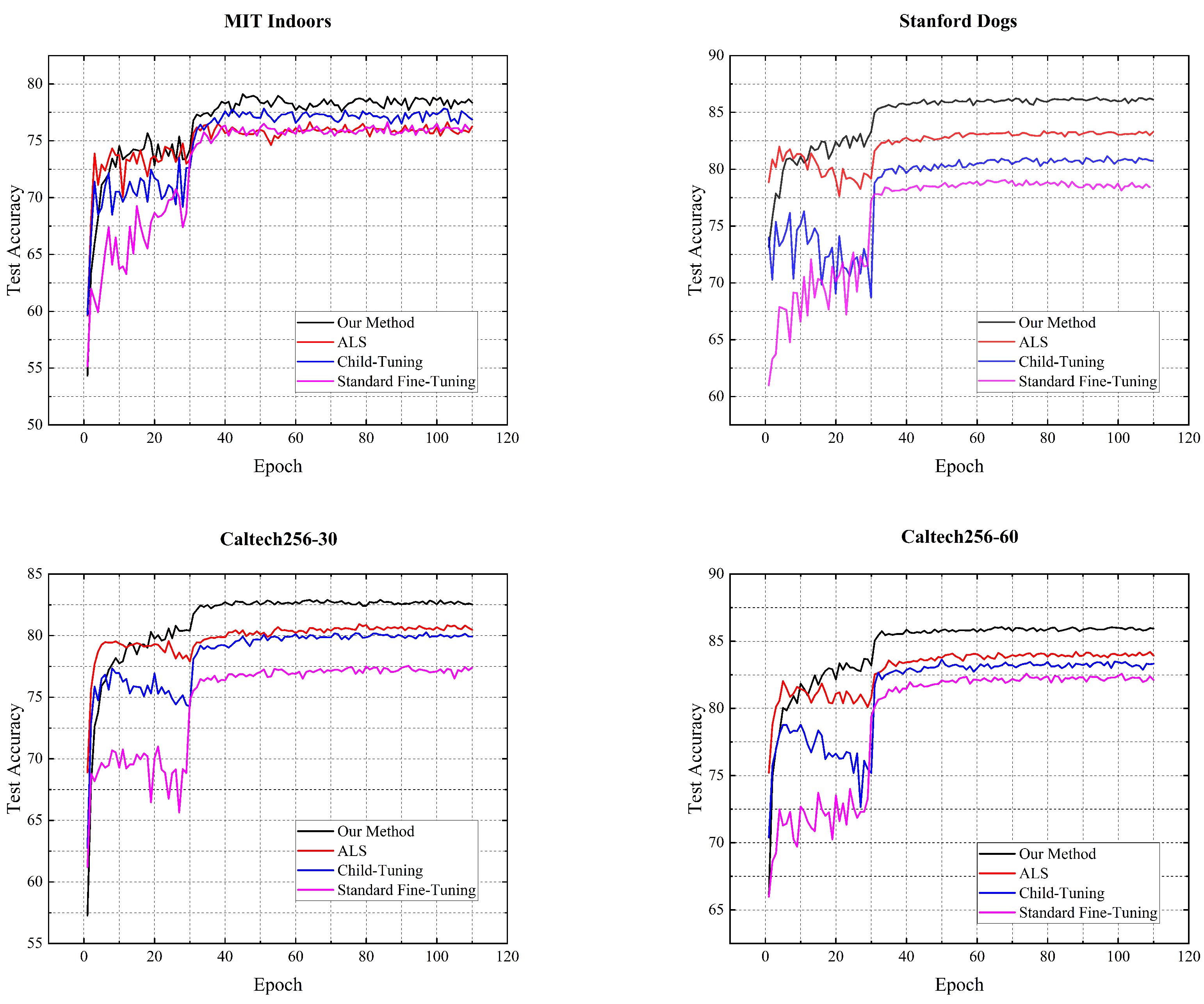

4.4. Comparison of the Adaptive Multi-source Collaborative Fine-tuning Method with State-of-the-art Fine-tuning Methods

4.5. Experimental Findings

5. Conclusion

Author Contributions

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Pan, S. J.; Yang, Q. A Survey on Transfer Learning. IEEE Transactions on Knowledge and Data Engineering 2010, 22, 1345–1359.

2. Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A Comprehensive Survey on Transfer Learning. Proceedings of the IEEE 2021, 109, 43–76.

3. Alhussan, A. A.; Abdelhamid, A. A.; Towfek, S. K.; Ibrahim, A.; Abualigah, L.; Khodadadi, N.; Khafaga, D. S.; Al-Otaibi, S.; Ahmed, A. E. Classification of Breast Cancer Using Transfer Learning and Advanced Al-Biruni Earth Radius Optimization. Biomimetics 2023, 8.

4. Li, H.; Fowlkes, C.; Yang, H.; Dabeer, O.; Tu, Z.; Soatto, S. Guided Recommendation for Model Fine-Tuning. In 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2023, pp. 3633–3642.

5. Dai, Z.; Cai, B.; Lin, Y.; Chen, J. Unsupervised Pre-Training for Detection Transformers. IEEE Transactions on Pattern Analysis and Machine Intelligence 2023, 45, 12772–12782.

6. Chi, Z.; Huang, H.; Liu, L.; Bai, Y.; Gao, X.; Mao, X. L. Can Pretrained English Language Models Benefit Non-English NLP Systems in Low-Resource Scenarios? IEEE/ACM Transactions on Audio, Speech, and Language Processing 2023, 1–14.

7. Chen, L. W.; Rudnicky, A. Exploring Wav2vec 2.0 Fine Tuning for Improved Speech Emotion Recognition. In 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2023, pp. 1–5.

8. Liao, W.; Zhang, Q.; Yuan, B.; Zhang, G.; Lu, J. Heterogeneous Multidomain Recommender System Through Adversarial Learning. IEEE Transactions on Neural Networks and Learning Systems 2022, 1–13.

9. Badawy, M.; Balaha, H. M.; Maklad, A. S.; Almars, A. M.; Elhosseini, M. A. Revolutionizing Oral Cancer Detection: An Approach Using Aquila and Gorilla Algorithms Optimized Transfer Learning-Based CNNs. Biomimetics 2023, 8.

10. Tian, J.; Dai, X.; Ma, C. Y.; He, Z.; Liu, Y. C.; Kira, Z. Trainable Projected Gradient Method for Robust Fine-Tuning. In 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2023, pp. 7836–7845.

11. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; Uszkoreit, J.; Houlsby, N. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. In International Conference on Learning Representations (ICLR) 2021.

12. Kornblith, S.; Shlens, J.; Le, Q. V. Do Better ImageNet Models Transfer Better? In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2019, pp. 2656–2666.

13. Li, X.; Grandvalet, Y.; Davoine, F. Explicit Inductive Bias for Transfer Learning with Convolutional Networks. In Proceedings of the 35th International Conference on Machine Learning 2018, pp. 2825–2834.

14. Peters, M. E.; Ruder, S.; Smith, N. A. To Tune or Not to Tune? Adapting Pretrained Representations to Diverse Tasks. In Proceedings of the 4th Workshop on Representation Learning for NLP 2019, pp. 7–14.

15. Xu, R.; Luo, F.; Zhang, Z.; Tan, C.; Chang, B.; Huang, S.; Huang, F. Raise a Child in Large Language Model: Towards Effective and Generalizable Fine-tuning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing 2021, pp. 9514–9528.

16. Shen, Z.; Liu, Z.; Qin, J.; Savvides, M.; Cheng, K.-T. Partial Is Better Than All: Revisiting Fine-tuning Strategy for Few-shot Learning. In Proceedings of the AAAI Conference on Artificial Intelligence 2021, pp. 9594–9602.

17. Wang, W.; Chen, Y.; Ghamisi, P. Transferring CNN With Adaptive Learning for Remote Sensing Scene Classification. IEEE Transactions on Geoscience and Remote Sensing 2022, 60, 1–18.

18. Nagae, S.; Kanda, D.; Kawai, S.; Nobuhara, H. Automatic Layer Selection for Transfer Learning and Quantitative Evaluation of Layer Effectiveness. Neurocomputing 2022, 469, 151–162.

19. Jang, Y.; Lee, H.; Hwang, S. J.; Shin, J. Learning What and Where to Transfer. In Proceedings of the 36th International Conference on Machine Learning 2019, pp. 3030–3039.

20. Guo, Y.; Li, Y.; Wang, L.; Rosing, T. AdaFilter: Adaptive Filter Fine-tuning for Deep Transfer Learning. In Proceedings of the AAAI Conference on Artificial Intelligence 2020, pp. 4060–4066.

21. Wen, Y. W.; Peng, S. H.; Ting, C. K. Two-Stage Evolutionary Neural Architecture Search for Transfer Learning. IEEE Transactions on Evolutionary Computation 2021, 25, 928–940.

22. Mendes, R. d. L.; Alves, A. H. d. S.; Gomes, M. d. S.; Bertarini, P. L. L.; Amaral, L. R. d. Many Layer Transfer Learning Genetic Algorithm (MLTLGA): A New Evolutionary Transfer Learning Approach Applied to Pneumonia Classification. In 2021 IEEE Congress on Evolutionary Computation (CEC) 2021, pp. 2476–2482.

23. Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How Transferable Are Features in Deep Neural Networks? In Proceedings of the 27th International Conference on Neural Information Processing Systems 2014, pp. 3320–3328.

24. Chen, M.; Radford, A.; Child, R.; Wu, J.; Jun, H.; Luan, D.; Sutskever, I. Generative Pretraining from Pixels. In Proceedings of the 37th International Conference on Machine Learning 2020, pp. 1691–1703.

25. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P. J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. The Journal of Machine Learning Research 2020, 21, 5485–5551.

26. Raghu, S.; Sriraam, N.; Temel, Y.; Rao, S. V.; Kubben, P. L. EEG Based Multi-class Seizure Type Classification Using Convolutional Neural Network and Transfer Learning. Neural Networks 2020, 124, 202–212.

27. Basha, S. H. S.; Vinakota, S. K.; Pulabaigari, V.; Mukherjee, S.; Dubey, S. R. AutoTune: Automatically Tuning Convolutional Neural Networks for Improved Transfer Learning. Neural Networks 2021, 133, 112–122.

28. Zunair, H.; Mohammed, N.; Momen, S. Unconventional Wisdom: A New Transfer Learning Approach Applied to Bengali Numeral Classification. In 2018 International Conference on Bangla Speech and Language Processing (ICBSLP) 2018, pp. 1–6.

29. Ghafoorian, M.; Mehrtash, A.; Kapur, T.; Karssemeijer, N.; Marchiori, E.; Pesteie, M.; Guttmann, C. R. G.; de Leeuw, F.-E.; Tempany, C. M.; van Ginneken, B.; Fedorov, A.; Abolmaesumi, P.; Platel, B.; Wells, W. M. Transfer Learning for Domain Adaptation in MRI: Application in Brain Lesion Segmentation. In Medical Image Computing and Computer Assisted Intervention-MICCAI 2017, pp. 516–524.

30. Guo, Y.; Shi, H.; Kumar, A.; Grauman, K.; Rosing, T.; Feris, R. SpotTune: Transfer Learning Through Adaptive Fine-Tuning. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2019, pp. 4800–4809.

31. Chen, L.; Yuan, F.; Yang, J.; He, X.; Li, C.; Yang, M. User-Specific Adaptive Fine-Tuning for Cross-Domain Recommendations. IEEE Transactions on Knowledge and Data Engineering 2023, 35, 3239–3252.

32. Vrbančič, G.; Podgorelec, V. Transfer Learning With Adaptive Fine-Tuning. IEEE Access 2020, 8, 196197–196211.

33. Hasana, M. M.; Ibrahim, M.; Ali, M. S. Speeding Up EfficientNet: Selecting Update Blocks of Convolutional Neural Networks using Genetic Algorithm in Transfer Learning. arXiv 2023, arXiv:00261.

34. Zeng, N.; Wang, Z.; Liu, W.; Zhang, H.; Hone, K.; Liu, X. A Dynamic Neighborhood-Based Switching Particle Swarm Optimization Algorithm. IEEE Transactions on Cybernetics 2022, 52, 9290–9301.

35. Zhu, Y.; Zhuang, F.; Wang, D. Aligning Domain-specific Distribution and Classifier for Cross-domain Classification from Multiple Sources. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence 2019, pp. 5989–5996.

36. Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. Technical report 2009.

37. Yamada, Y.; Otani, M. Does Robustness on ImageNet Transfer to Downstream Tasks? In 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2022, pp. 9205–9214.

38. Khosla, A.; Jayadevaprakash, N.; Yao, B.; Li, F.-F. Novel Dataset for Fine-grained Image Categorization: Stanford Dogs. In Proc. CVPR Workshop on Fine-Grained Visual Categorization (FGVC) 2011.

39. Quattoni, A.; Torralba, A. Recognizing Indoor Scenes. In 2009 IEEE Conference on Computer Vision and Pattern Recognition 2009, pp. 413–420.

40. Griffin, G.; Holub, A.; Perona, P. Caltech-256 Object Category Dataset. California Institute of Technology 2007.

41. Maji, S.; Rahtu, E.; Kannala, J.; Blaschko, M.; Vedaldi, A. Fine-grained Visual Classification of Aircraft. arXiv 2013, arXiv:1306.5151.

42. Bilen, H.; Fernando, B.; Gavves, E.; Vedaldi, A.; Gould, S. Dynamic Image Networks for Action Recognition. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2016, pp. 3034–3042.

43. Lake, B. M.; Salakhutdinov, R.; Tenenbaum, J. B. Human-level Concept Learning Through Probabilistic Program Induction. Science 2015, 350, 1332–1338.

| Target Datasets | Training Instances | Evaluation Instances | Classes |
|---|---|---|---|
| Stanford Dogs [38] | 12000 | 8580 | 120 |
| MIT Indoor [39] | 5360 | 1340 | 67 |
| Caltech256-30 [40] | 7680 | 5120 | 256 |
| Caltech256-60 [40] | 15360 | 5120 | 256 |
| Aircraft [41] | 3334 | 3333 | 100 |
| UCF-101 [42] | 7629 | 1908 | 101 |
| Omniglot [43] | 19476 | 6492 | 1623 |

| Selection Strategy | MIT Indoor | Stanford Dogs | Caltech256-30 | Caltech256-60 | Aircraft | UCF-101 | Omniglot |
|---|---|---|---|---|---|---|---|
| Random Selection | 77.91% | 81.32% | 77.59% | 79.02% | 49.50% | 72.43% | 85.15% |
| AMLS | 79.10% | 86.30% | 82.91% | 86.05% | 62.31% | 80.54% | 87.76% |

| Loss Function | MIT Indoor | Stanford Dogs | Caltech256-30 | Caltech256-60 | Aircraft | UCF-101 | Omniglot |
|---|---|---|---|---|---|---|---|
| (CE-loss, Model 1) | 76.19% | 84.79% | 82.59% | 84.65% | 54.39% | 76.25% | 87.30% |
| (CE-loss, Model 2) | 70.74% | 75.86% | 67.91% | 77.16% | 51.45% | 76.25% | 86.59% |
| (CE-loss, Model 1 and 2) | 78.95% | 85.31% | 81.03% | 84.86% | 57.03% | 78.13% | 87.53% |
| (MC-loss, Model 1 and 2) | 79.10% | 86.30% | 82.91% | 86.05% | 62.31% | 80.54% | 87.76% |

| Method | MIT Indoor | Stanford Dogs | Caltech256-30 | Caltech256-60 | Aircraft | UCF-101 | Omniglot |
|---|---|---|---|---|---|---|---|
| Train-From-Scratch | 40.82% | 42.45% | 25.41% | 47.55% | 12.12% | 43.61% | 84.82% |
| Standard Fine-Tuning [12] | 76.64% | 79.02% | 77.53% | 82.57% | 56.10% | 76.83% | 87.21% |
| L2-SP [13] | 76.41% | 79.69% | 79.33% | 82.89% | 56.52% | 74.33% | 86.92% |
| Child-Tuning [15] | 77.83% | 81.13% | 80.19% | 83.63% | 55.92% | 77.40% | 87.32% |
| AdaFilter [20] | 77.53% | 82.44% | 80.62% | 84.31% | 55.41% | 76.99% | 87.46% |
| ALS [18] | 76.64% | 83.34% | 80.93% | 84.21% | 56.04% | 75.78% | 87.09% |
| Ours | 79.10% | 86.30% | 82.91% | 86.05% | 62.31% | 80.54% | 87.76% |
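
To make the comparison in the method table easier to read, the short script below computes, for each target dataset, the strongest baseline and the margin by which the "Ours" row exceeds it. The accuracy values are copied from the table; the variable and function names are introduced here only for illustration. On these numbers the margin ranges from 0.30 percentage points (Omniglot, over AdaFilter) to 5.79 percentage points (Aircraft, over L2-SP).

```python
DATASETS = ["MIT Indoor", "Stanford Dogs", "Caltech256-30", "Caltech256-60",
            "Aircraft", "UCF-101", "Omniglot"]

# Accuracies (%) of the baseline methods, in the column order of DATASETS.
BASELINES = {
    "Train-From-Scratch":   [40.82, 42.45, 25.41, 47.55, 12.12, 43.61, 84.82],
    "Standard Fine-Tuning": [76.64, 79.02, 77.53, 82.57, 56.10, 76.83, 87.21],
    "L2-SP":                [76.41, 79.69, 79.33, 82.89, 56.52, 74.33, 86.92],
    "Child-Tuning":         [77.83, 81.13, 80.19, 83.63, 55.92, 77.40, 87.32],
    "AdaFilter":            [77.53, 82.44, 80.62, 84.31, 55.41, 76.99, 87.46],
    "ALS":                  [76.64, 83.34, 80.93, 84.21, 56.04, 75.78, 87.09],
}
OURS = [79.10, 86.30, 82.91, 86.05, 62.31, 80.54, 87.76]


def margins_over_best_baseline(datasets, baselines, ours):
    """Return {dataset: (best baseline name, margin in percentage points)}."""
    result = {}
    for i, name in enumerate(datasets):
        best_method = max(baselines, key=lambda m: baselines[m][i])
        margin = round(ours[i] - baselines[best_method][i], 2)
        result[name] = (best_method, margin)
    return result


for dataset, (method, margin) in margins_over_best_baseline(
        DATASETS, BASELINES, OURS).items():
    print(f"{dataset}: +{margin:.2f} points over {method}")
```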

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).