Introduction

In everyday communication, people often encounter noisy environments that impair their ability to listen effectively. Everyday listening is typically manageable because the brain can focus on relevant sounds and filter out distractions (Kerlin et al., 2010). However, individuals with auditory challenges, such as those with autism spectrum conditions (ASC), may struggle to ignore background noise and may hear and attend to sounds that others do not notice, leading to effortful listening and heightened sensitivity to sounds (Kirby et al., 2015; Landon et al., 2016). Individuals with ASC often exhibit reduced attention to language and atypical responses to noise, which affect their speech-in-noise (SiN) perception (O'Connor, 2012; Schwartz et al., 2018). In school settings, where effective communication is crucial, excessive listening effort can negatively impact learning, memory, and social interactions (Keen et al., 2016). This is concerning because inadequate SiN processing is linked to such challenges (Alcántara et al., 2004; Ashburner et al., 2008; Mallory & Keehn, 2021). Moreover, prolonged listening effort can cause cognitive overload, resulting in negative health impacts such as headaches, fatigue, and even depression (Pichora-Fuller et al., 2016).

Despite its importance, listening effort in individuals with ASC remains underexplored. Individuals with ASC exhibit distinct speech processing differences even in quiet conditions (Huang et al., 2018; Key & D'Ambrose Slaboch, 2021; Wang et al., 2017; Yu et al., 2015, 2018, 2021, 2022). Neurotypical children may also struggle with speech perception in noisy environments (McGarrigle et al., 2017; Prodi et al., 2019). To understand the nuanced between-group differences, previous research often focused on perception performance using task-based measures such as recognition threshold and accuracy rate, or assessed listening effort through questionnaires and scales like the Short Sensory Profile and the Listening Inventory for Education (Groen et al., 2009; Mair, 2013; Schafer et al., 2020). However, performance data have limitations because listeners may expend varying amounts of effort, using different cognitive strategies, to achieve similar intelligibility scores (Koelewijn et al., 2012). Self-reported measures and caregiver questionnaires may indicate listening effort, but they lack objective quantification and can be biased or inaccurate. To our knowledge, only two ASC studies have used objective measures of listening effort. Rance et al. (2017) compared the cortisol response of 26 children with ASC before and after a word-level SiN recognition task and found a non-significant increase over the course of the task, but a significant correlation between poorer recognition and higher cortisol levels. More recently, Feldman et al. (2022) adopted a dual-task paradigm with 32 children and adolescents with ASC, revealing longer response times for complex stimuli than for simpler ones during SiN recognition tasks.

The two studies are informative, but certain aspects warrant further exploration. Both studies lacked matched neurotypical (NT) comparison groups. Previous research indicates that NT children also struggle with processing speech in noisy environments, showing poorer performance or more effort compared to quiet settings (e.g., McGarrigle et al., 2017; Prodi et al., 2019). It is therefore necessary to examine whether children with ASC truly underperform their NT peers or expend extra effort during SiN perception tasks. These studies also did not fully account for the potential impact of atypical speech perception in ASC children when evaluating their SiN processing. Preceding studies have highlighted atypical speech-specific processing in the ASC population (Key & D'Ambrose Slaboch, 2021). Subpar performance or heightened effort in autism might therefore exist even in quiet conditions, not just because of background noise. To assert that noise significantly affects children with ASC, baseline data on their speech perception in quiet settings are crucial. Furthermore, both studies lacked comprehensive demographic considerations such as bilingualism and socioeconomic status, which are known to influence SiN processing in different ways (Schwab & Lew-Williams, 2016; Skoe & Karayanidi, 2019). Notably, the measures used in these studies capture listening effort only indirectly and do not reflect how the underlying processes unfold. The cortisol response is engendered by hypothalamic-pituitary-adrenal axis activity associated particularly with psychosocial stressors and feelings of distress (Francis & Love, 2020). However, listening effort as a cognitive demand might not directly lead to increased stress or related neurochemicals. The dual-task paradigm offers insights into resource allocation but falls short of revealing the real-time cognitive processes underpinning auditory behaviors.

Pupillometry, an objective and non-invasive tool for measuring online pupil dilation, has proven effective for assessing listening effort (Koelewijn et al., 2015; Koelewijn et al., 2017; Wendt et al., 2018; Zekveld et al., 2014). It is a sensitive and viable measure suitable for children as young as 2 years old (Blaser et al., 2014). To date, a few studies have adopted this method to assess listening effort in children during SiN processing (e.g., Cooper et al., 2015; McGarrigle et al., 2017). While these studies demonstrated the feasibility of using pupillometry for up to 40 minutes in school-aged children, its potential for ASC individuals remains largely untapped. Extant pupillometric studies on autism primarily address the pupillary light reflex and task-evoked pupillary responses to visual stimuli, with limited focus on speech processing (see de Vries et al., 2021 for a review). Nevertheless, pupillometry can be particularly advantageous when functional auditory measures are not available or sufficiently reliable due to participants’ inadequate cooperation or restricted verbal ability, both of which are common in children with ASC. It is also a promising translational tool for assessing listening effort and for revealing the precise time course of online speech perception processes in the ASC population, offering a novel avenue for understanding their SiN difficulties and the different patterns behind them.

Pupillary response analysis typically centers on three metrics: peak dilation/amplitude (the maximum pupil size within a post-stimulus interval), peak latency (the time taken to reach the peak dilation), and mean dilation over the same time window (Zekveld et al., 2011). Specifically, peak dilation is a robust indicator of maximum task-elicited cognitive load, marking the zenith of listening effort at a particular moment. Mean dilation gauges the sustained cognitive load throughout a task, reflecting the overall allocation of cognitive resources. Peak latency reflects the time required for an individual's cognitive system to fully engage and reach its peak load in response to an auditory stimulus. These metrics provide insights into the cognitive load, processing complexity, and working memory (Koelewijn et al., 2012; Wendt et al., 2016; Winn & Moore, 2018; Zekveld et al., 2010, 2011). Cumulative evidence indicates that interindividual capacity differences affect speech perception specifically in difficult conditions where greater allocated capacity boosts performance and processing load (Zekveld et al., 2018). ASC individuals often focus on details rather than global context, which can hinder their capacity to process more intricate linguistic or social information and curtail their ability for multimodal integration (Thye et al., 2018; Yi et al., 2022). Goldknopf (2013) postulated that in autism, especially in those with pronounced sensory symptoms, resources might be either narrowed or reduced, potentially with atypical intensity in restricted aspects (such as fewer modalities, less processing, and smaller cortical areas) or with a reduced amount allotted to each of those. Such allocation patterns might manifest as reduced peak latency and increased peak amplitude during SiN perception.

So far, it has remained unclear how the aforementioned metrics behave in the ASC population and which aspects best distinguish ASC from NT populations during auditory tasks. To address these gaps, we adopted a 2×2 mixed factorial design to examine the effects of subject group (ASC vs. NT) and listening condition (Quiet vs. Noise) on the three pupillometry indices of listening effort in Mandarin-speaking school-age children. The study aimed to uncover how ASC individuals allocate cognitive resources during listening tasks. We hypothesized that noise would increase listening effort for both groups, and that ASC individuals would demonstrate unique patterns regardless of listening condition. We also anticipated an interaction between condition and group, with larger group differences in listening effort under noisy conditions. The findings will shed light on the nature of listening effort for SiN at the group and individual levels, potentially paving the way for targeted interventions.

Method

Participants

This study was approved by the Institutional Review Board of Shanghai Mental Health Center. Written informed consent was obtained before the experiments. A total of 45 children were tested, and three children with ASC were excluded due to failure to capture sufficient pupillometric data, resulting in age- and sex-matched cohorts of 23 ASC and 19 NT individuals. All participants were from middle-class families in nearby cities including Shanghai, primarily speaking Mandarin Chinese and Wu dialects. Most had prior English learning experience, except two young ASC participants. All were right-handed with no history of visual or hearing impairments, neurological injuries, or disorders such as Fragile X syndrome, Down syndrome, and epilepsy, which can co-occur with autism but have distinct physical symptoms. Because the symptom overlap between ASC and attention-deficit/hyperactivity disorder (ADHD) could skew results, participants were screened for ADHD using the Chinese version of the Swanson, Nolan, and Pelham version IV scale (SNAP-IV; Gau et al., 2009), which revealed no significant between-group differences (Table 1).

Both groups were evaluated using the Chinese version of the Autism Behavior Checklist (ABC; Krug et al., 1980) under the supervision of licensed psychologists. The ASC group scored above the threshold of 68, indicating a high probability of autism, while the NT group scored below 31. Diagnoses in the ASC group were confirmed through comprehensive assessments adhering to American Psychiatric Association criteria (2013), conducted by accredited pediatric neurologists using the Childhood Autism Rating Scale (CARS; Schopler et al., 1980). ASC group scores fell within the mild to moderate range (30 to 36).

Participants were instructed to refrain from caffeine and adrenergic-related medications for 48 hours before testing to avoid affecting pupillary responses (Abokyi et al., 2017; Reimer et al., 2016). Cognitive abilities were assessed by two certified examiners using the Wechsler Intelligence Scale for Children 4th edition-Chinese version (WISC-IV-Chinese; Zhang, 2009). All had IQ scores above 70, indicating no intellectual disability, though a slight between-group IQ difference was noted (Table 1). A certified audiologist measured the pure-tone hearing thresholds of participants. Thresholds in both ears were ≤ 20 dB hearing level (HL) at frequencies of 250, 500, 1000, 2000, and 4000 Hz, and ≤ 30 dB HL at 6000 and 8000 Hz. As prior research (Ohlenforst et al., 2018; Wendt et al., 2018) suggests a correlation between peak dilation and speech recognition thresholds (SRTs, the minimum sound intensity level for identifying speech at 50% accuracy), we also measured participants’ SRTs using an adaptive procedure. Subsequent analysis revealed no significant between-group SRT differences (Table 1).

Stimuli

Sentences from the Mandarin Hearing in Noise Test for Children (MHINT-C; Chen & Wong, 2020), spoken by a male speaker, were delivered at a fixed 65 dBA as stimuli. Prior research linked peak pupillary dilation with receptive vocabulary knowledge, suggesting that comprehension might affect pupillary responses. Parents of children with ASC therefore completed a questionnaire on their children's familiarity with the words in the sentences. Sentences with similar percentages of unfamiliar words were chosen for the pupillometric tests. The potential impact of word familiarity on pupillary dilation was examined with Pearson's correlation, which showed a weak but significant association (r = 0.18, p = 0.005), suggesting limited influence on pupillary responses in our study.

During SiN perception tasks, a speech-spectrum-shaped steady-state masker was presented at 0 dB signal-to-noise ratio (SNR), ensuring equal signal and noise power. Normal-hearing adults' listening effort peaks around their SRT value, nearly -4 dB SNR (Wendt et al., 2018). In general, children require more favorable SNRs for adult-like SiN task performance (e.g., Leibold & Buss, 2013; Nittrouer & Boothroyd, 1990; Schafer et al., 2018). This difference might be more pronounced in children with ASC, potentially requiring even higher SNRs. To manage this, a 0 dB SNR was chosen based on participants' SRT data, aiming to prevent task disengagement under overly challenging SNR conditions and to mitigate potential confounding effects. Careful masker selection was also vital to avoid confounding the results. Both the acoustical and the linguistic attributes of a masker significantly impact listening effort. Complex maskers can lead to disengagement, particularly in children whose cognitive capacities are still developing (Johnson et al., 2014; Koelewijn et al., 2012; Ohlenforst et al., 2018; Wendt et al., 2017). Therefore, a basic steady-state noise masker was chosen to minimize potential disengagement, a choice validated in our recent study (Xu et al., 2023).
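To make the 0 dB SNR setting concrete, the following minimal sketch rescales a masker so that the speech-to-masker level difference equals a target SNR. It is an illustration only and not the authors' MATLAB/PsychToolbox presentation code; the function names (`rms`, `measure_snr_db`, `scale_masker_to_snr`) and the toy sine-wave signals are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def measure_snr_db(speech, masker):
    """SNR in dB: 20 * log10 of the speech-to-masker RMS ratio."""
    return 20 * math.log10(rms(speech) / rms(masker))


def scale_masker_to_snr(speech, masker, target_snr_db):
    """Return a rescaled copy of the masker that yields the target SNR."""
    target_masker_rms = rms(speech) / (10 ** (target_snr_db / 20))
    gain = target_masker_rms / rms(masker)
    return [gain * m for m in masker]


# Hypothetical toy signals standing in for a sentence and a noise masker.
speech = [0.5 * math.sin(2 * math.pi * 5 * t / 100) for t in range(100)]
masker = [0.1 * math.sin(2 * math.pi * 13 * t / 100) for t in range(100)]

masker_0db = scale_masker_to_snr(speech, masker, 0.0)      # equal power
masker_minus4 = scale_masker_to_snr(speech, masker, -4.0)  # adult SRT region
```

At 0 dB the rescaled masker has the same RMS level as the speech, which is what "equal signal and noise power" means; at -4 dB the masker is 4 dB more intense than the speech.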

Apparatus and Settings

The study utilized an eye-tracking system (Eyelink 1000, SR Research Ltd., Canada) with a 250 Hz sampling rate to record pupil diameter. Participants were seated in front of a display PC and an infrared camera that tracked eye and head movements. Chair height and distance to the eye tracker were adjusted to ensure a stable pupil response. Participants rested their heads on a chinrest at a fixed distance from a 21-inch computer monitor (1920 × 1080, 144 Hz, luminance 55 lx), while room lighting varied (60 ± 12 lx). Room illumination was adjusted individually so that each participant's pupil size sat at the median of its dynamic range, reducing ceiling and floor effects in pupil dilation responses (Zekveld et al., 2014) and making the dilation independent of possible between-group differences in tonic pupil size (Anderson & Colombo, 2009).

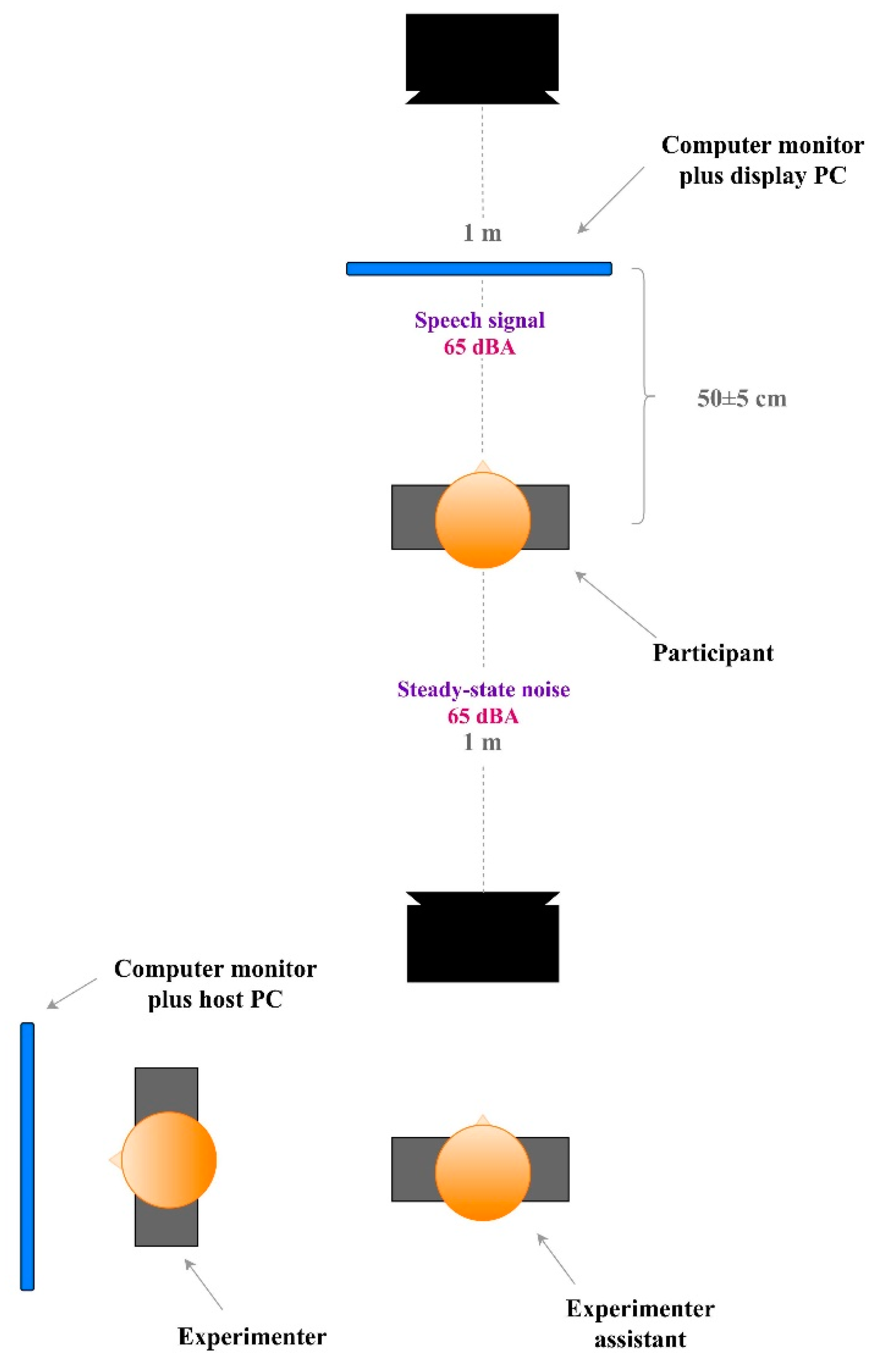

Stimulus presentation was controlled using MATLAB and PsychToolbox on a display PC. Test stimuli were delivered through a sound card and presented by loudspeakers situated in a double-walled, acoustically treated room with background noise below 25 dBA. The speech signal from the MHINT-C was presented via a loudspeaker located at 0° azimuth, while the masker was presented via a loudspeaker located at 180° azimuth. Both loudspeakers were set at head level at a distance of one meter from the participant. The primary experimenter sat in front of the host PC to keep track of the pupillary state and record verbal responses, while a trained research assistant seated behind the rear loudspeaker recorded the responses simultaneously as a reliability check. An illustration of the setup is provided in Figure 1.

Procedure

Participants had two lab visits: one for screening and recruitment, and another for experiments. During the first visit, all children underwent pre-recruitment assessments, including intelligence tests and pure tone audiometry. For the ASC group, guardians completed questionnaires about their children's medical history and familiarity with MHINT-C words.

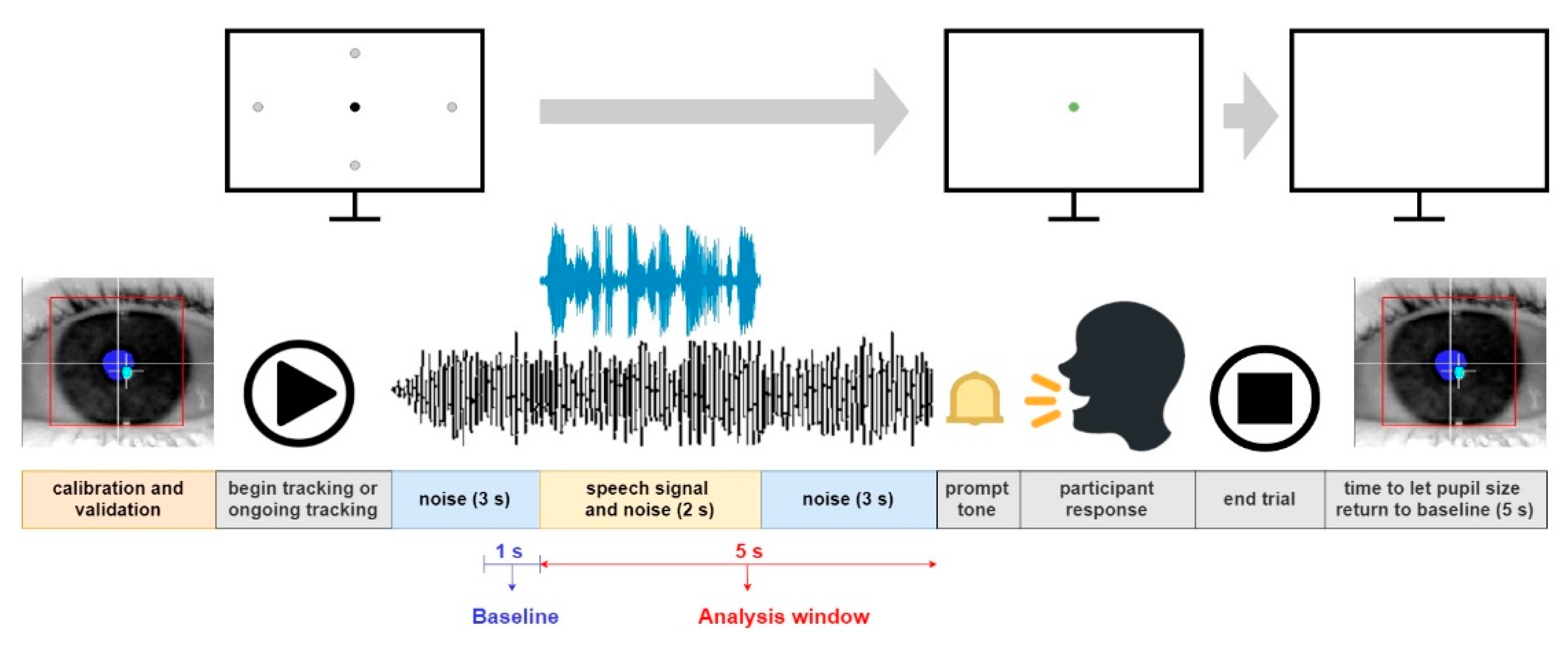

In the second visit, participants completed pupillometric tests in randomized sequences to counterbalance order effects. Before starting, eye gaze position was calibrated and validated using a 9-point grid. A training block of 10 trials familiarized participants with the procedure and setup. Each treatment level had 10 trials. Masker noise began 3 seconds before sentence onset and ended 3 seconds after sentence offset. A black fixation dot (15 × 15 pixels) was displayed at the center or at the midpoints of the screen's sides to sustain younger participants' attention. Participants were guided to gaze at the dot, repeat the sentence after noise cessation, and click 'next' or tap the space bar to continue. Assistance was given as needed. A 5-second pause followed each trial to allow pupil size to return to baseline. Measurement took about 30 minutes for the NT group and around 50 minutes for the ASC group, with 10-minute breaks in between. See Figure 2 for a detailed event sequence.

Statistical Analyses

Data preprocessing and statistical analyses were conducted using R version 4.2.2 (R Core Team, 2022). Raw accuracy data, expressed as percent correct scores, were transformed into rationalized arcsine units (RAU; Studebaker et al., 1995) because they were not normally distributed. Pupil data were preprocessed using the gazeR package (Geller et al., 2020). Mean pupil dilations and standard deviations were calculated for traces from 3 seconds before speech onset to noise offset. Pupil diameters more than three standard deviations below the mean were treated as eye blinks and replaced by linear interpolation. Trials in which eye blinks, artifacts, or missing data exceeded 25% of the trial duration were removed. Trials with incorrect behavioral responses were excluded to eliminate confounding. A 5-second moving average filter smoothed the remaining trials, and baseline pupil size was subtracted to control for arousal-related size variation.
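As an illustration of the rationalized arcsine transform mentioned above, the sketch below uses the commonly cited form of the formula, RAU = (146/π)·θ − 23 with θ = arcsin√(X/(N+1)) + arcsin√((X+1)/(N+1)), where X is the number of correct items out of N. The function name `rau` and the choice of N = 10 scored items are assumptions for the example; the number of scored items per condition in the study is not stated here.

```python
import math


def rau(correct, total):
    """Rationalized arcsine units for `correct` items out of `total`."""
    if total <= 0 or not 0 <= correct <= total:
        raise ValueError("need 0 <= correct <= total and total > 0")
    theta = (math.asin(math.sqrt(correct / (total + 1)))
             + math.asin(math.sqrt((correct + 1) / (total + 1))))
    # The linear rescaling keeps mid-range values close to percent correct.
    return (146.0 / math.pi) * theta - 23.0


# Hypothetical scores out of 10 items (N = 10 is an assumption).
scores_rau = {x: rau(x, 10) for x in (0, 5, 10)}
```

The transform stretches the ends of the scale: 50% correct maps to 50 RAU, while 0% and 100% map to values below 0 and above 100, which reduces the variance compression that percent scores show near floor and ceiling.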

Peak dilation was defined as the maximum baseline-corrected pupil size within the 5 seconds from speech onset to prompt tone onset, and peak latency as the time of that maximum relative to sentence onset. Linear mixed-effects models (LMMs) were used to analyze performance and pupillometric measures (peak dilation and latency), accounting for between-participant variability and dependencies among repeated observations. LMMs were fitted using lmerTest (Kuznetsova et al., 2017), selected on the basis of the Akaike information criterion (AIC), and inspected for normality and homoscedasticity of residuals. Interaction terms were followed up using emmeans or emtrends (Bonferroni/Kenward-Roger adjusted) from the emmeans package (Lenth, 2020).
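The blink handling, trial rejection, baseline correction, and peak extraction described above can be sketched for a single trial as follows. This is a simplified illustration, not the gazeR pipeline used in the study: the smoothing step is omitted, missing samples are represented by a hypothetical `None` marker, and the 1-second pre-onset baseline is an assumption because the baseline window is not specified here. All function names are hypothetical.

```python
from statistics import mean, stdev


def flag_samples(trace, n_sd=3.0):
    """Flag missing samples (None) and samples > n_sd SDs below the mean."""
    present = [x for x in trace if x is not None]
    center, spread = mean(present), stdev(present)
    return [x is None or x < center - n_sd * spread for x in trace]


def interpolate_flagged(trace, flagged):
    """Linearly interpolate flagged samples; hold nearest value at edges."""
    valid = [i for i, f in enumerate(flagged) if not f]
    if not valid:
        raise ValueError("no valid samples to interpolate from")
    out = list(trace)
    for i, f in enumerate(flagged):
        if not f:
            continue
        left = [v for v in valid if v < i]
        right = [v for v in valid if v > i]
        if left and right:
            a, b = left[-1], right[0]
            out[i] = trace[a] + (trace[b] - trace[a]) * (i - a) / (b - a)
        else:
            out[i] = trace[left[-1]] if left else trace[right[0]]
    return out


def pupil_metrics(trace, rate_hz, onset_index, window_s, baseline_s=1.0):
    """Return peak dilation, peak latency (ms), and mean dilation,
    or None when more than 25% of the samples are flagged."""
    flagged = flag_samples(trace)
    if sum(flagged) / len(trace) > 0.25:
        return None  # trial rejected
    clean = interpolate_flagged(trace, flagged)
    n_base = int(baseline_s * rate_hz)  # assumed baseline length
    if onset_index < n_base:
        raise ValueError("not enough samples before onset for the baseline")
    baseline = mean(clean[onset_index - n_base:onset_index])
    n_win = int(window_s * rate_hz)
    window = [x - baseline for x in clean[onset_index:onset_index + n_win]]
    peak = max(window)
    return {
        "peak_dilation": peak,
        "peak_latency_ms": 1000.0 * window.index(peak) / rate_hz,
        "mean_dilation": mean(window),
    }


# Hypothetical 10 Hz toy trial: 1 s baseline, then a rise and fall.
rise_and_fall = [4.0, 4.1, 4.2, 4.3, 4.4, 4.5, 4.4, 4.3, 4.2, 4.1]
trial = [4.0] * 10 + rise_and_fall + [4.0] * 20
trial[12] = 0.0  # simulated blink
metrics = pupil_metrics(trial, rate_hz=10, onset_index=10, window_s=3.0)

# A trial with half of its samples missing is rejected.
mostly_missing = [None if i % 2 else 4.0 for i in range(40)]
rejected = pupil_metrics(mostly_missing, rate_hz=10, onset_index=10,
                         window_s=3.0)
```

One design point worth noting: a three-standard-deviation criterion alone can never flag more than about 11% of a trace, so the 25% rejection rule only becomes relevant once missing samples and other artifacts are counted as well, which is why the sketch treats `None` samples as flagged.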

Generalized additive mixed models (GAMMs), which can capture nonlinear change over time, were used to analyze holistic pupillary changes across the trial. Data were down-sampled to 50 Hz without additional smoothing. The mgcv and itsadug packages were used to fit and inspect the GAMMs. Parsimonious models were obtained through backward stepwise selection based on visual inspection and summary statistics, with AIC used to compare models. Residual autocorrelation was checked with the acf_resid and acf_plot functions. A scaled-t family was used to accommodate heavy-tailed residuals. The final GAMM included a Group effect and a Group×Condition interaction, estimating nonlinear regression lines for each Group level. Random smooths for Time and Event accounted for non-linear random variance. This term compensated for participants' reduced attention and increased pupil size during the experiment (Boswijk et al., 2020).
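The down-sampling step can be illustrated with a short sketch that reduces a 250 Hz trace to 50 Hz by grouping every five samples. Whether the original analysis averaged each bin or simply kept one sample per bin is not stated, so both variants are shown as assumptions; the function name `downsample` and the toy trace are hypothetical.

```python
def downsample(samples, factor, method="mean"):
    """Reduce the sampling rate by an integer factor.

    method="mean" averages each bin of `factor` samples;
    method="first" keeps the first sample of each bin (plain decimation).
    A trailing incomplete bin is dropped.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    usable = len(samples) - len(samples) % factor
    bins = [samples[i:i + factor] for i in range(0, usable, factor)]
    if method == "mean":
        return [sum(b) / factor for b in bins]
    if method == "first":
        return [b[0] for b in bins]
    raise ValueError("method must be 'mean' or 'first'")


# Hypothetical 5-second trace sampled at 250 Hz (1250 samples).
trace_250hz = [4.0 + 0.001 * i for i in range(1250)]
trace_50hz = downsample(trace_250hz, 250 // 50)  # 250 samples, 20 ms apart
```

Fewer samples per trial reduce the autocorrelation between neighboring residuals and the cost of fitting the time-course model, at the price of coarser temporal resolution (20 ms instead of 4 ms per sample).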

Results

Speech Recognition Accuracy

Table 2 displays descriptive statistics and the LMM results for accuracy data (in RAU). A Type III ANOVA on the LMM with Satterthwaite's method revealed significant main effects of Condition [F(1,40) = 90.23, p < 0.0001, η²p = 0.69] and Group [F(1,40) = 24.49, p < 0.0001, η²p = 0.38], along with a significant interaction between them [F(1,40) = 34.68, p < 0.0001, η²p = 0.46]. Post-hoc pairwise comparisons revealed significant accuracy differences between groups in noisy conditions (estimateNT-ASC = 2.12, SE = 0.33, t = 6.36, p < 0.0001), indicating reduced accuracy in the ASC group when exposed to noise. Conversely, no significant group difference emerged in quiet conditions (p = 0.33). Specifically, noise significantly compromised accuracy for ASC participants (estimateQuiet-Noise = 2.20, SE = 0.19, t = 11.44, p < 0.0001), while showing negligible impact on NT participants (p = 0.12).
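For readers who wish to relate the reported effect sizes to the F statistics, a standard conversion is η²p = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to the three accuracy effects reported above; it is offered as a consistency check under that assumption and is not necessarily how the effect sizes were computed in the original analysis. The function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and degrees of freedom as reported for the accuracy LMM.
accuracy_effects = {
    "Condition": (90.23, 1, 40),
    "Group": (24.49, 1, 40),
    "Group x Condition": (34.68, 1, 40),
}
effect_sizes = {
    name: round(partial_eta_squared(*stats), 2)
    for name, stats in accuracy_effects.items()
}
```

Under this conversion, the three F statistics yield 0.69, 0.38, and 0.46, matching the reported values.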

Pupillometric Measures

Figure 3 illustrates peak dilation (left panel) and peak latency (right panel) statistics across the four experimental levels. Table 3 and Table 4 summarize LMM results for peak dilation and peak latency, respectively.

The LMM for peak dilation revealed a significant Group main effect [F(1, 37.47) = 29.80, p < 0.001, η²p = 0.44]. However, Condition (p = 0.28) and IQ (p = 0.67) had no significant main effects. Notably, two significant interactions emerged: Group×Condition [F(1, 726.88) = 5.68, p < 0.05, η²p = 0.001] and Condition×IQ [F(1, 724.58) = 7.96, p < 0.01, η²p = 0.01]. Post hoc tests for the Group×Condition interaction showed significant between-group differences in both quiet (estimateNT-ASC = -0.08, SE = 0.02, t = -3.85, p < 0.01) and noisy conditions (estimateNT-ASC = -0.12, SE = 0.02, t = -5.94, p < 0.0001). Surprisingly, the ASC group's peak dilation in quiet resembled the NT group's in noise (p = 1.00). Both groups exhibited greater peak dilation in noise compared to quiet (ASC: estimateQuiet-Noise = -0.14, SE = 0.01, t = -12.21, p < 0.0001; NT: estimateQuiet-Noise = -0.10, SE = 0.01, t = -7.73, p < 0.0001). Regarding the Condition×IQ interaction, post hoc analysis revealed an estimated marginal trend for IQ of -0.001 in quiet [SE = 0.0007, 95% CI = (-0.002, 0.0002), t = -1.64, p = 0.11] and 0.0006 in noise [SE = 0.0007, 95% CI = (-0.0008, 0.002), t = 0.86, p = 0.39]. A significant difference between these trends was confirmed [estimateQuiet-Noise = -0.002, SE = 0.0006, t = -2.82, p < 0.01]. Although neither slope differed significantly from zero, their contrast suggests that higher IQ tends to be associated with reduced effort in quiet and increased effort in noise.

Moreover, the LMM for peak latency revealed a significant Group main effect [F(1, 39.34) = 226.34, p < 0.0001, η²p = 0.85], indicating substantial peak latency differences between groups. ASC participants displayed approximately 936.85 ms shorter peak latency than NT participants (p < 0.001). While Condition did not have a significant main effect (p = 0.39), the Group×Condition interaction was highly significant [F(1, 732.52) = 99.07, p < 0.0001, η²p = 0.12]. Post hoc analysis showed that in quiet, NT's peak latency exceeded ASC's (estimateNT-ASC = 936.8, SE = 51.9, t = 18.06, p < 0.0001). In noise, NT also had longer peak latency (estimateNT-ASC = 358.6, SE = 52.0, t = 6.89, p < 0.0001). ASC thus consistently exhibited shorter peak latencies regardless of listening condition. NT's peak latency did not significantly differ between conditions (p = 0.82). Conversely, ASC's peak latency significantly increased from quiet to noise (estimateQuiet-Noise = -638.3, SE = 37.1, t = -17.21, p < 0.0001), underlining group distinctions in auditory processing and broader cognitive mechanisms. For the Condition×IQ interaction, the modulatory effect of IQ on the impact of Condition on peak latency was significant [F(2, 74.28) = 4.46, p < 0.05, η²p = 0.11]. Post hoc trend analysis showed a differential impact of IQ on peak latency across listening conditions. In the quiet condition, the relationship between IQ and peak latency was negligible, with a slope of 0.47 (p = 0.80). In contrast, the noisy condition showed a positive association, with a slope of 5.37 [SE = 1.88, 95% CI = (1.63, 9.12), t = 2.86, p < 0.01]. A direct trend comparison confirmed a significantly stronger IQ-peak latency relationship in noise than in quiet (estimateQuiet-Noise = -4.9, SE = 2.1, t = -2.34, p < 0.05), implying that higher-IQ individuals may have prolonged peak latencies in challenging auditory environments.

Pupillary Time Course Analysis

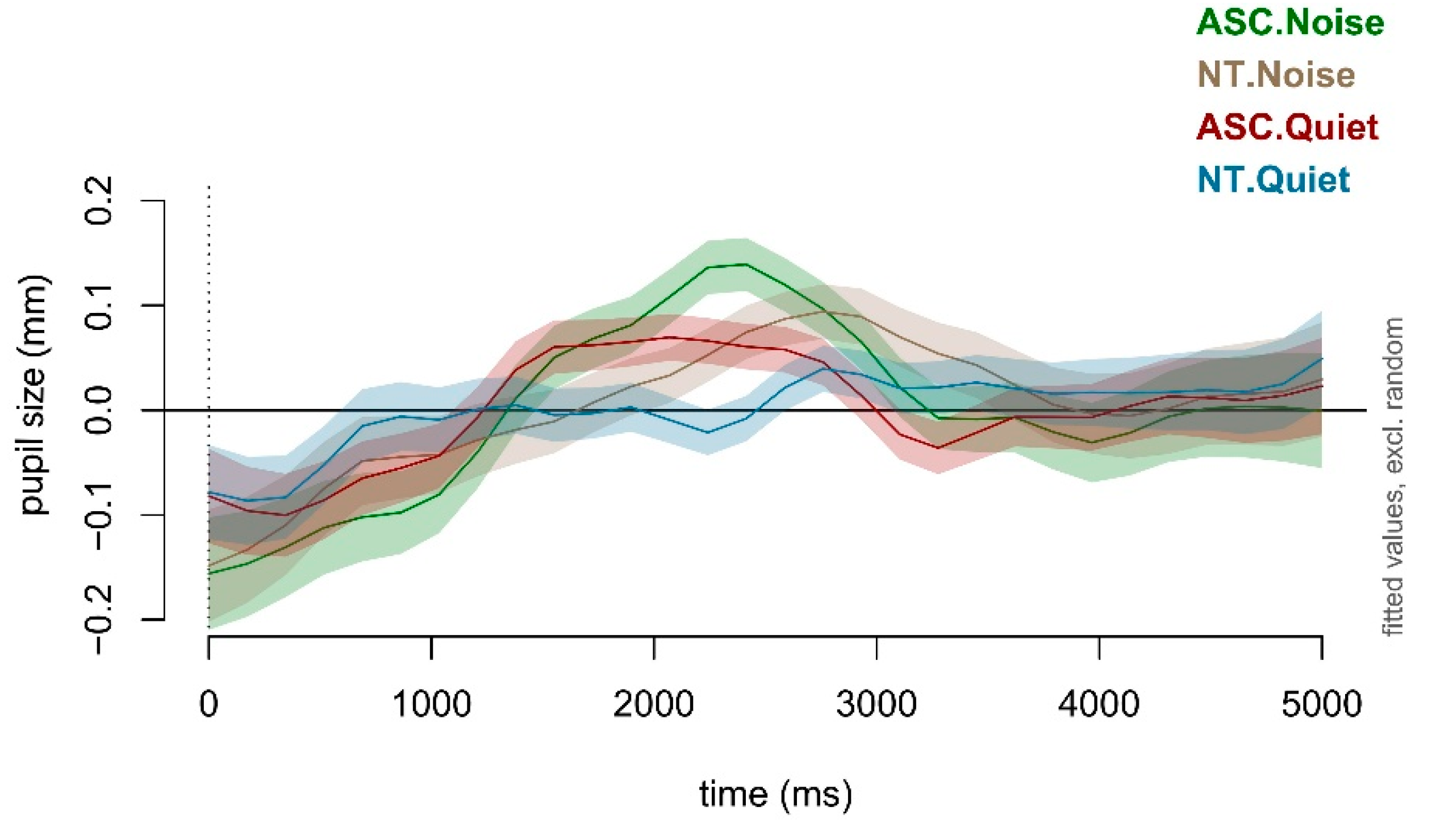

The final GAMM explained 60.60% of the deviance, indicating that it modeled variation in the pupil response over time well. Table 5 outlines the GAMM output. A significant main effect of Condition emerged: participants generally demonstrated greater pupil dilation, reflecting heightened listening effort, in noise compared to quiet over the 5-second analysis window. The main effect of Group was not statistically significant; however, the Group×Condition interaction was significant. This interaction was explored through post hoc testing using the emmeans function and detailed visualization via the plot_smooth and plot_diff functions. Table 6 shows that, after Bonferroni correction, NT's mean estimate surpassed ASC's across listening conditions. Notably, NT's mean dilation increased in noise relative to quiet, while ASC's showed no substantial difference.

Figure 4 displays estimated effects across experimental levels, revealing ASC's heightened variability in pupil size throughout analyses. About 1000 ms post sentence onset, both groups exhibited a pupil size decrease from baseline, more pronounced in ASC. Pupil diameters then surged, peaking near speech end (around 1500 ms to 2500 ms). Intriguingly, autistic children displayed a more pronounced peak dilation and shorter latency than NT peers, aligning with prior LMM findings.

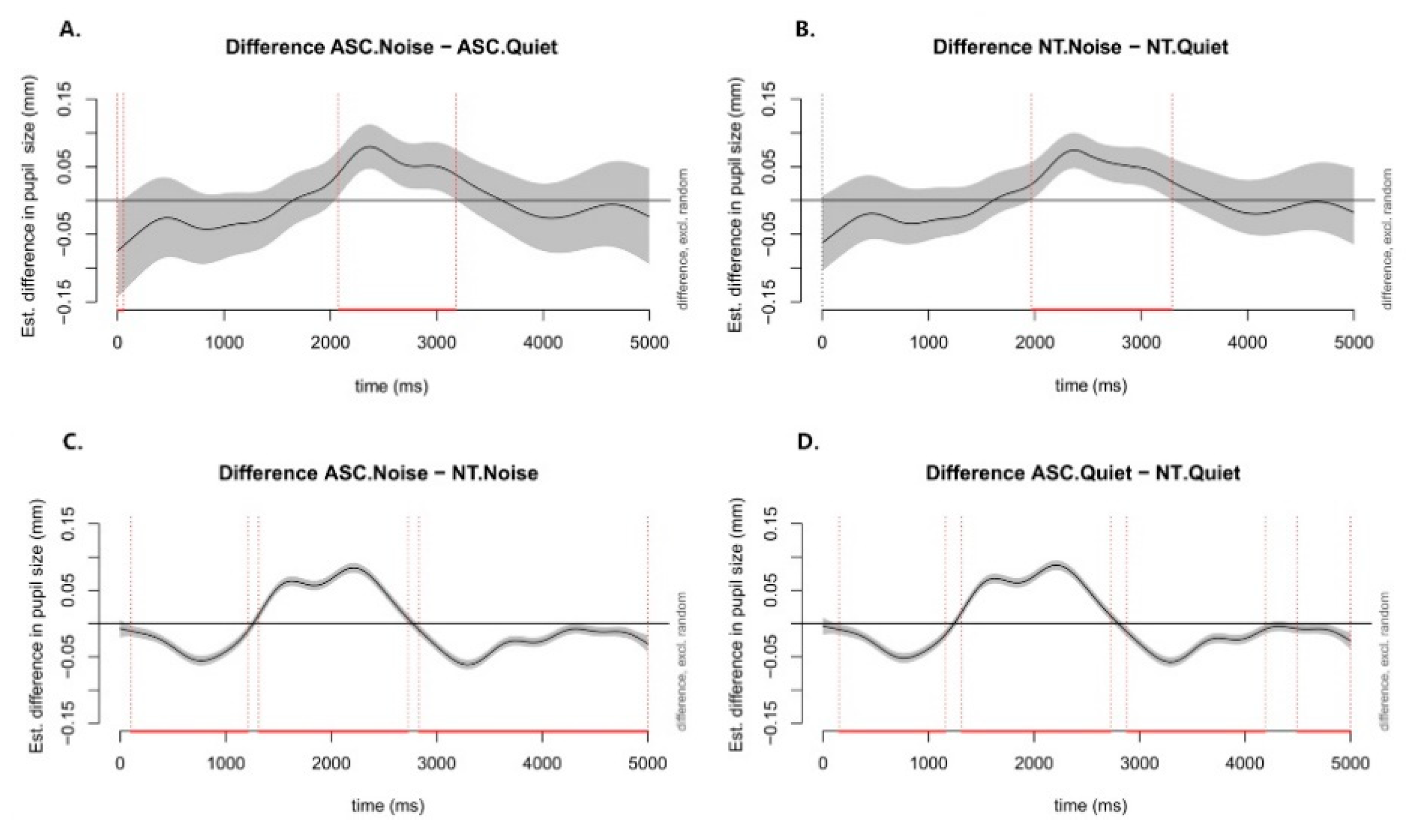

Figure 5 illustrates estimated pupil response disparities over time for four contrasts: noisy minus quiet conditions in ASC (Figure 5A), noisy minus quiet conditions in NT (Figure 5B), ASC minus NT in noisy conditions (Figure 5C), and ASC minus NT in quiet conditions (Figure 5D). Notable differences between noisy and quiet conditions appeared during the first half of the 3-second retention interval. Over an approximate 1200-ms window centered around the sentence end (2000 ms), ASC exhibited significantly larger pupil dilation compared to NT, with the reverse pattern outside this window.

Discussion

This study aimed to investigate the impact of background noise on the cognitive demands of speech perception in children with ASC compared to NT children. We utilized the relatively new pupillometry approach, demonstrating that online pupillary dilation responses can effectively reflect the cognitive workload and listening effort during SiN perception in both ASC and NT children.

Effects of Noise

The results largely confirmed our first hypothesis that demanding auditory conditions, exemplified by background noise, would heighten the cognitive resources required for listening tasks. Noise increased peak dilation in both groups, although the prolongation of peak latency reached significance only in the ASC group and the elevation of mean dilation only in the NT group. This aligns with previous research suggesting that the presence of competing auditory stimuli, such as noise, challenges cognitive systems, leading to increased demands on auditory tasks (Peelle, 2018). It is especially salient in educational settings, where students, particularly those in the early stages of auditory development, grapple with comprehending instructions amidst diverse background disturbances over extended durations.

While the challenges of processing speech in noise are notably pronounced in the ASC group, this should not be regarded as an exclusive, distinct deficit solely associated with autism. Instead, it can be understood as a broader cognitive challenge that impacts a wide range of young individuals (including NT children), albeit to varying degrees, which resonates with the principles of the neurodiversity movement (Chawner & Owen, 2022). Thus, implementing acoustic enhancements in all learning spaces or introducing interventions tailored to enhance speech processing amidst auditory complexities could prove advantageous.

The effects of noise on listening effort exhibited variability based on children's cognitive capacities, irrespective of their neurodevelopmental profiles. Higher IQ individuals showed increased effort in noise, seen in larger peak dilations and longer peak latencies, with less effect in quiet. This relates to cognitive spare capacity in terms of extra resources beyond task needs. Higher cognitive capacity allocates more resources for processing speech in noise, ensuring comprehension. In quiet, cognitive resources are unstrained, yielding reduced effort. When faced with background noise, individuals with higher cognitive capacities draw upon their cognitive reserves to surmount the barriers introduced by the noise. Their surplus cognitive resources enable them to allocate more effort towards task completion, leading to increased listening effort (Peelle, 2018). Additionally, individuals with greater cognitive resources often hold higher performance expectations and exhibit more motivation to engage in cognitively taxing tasks (Ackerman & Heggestad, 1997). They are also inclined to employ intricate top-down processing strategies, leveraging their cognitive resources to counteract degraded auditory input and meet heightened task demands (Rönnberg et al., 2013).

Between-Group Differences in Listening Effort

The data strongly supported our second hypothesis that the ASC group's listening effort patterns would deviate from those of the NT comparison group. The ASC group consistently exhibited larger peak dilations than their NT counterparts in comparable listening contexts. Strikingly, even under quiet conditions, the ASC group displayed peak dilations akin to those observed in NT participants exposed to noisy conditions. In quiet, this divergence persisted despite similar recognition accuracy between the ASC and NT groups. These findings suggest that ASC children may need to heighten their cognitive involvement to achieve accuracy levels comparable to their NT peers, potentially to overcome underlying speech processing challenges.

Notably, the distinct speech processing patterns observed in the ASC population are well-documented. Individuals with ASC often grapple with difficulties in analyzing and manipulating phonetic and phonological speech components (Kjelgaard & Tager-Flusberg, 2001). Furthermore, they frequently exhibit reduced sensitivity to vocal cues (Fan & Cheng, 2014; Gervais et al., 2004), manifest unique speech discrimination and transcription patterns (Huang et al., 2018; Russo et al., 2009), and display altered perception of linguistic and emotional prosody (Zhang et al., 2021).

However, the data also revealed an intriguing contrast. Despite the ASC group's larger peak dilation, they showcased significantly shorter peak latencies and reduced mean dilations compared to the NT group when exposed to similar listening conditions. These patterns suggest that the ASC group might engage fewer cognitive resources, leaning towards more rapid and automated auditory processing mechanisms to address auditory input and task demands. This perspective aligns with the reduced average IQ scores of the ASC cohort compared to the NT group, indicating diminished cognitive resources available to sustain prolonged processing.

The larger mean dilation observed in the NT group resonates with the 'effort hypothesis': individuals with higher cognitive abilities allocate more resources to tasks, leading to augmented performance and increased processing loads (van der Meer et al., 2010; Zekveld et al., 2011). Alternatively, the resource allocation hypothesis (Goldknopf, 2013) posits that resources in autism, especially in cases with pronounced sensory symptoms, could be more constrained compared to typical cognition. Such a constraint might result in an intense and disproportionate allocation of resources across modalities, processing stages, and cognitive functions. This could explain the ASC group's larger peak dilation and shorter peak latency, indicative of intensive engagement with early speech processing stages. However, sustaining such high engagement over time might strain their limited resources, potentially elucidating the reduced mean pupil dilation observed in the ASC group compared to NT individuals. This resource competition could be accentuated in noisy conditions, as evidenced by the ASC group's rapid decline in pupil size post-peak compared to quiet conditions. Furthermore, difficulties in modulating arousal and attention, or different arousal mechanisms during auditory processing (e.g., Orekhova & Stroganova, 2014; Zhao et al., 2022), could also contribute. The ASC group might experience heightened arousal upon initial exposure to speech stimuli, potentially explaining their larger peak dilations and shorter peak latencies compared to NT peers.

It is also plausible that ASC individuals might use unique auditory strategies, prioritizing local details over global context (Happé & Frith, 2006). This focus could explain their larger peak dilation. However, challenges in integrating auditory information, especially in noise, could result in their smaller mean dilation, suggesting less holistic integration than NT children, which is consistent with what Alcántara et al. (2004) reported.
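To make the dissociation among the three pupil indices concrete, the following minimal sketch shows how a brief, early response can yield a larger peak dilation and a shorter peak latency yet a smaller mean dilation than a lower but sustained response. It is an illustration only and not the analysis pipeline used in this study: the function name `pupil_indices`, the synthetic Gaussian traces, the 50 Hz sampling rate, and the baseline and analysis windows are all assumptions chosen for the example.

```python
import numpy as np


def pupil_indices(trace, times, baseline_window, analysis_window):
    """Return baseline-corrected peak dilation, peak latency, and mean dilation.

    Hypothetical helper for illustration; windows are (start, end) in seconds.
    """
    trace = np.asarray(trace, dtype=float)
    times = np.asarray(times, dtype=float)
    base_mask = (times >= baseline_window[0]) & (times < baseline_window[1])
    win_mask = (times >= analysis_window[0]) & (times <= analysis_window[1])
    # Subtract the mean pre-stimulus pupil size from the analysis window.
    corrected = trace[win_mask] - trace[base_mask].mean()
    win_times = times[win_mask]
    peak_idx = int(np.argmax(corrected))
    return {
        "peak_dilation": float(corrected[peak_idx]),  # maximum change from baseline
        "peak_latency": float(win_times[peak_idx]),   # time of that maximum (s)
        "mean_dilation": float(corrected.mean()),     # average change over the window
    }


# Assumed time axis: 1 s pre-stimulus baseline, 4 s post-onset, sampled at 50 Hz.
times = np.arange(-50, 200) / 50.0


def synthetic_trace(amplitude, peak_time, width):
    """Gaussian-shaped dilation after onset (t >= 0); flat at zero before onset."""
    bump = amplitude * np.exp(-0.5 * ((times - peak_time) / width) ** 2)
    return np.where(times >= 0, bump, 0.0)


# Synthetic data in arbitrary units, not measured values.
sharp_trace = synthetic_trace(amplitude=0.30, peak_time=1.0, width=0.3)
sustained_trace = synthetic_trace(amplitude=0.20, peak_time=2.0, width=1.0)

sharp = pupil_indices(sharp_trace, times, (-1.0, 0.0), (0.0, 4.0))
sustained = pupil_indices(sustained_trace, times, (-1.0, 0.0), (0.0, 4.0))

for name, result in (("sharp", sharp), ("sustained", sustained)):
    print(name, {key: round(value, 3) for key, value in result.items()})
```

In this toy case the sharp trace has the higher peak and the earlier latency, while the sustained trace has the larger mean, because mean dilation integrates the response over the whole analysis window rather than sampling its maximum.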

Interplay between Noise and Subject Group

The results partly support our third hypothesis, which proposed an interaction between subject group and listening condition, indicating that recognizing speech in noise demanded greater listening effort and was more difficult for children with ASC than for their NT peers. Both groups displayed larger peak dilations in noise than in quiet, yet the ASC group consistently exhibited significantly larger peak dilations than the NT group. Furthermore, noise led to longer peak latencies and lower recognition accuracy among ASC participants, whereas NT participants showed no significant effect.

Several factors may account for these response patterns. First, individuals with ASC struggle with selective attention and with filtering out irrelevant information (Keehn et al., 2013), difficulties that noise exacerbates, contributing to their longer latencies and reduced accuracy. Deficits in rapid auditory processing and atypical activation in auditory regions (Brock et al., 2002; Gandal et al., 2010) compromise their auditory stream segregation, the ability to perceptually organize overlapping sound sources into coherent auditory streams in complex acoustic settings, which may further compound their cognitive load in noise. Second, a key finding lies in the distinct mean dilation response in noise: while both cohorts exhibited increased mean dilation in noise, the ASC group's increase was more subdued than that of the NT group, whose noise-quiet contrast reached statistical significance. The ASC group's relatively restrained increase may reflect their constrained cognitive resources, which could limit their ability to mobilize additional resources in demanding auditory contexts (Goldknopf, 2013). Conversely, the NT group's robust cognitive reserve may enable them to meet the heightened processing demands of challenging auditory conditions effectively. Third, altered auditory cortex connectivity in autism might affect auditory processing in noisy settings. Typical brain development features a well-integrated auditory cortex connected to various brain regions, facilitating comprehensive auditory information processing. However, in individuals with ASC, this connectivity is often disrupted, especially between the auditory cortex and regions pivotal for attention, cognitive control, and multisensory integration (Keehn et al., 2013). Such connectivity challenges in ASC can further limit resource allocation and integration, leading to diminished neural synchronization and cognitive saturation (Mamashli et al., 2017), possibly resulting in subdued dilation compared to NT peers in noisy environments.

Limitations

This study has several limitations. First, pupillometry results are subject to multiple confounding factors. Emotional arousal and personality characteristics can influence pupil dilation, as indicated by Bradley et al. (2008) and Hubert Lyall and Jarvikivi (2021). Additionally, motivation significantly affects listening effort, especially in noisy conditions (Carolan et al., 2022). These factors, combined with the sensory issues common in autism, may strongly influence listening effort. Shields et al. (2022) discuss challenges in measuring listening effort and report no consensus on the best method. Integrating physiological markers such as galvanic skin response or heart rate variability may offer a more comprehensive understanding (Madsen & Parra, 2022). Second, interventions that the participants had undergone could conceivably influence the outcomes. For instance, music training or therapy can influence SiN perception (Benitez-Barrera et al., 2022; Dubinsky et al., 2019), but the impact on ASC remains controversial (Marquez-Garcia et al., 2021). Third, both sample size and diversity are vital, given the heterogeneity of the ASC population (Hand et al., 2017). Larger, more diverse samples could strengthen the statistical power of the study. Our sample was biased towards middle-class families from major cities. Socioeconomic status impacts language and auditory skills (Fernald et al., 2013; Ursache & Noble, 2016), and its effects on SiN processing require further study. While excluding co-occurring disorders helps data analysis and interpretation, it also limits the findings' applicability (Simonoff et al., 2008). Lastly, our controlled stimulus design aids data consistency but may miss real-world complexity. Steady-state noise oversimplifies real-world auditory scenes (Bronkhorst, 2015). Fixed SNR levels also do not mirror real-world variability (Best et al., 2016). Ecologically valid scenarios and stimuli using technologies like virtual reality can better replicate authentic auditory challenges (Zhang et al., 2022).

Conclusions

The current study represents an initial exploration using pupillometry to gain insights into how school-age children with ASC process auditory information in noise. Compared to their NT peers, the ASC group showed clear differences in accuracy and pupillary responses. They exhibited larger peak dilations and longer peak latencies in noisy conditions, whereas the NT group's variations were subtler. These patterns point to heightened listening effort in ASC and suggest potential differences in cognitive resource allocation. The group differences in how mean pupil dilation changed between conditions also hint at differences in cognitive resource availability and utilization. These results underscore pupillometry's value as a feasible measure of cognitive load, particularly in autism research, with implications for developing and assessing improved auditory settings or tailored assistive technologies for children with ASC.

Funding Statement

This study was supported by Sonova (Shanghai) Co. Ltd. and funded in part by the Major Program of the National Social Science Foundation of China (18ZDA293).

Data Availability Statement

The data that support the findings of this research are available from the corresponding authors upon reasonable request.

Acknowledgments

We extend heartfelt gratitude to the participants, their families, and undergraduate volunteers from East China Normal University and Shanghai Jiao Tong University for their generous contributions of time and effort to this study.

Conflicts of Interest

The authors declare that no competing interests exist at the time of publication.

References

- Abokyi, S., Owusu-Mensah, J., & Osei, K. A. (2017). Caffeine intake is associated with pupil dilation and enhanced accommodation. Eye, 31(4), 615-619. [CrossRef]

- Ackerman, P. L., & Heggestad, E. D. (1997). Intelligence, personality, and interests: Evidence for overlapping traits. Psychological Bulletin, 121(2), 219-245.

- Alcántara, J. I., Weisblatt, E. J., Moore, B. C., & Bolton, P. F. (2004). Speech-in-noise perception in high-functioning individuals with autism or Asperger's syndrome. Journal of Child Psychology and Psychiatry, 45(6), 1107-1114. [CrossRef]

- American National Standards Institute. (1996). Specifications for audiometers (ANSI S3.6-1996). American National Standards Institute.

- American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (5th ed.). American Psychiatric Publishing.

- Anderson, C. J., & Colombo, J. (2009). Larger tonic pupil size in young children with autism spectrum disorder. Developmental Psychobiology, 51(2), 207-211. [CrossRef]

- Ashburner, J., Rodger, S., & Ziviani, J. (2008). Sensory processing and classroom emotional, behavioural, and educational outcomes in children with autism spectrum disorder. American Journal of Occupational Therapy, 62(5), 564-573. [CrossRef]

- Benitez-Barrera, C. R., Skoe, E., Huang, J., & Tharpe, A. M. (2022). Evidence for a musician speech-perception-in-noise advantage in school-age children. Journal of Speech, Language, and Hearing Research, 65(10), 3996-4008. [CrossRef]

- Best, V., Keidser, G., Freeston, K., & Buchholz, J. M. (2016). A dynamic speech comprehension test for assessing real-world listening ability. Journal of the American Academy of Audiology, 27(7), 515-526. [CrossRef]

- Blaser, E., Eglington, L., Carter, A. S., & Kaldy, Z. (2014). Pupillometry reveals a mechanism for the Autism Spectrum Disorder (ASD) advantage in visual tasks. Scientific Reports, 4, 4301. [CrossRef]

- Boswijk, V., Loerts, H., & Hilton, N. H. (2020). Salience is in the eye of the beholder: Increased pupil size reflects acoustically salient variables. Ampersand, 7. [CrossRef]

- Bouvet, L., Mottron, L., Valdois, S., & Donnadieu, S. (2016). Auditory stream segregation in autism spectrum disorder: Benefits and downsides of superior perceptual processes. Journal of Autism and Developmental Disorders, 46(5), 1553-1561. [CrossRef]

- Bradley, M. M., Miccoli, L., Escrig, M. A., & Lang, P. J. (2008). The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology, 45(4), 602-607. [CrossRef]

- Brady, N. C., Anderson, C. J., Hahn, L. J., Obermeier, S. M., & Kapa, L. L. (2014). Eye tracking as a measure of receptive vocabulary in children with autism spectrum disorders. Augmentative and Alternative Communication, 30(2), 147-159. [CrossRef]

- Brock, J., Brown, C. C., Boucher, J., & Rippon, G. (2002). The temporal binding deficit hypothesis of autism. Development and Psychopathology, 14(2), 209-224.

- Bronkhorst, A. W. (2015). The cocktail-party problem revisited: early processing and selection of multi-talker speech. Attention, Perception, & Psychophysics, 77(5), 1465-1487. [CrossRef]

- Carolan, P. J., Heinrich, A., Munro, K. J., & Millman, R. E. (2022). Quantifying the effects of motivation on listening effort: A systematic review and meta-analysis. Trends in Hearing, 26, 23312165211059982. [CrossRef]

- Chawner, S., & Owen, M. J. (2022). Autism: A model of neurodevelopmental diversity informed by genomics. Frontiers in Psychiatry, 13, 981691. [CrossRef]

- Chen, Y., & Wong, L. L. N. (2020). Development of the Mandarin hearing in noise test for children. International Journal of Audiology, 1-6. [CrossRef]

- Cooper, B. G., Steel, M. M., Papsin, B. C., & Gordon, K. A. (2015). Binaural fusion and listening effort in children who use bilateral cochlear implants: A psychoacoustic and pupillometric study. PLoS One, 10(2). [CrossRef]

- de Vries, L., Fouquaet, I., Boets, B., Naulaers, G., & Steyaert, J. (2021). Autism spectrum disorder and pupillometry: A systematic review and meta-analysis. Neuroscience and Biobehavioral Reviews, 120, 479-508. [CrossRef]

- Dubinsky, E., Wood, E. A., Nespoli, G., & Russo, F. A. (2019). Short-term choir singing supports speech-in-noise perception and neural pitch strength in older adults with age-related hearing loss. Frontiers in Neuroscience, 13, 1153. [CrossRef]

- Fan, Y. T., & Cheng, Y. (2014). Atypical mismatch negativity in response to emotional voices in people with autism spectrum conditions. PLoS One, 9(7), e102471. [CrossRef]

- Feldman, J. I., Thompson, E., Davis, H., Keceli-Kaysili, B., Dunham, K., Woynaroski, T., Tharpe, A. M., & Picou, E. M. (2022). Remote microphone systems can improve listening-in-noise accuracy and listening effort for youth with autism. Ear and Hearing, 43(2), 436-447. [CrossRef]

- Fernald, A., Marchman, V. A., & Weisleder, A. (2013). SES differences in language processing skill and vocabulary are evident at 18 months. Developmental Science, 16(2), 234-248. [CrossRef]

- Francis, A. L., & Love, J. (2020). Listening effort: Are we measuring cognition or affect, or both? Wiley Interdisciplinary Reviews - Cognitive Science, 11(1), e1514. [CrossRef]

- Gagné, J. P., Besser, J., & Lemke, U. (2017). Behavioral assessment of listening effort using a dual-task paradigm. Trends in Hearing, 21, 2331216516687287. [CrossRef]

- Gandal, M. J., Edgar, J. C., Ehrlichman, R. S., Mehta, M., Roberts, T. P., & Siegel, S. J. (2010). Validating gamma oscillations and delayed auditory responses as translational biomarkers of autism. Biological Psychiatry, 68(12), 1100-1106. [CrossRef]

- Gau, S. S., Lin, C. H., Hu, F. C., Shang, C. Y., Swanson, J. M., Liu, Y. C., & Liu, S. K. (2009). Psychometric properties of the Chinese version of the Swanson, Nolan, and Pelham, Version IV Scale-Teacher Form. Journal of Pediatric Psychology, 34(8), 850-861. [CrossRef]

- Geller, J., Winn, M. B., Mahr, T., & Mirman, D. (2020). GazeR: A package for processing gaze position and pupil size data. Behavior Research Methods, 52(5), 2232-2255. [CrossRef]

- Gervais, H., Belin, P., Boddaert, N., Leboyer, M., Coez, A., Sfaello, I., Barthélémy, C., Brunelle, F., Samson, Y., & Zilbovicius, M. (2004). Abnormal cortical voice processing in autism. Nature Neuroscience, 7(8), 801–802. [CrossRef]

- Goldknopf, E. J. (2013). Atypical resource allocation may contribute to many aspects of autism. Frontiers in Integrative Neuroscience, 7, 82. [CrossRef]

- Groen, W. B., van Orsouw, L., Huurne, N. t., Swinkels, S., van der Gaag, R.-J., Buitelaar, J. K., & Zwiers, M. P. (2009). Intact spectral but abnormal temporal processing of auditory stimuli in autism. Journal of Autism and Developmental Disorders, 39(5), 742-750. [CrossRef]

- Hand, B. N., Dennis, S., & Lane, A. E. (2017). Latent constructs underlying sensory subtypes in children with autism: A preliminary study. Autism Research, 10(8), 1364-1371. [CrossRef]

- Happé, F., & Frith, U. (2006). The weak coherence account: Detail-focused cognitive style in autism spectrum disorders. Journal of Autism and Developmental Disorders, 36(1), 5-25. [CrossRef]

- Huang, D., Yu, L., Wang, X., Fan, Y., Wang, S., & Zhang, Y. (2018). Distinct patterns of discrimination and orienting for temporal processing of speech and nonspeech in Chinese children with autism: An event-related potential study. European Journal of Neuroscience, 47(6), 662-668. [CrossRef]

- Hubert Lyall, I., & Jarvikivi, J. (2021). Listener's personality traits predict changes in pupil size during auditory language comprehension. Scientific Reports, 11(1), 5443. [CrossRef]

- Johnson, E. L., Miller Singley, A. T., Peckham, A. D., Johnson, S. L., & Bunge, S. A. (2014). Task-evoked pupillometry provides a window into the development of short-term memory capacity. Frontiers in Psychology, 5, 218. [CrossRef]

- Keehn, B., Muller, R. A., & Townsend, J. (2013). Atypical attentional networks and the emergence of autism. Neuroscience and Biobehavioral Reviews, 37(2), 164-183. [CrossRef]

- Keen, D., Webster, A., & Ridley, G. (2016). How well are children with autism spectrum disorder doing academically at school? An overview of the literature. Autism, 20(3), 276-294. [CrossRef]

- Kerlin, J. R., Shahin, A. J., & Miller, L. M. (2010). Attentional gain control of ongoing cortical speech representations in a "cocktail party". Journal of Neuroscience, 30(2), 620-628. [CrossRef]

- Key, A. P., & D'Ambrose Slaboch, K. (2021). Speech processing in autism spectrum disorder: An integrative review of auditory neurophysiology findings. Journal of Speech, Language, and Hearing Research, 64(11), 4192-4212. [CrossRef]

- Kirby, A. V., Dickie, V. A., & Baranek, G. T. (2015). Sensory experiences of children with autism spectrum disorder: In their own words. Autism, 19(3), 316-326. [CrossRef]

- Kjelgaard, M. M., & Tager-Flusberg, H. (2001). An investigation of language impairment in autism: Implications for genetic subgroups. Language and Cognitive Processes, 16(2-3), 287-308. [CrossRef]

- Koelewijn, T., de Kluiver, H., Shinn-Cunningham, B. G., Zekveld, A. A., & Kramer, S. E. (2015). The pupil response reveals increased listening effort when it is difficult to focus attention. Hearing Research, 323, 81-90. [CrossRef]

- Koelewijn, T., Versfeld, N. J., & Kramer, S. E. (2017). Effects of attention on the speech reception threshold and pupil response of people with impaired and normal hearing. Hearing Research, 354, 56-63. [CrossRef]

- Koelewijn, T., Zekveld, A. A., Festen, J. M., & Kramer, S. E. (2012). Pupil dilation uncovers extra listening effort in the presence of a single-talker masker. Ear and Hearing, 33(2), 291–300. [CrossRef]

- Koelewijn, T., Zekveld, A. A., Lunner, T., & Kramer, S. E. (2021). The effect of monetary reward on listening effort and sentence recognition. Hearing Research, 406, 108255. [CrossRef]

- Krug, D. A., Arick, J., & Almond, P. (1980). Behavior checklist for identifying severely handicapped individuals with high levels of autistic behavior. Journal of Child Psychology and Psychiatry, 21(3), 221-229. [CrossRef]

- Kuznetsova, A., Brockhoff, P. B., & Christensen, R. H. B. (2017). lmerTest Package: Tests in Linear Mixed Effects Models. Journal of Statistical Software, 82(13). [CrossRef]

- Landon, J., Shepherd, D., & Lodhia, V. (2016). A qualitative study of noise sensitivity in adults with autism spectrum disorder. Research in Autism Spectrum Disorders, 32, 43-52. [CrossRef]

- Leibold, L. J., & Buss, E. (2013). Children's identification of consonants in a speech-shaped noise or a two-talker masker. Journal of Speech, Language, and Hearing Research, 56(4), 1144-1155. [CrossRef]

- Lenth, R. V. (2020). emmeans: Estimated Marginal Means, aka Least-Squares Means. R package version 1.5.3. https://CRAN.R-project.org/package=emmeans.

- Lepistö, T., Kuitunen, A., Sussman, E., Saalasti, S., Jansson-Verkasalo, E., Nieminen-von Wendt, T., & Kujala, T. (2009). Auditory stream segregation in children with Asperger syndrome. Biological Psychology, 82(3), 301-307. [CrossRef]

- Madsen, J., & Parra, L. C. (2022). Cognitive processing of a common stimulus synchronizes brains, hearts, and eyes. PNAS Nexus, 1(1), pgac020. [CrossRef]

- Mair, K. R. (2013). Speech perception in autism spectrum disorder: Susceptibility to masking and interference [Dissertation, University College London]. London.

- Mallory, C., & Keehn, B. (2021). Implications of sensory processing and attentional differences associated with autism in academic settings: An integrative review. Frontiers in Psychiatry, 12, 695825. [CrossRef]

- Mamashli, F., Khan, S., Bharadwaj, H., Michmizos, K., Ganesan, S., Garel, K. A., Ali Hashmi, J., Herbert, M. R., Hamalainen, M., & Kenet, T. (2017). Auditory processing in noise is associated with complex patterns of disrupted functional connectivity in autism spectrum disorder. Autism Research, 10(4), 631-647. [CrossRef]

- Marquez-Garcia, A. V., Magnuson, J., Morris, J., Iarocci, G., Doesburg, S., & Moreno, S. (2021). Music therapy in Autism Spectrum Disorder: A systematic review. Review Journal of Autism and Developmental Disorders, 9(1), 91-107. [CrossRef]

- McGarrigle, R., Dawes, P., Stewart, A. J., Kuchinsky, S. E., & Munro, K. J. (2017). Measuring listening-related effort and fatigue in school-aged children using pupillometry. Journal of Experimental Child Psychology, 161, 95-112. [CrossRef]

- Nittrouer, S., & Boothroyd, A. (1990). Context effects in phoneme and word recognition by young children and older adults. The Journal of the Acoustical Society of America, 87(6), 2705-2715. [CrossRef]

- O'Connor, K. (2012). Auditory processing in autism spectrum disorder: A review. Neuroscience and Biobehavioral Reviews, 36(2), 836-854. [CrossRef]

- Ohlenforst, B., Wendt, D., Kramer, S. E., Naylor, G., Zekveld, A. A., & Lunner, T. (2018). Impact of SNR, masker type and noise reduction processing on sentence recognition performance and listening effort as indicated by the pupil dilation response. Hearing Research, 365, 90-99. [CrossRef]

- Orekhova, E. V., & Stroganova, T. A. (2014). Arousal and attention re-orienting in autism spectrum disorders: Evidence from auditory event-related potentials. Frontiers in Human Neuroscience, 8, 34. [CrossRef]

- Peelle, J. E. (2018). Listening effort: How the cognitive consequences of acoustic challenge are reflected in brain and behavior. Ear and Hearing, 39(2), 204-214. [CrossRef]

- Pichora-Fuller, M. K., Kramer, S. E., Eckert, M. A., Edwards, B., Hornsby, B. W., Humes, L. E., Lemke, U., Lunner, T., Matthen, M., Mackersie, C. L., Naylor, G., Phillips, N. A., Richter, M., Rudner, M., Sommers, M. S., Tremblay, K. L., & Wingfield, A. (2016). Hearing impairment and cognitive energy: The framework for understanding effortful listening (FUEL). Ear and Hearing, 37 Suppl 1, 5S-27S. [CrossRef]

- Porretta, V., Buchanan, L., & Jarvikivi, J. (2020). When processing costs impact predictive processing: The case of foreign-accented speech and accent experience. Attention, Perception and Psychophysics, 82(4), 1558-1565. [CrossRef]

- Prodi, N., Visentin, C., Peretti, A., Griguolo, J., & Bartolucci, G. B. (2019). Investigating listening effort in classrooms for 5- to 7-year-old children. Language, Speech, and Hearing Services in Schools, 50(2), 196-210. [CrossRef]

- R Core Team. (2022). R: A language and environment for statistical computing. https://www.R-project.org/.

- Rance, G., Chisari, D., Saunders, K., & Rault, J. L. (2017). Reducing listening-related stress in school-aged children with autism spectrum disorder. Journal of Autism and Developmental Disorders, 47(7), 2010-2022. [CrossRef]

- Reimer, J., McGinley, M. J., Liu, Y., Rodenkirch, C., Wang, Q., McCormick, D. A., & Tolias, A. S. (2016). Pupil fluctuations track rapid changes in adrenergic and cholinergic activity in cortex. Nature Communications, 7, 13289. [CrossRef]

- Rönnberg, J., Lunner, T., Zekveld, A., Sorqvist, P., Danielsson, H., Lyxell, B., Dahlstrom, O., Signoret, C., Stenfelt, S., Pichora-Fuller, M. K., & Rudner, M. (2013). The Ease of Language Understanding (ELU) model: Theoretical, empirical, and clinical advances. Frontiers in Systems Neuroscience, 7, 31. [CrossRef]

- Russo, N., Nicol, T., Trommer, B., Zecker, S., & Kraus, N. (2009). Brainstem transcription of speech is disrupted in children with autism spectrum disorders. Developmental Science, 12(4), 557-567. [CrossRef]

- Schafer, E. C., Aoyama, K., Ho, T., Castillo, P., Conlin, J., Jones, J., & Thompson, S. (2018). Speech recognition in noise in adults and children who speak English or Chinese as their first language. Journal of the American Academy of Audiology, 29(10), 885-897. [CrossRef]

- Schafer, E. C., Mathews, L., Gopal, K., Canale, E., Creech, A., Manning, J., & Kaiser, K. (2020). Behavioral auditory processing in children and young adults with autism spectrum disorder. Journal of the American Academy of Audiology, 31(9), 680-689. [CrossRef]

- Schopler, E., Reichler, R. J., DeVellis, R. F., & Daly, K. (1980). Toward objective classification of childhood autism: Childhood Autism Rating Scale (CARS). Journal of Autism and Developmental Disorders, 10(1), 91–103.

- Schwab, J. F., & Lew-Williams, C. (2016). Language learning, socioeconomic status, and child-directed speech. Wiley Interdisciplinary Reviews - Cognitive Science, 7(4), 264-275. [CrossRef]

- Schwartz, S., Shinn-Cunningham, B., & Tager-Flusberg, H. (2018). Meta-analysis and systematic review of the literature characterizing auditory mismatch negativity in individuals with autism. Neuroscience and Biobehavioral Reviews, 87, 106-117. [CrossRef]

- Shields, C., Willis, H., Nichani, J., Sladen, M., & Kluk-de Kort, K. (2022). Listening effort: WHAT is it, HOW is it measured and WHY is it important? Cochlear Implants International, 23(2), 114-117. [CrossRef]

- Simonoff, E., Pickles, A., Charman, T., Chandler, S., Loucas, T., & Baird, G. (2008). Psychiatric disorders in children with autism spectrum disorders: prevalence, comorbidity, and associated factors in a population-derived sample. Journal of the American Academy of Child and Adolescent Psychiatry, 47(8), 921-929. [CrossRef]

- Skoe, E., & Karayanidi, K. (2019). Bilingualism and speech understanding in noise: Auditory and linguistic factors. Journal of the American Academy of Audiology, 30(2), 115-130. [CrossRef]

- Stroganova, T. A., Kozunov, V. V., Posikera, I. N., Galuta, I. A., Gratchev, V. V., & Orekhova, E. V. (2013). Abnormal pre-attentive arousal in young children with autism spectrum disorder contributes to their atypical auditory behavior: an ERP study. PLoS One, 8(7), e69100. [CrossRef]

- Studebaker, G. A., McDaniel, D. M., & Sherbecoe, R. L. (1995). Evaluating relative speech recognition performance using the proficiency factor and rationalized arcsine differences. Journal of the American Academy of Audiology, 6.

- Thye, M. D., Bednarz, H. M., Herringshaw, A. J., Sartin, E. B., & Kana, R. K. (2018). The impact of atypical sensory processing on social impairments in autism spectrum disorder. Developmental Cognitive Neuroscience, 29, 151-167. [CrossRef]

- Travers, B. G., Adluru, N., Ennis, C., Tromp do, P. M., Destiche, D., Doran, S., Bigler, E. D., Lange, N., Lainhart, J. E., & Alexander, A. L. (2012). Diffusion tensor imaging in autism spectrum disorder: a review. Autism Research, 5(5), 289-313. [CrossRef]

- Ursache, A., & Noble, K. G. (2016). Neurocognitive development in socioeconomic context: Multiple mechanisms and implications for measuring socioeconomic status. Psychophysiology, 53(1), 71-82. [CrossRef]

- van der Meer, E., Beyer, R., Horn, J., Foth, M., Bornemann, B., Ries, J., Kramer, J., Warmuth, E., Heekeren, H. R., & Wartenburger, I. (2010). Resource allocation and fluid intelligence: Insights from pupillometry. Psychophysiology, 47(1), 158-169. [CrossRef]

- van Rij, J., Hendriks, P., van Rijn, H., Baayen, R. H., & Wood, S. N. (2019). Analyzing the time course of pupillometric data. Trends in Hearing, 23, 2331216519832483. [CrossRef]

- van Rij, J., Wieling, M., Baayen, R. H., & van Rijn, H. (2017). itsadug: Interpreting time series and autocorrelated data using GAMMs (published on the Comprehensive R Archive Network, CRAN). Retrieved Jan 28 from https://cran.r-project.org/web/packages/itsadug.

- Wang, X., Wang, S., Fan, Y., Huang, D., & Zhang, Y. (2017). Speech-specific categorical perception deficit in autism: An event-related potential study of lexical tone processing in Mandarin-speaking children. Scientific Reports, 7, 43254.

- Wendt, D., Dau, T., & Hjortkjaer, J. (2016). Impact of background noise and sentence complexity on processing demands during sentence comprehension. Frontiers in Psychology, 7, 345. [CrossRef]

- Wendt, D., Hietkamp, R. K., & Lunner, T. (2017). Impact of noise and noise reduction on processing effort: A pupillometry study. Ear and Hearing, 38(6), 690-700. [CrossRef]

- Wendt, D., Koelewijn, T., Ksiazek, P., Kramer, S. E., & Lunner, T. (2018). Toward a more comprehensive understanding of the impact of masker type and signal-to-noise ratio on the pupillary response while performing a speech-in-noise test. Hearing Research, 369, 67-78. [CrossRef]

- Winn, M. B., & Moore, A. N. (2018). Pupillometry reveals that context benefit in speech perception can be disrupted by later-occurring sounds, especially in listeners with cochlear implants. Trends in Hearing, 22, 2331216518808962. [CrossRef]

- Wood, S. N. (2017). Generalized Additive Models: An Introduction with R (2nd ed.). Chapman and Hall/CRC. [CrossRef]

- Xu, S., Fan, J., Zhang, M., Zhao, H., Jiang, X., Ding, H., & Zhang, Y. (2023). Hearing assistive technology facilitates sentence-in-noise recognition in children with autism spectrum disorder. Journal of Speech, Language, and Hearing Research, 66(8), 2967–2987. [CrossRef]

- Yi, L., Wang, Q., Song, C., & Han, Z. R. (2022). Hypo- or hyperarousal? The mechanisms underlying social information processing in autism. Child Development Perspectives, 16(4), 215-222. [CrossRef]

- Yu, L., Fan, Y., Deng, Z., Huang, D., Wang, S., & Zhang, Y. (2015). Pitch processing in tonal-language-speaking children with autism: An event-related potential study. Journal of Autism and Developmental Disorders, 45(11), 3656-3667. [CrossRef]

- Yu, L., Wang, S., Huang, D., Wu, X., & Zhang, Y. (2018). Role of inter-trial phase coherence in atypical auditory evoked potentials to speech and nonspeech stimuli in children with autism. Clinical Neurophysiology, 129, 1374-1382. [CrossRef]

- Yu, L., Wang, S., Huang, D., Wu, X., & Zhang, Y. (2021). Evidence of altered cortical processing of dynamic lexical tone pitch contour in Chinese children with autism. Neuroscience Bulletin, 37(11), 1605-1608. [CrossRef]

- Yu, L., Huang, D., Wang, S., & Zhang, Y. (2022). Reduced neural specialization for word-level linguistic prosody in children with autism. Journal of Autism and Developmental Disorders. Advance online publication. [CrossRef]

- Zekveld, A. A., Heslenfeld, D. J., Johnsrude, I. S., Versfeld, N. J., & Kramer, S. E. (2014). The eye as a window to the listening brain: Neural correlates of pupil size as a measure of cognitive listening load. Neuroimage, 101, 76-86. [CrossRef]

- Zekveld, A. A., Koelewijn, T., & Kramer, S. E. (2018). The pupil dilation response to auditory stimuli: Current state of knowledge. Trends in Hearing, 22, 233121651877717. [CrossRef]

- Zekveld, A. A., Kramer, S. E., & Festen, J. M. (2010). Pupil response as an indication of effortful listening: The influence of sentence intelligibility. Ear and Hearing, 31(4), 480-490. [CrossRef]

- Zekveld, A. A., Kramer, S. E., & Festen, J. M. (2011). Cognitive load during speech perception in noise: The influence of age, hearing loss, and cognition on the pupil response. Ear and Hearing, 32(4), 498-510. [CrossRef]

- Zhang, H. (2009). The revision of WISC-IV Chinese version. Psychological Science, 32(5), 1177-1179. [CrossRef]

- Zhang, M., Xu, S., Chen, Y., Lin, Y., Ding, H., & Zhang, Y. (2021). Recognition of affective prosody in autism spectrum conditions: A systematic review and meta-analysis. Autism, 0(0), 1362361321995725. [CrossRef]

- Zhang, M., Ding, H., Naumceska, M., & Zhang, Y. (2022). Virtual reality technology as an educational and intervention tool for children with autism spectrum disorder: Current perspectives and future directions. Behavioral Sciences, 12, 138. [CrossRef]

- Zhao, S., Liu, Y., & Wei, K. (2022). Pupil-linked arousal response reveals aberrant attention regulation among children with Autism Spectrum Disorder. Journal of Neuroscience, 42(27), 5427-5437. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).