1. Introduction

Computational topology and homological persistence provide swift algorithms to quantify geometrical and topological aspects of triangulable manifolds [1]. Let $M$ be a triangulable manifold. Given a continuous function $f: M \to \mathbb{R}$, persistent homology studies the evolution of homological classes throughout the sublevel-set filtration induced by $f$ on $M$. In this context, the word persistence refers to the lifespan of homological classes along the filtration. Intuitively, this procedure allows us to see $M$ using $f$ as a lens capable of highlighting features of interest in $M$.

Many extensions and generalizations of topological persistence aim to broaden the field of application of persistent homology. Such generalizations allow for studying objects other than triangulable manifolds while maintaining the valuable properties granted by the persistent homology framework, e.g., stability [2], universality [3], resistance to occlusions [4], and computability [5].

In most cases, generalizations of persistent homology can be attained in two ways. On the one hand, it is possible to define explicit mappings from the object of interest to a triangulable manifold preserving the properties of the original object, see, e.g., [6]. On the other hand, theoretical generalizations aim to extend the definition of persistence to objects other than triangulable manifolds and functors other than homology. These generalizations come in many flavors that we shall briefly recall in Section 2.1. Here, we consider the rank-based persistence framework and its developments and applications detailed in [7, 8, 9]. This framework revolves around an axiomatic approach that yields usable definitions of persistence in categories such as graphs and quivers, without requiring auxiliary topological constructions.

In the current manuscript, following the approach described in [9, 10], we provide strategies to compute rank-based persistence on weighted graphs (either directed or undirected). Weighted (di)graphs are widely used in many real-world scenarios, ranging from the analysis of interactions in social networks to context-based recommendation. Modern machine learning provides specific neural architectures to process directed graphs, e.g., [11, 12, 13]. While neural networks ensure maximum flexibility by learning directly from training data, graph theory offers a plethora of invariants that, albeit static, can be leveraged as descriptors of directed, weighted networks [14, 15, 16]. We provide an algorithmic approach to encode such features as persistence diagrams carrying compact information that can complement, or even be integrated in, modern neural analysis pipelines.

Organization of the paper

Section 2 briefly outlines various generalizations of persistence and the current methods taking advantage of persistent homology on graphs and directed graphs (digraphs). Section 3 recalls the essential concepts of the generalized theory of persistence used in this manuscript, namely categorical and indexing-aware persistence functions. These functions are based on graph-theoretical features. In particular, we introduce the concept of simple features in Section 4 and single-vertex features in Section 5. This latter class of features yields plug-and-play methods for applications: we provide an implementation of single-vertex feature persistence in Section 6 and apply it in the context of trust networks. Section 7 concludes the paper.

3. Graph-theoretical persistence

To the best of our knowledge, current applications of persistence to graphs and digraphs leverage homology of some kind. Here, we provide an approach to the study of graphs and digraphs that is not necessarily homological. We recall the basic notions of the extension of topological persistence to graph theory introduced in [7, 8]. For a comprehensive view of classical topological persistence, we refer the reader to [39, 40].

3.1. Categorical persistence functions

Definition 1. [7, Def. 3.2] Let $\mathbf{C}$ be a category. We say that a lower-bounded function $p: \mathrm{Morph}(\mathbf{C}) \to \mathbb{Z}$ is a categorical persistence function if, for all $u_1 \to u_2 \to v_1 \to v_2$, the following inequalities hold:

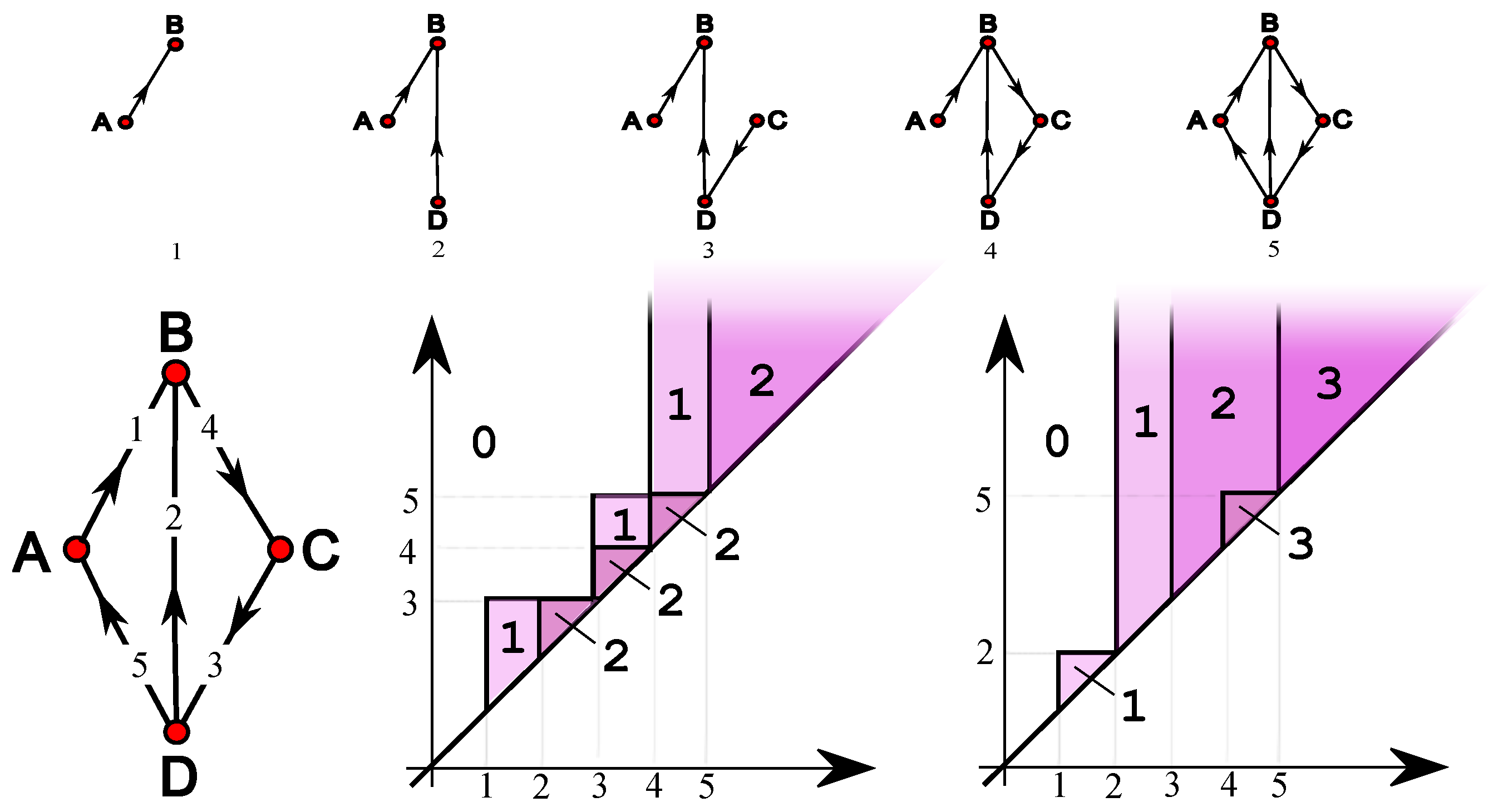

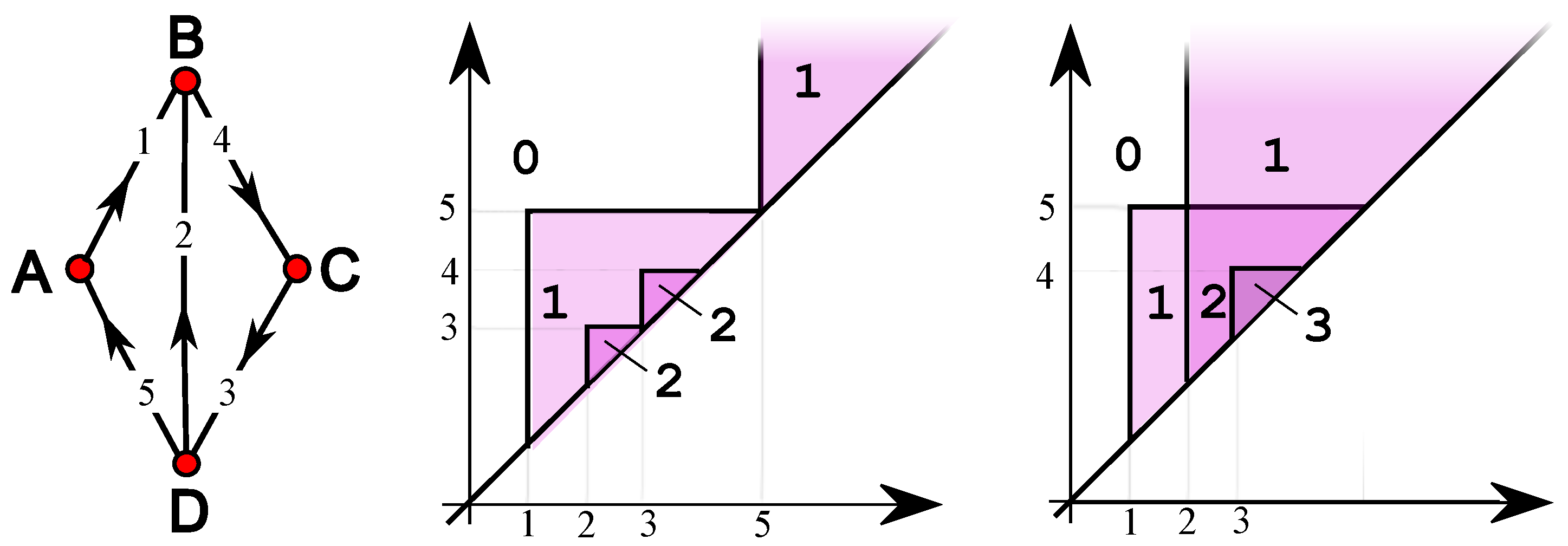

With $\mathbf{C} = (\mathbb{R}, \le)$, the graphs of such functions have the appearance of superimposed triangles typical of Persistent Betti Number functions [39, Sect. 2], so they can be condensed into persistence diagrams [7, Def. 3.16].

In the remainder, we shall write “(di)graph” for “graph (respectively digraph)”, extending this notation to cases such as sub(di)graph and the like. Symmetrically, and when no confusion arises, we shall denote with $\mathbf{G}$ the category $\mathbf{Graph}$ or the category $\mathbf{Digraph}$, with monomorphisms as morphisms. We shall use the noun edge both for edges of a graph and for arcs of a digraph.

Filtered (di)graphs are pairs $(G, f)$ consisting of a (di)graph $G = (V, E)$ and a filtering function $f: V \cup E \to \mathbb{R}$ defined on the edges and extended to each vertex as the minimum of the values of its incident edges. For any $u \in \mathbb{R}$, the sublevel (di)graph $G_u^f$ (briefly $G_u$ if no confusion occurs) is the sub(di)graph induced by the edges $e$ such that $f(e) \le u$.
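The construction of sublevel (di)graphs can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the dictionary representation (an arc `(tail, head)` mapped to its weight) and the helper names `sublevel_digraph` and `vertex_values` are hypothetical and are not taken from the package accompanying the paper.

```python
from typing import Dict, Hashable, Set, Tuple

Edge = Tuple[Hashable, Hashable]


def sublevel_digraph(weights: Dict[Edge, float], u: float) -> Tuple[Set[Hashable], Set[Edge]]:
    """Return (vertices, edges) of the sub(di)graph induced by the edges e with f(e) <= u."""
    edges = {e for e, w in weights.items() if w <= u}
    vertices = {x for e in edges for x in e}
    return vertices, edges


def vertex_values(weights: Dict[Edge, float]) -> Dict[Hashable, float]:
    """Extend the filtering function to vertices: minimum over the incident edges."""
    values: Dict[Hashable, float] = {}
    for (a, b), w in weights.items():
        for x in (a, b):
            values[x] = min(values.get(x, w), w)
    return values


# Hypothetical toy digraph: a directed triangle with increasing weights.
weights = {("a", "b"): 1.0, ("b", "c"): 2.0, ("c", "a"): 3.0}
vertices_2, edges_2 = sublevel_digraph(weights, 2.0)
```

Since a vertex enters the filtration together with its first incident edge, the sublevel (di)graph never contains isolated vertices in this representation.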

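Definition 2. [8, Def. 5] The natural pseudodistance of filtered (di)graphs $(G, f)$ and $(G', f')$ is

$$\delta\big((G, f), (G', f')\big) = \begin{cases} +\infty & \text{if } \mathcal{H} = \emptyset, \\ \inf_{\varphi \in \mathcal{H}} \sup_{e \in E} \left| f(e) - f'(\varphi(e)) \right| & \text{otherwise,} \end{cases}$$

where $\mathcal{H}$ is the set of (di)graph isomorphisms between $G$ and $G'$.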
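For very small digraphs, the natural pseudodistance can be evaluated by brute force. The sketch below assumes the usual form of the definition: the infimum, over all isomorphisms, of the largest absolute difference between corresponding edge values, and $+\infty$ when no isomorphism exists. The function name and the dictionary representation (arc mapped to weight) are hypothetical, and the factorial cost makes this suitable for toy examples only.

```python
from itertools import permutations
from math import inf
from typing import Dict, Hashable, Tuple

Edge = Tuple[Hashable, Hashable]


def natural_pseudodistance(weights_f: Dict[Edge, float], weights_g: Dict[Edge, float]) -> float:
    """Brute-force natural pseudodistance between two small filtered digraphs."""
    verts_f = list({x for e in weights_f for x in e})
    verts_g = list({x for e in weights_g for x in e})
    if len(verts_f) != len(verts_g) or len(weights_f) != len(weights_g):
        return inf  # no isomorphism can exist
    best = inf
    for image in permutations(verts_g):
        phi = dict(zip(verts_f, image))
        # Push the arcs of the first digraph forward along the vertex bijection.
        mapped = {(phi[a], phi[b]): w for (a, b), w in weights_f.items()}
        if mapped.keys() != weights_g.keys():
            continue  # phi is not a digraph isomorphism
        cost = max((abs(w - weights_g[e]) for e, w in mapped.items()), default=0.0)
        best = min(best, cost)
    return best


path_f = {("a", "b"): 1.0, ("b", "c"): 2.0}
path_g = {("x", "y"): 1.5, ("y", "z"): 2.0}
two_into_one = {("x", "y"): 1.0, ("z", "y"): 2.0}
```

Here `path_f` and `path_g` are isomorphic directed paths whose weights differ by at most 0.5, while `two_into_one` is not isomorphic to either of them.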

Let $p$ be a categorical persistence function on $\mathbf{G}$, $(G, f)$, $(G', f')$ any filtered (di)graphs, $D(f)$, $D(f')$ the respective persistence diagrams, and $d$ be the bottleneck distance [39, Sect. 6][7, Def. 3.24].

Definition 3. [8, Sect. 2] The categorical persistence function $p$ is said to be stable if the inequality $d\big(D(f), D(f')\big) \le \delta\big((G, f), (G', f')\big)$ holds. Moreover, the bottleneck distance is said to be universal with respect to $p$ if it yields the best possible lower bound for the natural pseudodistance, among the possible distances between $D(f)$ and $D(f')$, for any $(G, f)$, $(G', f')$.

3.2. Indexing-aware persistence functions

The difference between the categorical persistence functions introduced in [8] and the functions of the present subsection (presented originally in [9]) is that the former come from a functor defined on $\mathbf{G}$, while the latter strictly depend on the filtration, thus descending from a functor defined on $(\mathbb{R}, \le)$.

Definition 4. [9, Def. 5] Let $p$ be a map assigning to each filtered (di)graph $(G, f)$ a categorical persistence function $p_{(G,f)}$ on $(\mathbb{R}, \le)$, such that $p_{(G,f)} = p_{(G',f')}$ whenever an isomorphism between $(G, f)$ and $(G', f')$ compatible with the functions $f$, $f'$ exists.

All the resulting categorical persistence functions are called indexing-aware persistence functions (ip-functions for brevity). The map p itself is called an ip-function generator.

Definition 5. [9, Def. 6, Prop. 1] Let $p$ be an ip-function generator on $\mathbf{G}$. The map $p$ itself and the resulting ip-functions are said to be balanced if the following condition is satisfied. Let $G = (V, E)$ be any (di)graph, $f$ and $g$ two filtering functions on $G$, and $p_{(G,f)}$ and $p_{(G,g)}$ their ip-functions. If a positive real number $h$ exists, such that $\sup_{e \in E} |f(e) - g(e)| \le h$, then for all $u < v$ the inequality $p_{(G,f)}(u - h, v + h) \le p_{(G,g)}(u, v)$ holds.

Proposition 6. [9, Thm. 1] Balanced ip-functions are stable.

We now recall our main ip-function generators: steady and ranging sets.

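Definition 7. [9, Def. 8] Given a (di)graph $G = (V, E)$, any function $\mathcal{F}: 2^{V \cup E} \to \{\mathrm{true}, \mathrm{false}\}$ is called a feature. Conventionally, for $X \subseteq V \cup E$ we set $\mathcal{F}(X) = \mathrm{false}$ in a sub(di)graph $G' = (V', E')$ if $X \not\subseteq V' \cup E'$.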

Remark 8. The second part of Def. 7 was added for use in some of the following proofs.

Let $\mathcal{F}: 2^{V \cup E} \to \{\mathrm{true}, \mathrm{false}\}$ be a feature.

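Definition 9. [9, Def. 8] We call $\mathcal{F}$-set any $X \subseteq V \cup E$ such that $\mathcal{F}(X) = \mathrm{true}$. In a weighted (di)graph $G$, we shall say that $X \subseteq V \cup E$ is an $\mathcal{F}$-set at level $w \in \mathbb{R}$ if it is an $\mathcal{F}$-set of the sublevel (di)graph $G_w$.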

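Definition 10. [9, Def. 9] We define the maximal feature $m\mathcal{F}$ associated with $\mathcal{F}$ as follows: for any $X \subseteq V \cup E$, $m\mathcal{F}(X) = \mathrm{true}$ if and only if $\mathcal{F}(X) = \mathrm{true}$ and there is no $Y \subseteq V \cup E$ such that $X \subsetneq Y$ and $\mathcal{F}(Y) = \mathrm{true}$.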

Definition 11. [9, Def. 10] A set $X \subseteq V \cup E$ is a steady-set (s$\mathcal{F}$-set for brevity) at $(u, v)$, with $u < v$, if it is an $\mathcal{F}$-set at all levels $w$ with $u \le w < v$.

We call $X$ a ranging-set (r$\mathcal{F}$-set) at $(u, v)$ if there exist levels $w \le u$ and $w' \ge v$ at which it is an $\mathcal{F}$-set.

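Let $S^{\mathcal{F}}_{(G,f)}(u, v)$ be the set of s$\mathcal{F}$-sets at $(u, v)$ and let $R^{\mathcal{F}}_{(G,f)}(u, v)$ be the set of r$\mathcal{F}$-sets at $(u, v)$.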

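Definition 12. [9, Def. 11] For any filtered (di)graph $(G, f)$ we define $\sigma^{\mathcal{F}}_{(G,f)}$ (resp. $\varrho^{\mathcal{F}}_{(G,f)}$) as the function which assigns to $(u, v) \in \mathbb{R}^2$, $u < v$, the number $|S^{\mathcal{F}}_{(G,f)}(u, v)|$ (resp. $|R^{\mathcal{F}}_{(G,f)}(u, v)|$).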

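We denote by $\sigma^{\mathcal{F}}$ and $\varrho^{\mathcal{F}}$ the maps assigning $\sigma^{\mathcal{F}}_{(G,f)}$ and $\varrho^{\mathcal{F}}_{(G,f)}$ respectively to $(G, f)$.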
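Counting steady and ranging sets can be illustrated on a toy weighted graph. The sketch below makes several assumptions that are not part of the original text: steadiness is tested on the half-open range $u \le w < v$, candidate sets are supplied explicitly (enumerating all subsets of $V \cup E$ is exponential), edges are treated as undirected, and the names `is_steady`, `is_ranging`, `count_sets` and the `odd_degree` feature are hypothetical. Because the sublevel graph only changes at edge weights, it suffices to test finitely many levels.

```python
from typing import Callable, Dict, FrozenSet, Hashable, Iterable, Set, Tuple

Edge = Tuple[Hashable, Hashable]
Feature = Callable[[FrozenSet, Set, Set], bool]


def sublevel(weights: Dict[Edge, float], level: float):
    """Vertices and edges of the subgraph induced by the edges of weight <= level."""
    edges = {e for e, w in weights.items() if w <= level}
    return {x for e in edges for x in e}, edges


def is_feature_set(feature: Feature, X: FrozenSet, weights: Dict[Edge, float], level: float) -> bool:
    vertices, edges = sublevel(weights, level)
    if not X <= (vertices | edges):  # convention: false if X is not in the subgraph
        return False
    return feature(X, vertices, edges)


def is_steady(feature, X, weights, u, v) -> bool:
    # The sublevel graph is constant between consecutive weights.
    levels = [u] + [w for w in set(weights.values()) if u < w < v]
    return all(is_feature_set(feature, X, weights, w) for w in levels)


def is_ranging(feature, X, weights, u, v) -> bool:
    critical = set(weights.values())
    below = [u] + [w for w in critical if w < u]
    above = [v] + [w for w in critical if w > v]
    return (any(is_feature_set(feature, X, weights, w) for w in below)
            and any(is_feature_set(feature, X, weights, w) for w in above))


def count_sets(feature, candidates: Iterable[FrozenSet], weights, u, v) -> Tuple[int, int]:
    """Return (number of steady sets, number of ranging sets) at (u, v)."""
    cands = list(candidates)
    sigma = sum(is_steady(feature, X, weights, u, v) for X in cands)
    rho = sum(is_ranging(feature, X, weights, u, v) for X in cands)
    return sigma, rho


def odd_degree(X, vertices, edges) -> bool:
    """Hypothetical feature: X is a singleton whose vertex has odd degree."""
    if len(X) != 1:
        return False
    (x,) = X
    return sum(x in e for e in edges) % 2 == 1


weights = {("a", "b"): 1.0, ("b", "c"): 2.0, ("b", "d"): 3.0}
singletons = [frozenset({x}) for x in "abcd"]
sigma, rho = count_sets(odd_degree, singletons, weights, 1.0, 3.0)
```

In this example the vertex `b` has odd degree at levels 1 and 3 but not at level 2, so `{b}` is ranging but not steady at $(1, 3)$; the two counts therefore differ for this feature.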

Proposition 13. [9, Prop. 2] The maps $\sigma^{\mathcal{F}}$ and $\varrho^{\mathcal{F}}$ are ip-function generators.

The next definition will be generalized in Section 4.

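Definition 14. [9, Def. 13] We say that a feature $\mathcal{F}$ is monotone if

1. for any (di)graphs $G' = (V', E') \subseteq G'' = (V'', E'')$, and any $X \subseteq V' \cup E'$, $\mathcal{F}(X) = \mathrm{true}$ in $G''$ implies $\mathcal{F}(X) = \mathrm{true}$ in $G'$;

2. in any (di)graph $G = (V, E)$, for any $Y \subseteq X \subseteq V \cup E$, $\mathcal{F}(X) = \mathrm{true}$ implies $\mathcal{F}(Y) = \mathrm{true}$.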

Proposition 15. [9, Prop. 3] If $\mathcal{F}$ is monotone, then $\sigma^{\mathcal{F}} = \varrho^{\mathcal{F}}$.

Proposition 16. [9, Prop. 4] If $\mathcal{F}$ is monotone, then the ip-function generators $\sigma^{\mathcal{F}} = \varrho^{\mathcal{F}}$ are balanced.

4. Simple features

We now define a class of features extending the class of monotone features (Def. 14).

Definition 17. Let $G = (V, E)$ be a (di)graph. A feature $\mathcal{F}$ is said to be simple if, for $X \subseteq V \cup E$ and for sub(di)graphs $H_1 \subseteq H_2 \subseteq H_3 \subseteq G$, the following condition holds:

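if $\mathcal{F}(X) = \mathrm{true}$ in $H_3$ and $\mathcal{F}(X) = \mathrm{false}$ in $H_2$, then $\mathcal{F}(X) = \mathrm{false}$ in $H_1$.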

For the remainder of this section, let $(G, f)$ be a weighted (di)graph, $G = (V, E)$, and $\mathcal{F}$ a simple feature in $G$.

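Lemma 18. Let $X \subseteq V \cup E$. Then, either there is no value $u$ for which $\mathcal{F}(X) = \mathrm{true}$ in $G_u$, or $\mathcal{F}(X) = \mathrm{true}$ in $G_w$ for all $w \in [u_X, v_X)$, where $u_X$ is the lowest value $u$ such that $\mathcal{F}(X) = \mathrm{true}$ in the sub(di)graph $G_u$, and $v_X$ is either the lowest value $v > u_X$ for which $\mathcal{F}(X) = \mathrm{false}$ in $G_v$ or $+\infty$.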

Proof. Assume that there is at least a value such that in . There surely are values w such that in : at least the values beneath the minimum attained by f; let be such that in ; for any set , , . Then, by Def. 17, in . So between two values for which there cannot be one such that . □

Definition 19. The interval of Lemma 18, i.e. the widest interval of values $w$ for which $\mathcal{F}(X) = \mathrm{true}$ in $G_w$, is called the $\mathcal{F}$-interval of $X$ in $(G, f)$.
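The interval of a set along a filtration can be computed by scanning the distinct edge weights in increasing order. The sketch below is a minimal illustration, assuming that the interval is closed on the left and open on the right, that edges are undirected, and that a feature is passed as a Python callable; the names `feature_interval` and `has_degree` are hypothetical. The function also raises an error if the feature becomes true again after turning false, which cannot happen for a simple feature.

```python
from math import inf
from typing import Callable, Dict, FrozenSet, Hashable, Optional, Set, Tuple

Edge = Tuple[Hashable, Hashable]
Feature = Callable[[FrozenSet, Set, Set], bool]


def sublevel(weights: Dict[Edge, float], level: float):
    """Vertices and edges of the subgraph induced by the edges of weight <= level."""
    edges = {e for e, w in weights.items() if w <= level}
    return {x for e in edges for x in e}, edges


def feature_interval(feature: Feature, X: FrozenSet,
                     weights: Dict[Edge, float]) -> Optional[Tuple[float, float]]:
    """Return (start, end) such that the feature holds for X on [start, end), or None."""
    start = end = None
    for level in sorted(set(weights.values())):
        vertices, edges = sublevel(weights, level)
        holds = X <= (vertices | edges) and feature(X, vertices, edges)
        if holds and start is None:
            start = level  # first level at which X has the feature
        elif not holds and start is not None and end is None:
            end = level  # first later level at which X loses the feature
        elif holds and end is not None:
            raise ValueError("feature holds again after failing: not simple on this filtration")
    if start is None:
        return None
    return start, (inf if end is None else end)


def has_degree(d: int) -> Feature:
    """Hypothetical feature: X is a singleton whose vertex has degree exactly d."""
    def feature(X, vertices, edges):
        if len(X) != 1:
            return False
        (x,) = X
        return sum(x in e for e in edges) == d
    return feature


weights = {("a", "b"): 1.0, ("b", "c"): 2.0, ("b", "d"): 3.0}
interval_b = feature_interval(has_degree(1), frozenset({"b"}), weights)
interval_a = feature_interval(has_degree(1), frozenset({"a"}), weights)
```

In this toy graph the vertex `b` has degree 1 only until the second edge appears, so its interval is bounded, whereas `a` keeps degree 1 forever and gets a half-line.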

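Proposition 20. $\sigma^{\mathcal{F}} = \varrho^{\mathcal{F}}$.

Proof. This follows from Lemma 18, since $\sigma^{\mathcal{F}}$ and $\varrho^{\mathcal{F}}$ would differ only if, for at least one set $X$, there were values $u < w < v$ for which $\mathcal{F}(X) = \mathrm{true}$ in $G_u$ and in $G_v$ but $\mathcal{F}(X) = \mathrm{false}$ in $G_w$. □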

It is easy to prove that the following features are simple.

Example 21. For a graph $G$, the (rather trivial) feature $\mathcal{F}$ for which $\mathcal{F}(X) = \mathrm{true}$ if and only if $X$ is a singleton containing a vertex of a fixed degree $d$ is simple. Also the feature for which $\mathcal{F}(X) = \mathrm{true}$ if and only if $X$ is a singleton containing a vertex whose degree is $\ge d$ is simple, with half-lines as $\mathcal{F}$-intervals. Analogous features are defined for a digraph in terms of indegree and outdegree.

Example 22. For a (di)graph $G$, let $\mathcal{F}(X) = \mathrm{true}$ if and only if $X$ is a set of vertices inducing a connected subgraph (resp. a strongly connected subdigraph) of $G$. This feature is simple and its $\mathcal{F}$-intervals are half-lines.

Example 23. For a (di)graph $G$, let $\mathcal{F}(X) = \mathrm{true}$ if and only if $X$ is a set of vertices inducing a connected component (resp. a strong component) of $G$. This feature is simple; for the $\mathcal{F}$-interval of a set $X$, the right endpoint either is $+\infty$ or the value at which some other vertex joins the component (resp. strong component) induced by $X$.

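Proposition 24. For a (di)graph $G$, any monotone feature is simple.

Proof. Let $G = (V, E)$ be a (di)graph and $\mathcal{F}$ be a monotone feature. For $X \subseteq V \cup E$ and sub(di)graphs $H_1 \subseteq H_2 \subseteq H_3 \subseteq G$, assume that $\mathcal{F}(X) = \mathrm{true}$ in $H_3$ and $\mathcal{F}(X) = \mathrm{false}$ in $H_2$. By condition 1 of Def. 14, $X$ is not contained in $H_2$ (recall the convention of Def. 7), so also $X$ is not contained in $H_1$ and $\mathcal{F}(X) = \mathrm{false}$ in $H_1$. □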

Lemma 25. Let be filtering functions on a (di)graph G, and assume that there exists a positive real number h such that . Then, for real numbers such that , the sublevel (di)graph is a sub(di)graph of and is a sub(di)graph of .

Proof. For any , if then ; analogously, if then . □

The following Proposition generalizes Prop. 16 (i.e. [9, Prop. 4]) with essentially the same proof.

Proposition 26. The ip-function generators $\sigma^{\mathcal{F}} = \varrho^{\mathcal{F}}$ are balanced, hence stable.

Proof. With the same hypotheses as in Lemma 25, let be such that in at all levels , with . For any we now show that in . In fact, by Lemma 25 is a sub(di)graph of , which is a sub(di)graph of . Since is simple and both in and in , we necessarily have that in . Therefore there is an injective map (actually an inclusion map) from to , therefore . Stability comes from Prop. 6. □

Remark 27. The maximal version of a simple feature (Def. 10) is generally not simple: an example is the feature identifying independent sets of vertices, which is monotone (hence simple), while the ip-functions corresponding to its maximal version are not balanced ([9, Sect. 2.4, Appendix, Fig. 14, Fig. 15]), so this maximal version is not simple. Still, some maximal versions are simple.

Proposition 28. For a (di)graph $G$, the feature $\mathcal{F}$ of [9, Sect. 2.4], i.e. such that $\mathcal{F}(X) = \mathrm{true}$ if and only if $X$ is a maximal matching, is simple.

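Proof. A matching of a graph is a matching also of any supergraph. If, for $H_1 \subseteq H_2 \subseteq H_3 \subseteq G$, we have $\mathcal{F}(X) = \mathrm{true}$ in $H_3$ and $\mathcal{F}(X) = \mathrm{false}$ in $H_2$, this means that $X$ is a maximal matching in $H_3$ but is not in $H_2$. There are only two ways of not being a maximal matching in $H_2$: either $X$ is not contained in the edge set of $H_2$, or $H_2$ contains a matching $Y \supsetneq X$. The latter is impossible because in that case, $X$ would not be maximal in $H_3$ either. So $X$ is not contained in the edge set of $H_2$, hence not in that of $H_1$, and $\mathcal{F}(X) = \mathrm{false}$ in $H_1$. □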
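The behaviour of the maximal-matching feature along a filtration can be checked on a small example. The sketch below assumes undirected, loopless graphs stored as a dictionary from edges to weights; the helper names are hypothetical. Maximality is tested by trying to add a single edge, which suffices because every subset of a matching is again a matching.

```python
from typing import Dict, Hashable, Iterable, Set, Tuple

Edge = Tuple[Hashable, Hashable]


def is_matching(X: Iterable[Edge]) -> bool:
    """True if no two edges of X share an endpoint."""
    seen: Set[Hashable] = set()
    for a, b in X:
        if a in seen or b in seen:
            return False
        seen.update((a, b))
    return True


def is_maximal_matching(X: Iterable[Edge], edges: Iterable[Edge]) -> bool:
    """True if X is a matching of the graph with the given edges and cannot be extended."""
    X, edges = set(X), set(edges)
    if not X <= edges or not is_matching(X):
        return False
    covered = {x for e in X for x in e}
    # X is maximal iff every remaining edge touches a vertex already covered by X.
    return all(a in covered or b in covered for a, b in edges - X)


# Hypothetical toy filtration: a path a-b-c-d whose edges appear one at a time.
weights: Dict[Edge, float] = {("a", "b"): 1.0, ("b", "c"): 2.0, ("c", "d"): 3.0}
X = {("a", "b")}
status = [
    is_maximal_matching(X, {e for e, w in weights.items() if w <= level})
    for level in (1.0, 2.0, 3.0)
]
```

On this path the set `X` stays a maximal matching until the disjoint edge `("c", "d")` appears; from then on it can never become maximal again, which is the single true-to-false switch expected of a simple feature.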

Two variations on the notion of matching for digraphs are the following.

Example 29. For a digraph $G = (V, E)$, let $\mathcal{F}(X) = \mathrm{true}$ if and only if $X$ is a set of arcs, any two of which have neither the same head nor the same tail. Such a set $X$ is said to be path-like.

Example 30. For a digraph $G = (V, E)$, let $\mathcal{F}(X) = \mathrm{true}$ if and only if $X$ is a set of arcs, such that for any two of them the head of one is not the tail of the other. Such a set $X$ is said to be path-less.
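Both conditions are pairwise checks on arcs and translate directly into code. In the sketch below an arc is assumed to be a `(tail, head)` pair and the function names are hypothetical.

```python
from itertools import combinations
from typing import Hashable, Iterable, Tuple

Arc = Tuple[Hashable, Hashable]  # (tail, head)


def is_path_like(arcs: Iterable[Arc]) -> bool:
    """No two distinct arcs share a tail, and no two share a head."""
    return all(t1 != t2 and h1 != h2
               for (t1, h1), (t2, h2) in combinations(set(arcs), 2))


def is_path_less(arcs: Iterable[Arc]) -> bool:
    """For any two distinct arcs, the head of one is never the tail of the other."""
    return all(h1 != t2 and h2 != t1
               for (t1, h1), (t2, h2) in combinations(set(arcs), 2))


consecutive = {("a", "b"), ("b", "c")}  # a directed path of length two
converging = {("a", "b"), ("c", "b")}   # two arcs entering the same vertex
```

The directed path `consecutive` is path-like but not path-less, while `converging` is path-less but not path-like. Both predicates depend only on the arcs in the set, not on the rest of the digraph.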

Proposition 31. The path-like and path-less features (Examples 29 and 30) are monotone, hence simple.

Proof. Straightforward. □

Proposition 32. The maximal versions (Def. 10) of the path-like and path-less features are simple.

Proof. Same as for Prop. 28. □

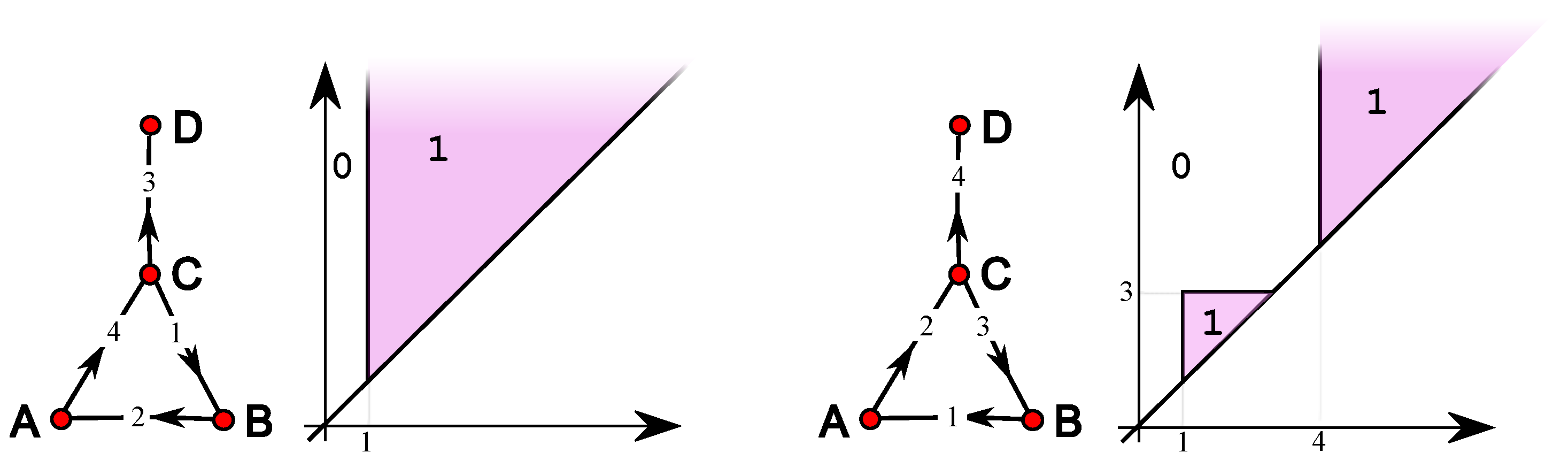

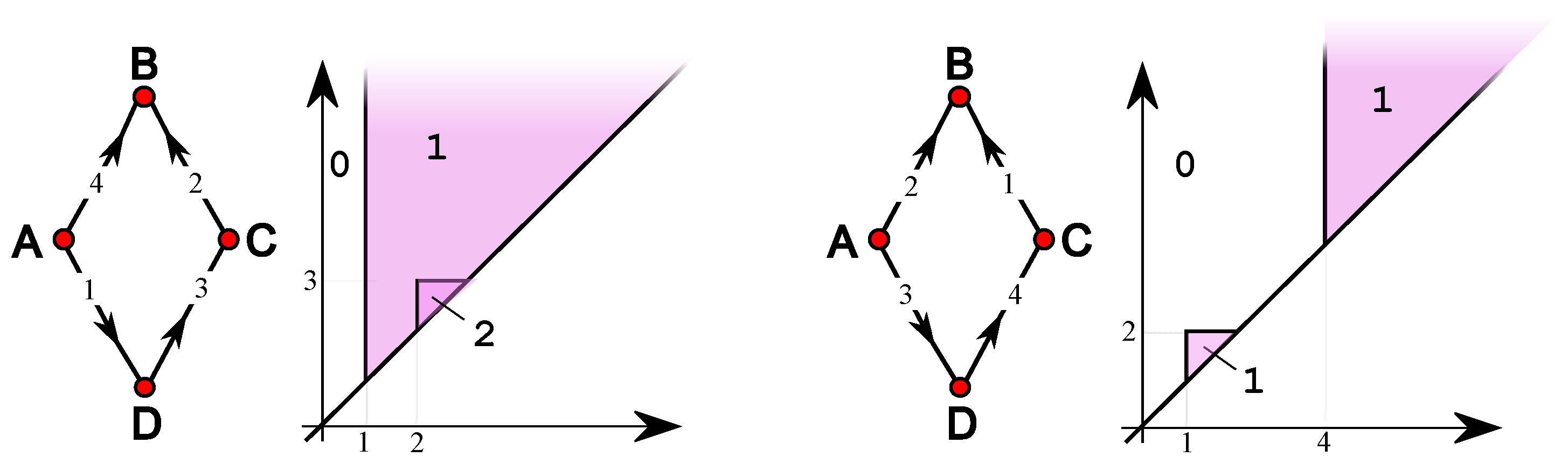

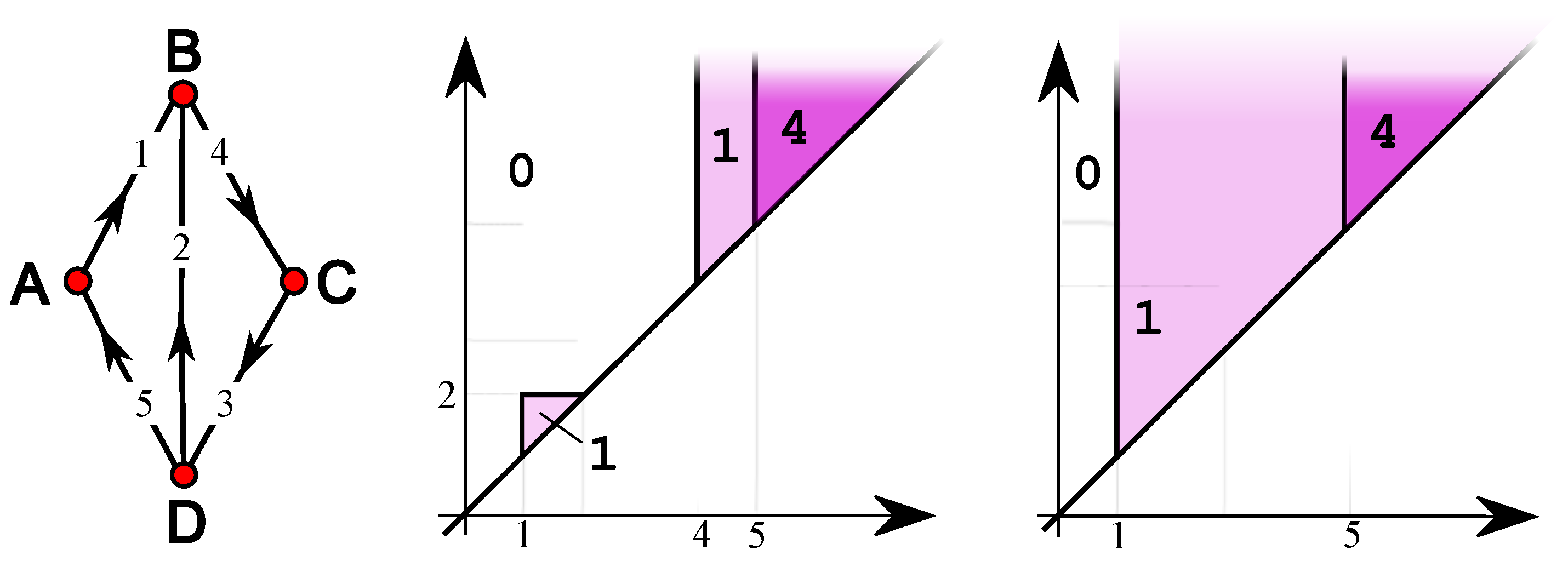

Figure 1 shows the functions (middle) and (right) for the same toy example (left) used in [9].

7. Conclusions

The availability of massive data represented as graphs expedited the development of computational methods specialized in handling data endowed with the complex structures arising from pairwise interactions.

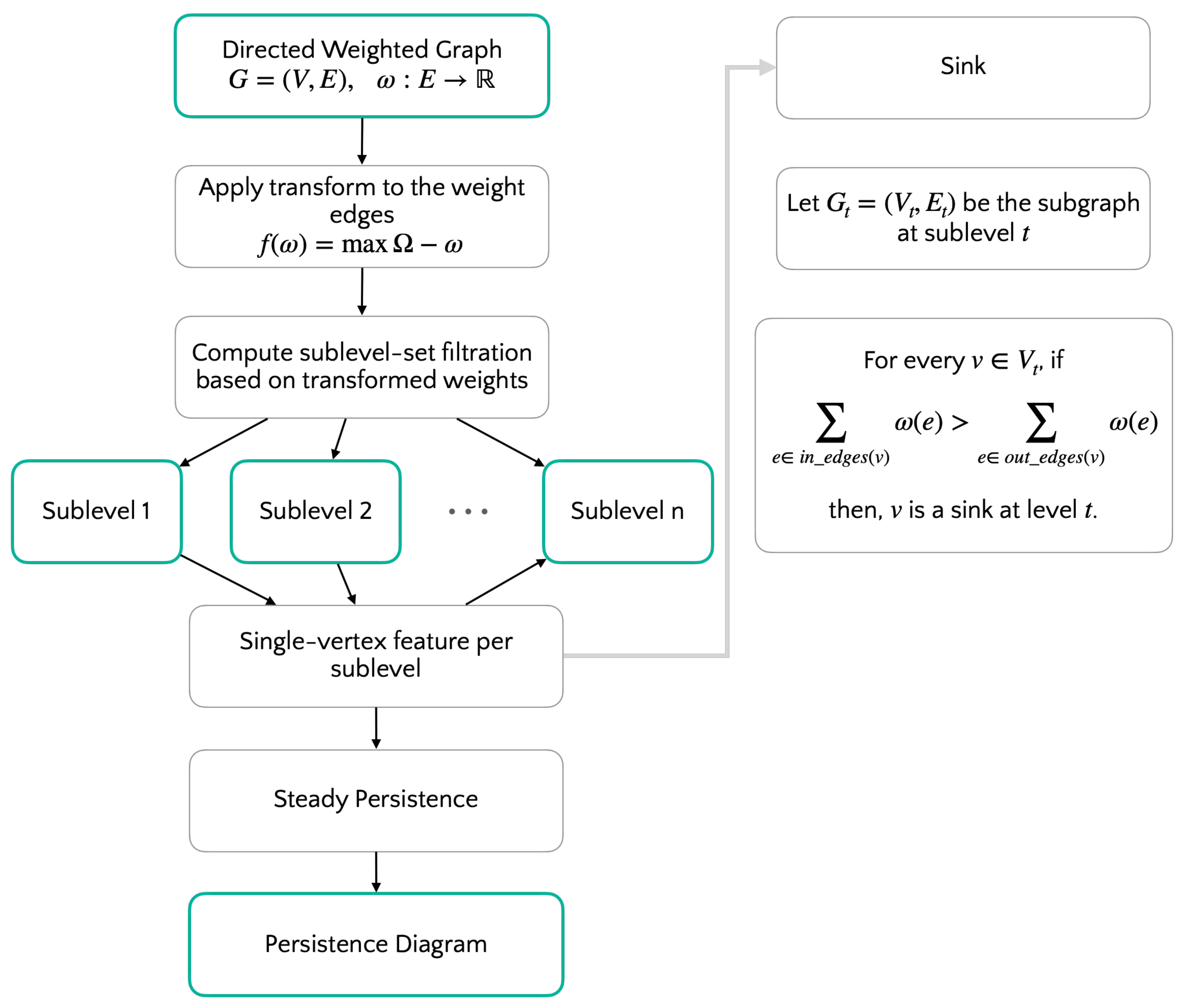

We provide a computational recipe to generate compact representations of large networks according to graph-theoretical features or custom properties defined on the networks’ vertices. To do this, we leverage a generalization of topological persistence adapted to work on weighted (di)graphs. In this framework, we widen the notion of monotone feature on (di)graphs, i.e., a feature respecting inclusion and thus well suited to generate filtrations and persistence diagrams. Simple features are features whose value can change from true to false only once in a filtration of a (di)graph. We prove that in a (di)graph any monotone feature is simple and that simple features give rise to stable indexing-aware persistence functions. We then provide examples of simple features. Turning our attention to applications, single-vertex features allow the user to compute the persistence of a weighted (di)graph for any feature focused on singletons. There, we provide several examples, characterizing single-vertex features as point-wise, local, or global descriptors of a network.

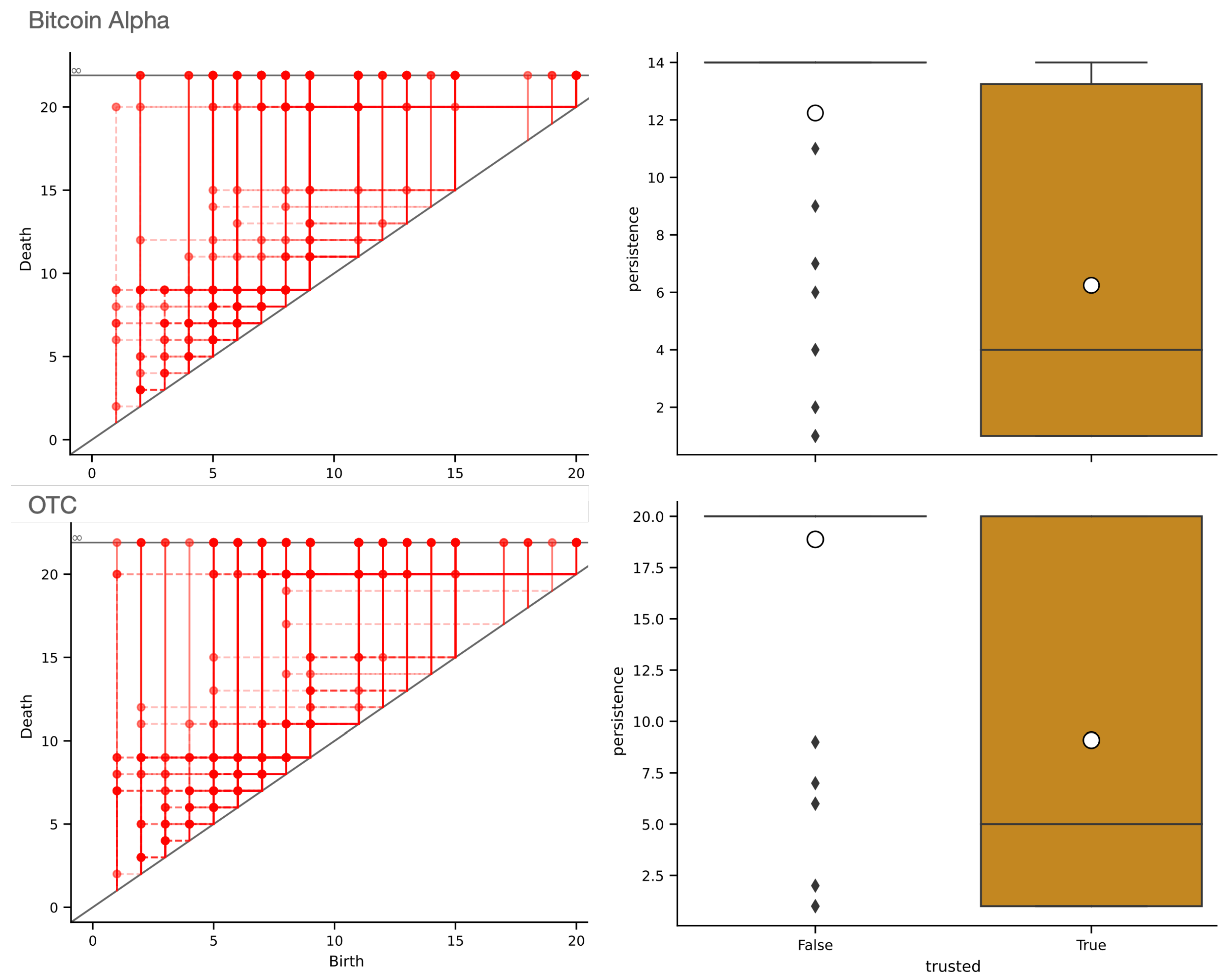

Computational experiments, supporting our belief that single-vertex features can be easily integrated in applications, show how the sink feature characterizes the users of trust networks. When considering the persistence of sink vertices, fraudulent users are, in the majority of cases, represented by half-lines with infinite persistence, while trusted users are associated with finite persistence. To replicate our results, we provide a Python implementation of the algorithm for the computation of single-vertex feature persistence, integrated in the package originally supporting [8, 9].

We believe that a straightforward implementation for computing local and global descriptors of networks from easily engineered features can complement current approaches based on artificial neural architectures and learned features, and help gain control over them.