Submitted:

08 July 2023

Posted:

10 July 2023


Abstract

Keywords:

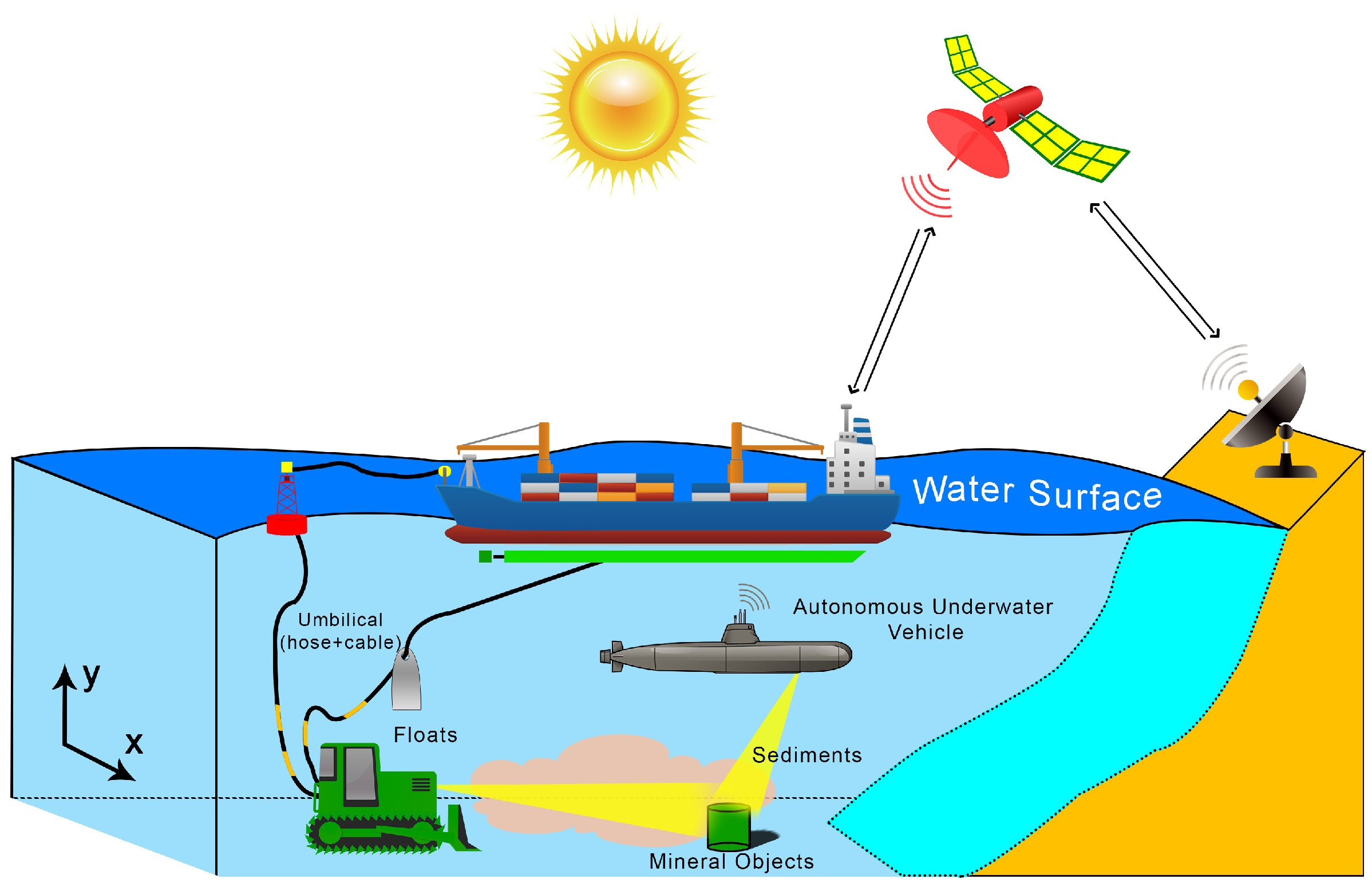

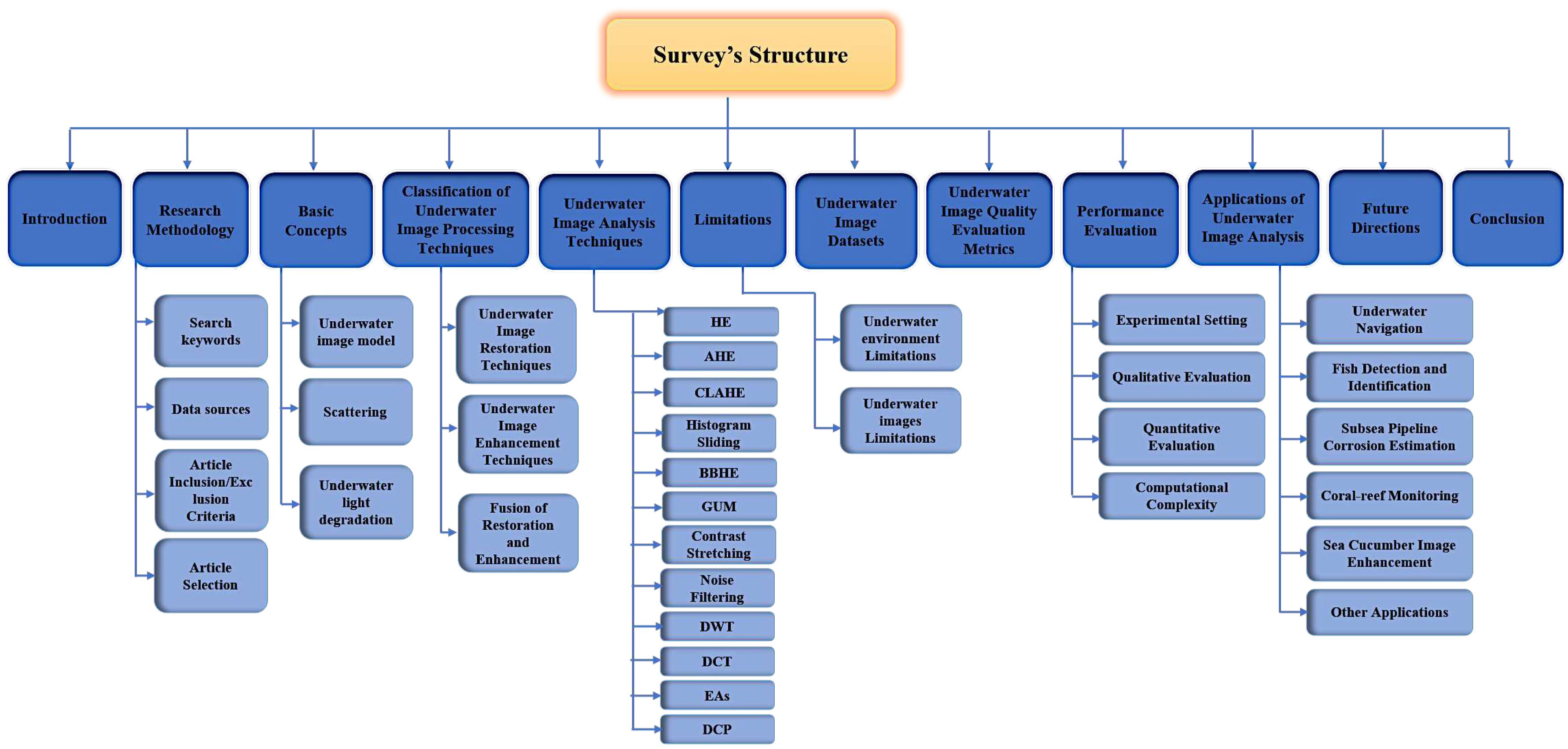

1. Introduction

- The basic concepts related to the underwater environment, including image formation and light degradation models, are explained.

- Recent underwater image enhancement and restoration methods are comprehensively discussed to identify their working methodologies, strengths, and limitations.

- The datasets applied for improving underwater image analysis and the existing evaluation metrics are discussed and compared.

- Different enhancement and restoration techniques are experimentally evaluated using images from underwater image datasets.

- The main limitations that researchers face in underwater image analysis are summarized. These limitations are classified into two categories: those related to the underwater environment and those related to the underwater images.

- Several open issues for underwater image enhancement and restoration are presented to highlight potential future research directions.

| Abbreviation | Definition | Abbreviation | Definition |
|---|---|---|---|
| AD | Average Difference | AG | Average Gradient |
| AHE | Adaptive Histogram Equalization | AMBE | Absolute Mean Brightness Error |
| AUVs | Autonomous Underwater Vehicles | BBHE | Brightness Preserving Bi-Histogram Equalization |
| CCF | Colourfulness Contrast Fog density index | CEF | Colour Enhancement Factor |
| CRBICMRD | Color Restoration Based on the Integrated Color Model with Rayleigh Distribution | CLAHE | Contrast Limited Adaptive Histogram Equalization |
| CNN | Convolutional Neural Network | CNR | Contrast to Noise Ratio |
| DCP | Dark Channel Prior | DCT | Discrete Cosine Transform |
| DOP | Degree of Polarization | DL | Deep Learning |
| DSNMF | Deep Sparse Non-negative Matrix Factorization | DWT | Discrete Wavelet Transform |
| EAs | Evolutionary Algorithms | EME | Measure of Enhancement |
| EMEE | Measure of Enhancement by Entropy | EUVP | Enhancement of Underwater Visual Perception |
| FR | Full Reference | GANs | Generative Adversarial Networks |
| GUM | Generalized Unsharp Masking | HE | Histogram Equalization |
| HSI | Hue-Saturation-Intensity | HR | High Resolution |
| HSV | Hue-Saturation-Value | HVS | Human Visual System |
| IEM | Image Enhancement Metric | ICM | Integrated Color Model |
| IFM | Image Formation Model | JTF | Joint Trigonometric Filtering |
| LFR | Light Field Rendering | MARI | Marine Autonomous Robotics for Interventions |
| MAI | Maximum Attenuation Identification | MCM | Multi-Color Model |
| MD | Maximum Difference | MILP | Minimum Information Loss Principle |
| MIP | Maximum Intensity Prior | MLP | Multilayer Perceptron |
| MSRCR | Multiscale Retinex with Color Restoration | MSE | Mean Square Error |
| MTF | Modulation Transfer Function | NAE | Normalized Absolute Error |
| NCC | Normalized Cross-Correlation | NIQA | Natural Image Quality Assessment |
| NR | No Reference | NR-IQA | No-Reference Image Quality Assessment |
| PCQI | Patch-based Contrast Quality Index | PDI | Polarization Differential Imaging |
| PSF | Point Spread Function | PSNR | Peak Signal-to-Noise Ratio |
| PSO | Particle Swarm Optimization | RAHIM | Recursive Adaptive Histogram Modification |
| RCP | Red Channel Prior | RGB | Red-Green-Blue |
| RGHS | Relative Global Histogram Stretching | RIP | Range Intensity Profile |
| RMSE | Root Mean Square Error | RNN | Recurrent Neural Networks |
| ROVs | Remotely Operated Vehicles | RR | Reduced Reference |
| RUIE | Real-World Underwater Image Enhancement | SAUV | Sampling System-AUV |
| SCM | Single Color Model | SNR | Signal-to-Noise Ratio |
| SR | Super-Resolution | SSIM | Structural Similarity Index Measure |
| SSEQI | Spatial Spectral Entropy based Quality index | SVM | Support Vector Machine |
| TM | Transmission Map | UCIQE | Underwater Colour Image Quality Evaluation metric |
| UDCP | Underwater Dark Channel Prior | UHTS | Underwater Task-oriented Test Suite |
| UIEB | Underwater Image Enhancement Benchmark | UIE | Underwater Image Enhancement |
| UIQS | Underwater Image Quality Set | UIQM | Underwater Image Quality Measure |
| UISM | Underwater Image Sharpness Measurement | UICM | Underwater Image Color Measurement |
| ULAP | Underwater Light Attenuation Prior | UIConM | Underwater Image Contrast Measurement |
| UOI | Underwater Optical Imaging | UUVs | Unmanned Underwater Vehicles |
| WCID | Wavelength Compensation and Image Defogging | | |

2. Research Methodology

| No. | Method type | Method frequency (%) |
|---|---|---|
| 1 | Hardware-based Methods | 10% |
| 2 | Underwater Image Restoration | 30% |
| 3 | Underwater Image Enhancement | 60% |

2.1. Search keywords

2.2. Data sources

| Academic database name | Link |
|---|---|
| ScienceDirect | http://www.sciencedirect.com/ |
| Web of Science | https://apps.webofknowledge.com/ |
| PubMed | https://pubmed.ncbi.nlm.nih.gov/ |
| IEEE Xplore | https://ieeexplore.ieee.org/ |
| SpringerLink | https://link.springer.com/ |
| PeerJ | https://peerj.com/ |
| Scopus | https://www.scopus.com/ |

2.3. Article Inclusion/Exclusion Criteria

| Inclusion Criteria | Exclusion Criteria |
|---|---|

2.4. Article Selection

3. Basic Concepts

3.1. Scattering

3.2. Underwater Image Model

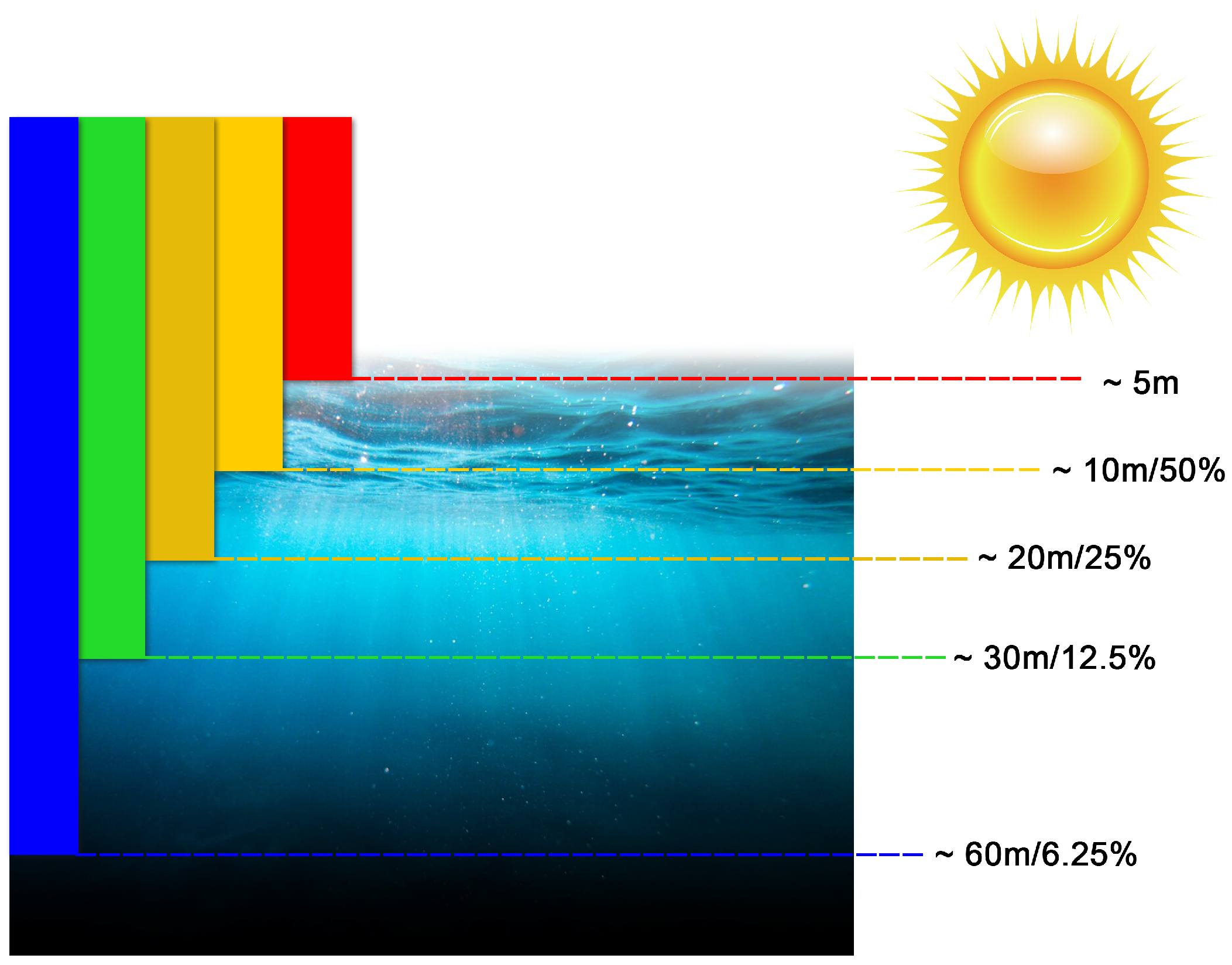

3.3. Underwater Light Degradation

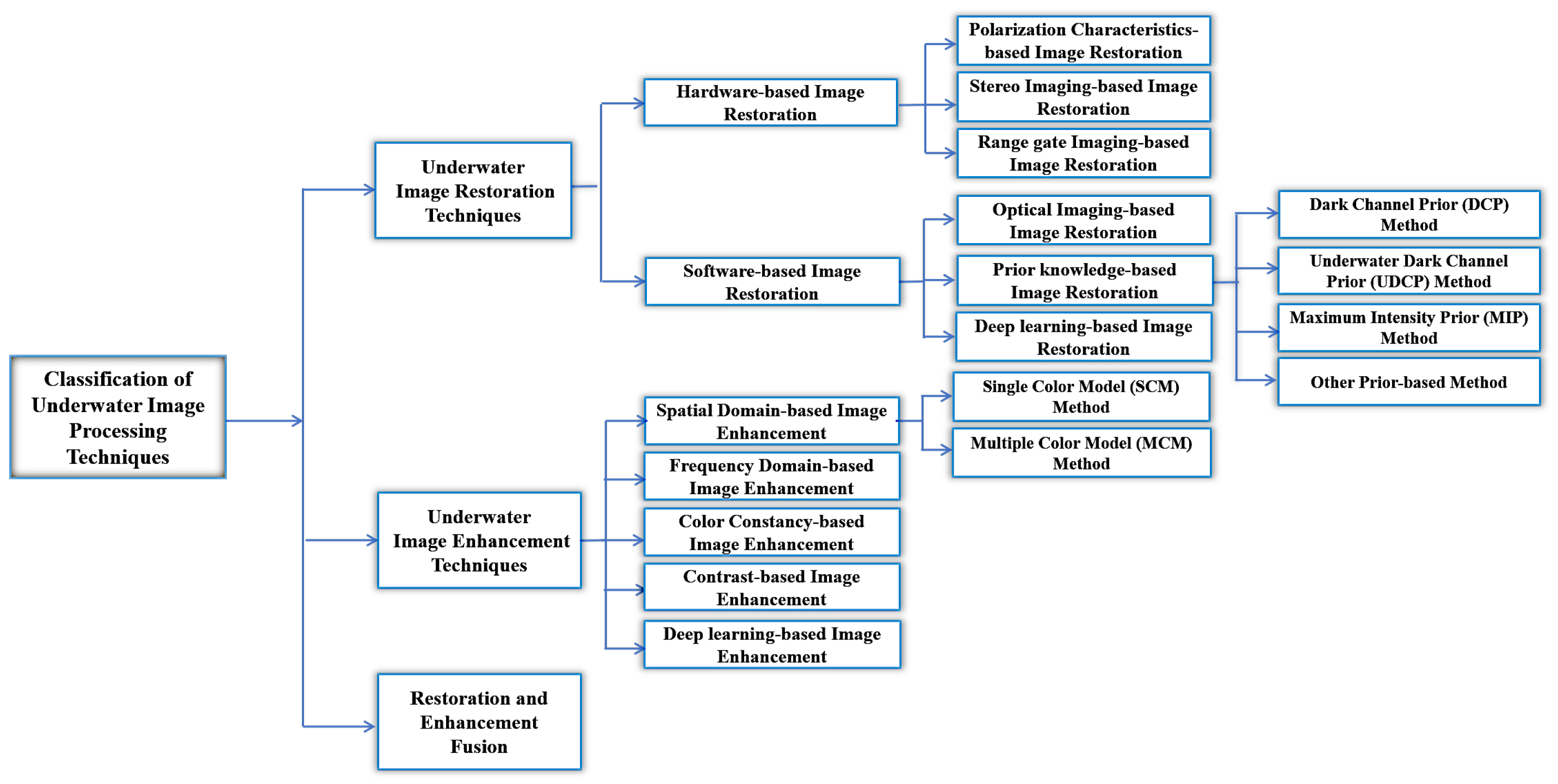

4. Classification of Underwater Image Processing Techniques

4.1. Underwater Image Restoration Techniques

| Reference | Method Based | Advantages | Disadvantages |
|---|---|---|---|
| Huang et al. (2016) | Polarization | Effective in the cases of both scattered light and object radiance | High computational complexity |
| Hu et al. (2017) | Polarization | Enhanced visibility and low computational complexity | Didn't effectively remove noise; not applied to color images |
| Han et al. (2017) | Polarization | Suppressed backscattering and extracted edges | No experiments were conducted under real-life conditions |
| Hu et al. (2018) | Polarization | Enhanced underwater images even in turbid media | High computational time |
| Hu et al. (2018) | Polarization | Intensity and DCP of backscattering were suppressed | Solving the and spatial distribution was very difficult |
| Ferreira et al. (2019) | Polarization | Effective method for underwater image recovery | Complicated cost function; time-consuming |
| Yang et al. (2019) | Polarization | Enhanced the contrast in underwater images | Noise wasn't removed |
| Wang et al. (2022) | Polarization | Qualitatively and quantitatively improved the underwater images and removed noise | High time complexity |
| Jin et al. (2020) | Polarization | Higher signal-to-noise ratio and higher contrast | Noise wasn't removed |
| Fu et al. (2020) | Polarization | Enhanced visibility in underwater images | High computational complexity |
| Bruno et al. (2010) | Stereo Imaging | Good quality underwater images | High time complexity |
| Roser et al. (2014) | Stereo Imaging | Improved stereo estimation | Didn't work well in shallow water due to varying light conditions |
| Lin et al. (2019) | Stereo Imaging | Enhanced the stereo imaging system | High computational time |
| Luczynski et al. (2019) | Stereo Imaging | Effective method | Noise wasn't removed |
| Tan et al. (2006) | Range Gated | Enhanced underwater image contrast | Noise wasn't removed |
| Li et al. (2009) | Range Gated | Reduced speckle noise and preserved feature details | High computational complexity |
| Liu et al. (2018) | Range Gated | Enhanced image visibility | Didn't effectively remove noise |
| Wang et al. (2020) | Range Gated | Enhanced image contrast and visibility | High computational complexity |
| Wang et al. (2021) | Range Gated | Enhanced image contrast and worked well even when the estimated depth was small | Complicated cost function |
| Trucco and Olmos-Antillon (2006) | Optical | Optimized the computed parameter values automatically | Increased time and computational complexity |
| Hou et al. (2007) | Optical | Effective method based on the point spread function | Requires estimating the illumination scattering parameters |
| Boffety et al. (2012) | Optical | Used an effective smoothing method | Low contrast in images |
| Wen et al. (2013) | Optical | Enhanced the perception of underwater images | Poor flexibility and adaptability |
| Ahn et al. (2018) | Optical | Effective and accurate method | Increased time complexity |
| Chao and Wang (2010) | DCP | Recovered underwater images and removed scattering | Restored images still suffered from color distortion |
| Yang et al. (2011) | DCP | Fast method for underwater image restoration | Only suitable for underwater images with rich colors |
| Chiang and Chen (2011) | DCP | Restored the color balance of underwater images and removed haze | High computational complexity |
| Serikawa and Lu (2014) | DCP | Improved contrast and visibility | High computational time |
| Peng et al. (2015) | DCP | Exploited the blurriness of underwater images | Noise wasn't removed |
| Lu et al. (2015) | UDCP | Effective color correction of underwater images | Decreased the contrast |
| Lu et al. (2017) | UDCP | Effective method for recovering underwater images | Increased noise |
| Galdran et al. (2015) | UDCP | Handled artificial light and enhanced contrast | Colors of some restored images were unreal and incorrect |
| Carlevaris-Bianco et al. (2010) | MIP | Reduced haze effects and provided color correction | Didn't solve the problems of attenuation and scattering |
| Zhao et al. (2015) | MIP | Removed haze effect and corrected colours | Illumination wasn't considered |
| Li et al. (2016) | MIP | Increased brightness and contrast of underwater images | Noise wasn't removed |
| Peng and Cosman (2017) | Other Prior | Worked well for various underwater images | Noise wasn't removed |
| Peng et al. (2018) | Other Prior | Restored degraded images and increased contrast | High computational complexity |
| Li et al. (2016) | Other Prior | Increased brightness and contrast | Couldn't remove noise effects |
| Wang et al. (2017) | Other Prior | Enhanced contrast and corrected colours | High time complexity |
| Song et al. (2018) | Other Prior | Improved underwater image quality with the lowest running time | Noise wasn't removed |
| Ding et al. (2017) | DL | Increased contrast | Highest running time |
| Cao et al. (2018) | DL | Restored images effectively | Blurring and low visibility of underwater images |
| Barbosa et al. (2018) | DL | Increased underwater image quality | Noise wasn't removed |
| Hou et al. (2018) | DL | Increased contrast and restored natural appearance | Residual noise and some blurring |

4.1.1. Hardware-based Restoration

4.1.1.1. Polarization characteristic-based

4.1.1.2. Stereo imaging

4.1.1.3. Range gated imaging

4.1.2. Software-based Restoration

4.1.2.1. Optical

4.1.2.2. Prior knowledge-based Image Restoration

- Dark Channel Prior (DCP) Method

The DCP method, presented in [66], was originally used for dehazing images. Haze is a natural phenomenon that reduces visibility, obscures scenes, and changes colors. It is a problem for photographers because it degrades image quality, and it threatens the reliability of many applications, such as object detection, outdoor surveillance, and aerial imagery. Therefore, removing haze from images is crucial in computer graphics and vision. DCP-based dehazing has also been used to enhance underwater images. The method relies on the observation that, in clear (haze-free) images, most local patches contain some pixels with very low intensity in at least one color channel.

For restoring clear underwater images, Chao et al. [31] proposed an effective DCP-based method that reduces the effects of water scattering and attenuation. The DCP was used to estimate the depth of the turbid water by assuming that most patches in water-free images contain a few pixels with very low intensity in at least one color channel. Yang et al. [73] developed a low-complexity, efficient DCP-based method for underwater image restoration. They estimated the depth maps with a median filter instead of soft matting, and they applied color correction to improve the contrast of the underwater image. This method restored images effectively while reducing the execution time.

Chiang et al. [33] presented a method for enhancing underwater images by applying Wavelength Compensation and Image Defogging (WCID). They used a dehazing algorithm to compensate for the attenuation discrepancy along the propagation path and to remove the possible influence of an artificial light source. This method performed well in enhancing underwater images both objectively and subjectively. Serikawa et al. [74] proposed a method that compensates for the attenuation discrepancy along the propagation path and uses a fast dehazing algorithm named joint trigonometric filtering (JTF). JTF refines the transmission map (TM) estimated by the DCP, which yields several improvements, such as reduced scattering, better edge information, and higher image contrast. The algorithm is characterized by noise reduction, better exposure of dark regions, and improved contrast.

Peng et al. [75] developed a method for estimating depth maps for underwater image restoration. It relies on the observation that objects farther from the camera appear more blurred. They combined image blurriness with the image formation model (IFM) to estimate the distance between scene points and the camera, and they reported that it was more effective than other IFM-based enhancement methods. Because the DCP is affected by the selective attenuation of light in the underwater environment, various DCP-based underwater enhancement methods have been developed and used.
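The basic DCP pipeline described above (dark channel, background light from the brightest 0.1% of dark-channel pixels, transmission estimate, and inversion of the haze model) can be sketched in Python with NumPy. This is a minimal sketch, assuming an RGB float image in [0, 1] and the haze model I = J·t + A·(1 − t); the function names, the patch size, ω = 0.95, and the lower bound t0 = 0.1 are illustrative defaults rather than values taken from the reviewed works, and the transmission refinement step (soft matting, or the median filter of Yang et al. [73]) is omitted.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def min_filter(channel: np.ndarray, patch: int) -> np.ndarray:
    """Minimum over a patch x patch neighbourhood (odd patch, edge padding)."""
    pad = patch // 2
    padded = np.pad(channel, pad, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))


def dark_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """Dark channel: local minimum of the per-pixel minimum over R, G, B."""
    return min_filter(img.min(axis=2), patch)


def estimate_background_light(img: np.ndarray, dark: np.ndarray,
                              top_fraction: float = 0.001) -> np.ndarray:
    """Among the brightest 0.1% dark-channel pixels, pick the brightest input pixel."""
    n = max(1, int(dark.size * top_fraction))
    idx = np.argsort(dark.ravel())[-n:]
    candidates = img.reshape(-1, 3)[idx]
    return candidates[candidates.sum(axis=1).argmax()]


def dcp_restore(img: np.ndarray, patch: int = 15, omega: float = 0.95,
                t0: float = 0.1):
    """Invert I = J*t + A*(1 - t) using a DCP-based transmission estimate."""
    dark = dark_channel(img, patch)
    A = estimate_background_light(img, dark)
    # t = 1 - omega * dark_channel(I / A); omega < 1 keeps a little haze as a depth cue
    t = 1.0 - omega * dark_channel(img / np.maximum(A, 1e-6), patch)
    t = np.clip(t, t0, 1.0)  # avoid amplifying noise where t is tiny
    J = (img - A) / t[..., None] + A
    return np.clip(J, 0.0, 1.0), t, A
```

Calling `dcp_restore(img, patch=7)` on an H×W×3 array returns the restored image, the transmission map, and the background light. A refinement of `t` (for example, a median filter as in [73]) would be applied just before the inversion step.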

- Underwater Dark Channel Prior (UDCP) Method

Because red light attenuates more rapidly than blue and green light as it travels through water, the red channel of an underwater image is close to zero almost everywhere and therefore dominates the dark channel. To avoid this red-channel influence, the UDCP was introduced in [68]; it evaluates only the green and blue (GB) channels to compute the underwater dark channel. The authors of [76] proposed a technique that compensates for the attenuation discrepancy along the propagation path in underwater images. They developed an ambient light estimator based on color lines, together with adaptive filtering, for enhancing underwater images captured in shallow oceans, and they also presented a color correction algorithm for color restoration.

Lu et al. [12] proposed a technique for super-resolution (SR) and descattering of underwater images. First, high-resolution (HR) versions of the scattered and descattered images are obtained through a self-similarity-based SR algorithm. Then, a convex fusion rule is used to recover the final HR image. This algorithm is highly effective in restoring underwater images. Galdran et al. [71] developed an automatic method for restoring underwater images based on the red channel prior (RCP). The RCP computes the dark channel after reversing (inverting) the red channel, while the green and blue channels are used as they are. Their experimental results indicate that this method effectively enhances degraded underwater images.
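The difference between the classic dark channel, the UDCP, and the RCP can be shown on a synthetic bluish-green patch whose red channel has been fully absorbed. This is a minimal sketch, assuming an RGB float image in [0, 1]; the function names are hypothetical, and the RCP is written as the dark channel of (1 − R, G, B), which is how we understand Galdran et al.'s formulation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def min_filter(channel, patch):
    """Minimum over a patch x patch neighbourhood (odd patch, edge padding)."""
    pad = patch // 2
    padded = np.pad(channel, pad, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))


def standard_dark_channel(img, patch=15):
    """Classic DCP over all three channels."""
    return min_filter(img.min(axis=2), patch)


def udcp_dark_channel(img, patch=15):
    """UDCP: ignore the red channel and take the dark channel of G and B only."""
    return min_filter(img[..., 1:].min(axis=2), patch)


def rcp_red_channel(img, patch=15):
    """RCP: dark channel of (1 - R, G, B), i.e. with the red channel reversed."""
    reversed_red = 1.0 - img[..., 0]
    return min_filter(np.minimum(reversed_red, img[..., 1:].min(axis=2)), patch)


# A bluish-green "underwater" patch whose red channel has been absorbed.
img = np.zeros((8, 8, 3))
img[..., 1] = 0.6  # green
img[..., 2] = 0.8  # blue
print(standard_dark_channel(img, 3).max())  # 0.0: the red channel forces it to zero
print(udcp_dark_channel(img, 3).max())      # 0.6: still carries information
print(rcp_red_channel(img, 3).max())        # 0.6: min(1 - 0, 0.6, 0.8)
```

The classic dark channel collapses to zero everywhere, so it cannot distinguish near from far regions, whereas both underwater variants keep a usable signal.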

- Maximum Intensity Prior (MIP) Method

Suspended particles that cause turbidity or fogging degrade the quality of underwater images, and the difference in attenuation between the red (R) channel and the green-blue (GB) channels is significant. Carlevaris-Bianco et al. [70] developed an effective algorithm that removes the effect of light scattering (i.e., dehazing) in underwater images. They presented a prior for estimating scene depth, termed the maximum intensity prior (MIP). The MIP is the difference between the maximum R-channel intensity and the maximum of the G and B channels within a local patch. The largest difference between the color channels occurs at the scene points closest to the camera, in the foreground.

Zhao et al. [77] developed a method that derives the optical properties of the water. This method estimates the background light (BL) based on the DCP and MIP: it first takes the brightest 0.1% of the dark-channel pixels and then chooses the pixel with the maximum difference in the B-G or G-R channels. Li et al. [78] developed a method for restoring underwater images that selects the background light from its maximally different pixels. This method dehazes the blue-green channels and corrects the red channel. First, following the strategy of Li et al. [79,80], a flat background region was selected through quad-tree subdivision. Then, the brightest 0.1% of the candidate region's pixels in the dark channel were taken. Finally, the pixel with the greatest difference between the R and B channels was selected as the global background light.
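The MIP transmission estimate and the background-light selection rule described above can be sketched as follows. This is a minimal sketch, assuming an RGB float image in [0, 1]; the shift t = D + (1 − max D) follows our reading of Carlevaris-Bianco et al. [70], the quad-tree step of [78] is omitted, using the absolute |R − B| difference is an assumption, and all function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _windows(channel, patch):
    """All patch x patch neighbourhoods of a 2-D array (odd patch, edge padding)."""
    pad = patch // 2
    return sliding_window_view(np.pad(channel, pad, mode="edge"), (patch, patch))


def mip_transmission(img, patch=15):
    """MIP: D = max_patch(R) - max_patch(max(G, B)); t = D + (1 - max D)."""
    red_max = _windows(img[..., 0], patch).max(axis=(-2, -1))
    gb_max = _windows(img[..., 1:].max(axis=2), patch).max(axis=(-2, -1))
    d = red_max - gb_max
    # Shift so that the pixel with the largest difference (closest point) gets t = 1.
    return d + (1.0 - d.max())


def select_background_light(img, patch=15, top_fraction=0.001):
    """Brightest 0.1% of dark-channel pixels, then the largest |R - B| difference."""
    dark = _windows(img.min(axis=2), patch).min(axis=(-2, -1))
    n = max(1, int(dark.size * top_fraction))
    idx = np.argsort(dark.ravel())[-n:]
    candidates = img.reshape(-1, 3)[idx]
    return candidates[np.abs(candidates[:, 0] - candidates[:, 2]).argmax()]
```

On an image whose left half keeps more red (a closer object) than its right half, `mip_transmission` assigns t = 1 to the left half and a lower transmission to the right half, which matches the intuition that red survives only over short paths.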

- Other Prior-based Method

In addition to the priors listed above, some priors are less commonly applied but are helpful in underwater image restoration. For example, Peng et al. [81] developed a technique for estimating underwater scene depth based on light absorption and image blurriness. The estimated depth was used in the IFM for image restoration, and the authors reported more accurate and effective results than competing methods. Peng et al. [82] developed a method for enhancing and restoring underwater images that counteracts the light absorption, scattering, low contrast, and color distortion caused by light traveling through a turbid medium. First, the ambient light was estimated from the depth-dependent color change. Then, the scene transmission was estimated from the difference between the observed intensity and the ambient light. In addition, adaptive color correction was applied.

Li et al. [79] developed a method for enhancing and restoring underwater images based on the minimum information loss principle (MILP). A dehazing algorithm was applied to recover the color, natural appearance, and visibility of underwater images, and an effective contrast enhancement algorithm was applied to improve their contrast and brightness. The method improved visual quality and accuracy and preserved other valuable information. Wang et al. [83] proposed the maximum attenuation identification (MAI) technique for deriving the depth map and background light from degraded underwater images. Region-based background estimation was applied simultaneously to ensure optimal performance. Experiments were conducted on three image types: a calibration plate, natural underwater scenes, and a colormap board.

Song et al. [72] presented an accurate, effective, and fast scene depth estimation model based on the underwater light attenuation prior (ULAP). It assumes that the difference between the R intensity and the larger of the G and B intensities at a single pixel is strongly related to the change in scene depth. This depth model was then used to estimate the BL and the TMs of the R, G, and B channels.
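The ULAP idea, a linear relation between scene depth and the gap between max(G, B) and R, can be sketched as below. This is a minimal sketch, assuming an RGB float image in [0, 1]; the coefficients in `ULAP_MU` are the values we recall being reported for the ULAP model and should be verified against [72], and the per-channel Beer-Lambert step with attenuation coefficients `beta` is a generic illustration, not the exact transmission scheme of [72]. All names are hypothetical.

```python
import numpy as np

# Linear ULAP coefficients (mu0, mu1, mu2); assumed values, verify against [72].
ULAP_MU = (0.53214829, 0.51309827, -0.91066194)


def ulap_relative_depth(img, mu=ULAP_MU):
    """d = mu0 + mu1 * max(G, B) + mu2 * R, rescaled to [0, 1]."""
    mgb = img[..., 1:].max(axis=2)
    red = img[..., 0]
    d = mu[0] + mu[1] * mgb + mu[2] * red
    return (d - d.min()) / max(d.max() - d.min(), 1e-12)


def transmission_from_depth(depth, beta):
    """Generic Beer-Lambert sketch: t_c = exp(-beta_c * depth) for each channel c."""
    beta = np.asarray(beta, dtype=float)
    return np.exp(-depth[..., None] * beta)


# A red-rich (near) pixel and a red-poor, blue-green (far) pixel.
img = np.array([[[0.8, 0.3, 0.3], [0.1, 0.7, 0.7]]])
depth = ulap_relative_depth(img)
t = transmission_from_depth(depth, beta=[1.0, 0.3, 0.2])  # hypothetical coefficients
```

Because μ2 is negative and μ1 positive, a pixel that has lost its red component relative to green and blue is assigned a larger depth, and the red channel then receives the lowest transmission.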

4.1.2.3. Deep Learning

4.2. Underwater Image Enhancement Techniques (IFM-free)

| Reference | Method Based | Advantages | Disadvantages |
|---|---|---|---|
| Ancuti et al. (2012) | Spatial Domain (SCM) | Increased contrast of underwater images | Didn't work well with poor artificial light |
| Ancuti et al. (2016) | Spatial Domain (SCM) | High accuracy in underwater image enhancement | Noise wasn't removed |
| Liu et al. (2017) | Spatial Domain (SCM) | Enhanced underwater image contrast and visibility | Low accuracy |
| Torres-Méndez and Dudek (2008) | Spatial Domain (MCM) | Used learned constraints for underwater image enhancement | Some noise and blurring in underwater images |
| Iqbal et al. (2007) | Spatial Domain (MCM) | Addressed degradation caused by light scattering and absorption | Low contrast in underwater images |
| Ghani and Isa (2017) | Spatial Domain (MCM) | Enhanced underwater images qualitatively and quantitatively | High time complexity |
| Hitam et al. (2013) | Spatial Domain (MCM) | Highest PSNR values and lowest MSE | Blurring in underwater images |
| Huang et al. (2018) | Spatial Domain (MCM) | Enhanced the visibility of underwater images | Not suitable for all types of underwater images |
| Petit et al. (2009) | Frequency Domain | Removed light attenuation | Low contrast and visibility |
| Cheng et al. (2015) | Frequency Domain | Better contrast and higher visibility | Highest running time |
| Sun et al. (2011) | Frequency Domain | Removed noise from underwater images | Poor quality in low-light conditions |
| Ghani et al. (2018) | Frequency Domain | Highest contrast and visibility | Highest running time |
| Priyadharsini et al. (2018) | Frequency Domain | Better PSNR and SSIM results | Some noise wasn't removed |
| Joshi et al. (2008) | Color Constancy | Balance between machine and human vision | Low color and contrast distortion |
| Fu et al. (2014) | Color Constancy | Enhanced contrast, color, edges, and details | High time complexity |
| Zhang et al. (2017) | Color Constancy | Enhanced edges and reduced noise | Couldn't enhance underwater image contrast |
| Wang et al. (2018) | Color Constancy | Increased image quality and balanced color | Noise and high time complexity |
| Zhang et al. (2019) | Color Constancy | Good denoising and edge preservation | Low contrast |
| Tang et al. (2013) | Color Constancy | Applied multi-scale Retinex to the intensity channel | Inefficient filtering techniques |
| Zhang et al. (2021) | Color Constancy | Increased contrast | Noise wasn't removed |
| Dixit et al. (2016) | Contrast | Removed noise and preserved details | Low efficiency and highest running time |
| Wang et al. (2016) | Contrast | Increased contrast and precision | Didn't remove noise |
| Bindhu and Maheswari (2017) | Contrast | Reduced noise | High computational complexity |
| Guraksin et al. (2019) | Contrast | Visual information is more important | Didn't remove haze |
| Sankpal and Deshpande (2019) | Contrast | Increased image contrast | Entropy remained lower than in other studies |
| Azmi et al. (2019) | Contrast | Improved image details and reduced color cast | Low efficiency and highest running time |
| Wang et al. (2017) | Deep Learning | Enhanced contrast and corrected color | Low efficiency and highest running time |
| Fabbri et al. (2018) | Deep Learning | Enhanced contrast | Noise and lighting issues not solved |
| Anwar et al. (2018) | Deep Learning | Enhanced contrast | Didn't remove haze |
| Li et al. (2018) | Deep Learning | Corrected color cast | Low contrast |
| Li et al. (2019) | Deep Learning | Enhanced contrast | Effects of attenuation and backscatter weren't solved |
| Pritish et al. (2019) | Deep Learning | Enhanced contrast and visibility of underwater images | Noise wasn't removed |
| Li et al. (2020) | Deep Learning | Enhanced brightness and visibility | Low contrast; noise wasn't removed |
| Hu et al. (2021) | Deep Learning | Enhanced contrast of underwater images | Image clarity was far lower than that of the ground-truth image |
| Tang et al. (2023) | Deep Learning | Enhanced contrast | Weaker network |

4.2.1. Spatial Domain-based Image Enhancement

- Single Color Model (SCM) Method

Ancuti et al. [93] presented a fusion-based method for underwater image enhancement. First, two input images were derived from the original image: the first was corrected by white balancing, and the contrast of the second was improved using adaptive histogram equalization. Then, four fusion weights were defined from the salient features, the contrast, and the exposure of the two derived images. Finally, the two derived images were combined according to these weights to obtain the enhanced image. Ancuti et al. [34] proposed a method for color balancing and enhancing underwater images. This method uses a single image and does not need specialized hardware or knowledge about the scene structure or underwater conditions. It relies on fusing two images derived from a white-balanced, color-compensated version of the original degraded, hazy image. This method improved the contrast, edge sharpness, and visibility of underwater images.

Liu et al. [94] developed an effective and accurate underwater image enhancement method based on Deep Sparse Non-negative Matrix Factorization (DSNMF) for estimating the illumination of underwater images. First, the images were divided into small blocks, and the [R, G, B] matrix of each block was factorized into several layers using DSNMF with a sparsity constraint. The last layer of this factorization was taken as the illumination, and the image was enhanced accordingly.
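The fusion scheme of Ancuti et al. [93] (two derived inputs, per-pixel weights, weighted combination) can be sketched in a much simplified form. This is a minimal sketch, assuming an RGB float image in [0, 1]; it uses gray-world white balancing, a global percentile stretch as a stand-in for adaptive histogram equalization (applied here to the white-balanced image, an assumption), three simplified weight maps (Laplacian contrast, a crude colour-distance saliency, and exposedness), and naive single-scale blending. The original method uses multiscale (pyramid) fusion, which avoids the halos a single-scale blend can produce. All function names are hypothetical.

```python
import numpy as np


def gray_world_white_balance(img):
    """Scale each channel so that its mean equals the mean over all channels."""
    means = img.reshape(-1, 3).mean(axis=0)
    return np.clip(img * (means.mean() / np.maximum(means, 1e-6)), 0.0, 1.0)


def stretch_contrast(img, low=1.0, high=99.0):
    """Per-channel percentile stretch; a simple stand-in for (adaptive) HE."""
    lo = np.percentile(img, low, axis=(0, 1))
    hi = np.percentile(img, high, axis=(0, 1))
    return np.clip((img - lo) / np.maximum(hi - lo, 1e-6), 0.0, 1.0)


def luminance(img):
    return img @ np.array([0.299, 0.587, 0.114])


def laplacian_weight(lum):
    """Absolute response of a 4-neighbour Laplacian (contrast weight)."""
    p = np.pad(lum, 1, mode="edge")
    lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * lum
    return np.abs(lap)


def saliency_weight(img):
    """Crude saliency: distance of each pixel's colour from the mean colour."""
    return np.linalg.norm(img - img.reshape(-1, 3).mean(axis=0), axis=2)


def exposedness_weight(lum, sigma=0.25):
    """Favour pixels whose luminance is close to mid-grey (0.5)."""
    return np.exp(-((lum - 0.5) ** 2) / (2.0 * sigma ** 2))


def fuse(img):
    """Single-scale fusion of a white-balanced and a contrast-enhanced input."""
    balanced = gray_world_white_balance(img)
    inputs = [balanced, stretch_contrast(balanced)]
    weights = []
    for x in inputs:
        lum = luminance(x)
        weights.append(laplacian_weight(lum) + saliency_weight(x) + exposedness_weight(lum))
    w = np.stack(weights) + 1e-12
    w /= w.sum(axis=0, keepdims=True)  # normalise so the weights sum to 1 per pixel
    return w[0][..., None] * inputs[0] + w[1][..., None] * inputs[1]
```

Because the normalised weights form a convex combination at every pixel, the fused output stays within the range of the two inputs.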

- Multiple Color Model (MCM) Method

Torres-Méndez and Dudek [95] used a Markov random field (MRF) to model the relationship between underwater images before and after distortion, and applied maximum a posteriori (MAP) estimation to enhance the colors of underwater images. When computing the dissimilarity between image patches, the images were transformed to the CIE-Lab color space so that equal distances represent approximately equal perceived differences. The experimental results indicated the method's efficacy and feasibility. Iqbal et al. [96] developed an effective method for underwater image enhancement based on the integrated color model (ICM). This method addresses the image degradation caused by light scattering and absorption. First, it applies RGB contrast stretching to equalize the color contrast. Second, saturation and intensity stretching in the HSI color model are applied to recover true color and improve the brightness and saturation of the degraded underwater images.

Ghani et al. [97] developed a technique for underwater image enhancement based on recursive adaptive histogram modification (RAHIM), which enhances the background of underwater images to increase contrast. They modified the brightness and saturation of the underwater image in the HSV color model using the human visual system (HVS) and the Rayleigh distribution, and then converted the enhanced image back to the RGB color model. Hitam et al. [98] developed a technique based on contrast limited adaptive histogram equalization (CLAHE), called mixture CLAHE, to enhance the visibility of underwater images. CLAHE was applied separately in the RGB and HSV color models, producing two enhanced images, which were then merged using the Euclidean norm. This method enhanced the image contrast and reduced noise.

Huang et al. [99] presented a method for underwater image enhancement based on relative global histogram stretching (RGHS) in two color models, RGB and CIE-Lab. First, the images were preprocessed by gray-world-based white balancing and adaptive histogram stretching in the RGB color model, guided by the distribution of the RGB channels and the selective attenuation of light propagating underwater. Then, in the CIE-Lab color model, the brightness component L and the color components a and b were optimized by linear and curve-based adaptive stretching, respectively.
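The two-stage idea behind the ICM of Iqbal et al. [96] (stretch the RGB channels, then stretch saturation and brightness in a hue-based color model) can be sketched as follows. This is a minimal sketch, assuming an RGB float image in [0, 1]; it uses HSV (the model RAHIM [97] works in) instead of HSI for simplicity, the 1st/99th percentile limits are assumptions, and neither CLAHE nor the Rayleigh-based modification is included. Function names are hypothetical.

```python
import numpy as np


def stretch(x, low=1.0, high=99.0, axis=None):
    """Linear percentile stretch to [0, 1] (per channel when axis=(0, 1))."""
    lo = np.percentile(x, low, axis=axis)
    hi = np.percentile(x, high, axis=axis)
    return np.clip((x - lo) / np.maximum(hi - lo, 1e-6), 0.0, 1.0)


def stretch_saturation_value(img):
    """Stretch HSV saturation (S) and value (V) while keeping hue unchanged.

    For a fixed hue, every channel equals V * (1 - S * (1 - n_c)), where
    n_c in [0, 1] is the channel's position between the pixel's min and max.
    """
    v = img.max(axis=2)
    m = img.min(axis=2)
    chroma = v - m
    s = np.where(v > 0, chroma / np.maximum(v, 1e-12), 0.0)
    n = np.where(chroma[..., None] > 0,
                 (img - m[..., None]) / np.maximum(chroma, 1e-12)[..., None],
                 1.0)
    v2, s2 = stretch(v), stretch(s)
    return v2[..., None] * (1.0 - s2[..., None] * (1.0 - n))


def icm_like_enhance(img):
    """Step 1: per-channel RGB stretch; step 2: S and V stretch (HSV stand-in for HSI)."""
    return stretch_saturation_value(stretch(img, axis=(0, 1)))
```

Working with the normalised channel positions `n` avoids an explicit hue computation: hue depends only on `n`, so changing S and V cannot shift it, and grey pixels (zero chroma) stay grey.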

4.2.2. Frequency Domain-based Image Enhancement

4.2.3. Color Constancy-based Image Enhancement

4.2.4. Contrast-based Image Enhancement

4.2.5. Deep Learning-based Image Enhancement

4.3. Fusion of Restoration and Enhancement

5. Underwater Image Analysis Techniques

5.1. Histogram Equalization (HE)

5.2. Adaptive Histogram Equalization (AHE)

5.3. Contrast Limited Adaptive Histogram Equalization (CLAHE)

5.4. Histogram Sliding

5.5. Brightness Preserving Bi-Histogram Equalization (BBHE)

5.6. Generalized Unsharp Masking (GUM)

5.7. Contrast Stretching

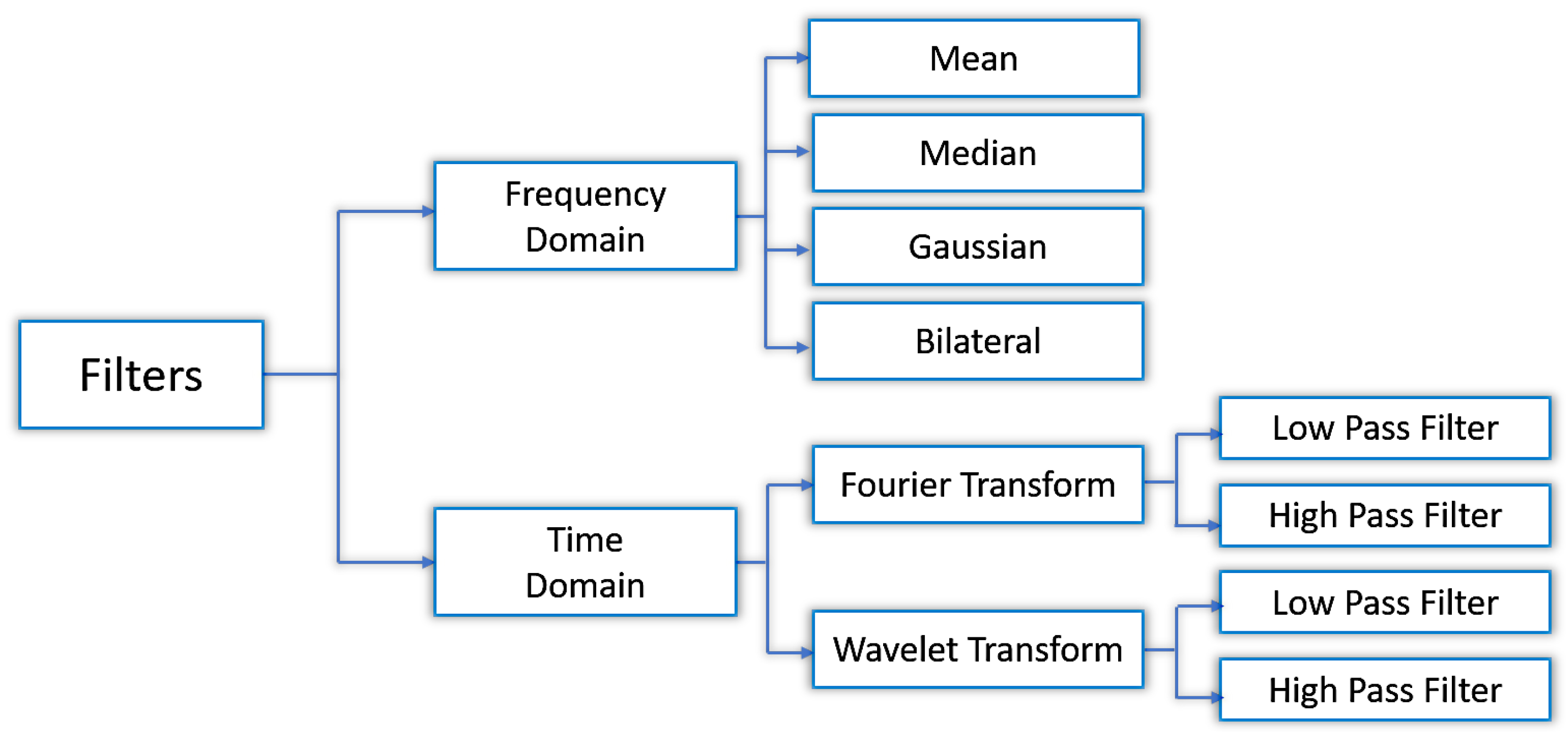

5.8. Noise Filtering

5.9. Discrete Wavelet Transform (DWT)

5.10. Discrete Cosine Transform (DCT)

5.11. Role of Evolutionary Algorithms in Contrast Enhancement

5.12. Dark Channel Prior (DCP)

6. Limitations

6.1. Underwater Environment-based Limitations

6.1.1. Equipment

6.1.2. Refraction

6.1.3. Non-uniform Illumination

6.1.4. Motion

6.1.5. Scattering

6.1.6. Absorption

6.2. Underwater Image-based Limitations

6.2.1. Low Contrast

6.2.2. Noise

- Salt-and-pepper noise: randomly occurring pixels or regions with extreme minimum (black) or maximum (white) grayscale values.

- Gaussian noise: the most common noise type; a statistical noise whose probability density function is that of the normal distribution.

- Fixed mode noise: underwater clutter that degrades the image.
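The first two noise models above can be simulated, and impulse noise suppressed, with a few lines of NumPy. This is a minimal sketch, assuming grayscale images in [0, 1]; the noise levels are arbitrary, fixed mode noise is not modeled, and the median filter is shown only as a common remedy for salt-and-pepper noise, not as a method from the reviewed works. Function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def add_gaussian_noise(img, sigma, rng):
    """Additive zero-mean Gaussian noise, clipped back to [0, 1]."""
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)


def add_salt_and_pepper(img, amount, rng):
    """Set a fraction `amount` of pixels to 0 (pepper) or 1 (salt), half each."""
    out = img.copy()
    u = rng.random(img.shape)
    out[u < amount / 2] = 0.0
    out[u > 1.0 - amount / 2] = 1.0
    return out


def median_filter(gray, patch=3):
    """Median over a patch x patch neighbourhood; effective against impulse noise."""
    pad = patch // 2
    windows = sliding_window_view(np.pad(gray, pad, mode="edge"), (patch, patch))
    return np.median(windows, axis=(-2, -1))


rng = np.random.default_rng(0)
clean = np.full((64, 64), 0.5)
noisy = add_salt_and_pepper(clean, 0.1, rng)
restored = median_filter(noisy)
print(np.abs(noisy - clean).mean(), np.abs(restored - clean).mean())
```

A 3×3 median only fails when most of a window is corrupted by the same impulse type, so isolated salt-and-pepper pixels are removed almost completely, whereas Gaussian noise, which perturbs every pixel, calls for smoothing filters instead.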

6.2.3. Blurring

6.2.4. Poor visibility

6.2.5. Haze

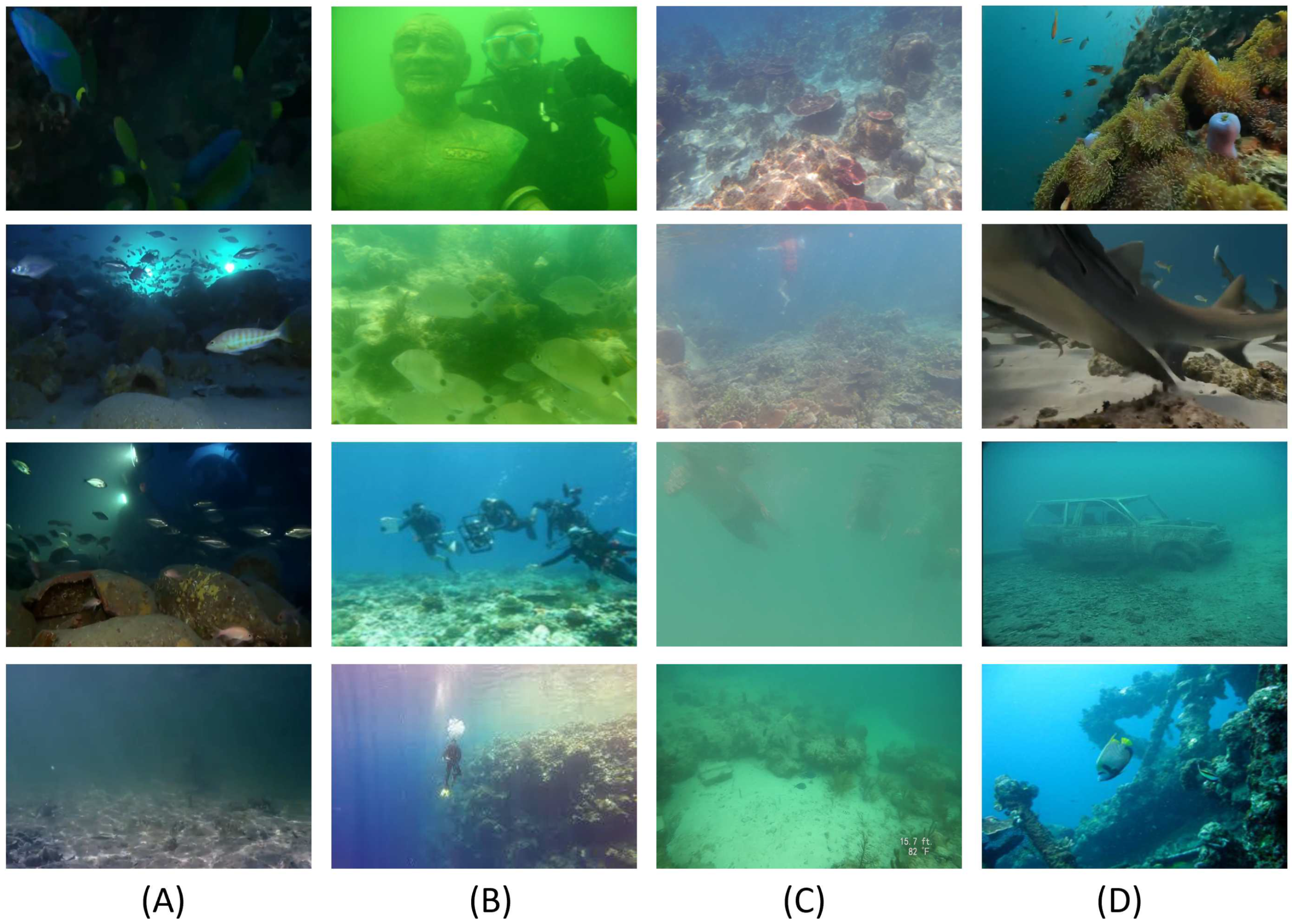

7. Underwater Image Datasets

- Real-World Underwater Image Enhancement (RUIE) Dataset

The RUIE dataset [175] is a large-scale dataset that contains 4000 underwater images captured from multiple views. According to the goals of underwater image enhancement (UIE) algorithms, the RUIE dataset is divided into three subsets: the underwater image quality set (UIQS), the underwater color cast set (UCCS), and the underwater task-oriented test suite (UHTS), as presented in Table 9. These subsets are used to evaluate color-cast restoration, visual appearance enhancement, and support for higher-level computer vision tasks such as detection and classification.

- Underwater Image Enhancement Benchmark (UIEB) Dataset

The UIEB dataset [130] contains 950 real-world underwater images, 890 of which have a corresponding reference image; the remaining 60 were retained as testing data. The dataset is used for the qualitative and quantitative evaluation of underwater image enhancement algorithms. It includes images at many resolutions and covers several scene and main-object categories.

- Enhancement of Underwater Visual Perception (EUVP) Dataset

The EUVP dataset [176] is a large-scale dataset that includes paired and unpaired collections of low- and good-quality underwater images used for supervised adversarial learning. These images were collected using seven different cameras in different situations. The unpaired data were collected by six human participants, and the paired data were collected based on human perception. The dataset includes 12K paired and 8K unpaired images, as shown in Table 7 and Table 8.

Table 7. EUVP paired dataset.

| Dataset Name | Training Images | Validation Images | Total Images |
|---|---|---|---|
| Underwater Dark | 5550 | 570 | 11670 |
| Underwater ImageNet | 3700 | 1270 | 8670 |
| Underwater Scenes | 2185 | 130 | 4500 |

Table 8. EUVP unpaired dataset.

| Poor quality | Good quality | Validation | Total Images |
|---|---|---|---|
| 3195 | 3140 | 330 | 6665 |

- U-45 Dataset

The U-45 dataset [177] is an effective public underwater test dataset of 45 real underwater images. The images exhibit low contrast, color casts, and haze-like effects, which contribute to image degradation.

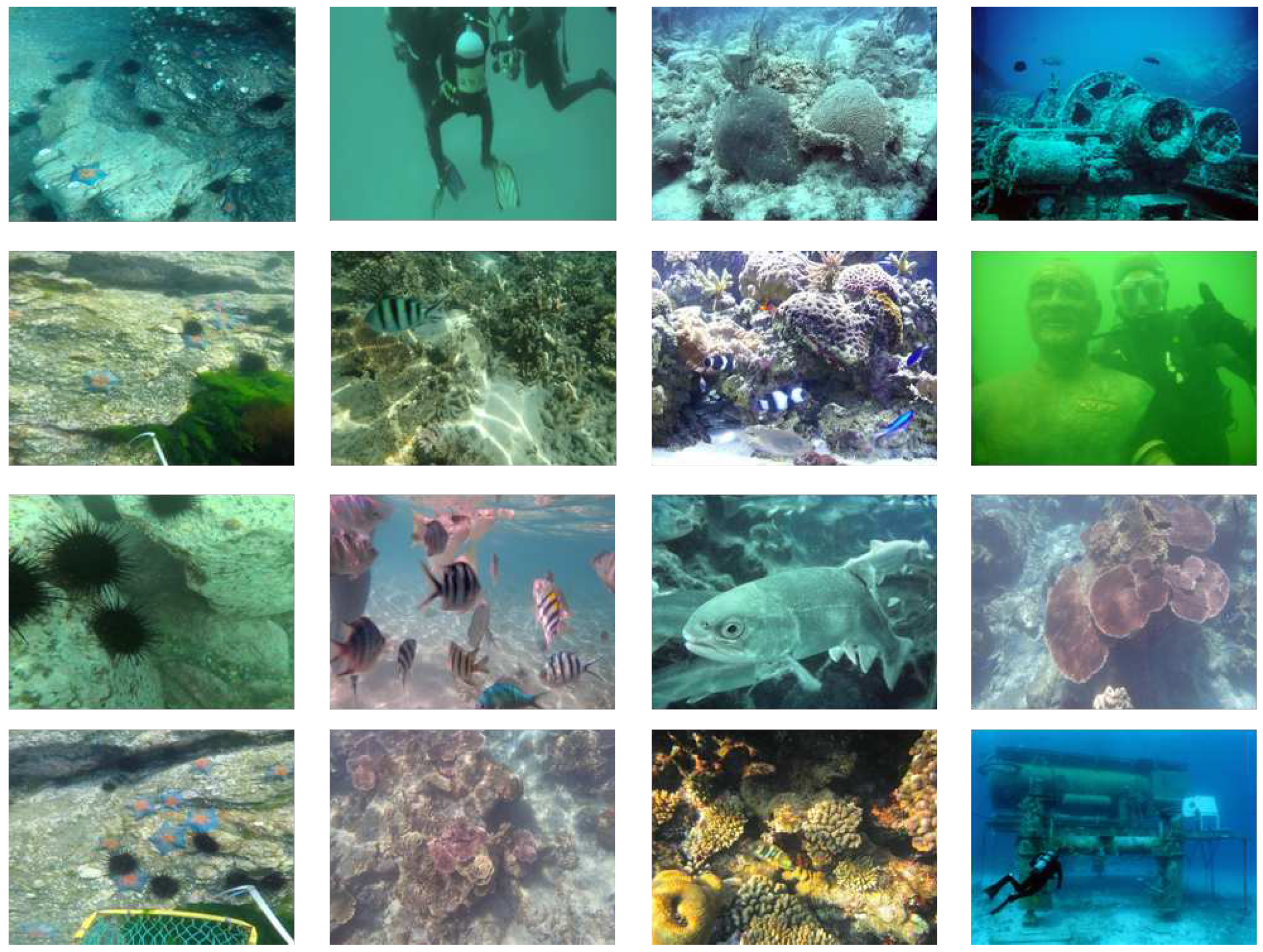

- Jamaica Port Royal Dataset

The Jamaica Port Royal dataset [178] was gathered in Port Royal, Jamaica, at the site of a submerged city containing both natural and artificial structures. The images were collected using a handheld diver rig. Sixty-five hundred images were collected during a single dive at a maximum altitude of 1.5 m above the seabed.

- Marine Autonomous Robotics for Interventions (MARI) Dataset

The MARI dataset [179] aims to support the development of cooperative AUVs for underwater interventions in offshore industries, search and rescue, and various scientific exploration tasks. It provides diverse underwater videos and images captured by a stereo vision system.

- MOUSS Dataset

The MOUSS dataset [24] was obtained using a stationary camera on the ocean floor. At 1–2 m, with sufficient ambient lighting, 159 images of fish and other relevant objects were acquired. The test dataset combined images from the training collection and new collections.

- MBARI Dataset

The MBARI dataset [24] was collected in different regions and consists of 666 images of fish and other relevant objects. It was obtained by the Monterey Bay Aquarium Research Institute.

- AFSC Dataset: The AFSC dataset [24] was collected by a remotely operated vehicle (ROV) equipped with an RGB video camera. It consists of 571 images drawn from videos of various ROV missions.

- NWFSC Dataset: The NWFSC dataset [24] was collected by a remotely operated vehicle with a camera looking downward at the ocean floor. It contains 123 images of fish and other objects near the seabed.

- RGBD Dataset: The RGBD dataset [24], collected for underwater image restoration and enhancement, contains scenes with a waterproof color chart placed underwater. It consists of over 1100 images.

- Fish4knowledge Dataset: The Fish4knowledge dataset [180] consists of fish data collected from live underwater video and contains 27370 fish images. The dataset is divided into 23 clusters, each representing a particular species.

- Wild Fish Marker Dataset: The Wild Fish Marker dataset [181] was collected by a remotely operated vehicle under different ocean conditions. It contains fish images for detection with cascade classifiers of Haar-like features. The images are unconstrained, because the moving recording platform makes the underwater scene highly variable. The dataset includes annotated training and validation sets and an independent test set.

- HabCam Dataset: The HabCam dataset [24] consists of seafloor images recorded by the HabCam vehicle, which travels over the seafloor capturing six images per second. These images are critical for studying the ecosystem and advancing the marine sciences.

- Port Royal Underwater Image Dataset: The Port Royal underwater image dataset [178] was used with a GAN to create realistic underwater images. The images were taken by cameras onboard autonomous and remotely operated vehicles, which can record high-resolution underwater images.

- OUCVISION Dataset: The OUCVISION dataset [182] is a large-scale underwater image enhancement and restoration dataset used for salient object detection and recognition. It contains 4400 images of 220 objects: each object was photographed in four poses (front, back, left, and right) at five spatial positions (top left, top right, center, bottom left, and bottom right), giving 20 images per object.

- Underwater Rock Image Database: The underwater rock image database [24] was collected for underwater image enhancement and restoration. A GAN was used with it to generate realistic underwater images.

- Underwater Photography Fish Database: The underwater photography fish database [24] was compiled from reef and fish-life photographs taken at locations around the world, such as the Indian Ocean and the Red Sea. It contains many reef fish species, including parrotfish, butterflyfish, angelfish, wrasses, and groupers, as well as non-fish subjects such as nudibranchs, corals, and octopi.

Some underwater image datasets are available at https://github.com/xahidbuffon/Awesome_Underwater_Datasets. Table 9 compares all of the datasets.

Table 9. Comparison of underwater image datasets.

| Dataset | Source | No. of Images | Objects | Resolution |
|---|---|---|---|---|
| RUIE [175] | Dalian Univ. of Technology | UIQS 3630 (726 × 5); UCCS 300 (100 × 3); UHTS 300 (60 × 5) | Sea cucumbers, scallops, and urchins | 400 × 300 |
| UIEB [130] | – | 950 | Diverse objects | Variable |
| EUVP [176] | – | 31505 | Diverse objects | 256 × 256 |
| U-45 [177] | Nanjing Univ. of Information Science and Technology, China | 45 | Diverse objects | 256 × 256 |
| Jamaica Port Royal [178] | – | 6500 | Fishes and other related objects | 1360 × 1024 |
| MARI [179] | – | Variable | Fishes and other related objects | 1292 × 964 |
| MOUSS [24] | CVPR AAMVEM workshop | 159 | Fishes | 968 × 728 |
| MBARI [24] | Monterey Bay Aquarium Research Institute | 666 | Fishes | 1920 × 1080 |
| AFSC [24] | CVPR AAMVEM workshop | 571 | Fishes and other related objects | 2112 × 2816 |
| NWFSC [24] | Integrated by CVPR AAMVEM workshop | 123 | Fishes and other related objects | 2448 × 2050 |
| RGBD [24] | Tel Aviv Univ. | 1100 | Diverse objects | 1369 × 914 |
| Fish4knowledge [180] | The Fish4knowledge team | 27370 (from underwater videos) | Diverse objects | Variable |
| Wild Fish Marker [181] | NOAA Fisheries | 1934 positive and 3167 negative images; 2061 fish images | Fishes and other related objects | Variable |
| HabCam [24] | Integrated by CVPR AAMVEM workshop | 10465 | Sand dollars, scallops, rocks, and fishes | 2720 × 1024 |
| Port Royal Underwater Image [178] | Real scientific surveys in Port Royal | 18091 | Artificial and natural structures | 1360 × 1024 |
| OUCVISION [182] | Ocean Univ. of China | 4400 | Artificial targets or rocks | 2592 × 1944 |
| Underwater Rock Image Database [24] | Univ. of Michigan | 15057 | Rocks in pool | 1360 × 1024 |
| Underwater Photography Fish Database [24] | Amateur contribution | 8644 | Reef fishes, corals, and others | Variable |

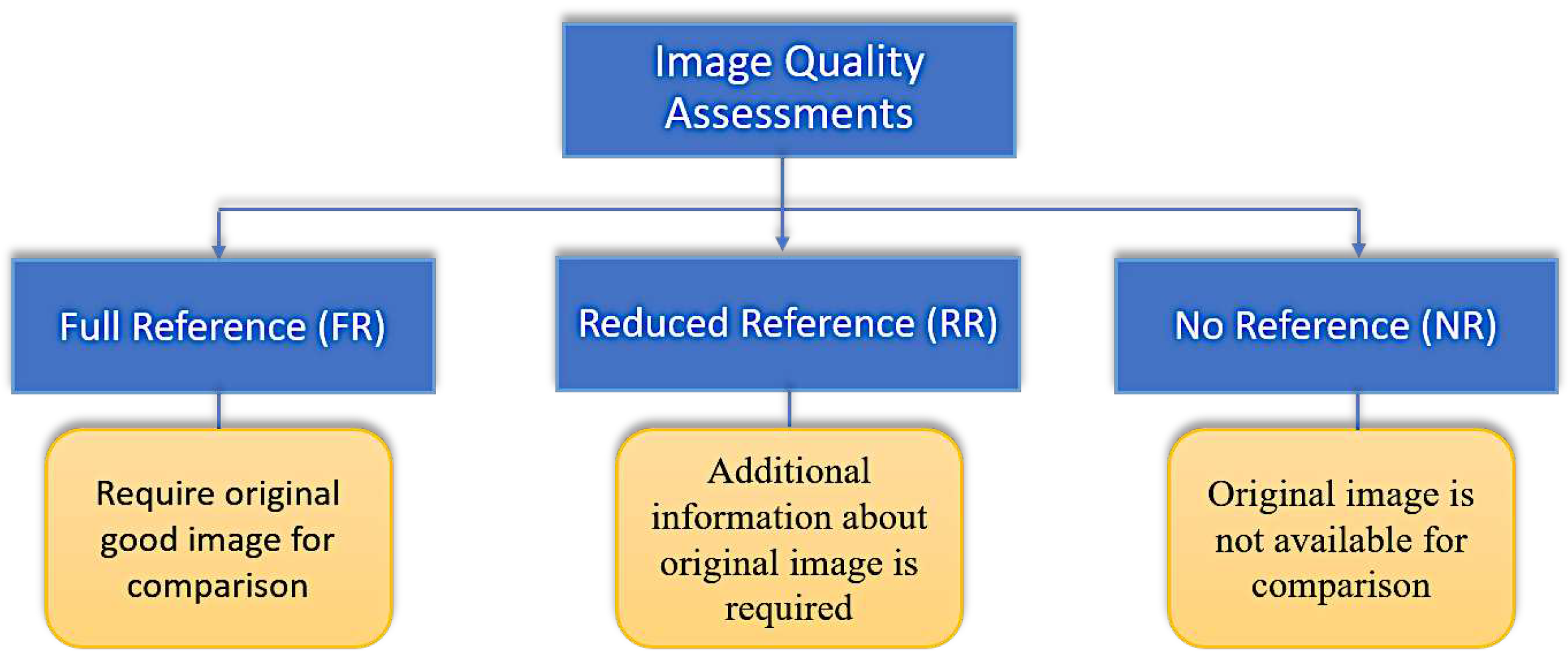

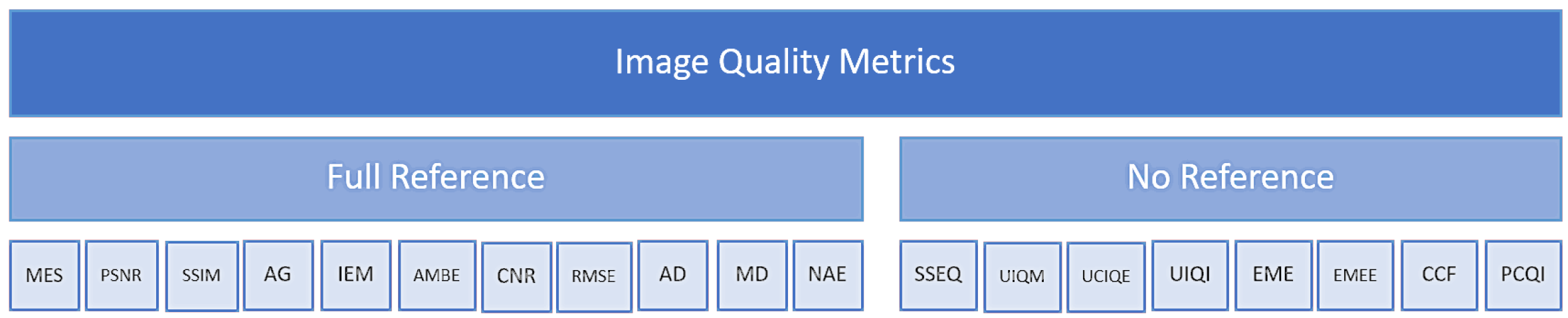

8. Underwater Image Quality Evaluation Metrics

- Peak Signal-to-Noise Ratio (PSNR): the ratio, in decibels, between the maximum possible pixel power and the power of the error between the original and enhanced images [98]. The greater the PSNR, the better the quality of the reconstructed or enhanced image. It is calculated from the MSE using Equation 12:

  $$\mathrm{PSNR} = 10\log_{10}\left(\frac{MAX^2}{\mathrm{MSE}}\right) \tag{12}$$

  where $MAX$ is the maximum pixel value in the image, which is 255 for an 8-bit gray-level image.

- Entropy: a statistical measure of the information content of an image. It quantifies the degree of randomness in the image and can be used to characterize texture [185,186]. A higher entropy value indicates less information loss. It is computed using Equation 13:

  $$H(F) = -\sum_{i} p(i)\log_2 p(i) \tag{13}$$

  where $i$ is a gray level of image F and $p(i)$ is the probability of intensity $i$.

- Structural Similarity Index Measure (SSIM): measures the similarity between the original and enhanced images. It was introduced by Wang et al. [187] and is formulated in [188,189]. Here, x and y are corresponding patches of the two images. SSIM combines three measures: contrast C(x, y), brightness B(x, y), and structure S(x, y). The greater the SSIM value, the better the enhancement and the lower the distortion. SSIM is computed using Equation 14:

  $$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)} \tag{14}$$

  where $\mu_x$ and $\mu_y$ are the means and $\sigma_x$ and $\sigma_y$ are the standard deviations of patches x and y, $\sigma_{xy}$ is their covariance, and $C_1$ and $C_2$ are small constants that prevent instability when the denominators are close to zero. They are set as $C_1 = (K_1L)^2$ and $C_2 = (K_2L)^2$, where L is the dynamic range of the pixel values and $K_1 \ll 1$, $K_2 \ll 1$ (0.01 and 0.03 in Wang et al.'s formulation).

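- Colour Enhancement Factor (CEF): quantifies the effect of enhancement on colorfulness. The greater the CEF, the better the quality of the enhanced image. It is calculated using Equation 15:

  $$\mathrm{CEF} = \frac{CM_e}{CM_F}, \qquad CM = \sqrt{\sigma_\alpha^2 + \sigma_\beta^2} + 0.3\sqrt{\mu_\alpha^2 + \mu_\beta^2} \tag{15}$$

  where $CM_e$ and $CM_F$ denote the colorfulness of the enhanced and original images, $\sigma_\alpha$ and $\sigma_\beta$ are the standard deviations, and $\mu_\alpha$ and $\mu_\beta$ are the mean values of the opponent color components $\alpha = R - G$ and $\beta = 0.5(R + G) - B$.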

- Contrast-to-Noise Ratio (CNR): assesses underwater image quality [190] by relating the signal amplitude to the surrounding noise in the image. It is computed from the mean value of the original image, the mean value of the enhanced image, and the standard deviation (Equation 16).

- Image Enhancement Metric (IEM): measures the sharpness and contrast of an underwater image by dividing it into non-overlapping blocks [191]. It is the ratio of the absolute differences between each block's center pixel and its eight neighbors in the enhanced image to the same quantity in the original image. It is calculated using Equation 17:

  $$\mathrm{IEM} = \frac{\sum_{k_1}\sum_{k_2}\sum_{n=1}^{8}\left|I_{e,c} - I_{e,n}\right|}{\sum_{k_1}\sum_{k_2}\sum_{n=1}^{8}\left|I_{F,c} - I_{F,n}\right|} \tag{17}$$

  where $k_1$ and $k_2$ index the non-overlapping blocks, F and e are the original and enhanced images, $I_{F,c}$ and $I_{e,c}$ are the intensities of the center pixel, and $I_{F,n}$ and $I_{e,n}$ are the intensities of its neighbors.

- Absolute Mean Brightness Error (AMBE): indicates how well brightness is preserved after enhancement [192]. It is the absolute difference between the mean intensities of the original and enhanced underwater images, so a lower AMBE value indicates better brightness preservation:

  $$\mathrm{AMBE} = \left|\mu_F - \mu_e\right| \tag{18}$$

  where $\mu_F$ and $\mu_e$ are the mean values of the original and enhanced images.

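- Spatial Spectral Entropy-based Quality index (SSEQ): an efficient and accurate no-reference (NR) image quality assessment (IQA) model proposed in [193]. It evaluates underwater image quality when the image is affected by multiple distortions. The spectral entropy of a local block is computed using Equation 19:

  $$E_f = -\sum_{i}\sum_{j} P(i, j)\log_2 P(i, j) \tag{19}$$

  where P(i, j) is the spectral probability map computed by Equation 20:

  $$P(i, j) = \frac{C(i, j)^2}{\sum_{i}\sum_{j} C(i, j)^2} \tag{20}$$

  and C(i, j) are the DCT coefficients of the block, excluding the DC coefficient.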

- Root Mean Square Error (RMSE): measures the difference between the original and enhanced images as the square root of the MSE. The lower the RMSE value, the closer the enhanced image is to the original. It is calculated using Equation 23:

  $$\mathrm{RMSE} = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(F(i, j) - e(i, j)\right)^2} \tag{23}$$

  where F and e represent the original and enhanced images of size M × N.

- Underwater Colour Image Quality Evaluation metric (UCIQE): a linear combination of chroma, contrast, and saturation [196]. It quantifies the low contrast, non-uniform color cast, and blur that degrade underwater images. Because the CIELAB color space approximates human visual perception, the RGB image is first converted to CIELAB. A higher UCIQE value means that the image has a better balance between chroma, contrast, and saturation. It is computed using Equation 24:

  $$\mathrm{UCIQE} = c_1\sigma_c + c_2\,\mathrm{con}_l + c_3\mu_s \tag{24}$$

  where $c_1$, $c_2$, and $c_3$ are weighting coefficients, $\sigma_c$ is the standard deviation of chroma, $\mathrm{con}_l$ is the contrast of luminance, and $\mu_s$ is the average saturation.

- Underwater Image Quality Measure (UIQM): a no-reference measure of underwater image quality inspired by the human visual system [197]. It describes visual quality through features measured on the image itself, combining three components: the underwater image colorfulness measure (UICM), the underwater image sharpness measure (UISM), and the underwater image contrast measure (UIConM). A higher UIQM value denotes higher underwater image quality. The components are combined linearly:

  $$\mathrm{UIQM} = c_1 \times \mathrm{UICM} + c_2 \times \mathrm{UISM} + c_3 \times \mathrm{UIConM} \tag{25}$$

  where $c_1$, $c_2$, and $c_3$ are weighting coefficients.

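- Colourfulness Contrast Fog density index (CCF): a no-reference IQA model that evaluates the color quality of underwater images [198]. It is a weighted combination of the colorfulness index, the contrast index, and the fog density index, calculated using Equation 26:

  $$\mathrm{CCF} = w_1 \times \mathrm{Colorfulness} + w_2 \times \mathrm{Contrast} + w_3 \times \mathrm{FogDensity} \tag{26}$$

  where $w_1$, $w_2$, and $w_3$ are weights. The colorfulness index reflects the color loss caused by absorption, the contrast index reflects the blurring and low contrast caused by forward scattering, and the fog density index reflects the haze caused by backward scattering.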

- Average Gradient (AG): a no-reference measure of underwater image sharpness. It reflects the rate of change of intensity, and hence the minute details and texture present in the image. It is computed using Equation 27:

  $$\mathrm{AG} = \frac{1}{L \times M}\sum_{i=1}^{L}\sum_{j=1}^{M}\sqrt{\frac{G_x^2(i, j) + G_y^2(i, j)}{2}} \tag{27}$$

  where L and M are the width and height of the image, and $G_x$ and $G_y$ are the gradients in the x and y directions.

- Patch-based Contrast Quality Index (PCQI): predicts the contrast distortion perceived by the human eye [199]. Instead of relying on global statistics, it uses a patch-based model built on three independent quantities of each patch: mean intensity, signal strength, and structure. The greater the PCQI value, the better the contrast of the underwater image. It is computed using Equation 28:

  $$\mathrm{PCQI} = \frac{1}{P}\sum_{k=1}^{P} q_i(x_k, y_k)\, q_c(x_k, y_k)\, q_s(x_k, y_k) \tag{28}$$

  where P is the number of patches in the image and $q_i$, $q_c$, and $q_s$ are the comparison functions for mean intensity, signal strength (contrast), and structure.

- Normalized Cross-Correlation (NCC): evaluates underwater image quality by measuring the similarity between the enhanced and original images [200]. Because the brightness of underwater images varies with lighting conditions, the correlation is normalized. NCC ranges from -1 to 1: a value of 1 indicates perfectly correlated images, 0 indicates uncorrelated images, and -1 indicates perfectly anti-correlated images. It is computed using Equation 29, where F(i, j) is the original image, e(i, j) is the enhanced image, i and j are the pixel coordinates, and M and N are the numbers of pixels in the horizontal and vertical directions.

- Average Difference (AD): the mean difference between the original and processed images [200]. This quantitative measure is widely used in image processing, including object detection and recognition applications. A high AD value indicates poor image quality. It is computed using Equation 30:

  $$\mathrm{AD} = \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(F(i, j) - e(i, j)\right) \tag{30}$$

  where F(i, j) is the original image and e(i, j) is the enhanced image at coordinates (i, j), and M and N are the numbers of pixels in the horizontal and vertical directions.

- Maximum Difference (MD): the maximum error signal, computed as the largest difference between the original and enhanced underwater images [201]. It is used with a low-pass filter on the sharp edges of underwater images and is similar to AD. The higher the MD value, the poorer the underwater image:

  $$\mathrm{MD} = \max_{i,j}\left|F(i, j) - e(i, j)\right| \tag{31}$$

  where F(i, j) is the original image and e(i, j) is the enhanced image.

- Normalized Absolute Error (NAE): measures the quality of underwater images [202]. A higher NAE value indicates poorer image quality:

  $$\mathrm{NAE} = \frac{\sum_{i=1}^{M}\sum_{j=1}^{N}\left|F(i, j) - e(i, j)\right|}{\sum_{i=1}^{M}\sum_{j=1}^{N}\left|F(i, j)\right|} \tag{32}$$

  where F(i, j) is the original image, e(i, j) is the enhanced image, and $|F(i, j) - e(i, j)|$ is the absolute error.
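Several of the measures above reduce to a few lines of NumPy. The sketch below is a minimal illustration, assuming 8-bit grayscale inputs of equal shape; the function names are hypothetical. Two simplifications are deliberate assumptions: NCC uses the zero-mean form (consistent with its stated range of -1 to 1), and SSIM is evaluated with the whole image as a single patch rather than averaged over local windows, so its values will differ from windowed implementations.

```python
import numpy as np


def _f64(img):
    # Work in float64 so that uint8 subtraction cannot wrap around.
    return np.asarray(img, dtype=np.float64)


def mse(F, e):
    """Mean squared error between original F and enhanced e (same shape)."""
    return float(np.mean((_f64(F) - _f64(e)) ** 2))


def psnr(F, e, max_val=255.0):
    """Equation 12; max_val=255 assumes 8-bit images."""
    err = mse(F, e)
    return float("inf") if err == 0 else float(10.0 * np.log10(max_val**2 / err))


def rmse(F, e):
    """Equation 23: square root of the MSE."""
    return float(np.sqrt(mse(F, e)))


def ambe(F, e):
    """Equation 18: absolute difference of the mean intensities."""
    return float(abs(_f64(F).mean() - _f64(e).mean()))


def maximum_difference(F, e):
    """Equation 31: largest absolute pixel difference."""
    return float(np.max(np.abs(_f64(F) - _f64(e))))


def nae(F, e):
    """Equation 32: summed absolute error normalized by the summed original intensity."""
    f = _f64(F)
    return float(np.sum(np.abs(f - _f64(e))) / np.sum(np.abs(f)))


def ncc(F, e):
    """Zero-mean normalized cross-correlation in [-1, 1] (assumed form of Equation 29)."""
    f = _f64(F) - _f64(F).mean()
    g = _f64(e) - _f64(e).mean()
    return float(np.sum(f * g) / np.sqrt(np.sum(f**2) * np.sum(g**2)))


def global_ssim(F, e, L=255.0, K1=0.01, K2=0.03):
    """Equation 14 evaluated with the whole image as a single patch (simplification)."""
    x, y = _f64(F), _f64(e)
    C1, C2 = (K1 * L) ** 2, (K2 * L) ** 2
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    num = (2 * mx * my + C1) * (2 * cov + C2)
    den = (mx**2 + my**2 + C1) * (x.var() + y.var() + C2)
    return float(num / den)


def entropy(img, levels=256):
    """Equation 13: Shannon entropy in bits of an integer-valued gray image."""
    hist = np.bincount(np.asarray(img, dtype=np.int64).ravel(), minlength=levels)
    p = hist[hist > 0] / hist.sum()  # empty bins contribute 0 (0 * log 0 := 0)
    return float(-np.sum(p * np.log2(p)))


def average_gradient(img):
    """Equation 27 with forward differences on the common (H-1) x (W-1) grid."""
    f = _f64(img)
    gx = f[:-1, 1:] - f[:-1, :-1]  # horizontal difference
    gy = f[1:, :-1] - f[:-1, :-1]  # vertical difference
    return float(np.mean(np.sqrt((gx**2 + gy**2) / 2.0)))


# Usage on a tiny synthetic pair (values chosen only for illustration).
F = np.array([[0, 10], [20, 30]], dtype=np.uint8)
e = np.array([[10, 10], [20, 40]], dtype=np.uint8)
print(mse(F, e), psnr(F, e), ambe(F, e), nae(F, e))
```

Converting to floating point before subtracting matters: with `uint8` arrays, `F - e` would silently wrap around for negative differences and corrupt every error-based measure.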

9. Performance Evaluation

9.1. Experimental Setting

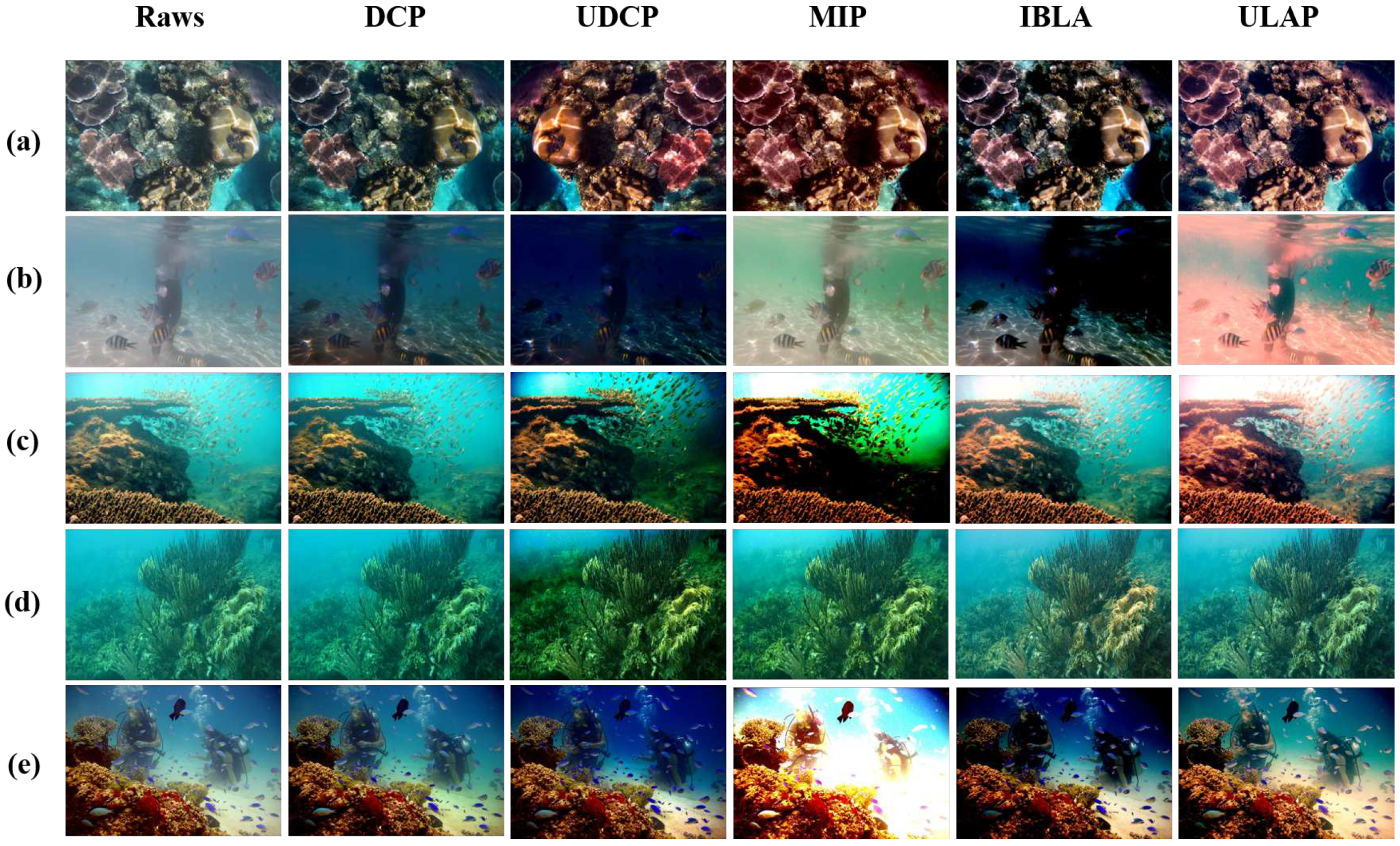

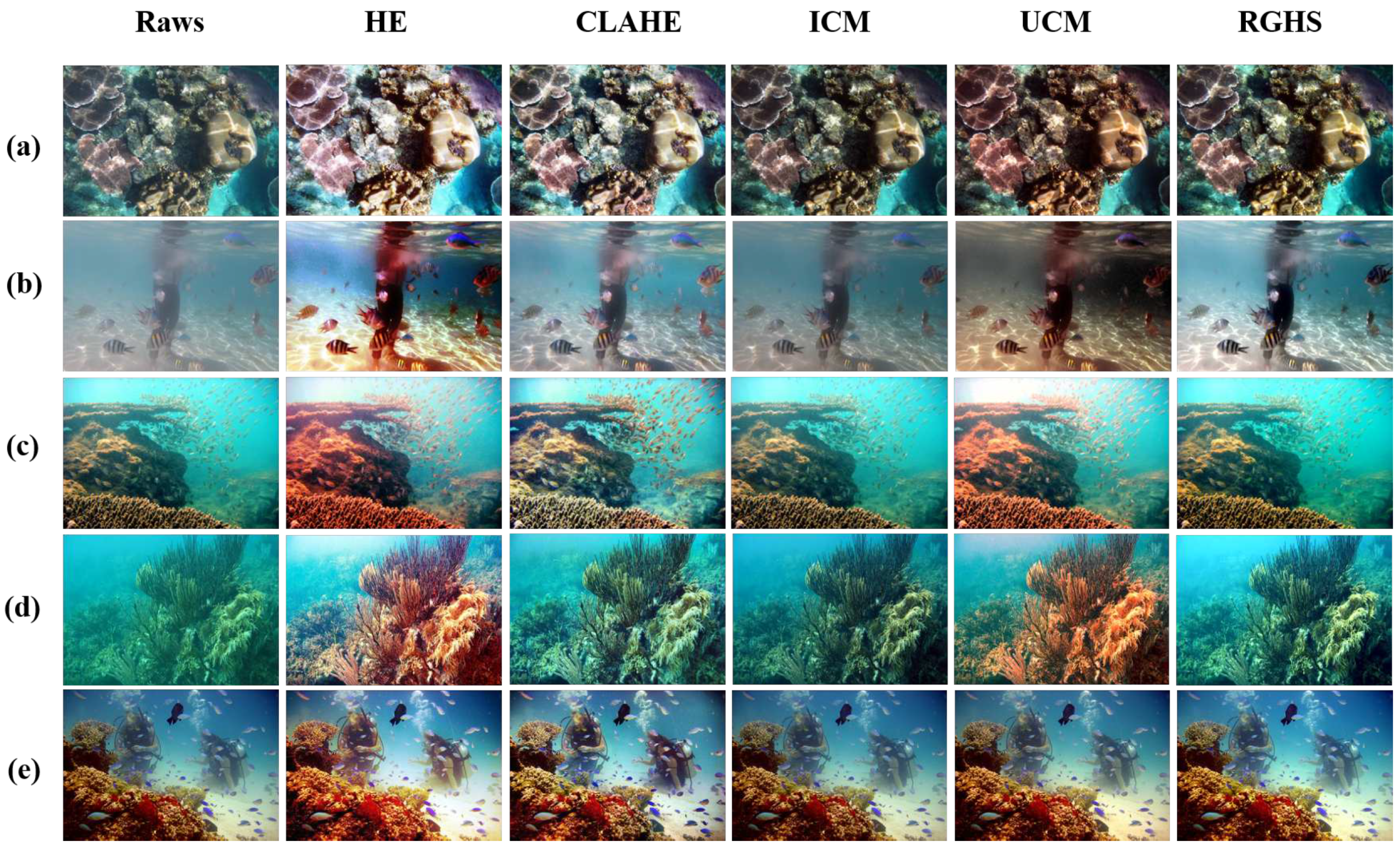

9.2. Qualitative Evaluation

9.3. Quantitative Evaluation

| Image | Algorithm | MSE | SSIM | PSNR (dB) | PIQE | UCIQE | UIQM |
|---|---|---|---|---|---|---|---|
| (a) | DCP | 838 | 0.6 | 18.89 | 18.43 | 0.48 | 3.11 |
| | UDCP | 775 | 0.9 | 19.23 | 20.92 | 0.51 | 2.71 |
| | MIP | 1198 | 0.6 | 17.34 | 27.59 | 0.53 | 1.28 |
| | IBLA | 1116 | 0.8 | 17.65 | 31.60 | 0.51 | 1.57 |
| | ULAP | 237 | 0.8 | 24.38 | 26.30 | 0.56 | 2.35 |
| (b) | DCP | 5886 | 0.7 | 10.43 | 29.68 | 0.40 | 2.25 |
| | UDCP | 12963 | 0.4 | 7.03 | 30.40 | 0.36 | 1.98 |
| | MIP | 1655 | 0.2 | 15.94 | 25.18 | 0.51 | 2.65 |
| | IBLA | 10530 | 0.3 | 7.90 | 30.57 | 0.42 | 1.33 |
| | ULAP | 3703 | 0.3 | 12.44 | 17.13 | 0.60 | 2.92 |
| (c) | DCP | 314 | 0.7 | 23.16 | 28.61 | 0.55 | 2.15 |
| | UDCP | 3655 | 0.6 | 12.50 | 28.35 | 0.52 | 2.03 |
| | MIP | 4661 | 0.5 | 11.44 | 45.58 | 0.63 | 0.96 |
| | IBLA | 1600 | 0.8 | 16.08 | 26.26 | 0.60 | 2.40 |
| | ULAP | 3675 | 0.8 | 12.47 | 27.10 | 0.63 | 2.46 |
| (d) | DCP | 2807 | 0.6 | 13.64 | 51.66 | 0.47 | 2.30 |
| | UDCP | 4617 | 0.5 | 11.48 | 53.30 | 0.46 | 1.79 |
| | MIP | 2025 | 0.7 | 15.06 | 50.96 | 0.50 | 1.76 |
| | IBLA | 974 | 0.6 | 18.24 | 47.02 | 0.50 | 1.37 |
| | ULAP | 1359 | 0.7 | 16.79 | 49.24 | 0.49 | 2.12 |
| (e) | DCP | 1864 | 0.8 | 15.42 | 22.43 | 0.52 | 2.30 |
| | UDCP | 4160 | 0.6 | 11.93 | 25.66 | 0.52 | 1.79 |
| | MIP | 7004 | 0.5 | 9.67 | 36.25 | 0.68 | 1.76 |
| | IBLA | 5152 | 0.5 | 11.01 | 35.72 | 0.54 | 1.37 |
| | ULAP | 1550 | 0.6 | 16.22 | 25.05 | 0.58 | 2.12 |
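As a quick arithmetic check on the table above, the PSNR column can be recomputed from the MSE column with Equation 12. This sketch assumes a peak value of 255 (8-bit images); the rows are copied from the table, and `check_rows` is a hypothetical helper.

```python
import math


def psnr_from_mse(mse_value, max_val=255.0):
    """Equation 12: PSNR in dB from an MSE value (peak 255 assumed for 8-bit images)."""
    return 10.0 * math.log10(max_val**2 / mse_value)


# (image, algorithm, MSE, reported PSNR), copied from the table above
ROWS = [
    ("(a)", "DCP", 838, 18.89),
    ("(a)", "ULAP", 237, 24.38),
    ("(b)", "UDCP", 12963, 7.03),
    ("(c)", "DCP", 314, 23.16),
    ("(d)", "IBLA", 974, 18.24),
    ("(e)", "ULAP", 1550, 16.22),
]


def check_rows(rows):
    """Return (image, algorithm, recomputed PSNR, absolute deviation) for each row."""
    out = []
    for image, algo, mse_value, reported in rows:
        recomputed = psnr_from_mse(mse_value)
        out.append((image, algo, recomputed, abs(recomputed - reported)))
    return out


for image, algo, recomputed, dev in check_rows(ROWS):
    print(f"{image} {algo}: recomputed {recomputed:.2f} dB, deviation {dev:.3f} dB")
```

For these rows the recomputed values agree with the reported ones to within about 0.03 dB. The check only confirms that PSNR and MSE are mutually consistent under the 8-bit assumption; it says nothing about how the MSE itself was computed (for example, per channel or on a grayscale conversion).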

| Image | Algorithm | MSE | SSIM | PSNR (dB) | PIQE | UCIQE | UIQM |
|---|---|---|---|---|---|---|---|
| (a) | HE | 2472 | 0.7 | 14.19 | 19.61 | 0.60 | 3.31 |
| | CLAHE | 1055 | 0.8 | 17.89 | 18.74 | 0.57 | 3.53 |
| | ICM | 276 | 0.9 | 23.71 | 27.29 | 0.50 | 3.41 |
| | UCM | 146 | 0.9 | 26.47 | 18.05 | 0.52 | 3.17 |
| | RGHS | 156 | 0.9 | 26.19 | 19.93 | 0.53 | 2.08 |
| (b) | HE | 1530 | 0.6 | 16.28 | 15.96 | 0.61 | 3.30 |
| | CLAHE | 815 | 0.7 | 19.01 | 18.01 | 0.50 | 3.22 |
| | ICM | 1333 | 0.7 | 16.88 | 26.65 | 0.46 | 3.56 |
| | UCM | 3826 | 0.2 | 12.30 | 23.52 | 0.48 | 3.03 |
| | RGHS | 515 | 0.9 | 21.01 | 15.24 | 0.57 | 3.12 |
| (c) | HE | 2672 | 0.7 | 13.86 | 24.23 | 0.60 | 2.91 |
| | CLAHE | 1812 | 0.9 | 15.54 | 21.51 | 0.58 | 3.17 |
| | ICM | 594 | 0.9 | 20.39 | 16.76 | 0.56 | 2.71 |
| | UCM | 2781 | 0.8 | 13.68 | 18.25 | 0.61 | 2.88 |
| | RGHS | 531 | 0.9 | 20.87 | 23.42 | 0.58 | 2.27 |
| (d) | HE | 1575 | 0.7 | 16.15 | 45.38 | 0.59 | 2.70 |
| | CLAHE | 735 | 0.8 | 19.46 | 49.06 | 0.53 | 2.44 |
| | ICM | 1705 | 0.7 | 15.81 | 48.33 | 0.49 | 2.03 |
| | UCM | 1005 | 0.8 | 18.10 | 47.62 | 0.54 | 2.59 |
| | RGHS | 1274 | 0.7 | 17.07 | 49.73 | 0.55 | 2.71 |
| (e) | HE | 948 | 0.7 | 18.36 | 22.33 | 0.61 | 2.83 |
| | CLAHE | 506 | 0.9 | 21.08 | 23.63 | 0.56 | 2.98 |
| | ICM | 700 | 0.9 | 19.67 | 21.03 | 0.54 | 2.72 |
| | UCM | 654 | 0.8 | 19.96 | 20.65 | 0.56 | 2.78 |
| | RGHS | 413 | 0.9 | 21.96 | 21.13 | 0.58 | 2.71 |

9.4. Computational Complexity

| Image | DCP | UDCP | MIP | IBLA | ULAP | HE | CLAHE | ICM | UCM | RGHS |

|---|---|---|---|---|---|---|---|---|---|---|

| (a) | 13 | 11.88 | 15.2 | 30 | 0.02 | 0.03 | 0.03 | 3 | 7.2 | 4 |

| (b) | 13 | 12 | 24 | 90 | 0.1 | 0.04 | 0.04 | 3.3 | 7 | 4 |

| (c) | 48 | 43 | 48 | 130 | 0.6 | 0.07 | 0.16 | 11.19 | 24 | 14 |

| (d) | 91 | 85 | 60 | 190 | 0.12 | 0.13 | 0.12 | 22.2 | 40 | 28 |

| (e) | 7 | 14 | 7 | 50 | 0.1 | 0.02 | 0.02 | 3 | 4 | 3 |
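Run times such as those above depend heavily on the implementation, image size, and hardware. As a hedged sketch of how a per-image run time can be measured, the snippet below times a plain global histogram equalization (HE) written in NumPy using `time.perf_counter`. It is a generic textbook HE applied to a synthetic image, not the implementation or data used in these experiments, and `time_method` is a hypothetical helper.

```python
import time

import numpy as np


def histogram_equalization(gray):
    """Global HE of an 8-bit grayscale image via its normalized cumulative histogram."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.nonzero(cdf)[0][0]]  # CDF value of the darkest occupied gray level
    if cdf_min == gray.size:  # constant image: nothing to stretch
        return gray.copy()
    lut = np.round((cdf - cdf_min) / (gray.size - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)  # bins below the darkest level map to 0
    return lut[gray]


def time_method(fn, img, repeats=5):
    """Hypothetical helper: best-of-`repeats` wall-clock time of fn(img), in seconds."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn(img)
        best = min(best, time.perf_counter() - start)
    return best


# Synthetic low-contrast 640 x 480 test image (values 40..119), not a dataset image.
rng = np.random.default_rng(0)
img = rng.integers(40, 120, size=(480, 640), dtype=np.uint8)
print(f"HE: {time_method(histogram_equalization, img):.4f} s per image")
```

Taking the best of several runs reduces the influence of one-off effects such as caching; a fair comparison across methods would also fix the hardware, image size, and implementation language.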

10. Applications of Underwater Image Analysis

10.1. Underwater Navigation

10.2. Fish Detection and Identification

10.3. Corrosion Estimation of Subsea Pipelines

10.4. Coral-reef Monitoring

10.5. Sea Cucumber Image Enhancement

10.6. Other applications

11. Future Directions

- Efforts should be directed toward noise removal, because some enhancement methods introduce high noise into underwater videos and images.

- Studies should be dedicated to real-time object tracking and detection from enhanced underwater images.

- The high computational cost and execution time required to restore and enhance underwater images should be reduced.

- Contrast enhancement methods still perform poorly in many respects. Improving the contrast of underwater images and videos therefore remains a critical research topic that has attracted considerable attention in recent years.

- Underwater image datasets are primarily used for testing models rather than training them. Although many underwater image datasets exist, most contain only a limited number of images. Therefore, a more comprehensive dataset for underwater image enhancement is needed.

- Evaluation metrics must be developed to consider more features in underwater videos and images, such as texture, noise, and depth estimation.

- Lightweight instruments and tools must be developed to capture underwater images in challenging conditions.

- The computational efficiency and robustness of underwater imaging methods must be improved. The desired methods must adapt to diverse underwater conditions, and effective strategies should be developed for different types of underwater applications. For recovering realistic scenes, fusing restoration and enhancement techniques improves the computational efficiency of underwater imaging; however, computing the two main parameters is time-consuming. Conversely, IFM-free methods can improve image quality by redistributing pixel values and producing optimal color distributions.

- Several deep learning techniques, such as GANs for white balancing and RNNs for increasing detail and reducing noise, should be applied to underwater image enhancement. Learning-based underwater image enhancement methods depend heavily on datasets that require large numbers of paired reference images. Therefore, compiling a public benchmark dataset of various hazy underwater images and their enhanced counterparts is essential.

- In the future, high-level tasks such as target detection under degraded visibility should be used to evaluate underwater image enhancement methods. Current methods focus on improving perceptual quality but ignore whether the enhanced images increase the accuracy of high-level classification and detection. Therefore, the relationship between low-level underwater image enhancement and high-level classification and detection should be investigated further.

- Methods for enhancing deep-sea underwater images differ from those used in shallow water. Natural light is absorbed before it reaches depths below 1000 m, so deep-sea images are dominated by artificial light sources. Existing underwater image restoration and enhancement methods cannot adequately recover deep-sea images. Therefore, a new and effective deep-sea imaging model is required to address uneven illumination, light attenuation, scatter interference, and low brightness, thereby improving image quality and reducing halo effects.

Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

References

- McLellan, B.C. Sustainability assessment of deep ocean resources. Procedia Environmental Sciences 2015, 28, 502–508.

- Lu, H.; Wang, D.; Li, Y.; Li, J.; Li, X.; Kim, H.; Serikawa, S.; Humar, I. CONet: A cognitive ocean network. IEEE Wireless Communications 2019, 26, 90–96.

- Jian, M.; Liu, X.; Luo, H.; Lu, X.; Yu, H.; Dong, J. Underwater image processing and analysis: A review. Signal Processing: Image Communication 2020, p. 116088.

- Krishnapriya, T.; Kunju, N. Underwater Image Processing using Hybrid Techniques. In Proceedings of the 2019 1st International Conference on Innovations in Information and Communication Technology (ICIICT). IEEE; 2019; pp. 1–4.

- Dharwadkar, N.V.; Yadav, A.M. Survey on Techniques in Improving Quality of Underwater Imaging. In Computer Networks and Inventive Communication Technologies; Springer, 2021; pp. 243–256.

- Zhang, W.; Dong, L.; Pan, X.; Zou, P.; Qin, L.; Xu, W. A survey of restoration and enhancement for underwater images. IEEE Access 2019, 7, 182259–182279.

- Jaffe, J.S. Underwater optical imaging: the past, the present, and the prospects. IEEE Journal of Oceanic Engineering 2014, 40, 683–700.

- Fan, J.; Wang, X.; Zhou, C.; Ou, Y.; Jing, F.; Hou, Z. Development, calibration, and image processing of underwater structured light vision system: A survey. IEEE Transactions on Instrumentation and Measurement 2023, 72, 1–18.

- Singh, N.; Bhat, A. A systematic review of the methodologies for the processing and enhancement of the underwater images. Multimedia Tools and Applications 2023, pp. 1–26.

- Ahn, J.; Yasukawa, S.; Sonoda, T.; Ura, T.; Ishii, K. Enhancement of deep-sea floor images obtained by an underwater vehicle and its evaluation by crab recognition. Journal of Marine Science and Technology 2017, 22, 758–770.

- Johnsen, G.; Ludvigsen, M.; Sørensen, A.; Aas, L.M.S. The use of underwater hyperspectral imaging deployed on remotely operated vehicles-methods and applications. IFAC-PapersOnLine 2016, 49, 476–481.

- Lu, H.; Li, Y.; Zhang, Y.; Chen, M.; Serikawa, S.; Kim, H. Underwater optical image processing: a comprehensive review. Mobile networks and applications 2017, 22, 1204–1211.

- Schettini, R.; Corchs, S. Underwater image processing: state of the art of restoration and image enhancement methods. EURASIP Journal on Advances in Signal Processing 2010, 2010, 1–14.

- Lu, H.; Li, Y.; Serikawa, S. Computer vision for ocean observing. In Artificial Intelligence and Computer Vision; Springer, 2017; pp. 1–16.

- Wang, Y.; Song, W.; Fortino, G.; Qi, L.Z.; Zhang, W.; Liotta, A. An experimental-based review of image enhancement and image restoration methods for underwater imaging. IEEE Access 2019, 7, 140233–140251.

- Sahu, P.; Gupta, N.; Sharma, N. A survey on underwater image enhancement techniques. International Journal of Computer Applications 2014, 87.

- Han, M.; Lyu, Z.; Qiu, T.; Xu, M. A review on intelligence dehazing and color restoration for underwater images. IEEE Transactions on Systems, Man, and Cybernetics: Systems 2018, 50, 1820–1832.

- Liu, R.; Fan, X.; Zhu, M.; Hou, M.; Luo, Z. Real-world underwater enhancement: Challenges, benchmarks, and solutions under natural light. IEEE Transactions on Circuits and Systems for Video Technology 2020, 30, 4861–4875.

- Almutiry, O.; Iqbal, K.; Hussain, S.; Mahmood, A.; Dhahri, H. Underwater images contrast enhancement and its challenges: a survey. Multimedia Tools and Applications 2021, pp. 1–26.

- Raveendran, S.; Patil, M.D.; Birajdar, G.K. Underwater image enhancement: a comprehensive review, recent trends, challenges and applications. Artificial Intelligence Review 2021, pp. 1–55.

- Zhou, J.; Wei, X.; Shi, J.; Chu, W.; Lin, Y. Underwater image enhancement via two-level wavelet decomposition maximum brightness color restoration and edge refinement histogram stretching. Optics Express 2022, 30, 17290–17306.

- Papadopoulos, C.; Papaioannou, G. Realistic real-time underwater caustics and godrays. In Proceedings of GraphiCon, 2009; Vol. 9, pp. 89–95.

- Sedlazeck, A.; Koch, R. Simulating deep sea underwater images using physical models for light attenuation, scattering, and refraction 2011.

- Yang, M.; Hu, J.; Li, C.; Rohde, G.; Du, Y.; Hu, K. An in-depth survey of underwater image enhancement and restoration. IEEE Access 2019, 7, 123638–123657.

- Anwar, S.; Li, C. Diving deeper into underwater image enhancement: A survey. Signal Processing: Image Communication 2020, 89, 115978.

- Vasamsetti, S.; Mittal, N.; Neelapu, B.C.; Sardana, H.K. Wavelet based perspective on variational enhancement technique for underwater imagery. Ocean Engineering 2017, 141, 88–100.

- Chen, Z.; Wang, H.; Shen, J.; Li, X.; Xu, L. Region-specialized underwater image restoration in inhomogeneous optical environments. Optik 2014, 125, 2090–2098.

- Berman, D.; Levy, D.; Avidan, S.; Treibitz, T. Underwater single image color restoration using haze-lines and a new quantitative dataset. IEEE transactions on pattern analysis and machine intelligence 2020.

- Schechner, Y.Y.; Karpel, N. Recovery of underwater visibility and structure by polarization analysis. IEEE Journal of oceanic engineering 2005, 30, 570–587.

- He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE transactions on pattern analysis and machine intelligence 2011, 33, 2341–2353.

- Chao, L.; Wang, M. Removal of water scattering. In Proceedings of the 2010 2nd international conference on computer engineering and technology. IEEE, 2010; Vol. 2, pp. 2–35.

- Wu, X.; Li, H. A simple and comprehensive model for underwater image restoration. In Proceedings of the 2013 IEEE International Conference on Information and Automation (ICIA). IEEE; 2013; pp. 699–704.

- Chiang, J.Y.; Chen, Y.C. Underwater image enhancement by wavelength compensation and dehazing. IEEE transactions on image processing 2011, 21, 1756–1769.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Bekaert, P. Color balance and fusion for underwater image enhancement. IEEE Transactions on image processing 2017, 27, 379–393.

- Chang, H.H.; Cheng, C.Y.; Sung, C.C. Single underwater image restoration based on depth estimation and transmission compensation. IEEE Journal of Oceanic Engineering 2018, 44, 1130–1149.

- Cronin, T.W.; Marshall, J. Patterns and properties of polarized light in air and water. Philosophical Transactions of the Royal Society B: Biological Sciences 2011, 366, 619–626.

- Schechner, Y.Y.; Narasimhan, S.G.; Nayar, S.K. Polarization-based vision through haze. Applied optics 2003, 42, 511–525.

- Li, Y.; Ruan, R.; Mi, Z.; Shen, X.; Gao, T.; Fu, X. An underwater image restoration based on global polarization effects of underwater scene. Optics and Lasers in Engineering 2023, 165, 107550.

- Huang, B.; Liu, T.; Hu, H.; Han, J.; Yu, M. Underwater image recovery considering polarization effects of objects. Optics express 2016, 24, 9826–9838.

- Hu, H.; Zhao, L.; Huang, B.; Li, X.; Wang, H.; Liu, T. Enhancing visibility of polarimetric underwater image by transmittance correction. IEEE Photonics Journal 2017, 9, 1–10.

- Han, P.; Liu, F.; Yang, K.; Ma, J.; Li, J.; Shao, X. Active underwater descattering and image recovery. Applied optics 2017, 56, 6631–6638.

- Hu, H.; Zhao, L.; Li, X.; Wang, H.; Yang, J.; Li, K.; Liu, T. Polarimetric image recovery in turbid media employing circularly polarized light. Optics Express 2018, 26, 25047–25059.

- Hu, H.; Zhao, L.; Li, X.; Wang, H.; Liu, T. Underwater image recovery under the nonuniform optical field based on polarimetric imaging. IEEE Photonics Journal 2018, 10, 1–9.

- Sánchez-Ferreira, C.; Coelho, L.; Ayala, H.V.; Farias, M.C.; Llanos, C.H. Bio-inspired optimization algorithms for real underwater image restoration. Signal Processing: Image Communication 2019, 77, 49–65.

- Yang, L.; Liang, J.; Zhang, W.; Ju, H.; Ren, L.; Shao, X. Underwater polarimetric imaging for visibility enhancement utilizing active unpolarized illumination. Optics Communications 2019, 438, 96–101.

- Wang, J.; Wan, M.; Gu, G.; Qian, W.; Ren, K.; Huang, Q.; Chen, Q. Periodic integration-based polarization differential imaging for underwater image restoration. Optics and Lasers in Engineering 2022, 149, 106785.

- Jin, H.; Qian, L.; Gao, J.; Fan, Z.; Chen, J. Polarimetric Calculation Method of Global Pixel for Underwater Image Restoration. IEEE Photonics Journal 2020, 13, 1–15.

- Fu, X.; Liang, Z.; Ding, X.; Yu, X.; Wang, Y. Image descattering and absorption compensation in underwater polarimetric imaging. Optics and Lasers in Engineering 2020, 132, 106115.

- Bruno, F.; Bianco, G.; Muzzupappa, M.; Barone, S.; Razionale, A.V. Experimentation of structured light and stereo vision for underwater 3D reconstruction. ISPRS Journal of Photogrammetry and Remote Sensing 2011, 66, 508–518.

- Roser, M.; Dunbabin, M.; Geiger, A. Simultaneous underwater visibility assessment, enhancement and improved stereo. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA). IEEE; 2014; pp. 3840–3847.

- Lin, Y.H.; Chen, S.Y.; Tsou, C.H. Development of an image processing module for autonomous underwater vehicles through integration of visual recognition with stereoscopic image reconstruction. Journal of Marine Science and Engineering 2019, 7, 107.

- Łuczyński, T.; Łuczyński, P.; Pehle, L.; Wirsum, M.; Birk, A. Model based design of a stereo vision system for intelligent deep-sea operations. Measurement 2019, 144, 298–310.

- Tan, C.; Sluzek, A.; GL, G.S.; Jiang, T. Range gated imaging system for underwater robotic vehicle. In Proceedings of the OCEANS 2006-Asia Pacific. IEEE; 2006; pp. 1–6. [Google Scholar]

- Li, H.; Wang, X.; Bai, T.; Jin, W.; Huang, Y.; Ding, K. Speckle noise suppression of range gated underwater imaging system. In Proceedings of the Applications of Digital Image Processing XXXII. SPIE, 2009; Vol. 7443, pp. 641–648. [Google Scholar]

- Liu, W.; Li, Q.; Hao, G.y.; Wu, G.j.; Lv, P. Experimental study on underwater range-gated imaging system pulse and gate control coordination strategy. In Proceedings of the Ocean Optics and Information Technology. SPIE, 2018; Vol. 10850, pp. 201–212. [Google Scholar]

- Wang, M.; Wang, X.; Sun, L.; Yang, Y.; Zhou, Y. Underwater 3D deblurring-gated range-intensity correlation imaging. Optics Letters 2020, 45, 1455–1458.

- Wang, M.; Wang, X.; Zhang, Y.; Sun, L.; Lei, P.; Yang, Y.; Chen, J.; He, J.; Zhou, Y. Range-intensity-profile prior dehazing method for underwater range-gated imaging. Optics Express 2021, 29, 7630–7640.

- McGlamery, B. A computer model for underwater camera systems. In Proceedings of the Ocean Optics VI. International Society for Optics and Photonics; 1980; Vol. 208, pp. 221–231.

- Shen, Y.; Zhao, C.; Liu, Y.; Wang, S.; Huang, F. Underwater optical imaging: key technologies and applications review. IEEE Access 2021.

- Trucco, E.; Olmos-Antillon, A.T. Self-tuning underwater image restoration. IEEE Journal of Oceanic Engineering 2006, 31, 511–519.

- Jaffe, J.S. Computer modeling and the design of optimal underwater imaging systems. IEEE Journal of Oceanic Engineering 1990, 15, 101–111.

- Hou, W.; Gray, D.J.; Weidemann, A.D.; Fournier, G.R.; Forand, J. Automated underwater image restoration and retrieval of related optical properties. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium. IEEE; 2007; pp. 1889–1892.

- Boffety, M.; Galland, F.; Allais, A.G. Color image simulation for underwater optics. Applied Optics 2012, 51, 5633–5642.

- Wen, H.; Tian, Y.; Huang, T.; Gao, W. Single underwater image enhancement with a new optical model. In Proceedings of the 2013 IEEE International Symposium on Circuits and Systems (ISCAS). IEEE; 2013; pp. 753–756.

- Ahn, J.; Yasukawa, S.; Sonoda, T.; Nishida, Y.; Ishii, K.; Ura, T. An optical image transmission system for deep sea creature sampling missions using autonomous underwater vehicle. IEEE Journal of Oceanic Engineering 2018, 45, 350–361.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Transactions on Pattern Analysis and Machine Intelligence 2010, 33, 2341–2353.

- Fayaz, S.; Parah, S.A.; Qureshi, G. Efficient underwater image restoration utilizing modified dark channel prior. Multimedia Tools and Applications 2023, 82, 14731–14753.

- Drews, P.; Nascimento, E.; Moraes, F.; Botelho, S.; Campos, M. Transmission estimation in underwater single images. In Proceedings of the IEEE International Conference on Computer Vision Workshops. IEEE; 2013; pp. 825–830.

- Drews, P.L.; Nascimento, E.R.; Botelho, S.S.; Campos, M.F.M. Underwater depth estimation and image restoration based on single images. IEEE Computer Graphics and Applications 2016, 36, 24–35.

- Carlevaris-Bianco, N.; Mohan, A.; Eustice, R.M. Initial results in underwater single image dehazing. In Proceedings of the OCEANS 2010 MTS/IEEE Seattle. IEEE; 2010; pp. 1–8.

- Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic red-channel underwater image restoration. Journal of Visual Communication and Image Representation 2015, 26, 132–145.

- Song, W.; Wang, Y.; Huang, D.; Tjondronegoro, D. A rapid scene depth estimation model based on underwater light attenuation prior for underwater image restoration. In Proceedings of the Pacific Rim Conference on Multimedia. Springer; 2018; pp. 678–688.

- Yang, H.Y.; Chen, P.Y.; Huang, C.C.; Zhuang, Y.Z.; Shiau, Y.H. Low complexity underwater image enhancement based on dark channel prior. In Proceedings of the 2011 Second International Conference on Innovations in Bio-inspired Computing and Applications. IEEE; 2011; pp. 17–20.

- Serikawa, S.; Lu, H. Underwater image dehazing using joint trilateral filter. Computers & Electrical Engineering 2014, 40, 41–50.

- Peng, Y.T.; Zhao, X.; Cosman, P.C. Single underwater image enhancement using depth estimation based on blurriness. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP). IEEE; 2015; pp. 4952–4956.

- Lu, H.; Li, Y.; Zhang, L.; Serikawa, S. Contrast enhancement for images in turbid water. JOSA A 2015, 32, 886–893.

- Zhao, X.; Jin, T.; Qu, S. Deriving inherent optical properties from background color and underwater image enhancement. Ocean Engineering 2015, 94, 163–172.

- Li, C.; Guo, J.; Pang, Y.; Chen, S.; Wang, J. Single underwater image restoration by blue-green channels dehazing and red channel correction. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE; 2016; pp. 1731–1735.

- Li, C.Y.; Guo, J.C.; Cong, R.M.; Pang, Y.W.; Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Transactions on Image Processing 2016, 25, 5664–5677.

- Li, C.; Guo, J.; Chen, S.; Tang, Y.; Pang, Y.; Wang, J. Underwater image restoration based on minimum information loss principle and optical properties of underwater imaging. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP). IEEE; 2016; pp. 1993–1997.

- Peng, Y.T.; Cosman, P.C. Underwater image restoration based on image blurriness and light absorption. IEEE Transactions on Image Processing 2017, 26, 1579–1594.

- Peng, Y.T.; Cao, K.; Cosman, P.C. Generalization of the dark channel prior for single image restoration. IEEE Transactions on Image Processing 2018, 27, 2856–2868.

- Wang, N.; Zheng, H.; Zheng, B. Underwater image restoration via maximum attenuation identification. IEEE Access 2017, 5, 18941–18952.

- Ding, X.; Wang, Y.; Zhang, J.; Fu, X. Underwater image dehaze using scene depth estimation with adaptive color correction. In Proceedings of the OCEANS 2017-Aberdeen. IEEE; 2017; pp. 1–5.

- Cao, K.; Peng, Y.T.; Cosman, P.C. Underwater image restoration using deep networks to estimate background light and scene depth. In Proceedings of the 2018 IEEE Southwest Symposium on Image Analysis and Interpretation (SSIAI). IEEE; 2018; pp. 1–4.

- Barbosa, W.V.; Amaral, H.G.; Rocha, T.L.; Nascimento, E.R. Visual-quality-driven learning for underwater vision enhancement. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP). IEEE; 2018; pp. 3933–3937.

- Hou, M.; Liu, R.; Fan, X.; Luo, Z. Joint residual learning for underwater image enhancement. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP). IEEE; 2018; pp. 4043–4047.

- Wang, Z.; Shen, L.; Xu, M.; Yu, M.; Wang, K.; Lin, Y. Domain adaptation for underwater image enhancement. IEEE Transactions on Image Processing 2023, 32, 1442–1457.

- Xu, S.; Zhang, M.; Song, W.; Mei, H.; He, Q.; Liotta, A. A systematic review and analysis of deep learning-based underwater object detection. Neurocomputing 2023.

- Xu, Y.; Wen, J.; Fei, L.; Zhang, Z. Review of video and image defogging algorithms and related studies on image restoration and enhancement. IEEE Access 2015, 4, 165–188.

- Abdullah-Al-Wadud, M.; Kabir, M.H.; Dewan, M.A.A.; Chae, O. A dynamic histogram equalization for image contrast enhancement. IEEE Transactions on Consumer Electronics 2007, 53, 593–600.

- Kim, T.; Paik, J. Adaptive contrast enhancement using gain-controllable clipped histogram equalization. IEEE Transactions on Consumer Electronics 2008, 54, 1803–1810.

- Ancuti, C.; Ancuti, C.O.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition. IEEE; 2012; pp. 81–88.

- Liu, X.; Zhong, G.; Liu, C.; Dong, J. Underwater image colour constancy based on DSNMF. IET Image Processing 2017, 11, 38–43.

- Torres-Méndez, L.A.; Dudek, G. Color correction of underwater images for aquatic robot inspection. In Proceedings of the International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition. Springer; 2005; pp. 60–73.

- Iqbal, K.; Salam, R.A.; Osman, A.; Talib, A.Z. Underwater Image Enhancement Using an Integrated Colour Model. IAENG International Journal of Computer Science 2007, 34.

- Ghani, A.S.A.; Isa, N.A.M. Automatic system for improving underwater image contrast and color through recursive adaptive histogram modification. Computers and Electronics in Agriculture 2017, 141, 181–195.

- Hitam, M.S.; Awalludin, E.A.; Yussof, W.N.J.H.W.; Bachok, Z. Mixture contrast limited adaptive histogram equalization for underwater image enhancement. In Proceedings of the 2013 International Conference on Computer Applications Technology (ICCAT). IEEE; 2013; pp. 1–5.

- Huang, D.; Wang, Y.; Song, W.; Sequeira, J.; Mavromatis, S. Shallow-water image enhancement using relative global histogram stretching based on adaptive parameter acquisition. In Proceedings of the International Conference on Multimedia Modeling. Springer; 2018; pp. 453–465.

- Agaian, S.S.; Panetta, K.; Grigoryan, A.M. Transform-based image enhancement algorithms with performance measure. IEEE Transactions on Image Processing 2001, 10, 367–382.

- Asmare, M.H.; Asirvadam, V.S.; Hani, A.F.M. Image enhancement based on contourlet transform. Signal, Image and Video Processing 2015, 9, 1679–1690.

- Panetta, K.; Samani, A.; Agaian, S. A robust no-reference, no-parameter, transform domain image quality metric for evaluating the quality of color images. IEEE Access 2018, 6, 10979–10985.

- Wang, Y.; Ding, X.; Wang, R.; Zhang, J.; Fu, X. Fusion-based underwater image enhancement by wavelet decomposition. In Proceedings of the 2017 IEEE International Conference on Industrial Technology (ICIT). IEEE; 2017; pp. 1013–1018.

- Grigoryan, A.M.; Agaian, S.S. Color image enhancement via combine homomorphic ratio and histogram equalization approaches: Using underwater images as illustrative examples. International Journal on Future Revolution in Computer Science & Communication Engineering 2018, 4, 36–47.

- Kaur, G.; Kaur, M. A study of transform domain based image enhancement techniques. International Journal of Computer Applications 2016, 152.

- Petit, F.; Capelle-Laizé, A.S.; Carré, P. Underwater image enhancement by attenuation inversion with quaternions. In Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing. IEEE; 2009; pp. 1177–1180.

- Cheng, C.Y.; Sung, C.C.; Chang, H.H. Underwater image restoration by red-dark channel prior and point spread function deconvolution. In Proceedings of the 2015 IEEE International Conference on Signal and Image Processing Applications (ICSIPA). IEEE; 2015; pp. 110–115.

- Feifei, S.; Xuemeng, Z.; Guoyu, W. An approach for underwater image denoising via wavelet decomposition and high-pass filter. In Proceedings of the 2011 Fourth International Conference on Intelligent Computation Technology and Automation. IEEE; 2011; Vol. 2, pp. 417–420.

- Ghani, A.S.A. Image contrast enhancement using an integration of recursive-overlapped contrast limited adaptive histogram specification and dual-image wavelet fusion for the high visibility of deep underwater image. Ocean Engineering 2018, 162, 224–238.

- Priyadharsini, R.; Sharmila, T.S.; Rajendran, V. A wavelet transform based contrast enhancement method for underwater acoustic images. Multidimensional Systems and Signal Processing 2018, 29, 1845–1859.