1. Introduction

Autonomous mobile robots are becoming increasingly popular in industrial applications such as logistics, manufacturing, and warehousing. One of the key requirements for the operation of such robots is the ability to navigate safely and efficiently in a dynamic environment, repeatedly doing the same task all day for days or months. A mobile robot’s autonomous navigation is achieved using Simultaneous Localisation and Mapping (SLAM) techniques, in which the robot maps the environment, navigates, and locates itself by merely ”looking” at this environment, without the need for additional hardware to be installed in the workplace. This is achieved by using sensor measurements (such as LIDAR, cameras, or other sensors) to update the map and estimate the robot’s pose at the same time [

2]. However, these methods face significant challenges in dynamic environments, mainly when the environment changes over time due to the movement of obstacles or people. Obstacles may appear, disappear, or move around, and the robot’s map needs to be updated continuously to reflect these changes. While localisation and local obstacle avoidance algorithms can handle small changes in the environment, the robot’s map can diverge from the present working area, reducing navigation performance [

3]. Conventional SLAM methods struggle to update the map accurately and efficiently over long periods of time due to the accumulation of errors in the robot’s pose estimates and in the map. This is because small errors in the robot’s pose estimation can accumulate over time, leading to significant inaccuracies in the map. Moreover, the robot needs to constantly re-localise itself, which can be challenging and time-consuming. Indeed, traditional SLAM algorithms are computationally intensive and require significant processing power, memory, and time [

4]. This can limit the robot’s ability to operate for extended periods, particularly in industrial environments where robots need to operate continuously.

Therefore, the long-term operation of autonomous mobile robots in dynamic industrial environments requires continuous map updating to ensure accurate navigation and obstacle avoidance and achieve good localisation performance [

5,

6]. Moreover, having an updated static map, coherent with the current environment, allows the robot to reduce its effort in re-planning over time to adjust its trajectory. Updating the map in real-time requires the robot to quickly detect and track changes in the environment, such as the movement of people, equipment, and obstacles. For this reason, and in order to consider long-term operations, the map updating algorithm has to be computationally light and use limited memory [

7].

In our paper [

1], we proposed a 2D LIDAR-Based map updating to detect possible changes over time in the environment and to accordingly update a previously built map with limiting memory usage and neglecting the presence of highly dynamic obstacles. The system was developed using the Robot Operating System framework [

8] using a 2D occupancy grid map representation. This map representation is commonly used in the industrial field due to its ability to facilitate path-planning [

9,

10]. In fact, companies such as AgileX RoboticsAgileX,

https://global.agilex.ai/ , AvidbotsRobotnik,

https://robotnik.eu/ , and BoschBosch,

https://www.bosch.com/, currently employ 2D occupancy grid maps in their assets, demonstrating the technology’s high level of industrial readiness. As such, it is important to continuously improve and strengthen the robustness of this technology for long-term use.

1.1. Paper Contribution

Although the proposed map updating [

1] provide promising result in map quality and localisation performance in a dynamic environment, the method relies heavily on accurate knowledge about the initial pose of the robot in a previously built map. That information might not be available with high accuracy, and due to changes in the map, it might be hard to estimate immediately. This could lead to bias in the estimated pose of the robot, which could result in overwriting free and occupied cells, effectively corrupting the occupancy grid and the resulting map. Indeed, as the robot moves through its environment, small errors in its position estimate can accumulate, resulting in a significant deviation from its true position over time. This issue is often referred to as drift and can be caused by a variety of factors, such as wheel slippage, sensor noise, and environmental changes.

To overcome this drawback and make the approach more robust, in this paper, we first introduce a fail-safe mechanism based on localisation performance to prevent erroneous map updating when the localisation error increases, and then we integrate a pose update algorithm that is activated when the robot effectively gets lost.

In our work, we propose a map updating algorithm in the presence of localisation error able to integrate localisation recovery procedures preventing degradation of the map in long-term operations. Extensive simulations have been conducted to validate the system in its components and as a whole. Finally, real-world experiments results are provided to highlight the performance of the approach in a real environment and to show its robustness in realistic uncertain conditions.

3. Problem Formulation

Consider a mobile robot , represented by with and be the robot position and orientation, is equipped with a 2D lidar and encoders and operates in a dynamic environment . To navigate autonomously, requires a map and a generic localisation algorithm that provides an estimate global robot pose , where and are the robot estimated position and orientation, such that the localisation error is smaller than a threshold to allow the robot completing its task. In the case of dynamic environments, the arrangement of the obstacles present in the environment changes in time, effectively creating new environments with that are similar to the precedent ones . What happens is that over time a static map no longer reflects the current configuration of leading to localisation errors hence degrading the operational performance of . One solution is to provide a map that is updated over time to reflect , and such that .

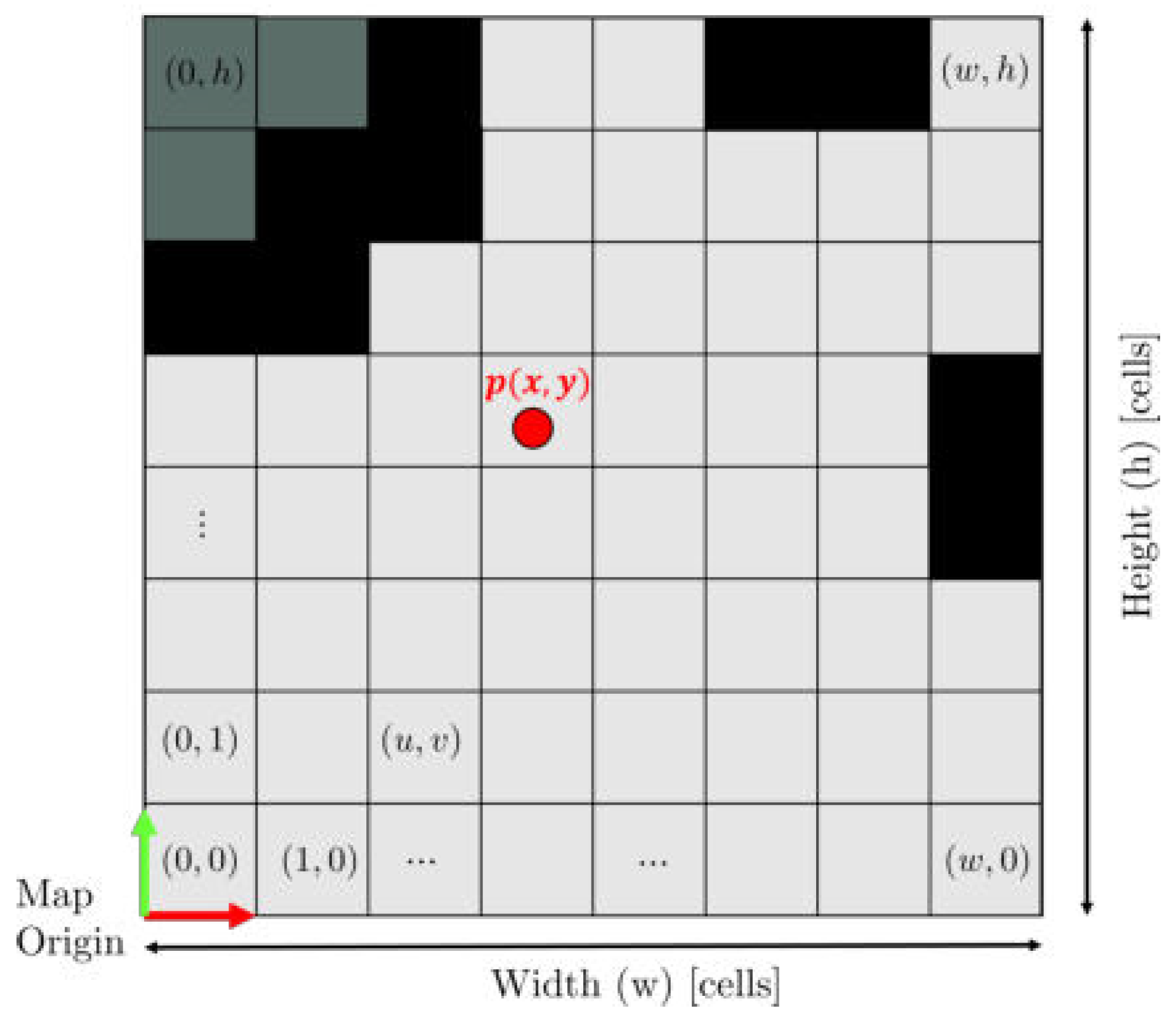

Occupancy Grid Map: In this paper, we consider the occupancy grids technique to represent and model the environment in which the robot operates. A 2D occupancy grid map is a grid that represents the space around the robot through cells

that contains information about the area associated with its position

in the space. Each cell in the grid represents the probability of the cell being occupied by an obstacle (probability equal to 1, black cells in

Figure 1), free (probability equal to 0, grey cells in

Figure 1), or unknown (otherwise, dark grey cells in

Figure 1).

Figure 1 shows the 2D occupancy grid map representation considered in this paper for a map of 8x8 cells. The map’s cells are identified through the grid coordinates

that define the actual resolution

r of the occupancy grid and the finite locations of obstacles. The origin of grid coordinates

is in the bottom-left corner of the grid, with the first location having an index of (0,0). Given the 2D occupancy grid map, the cell identified by a point

has the following grid coordinates:

There are several sensors that can be used to build 2D occupancy grid maps such as Lidars, cameras, sonar, infrared sensors, etc…, but in our work, we considered only 2D Lidars to generate a 2D point cloud. It is worth noting that our method is also suitable for any sensors able to provide this kind of information.

Lidar Point Cloud: Given a laser range measurement

with

n laser beams at time

k, it is possible to transform the data in a point cloud

, where

is the

i-th hit point at time step

k, i.e. the point in which the

i-th beam hit an obstacle. Moreover, given

and the estimated robot’s pose

, it is possible to obtain the coordinates of

in a fixed frame asto ease the notation, the time dependence

k is omitted:

where with

we represent the cartesian coordinates of the hit point

along the

i-th laser range measurement

. The angular offset of the first laser beam with respect to the orientation of the laser scanner is represented by

considering that the angular rate between adjacent beams is

. Finally, given

the set of cells passed through by the measurement is

where

is the cell associated to

.

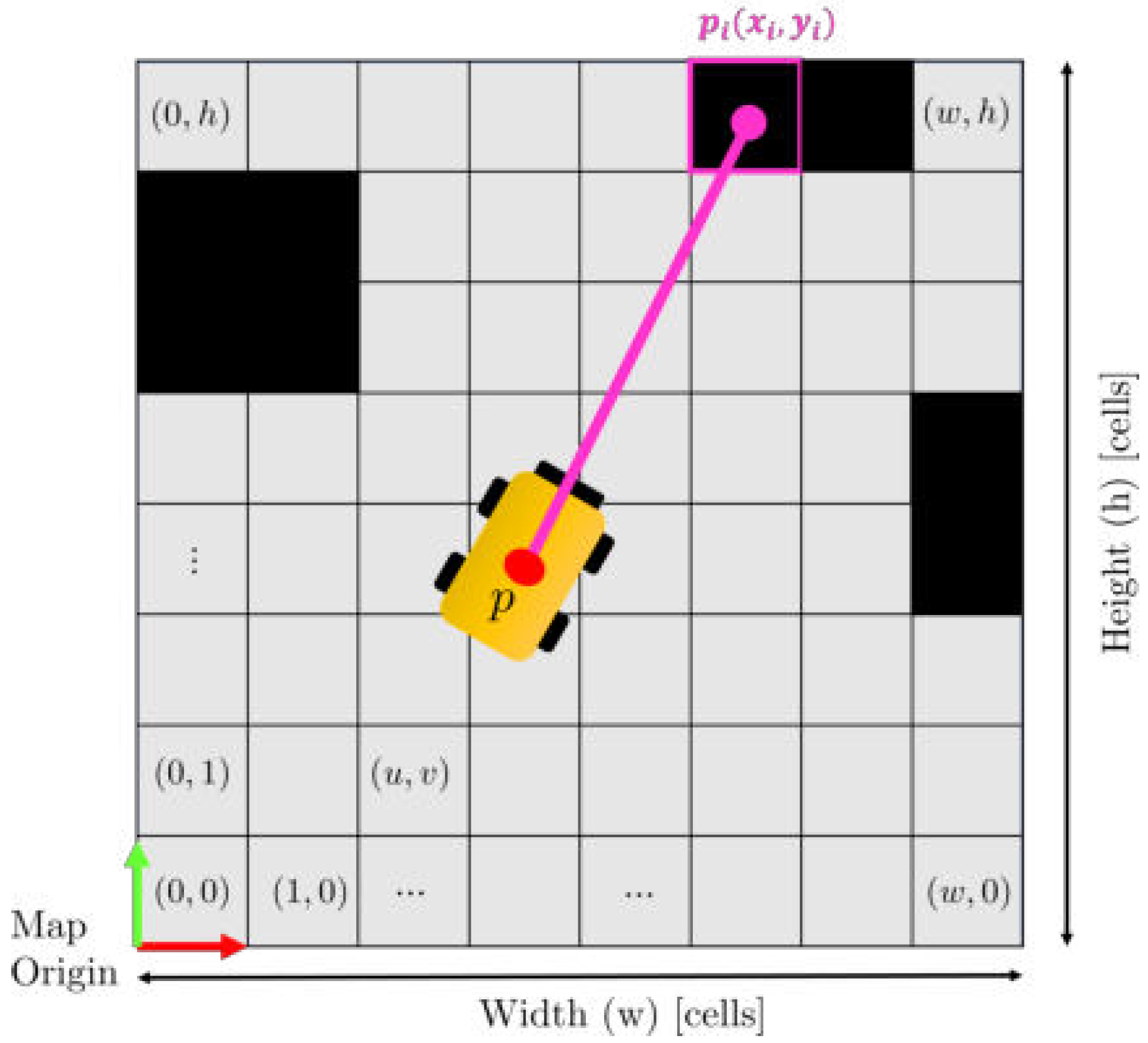

3.1. Ideal Scenario Vs Real Scenario

Given a mobile base

, equipped with a 2D lidar, operating in an environment represented by a 2D occupancy grid map, the ideal simplified scenario is depicted in

Figure 2: a laser beam hits an obstacle in a cell that is occupied, and it is identified as the only cell selected by the laser measurement thanks to an accurate knowledge of the robot pose.

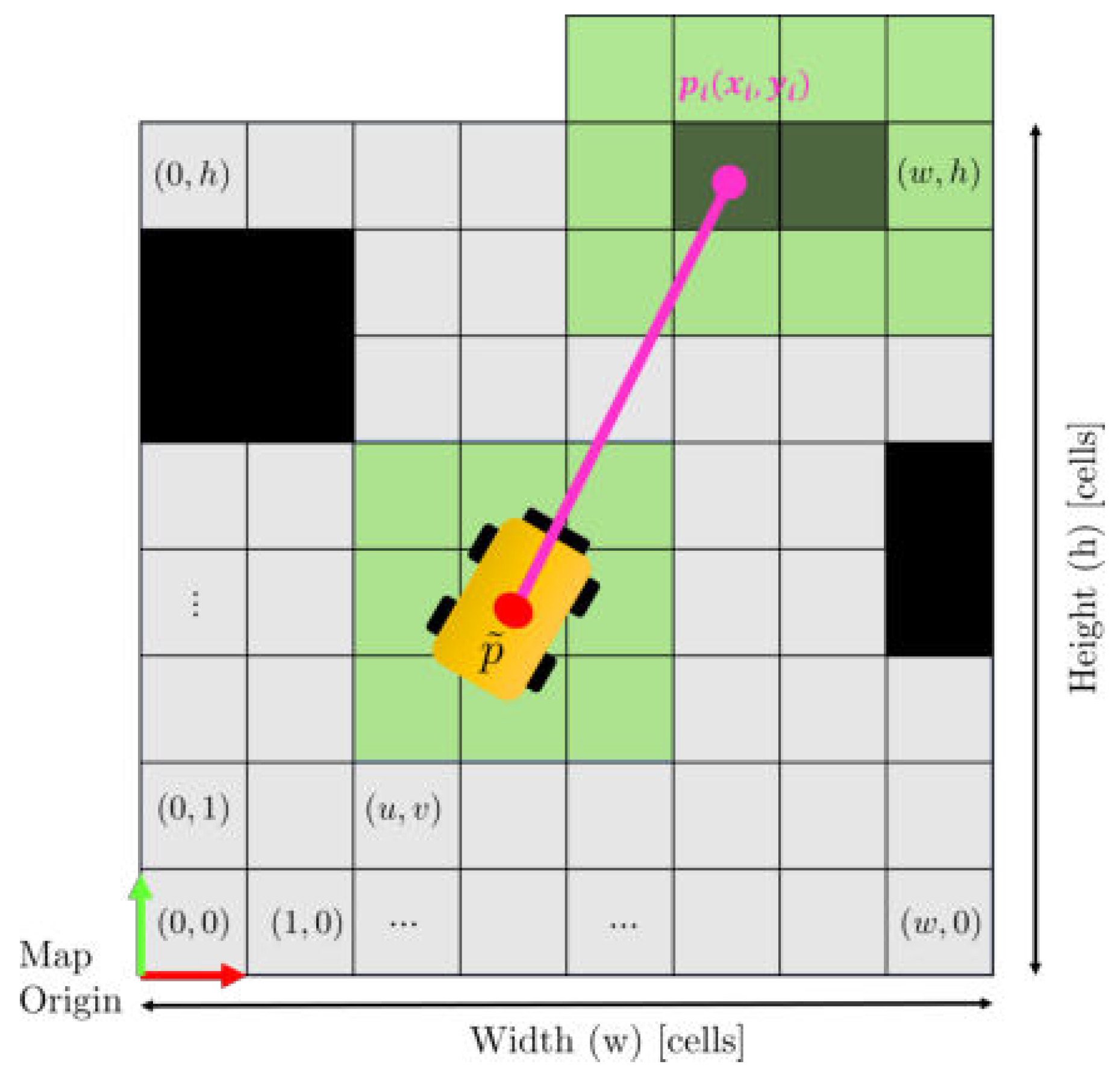

However, the real scenario presents a different situation, depicted in

Figure 3. Since there are localisation errors, the robot’s pose is affected by uncertainty, represented in the figure by the green cells around the robot. The localisation error and the addition of measurement errors and noises lead to the possibility that a laser beam may not identify the correct cell but another one in its neighborhood (green cells around

). For this reason, a direct check on the occupancy of the identified cell may lead to errors in the map update process. Hence, a procedure that is robust with respect to such localisation and measurement errors and noises must be developed.

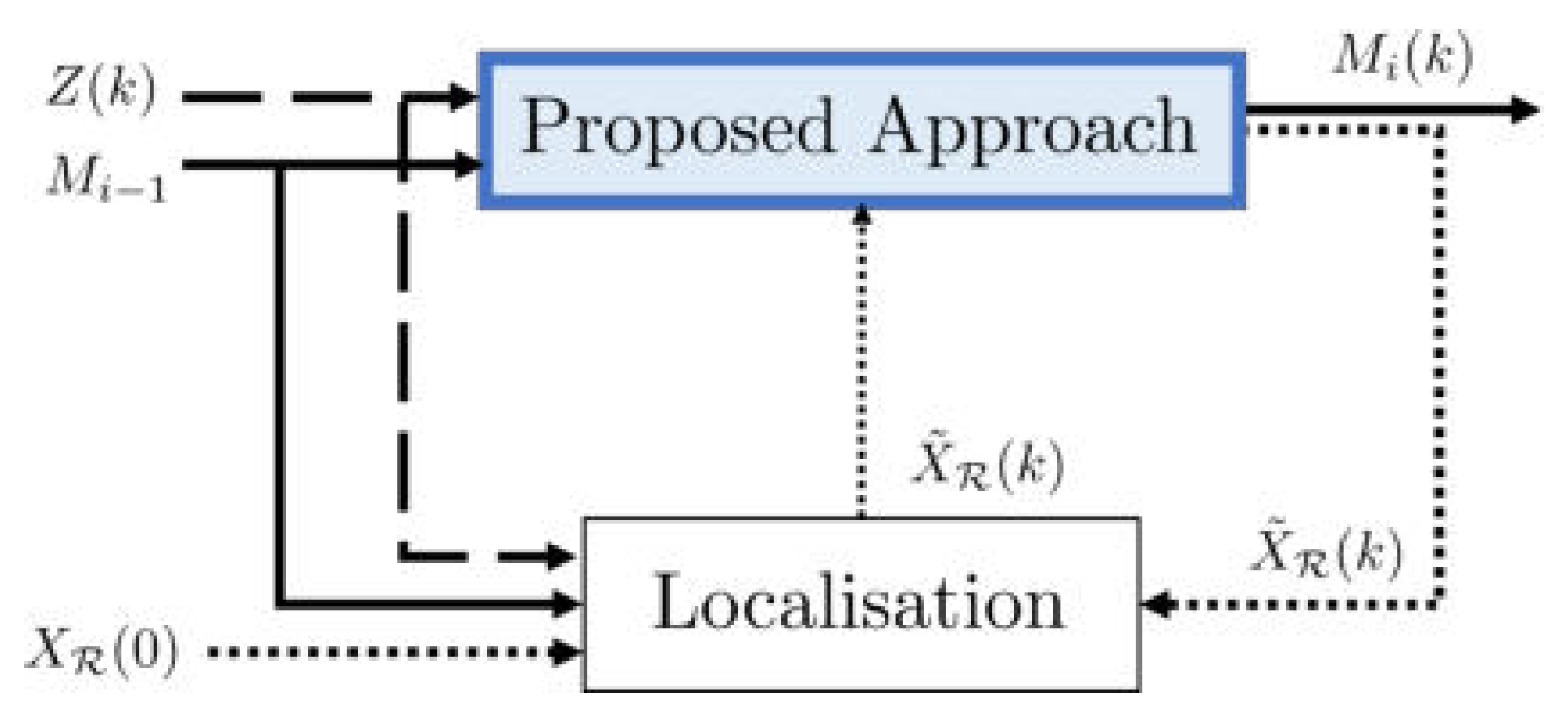

4. Method

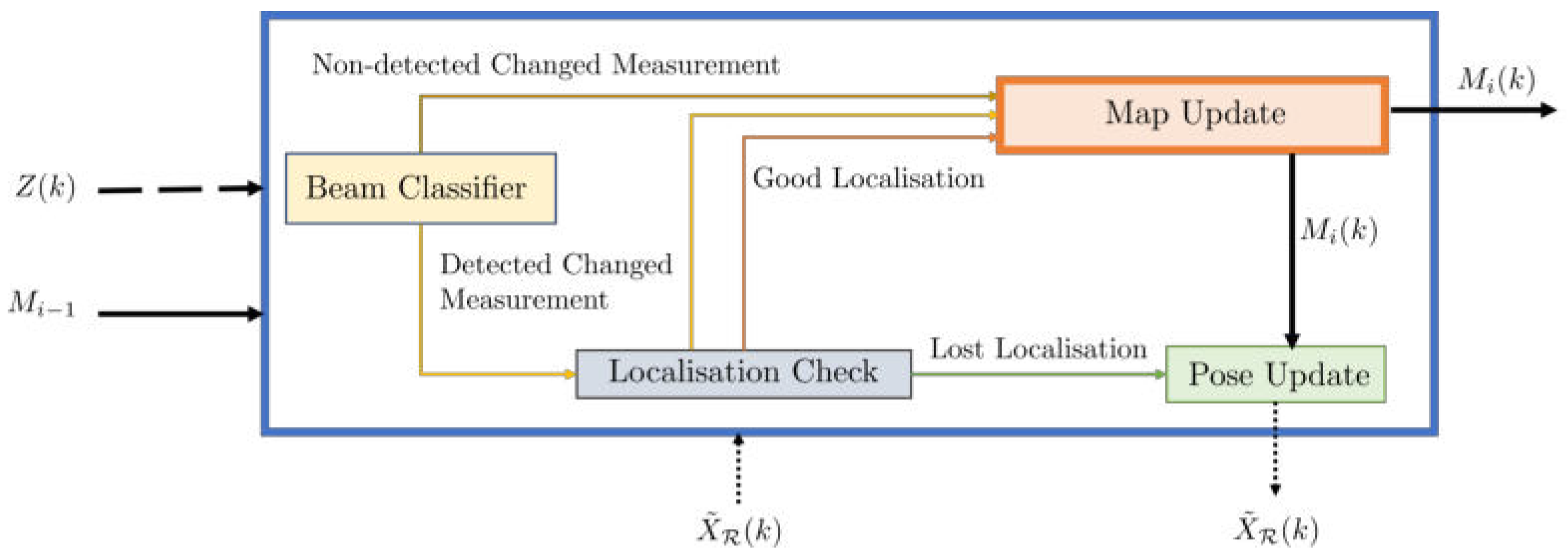

The proposed system architecture is represented in

Figure 4, where based on an initially provided occupancy grid map

, the robot pose

provided by any localisation algorithm, and the current laser measurements

, our approach computes a newly updated map

and a new robot pose

accordingly to the localisation error. Indeed, as shown in

Figure 5, only state of the cells that correspond to relevant changes in the environment are updated in case the localisation error is limited. Otherwise, the map updating process is suspended until the localisation algorithm recovers the right estimated pose. If the latter is not able to provide a new

and the drift accumulation is too big, a pose updating process is activated to estimate a new robot pose.

To evaluate the localisation error, we first classify with the

Beams Classifier the laser measurement as either “detected change measurement” or “non-detected change measurement”, this characterization is computed checking for discrepancy of the measurements with the initial map

as described in

Section 4.1. Then, the detected change measurements are evaluated by the

Localisation Check as described in

Section 4.2 to assess the localisation error. If the error is small enough, the map updating process can continue. Otherwise, the system stores the last updated map

and the correct

and continues to monitor the localisation error, pausing the map update process. If the error continues to increase, the pose updating process is activated to provide a new

based on

,

, and

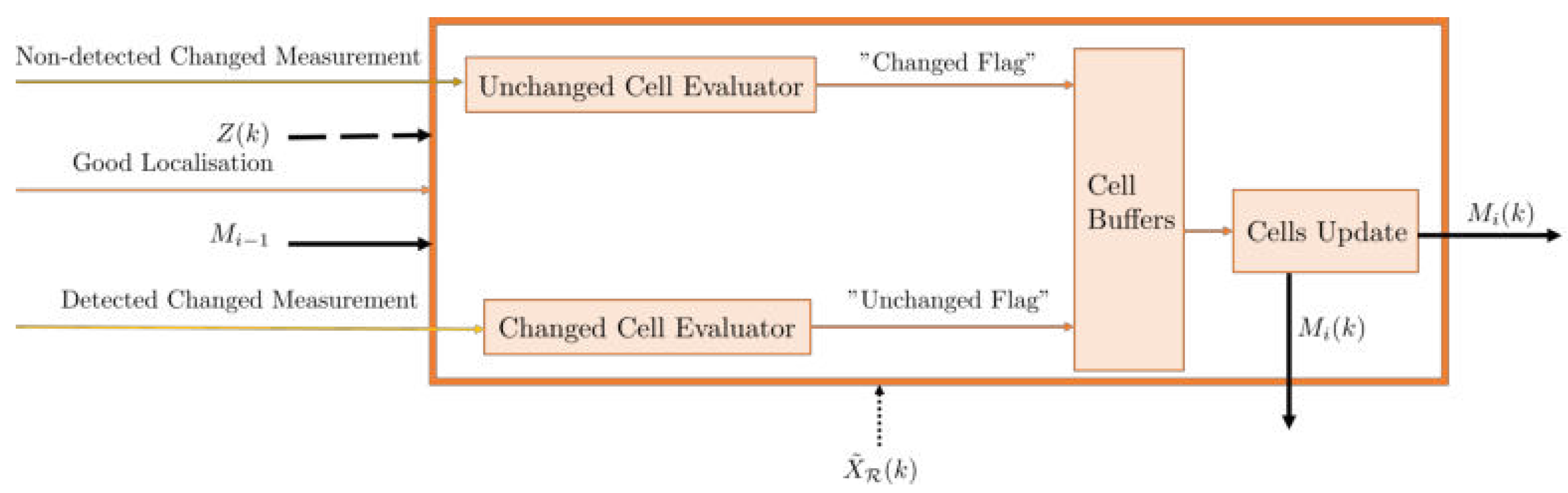

. The map updating process, depicted in

Figure 6, is responsible of the detection of changes in static obstacles (i.e., removal or addition) while it neglects the possible presence of dynamic obstacles in the environment that can lead to a corrupted map, e.g. people or other robots. If the Localisation Check is successful, it means that the localisation error is limited and hence the outputs of the Beam Classifier together with

and

are used by the

Changed Cells Evaluator and the

Unchanged Cells Evaluator to evaluate possible changes in the cells associated with current measurements

with respect to the initial map

. Confirmation procedures are necessary to address the localisation errors, even if limited, and possible noises that can affect the information retrieved by measurements. To simplify detection, all unknown cells in

are considered occupied during the measurement process to reduce to two the possible type of cell’s states. A rolling buffer

of a fixed size

is created for each cell

, and to record the outcomes of the evaluator blocks at different time instants. The

Changed Cells Evaluator fills

with a "changed" flag only if the associated measurement

is classified as "detected" and the change is confirmed as described in

Section 4.3. The

Unchanged Cells Evaluator fills

with an "unchanged" flag only if the associated measurement

is classified as "non-detected" and the evaluation is confirmed as described in

Section 4.4. In the end, the

Cell Update module changed between free and occupied the state of evaluated cells

only if a sufficiently large amount of "changed" flags are in the associated buffer

, as described in

Section 4.5. This module is the one responsible for the detection of changes in the environment while neglecting dynamic obstacles.

4.1. Beam Classifier

To detect a change in the environment, the outcomes of measurement

must be compared to the ones the robot would obtain if it were in an environment fully corresponding to what memorized in map

. The correct hit point as in (

2) is hence compared to the one computed with

that is the expected value for the

i-th range measurement

obtained from the initial map

. A ray casting approach, [

28], is hence used to compute the corresponding expected hit point

:

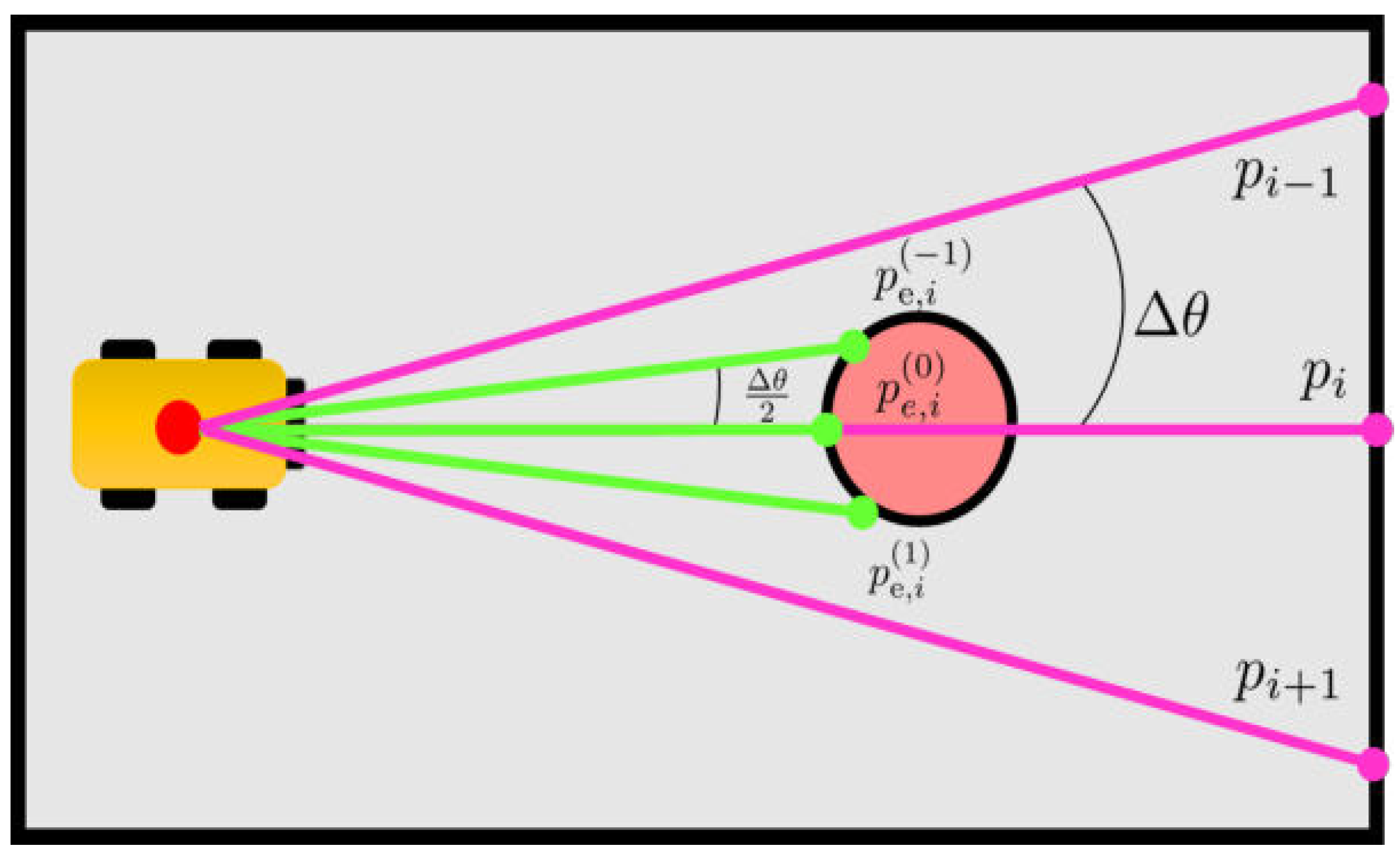

To detect a change, we do not directly compare the measured hit point with the expected hit one . Indeed, we use a 1-to-N comparison strategy with a set of expected hit points consisting of hit points along virtual neighbouring range measurements.

Note that (

3) computes the expected hit point

as the sum of an offset

and a point with polar coordinates

, the set

is generated by adding different perturbations

v to the angular second point. More in detail, the set

of

points is generated by adding a perturbation

v to the angular components of

in (

3):

where

l is integer and

, while

and

are design parameters. The distance

is defined, in an analogous way as

, as the measurement in

along the virtual laser ray of amplitude

with respect to the

i-th real ray. Note that,

v is chosen so that perturbations

, and hence between the

-th and the

-th laser rays. An example of

,

and hence,

is reported in

Figure 7).

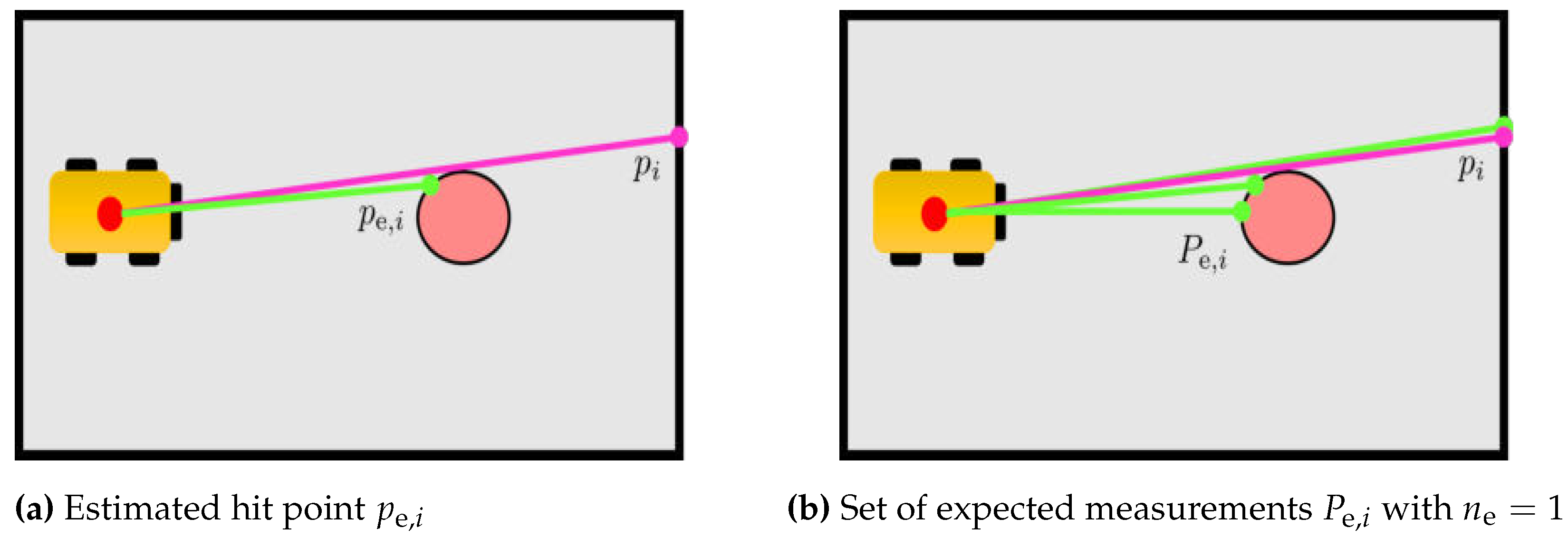

For the 1-to-N comparison, each point

is compared to the entire set

by computing the minimum Euclidean distance between

and the

N points in

. Finally, a change is detected only if the minimum distance between

and the expected points in

is greater than a threshold

:

As described in detail in

Appendix A.1, the threshold can depend linearly on the distance

in order to take into account errors coming from localisation and measurement noises.

The motivation for the 1-to-N comparison is represented in

Figure 8: the removal of an obstacle (in red) creates a change in a portion of the map, and a false change detection associated with the hit point computation may occur.

Figure 8 shows the hit point

identifying a cell of the new map that has not changed compared to the previous map

. On the other hand, small localisation errors may lead to an associated

identifying an occupied cell belonging to the obstacle in

. A direct comparison between

and

creates an incorrect change detection. This does not happen in

Figure 8, where thanks to the 1-to-N comparison with the set

the presence of a

close to

prevent the incorrect change detection.

4.2. Localisation Check

Changes in the map may have two drawbacks, first, they may prevent the robot from correctly localising itself in the changed map, second, as a consequence, large localisation errors may lead to an incorrect update of the map. Hence, monitoring the amount of localisation error is fundamental for a correct map update. For this purpose, the proposed dedicated Localisation Check module takes in input all the "detected change" measurements from the Beam Classifier and provides a quantification of the current localisation error. This module is important to make more robust the entire system by preventing corrupted maps update and by triggering a pose updating algorithm to decrease the localisation error. The idea is to evaluate the amount of localisation error and until this is below a given threshold the map can be updated neglecting the error. On the other hand, if it is too large, the robot is considered to be definitely lost and a pose update is requested to a dedicated algorithm. On the other hand, in between those two extreme cases and thresholds, it is possible to have a sufficiently large localisation error that would provide a corrupted map update but not so large to consider the robot definitely lost. In this case, the map update is interrupted and restarted once the localisation error decreases below the corresponding threshold.

More formally, given the number

n of hit points in

, derived from laser measurement

, and the number

of the "detected change" measurements, the map is updated as described next if

while the robot is considered lost but with the possibility of recovering the localisation and the map is not updated, if the following holds:

Finally, the localisation system is considered not able to recover the pose and this must be updated by a dedicated algorithm if

where

is the minimum fraction of acceptable changed measurements with respect to the number of laser beams that allows a good localisation performance, while

is the maximum fraction of acceptable changed measurements with respect to the number of laser beams in addition to which the robot has definitively lost its localisation. The critical parameter to choose is

because it is a trade-off between the great change between the previous and current environments and the likelihood that you have actually lost your localisation. In this paper, the values

and

are considered based on the results of several experimental tests.

4.3. Changed Cells Evaluator

Let

represents the occupancy state of a cell

in the initial map

. Once a change is detected by the

Beam classifier along the

i-th range measurement

, it is necessary to evaluate whose states of cells in

must be modified, i.e. the cells passed through by

i-th laser ray. The

Changed cells evaluator is the module that determines which are the cells in

involved in the detected change. Once a change is confirmed in a cell, the module stores a “changed” flag in the buffer of each cell. The status of a cell will be changed based on how many “changed” flags are in its buffer at the end of the procedure, as described in

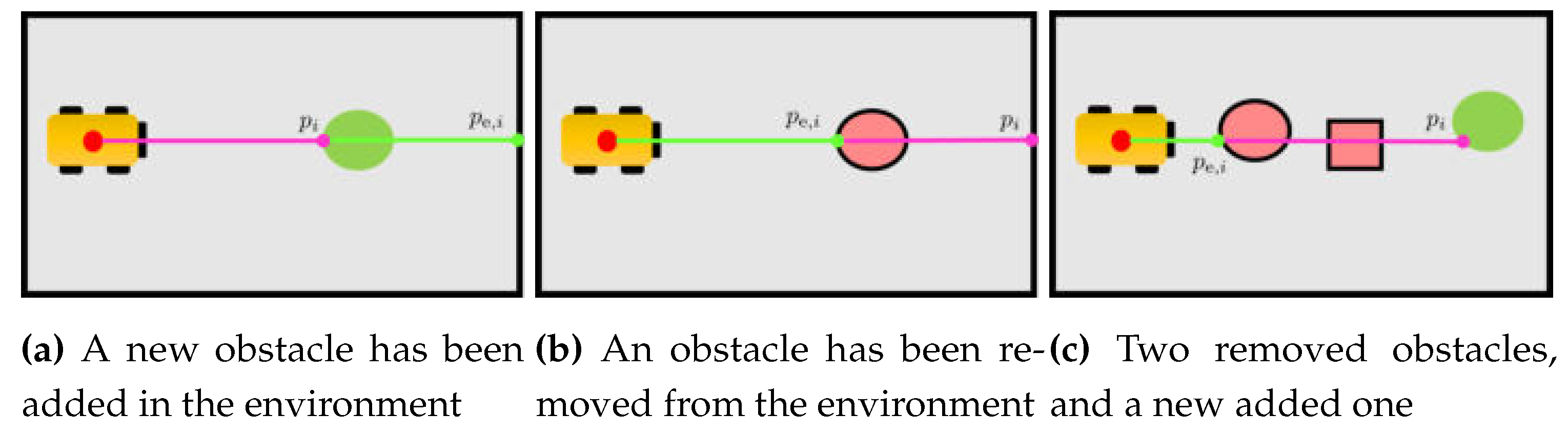

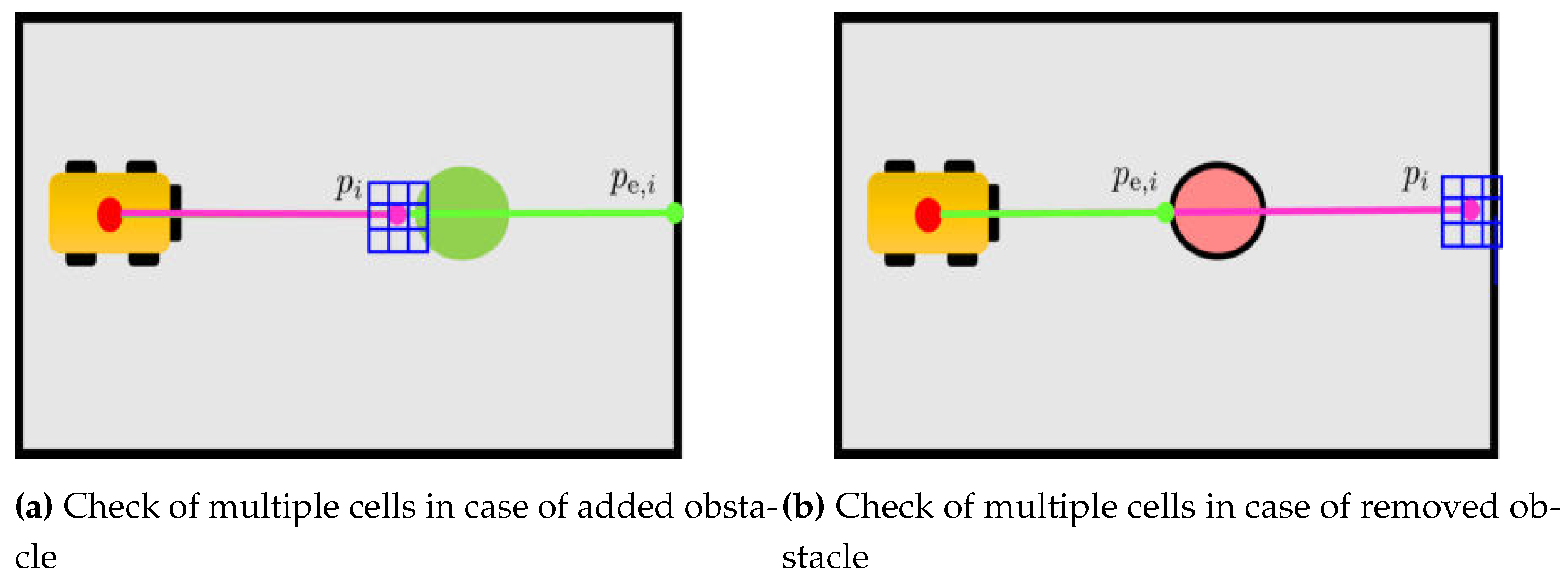

Section 4.5. The two possible events that cause a change in the environment are illustrated in

Figure 9: the appearance of a new obstacle (

Figure 9) and the removal of an existing one (

Figure 9). A combination of these two events may also occur (

Figure 9). The following paragraphs will describe how the cells in

, i.e. along the beam, are checked by the

Changed cells evaluator module in the occurrence of such events. A distinction between the cell

and all other cells along the ray, i.e.

, is necessary for the evaluation as described next.

4.3.1. Change detection of cells in

A first evaluation is conducted on the cells along the ray, except for

that corresponds to the hit point

. Indeed, all those cells should have a

free status, since they correspond to points of the laser beam that are not hit points. To increase algorithm robustness, we choose to not modify the status of the cells corresponding to the laser beam final chunk, since such cells may correspond to points that actually lay on obstacles due to localisation errors. For this reason, only cells between the robot’s estimated position

and a point

that lay on the laser beam (i.e. on the segment with extremes

and

) are evaluated, see

Figure 10. More formally, given a fixed parameter

and

, we analyse only the cells

that satisfy:

where

is the point in the centre of the cell

. If a cell

satisfies (

9) with

it means that an obstacle has been removed with respect to

and a “changed” flag is hence added to the cell’s buffer to report this change.

4.3.2. Change detection of

The status of

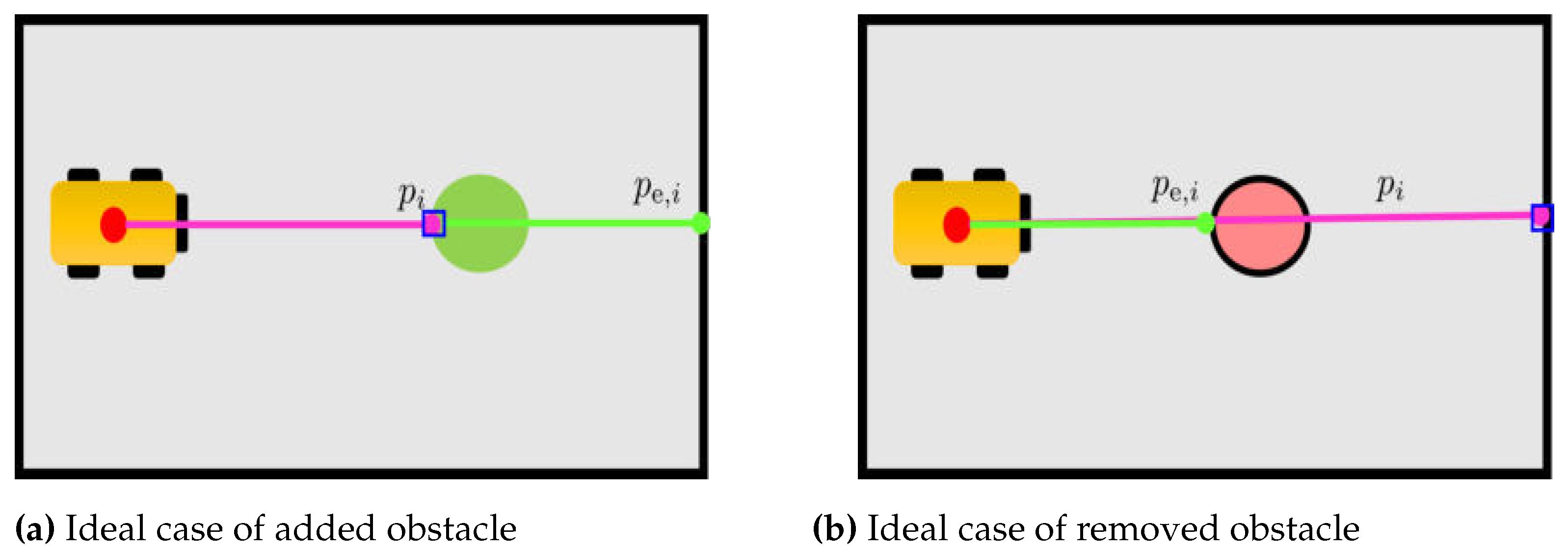

must also be evaluated. However, in this case, the events of a newly added obstacle and of a removed one must be distinguished. Indeed, in an ideal situation, when a new obstacle is added the hit point

lies on the new obstacle, and hence its associated cell

has a

free status in

, and hence it should be changed to

occupied, see

Figure 11. On the other hand, when an obstacle is removed, the hit point

lies on another obstacle or part of the environment hence its associated cell

has an

occupied status in

, and hence it should not be changed, see

Figure 11.

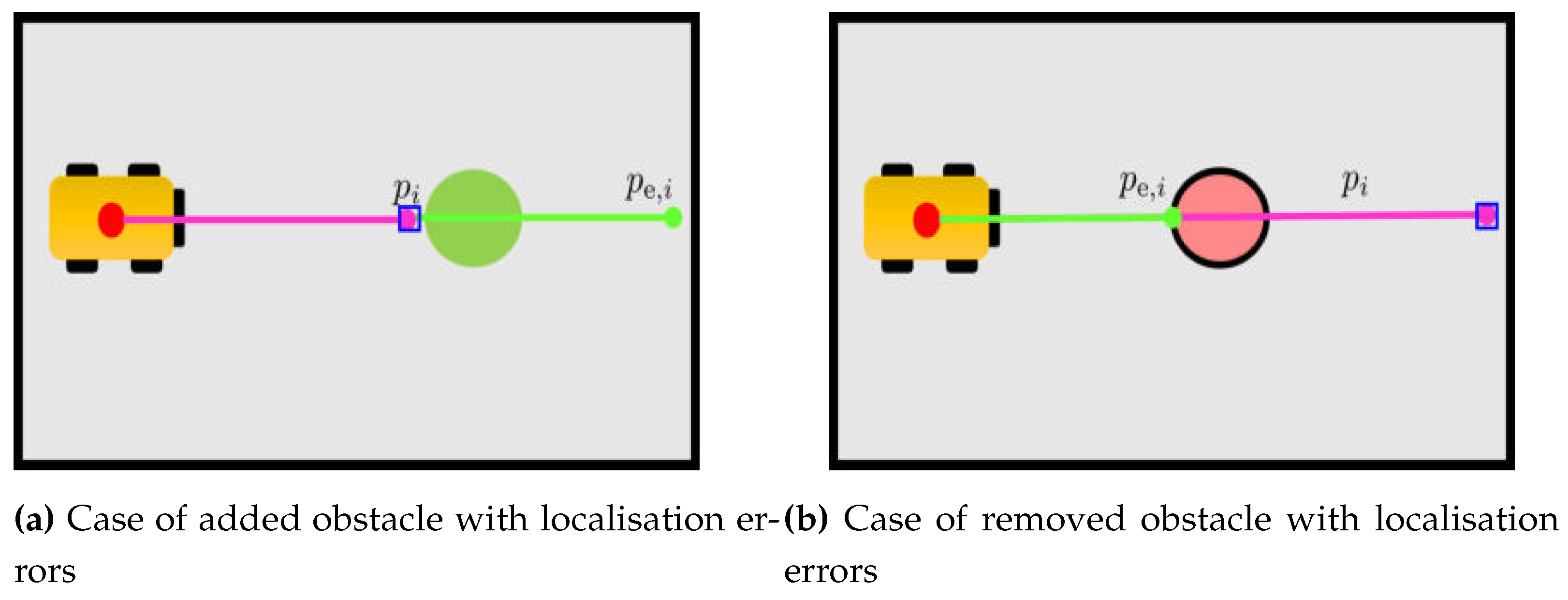

Unfortunately, in case of localisation errors it may occur that the (not ideally computed) cell

corresponding to the measured hit point may be

free in both cases of added or removed obstacles, see

Figure 12. Hence, in case of localisation errors, the distinction among added or removed obstacles is not possible with a direct evaluation of single-cell occupancy. On the other hand, in case of added obstacle also all cells sufficiently close to

are

free in

while in case of a removed one some of those cells would be

occupied and hence a distinction between the two cases is possible if closed cells status are also checked, see

Figure 13.

To conclude, a new "changed" flag is effectively added to the buffer of

if the added obstacle is detected, i.e. the state of neighbouring cells is

free, more formally if:

where

is a point in the space and

is a function of the measured range as

in (

5).

4.4. Unchanged Cells Evaluator

In case the Beam Classifier does not detect changes along the i-th range measurement , none of the maps cells associated with has undergone any changes with respect to the initial map . To take into account localisation errors, a similar procedure to the one described in the previous section can be applied, and only cells between the robot’s estimated position and the point that lay on the laser beam are evaluated. In particular, for such cells, the Unchanged Cells Evaluator add an “unchanged” flag to their buffer. Also for cell localisation errors must be taken into account. Indeed, since the Beams Classifier did not detect any change, the status of cell should be occupied in map but localisation and noise errors may lead to a measured hit point with associated free cell and nearby occupied cells. Therefore, if , an “unchanged” flag is added to the buffers of all occupied cells adjacent to . Otherwise, an “unchanged” flag is added only to the buffer of .

4.5. Cells Update

Once each ray has been processed by the Beam classifier and cells along the ray have been evaluated by the two previously described modules, the Cells Updating module is responsible for updating/changing the state of each cell in for all measurements with an update rate that depends on both linear and angular speed of the robot. For each cell , this update is based on the occurrence of “changed” flags in the corresponding buffer . Let denote the size of the rolling buffers, and let count the occurrences of the “changed” flag in . A cell’s occupancy state is changed only if exceeds a given threshold, which balances the likelihood of false positives with the speed of map updates and change detection. This threshold, and itself, are critical parameters; the threshold is used to make the cell updated status more robust with respect to dynamic events in the environment while represents the memory of past measurements. Indeed, with a good balance in the choice of such parameters, using this approach, highly dynamic obstacles are initially detected as changes in measurements but discarded during cell update if there is an insufficient number of “changed” flags in the corresponding buffer, and hence are not considered in the new map .

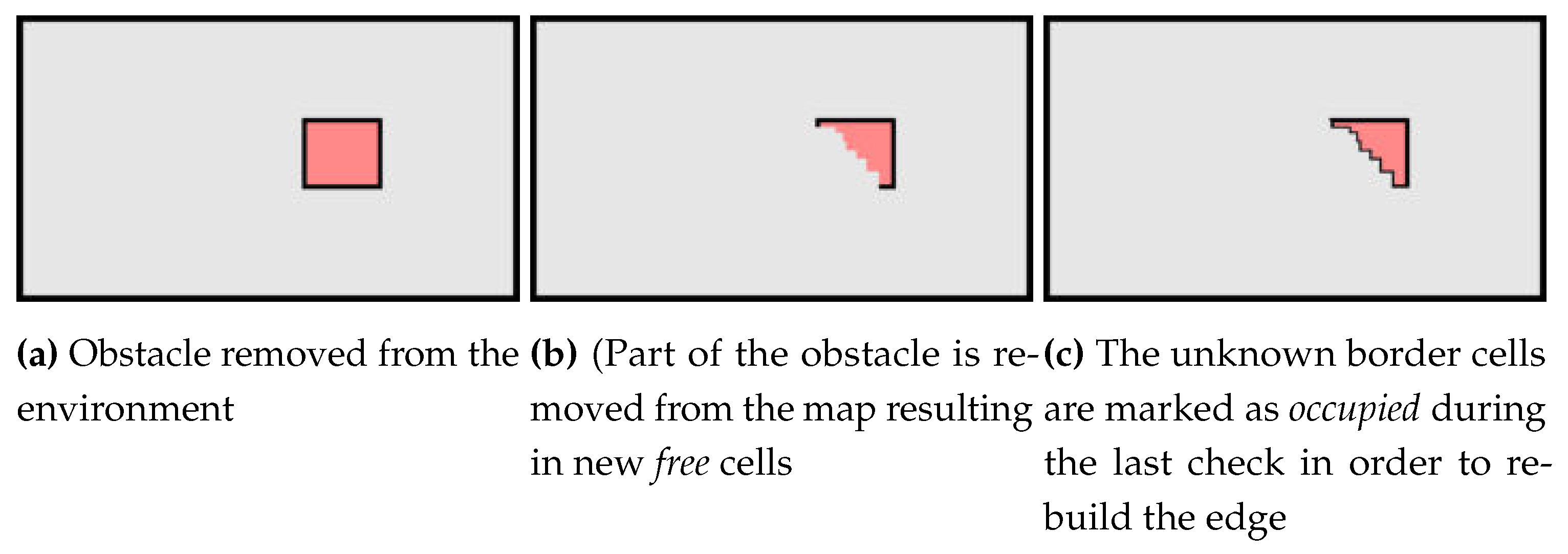

It is worth noting, that a change of cell state from

occupied to

free can lead to a partial removal of an obstacle. Referring to

Figure 14, cells corresponding to the border of an obstacle can hence be set as

free leading to an updated map

with obstacles without borders characterized by measurable cells with

unknown state, see

Figure 14 where black cells are

occupied while red ones are

unknown. To avoid this, the state of those cells must be set to

occupied, leading to the creation of the new border as in

Figure 14. At this point, the cell state update procedure is finished, and the new map

can be created. To do so, it is important to recall that cells in the initial map

with “unknown” state have been considered, and treated, as

occupied cells in the

Beam Classifier module. However, if their state has not been changed to

occupied to recreate an obstacle border, they are represented in

still as “unknown” cells.

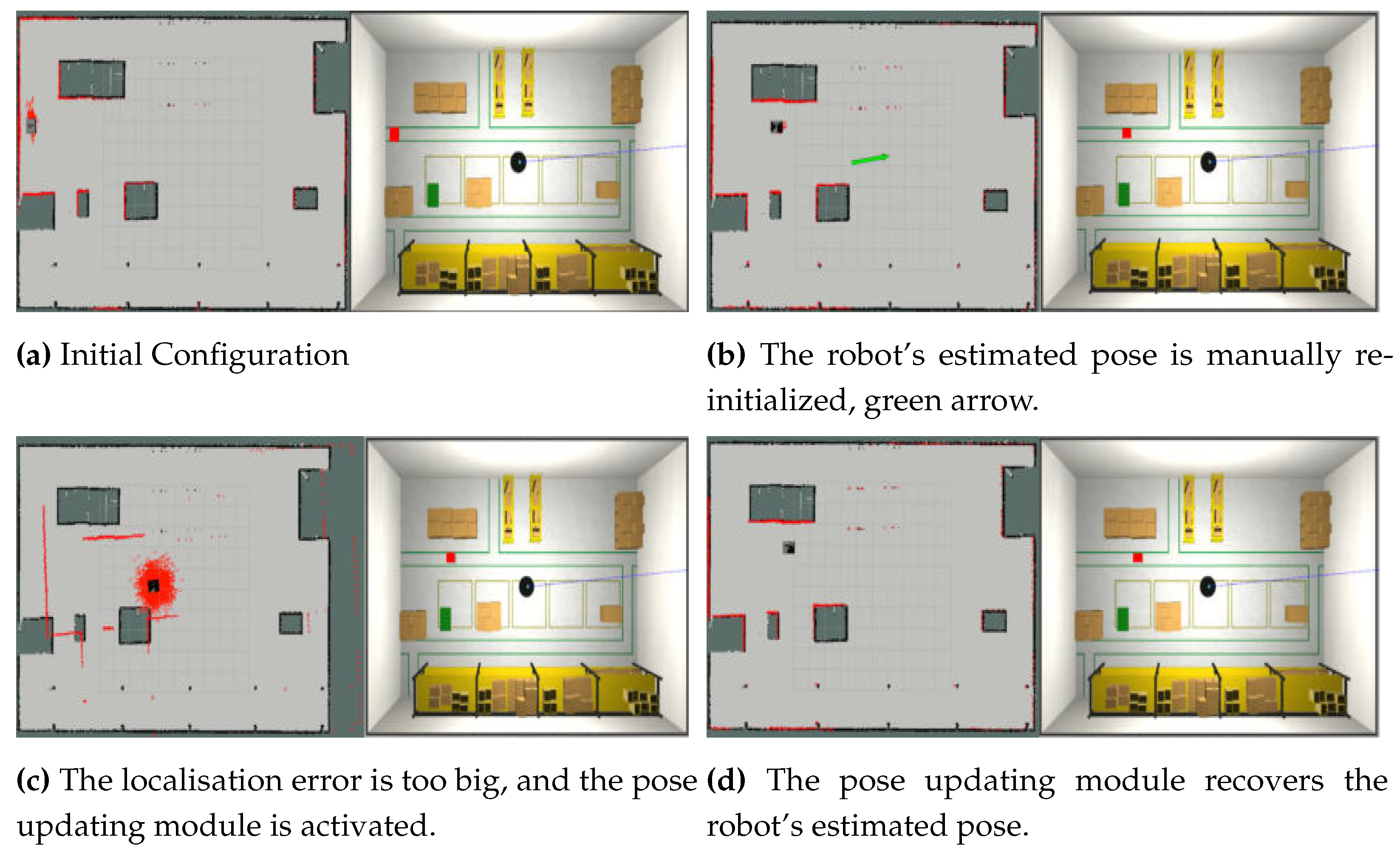

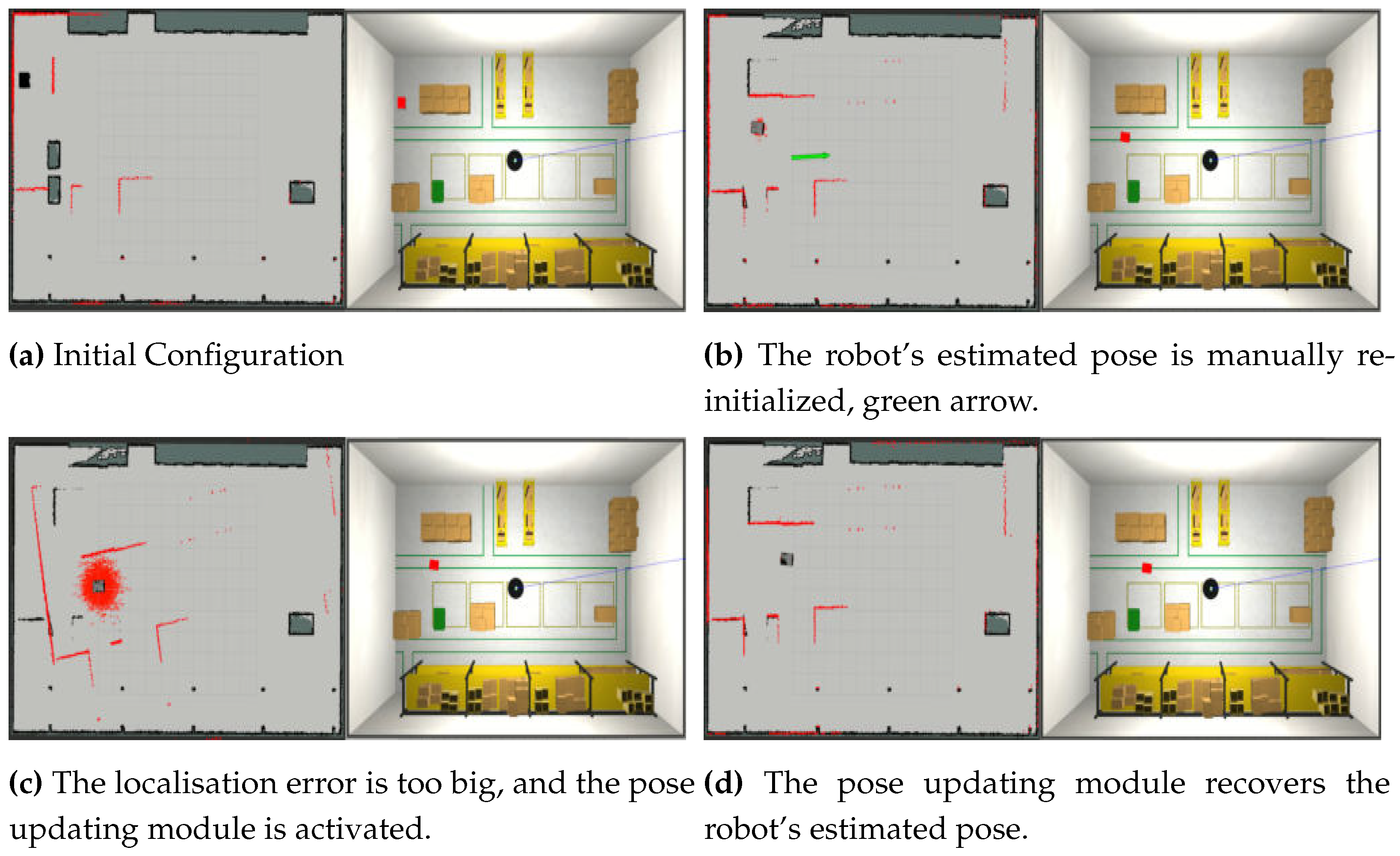

4.6. Pose Updating

The Pose Updating module is activated when the Localisation Check one detects that the robot is lost with no possibility to recover its pose. To avoid possible collisions, when the localisation error is too high, the robot is stopped until the end of the pose updating process.

The module takes as input: the last estimated pose of the localisation algorithm

, the last updated map

, and the current laser measurement

. To retrieve the robot pose, the idea is to compare what the robot currently sees, i.e. the laser measurements

, with what it would see if it were in the last correctly estimated pose

, i.e. the expected point cloud

computed from the last updated map

from the estimated pose. Practically, the

Pose updating module estimates the rigid transformation

between the point cloud

, obtained from

, and the point cloud

, computed from map

in the last correct pose

. To compute

, we used the same approach as in

Section 4.1, while to estimate

it is possible to apply any methods that can be used to find a rigid transformation between two point clouds, e.g. Iterative Closest Point [

29], or Coherent Point Drift [

30].

6. Discussion

The aim of this work was to extend our previous work in order to update the map robustly with respect to big localisation and measurement errors. Although our previous method gave promising results in terms of map quality and localisation improvement, in Sec

Section 5.3.1 we presented the big limitation of that approach: without a perfect localisation system, the map updating process provides a corrupted map. We have therefore developed a safety mechanism to pause the map update in case of big localisation errors. This mechanism is related to the number of detected changes measurements in the current environment compared to the previous map, as shown in

Section 5.3.1. As numerical validation confirmed, the mechanism was sufficient to prove both the map quality and localisation improvement. It is worth noting that, to the authors’ best knowledge, as in all other available approaches, in case of substantial changes in the environment the proposed system would detect numerous detected change measurements and would not update the map even in the absence of localisation errors. The only solution, in this case, would be to recompute the map from scratch.

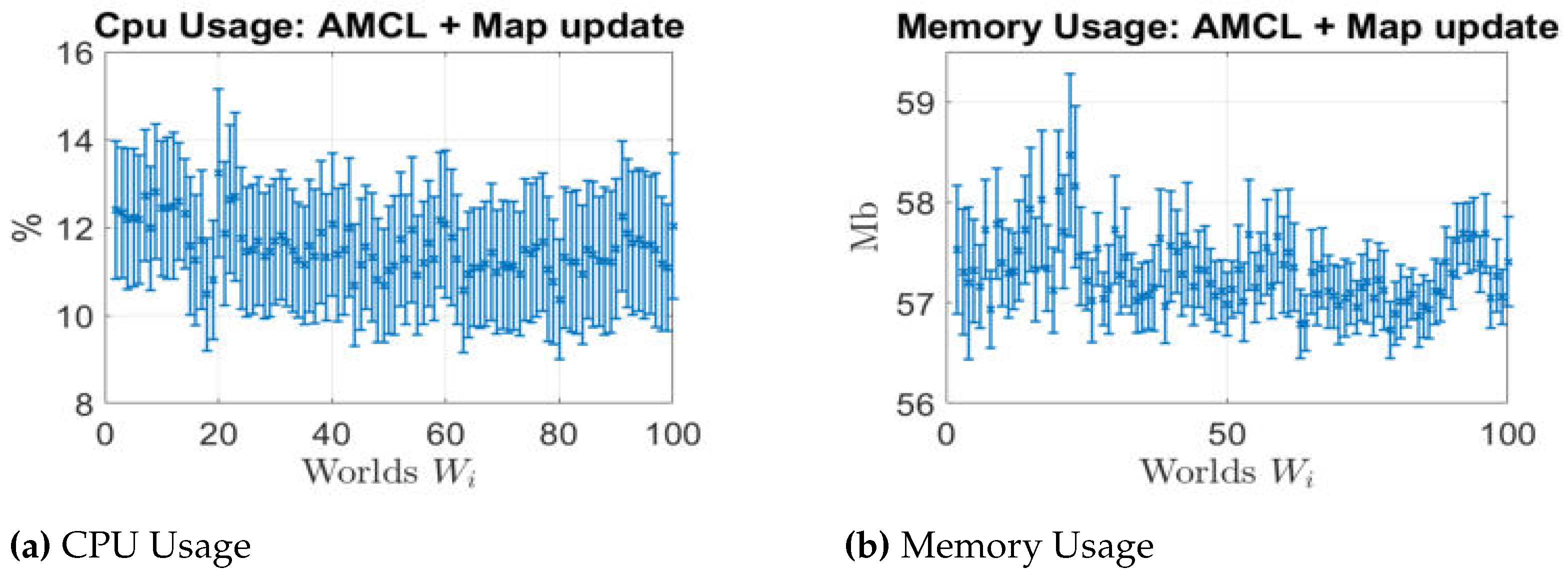

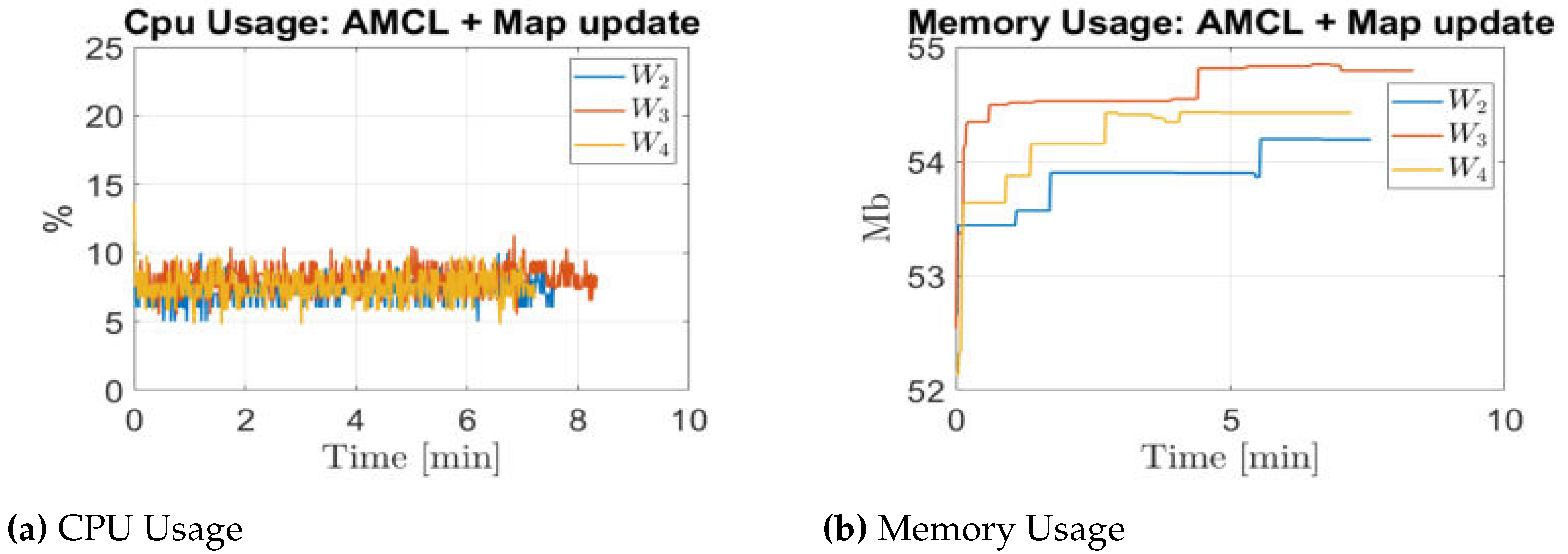

From the hardware resource consumption point of view, the proposed algorithm does not require high computation capabilities. Indeed, differently from other approaches such as those based on graphs, the resource consumption does not depend on the robot’s travelled path but only on the laser scan measurements processing. In this case, the CPU usage can be further significantly reduced by simply discarding some range measurements from the processed laser scan. Moreover, the memory usage depends only on the number of changed cells and the size of the buffers and not on the environment dimension. Concluding, as both the numerical validation and real-data experiments confirmed, the proposed memory-limited solution is suitable for life-long operation scenarios.

So far, we used only the lidar measurements to update the map, since they are the sensors more common to build a 2D occupancy grid map. As future work, we plan to extend our system to manage also 3D sensors in order to update the 3D occupancy grid map. Moreover, the validation of the localisation performance in a real environment could be improved with an external tracking system as a benchmark.

Figure 1.

2D Occupancy Grid Map representation: The origin of grid coordinates is in the bottom-left corner, with the first location having an index of (0,0).

Figure 1.

2D Occupancy Grid Map representation: The origin of grid coordinates is in the bottom-left corner, with the first location having an index of (0,0).

Figure 2.

Ideal case: the laser beam hits an obstacle in . The point identifies the right occupied cell in the map thanks to a correct estimation of the robot position p.

Figure 2.

Ideal case: the laser beam hits an obstacle in . The point identifies the right occupied cell in the map thanks to a correct estimation of the robot position p.

Figure 3.

Real case: due to localisation errors on the robot pose and measurement errors or noises, the laser beams can be prevented to identify the right cell associated with the hit obstacle but another neighbouring cell.

Figure 3.

Real case: due to localisation errors on the robot pose and measurement errors or noises, the laser beams can be prevented to identify the right cell associated with the hit obstacle but another neighbouring cell.

Figure 4.

System Overview: our proposed approach takes as inputs an initial occupancy grid map , the robot pose provided by a localisation algorithm, and the current laser measurements to computes a newly updated map and a new robot pose accordingly to the localisation error.

Figure 4.

System Overview: our proposed approach takes as inputs an initial occupancy grid map , the robot pose provided by a localisation algorithm, and the current laser measurements to computes a newly updated map and a new robot pose accordingly to the localisation error.

Figure 5.

Proposed Approach Overview: First, the laser measurements are classified with the Beams Classifier as either "detected change measurement" or "non-detected change measurement" based on their discrepancy with respect to the initial map . Then, the detected change measurements are evaluated by the Localisation Check to assess the localisation error. If the error is small enough, the map updating process is activated. Otherwise, the system continues to monitor the localisation error, pausing the map update process until the error goes below a given threshold. On the contrary, if the error continues to increase, the pose updating process is enabled to provide a new based on the last , , and .

Figure 5.

Proposed Approach Overview: First, the laser measurements are classified with the Beams Classifier as either "detected change measurement" or "non-detected change measurement" based on their discrepancy with respect to the initial map . Then, the detected change measurements are evaluated by the Localisation Check to assess the localisation error. If the error is small enough, the map updating process is activated. Otherwise, the system continues to monitor the localisation error, pausing the map update process until the error goes below a given threshold. On the contrary, if the error continues to increase, the pose updating process is enabled to provide a new based on the last , , and .

Figure 6.

Overview of Map Updating: Based on the Beams Classifier categorization, the Changed Cells Evaluator and the Unchanged Cells Evaluator fill a rolling buffer of each cell with ”changed” and ”unchanged” flags. Finally, the cells’ status is updated if the number of ”changed” flags in the buffer exceeds a predefined threshold.

Figure 6.

Overview of Map Updating: Based on the Beams Classifier categorization, the Changed Cells Evaluator and the Unchanged Cells Evaluator fill a rolling buffer of each cell with ”changed” and ”unchanged” flags. Finally, the cells’ status is updated if the number of ”changed” flags in the buffer exceeds a predefined threshold.

Figure 7.

Example of (in green) with , , and associated to the measured hit point (pink dot). v is chosen so that perturbations , and hence, between and . In this map, the red obstacle has been removed with respect to .

Figure 7.

Example of (in green) with , , and associated to the measured hit point (pink dot). v is chosen so that perturbations , and hence, between and . In this map, the red obstacle has been removed with respect to .

Figure 8.

Example of a measured hit point (pink dot) and differences between single hit point and set of expected measurements (in green) in presence of an obstacle removed from previous map .

Figure 8.

Example of a measured hit point (pink dot) and differences between single hit point and set of expected measurements (in green) in presence of an obstacle removed from previous map .

Figure 9.

Examples of ”detected change” measurements caused by an environmental change, such as the addition or removal of obstacles. Pink lines represent laser beams, while pink dots represent the measured hit point. The expected hit points computed on are highlighted in green.

Figure 9.

Examples of ”detected change” measurements caused by an environmental change, such as the addition or removal of obstacles. Pink lines represent laser beams, while pink dots represent the measured hit point. The expected hit points computed on are highlighted in green.

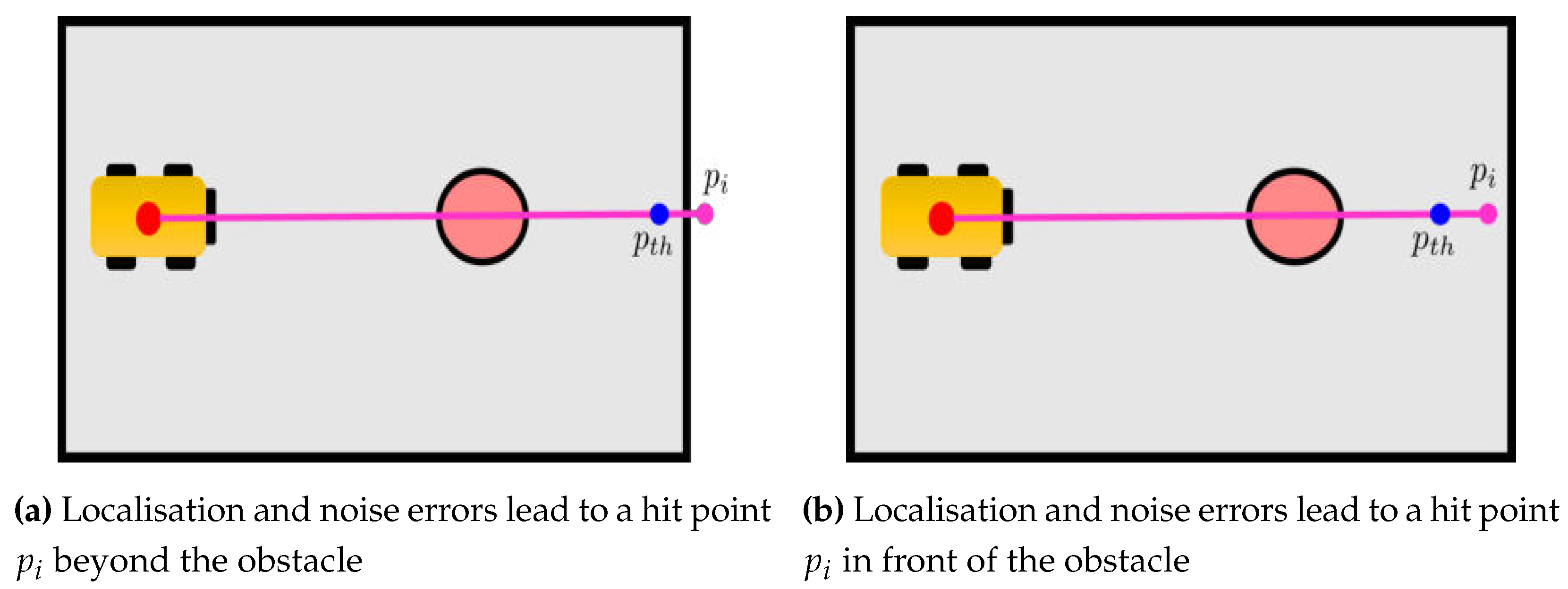

Figure 10.

Example of localisation and noise errors leading to incorrect detection of hit point (in pink), for this reason only cells up to (blue) are examined for change detection.

Figure 10.

Example of localisation and noise errors leading to incorrect detection of hit point (in pink), for this reason only cells up to (blue) are examined for change detection.

Figure 11.

Ideal case where no localisation error occurs: by checking the current status of the cell associated to the hit point we may distinguish between (a) added obstacle if in , (b) removed obstacle if in

Figure 11.

Ideal case where no localisation error occurs: by checking the current status of the cell associated to the hit point we may distinguish between (a) added obstacle if in , (b) removed obstacle if in

Figure 12.

Case in which localisation error may occur: by checking the current status of the cell associated to the hit point we may not distinguish between (a) added obstacle with in , (b) removed obstacle with in

Figure 12.

Case in which localisation error may occur: by checking the current status of the cell associated to the hit point we may not distinguish between (a) added obstacle with in , (b) removed obstacle with in

Figure 13.

Considering cells sufficiently close to the system is able to distinguish between an added obstacle case from a removed obstacle one. Case (a), an obstacle has been added, and all cells close to are free in . Case (b), an obstacle has been removed and some cells close to are occupied in .

Figure 13.

Considering cells sufficiently close to the system is able to distinguish between an added obstacle case from a removed obstacle one. Case (a), an obstacle has been added, and all cells close to are free in . Case (b), an obstacle has been removed and some cells close to are occupied in .

Figure 14.

Last check of free cells to reconstruct obstacle borders

Figure 14.

Last check of free cells to reconstruct obstacle borders

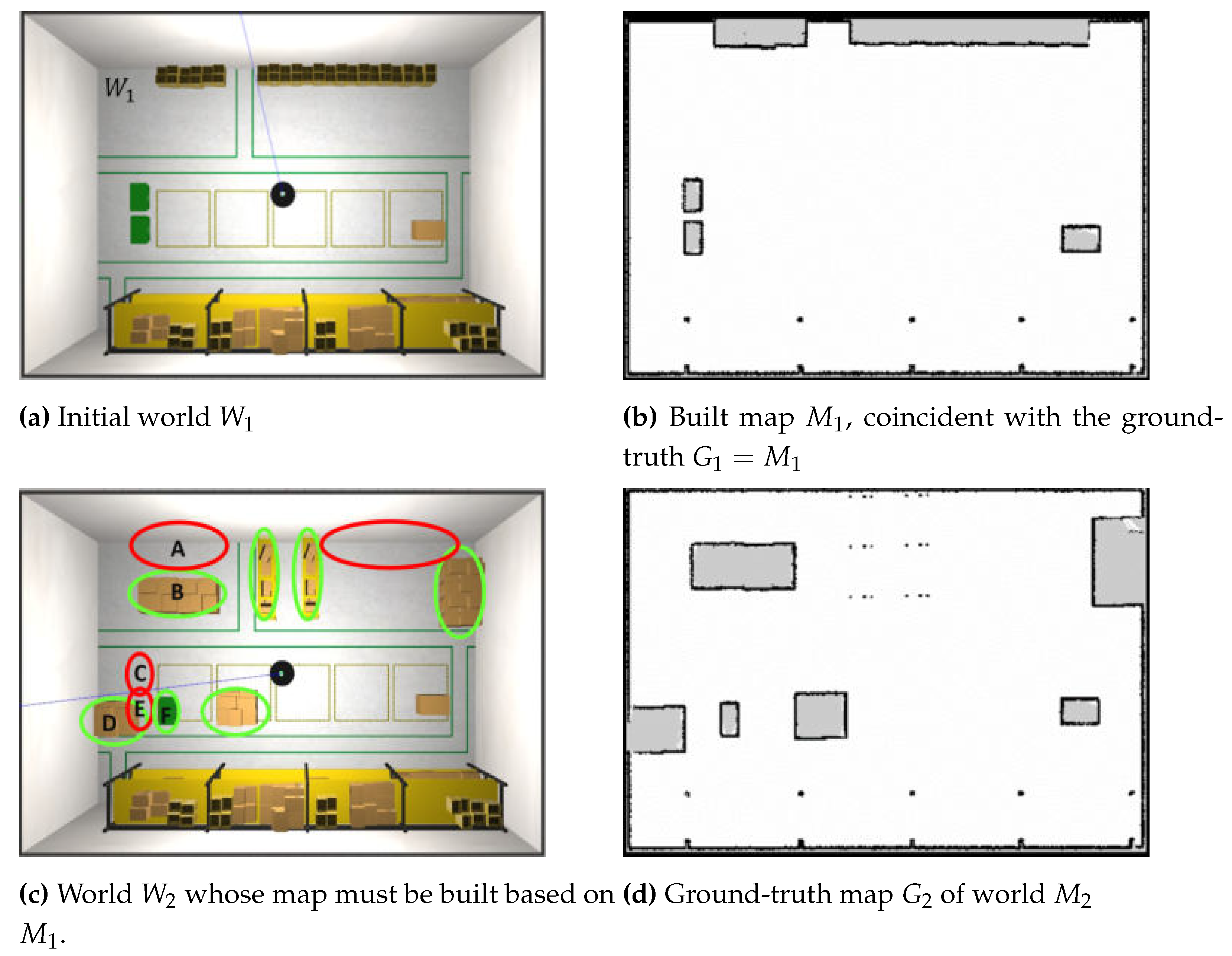

Figure 15.

Scenario for the Localisation Check module testing

Figure 15.

Scenario for the Localisation Check module testing

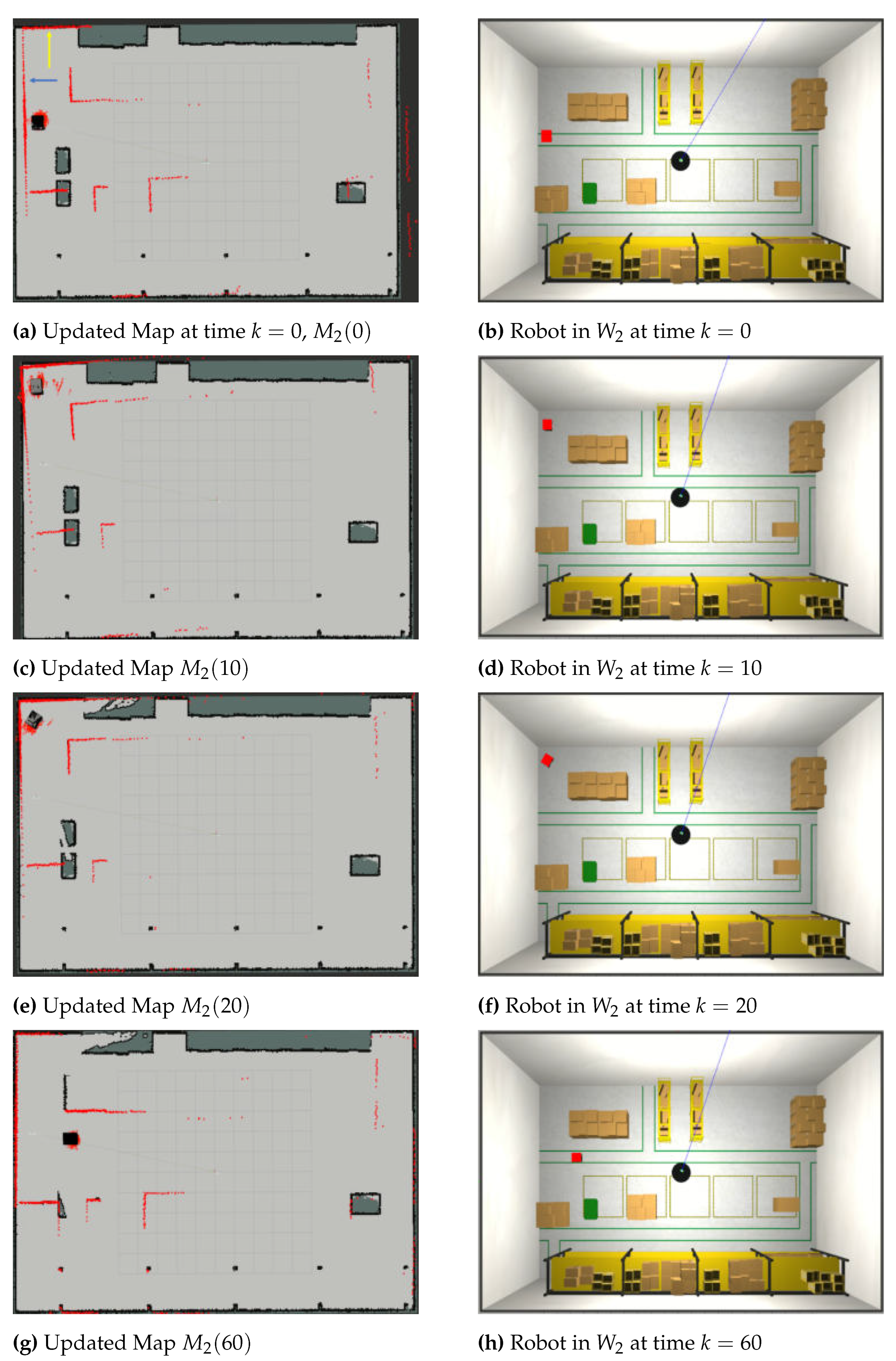

Figure 16.

System evolution at the initial time (a)-(b), after 10 secs (c)-(d), 20 secs (e)-(f) and 60 secs (g)-(h).

Figure 16.

System evolution at the initial time (a)-(b), after 10 secs (c)-(d), 20 secs (e)-(f) and 60 secs (g)-(h).

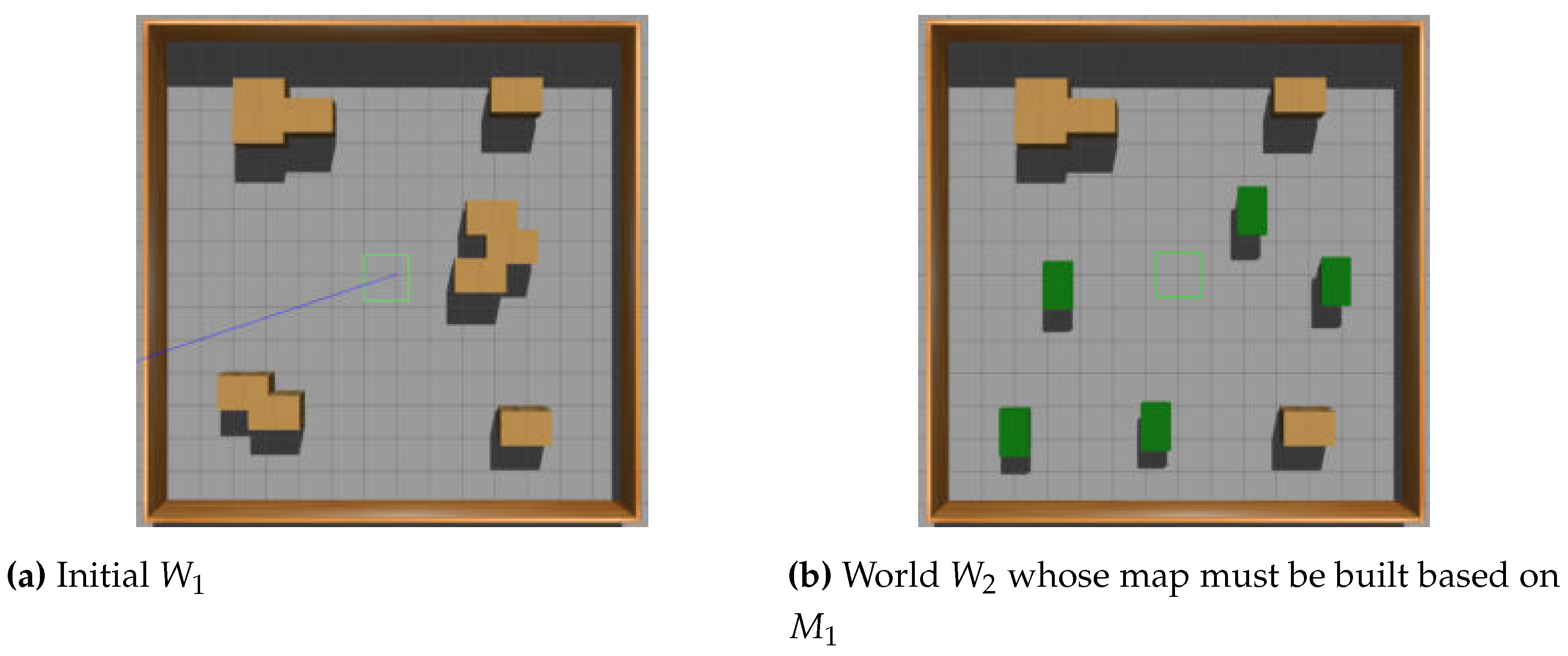

Figure 17.

Scenario for comparison of active Localisation Check module Vs an always active map updating process

Figure 17.

Scenario for comparison of active Localisation Check module Vs an always active map updating process

Figure 18.

Initial example configuration

Figure 18.

Initial example configuration

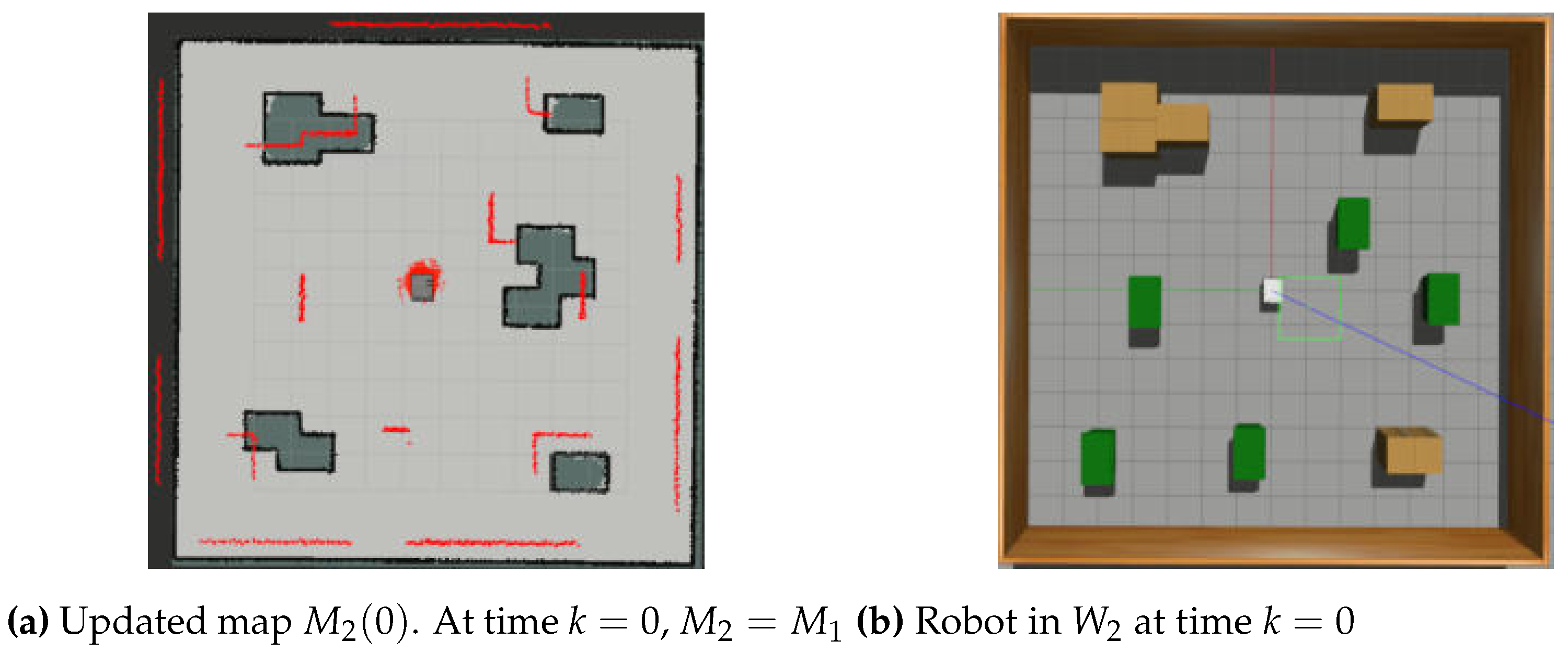

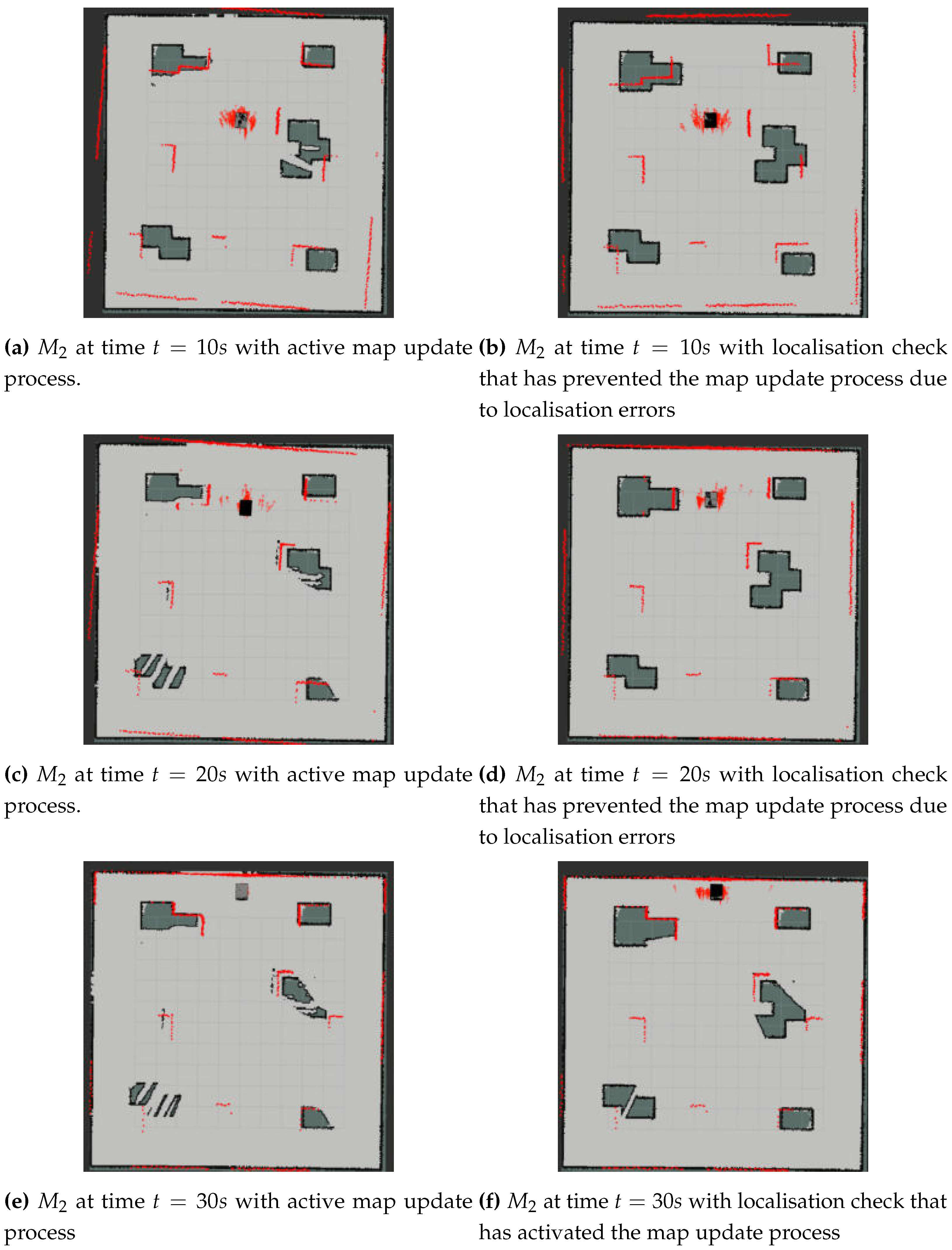

Figure 19.

Updated Mapping Approaches Comparison

Figure 19.

Updated Mapping Approaches Comparison

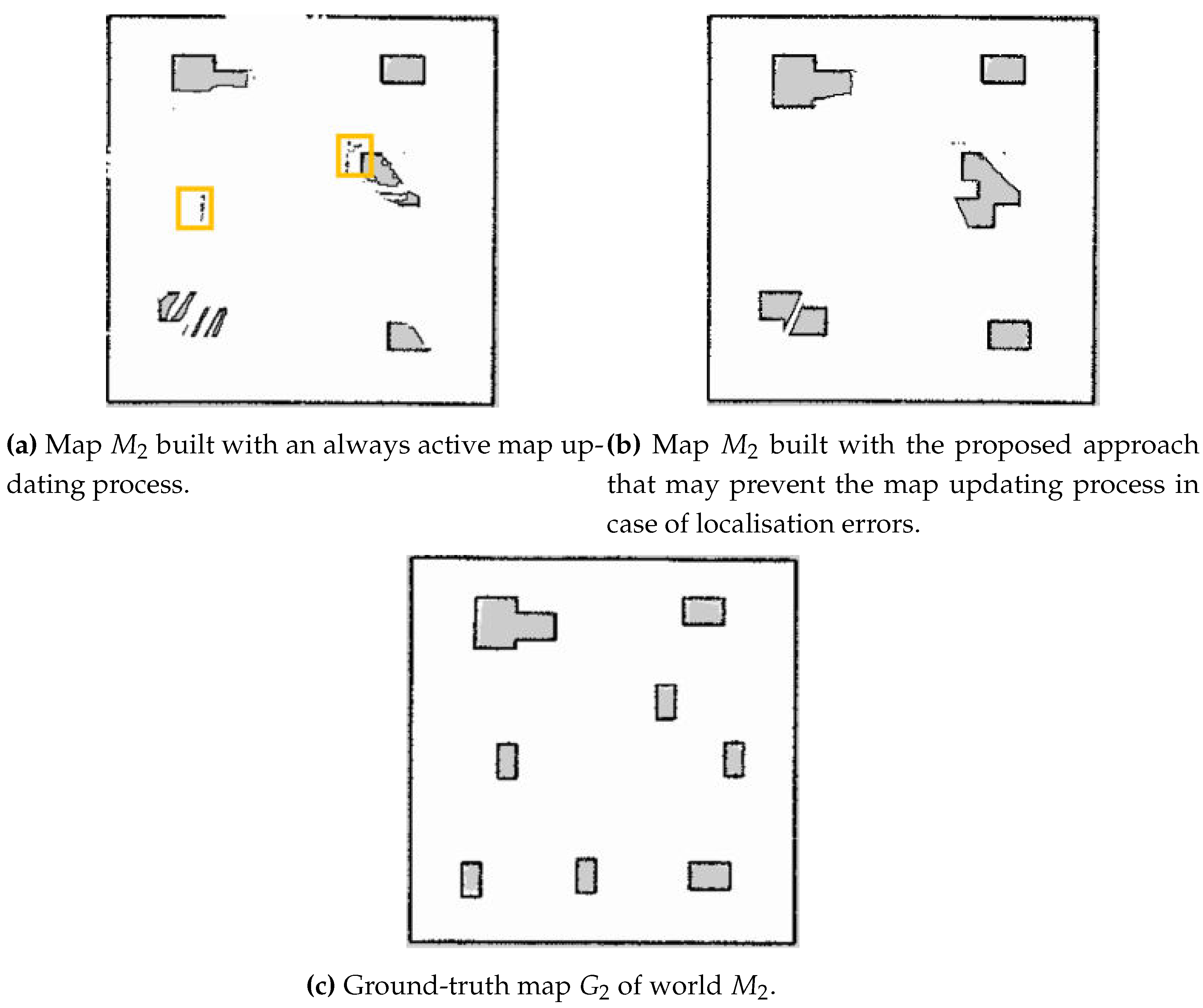

Figure 20.

Maps obtained with the two approaches and the corresponding ground-truth

Figure 20.

Maps obtained with the two approaches and the corresponding ground-truth

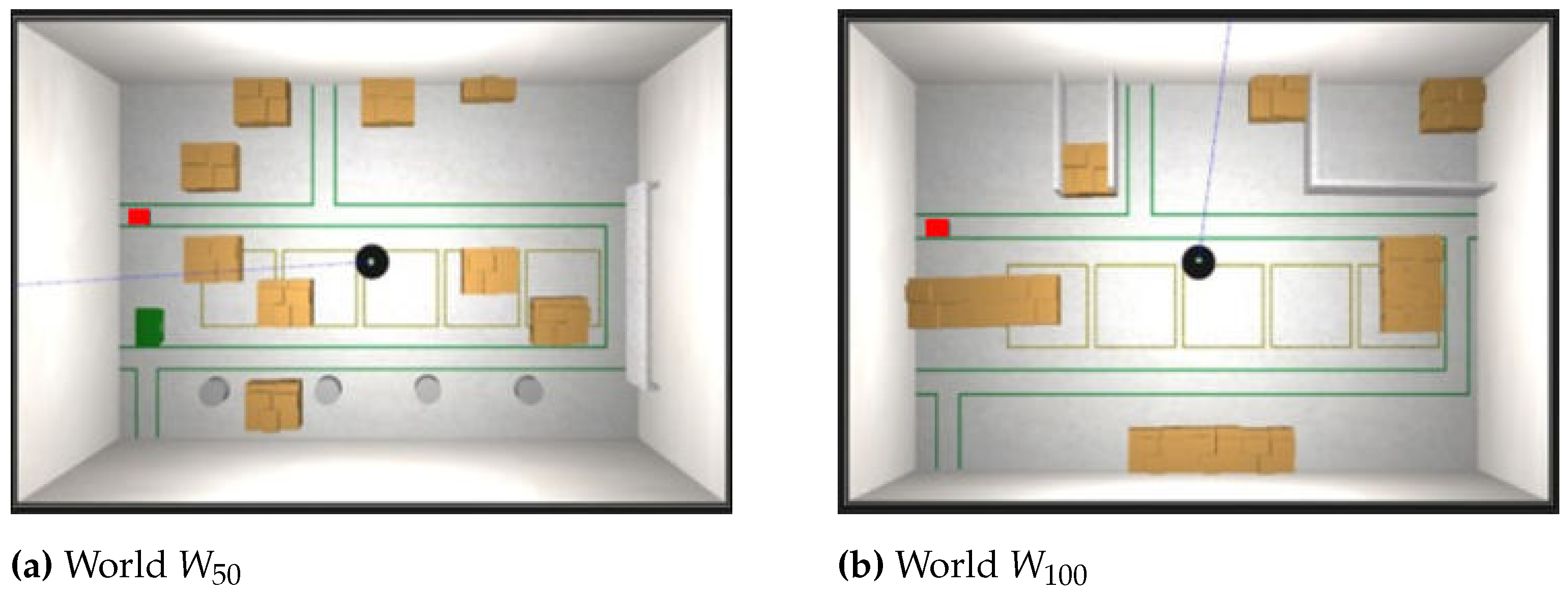

Figure 21.

Warehouse Gazebo environments for worlds 50 and 100.

Figure 21.

Warehouse Gazebo environments for worlds 50 and 100.

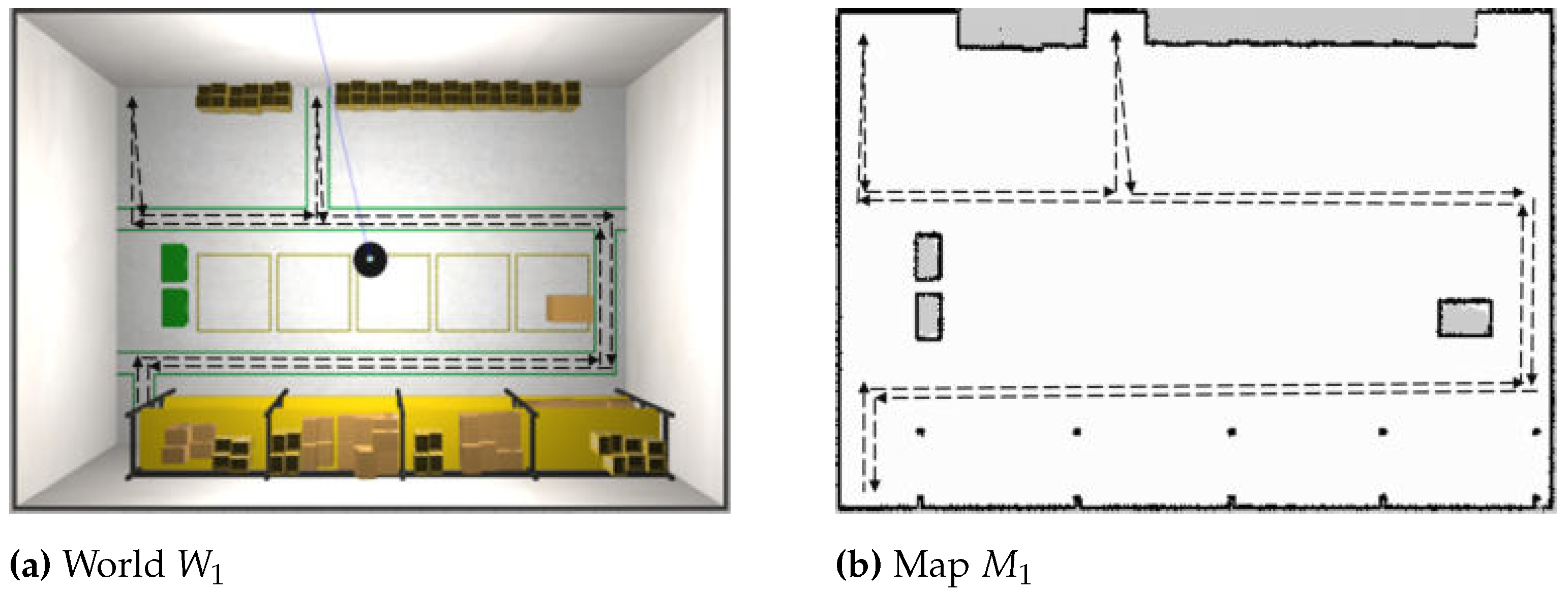

Figure 22.

Autonomous trajectory path (black) in first world (a) and the relative initial map (b)

Figure 22.

Autonomous trajectory path (black) in first world (a) and the relative initial map (b)

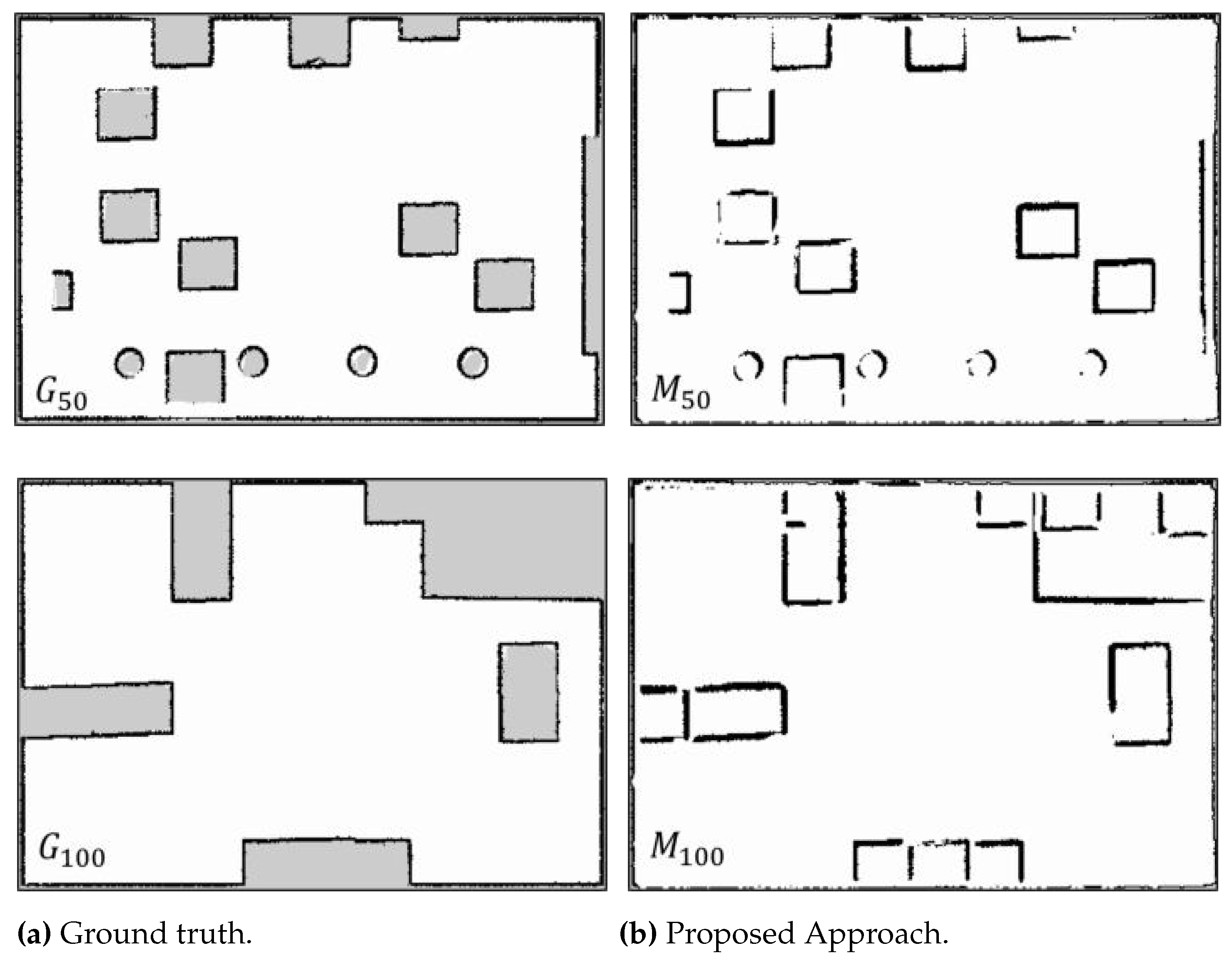

Figure 23.

Map comparisons

Figure 23.

Map comparisons

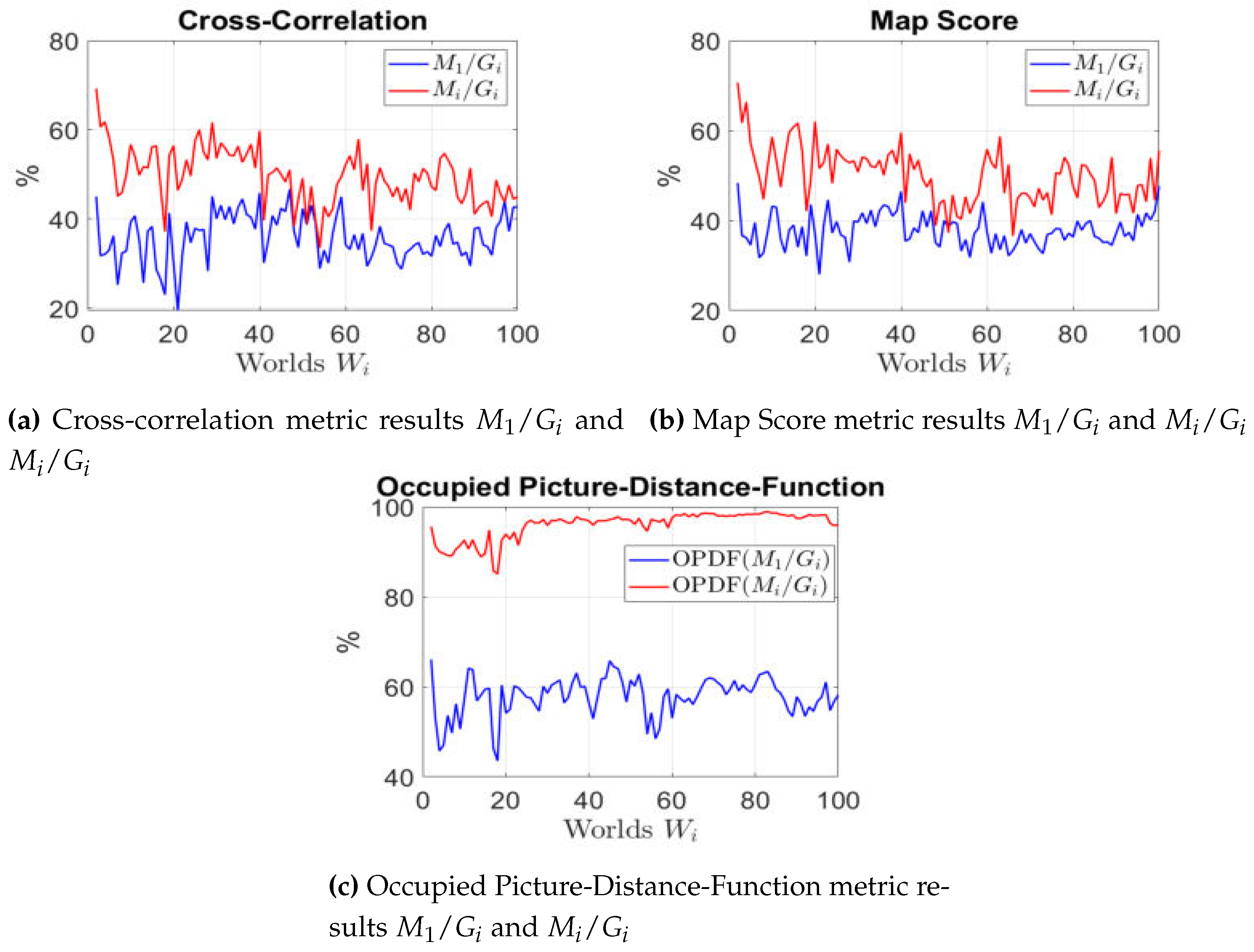

Figure 24.

Quantitative maps evaluation in each simulation scenario.

Figure 24.

Quantitative maps evaluation in each simulation scenario.

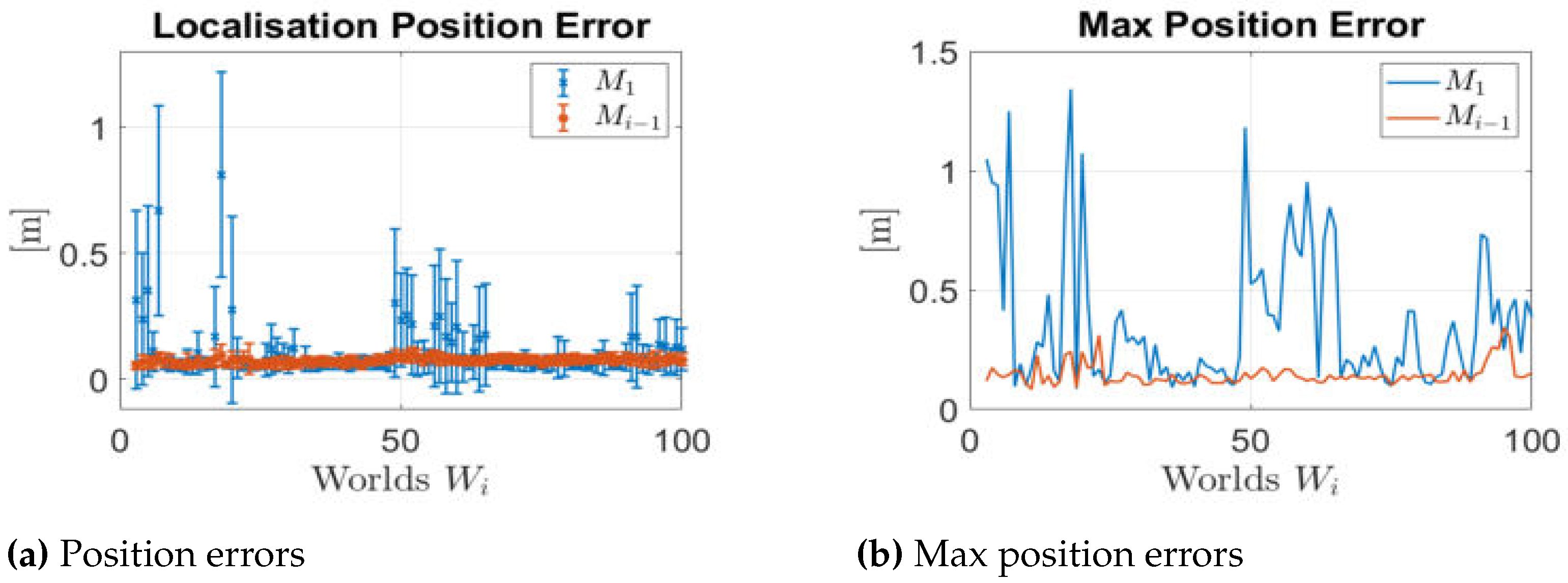

Figure 25.

Localisation Performance: localisation with old map (blue), localisation with last updated map (red).

Figure 25.

Localisation Performance: localisation with old map (blue), localisation with last updated map (red).

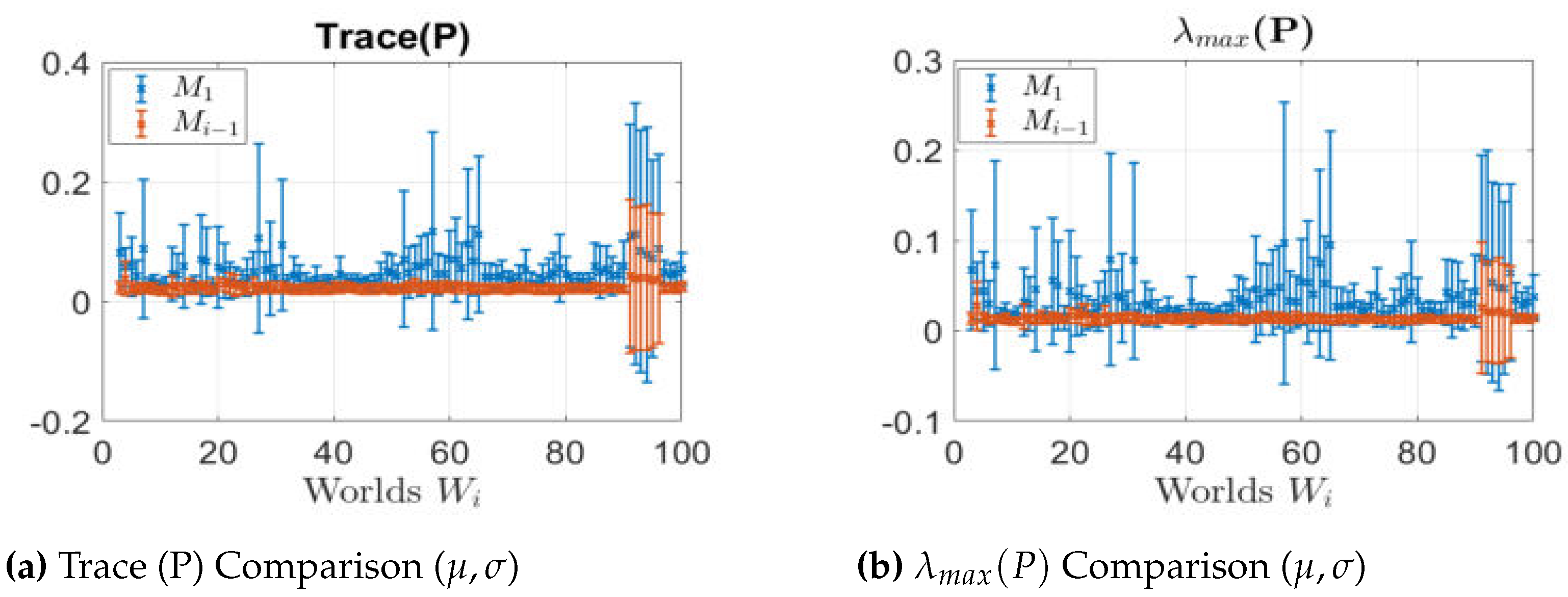

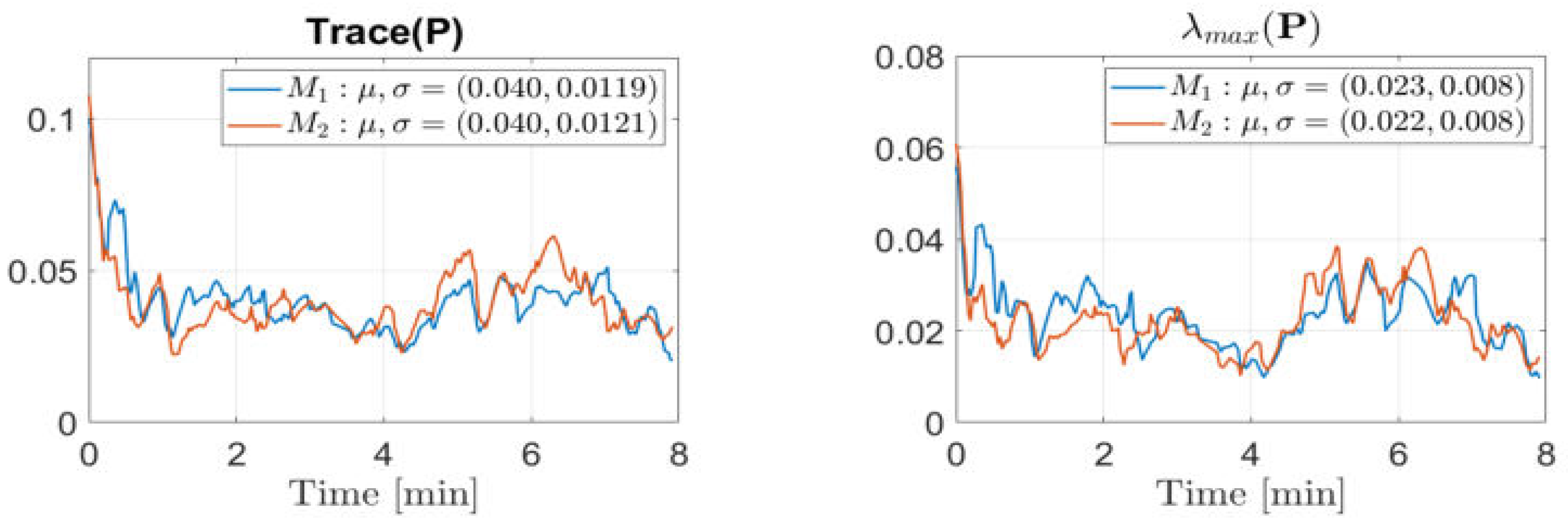

Figure 26.

Estimated Pose Covariance Matrix (P) Results: localisation with old map (blue), localisation with last updated map (red).

Figure 26.

Estimated Pose Covariance Matrix (P) Results: localisation with old map (blue), localisation with last updated map (red).

Figure 27.

Resource Consumption

Figure 27.

Resource Consumption

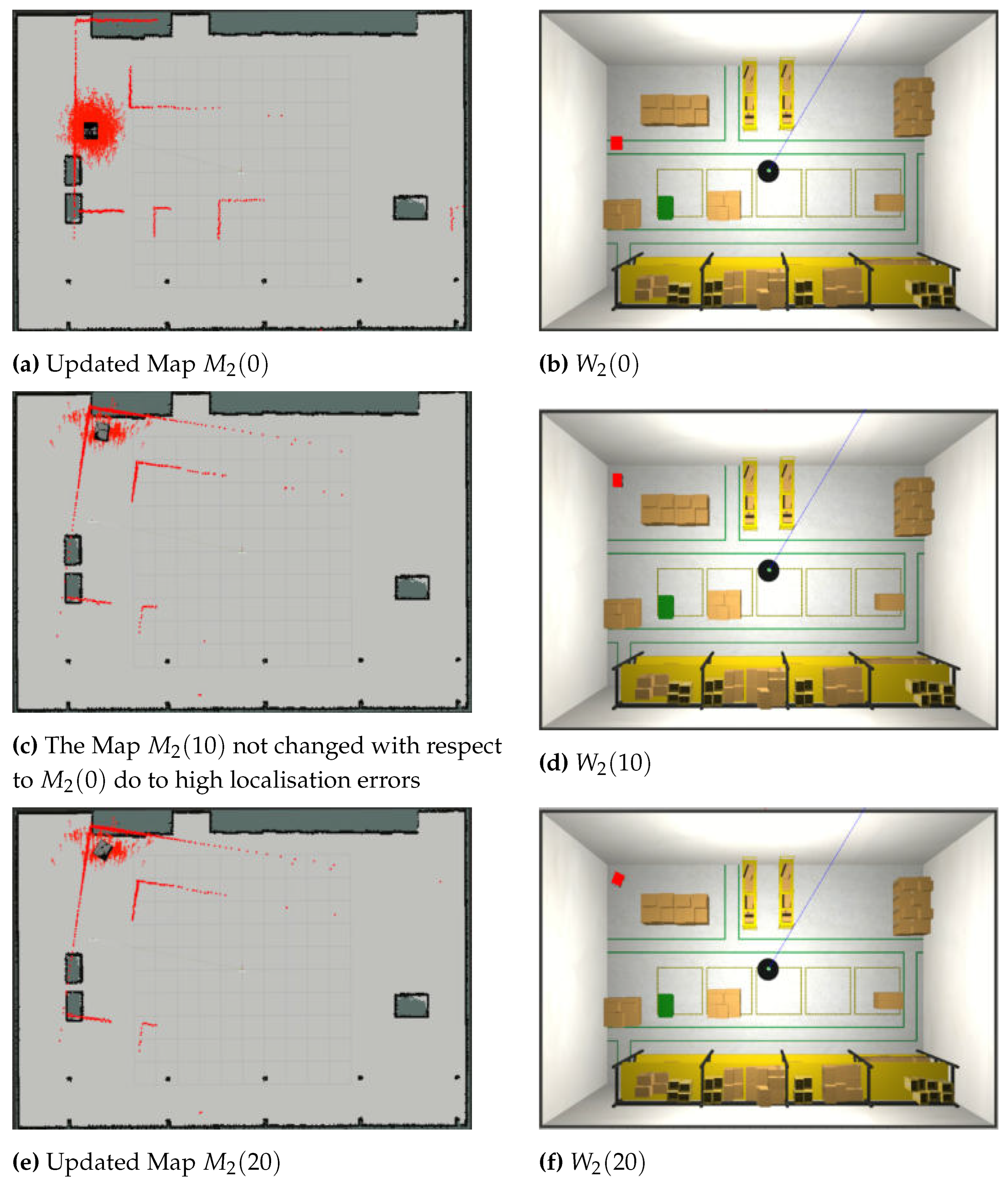

Figure 28.

System evolution at the initial time (a)-(b), after 10 secs (c)-(d) and 20 secs (e)-(f).

Figure 28.

System evolution at the initial time (a)-(b), after 10 secs (c)-(d) and 20 secs (e)-(f).

Figure 29.

First case scenario: the map used by the robot to locate itself reflects the current environment, i.e. .

Figure 29.

First case scenario: the map used by the robot to locate itself reflects the current environment, i.e. .

Figure 30.

Second Case Scenario: the map used by the robot to locate itself does not reflect the current environment .

Figure 30.

Second Case Scenario: the map used by the robot to locate itself does not reflect the current environment .

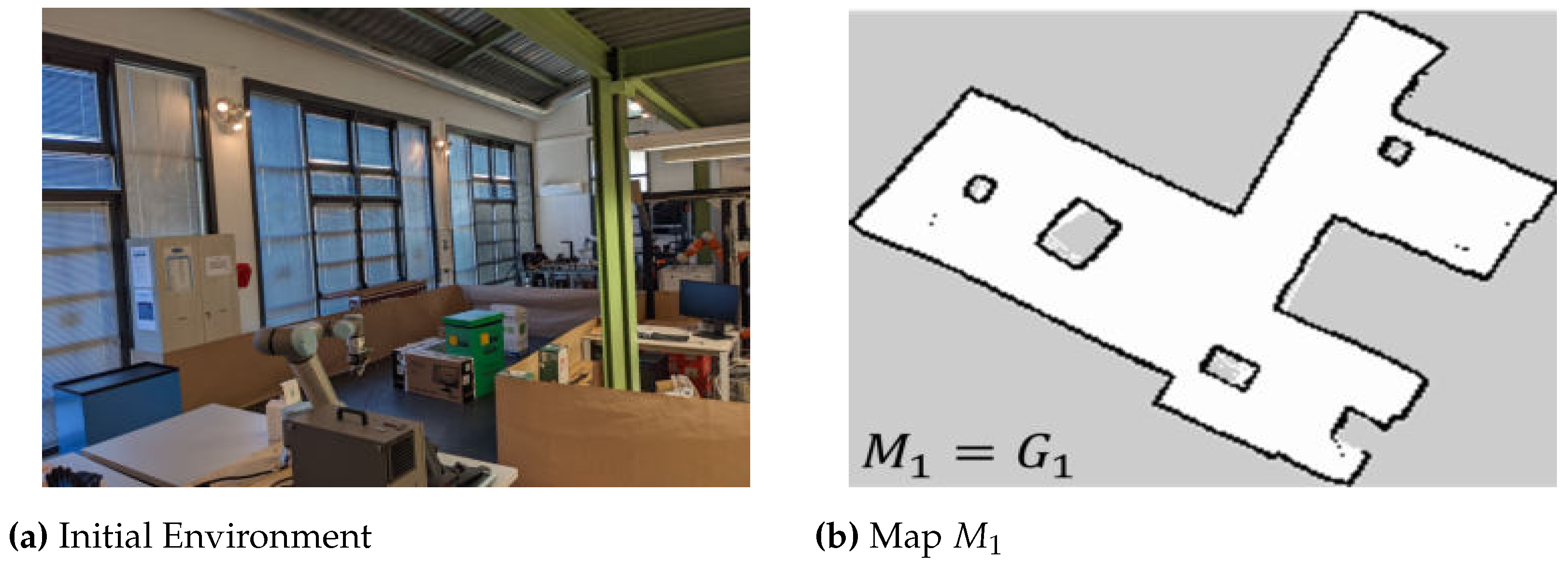

Figure 31.

Lab environment for the experiments

Figure 31.

Lab environment for the experiments

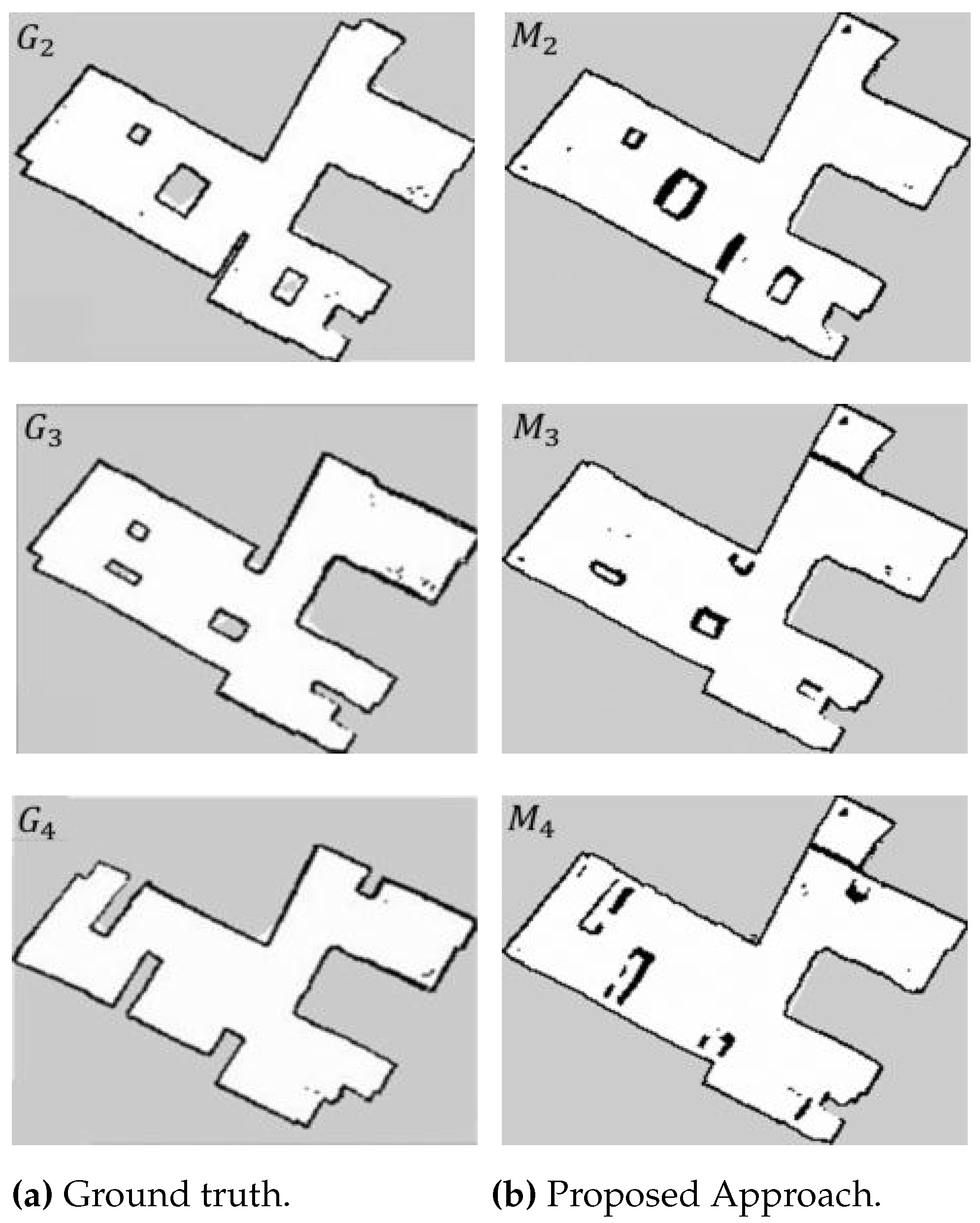

Figure 32.

Map comparisons

Figure 32.

Map comparisons

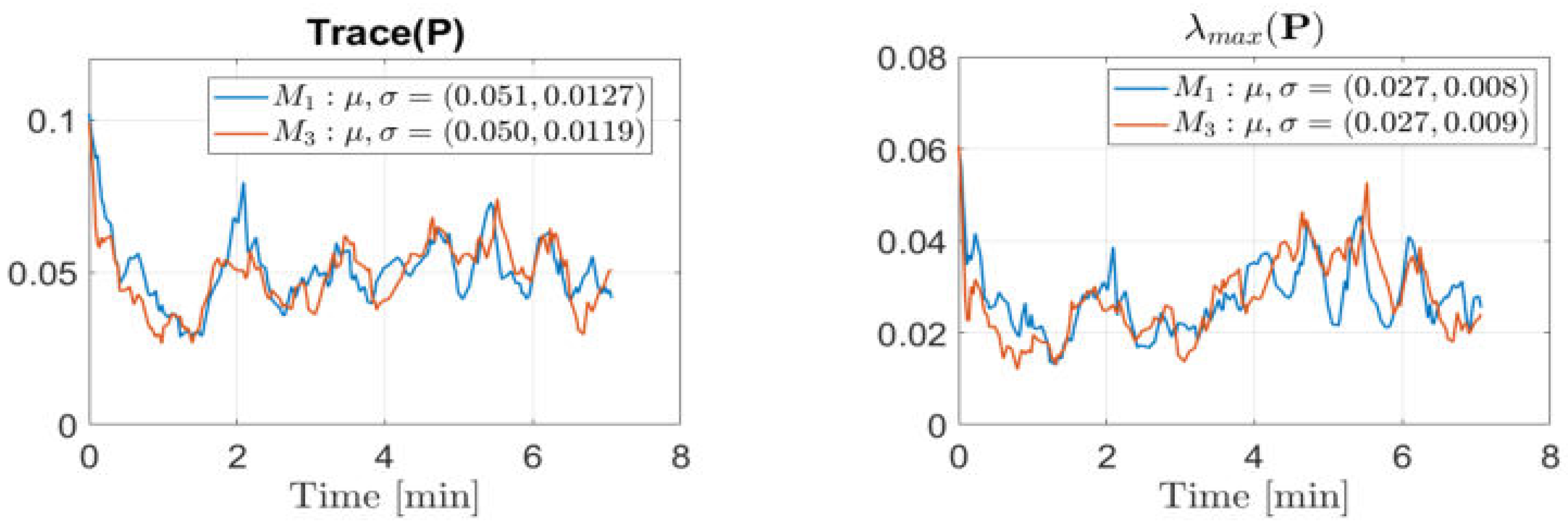

Figure 33.

Estimated Pose Covariance Matrix (P) Results in : localisation with old map (blue), localisation with last updated map (red).

Figure 33.

Estimated Pose Covariance Matrix (P) Results in : localisation with old map (blue), localisation with last updated map (red).

Figure 34.

Estimated Pose Covariance Matrix (P) Results in : localisation with old map (blue), localisation with last updated map (red).

Figure 34.

Estimated Pose Covariance Matrix (P) Results in : localisation with old map (blue), localisation with last updated map (red).

Figure 35.

Resource Consumption

Figure 35.

Resource Consumption

Table 1.

Map Quality Comparison on different metrics

Table 1.

Map Quality Comparison on different metrics

| |

Old /

|

New /

|

| CC(%) |

66.86 |

70.03 |

| MS(%) |

55.11 |

61.24 |

| OPDF(%) |

90.40 |

95.87 |

Table 2.

Quantitative maps evaluation.

Table 2.

Quantitative maps evaluation.

| |

|

|

|

| |

|

|

|

|

|

|

|

CC (%) |

69.69 |

78.95 |

63.77 |

68.80 |

60.87 |

67.14 |

|

MS (%) |

54.63 |

74.57 |

51.44 |

72.58 |

50.45 |

70.26 |

|

OPDF (%) |

84.61 |

97.25 |

78.92 |

95.37 |

82.18 |

94.33 |