Submitted: 11 May 2023

Posted: 12 May 2023


Abstract

Keywords:

1. Introduction

2. Modes of Convergence and the Law of Large Numbers

2.1. Standard Modes of Convergence

2.2. Complete and r-Complete Convergence

2.3. r-Quick Convergence

2.4. Further Remarks on r-Complete Convergence, r-Quick Convergence and Rates of Convergence in SLLN

3. Applications of r-Complete and r-Quick Convergences in Statistics

3.1. Sequential Hypothesis Testing

3.1.1. Asymptotic Optimality of Wald’s SPRT

3.1.2. Asymptotic Optimality of the Multihypothesis SPRT

- (i) For ,

- (ii) If the thresholds are so selected that and , in particular as , then for all

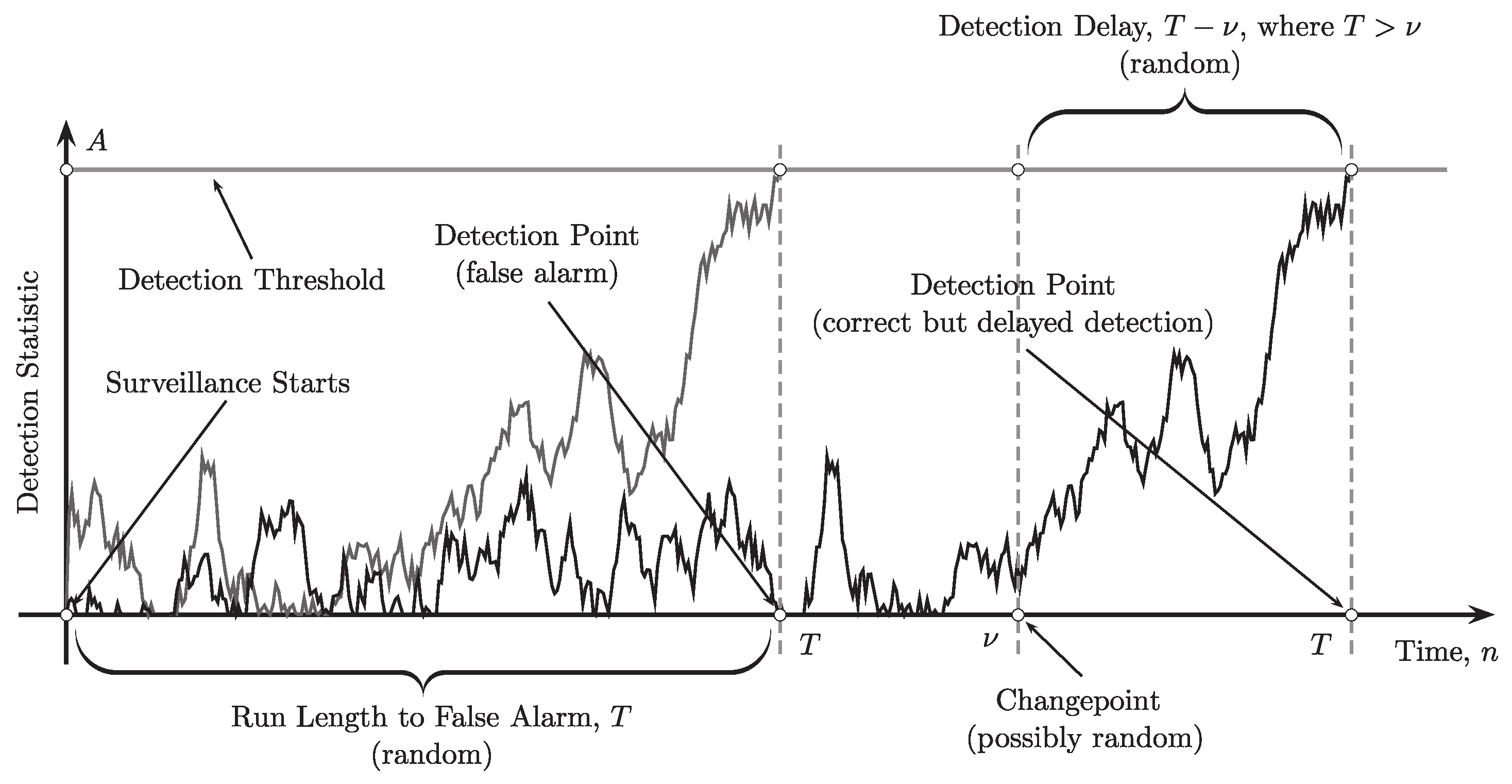

3.2. Sequential Changepoint Detection

3.2.1. Changepoint Models

3.2.2. Popular Changepoint Detection Procedures

The CUSUM Procedure

Shiryaev’s Procedure

Shiryaev–Roberts Procedure

3.2.3. Optimality Criteria

Minimax Changepoint Optimization Criteria

Bayesian Changepoint Optimization Criterion

Uniform Optimality Under Local Probabilities of False Alarm

3.2.4. Asymptotic Optimality for General Non-i.i.d. Models via r-Quick and r-Complete Convergence

Complete Convergence and General Bayesian Changepoint Detection Theory

Complete Convergence and General Non-Bayesian Changepoint Detection Theory

4. Quick and Complete Convergence for Markov and Hidden Markov Models

5. Conclusion

Short Biography of the Author

Alexander G. Tartakovsky received the Ph.D. degree in statistics and information theory and the advanced D.Sc. degree from the Moscow Institute of Physics and Technology (PhysTech), Russia, in 1981 and 1990, respectively. From 1981 to 1992, he was first a Senior Research Scientist and then the Department Head at the Moscow Institute of Radio Technology and a Professor at PhysTech, where he worked on the application of statistical methods to the optimization and modeling of information systems. From 1993 to 1996, he was a Professor at the University of California, Los Angeles (UCLA), first with the Department of Electrical Engineering and then with the Department of Mathematics. From 1997 to 2013, he was a Professor at the Department of Mathematics and an Associate Director of the Center for Applied Mathematical Sciences, University of Southern California (USC). In the late 1990s, he organized one of America’s first master’s programs in Mathematical Finance (a joint program of the Mathematics and Economics departments at USC). From 2013 to 2015, he was a Professor of statistics with the Department of Statistics at the University of Connecticut, Storrs. From 2016 to 2021, he was the Head of the Space Informatics Laboratory at PhysTech. He is currently the President of AGT StatConsult, Los Angeles, CA, USA. Dr. Tartakovsky has also served as visiting faculty at various universities, including Universite de Rouen, France; University of Technology, Sydney, Australia; The Hebrew University of Jerusalem, Israel; University of North Carolina, Chapel Hill; Columbia University; and Stanford University. Dr. Tartakovsky is an internationally recognized researcher in theoretical and applied statistics, applied probability, sequential analysis, and changepoint detection. He is the author of three books, several book chapters, and over 100 papers across a range of subjects, including theoretical and applied statistics, applied probability, and sequential analysis.
His research focuses on a variety of applications, including statistical image and signal processing; video surveillance and object detection and tracking; information integration/fusion; intrusion detection and network security; detection and tracking of malicious activity; mathematical/engineering finance applications; pharmacokinetics/pharmacodynamics; and early detection of epidemics using changepoint methods. Dr. Tartakovsky has provided statistical consulting and developed algorithms and software for many companies and U.S. federal agencies. He is a Fellow of the Institute of Mathematical Statistics (IMS) and a Senior Member of the IEEE. He has received numerous awards for his work, including the Abraham Wald Prize in Sequential Analysis, and has presented several keynote and plenary talks at leading conferences.


1. In many practical problems, K is substantially smaller than the total number of streams N, which can be very large.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).