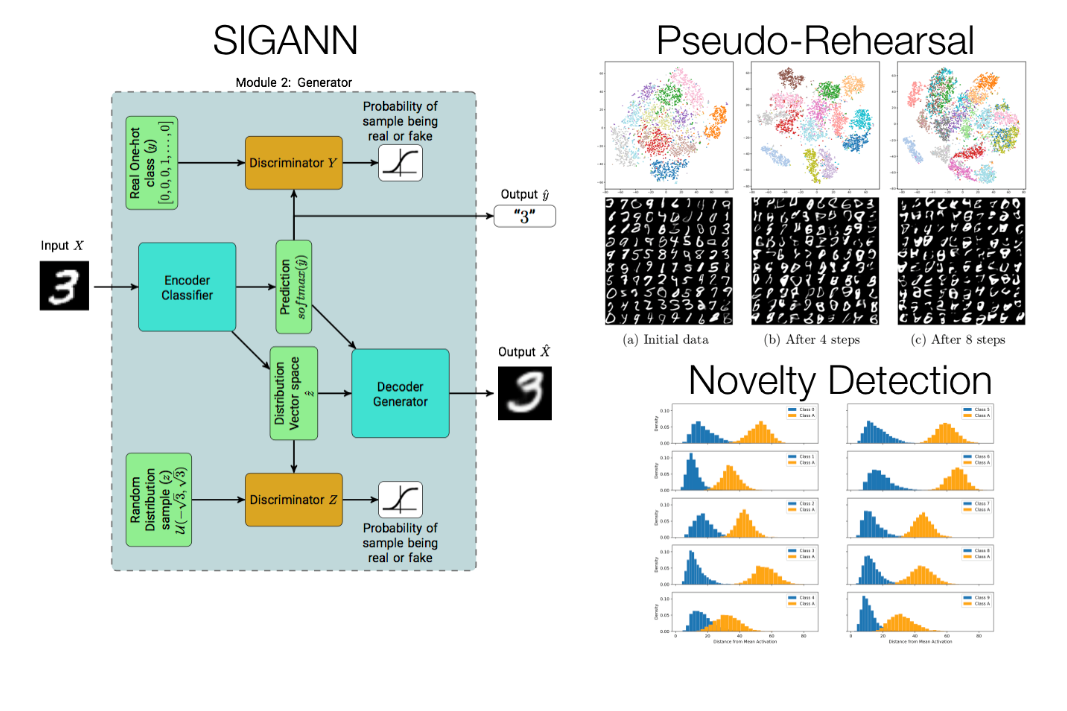

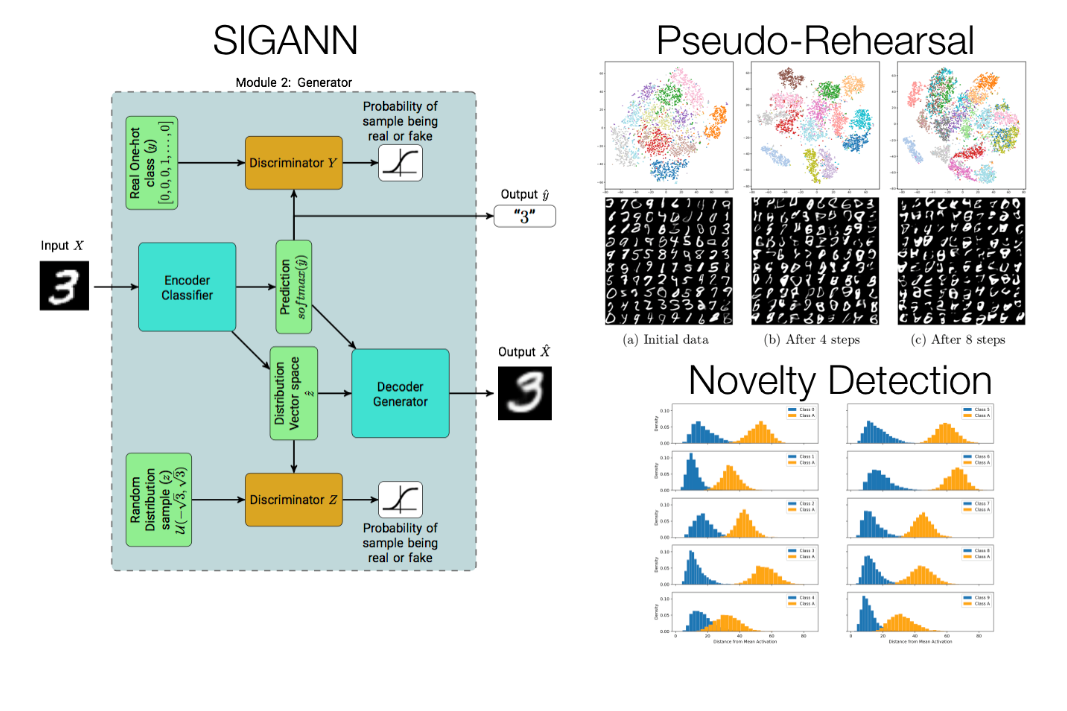

Deep learning models belong to the family of artificial neural networks and, as such, suffer from catastrophic interference when they learn sequentially. In addition, most of these models have a rigid architecture that prevents the incremental learning of new classes. To overcome these drawbacks, in this article we propose the Self-Improving Generative Artificial Neural Network (SIGANN), an end-to-end deep neural network system that eases the catastrophic forgetting problem when learning new classes. In this method, a novelty detection model automatically detects samples of new classes, and an adversarial autoencoder produces samples of previously learned classes. The system consists of three main modules: a classifier module, implemented using a deep convolutional neural network; a generator module, based on an adversarial autoencoder; and a novelty detection module, implemented using an OpenMax activation function. Using the EMNIST data set, the model was trained incrementally, starting with a small set of classes. The simulation results show that SIGANN retains previous knowledge, with only gradual forgetting over each learning sequence. Moreover, SIGANN can detect new classes that are hidden in the data and can therefore proceed with incremental class learning.
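The interplay of the three modules can be illustrated with a minimal toy sketch. It is not the SIGANN implementation: a nearest-centroid rule stands in for the convolutional classifier, a distance threshold stands in for the OpenMax-based novelty detector, and a per-class Gaussian sampler stands in for the adversarial autoencoder. The class name `ToyIncrementalLearner`, its parameters, and the simplification that all novel samples in a batch form a single new class are assumptions made only for this illustration.

```python
import math
import random
from statistics import mean, pstdev


class ToyIncrementalLearner:
    """Hypothetical stand-in for a classifier + novelty detector + generator loop."""

    def __init__(self, novelty_threshold=3.0, replay_per_class=50, seed=0):
        self.stats = {}  # label -> (per-dimension means, per-dimension stds)
        self.novelty_threshold = novelty_threshold
        self.replay_per_class = replay_per_class
        self.rng = random.Random(seed)

    def fit(self, samples, labels):
        # "Classifier" training: store one centroid and spread per class.
        for label in set(labels):
            pts = [s for s, l in zip(samples, labels) if l == label]
            dims = list(zip(*pts))
            self.stats[label] = ([mean(d) for d in dims], [pstdev(d) for d in dims])

    def nearest(self, x):
        # Returns (distance, label) of the closest known class centroid.
        return min((math.dist(x, mu), label) for label, (mu, _) in self.stats.items())

    def predict(self, x):
        return self.nearest(x)[1]

    def is_novel(self, x):
        # "Novelty detection": far from every known class (assumed threshold rule).
        return self.nearest(x)[0] > self.novelty_threshold

    def replay(self):
        # "Generator": sample pseudo-examples of every previously learned class.
        samples, labels = [], []
        for label, (mu, sd) in self.stats.items():
            for _ in range(self.replay_per_class):
                samples.append([self.rng.gauss(m, s) for m, s in zip(mu, sd)])
                labels.append(label)
        return samples, labels

    def learn_incrementally(self, batch):
        novel = [x for x in batch if self.is_novel(x)]
        if not novel:
            return None
        new_label = max(self.stats) + 1
        # Retrain on generated old-class samples plus the detected novel samples,
        # so old classes are not overwritten by the new one.
        samples, labels = self.replay()
        self.fit(samples + novel, labels + [new_label] * len(novel))
        return new_label


def blob(rng, center, n=100, sd=0.3):
    # Synthetic 2-D cluster used only for this demonstration.
    return [[rng.gauss(c, sd) for c in center] for _ in range(n)]


rng = random.Random(1)
x0, x1, x2 = blob(rng, (0, 0)), blob(rng, (5, 0)), blob(rng, (0, 5))

model = ToyIncrementalLearner()
model.fit(x0 + x1, [0] * 100 + [1] * 100)   # start with a small set of classes
new_label = model.learn_incrementally(x2)    # unseen class hidden in the data
```

In this sketch the original training data for classes 0 and 1 is never revisited; only generated samples are used when the new class is added, which mirrors the role the abstract assigns to the generator module.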