A. Dataset

The choice of dataset largely determines what a cross-domain study can show. This work adopts the CloudBench Dataset, which collects performance monitoring logs, task scheduling information, and system call traces from multiple cloud platforms and covers diverse computing types and resource configuration scenarios. Its main feature is that it combines structured numerical attributes with unstructured sequential records, so it reflects both task characteristics and cross-domain variability in cloud environments. The dataset is publicly available, which facilitates reproducibility and method comparison, and it provides sufficient samples to support research on cross-domain optimization.

For the source domain, the data are mainly collected from a relatively stable cloud platform environment. They include CPU-intensive tasks, memory-sensitive applications, and several general-purpose services. These source domain data have been collected and summarized over a long period of time. They show strong regularity and contain complete annotations. This offers a solid knowledge base for transfer learning models. By building an effective representation space in the source domain, the model can achieve strong initial performance and be adapted to target domain tasks through transfer and generalization.

For the target domain, the data are collected from another type of cloud platform. This domain is characterized by more complex resource scheduling patterns and unstable performance fluctuations. Compared with the source domain, the data distribution shows significant differences. These differences are reflected in task scale, workload intensity, and sequential patterns. Since the target domain suffers from limited labels and sparse data, training on it alone often fails to achieve ideal performance. Therefore, this study enhances feature representation through self-supervised learning. It also introduces transfer mechanisms to reduce the distribution gap between the source and target domains. In this way, cross-domain cloud computing tasks can be optimized and effectively modeled.

B. Experimental Results

A comparative experiment is carried out first, and the results are presented in Table 1.

Overall, the proposed method outperforms the compared methods on all three core metrics. Specifically, Domain Adaptation Accuracy improves from 0.842–0.886 for traditional methods to 0.912. This indicates stronger generalization ability in the target domain and suggests that the method alleviates the performance degradation caused by cross-domain distribution differences. These results show that combining transfer learning with self-supervised learning can enhance task adaptability in complex cloud computing scenarios, providing a more reliable solution for optimization in cross-domain environments.

Regarding the MMD metric, its value drops from 0.105–0.132 for conventional approaches to 0.089, indicating improved feature distribution alignment. A smaller MMD reflects greater consistency between the source and target embedding spaces after optimization, which reduces the adverse influence of domain shift on training. Aligning feature distributions not only improves the efficiency of transfer learning but also strengthens the model's generalization across cross-platform cloud environments, helping it remain robust under heterogeneous data and dynamic resource settings.
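To make the metric concrete, the following is a minimal sketch of a biased estimate of squared MMD with a Gaussian (RBF) kernel. The text does not state which kernel, bandwidth, or estimator is used, nor whether the reported values are squared, so the kernel choice, the `gamma` value, and the synthetic feature samples are all assumptions made for illustration only.

```python
import math
import random


def rbf_kernel(x, y, gamma):
    """Gaussian kernel exp(-gamma * ||x - y||^2) for two feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def mmd_squared(source, target, gamma=1.0):
    """Biased (V-statistic) estimate of squared MMD between two samples."""

    def mean_kernel(xs, ys):
        total = sum(rbf_kernel(x, y, gamma) for x in xs for y in ys)
        return total / (len(xs) * len(ys))

    return (
        mean_kernel(source, source)
        + mean_kernel(target, target)
        - 2.0 * mean_kernel(source, target)
    )


def gaussian_sample(mean, n, dim, rng):
    """Hypothetical stand-in for embeddings: n vectors drawn from N(mean, 1)."""
    return [[rng.gauss(mean, 1.0) for _ in range(dim)] for _ in range(n)]


rng = random.Random(0)
source = gaussian_sample(0.0, 100, 4, rng)
near_target = gaussian_sample(0.1, 100, 4, rng)  # small domain shift
far_target = gaussian_sample(1.0, 100, 4, rng)   # large domain shift

mmd_near = mmd_squared(source, near_target, gamma=0.25)
mmd_far = mmd_squared(source, far_target, gamma=0.25)
print(f"near shift: {mmd_near:.4f}, far shift: {mmd_far:.4f}")
```

In this toy setting the estimate grows with the shift between the two samples, which is the behavior the alignment objective tries to suppress: better-aligned source and target embeddings yield a smaller value.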

In terms of H-Score, the proposed method achieves 0.854, which is higher than the other methods, whose scores range from 0.781 to 0.827. This indicates that the model strikes a better balance between the source domain and the target domain. It avoids overfitting to a single domain while ensuring stable overall transfer performance. Such cross-domain balance is especially critical for cloud computing tasks, as real applications often require consistent performance across multiple platforms and regions to support large-scale deployment.
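The text does not spell out how H-Score is computed. In the domain adaptation literature it is commonly defined as the harmonic mean of two accuracy terms; the sketch below assumes that form, with the two inputs standing for source-domain and target-domain accuracy to match the balance interpretation above. The function name and the sample values are hypothetical and are not taken from the experiments.

```python
def h_score(acc_a: float, acc_b: float) -> float:
    """Harmonic mean of two accuracies (assumed definition of H-Score)."""
    if acc_a + acc_b == 0.0:
        return 0.0  # avoid division by zero when both accuracies are zero
    return 2.0 * acc_a * acc_b / (acc_a + acc_b)


# Two hypothetical models with the same arithmetic mean accuracy (0.775).
balanced = h_score(0.80, 0.75)
imbalanced = h_score(0.95, 0.60)

print(f"balanced: {balanced:.3f}, imbalanced: {imbalanced:.3f}")
```

Under this assumed definition, the harmonic mean penalizes a model that does well in one domain at the expense of the other, which is why a higher value can be read as better cross-domain balance.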

Considering the performance across all three metrics, the proposed framework achieves a unified improvement in accuracy, robustness, and balance in cross-domain task optimization. This provides an effective method for intelligent scheduling and resource management in cross-domain cloud computing and lays the foundation for larger-scale task expansion. By realizing high-quality knowledge transfer and feature alignment between the source and target domains, the model demonstrates stronger adaptability, highlighting the application potential and theoretical value of combining transfer learning with self-supervised learning in cross-domain cloud computing research.

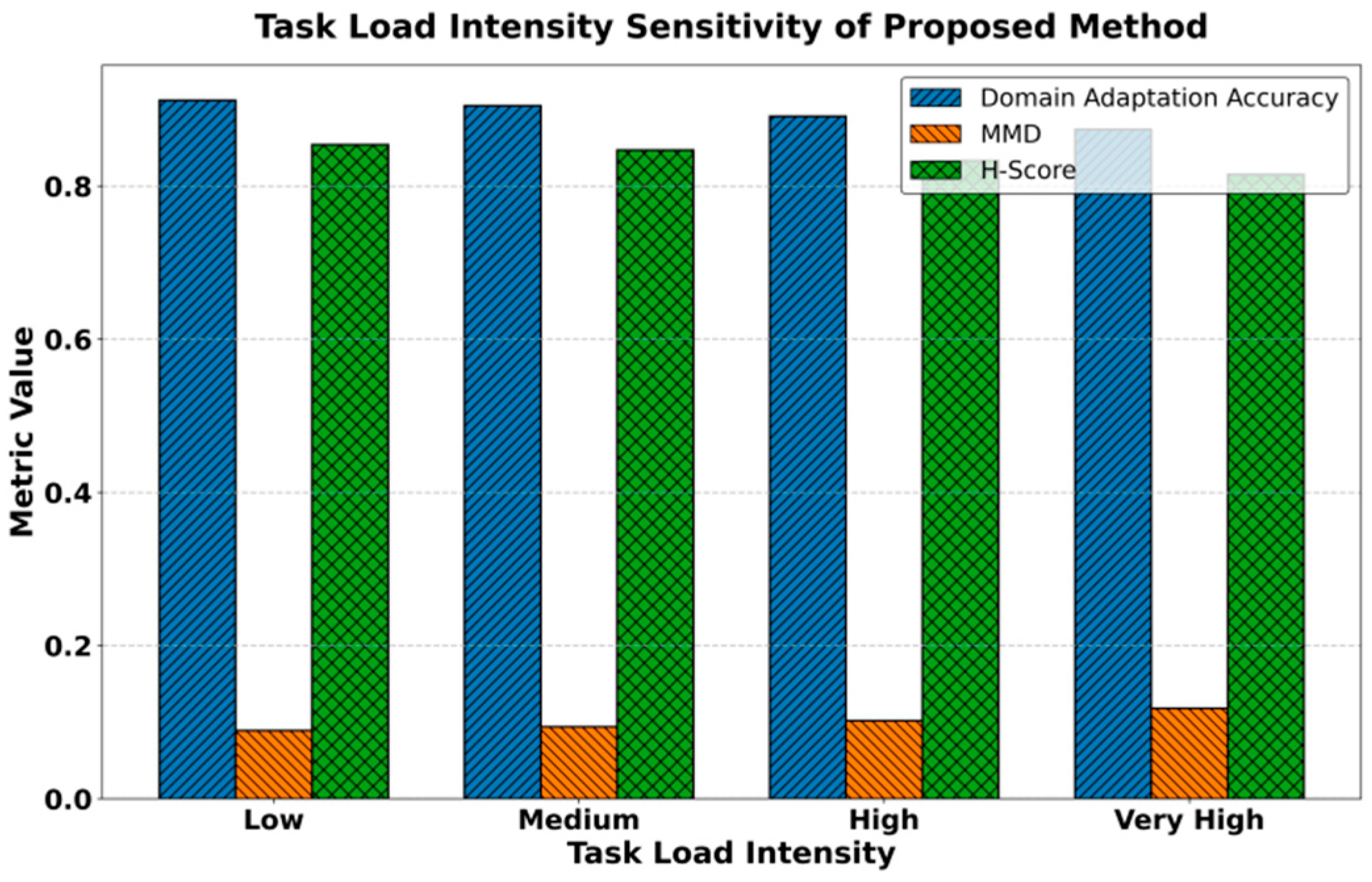

The adaptability of the model to variations in task load intensity is further evaluated, with the corresponding results illustrated in Figure 2.

From the figure, it can be observed that as task load intensity gradually increases, the Domain Adaptation Accuracy of the model decreases slowly from around 0.91 to about 0.87. This indicates that under higher computational pressure and task complexity, the performance of the model in the target domain is affected to some extent. However, the overall accuracy remains at a relatively high level, confirming that the method maintains strong adaptability and stability in cross-domain cloud computing tasks. This shows that the combination of transfer learning and self-supervised mechanisms enables the model to sustain reasonable performance under varying load conditions.

At the same time, the MMD metric shows a gradual upward trend as task load increases, rising from 0.089 to about 0.118. This reflects that the feature distribution differences between the source and target domains become more evident under high-intensity tasks. The change reveals that feature alignment across domains becomes more challenging when resources are constrained and environmental fluctuations intensify. Nevertheless, since the values remain within a relatively low range, the results suggest that the optimization framework can still effectively control distribution discrepancies and ensure stable cross-domain transfer.

For the H-Score, the value decreases from 0.854 to 0.815 as the load increases, showing some performance degradation. Yet the overall performance remains superior to that of traditional methods. This result indicates that even in complex load environments, the method can still maintain a good balance between the source and target domains. Such cross-domain balance is of practical significance for cloud computing task optimization, as it provides more robust technical support for task deployment across multiple platforms and scenarios.

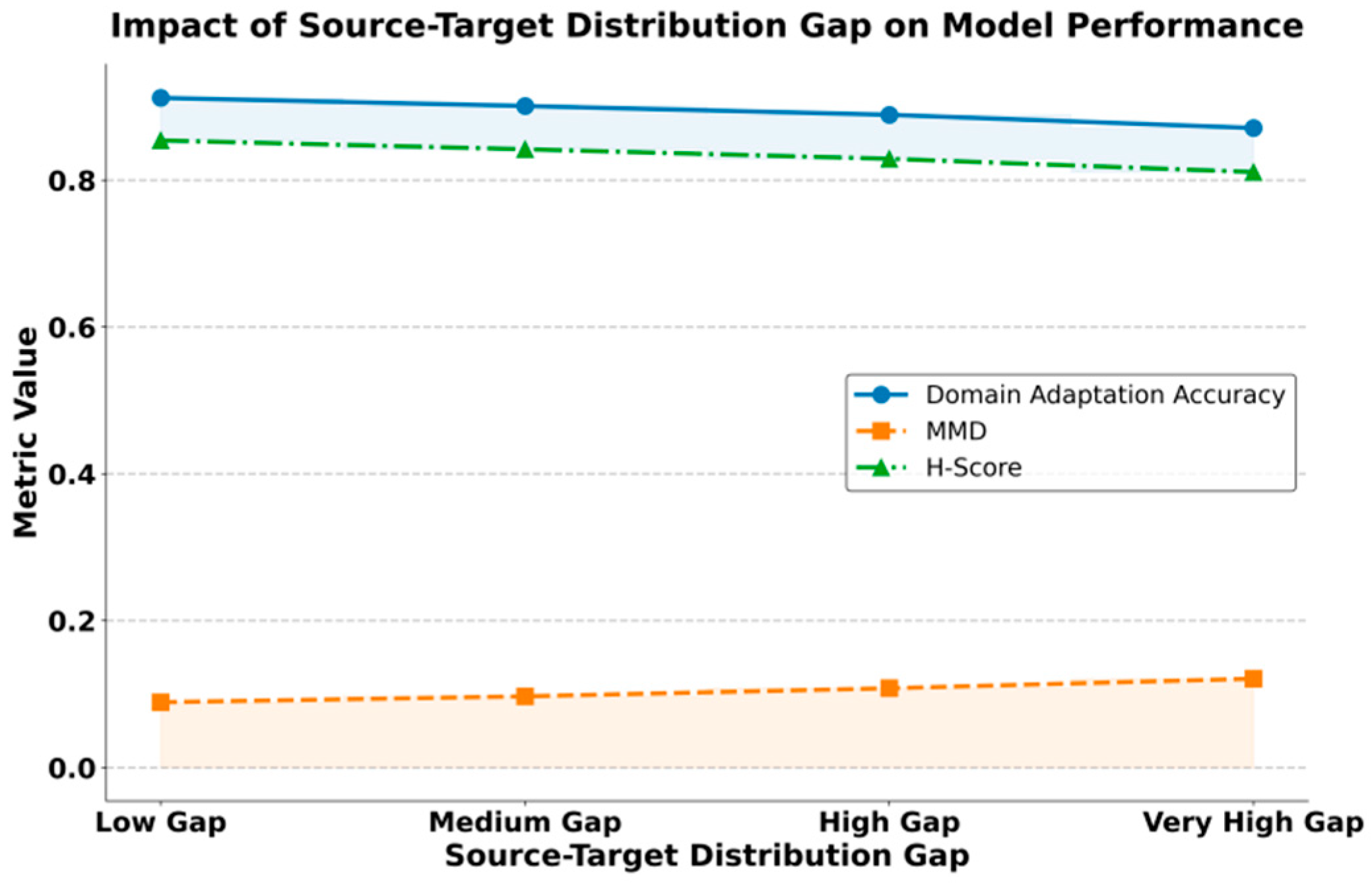

The influence of distribution discrepancies between the source and target domains on model performance is also examined, and the results are displayed in Figure 3.

From the figure, it can be observed that as the distribution gap between the source and target domains increases, the Domain Adaptation Accuracy of the model shows a gradual decline. The value decreases from about 0.91 to around 0.87. This indicates that distribution differences weaken the generalization ability of the model in the target domain. However, the overall accuracy remains at a relatively high level, which reflects that the proposed method retains strong stability and adaptability in cross-domain scenarios. This phenomenon shows that the method can maintain acceptable performance even when the distribution gap becomes larger.

At the same time, the MMD metric increases from 0.089 under low distribution difference to 0.121 under high distribution difference. This result reveals the problem of inconsistency in the feature space caused by larger distribution gaps. The increase in MMD directly shows that feature alignment between the source and target domains becomes much more difficult in high-difference scenarios. Nevertheless, since the values remain within a relatively low range, the results indicate that the introduced self-supervised and transfer mechanisms are still effective in aligning feature distributions and can reduce the negative impact of domain mismatch under larger differences.

The H-Score decreases from 0.854 to 0.811 as the distribution gap increases. This means that although the method still maintains a certain level of consistency between the source and target domains, its balance is challenged under extreme differences. However, the overall level remains higher than that of traditional methods, which suggests that the framework can better balance performance across domains in cross-domain tasks.

Considering the performance across the three metrics, it can be seen that although the method shows some performance degradation as the distribution gap widens, it still demonstrates stronger robustness and adaptability than the compared methods. This is of great significance for cross-domain cloud computing task optimization, since in real applications, distribution shifts between different platforms and environments are inevitable. The results verify the practical value of the method in complex scenarios and provide a solid foundation for its extension to larger-scale and more complex cross-domain environments.

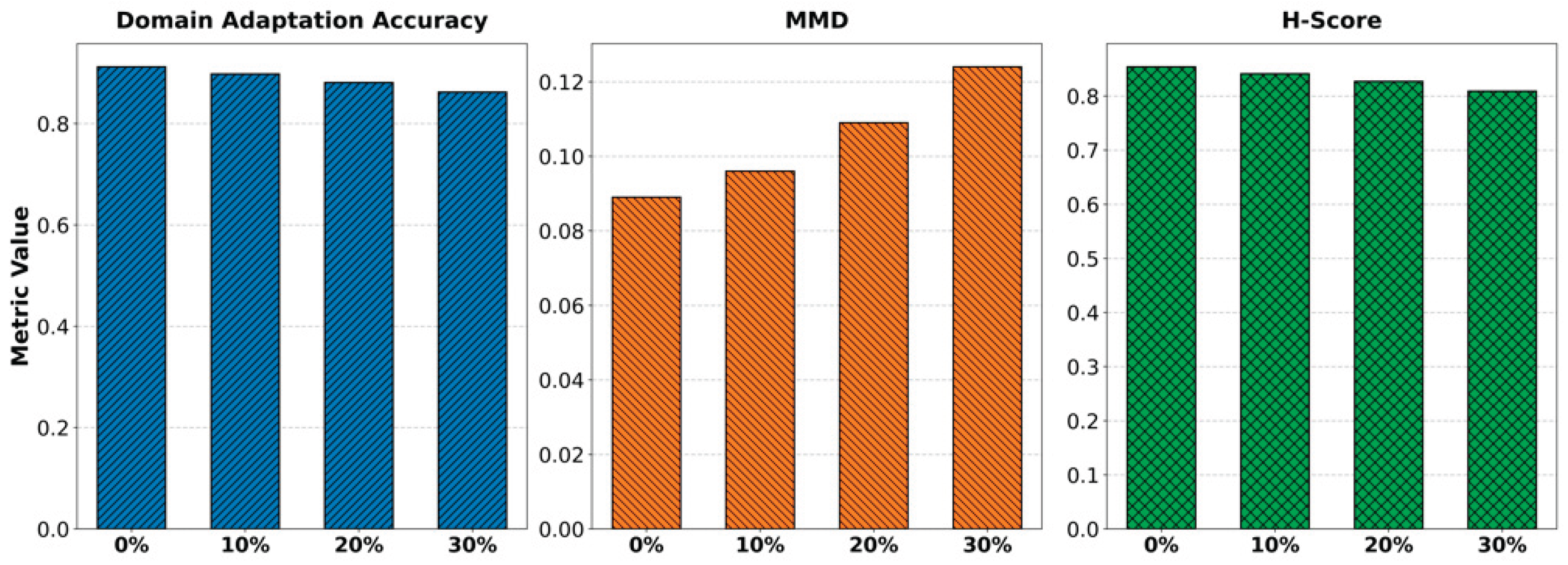

Finally, the effect of varying noise ratios on cross-domain feature alignment is analyzed, with the results presented in Figure 4.

From the figure, it can be observed that as the noise ratio gradually increases, Domain Adaptation Accuracy shows a slight downward trend, decreasing from about 0.91 to around 0.86. This indicates that the introduction of noise weakens the discriminative ability of the model in the target domain. However, the overall accuracy remains at a high level, which indicates that the method maintains a certain degree of robustness under noise interference. Such stability is particularly important for cross-domain cloud computing tasks, since data in real environments can rarely avoid noise contamination.

The MMD metric increases gradually with the noise ratio, rising from 0.089 to 0.124. This indicates that the feature distribution differences between the source and target domains become more pronounced in high-noise environments: noise disrupts cross-domain feature alignment and reduces feature consistency across domains. Nevertheless, the overall MMD value remains within a relatively low range, which suggests that the alignment mechanism of the proposed method is resistant to noise and can mitigate the impact of distribution shifts to a certain extent.

The H-Score trend reveals that performance balance declines as noise grows, dropping from 0.854 to around 0.809. This suggests that noise affects not only the target domain performance but also the balance between the source and target domains. Even so, the results remain above the level of conventional methods. This demonstrates that the proposed framework sustains relatively stable cross-domain transfer performance under complex conditions. Moreover, the findings support the method's effectiveness and practicality in handling uncertainty and noise interference in multi-source, multi-environment cloud computing tasks.
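The three sensitivity experiments can be compared on a common scale by converting the reported endpoints into relative changes. The short sketch below does this arithmetic using only the start and end values quoted in the text for Figures 2 to 4; the accuracy endpoints are stated only approximately, so the resulting percentages are rough. The dictionary layout and function name are illustrative choices.

```python
# (start, end) values quoted in the text for each stress factor.
reported = {
    "task load": {"accuracy": (0.91, 0.87), "mmd": (0.089, 0.118), "h_score": (0.854, 0.815)},
    "distribution gap": {"accuracy": (0.91, 0.87), "mmd": (0.089, 0.121), "h_score": (0.854, 0.811)},
    "noise ratio": {"accuracy": (0.91, 0.86), "mmd": (0.089, 0.124), "h_score": (0.854, 0.809)},
}


def relative_change(start: float, end: float) -> float:
    """Signed change from start to end, as a percentage of start."""
    return (end - start) / start * 100.0


summary = {
    factor: {metric: relative_change(*pair) for metric, pair in metrics.items()}
    for factor, metrics in reported.items()
}

for factor, changes in summary.items():
    row = ", ".join(f"{metric}: {value:+.1f}%" for metric, value in changes.items())
    print(f"{factor}: {row}")
```

Read this way, accuracy and H-Score fall by roughly 4 to 6 percent across the three factors, while MMD rises by roughly a third or more relative to its starting value, with the noise experiment producing the largest change in all three metrics.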