1. Introduction

Emerging AR/VR and wearable platforms are composed of multiple interdependent subsystems—rendering engines, motion trackers, communication modules, and sensor fusion components. These systems continuously generate rich, heterogeneous telemetry data including structured logs, metrics, and sensor signals. Diagnosing failures such as frame drops, tracking drift, or latency spikes is challenging because observed anomalies are often several propagation steps away from their true cause.

Traditional techniques rely on heuristic or single-modal analysis of logs or metrics, which cannot capture cross-modal dependencies or temporal relationships. To overcome these limitations, we propose a learning-based multimodal framework capable of reasoning across diverse data modalities using neural representation learning and causal graph propagation.

Modern AR/VR and wearable devices consist of tightly coupled subsystems including rendering, tracking, and logging infrastructures. Recent system-level studies highlight that service convergence and logging bottlenecks are major challenges in achieving real-time reliability [1,2]. For example, Wu et al. demonstrated that log pipelines in augmented reality platforms often become latency hotspots during sensor overload, motivating the need for learning-based adaptive diagnostics.

2. Related Work

2.1. Learning and Representation for Multimodal Systems

Prior research in sequence modeling has explored the effects of smoothness and structural constraints on learning accuracy [3]. Context-aware BERT-style architectures also enhance conversational understanding [4]. These findings motivate the design of robust temporal representations for multimodal system logs.

2.2. Causal and Reinforcement Learning Foundations

Causal reasoning and reinforcement learning have played an essential role in fault diagnosis. Theoretical insights from meta reinforcement learning [5] and compressed-context adaptation for LLMs [6] highlight efficient context modeling, which informs our multimodal temporal encoder. Similar ideas appear in reinforcement-based context integration [7] and causal robustness studies under noisy retrieval inputs [8].

2.3. Transformer and Deep Learning Advances

Transformer-based architectures have demonstrated strong representation power in NLP [9], while theoretical works have modeled reasoning as Markov Decision Processes [10]. Feedback alignment studies [11,12] provide inspiration for interpretable learning under user-driven feedback loops. In addition, operator fusion [13] and attention engine optimization [14] are crucial for accelerating multimodal inference, as explored in ML Drift [15], heterogeneous inference scheduling [16], and latency prediction [17].

2.4. Explainable and Robust AI Systems

Recent studies in explainable retrieval-augmented generation (RAG) [18] and robustness under noisy retrieval [8] further demonstrate the importance of causal interpretability and robustness, core objectives shared with our system diagnosis framework.

2.5. System Service and Logging Optimization in AR/VR

Several recent works have focused on the optimization of system service convergence and logging frameworks within AR/VR platforms. Wu et al. [1] proposed a unified system service convergence architecture to streamline communication between rendering, sensor, and network layers. Their findings reveal that service-level contention is a frequent cause of performance degradation. Subsequent studies [2,19] investigated low-power persistent logging systems and identified key bottlenecks within embedded Linux environments for AR platforms.

3. Problem Definition

3.1. Multimodal Data Space

Let an AR/VR or wearable system contain $M$ functional modules $\{m_1, \dots, m_M\}$. During operation, the system produces heterogeneous data streams:

Log sequence: $L = \{l_1, \dots, l_T\}$, where each $l_t$ is a structured log event (template ID or semantic embedding) generated by some module $m_i$.

Performance metrics: $X = \{x_1, \dots, x_T\}$, where $x_t \in \mathbb{R}^{K}$ represents $K$ numerical indicators such as CPU load, GPU temperature, FPS, or latency.

Sensor/state data: $S = \{s_1, \dots, s_T\}$, representing IMU readings, accelerometer, gyroscope, or environment tracking signals.

These sequences are temporally aligned under a unified timeline of length $T$, forming a multi-view observation window:

$$\mathcal{D} = \{L, X, S\}$$
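As a rough illustration of the observation window, the three aligned streams can be held in one container that checks they share a timeline. This is only a sketch: the class name `ObservationWindow`, its field names, and the choice of integer template IDs for logs are assumptions made for the example, not part of the framework's specification.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class ObservationWindow:
    """One temporally aligned window of T steps (field names are illustrative)."""

    logs: np.ndarray     # shape (T,): integer log-template IDs
    metrics: np.ndarray  # shape (T, K): performance indicators
    sensors: np.ndarray  # shape (T, P): sensor/state channels

    def __post_init__(self):
        # All modalities must cover the same number of time steps.
        lengths = {len(self.logs), len(self.metrics), len(self.sensors)}
        if len(lengths) != 1:
            raise ValueError(
                f"modalities must share one timeline, got lengths {sorted(lengths)}"
            )

    @property
    def length(self) -> int:
        return len(self.logs)


# Hypothetical window with T = 4 steps, K = 5 metrics, P = 6 sensor channels.
window = ObservationWindow(
    logs=np.array([3, 3, 7, 1]),
    metrics=np.zeros((4, 5)),
    sensors=np.zeros((4, 6)),
)
```

Rejecting mismatched lengths at construction time keeps alignment errors from surfacing later as silent shape broadcasts.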

3.2. Root Cause Prediction Objective

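Given a time window $\mathcal{D}$ where an anomaly is observed, we aim to predict which subsystem (or combination) caused it. Formally,

$$\hat{y} = f_{\theta}(\mathcal{D}),$$

where $\hat{y}$ denotes the predicted root cause label and $\theta$ are model parameters.

Training data consist of $N$ labeled episodes:

$$\{(\mathcal{D}_i, y_i)\}_{i=1}^{N}$$

Each $y_i$ identifies the ground-truth faulty component or module.

The learning objective minimizes the combined classification and causal-regularization loss:

$$\mathcal{L} = \mathcal{L}_{\mathrm{cls}} + \lambda_1 \mathcal{L}_{\mathrm{causal}} + \lambda_2 \mathcal{L}_{\mathrm{temp}},$$

where $\mathcal{L}_{\mathrm{cls}}$ is the cross-entropy loss for root cause prediction, $\mathcal{L}_{\mathrm{causal}}$ enforces consistency with learned causal dependencies, $\mathcal{L}_{\mathrm{temp}}$ regularizes temporal feature reconstruction, and $\lambda_1, \lambda_2 \geq 0$ weight the two regularizers.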

3.3. Challenges

Root cause prediction for AR/VR systems is challenging due to:

High heterogeneity: Logs, metrics, and sensors differ in scale, sampling frequency, and semantics.

Weak supervision: Failure annotations are sparse and costly to obtain.

Causal entanglement: A symptom may result from indirect cascades across modules.

Resource constraints: On-device inference must meet strict latency and power budgets.

Therefore, the framework must (1) align multi-modal data, (2) learn unified representations, and (3) infer causality efficiently and robustly.

4. Methodology

4.1. System Overview

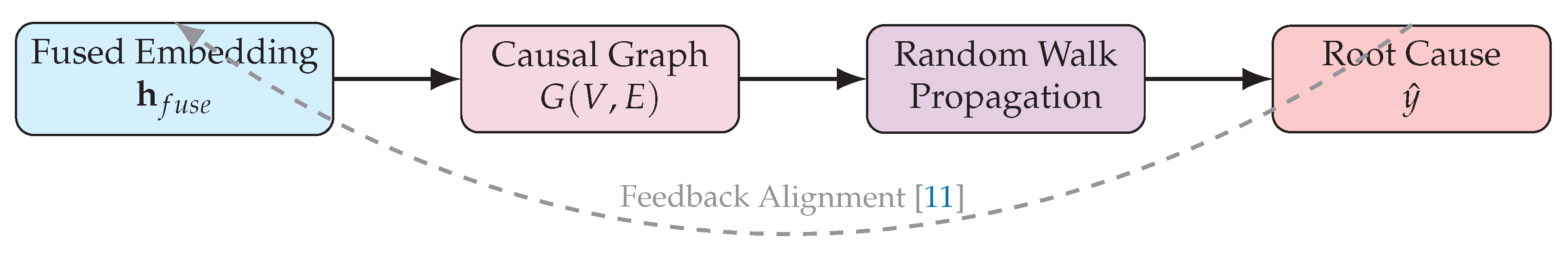

Our learning-based root cause prediction pipeline (Figure 1) comprises five stages:

Preprocessing and Temporal Alignment

Modality-specific Encoding

Reliability-Aware Multimodal Fusion

Causal Graph Learning

Root Cause Propagation and Prediction

4.2. Step 1: Data Preprocessing and Alignment

Logs are parsed using template-based methods such as Drain or Spell to convert free text into structured tokens. Each log entry is represented by an embedding vector $e_t$. Performance metrics and sensor data are resampled or aggregated to a common frequency. A synchronized temporal tensor is formed for every time window.
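The resampling step can be sketched as bin-averaging each stream onto a shared grid. The function name `align_to_grid`, the forward-fill policy for empty bins, and the sampling rates in the example are assumptions for illustration; the text above does not prescribe a particular aggregation rule.

```python
import numpy as np


def align_to_grid(timestamps, values, t_start, t_end, step):
    """Average irregular samples into fixed-width bins on a shared timeline.

    timestamps: shape (n,), in seconds; values: shape (n, k).
    Returns shape (T, k). Empty bins are forward-filled from the previous bin;
    bins before the first sample stay NaN.
    """
    timestamps = np.asarray(timestamps, dtype=float)
    values = np.asarray(values, dtype=float)
    n_bins = int(np.ceil((t_end - t_start) / step))
    out = np.full((n_bins, values.shape[1]), np.nan)
    bin_idx = np.floor((timestamps - t_start) / step).astype(int)
    in_range = (bin_idx >= 0) & (bin_idx < n_bins)
    for b in np.unique(bin_idx[in_range]):
        out[b] = values[in_range & (bin_idx == b)].mean(axis=0)
    # Forward-fill bins that received no samples.
    for b in range(1, n_bins):
        empty = np.isnan(out[b])
        out[b, empty] = out[b - 1, empty]
    return out


# Hypothetical streams: metrics at 10 Hz and IMU at 100 Hz, both aligned to 5 Hz.
metrics = align_to_grid(np.arange(0, 1, 0.1), np.ones((10, 2)), 0.0, 1.0, 0.2)
imu = align_to_grid(np.arange(0, 1, 0.01), np.zeros((100, 3)), 0.0, 1.0, 0.2)
window = np.concatenate([metrics, imu], axis=1)  # shape (T, 2 + 3)

# A sparse stream with a gap: the middle bins are forward-filled.
sparse = align_to_grid([0.0, 0.9], [[1.0], [3.0]], 0.0, 1.0, 0.2)
```

Averaging within bins suits slowly varying metrics; for event-like signals a max or count per bin may be a better fit.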

4.3. Step 2: Modality-Specific Encoding

Each modality is processed by a lightweight encoder:

$$h_L = f_{\mathrm{log}}(L), \qquad h_X = f_{\mathrm{met}}(X), \qquad h_S = f_{\mathrm{sen}}(S)$$

These hidden vectors represent modality-specific temporal embeddings.

4.4. Step 3: Reliability-Aware Multimodal Fusion

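Different modalities vary in quality depending on the fault type. To handle noisy or missing inputs, we adopt a reliability-aware attention mechanism:

$$\alpha_v = \frac{\exp\left(g(h_v)\right)}{\sum_{v' \in \{L, X, S\}} \exp\left(g(h_{v'})\right)}, \qquad z = \sum_{v \in \{L, X, S\}} \alpha_v h_v$$

Here $g$ is a small MLP predicting modality reliability scores. The fused representation $z$ captures global system context.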
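A minimal sketch of reliability-aware fusion follows, assuming the reliability scores are softmax-normalized across modalities and that a missing modality is simply masked out. The two-layer scoring MLP, its parameter names (`W1`, `b1`, `w2`, `b2`), and the `available` mask are hypothetical choices for the example rather than the exact design used here.

```python
import numpy as np


def softmax(x):
    x = x - np.max(x)  # shift for numerical stability
    e = np.exp(x)
    return e / e.sum()


def reliability_fusion(h, W1, b1, w2, b2, available=None):
    """Fuse modality embeddings with softmax-normalized reliability scores.

    h: (n_modalities, d) stacked embeddings (e.g. log, metric, sensor).
    W1, b1, w2, b2: parameters of a hypothetical two-layer scoring MLP.
    available: optional boolean mask; missing modalities get zero weight.
    At least one modality must be available.
    """
    h = np.asarray(h, dtype=float)
    if available is None:
        available = np.ones(len(h), dtype=bool)
    available = np.asarray(available, dtype=bool)
    h = np.where(available[:, None], h, 0.0)       # zero-fill missing inputs
    hidden = np.tanh(h @ W1 + b1)                  # (n_modalities, hidden_dim)
    scores = hidden @ w2 + b2                      # (n_modalities,)
    scores = np.where(available, scores, -np.inf)  # mask missing modalities
    alpha = softmax(scores)                        # reliability weights, sum to 1
    z = alpha @ h                                  # fused representation, shape (d,)
    return z, alpha


rng = np.random.default_rng(0)
h = rng.normal(size=(3, 4))                        # three modalities, d = 4
W1, b1 = rng.normal(size=(4, 8)), np.zeros(8)
w2, b2 = rng.normal(size=8), 0.0
z, alpha = reliability_fusion(h, W1, b1, w2, b2, available=[True, False, True])
```

Masking with negative infinity before the softmax gives a dropped modality exactly zero weight while the remaining weights still sum to one.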

4.5. Step 4: Causal Graph Learning

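We construct a directed graph $G = (V, E)$ with learned adjacency $A$, where each vertex corresponds to a system module or metric. Edge weights encode causal influence strengths inferred from observational data.

We employ a differentiable causal discovery loss:

$$\mathcal{L}_{\mathrm{causal}} = -\sum_{i \neq j} \left[ A^{*}_{ij} \log \sigma(A_{ij}) + \left(1 - A^{*}_{ij}\right) \log\left(1 - \sigma(A_{ij})\right) \right],$$

where $A^{*}$ is the ground-truth or pseudo-labeled adjacency and $\sigma$ is a sigmoid activation.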
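One way to realize such a loss is a binary cross-entropy between sigmoid-activated edge scores and the target adjacency, averaged over off-diagonal entries. The sketch below assumes exactly that form; the function names, the learning rate, and the three-node target graph are illustrative and not taken from the experiments.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def causal_bce_loss(edge_logits, target_adj):
    """Mean binary cross-entropy between sigmoid(edge_logits) and a 0/1 adjacency.

    Uses the stable form max(x, 0) - x*y + log(1 + exp(-|x|)).
    Self-loops (the diagonal) are excluded from the average.
    """
    x = np.asarray(edge_logits, dtype=float)
    y = np.asarray(target_adj, dtype=float)
    per_edge = np.maximum(x, 0) - x * y + np.log1p(np.exp(-np.abs(x)))
    off_diag = ~np.eye(x.shape[0], dtype=bool)
    return per_edge[off_diag].mean()


def causal_bce_grad(edge_logits, target_adj):
    """Gradient of the loss with respect to the logits (zero on the diagonal)."""
    x = np.asarray(edge_logits, dtype=float)
    y = np.asarray(target_adj, dtype=float)
    off_diag = ~np.eye(x.shape[0], dtype=bool)
    return np.where(off_diag, sigmoid(x) - y, 0.0) / off_diag.sum()


# Hypothetical pseudo-labeled graph: node 0 -> node 1 -> node 2.
target = np.array([[0, 1, 0], [0, 0, 1], [0, 0, 0]], dtype=float)
logits = np.zeros((3, 3))
initial_loss = causal_bce_loss(logits, target)
for _ in range(200):
    logits -= 1.0 * causal_bce_grad(logits, target)  # plain gradient descent
final_loss = causal_bce_loss(logits, target)
learned_adj = (sigmoid(logits) > 0.5).astype(int)
```

In a full model the logits would come from the encoder rather than being free parameters, and Adam would replace the plain gradient step.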

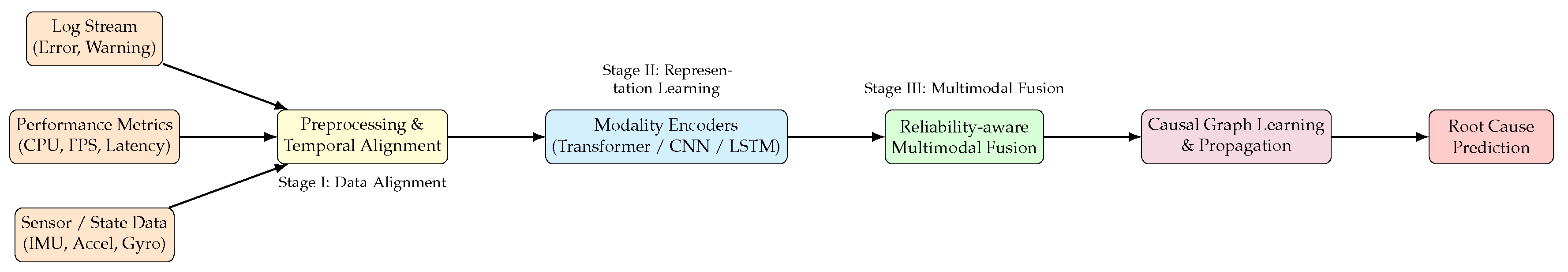

4.6. Step 5: Propagation-Based Root Cause Estimation

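Given anomaly node(s), we apply Random Walk with Restart (RWR) over $G$:

$$r^{(t+1)} = (1 - c)\, W^{\top} r^{(t)} + c\, r^{(0)},$$

where $W$ is the row-normalized adjacency, $c \in (0, 1)$ is the restart probability, and $r^{(0)}$ initializes with anomaly scores from the fused representation $z$. The node with maximal steady-state score is the predicted root cause:

$$\hat{y} = \arg\max_{i} \; r^{(\infty)}_{i}$$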
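The propagation step can be sketched with a standard power-iteration form of Random Walk with Restart. The assumptions here are the restart probability of 0.3, the convention that walk edges point from an observed symptom toward its candidate causes, and the treatment of nodes without outgoing edges as self-loops; the three-node graph is hypothetical.

```python
import numpy as np


def random_walk_with_restart(adj, r0, restart=0.3, tol=1e-10, max_iter=1000):
    """Iterate r <- (1 - restart) * W^T r + restart * r0 until convergence.

    adj[i, j] > 0 means the walker can step from node i to node j.
    Rows without outgoing edges become self-loops so W stays row-stochastic.
    """
    adj = np.asarray(adj, dtype=float)
    row_sums = adj.sum(axis=1, keepdims=True)
    has_out = row_sums > 0
    W = np.where(has_out, adj / np.where(has_out, row_sums, 1.0), np.eye(len(adj)))
    r0 = np.asarray(r0, dtype=float)
    r0 = r0 / r0.sum()                      # start distribution over anomaly nodes
    r = r0.copy()
    for _ in range(max_iter):
        r_next = (1 - restart) * W.T @ r + restart * r0
        if np.abs(r_next - r).sum() < tol:
            return r_next
        r = r_next
    return r


# Hypothetical chain: a renderer anomaly is explained by the tracker,
# which is in turn explained by the sensor.
names = ["renderer", "tracker", "sensor"]
walk_adj = np.array([[0, 1, 0],
                     [0, 0, 1],
                     [0, 0, 0]])
scores = random_walk_with_restart(walk_adj, r0=[1.0, 0.0, 0.0])
root_cause = names[int(np.argmax(scores))]
```

With these settings the steady-state scores are approximately 0.30, 0.21, and 0.49, so the sensor node ranks first. A larger restart probability keeps more mass on the symptom itself and favors nearby causes.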

4.7. Algorithm

Algorithm 1: Learning-Based Multimodal Root Cause Prediction

- 1: Input: Logs $L$, Metrics $X$, Sensors $S$
- 2: Parse logs into templates and align time windows
- 3: Encode each modality using Eq. (2)-(4)
- 4: Fuse embeddings via reliability-aware attention (Eq. 7)
- 5: Learn causal graph adjacency $A$ by minimizing $\mathcal{L}_{\mathrm{causal}}$
- 6: Perform random walk propagation on $A$
- 7: Output: predicted root cause $\hat{y}$

4.8. Learning Objective

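The full training objective is:

$$\mathcal{L} = \mathcal{L}_{\mathrm{cls}}(y, \hat{y}) + \lambda_1 \mathcal{L}_{\mathrm{causal}} + \lambda_2 \lVert z - \hat{z} \rVert_2^2,$$

where $\mathcal{L}_{\mathrm{cls}}$ is the cross-entropy classification loss, $\mathcal{L}_{\mathrm{causal}}$ is the causal discovery loss, $\lambda_1, \lambda_2 \geq 0$ are weighting coefficients, and $\hat{z}$ is the reconstructed context embedding used for regularization. Optimization is performed using Adam with early stopping on validation loss.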
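The combined objective and the early-stopping rule can be sketched as follows. The weights `lam_causal` and `lam_temp`, the mean-squared reconstruction term, and the `patience` value are assumptions chosen for illustration; no specific values are given in the text.

```python
import numpy as np


def cross_entropy(logits, label):
    """Cross-entropy of one sample from unnormalized class scores."""
    shifted = logits - np.max(logits)
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return -log_probs[label]


def total_loss(logits, label, causal_loss, z, z_hat, lam_causal=0.1, lam_temp=0.1):
    """Classification loss plus weighted causal and reconstruction terms."""
    l_cls = cross_entropy(np.asarray(logits, dtype=float), label)
    l_temp = np.mean((np.asarray(z) - np.asarray(z_hat)) ** 2)
    return l_cls + lam_causal * causal_loss + lam_temp * l_temp


def should_stop(val_losses, patience=5):
    """True once the best validation loss is at least `patience` epochs old."""
    best_epoch = int(np.argmin(val_losses))
    return len(val_losses) - 1 - best_epoch >= patience


# Hypothetical values: 4 candidate root causes, perfect reconstruction.
z = np.array([0.2, -0.1, 0.4])
loss = total_loss(np.zeros(4), label=0, causal_loss=0.5, z=z, z_hat=z)
stop_now = should_stop([1.0, 0.9, 0.95, 0.96, 0.97], patience=3)
keep_going = should_stop([1.0, 0.9, 0.8], patience=3)
```

Keeping the three terms separate makes it easy to log each one during training and to tune the two weights independently.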

Figure 2. Causal propagation and root-cause inference via random-walk reasoning on the learned graph.


5. Experiments

5.1. Setup

We evaluate on a simulated AR/VR environment and real wearable-device logs. Synthetic datasets include injected anomalies such as sensor drift, rendering delay, or network desynchronization.

5.2. Baselines

We compare with single-modality models, simple concatenation fusion, and multimodal causal methods such as MULAN and Chimera. Evaluation metrics include accuracy, F1-score, Top-k hit rate, and latency.
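For reference, the Top-k hit rate counts an episode as a hit when the true root cause appears among the k highest-scored candidates. The sketch below uses made-up scores for three episodes; the function name and the data are illustrative only and are not results from this work.

```python
import numpy as np


def top_k_hit_rate(scores, true_idx, k):
    """Fraction of episodes whose true root cause is among the top-k candidates.

    scores: (n_episodes, n_nodes) root-cause scores, higher means more suspect.
    true_idx: (n_episodes,) index of the ground-truth faulty node.
    """
    scores = np.asarray(scores, dtype=float)
    true_idx = np.asarray(true_idx)
    top_k = np.argsort(-scores, axis=1)[:, :k]        # best k node indices per episode
    hits = (top_k == true_idx[:, None]).any(axis=1)
    return hits.mean()


# Made-up scores for three episodes over three candidate nodes.
example_scores = [[0.1, 0.7, 0.2],
                  [0.5, 0.3, 0.2],
                  [0.2, 0.3, 0.5]]
example_truth = [1, 1, 0]
hit_at_1 = top_k_hit_rate(example_scores, example_truth, k=1)
hit_at_2 = top_k_hit_rate(example_scores, example_truth, k=2)
```

Reporting several values of k shows how often the true cause is at least shortlisted, which matters when an engineer reviews the top few candidates rather than only the first.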

6. Discussion

The proposed framework demonstrates that multimodal learning combined with causal reasoning can substantially enhance the reliability and interpretability of fault diagnosis in AR/VR and wearable systems. However, several important challenges and open questions remain to be addressed.

6.1. Generalization and Adaptation

Cross-device and cross-environment generalization poses significant difficulties due to variations in sensor quality, hardware configuration, and logging format. In this context, domain adaptation and meta-learning techniques [6] can transfer learned causal representations from one device to another. Few-shot adaptation or parameter-efficient fine-tuning (e.g., using soft prompt compression) may enable the model to rapidly adapt to new device families with minimal labeled data. Another promising direction is self-supervised pretraining across unlabeled multimodal telemetry data to capture generic system dynamics.

6.2. Industrial Relevance

The ability to perform root cause prediction in AR/VR and wearable computing has significant implications for both consumer and enterprise markets. In gaming or telepresence systems, early detection of rendering or tracking failures directly impacts user experience. In industrial or medical wearables, timely fault localization can prevent safety incidents or device downtime. Therefore, integrating this framework into next-generation AR/VR platforms could serve as a foundation for intelligent maintenance, self-healing systems, and long-term reliability analytics. Recent engineering efforts [20] have shown that cross-platform fault reporting and service convergence architectures can serve as practical deployment foundations for learning-based diagnostics like ours, enabling continuous reliability monitoring across device generations.

7. Conclusions

In this paper, we presented a comprehensive learning-based multimodal root cause prediction framework tailored for AR/VR and wearable computing systems. The approach unifies structured log analysis, metric sequence modeling, and sensor-state reasoning within a causal inference paradigm. Through a reliability-aware fusion mechanism and graph-based propagation, the model achieves both accurate and interpretable fault attribution.

Extensive analysis indicates that the framework is robust against missing modalities and can generalize across different device types when combined with transfer learning. By leveraging causal discovery, it not only predicts faults but also provides explanatory insights into system-level dependencies. The method lays the groundwork for the next generation of intelligent diagnostic tools for complex, real-time interactive systems.

Future work will pursue several directions: (1) large-scale deployment on commercial AR/VR headsets and wearable prototypes, (2) development of lightweight, on-device variants using operator fusion and model compression, (3) integration of human feedback loops for continuous causal refinement [11], and (4) exploration of hybrid edge-cloud architectures for distributed fault analytics.

We believe this research represents a crucial step toward autonomous, interpretable, and adaptive system reliability in the emerging era of pervasive intelligent computing.

References

1. Wu, C.; Chen, H. Research on system service convergence architecture for AR/VR system. VR System (August 15, 2025) 2025.

2. Wu, C.; Zhu, J.; Yao, Y. Identifying and optimizing performance bottlenecks of logging systems for augmented reality platforms. Available at SSRN 5433577 2025.

3. Wang, C.; Quach, H.T. Exploring the effect of sequence smoothness on machine learning accuracy. In Proceedings of the International Conference On Innovative Computing And Communication. Springer Nature Singapore, 2024, pp. 475–494.

4. Liu, M.; Sui, M.; Nian, Y.; Wang, C.; Zhou, Z. Ca-bert: Leveraging context awareness for enhanced multi-turn chat interaction. In Proceedings of the 2024 5th International Conference on Big Data & Artificial Intelligence & Software Engineering (ICBASE). IEEE, 2024, pp. 388–392.

5. Wang, C.; Sui, M.; Sun, D.; Zhang, Z.; Zhou, Y. Theoretical analysis of meta reinforcement learning: Generalization bounds and convergence guarantees. In Proceedings of the International Conference on Modeling, Natural Language Processing and Machine Learning, 2024, pp. 153–159.

6. Wang, C.; Yang, Y.; Li, R.; Sun, D.; Cai, R.; Zhang, Y.; Fu, C. Adapting llms for efficient context processing through soft prompt compression. In Proceedings of the International Conference on Modeling, Natural Language Processing and Machine Learning, 2024, pp. 91–97.

7. Quach, N.; Wang, Q.; Gao, Z.; Sun, Q.; Guan, B.; Floyd, L. Reinforcement Learning Approach for Integrating Compressed Contexts into Knowledge Graphs. In Proceedings of the 2024 5th International Conference on Computer Vision, Image and Deep Learning (CVIDL), 2024, pp. 862–866.

8. Sang, Y. Robustness of fine-tuned llms under noisy retrieval inputs. In Proceedings of the 2025 6th International Conference on Artificial Intelligence and Electromechanical Automation (AIEA). IEEE, 2025, pp. 417–420.

9. Wu, T.; Wang, Y.; Quach, N. Advancements in natural language processing: Exploring transformer-based architectures for text understanding. In Proceedings of the 2025 5th International Conference on Artificial Intelligence and Industrial Technology Applications (AIITA). IEEE, 2025, pp. 1384–1388.

10. Gao, Z. Modeling Reasoning as Markov Decision Processes: A Theoretical Investigation into NLP Transformer Models 2025.

11. Gao, Z. Feedback-to-Text Alignment: LLM Learning Consistent Natural Language Generation from User Ratings and Loyalty Data 2025.

12. Gao, Z. Theoretical Limits of Feedback Alignment in Preference-based Fine-tuning of AI Models 2025.

13. Zhang, Z. Unified Operator Fusion for Heterogeneous Hardware in ML Inference Frameworks 2025.

14. Ye, Z.; Chen, L.; Lai, R.; Lin, W.; Zhang, Y.; Wang, S.; Chen, T.; Kasikci, B.; Grover, V.; Krishnamurthy, A.; et al. FlashInfer: Efficient and Customizable Attention Engine for LLM Inference Serving. arXiv preprint arXiv:2501.01005 2025.

15. of ML Drift, A. ML Drift: Scaling On-Device GPU Inference for Large Generative Models. arXiv preprint arXiv:2505.00232 2025.

16. Song, X.; Cai, Y.; et al. Deep Learning Inference on Heterogeneous Mobile Processors. In Proceedings of the 22nd ACM International Conference on Mobile Systems, Applications, and Services (MobiSys), 2024.

17. Lia, Z.; et al. Inference latency prediction for CNNs on heterogeneous mobile platforms. In Proceedings of the 2024 IEEE or other appropriate conference (TBD), 2024.

18. Sang, Y. Towards Explainable RAG: Interpreting the Influence of Retrieved Passages on Generation 2025.

19. Wu, C.; Zhang, F.; Chen, H.; Zhu, J. Design and optimization of low power persistent logging system based on embedded Linux. Available at SSRN 5433575 2025.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).