I. Introduction

The rapid development of Internet of Things (IoT) technologies is reshaping operational paradigms across many industries. From smart manufacturing and smart cities to home automation, billions of intelligent devices are interconnected through sensing, communication, and computing capabilities. As the number of devices continues to grow, managing and predicting their energy consumption has become an urgent challenge [1]. Effective energy management affects not only economic efficiency and environmental sustainability but also the scalability and long-term viability of IoT systems. In resource-constrained edge environments in particular, accurate energy consumption forecasting supports system design, task scheduling, and power allocation, making it a fundamental step toward realizing the vision of “green IoT.”

Traditional energy modeling approaches rely mainly on physical models or empirical formulas [2]. While these methods are effective in specific domains, they often fail to adapt to the complexity and variability of IoT applications. Energy consumption in IoT devices is influenced by multiple factors, including task load, operating status, transmission frequency, external environmental conditions, and inter-device coordination. These factors often exhibit nonlinear and temporal dependencies, which makes modeling difficult. In addition, the heterogeneous and distributed nature of IoT systems produces data that are multimodal, high-dimensional, and time-dependent, which further complicates predictive model design. Modeling from a single perspective cannot effectively capture the dynamic interactions among these complex features [3].

In recent years, data-driven methods have shown growing advantages in energy consumption modeling. Among them, Long Short-Term Memory (LSTM) networks, a type of recurrent neural network (RNN), perform strongly on time series data, making them a popular tool for IoT energy modeling. LSTM networks can capture long-term dependencies in historical states, enabling dynamic modeling of device energy use [4,5]. However, most existing LSTM-based methods focus primarily on intrinsic temporal patterns and often overlook the integration and modeling of external causal features [6]. In IoT scenarios, many variables affecting energy use have explicit causal structures. For example, task type directly influences CPU load, and network conditions affect transmission power. Failing to extract and model these causal factors can limit a model’s generalization ability and interpretability, reducing its practical value in deployment.

Incorporating causal features into modeling can enhance the model’s structural understanding of input variables and improve the robustness and controllability of energy forecasting. In complex systems, causal relationships provide reasoning paths that help models remain effective under anomalies, interventions, or deployment shifts. For IoT energy forecasting, causal features act as a structural enhancement mechanism: they help reduce data redundancy, improve sensitivity to key variables, and mitigate problems such as overfitting or excessive reliance on historical data. Causal modeling also improves the interpretability of predictions, allowing system developers to better understand the model’s decision-making process and design more effective energy control strategies [7].

Against this background, combining LSTM architectures with causal features is a promising direction for energy forecasting. It complements existing time series modeling approaches and supports the development of intelligent energy management systems for IoT. The approach pairs the modeling capacity of deep learning with the structural advantages of causal reasoning, which gives it both theoretical significance and practical potential. As edge computing, low-power communication protocols, and intelligent sensing devices continue to evolve, energy forecasting models will play an increasingly important role in supporting decision-making in smart cities, industrial automation, and smart homes. Research on energy prediction based on LSTM and causal features therefore aligns with the trend of AI-IoT integration and lays a foundation for efficient, reliable, and interpretable energy management.

III. Proposed Approach

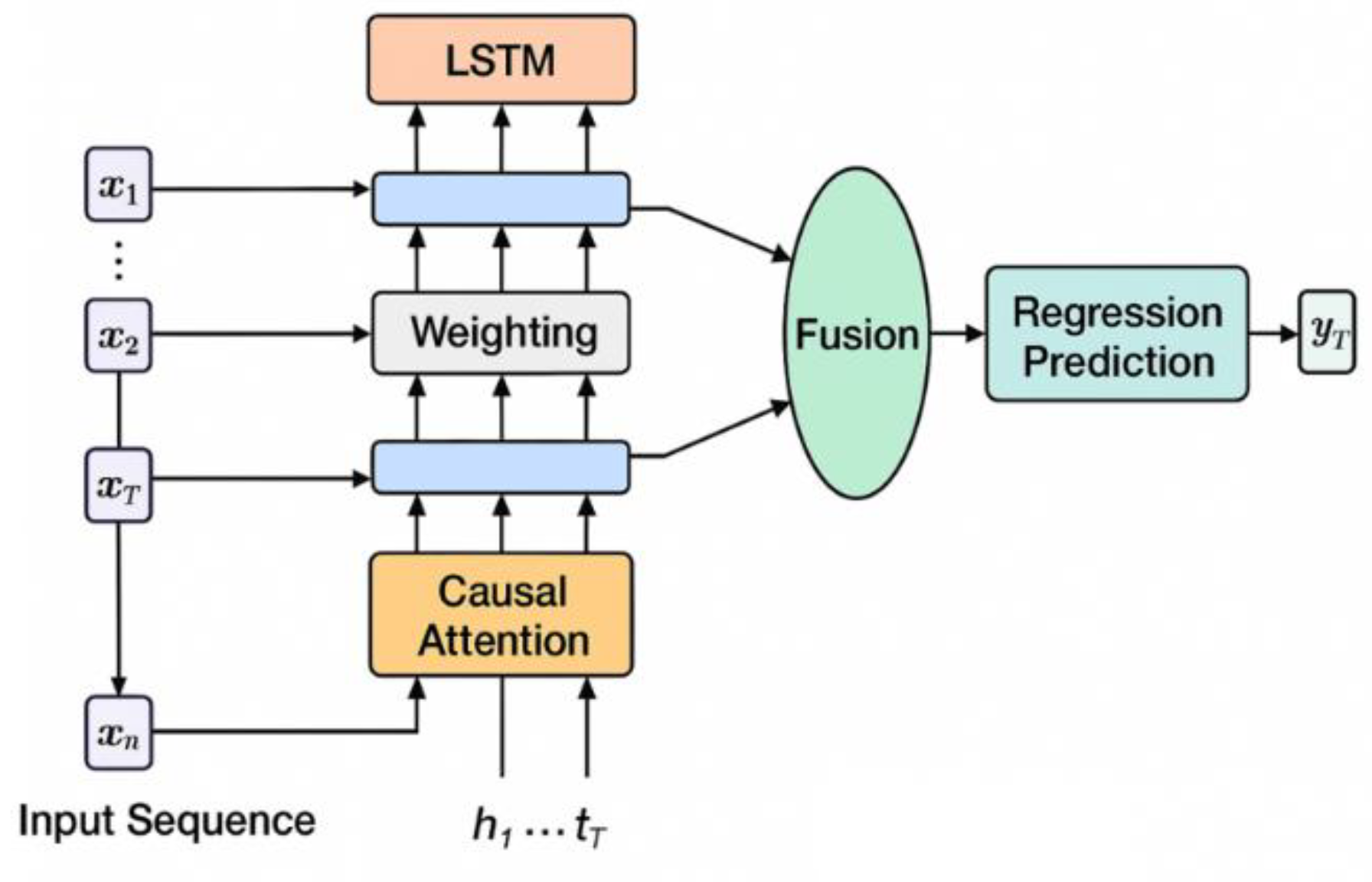

This method integrates a long short-term memory (LSTM) network with causal features that carry structural information about the system, with the goal of improving energy consumption prediction for IoT devices. First, consider an input sequence $X = \{x_1, x_2, \ldots, x_T\}$, where each item $x_t \in \mathbb{R}^{d}$ is a multidimensional feature vector collected at time step $t$. This feature vector has two components: historical state variables, such as CPU usage, temperature, humidity, and channel quality; and external factors with causal inference value, such as task type and resource scheduling flags. The overall model architecture is shown in Figure 1.
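As an illustration of how such a mixed feature vector might be assembled, the sketch below concatenates the state variables with a one-hot-encoded task type and a binary scheduling flag, and records which positions hold causal factors. The feature names, the task-type vocabulary (`TASK_TYPES`), and the helper `build_feature_vector` are hypothetical; the text does not specify an encoding.

```python
from typing import Dict, List, Tuple

# Hypothetical vocabularies; the source does not list concrete task types or keys.
TASK_TYPES = ["sensing", "transmission", "computation"]
STATE_KEYS = ["cpu_usage", "temperature", "humidity", "channel_quality"]


def build_feature_vector(state: Dict[str, float], task_type: str,
                         scheduling_flag: bool) -> Tuple[List[float], List[int]]:
    """Return x_t and a 0/1 mask marking the positions that hold causal factors."""
    if task_type not in TASK_TYPES:
        raise ValueError(f"unknown task type: {task_type!r}")
    # Historical state variables, always in the same order.
    state_part = [float(state[k]) for k in STATE_KEYS]
    # Causal factors: one-hot task type plus a binary resource-scheduling flag.
    task_part = [1.0 if task_type == t else 0.0 for t in TASK_TYPES]
    flag_part = [1.0 if scheduling_flag else 0.0]
    x_t = state_part + task_part + flag_part
    causal_mask = [0] * len(state_part) + [1] * (len(task_part) + len(flag_part))
    return x_t, causal_mask


x_t, mask = build_feature_vector(
    {"cpu_usage": 0.62, "temperature": 31.5, "humidity": 0.48, "channel_quality": 0.9},
    task_type="transmission",
    scheduling_flag=True,
)
print(x_t)   # [0.62, 31.5, 0.48, 0.9, 0.0, 1.0, 0.0, 1.0]
print(mask)  # [0, 0, 0, 0, 1, 1, 1, 1]
```

In practice, continuous state variables would usually be normalized before entering the LSTM; raw values are kept here for readability.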

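To model temporal dependencies, the input sequence is first fed into the LSTM unit, whose recurrence can be written in the standard form:

$$
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
$$

Here, $f_t$, $i_t$, and $o_t$ denote the activation vectors of the forget gate, input gate, and output gate, respectively; $c_t$ denotes the memory cell state; $h_t$ denotes the hidden state output at the current time step; $W_\ast$, $U_\ast$, and $b_\ast$ are the learnable input weights, recurrent weights, and biases; $\sigma(\cdot)$ denotes the sigmoid function; and $\odot$ denotes the Hadamard product. This structure captures both short-term and long-term dependencies in the time series, providing the information basis for energy consumption prediction.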
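A minimal, dependency-free sketch of the standard LSTM recursion follows. It assumes the common parameterization with separate input weights `W`, recurrent weights `U`, and biases `b` for each gate plus a tanh candidate state; the helper names (`lstm_step`, `run_lstm`) are illustrative, and a real implementation would use a framework layer such as PyTorch's `torch.nn.LSTM`.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
# Per-gate parameters: (W: input weights, U: recurrent weights, b: bias).
GateParams = Tuple[Matrix, Matrix, Vector]


def matvec(M: Matrix, v: Vector) -> Vector:
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def vadd(*vs: Vector) -> Vector:
    return [sum(vals) for vals in zip(*vs)]


def hadamard(a: Vector, b: Vector) -> Vector:
    return [x * y for x, y in zip(a, b)]


def sigmoid(v: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-z)) for z in v]


def vtanh(v: Vector) -> Vector:
    return [math.tanh(z) for z in v]


def lstm_step(x_t: Vector, h_prev: Vector, c_prev: Vector,
              params: Dict[str, GateParams]) -> Tuple[Vector, Vector]:
    """One LSTM step; params has keys 'f', 'i', 'o' (gates) and 'c' (candidate)."""
    def pre(gate: str) -> Vector:
        W, U, b = params[gate]
        return vadd(matvec(W, x_t), matvec(U, h_prev), b)

    f_t = sigmoid(pre("f"))   # forget gate
    i_t = sigmoid(pre("i"))   # input gate
    o_t = sigmoid(pre("o"))   # output gate
    c_hat = vtanh(pre("c"))   # candidate cell state
    c_t = vadd(hadamard(f_t, c_prev), hadamard(i_t, c_hat))
    h_t = hadamard(o_t, vtanh(c_t))
    return h_t, c_t


def run_lstm(xs: List[Vector], hidden_size: int,
             params: Dict[str, GateParams]) -> List[Vector]:
    """Run the cell over a sequence from zero initial states; return every h_t."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    outputs = []
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, params)
        outputs.append(h)
    return outputs


# Toy check: input size 2, hidden size 1, all weights zero, candidate bias 1.
d, k = 2, 1
zero_W = [[0.0] * d for _ in range(k)]
zero_U = [[0.0] * k for _ in range(k)]
params = {g: (zero_W, zero_U, [0.0] * k) for g in "fio"}
params["c"] = (zero_W, zero_U, [1.0] * k)
hs = run_lstm([[0.3, -0.2], [0.1, 0.4]], k, params)
```

With all weights zero and only the candidate bias set to 1, every gate equals 0.5, so the first hidden state is 0.5·tanh(0.5·tanh(1)), which makes the step easy to verify by hand.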

To further integrate causal information, this study introduces a structure-guided causal attention mechanism that assigns weights to the different causal features [20]. Specifically, a causal mask matrix is constructed to indicate which input dimensions have explicit causal relationships. Based on this mask, a causal weight vector is defined, which is calculated as follows:
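One plausible form for such a mask-guided weighting, offered as an assumption rather than the paper's formula, scores each input dimension and applies a softmax restricted to the dimensions flagged as causal, so unflagged dimensions receive zero weight. The sketch reduces the mask matrix to a 0/1 vector over input dimensions (for example, its diagonal), and the scoring weights `score_w` are hypothetical stand-ins for learned parameters.

```python
import math
from typing import List


def causal_attention_weights(x_t: List[float], score_w: List[float],
                             mask: List[int]) -> List[float]:
    """Masked softmax over input dimensions (an assumed form, not the paper's formula).

    score_w: hypothetical per-dimension scoring weights (learned in practice).
    mask:    1 where a dimension is marked as causal, 0 otherwise.
    """
    if not (len(x_t) == len(score_w) == len(mask)):
        raise ValueError("x_t, score_w, and mask must have the same length")
    if not any(mask):
        raise ValueError("mask must flag at least one causal dimension")
    scores = [w * x for w, x in zip(score_w, x_t)]
    # Subtract the largest causal score before exponentiating, for numerical stability.
    top = max(s for s, keep in zip(scores, mask) if keep)
    exps = [math.exp(s - top) if keep else 0.0 for s, keep in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]


def weight_features(x_t: List[float], alpha: List[float]) -> List[float]:
    """Element-wise (Hadamard) product of the weights and the raw features."""
    return [a * x for a, x in zip(alpha, x_t)]


x_t = [0.62, 31.5, 0.48, 0.9, 0.0, 1.0, 0.0, 1.0]
mask = [0, 0, 0, 0, 1, 1, 1, 1]
alpha = causal_attention_weights(x_t, [1.0] * len(x_t), mask)
weighted = weight_features(x_t, alpha)
```

Hard masking removes non-causal dimensions only from this weighted branch; they still reach the predictor through the LSTM. A softer variant would add a positive bias to the scores of causal dimensions instead of zeroing the rest.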

This mechanism guides the model to prioritize feature dimensions with causal relationships, making feature selection more principled and interpretable. The final regression prediction is obtained by fusing the weighted causal features with the LSTM output:

Here, $\oplus$ represents the feature concatenation operation, and $\hat{y}_t$ is the predicted energy consumption value at time step $t$. This fusion gives the model both temporal memory and causal awareness, supporting more stable and accurate regression on multi-source, heterogeneous IoT data.
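Assuming the fusion feeds a single linear regression layer (an assumption; the text describes the fusion only as concatenation followed by regression), the prediction step reduces to a dot product over the concatenated vector. The function `fuse_and_predict`, the weights `w_out`, and the bias `b_out` below are hypothetical.

```python
from typing import List


def fuse_and_predict(h_t: List[float], weighted_x: List[float],
                     w_out: List[float], b_out: float) -> float:
    """Concatenate h_t with the causally weighted features, then apply a linear head.

    The single linear layer is an assumption; the source only states that the
    concatenated features are used for regression.
    """
    z = h_t + weighted_x  # list concatenation plays the role of the concat operator
    if len(w_out) != len(z):
        raise ValueError(f"w_out has {len(w_out)} entries; expected {len(z)}")
    return sum(w * v for w, v in zip(w_out, z)) + b_out


h_t = [0.2, -0.1]                       # illustrative LSTM hidden state
weighted_x = [0.0, 0.5, 0.0, 0.5]       # illustrative causally weighted features
w_out = [1.0, 1.0, 0.5, 0.5, 0.5, 0.5]  # hypothetical regression weights
y_hat = fuse_and_predict(h_t, weighted_x, w_out, b_out=0.1)
print(round(y_hat, 6))  # 0.7
```

A deeper regression head (for example, a small multilayer perceptron) would slot into the same place without changing the concatenation step.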

To optimize the learning process, the objective function is the standard mean squared error (MSE), which measures the difference between the predicted and actual energy consumption values:

$$
\mathcal{L}_{\mathrm{MSE}} = \frac{1}{N} \sum_{t=1}^{N} \left( y_t - \hat{y}_t \right)^2
$$

where $N$ is the number of predictions, $y_t$ is the true value, and $\hat{y}_t$ is the predicted value. Because errors are squared, this loss penalizes large deviations more heavily and drives the predictions toward the observed values. The overall framework is modular and can be adapted to different types of IoT devices and task scenarios, which supports practical deployment.
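The MSE objective can be computed directly, as in the short sketch below. It also derives RMSE, one of the evaluation metrics reported for the model, as the square root of the MSE; the function names and sample values are illustrative.

```python
import math
from typing import Sequence


def mse_loss(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error: average of (y_t - y_hat_t)^2 over N predictions."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error, expressed in the same units as the target."""
    return math.sqrt(mse_loss(y_true, y_pred))


y_true = [2.0, 4.0, 6.0, 8.0]  # illustrative energy readings
y_pred = [2.5, 3.5, 6.0, 9.0]
print(mse_loss(y_true, y_pred))  # 0.375
```

The single error of 1.0 contributes four times as much as each 0.5 error, which illustrates why MSE emphasizes large deviations.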

V. Conclusion

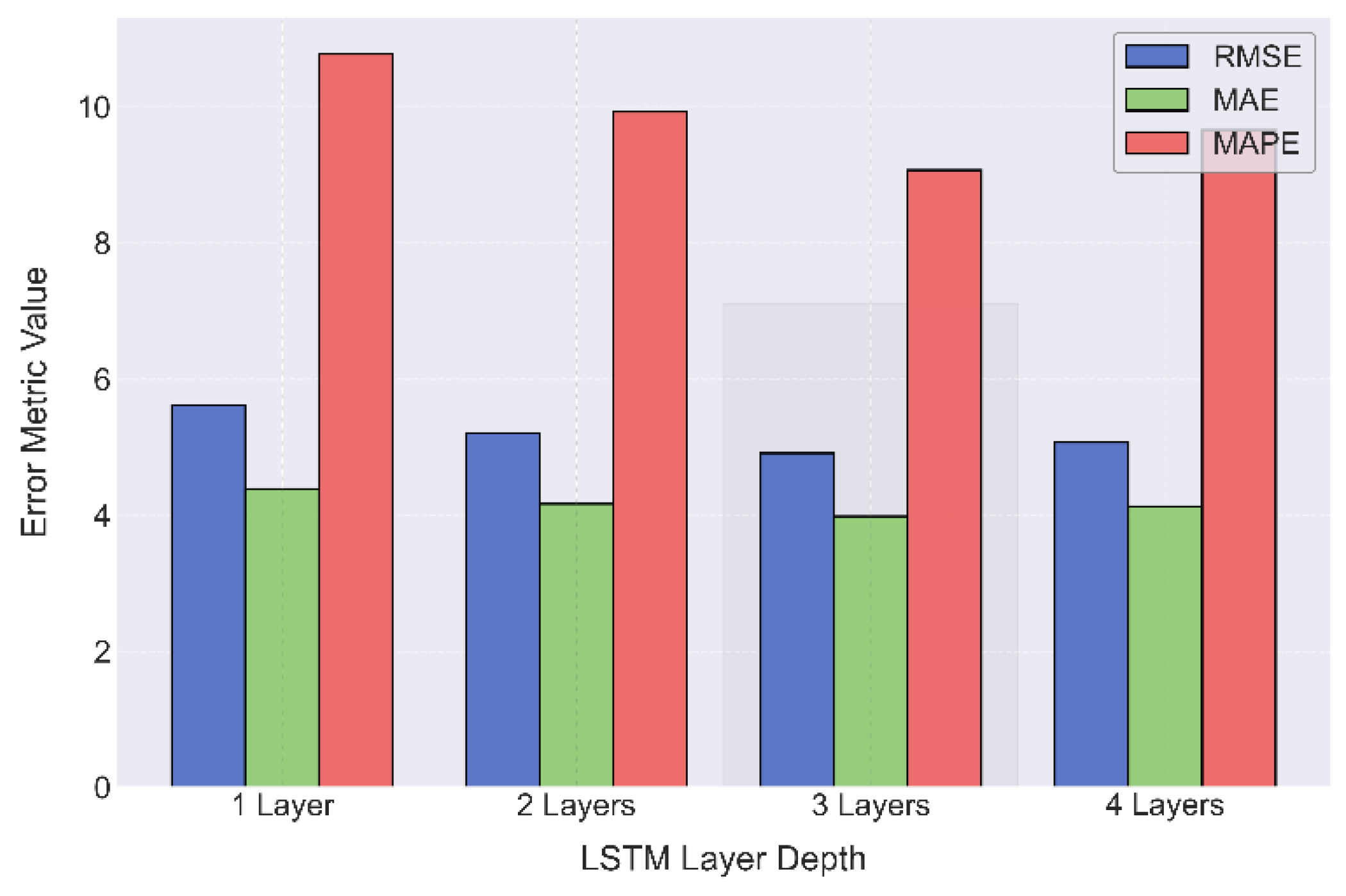

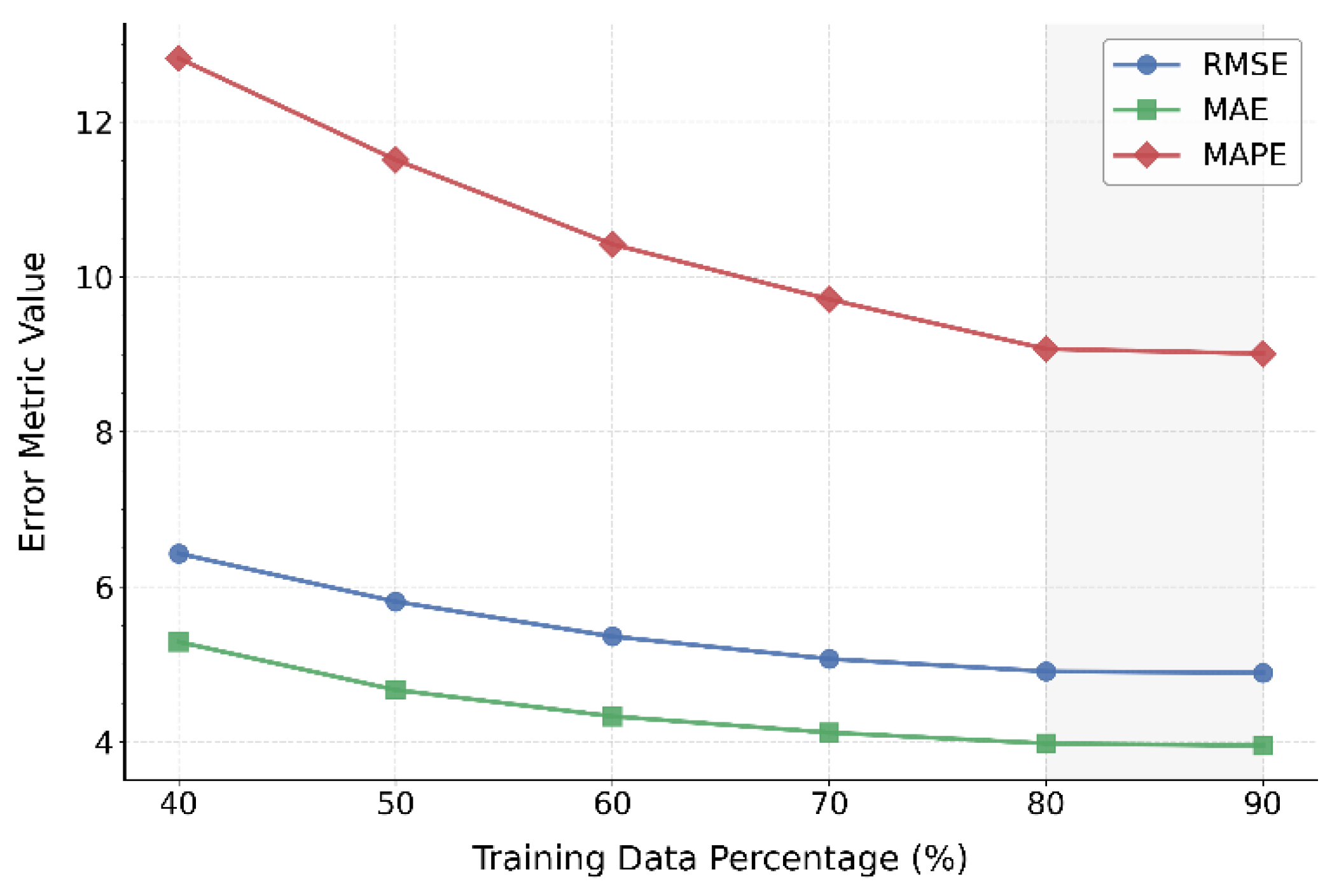

This paper focuses on energy consumption prediction for Internet of Things (IoT) devices and proposes a deep learning model that integrates causal features with an LSTM architecture. The method combines the dynamic nature of time-series modeling with the structural guidance of a causal attention mechanism, and it demonstrates strong predictive performance in complex and dynamic IoT scenarios. Through an end-to-end learning framework, the model captures deep interactions between historical states and external causal variables, enabling accurate modeling and efficient regression of device energy consumption. The proposed approach addresses limitations of traditional time-series models in feature selection and interpretability. In a series of comparative experiments and sensitivity analyses, the proposed method outperforms existing approaches on key regression metrics, including RMSE, MAE, and MAPE. These results show that integrating causal features significantly improves model robustness and generalization. The model also adapts well to variations in training data size, structural parameters, and environmental conditions, and it maintains high prediction accuracy even under data scarcity or heterogeneous device distributions. This highlights the practical value of the method in industrial and edge computing scenarios.

From an application perspective, the results of this study can benefit several key areas, including smart manufacturing, edge deployment, and green energy management. In resource-constrained and task-distributed IoT environments, accurate energy consumption prediction supports dynamic scheduling and energy optimization. Moreover, causal feature modeling enhances system interpretability, making the decision-making process more transparent. This helps improve deployment security and system trustworthiness. The integration of deep learning with structural modeling provides a new direction for IoT energy prediction. It also offers solid support for intelligent sensing and energy-driven computing in future smart IoT systems.

Future research can extend this work in several directions. First, more advanced causal discovery mechanisms can be explored to improve the model’s ability to detect latent causal relationships. Second, the model can be integrated with federated learning or edge intelligence frameworks. This would enable cross-device energy modeling and shared optimization under data privacy constraints. Third, incorporating multimodal sensing data such as images, audio, and environmental states can further enrich the input and enhance both accuracy and robustness. In conclusion, this study presents an innovative solution for energy modeling in IoT systems and provides technical insights for the deep integration of artificial intelligence and the Internet of Things.

References

- Alıoghlı, A.A.; Okay, F.Y. IoT-Based Energy Consumption Prediction Using Transformers. Gazi University Journal of Science Part A: Engineering and Innovation 2024, 11, 304–323.

- Abdel-Basset, M.; Hawash, H.; Chakrabortty, R.K.; et al. Energy-net: a deep learning approach for smart energy management in IoT-based smart cities. IEEE Internet of Things Journal 2021, 8, 12422–12435.

- Natarajan, Y.; K. R., S.P.; Wadhwa, G.; Choi, Y.; Chen, Z.; Lee, D.-E.; Mi, Y. Enhancing building energy efficiency with IoT-driven hybrid deep learning models for accurate energy consumption prediction. Sustainability 2024, 16, 1925.

- Binbusayyis, A.; Sha, M. Energy consumption prediction using modified deep CNN-Bi LSTM with attention mechanism. Heliyon 2025, 11.

- Somu, N.; M. R., G.R.; Ramamritham, K. A deep learning framework for building energy consumption forecast. Renewable and Sustainable Energy Reviews 2021, 137, 110591.

- Ma, C.; Pan, S.; Cui, T.; et al. Energy consumption prediction for office buildings: Performance evaluation and application of ensemble machine learning techniques. Journal of Building Engineering 2025, 102, 112021.

- Yang, T.; Cheng, Y.; Ren, Y.; Lou, Y.; Wei, M.; Xin, H. A Deep Learning Framework for Sequence Mining with Bidirectional LSTM and Multi-Scale Attention. In Proceedings of the 2025 2nd International Conference on Innovation Management and Information System, 2025, pp. 472–476.

- Lian, L.; Li, Y.; Han, S.; Meng, R.; Wang, S.; Wang, M. Artificial Intelligence-Based Multiscale Temporal Modeling for Anomaly Detection in Cloud Services. arXiv 2025, arXiv:2508.14503.

- Zhang, X.; Wang, X.; Wang, X. A Reinforcement Learning-Driven Task Scheduling Algorithm for Multi-Tenant Distributed Systems. arXiv 2025, arXiv:2508.08525.

- Wang, X.; Zhang, X.; Wang, X. Deep Skin Lesion Segmentation with Transformer-CNN Fusion: Toward Intelligent Skin Cancer Analysis. arXiv 2025, arXiv:2508.14509.

- Li, M.; Hao, R.; Shi, S.; Yu, Z.; He, Q.; Zhan, J. A CNN-Transformer Approach for Image-Text Multimodal Classification with Cross-Modal Feature Fusion. In Proceedings of the 2025 8th International Conference on Advanced Algorithms and Control Engineering (ICAACE), 2025, pp. 1182–1186.

- Zi, Y.; Gong, M.; Xue, Z.; Zou, Y.; Qi, N.; Deng, Y. Graph Neural Network and Transformer Integration for Unsupervised System Anomaly Discovery. arXiv 2025, arXiv:2508.09401.

- He, J.; Liu, G.; Zhu, B.; Zhang, H.; Zheng, H.; Wang, X. Context-Guided Dynamic Retrieval for Improving Generation Quality in RAG Models. arXiv 2025, arXiv:2504.19436.

- Zhang, R.; Lian, L.; Qi, Z.; Liu, G. Semantic and Structural Analysis of Implicit Biases in Large Language Models: An Interpretable Approach. arXiv 2025, arXiv:2508.06155.

- Zheng, H.; Zhu, L.; Cui, W.; Pan, R.; Yan, X.; Xing, Y. Selective Knowledge Injection via Adapter Modules in Large-Scale Language Models. 2025.

- Gao, D. High Fidelity Text to Image Generation with Contrastive Alignment and Structural Guidance. arXiv 2025, arXiv:2508.10280.

- Zhang, R. AI-Driven Multi-Agent Scheduling and Service Quality Optimization in Microservice Systems. Transactions on Computational and Scientific Methods 2025, 5.

- Yao, G.; Liu, H.; Dai, L. Multi-Agent Reinforcement Learning for Adaptive Resource Orchestration in Cloud-Native Clusters. arXiv 2025, arXiv:2508.10253.

- Wu, Q. Task-Aware Structural Reconfiguration for Parameter-Efficient Fine-Tuning of LLMs. Journal of Computer Technology and Software 2024, 3.

- Hoendarto, G.; Saikhu, A.; Hari Ginardi, R. V. Bridging IoT devices and machine learning for predicting power consumption: case study Universitas Widya Dharma Pontianak. Energy Informatics 2025, 8, 87.

- Kim, S.; Kang, M. Financial series prediction using Attention LSTM. arXiv 2019, arXiv:1902.10877.

- Suebsombut, P.; Sekhari, A.; Sureephong, P.; et al. Field data forecasting using LSTM and Bi-LSTM approaches. Applied Sciences 2021, 11, 11820.

- Lu, W.; Li, J.; Li, Y.; et al. A CNN-LSTM-based model to forecast stock prices. Complexity 2020, 2020, 6622927.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).