1. Introduction

Scholars and practitioners generally concur that companies must advance their efforts and investments in digitization, digitalization, and digital transformations [1]. In this context, data monetization has emerged as a principal justification for investments in these endeavors [2,3]. However, despite the proven benefits of data monetization, company success in leveraging data to achieve competitive advantage remains inconsistent. While some pioneering companies have successfully utilized data, substantial evidence indicates that many organizations continue to struggle with effective data utilization and data monetization [4,5,6]. These challenges are not confined to the monetization of "big" data and advanced analytics, such as artificial intelligence, but also extend to the monetization of basic data and data analytics.

A consensus among various studies indicates that only a minority of companies have achieved success with their data initiatives. The majority of companies experience only modest benefits, and some even incur losses from their advanced data analytics initiatives. Nevertheless, the few companies that successfully implement data monetization strategies are reported to outperform their less data-centric counterparts in terms of revenue growth, profit margins, and returns on equity [4,5,6].

This is particularly surprising given that data monetization has rapidly emerged as a prominent research topic and dominates the agendas of many companies. Previous research has highlighted various data monetization approaches, such as direct and indirect data monetization, elucidated the necessary conditions for successful data monetization initiatives, and provided use cases across various industries [8,16]. However, prior studies overlook two critical aspects. First, it remains unclear how companies’ legacy information and communication systems influence the outcomes of data monetization initiatives. Legacy systems naturally shape the starting points of these initiatives, either inhibiting or facilitating company goals, roadmaps, strategic plans, and implementations. Legacy systems can impose burdens related to data quality, harmonization, and consistency, thereby delaying the achievement of expected outcomes in data initiatives [2,7].

Second, existing research frequently examines data monetization initiatives at the company level while emphasizing the importance of implementing lighthouse projects and developing concrete use cases to create and sustain momentum for data initiatives. The literature often highlights the successes of individual use cases without delving deeply into selected examples or exploring the reasons behind the failures of these use cases. This oversight is notable given that many companies report difficulties during the implementation and eventual discontinuation of promising use cases [8].

We contend that the existing evidence and theoretical considerations indicate a significant gap in understanding how companies can effectively benefit from data. To address these fundamental issues, we investigated the following research question through a single case study on customer analytics in collaboration with a medical technology company (Medical Inc.): How should companies prepare their customer master data to monetize their customer analytics efforts?

In answering this question, we make three important contributions. First, we identify key activities necessary for the successful deployment of data initiatives in customer analytics. Rather than aiming for an exhaustive list, we focus on specific key activities deemed most crucial for advancing data utilization and monetization through customer analytics. Second, we integrate these key activities into an overall framework, illustrating how companies can advance their data and advanced analytics initiatives throughout their digitization and digital transformation efforts. Third, we address a gap in the 3D concept (digitization, digitalization, and digital transformation), highlighting that digitization alone is insufficient for achieving the next step in digital advancement [1,9].

The paper proceeds by first explaining the theoretical background and then outlining the research methodology applied in collaboration with our case company. We then present our findings, introducing the phase termed datatization in the company's journey through i) digitization, ii) digitalization, and iii) digital transformation. This phase is translated into a nine-step framework that establishes digitalization as a better foundation for digital transformation. The paper concludes with a concise summary of the primary findings and implications.

3. Research Methodology

To extract insights on the utilization of customer analytics by companies, we conducted an in-depth case study of a leading global medical technology provider, referred to here as Medical Inc. for confidentiality purposes. Medical Inc.'s diverse product portfolio includes syringes, needles, lab automation systems, and cell sorters. Headquartered in the United States, the company maintains regional head offices and geographical hubs across major markets worldwide. It operates as a centralized organization, granting minimal autonomy to its geographical markets in altering the operating model or redefining data management processes.

The strategic objective of Medical Inc. is to enhance customer centricity by prioritizing and proactively serving its customers. Consequently, the company has been striving to integrate customer analytics within its sales department. This case study adopts an intraorganizational perspective, examining the company's internal departments and their interactions. Specifically, the sales department acts as a customer to the analytics department, which contributes to the customer journey from within the organization.

Our methodology combined traditional case study methods [40] with a processual view and process theorizing [41]. We examined the evolution of Medical Inc.'s IT landscape and data structures over the past years. Data were collected through a series of interviews with technical subject matter experts who have been with the company for several years, and participation in internal workshops. The primary interview questions focused on Medical Inc.'s past, present, and future customer analytics initiatives, targeting the IT landscape, supported processes, and involved data.

These primary data were supplemented with secondary data from internal documents, technical specifications, and project reports. Data collection spanned the entire data cleansing project and included input from both the core team and a broader project support team (e.g., analytic project leaders, customer master data managers, solution architects, data scientists, analysts, and operations managers). The project advanced through internal brainstorming workshops, categorizing key issues into five main topic areas. All collected data were synthesized into a comprehensive case study description.

A content analysis of the case description was conducted to identify the phases of the customer analytics initiatives and pinpoint turning points within these phases, segmenting the journey into phases to elucidate the interconnected events and simplify temporal flows. During phase development, we identified where issues arose, how they were addressed, and anticipated future states. The framework, developed during brainstorming sessions, was iterated through several cycles, incorporating after-action reviews each time. Initially, the problem-solving team focused on German data, providing an opportunity to apply the framework to Austria and Switzerland, thus refining the theory [40].

Recognizing the inherent complexity of process data in data cleansing projects [42], our data analysis began with constructing a timeline of key dates and milestones. Subsequently, an inductive method was used to create a chronological, detailed narrative of the data cleansing initiative, triangulating data from documents, observations, and interviews. In doing so, we applied a temporal bracketing strategy [41] to examine the chronological process of the data cleansing initiative.

4. Results—Insights Into the Data Cleansing Initiative

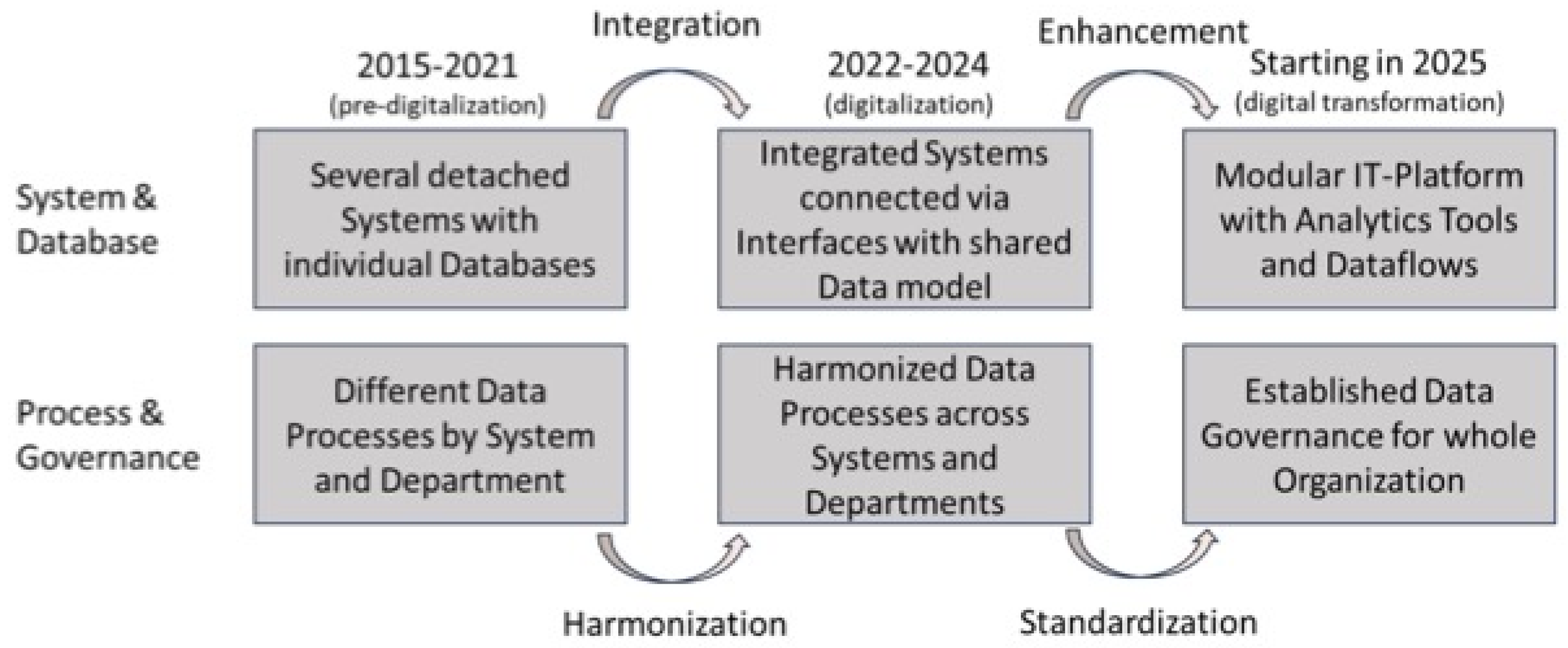

Our data analysis revealed that the data cleansing initiative for enhancing customer analytics encompasses three broad phases. These phases interact with other strategic milestones and events driven by Medical Inc. Each phase exhibited distinct characteristics in Medical Inc.'s systems, databases, processes, and governance (see Figure 1).

4.1. Three Main Phases to Utilize Data for Customer Analytics

Phase 1 commenced with the culmination of two significant acquisitions, precipitating a scenario wherein Medical Inc. found itself operating disparate IT systems, each reliant on individual databases. The transition from Phase 1 to Phase 2 ensued as Medical Inc. embarked on the integration of these disjointed systems and databases, concurrently endeavoring to standardize data processes and governance protocols.

Phase 2 was instigated by the implementation of a new ERP system within the framework of an integration initiative, which went live in 2022, with the master data cleansing project slated for completion by 2025. These successive phases were characterized by distinct focal points and actions. Phase 1 was marked by the ramifications of the fragmented system landscape on data and operational processes, a predicament initially sparked by the inaugural acquisition but further exacerbated by subsequent acquisitions. Unlike the larger acquisition, the decision was made during the smaller acquisition in 2015 to refrain from full integration. This smaller acquisition catered to the same customer base, operating on autonomous systems that necessitated disparate data processes across departments, leading to duplicative and disjointed treatment of customer data. Consequently, challenges in customer master data management surfaced.

The transition to Phase 2 was underpinned by concerted integration and harmonization endeavors. The objective here was to establish a unified system with standardized data processing across all departments, culminating in the creation of a singular data model encompassing all integrated systems. However, the severity of master data issues became evident during this phase. While processes were harmonized and an integrated system was implemented, data migration into the new source system proved to be fraught with cleanliness issues. The attainment of the final state of Phase 2 was contingent upon the rectification and alignment of data, thus leading to the subsequent development of a framework.

Phase 3 heralds a forward-looking perspective, envisaging the utilization of refined master data for advanced analytics, facilitated by the establishment of robust data governance frameworks and delineated data flows. This phase will witness enhancements to the BI system and database infrastructure, alongside the standardization of processes. It will encompass detailed descriptions of data structures and delineate contributors for specific analytics tools and functions.

4.2. Data Cleanliness, Integration and Harmonization As A Key Challenge

Medical Inc.’s revenue growth through mergers and acquisitions (Phases 1 and 2) made data cleanliness, integration, and harmonization increasingly intricate, as the task shifted from the mere amalgamation of physical assets to the incorporation of acquired digital ecosystems into an established closed ecosystem. Given that Medical Inc. and the acquired companies relied heavily on digital processes to support their operations, integrating these processes into existing systems entailed disruptions and necessitated meticulous planning. Despite adopting a greenfield approach by establishing a new system for the parent (Medical Inc.) environment, the integration was not truly greenfield with respect to data. Because data is recognized as one of the most significant assets today, it was imperative to migrate data into the new systems to preserve historical transactions.

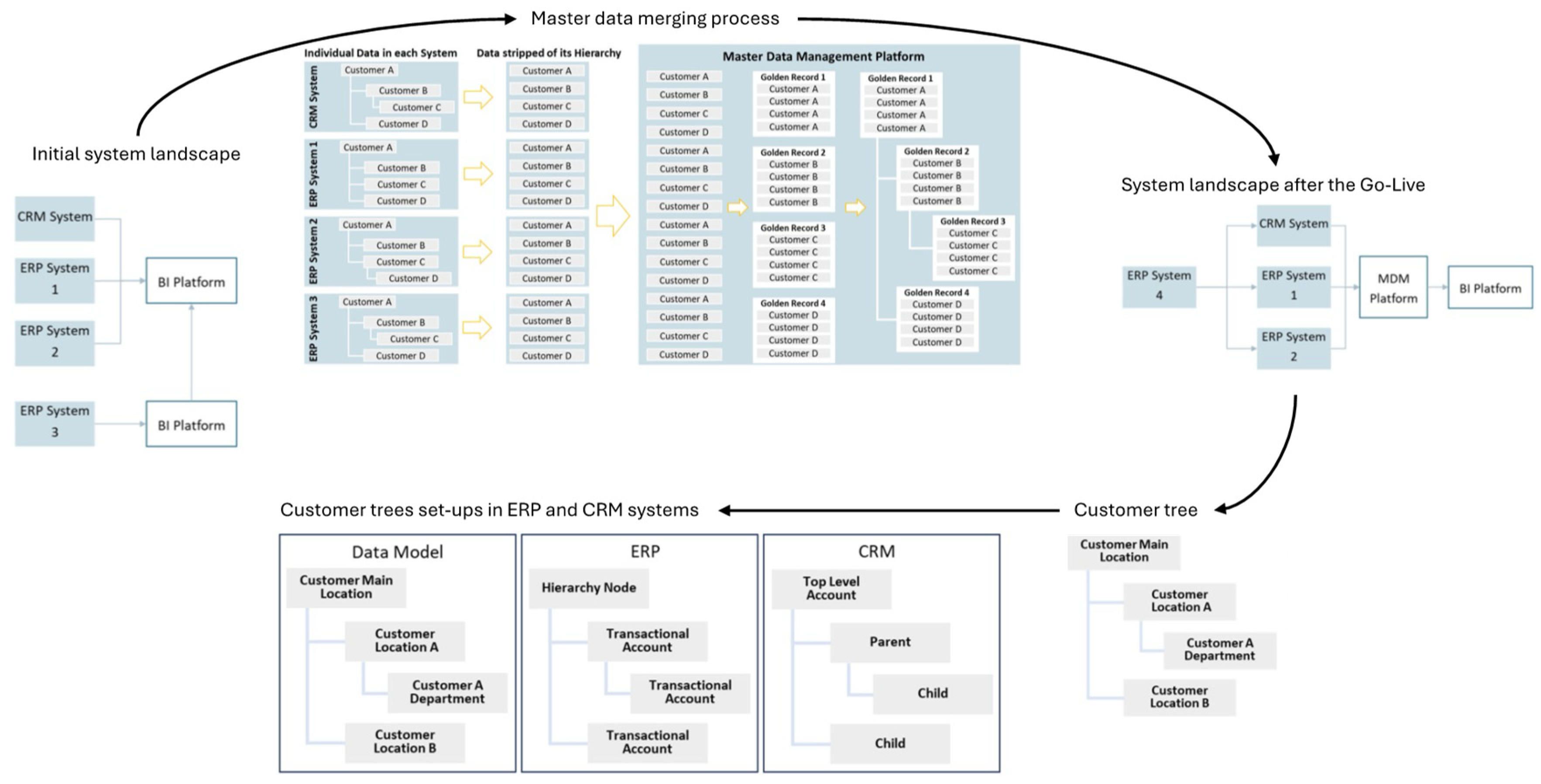

Medical Inc. made the strategic decision to integrate and modernize its operations by implementing a new ERP system while also integrating the latest acquisition. The project was structured with an implementation plan in waves, gradually rolling out components of the parent company and the majority of the newest acquisition. As depicted in Figure 2, Medical Inc. operated on one CRM system and two ERP systems, each serving distinct purposes. One ERP system primarily handled financial tasks, while the other managed other operational aspects. These ERP systems, sourced from different timeframes and based on different software platforms (SAP from 2017 and IBM from the 90s), fed into a business intelligence (BI) system. The third ERP system, introduced with the latest acquisition, was an Oracle system that fed into its own BI module, which was subsequently integrated into Medical Inc.’s own BI system. The project aimed to consolidate all functions into a single ERP system. Initially, the acquired company and the financial segment of Medical Inc. were integrated, followed by the consolidation of the remaining operational functions managed by the last ERP system. Given the disparate data models and datasets across these systems, the first crucial step was the data migration. For the integration and migration of customer master data, a cloud-based Master Data Management (MDM) platform was employed, enabling the aggregation of customer master data from various systems while standardizing it by eliminating hierarchies.

As depicted in Figure 2, all customer records were uniformly inputted into the platform. Subsequently, the Master Data Management (MDM) platform commenced the creation of golden records, consolidating identical customers from different systems into singular entities. This process, automated and facilitated by an underlying algorithm, compared various customer-related data elements such as names and addresses.
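The matching logic of the MDM platform is not documented in the case material. As an illustration only, the following minimal sketch shows how golden records could be formed by comparing normalized names and addresses. The field names (`name`, `address`, `source`), the similarity threshold of 0.85, and the greedy grouping rule are assumptions made for this example and do not describe the platform's actual algorithm.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and replace punctuation with spaces so trivial variations compare equal."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in text.lower())
    return " ".join(cleaned.split())


def similarity(a: str, b: str) -> float:
    """Similarity ratio between 0 and 1 on the normalized strings."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def same_customer(rec_a: dict, rec_b: dict, threshold: float = 0.85) -> bool:
    """Hypothetical rule: name AND address must both be similar enough."""
    return (
        similarity(rec_a["name"], rec_b["name"]) >= threshold
        and similarity(rec_a["address"], rec_b["address"]) >= threshold
    )


def build_golden_records(records: list[dict], threshold: float = 0.85) -> list[list[dict]]:
    """Greedy grouping: a record joins the first group whose first member it matches."""
    groups: list[list[dict]] = []
    for rec in records:
        for group in groups:
            if same_customer(group[0], rec, threshold):
                group.append(rec)
                break
        else:
            groups.append([rec])
    return groups


# Invented sample records from three source systems.
sample_records = [
    {"source": "CRM", "name": "St. Mary Hospital", "address": "Main Street 12, Berlin"},
    {"source": "ERP1", "name": "St Mary Hospital", "address": "Main Street 12 Berlin"},
    {"source": "ERP2", "name": "City Lab GmbH", "address": "Ring Road 5, Munich"},
]
golden_groups = build_golden_records(sample_records)
```

In this sketch, the two hospital records fall into one group and the laboratory forms its own. The threshold embodies a trade-off: a lower value merges more variants of the same customer but also raises the risk of merging unrelated customers.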

The establishment of customer hierarchies ensued, utilizing the CRM hierarchies as a blueprint and replicating them within the MDM platform. On a specified date, all pertinent and refined data was migrated into the new ERP system.

With the initial phase and subsequent go-live of the new ERP system, challenges pertaining to master data became pervasive. Notably, erroneous orders were dispatched to incorrect customers, pricing discrepancies for specific customers emerged, and the consignment process encountered impediments. A thorough examination of the Business Intelligence (BI) landscape pinpointed the root cause: inadequate cleanliness and organization of customer master data. Instances arose where unrelated customers were merged, resulting in convoluted customer hierarchies and discrepancies between billing and delivery addresses across systems. These challenges necessitated a temporary suspension of the project.

The illustrative depiction of the fragmented IT landscape (Figure 2) highlights the ongoing efforts to streamline operations. ERP 3 has been replaced by ERP 4, while existing ERP systems are now fed by the new ERP system. All systems are interconnected through the MDM platform, acting as a unifying conduit, and efforts are underway to identify and resolve key data-related issues before resuming work on the original project.

All systems (CRM, ERP, BI) are synchronized, with the MDM platform managing data sources and ensuring alignment across systems. The data model for customer master data entails hierarchical structuring, delineating customer trees with up to eight levels. This hierarchical structure, exemplified by a hospital setting, necessitates meticulous alignment between CRM and ERP systems.

Technical disparities between CRM and ERP systems, particularly in level setup and nomenclature, necessitate precise alignment. The ERP system designates the top customer as a hierarchy node, responsible for grouping accounts and imparting terms to associated transactional accounts. Conversely, the CRM system employs the Top Level Account (TLA) as the highest level, with subsequent sub-accounts categorized based on hierarchy. Specific accounts must be harmonized in their roles across systems.

The MDM platform harmonizes information from both systems, ensuring consistency in account roles and levels across all systems (see Figure 2). This process is guided by a framework developed in response to identified issues and structured around their severity and hierarchical sequence.

4.3. Challenges Identification—Impeding Progress

The data cleansing project team identified five primary areas of concern: i) hierarchy, ii) business partners, iii) golden records, iv) data quality monitoring, and v) customer structure. Furthermore, the customer database had grown significantly, reaching 235,000 customer records in the Germany, Switzerland, and Austria (GSA) region alone. This surge was attributed to the decision to migrate all customer records, both active and inactive, from all systems, which exacerbated the pre-existing challenges within the customer master database. It is worth noting that these issues often manifested concurrently within individual customer records, complicating the identification of accurate data segments and the associated customer identities.

Hierarchy issues stem from discrepancies in the legal customer hierarchies established within the Master Data Management (MDM) platform. Faulty hierarchies originating from the CRM system led to instances where customers were either not linked to any hierarchy, creating "orphans," or linked to incorrect hierarchies, resulting in the amalgamation of unrelated customers within hierarchies. The absence of a clear understanding of the top hierarchy customer's role further compounded these issues, leading to the formation of disparate customer hierarchies with varying structures. Moreover, the hierarchical complexity, reaching up to eight levels, was deemed excessive by the project team.
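To make these hierarchy issues concrete, the following sketch audits a parent-child customer structure for orphans, links to non-existent parents, and excessive depth. It is an illustration under stated assumptions: the record layout (`parent_id`, `is_tla`), the finding categories, and the depth limit passed as `max_levels` are hypothetical and are not taken from Medical Inc.'s systems.

```python
def audit_hierarchy(records: dict[str, dict], max_levels: int = 4) -> dict[str, list[str]]:
    """Flag orphans, dangling parent links, and accounts nested deeper than max_levels."""
    findings: dict[str, list[str]] = {"orphans": [], "dangling_parent": [], "too_deep": []}
    for customer_id, rec in records.items():
        parent = rec.get("parent_id")
        if parent is None:
            # A root record that is not a designated top-level account is an orphan.
            if not rec.get("is_tla", False):
                findings["orphans"].append(customer_id)
            continue
        if parent not in records:
            findings["dangling_parent"].append(customer_id)
            continue
        # Walk up the tree and count levels; the seen set guards against cycles.
        level, seen, current = 1, {customer_id}, customer_id
        while records[current].get("parent_id") in records:
            current = records[current]["parent_id"]
            if current in seen:
                break
            seen.add(current)
            level += 1
        if level > max_levels:
            findings["too_deep"].append(customer_id)
    return findings


# Invented example: one small tree, one orphan, one record with a missing parent.
sample_hierarchy = {
    "H1": {"parent_id": None, "is_tla": True},
    "H2": {"parent_id": "H1"},
    "H3": {"parent_id": "H2"},
    "X1": {"parent_id": None},
    "Y1": {"parent_id": "ZZ"},
}
audit_result = audit_hierarchy(sample_hierarchy, max_levels=2)
```

With the limit set to two levels, the sketch reports `X1` as an orphan, `Y1` as pointing to a missing parent, and `H3` as too deep. A check of this kind cannot detect customers attached to the wrong but existing hierarchy; that still requires the manual validation described in Section 4.4.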

Business Partner concerns arose from disparities across systems, wherein the MDM platform aimed to unify and cleanse data sources for migration to the new ERP system, while the existing systems remained unaltered, perpetuating data discrepancies. Additionally, inconsistencies in the functions attributed to each customer within systems, along with misaligned naming conventions, contributed to the divergence of data across systems.

The concept of Golden Records within the MDM platform entails consolidating multiple records of the same customer into a single entity. However, discrepancies among customer records, such as slight variations in addresses or post codes, posed challenges in identifying accurate information for the creation of Golden Records, resulting in erroneous customer setups and duplicate Golden Records.

Data Quality Monitoring and Customer Structure issues were identified as separate yet interconnected challenges. While data quality monitoring was hindered by the absence of a governance framework, customer structure issues stemmed from a lack of guidelines on how customer master data should be structured. Although these issues were not directly linked to data cleanliness, they served as foundational factors contributing to data discrepancies.

4.4. Proposed Solution for Overcoming These Challenges

To address these challenges, the project team proposed a multi-faceted approach. Initially, a comprehensive cleansing of existing data was proposed to rectify hierarchy, business partner, and Golden Record issues. Subsequently, the establishment of a governance process aimed at preventing future instances of data uncleanliness was recommended. Additionally, the creation of a data handbook outlining guidelines for structuring customer master data was proposed to ensure consistency and accuracy in future data management efforts.

At the start of the rollout, there were 235,000 customer records in the system. Within a few months, 126,000 inactive accounts were removed. Beginning with Germany, the GSA team conducted an in-depth analysis to further reduce unnecessary customer records. They defined "needed" and "not needed" records based on an activity list, which included CRM object usage, sales from the last three years, open invoices older than three years, technical service records, and asset placements.

The team interviewed stakeholders from each business unit to understand the actions taken with each customer record in the CRM system. They created reports from the relevant systems and mapped this information to each customer record. Records with no activity were considered unnecessary and were deactivated. This reduced the German customer base from 74,000 to 34,000 records.
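The "needed" versus "not needed" rule can be sketched as a simple filter over the activity list. The field names below mirror the five activity criteria named above but are hypothetical, as is the assumption that any single activity signal is sufficient to keep a record active.

```python
# Hypothetical field names for the five activity criteria.
ACTIVITY_SIGNALS = (
    "crm_object_usage",
    "sales_last_3_years",
    "open_invoices",
    "service_records",
    "asset_placements",
)


def is_needed(record: dict) -> bool:
    """A record is 'needed' if at least one activity signal is present (non-zero)."""
    return any(record.get(signal, 0) for signal in ACTIVITY_SIGNALS)


def split_by_activity(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Return (needed, not_needed); the latter are candidates for deactivation."""
    needed = [r for r in records if is_needed(r)]
    not_needed = [r for r in records if not is_needed(r)]
    return needed, not_needed


# Invented sample data.
sample_customers = [
    {"id": "C1", "sales_last_3_years": 12500.0},
    {"id": "C2", "asset_placements": 3},
    {"id": "C3"},  # no activity in any system
    {"id": "C4", "crm_object_usage": 0, "open_invoices": 0},
]
needed, not_needed = split_by_activity(sample_customers)
```

Here `C3` and `C4` become deactivation candidates. For scale, the reported reduction from 74,000 to 34,000 German records corresponds to 40,000 deactivated records, or roughly 54% of the German customer base.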

Next, the team began cleansing activities by addressing the hierarchical customer setup. They started at the top of the customer trees, breaking down the customer base into manageable parts and creating a new basis for each customer tree. They created an overview of all Top-Level Accounts (TLAs), including orphans, and included records from ERP4 (the new ERP system), MDM, CRM, ERP1, and ERP2. ERP4, MDM, and CRM records had a one-to-one relationship, but MDM to ERP2 and ERP1 to ERP2 records had many-to-one relationships, adding complexity. Therefore, the team focused on cleansing the newest ERP system and the CRM system first.
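The difference between one-to-one and many-to-one relationships can be checked mechanically from the ID mappings between two systems. The sketch below is an illustration with invented identifiers; it assumes each mapping is available as a list of (source ID, target ID) pairs, which is not specified in the case material.

```python
from collections import defaultdict


def mapping_cardinality(pairs: list[tuple[str, str]]) -> str:
    """Classify an ID mapping between two systems by its cardinality."""
    targets_per_source: dict[str, set] = defaultdict(set)
    sources_per_target: dict[str, set] = defaultdict(set)
    for source_id, target_id in pairs:
        targets_per_source[source_id].add(target_id)
        sources_per_target[target_id].add(source_id)
    fan_out = any(len(t) > 1 for t in targets_per_source.values())
    fan_in = any(len(s) > 1 for s in sources_per_target.values())
    if fan_out and fan_in:
        return "many-to-many"
    if fan_in:
        return "many-to-one"
    if fan_out:
        return "one-to-many"
    return "one-to-one"


# Invented identifiers for illustration.
mdm_to_crm = [("M1", "C1"), ("M2", "C2")]
mdm_to_erp2 = [("M1", "E9"), ("M2", "E9")]

crm_cardinality = mapping_cardinality(mdm_to_crm)
erp2_cardinality = mapping_cardinality(mdm_to_erp2)
```

In the second mapping, two MDM records point to the same ERP2 record, so a correction in ERP2 affects several upstream records at once. This is one way to see why starting with the one-to-one systems kept the initial cleansing tractable.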

Each team member reviewed assigned packages of TLA records to decide if they were true TLAs, orphans needing an upgrade, needed to be attached to an existing TLA, or required the creation of a new TLA. These decisions were based on the customer's name and address information found in the systems and online. Duplicate records were identified and consolidated. This first validation round created a new TLA base to work with.

The next step was validating the “child” accounts linked to the TLAs. The customer record overview was updated with the new TLAs, including all levels of the customer trees, their respective IDs, addresses, and sales data. The team checked if the parent account was correct and remapped it if necessary. This step ensured clean reporting at the TLA level, which was critical for business intelligence and other functions that determine prices and agreements.
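The effect of parent assignments on TLA-level reporting can be illustrated with a small roll-up. The sketch assumes a simple mapping from each account to its parent (`parent_of`) and a sales figure per account; both structures and all identifiers are invented for this example.

```python
from typing import Optional


def top_level_account(account_id: str, parent_of: dict[str, Optional[str]]) -> str:
    """Follow parent links upward until an account without a parent is reached."""
    seen: set[str] = set()
    current = account_id
    while parent_of.get(current) is not None:
        if current in seen:
            raise ValueError(f"cycle in hierarchy at {current}")
        seen.add(current)
        current = parent_of[current]
    return current


def sales_by_tla(sales: dict[str, float], parent_of: dict[str, Optional[str]]) -> dict[str, float]:
    """Aggregate account-level sales to the top-level account of each tree."""
    totals: dict[str, float] = {}
    for account_id, amount in sales.items():
        tla = top_level_account(account_id, parent_of)
        totals[tla] = totals.get(tla, 0) + amount
    return totals


# Invented hierarchy: two trees, with A2 nested under A1.
parent_of = {"TLA-A": None, "A1": "TLA-A", "A2": "A1", "TLA-B": None, "B1": "TLA-B"}
sales = {"A1": 100, "A2": 50, "B1": 70}
correct_totals = sales_by_tla(sales, parent_of)

# The same sales with B1 wrongly attached to TLA-A.
misparented = dict(parent_of, B1="TLA-A")
distorted_totals = sales_by_tla(sales, misparented)
```

A single wrong parent link moves all of `B1`'s sales to the other tree, which shows how incorrect parenting can distort TLA-level reports and any prices or agreements derived from them.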

The first part of the data handbook was prepared to guide teams on how customers should be set up in the system. This enabled clean reporting for group purchasing organizations or lab groups, ensuring that pricing and terms set at the TLA level translated correctly to child accounts, solving pricing and delivery issues caused by incorrect parenting.

The next stage involved correcting the levels within each customer tree, merging duplicate accounts, assigning customer categories, and adding specific data points like the number of beds for hospitals and the German unique hospital identifier. This required visualizing and sorting the customer tree correctly according to the data handbook, understanding each entity, and enriching the tree with additional data. Customer categories helped in strategic business planning by addressing the needs of different markets, such as hospitals, laboratories, or outpatient care.

Finally, the team corrected names, addresses, and other customer information and aligned business partners across systems. This step improved the readability and transparency of customer records, making it easier to identify the correct customer for any function, such as order management, and reducing the creation of new accounts due to incorrect information. Streamlining the business partner ensured consistency across systems.

4.5. A Framework for a Customer Master Data Cleansing Process

The insights emerging from the Medical Inc. case can be translated into a more general framework for the customer master data cleansing process. The framework consists of two core activities with nine key tasks. The core activities are 1) problem collection, followed by 2) master data cleansing. The problem collection activity comprises four tasks: 1a) problem identification, 1b) major problem categorization, 1c) problem prioritization, and 1d) problem preparation. The master data cleansing activity consists of five tasks: 2a) setting up a customer base overview, 2b) deactivating unnecessary customers, 2c) reassigning top customers, 2d) assigning sub-customers to top customers, and 2e) cleaning up customer trees.

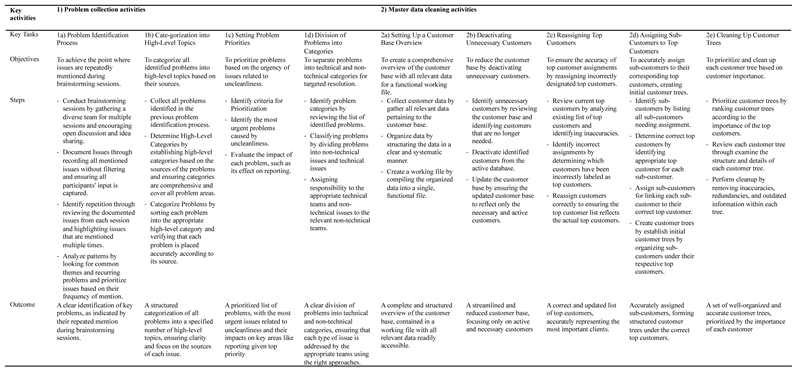

Table 2 summarizes these key activities and key tasks according to their objectives, steps, and outcomes.

Table 2. A framework for customer master data cleansing.

The process begins with problem identification, which entails recognizing recurring issues during brainstorming sessions. Following this, problems are categorized into high-level topics, emphasizing the importance of aligning categories with their respective problem sources. Subsequently, problem priorities are adjusted to address the most urgent issues, particularly those stemming from uncleanliness, such as its impact on reporting. Problems are then classified into technical and non-technical categories to facilitate tailored solutions by different teams. Non-technical issues, closely linked to uncleanliness, diverge into a separate path. This path involves establishing a comprehensive overview of the customer base, deactivating unnecessary customers, and correcting top customer assignments. Next, sub-customers are assigned to their correct top customers to form initial customer trees. Finally, each customer tree is systematically cleaned up, with priority given to customers based on their importance.

5. Conclusions

This study highlights the challenges and critical activities necessary for successful data monetization through customer analytics [20], especially within the context of a legacy IT environment [16,18]. Our case study of Medical Inc. reveals that data cleaning, preparation, and harmonization are foundational for deriving value from customer data [22]. Insights gleaned from Medical Inc.'s endeavors offer a valuable framework for a generalized customer master data cleansing process, comprising problem identification, categorization, prioritization, and subsequent cleansing activities. This structured framework underscores the iterative nature of data management, emphasizing continuous evaluation and refinement to maintain data integrity and drive informed decision-making.

We propose a nine-step framework within the phase of pre-digitalization, emphasizing that digitization alone is insufficient for achieving digital transformation. Companies must integrate key activities into a comprehensive approach, addressing both the theoretical and practical aspects of data utilization. We term this phase datatization. Datatization refers to the process of converting various forms of data into data that can be quantified, analyzed, and utilized in analytics systems. This involves capturing, storing, and organizing data from diverse sources, transforming it into structured formats suitable for computational analysis and decision-making processes. Datatization enables the extraction of actionable insights, supports data monetization strategies, and facilitates the integration of data into broader digital ecosystems.

This research not only fills gaps in existing literature but also offers a structured path for companies aiming to leverage customer analytics effectively. Future research should further explore the dynamic interactions between legacy systems and data monetization initiatives, providing deeper insights into overcoming the associated challenges [2,3].

In conclusion, the analysis of Medical Inc.'s data cleansing initiative for enhancing customer analytics has shed light on a journey encompassing three broad phases: a fragmented system landscape following acquisitions, the integration and harmonization of systems and data, and the prospective use of cleansed master data for advanced analytics. These phases have unfolded within the context of Medical Inc.'s strategic milestones and events, and each exhibited distinct characteristics across its systems, databases, processes, and governance.