1. Introduction

This paper explores time-optimal control of systems subject to viscous friction, a topic important in domains ranging from robotics to economic systems. It examines in particular the application of Pontryagin’s Maximum Principle (PMP), which underpins time-optimal control strategies [1, 2, 3, 4]. The cited literature ranges from theoretical frameworks to practical applications and highlights both the difficulties and the strategies involved in optimizing control processes for time efficiency.

Incorporating the damping term, denoted as

, significantly increases the complexity of solving the optimal control problem and of understanding the dynamics of the system. Despite this complexity, including the damping term is vital for developing methods to experimentally determine modal characteristics, such as eigenmodes, eigenfrequencies, and generalized masses. References [5, 6, 7] specifically address the behavior of the damped system for computational and, more importantly, experimental analysis purposes. It is well known that transient simulation of systems with friction requires excessive computational power due to the nonlinear constitutive laws and the high stiffnesses involved. In [8], the authors proposed control laws for friction dampers which maximize energy dissipation in an instantaneous sense by modulating the normal force at the friction interface. Besides optimization of the mechanical design and various types of passive damping treatments, active structural vibration control concepts are efficient means of reducing unwanted vibrations [9]. The conclusion from this broad survey is that the system model and the friction model are fundamentally coupled and cannot be chosen independently.

Viscous dampers work by converting mechanical energy from motion (kinetic energy) into heat energy through viscous fluids. As a part of the damping process, they oppose the relative motion through fluid resistance, effectively controlling the speed and motion of connected components. Viscous dampers are essential for managing dynamic systems where control of movement and stability is necessary, making them indispensable in many high-stakes environments like automotive engineering and structural design. These dampers are increasingly sophisticated, incorporating technologies like electrorheological and magnetorheological fluids, which allow for variable stiffness and damping properties. This adaptability enhances their ability to mitigate vibrations across various earthquake intensities [10]. By integrating dampers into the structural design using mathematical models, engineers can significantly improve a building’s ability to absorb and dissipate energy during earthquakes. This includes detailed discussions on the calculation of damping coefficients and their impact on the building’s overall dynamic response to seismic events [11]. It is noteworthy that optimizing this type of damper (friction damper) remains a relatively unexplored subject worldwide, which highlights the innovative nature of our paper and serves as the driving motivation for our research.

Within the sphere of optimal control, the time-varying harmonic oscillator garners particular interest for its ability to reach designated energy levels effectively in the form

Systems that are linear with respect to their variables and exhibit bounded control

on the right-hand side of (1) often resort to a bang-bang control strategy. The oscillations in such a system differ significantly both from the natural oscillations of a system described by an equation with constant coefficients and from the forced oscillations due to an external force that depends only on time. A bang-bang strategy toggles the system’s excitation between two extreme values at precisely calculated switching times, which mark the instants of control adjustment. These switching times are represented by a switching curve in the state space, which prescribes the control for any given state (position

and velocity

). An extensive examination of time-optimality for both undamped and damped harmonic oscillators, including simulations that illustrate their practicality, is given in [13, 14]. A complex nonlinear system under state feedback control with a time delay, corresponding to two coupled nonlinear oscillators with parametric excitation, is investigated in [12] by an asymptotic perturbation method based on Fourier expansion and time rescaling. Given that the present investigation focuses on the optimal control of the coefficient

and

, the problem assumes a bilinear form. In engineering, particularly in nonlinear dynamics, parametric excitation is used to control vibrations in complex mechanical systems. The pendulum with periodically varying length, which also serves as a simple model of a child’s swing, is investigated in [15]. Numerical simulations of a double obstacle pendulum system were performed in [16] to investigate the effects of various parameters, including the positions and number of obstacle pins and the initial release angles, on the pendulum’s motion. The pendulum with vertically oscillating support and the pendulum with periodically varying length were considered in [17] as two forced dissipative pendulum systems, with a view to comparing their behaviour. A variable-length pendulum is studied in [18], where its oscillations are damped using a suitably generated Coriolis force. By applying the homotopy analysis method to the governing equation of the pendulum, a closed-form approximate solution was obtained in [19].

The damping results in prolonged oscillations until equilibrium is achieved. Adjusting the damping coefficient,

, can expedite the damping process. Time-optimal control problems, known for their inverse characteristics, are prone to instability [20], which challenges traditional analytical approaches and necessitates regularization of solutions. To complement complex analytical solutions, numerical methods are employed, offering a tangible presentation of results. This research presents an analytical solution for the control function

and the optimal duration of the process across a wide range of parameters. It also introduces bang-bang relay type controls and defines the system’s reachability set.

Moreover, the paper underscores the critical role of time-optimal control in contemporary industrial and technological realms, stressing the urgency for durable solutions where time efficiency is pivotal to the sustainability of robot-technical systems [21].

To summarize, we address the time-optimal control problem, which must primarily be solved analytically due to its inverse nature. This research asks whether the time-optimal process is periodic and whether the control function is symmetric over its period; the findings confirm the former and refute the latter. This focus on the control coefficient opens new avenues for inquiry, especially concerning the periodicity of the optimal process and the nature of the control function, providing both confirming and challenging insights into established assumptions.

The rest of this paper is organized as follows.

Section 2 contains the formulation of the optimal control problem.

Section 3 contains a preliminary study of the considered controlled system and reveals some of its properties.

Section 4 reveals the local properties of the problem and the application of the maximum principle (PMP) to a single semi-oscillation.

Section 5 concludes the study and establishes the global properties of the optimal solution.

Section 6 presents the main result of the study: a step-by-step optimization algorithm for solving the problem.

Section 6 also presents numerical examples and a discussion of the results obtained. The full text of the paper is summarized in Section 7.

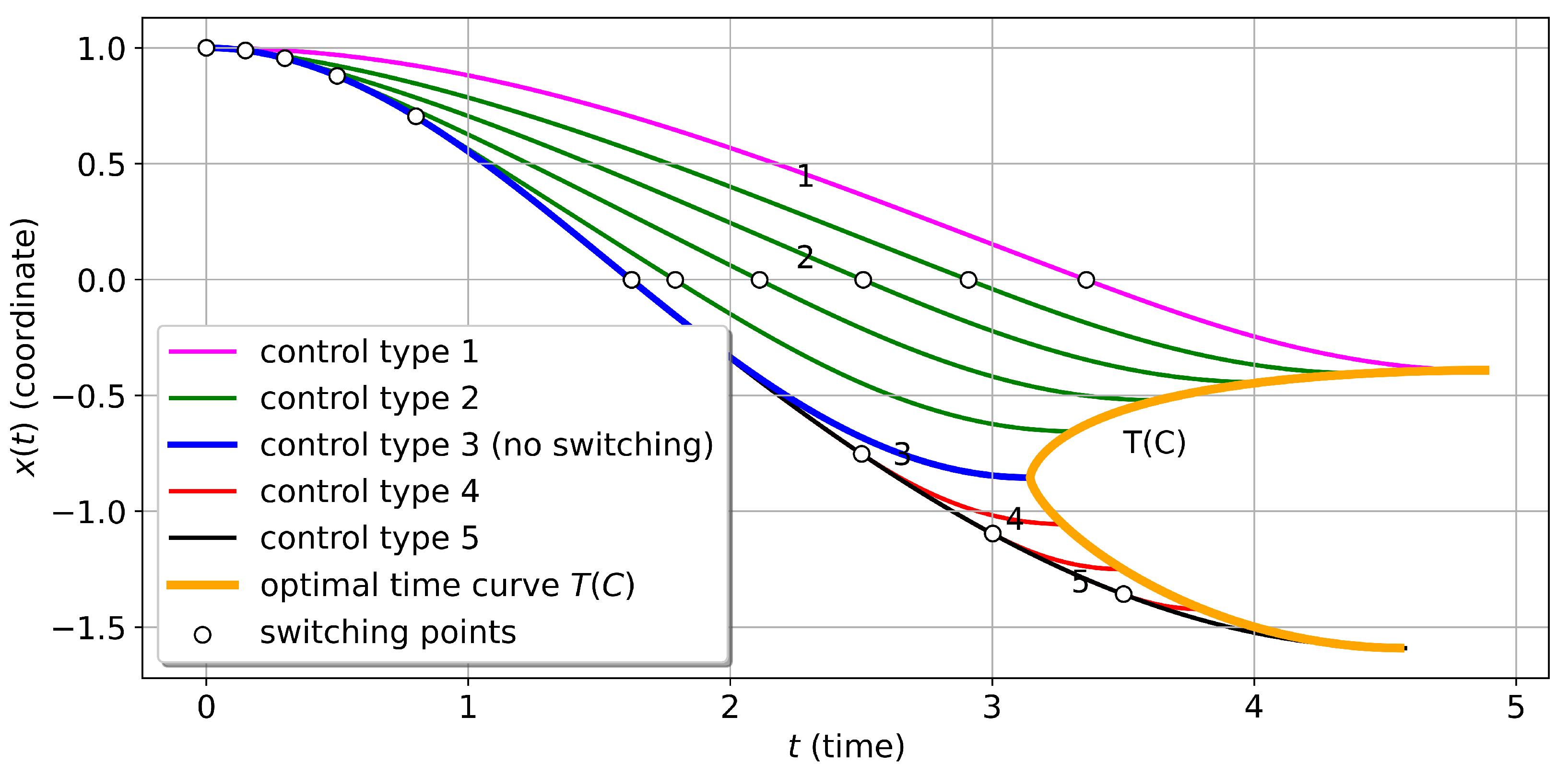

3. General Properties of the Controlled System (2)

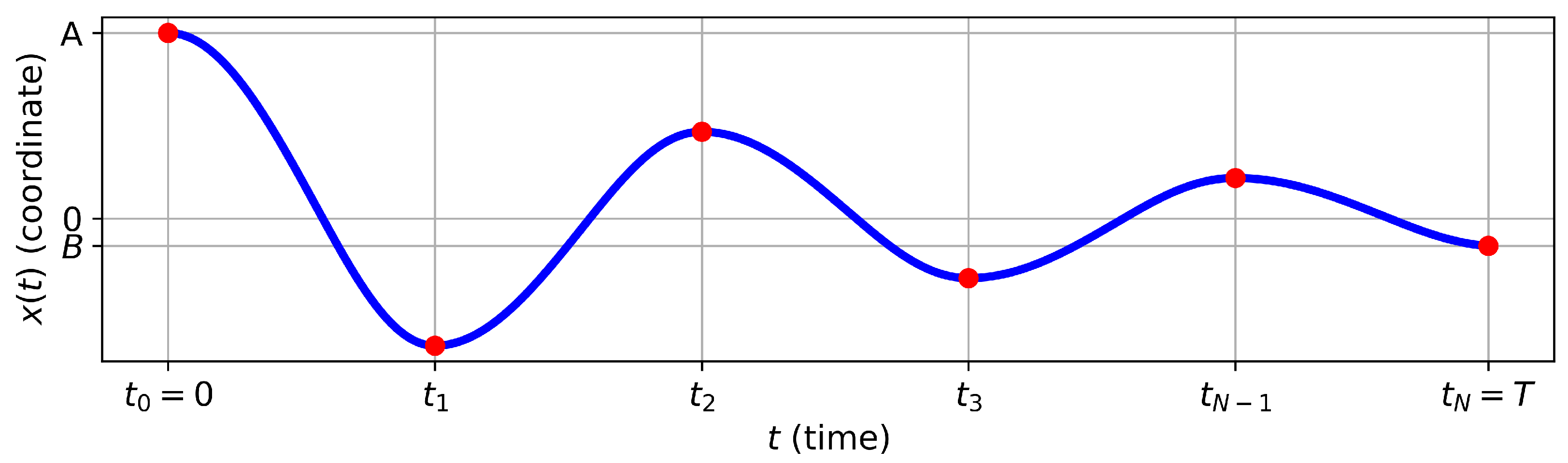

With any permissible control

, it is observed that the trajectory

of the controlled system in (2) oscillates around the starting coordinate with successive intervals of monotonic increase and decrease (Figure 1). The amplitude and duration of each oscillation can vary, based on the chosen control function

(typically discontinuous). Indeed, if the conditions

and

are satisfied at some moment in time

, it can be derived from the differential equation of problem (2) that

. Given that the functions

and

are continuous, the sign of the second derivative will match the sign of

in a small vicinity of point

, except possibly at a finite number of discontinuity points of the function

. This implies that for

the trajectory will have a point of local maximum, and for

a point of local minimum.

From the boundary conditions, it is understood that the speeds

and

at the initial and final moments of time equal zero, a situation that occurs only at the extreme points of the oscillatory process. These moments in time are denoted as

(Figure 1), and the time intervals

are referred to as semi-oscillations. It follows that the optimal trajectory comprises a whole number N of semi-oscillations, N being an even number when

, and an odd number when

.

To investigate the total optimal control problem, let’s divide the trajectory into separate semi-oscillations and first solve the problem for one semi-oscillation

. We will denote

(

,

). This leads to the following N subproblems for

:

Utilizing the linearity and homogeneity of the differential equation allows for the normalization of the variable

by dividing it by its initial value

. It’s also taken into account that the coefficient of friction

is independent of time t, meaning the initial moment in time can be taken as zero. This approach transforms all subproblems (3) for

into a unified auxiliary mini-problem of optimal control

Given problem (2) and knowing the numbers

and

, the optimal time in problem (4) will be given by

, and the optimal trajectories and control in the auxiliary problem (4) will coincide with the optimal trajectories and control in problem (2) over the interval

[1]. It will be demonstrated below that the optimal process is broken down into individual equal time intervals, calculated using analytical formulas.

Furthermore, for convenience in solving (4), instead of

and

, the notations x and

will be used.

4. Solution of the Optimal Control Problem for a Single Semi-Oscillation

In the previous section, it was shown that the initial problem (2) can be resolved by first solving an auxiliary problem

and finding the dependence of the optimal time T on the terminal value C.

Here the condition denotes the monotonicity of the trajectory , which corresponds to one semi-oscillation.

First, the question of controllability will be examined, and the range of values of C for which problem (5) has a solution will be defined.

The following notations will be introduced

The largest value

can be attained with the control

because with such control, acceleration is maximized when

and deceleration is minimized when

.

Similarly, the smallest value

can be reached analogously with the control

Solving the differential equation with the boundary conditions from system (5) and with control (6) or (7), we obtain

where

To apply the PMP [1], introduce the notation

and rewrite (5) in the form of a system of first-order differential equations

Now let the terminal value C satisfy condition (8), which ensures the controllability of the system.

Write the Pontryagin function

and denote its upper boundary

If

,

, and

constitute a solution to the optimal control problem (10), then the following three conditions are satisfied:

I) There exist continuous functions

and

, which never simultaneously become zero and are solutions to the adjoint system.

II) For any

, the maximum condition is satisfied

III) For any

, the following inequality holds

From condition (12) for the maximum of the function H, the optimal control is obtained in the form

Let us show by contradiction that singular control in Formula (13), that is, the case when

over a time interval of non-zero length, is impossible. Suppose that there exists a time interval during which

. In such an interval, determining the value of optimal control from the maximum condition would not be feasible.

Given the continuity of the functions and , it is possible either for over some interval or for over a certain time period.

If

, then

must also be identically zero. By the second equation of the adjoint system (11), this implies that

, contradicting condition I) of the maximum principle.

In the scenario where

, it follows that

. Such a case is impossible, as the controlled system cannot stay in a zero state under any control value, given that the term of the system’s differential Equation (5) which includes the control would also equal zero.

This reasoning leads to the formulation of a statement:

Lemma 1. Optimal control is limited to only two values, 1 and , dictated by the sign of the product . Considering the case where this product equals zero as non-existent is justified by the fact that the control value at a single point or a finite number of points lacks any impact on the trajectory of the controlled system.
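For orientation, the switching rule of Lemma 1 can be traced through a short calculation. The sketch below assumes first-order dynamics of the form $\dot{x}_1 = x_2$, $\dot{x}_2 = -b x_2 - u x_1$ with $u \in [u_{\min}, u_{\max}]$. This form and the symbols $b$, $u_{\min}$, $u_{\max}$ are assumptions made for illustration and may differ from system (10) and Formulas (11) to (13) of the paper.

```latex
% Assumed dynamics (hypothetical form): \dot{x}_1 = x_2, \quad \dot{x}_2 = -b x_2 - u x_1
\begin{align*}
H &= \psi_1 x_2 + \psi_2\,(-b x_2 - u x_1)
   = \psi_1 x_2 - b\,\psi_2 x_2 - u\,\psi_2 x_1, \\
\dot{\psi}_1 &= -\frac{\partial H}{\partial x_1} = u\,\psi_2, \qquad
\dot{\psi}_2 = -\frac{\partial H}{\partial x_2} = -\psi_1 + b\,\psi_2, \\
u^{*} &= \arg\max_{u \in [u_{\min},\,u_{\max}]} H =
\begin{cases}
u_{\min}, & \psi_2 x_1 > 0, \\
u_{\max}, & \psi_2 x_1 < 0 .
\end{cases}
\end{align*}
```

Because $H$ is linear in $u$, the maximum is attained at an endpoint of the admissible interval whenever $\psi_2 x_1 \neq 0$, which is the bang-bang structure stated in the lemma. Under the same assumption, $\psi_2 \equiv 0$ on an interval forces $\psi_1 \equiv 0$ through the second adjoint equation, in line with the argument excluding singular control.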

Now, consider condition III. It represents the greatest interest at values and .

At

, the condition is expressed as

At

, the condition becomes

Given the boundary conditions that

, and considering the control value

is always positive, with

and

, the following additional conditions are derived from (14) and (15)

We now explore the possible form of the optimal control and the number of switches. It is already known that the value of optimal control is determined by the sign of the product .

The trajectory , due to its monotonic nature, crosses zero only once. This moment in time is denoted as .

Thus, control may only change its value at the point and at points where the sign of the adjoint variable changes. If at point , both and change their signs simultaneously, then the control value remains unchanged.

Firstly, consider an interval of time where control

. Then, the general solution

of the differential equation from system (4) and

from the adjoint system (5) will take the form

where constants

,

,

,

must be determined from the boundary conditions on the interval of constant control. The value of the adjoint variable

is not of interest, as it does not enter into Formula (13).

Now, consider an interval of time during which control

. Similarly, it is obtained that

where constants

,

,

,

are also to be determined from the boundary conditions.

It’s now proposed that the adjoint variable

turns to zero at most twice within the interval

, either in

. For instance, let

, where

. Then, within the interval

, the control value does not change, and this leads to a contradiction with Formulas (17) and (18) because the distance between zeros of the function

(for example

for Formula (17)) exceeds the maximum length of an interval of constancy of sign and monotonicity of the function

(for example

or

).

Thus, it is proven that

Lemma 2. In problem (5), optimal control can have no more than one switch in each of the intervals and

The function

has a continuous derivative (as the right-hand side of the second equation of the adjoint system (11) is continuous) and turns to zero no more than twice within the interval

. Moreover, these zeros cannot both lie within the same subinterval

or

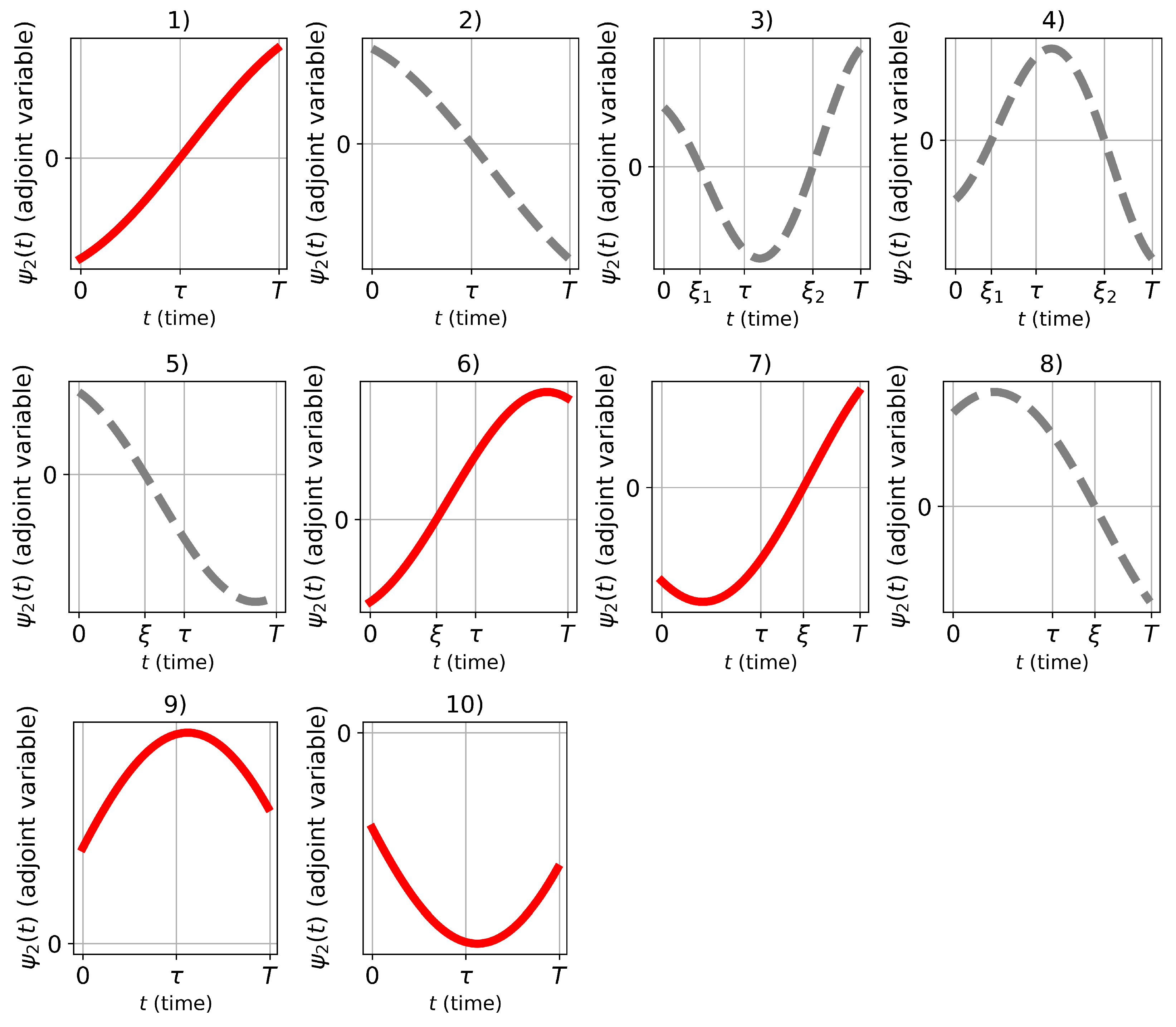

. This leads to 10 different cases (

Figure 2) of sign changes for the function

over the interval

. Dashed gray lines on the graph indicate scenarios that contradict the PMP, while solid red lines indicate cases with no contradiction with PMP found. A detailed analysis of these cases is provided.

If

, then

for

, leading to cases 1) and 2). In case 1), a constant control equal to 1 is maintained throughout the entire time interval. Case 2) is not possible, as

and does not satisfy condition (16).

If

turns to zero twice within the interval

, there exist

and

such that

and

, leading to cases 3) and 4). These cases contradict condition (16) since

and

have the same sign.

If turns to zero once at a point and does not equal zero within the interval , cases 5) and 6) are obtained. Case 5) is impossible because .

If turns to zero once at a point and does not equal zero within the interval , cases 7) and 8) emerge. Case 8) is not feasible, as .

Finally, if does not turn to zero within the interval , cases 9) and 10) are considered. Case 9) is possible if . Case 10) is possible if .

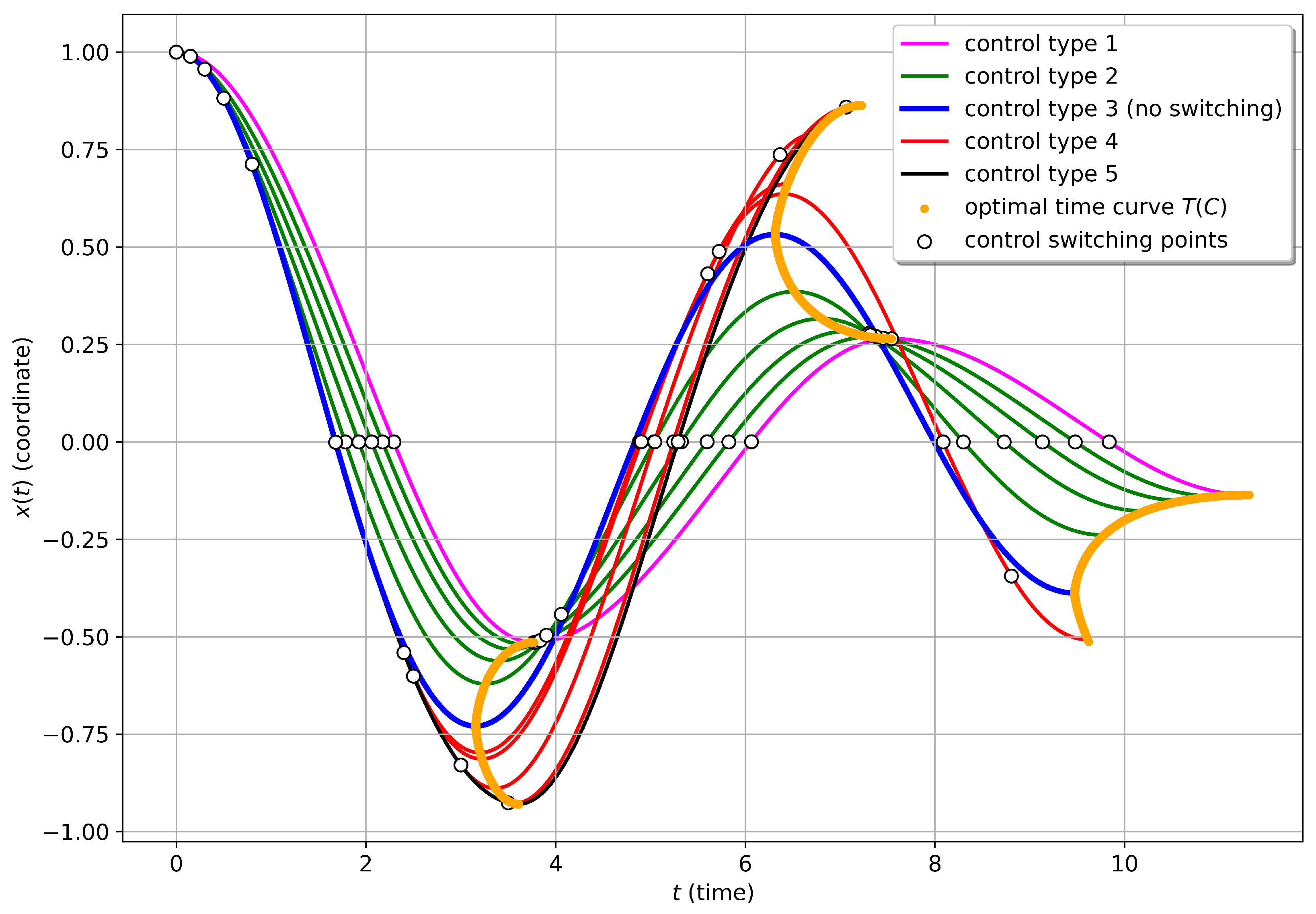

After analyzing cases 1)-10), it is determined that the following statement holds

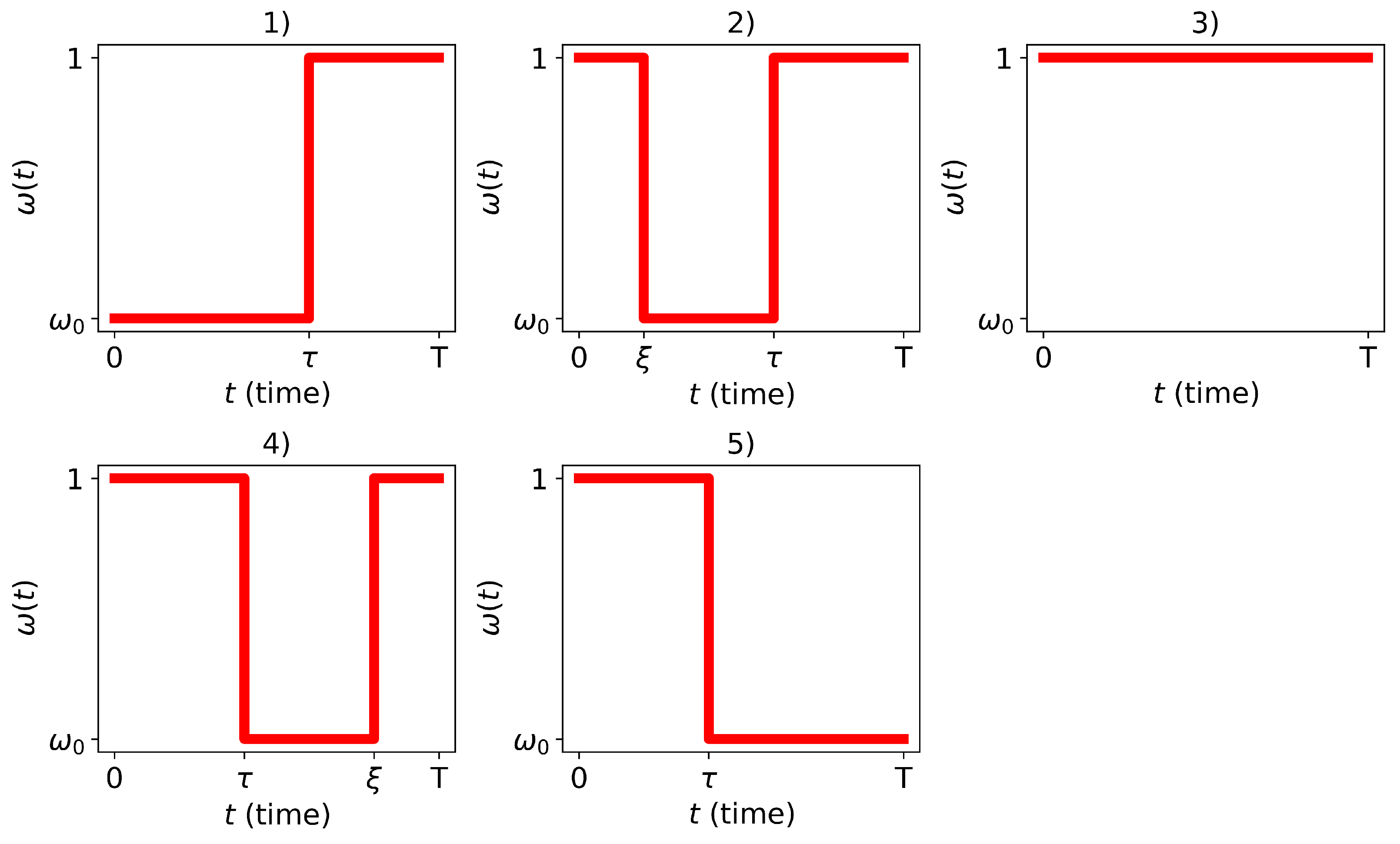

Lemma 3. Optimal control (bang-bang) in the problem (5) can be one of the five types represented in Figure 3.

Note that all types of control satisfying the maximum principle (illustrated in Figure 3) differ in the length of the segment where the control value equals

, and in its placement relative to the point

.

Introducing the parameter

, the values of

and T can be uniquely determined from the equation and three boundary conditions (excluding the condition

) of problem (5) by substituting the corresponding control. This gives the end time

and the terminal value

as functions of the unknown parameter s.

For control type 3 (illustrated in Figure 3),

corresponds, and for control type 1, the smallest value

. For control type 5 the largest value

is obtained as the longest possible duration of motion under constant control

, that is

, with the moments of time

and T derived from Formula (18) and the conditions

,

,

,

, aiming to minimize

. Similarly, from Formula (18), the smallest value of

is obtained. Controls of types 2 and 4 correspond to intermediate values of s within the intervals

and

.

Knowing the switching moment of control and having an analytical solution (Formulas (17) and (18)), the end time T and the terminal trajectory value

can be explicitly calculated as functions of the parameter s.

Let us consider

, then for

,

and from Formula (17) and the initial condition

it’s found

,

leading to

and

.

Subsequently, for

,

and from Formula (18) and the continuity of

at

, similarly,

.

Finally, for

,

and from Formula (17) and the continuity of

at

, it’s found

where

.

From Formula (19) and the condition

, the end moment of time

Simplifying expressions (19)–(20), ultimately, for

, one obtains

Conducting analogous calculations for the case

, one obtains

Note that Formulas (21) and (22) parametrically define a certain curve

depicting the dependence of the end time on the terminal value C when utilizing controls that satisfy the maximum principle. The parametric formulation of the function allows for the calculation of the first two derivatives of

as functions of the variable C. Thus, the following properties of the function

are established

Lemma 4.

Formulas (21) and (22) uniquely determine the function , defined for .

The function is continuous for .

The function is differentiable for . At the endpoints of the interval, the derivative equals infinity, while at the point corresponding to the parameter , the derivative equals zero. Let be denoted.

The function decreases on the interval and increases on the interval .

The second derivative of the function is negative on the intervals . This condition signifies that the function is concave down for .

Remark 1. Note that the constancy of the sign of the second derivative was established by symbolic computation using Wolfram software.
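The derivatives with respect to C referred to above follow from the standard rules for a curve given parametrically. As a worked reminder, writing $T(s)$ and $C(s)$ for the end time and terminal value as functions of the parameter $s$, and using primes for derivatives with respect to $s$ (a notation adopted here for illustration):

```latex
\begin{align*}
\frac{dT}{dC} &= \frac{T'(s)}{C'(s)}, \\[4pt]
\frac{d^{2}T}{dC^{2}} &= \frac{d}{ds}\!\left(\frac{T'(s)}{C'(s)}\right)\frac{1}{C'(s)}
 = \frac{T''(s)\,C'(s) - T'(s)\,C''(s)}{\bigl(C'(s)\bigr)^{3}} .
\end{align*}
```

In this form, an infinite first derivative corresponds to a parameter value where $C'(s) = 0$ while $T'(s) \neq 0$, and a zero first derivative to a value where $T'(s) = 0$ while $C'(s) \neq 0$. Checking the sign of the second derivative then reduces to checking the sign of the numerator and of $C'(s)$.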

Investigating the properties of the function , it was found that each permissible terminal value C corresponds to a unique control that satisfies the PMP. Therefore, the following statement holds

Lemma 5. The function , defined by Formulas (21) and (22), determines the optimal time in problem (5).

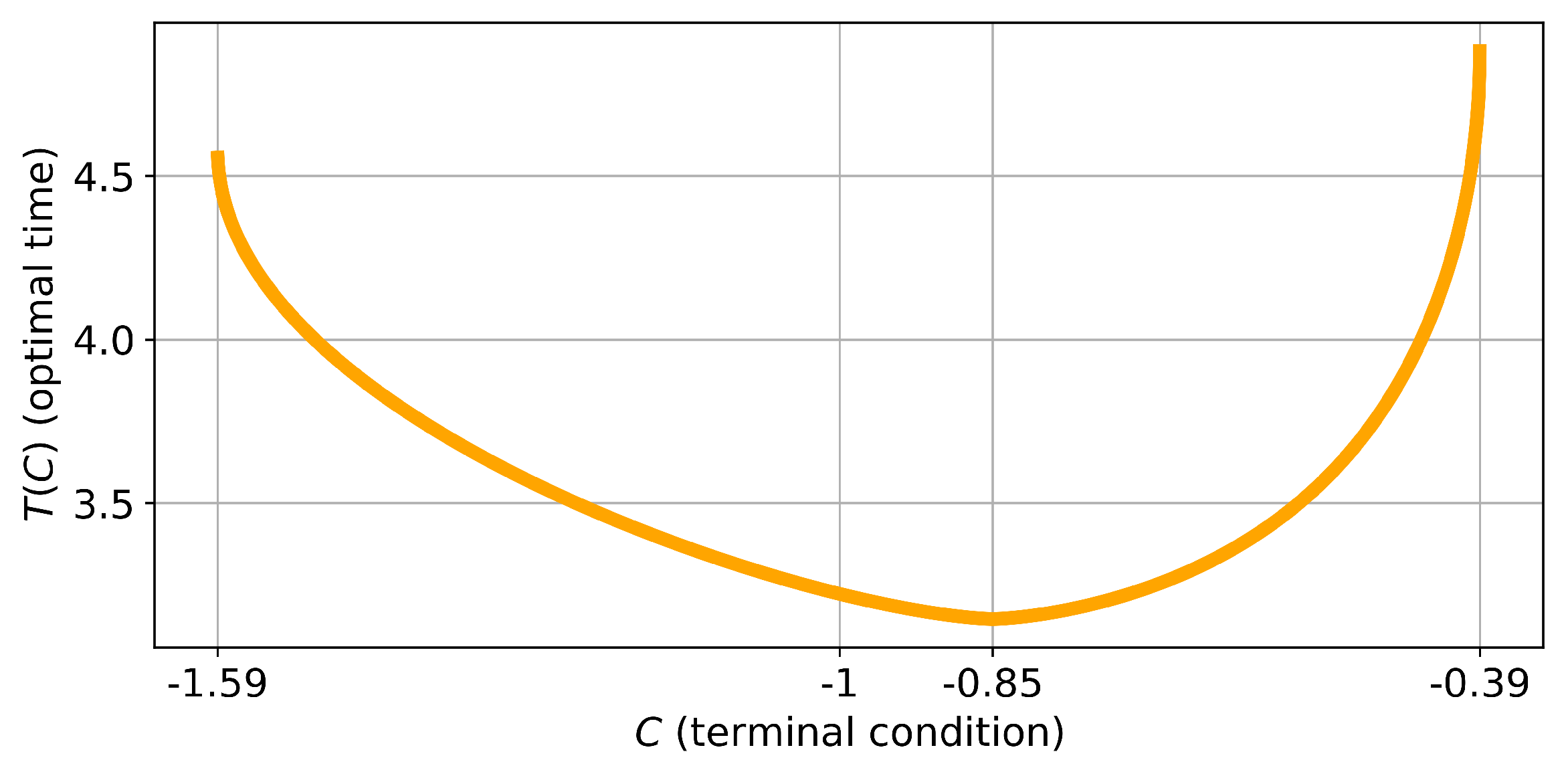

Example 1. Considering an example with given parameters , , it’s calculated that , . From (21), it’s found that . Figure 4 illustrates the graph of the function , demonstrating how the optimal time varies with different terminal values C within the specified range.

It has been demonstrated that each value of s unequivocally corresponds to a specific optimal control and an optimal trajectory, leading to a particular terminal point

. Different optimal trajectories, corresponding to various types of controls, are presented in Figure 5. Controls of types 1 and 5 correspond to trajectories reaching the extreme points of the reachability set. Control of type 2 corresponds to the upper branch of the

curve (left branch in Figure 4). Control of type 3, which has no switches, corresponds to the trajectory with the minimum possible time. Control of type 4 corresponds to the lower branch of the

curve (right branch in Figure 4).

Trajectories are constructed for the given parameter values in Figure 4, but the general character of the drawing does not change with different parameter values.

5. Solution to the General Time-Optimal Problem

We now apply the results of the previous section to solve the original problem (2). Let us first explore the question of controllability and determine under what boundary conditions A and B the system is controllable.

Using the estimate (8), we obtain an estimate for

depending on the number of semi-oscillations N.

Thus, the following lemma holds.

Lemma 6.

The system is controllable if and only if there exists an even natural number N (for ) or an odd natural number N (for ), such that

Since and , it follows that and as .

Therefore, the system will be controllable for any non-zero values of A and B provided that

Using Formula (9), this inequality can be expressed as follows

Having resolved the question of controllability, we now return to the original problem of optimal control (2). Given

the solution of the optimal control problem (2), let us consider two consecutive semi-oscillations

. This segment of the optimal trajectory satisfies the boundary conditions of the original problem and must itself be optimal. Using the results of the previous section and normalizing variable

, the time for this segment can be expressed by the formula

We fix

and

(noting that they have the same sign) and find the minimum of the last expression with respect to the variable

. Denoting

and introducing a new variable

, the time

can be expressed by the function

where

is defined parametrically by Formulas (21) and (22). Let q and

belong to the domain of definition of the function

. We find the first derivative of the function

It’s easy to notice that this derivative becomes zero at the point

. Let’s compute the second derivative at the point

.

Given that

, the function

decreases and is concave downwards. Therefore, all terms in the above expression are positive, and the found point is a point of minimum. At the boundary points of the domain of definition, the function

is not differentiable, but in this case, there exists a unique control (either Equation (6) or Equation (7)) leading the controlled system to its extreme position. For the remaining values of

, the positivity of the above expression (26) follows from lengthy algebraic manipulations using the parametric definition of the function

with the help of Formulas (21) and (22).

We have shown that the numbers , , form a geometric progression. Applying this reasoning to the entire trajectory, we obtain the following statement

Lemma 7.

The numbers for the optimal process satisfy the condition

where the number of semi-oscillations is determined as the smallest N satisfying Lemma 6.

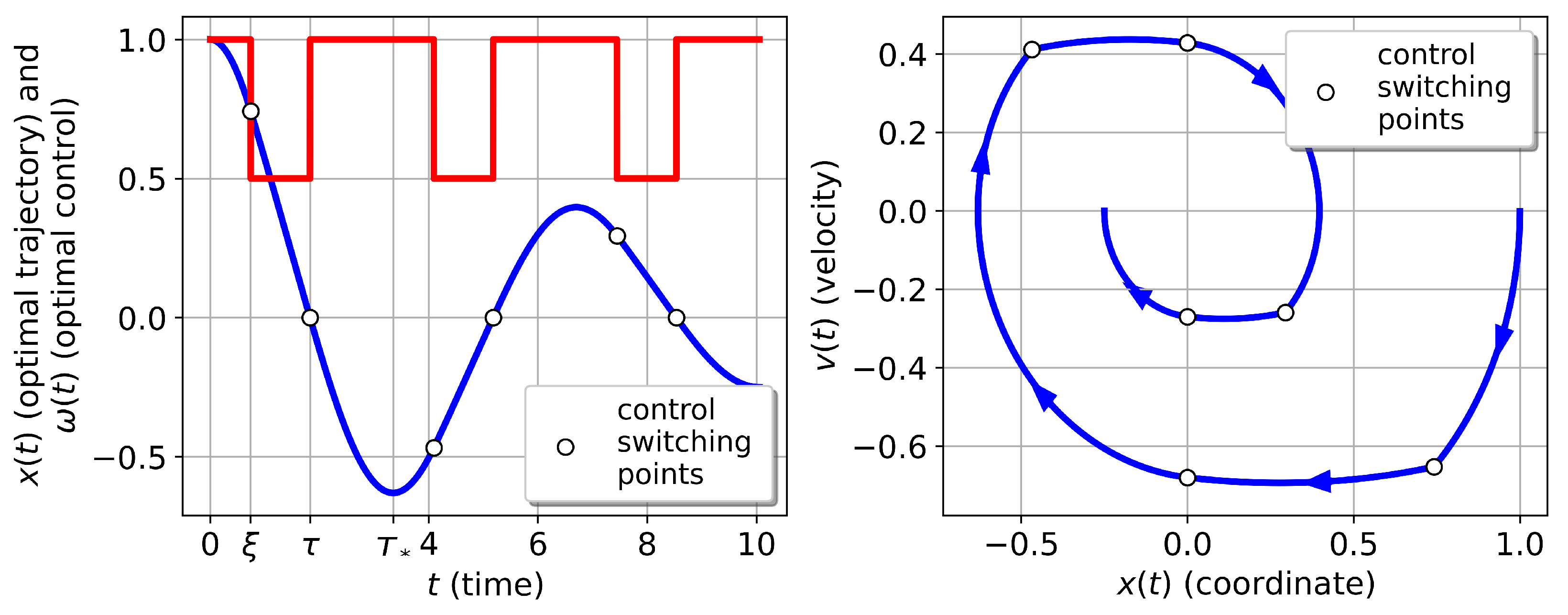

Since the ratio is constant for the optimal trajectory, the optimal control on each segment will be the same. Hence, if the number of semi-oscillations required to reach the end point is more than one, then the optimal control is a periodic function, where the period is one semi-oscillation.
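The geometric-progression structure of the turning points can be illustrated with a few lines of code. The sketch below is an illustration under stated assumptions, not the authors' algorithm: the function name `extreme_points` is hypothetical, the number of semi-oscillations `n` is taken as given (its selection through Lemma 6 is not reproduced), and the common ratio is taken to be negative because consecutive extreme points lie on opposite sides of zero.

```python
def extreme_points(a, b, n):
    """Turning-point values x_0 = a, ..., x_n = b forming a geometric progression.

    a, b : non-zero initial and terminal coordinates.
    n    : number of semi-oscillations (even if a and b share a sign, odd otherwise).
    """
    if a == 0 or b == 0:
        raise ValueError("a and b must be non-zero")
    if n < 1:
        raise ValueError("n must be a positive integer")
    same_sign = (a > 0) == (b > 0)
    if same_sign != (n % 2 == 0):
        raise ValueError("n must be even when a and b share a sign, odd otherwise")
    # Common ratio of consecutive extremes; negative because the sign alternates.
    q = -(abs(b / a) ** (1.0 / n))
    return [a * q ** k for k in range(n + 1)]


if __name__ == "__main__":
    # Two semi-oscillations from 1 to 0.25: the intermediate extreme is -0.5.
    print(extreme_points(1.0, 0.25, 2))
```

Since every semi-oscillation has the same ratio of end value to start value, the normalized subproblem is identical on each segment, which is why the same control pattern repeats from one semi-oscillation to the next.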