Submitted: 20 March 2023

Posted: 21 March 2023

Abstract

Keywords:

Introduction

Verbal Insight Problem-Solving

A Brief Overview of Cutting-Edge Sequence Classification Methods in Text or Speech Contexts

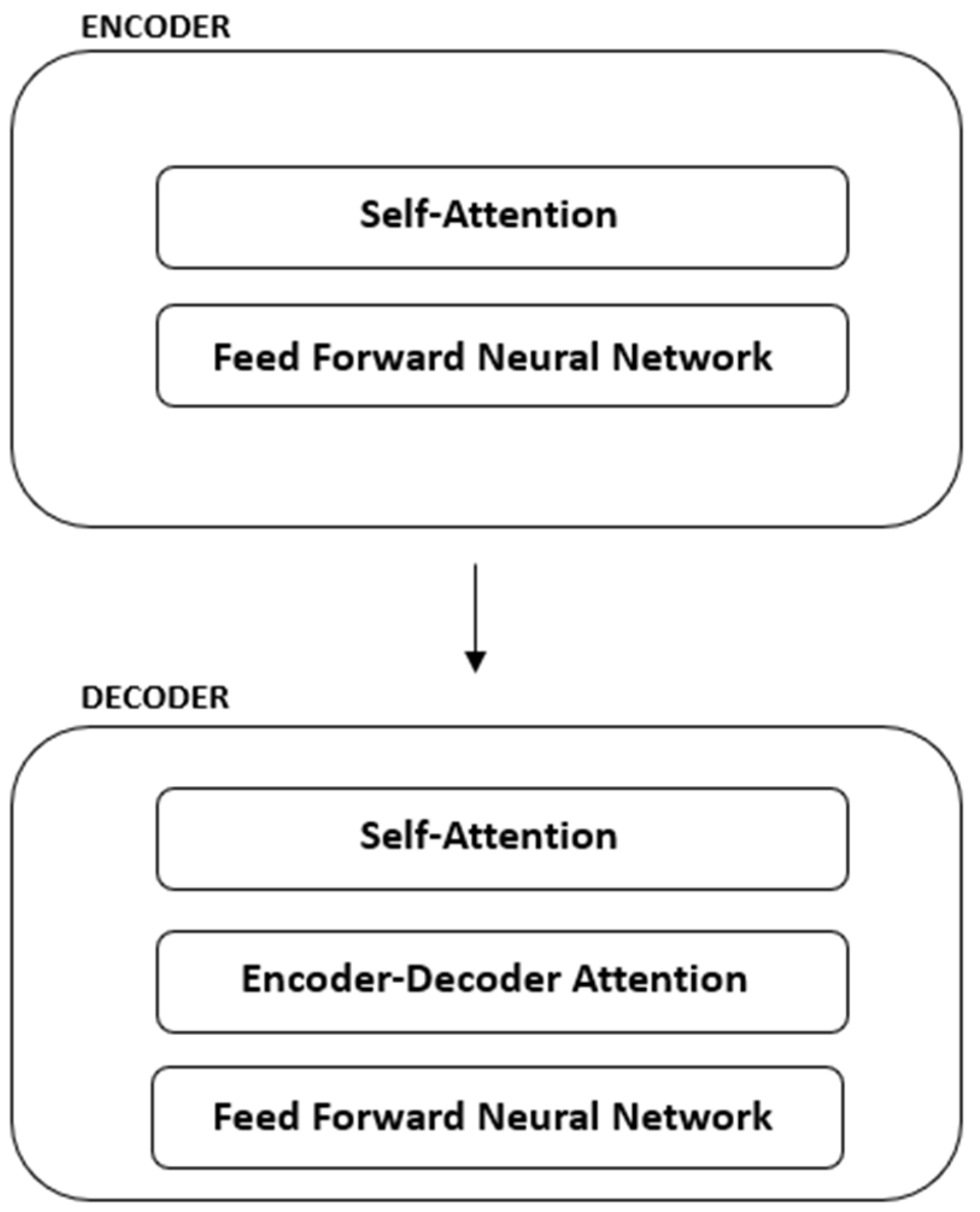

Encoding and Decoding Components

Materials and Methods

Language-Based Instructions

Methods

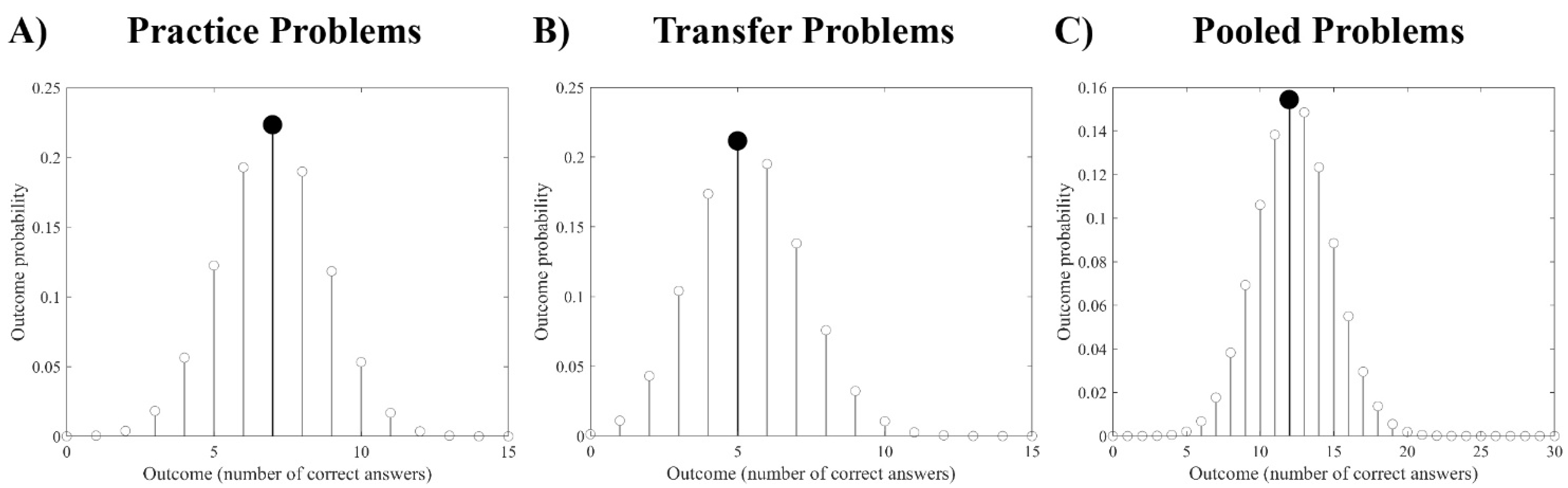

Probability Distributions of the Possible Outcomes for the Human Samples on Each Set of Problems

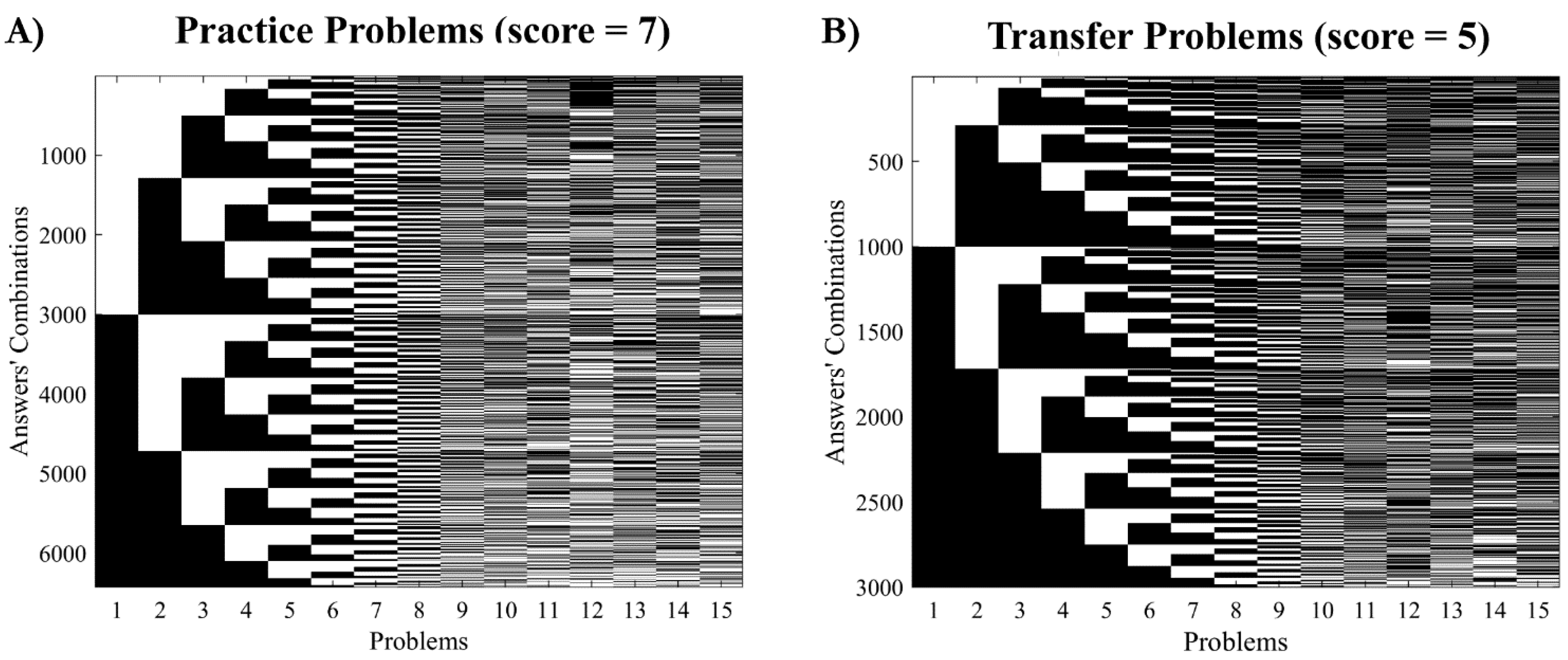

- First, for each score, the set of all answer combinations producing that score was identified. As an example, consider a score of three (three correct answers out of fifteen): such a score can be obtained through various combinations of answers, their number equalling the binomial coefficient $\binom{15}{3} = 455$. Given a set of $n$ items (problems/answers, $n = 15$), for each score $k$ ($k$ ranging from 0 to 15), all answer combinations composed of $k$ correct answers were identified (the total number of combinations is equal to $\binom{n}{k}$).

- As a second step, the probability associated with the $i$th combination yielding score $k$, $p(k_i)$, was computed from the human solution rates $p_j$ of the individual problems, with $C_{k_i}$ denoting the set of problems answered correctly in that combination, as:

  $$p(k_i) = \prod_{j \in C_{k_i}} p_j \prod_{j \notin C_{k_i}} (1 - p_j)$$

- Finally, the total probability of obtaining a total score of $k$ was obtained as the sum of the related probabilities over the entire set of combinations ($i = 1, \dots, \binom{n}{k}$):

  $$P(k) = \sum_{i=1}^{\binom{n}{k}} p(k_i)$$
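The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: it assumes that each problem's human solution rate (taken here from the Practice problems table) acts as an independent probability of a correct answer, and the names `PRACTICE_RATES` and `score_distribution` are hypothetical.

```python
from itertools import combinations
from math import comb, prod

# Human solution rates for the 15 Practice problems, copied from the table
# in this paper (assumption: treated as independent success probabilities).
PRACTICE_RATES = [0.50, 0.211, 0.75, 0.20, 0.65, 0.65, 0.19, 0.55,
                  0.65, 0.10, 0.556, 0.579, 0.286, 0.50, 0.60]


def score_distribution(rates):
    """Return a list whose k-th entry is the probability of a total score of k."""
    n = len(rates)
    distribution = []
    for k in range(n + 1):
        total = 0.0
        # Step 1: enumerate every combination of k correctly answered problems.
        for correct in combinations(range(n), k):
            chosen = set(correct)
            # Step 2: probability of this exact pattern of right/wrong answers.
            total += prod(rates[j] if j in chosen else 1.0 - rates[j]
                          for j in range(n))
        # Step 3: sum over all combinations that yield the score k.
        distribution.append(total)
    return distribution


dist = score_distribution(PRACTICE_RATES)
print(comb(15, 3))  # number of combinations giving a score of three: 455
for k, p in enumerate(dist):
    print(f"score {k:2d}: {p:.4f}")
```

Enumerating all $2^{15} = 32768$ answer patterns is cheap at this size; for much larger problem sets a dynamic-programming recurrence over the problems would give the same distribution without explicit enumeration.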

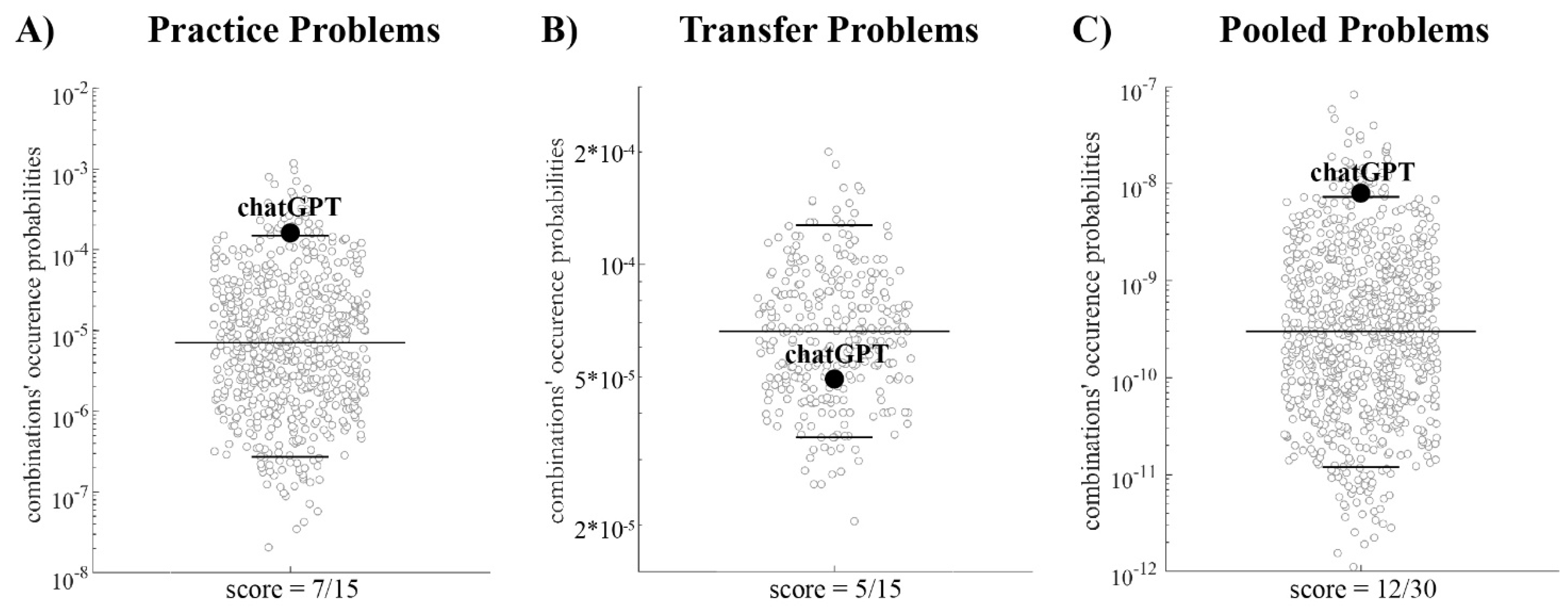

Problem-Wise Associations between Human Population Solution Rates and ChatGPT Performance

Probability Distributions of the Possible Outcomes and Associations between Human Population Solution Rates and ChatGPT Performance on the Pooled Dataset

Results

Pooled Problems Set

- The number of problems was balanced across the two sets (15 problems each).

- ChatGPT performance on the Practice set was not significantly different from that obtained on the Transfer set (Fisher's exact test, p < 0.72, see Supplementary Material A).

- The median solution rate of the human population on the Practice set was not significantly different from that obtained on the Transfer set (Wilcoxon rank sum test, z = 1.53, p < 0.13, Supplementary Material B).
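As an illustration of the first comparison, the sketch below runs a two-sided Fisher's exact test on the 2×2 table of correct and incorrect ChatGPT answers (7/15 on the Practice set, 5/15 on the Transfer set, as reported in the tables of this paper). It is a plain-Python sketch built on the hypergeometric distribution, not the authors' analysis script, and the function name `fisher_exact_two_sided` is hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x successes in the first row,
        # with all row and column totals held fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Sum the probabilities of all tables no more likely than the observed one
    # (a small relative tolerance guards against floating-point ties).
    return sum(prob(x) for x in range(lowest, highest + 1)
               if prob(x) <= p_observed * (1 + 1e-9))


# Rows: Practice set, Transfer set; columns: correct, incorrect.
p_value = fisher_exact_two_sided(7, 8, 5, 10)
print(round(p_value, 2))  # 0.71
```

The resulting value of about 0.71 is consistent with the non-significant difference reported above.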

Discussion and Conclusions

Supplementary Materials

Funding

Conflicts of Interest

References

- Ansburg, P. I., & Dominowski, R. I. (2000). Promoting insightful problem solving. The Journal of Creative Behavior, 34(1), 30-60.

- Bahdanau, D., Cho, K., & Bengio, Y. (2014). Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473.

- Chomsky, N. (2006). Language and mind. Cambridge University Press.

- Dell'Osso, L., Bazzichi, L., Baroni, S., Falaschi, V., Conversano, C., Carmassi, C., & Marazziti, D. (2015). The inflammatory hypothesis of mood spectrum broadened to fibromyalgia and chronic fatigue syndrome. Clinical and Experimental Rheumatology, 33(1 Suppl 88), S109-16.

- Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2018). BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

- Ferrucci, R., Mameli, F., Ruggiero, F., Reitano, M., Miccoli, M., Gemignani, A., ... & Orrù, G. (2022). Alternate fluency in Parkinson’s disease: A machine learning analysis. PLoS ONE, 17(3), e0265803.

- James, W. (1890). The principles of psychology (Vol. 2).

- James, W. (2007). The principles of psychology. Cosimo, Inc.

- Kahneman, D. (2011). Thinking, fast and slow. Macmillan.

- Le, Q. V., Jaitly, N., & Hinton, G. E. (2015). A simple way to initialize recurrent networks of rectified linear units. arXiv preprint arXiv:1504.00941.

- Li, S., Li, W., Cook, C., Zhu, C., & Gao, Y. (2018). Independently recurrent neural network (IndRNN): Building a longer and deeper RNN. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 5457-5466).

- Loconte, R., Orrù, G., Tribastone, M., Pietrini, P., & Sartori, G. Challenging ChatGPT 'Intelligence' with human tools: A neuropsychological investigation on prefrontal functioning of a large language model. Available at SSRN: https://ssrn.com/abstract=4377371.

- Mayer, R. E. (1992). Thinking, problem solving, cognition. WH Freeman/Times Books/Henry Holt & Co.

- Mayer, R. E., & Wittrock, M. C. (2006). Problem solving. In P. A. Alexander & P. H. Winne (Eds.), Handbook of educational psychology (pp. 287-303). Mahwah, NJ: Erlbaum.

- Mazza, C., Monaro, M., Orrù, G., Burla, F., Colasanti, M., Ferracuti, S., & Roma, P. (2019). Introducing machine learning to detect personality faking-good in a male sample: A new model based on Minnesota Multiphasic Personality Inventory-2 Restructured Form scales and reaction times. Frontiers in Psychiatry, 10, 389.

- Newell, A., & Simon, H. A. (1972). Human problem solving (Vol. 104, No. 9). Englewood Cliffs, NJ: Prentice-Hall.

- Orrù, G., Pettersson-Yeo, W., Marquand, A. F., Sartori, G., & Mechelli, A. (2012). Using support vector machine to identify imaging biomarkers of neurological and psychiatric disease: A critical review. Neuroscience & Biobehavioral Reviews, 36(4), 1140-1152.

- Orrù, G., Gemignani, A., Ciacchini, R., Bazzichi, L., & Conversano, C. (2020). Machine learning increases diagnosticity in psychometric evaluation of alexithymia in fibromyalgia. Frontiers in Medicine, 6, 319.

- Orrù, G., Monaro, M., Conversano, C., Gemignani, A., & Sartori, G. (2020b). Machine learning in psychometrics and psychological research. Frontiers in Psychology, 10, 2970.

- Orrù, G., Conversano, C., Ciacchini, R., & Gemignani, A. (2021). A brief overview on the contribution of machine learning in systems neuroscience. Current Psychiatry Research and Reviews, 17(2), 66-71.

- Pace, G., Orrù, G., Monaro, M., Gnoato, F., Vitaliani, R., Boone, K. B., ... & Sartori, G. (2019). Malingering detection of cognitive impairment with the B test is boosted using machine learning. Frontiers in Psychology, 10, 1650.

- Parikh, A., Täckström, O., Das, D., & Uszkoreit, J. (2016). A decomposable attention model. In Empirical Methods in Natural Language Processing.

- Petrov, S., Barrett, L., Thibaux, R., & Klein, D. (2006). Learning accurate, compact, and interpretable tree annotation. In Proceedings of the 21st International Conference on Computational Linguistics and 44th Annual Meeting of the ACL (pp. 433-440). ACL.

- Polya, G. (2004). How to solve it: A new aspect of mathematical method (No. 246). Princeton University Press.

- Sartori, G., Zangrossi, A., Orrù, G., & Monaro, M. (2017). Detection of malingering in psychic damage ascertainment. P5 Medicine and Justice: Innovation, Unitariness and Evidence, 330-341.

- Sutskever, I., Vinyals, O., & Le, Q. V. (2014). Sequence to sequence learning with neural networks. Advances in Neural Information Processing Systems, 27.

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., ... & Polosukhin, I. (2017). Attention is all you need. Advances in Neural Information Processing Systems, 30.

- Wallas, G. (1926). The art of thought (Vol. 10). Harcourt, Brace.

| Problem-Solving Tasks | |||

|---|---|---|---|

| Practice Problems | Correct Answer | ChatGPT Response | Answer Accuracy |

| (1) A farmer in California owns a beautiful pear tree. He supplies the fruit to a nearby grocery store. The store owner has called the farmer to see how much fruit is available for him to purchase. The farmer knows that the main trunk has 24 branches. Each branch has exactly 6 twigs. Since each twig bears one piece of fruit, how many plums will the farmer be able to deliver? | None, plums do not grow on pear trees | To solve this problem, we need to find the total number of twigs on the pear tree. According to the information given in the problem, the main trunk has 24 branches and each branch has 6 twigs. This means that the total number of twigs is 24 branches * 6 twigs/branch = <<24*6=144>>144 twigs. Since each twig bears one piece of fruit, the farmer will be able to deliver 144 twigs * 1 fruit/twig = <<144*1=144>>144 plums. Answer: \boxed{144}. | 0 |

| Solution rate: 50% | |||

| (2) Hardy Pyle was bragging about his church’s baseball team. He said, “Three of our players hit home runs and two of those home runs were hit with the bases loaded. Our guys won 9 to 0 and not a single man crossed home plate”. How was this possible? | The men were all married, or the players were all female. | It is possible that Hardy Pyle's church's baseball team won the game 9 to 0 without any men crossing home plate because they scored all of their runs through home runs. If three players hit home runs and two of those home runs were hit with the bases loaded, then the team would have scored at least 5 runs (3 home runs + 2 home runs with the bases loaded = 5 runs). If the team scored a total of 9 runs, then the remaining 4 runs could have been scored through additional home runs or other means such as errors or walks. Therefore, it is possible for a team to win a baseball game 9 to 0 without any men crossing home plate if they score all of their runs through home runs or other means. | 0 |

| Solution rate: 21.1% | |||

| (3) In what year did Christmas and New Year’s fall in the same year? | They always do. | It is not possible to determine the year in which Christmas and New Year's fell in the same year based on the information provided in the problem. Christmas and New Year's always fall in the same year, as they are consecutive holidays that are celebrated on December 25th and January 1st, respectively. Therefore, every year, Christmas and New Year's fall in the same year. | 1 |

| Solution rate: 75% | |||

| (4) How many cubic meters of dirt are in a hole 6 meters long, 2 meters wide and one meter deep? | There is no dirt in a hole. | To find the volume of a rectangular prism (such as a hole), you can use the formula: volume = length * width * height. In this case, the hole is 6 meters long, 2 meters wide and one meter deep, so the volume of the hole is 6 meters * 2 meters * 1 meter = <<6*2*1=12>>12 cubic meters. Therefore, there are 12 cubic meters of dirt in the hole. Answer: \boxed{12}. | 0 |

| Solution rate: 20% | |||

| (5) There is an ancient invention still used in some parts of the world today that allows people to see through walls. What is it? | Windows | It is not possible to determine the ancient invention that allows people to see through walls based on the information provided in the problem. There is no such invention that allows people to see through walls. Some forms of technology, such as certain types of radar or thermal imaging cameras, can be used to detect objects or people behind walls or other barriers, but they do not allow people to actually see through the walls. Therefore, it is not possible to accurately answer this problem. | 0 |

| Solution rate: 65% | |||

| (6) Captain Frank was out for a walk when it started to rain. He did not have an umbrella and he wasn’t wearing a hat. His clothes were soaked yet not a hair on his head got wet. How could this happen? | He was bald | It is possible that Captain Frank's hair did not get wet because he was bald. If a person is bald, they do not have any hair on their head, so it is not possible for their hair to get wet. Therefore, it is possible for Captain Frank's hair to not get wet even though his clothes were soaked in the rain, if he is bald. This is the only explanation that can be inferred from the information provided in the problem. | 1 |

| Solution rate: 65% | |||

| (7) Mr. and Mrs. Plum have six daughters and each daughter has one brother. How many people are in the Plum family? | There are nine people in the Plum family. | The Plum family consists of Mr. Plum, Mrs. Plum, and their six daughters. Each daughter has one brother, so there are an additional 6 brothers in the family. In total, there are 6 daughters + 6 brothers + Mr. Plum + Mrs. Plum = <<6+6+1+1=14>>14 people in the Plum family. Answer: \boxed{14}. | 0 |

| Solution rate: 19% | |||

| (8) If you drop a rock, would it fall more rapidly through water at 40 degrees Fahrenheit or 20 degrees Fahrenheit? Explain your answer. | 40 degrees because at 20 water is frozen | A rock would fall more rapidly through water at 40 degrees Fahrenheit than at 20 degrees Fahrenheit. The density of a substance is a measure of the mass of the substance per unit volume. The density of water decreases as the temperature increases, which means that warm water is less dense than cold water. This means that a rock will fall more slowly through warm water than through cold water because the warm water is less dense and offers more resistance to the movement of the rock. At 40 degrees Fahrenheit, the water is warmer and less dense than at 20 degrees Fahrenheit, so the rock will fall more rapidly through the water at 20 degrees Fahrenheit than at 40 degrees Fahrenheit. This is because the denser, colder water at 20 degrees Fahrenheit will offer more resistance to the movement of the rock, causing it to fall more slowly through the water. | 1 |

| Solution rate: 55% | |||

| (9) Shadow opened the door to Dr. Apple’s office and surveyed the scene. Dr. Apple’s head lay on his desk in a pool of blood. On the floor to his right lay a gun. There were powder burns on his right temple indicating that he was shot at close range. On his desk was a suicide note, and in his right hand the pen that had written it. Shadow noted that death had occurred in the past hour. All of a sudden Dr. Apple’s wife burst into the office and screamed “My husband’s been shot!” She ran toward the body and saw the note and cried, “Why would he want to kill himself?” Shadow replied “This was no suicide, it is a clear case of murder.” How does Shadow know? | The gun would be the last thing in his right hand not the pen if it were suicide. | Shadow knows that the death of Dr. Apple was a case of murder because there are powder burns on his right temple, indicating that he was shot at close range. A suicide victim typically shoots themselves at close range, so the presence of powder burns suggests that the shooter was in close proximity to the victim when the shot was fired. In a suicide, the victim is the one who fires the shot, so there would not be any powder burns on their temple. Therefore, the presence of powder burns on Dr. Apple's temple suggests that someone else was present when the shot was fired and that Dr. Apple was murdered. | 0 |

| Solution rate: 65% | |||

| (10) A man who lived in a small town in the United States married 20 different women of the same town. All are still living and he never divorced any of them. In this town, polygamy is unlawful; yet, he has broken no law. Can you explain this? | The man is a minister or justice of the peace. | It is not possible to explain how the man in the small town in the United States has married 20 different women without breaking the law on polygamy, as polygamy is the practice of having more than one spouse at the same time, and it is unlawful in the United States. It is not legal for a person to be married to multiple spouses at the same time in the United States. Therefore, it is not possible for the man in the small town to have married 20 different women without breaking the law. The information provided in the problem is contradictory and cannot be reconciled. | 0 |

| Solution rate: 10% | |||

| (11) Can a man marry his widow’s sister? Explain your answer. | No, the man would be dead. | It is not possible for a man to marry his widow's sister, as the man would have to be deceased in order for his spouse to be a widow. A widow is a woman whose spouse has died, and a person cannot marry their own spouse if they are deceased. Therefore, it is not possible for a man to marry his own widow's sister. | 1 |

| Solution rate: 55.6% | |||

| (12) Sid Shady was working for a large construction company that was very concerned about employee theft. Someone tipped the company security that Shady was the man to watch. Each night, he passed through security with a wheelbarrow full of scrap lumber, discarded electrical wires and chunks of concrete. The security guards checked the contents daily, but could find nothing of value. What was Shady stealing? | He was stealing wheelbarrows. | It is not possible to determine what Sid Shady was stealing based on the information provided in the problem. The security guards checked the contents of Shady's wheelbarrow each night and found nothing of value, so it is possible that Shady was not stealing anything at all. The company may have been mistaken in its suspicion of Shady or the person who tipped off the company may have provided incorrect or misleading information. Without additional information, it is not possible to accurately answer this problem. | 0 |

| Solution rate: 57.9% | |||

| (13) At 7 a.m., a train moving 90 kph leaves Montreal heading for Toronto. At 8 a.m., a train running 110 kph leaves Toronto heading for Montreal. Which train will be closer to Montreal when they meet? | When they meet, the trains will be at the same spot. | When the two trains meet, they will be the same distance from Montreal. This is because the trains are traveling in opposite directions and will cover equal distances from their respective starting points. At 7 a.m., the train leaving Montreal is 90 kph and at 8 a.m., the train leaving Toronto is 110 kph. If the trains are traveling in opposite directions, they will cover equal distances from their respective starting points, so when they meet, they will be the same distance from Montreal. | 1 |

| Solution rate: 28.6% | |||

| (14) A woman said to her husband: “This morning, one of my earrings fell into my coffee. Even though my cup was full, the earring did not get wet.” How could this be true? | It fell into coffee grounds. | Yes, it is possible that the woman's earring fell into the coffee grounds rather than the coffee itself, which would explain why the earring did not get wet even though the cup was full. When coffee is brewed, the water passes through the coffee grounds and the liquid coffee is produced. The coffee grounds remain in the filter or coffee pot and do not get wet. If the earring fell into the coffee grounds rather than the liquid coffee, it would not get wet. This is a possible explanation for the woman's statement that her earring fell into her coffee but did not get wet. | 1 |

| Solution rate: 50% | |||

| (15) Barney Dribble is carrying a pillow case full of feathers. Hardy Pyle is carrying three pillow cases the same size as Barney’s, yet Hardy’s load is lighter. How can this be? | Hardy’s pillow cases are empty | Yes, if Hardy's pillow cases are empty, they would be lighter than Barney's pillow case filled with feathers, which would explain how Hardy's load is lighter even though he is carrying three pillow cases the same size as Barney's. I'm glad that I could help clarify this for you. If you have any other questions, please don't hesitate to ask. | 1 |

| Solution rate: 60% | |||

| Total score | | | 7/15 |

| Problem-Solving Tasks | |||

|---|---|---|---|

| Transfer Problems | Correct Answer | ChatGPT Response | Answer Accuracy |

| (1) Professor Bumble was driving along in his old car when suddenly it shifted gears by itself. He paid no attention and kept on driving. Why wasn’t he concerned? | He was driving an automatic | The Professor Bumble was not concerned when the car shifted gears by itself because the car was most likely an Automatic gear shifting car. In an automatic car, gears are changed automatically by the car itself without the need for manual shifting from the driver. This means that it's normal for the car to shift gears by itself and the driver doesn't need to take any action, Professor Bumble would expect this behavior from the car. Therefore, in this case, he didn't pay attention and kept on driving because he was not concerned that anything was wrong. | 1 |

| Solution rate: 40% | |||

| (2) Which would be worth more, a pound of $10 pure gold coins or half a pound of $20 pure gold coins; or would they be worth the same amount? Explain your answer. | A pound of gold is always worth more than half a pound of gold. | Both a pound of $10 pure gold coins and half a pound of $20 pure gold coins would be worth the same amount. Even though the $20 pure gold coins are worth twice as much as the $10 pure gold coins, half a pound of $20 pure gold coins would have half the weight as a pound of $10 pure gold coins. Since the value of the gold coins is based on their weight and gold's value is per ounce, the weight of the coins will determine the value of it. And since the weight of both options is the same, the value would be the same as well. Therefore, the answer is that both options would be worth the same amount, regardless of the face value of the coins. | 0 |

| Solution rate: 45% | |||

| (3) Dee Septor, the famous magician, claimed to be able to throw a ping-pong ball so that it would go a short distance, come to a dead stop, and then reverse itself. He also added that he would not bounce the ball against any object or tie anything to it. How could he perform this feat? | He could throw it straight up | Dee Septor, the magician, could perform this feat by using a hidden magnet. He could throw a ping-pong ball that has a small magnet embedded inside it, and as it comes to a stop, the magnet would be attracted to a hidden magnet that he has positioned in the opposite direction. This would cause the ball to reverse its direction without bouncing against any object or having anything tied to it. Another way, he could use air pressure to change the direction of the ball, by blowing air to the ball as it comes to a stop, it will change its direction. This is a common trick used by magicians to make it look like the ball is changing direction on its own. Both of these explanations involve the use of hidden and unseen elements to create the illusion of the ball reversing itself, and are not actually using the ball's properties to reverse it, they are just hidden tricks to make the audience think that the ball is reversing its direction. | 0 |

| Solution rate: 36.4% | |||

| (4) A prisoner was attempting to escape from a tower. He found in his cell a rope that was half long enough to permit him to reach the ground safely. He divided the rope in half, tied the two parts together, and escaped. How could he have done this? | He unravelled the rope and tied the two pieces together | The prisoner could have done this by tying the two halves of the rope together, forming a knot and making it whole again. By doing this he could have doubled the strength of the rope, and this would have made it long enough to safely reach the ground. Another way, he could have used the two halves of the rope as two separate ropes to make a ladder by tying one end of each rope on a different level of the tower, and then climb down each step of the ladder. This way he can combine the two halves to reach the ground. In this problem, the prisoner used the limited resources he had at his disposal, his intelligence and creativity to overcome the obstacle and find a solution to escape. | 0 |

| Solution rate: 40% | |||

| (5) Uriah Fuller, the famous super-psychic, can tell you the score of any baseball game before it starts. What is his secret? | The score is always zero to zero before a game starts | As a language model AI, I do not have the ability to access current or past information about any baseball games, because my training data goes until 2021 and my browsing ability is disabled. But to answer your question, Uriah Fuller the super-psychic would have some kind of ability to predict the future, such as precognition, clairvoyance or divination. He could use this ability to know the outcome of a baseball game before it starts. It is important to note that while some people may claim to have psychic abilities, there is no scientific evidence to support the existence of these abilities, and Uriah Fuller's "super-psychic" abilities are also fictional and not real. | 0 |

| Solution rate: 30% | |||

| (6) Two mothers and two daughters were fishing. They managed to catch one big fish, one small fish and one fat fish. Since only three fish were caught, how is it possible that they each took home a fish? | One of the mothers was also a daughter | This question is a classic riddle, the solution to this problem is not a straightforward one. The key to the answer is to realize that the mothers are the two daughters' mother, and the daughters are the two mothers' daughters. So, they are a family of three: a grandmother, a mother, and a daughter. With this information, it becomes clear that each of them is able to take a fish home, because they are three different people, not four. The riddle is describing a scenario that is not intended to be taken literally, instead, it is meant to present a problem in a way that requires a different way of thinking, to find the solution. | 1 |

| Solution rate: 30% | |||

| (7) Calendars made in England do not show Lincoln’s Birthday, of course. Do these calendars show the fourth of July? Explain your answer. | Yes, July always has a fourth day | The answer is no, calendars made in England do not show the fourth of July. The reason is that the fourth of July is Independence Day in the United States, and it is not a national holiday in England. Lincoln's Birthday is also not a national holiday in England, as it is in the United States. This means that neither Lincoln's Birthday nor the 4th of July are typically included on calendars made in England. As such, both holidays are specific to the United States and not recognized in England. | 0 |

| Solution rate: 42.1% | |||

| (8) A dealer in antique coins got an offer to buy a beautiful bronze coin. The coin had an emperor’s head on one side and the date 544 BC stamped on the other. The dealer examined the coin and realized that it was a fake. How did he know the coin was phony? | Christ had not been born, so a coin from that time would not be marked BC | The dealer knew the coin was a fake because the date 544 BC was stamped on the other side. This is not possible because the concept of "BC" (Before Christ) was not created until several centuries later, and the concept of using "BC" as a notation to indicate a date before the birth of Jesus Christ was not widely used until the Middle Ages. Additionally, the concept of a calendar based on the birth of Jesus was not established until several centuries after the death of Jesus. This means that the concept of "BC" would not have existed at the time period represented by the date 544 BC. Therefore, the dealer realized that the coin was a fake because the date stamped on it was not accurate for the time period it purported to be from. | 1 |

| Solution rate: 35% | |||

| (9) There is a town in Northern Ontario where 5% of all the people living in the town have unlisted phone numbers. If you selected 100 names at random from the town’s phone directory, on average, how many of these people selected would have unlisted phone numbers? | Zero, they would not be listed in the directory | If 5% of all the people in the town have unlisted phone numbers, that means for every 100 people, 5 of them will have unlisted phone numbers. So, if you select 100 names at random from the phone directory, on average, 5 of those people selected will have unlisted phone numbers. | 0 |

| Solution rate: 30% | |||

| (10) It is estimated that the earth weighs 6 sextillion tons. How much more would the earth weigh if one sextillion tons of concrete and stone were used to build a wall? | It would weigh the same since all materials are taken from the original weight of the earth | A sextillion is a very large number, it is equivalent to 1 followed by 21 zeroes (10^21). Since the wall is made of concrete and stone, the weight of the wall will be added to the earth's weight. If one sextillion tons of concrete and stone were used to build the wall, the earth would weigh 7 sextillion tons, which is one sextillion tons more than its current weight. | 0 |

| Solution rate: 31.8% | |||

| (11) A young boy turned off the light in his bedroom and managed to get into bed before the room was dark. If the bed is ten feet from the light switch and he used no wires, strings or other contraptions to turn off the light, how did he do it? | It was still daylight | The answer is that the light was turned off remotely. The boy is able to turn the light off before the room is dark because he is using a remote control or some other form of wireless technology to turn off the light instead of physically moving to the switch and turning it off. | 0 |

| Solution rate: 35% | |||

| (12) While on safari in the wild jungles of Africa, Professor Quantum woke one morning and felt something in the pocket of his shorts. It had a head and tail, but no legs. When Quantum got up he could feel it move inside his pocket. Quantum, however, showed little concern and went about his morning rituals. Why such a casual attitude toward the thing in his pocket? | It was a coin | Answer: The object in question was a coin. | 1 |

| Solution rate: 36.8% | |||

| (13) Professor Bumble, who is getting on in years, is growing absent minded. On the way to a lecture one day, he went through a red light and turned down a one-way street in the wrong direction. A policeman observed the entire scene but did nothing about it. How could Professor Bumble get away with such behaviour? | He was on foot | Answer: He was walking not driving | 1 |

| Solution rate: 30% | |||

| (14) A giant inverted steel pyramid is perfectly balanced on its point. Any movement of the pyramid will cause it to topple over. Underneath the pyramid is a $100 bill. How would you remove the bill without disturbing the pyramid? | Burn or tear the dollar bill | You could use a long, thin tool, such as a chopstick or a pencil, to slide the bill out from underneath the pyramid without physically touching the pyramid itself. This way, the pyramid remains balanced and undisturbed. | 0 |

| Solution rate: 40% | |||

| (15) Hardy Pyle was washing windows on a high-rise office building when he slipped and fell off a sixty-foot ladder onto the concrete sidewalk below. Incredibly, he did not injure himself in any way. How was this possible? | He was on the bottom rung of the ladder | The ladder was leaning against a wall on the first floor, Hardy was not at a high altitude when he fell. | 0 |

| Solution rate: 31.8% | |||

| Total score | | | 5/15 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).