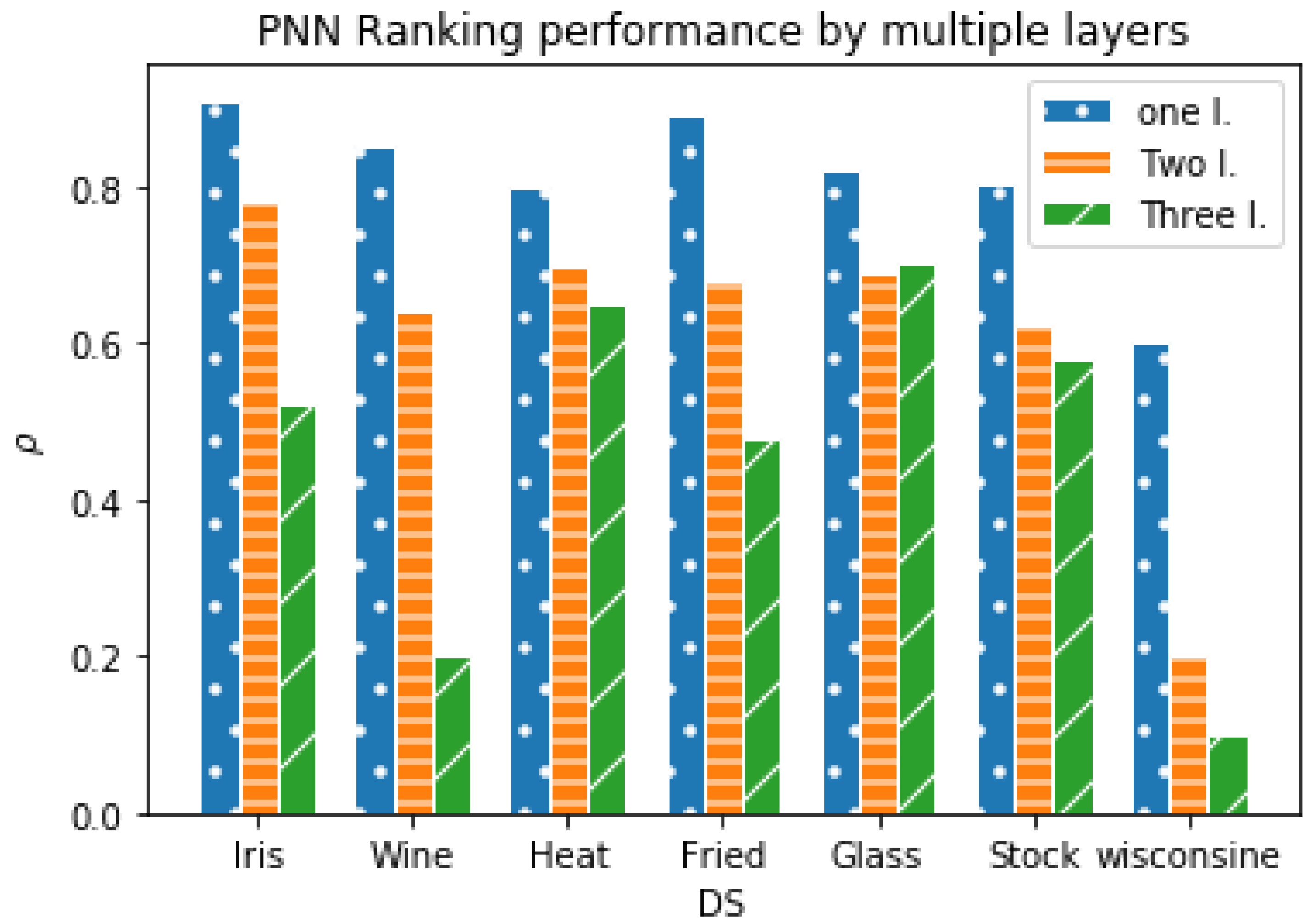

1. Introduction

Preference learning (PL) is an extended paradigm in machine learning that induces predictive preference models from experimental data [1,2,3]. PL has applications in various research areas such as knowledge discovery and recommender systems [4]. Object ranking, instance ranking, and label ranking are the three main categories of the PL domain. Of those, label ranking (LR) is a challenging problem that has gained importance in information retrieval by search engines [5,6]. Unlike the common problems of regression and classification [7,8,9,10,11,12,13], label ranking involves predicting the relationship between multiple label orders. For a given instance $x$ from the instance space $\mathcal{X}$, there is a set of labels $L$ associated with $x$, where $L = \{\lambda_1, \ldots, \lambda_n\}$ and $n$ is the number of labels.

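LR is an extension of multi-class and multi-label classification, where each instance $x$ is assigned an ordering of all the class labels in the set $L$. This ordering gives the ranking of the labels for the given object and can be represented by a permutation $\pi$. The label order has the following three properties: it is irreflexive, where $\lambda_a \nsucc \lambda_a$; transitive, where $(\lambda_a \succ \lambda_b) \wedge (\lambda_b \succ \lambda_c) \Rightarrow \lambda_a \succ \lambda_c$; and asymmetric, where $\lambda_a \succ \lambda_b \Rightarrow \lambda_b \nsucc \lambda_a$. Label preference takes one of two forms, strict and non-strict order. The strict label order ($\lambda_a \succ \lambda_b$) can be represented as $\pi(a) < \pi(b)$, and the non-strict total order $\lambda_a \succeq \lambda_b$ can be represented as $\pi(a) \le \pi(b)$, where $a, b$ are the label indexes and $\pi(a)$ and $\pi(b)$ are the ranking values of these labels.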

For the non-continuous permutation space, the order is represented by the relations mentioned earlier and the incomparability binary relation ⊥. For example, a partial order can be represented by a rank vector in which 0 marks an incomparable relation, that is, a label that is not comparable to the other labels.

Various label ranking methods have been introduced in recent years [14], such as decomposition-based, statistical, similarity-based, and ensemble-based methods. Decomposition methods include pairwise comparison [15,16], log-linear models and constraint classification [17]. The pairwise approach introduced by Hüllermeier [18] divides the label ranking problem into several binary classification problems to predict the pairs of labels $\lambda_a \succ \lambda_b$ or $\lambda_b \succ \lambda_a$ for an input $x$. Statistical methods include decision trees [19], instance-based methods (Plackett-Luce) [20] and Gaussian mixture model based approaches. For example, Mihajlo uses Gaussian mixture models to learn soft pairwise label preferences [21].
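
The pairwise decomposition described above can be illustrated with a minimal sketch. It assumes a ranking is given as a rank vector (position 1 is the most preferred label) and uses simple win counting to aggregate the pair decisions; the function names `pairwise_targets` and `ranks_from_votes` are hypothetical, and the ground-truth targets stand in for the outputs of trained binary classifiers.

```python
from itertools import combinations

def pairwise_targets(ranks):
    """Map a rank vector to binary targets, one per label pair (a, b).

    ranks[a] is the rank position of label a (1 = most preferred).
    The target is 1 when label a is preferred over label b, else 0.
    """
    n = len(ranks)
    return {(a, b): int(ranks[a] < ranks[b]) for a, b in combinations(range(n), 2)}

def ranks_from_votes(pair_predictions, n_labels):
    """Aggregate binary pair predictions into a rank vector by counting wins."""
    wins = [0] * n_labels
    for (a, b), a_preferred in pair_predictions.items():
        wins[a if a_preferred else b] += 1
    # More wins means a better (smaller) rank; ties are broken by label index.
    order = sorted(range(n_labels), key=lambda label: (-wins[label], label))
    ranks = [0] * n_labels
    for position, label in enumerate(order, start=1):
        ranks[label] = position
    return ranks

targets = pairwise_targets([2, 1, 3])
print(targets)
print(ranks_from_votes(targets, 3))
```

With consistent pair decisions the original ranking is recovered. With cyclic decisions, such as `{(0, 1): 1, (1, 2): 1, (0, 2): 0}`, every label wins once and the result depends only on the tie-breaking rule, which is the kind of pair conflict that a single model ranking all labels avoids.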

The artificial neural network (ANN) for ranking was first introduced as RankNet by Burges to solve the problem of object ranking for sorting web documents by a search engine [22]. RankNet uses gradient descent and a probabilistic ranking cost function for each object pair. The multilayer perceptron for label ranking (MLP-LR) [23] employs a network architecture using a sigmoid activation function to calculate the error between the actual and expected values of the output labels. However, it uses a local approach that minimizes the individual error per output neuron by subtracting the predicted value from the actual value, and a global approach that uses the Kendall error. Neither approach uses a ranking objective function in the backpropagation (BP) or learning steps.
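
The probabilistic pair cost used by RankNet can be sketched as follows. This is a minimal illustration of the cross-entropy cost on the score difference of one object pair, not the full training procedure of [22]; the function name `ranknet_pair_cost` and its parameter names are hypothetical.

```python
import math

def ranknet_pair_cost(score_i, score_j, target_prob):
    """Cross-entropy cost for one object pair.

    The model probability that i ranks above j is sigmoid(score_i - score_j);
    target_prob is the desired probability of that event (1.0, 0.5, or 0.0).
    """
    diff = score_i - score_j
    # log(1 + exp(diff)), computed in a numerically stable way.
    softplus = max(diff, 0.0) + math.log1p(math.exp(-abs(diff)))
    return -target_prob * diff + softplus

# The cost is small when the scores agree with the target order
# and large when they contradict it.
print(ranknet_pair_cost(5.0, 0.0, 1.0))
print(ranknet_pair_cost(0.0, 5.0, 1.0))
```

The cost depends only on one pair at a time, which is why a separate objective over the whole label ranking is needed to rank all labels jointly.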

The deep neural network (DNN) was introduced for object ranking to solve document retrieval problems. RankNet [22], RankBoost [24], LambdaMART [25], and deep pairwise label ranking models [26] rank documents using vector representations of the query and the document, in some cases learned by a convolutional neural network (CNN).

CNN is used for image retrieval [27] and label classification for remote sensing and medical diagnosis [28,29,30,31,32,33,34,35]. Moraga and Heider [36] proposed a generalized multiple-valued neuron with a differentiable soft staircase activation function, which is represented by a sum of a set of sigmoidal functions. In addition, Aizenberg proposed a generalized multiple-valued neuron using a convex shape to support complex-valued neural networks and multi-valued numbers [37]. Visual saliency detection using the Markov chain model is one approach that simulates the human visual system by highlighting the most important area in an image and calculating superpixels as absorbing nodes [38,39,40]. However, this approach needs saliency optimization of the results and incurs a computational cost [41,42].
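
The idea of a soft staircase built from a sum of sigmoidal functions, mentioned above, can be sketched as follows. This is a generic illustration and not the exact formula of [36] or of the SS and PSS functions; the name `soft_staircase` and the parameters `n_steps`, `width`, and `steepness` are assumptions made for the example.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def soft_staircase(x, n_steps=3, width=1.0, steepness=50.0):
    """Sum of n_steps shifted sigmoids; plateaus sit near 0, 1, ..., n_steps."""
    return sum(sigmoid(steepness * (x - k * width)) for k in range(n_steps))

# Inputs inside a step map to an almost discrete level.
for x in (-0.5, 0.5, 1.5, 2.5):
    print(x, round(soft_staircase(x), 6))
```

A larger `steepness` makes the plateaus flatter and the transitions sharper while the function stays differentiable, which is what allows gradient-based training with nearly discrete outputs.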

Particle swarm optimization in movement detection is based on the concept of variation and inter-frame difference for feature selection. Swarm algorithms are mainly used for human motion detection in sports, based on probabilistic optimization algorithms [43,44,45,46] and CNN [47].

Some of the methods mentioned above and their variants have some issues that can be broadly categorized into three types:

- 1) The predictive probability of the ANN can be enhanced by limiting the output ranking values of the smooth staircase (SS) function to discrete values, instead of the continuous range produced by the rectified linear unit (ReLU), sigmoid, or softmax activation functions. The prediction is enhanced by using the slope of the SS function as a step function to create discrete values, which reduces the number of output values and accelerates ranking convergence.

- 2) Ranking based on classification ignores the relation between multiple labels. When the ranking model is constructed from binary classification models, these methods cannot consider the relationship between labels because the activation functions do not provide deterministic multiple values. Minimizing pairwise classification errors differs from maximizing the label ranking performance over all labels. This is because the pairs are handled by multiple models, which may reduce ranking consistency by increasing conflicts between ranked pairs where there is no ground truth, and there is no generalized model that ranks all the labels simultaneously. For example, a ranking may be unique, yet pairwise classification has no ground-truth ranking for some of its label pairs, which adds complexity to the learning process.

- 3) Ignoring the relation between features. The convolution kernel has a fixed size and detects one feature per kernel. Thus, it ignores the relationship between different parts of the image. For example, a CNN detects a face by combining features (the mouth, two eyes, the face oval, and a nose) with a high probability of classifying the subject, without learning the relationship between these features. In contrast, the proposed PN kernel directs attention to the important features, which have a high variation in pixel ranking.

The main contributions of the proposed neural network are the following advantages of PNN over existing label ranking methods and CNN classification approaches:

- 1) PNN uses the smooth staircase (SS) as an activation function, which enhances the predictive probability over the sigmoid and softmax: its step shape turns the continuous output range (from -1 to 1 in the sigmoid) into almost discrete multiple values.

- 2) PNN uses gradient ascent to maximize the Spearman ranking correlation coefficient. In contrast, other classification-based methods such as MLP-LR minimize the root mean square (RMS) error computed from the differences between actual and predicted rankings, or use other RMS-based optimization, which may not give the best ranking results.

- 3) PNN is implemented directly as a label ranker. It uses staircase activation functions to rank all the labels together in one model. The SS or PSS functions provide multiple output values during convergence, whereas MLP-LR and RankNet use sigmoid and ReLU activation functions, which have a binary output. Thus, PNN ranks all the labels together in one model instead of ranking pairwise by classification.

- 4) PN uses a novel approach for learning feature selection by ranking the pixels and using weighted kernels of different sizes to scan the image and generate the feature maps.
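
The Spearman objective named in the list above can be sketched with the standard formula for rankings without ties, $\rho = 1 - \frac{6\sum_i d_i^2}{n(n^2-1)}$, where $d_i$ is the difference between the actual and predicted rank of label $i$. The gradient function below treats the predicted ranks as continuous network outputs, which is an assumption made for illustration; the names `spearman_rho` and `spearman_gradient` are hypothetical.

```python
def spearman_rho(actual_ranks, predicted_ranks):
    """Spearman rank correlation for two rank vectors without ties."""
    n = len(actual_ranks)
    if n != len(predicted_ranks) or n < 2:
        raise ValueError("rank vectors must have the same length of at least 2")
    squared_diff = sum((a - p) ** 2 for a, p in zip(actual_ranks, predicted_ranks))
    return 1.0 - 6.0 * squared_diff / (n * (n * n - 1))

def spearman_gradient(actual_ranks, predicted_ranks):
    """Partial derivatives of rho with respect to each predicted rank value."""
    n = len(actual_ranks)
    scale = 12.0 / (n * (n * n - 1))
    return [scale * (a - p) for a, p in zip(actual_ranks, predicted_ranks)]

print(spearman_rho([1, 2, 3, 4], [1, 2, 3, 4]))
print(spearman_rho([1, 2, 3, 4], [4, 3, 2, 1]))
print(spearman_gradient([1, 2, 3], [1, 3, 2]))
```

In gradient ascent, each output moves in the direction of its partial derivative, so a predicted rank that is too high is decreased and one that is too low is increased until the correlation approaches 1.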

The next section explains the ranker network experiment, the problem formulation, and the PNN components (activation functions, objective function, and network structure) that solve the ranker's problems, and compares the ranker network with PNN.

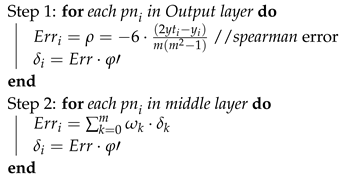

Figure 1.

activation function where and step width and and 5 in (a,b) respectively.

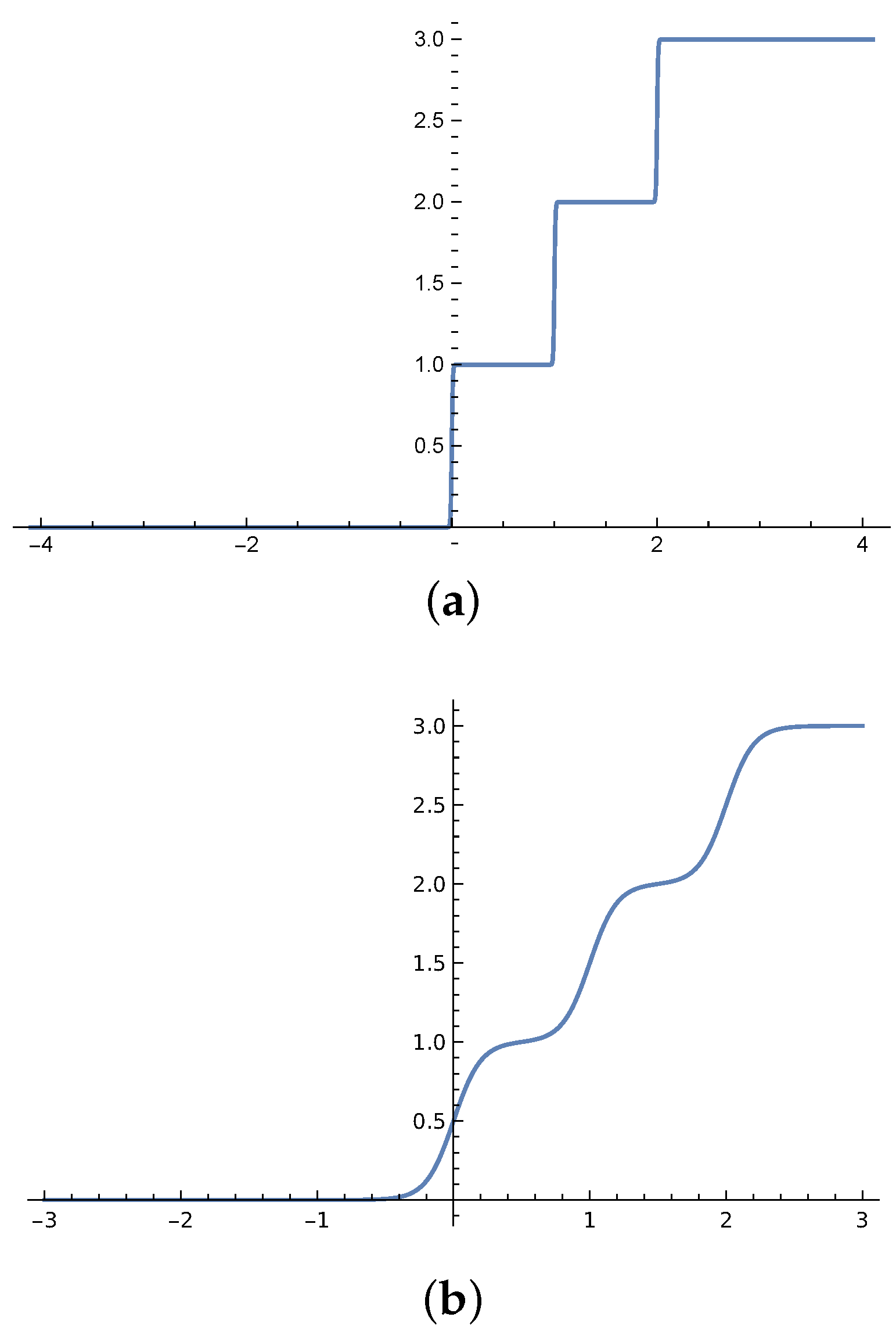

Figure 2.

activation function where , 30 and 20 and boundary , 30 and 1 and scale factor for the decimal place is and 10 for ranking/classification, extreme label ranking/classification and regression in (a–c) respectively.

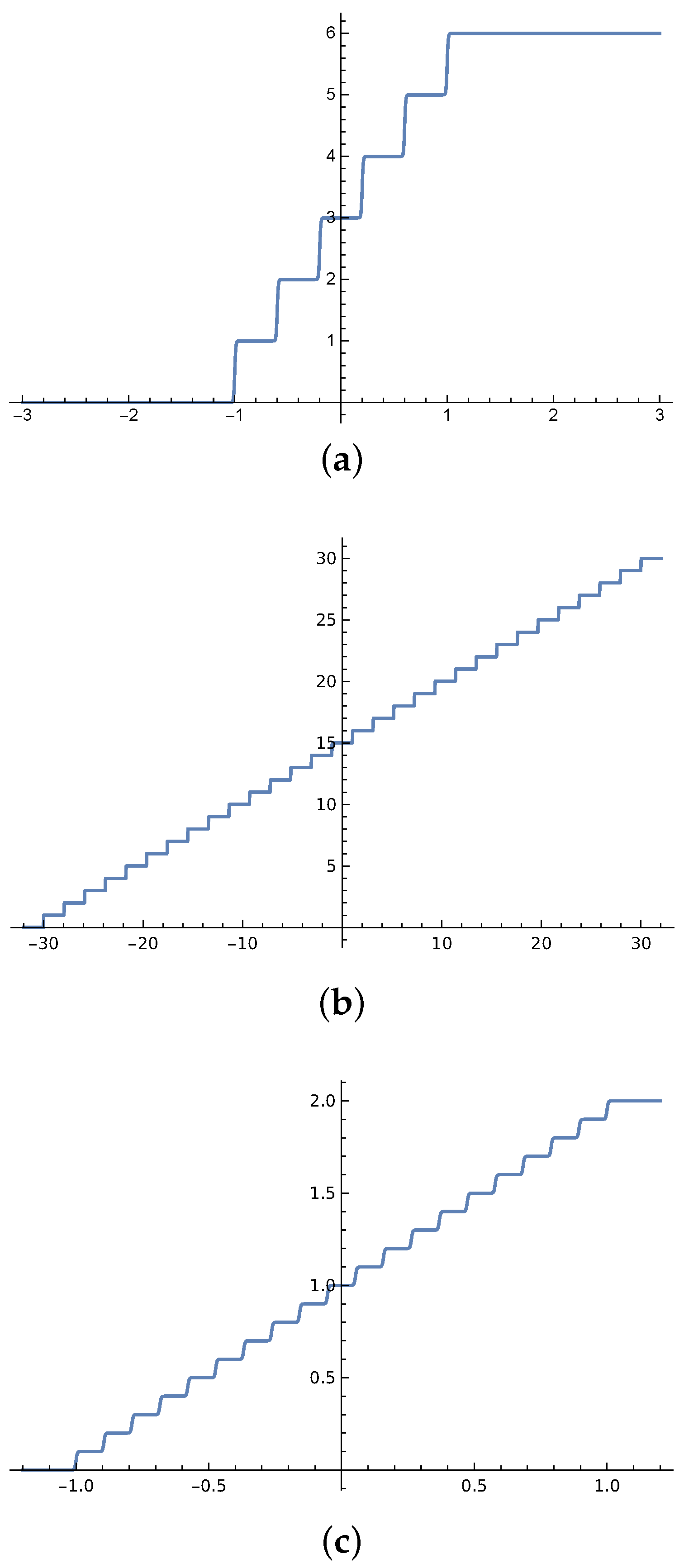

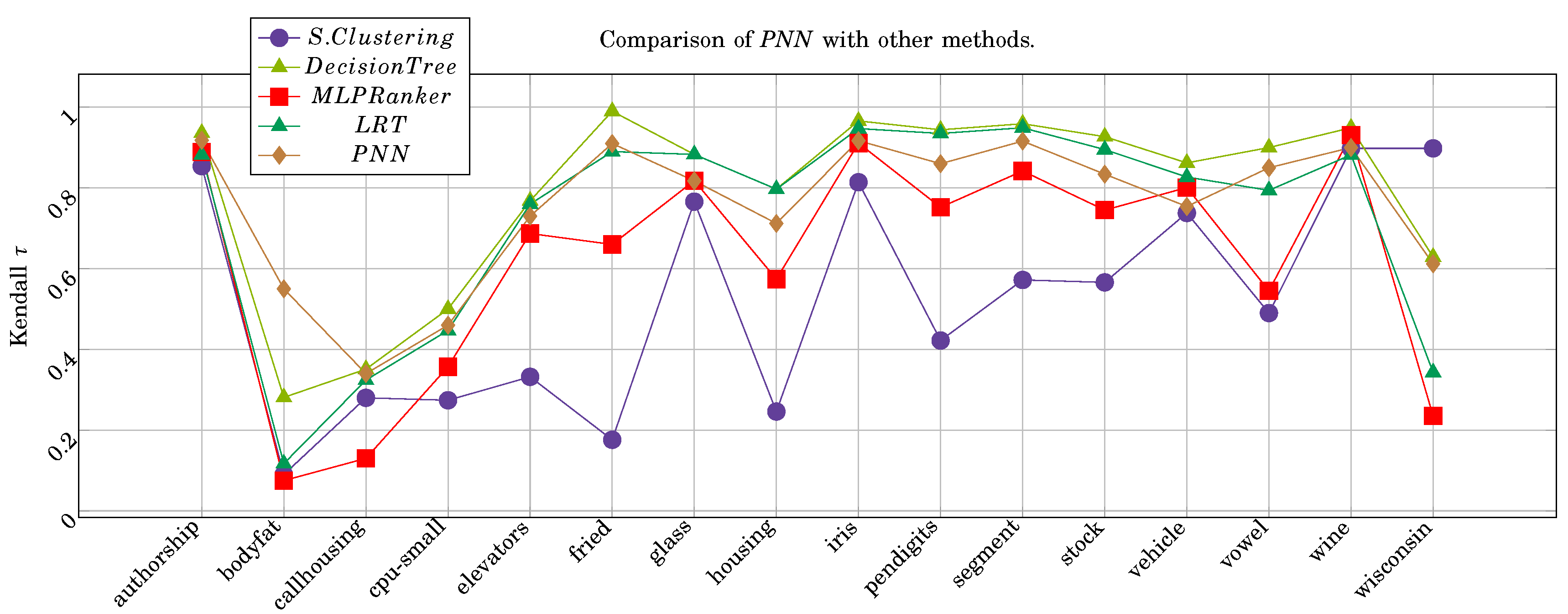

Figure 4.

Multiple-layer label ranking comparison on benchmark data sets [52] using the PNN and SS functions after 100 epochs with learning rate = 0.007.

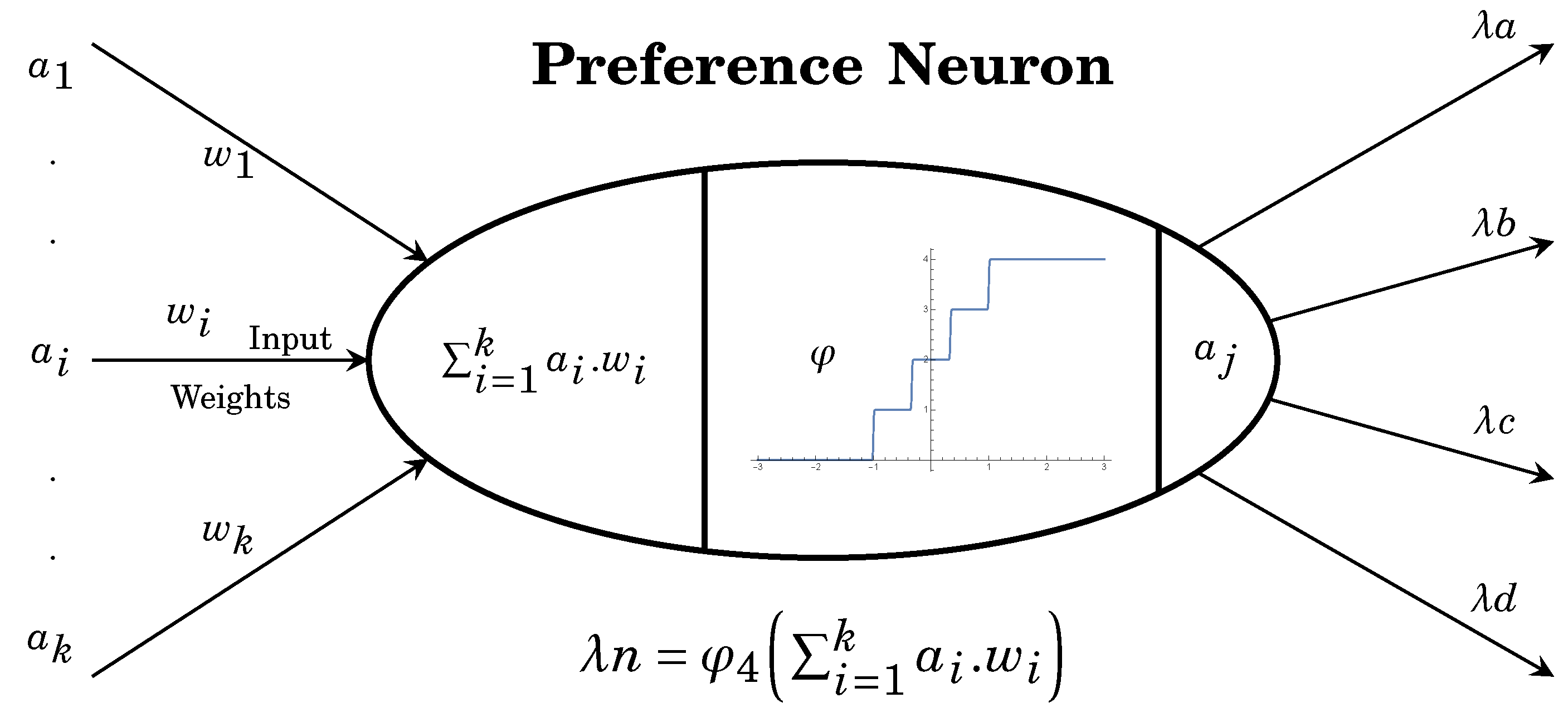

Figure 5.

The structure of preference neuron where .

Figure 7.

The structure used in both the ranker ANN and PNN, and a comparison of the convergence of both NNs. A demo video of the convergence of the two NNs is available at the link in [48].

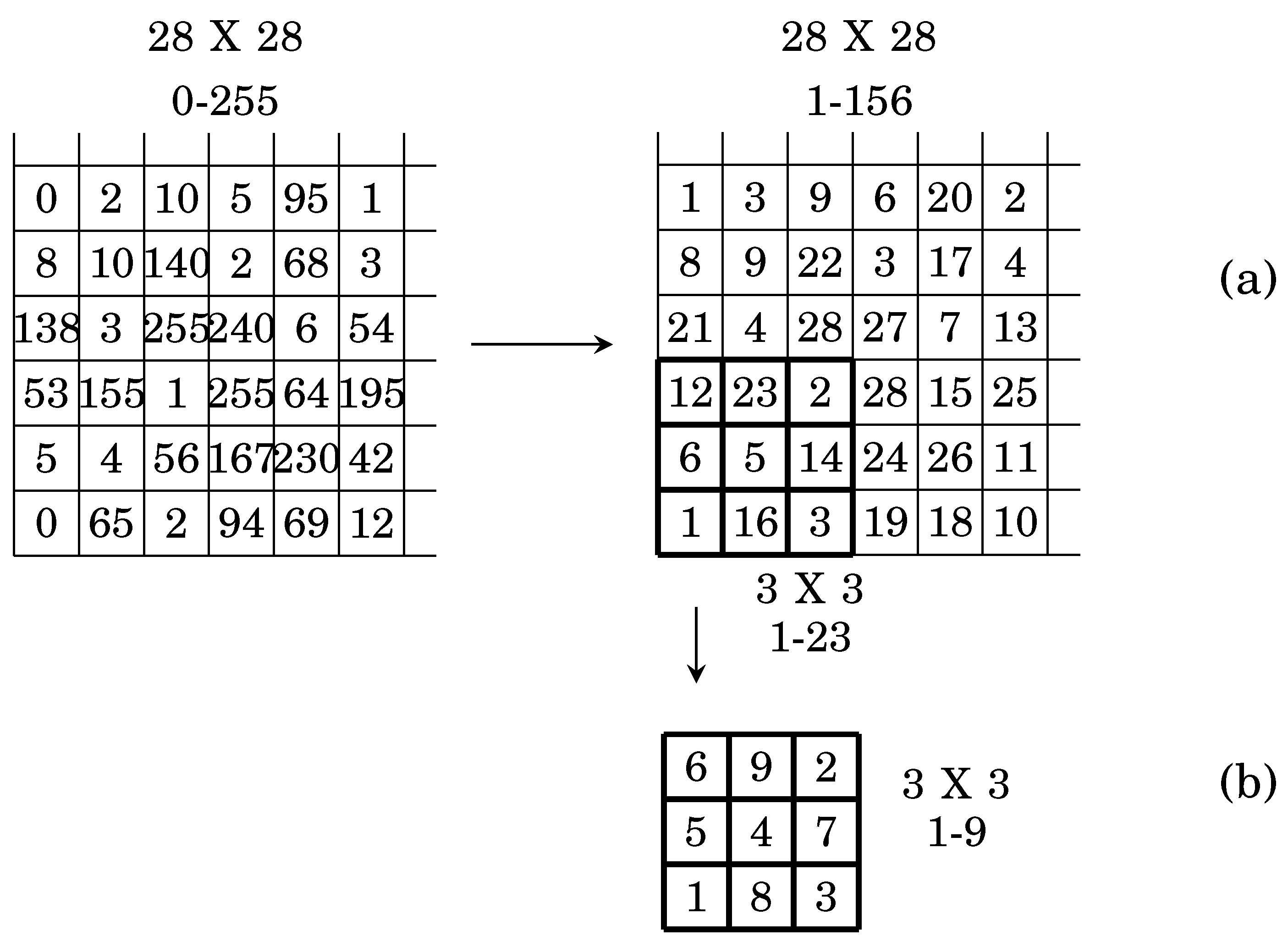

Figure 8.

Image pixel sorting for the flattened windows in (a,b) respectively.

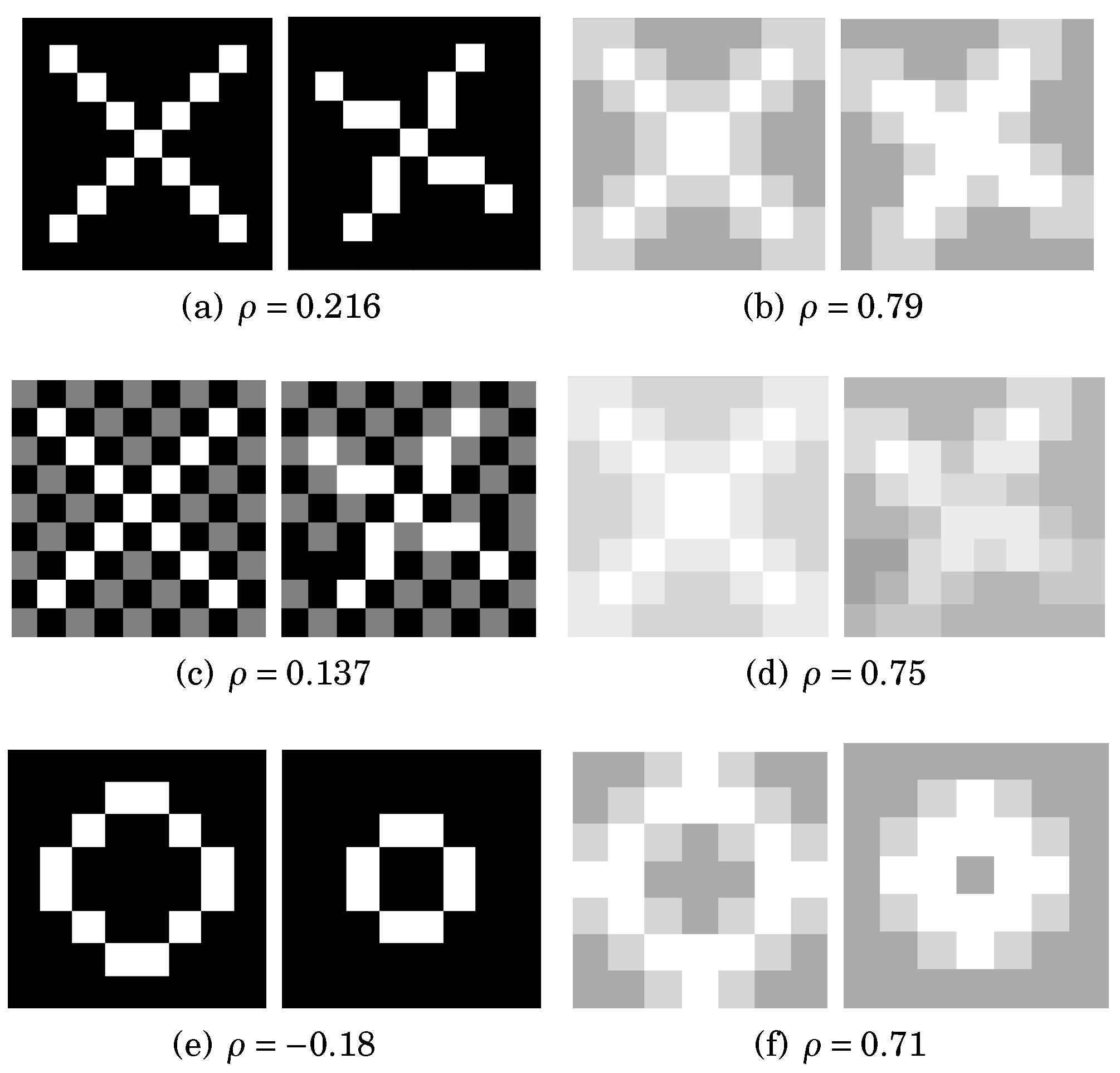

Figure 9.

Sample of moving objects in (a,b) without and with averaging by window 2x2. The ranking of two flattened images are and in (a,b), respectively. Sample of moving noisy object in (c,d) without and with image averaging by a window of 2x2. The ranking of two flattened images are , and in (c,d) respectively. ranking scaled circle in (e,f), respectively.

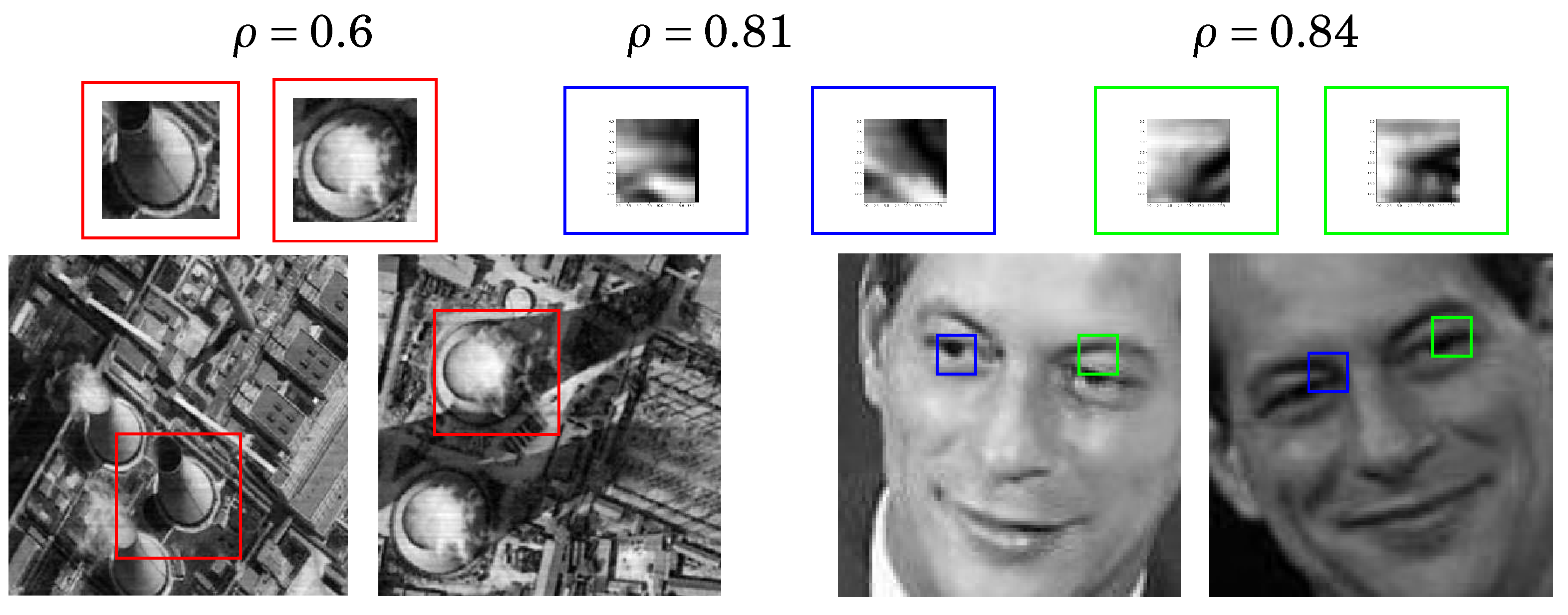

Figure 10.

Detecting the similarity in remote sensing and face recognition by ranking the image pixels after averaging the pixels using a 2x2 window.
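
The pixel ranking after 2x2 averaging referred to in the captions above can be sketched as follows. This is an illustration under stated assumptions: the averaging uses non-overlapping 2x2 windows, ranks are assigned in ascending order of intensity, and ties are broken by pixel position; the function names `average_pool_2x2` and `rank_pixels` are hypothetical.

```python
def average_pool_2x2(image):
    """Average non-overlapping 2x2 windows of a 2D list of pixel values."""
    rows, cols = len(image), len(image[0])
    return [
        [
            (image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]) / 4.0
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]

def rank_pixels(image):
    """Flatten the image and return the rank (1 = darkest) of every pixel."""
    flat = [pixel for row in image for pixel in row]
    order = sorted(range(len(flat)), key=lambda index: flat[index])
    ranks = [0] * len(flat)
    for rank, index in enumerate(order, start=1):
        ranks[index] = rank
    return ranks

image = [
    [1, 1, 5, 5],
    [1, 1, 5, 5],
    [9, 9, 3, 3],
    [9, 9, 3, 3],
]
brighter = [[pixel + 10 for pixel in row] for row in image]

print(rank_pixels(average_pool_2x2(image)))
print(rank_pixels(average_pool_2x2(brighter)))
```

Both images produce the same rank vector, since a uniform brightness change does not alter the pixel order. Two images can then be compared by a rank correlation of their rank vectors.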

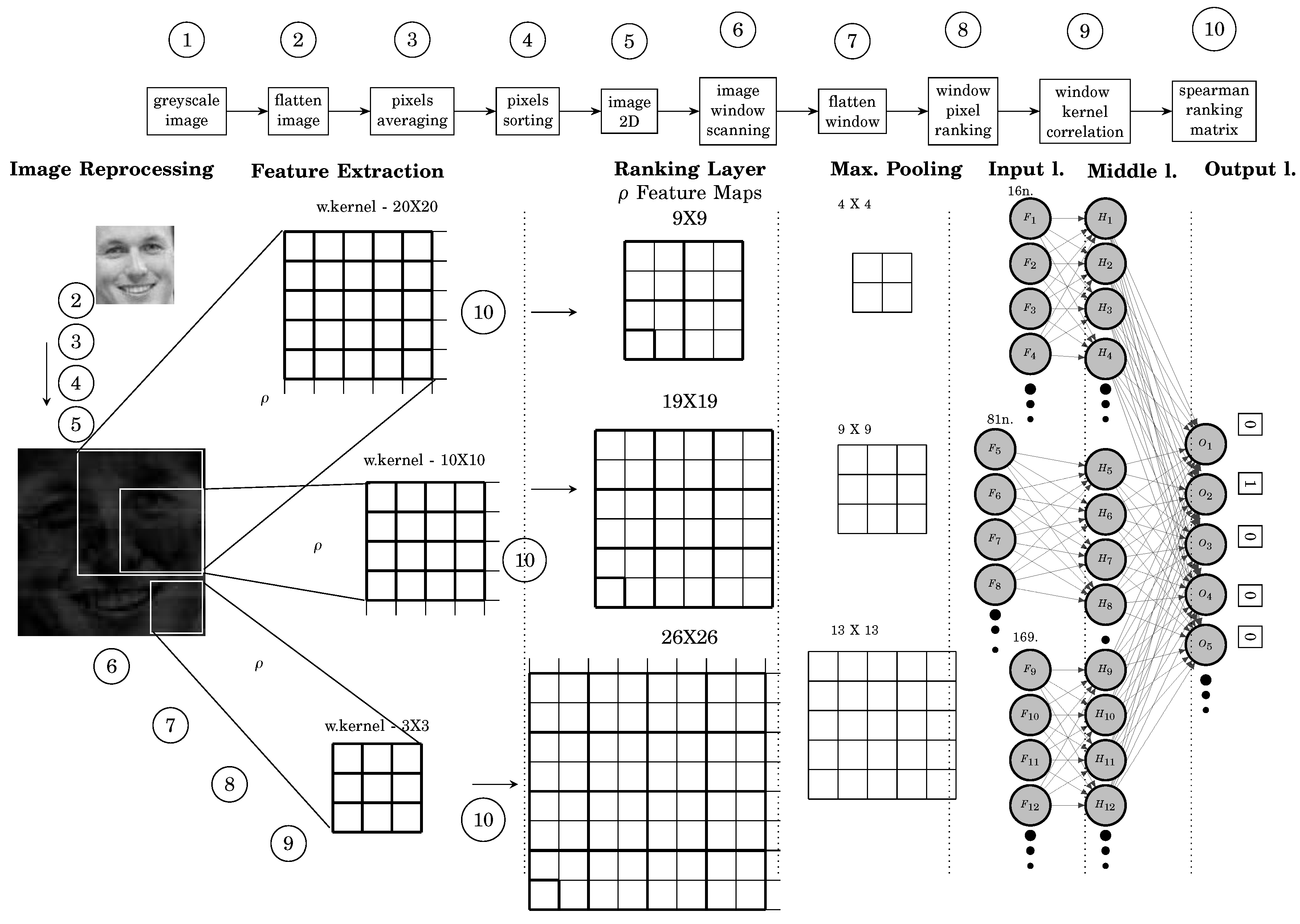

Figure 11.

The PN structure has three kernels and three PNNs where , and , per ,}.

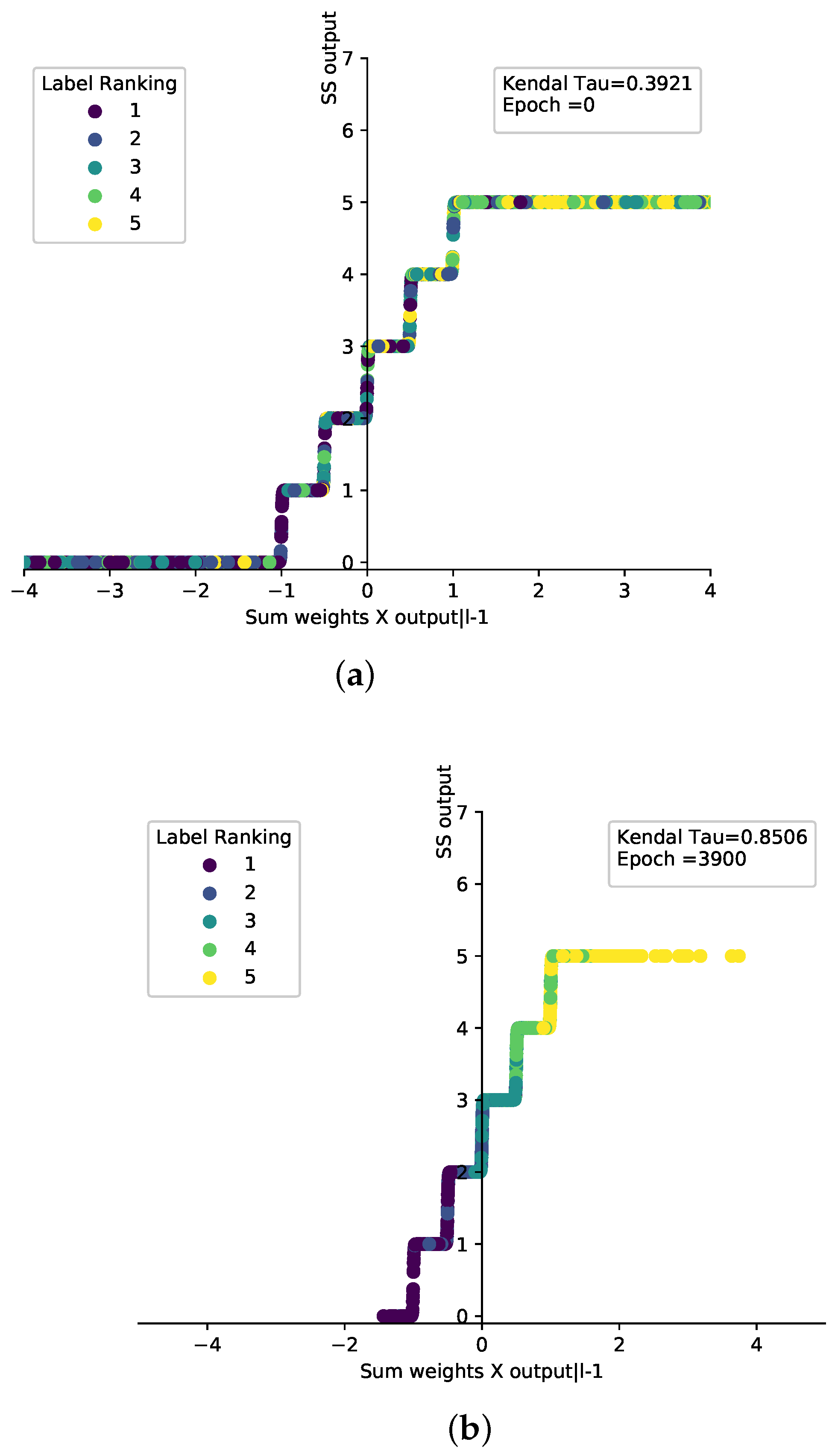

Figure 12.

Visualization of the ranking of the stock data set [52], which has five labels, using the SS activation function at epochs 0 and 3900 in (a,b), respectively.

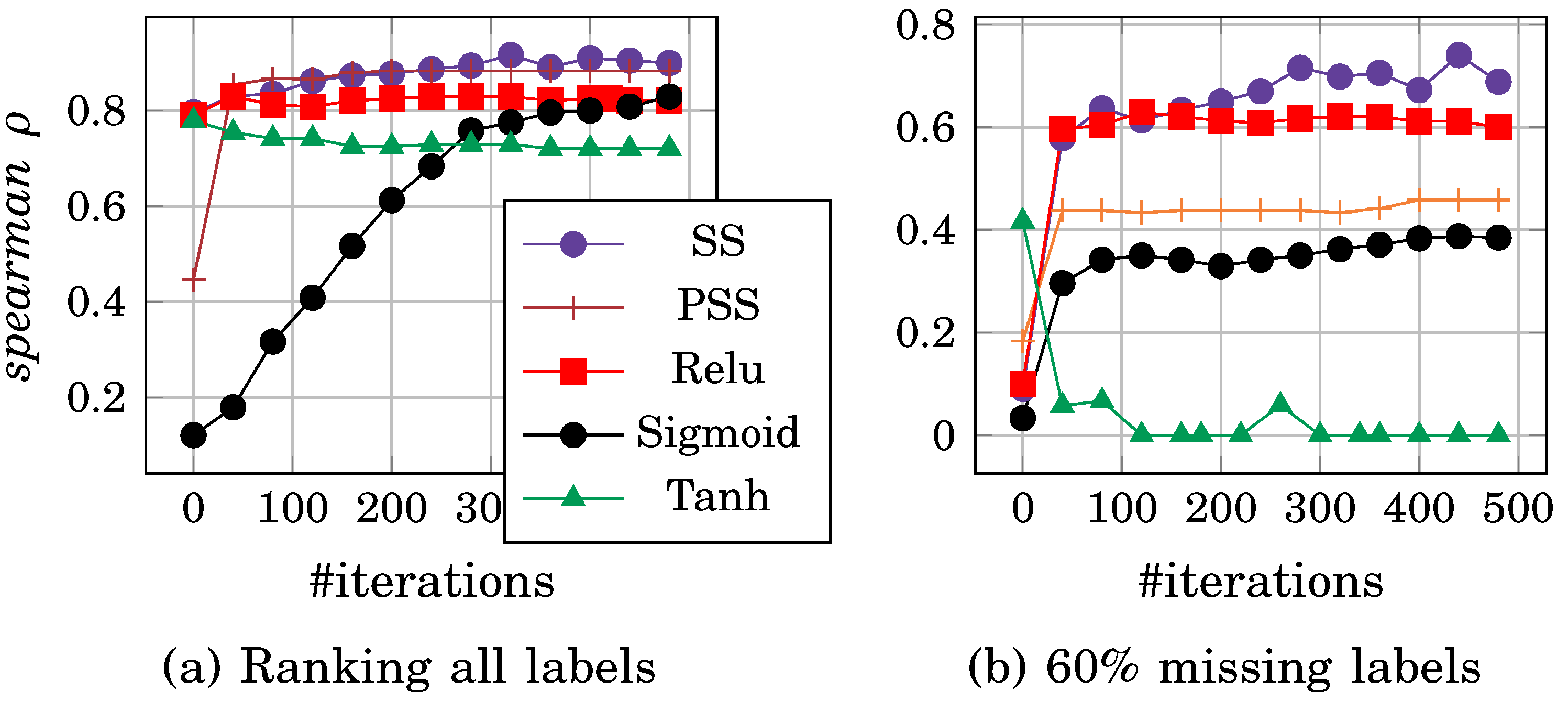

Figure 13.

PNN activation function comparison using complete labels and 60% missing labels in (a,b), respectively.

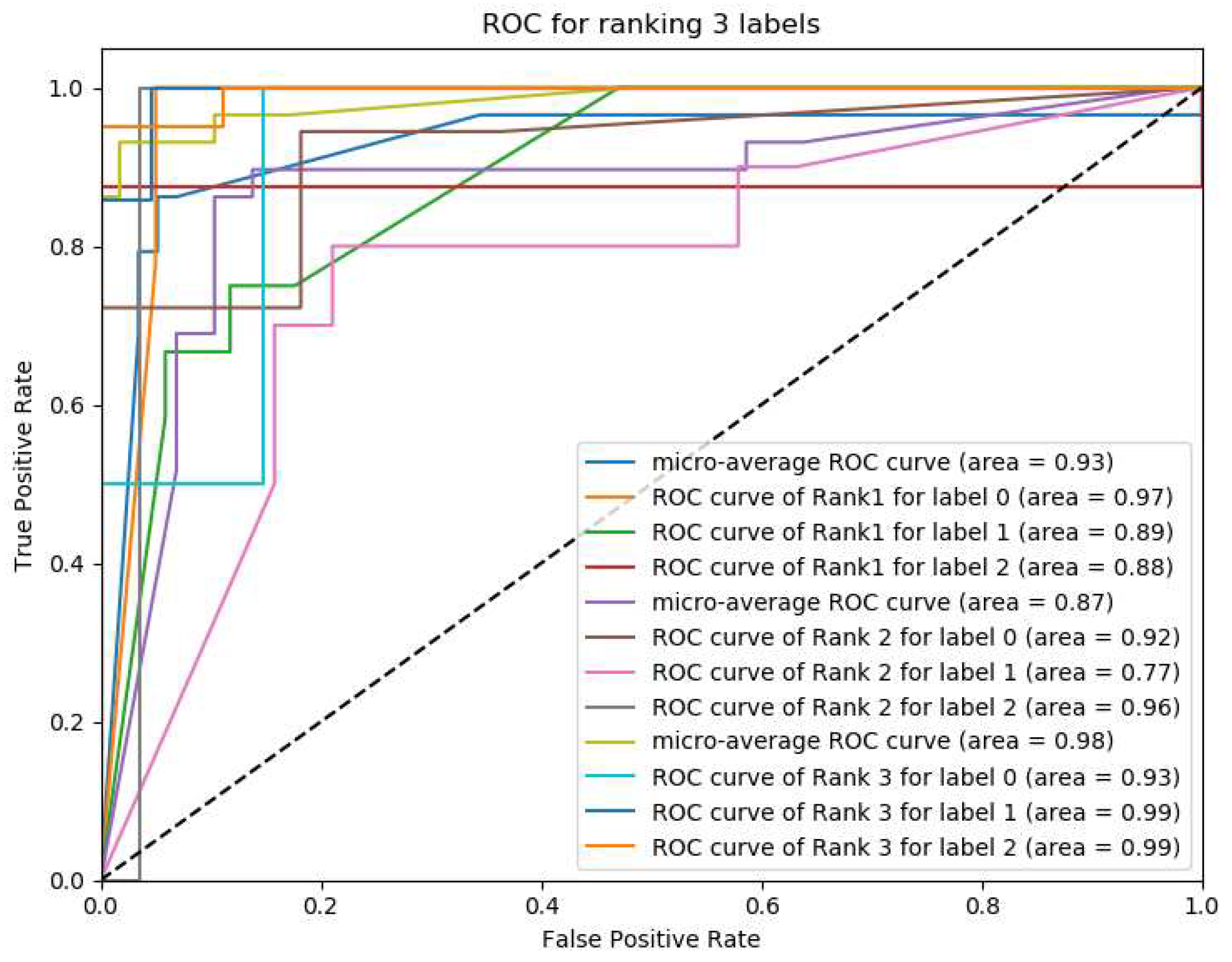

Figure 14.

ROC of three labels ranking on the wine data set using PNN h.n=100 and 50 epochs.

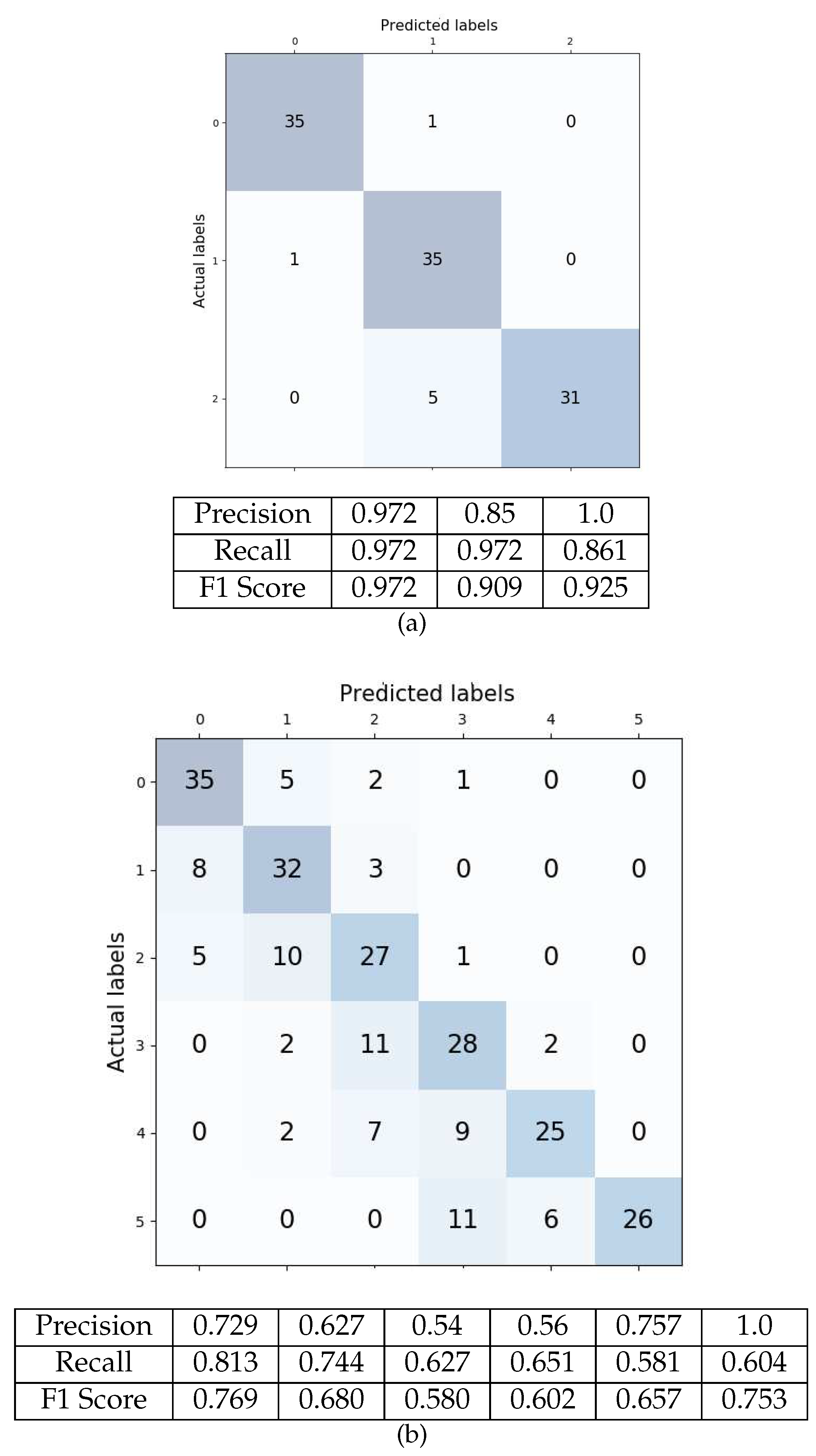

Figure 15.

The confusion matrix of testing the wine, glass data sets where = 0.947, 0.84, Accuracy = 0.935 and 0.8 in (a,b) respectively.

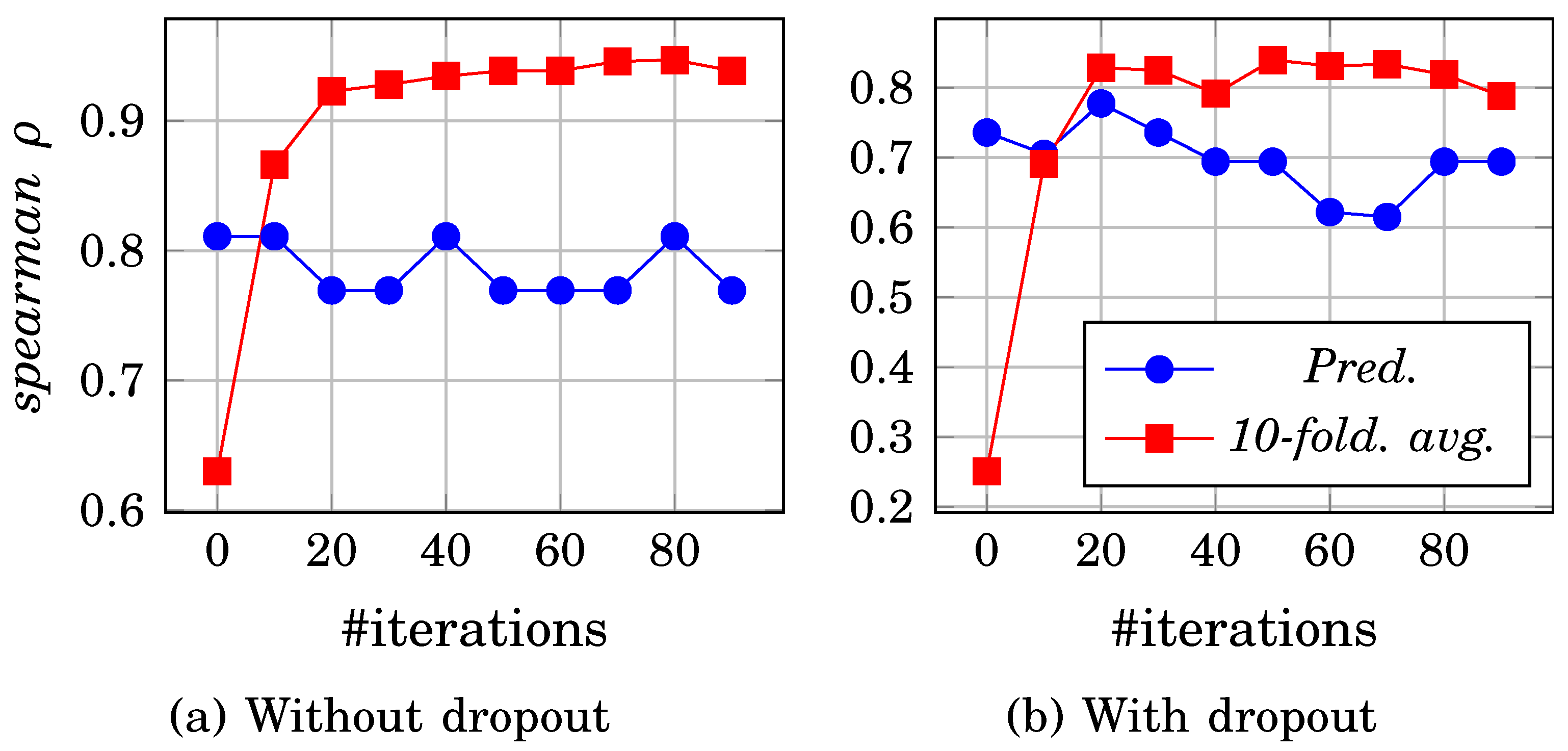

Figure 16.

Training and validation performance without and with the dropout regularization approach in (a,b), respectively.

Figure 17.

Ranking performance comparison of PNN with other approaches.

Table 1.

ANN types used in initial experiment.

| Type | Ranker ANN | PNN |
|---|---|---|
| Activation Fun. | ReLU, Sigmoid | PSS, SS |
| Gradient | Descent | Ascent |
| Objective Fun. | RMS | |
| Stopping Criteria | | |

Table 2.

Benchmark data sets for label ranking: preference mining [57], real-world data sets [58] and semi-synthetic (s-s) [52].

| Type | DS | Cat. | #Inst. | #Attr. | #lbl. |
|---|---|---|---|---|---|
| Mining | algae | chemical stat. | 317 | 11 | 7 |
| | german.2005 | user pref. | 413 | 31 | 5 |
| | german.2009 | user pref. | 413 | 31 | 5 |
| | sushi | user pref. | 5,000 | 13 | 7 |
| | top7movies | user pref. | 602 | 7 | 7 |
| Real | cold | biology | 2,465 | 24 | 4 |
| | diau | biology | 2,465 | 24 | 7 |
| | dtt | biology | 2,465 | 24 | 4 |
| | heat | biology | 2,465 | 24 | 6 |
| | spo | biology | 2,465 | 24 | 11 |
| Semi-Synthesized | authorship | A | 841 | 70 | 4 |
| | bodyfat | B | 252 | 7 | 7 |
| | calhousing | B | 20,640 | 4 | 4 |
| | cpu-small | B | 8,192 | 6 | 5 |
| | elevators | B | 16,599 | 9 | 9 |
| | fried | B | 40,769 | 9 | 5 |
| | glass | A | 214 | 9 | 6 |
| | housing | B | 506 | 6 | 6 |
| | iris | A | 150 | 4 | 3 |
| | pendigits | A | 10,992 | 16 | 10 |
| | segment | A | 2,310 | 18 | 7 |
| | stock | B | 950 | 5 | 5 |
| | vehicle | A | 846 | 18 | 4 |
| | vowel | A | 528 | 10 | 11 |
| | wine | A | 178 | 13 | 3 |
| | wisconsin | B | 194 | 16 | 16 |

Table 3.

Comparison of classification on the CIFAR-100 [54] and Fashion-MNIST [55] data sets using different convolution models.

| DS | Model | Baseline | MixUp |
|---|---|---|---|
| CIFAR-100 | ResNet [59] | 72.22 | 78.9 |
| | WRN [60] | 78.26 | 82.5 |
| | Dense [61] | 81.73 | 83.23 |
| | EfficientNetV2-M [62] | 92.2 | - |
| | EffNet-L2 (SAM) [63] | 96.08 | - |
| | CvT [64] | 94.39 | - |
| | PrefNet | 80.6 | - |
| Fashion-MNIST | MLP | 0.871 | - |
| | RandomForest | 0.873 | - |
| | LogisticRegression | 0.842 | - |
| | SVC | 0.897 | - |
| | SGDClassifier | 0.81 | - |
| | LSTM [65] | 0.8757 | - |
| | DART [66] | 0.965 | - |
| | PrefNet | 0.91 | - |

Table 4.

PNN performance comparison with various approaches: supervised clustering [58], supervised decision tree [52], MLP label ranking [23] and label ranking tree forest (LRT) [67].

| | Label Ranking Methods | | | | |
|---|---|---|---|---|---|
| DS | S.Clust. | DT | MLP-LR | LRT | PNN |
| authorship | 0.854 | 0.936 (IBLR) | 0.889 (LA) | 0.882 | 0.918 |
| bodyfat | 0.09 | 0.281 (CC) | 0.075 (CA) | 0.117 | 0.5591 |
| calhousing | 0.28 | 0.351 (IBLR) | 0.130 (SSGA) | 0.324 | 0.34 |
| cpu-small | 0.274 | 0.50 (IBLR) | 0.357 (CA) | 0.447 | 0.46 |
| elevators | 0.332 | 0.768 (CC) | 0.687 (LA) | 0.760 | 0.73 |
| fried | 0.176 | 0.99 (CC) | 0.660 (CA) | 0.890 | 0.91 |
| glass | 0.766 | 0.883 (LRT) | 0.818 (LA) | 0.883 | 0.8175 |
| housing | 0.246 | 0.797 (LRT) | 0.574 (CA) | 0.797 | 0.712 |
| iris | 0.814 | 0.966 (IBLR) | 0.911 (LA) | 0.947 | 0.917 |
| pendigits | 0.422 | 0.944 (IBLR) | 0.752 (CA) | 0.935 | 0.86 |
| segment | 0.572 | 0.959 (IBLR) | 0.842 (CA) | 0.949 | 0.916 |
| stock | 0.566 | 0.927 (IBLR) | 0.745 (CA) | 0.895 | 0.834 |
| vehicle | 0.738 | 0.862 (IBLR) | 0.801 (LA) | 0.827 | 0.754 |
| vowel | 0.49 | 0.90 (IBLR) | 0.545 (CA) | 0.794 | 0.85 |
| wine | 0.898 | 0.949 (IBLR) | 0.931 (LA) | 0.882 | 0.90 |
| wisconsin | 0.09 | 0.629 (CC) | 0.235 (CA) | 0.343 | 0.612 |
| Average | 0.475 | 0.79 | 0.621 | 0.730 | 0.755 |