Article

Version 1

Preserved in Portico. This version is not peer-reviewed.

Engineering A Large Language Model From Scratch

Received: 29 January 2024 / Approved: 30 January 2024 / Online: 31 January 2024 (02:55:47 CET)

How to cite: Oketunji, A.F. Engineering A Large Language Model From Scratch. Preprints 2024, 2024012120. https://doi.org/10.20944/preprints202401.2120.v1

Abstract

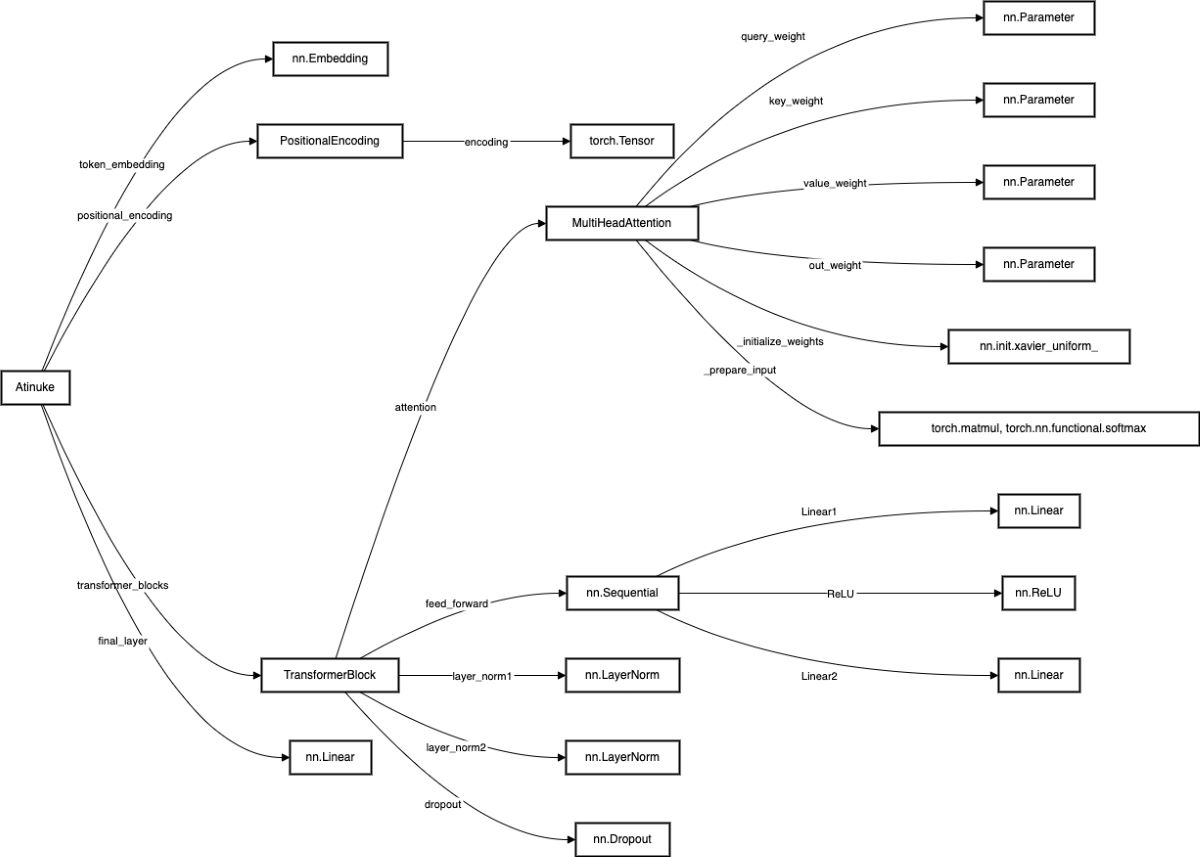

The proliferation of deep learning in natural language processing (NLP) has led to the development and release of innovative technologies capable of understanding and generating human language with remarkable proficiency. Atinuke, a Transformer-based neural network, optimises performance across various language tasks by utilising a unique configuration. The architecture interweaves layers for processing sequential data with attention mechanisms to draw meaningful affinities between inputs and outputs. Due to the configuration of its topology and hyperparameter tuning, it can emulate human-like language by extracting features and learning complex mappings. Atinuke is modular, extensible, and integrates seamlessly with existing machine learning pipelines. Advanced matrix operations like softmax, embeddings, and multi-head attention enable nuanced handling of textual, acoustic, and visual signals. By unifying modern deep learning techniques with software design principles and mathematical theory, the system achieves state-of-the-art results on natural language tasks whilst remaining interpretable and robust.

Keywords

Deep Learning; Natural Language Processing; Transformer-based Network; Atinuke; Attention Mechanisms; Hyperparameter Tuning; Multi-Head Attention; Embeddings; Machine Learning; Pipelines; State-of-the-Art (SOTA) Results

Subject

Computer Science and Mathematics, Artificial Intelligence and Machine Learning

Copyright: This is an open access article distributed under the Creative Commons Attribution License which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
